Rapid detection technology for broken-winged broiler carcass based on machine vision

Wu Jiangchun, Wang Huhu※, Xu Xinglian

(Key Laboratory of Meat Processing and Quality Control, Ministry of Education, Nanjing Agricultural University, Nanjing 210095, China)

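To detect broken-winged chicken carcasses rapidly during broiler slaughter and improve production efficiency, this study used a machine vision system to collect 1 053 broiler carcass images from a slaughter line and developed a method for rapidly identifying broken-wing defects. Front views of the carcasses were captured with the machine vision device. After image preprocessing, 11 features were extracted: the distances from the left and right ends of the carcass to the centroid and their difference (d1, d2, dc), the heights of the lowest points of the two wings and their difference (h1, h2, hc), the areas of the two wings and their ratio (S1, S2, Sr), the rectangularity (R), and the width-length ratio (rate). Principal component analysis reduced these features to 8 principal components. Linear discriminant, quadratic discriminant, random forest, support vector machine, BP neural network, and VGG16 models were built and compared by F1-score and total accuracy. Among all model combinations, the VGG16 model had the highest F1-score and total accuracy, 94.35% and 93.28% respectively, with an average prediction speed of 10.34 images/s. The VGG16 model classified well and can serve as a technical reference for the rapid identification and classification of broken wings on chicken carcasses.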

machine vision; machine learning; chicken carcass; broken-wing detection

0 Introduction

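In recent years, under the influence of avian influenza at home and abroad, African swine fever, and the COVID-19 epidemic, China has gradually phased out live poultry markets and promoted centralized slaughter, and the broiler industry is shifting from live poultry trading to the sale of chilled chicken or cooked products[1]. As market demand for chicken grows, consumers also expect higher quality. They often decide whether to buy chilled chicken by sensory evaluation, and carcasses with obvious appearance defects are not favored. During slaughter, factors such as breed, rearing period, slaughter process, and equipment cause carcass damage of varying severity[2]. According to our survey, the main carcass defects on the production line are congestion, broken bones, and skin damage, and broken bones occur mostly in the wing region.

Although most broiler slaughter plants have largely mechanized each stage of production, carcass quality inspection still relies on experienced workers judging by eye[3]. On the production line, a carcass passes manual inspection for broken wings when it shows no obvious visible wing deformation (outward or oblique turning, fracture, drooping), exposed wing bone, or partial loss. The judgment depends heavily on experience, varies considerably between inspectors, and is strongly affected by subjective factors, so missed and false detections cannot be avoided and cause economic losses. Undetected broken-wing products that reach the market lower the overall quality of a company's products and damage its image. A technology that can replace workers with efficient, objective inspection is therefore urgently needed.

Machine vision technology performs the function of human sight and can analyze and judge the object being identified[4]. A machine vision system consists of hardware and software: the hardware comprises a light source, camera, industrial control computer, and computer, and the software comprises the computer programs[5-8]. As intelligent manufacturing advances in China, machine vision has been widely applied in many fields thanks to its high efficiency, high precision, and non-destructive nature[9]. In agriculture, it is used for defect detection and grading of fruits, vegetables, nuts, and other produce, and for monitoring crop growth and controlling pests and diseases[10-13]. It is also used to inspect and grade the appearance and freshness of other foods and food raw materials, for example determining beef freshness[14], identifying double-yolk eggs[15], and detecting and sorting misshapen biscuits[16]. In poultry slaughter and processing, machine vision has been studied for weight prediction[7,17-21] and quality inspection[22-24] of chicken carcasses and their cuts, automatic evisceration[25-27], and automatic cutting[28].

However, most existing studies on chicken quality inspection examine cuts that have already been separated, such as wings and breasts, and few examine the whole carcass. The defect types studied are concentrated on congestion, surface contaminants, freshness, and degree of woodiness, leaving a gap in the identification and detection of broken wings on chicken carcasses. This study captured front views of chicken carcasses with a machine vision system, extracted features after image preprocessing, and built models that rapidly identify broken wings. The work provides a technical reference for carcass quality inspection and is of value for promoting automation and intelligence in broiler slaughter and processing, reducing factory labor, and improving production efficiency.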

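1 Materials and methods

1.1 Test materials

The test objects were broiler carcasses, and images were collected on a large broiler slaughter line in Jiangsu Province. Broken-winged and qualified carcasses that had been inspected by workers were collected from the line and photographed in front view, giving 1 053 sample images in total: 553 broken-winged carcasses and 500 qualified carcasses. Representative broken-wing and normal samples are shown in Fig.1.

Fig.1 Examples of broken-wing and qualified products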

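1.2 Image acquisition device

The components of the image acquisition device were installed and connected following Zhao et al.[29]; the layout is shown in Fig.2.

Fig.2 Schematic of the image acquisition device

1.3 Detection method for broken wings on chicken carcasses

1.3.1 Image preprocessing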

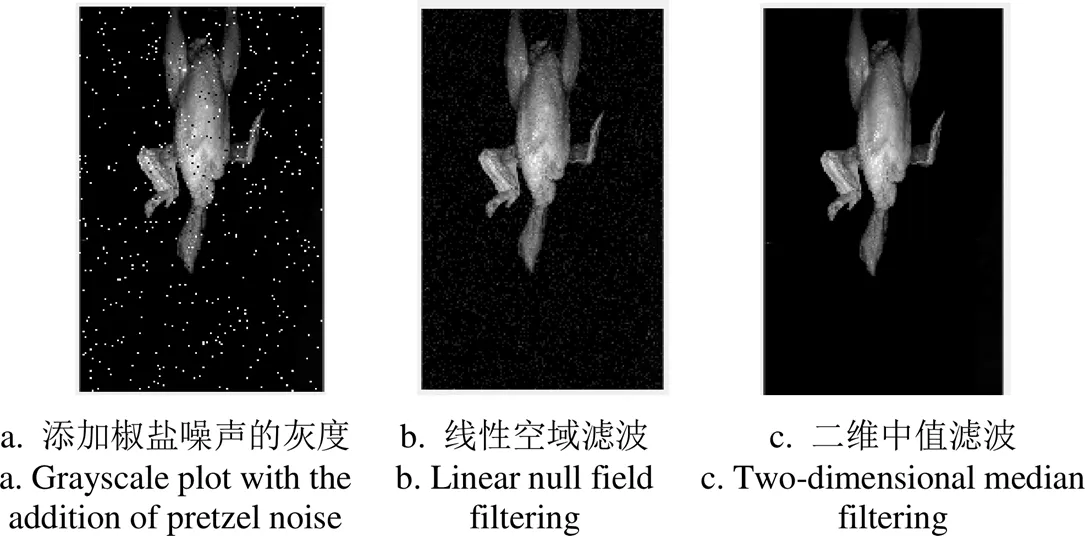

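Image noise is useless information that interferes with an image. By source it is either external or internal: external noise arises from changes in the environment, such as light intensity and the shooting background, whereas internal noise arises from factors inside the machine vision system, such as vibration and data transmission[30-31]. Image preprocessing reduces noise and strengthens image features, which helps subsequent feature extraction[32-33]. Preprocessing methods include graying, image enhancement, image segmentation, and morphological processing. This study used the weighted average method for graying. For image enhancement, low-pass filtering, high-pass filtering, homomorphic filtering, linear spatial filtering, and two-dimensional median filtering were compared for noise removal, and the method was chosen according to the change in image sharpness before and after processing. As shown in Fig.3, low-pass and high-pass filtering reduced sharpness, and homomorphic filtering increased brightness at the cost of losing some information, whereas linear spatial filtering and two-dimensional median filtering both improved sharpness. To choose between these two, salt-and-pepper noise was artificially added to the grayscale image; their removal results are shown in Fig.4. Two-dimensional median filtering visibly outperformed linear spatial filtering and was therefore selected as the image enhancement method.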
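The two preprocessing steps selected above can be illustrated with a minimal pure-Python sketch. It is not the authors' MATLAB code: the luma weights (0.299, 0.587, 0.114), the 3×3 window, the edge-replication border handling, and the function names `to_gray` and `median_filter` are all assumptions made for illustration.

```python
from statistics import median

# Assumed weights for weighted-average graying (ITU-R BT.601 luma weights);
# the paper does not state which weights were used.
WEIGHTS = (0.299, 0.587, 0.114)

def to_gray(rgb):
    """Convert a nested list of (R, G, B) pixels to a grayscale image."""
    return [[round(sum(w * c for w, c in zip(WEIGHTS, px))) for px in row]
            for row in rgb]

def median_filter(gray, size=3):
    """Apply a size x size median filter; borders replicate the edge pixels."""
    h, w = len(gray), len(gray[0])
    r = size // 2
    out = [[0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            # Collect the neighbourhood, clamping indices at the image border.
            window = [gray[min(max(i + di, 0), h - 1)][min(max(j + dj, 0), w - 1)]
                      for di in range(-r, r + 1) for dj in range(-r, r + 1)]
            out[i][j] = median(window)
    return out

# A flat grey patch with one "salt" and one "pepper" pixel added.
noisy = [[100] * 5 for _ in range(5)]
noisy[2][2] = 255
noisy[0][0] = 0
clean = median_filter(noisy)
```

Because an isolated outlier never reaches the middle of a sorted 3×3 window, both impulse pixels disappear, which is why a median filter suits salt-and-pepper noise better than a linear averaging filter that would smear them.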

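This study compared maximum-entropy thresholding, Otsu thresholding, iterative thresholding, and K-means for segmentation; the results are shown in Fig.5. Iterative thresholding gave the best segmentation of the four and was therefore chosen as the image segmentation method.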
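Iterative threshold selection is commonly implemented as follows: start from the global mean, split the pixels into two groups, and move the threshold to the midpoint of the two group means until it stops changing. The sketch below assumes this common variant; the paper does not give its stopping tolerance, so `eps` and the function names are hypothetical.

```python
def iterative_threshold(gray, eps=0.5):
    """Return a threshold found by iterative mean-splitting."""
    pixels = [p for row in gray for p in row]
    t = sum(pixels) / len(pixels)            # initial guess: global mean
    while True:
        low = [p for p in pixels if p <= t]
        high = [p for p in pixels if p > t]
        if not low or not high:              # uniform image: nothing to split
            return t
        new_t = (sum(low) / len(low) + sum(high) / len(high)) / 2
        if abs(new_t - t) < eps:             # converged
            return new_t
        t = new_t

def binarize(gray, t):
    """Map pixels above the threshold to 1 (foreground) and the rest to 0."""
    return [[1 if p > t else 0 for p in row] for row in gray]

gray = [[10, 12, 200],
        [11, 210, 205]]
t = iterative_threshold(gray)
binary = binarize(gray, t)
```

In this toy image the dark group averages 11 and the bright group 205, so the threshold settles at 108.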

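Fig.4 Results of different image enhancement methods on the grayscale image with added salt-and-pepper noise

Fig.5 Results of different image segmentation methods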

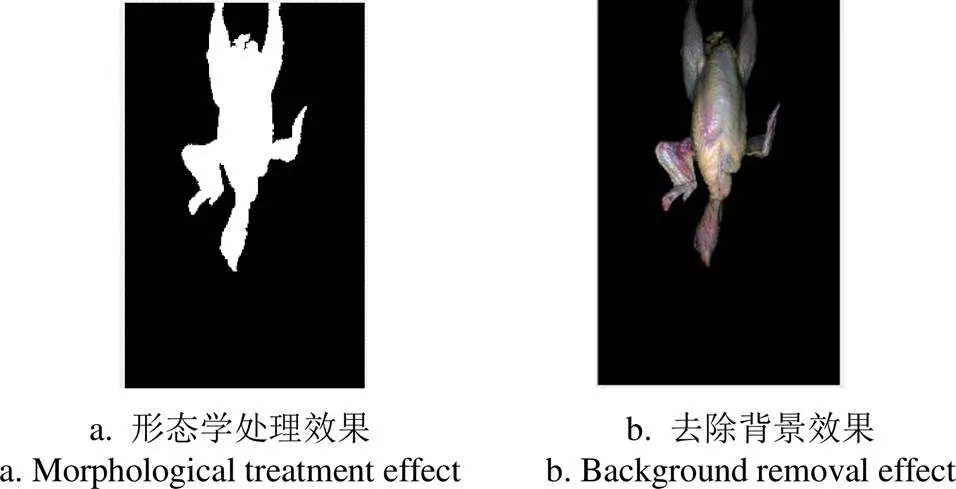

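As shown in Fig.5c, the binary image obtained by iterative thresholding still contained holes and white noise points. Hole filling was therefore applied to remove holes inside the carcass region, and the largest connected component was selected to remove white noise outside the carcass, as shown in Fig.6a. Multiplying Fig.6a element-wise with the original RGB image gave an RGB image free of background interference (Fig.6b), which served as the basis for feature extraction and model building.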
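Selecting the largest connected component and masking the RGB image can be sketched in pure Python as below. This is an illustration only: it uses 4-connectivity (an assumption; the connectivity used in the study is not stated), it omits the hole-filling step, and the function names are hypothetical.

```python
from collections import deque

def largest_component(mask):
    """Keep only the largest 4-connected foreground region of a 0/1 mask."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    best = []
    for si in range(h):
        for sj in range(w):
            if mask[si][sj] != 1 or seen[si][sj]:
                continue
            # Breadth-first search collects one connected region.
            region, queue = [], deque([(si, sj)])
            seen[si][sj] = True
            while queue:
                i, j = queue.popleft()
                region.append((i, j))
                for ni, nj in ((i - 1, j), (i + 1, j), (i, j - 1), (i, j + 1)):
                    if (0 <= ni < h and 0 <= nj < w
                            and mask[ni][nj] == 1 and not seen[ni][nj]):
                        seen[ni][nj] = True
                        queue.append((ni, nj))
            if len(region) > len(best):
                best = region
    out = [[0] * w for _ in range(h)]
    for i, j in best:
        out[i][j] = 1
    return out

def apply_mask(rgb, mask):
    """Element-wise product of an RGB image and a 0/1 mask."""
    return [[tuple(c * m for c in px) for px, m in zip(rgb_row, mask_row)]
            for rgb_row, mask_row in zip(rgb, mask)]

mask = [[1, 1, 0, 0],
        [1, 1, 0, 1],   # the lone pixel on the right is a noise speck
        [0, 0, 0, 0]]
cleaned = largest_component(mask)
```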

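Fig.6 Final result of image preprocessing

1.3.2 Feature extraction

Comparing broken-wing and qualified products shows that, in a broken-winged carcass, the wing bone is fractured and the muscles lose their support, so the broken wing spreads outward under gravity. As a result, the distance from the leftmost or rightmost end of the carcass to its centroid is larger than in a qualified carcass, the height of the lowest point of the wing region on the broken side is greater, and the projected area of that wing region in the image is larger. Based on these shape and geometric features, the corresponding features were extracted for all samples.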

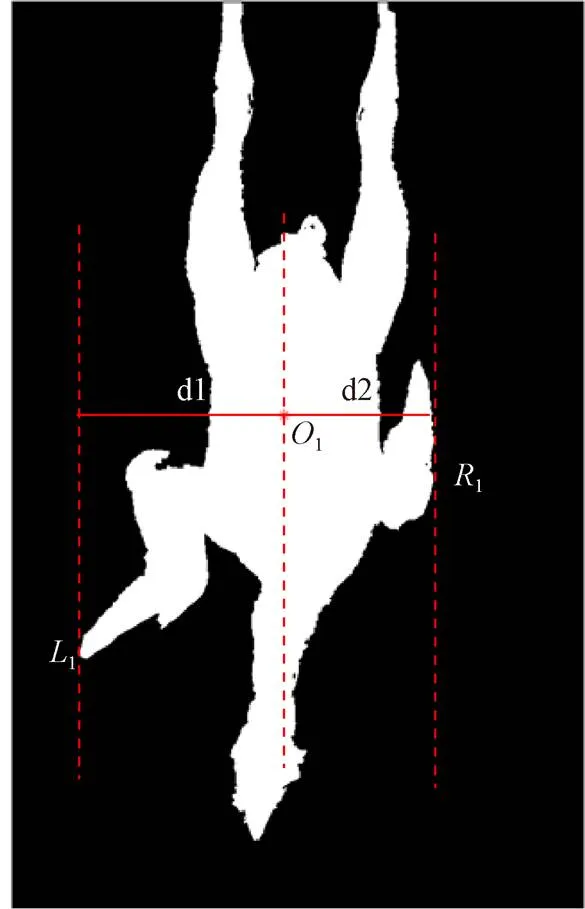

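As shown in Fig.7, the distances from the left and right ends of the carcass to the centroid and their difference were extracted. The centroid of the connected component was computed with the MATLAB function regionprops() and denoted O1(x, y); the coordinates of the leftmost and rightmost points of the carcass region were then found and denoted L1(x1, y1) and R1(x2, y2). The distance from the leftmost end to the centroid is d1 = x − x1, the distance from the rightmost end to the centroid is d2 = x2 − x, and the absolute difference between the two distances is dc = | d1 − d2 |.

Note: O1 is the centroid of the chicken carcass; L1 is the leftmost endpoint of the carcass; R1 is the rightmost endpoint of the carcass; d1 is the distance from the leftmost end to the centroid; d2 is the distance from the rightmost end to the centroid.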
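A minimal sketch of the d1, d2, and dc computation on a binary mask is given below. It is a pure-Python stand-in for the regionprops() centroid, indexed as `mask[row][column]`; the function name is hypothetical.

```python
def end_to_centroid_distances(mask):
    """Return (d1, d2, dc) for a 0/1 mask indexed as mask[row][column]."""
    cols = [j for row in mask for j, v in enumerate(row) if v == 1]
    x = sum(cols) / len(cols)        # abscissa of the centroid O1
    x1, x2 = min(cols), max(cols)    # leftmost (L1) and rightmost (R1) columns
    d1 = x - x1                      # leftmost end to centroid
    d2 = x2 - x                      # rightmost end to centroid
    return d1, d2, abs(d1 - d2)

# A shape whose mass leans to the left, so the right end is farther away.
mask = [[0, 1, 1, 1, 0, 0],
        [1, 1, 1, 1, 1, 1]]
d1, d2, dc = end_to_centroid_distances(mask)
```

For a left-right symmetric shape dc is zero, so a large dc suggests that one wing spreads further outward than the other.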

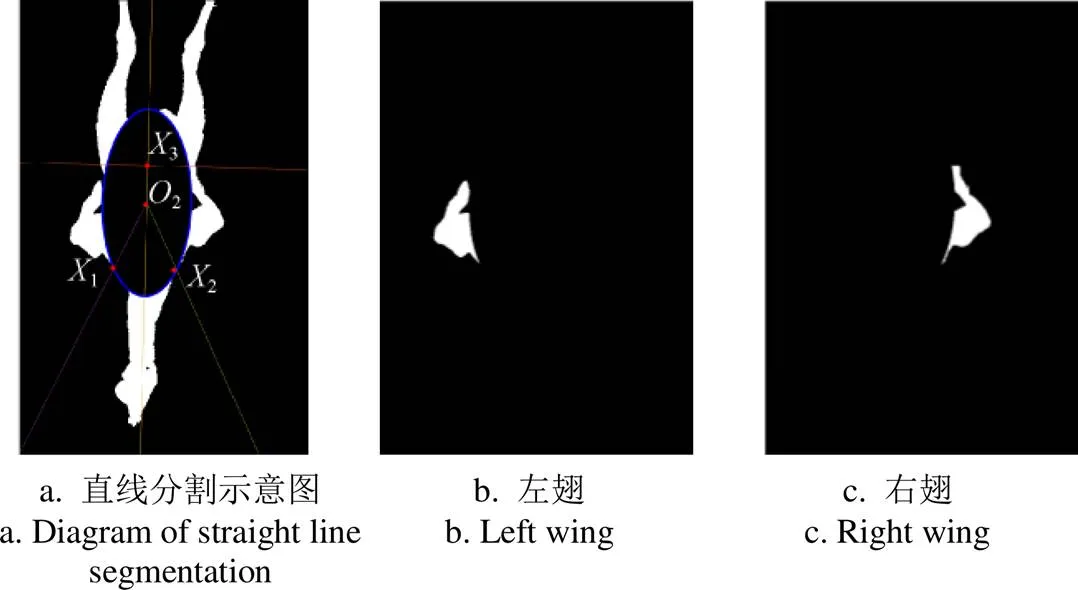

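To calculate the wing areas, the wing regions must first be separated from the carcass. Following the approach of Qi et al.[18-19], who fitted an ellipse to the trunk of the carcass to obtain breast length and width, we developed an improved method for segmenting the wing regions from a carcass image. The method has two steps, ellipse fitting and straight-line segmentation; the flow is shown in Fig.8. As shown in Fig.9a, the fitted ellipse removes the trunk pixels and largely separates the wings from the carcass. However, ellipse fitting alone does not work for carcasses at every angle: as shown in Fig.9b-9d, the wing regions still adhere to varying degrees to the neck and leg regions, and the two legs adhere to each other, so the adhering pixels must also be separated by straight lines.

Fig.8 Flow of wing segmentation

Fig.9 Segmentation results of ellipse fitting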

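As shown in Fig.10, straight-line segmentation proceeds as follows. The center of the fitted ellipse is extracted and denoted O2. The center is translated downward along the major axis by two-thirds of the major-axis length and then to the left and to the right along the minor axis by two-thirds of the minor-axis length, giving points X1 and X2. The lines O2X1 and O2X2 separate the adhering wing and neck pixels.

O2 is then translated upward along the major axis by two-fifths of the major-axis length, giving point X3. A line through X3 parallel to the minor axis separates the adhering wing and leg pixels.

The line joining O2 and X3 separates the adhering pixels between the two legs.

According to the positions of the two wings in the image, the left and right wings were selected with regionprops(), and their pixel areas were computed with bwarea() and denoted S1 and S2; Sr denotes the ratio of the two areas.

Note: In panel a, O2 is the center of the fitted ellipse; X1 is the intersection of the line separating the left wing with the ellipse; X2 is the intersection of the line separating the right wing with the ellipse; X3 is the intersection of the line separating the two legs with the ellipse.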

As shown in Fig.11, the heights of the lowest points of the two wings and their difference were extracted. With the image size denoted m×n, the lowest points of the left and right wings are L2(x3, y3) and R2(x4, y4). The heights of the two lowest points are h1 = m − y3 and h2 = m − y4, and their difference is hc = | h1 − h2 |.

Note: x is the horizontal axis; y is the vertical axis; m is the length of the image; n is the width of the image; L2 is the lowest point of the left wing; R2 is the lowest point of the right wing; y3 is the ordinate of the lowest point of the left wing; y4 is the ordinate of the lowest point of the right wing.

Fig.11 Heights of the lowest points of the two wings and their difference

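As shown in Fig.12, the rectangularity and width-length ratio of the carcass image were extracted. Rectangularity is a shape feature: the ratio of an object's area to the area of its minimum bounding rectangle, indicating how fully the object fills that rectangle[34]. The width-length ratio is the ratio of the width to the length of the minimum bounding rectangle, indicating how close the object is to a square or circle; its value lies between 0 and 1[35-36]. In this paper, rectangularity is denoted R and the width-length ratio is denoted rate.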
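The two shape features can be sketched as follows. As a simplifying assumption, the sketch uses the axis-aligned bounding box instead of the true minimum (possibly rotated) bounding rectangle, so it matches the definition above only when the carcass hangs upright; the function name is hypothetical.

```python
def rectangularity_and_ratio(mask):
    """Return (R, rate) for a 0/1 mask, using the axis-aligned bounding box."""
    pts = [(i, j) for i, row in enumerate(mask)
           for j, v in enumerate(row) if v == 1]
    rows = [i for i, _ in pts]
    cols = [j for _, j in pts]
    height = max(rows) - min(rows) + 1
    width = max(cols) - min(cols) + 1
    # Rectangularity: object area over bounding-rectangle area.
    R = len(pts) / (height * width)
    # Width-length ratio: shorter side over longer side, so it lies in (0, 1].
    rate = min(height, width) / max(height, width)
    return R, rate

mask = [[1, 1, 1, 1],
        [1, 1, 0, 0]]
R, rate = rectangularity_and_ratio(mask)
```

Here the object covers 6 of the 8 pixels of its 2×4 bounding box, so R is 0.75 and rate is 0.5.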

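1.3.3 Model building and testing

Machine learning can be divided by the way features are extracted into shallow machine learning and deep learning. Shallow machine learning requires manual feature extraction: researchers select suitable features by experience and intuition and feed them to the algorithm. Deep learning extracts features automatically and with higher accuracy[37-38]. The shallow algorithms used in this study were linear discriminant analysis (LDA), quadratic discriminant analysis (QDA), random forest (RF), support vector machine (SVM), and the error back-propagation (BP) neural network; the deep learning algorithm was the 16-layer Visual Geometry Group network (VGG16).

Fig.12 Minimum bounding rectangle of the chicken carcass

For the shallow models, principal component analysis was applied to the 11 features, and the principal components whose cumulative variance contribution exceeded 95% were taken as model inputs. The 11 features and the principal components were each fed to the LDA, QDA, RF, SVM, and BP models. Of the 1 053 images, 700 were randomly selected as the training set (350 broken-wing, 350 qualified), and the remaining 353 formed the test set (203 broken-wing, 150 qualified).

For the deep learning model VGG16, the input was the background-free RGB image of the carcass, and the 1 053 images were divided into a training set and a test set. The training set contained 800 images (400 broken-wing and 400 qualified), 30% of which were set aside as the validation set during training; the test set contained 253 images (153 broken-wing, 100 qualified). The training parameters of all models are listed in Table 1.

Table 1 Training parameters of the models

Note: LDA is linear discriminant model; QDA is quadratic discriminant model; RF is random forest model; SVM is support vector machine model; BP is error back-propagation model; VGG16 is Visual Geometry Group network with 16 layers.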

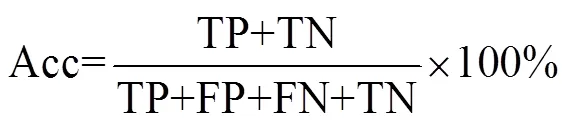

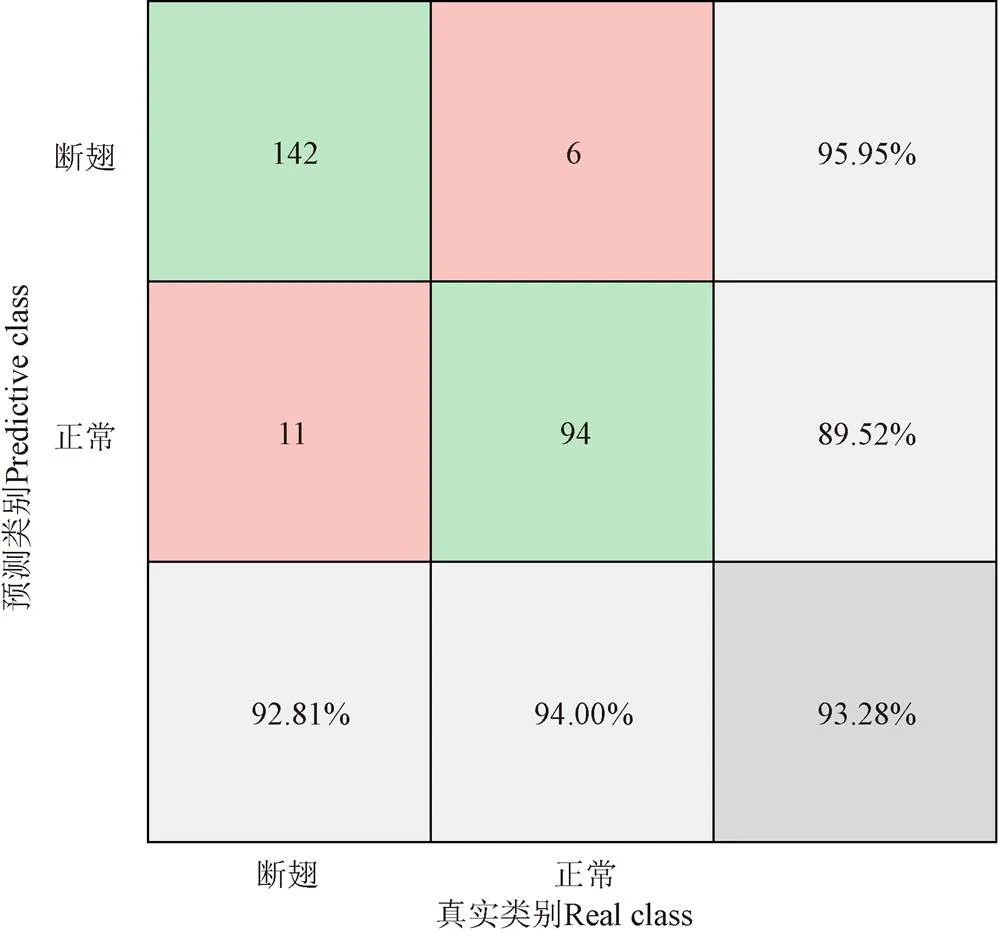

Classification performance was evaluated by recall (Rec), precision (Pre), F1-score, and total accuracy (Acc), computed from the confusion matrix in Table 2.

Table 2 Confusion matrix of classification results

Note: TP is the number of samples correctly predicted as broken-wing; TN is the number of samples correctly predicted as normal; FP is the number of samples incorrectly predicted as broken-wing; FN is the number of samples incorrectly predicted as normal.
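For reference, the four metrics are conventionally defined from the confusion-matrix counts as follows. These are the standard textbook definitions and are assumed here to be the ones the study used.

```latex
\begin{align}
\mathrm{Rec} &= \frac{TP}{TP + FN} \\
\mathrm{Pre} &= \frac{TP}{TP + FP} \\
F_1 &= \frac{2 \cdot \mathrm{Pre} \cdot \mathrm{Rec}}{\mathrm{Pre} + \mathrm{Rec}} \\
\mathrm{Acc} &= \frac{TP + TN}{TP + TN + FP + FN}
\end{align}
```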

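2 Results and analysis

2.1 Principal component analysis

As shown in Table 3, the first 8 principal components have a cumulative variance contribution of 95.20% and thus represent most of the information in the 11 features. The decline in eigenvalues also levels off from the 8th component onward, so the first 8 principal components were selected as model inputs.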
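The selection rule (keep the smallest number of leading components whose cumulative variance contribution reaches 95%) can be sketched as below. The contribution values in `ratios` are made up for illustration and are not the values of Table 3; the function name is hypothetical.

```python
from itertools import accumulate

def n_components_for(ratios, target=0.95):
    """Smallest number of leading components whose cumulative ratio >= target."""
    for k, total in enumerate(accumulate(ratios), start=1):
        if total >= target:
            return k
    return len(ratios)

# Hypothetical variance contribution ratios for 11 components (NOT from the paper).
ratios = [0.30, 0.20, 0.15, 0.10, 0.08, 0.05, 0.04, 0.032, 0.02, 0.018, 0.01]
k = n_components_for(ratios)
```

With these illustrative numbers the cumulative contribution is about 0.92 after seven components and about 0.952 after eight, so the rule returns 8.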

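Table 3 Variance contribution of the principal components

2.2 Model classification results

As shown in Table 4, among the shallow learning models, the RF model with features as input had the highest recall, 91.13%. The SVM model with features as input had higher precision and F1-score than the other models, 96.28% and 92.58% respectively. The highest total accuracy, 91.78%, was shared by the QDA and SVM models with features as input. Feeding the classifiers with principal components instead of the original features lowered the total accuracy in every case. The reason is that the data after dimensionality reduction only approximate the original data: the reduction also discards part of the original data structure and features, which lowers the accuracy of the classification algorithms[38-40].

Table 4 Classification performance of the shallow learning models

Note: In the table, -1 indicates broken-wing; 1 indicates normal.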

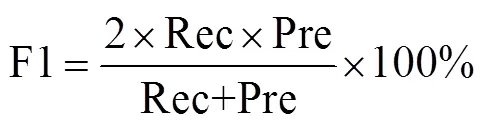

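The classification results of the deep learning model VGG16 are shown in Fig.13. Of the 153 broken-wing samples, 142 were classified correctly and 11 were misclassified; of the 100 qualified samples, 94 were classified correctly and 6 were misclassified. This gives a recall of 92.81%, a precision of 95.95%, an F1-score of 94.35%, and a total accuracy of 93.28%. Among all models, the SVM model with principal components as input had the shortest prediction time: it classified the 353 test images in 0.000 9 s, an average of 3.92×10⁵ images/s. The VGG16 model had the longest: it needed 24.46 s for its 253 test images, an average of 10.34 images/s.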
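The reported VGG16 figures can be reproduced from the counts in this paragraph with the standard metric definitions, taking broken-wing as the positive class. The sketch below is a plain arithmetic check; the function name `metrics` is hypothetical.

```python
def metrics(tp, fn, fp, tn):
    """Return (recall, precision, F1, accuracy) from confusion-matrix counts."""
    recall = tp / (tp + fn)
    precision = tp / (tp + fp)
    f1 = 2 * precision * recall / (precision + recall)
    accuracy = (tp + tn) / (tp + fn + fp + tn)
    return recall, precision, f1, accuracy

# 142 of 153 broken-wing samples detected; 6 of 100 qualified samples flagged.
rec, pre, f1, acc = metrics(tp=142, fn=11, fp=6, tn=94)
print(f"{rec:.2%} {pre:.2%} {f1:.2%} {acc:.2%}")  # 92.81% 95.95% 94.35% 93.28%

# Average prediction speeds in images per second.
vgg_speed = 253 / 24.46    # about 10.34
svm_speed = 353 / 0.0009   # about 3.92e5
```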

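Overall, the VGG16 model had a higher F1-score and total accuracy than the other models, but its average prediction speed of 10.34 images/s was far slower. The reason is that VGG16 is a deep learning model with a complex structure and many layers, which improves accuracy and fault tolerance but lengthens running time[41-42]. Future work could speed up execution by simplifying the code and clearing variables promptly, or compress and accelerate the deep learning model through parameter pruning, parameter quantization, compact networks, and parameter sharing, thereby optimizing the model and raising prediction speed[43-45].

Fig.14 shows some carcasses that the VGG16 model missed or falsely detected. As shown in Fig.14a, in a few broken-wing products the bone between the wing and the trunk was not completely broken, so the wing opened only slightly and the broken-wing features were weak. As shown in Fig.14b, wing size also affects accuracy: in qualified products with large, heavy wings, the weight makes the wings spread further outward, giving the false appearance of a broken wing.

Note: The first row of the figure gives the number of samples correctly predicted as broken-wing, the number incorrectly predicted as normal, and the precision for broken-wing products; the second row gives the number incorrectly predicted as broken-wing, the number correctly predicted as normal, and the precision for qualified products; the third row gives the recall for broken-wing products, the recall for qualified products, and the total accuracy of the model.

Fig.14 Missed and false detection samples

3 Conclusions

This study used a machine vision system to obtain 1 053 front-view images of manually inspected broken-wing and qualified chicken carcasses. Preprocessing by the weighted average method (graying), two-dimensional median filtering (denoising), and the iterative method (threshold segmentation) produced background-free carcass images, from which 11 features were extracted. The features and the dimension-reduced principal components were each fed to the LDA, QDA, RF, SVM, and BP models, and the background-free RGB images were fed to the deep learning model VGG16. Comparing F1-score and total accuracy showed that VGG16 classified broken-wing and qualified carcasses best of all the models, with an F1-score of 94.35%, a total accuracy of 93.28%, and an average prediction speed of 10.34 images/s. The model can serve as a technical reference for the rapid identification and classification of broken wings on chicken carcasses. Its prediction speed still needs improvement, as does its accuracy on slightly broken wings and on qualified products with large wings. In addition, the front views were captured manually and the carcasses were inspected statically, so fully automatic detection has not yet been achieved. Future work should use a signal-triggering device to fire the camera exposure and strobe the light source automatically, enabling real-time detection of broken-winged carcasses.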

[1] 何雯霞,熊涛,尚燕. 重大突发疫病对我国肉禽产业链市场价格的影响研究:以非洲猪瘟为例[J]. 农业现代化研究,2022,43(2):318-327.

He Wenxia, Xiong Tao, Shang Yan. The impacts of major animal diseases on the prices of China’s meat and poultry markets: evidence from the African swine fever[J]. Research of Agricultural Modernization, 2022, 43(2): 318-327. (in Chinese with English abstract)

[2] 李继忠,曲威禹,花园辉,等. 屠宰工艺和设备对鸡胴体自动分割的影响[J]. 肉类工业,2021(4):36-41.

Li Jizhong, Qu Weiyu, Hua Yuanhui, et al. Effect of slaughtering technology and equipment on automatic segmentation of chicken carcass[J]. Meat Industry, 2021(4): 36-41. (in Chinese with English abstract)

[3] Chowdhury E U, Morey A. Application of optical technologies in the US poultry slaughter facilities for the detection of poultry carcass condemnation[J]. British Poultry Science, 2020, 61(6): 646-652.

[4] 王成军,韦志文,严晨. 基于机器视觉技术的分拣机器人研究综述[J]. 科学技术与工程,2022,22(3):893-902.

Wang Chengjun, Wei Zhiwen, Yan Chen. Review on sorting robot based on machine vision technology[J]. Science Technology and Engineering, 2022, 22(3): 893-902. (in Chinese with English abstract)

[5] Brosnan T, Sun D W. Improving quality inspection of food products by computer vision: A review[J]. Journal of Food Engineering, 2004, 61(1): 3-16.

[6] Taheri-Garavand A, Fatahi S, Omid M, et al. Meat quality evaluation based on computer vision technique: A review[J]. Meat Science, 2019, 156: 183-195.

[7] 戚超,徐佳琪,刘超,等. 基于机器视觉和机器学习技术的鸡胴体质量自动分级方法[J]. 南京农业大学学报,2019,42(3):551-558.

Qi Chao, Xu Jiaqi, Liu Chao, et al. Automatic classification of chicken carcass weight based on machine vision and machine learning technology[J]. Journal of Nanjing Agricultural University, 2019, 42(3): 551-558. (in Chinese with English abstract)

[8] Huynh T T M, Tonthat L, Dao S V T. A vision-based method to estimate volume and mass of fruit/vegetable: case study of sweet potato[J]. International Journal of Food Properties, 2022, 25(1): 717-732.

[9] 周宝仓,吕金龙,肖铁忠,等. 机器视觉技术研究现状及发展趋势[J]. 河南科技,2021,40(31):18-20.

Zhou Baocang, Lü Jinlong, Xiao Tiezhong, et al. Research status and development trend of machine vision technology[J]. Henan Technology, 2021, 40(31): 18-20. (in Chinese with English abstract)

[10] 温艳兰,陈友鹏,王克强,等. 基于机器视觉的病虫害检测综述[EB/OL]. 中国粮油学报,(2022-01-11) [2022-08-11]. http://kns.cnki.net/kcms/detail/11.2864.TS.20220302.1806.014.html.

Wen Yanlan, Chen Youpeng, Wang Keqiang, et al. An overview of plant diseases and insect pests detection based on machine vision[EB/OL]. Journal of the Chinese Cereals and Oils Association, (2022-01-11) [2022-08-11]. (in Chinese with English abstract)

[11] 刘平,刘立鹏,王春颖,等. 基于机器视觉的田间小麦开花期判定方法[J]. 农业机械学报,2022,53(3):251-258.

Liu Ping, Liu Lipeng, Wang Chunying, et al. Determination method of field wheat flowering period based on machine vision[J]. Transactions of the Chinese Society for Agricultural Machinery, 2022, 53(3): 251-258. (in Chinese with English abstract)

[12] Li J B, Rao X Q, Wang F J, et al. Automatic detection of common surface defects on oranges using combined lighting transform and image ratio methods[J]. Postharvest Biology and Technology, 2013, 82: 59-69.

[13] 闫彬,杨福增,郭文川. 基于机器视觉技术检测裂纹玉米种子[J]. 农机化研究,2020,42(5):181-185, 235.

Yan Bin, Yang Fuzeng, Guo Wenchuan. Detection of maize seeds with cracks based on machine vision technology[J]. Journal of Agricultural Mechanization Research, 2020, 42(5): 181-185, 235. (in Chinese with English abstract)

[14] 姜沛宏,张玉华,陈东杰,等. 基于多源感知信息融合的牛肉新鲜度分级检测[J]. 食品科学,2016,37(6):161-165.

Jiang Peihong, Zhang Yuhua, Chen Dongjie, et al. Measurement of beef freshness grading based on multi-sensor information fusion technology[J]. Food Science, 2016, 37(6): 161-165. (in Chinese with English abstract)

[15] Chen W, Du N F, Dong Z Q, et al. Double yolk nondestructive identification system based on Raspberry Pi and computer vision[J]. Journal of Food Measurement and Characterization, 2022, 16(2): 1605-1612.

[16] 程子华. 基于机器视觉的残缺饼干分拣系统开发[J]. 现代食品科技,2022,38(2):313-318, 325.

Cheng Zihua. The development of incomplete biscuit sorting system based on machine vision[J]. Modern Food Science and Technology, 2022, 38(2): 313-318, 325. (in Chinese with English abstract)

[17] 郭峰,刘立峰,张奎彪,等. 家禽胴体影像分选技术研究新进展[J]. 肉类工业,2019(11):31-40.

Guo Feng, Liu Lifeng, Zhang Kuibiao, et al. New progress in research on image grading technology of poultry carcass[J]. Meat Industry, 2019(11): 31-40. (in Chinese with English abstract)

[18] 戚超. 基于深度相机和机器视觉技术的鸡胴体质量在线分级系统[D]. 南京:南京农业大学,2019.

Qi Chao. On-line Grading System of Chicken Carcass Quality Based on Deep Camera and Machine Vision Technology[D]. Nanjing: Nanjing Agricultural University, 2019. (in Chinese with English abstract)

[19] 陈坤杰,李航,于镇伟,等. 基于机器视觉的鸡胴体质量分级方法[J]. 农业机械学报,2017,48(6):290-295, 372.

Chen Kunjie, Li Hang, Yu Zhenwei, et al. Grading of chicken carcass weight based on machine vision[J]. Transactions of the Chinese Society for Agricultural Machinery, 2017, 48(6): 290-295, 372. (in Chinese with English abstract)

[20] 吴玉红. 基于机器视觉的鸡翅质检研究[D]. 泰安:山东农业大学,2016.

Wu Yuhong. Research on Chicken Wings Quality and Mass Detection Based on Machine Vision[D]. Taian: Shandong Agricultural University, 2016. (in Chinese with English abstract)

[21] 徐京京. 鸡翅质量检测与重量分级智能化装备的设计[D]. 泰安:山东农业大学,2016.

Xu Jingjing. Design of the Chicken Wings Quality Inspection and Weight Classification of Intelligent Equipment[D]. Taian: Shandong Agricultural University, 2016. (in Chinese with English abstract)

[22] Asmara R, Rahutomo F, Hasanah Q, et al. Chicken meat freshness identification using the histogram color feature[C]//IEEE. International Conference on Sustainable Information Engineering and Technology. Batu, Indonesia, 2017: 57-61.

[23] Carvalho L, Perez-Palacios T, Caballero D, et al. Computer vision techniques on magnetic resonance images for the non-destructive classification and quality prediction of chicken breasts affected by the White-Striping myopathy[J]. Journal of Food Engineering, 2021, 306: 110633.

[24] 杨凯. 鸡胴体表面污染物在线检测及处理设备控制系统的设计与开发[D]. 南京:南京农业大学,2015.

Yang Kai. Design and Development of Online Detection and Processing Equipment Control System for Contaminants on Chicken Carcass Surface[D]. Nanjing: Nanjing Agricultural University, 2015. (in Chinese with English abstract)

[25] 陈艳. 基于机器视觉的家禽机械手掏膛及可食用内脏分拣技术研究[D]. 武汉:华中农业大学,2018.

Chen Yan. Research on the Technology of Poultry Manipulator Eviscerating and Edible Viscera Sorting Based on Machine Vision[D]. Wuhan: Huazhong Agricultural University, 2018. (in Chinese with English abstract)

[26] 王树才,陶凯,李航. 基于机器视觉定位的家禽屠宰净膛系统设计与试验[J]. 农业机械学报,2018,49(1):335-343.

Wang Shucai, Tao Kai, Li Hang. Design and experiment of poultry eviscerator system based on machine vision positioning[J]. Transactions of the Chinese Society for Agricultural Machinery, 2018, 49(1): 335-343. (in Chinese with English abstract)

[27] Chen Y, Wang S C. Poultry carcass visceral contour recognition method using image processing[J]. Journal of Applied Poultry Research, 2018, 27(3): 316-324.

[28] Teimouri N, Omid M, Mollazade K, et al. On-line separation and sorting of chicken portions using a robust vision-based intelligent modelling approach[J]. Biosystems Engineering, 2018, 167: 8-20.

[29] 赵正东,王虎虎,徐幸莲. 基于机器视觉的肉鸡胴体淤血检测技术初探[J]. 农业工程学报,2022,38(16):330-338.

Zhao Zhengdong, Wang Huhu, Xu Xinglian. Broiler carcass congestion detection technology based on machine vision[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2022, 38(16): 330-338. (in Chinese with English abstract)

[30] 高举,李永祥,徐雪萌. 基于机器视觉编织袋缝合缺陷识别与检测[J]. 包装与食品机械,2022,40(3):51-56.

Gao Ju, Li Yongxiang, Xu Xuemeng. Recognition and detection of stitching defects of woven bags based on machine vision[J]. Packaging and Food Machinery, 2022, 40(3): 51-56. (in Chinese with English abstract)

[31] 王坤. 基于机器视觉的花边针自动分拣方法研究[D]. 上海:东华大学,2022.

Wang Kun. Investigation on Automatic Sorting Method of Curved Edge Needle Based on Machine Vision[D]. Shanghai: Donghua University, 2022. (in Chinese with English abstract)

[32] 位冲冲. 基于卷积神经网络的工件识别技术研究[D]. 哈尔滨:哈尔滨商业大学,2022.

Wei Chongchong. Research on Workpiece Recognition Technology Based on Convolutional Neural Networks[D]. Harbin: Harbin University of Commerce, 2022. (in Chinese with English abstract)

[33] 刘德志,曾勇,袁雨鑫,等. 基于机器视觉的火车轮对轴端标记自动识别算法研究[J]. 现代制造工程,2022(7):113-120.

Liu Dezhi, Zeng Yong, Yuan Yuxin, et al. Research on automatic recognition algorithm of axle end mark of train wheelset based on machine vision[J]. Modern Manufacturing Engineering, 2022(7): 113-120. (in Chinese with English abstract)

[34] 张凯,李振华,郁豹,等. 基于机器视觉的花生米品质分选方法[J]. 食品科技,2019,44(5):297-302.

Zhang Kai, Li Zhenhua, Yu Bao, et al. Peanut quality sorting method based on machine vision[J]. Food Science and Technology, 2019, 44(5): 297-302. (in Chinese with English abstract)

[35] 戴建民,曹铸,孔令华,等. 基于多特征模糊识别的烟叶品质分级算法[J]. 江苏农业科学,2020,48(20):241-247.

Dai Jianmin, Cao Zhu, Kong Linghua, et al. Tobacco quality grading algorithm based on multi-feature fuzzy recognition[J]. Jiangsu Agricultural Science, 2020, 48(20): 241-247. (in Chinese with English abstract)

[36] 王慧慧,孙永海,张婷婷,等. 鲜食玉米果穗外观品质分级的计算机视觉方法[J]. 农业机械学报,2010,41(8):156-159,165.

Wang Huihui, Sun Yonghai, Zhang Tingting, et al. Appearance quality grading for fresh corn ear using computer vision[J]. Transactions of the Chinese Society for Agricultural Machinery, 2010, 41(8): 156-159, 165. (in Chinese with English abstract)

[37] 朱博阳,吴睿龙,于曦. 人工智能助力当代化学研究[J]. 化学学报,2020,78(12):1366-1382.

Zhu Boyang, Wu Ruilong, Yu Xi. Artificial intelligence for contemporary chemistry research[J]. Acta Chimica Sinica, 2020, 78(12): 1366-1382. (in Chinese with English abstract)

[38] 王敏,周树道,杨忠,等. 深度学习技术浅述[J]. 自动化技术与应用,2019,38(5):51-57.

Wang Min, Zhou Shudao, Yang Zhong, et al. Simple analysis of deep learning technology[J]. Techniques of Automation and Applications, 2019, 38(5): 51-57. (in Chinese with English abstract)

[39] 刘广昊. 基于数字图像和高光谱的柑橘叶片品种鉴别方法[D]. 重庆:西南大学,2020.

Liu Guanghao. Identification Methods of Citrus Leaf Varieties Based on Digital Image and Hyperspectral[D]. Chongqing: Southwest University, 2020. (in Chinese with English abstract)

[40] 梁培生,孙辉,张国政,等. 基于主成分分析和BP神经网络的蚕蛹分类方法[J]. 江苏农业科学,2016,44(10):428-430, 582.

Liang Peisheng, Sun Hui, Zhang Guozheng, et al. A classification method for silkworm pupae based on principal component analysis and BP neural network[J]. Jiangsu Agricultural Science, 2016, 44(10): 428-430, 582. (in Chinese with English abstract)

[41] 刘雅琪. 基于机器视觉的鱼头鱼尾定位技术的研究[D]. 武汉:武汉轻工大学,2021.

Liu Yaqi. Research on Positioning Technology of Fish Head and Tail Based on Machine Vision[D]. Wuhan: Wuhan Polytechnic University, 2021. (in Chinese with English abstract)

[42] 史甜甜. 基于深度学习的织物疵点检测研究[D]. 杭州:浙江理工大学,2019.

Shi Tiantian. Research on Fabric Defect Detection Based on Deep Learning[D]. Hangzhou: Zhejiang Sci-Tech University, 2019. (in Chinese with English abstract)

[43] 高晗,田育龙,许封元,等. 深度学习模型压缩与加速综述[J]. 软件学报,2021,32(1):68-92.

Gao Han, Tian Yulong, Xu Fengyuan, et al. Survey of deep learning model compression and acceleration[J]. Journal of Software, 2021, 32(1): 68-92. (in Chinese with English abstract)

[44] 鲍春. 基于FPGA的图像处理深度学习模型的压缩与加速[D]. 北京:北京工商大学,2020.

Bao Chun. Deep Learning Model Compression and Acceleration for Image Processing Based on FPGA[D]. Beijing: Beijing Technology and Business University, 2020. (in Chinese with English abstract)

[45] Han R, Liu C H, Li S, et al. Accelerating deep learning systems via critical set identification and model compression[J]. IEEE Transactions on Computers, 2020, 69(7): 1059-1070.

Rapid detection technology for broken-winged broiler carcass based on machine vision

Wu Jiangchun, Wang Huhu※, Xu Xinglian

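(Key Laboratory of Meat Processing and Quality Control, Ministry of Education, Nanjing Agricultural University, Nanjing 210095, China)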

Broken wings are among the most common defects of chicken carcasses in broiler slaughter plants. Manual inspection is labor-intensive, inefficient, and inaccurate, and cannot meet the needs of large-scale production, so a rapid and accurate method for detecting broken wings is in high demand. This study aimed to detect broken-winged chicken carcasses rapidly during broiler slaughter in order to improve the production efficiency of the slaughter line. A total of 1 053 broiler carcass images were collected from a slaughter line with a machine vision system, and a rapid identification method for broken-wing defects was developed. Specifically, front views of the carcasses were captured by the machine vision system. Preprocessing by the weighted average method (graying), two-dimensional median filtering (denoising), and the iterative method (threshold segmentation) then produced carcass images without background. The code was written in MATLAB. Next, 11 features were calculated: the distances from the left and right ends of the carcass to the centroid and their difference (d1, d2, and dc), the heights of the lowest points of the two wings and their difference (h1, h2, and hc), the areas of the two wings and their ratio (S1, S2, and Sr), the rectangularity (R), and the width-length ratio (rate). Principal component analysis reduced the features to eight principal components. The features and the principal components were separately fed into linear discriminant analysis (LDA), quadratic discriminant analysis (QDA), random forest (RF), support vector machine (SVM), and BP neural network models, while the input of the VGG16 model was the RGB image of the carcass with the background removed. Finally, the F1-scores and total accuracies of the models were compared. Among the shallow learning models, the RF model with features as input achieved the highest recall, 91.13%. The SVM model with features as input achieved the highest precision and F1-score, 96.28% and 92.58%, respectively. The highest total accuracy among the shallow models, 91.78%, was achieved by both the QDA and SVM models with features as input. The F1-score and total accuracy of the VGG16 model were the highest among all model combinations, 94.35% and 93.28%, respectively. The shortest prediction time was obtained with the SVM model using principal components as input, which classified 353 sample images in 0.000 9 s, an average of 3.92×10⁵ images per second. The longest prediction time was observed with the VGG16 model, which took 24.46 s to classify 253 sample images, an average of 10.34 images per second. In conclusion, the VGG16 model gave the best classification of broken wings in chicken carcasses and can serve as a technical reference for their rapid identification.

machine vision; machine learning; broiler carcass; detection technology of broken wing

doi: 10.11975/j.issn.1002-6819.2022.22.027

CLC number: TS251.7

Document code: A

Article ID: 1002-6819(2022)-22-0253-09

吴江春,王虎虎,徐幸莲. 基于机器视觉的鸡胴体断翅快速检测技术[J]. 农业工程学报,2022,38(22):253-261.doi:10.11975/j.issn.1002-6819.2022.22.027 http://www.tcsae.org

Wu Jiangchun, Wang Huhu, Xu Xinglian. Rapid detection technology for broken-winged broiler carcass based on machine vision[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2022, 38(22): 253-261. (in Chinese with English abstract) doi:10.11975/j.issn.1002-6819.2022.22.027 http://www.tcsae.org

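Received: 2022-08-26

Revised: 2022-11-05

Funding: China Agriculture Research System (CARS-41)

Wu Jiangchun, research interest: meat processing and quality and safety control. Email: 2021808112@stu.njau.edu.cn

Wang Huhu, Professor, PhD, research interest: meat processing and quality and safety control. Email: huuwang@njau.edu.cn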