M3SC: A Generic Dataset for Mixed Multi-Modal (MMM) Sensing and Communication Integration

Xiang Cheng, Ziwei Huang, Lu Bai, Haotian Zhang, Mingran Sun, Boxun Liu, Sijiang Li, Jianan Zhang, Minson Lee

1 State Key Laboratory of Advanced Optical Communication Systems and Networks, School of Electronics, Peking University, Beijing 100871, China

2 Joint SDU-NTU Centre for Artificial Intelligence Research (C-FAIR), Shandong University, Jinan 250100, China

3 Ever-Florescence Technology, Nanjing 210000, China

*The corresponding author, email: xiangcheng@pku.edu.cn

Abstract: The sixth generation (6G) mobile communication system is witnessing a new paradigm shift, i.e., the integrated sensing-communication system. A comprehensive dataset is a prerequisite for 6G integrated sensing-communication research. This paper develops a novel simulation dataset, named M3SC, for mixed multi-modal (MMM) sensing-communication integration, and further gives the generation framework of the M3SC dataset. To obtain multi-modal sensory data in physical space and communication data in electromagnetic space, we utilize AirSim and WaveFarer to collect multi-modal sensory data and exploit Wireless InSite to collect communication data. Furthermore, the in-depth integration and precise alignment of AirSim, WaveFarer, and Wireless InSite are achieved. The M3SC dataset covers various weather conditions, multiple frequency bands, and different times of the day. Currently, the M3SC dataset contains 1500 snapshots, including 80 RGB images, 160 depth maps, 80 LiDAR point clouds, 256 sets of mmWave waveforms with 8 radar point clouds, and 72 channel impulse response (CIR) matrices per snapshot, thus totaling 120,000 RGB images, 240,000 depth maps, 120,000 LiDAR point clouds, 384,000 sets of mmWave waveforms with 12,000 radar point clouds, and 108,000 CIR matrices. The data processing result presents the multi-modal sensory information and the statistical properties of the communication channel. Finally, the MMM sensing-communication applications that can be supported by the M3SC dataset are discussed.

Keywords: multi-modal sensing; ray-tracing; sensing-communication integration; simulation dataset

I. INTRODUCTION

Nowadays, the standardization of fifth generation (5G) communications has been completed and 5G networks were commercially launched in 2020 [1]. As the next generation, sixth generation (6G) has attracted tremendous research interest in academia and industry [2–5]. Aiming at improving spectrum efficiency and reducing hardware cost, the 6G communication system is witnessing a major paradigm shift, i.e., in-depth sensing and communication integration [6–8]. Also, sensing and communication integration facilitates various 6G applications, e.g., human counting and identification, autonomous driving vehicles, and area map reconstruction, where widely deployed multi-modal sensors and communication equipment play an important role [9]. Recently, inspired by human synesthesia, an involuntary human neuropsychological trait in which the stimulation of one human sense evokes another human sense, the authors in [6] refer to these multi-modal sensors and communication equipment as “machine senses” and further introduce the concept of human synesthesia to multi-modal sensing and communication integration. In the context of “machine senses”, multi-modal sensing-communication integration is referred to as Synesthesia of Machines (SoM). Multi-modal sensing-communication integration can be achieved in a significantly intelligent manner via SoM processing, where artificial neural networks (ANNs) serve as the fundamental tool. To properly support the system design of multi-modal sensing-communication integration, i.e., SoM, a comprehensive dataset containing aligned sensing and communication environmental information is essential. Specifically, a comprehensive dataset can provide propagation channel information in electromagnetic space and spatial features in physical space, and is also the lifeblood of ANNs' learning ability and the key to the precision of ANNs' output. Meanwhile, such a dataset can support the development of integrated sensing-communication algorithms, and further evaluate and compare their performance in a fair manner.

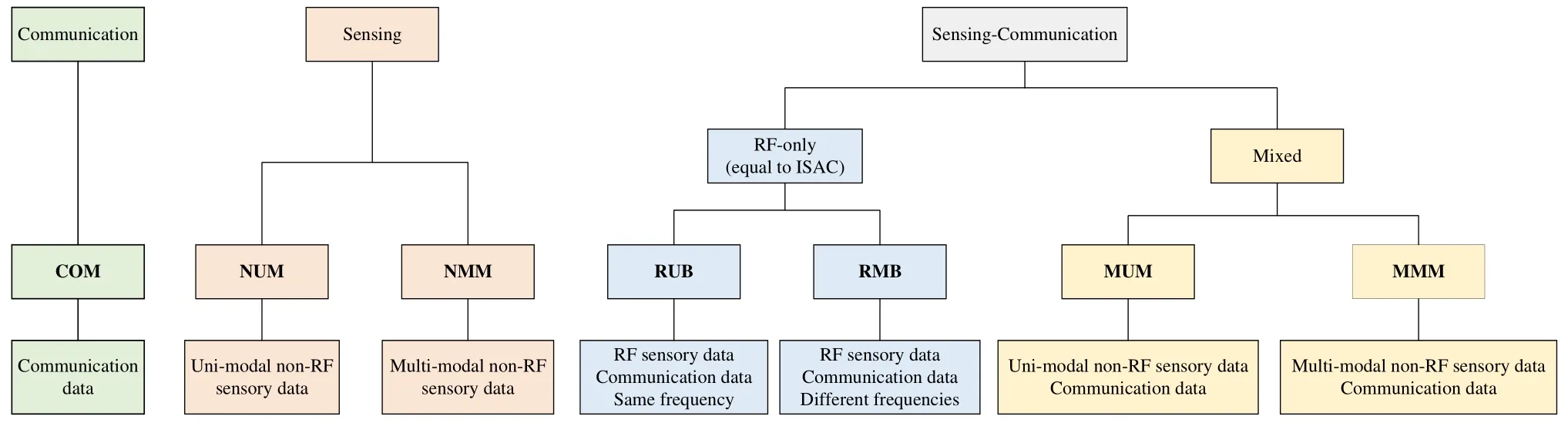

Considering the importance of datasets for system design, numerous datasets have been developed. They can be classified according to the application, the operating frequency, and whether the applicable system uses uni-modal or multi-modal sensor equipment, as shown in Figure 1. Datasets for communication systems, named communications only (COM), solely include wireless channel data in electromagnetic space. For sensing systems, we divide datasets into non-radio frequency (non-RF) uni-modal (NUM) and non-RF multi-modal (NMM) datasets. The NUM and NMM datasets include uni-modal and multi-modal non-RF sensory data collected in physical space, respectively. To improve spectrum efficiency, reduce hardware cost, and facilitate more potential applications, sensing and communications are expected to be deeply integrated [9]. For integrated sensing-communication systems, we categorize datasets as the RF-only dataset and the mixed dataset. The former can support the design of sensing and communication systems operating in RF, i.e., it corresponds to integrated sensing and communications (ISAC), and we further divide it into RF-only uni-band (RUB) and RF-only multi-band (RMB) datasets. Specifically, the RUB dataset contains RF sensory data and communication data at the same frequency, which can support research on dual-functional radar and communications (DFRC). Different from the RUB dataset, the RMB dataset contains RF sensory data and communication data at different frequencies. Finally, the mixed dataset includes sensory data collected in non-RF and communication data collected in RF. Furthermore, we divide the mixed dataset into mixed uni-modal (MUM) and mixed multi-modal (MMM) datasets depending on whether the dataset contains uni-modal or multi-modal non-RF sensory data.

Figure 1. Classification of existing datasets.
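The classification above can be expressed as a small decision rule. The following is a minimal sketch, not part of the M3SC tooling: the `DatasetTraits` fields and the `classify` function are hypothetical names introduced here for illustration, and the trait values assigned to the example datasets are read off the descriptions in this section.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DatasetTraits:
    """Hypothetical summary of what a dataset contains."""
    has_comm: bool           # wireless channel data in electromagnetic space
    non_rf_modalities: int   # number of non-RF sensor types (camera, LiDAR, ...)
    has_rf_sensing: bool     # RF sensory data, e.g., radar
    same_band: bool = True   # RF sensing and communications share one frequency


def classify(t: DatasetTraits) -> str:
    """Map dataset traits to the seven categories of Figure 1."""
    if t.has_comm and t.non_rf_modalities > 0:
        # Mixed datasets: non-RF sensory data plus RF communication data.
        return "MUM" if t.non_rf_modalities == 1 else "MMM"
    if t.has_comm and t.has_rf_sensing:
        # RF-only datasets: split by whether sensing and comms share a band.
        return "RUB" if t.same_band else "RMB"
    if t.has_comm:
        return "COM"
    if t.non_rf_modalities == 1:
        return "NUM"
    if t.non_rf_modalities > 1:
        return "NMM"
    raise ValueError("dataset contains neither sensory nor communication data")


# Example traits, taken from the dataset descriptions in this section.
kitti = DatasetTraits(has_comm=False, non_rf_modalities=3, has_rf_sensing=False)
radar_com = DatasetTraits(has_comm=True, non_rf_modalities=0,
                          has_rf_sensing=True, same_band=False)
viwi = DatasetTraits(has_comm=True, non_rf_modalities=3, has_rf_sensing=False)

print(classify(kitti), classify(radar_com), classify(viwi))
```

The mixed categories are tested first so that a dataset holding both non-RF sensors and radar alongside channel data is still labeled by its non-RF modality count, which matches how the mixed dataset is defined above.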

The authors in [10] developed a measurement dataset, named KITTI, for NMM sensing systems. The KITTI dataset consists of calibrated and synchronized RGB images, depth maps, and light detection and ranging (LiDAR) point clouds. To further include channel information and support wireless communication applications, an RUB measurement dataset, named WALDO, was developed in [11] for sensing-communication applications, where sensing and communications were conducted at the same millimeter wave (mmWave) frequency in an indoor scenario. The authors in [12] developed an MMM measurement dataset, named DeepSense 6G, for integrated sensing-communication systems. The DeepSense 6G dataset [12] captured multi-modal sensory data, such as RGB images and LiDAR point clouds, and wireless channel data in sub-6 GHz and mmWave frequency bands. However, the DeepSense 6G dataset [12] ignored massive multiple-input multiple-output (MIMO) channel information and snowy weather, and thus cannot support the corresponding integrated sensing-communication applications. In addition, although the KITTI, WALDO, and DeepSense 6G measurement datasets in [10]–[12] can support the validation of fundamental algorithms, it is difficult to flexibly customize desired scenarios owing to labor and cost concerns.

Due to the limitations of measurement datasets, numerous simulation datasets [13–20] have been developed as supplements. Based upon efficient software with high accuracy, simulation datasets can achieve an excellent trade-off between complexity and fidelity. By flexibly adjusting key parameters, a simulation dataset can further cover diverse application scenarios. In [13], the authors developed a COM dataset, named DeepMIMO. The DeepMIMO dataset [13] intends to promote machine learning (ML) applications related to mmWave and massive MIMO based on ray-tracing technologies. The authors in [14] developed an NUM dataset, named LiDARsim, which includes simulated LiDAR point clouds and covers various scenarios. However, the LiDARsim dataset [14] has low information redundancy due to the uni-modal sensor, and thus cannot support applications requiring high sensing robustness. To address this deficiency, the authors in [15] developed an NMM dataset, named OPV2V. The OPV2V dataset [15] covers 70 vehicular scenarios and consists of RGB images, depth maps, and LiDAR point clouds. To further capture the impact of weather conditions and times of the day, Sun et al. [16] developed a different NMM dataset, named SHIFT. The SHIFT dataset [16] has synthetic RGB images, depth maps, and LiDAR point clouds under diverse vehicular scenarios. Nevertheless, the LiDARsim, OPV2V, and SHIFT datasets in [14–16] for sensing systems cannot support integrated sensing-communication applications. To overcome this limitation, using the Wireless InSite simulation platform [21], Ali et al. [17] developed an RMB dataset, named Radar-COM in this paper, where the communication system operated in the 73 GHz band while the radar operated in the 76 GHz band. By employing the non-RF LiDAR simulator in [20] and the Wireless InSite simulation platform, Klautau et al. [18] developed an MUM dataset, named LiDAR-COM in this paper, consisting of LiDAR point clouds and ray-tracing-based wireless channel data. The LiDAR-COM dataset [18] covered various scenarios under mmWave and massive MIMO communications. Although the Radar-COM and LiDAR-COM datasets in [17] and [18] can promote the development of integrated sensing-communication algorithms, their low information redundancy and sensing robustness result in reduced accuracy and applicability. To overcome this drawback, the authors in [19] proposed an MMM dataset, named Vision-Wireless (ViWi). The ViWi dataset consists of ray-tracing-based wireless channel data collected in Wireless InSite and multi-modal sensory data, such as RGB images, depth maps, and LiDAR point clouds. Furthermore, the ray-tracing-based channel data covers sub-6 GHz and mmWave frequency bands and massive MIMO. However, the ViWi dataset [19] leaves out the simulation of different weather conditions and times of the day, so it cannot be applied in research on integrated sensing-communication related to weather and time of the day.

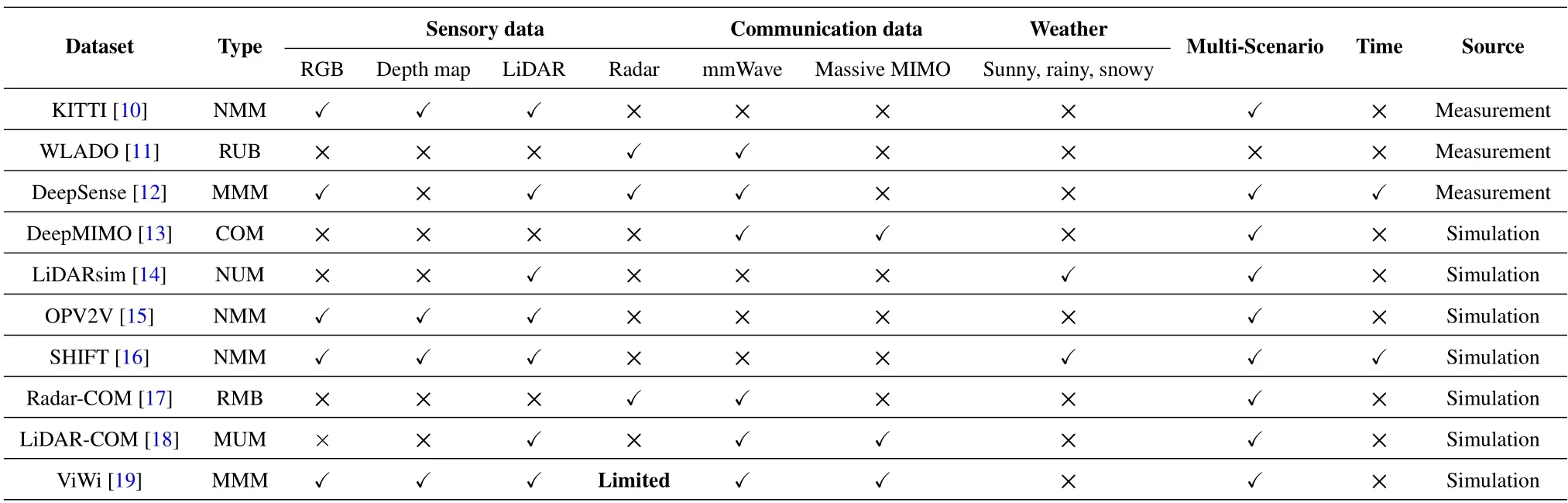

In summary, since sensing-communication integration facilitates diverse applications, a dataset solely for sensing or communication systems has low applicability. Furthermore, the impact of harsh weather conditions on communication and sensing systems is a crucial research topic, as rainy and snowy days can degrade sensing robustness and communication reliability. Consequently, a dataset lacking various weather conditions has limited applications. Meanwhile, because times of the day and frequency bands each affect sensing and communication performance, a dataset without different times of the day and various frequency bands is incomplete. Table 1 lists some typical existing datasets. Currently, a comprehensive MMM simulation dataset, which covers various weather conditions, frequency bands, and times of the day, is still lacking.

Table 1. Typical measurement and simulation datasets.

In this paper, we propose M3SC, a framework for developing simulation MMM datasets for multi-modal sensing-communication integration, i.e., SoM. Various weather conditions, various frequency bands, and different times of the day are further taken into account. Currently, there is no software tailored for constructing an MMM dataset for integrated sensing-communication research. However, a comprehensive MMM dataset is a prerequisite for 6G integrated sensing-communication research. To fill this gap, we utilize AirSim [22] and WaveFarer [23] to extract spatial features in physical space and Wireless InSite [21] to capture channel information in electromagnetic space. Note that this is not a simple combination of AirSim, WaveFarer, and Wireless InSite. There are two major challenges: one is the in-depth integration of sensing and communications, and the other is the precise alignment of physical space and electromagnetic space. For the former challenge, to achieve the in-depth integration of sensing and communications, AirSim and WaveFarer need to meet communication requirements, such as collecting space-air-ground-sea environmental information. Nonetheless, AirSim and WaveFarer cannot naturally meet this requirement. Meanwhile, Wireless InSite needs to meet sensing requirements, such as the accurate modeling of the movement of each object and the generation of rainy and snowy environments. Furthermore, the latter challenge requires that physical space in AirSim and WaveFarer and electromagnetic space in Wireless InSite are precisely aligned. For example, the size of the scenario, the position of each object, and the weather condition should be the same in physical space and electromagnetic space. The developed M3SC dataset can be accessed from http://pcni.pku.edu.cn/dataset_1.html. Also, the wireless communication channel data in the M3SC dataset is published through the exclusive distributor of Remcom Inc. at https://www.renkangtech.com/productinfo/1642251.html?templateId=232054 and https://www.qi-well.com/portal_c1_cnt.php?owner_num=c1_26875&button_num=c1&folder_id=22620. The major contributions and novelties of this paper are outlined as follows.

1. For multi-modal sensing-communication integration, i.e., SoM, an MMM dataset, named M3SC, is developed and the corresponding generation framework is proposed for the first time. The M3SC dataset aligns physical space and electromagnetic space, and consists of high-fidelity multi-modal sensory data and precise ray-tracing-based wireless channel data. Furthermore, different times of the day, various frequency bands, and various weather conditions are considered.

2. To achieve the in-depth integration of sensing and communications, in the physical space, we set the three-dimensional (3D) coordinate of each vehicle frame by frame to support the continuous movement of multiple vehicles. In the electromagnetic space, rain and snow models are generated to simulate rainy and snowy weather. Also, a large number of internal files are parsed to generate the dynamic scenarios in batches.

3. To precisely align physical and electromagnetic space, we check the size and movement trajectory of each object so that they are the same in physical and electromagnetic space. Furthermore, the flexible setting of weather parameters, the employment of the LISA algorithm [24], and the dynamic adjustment of rain and snow models lead to consistent weather conditions in physical and electromagnetic space.

4. At present, the M3SC dataset includes 1500 snapshots, containing 80 RGB images with 1920×1080 resolution, 160 depth maps with 1920×1080 resolution, 80 LiDAR point clouds, 256 sets of mmWave waveforms with 8 radar point clouds, and 72 channel impulse response (CIR) matrices per snapshot, totaling 120,000 RGB images, 240,000 depth maps, 120,000 LiDAR point clouds, 384,000 sets of mmWave waveforms with 12,000 radar point clouds, and 108,000 CIR matrices. The processing result of the M3SC dataset reveals the effects of weather, time of the day, and communication frequency on sensory and communication data.

The remainder of this paper is organized as follows. Section II introduces a typical example in the M3SC dataset, a vehicular urban crossroad scenario, to present the generation framework of the M3SC dataset. Section III illustrates the processing results of sensory and communication data and analyzes them. Section IV discusses the potential MMM sensing-communication applications that can be supported by the M3SC dataset. Finally, Section V draws conclusions and outlines future work.

II. GENERATION FRAMEWORK OF THE M3SC DATASET: AN EXAMPLE

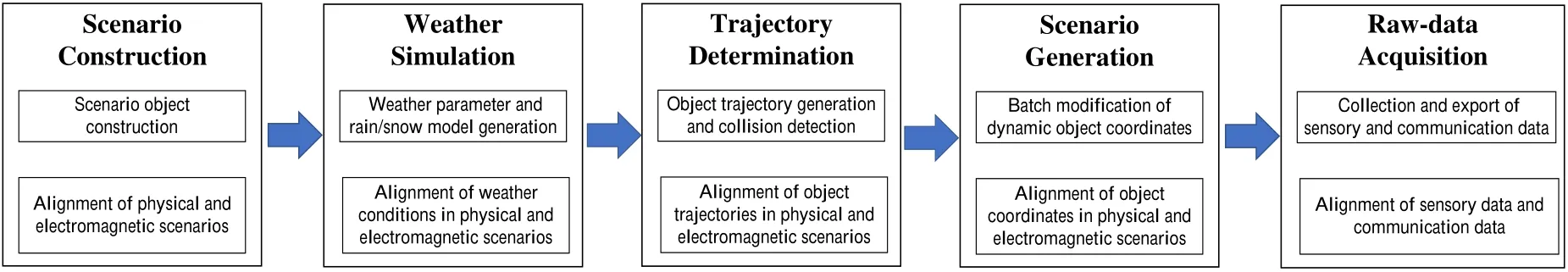

In this section, a typical scenario in the M3SC dataset, i.e., a complex high-mobility vehicular urban crossroad scenario, is introduced in detail. Figure 2 gives the generation framework of the M3SC dataset, which contains five steps that are discussed below.

Figure 2. Generation framework of the M3SC dataset.

2.1 Construction of Simulation Scenarios at the Initial Time

To develop the M3SC dataset, the first step is to construct the simulation scenario with aligned physical space and electromagnetic space at the initial time. For physical space in AirSim, we utilize a vehicular urban crossroad scenario produced by PurePolygons, named Modular Building Set. The scenario resolution is 2048×2048, which enables the generation of high-fidelity sensory data. Subsequently, the AirSim simulation platform, a plug-in built on the efficient 3D game engine Unreal Engine, is leveraged to add vehicles and roadside devices into the vehicular urban crossroad scenario and collect the multi-modal sensory data. The vehicular urban crossroad scenario contains 11 base stations (BSs), 12 vehicles (9 cars and 3 buses), 6 pedestrians, many trees, and various buildings. Each BS and vehicle is equipped with multi-modal sensors, including a camera, two types of depth cameras, and a LiDAR device. Furthermore, it is noteworthy that the parameters of the sensing equipment can be properly adjusted, including the field-of-view (FoV), the resolution, the range of the LiDAR, and the number of LiDAR channels. This considerably increases the scalability of the sensory data.

For physical space in WaveFarer, we utilize 3D models aligned with AirSim, including buildings, trees, pedestrians, and vehicles, ensuring consistency between the environment features captured by the radars in WaveFarer and by the sensors in AirSim. In the vehicular urban crossroad scenario, we introduce ten mmWave radars, including three roadside radars, three front-view onboard radars, and one vehicle with front-, back-, left-, and right-view onboard radars. Each radar is equipped with 8 transmitter (Tx) antennas and 4 receiver (Rx) antennas, totaling 32 virtual sub-channels. The radar utilizes a linear frequency modulated continuous wave (FMCW) operating in the frequency range of 77 GHz to 81 GHz. The azimuth information is obtained with the help of digital beamforming (DBF), allowing for the acquisition of radar point clouds that encompass azimuth, distance, and Doppler velocity. The maximum detection range of a radar device is 74.9 m with a range resolution of 0.1499 m. The Doppler velocity range is ±47.42 m/s with a velocity resolution of 0.939 m/s. Depending on the requirements of different research tasks, we can flexibly adjust the aforementioned parameters and employ user-defined methods to process radar data to obtain environment features.
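The radar figures quoted above can be cross-checked against the standard FMCW relations, i.e., the number of virtual sub-channels equals the product of Tx and Rx antennas, and the range resolution equals c/(2B) for a sweep bandwidth B. The following is a minimal sketch under stated assumptions: the text does not give the per-chirp sweep bandwidth or the numbers of range and Doppler bins, so these are inferred here from the quoted resolutions and should be read as estimates rather than dataset settings.

```python
C = 299_792_458.0  # speed of light in m/s


def virtual_channels(n_tx: int, n_rx: int) -> int:
    """Number of virtual sub-channels of a MIMO radar array."""
    return n_tx * n_rx


def sweep_bandwidth_hz(range_resolution_m: float) -> float:
    """Invert the FMCW relation: range resolution = c / (2 * B)."""
    return C / (2.0 * range_resolution_m)


def num_bins(span: float, resolution: float) -> int:
    """Approximate number of resolution cells that cover a span."""
    return round(span / resolution)


n_virtual = virtual_channels(8, 4)                # 8 Tx x 4 Rx
implied_bandwidth = sweep_bandwidth_hz(0.1499)    # inferred, not stated in the text
range_bins = num_bins(74.9, 0.1499)               # inferred estimate
doppler_bins = num_bins(2 * 47.42, 0.939)         # +/- 47.42 m/s spans 94.84 m/s

print(n_virtual, f"{implied_bandwidth / 1e9:.2f} GHz", range_bins, doppler_bins)
```

Under these relations, the 0.1499 m range resolution corresponds to a sweep of roughly 1 GHz, which fits inside the 77 GHz to 81 GHz operating range.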

For electromagnetic space, we construct a corresponding vehicular urban crossroad communication environment, which precisely aligns with physical space. Specifically, by omitting the fine physical detail, the vehicular urban crossroad scenario constructed in the AirSim simulation platform can be imported into the Wireless InSite simulation platform. The color of each side of the buildings is modified and the crosswalk is added. Then, accurate wireless communication channel data can be obtained via ray-tracing technology. Each BS and vehicle is equipped with a Tx and an Rx. Note that vehicle-to-vehicle (V2V) links and vehicle-to-infrastructure (V2I) links are considered. The numbers of antenna elements on each BS and each vehicle are 128 and 32, respectively. The frequency bands cover sub-6 GHz, i.e., a carrier frequency of 5.9 GHz with 20 MHz communication bandwidth, and mmWave, i.e., a carrier frequency of 28 GHz with 2 GHz communication bandwidth. As mentioned in [25–27], it is significantly difficult to carry out massive MIMO mmWave channel measurement campaigns under complex high-mobility vehicular communication scenarios. Therefore, the communication data in the M3SC dataset can fill this gap. By adjusting the channel-related parameters, more diverse propagation environments with different numbers of transceiver antenna elements and different frequencies can be constructed, leading to high scalability of the communication data.
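To make the two band configurations concrete, the following minimal sketch collects the stated carrier frequencies, bandwidths, and antenna counts and derives two textbook quantities from them: the wavelength c/f and the delay resolution 1/B. The `BandConfig` class and the `antenna_counts` helper are hypothetical names introduced for illustration; they are not Wireless InSite settings.

```python
from dataclasses import dataclass

C = 299_792_458.0  # speed of light in m/s


@dataclass(frozen=True)
class BandConfig:
    """Hypothetical container for one communication band."""
    name: str
    carrier_hz: float
    bandwidth_hz: float

    @property
    def wavelength_m(self) -> float:
        return C / self.carrier_hz

    @property
    def delay_resolution_s(self) -> float:
        # Paths closer than about 1/B in delay cannot be separated.
        return 1.0 / self.bandwidth_hz


SUB6 = BandConfig("sub-6 GHz", carrier_hz=5.9e9, bandwidth_hz=20e6)
MMWAVE = BandConfig("mmWave", carrier_hz=28e9, bandwidth_hz=2e9)

BS_ANTENNAS = 128
VEHICLE_ANTENNAS = 32


def antenna_counts(link: str) -> tuple:
    """Antenna element counts at the two ends of a link (order is illustrative)."""
    if link == "V2V":
        return (VEHICLE_ANTENNAS, VEHICLE_ANTENNAS)
    if link == "V2I":
        return (VEHICLE_ANTENNAS, BS_ANTENNAS)
    raise ValueError(f"unknown link type: {link}")


for band in (SUB6, MMWAVE):
    print(band.name,
          f"wavelength = {band.wavelength_m * 1e3:.1f} mm",
          f"delay resolution = {band.delay_resolution_s * 1e9:.1f} ns")
```

The hundredfold difference in bandwidth means that the mmWave CIRs can resolve multipath components in delay about one hundred times more finely than the sub-6 GHz CIRs.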

The physical space in AirSim and WaveFarer and the electromagnetic space in Wireless InSite are precisely aligned after being constructed. To be specific, the dimensions and coordinates of each object are compared and aligned in physical and electromagnetic space at the initial time instant to guarantee aligned sensory and communication data.
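The comparison of object dimensions and coordinates across the two spaces can be sketched as a simple consistency check. This is an illustrative sketch only: the dictionary layout, the object names, and the 1 mm tolerance are assumptions made here, and the text does not describe how the comparison is implemented in practice.

```python
def misaligned_objects(physical: dict, electromagnetic: dict, tol_m: float = 1e-3) -> list:
    """Return names of objects whose position or size differs between the two spaces.

    Each argument maps an object name to {"position": (x, y, z), "size": (l, w, h)}
    in metres. Objects present in only one space are also reported.
    """
    mismatched = []
    for name in sorted(set(physical) | set(electromagnetic)):
        a = physical.get(name)
        b = electromagnetic.get(name)
        if a is None or b is None:
            mismatched.append(name)
            continue
        values_a = tuple(a["position"]) + tuple(a["size"])
        values_b = tuple(b["position"]) + tuple(b["size"])
        if any(abs(x - y) > tol_m for x, y in zip(values_a, values_b)):
            mismatched.append(name)
    return mismatched


# Hypothetical objects at the initial time instant.
physical_space = {
    "Car1": {"position": (10.0, 5.0, 0.0), "size": (4.5, 1.8, 1.5)},
    "Bus1": {"position": (30.0, -2.0, 0.0), "size": (12.0, 2.5, 3.2)},
}
em_space = {
    "Car1": {"position": (10.0, 5.0, 0.0), "size": (4.5, 1.8, 1.5)},
    "Bus1": {"position": (30.2, -2.0, 0.0), "size": (12.0, 2.5, 3.2)},
}

print(misaligned_objects(physical_space, em_space))
```

An empty result would indicate that every object occupies the same place with the same dimensions in both spaces, within the chosen tolerance.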

2.2 Weather Simulation

The second step of constructing the M3SC dataset is to carry out the weather simulation. According to [28, 29], weather has a significant effect on sensory data and communication data. The M3SC dataset includes three typical weather conditions, i.e., sunny, rainy, and snowy days. The weather condition simulation in WaveFarer is currently underway and will be completed in the near future. Figure 3 shows the scenarios on sunny, rainy, and snowy days in AirSim and Wireless InSite. For physical space, we use Python to set the parameters for the amount of rain and snow in AirSim. Specifically, the parameters for mimicking rainy and snowy days include Rain, Roadwetness, Snow, and RoadSnow, which represent the amount of rain, the degree of water accumulation on the ground, the amount of snow, and the degree of snow accumulation on the ground, respectively. Each of these parameters takes a value in [0, 1]. In the simulation, on the rainy day, the Rain and Roadwetness parameters are set to 0.9 and 1, respectively. Different from the rainy day, the Snow and RoadSnow parameters are set to 0.5 and 1, respectively, on the snowy day. However, due to the lack of accurate modeling of object properties in AirSim, such as material reflectivity, weather conditions affect only camera sensors and not LiDAR sensors. Such a limitation can also be found in other simulation platforms, such as CARLA [30], LGSVL [31], and DeepGTAV [32]. To overcome this limitation of AirSim, we employ a LiDAR point weather augmentation algorithm, named LISA [24], to augment points in existing sunny scenarios. The LISA algorithm is a physics-based, hybrid Monte Carlo LiDAR scatterer simulator for adverse weather conditions, such as rainy and snowy days. To leverage the LISA algorithm, we properly set the reflectivity of objects and the LiDAR attributes. Furthermore, the parameters controlling rain and snow in the LISA algorithm are the rain rate and the snow rate, which are set to 50 mm/hr and 10 mm/hr, respectively, in the simulation according to [24]. As a consequence, LiDAR point clouds can include the effects of rain and snow in a low-complexity manner. Furthermore, the augmented data has been proven to be effective when utilized to train neural networks, which supports the accuracy of the weather effects introduced into the LiDAR point clouds. Specifically, to validate the generated LiDAR point clouds on rainy and snowy days, the authors in [24] utilized them as the training set for three state-of-the-art 3D object detection neural networks. The authors then tested the networks on LiDAR point clouds collected on real rainy and snowy days, i.e., from the Waymo Open Dataset [33]. The testing result demonstrated that the mean average precision obtained with the LISA algorithm outperforms that of typical data augmentation algorithms. Therefore, the generated LiDAR point clouds on rainy and snowy days can be effectively utilized to train neural networks.

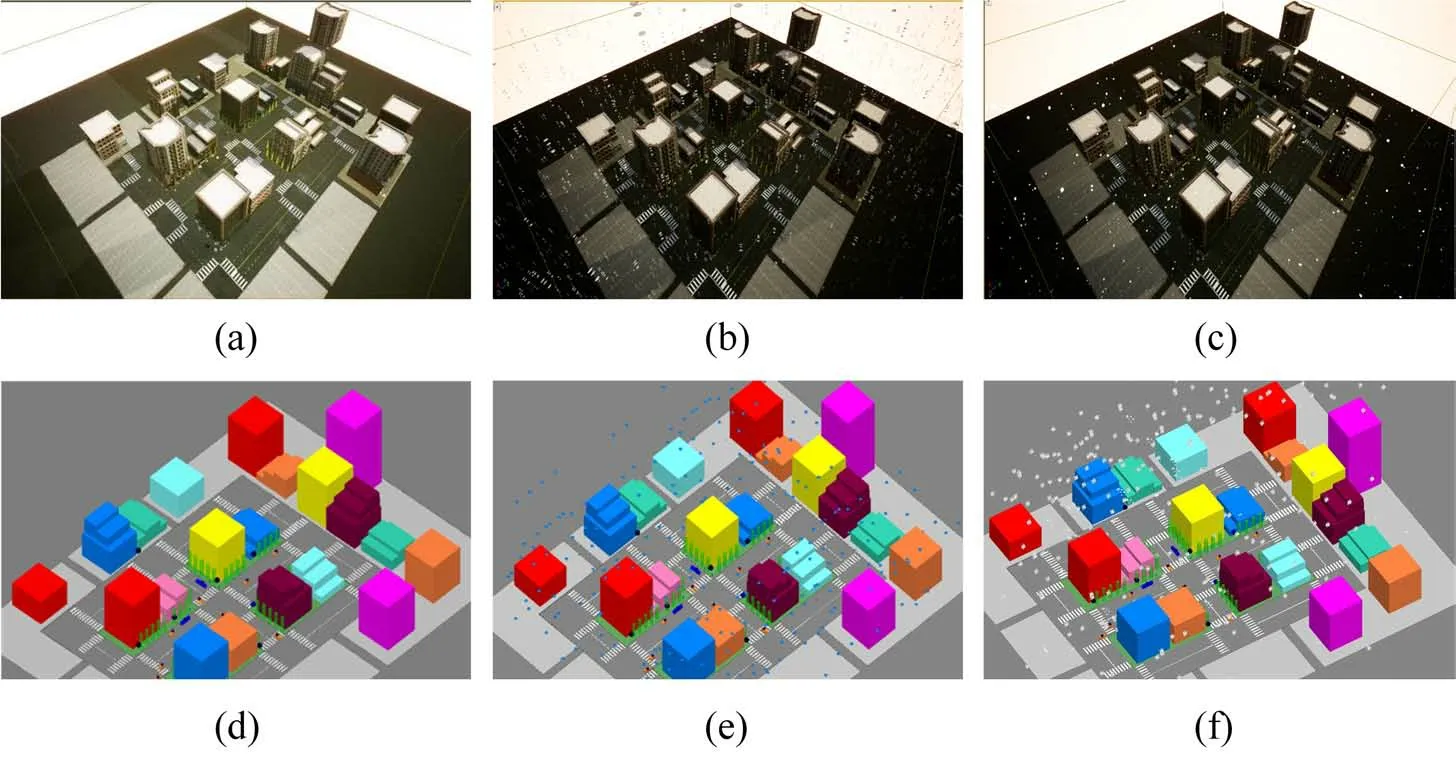

Figure 3. A vehicular urban crossroad environment. (a) Sunny day in AirSim. (b) Rainy day in AirSim. (c) Snowy day in AirSim. (d) Sunny day in Wireless InSite. (e) Rainy day in Wireless InSite. (f) Snowy day in Wireless InSite.

For the communication data, rainy and snowy days in the electromagnetic space can be imitated by introducing rain and snow models in Wireless InSite. To be specific, the shapes of the rain and snow models are set as raindrops and snowflakes, respectively. In addition, on rainy days, the temperature and humidity are set to 22.2 degrees Celsius and 100% in the simulation. Different from rainy days, the temperature and humidity are set to -10 degrees Celsius and 20% on snowy days.

The weather conditions of physical space and electromagnetic space need to be consistent. Towards this objective, we properly set the weather parameters in AirSim to align with the weather condition in electromagnetic space. Meanwhile, we accurately modify the rain and snow models in electromagnetic space to align with physical space before importing them into Wireless InSite. In addition, the rain and snow parameters in AirSim and the rain and snow rate parameters in the LISA algorithm are adjusted to be consistent. As a result, the M3SC dataset aligns the multi-modal sensory data with the wireless communication data under different weather conditions.
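One way to keep the weather settings of the three tools consistent is to drive them from a single preset table. The following is a minimal sketch that gathers the values stated in this subsection; the `WeatherPreset` class and `apply_airsim_weather` function are hypothetical names, and the sunny preset is left empty because no sunny-day parameter values are given in the text. The AirSim Python client exposes `simEnableWeather` and `simSetWeatherParameter`, and the function below assumes only that the passed-in client offers these two methods.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass(frozen=True)
class WeatherPreset:
    """Hypothetical bundle of per-tool weather settings for one condition."""
    airsim: Dict[str, float] = field(default_factory=dict)  # values in [0, 1]
    lisa_rate_mm_per_hr: Optional[float] = None
    insite_temperature_c: Optional[float] = None
    insite_humidity_pct: Optional[float] = None


PRESETS = {
    "sunny": WeatherPreset(),
    "rainy": WeatherPreset({"Rain": 0.9, "Roadwetness": 1.0}, 50.0, 22.2, 100.0),
    "snowy": WeatherPreset({"Snow": 0.5, "RoadSnow": 1.0}, 10.0, -10.0, 20.0),
}


def apply_airsim_weather(client, weather_enum, condition: str) -> None:
    """Push one preset to an AirSim-like client.

    `client` must provide simEnableWeather(bool) and
    simSetWeatherParameter(param, value); `weather_enum` must expose the
    parameter names as attributes (e.g., airsim.WeatherParameter).
    """
    preset = PRESETS[condition]
    for name, value in preset.airsim.items():
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must lie in [0, 1], got {value}")
    client.simEnableWeather(bool(preset.airsim))
    for name, value in preset.airsim.items():
        client.simSetWeatherParameter(getattr(weather_enum, name), value)
```

With the real package installed and a simulator running, a call would look like `apply_airsim_weather(airsim.CarClient(), airsim.WeatherParameter, "rainy")`; the LISA rates and the Wireless InSite temperature and humidity would be read from the same preset by the corresponding scripts.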

2.3 Trajectory Determination and Collision Detection

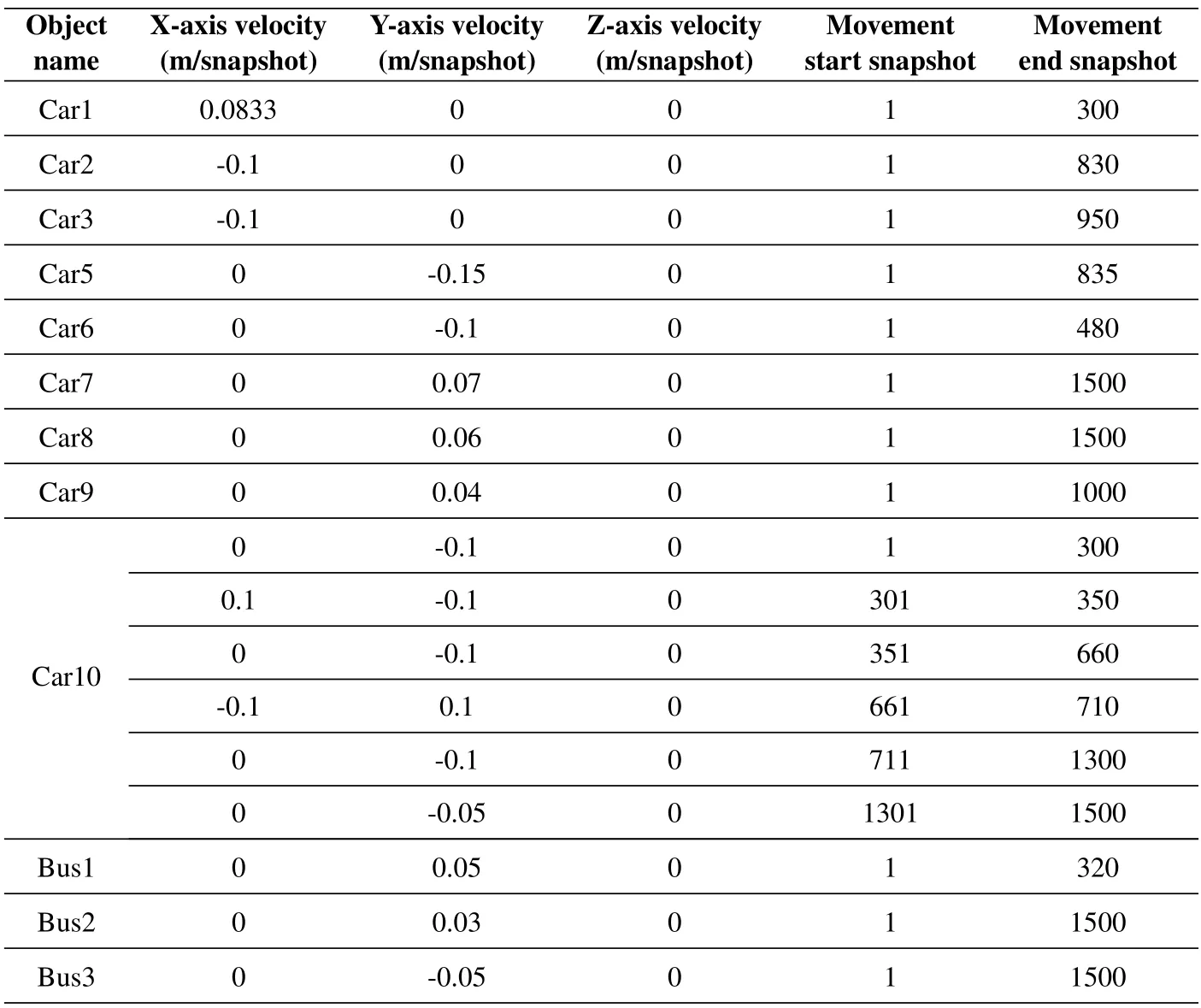

The third step is to determine the trajectories of dynamic objects and carry out collision detection. To mimic various vehicular movement trajectories, we utilize the Simulation of Urban MObility (SUMO) simulation platform to generate a high-fidelity trajectory for each vehicle. In addition, due to the precise alignment of physical space and electromagnetic space, each dynamic vehicle has the same trajectory in the AirSim, WaveFarer, and Wireless InSite simulation platforms. To achieve a decent trade-off between time cost and data volume, we set the number of snapshots in the simulation to 1500. Because the trajectories are the same in the aforementioned three simulation platforms, Figure 4 only gives the trajectories of dynamic vehicles in the Wireless InSite simulation platform for clarity. The detailed parameter setting of the trajectories is listed in Table 2. According to Figure 4 and Table 2, the M3SC dataset can support multi-trajectory and multi-velocity vehicle simulation, which needs to be mimicked due to its great impact on sensory and communication data [34], [35]. By setting different snapshot values, the M3SC dataset can further contain aligned sensory and communication data under different vehicular velocities.

Table 2. The detailed parameter setting of trajectories of dynamic objects.

Figure 4. The scenario and detailed trajectories of dynamic objects in Wireless InSite simulation platform.

Based on the trajectory of the dynamic vehicle, the collision detection mechanism is utilized to ensure the validity of the generated complex high-mobility vehicular scenario. If a certain dynamic vehicle collides, its name and the corresponding collision snapshot can be obtained. As a result, the trajectory of the colliding dynamic vehicle can be properly reset to avoid the unrealistic phenomenon.
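The collision check described above, which reports the vehicle name and the snapshot at which a collision occurs, can be sketched as follows. The text does not specify the detection mechanism, so this sketch assumes a simple center-distance threshold on 2D positions; the function name, the 2 m threshold, and the example trajectories are all hypothetical.

```python
import math


def first_collisions(trajectories: dict, min_gap_m: float = 2.0) -> dict:
    """Return {vehicle name: first colliding snapshot (1-based)}.

    `trajectories` maps a vehicle name to a list of (x, y) positions, one per
    snapshot. Two vehicles are treated as colliding when their centers are
    closer than `min_gap_m`, which is a simplification of a real shape test.
    """
    names = sorted(trajectories)
    n_snapshots = min(len(path) for path in trajectories.values())
    hits = {}
    for k in range(n_snapshots):
        for i, a in enumerate(names):
            for b in names[i + 1:]:
                xa, ya = trajectories[a][k]
                xb, yb = trajectories[b][k]
                if math.hypot(xa - xb, ya - yb) < min_gap_m:
                    # Keep only the earliest snapshot for each vehicle.
                    hits.setdefault(a, k + 1)
                    hits.setdefault(b, k + 1)
    return hits


# Hypothetical three-snapshot trajectories: Car1 and Car2 meet at snapshot 3.
example = {
    "Car1": [(0.0, 0.0), (5.0, 0.0), (10.0, 0.0)],
    "Car2": [(10.0, 10.0), (10.0, 5.0), (10.0, 1.0)],
    "Car3": [(100.0, 100.0), (100.0, 100.0), (100.0, 100.0)],
}

print(first_collisions(example))
```

The reported names and snapshot indices indicate which trajectories would need to be reset before the batch generation step.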

2.4 Batch Generation of Dynamic Simulation Scenarios

Based on the trajectories of the dynamic objects, the fourth step is to complete the batch generation of the vehicular urban crossroad scenario with 1500 snapshots via efficient simulation software. For physical space in AirSim, the positions of dynamic vehicles and sensors are revised in batches via Python. The 3D coordinates of each object and sensor are set frame by frame. Consequently, multi-modal sensory data of dynamic vehicles at each snapshot can be collected. For physical space in WaveFarer, the vehicle and radar positions are controlled via QtScript, which is supported by WaveFarer. Based on QtScript scripts, we can import the vehicle positions and orientations from an external source. Additionally, the relative positions of the onboard radars are fixed to the corresponding vehicles through the coordinate parameter linkage. As a result, radar data related to dynamic vehicles at each snapshot can be collected in WaveFarer. For the electromagnetic space, a large number of files that store the 3D coordinates of dynamic vehicles and their antenna elements in the Wireless InSite simulation platform are modified in batches via MATLAB. As a result, 1500 vehicular urban crossroad communication scenarios with different positions of dynamic vehicles can be generated automatically.

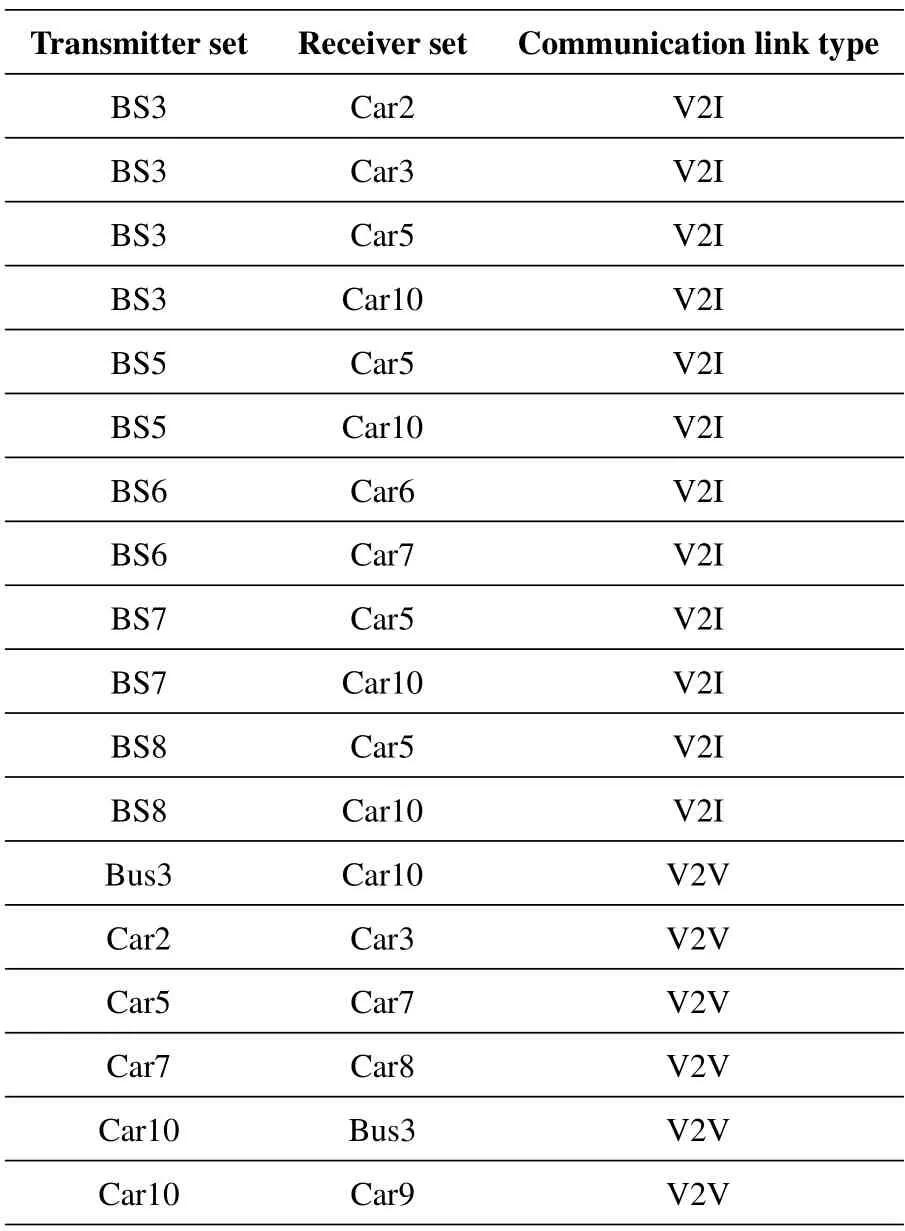

Figure 5 depicts the vehicular urban crossroad scenarios in AirSim, WaveFarer, and Wireless InSite simulation platforms at Snapshot 1, Snapshot 450, and Snapshot 1500. From Figure 5, it can be observed that the positions of the dynamic vehicle in physical space and electromagnetic space at each snapshot are the same. Furthermore, the dynamic vehicle moves from the start snapshot to the end snapshot exactly according to the trajectory, e.g., Car10 shown in Figure 5, which validates the accuracy of the M3SC dataset.

2.5 Raw-Data Acquisition

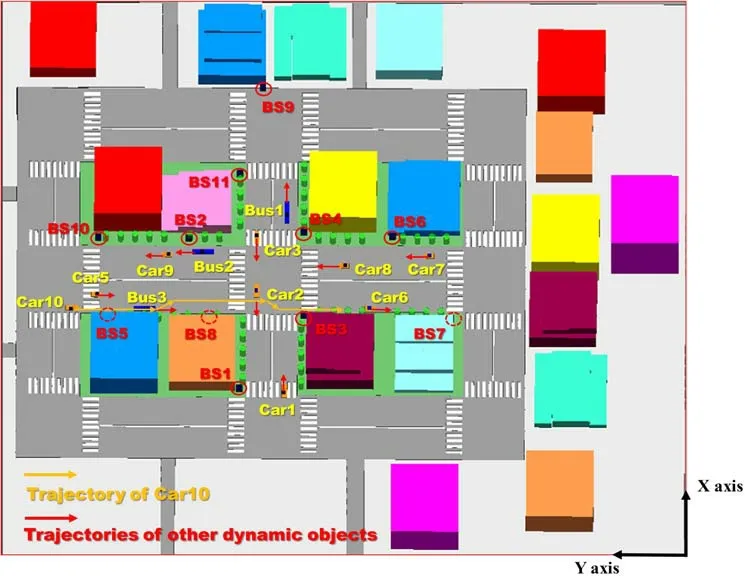

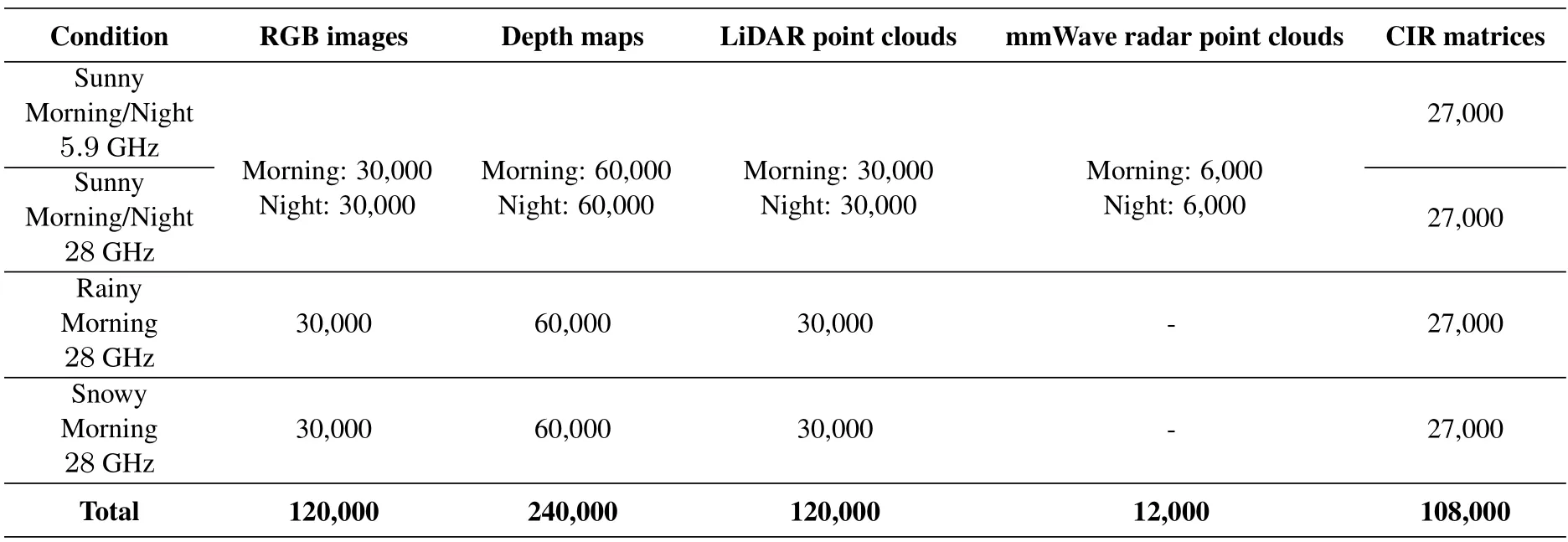

Efficiently acquiring the sensory data and communication data is another challenge, and the fifth step addresses it. For physical space, each object that needs to be moved is arranged according to the preset coordinates/trajectories. Then, based on the determined parameters and positions of sensors, the sensory data can be directly collected and automatically saved in the AirSim and WaveFarer simulation platforms. For the electromagnetic space, the generated 1500 vehicular urban crossroad communication scenarios in the Wireless InSite simulation platform are queued for simulation by a script. When all 1500 scenarios are simulated, the corresponding CIR matrices can be exported automatically through Python. For each snapshot, 18 typical CIR matrices related to V2V and V2I links, which are listed in Table 3, are successfully exported and stored in “.mat” files. It is worth mentioning that the locations of the BSs in the Wireless InSite simulation platform can be found in Figure 4. Therefore, in a vehicular urban crossroad communication scenario under a certain weather condition, e.g., sunny day, with 1500 snapshots, there are 27,000 CIR matrices, consisting of V2V links together with V2I links. Then, the collected and exported multi-modal sensory data and wireless communication data are aligned. For clarity, Table 4 summarizes the numbers of sensory data, including RGB images, depth maps, LiDAR point clouds, and mmWave radar point clouds, and of wireless communication data, i.e., CIR matrices. From Table 4, it can be readily seen that there are currently 120,000 RGB images, 240,000 depth maps, 120,000 LiDAR point clouds, 12,000 mmWave radar point clouds, and 108,000 CIR matrices in the M3SC dataset, covering different weather conditions, different times of the day, as well as different frequency bands. Finally, it is noteworthy that, by utilizing efficient software whose accuracy has been verified by measurement, the constructed M3SC dataset is precise and similar to measurement data. Specifically, for sensory data, the urban crossroad scenario in the M3SC dataset is constructed with Unreal Engine, which can provide an excellent rendering effect. In addition, AirSim and WaveFarer can provide high-fidelity multi-modal sensors with adjustable parameters, including FoV degrees, auto exposure speed, number of channels, and number of points captured per second. The communication data is obtained based on ray-tracing technology. Since ray-tracing technology generates channel data according to geometrical optics and the uniform theory of diffraction, the ray-tracing-based data is precise and similar to real-world propagation.
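The dataset totals quoted above follow from multiplying the per-snapshot counts by the 1500 snapshots, which the following minimal sketch reproduces. The per-snapshot figures are those stated in the abstract; reading the 72 CIR matrices per snapshot as 18 exported links times four communication scenarios is an inference made here from the numbers, not a statement taken from the text.

```python
SNAPSHOTS = 1500

# Per-snapshot counts as stated in the abstract.
PER_SNAPSHOT = {
    "RGB images": 80,
    "depth maps": 160,
    "LiDAR point clouds": 80,
    "mmWave waveform sets": 256,
    "radar point clouds": 8,
    "CIR matrices": 72,
}

totals = {name: count * SNAPSHOTS for name, count in PER_SNAPSHOT.items()}

# 18 exported V2V/V2I links per snapshot in one communication scenario.
LINKS_PER_SNAPSHOT = 18
cir_per_scenario = LINKS_PER_SNAPSHOT * SNAPSHOTS

# Inferred: how many scenarios the 72 per-snapshot CIR matrices correspond to.
implied_scenarios = PER_SNAPSHOT["CIR matrices"] // LINKS_PER_SNAPSHOT

for name, total in totals.items():
    print(f"{name}: {total:,}")
print(f"CIR matrices per scenario: {cir_per_scenario:,}")
print(f"implied number of communication scenarios: {implied_scenarios}")
```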

Table 3. Exported V2V and V2I links in a vehicular urban crossroad communication scenario.

Table 4. Numbers of RGB images, depth maps, LiDAR point clouds, mmWave radar point clouds, and CIR matrices.

III. RESULTS AND ANALYSIS

The multi-modal sensory data collected in AirSim and WaveFarer simulation platforms and communication data collected in Wireless InSite simulation platform are presented and analyzed. In addition, the influences of weather, time of the day, and communication frequency on multi-modal sensory and communication data are investigated. Also, the precise alignment between sensory data and communication data is demonstrated.

3.1 Multi-Modal Sensory Data

For the simulation of multi-modal sensory information, four typical sensing cases, i.e., sunny days at night, sunny days in the morning, rainy days in the morning, and snowy days in the morning, are considered.

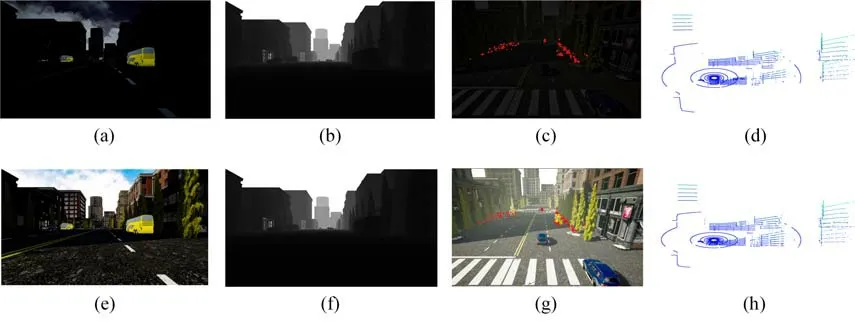

Figure 6 illustrates the RGB images, depth maps, mmWave radar point clouds, and LiDAR point clouds on the sunny day at Snapshot 1 in the morning and at night. It can be observed that the visibility of the RGB image at night is lower than that in the morning. This is due to the poor robustness of cameras to changes in sunlight. On the contrary, depth maps and LiDAR point clouds at night are similar to those in the morning. This is because the robustness of depth cameras and LiDAR devices to sunlight is high. Specifically, the depth cameras and LiDAR devices utilized in AirSim operate in the near-infrared region, and thus are less affected by sunlight and are insensitive to day and night. Similarly, since the robustness of mmWave radar to sunlight is also high, mmWave radar point clouds at night are similar to those in the morning.

Figure 6. The sensory data collected by Car5 at Snapshot 1 on the sunny day in the morning and at night. (a)-(d) RGB image, depth map, mmWave radar point clouds, and LiDAR point clouds at night, respectively. (e)-(h) RGB image, depth map, mmWave radar point clouds, and LiDAR point clouds in the morning, respectively.

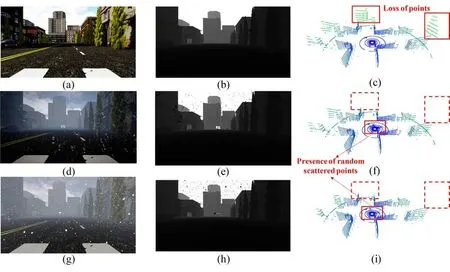

Figure 7 presents the RGB images, depth maps, and LiDAR point clouds in the morning at Snapshot 450 on the sunny, rainy, and snowy days. Compared to the sunny day, RGB images and depth maps have lower visibility on the rainy and snowy days, which is consistent with the observations in [28, 36]. In addition, LiDAR points are sensitive to rainy and snowy weather conditions. Owing to laser attenuation caused by the random scattering medium, range uncertainty increases and points far away from the LiDAR are lost, resulting in possibly vague and missing points within the real target [24]. Moreover, large backscattered power from random droplets causes randomly scattered points to appear near the LiDAR sensors.

Figure 7. The sensory data collected by Car5 at Snapshot 450 in the morning on the sunny, rainy, and snowy days. (a)-(c) RGB image, depth map, and LiDAR point clouds on the sunny day, respectively. (d)-(f) RGB image, depth map, and LiDAR point clouds on the rainy day, respectively. (g)-(i) RGB image, depth map, and LiDAR point clouds on the snowy day, respectively.

In summary, Figures 6 and 7 show that the processing results of the multi-modal sensory data are realistic, which verifies the multi-modal sensory data acquired in the M3SC dataset.

3.2 Wireless Communication Information

For the simulation of wireless communication information, four typical scenarios, i.e., sunny days at sub-6 GHz, sunny days at mmWave, rainy days at mmWave, and snowy days at mmWave, are taken into account. Important channel statistical properties, heat maps, and propagation paths under these four typical scenarios are presented.

A basic function that describes the wireless communication channel is the CIR h(ε, t, τ) with space ε, time t, and delay τ. By taking the Fourier transform of the CIR h(ε, t, τ) with respect to delay τ, the spatial-temporal varying transfer function φ(ε, t, f) is represented by

$$\varphi(\varepsilon,t,f)=\int_{-\infty}^{\infty}h(\varepsilon,t,\tau)\,e^{-j2\pi f\tau}\,\mathrm{d}\tau$$

where ∫{·} denotes the integral operator. Then, the space-time-frequency correlation function (STF-CF) ρφ(ε, t, f; Δε, Δt, Δf) is expressed by

$$\rho_{\varphi}(\varepsilon,t,f;\Delta\varepsilon,\Delta t,\Delta f)=\mathrm{E}\{\varphi^{*}(\varepsilon,t,f)\,\varphi(\varepsilon+\Delta\varepsilon,t+\Delta t,f+\Delta f)\}$$

where E{·} represents the expectation operator and (·)* denotes the complex conjugate. Based on the derived STF-CF, channel statistical properties, including the space cross-correlation function (SCCF), time auto-correlation function (TACF), and Doppler power spectral density (DPSD), can be obtained. The relationship between the multipath effect and the SCCF, TACF, and DPSD is as follows. The SCCF reflects the spatial correlation between different sub-channels with different paths. The TACF describes the temporal correlation of the channel with the appearance and disappearance of paths. The DPSD represents the received power distribution in the channel with different paths under different Doppler frequency shifts.
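
As a minimal sketch of the Fourier transform from CIR to transfer function, assume the CIR at one position and time instant is a sum of discrete paths with complex gains a_l and delays τ_l, so that φ(f) reduces to Σ_l a_l exp(−j2πfτ_l). The function name `transfer_function` and the two-path values below are hypothetical and are not taken from the M3SC dataset.

```python
import cmath
import math

def transfer_function(gains, delays_s, freq_hz):
    """phi(f) = sum_l a_l * exp(-j*2*pi*f*tau_l) for a discrete-path CIR."""
    return sum(a * cmath.exp(-2j * math.pi * freq_hz * tau)
               for a, tau in zip(gains, delays_s))

# Hypothetical two-path CIR: a direct path and a weaker echo 50 ns later.
gains = [1.0 + 0j, 0.5 + 0j]
delays_s = [0.0, 50e-9]

# At f = 0 both paths add in phase.
phi_dc = transfer_function(gains, delays_s, 0.0)
# At f = 10 MHz the echo is rotated by pi and partly cancels the direct path.
phi_notch = transfer_function(gains, delays_s, 10e6)

print(abs(phi_dc), abs(phi_notch))
```

Evaluating `transfer_function` over a grid of frequencies gives the frequency-selective response from which the correlation functions are computed.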

By setting Δt = Δf = 0, the STF-CF ρφ(ε, t, f; Δε, Δt, Δf) simplifies to the SCCF. Figure 8 presents the normalized absolute Rx SCCF, where Car10 and Car9, as shown in Figure 5(c), are the Tx and the Rx, respectively. Car10 and Car9 are two typical mobile vehicles equipped with large-scale antenna arrays in the vehicular urban crossroad scenario, and thus the V2V link between them serves as an example in the simulation. In Figure 8, δR is the adjacent antenna spacing at the Rx and λ is the carrier wavelength. Compared to the mmWave frequency band, the channel at sub-6 GHz exhibits a lower SCCF, which is consistent with the measurement result in [37]. This is because, in comparison with mmWave, the multipath effect at sub-6 GHz is more pronounced and the channel spatial diversity is larger. Furthermore, the SCCF of mmWave channels on the sunny day is lower than that on the rainy and snowy days. The underlying physical reason is that, on rainy and snowy days, the propagation power attenuation is more significant, resulting in a less pronounced multipath effect and larger spatial correlation.
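
The link between multipath richness and a lower SCCF can be illustrated with a small sample estimator. This is only a sketch under stated assumptions: a uniform linear Rx array, unit-power plane waves, and a deterministic phase sweep standing in for random path phases. The helper names `array_response` and `normalized_abs_sccf`, the angles, and the half-wavelength spacing are hypothetical choices, not the configuration used for Figure 8.

```python
import cmath
import math

def array_response(num_ant, spacing_wl, angles_rad, phases_rad):
    """Sum of unit-power plane waves on a uniform linear array.

    spacing_wl is the antenna spacing in wavelengths (delta_R / lambda).
    """
    return [sum(cmath.exp(1j * (ph + 2 * math.pi * spacing_wl * q * math.cos(a)))
                for a, ph in zip(angles_rad, phases_rad))
            for q in range(num_ant)]

def normalized_abs_sccf(snapshots, lag):
    """Sample estimate of |E{h_q^* h_(q+lag)}| / E{|h_q|^2}."""
    num = 0j
    den = 0.0
    for h in snapshots:
        for q in range(len(h) - lag):
            num += h[q].conjugate() * h[q + lag]
            den += abs(h[q]) ** 2
    return abs(num) / den

M = 64  # number of channel realizations
sweep = [2 * math.pi * m / M for m in range(M)]

# One path only: the sub-channels are fully correlated.
single = [array_response(8, 0.5, [math.radians(60)], [ph]) for ph in sweep]
# Two paths from different angles: the spatial correlation drops.
two = [array_response(8, 0.5, [math.radians(60), math.radians(90)], [0.0, ph])
       for ph in sweep]

sccf_single = normalized_abs_sccf(single, 1)
sccf_two = normalized_abs_sccf(two, 1)
print(sccf_single, sccf_two)
```

In this toy case the second path lowers the adjacent-antenna correlation, which matches the qualitative trend described for the richer multipath at sub-6 GHz and on the sunny day.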

By setting Δε = Δf = 0 in the STF-CF ρφ(ε, t, f; Δε, Δt, Δf), the TACF is obtained. Figure 9 depicts the normalized absolute TACF, where Car2 and Car3, as shown in Figure 5(c), are the Tx and the Rx, respectively. The TACF at the mmWave frequency band is significantly lower than that at the sub-6 GHz frequency band. The reason is that, compared to sub-6 GHz, the Doppler spread at mmWave is more severe and the channel coherence time is much shorter [38]. Furthermore, the mmWave channel on the sunny day exhibits a lower TACF than that on the rainy and snowy days. This is because there are more multipath components on the sunny day and the corresponding channel changes more rapidly. In this case, the appearance and disappearance of paths are faster and more complex over time. As a consequence, the temporal correlation of the channel at different time instants is smaller, resulting in a lower TACF.
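
The frequency dependence of the coherence time can be checked with a short calculation. The sketch below uses the maximum Doppler shift f_D = v·f_c/c and the common rule of thumb T_c ≈ 0.423/f_D. The relative speed of 20 m/s and the 5.9 GHz sub-6 GHz carrier are assumptions made for illustration, while 28 GHz is the mmWave carrier quoted in Section 3.3.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s

def max_doppler_hz(speed_mps, carrier_hz):
    """Maximum Doppler shift f_D = v * f_c / c."""
    return speed_mps * carrier_hz / SPEED_OF_LIGHT

def coherence_time_s(speed_mps, carrier_hz):
    """Rule-of-thumb coherence time T_c ~ 0.423 / f_D."""
    return 0.423 / max_doppler_hz(speed_mps, carrier_hz)

speed = 20.0      # hypothetical relative speed between Tx and Rx, in m/s
sub6_hz = 5.9e9   # assumed sub-6 GHz carrier (illustrative value)
mmwave_hz = 28e9  # mmWave carrier from Section 3.3

fd_sub6 = max_doppler_hz(speed, sub6_hz)
fd_mmwave = max_doppler_hz(speed, mmwave_hz)
tc_ratio = coherence_time_s(speed, sub6_hz) / coherence_time_s(speed, mmwave_hz)
print(fd_sub6, fd_mmwave, tc_ratio)
```

Under these assumptions the coherence time shrinks by the ratio of the carrier frequencies, so the TACF at mmWave decays faster over the same time lag.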

Through the Fourier transform of the TACF with respect to the time interval Δt, the DPSD is obtained. In Figure 10, the normalized DPSD of the channel between Car10 and Car9 is shown. Owing to the higher carrier frequency in mmWave communications, the channel at mmWave has a larger Doppler spread than that at sub-6 GHz [38, 39]. Additionally, in comparison with rainy and snowy days, the channel on the sunny day has a steeper DPSD distribution. This is because the line-of-sight (LoS) component on the sunny day is more dominant than that on the rainy and snowy days.
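
The TACF-to-DPSD step can be sketched as a discrete Fourier transform over the lag axis. The example is a simplification under stated assumptions: a single realization, a one-sided lag window, and a synthetic one-path channel with a 100 Hz Doppler shift sampled at 1 kHz. The function names `tacf` and `dpsd` are hypothetical, and the values do not come from the dataset.

```python
import cmath
import math

def tacf(h, max_lag):
    """Sample TACF r[k] = mean_n h*[n] h[n+k], normalized so that r[0] = 1."""
    r = []
    for k in range(max_lag):
        terms = [h[n].conjugate() * h[n + k] for n in range(len(h) - k)]
        r.append(sum(terms) / len(terms))
    return [v / r[0] for v in r]

def dpsd(r, sample_rate_hz):
    """DFT of the TACF over the lag axis.

    Returns (doppler_hz, normalized_power) pairs sorted by Doppler frequency.
    """
    k_max = len(r)
    power = [abs(sum(r[k] * cmath.exp(-2j * math.pi * m * k / k_max)
                     for k in range(k_max)))
             for m in range(k_max)]
    peak = max(power)
    freqs = [(m if m < k_max / 2 else m - k_max) * sample_rate_hz / k_max
             for m in range(k_max)]
    return sorted(zip(freqs, (p / peak for p in power)))

fs = 1000.0  # channel sampling rate in Hz (assumed)
# Synthetic single-path channel with a 100 Hz Doppler shift.
h = [cmath.exp(2j * math.pi * 100.0 * n / fs) for n in range(200)]

r = tacf(h, 100)
doppler_spectrum = dpsd(r, fs)
peak_doppler = max(doppler_spectrum, key=lambda p: p[1])[0]
print(peak_doppler)
```

With several paths, each path contributes a component at its own Doppler shift, so a dominant LoS path yields the steeper distribution noted for the sunny day.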

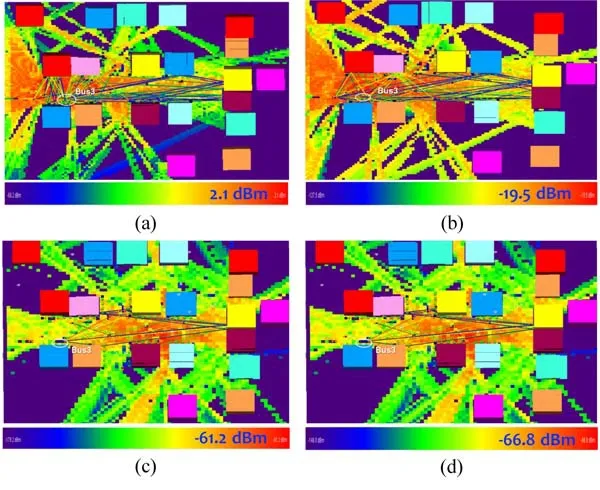

Figure 11 gives the heat map and the propagation paths related to Bus3. The heat map is obtained with the visualization function in Wireless InSite, which displays the received power over the planar antenna array, i.e., the xy grid. For example, the maximal received power over the planar antenna array on the sunny day at sub-6 GHz is 2.1 dBm. Wireless InSite can also show the ray-tracing-based propagation paths in the environment via the visualization function. Comparing the heat maps and propagation paths under different weather conditions and frequency bands yields some valuable observations. Compared to the sub-6 GHz frequency band, the path power and the number of propagation paths are much smaller at the mmWave frequency band due to the significantly higher path loss. In addition, compared to the sunny day, the path power and the number of propagation paths are much smaller on the rainy and snowy days owing to the pronounced rain and snow attenuation in mmWave communications [40].

Figure 11. Heat maps and propagation paths in the Wireless InSite simulation platform. (a) Sunny day at sub-6 GHz. (b) Sunny day at mmWave. (c) Rainy day at mmWave. (d) Snowy day at mmWave.
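
A back-of-the-envelope calculation gives a feel for the frequency-dependent path loss mentioned above. The sketch uses the free-space path loss formula only, whereas the ray-tracing results also include reflection, diffraction, and weather attenuation. The 100 m distance and the 5.9 GHz sub-6 GHz carrier are assumptions, and the helper names are hypothetical.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s

def fspl_db(distance_m, carrier_hz):
    """Free-space path loss in dB: 20*log10(4*pi*d*f/c)."""
    return 20 * math.log10(4 * math.pi * distance_m * carrier_hz / SPEED_OF_LIGHT)

def dbm_to_mw(power_dbm):
    """Convert a power level in dBm to milliwatts."""
    return 10 ** (power_dbm / 10)

distance = 100.0  # hypothetical Tx-Rx distance in metres
extra_loss_db = fspl_db(distance, 28e9) - fspl_db(distance, 5.9e9)
peak_mw = dbm_to_mw(2.1)  # the 2.1 dBm peak quoted for the sunny day at sub-6 GHz

print(round(extra_loss_db, 2), round(peak_mw, 2))
```

Under these assumptions the 28 GHz link loses roughly 13.5 dB more than the assumed 5.9 GHz link in free space alone, independent of distance.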

According to Figures 8-11, the processing results of the wireless communication data are consistent with the expected propagation behavior, which validates the wireless communication data in the M3SC dataset.

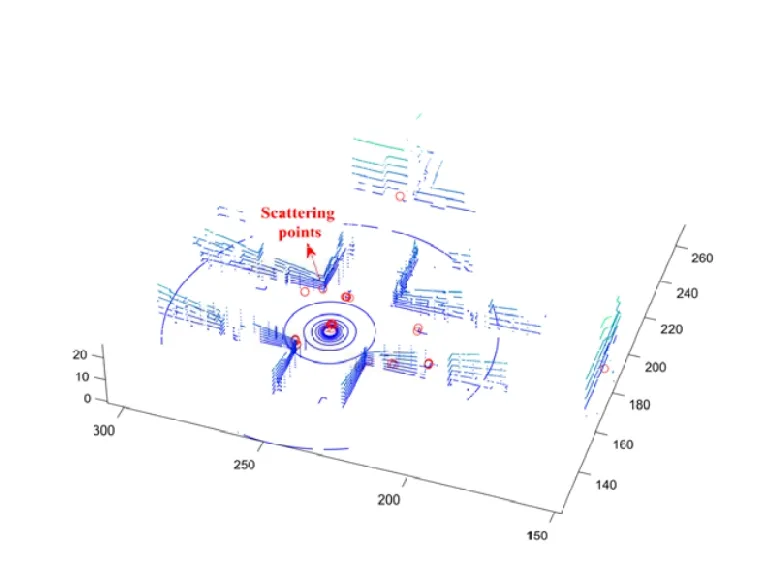

3.3 Aligned Sensory Data and Communication Data

Figure 12 shows LiDAR point clouds in physical space and scattering points in electromagnetic space on the sunny day at the initial time. For the LiDAR point clouds, Car1 is equipped with the LiDAR device. For the scattering points, Car1 and Car2 are the Tx and Rx, respectively, where the carrier frequency is 28 GHz with a 2 GHz communication bandwidth. From Figure 12, it can be readily observed that the LiDAR point clouds and scattering points in the M3SC dataset are aligned. Specifically, the scattering points lie on the vehicles, buildings, and trees detected in the LiDAR point clouds. This is consistent with the realistic propagation environment.

Figure 12. Mapping between LiDAR point clouds and scattering points at the initial time.

Based on Figure 12, it can be concluded that the aligned sensory data and communication data are obtained. Therefore, physical space and electromagnetic space are precisely aligned in the M3SC dataset.
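
One simple way to quantify such an alignment is to measure how many scattering points fall close to some LiDAR point. The sketch below assumes both point sets are already expressed in the same coordinate frame and uses a brute-force nearest-neighbour search. The function names, the toy coordinates, and the 0.5 m tolerance are hypothetical and do not describe the procedure used to produce Figure 12.

```python
import math

def nearest_distance(point, cloud):
    """Distance from one point to its nearest neighbour in the cloud."""
    return min(math.dist(point, q) for q in cloud)

def alignment_ratio(scatterers, lidar_cloud, tol_m):
    """Fraction of scattering points lying within tol_m of a LiDAR point."""
    hits = sum(1 for s in scatterers
               if nearest_distance(s, lidar_cloud) <= tol_m)
    return hits / len(scatterers)

# Toy data in metres, in a shared (assumed) world frame.
lidar_cloud = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (5.0, 5.0, 1.0)]
scatterers = [(0.1, 0.0, 0.0), (5.0, 5.0, 1.2), (20.0, 20.0, 0.0)]

ratio = alignment_ratio(scatterers, lidar_cloud, tol_m=0.5)
print(ratio)
```

A ratio close to one would indicate that most scatterers coincide with objects seen by the LiDAR. For full-size point clouds a spatial index such as a k-d tree would replace the brute-force search.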

IV.POTENTIAL MMM SENSING-COMMUNICATION APPLICATIONS SUPPORTED BY M3SC DATASET

In this section, the potential MMM sensing-communication applications that can be supported by the comprehensive M3SC dataset are discussed from three perspectives.

1. Channel modeling: The M3SC dataset can be regarded as an important component for the development of novel channel modeling methods. With the help of aligned multi-modal sensory and communication data in the M3SC dataset, the correspondence between objects in physical space and clusters in electromagnetic space can be explored. Meanwhile, the complicated correlation between object parameters and cluster parameters can also be obtained via deep learning algorithms. Furthermore, the obtained correlation can be extended to various weather conditions, times of the day, and frequency bands via the M3SC dataset.

2. Waveform design: The M3SC dataset can support new paradigms of waveform design for more in-depth integration of sensing and communications by providing multi-modal sensing information and accurate real-time channel information. Specifically, the correlation between physical space and electromagnetic space can be utilized to achieve mutual benefits of sensing and communications. The mapping from the information in physical space to the optimal integrated waveform can be explored. The optimal integrated waveform can be used to further enhance the sensing accuracy and robustness of conventional sensors. Additionally, a more effective extraction of electromagnetic space information can increase the secrecy rate for secure communications. Finally, using the M3SC dataset with different weather conditions and different frequency bands, the robustness and practical efficiency of waveform design methods can be evaluated adequately.

3. Perception enhancement: The synchronized multi-modal and multi-vehicle sensing data in the M3SC dataset can support research on perception system enhancement to address the problem of single-vehicle perception under occlusion or in harsh weather [41]. Based on the M3SC dataset, the information redundancy brought by multiple sensors and vehicles can be quantified. Then, communication resource planning can be carried out to adequately utilize communications as the method of information transmission. Perception algorithms for different weather and road conditions can also be trained and tested on the M3SC dataset. The aligned channel state information and sensory data in the M3SC dataset can support the extraction of physical space localization and perception information from the channel state information of V2V and V2I links, especially in situations where the sensor information is insufficient, such as a camera in dim light or LiDAR on snowy days.

V.CONCLUSION

This paper has developed an MMM dataset, named M3SC, together with a generation framework of MMM datasets for sensing-communication integration. The M3SC dataset achieves in-depth integration of sensing and communications and precise alignment of physical space and electromagnetic space. Furthermore, the M3SC dataset covers three weather conditions, i.e., sunny, rainy, and snowy days, different times of the day, i.e., morning and night, and various frequency bands, i.e., sub-6 GHz and mmWave. Currently, the M3SC dataset contains 120,000 RGB images, 240,000 depth maps, 384,000 sets of mmWave waveforms with 12,000 radar point clouds, 120,000 LiDAR point clouds, and 108,000 CIR matrices. The data processing results have shown that weather, time, and frequency band have significant impacts on sensory data and communication data. Thanks to the judiciously designed data generation framework and the orchestrated cooperation of several simulation platforms, the data processing results have also demonstrated the accuracy of the aligned sensory and communication data. Finally, this paper has discussed the potential MMM sensing-communication applications supported by the M3SC dataset from three perspectives. Currently, we are constructing a realistic measurement platform for sensing and communication integration. In the future, the obtained measurement data can be utilized as a reference for the M3SC dataset. Furthermore, we can enrich the M3SC dataset by jointly considering different vehicular traffic densities and diversified simulation scenarios, including high-speed train, unmanned aerial vehicle, maritime, and warfare scenarios.

ACKNOWLEDGMENT

This work was supported in part by the Ministry National Key Research and Development Project (Grant No. 2020AAA0108101), the National Natural Science Foundation of China (Grants No. 62125101, 62341101, 62001018, and 62301011), Shandong Natural Science Foundation (Grant No. ZR2023YQ058), and the New Cornerstone Science Foundation through the XPLORER PRIZE. The authors would like to thank Mengyuan Lu and Zengrui Han for their help in the construction of electromagnetic space in the Wireless InSite simulation platform and Weibo Wen, Qi Duan, and Yong Yu for their help in the construction of physical space in the AirSim simulation platform.
