Recent progress of machine learning in flow modeling and active flow control

Yunfei Li, Juntao Chang, Chen Kong, Wen Bao

School of Energy Science and Engineering, Harbin Institute of Technology, Harbin 150001, China

KEYWORDS Data-driven modeling;Flow control;Flow field kinematics;Machine learning;Neural networks -applications

Abstract In terms of multiple temporal and spatial scales, massive data from experiments, flow field measurements, and high-fidelity numerical simulations have greatly promoted the rapid development of fluid mechanics. Machine Learning (ML) provides a wealth of analysis methods to extract potential information from a large amount of data for in-depth understanding of the underlying flow mechanism or for further applications. Furthermore, machine learning algorithms can enhance flow information and automatically perform tasks that involve active flow control and optimization. This article provides an overview of the past history, current development, and promising prospects of machine learning in the field of fluid mechanics. In addition, to facilitate understanding, this article outlines the basic principles of machine learning methods and their applications in engineering practice, turbulence models, flow field representation problems, and active flow control. In short, machine learning provides a powerful and more intelligent data processing architecture, and may greatly enrich the existing research methods and industrial applications of fluid mechanics.

1. Introduction

Accompanied by traditional fluid mechanics research, a large amount of data from experiments, flow field measurements and large-scale numerical simulations are provided.In the past few decades,benefit from the development of high-performance computing architecture and sophisticated experimental capabilities and measurement methods,big data has become a notable feature of the development of fluid mechanics.Meanwhile,corresponding methods of processing volumes of data such as database clusters proposed by Perlman et al.are used for data analysis and processing. Although efficient methods are used to process large amounts of data, these processing methods currently rely too much on professional domain expertise and complex algorithms to a certain extent.

The rapid growth of data volume is widespread in various disciplines, obtaining potential and enlightening information from data has gradually become the focus of research.In view of the upgrade of computer hardware architecture, more efficient data storage and transmission, the development of massively parallel algorithms, and the construction of open source frameworks,data-driven research methods have gained more and more attentions from industry and academia.Machine learning, especially deep neural networks, which has unique advantages when dealing with high-dimensional nonlinear problems, is rapidly integrating into the research of fluid mechanics. According to whether the data is labeled,machine learning can be roughly divided into supervised learning, semi-supervised learning and unsupervised learning.

In terms of data analysis in fluid mechanics,machine learning has gradually been applied to the fields of reduced-order modeling, reconstruction and prediction, turbulence model closure, and active flow control with many advantages. Furthermore, the fusion of machine learning and fluid mechanics will also bring challenges to corresponding algorithms, such as embedding physical prior information into models and the interpretability of research conclusions.

While the integration of machine learning and fluid mechanics is thriving, it is more worth noting that why machine learning-related algorithms are effective and under what circumstances failed.Applying machine learning to traditional fluid mechanics is a challenging research field.Researchers need to balance the pros and cons of marveling at the power of machine learning and turning specific ideas into reality. In this context, this article classifies and elaborates the application of machine learning in the field of fluid mechanics in recent years, and hopes that machine learning will further promote the development of fluid mechanics in the future.

The integration between ML and fluid mechanics has gone through a long history. In the 1950s, the perceptron proposed by Rosenblattwas designed to simulate the behavior of the human brain to find a separation hyperplane that linearly divides the training data. Researchers marveled at the classification ability of the perceptron, which has also become the basis of neural networks and later Support Vector Machines(SVM). The neural network was inspired by the research of Hubel and Wieselon the visual cortex of cats. Their experiments showed that the neural network was composed of hierarchical cells for processing visual stimuli. The excitement brought by the perceptron came to an abrupt end with the judgement of Minsky and Paperton the basic idea of machine learning: a single-layer linear perceptron could not solve the Exclusive OR(XOR)problem.The development of neural networks has stalled for the first time.

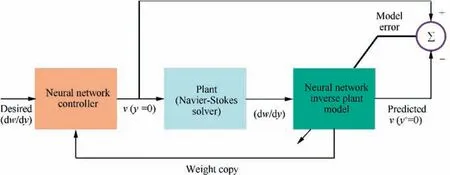

The reawakening of neural networks was accompanied by the backpropagation algorithm proposed by Hinton et al.in the 1980s, and in 1989, the Convolutional Neural Network(CNN) proposed by LeCun et al.based on the backpropagation algorithm was successfully applied to handwritten digit recognition. But these developments have not attracted the attentions of fluid mechanics researchers. In the early 1990s,Teoand Grantet al. applied neural networks to Particle Image Velocimetry (PIV) to resolve problems such as directional ambiguity.Kim et al.constructed a new adaptive controller based on neural network and used it for turbulence drag reduction. The simple control network, which employed suction and blowing on the wall based on the wall shear stress in the span direction, can reduce wall skin friction by up to 20%. However, in view of the traditional learning methods such as SVM still occupied the academic mainstream of statistical learning,the development of neural networks was still in a dormant period.

In the past ten years, with the renewed prosperity of machine learning, especially Deep Neural Networks(DNN), the integration of statistical learning methods and fluid mechanics has once again attracted the attention of many researchers. Kutzand Brenner et al.review the application of machine learning in the field of fluid mechanics in recent years. The prosperity of this integration of disciplines is largely attribute to the maturity of the deep learning architecture and the growth of computing power.The development of machine learning will largely make up for the constraints of fluid mechanics. Meanwhile, this trend will also pose a great challenge to the interpretability of machine learning.

The great success of machine learning in the fields of speech recognitionand signal processingin recent years is significantly different from its application in fluid mechanics. Considering computer vision and speech recognition, machine learning is more like a black box, but for fluid mechanics,researchers are more eager to know the physical information contained in the model and its principles. For unsteady flow,there are high-dimensional, non-linear,and large-scale temporal and spatial characteristics in fluid flow. The ability of machine learning to recognize these characteristics and why it is effective or failed remains to be explored in depth. As far as experiments are concerned,repeated experiments in fluid mechanics such as wind tunnel experiments are costly. Using numerical simulations to prepare large amounts of data will also consume a large amount of computational cost. Whether machine learning algorithms and models are equally effective in multiple experiments and various numerical simulation data remains to be resolved.

In terms of fluid dynamics control, machine learning has gradually emerged, especially interactive learning based on Reinforcement Learning (RL). Reinforcement learningis one of the paradigms and methodologies of machine learning.It is used to describe and solve the problem that agents use learning strategies to maximize returns or achieve specific goals during their interaction with the environment.In the use of RL for fluid dynamics control, the definition and design of the objective optimization function directly affects the optimization effect of the flow field and the difficulty of convergence of the model. In addition, many fluid systems are nonstationary, even for stationary systems, it also requires an expensive cost to design training algorithms to help the model achieve convergence of statistical results.

Moreover, the conclusions drawn from researches related to fluid mechanics should usually be interpretable and generalizable, and the experimental methods or numerical models obtained should have certain performance guarantees. In this regard, the integration of machine learning and fluid mechanics is facing great challenges,which in turn will surely promote the progress of machine learning algorithms.

These non-exhaustive challenges will not prevent the integration of machine learning and fluid mechanics. On the contrary, the problems encountered in the process of fusion of disciplines will inevitably promote the development of the two disciplines.

The structure of this review is as follows: Section 2 will explain the basic principles of ML, and then the research on flow field modeling with ML will be explained in the Section 3. The research on active flow control with ML will be introduced in Section 4. In the conclusion, a summary and outlook on the application of ML in fluid mechanics will be provided.

2. Fundamentals of machine learning

Machine learning is a discipline in which computers build probability statistical models based on data and use the models to predict and analyze data. Simononce gave the following definition of learning:if a system can improve its performance by performing a certain process, it is learning. The basic assumption of machine learning about data is that similar data has certain statistical regularity, which is the premise of machine learning. Machine learning is mainly composed of supervised learning, semi-supervised learning, and unsupervised learning. For supervised learning, the method can be summarized as follows: starting from a given, limited data set,assuming that the data are Independent and Identical Distributed (IID), and assume that the model to be learned belongs to a set of certain functions, called hypothesis space;apply an evaluation criterion to select an optimal model from the hypothesis space, so that the model can predict the unknown test data optimally under the given evaluation criteria.

2.1. Supervised learning

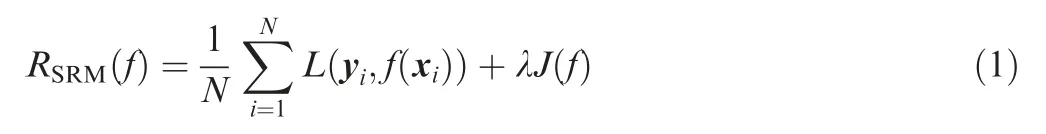

The task of supervised learning is to learn a model so that the model can make a good prediction of its corresponding output for any given input.At the same time,supervised learning is an extremely important branch of statistical learning.Each specific input is an instance, which is usually represented by a feature vector, and the space in which the feature vector is located is called the feature space. The models are actually defined in the feature space.The purpose of statistical learning is to select the optimal model from the hypothesis space. In practical applications,the loss function is usually used to measure the quality of model prediction. The loss function is usually defined as Eq. (1):

where the Structural Risk Minimization (SRM) is a strategy proposed to prevent overfitting, data input (x) and output(y)are sample pairs from a probability distribution,λJ(f)represents a regular term used to weigh empirical risk and model complexity. Regularization conforms to the principle of Occam’s razor: among all possible models, one that can explain the known data well and is very simple is the best model.

2.1.1. Neural networks

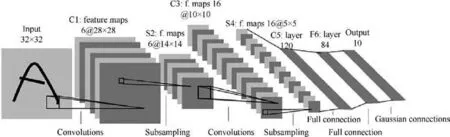

Neural network should be the most popular learning architecture in the field of machine learning in recent years. The universal approximation theorem proposed by Hornik et al.shows that if a feedforward neural network has a linear output layer and at least one hidden layer with any kind of ‘‘squeezing” activation function (such as logistic or sigmoid), it can approximate any measurable function from one finitedimensional space to another with arbitrary precision. The most classical model of the neural network in the context of pattern recognition is the feed-forward neural network, also known as the Multilayer Perceptron (MLP). Feedforward neural network is composed of multi-layer neurons, and the output of each layer serves as the input of the next layer. It is a kind of quintessential deep learning model for modeling high complexity data through multi-layer nonlinear transformation. Convolutional Neural Networks (CNN) proposed by LeCun et al.are a specialized kind of neural network for processing data that has a known, grid-like topology. Compared with fully connected operation, the biggest preponderance of convolution operation is weight sharing and sparse interaction,which also makes the parameters of the convolutional layer much smaller than that of the fully connected layer, and makes it more suitable for image recognition. The LeNet-5 CNN architecture proposed by LeCun et al., the first one successfully applied to handwritten number recognition,is shown in Fig. 1.With its powerful capabilities, CNN has now becomes the cornerstone of object detection,image super-resolution and other fields.

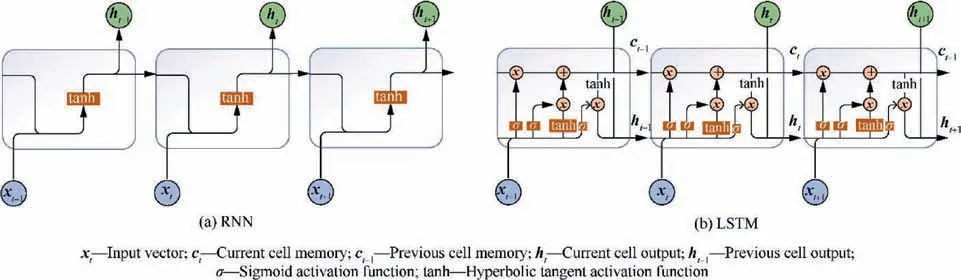

For CNN and feedforward neural networks, the links between neurons are limited to layers, while for Recurrent Neural Networks (RNN), neurons in each layer can also have links to each other. In the 1980s, the RNN proposed by Hopfieldcould not effectively solve the problems of gradient vanishing/explosion,which made it difficult to train the network,and its early application was limited.An epoch-making change appeared in 1997,the Long Short-Term Memory(LSTM)network proposed by Hochreiter and Schmidhubereffectively alleviated the long-term problem of RNN and gradient explosion by constructing a well-designed gate structure in the cell.Nowadays, LSTM plays an indispensable key role in speech recognition,text generationand other fields by virtue of its excellent ability to deal with time-series related problems.The structure of the standard RNN and LSTM are shown in Fig. 2.In addition to the standard LSTM structure, Gers and Schmidhuberproposed an LSTM variant with extra connections called peephole connection: the previous longterm state cis added as an input to the controllers of the forget gate and input gate.In addition,the Gated Recurrent Unit(GRU)cell proposed by Cho et al.in 2014 is a simplified version of the LSTM, and it seems to perform just as well as LSTM. In general, LSTM or GRU cells are one of the main reasons behind the success of RNNs in recent years, in particular for applications in Natural Language Processing (NLP).

It’s worth mentioning that,how to prevent gradient vanishing/exploding is the most important consideration in the process of deep neural network training. In 2015, Ioffe and Szegedyproposed a technique called Batch Normalization(BN) to address the vanishing/exploding gradients problems,and more generally the problem that the distribution of each layer’s inputs changes during training, as the parameters of the previous layers change (which they call the Internal Covariate Shift problem).In addition,techniques such as drop outand early stopping to suppress overfitting are also worthy of attention.

2.1.2. Classification: Support vector machine and decision trees

Fig. 1 Structure schematic of LeNet-5 model adopted from LeCun et al.20

Fig. 2 Structure schematic of RNN and LSTM network (figure was based on the idea from Hochreiter and Schmidhuber24).

Classification is the core problem of supervised learning.When the output variables are distributed with a finite number of discrete labels,the prediction problem turns into the classification problem.For classification problems,the two most commonly used and classic methods are SVM and logistic regression.SVM is a two-class classification model. Its basic model is a linear classifier with the largest interval defined in the feature space. The largest interval makes it different from the perceptron. Furthermore, the SVM can use kernel techniques proposed by Boser et al.,which makes it essentially becomes a nonlinear classifier. In reality, soft interval SVM proposed by Cortes and Vapnikare more suitable for training data which is approximately linearly separable.For a given training data set,the separation hyperplane learned by maximizing the interval or solving the corresponding convex quadratic programming problem is shown as Eq.(2),and the corresponding classification decision function is shown as Eq. (3).

where x represents data input, wand brepresent the weight vector and bias,respectively.Drucker et al.further extended SVM to make it suitable for regression problems. For linearly inseparable training data, learning a nonlinear support vector machine by using a kernel function is equivalent to implicitly learning a linear support vector machine in a highdimensional feature space.

Like SVMs, decision trees are versatile machine learning algorithms that can perform both classification and regression tasks, and even multioutput tasks. Decision trees are also the fundamental components of random forests,which are among the most powerful ML algorithms available today. The decision tree learning process usually includes three steps: feature selection, decision tree generation and decision tree pruning.These ideas are mainly derived from the ID3 algorithm proposed by Quinlanin 1986 and the C4.5algorithm proposed in 1992, as well as CART algorithm proposed by Breiman et al.in 1984.

2.2. Semi-supervised learning

The learner does not rely on external interaction and automatically uses unlabeled samples to improve learning performance,which is semi-supervised learning. For semi-supervised learning,two main categories will be introduced:Generative Adversarial Networks (GAN) and RL, both of which belong to the self-training paradigm.

2.2.1. Generative adversarial networks

So far, the most noticeable success of deep learning has focused on discriminative models, which map highdimensional and complex sensory inputs to low-dimensional class labels, such as SVM and conditional random fields.The generative model learns the joint probability distribution p(x,y) through input data, and then produces the posterior conditional probability distribution p(y|x). Typical generative models include Naive Bayesian Method and Hidden Markov Model (HMM). Traditional generative methods have more difficulties in approximating many difficult probability estimation problems, such as maximum likelihood estimation,which leads to the fact that deep generative models have had less of an impact. The GAN proposed by Goodfellow et al.sidesteps these difficulties.

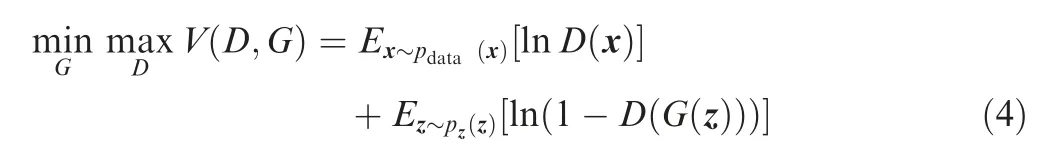

The application of MLP to GAN is the most straightforward application. In addition, some variants such as Deep Convolutional Generative Adversarial Networks (DCGAN)are also worthy of attentions in certain scenarios. GANs are inspired by the two-player minmax game, the model training can be performed by optimizing the value function V(G,D)which is shown as Eq. (4):

where G called generator is a differentiable function represented by a MLP with parameters θ(g) that maps random noise z to the input data space x, D called discriminator is the second MLP that outputs a scalar value to represent the probability that the input data is from the real sample data and not the results of the generator,Eand Erepresent the expectation that the data comes from the input and the noise,respectively.For the actual training of the model,the k-step optimization for discriminator and the 1-step optimization for generator are executed, which makes D always stay near its optimal solution as long as G changes slowly enough.This self-training mode of GAN has attracted a lot of interest,but its inherent training disequilibrium and mode collapse problems pose a great challenge to the convergence of training.In addition, the loss of generators and discriminators cannot indicate the progress of training. The WGAN proposed by Arjovsky et al.introduced the Wasserstein distance to the GAN, instead of the original Kullback-Liebler (KL) divergence, and greatly resolved the problem of disequilibrium of the original GAN.

In the category of generative models, in addition to the GAN already introduced, Variational Autoencoder (VAE)proposed by Kingma and Wellingand PixelRNN proposed by Oord et al.also worthy of attentions. The goals of VAE and GAN are basically the same, they both hope to build a model that generates target data x from latent variable z, but the implementation is different.

2.2.2. Reinforcement learning

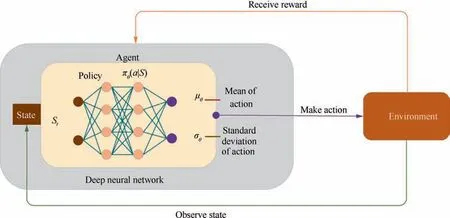

RL is one of the most exciting fields of machine learning today,and also one of the oldest. In RL, a software agent makes observations and takes actions within an environment, and in return it receives rewards. Its objective is to learn to act in a way that will maximize its expected long-term rewards. In other words, the agent acts in the environment and learns by trial and error to maximize its pleasure and minimize its pain.It is this precise approximation that makes it highly suitable for dynamic control problems in fluid mechanics. The RL algorithms can be classified into policy based, a=π(s)defines the agent’s behavior α at a given time with the state s of the system, and value based, v(s) tells us the maximum expected future reward the agent will get at each state. For fluid mechanics,the algorithm based on policy gradient is obviously more suitable for complex multi-scene flow control problems.The schematic diagram of RL based on policy gradient is shown in Fig. 3.

The basic idea of RL comes from the Markov Decision Process (MDP) proposed by Bellmanin the 1950s. Early applications of RL are limited to domains where features can be made manully and have a fully observed lowdimensional state space. In 2015, Mnih et al.used the method of training deep neural networks to build a novel artificial agent,named Deep Q Network(DQN),using end-to-end deep reinforcement learning to directly learn successful strategies from high-dimensional sensory input. DQN is a typical RL algorithm based on value function,to choose which action to take given a state with the highest Q-value (maximum expected future reward will get at each state). But for some continuous action scenes, because DQN assigns a score to any possible action, it is no longer suitable in scenes with infinite possibilities of actions.The method based on policy gradient π(a|s)=P[a|s], is more suitable for scenarios with infinite action possibilities, and it can learn stochastic policies,which means that exploration/exploitation trade off don’t need to be performed. But both of these methods have big drawbacks. That’s why, today, a new type of RL method which we can call a ‘‘hybrid method”: Actor Critic, proposed by Mnih et al.,is more popular.For actor critic,two neural networks will be used,a critic that measures how good the action taken is (value-based), and another is an actor that controls how agent behaves (policy-based). The state of the art deep RL algorithm Proximal Policy Optimization (PPO) proposed by Schulman et al.is exactly based on Actor Critic. We believe that deep RL algorithms based on PPO are more suitable for active flow control problems in fluid mechanics.

Although deep RL has made amazing achievements, it is still confronted with some intractable problems, especially for complex scenes where some specific reward functions are difficult to obtain in fluid mechanics. The reverse RL algorithm proposed by Ng and Russellis designed to find an efficient and reliable reward function.To resolve the problem that the model doesn’t have the ability to planning,that is,the ability to consider subsequent rewards,the value iterative network proposed by Tamar et al.embeds the value iterative planning algorithm through convolution into the deep neural network,making the model have stronger generalization ability than DQN.

2.3. Unsupervised learning

Unsupervised learning is a machine learning paradigm that learns the statistical laws or internal structure of data from unlabeled data. It mainly includes clustering, dimensionality reduction and probability estimation. Unsupervised learning is mainly used for data analysis or pre-processing of supervised learning.The basic idea of unsupervised learning is to perform some compression on a given matrix data, assuming that the result of compression with the smallest loss is the most essential structure.

2.3.1. Dimensionality reduction: Proper orthogonal decomposition, principal component analysis and autoencoder

Proper Orthogonal Decomposition (POD) is the most fundamental data-driven method for modal analysis of the entire unsteady flow field, which is equivalent to Principal Component Analysis(PCA).The principle of POD is that each instantaneous flow field can be represented by the linear weighted sum of orthogonal basis vectors called POD modes.However,since each instantaneous flow field is processed independently in the POD,it is difficult to understand the temporal characteristics of the flow field by the POD mode itself. PCA uses orthogonal transformation to convert the observed data represented by linearly dependent variables into a few data represented by linearly independent variables. The linearly independent variables are called principal components. The number of principal components is usually smaller than the number of original variables. In 1933, Hotellinggeneralized PCA to apply to random variables. It is worth noting that PCA can also use the kernel methodintroduced in SVM,making it possible to perform complex nonlinear projections for dimensionality reduction.

Fig. 3 Deep reinforcement learning schematic (figure was adapted from Brunton et al.42).

Researches have been carried out on the low-dimensional characteristics representation of the flow field using methods such as PCA. However, these dimensional reduction methods are linear and rely on strong assumptions. Recently, deep learning,which is a state-of-the-art non-linear mapping method, is used as a more flexible learning paradigm for low-dimensional representation of data. Autoencoders (AE)are artificial neural networks capable of reducing dimensions and learning efficient representations of the input data, called codings, without any supervision. However, the deviation between input and output is not a compelling reason that really affects the feature extraction effect of the model.Another way proposed by Vincent et al.to force the AE to learn useful features is to add noise to its inputs, training it to recover the original, noise-free inputs.

Locally Linear Embedding(LLE)proposed by Roweis and Saulis another very powerful non-linear dimensionality reduction technique. It is a Manifold Learning technique that does not rely on projections like the previous algorithms. In addition, some other dimensionality reduction methods such as Isomap and t-distributed Stochastic Neighbor Embedding(t-SNE) are also worthy of attention.

2.3.2. Clustering: k-means

Clustering is a data analysis method that combines a given sample into several categories based on the similarity or distance of their features.Intuitively,similar samples are concentrated in similar classes,and dissimilar samples are scattered in different classes. The purpose of clustering is to discover the characteristics of data through the obtained classes, and it has a wide range of applications in the fields of data mining and pattern recognition. The most commonly used clustering algorithms are hierarchical clustering and k-meansclustering. Hierarchical clustering assumes that a hierarchical structure exists between samples. k-means clustering divides the sample set into k subsets,and each sample has the smallest distance to the center of its class.

2.4. Other important topics: Transfer learning and Gaussian process

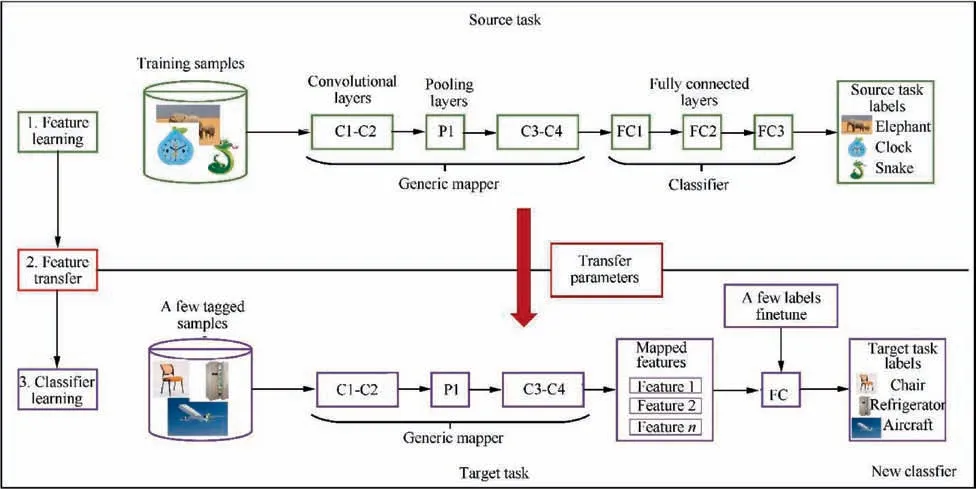

There are also some ML algorithms that are not covered in this review but are also worthy of attention from the fluid mechanics community.For the effective application of many ML algorithms,the premise is that the data used for training model and future prediction must be located in the same feature space and have the same distribution,that is,to meet the requirements of independent and identically distributed. However, this assumption may not be fully satisfied for many practical problems. For example, we are interested in the classification task in one domain, but sufficient labeled training data exist in another domain, where the data distribution of the latter may not be exactly the same as that of our target domain. In this case, transferring the knowledge domain with a large amount of labelled data to the target domain we are interested in will save a lot of effort in labelling data. In recent years,transfer learning has gradually attracted the attention of researchers and has been used to solve such problems.Transfer learning can be defined as the ability of the system to recognize and apply the knowledge and skills learned from previous tasks to new tasks.Different from multi-task learning,learning both source task and target task simultaneously, transfer learning pays more attention to target task, which also makes source and target task no longer symmetric in transfer learning.For transfer learning,an important question in most situations is‘‘when to transfer”.Relying on brute force to transfer irrelevant source domain knowledge to the target domain may be unsuccessful,that is,negative transfer.According to the different domains and tasks of the source and target, transfer learning can be divided into the following categories:inductive transfer learning, transductive transfer learning, and unsupervised transfer learning.The CNN-based transfer learning schematic is shown in Fig.4.We recommend readers to refer to the reviews on transfer learning by Panand Shaoet al.

Fig. 4 Schematic of transfer learning model based on CNN.

Gaussian Process(GP)is a kind of random process in probability theory and mathematical statistics.It is an extension of multivariate Gaussian distribution and is used in ML, signal processing and other fields. The GP can be defined as: for all x=[x,x,···,x], f(x)=[f(x),f(x),···,f(x)] obeys the multivariate Gaussian distribution, then f is a Gaussian process, which can be expressed as Eq. (5):

where μ(x)represents the mean function and returns the mean of each dimension, κ(x,x) represents the covariance function(also called kernel function)and returns the covariance matrix between the each dimension of two vectors. A GP is uniquely defined by a mean function and a kernel function,and a finitedimensional subset of a Gaussian process obeys a multivariate Gaussian distribution. The kernel function is the core of the GP that generates a covariance matrix (correlation coefficient matrix) to measure the ‘‘distance” between any two points.The most commonly used kernel function is the Gaussian kernel function, which is also called the Radial Basis Function(RBF)kernel.GP regression naturally supports the prediction uncertainty (confidence interval) of the model, and directly outputs the probability distribution of the value of the prediction point. However, as a non-parameter model, the GP regression needs to solve inverse matrix for all data points in the process of each inferring. When the data volume is large,the GP becomes very intractable. For a more detailed introduction to the Gaussian process,please refer to Rasmussen’swork.

3. Flow modeling with machine learning

For nearly a century,the basic laws of physics such as conservation laws have deeply occupied the dominant position in the research of fluid mechanics, and have greatly promoted the research and application of fluid mechanics. However, for the flow with high Reynolds number that is common in the aerospace, using Direct Numerical Simulation (DNS) based on directly solving the Navier-Stokes equations to obtain the flow field parameters will consume huge computing resources,which is far from the degree of practical engineering application. On this basis, many turbulence models have been developed to approximate these conservation equations, or specific experiments can be performed to obtain corresponding results. However, considering that the experiment largely depends on the specific configuration,and the numerical simulation cannot control the flow in real time, the reconstruction and prediction of flow field parameters based on ML and the active flow control based on RL provide a new avenue for traditional fluid mechanics research.

For complex high-dimensional unsteady flow fields, traditional flow characteristics representation methods, such as time serialization of the pressure on the surface, cannot comprehensively clarify the spatial-temporal effects of flow.Data-driven methods such as dimensionality reduction can extract the key features and main patterns of flow that are used to describe the essential internal structure of the data.Among these data-driven methods, POD, proposed by Lumley,is one of the most notable examples. With the improvement of numerical calculation capabilities and the enrichment of experimental measurement methods, fluid mechanics is gradually becoming a research field with abundant data,which means that it is gradually applicable to ML algorithms.

The flow field feature representation and turbulence modeling based on machine learning will be elaborated in this section respectively.

3.1. Flow field representation

Pattern recognition and data mining are the core of machine learning algorithms. Due to the inherent high-dimensional nonlinearity of the flow field, it is particularly intractable to predict and analyze the spatial-temporal behavior of the flow field. We discuss the reconstruction and prediction of flow field,followed by the super-resolution and denoising.Furthermore, the extraction of flow features and the reduced-order model will also be involved.

3.1.1. Reconstruction and prediction

Nowadays, the multi-dimensional aerodynamic database for aeronautical engineering is widely used in the fields of design,optimization and control,etc.The term multidimensional refers to multiple parameters, such as altitude, Mach number, and angle of attack. The amount of data required often increases exponentially as the number of dimension increases,also known as a dimensional disaster. To reduce computational consumption, a common strategy is to find a suitable surrogate model.Commonly used surrogate models can be divided into three categories, namely multi-fidelity models, simplified models based on projection, and data fitting models. The latter, also known as data-driven models. Compared with simplified projectionbased models such as POD,data-driven models aim to establish the mapping relationship between parameters in a‘‘black box”manner.The reconstruction and prediction of flow field parameters are formally based on this concept.

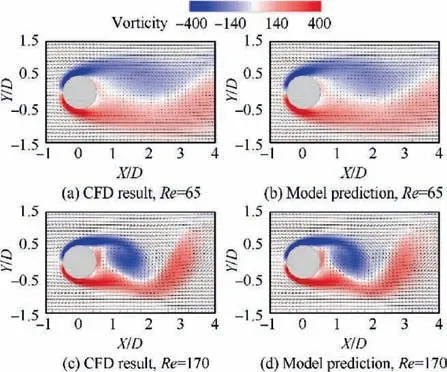

Fig. 5 Comparison of instantaneous flow field of cylinder between model prediction and CFD results.60

One interesting application of ML in fluid dynamics is to investigate the mapping relationship between fluid parameters.By data-driven methodology, a model can be regressed by establishing the intrinsic connections between these parameters. The fusion CNN constructed by Jin et al.with or without a pooling layer uses the pressure of the cylinder surface to predict the wake velocity.The comparison results of its prediction of instantaneous velocity field of the cylinder are shown in Fig. 5.This model can simultaneously capture accurate spatial-temporal information of the flow field around the cylinder,and the features that are invariant of small translations of pressure fluctuations on the cylinder surface in temporal dimension. Aiming at the unsteady flow field prediction of the flow around a cylinder, Lee and Youused GAN to extract fluid dynamics characteristics, and analyzed the impact of the proposed physical loss function on the prediction results. The physical loss function is designed to explicitly provide conservation information to the model. Compared with using generative methods to construct models, the most important enlightenment of Lee and You’s research is to remind researchers of the importance of combining prior physical conservation laws with ML models. Furthermore, Han et al.designed a novel hybrid neural network using the ConvLSTMnetwork to capture spatiotemporal dynamics mapping from high-dimensional complex flow fields without using any explicit dimensionality reduction methods, and predict the future flow field around a cylinder based on the captured features. The research of Han et al.shows that it may be more effective to combine LSTM and convolution to study the temporal effect of unsteady flow field. In addition,LSTM is also used to predict turbulent shear flowwhich aims to evaluate the feasibility of using neural networks to predict low-order representations of near-wall turbulence. In addition to predicting the turbulence characteristics, Kim and Leeused CNN to predict the local heat flux by assuming that the local heat flux in the normal direction of the wall could be explicitly expressed through the multi-layer nonlinear neural network according to the shear stress and wall pressure fluctuation of the nearby wall. In addition to comparing the Root-Mean-Square Error (RMSE) and correlation coefficient between the model prediction results and DNS, the statistical properties such as Probability Density Function (PDF) and high order moment are also analyzed and compared. Raissi et al.used a coupled neural network with discrete spatialtemporal data as input to solve the velocity field and pressure field around the cylinder and the motion of the structure,instead of using the numerical discrete method to solve the fluid mechanics equation and the dynamic equation of the structure motion. Given some limited and scattered information about the velocity field, the lift and drag on the surface of the cylinder are predicted. As a part of the loss function,the governing equation plays the role of regularization.

Combining these studies, it can be found that embedding necessary flow field information such as flow control equations and conservation laws into the neural network model can deepen the interpretability and generalization ability of the ML model. In addition, the specific skills of building models also need to consider specific problem scenarios, such as whether to use LSTM networks related to time series when reconstructing unsteady flow fields. More importantly, in the analysis of results, statistical analysis of turbulence characteristics is essential, such as turbulence energy spectrum.

In the field of aeronautical engineering, obtaining the flow field around the airfoil is an important factor for obtaining pressure and skin friction, as well as for studying flow separation, transition, wake vortex, etc. These parameters and flow characteristics are very important for the design of aircraft wings and helicopter rotor blades. Traditionally, the flow field around an airfoil is obtained by solving the Navier-Stokes equation on a computational grid with appropriate boundary conditions. However, when optimizing the airfoil and solving the fluid-structure interaction, the CFD calculation is still relatively time-consuming,which requires a lot of iterative calculations. The emergence of efficient deep learning tools has brought a new modeling paradigm to physical systems. By deploying machine learning technology, existing scientific databases can be used for aerodynamic modeling, analysis and design.

The use of ML to predict the flow field around the airfoil has also made gratifying progress. The flow field around the airfoil is considered as a function of its geometry, angle of attack and incoming flow Reynolds number.Sekar et al.used CNN to extract the geometric features of the airfoil and input them into the MLP together with the Reynolds number and the angle of attack to predict the flow field around the airfoil.Based on the same idea, Zhangand Yilmazet al. used CNN to predict the lift coefficient of the airfoil, and the CNN-based model has smaller geometric constraints in terms of prediction accuracy. Hocˇevar et al.utilized Radial Basis Function Neural Network(RBFNN)to estimate the turbulent wake.Based on the same idea,Bhatnagar et al.constructed a ML framework based on CNN to predict the flow field of different airfoils under variable flow conditions, making it possible to study the effects of airfoil shape and flow conditions on aerodynamic parameters in real time.Combining these studies,it can be found that the prediction of the flow field around the airfoil is usually achieved by constructing the mapping relationship between the geometric parameters of the airfoil and the surrounding flow field parameters. In essence, the use of deep neural networks to build models is more like a modeling method that accurately implements interpolation, and its ability to extrapolate and generalize remains to be verified.

In addition,drogue detection is a basic problem in the close docking stage of autonomous aerial refueling. Wang et al.used CNN to achieve highly robust drogue detection,avoiding the traditional method that requires artificial features to be placed on the drogue. The experimental results show that the method based on deep neural network is more excellent in accuracy, detection speed and robustness than traditional methods.Besides,the reliability of the Engine Electronic Controller (EEC) is an important issue affecting the safety of aircraft engines, and it is of great significance to accurately evaluate its reliability.Wang et al.used Bayesian deep learning to propose a reliability assessment method for the Mean Time Between Failures (MTBF) in the design phase considering complex products and uncertain task profiles.This reliability assessment method can accurately evaluate the MTBF of the EEC without referring to physical experiments.

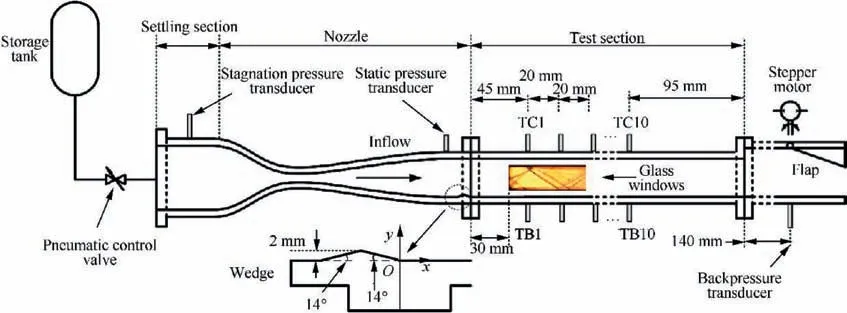

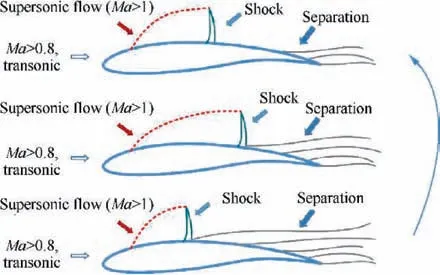

For the transonic or supersonic cascades of aero-engine compressors,the high back pressure generated by the combustion chamber causes the shock wave structure in the cascade channel to be gradually pushed toward the cascade lip, which will cause a stall in severe cases.As a result,the intake capture flow rate of the cascade drops sharply.Therefore,for the compressor cascade of an aero engine, accurate, efficient and prompt airflow status monitoring is indispensable.Traditional state monitoring methods mainly include gas path analysis,installing miniature accelerometers on rotor blades,and collecting vibration data of blade cascades.However, due to their inherent limitations, these methods are difficult to intuitively and comprehensively reflect the state of the flow field.More efficient methods for monitoring the flow field conditions are urgently needed.The traditional method of obtaining flow field structure based on schlieren is shown as Fig.6.The flow field reconstruction method based on deep learning can reconstruct a more comprehensive flow field based on less local information, which provides a new way to develop a prompt and efficient flow field state monitoring method.

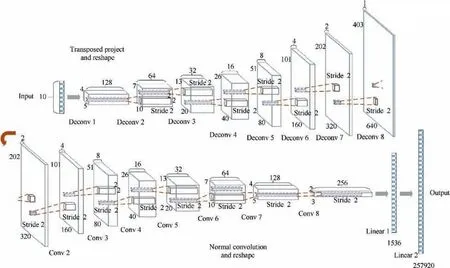

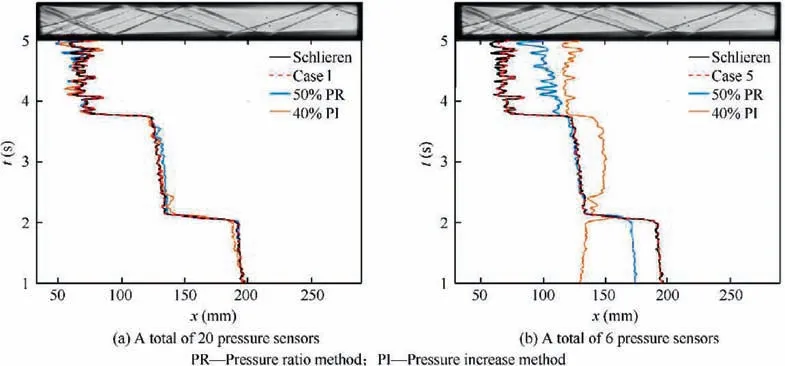

In terms of flow field reconstruction, Li et al.used transposed convolution network as shown in Fig. 7and residual network to reconstruct the flow field of supersonic cascade SAV21 under the condition of fixed incoming Mach number and continuous change of back pressure,and obtained the flow field image of cascade channel by using discrete pressure value of cascade wall surface.The reconstruction relative error in the test set was less than 3.5%. In addition to using experimental data,Li et al.explored the ability of deep neural networks to identify the shock wave structure of the flow field under the complex and variable working conditions of variable incoming Mach number and back pressure based on numerical simulation data. In terms of the isolator of scramjet engine, Kong et al.used the CNN of the fusion path to achieve highprecision flow field reconstruction, and the detection accuracy of the leading edge of the shock train was more robust than traditional methods.The comparison results of the shock train leading edge location detection between CNN-based model and traditional methods are shown in Fig. 8.In essence,Liand Kongconstructed the mapping relationship between wall pressure information and flow field structure,but only considered the change of flow field structure during the rise of back pressure, and did not further explore whether there was hysteresis in the decline of back pressure.

In addition to the state monitoring of the flow field, the fault diagnosis of rotating machinery is also of great significance in the industry.Recently,fault diagnosis methods based on ML have become a research hotspot. In this respect, Li et al.proposed a CNN-based infrared thermal imaging fault diagnosis method. According to the bearing data, Zhang et al.converted the original signal into a two-dimensional image, and used CNN to extract and classify fault features,which effectively improved the accuracy of bearing fault diagnosis. Considering that the acquisition cost of fault samples is relatively expensive and inevitably contains noise, the identification performance of the diagnosis model is not ideal. The weighted extension neural network constructed by Wang et al.built a corresponding fault diagnosis model for small samples turbo-generator sets with noise through different types of connection weights and improved correlation functions.

In general,in terms of flow field reconstruction and prediction, most of the existing researches use convolutional neural networks. In addition, considering the unsteadiness of flows,some researches combine LSTM with convolution to reveal the unsteady spatial-temporal effects of flow and have made some preliminary progress. In principle, using ML to reconstruct and predict flow fields may be a technical challenge but is ordinary in physical principles. The latest developments in ML-based methods are mathematically based on function approximation, which is also common in fluid research. The difference is that the previous fluid research based on function approximation mainly combines dimensional analysis and physical laws, and the mathematical form is explicit, with few input and output.In contrast,with ML-based approaches,the requirements for functions can be more freely specified and tailored to specific problems than ever before.

It is worth noting that deep learning often means that the amount of effective data that used for model training far exceeds the number of parameters of the model, which will lead to the occurrence of overfitting and the poor extrapolation ability of the model, making it impossible to accurately predict the unknown situations. Deep learning once again attracted the attention of the public is the excellent performance of the CNN on the ImageNetdata set in 2012, but the training data set for problems such as image classification or speech recognition is very large, making the prediction of unknown data very likely falls within the range of interpolation in the data set. In other words, the predictive ability of the model depends on the data used for training, that is, the model can only learn the intrinsic features embedded in the data. Although fluid mechanics is gradually becoming a data-rich subject by virtue of numerical simulation and increasingly abundant experimental measurement methods, it is still very different from the application mode of image classification.We believe that the establishment of a large enough,labeled fluid database for a certain scenario will further promote the deployment of machine learning-based algorithms.

Fig. 6 Schematic of scramjet isolator model installed in direct connected wind tunnel used for monitoring airflow state.77

Fig. 7 Architecture of transposed convolutional network proposed by Li et al.78 (‘‘Deconv” denotes transposed convolution; ‘‘Conv”denotes convolutional operation; and ‘‘Linear” denotes fully connected layer).

3.1.2. Super-resolution and denoising

For the practical application of aeronautical engineering,obtaining the complex structure of the turbulent flow field,whether it is numerical simulation or experimental fluid mechanics, has always been a long-term challenge. In CFD,complex details of turbulent structures can be obtained using DNS with billions of grids, but this requires costly computing resources. For experimental fluid mechanics, PIV or schlieren can be used to capture major large structures well,but the spatial resolution is limited by the inherent characteristics of the camera and external devices. Considering that it is difficult to obtain fine-scale structures in turbulent flow in many cases of practical engineering, it is essential to enhance the spatial resolution of the flow field. Recent developments in superresolution technologies based on deep learning algorithms for estimating high-resolution images from low-resolution images using Artificial Intelligence (AI) have received increasing attentions.

Fig. 8 Comparison of detection results of leading edge of shock train between CNN and traditional methods with different configuration of pressure sensors.77

Super-Resolution (SR) refers to the process of reconstructing the corresponding high-resolution image from the observed low-resolution image.Super-resolution belongs to the category of inverse problems.For a low-resolution image,there may be multiple different high-resolution images corresponding to it,subsequently, prior information is usually added to normalize the solution of high-resolution. In traditional methods, the prior information could be learned through several examples of low-high resolution images that appear in pairs. The SR based on deep learning directly learns the end-to-end mapping function from low resolution images to high-resolution images through neural networks.

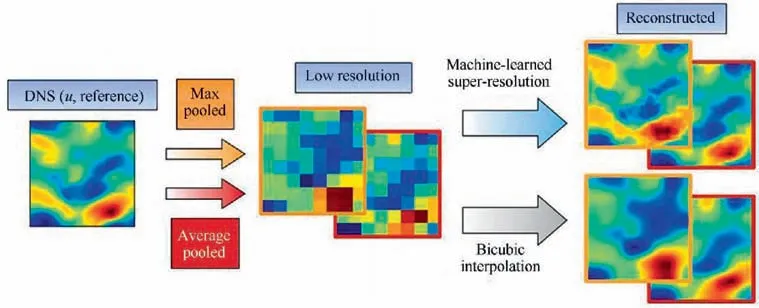

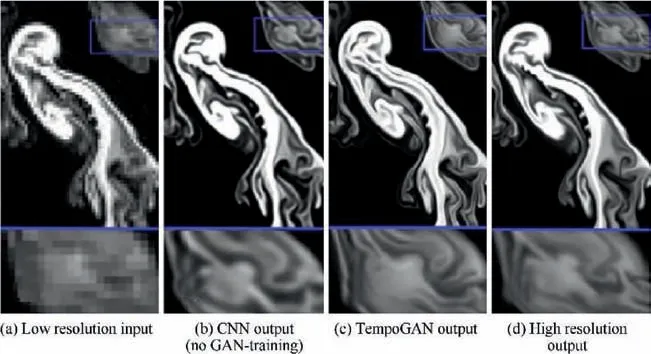

Recently, Dong et al.proposed the Super-Resolution Convolutional Neural Network (SRCNN) with only three convolutional layers to solve the problem of image superresolution. Compared with traditional interpolation and sparse coding methods, the accuracy of image reconstruction was greatly improved. In addition, a highly accurate SR method based on a Very Deep Convolutional Network(VDSR)proposed by Kim et al.by combining the CNN with the residual structurefurther improves the accuracy of image super-resolution. Recently, the hybrid Downsampled Skip-Connection/Multi-Scale (DSC/MS) model proposed by Fukami et al.carried out the research of turbulence superresolution for two-dimensional cylinder wakes,and the results showed that the model could reconstruct very accurate turbulence structures from extremely coarse turbulence images,which holding great potential in revealing the physics of complex turbulence sub-grid scale. In addition, the turbulent energy spectrum can be reconstructed accurately.More importantly, research by Fukami et al.indicates that traditional super-resolution methods based on filter operation have lowpass characteristics and may not be suitable for highfrequency problems such as turbulence. The super-resolution workflow of the turbulent flow field structure is shown in Fig. 9.The Multiple Temporal Paths CNN model (MTPC)proposed by Liu et al.takes the time series of the turbulent velocity field as input, covers both temporal and spatial information,and three temporal paths are designed to fully capture the characteristics in different time ranges.The results indicate that the model captures the turbulent characteristics such as the kinetic energy spectrum very well. The research of Liuand Jinet al. further pointed out that carefully designing multiple paths with different operations in the model may be crucial to capture the flow characteristics of different spatialtemporal scales.In addition to supervised learning,some other studies have been proposed by Xie et al.as shown in Fig.10 and Deng et al.respectively to conduct turbulence superresolution by virtue of GANs. The research of Xie et al.applied conditional GAN to the four-dimensional data set for the first time.More importantly,it proved that it is possible to train a generator with temporal coherence through the time discriminator.Large-Eddy Simulations(LES)may also benefit from the super-resolution by leveraging high-resolution data on a smaller domain to enhance the resolution on a larger imaging system.

In general, the end-to-end super-resolution method based on deep neural networks has higher reconstruction accuracy than traditional interpolation-based methods, and the data input format is more arbitrary. In the selection of specific machine learning methods, based on the existing research results, supervised learning does not perform better than generating antagonistic models. At the same time,it is also necessary to take into account the multi-scale spatial-temporal effects between input data to design a detailed model structure.

PIV is an important non-intrusive quantitative velocity measurement technique,which obtains motion vectors by analyzing continuous particle image records. This is a crucial problem that has both large input space(different particle size,concentration,and image noise)and large output space(different potential flow regimes,velocity ranges,and sub-pixel accuracy estimates). The great success of ML, especially deep learning in the image field, has provided researchers with a lot of inspiration to use deep neural networks to perform PIV speed estimation.For experimental fluid mechanics,much effort is devoted to improving the spatial resolution of PIV data. Cai et al.used CNN to extract velocity vector fields from PIV images, which allows to improve the computational efficiency without reducing the accuracy.To better extract PIV velocity from the particle images, the PIV-DCNN model proposed by Lee et al.with four level regression deep CNN was developed. In addition to using ML techniques to reconstruct the velocity field from PIV images, it can also be used to remove spurious values in the velocity field.Another way to remove spurious values is to train an autoencoder to learn useful features by adding noise to its inputs and to recover the original, noise-free inputs. The success of the ML-based model on a large number of synthetic and experimental particle images means that it paves a whole new way for accurate PIV analysis using supervised deep learning models.Especially the method based on ML can more accurately extract the fine structure of complex flow field, which greatly improves the spatial resolution of PIV. Further efforts are needed in terms of robustness and computational efficiency.

Fig. 9 Schematic of super-resolution reconstruction of turbulent velocity field.87

Fig. 10 Fluid flow with different resolutions.89

3.1.3. Flow feature extraction

Pattern recognition and data mining are the core applications of ML. Even in a simple aerodynamic configuration, fluid motion will exhibit complex spatial and temporal characteristics. Before the analysis of complex flows, the extraction and analysis of the dominant factors or modes of flow has become a recognized research premise. The process of extracting the features of the dominant factor of the flow field usually means the modal decomposition of the flow field. The modal decomposition of the flow field can be roughly divided into two categories, data-driven methods such as POD and Dynamic Mode Decomposition (DMD), and operator-driven methods such as Koopman analysis.The former is mainly based on flow field data, such as CFD calculation results or experimental measurements, while the latter is based on the linearized Navier-Stokes equation. Among these modal decomposition methods of flow fields, the most representative one is POD,which defines an orthogonal linear transformation from physical coordinates into a modal basis. POD was first introduced by Lumleyas a mathematical technique to extract coherent structures from turbulent flow fields. Subsequently, Sirovichproposed the snapshot method in order to solve the problem that the covariance matrix cannot be effectively solved in the actual fluid mechanics applications. Due to the significant reduction in the consumption of computing resources and the occupation of memory,the snapshot method has now been widely used in fluid data to determine the POD mode in fluid mechanics. For a more detailed overview of the modal decomposition of flow fields we refer readers to the review articles by Tairaand Berkoozet al.

DMD is another major flow field mode decomposition method, which decomposes time-resolved data into different modes,with each mode having a separate oscillation frequency characteristic. Compared with POD, DMD does not require any prior physical knowledge about the flow field.It is a purely data-driven analysis method. It is difficult to rank the decomposed modes to determine which mode is more physically important. Moreover,for POD, since each instantaneous flow field is dealt with separately in POD method,which is difficult to exploit the temporal effects of unsteady flow field. Most importantly,considering that these flow field modal decomposition methods are linear and based on strong physical assumptions,the types of flow that can be analyzed are limited.For the transonic buffeting problem of NACA0012 airfoil,Kou et al.can accurately identify the dominant flow modes by combining the High-Order Dynamic Mode Decomposition(HODMD) with mode selection criteria, which will be helpful to develop a more concise Reduced-Order Model (ROM)structure. More detailed introduction about DMD we refer readers to the article by Schmid.

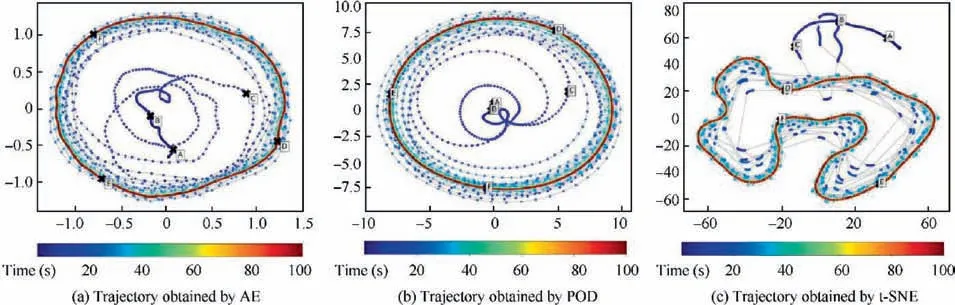

Autoencoder (AE), which is a state-of-art non-linear mapping method,is utilized as a more feasible way to express lowdimensional representations. Omata and Shirayamaproposed a low-dimensional representation method of the temporal behavior of the flow field based on deep AE to visualize the temporal and spatial structure of the unsteady flow field of an airfoil. And the temporal behavior of the spatial structure of the flow field can be visualized as a trajectory in the feature space.The trajectories of the spatial-temporal structure of airfoil flow fields obtained by different methods are shown in Fig. 11.In general, the study by Omata and Shirayamaexplained the difficulties in processing time series data of unsteady flow fields, and proposed a method to visualize and compare the spatiotemporal structure of unsteady flow fields.The method consists of two stages,namely mapping the spatial structure of the instantaneous flow field to a low-dimensional feature space and displaying the mapped data in the form of time series. It should be noted that the physical meaning of axes in low-dimensional eigenspaces is not explained in detail by Omata and Shirayama.

The important significance of flow feature extraction is that the core goal of flow research is to discover the mechanism,restore a flow process to its essence, recognize the dominant mechanism in the Navier-Stokes equation, and understand the terms in the equation that control the process. The MLbased method, especially the deep autoencoder, can provide richer and more intuitive explanations in terms of dimensionality reduction and feature extraction compared with the previous POD. Therefore, compared to the way in which supervised learning builds the relationship between model parameters,deep autoencoders based on unsupervised learning may be more effective in revealing deep-level mechanisms in fluid research.

3.2. Turbulence modeling

Turbulence is a common physical characteristic in fluid flow.A sufficient understanding of boundary layer turbulence on airfoils is very important for its performance. In the combustion chamber, intense turbulence increases the mixing of fuel and air, improves overall combustion efficiency and reduces emissions. For airfoil design, fuel consumption can be reduced by delaying the generation of boundary layer turbulence over the wing surface. The representation of turbulent motion is very challenging because it involves a wide range of spatialtemporal scale effects and has a strong chaotic character.The introduction of machine learning strategies provides a new research perspective for turbulence modeling.

3.2.1. Neural network modeling

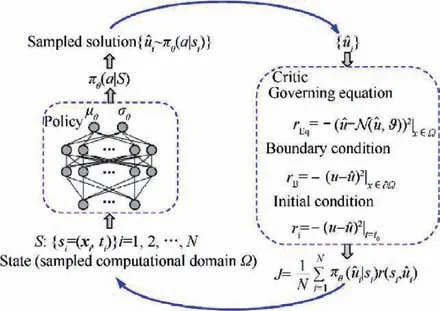

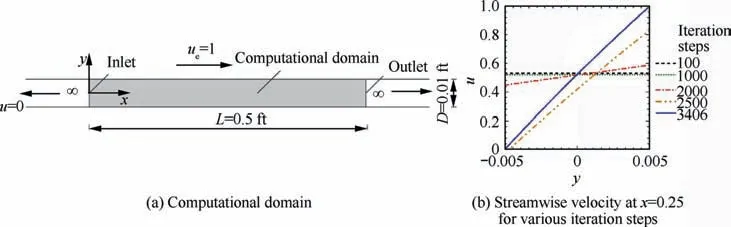

In the past few decades, neural networks have been used to model fluid systems,among which one of the most widely used is to solve differential equations using neural networks. Based on the universal approximation theoremof neural networks,Dissanayake and Phan-Thientransformed the numerical problem of solving Partial Differential Equations (PDE) into the problem of solving unconstrained minimum values.Lagaris et al.proposed to incorporate the feedforward fully connected neural network with adjustable weights into the differential equation solution to solve the initial value and boundary value problems. In the following years, the research of using neural network to solve differential equations gradually fell into a trough. With the prosperity of deep learning, this field showed vitality again. A natural analogy between differential equations and RL maybe exists faintly. E et al.proposed a new algorithm for solving parabolic partial differential equations and Backward Stochastic Differential Equations (BSDE), which is based on the analogy between BSDE and RL. Moreover, the gradient of the solution acts as the policy function, and the loss function is given by the error between the prescribed terminal conditions and the BSDE solution. Wei et al.proposed a rule-based general self-learning method based on deep reinforcement learning to solve nonlinear Ordinary Differential Equations (ODE) and PDE which is shown as Fig. 12,and embed the equations as critics into the network, which is reasonable from the physics perspective. It is worth noting that the research of Wei et al.pointed out that discrete-time solutions can be treated as multi-tasks with the same control equation.Considering the solutions are temporally continuous,the current parameters of the network provide a good initialization for the next time step, which makes the model possesses the characteristics of transfer learning. The feasibility of this method is verified by using Deep Reinforcement Learning (DRL) to solve twodimensional steady state Couette flow, which is shown as Fig. 13.

Fig. 11 Proposed visualization of spatial-temporal structure of airfoil’s flow field as a trajectory.99

Fig. 12 DRL schematic for solving differential equations(actions are defined as candidate solutions of differential equations and are sampled from output policies of policy network,states are defined as sampling points of continuous solution domain of differential equations and are input of policy network).103

In addition, Raissi and Karniaintroduced the hidden physical model that can use the underlying physics laws expressed by time-dependent and nonlinear PDEs to extract the patterns from the high-dimensional data generated from the experiment. We also noticed that the physical-informed neural networkwhich is capable of encoding underlying physical laws that govern the data set and can be described by PDEs. The research by Raissi and Karniadakisreminds us of the possibility of using ML-based models to reveal latent variables and reduce the number of parametric studies. Furthermore, it pointed out that embedding physical information in the model when studying complex physical systems is crucial for effectively extracting information in scenarios with scarce data.

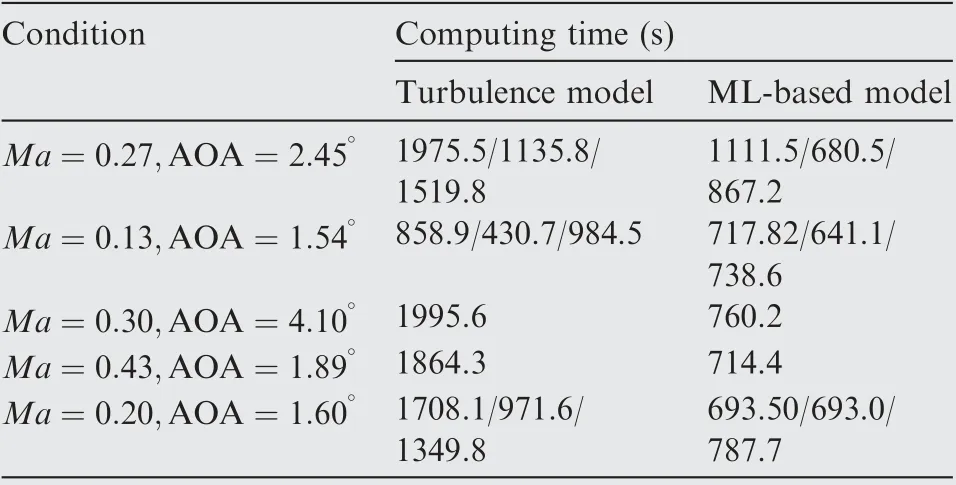

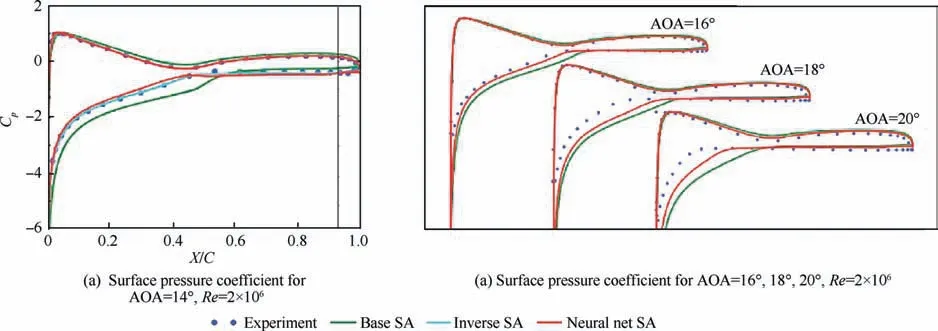

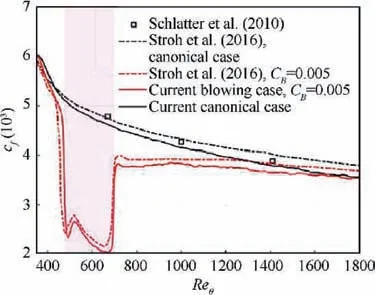

The use of neural networks to enhance or improve turbulence models is another hot topic in neural network modeling.For high Reynolds number flows around airfoils,Zhu et al.used RBF neural network to build separately models for different regions respectively, and directly constructed the mapping relationship between the turbulent eddy viscosity and the mean flow variables instead of the original PDEs. Moreover,the partition modeling strategy proposed by Zhu et al.can customize the features and model parameters of different regions, which allows the model to more accurately capture the flow characteristics of different regions. Furthermore, the Latin Hypercube Sampling (LHS) method used by Zhu et al.when constructing training samples also provides a basis for the construction of the database. The comparison of the time required for flow field calculation between the turbulence model and ML-based model is shown in Table 1.In addition,Yang and Xiaoused Random Forest(RF) and neural network to improve the four equations k-ω-γ-Aturbulence transition model by building the mapping relationship between mean flow variables and correction terms, and found that pressure gradient of streamwise played an important role in the physical information and interpretability of the model by analyzing the relative importance of each feature in the RF model. In terms of using field inversion and ML to enhance the prediction ability of turbulence model, Singh et al.proposed to infer the spatial distribution of model discrepancies through inverse modeling, and to reconstruct discrepancy information from a large amount the inverse problems into corrective model forms through ML.The research of Singh et al.showed that when the model forms are reconstructed by neural networks and embedded in the standard solver, the data-driven Spalart-Allmaras (SA) model can provide more accurate prediction of lift coefficients and stall onset angles. The comparison between the predicted results of the improved SA model and the original model on the surface pressure coefficients of airfoils at different angles of attack is shown as Fig. 14.

In fluid mechanics, Milano and Koumoutsakosused neural network to reconstruct the near-wall flow field in turbulent channel flow generated by DNS,and compared the reconstructed results with POD, indicating that this method provided improved reconstruction and prediction capability.For viscoplastic fluids, Muravleva et al.proposed the use of ML to construct a reduced-order model of Bingham medium duct flow.In terms of using neural networks to find turbulence models, Gamahara and Hattoriused neural networks to find new subgrid models of the Subgrid-Scale(SGS)stress in LES. Considering the pressure fluctuations on the airfoil surface, aerodynamic disturbances will leave certain characteristics in the pressure exerted on the airfoil surface. To what extent can the characteristics of these disturbances be resolved directly from the measured pressure,and the aerodynamic disturbances on the airfoil surface based on ML have also made preliminary progress. In this respect, Hou et al.used machine learning algorithms to investigate the extent to which the characteristics of these disturbances can be parsed from surface pressure measurements. Two different ML architectures were constructed, and the results showed that the ML model integrated with the dynamic system architecture had higher recognition accuracy. It is worth mentioning that overfitting can be reduced by injecting random noise into the input pressure data in these two methods. For porous media flow,Wang et al.constructed a neural network simplification method for multi-scale problems by constructing a dimensionality reduction space and considering multi-continuum information, which does not need to be solved like POD.

The generalization ability of ML in fluid mechanics,that is,the ability of extrapolation, has attracted much attention in practical applications. The neural network’s prediction of unknown data is more inclined to the data falling within the probability distribution range of training data,that is,the data satisfying the interpolation attribute. Thus, the selection of neural network models should be cautious.Common methods of model selection include regularization, which has been introduced before, and cross validation. Although fluid mechanics has gradually become a data-rich subject, there are still few valid data and the cost of obtaining it is still very expensive. For contexts where training data is scarce, commonly used cross-validation methods include S-fold crossvalidation and leave-one-out cross-validation.

Fig. 13 DRL to solve differential equation of steady-state Couette flow.103

Table 1 Comparison of calculation time of turbulence model and ML-based model for flow field around airfoil.106

3.2.2. Turbulence model closure

Reynolds-Averaged Navier-Stokes(RANS)models are widely used in aeronautical engineering because of their tractable computational abilities.Most two-equation RANS models rely on the Linear Eddy Viscosity Model (LEVM) to achieve the Reynolds stress closure, which assumes a linear relationship between the Reynolds stress and the mean strain rate. However,these models cannot provide satisfactory prediction accuracy in many flows related to aeronautical engineering,such as curved flow,collision flow,and separation flow.Even if a more advanced nonlinear eddy viscosity model is proposed, it has not been widely used in aeronautical engineering due to its inability to provide sustained high performance and difficulty in convergence. Recently, there has been increasing interest in applying ML methods to provide improved Reynolds stress closure to improve the predictive ability of RANS models.

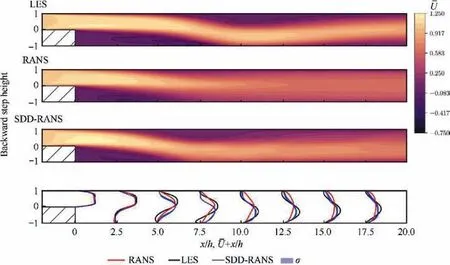

For turbulence modeling, the use of ML to study turbulence model closure is a very active field. Most recent datadriven research is evaluating the uncertainty of the RANS model. It is generally believed that the RANS model is much easier and efficient to implement in engineering than the DNS model and the LES model. The RANS model relies on the Boussinesq hypothesis, which, however, is inconsistent with the underlying physical laws of many common flows,resulting in inaccurate predictions. Therefore, it is indispensable to evaluate the uncertainty of the RANS calculation results. Ling and Templetonused three different ML algorithms: SVM, Adaboost decision trees, and RF to evaluate the uncertainty of the RANS calculation results under different configurations of based on different RANS eddy viscosity assumptions. And markers were also developed to indicate when underlying assumptions of RANS model break down.In addition, Tracey et al.investigated the uncertainties of RANS models for flame combustion in turbulent mixed layers and turbulent anisotropy in non-equilibrium boundary layer flows, respectively. In addition to using the introduced artificial neural network to calculate and express the uncertainty of the RANS model,Edeling et al.used the Bayesian model to express the uncertainty of the total solution with the probability box (p-box) to express the parameter variability across flows.In addition,the data-driven framework proposed by Geneva and Zabarasusing Bayesian deep neural networks can not only improve the predicted results of RANS,but also provide probability boundaries for fluid quantities such as pressure and velocity. The prediction results of the improved RANS model and the original model for the backward step flow are compared with the results of LES model,as shown in Fig. 15.The uncertainty of the turbulence model includes not only the uncertainty of the model form,but also the cognitive uncertainty caused by the limited training data. Using ML to quantify the uncertainty of the RANS model and improve its predictive ability has far-reaching research potential.

Fig. 14 Comparison of pressure coefficient between results of base SA model and ML-augmented SA model for S809 airfoil.108

In general,the use of ML to evaluate the uncertainty of the RANS model usually refers to the use of supervised learning methods to indicate areas where the RANS model has higher uncertainty due to specific model assumptions. Supervised learning compares RANS results with high-fidelity results in different flow configurations, and acts as a binary classifier to mark areas with poor RANS model accuracy. The key to this work is how to develop features, error metrics, and verification procedures to ensure that the tag classification algorithm is extended to new flow configurations.

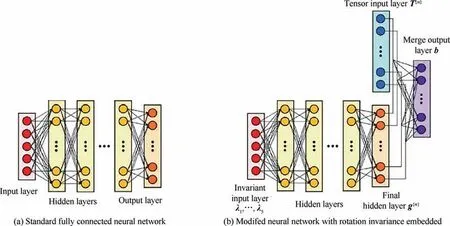

Modeling the parameters of the turbulence model is also one of the research directions.The extreme separation of temporal and spatial scales in turbulence makes it extremely costly to resolve all scales in numerical simulations. The usual approach is to truncate these small scales and use a closure model to simulate their impacts on large scales. Commonly used methods usually include RANS model and LES model.However, these models may require careful tuning to fully match the data from numerical simulations and experiments.Tracey et al.modeled the source term of the SA model which is a one-equation closure to the RANS equations that models the transport of turbulent kinetic energy. In addition,some researches on modeling the SGS stress in LES are also worthy of attention. The uniform neural network model proposed by Wang et al.provided closure for all the components of SGS stress and considered the symmetry of SGS stress at the same time, and verified the proposed model through isotropic LES turbulence. Moreover, the research of Wang et al.found that in addition to the gradient of the filtered velocity vector, the SGS stress is also related to the second derivative of the filtered velocity vector. More importantly, they found that different SGS stress components have different dependencies on the input features,but there are certain rules.Maulik et al.used convolution and deconvolution to obtain the closure terms of two-dimensional Kraichnan turbulence to account for SGS turbulence effects.Accordingly,Maulik et al.explored the use of ML to dynamically infer the applicable areas of specific turbulence model hypotheses,so as to improve the prediction ability of turbulence dynamics for a wide range of problems. It is worth highlighting that the landmark research in turbulence modeling comes from Ling et al.,this study pointed out that embedding Galilean invariance properties into machine learning models is critical to achieving high performance and the structure of its model is shown as Fig. 16.. The Tensor Basis Neural Network(TBNN) model can not only predict anisotropic eigenvalues,but also predict anisotropic tensors while maintaining Galileo invariance. Research by Ling et al. proved that deep neural networks can provide improved Reynolds stress closure. This reminds us the importance of embedding known physical knowledge or laws into ML frameworks,which we believe will become more and more important in future during the integration of ML and fluid mechanics.For more detailed data-driven turbulence modeling, please refer to the excellent review provided by Duraisamy et al.and Durbin.

In general, the research on the closure of turbulence model combined with ML can be divided into two categories: quantitative determination of the uncertainty of RANS model and modeling of Reynolds stress in RANS model and SGS stress in LES model. Most of the ML algorithms used are based on supervised learning, and semi-supervised learning algorithms such as generative adversarial models may not be suitable for these scenarios.

Fig. 15 Normalized stream-wise mean velocity (U-) contours of backward step flow with Reynolds number of 500 (top is LES highfidelity solution,middle is baseline RANS low-fidelity solution followed by data-driven ML augmented RANS solution, lastly is streamwise mean velocity profiles for all simulations at different cross-sections).118

Fig. 16 Schematic of neural network architecture proposed by Ling et al.123 (b —Normalized Reynolds stress anisotropy tensor; T(n)—Isotropic tensor basic; λ1,λ2,···,λ5 —Five invariant tensors; g(n) —Scalar coefficients weighting the basic tensors).

A growing number of studies indicate that it is becoming increasingly indispensable to explicitly incorporate parts of physical information such as symmetry,constraints,or conservation laws into ML architectures.This is of great significance for promoting the interpretability of data-driven ML algorithms. The combination of ML and the closure of turbulence models presents some unique challenges and opportunities.The interpretability and generalizability of the model are the cornerstones of the research. A well-designed model will provide reasonable assumptions and judgments for previously unobserved flow phenomena. It is also important to recognize that the components of neural networks are not far away from the well-known toolbox of linear modeling. For CNNs, the convolution operation is essentially a filtering operation. The parameters of the convolution kernel are not determined by some physical laws or intuitions, but are determined during the model training process. Furthermore, the linear filtering operation in CNN usually uses key nonlinear techniques to enhance the nonlinear expression ability of the model, such as local pooling and activation functions. Therefore, we have not completely lost the interpretability of the ML model.What really needs to be explained is the measures taken to improve the approximate accuracy of the model.

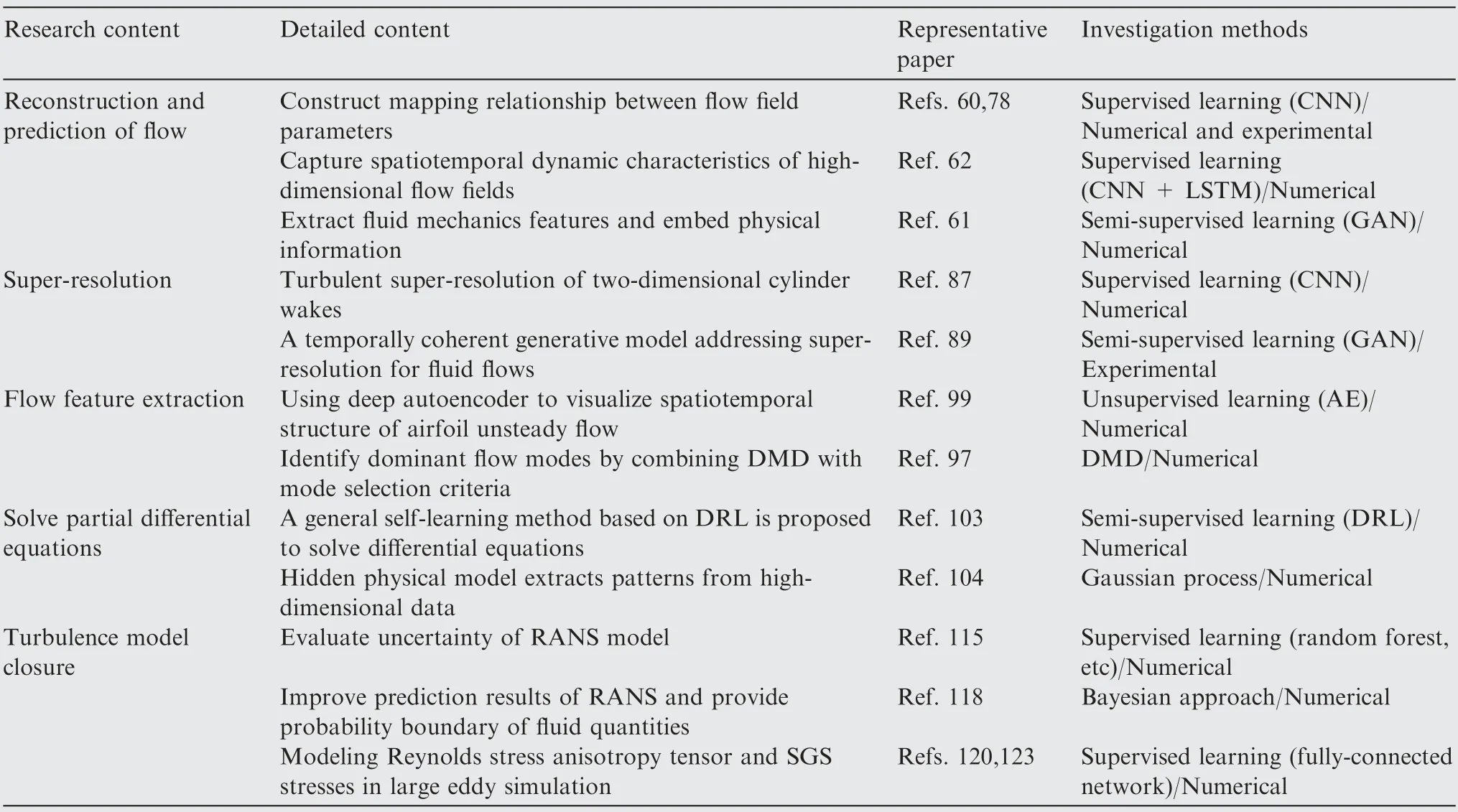

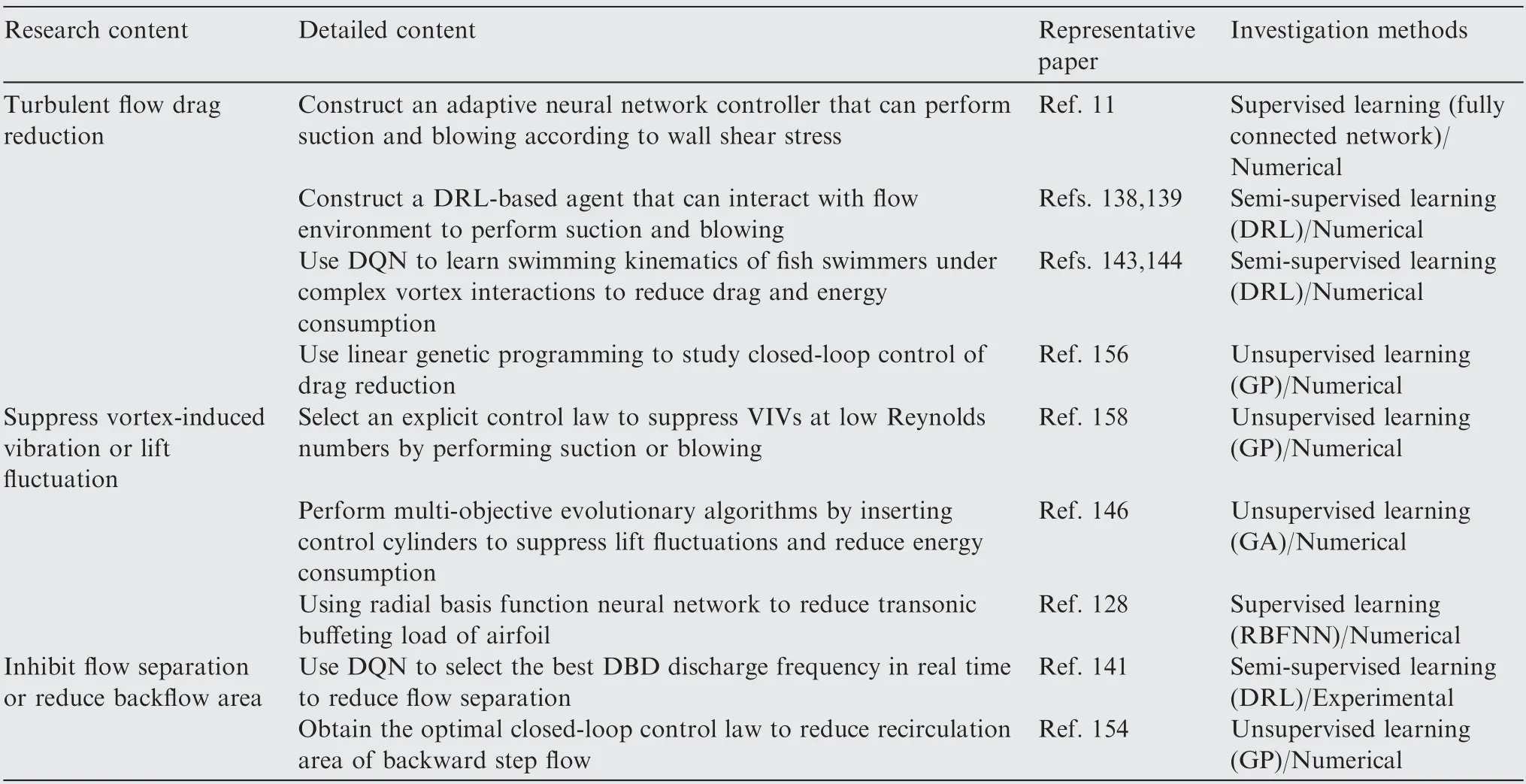

3.3. Challenges for flow modeling

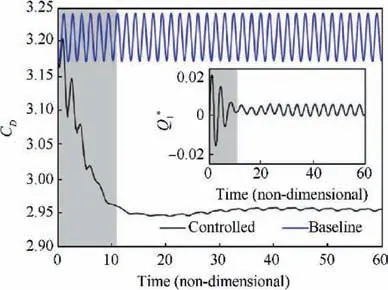

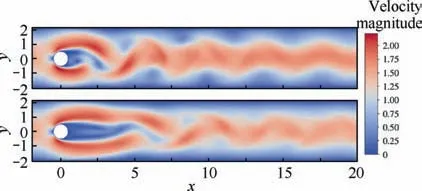

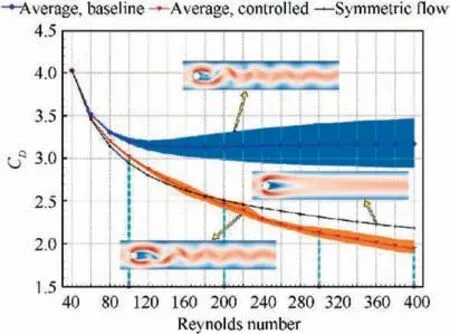

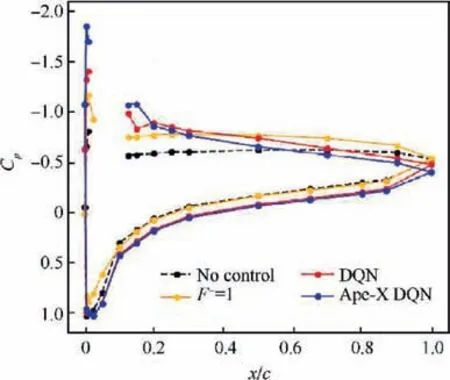

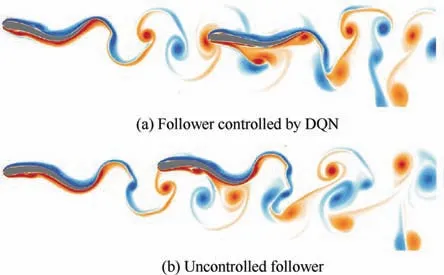

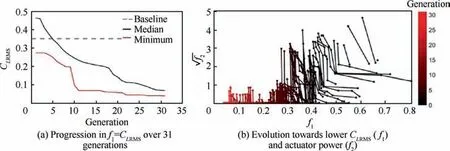

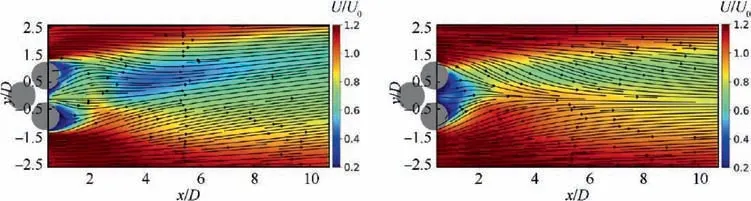

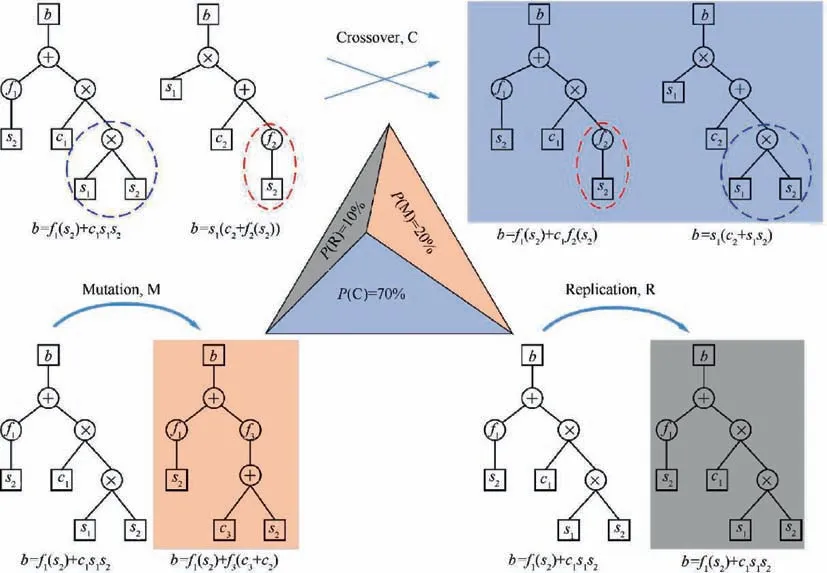

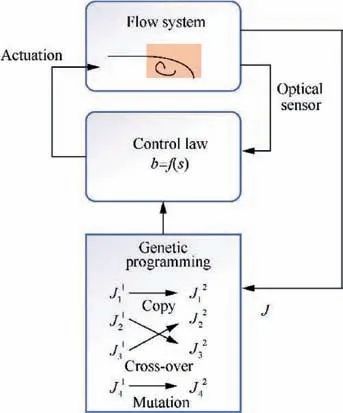

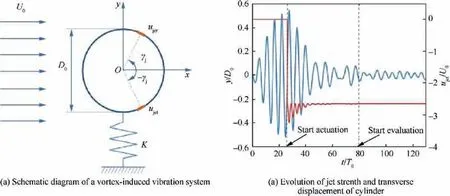

In spite of recent successes, several challenges remain. For data-driven flow dynamics models,the most important characteristics are interpretability and generalization ability.In addition, the physical characteristics of unsteady flow, such as multi-scale, latent variables, sensitivity to noise and disturbance, etc., need to be carefully considered when constructing a ML-based architecture.In addition,blindly using ML-based technology to use available data to predict the physical quantities of interest should be avoided, which will not help reveal the impact of latent variables and supplement the shortages of traditional analysis methods.In general,flow modeling based on ML can be divided into two major tasks:discovering unknown flow physical knowledge and improving turbulence models by integrating known physical knowledge. When considering the selection of specific models,whether it is for supervised learning or generative adversarial models, known physical constraints or conservation laws should be incorporated into the model. This is the key to the further development of fusing ML and turbulence modeling. The content and research methods of several main aspects of flow modeling carried out by ML are summarized in Table 2.