Optimal video communication strategy for intelligent video analysis in unmanned aerial vehicle applications

Yongqing XIE, Zhongo LI*, Jin QI, Ki ZHANG, Bixun ZHANG, Feng QI

a Institute of Systems Engineering, Academy of Military Sciences, PLA, Beijing 100141, China

b National Engineering Laboratory for Video Technology, Peking University, Beijing 100871, China


Abstract For Unmanned Aerial Vehicles (UAVs), intelligent video analysis is a key technology for intelligent autonomous control, real-time navigation and surveillance. However, poor UAV wireless links degrade the quality of video communication, which makes video analysis difficult. To meet the challenges of packet loss and limited bandwidth in adverse UAV channel environments, this paper proposes a parameter optimization mechanism for UAV intelligent video analysis. In the proposed method, an Optimal Strategy Library (OSL) is designed to optimize the parameters for video encoding and forward error correction. Adapted to the packet-loss rate and bandwidth of a practical UAV wireless network, the proposed OSL supports the encoding of video sequences and the recovery of degraded videos with optimal performance. Experimental results demonstrate that the proposed solution keeps intelligent video analysis working efficiently over adverse UAV wireless links and maximizes the inference accuracy of Multi-Object Tracking (MOT) algorithms in various scenarios.

1. Introduction

Recent developments in Artificial Intelligence (AI) and communication technology have remarkably strengthened video analysis and video transmission, promoting intelligent applications of Unmanned Aerial Vehicles (UAVs). Owing to their outstanding flexibility and mobility, intelligent UAV systems have been widely applied in military, commercial and public-security domains. However, several problems hamper the application of intelligent UAVs. First, executing AI algorithms (e.g., video recognition1–4 or object tracking5,6) on low-power airborne processors takes prohibitively long, far from meeting real-time processing requirements. Moreover, most deep-learning-based AI algorithms require GPUs, which are hard to install on UAVs. Additionally, it is usually difficult to flexibly tailor AI algorithms to UAV video content or to multiple tasks. Therefore, processing UAV videos in real time after transmitting them to the receiving end would be an efficient way to promote the intelligent reconnaissance capability of UAVs.

Despite remarkable progress in intelligent video analysis technology, certain theoretical problems and application limitations remain. On the theoretical side, deep-learning-based AI technologies are inherently unexplainable and, in most applications, rely heavily on high-quality labeled data. Because training data and inference data differ, a trained model may be unable to process data it has not learned. In the inference stage, if an intelligent video analysis algorithm is deployed in a visual scene that deviates from the training scenes, its performance can degrade dramatically. Such unpredictable degradation causes reliability problems and poses a potential risk in the application of intelligent video analysis algorithms. On the application side, current intelligent video analysis technologies, including deep learning, cannot represent complex visual information well with limited data samples and computing units. Meanwhile, it is difficult for these technologies to meet the requirements of general and complex visual perception tasks. All of these challenges hinder the application of video analysis technologies.

In several surveillance applications, the UAV is required to scout object information under confrontational conditions, which impose high packet loss, high bit-error rates, limited bandwidth and strong random time variability on the channel of the UAV video communication system. Since highly compressed video data are more sensitive to channel errors, channel errors inevitably degrade video quality in poor channel environments. In particular, UAVs can transmit videos only over wireless networks, in which packet loss and bandwidth limitations may occur alternately or simultaneously. A channel with simultaneously high packet loss and low bandwidth degrades video quality dramatically and makes intelligent video analysis algorithms unreliable. Therefore, maintaining the efficiency of intelligent video analysis algorithms in poor channel environments is an urgent problem.

To overcome the problems resulting from the poor UAV channel environment, this paper proposes a parameter optimization mechanism for UAV intelligent video analysis. In the proposed method, an Optimal Strategy Library (OSL) is designed so that the parameters of Video Encoding (VE) and Forward Error Correction (FEC) can be optimized for real-time UAV videos.7 In a channel with packet loss and limited bandwidth, the VE and FEC parameters should be optimized jointly, because FEC redundancy consumes part of the bandwidth that would otherwise be available for video encoding; only joint optimization achieves globally optimal performance, so that the intelligent video analysis algorithm can reach its highest inference accuracy. In addition, the paper discusses how to generate the OSL. Simulations demonstrate that the proposed OSL can provide optimal VE and FEC parameters for different intelligent video analysis algorithms across various video analysis tasks and video communication environments. Our main contributions can be summarized as follows.

(1) We propose a dynamic adaptation mechanism for UAV intelligent video analysis. In a video communication channel of a UAV wireless network, the packet-loss rate and bandwidth usually change dynamically during flight. Therefore, the FEC and VE parameters should be adapted to the current packet loss and bandwidth in real time. The proposed dynamic adaptation mechanism efficiently mitigates the degradation of video quality and keeps the intelligent video analysis algorithm working over the UAV wireless network.

(2) We establish a generation flow for parameter optimization. For each combination of packet-loss rate and bandwidth, the video encoding and forward error correction parameters that yield the best performance of an intelligent video analysis algorithm are selected as the optimal parameters and recorded in the OSL. Then, for a video analysis task, the OSL can provide a set of optimal parameters that maximizes the inference accuracy of the intelligent video analysis algorithm in various scenarios.

The remainder of this paper is organized as follows. Section 2 reviews related works. Section 3 describes the proposed OSL in detail. Section 4 presents the experimental results, and Section 5 concludes the paper.

2. Related works

2.1. Video transmission system in UAVs

Current mainstream UAV video transmission systems adopt digital image transmission, mainly over channels in the 2.4 GHz and 5.8 GHz bands, with encoding methods including full I-frame, H.264/H.265 and custom encoding. Digital transmission allows drone video to be regenerated and restored multiple times without quality loss, and it offers easy processing, flexible scheduling, high quality, high reliability and convenient maintenance.8

DJI UAVs (Spreading Wings S1000)9 use Lightbridge2 and OFDM technologies to transmit 1080p Full HD image data within a maximum range of 5 km. The protocol used in Lightbridge2 differs from TCP/IP: the aircraft does not need to establish a handshake with the ground, and no retransmission is required when packet loss or data errors occur during transmission. Instead, the aircraft promptly sends the latest signal back to the remote controller, so the latency of the DJI image transmission system is only about 50–100 ms. Ehang UAVs (GHOSTDRONE2.0)10 transmit 720P to 1080P images over 2.4 GHz and 5.8 GHz data links, with a stable transmission distance of less than 1 km and a delay of about 300 ms. ZEROTECH UAVs (DOBBY)11 use 2.4 GHz and 5.8 GHz data links with a maximum resolution of 720P; the delay is about 200 ms under good conditions, and the transmission distance is 200 m. UESTC has developed a bird-carrying image transmission device for military use based on analog and digital signal transmission, with a communication distance of about 1 km and a delay of about 100 ms.

Equipped with an HDMI interface that lets users watch videos shot by the drone in real time, the 3DR Solo drone12 from the US has an image delay of approximately 180 ms. However, its transmission range is still not ideal, with a radius of about 800 m. The third-generation UAV Bebop, developed by Parrot13 in France, is equipped with a fisheye camera with 1920×1080 video resolution and has an image transmission range of about 300 m. The Falcon 8+,14 developed by Intel, transmits videos through 2.4 GHz and 5 GHz radio modules, with a video resolution of 1080P, a flight distance of 1 km and a time delay of 200 ms. The MD4 series of UAVs developed by Microdrones15 in Germany transmits videos using analog and digital signals, with D1-format image quality, a transmission distance of 3–5 km and a time delay of about 300 ms.

2.2. Intelligent video analysis of UAVs

Both computer vision and UAV technologies are developing rapidly. In the last decade, target recognition and tracking have played a significant role in UAVs.16–20 They have promoted an emerging research direction toward multi-module collaboration and generalization of tracking algorithms. The UAV target tracking system is expected to combine its units flexibly according to task requirements, and collaboration among multiple UAVs can also improve the accuracy and robustness of target tracking. With the progress of target tracking, UAVs controlled or operated in remote-control mode require significantly less human intervention. Ultimately, intelligent video analysis can provide visual perception for autonomous UAV control and operation.

Target tracking analyzes the video sequence of a UAV by estimating the size and posture of the target in each frame, and it should be robust, adaptive and real-time. Generally, target tracking can be classified into Single Object Tracking (SOT) and Multi-Object Tracking (MOT). In SOT, the objective is to lock onto a single object in the image and track it until it exits the frame. MOT takes an initial set of object detections, assigns a unique ID to each of them, and then tracks each object while maintaining its ID assignment as it moves through multiple frames of a video. In UAV video surveillance scenes, MOT is a common and critical problem.

The MOT Challenge is a multi-target tracking benchmark designed to standardize multi-target tracking evaluation. It provides a large number of datasets as well as a general-purpose evaluation tool that includes a variety of metrics such as recall, precision and running time. MOT17 is one of its most popular benchmarks, containing several training and test video sequences in unconstrained environments filmed with both static and moving cameras. Tracking and evaluation are performed in image coordinates, and all sequences have been annotated with high accuracy, strictly following a well-defined protocol. In 2016, Bewley et al.5 proposed Simple Online and Realtime Tracking (SORT), a practical multi-object tracking method for online and real-time applications that associates objects effectively. Bewley et al. exploited the power of Convolutional Neural Network (CNN) based detection in the context of MOT and provided a pragmatic tracking approach based on the Kalman filter and the Hungarian algorithm. Changing the detector alone can improve tracking performance by up to 18.9%, while the tracker updates at 260 Hz, over 20× faster than other state-of-the-art trackers. However, SORT returns a relatively high number of identity switches, because its association metric is accurate only when the uncertainty of the state estimate is low. To address this problem, Wojke et al.6 integrated appearance information to improve the performance of SORT in 2017 and proposed Simple Online and Realtime Tracking with a Deep Association Metric (DeepSort). They replaced the association metric with a more informed metric that combines motion and appearance information. In particular, a CNN trained on a large-scale person re-identification dataset is applied to discriminate pedestrians. By integrating this network, they increased robustness to missed detections and occlusions while keeping the system easy to implement, efficient and applicable to online execution.
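The motion-only association step of SORT can be sketched as follows. This is a minimal illustration, not the SORT reference code: boxes are assumed to be (x1, y1, x2, y2) tuples already predicted by a Kalman filter (the filter itself is omitted), the IoU gate of 0.3 is an assumed value, and the Hungarian algorithm is replaced by an exhaustive search over one-to-one assignments, which finds the same optimal matching but only scales to a handful of boxes. DeepSort would add an appearance term to this cost, which is not shown.

```python
from itertools import permutations
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in image coordinates


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def associate(tracks: List[Box], detections: List[Box], iou_min: float = 0.3):
    """Match predicted track boxes to detections so that total IoU is maximal.

    Exhaustive search over one-to-one assignments stands in for the Hungarian
    algorithm. Matches whose IoU is below `iou_min` are rejected afterwards.
    """
    n_t, n_d = len(tracks), len(detections)
    if n_t <= n_d:
        candidates = (list(zip(range(n_t), p)) for p in permutations(range(n_d), n_t))
    else:
        candidates = (list(zip(p, range(n_d))) for p in permutations(range(n_t), n_d))

    best_score, best_pairs = -1.0, []
    for pairs in candidates:
        score = sum(iou(tracks[t], detections[d]) for t, d in pairs)
        if score > best_score:
            best_score, best_pairs = score, pairs

    # Gate: drop optimal pairs that barely overlap.
    matches = [(t, d) for t, d in best_pairs if iou(tracks[t], detections[d]) >= iou_min]
    matched_t = {t for t, _ in matches}
    matched_d = {d for _, d in matches}
    unmatched_tracks = [t for t in range(n_t) if t not in matched_t]
    unmatched_dets = [d for d in range(n_d) if d not in matched_d]
    return matches, unmatched_tracks, unmatched_dets


# Two predicted tracks, three detections (the third one is a new object).
tracks = [(0, 0, 10, 10), (20, 20, 30, 30)]
detections = [(21, 21, 31, 31), (1, 1, 11, 11), (100, 100, 110, 110)]
print(associate(tracks, detections))  # -> ([(0, 1), (1, 0)], [], [2])
```

In a SORT-style tracker, unmatched detections would start new tracks with fresh IDs and unmatched tracks would eventually be deleted; a production implementation would use a polynomial-time assignment solver instead of the exhaustive search.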

Despite rapid progress in MOT research, few studies have addressed MOT for UAVs, particularly in confrontational applications with poor channel environments. This paper focuses on this problem to promote the application of current MOT algorithms on UAVs.

3. Proposed optimal strategy method

3.1. System composition and information relation of optimal strategy system

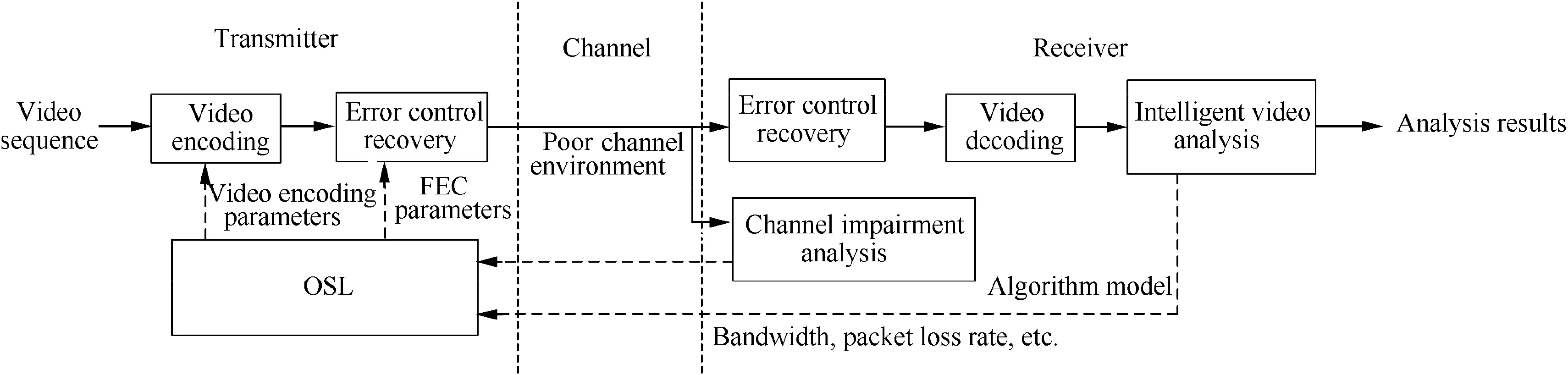

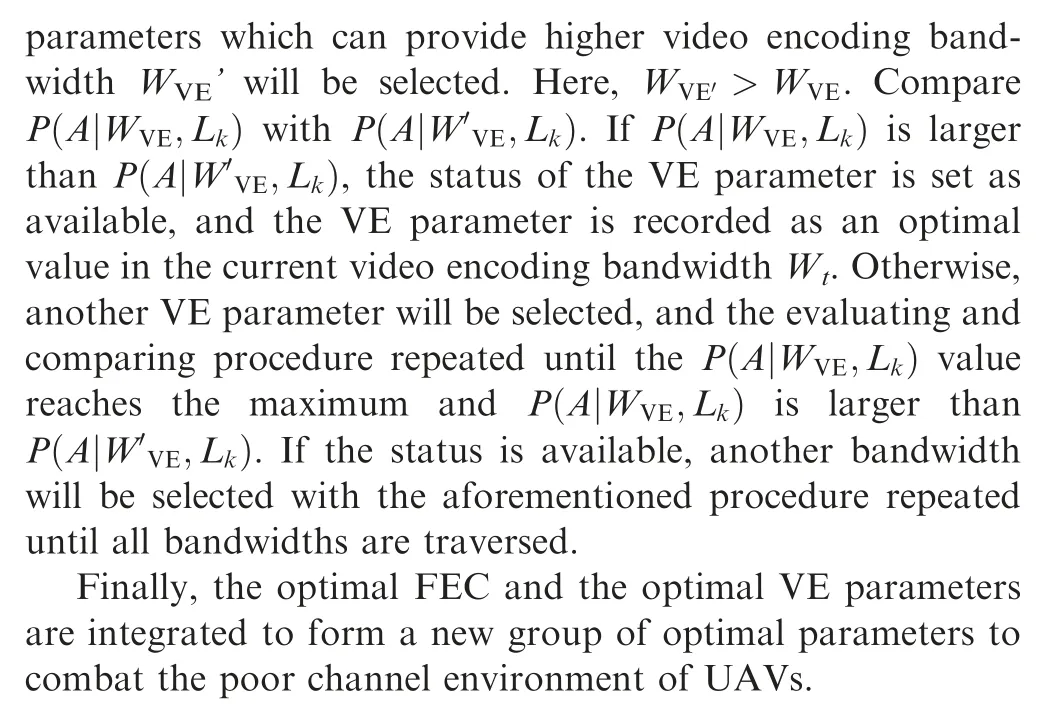

Our optimal strategy system consists of two parts. As shown in Fig. 1, the first part is the application system of intelligent video analysis, composed of a video encoding module, a channel impairment analysis module, an error control recovery module, a video decoding module, an OSL and an intelligent video analysis module. The relationships among these modules in the application system are as follows.

(1) For a given intelligent video analysis model, the transmitter uses the bandwidth, packet-loss rate and other information fed back from the receiver to select the optimal VE and FEC parameters from the OSL, and then uses them to encode the video and to protect the transmitted packets, respectively.

(2) After the video stream reaches the receiver over a poor wireless channel, data recovery is first performed, the video is then decoded, and the intelligent video analysis is finally conducted.

(3) The receiver analyzes the wireless channel and feeds the channel information back to the transmitter in real time. The transmitter adjusts the VE and FEC parameters to the channel environment in real time to avoid dramatic degradation of video quality. This real-time feedback and adaptation mechanism helps the intelligent video analysis model maintain its highest accuracy in a poor channel environment.
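The OSL lookup performed by the transmitter in step (1) could look like the sketch below. The dictionary layout, the `OslEntry` type and the rounding policy in `select_parameters` are all hypothetical, since the text does not specify how a measured channel state is mapped onto a library entry; the two entries reproduce the operating points reported for Table 3 in Section 4.4.2.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OslEntry:
    rs_m: int          # packets per FEC block (data + parity)
    rs_n: int          # data packets per FEC block
    resolution: str
    fps: int


# Hypothetical OSL holding only the two operating points reported for Table 3,
# keyed by (bandwidth in kbps, packet-loss rate in %).
OSL = {
    (1000, 10.0): OslEntry(30, 20, "1080P", 5),
    (2000, 5.0): OslEntry(22, 16, "1080P", 10),
}


def select_parameters(osl, bandwidth_kbps: float, loss_percent: float) -> OslEntry:
    """Return the VE/FEC entry for the channel state fed back by the receiver.

    Assumed policy: only consider grid points that are no better than the
    measured channel (bandwidth <= measured, loss >= measured) and prefer the
    one with the most bandwidth, then the least loss. If none qualifies, fall
    back to the lowest-bandwidth, highest-loss entry.
    """
    safe = [k for k in osl if k[0] <= bandwidth_kbps and k[1] >= loss_percent]
    if safe:
        key = max(safe, key=lambda k: (k[0], -k[1]))
    else:
        key = min(osl, key=lambda k: (k[0], -k[1]))
    return osl[key]


# Feedback of 2.4 Mbps and 4% loss maps to the (2 Mbps, 5%) entry.
print(select_parameters(OSL, 2400, 4.0))
# -> OslEntry(rs_m=22, rs_n=16, resolution='1080P', fps=10)
```

Rounding toward the worse grid point is a conservative choice: it may waste some bandwidth on redundancy, but it avoids selecting an FEC code that is too weak for the actual loss rate.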

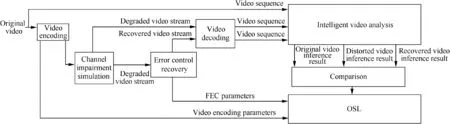

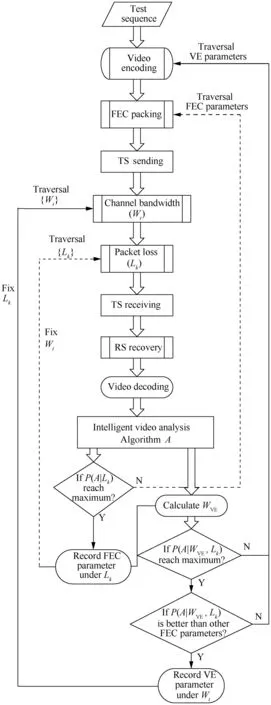

As shown in Fig. 2, the second part is the OSL generation system for an intelligent video analysis algorithm. Instead of the real poor channel of the application system, a channel impairment simulation module generates degraded video streams. In addition, a comparison module determines whether the VE and FEC parameters should be recorded in the OSL. The relationships among the modules in the generation system are as follows.

Fig. 1 Application system of intelligent video analysis.

Fig. 2 Generation system of OSL.

(1) Original video sequences are encoded by the video encoding module, and the same original sequences are also fed directly into the intelligent video analysis module.

(2) The encoded video streams, and the corresponding streams encapsulated with FEC parameters, are each fed into the channel impairment simulation module, which produces two degraded video streams. The error control recovery module recovers the degraded FEC-encapsulated streams to obtain the recovered video streams. Both the degraded and the recovered streams are decoded by the video decoding module and then fed into the intelligent video analysis module.

(3) The intelligent video analysis module analyzes the original, degraded and recovered video sequences and obtains the corresponding inference results.

(4) In the comparison module, the three inference results are compared to determine whether the intelligent video analysis model performs efficiently and whether the VE and FEC parameters of the recovered video stream should be recorded in the OSL.

(5) The channel impairment simulation module repeats this process until all considered kinds of UAV channel impairment have been traversed. For each kind of channel impairment, the optimal VE and FEC parameters are selected and recorded in the OSL.
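Steps (1) to (5) amount to an exhaustive search per channel condition. The sketch below assumes the whole Fig. 2 chain can be wrapped in a single scoring function; `generate_osl` and `toy_evaluate` are hypothetical names, and `toy_evaluate` is a made-up placeholder score (not a tracker and not data from this paper) included only so the loop runs.

```python
from itertools import product


def generate_osl(bandwidths, loss_rates, rs_codes, ve_candidates, evaluate):
    """Exhaustively build an OSL: the best (FEC, VE) pair for every channel condition.

    `evaluate(bw, loss, rs, ve)` is a hypothetical stand-in for the whole
    encode -> impair -> recover -> decode -> analyze -> score chain of Fig. 2.
    rs=None denotes the unprotected (degraded) stream. All rs=None settings are
    scored first and ties keep the earlier winner, so an FEC setting is recorded
    only if it strictly beats the unprotected stream (the comparison of step 4).
    """
    osl = {}
    for bw, loss in product(bandwidths, loss_rates):
        best_score, best_cfg = float("-inf"), None
        for rs, ve in product([None, *rs_codes], ve_candidates):
            score = evaluate(bw, loss, rs, ve)
            if score > best_score:
                best_score, best_cfg = score, (rs, ve)
        osl[(bw, loss)] = (best_cfg, best_score)
    return osl


def toy_evaluate(bw_kbps, loss, rs, ve):
    """Made-up score, NOT a tracker: frame delivery probability x kbit per frame.

    Assumes a frame spans 10 packets and is lost if any packet is lost, and that
    RS(m, n) repairs everything when its redundancy (m - n) / m covers the loss.
    """
    _, fps = ve
    if rs is None:
        return (1.0 - loss) ** 10 * bw_kbps / fps
    m, n = rs
    delivered = 1.0 if (m - n) / m >= loss else (1.0 - loss) ** 10
    return delivered * (bw_kbps * n / m) / fps


osl = generate_osl([1000], [0.05, 0.20], [(10, 9), (30, 20)],
                   [("1080P", 5), ("1080P", 10)], toy_evaluate)
for condition, (cfg, score) in osl.items():
    print(condition, cfg, round(score, 1))
```

With a real `evaluate`, each call would run the tracker on the recovered sequence and return a metric such as MOTA, so the cost of generation is dominated by the number of evaluated combinations.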

3.2. Intelligent video analysis of UAVs

3.2.1. Illustration of UAV channel environment

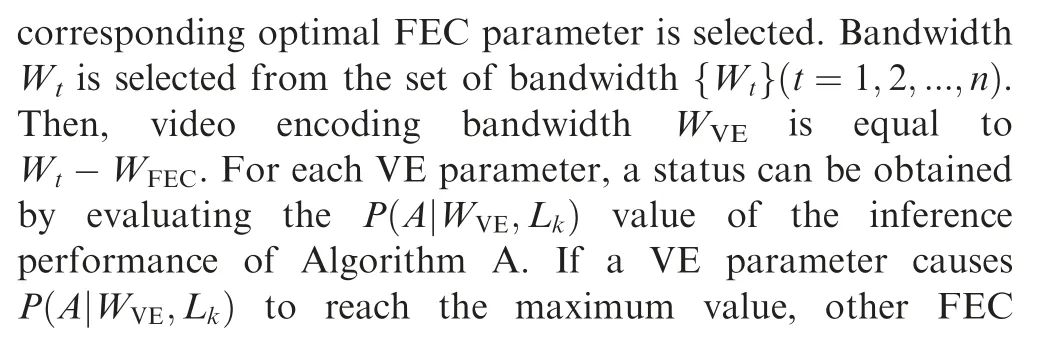

If an intelligent video analysis algorithm A works in a poor channel environment with a bandwidth W′ and a packet-loss rate L′, there are

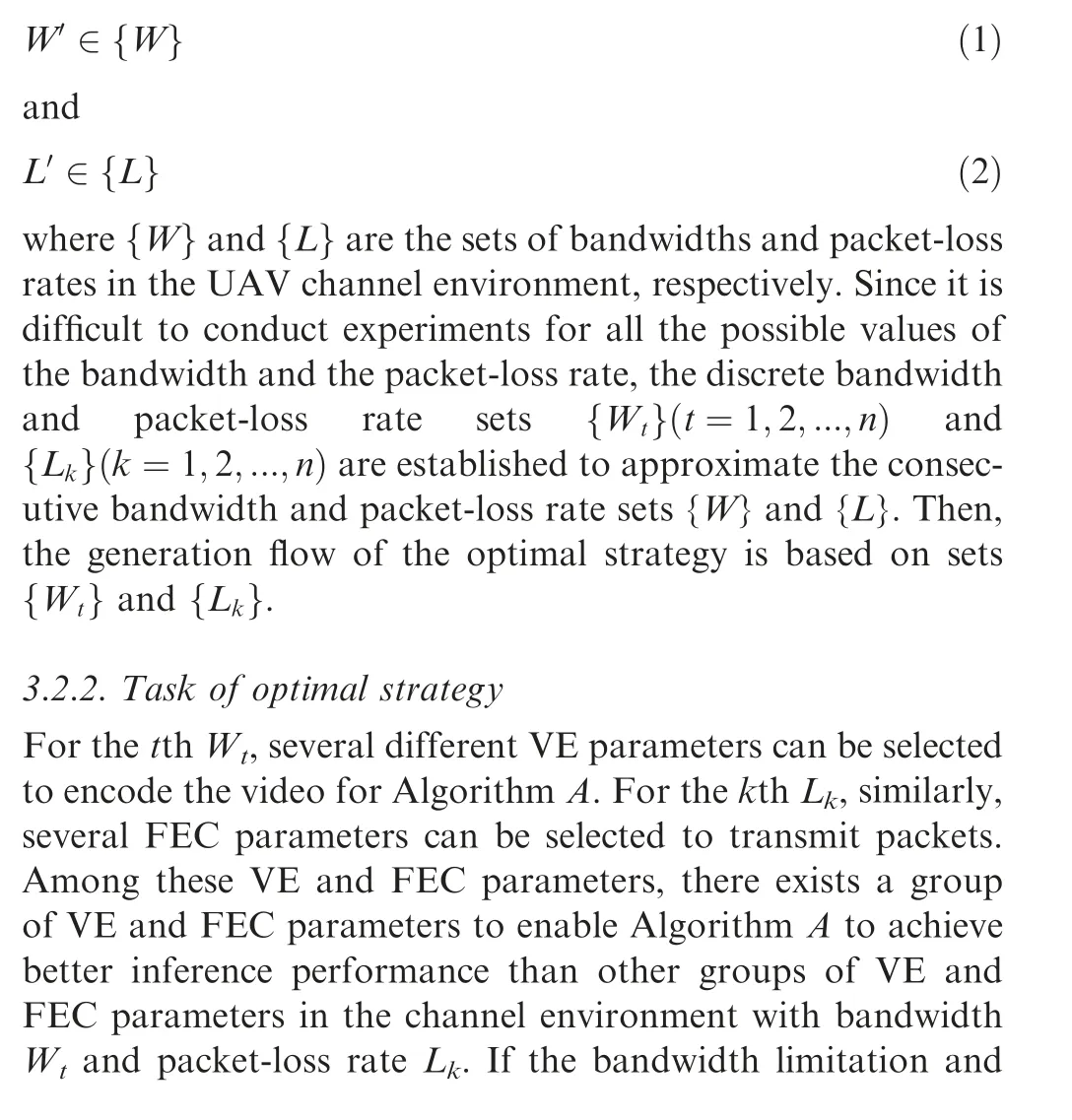

Fig. 3 Flow chart of optimal strategy generation.

4. Simulation experiments

4.1. Implementation details

The UAV simulation platform consists of a video encoding device, a network simulation test bed, a video decoding device and an intelligent video analysis model evaluation device. The video encoding and decoding devices are two T-series ThinkPad laptops running JAVS codec and RS UDP transmission software. In the JAVS codec software, we adjust three VE parameters {Fi, Ri, RC}, i = 1, 2, ..., n, which represent the frame rate, resolution and rate control (quantization accuracy), respectively. In the RS UDP transmission software, Reed-Solomon codes RS(m, n) are used as the FEC parameters. The network simulation test bed is a SPIRENT network emulation and simulation platform. The evaluation device for the intelligent video analysis model is a desktop with an NVIDIA TITAN GPU. To emulate the dynamic variation of UAV communication channels, the specific parameters of Subsection 3.2 are set as follows.

Wt = {4 Mbps, 2 Mbps, 1.5 Mbps, 1 Mbps, 768 kbps, 384 kbps, 256 kbps}

Lk = {1%, 5%, 10%, 15%, 20%}

RS(m, n) = {(10, 9), (12, 10), (15, 11), (22, 16), (30, 20), (45, 30), (60, 35), (80, 50), (110, 55)}

Fi = {30, 25, 20, 15, 10, 1} fps

Ri = {1080P, 720P, 4CIF, CIF}

RC is set to CBR_FRM.
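To gauge the cost of exhaustively generating the OSL over this grid, the sets above can be enumerated directly. This sketch takes the listed sets at face value, treats 4 Mbps as 4000 kbps, and assumes that every (RS code, resolution, frame rate) combination is tried under every channel condition without pruning.

```python
from itertools import product

# Parameter sets as listed above (RC is fixed to CBR_FRM, so it adds no choices).
BANDWIDTHS_KBPS = [4000, 2000, 1500, 1000, 768, 384, 256]
LOSS_RATES_PCT = [1, 5, 10, 15, 20]
RS_CODES = [(10, 9), (12, 10), (15, 11), (22, 16), (30, 20),
            (45, 30), (60, 35), (80, 50), (110, 55)]
FRAME_RATES = [30, 25, 20, 15, 10, 1]
RESOLUTIONS = ["1080P", "720P", "4CIF", "CIF"]

channel_conditions = list(product(BANDWIDTHS_KBPS, LOSS_RATES_PCT))
configs_per_condition = list(product(RS_CODES, RESOLUTIONS, FRAME_RATES))

print(len(channel_conditions))                               # 35
print(len(configs_per_condition))                            # 216
print(len(channel_conditions) * len(configs_per_condition))  # 7560
```

Each of these combinations would require encoding, impairing, recovering, decoding and tracking the test sequences once, which is why the OSL is generated offline rather than during flight.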

4.2. Datasets

The degraded video sequences are processed with two classical MOT algorithms, Sort5 and DeepSort.6 The results are evaluated on the publicly available MOT17 benchmark using seven 1080P video sequences whose ground-truth annotations have been released. We use these 7 MOT17 sequences to analyze the performance of Sort and DeepSort. The degraded and recovered versions of the 7 sequences are processed by the intelligent video analysis model evaluation device, and the tracking results are evaluated with the benchmark.

4.3. Evaluation metrics

To evaluate the performance of the two MOT methods, we adopt the widely used CLEAR MOT metrics,21 including Multiple Object Tracking Precision (MOTP) and Multiple Object Tracking Accuracy (MOTA), where MOTA combines False Positives (FP), False Negatives (FN) and Identity Switches (IDS). In addition, other MOT evaluation metrics, including ID F1 score (IDF1), Fragmentation (FM), Mostly Tracked (MT) and Mostly Lost (ML), are also adopted in our experiments. Each evaluation metric is described as follows.

(1) IDF1: The ratio of correctly identified detections over the average number of ground-truth and computed detections.

(2) MOTA: Summary of overall tracking accuracy in terms of false positives, false negatives and identity switches.

(3) MOTP: Summary of overall tracking precision in terms of bounding box overlap between ground-truth and reported location.

(4) FP: The total number of false positives.

(5) FN: The total number of false negatives (missed targets).

(6) IDS: Number of times that the reported identity of a ground-truth track changes.

(7) FM: Number of times that a track is interrupted by a missed detection.

(8) MT: Percentage of ground-truth tracks that have the same label for at least 80% of their life span.

(9) ML: Percentage of ground-truth tracks that are tracked for at most 20% of their life span.
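The two headline scores and the MT/ML thresholds can be written out as follows. This is a sketch of the standard formulas applied to already-computed counts; obtaining the counts themselves (per-frame matching of hypotheses to ground truth, and the global identity matching behind IDTP, IDFP and IDFN) is assumed to be done by the benchmark's evaluation tool and is not shown. The counts in the usage lines are made up.

```python
def mota(fn: int, fp: int, ids: int, num_gt: int) -> float:
    """CLEAR MOT accuracy; counts are summed over all frames, num_gt = total GT boxes.

    Can be negative when errors outnumber ground-truth objects.
    """
    return 1.0 - (fn + fp + ids) / num_gt


def idf1(idtp: int, idfp: int, idfn: int) -> float:
    """ID F1: correctly identified detections over the mean of GT and computed detections.

    With |GT| = IDTP + IDFN and |computed| = IDTP + IDFP this equals
    2 * IDTP / (2 * IDTP + IDFP + IDFN).
    """
    return 2 * idtp / (2 * idtp + idfp + idfn)


def mostly_tracked_lost(coverage):
    """MT/ML shares from per-GT-track coverage (fraction of its life span tracked)."""
    mt = sum(c >= 0.8 for c in coverage) / len(coverage)
    ml = sum(c <= 0.2 for c in coverage) / len(coverage)
    return mt, ml


# Illustrative counts only, not results from this paper.
print(mota(fn=300, fp=150, ids=50, num_gt=1000))     # 0.5
print(round(idf1(idtp=700, idfp=200, idfn=300), 3))  # 0.737
print(mostly_tracked_lost([0.9, 0.5, 0.1, 0.85]))    # (0.5, 0.25)
```

Because MOTA weights FN, FP and IDS equally while IDF1 rewards keeping the same identity over time, the two metrics can rank the same pair of trackers differently, which is why both are reported.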

4.4. Performance analysis

4.4.1. Performance of packet loss

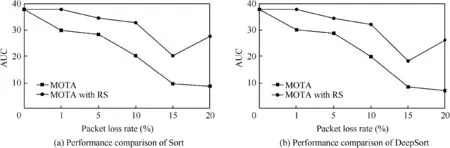

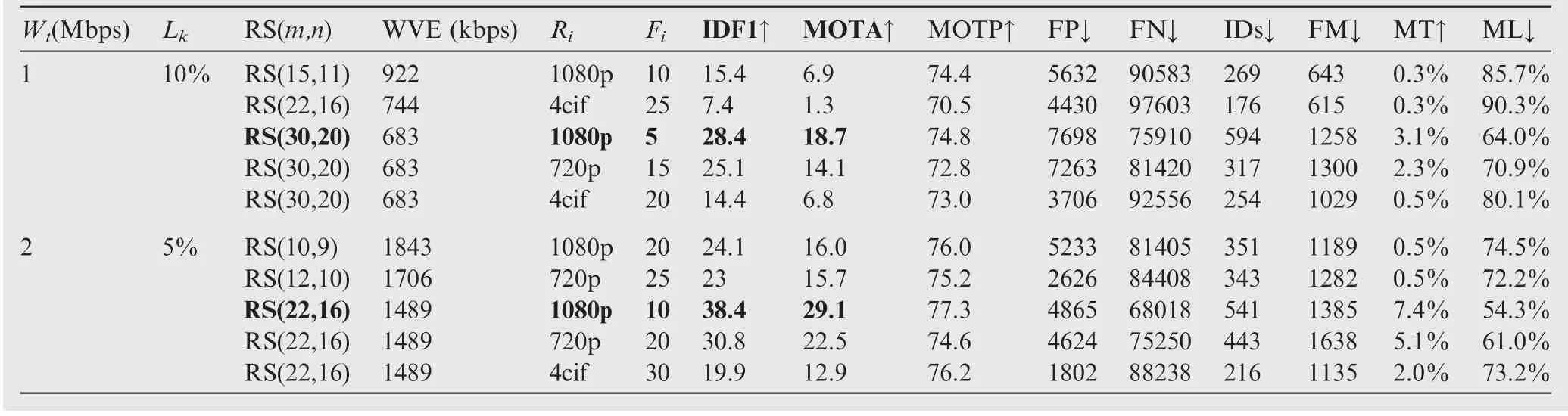

The performance of Sort and DeepSort at 5 packet-loss rates, with RS(m, n) recovery at each packet-loss rate, is listed in Tables 1 and 2, respectively. IDF1 and MOTA are chosen to represent the inference accuracy (AUC) of the two MOT algorithms. The best IDF1 and MOTA scores and the corresponding RS(m, n) at each packet-loss rate are shown in bold in these tables. Fig. 4 compares the performance with and without the RS code: both Sort and DeepSort can select an efficient RS code that keeps their AUC scores higher than without the RS code, even at higher packet-loss rates.

As m and n in the RS(m, n) code increase, the code's ability to resist packet loss generally becomes stronger, but less bandwidth remains for video encoding. This is because a larger code with more redundancy offers higher fault tolerance while its parity packets occupy a larger share of the channel bandwidth. The fraction of the channel bandwidth available for video encoding with an RS(m, n) code is n/m.
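The trade-off can be quantified with a short calculation. This sketch assumes packet-level erasure coding (each RS symbol is one packet, so up to m − n lost packets per block can be repaired) and independent packet losses; real UAV losses are often bursty, which would lower the recovery probabilities, and packet-header overhead is ignored. The function names are illustrative.

```python
from math import comb


def video_share(m: int, n: int) -> float:
    """Fraction of channel bandwidth left for video under RS(m, n) (n data of m packets)."""
    return n / m


def block_recovery_prob(m: int, n: int, loss: float) -> float:
    """Probability that an RS(m, n) block is fully recovered.

    Assumes up to m - n lost packets per block can be repaired and that each
    packet is lost independently with probability `loss`.
    """
    return sum(comb(m, k) * loss**k * (1 - loss) ** (m - k) for k in range(m - n + 1))


for m, n in [(10, 9), (22, 16), (30, 20), (110, 55)]:
    print(f"RS({m},{n}): video share {video_share(m, n):.2f}, "
          f"P(block recovered | 10% loss) = {block_recovery_prob(m, n, 0.10):.3f}")
```

For example, RS(10, 9) keeps 90% of the bandwidth for video but, at 10% loss, recovers a block only when at most one of its ten packets is lost, whereas RS(30, 20) gives up a third of the bandwidth in exchange for tolerating up to ten losses per block.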

4.4.2. Performance of joint limited bandwidth with packet-loss

In this subsection, two instances illustrate the efficiency of the proposed method over a wireless channel with limited bandwidth and packet loss. As shown in Table 3, several combinations of FEC and VE parameters are listed for a UAV channel with 1 Mbps bandwidth and a 10% packet-loss rate. The parameters RS(30, 20) with 1080p at 5 fps give the DeepSort algorithm better performance than the other parameters. Similarly, RS(22, 16) with 1080p at 10 fps enables the same algorithm to achieve the best performance over a channel with 2 Mbps bandwidth and 5% packet loss. When different resolutions and frame rates are compared under the same FEC parameters and video encoding bandwidth WVE, sequences with high resolutions and low frame rates obtain higher MOTA scores than those with low resolutions and high frame rates. This suggests that, for the DeepSort algorithm, the resolution of a UAV video may be more important than its frame rate; in other words, the quality of each video frame may dominate the performance of the MOT algorithm. Consequently, in an MOT task for UAVs, a high-resolution camera may be more efficient than a high-frame-rate one.
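A rough budget calculation makes the resolution-versus-frame-rate observation concrete. Assuming a CBR encoder that spends all of the bandwidth left after RS(m, n) overhead (packet headers ignored), the average budget per frame is W·(n/m)/F. The 25 fps rows below are added for contrast and are not operating points from Table 3; `kbit_per_frame` is an illustrative name.

```python
def kbit_per_frame(channel_kbps: float, m: int, n: int, fps: float) -> float:
    """Average CBR budget per encoded frame after RS(m, n) overhead (headers ignored)."""
    return channel_kbps * n / m / fps


# Table 3 operating points, plus 25 fps at the same FEC setting for contrast.
for channel_kbps, (m, n), fps in [(1000, (30, 20), 5), (1000, (30, 20), 25),
                                  (2000, (22, 16), 10), (2000, (22, 16), 25)]:
    print(f"{channel_kbps} kbps, RS({m},{n}), {fps} fps -> "
          f"{kbit_per_frame(channel_kbps, m, n, fps):.1f} kbit/frame")
```

At 1 Mbps with RS(30, 20), encoding at 5 fps leaves five times as many bits per frame as encoding at 25 fps. This is consistent with, though not a proof of, the finding that per-frame quality dominates tracking performance.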

Fig. 4 AUC comparison of two MOT algorithms with and without RS code.

Table 3 Performance of DeepSort at two bandwidths and two packet-loss rates.

Therefore, for each channel condition covered by the OSL, the proposed OSL can provide an optimal set of VE and FEC parameters that enables the intelligent video analysis algorithm to achieve its best performance in the current channel environment. The experimental results above also demonstrate that the proposed optimal strategy method can mitigate the adverse effects of packet loss and limited bandwidth in UAV channels for intelligent video analysis applications.

5. Conclusions

In this paper, we propose a parameter optimization mechanism for intelligent video analysis to cope with the challenging packet loss and limited bandwidth of adverse UAV channel environments. In the proposed method, VE and FEC parameters are optimized by the proposed OSL, which adapts to the packet-loss rate and bandwidth of practical UAV wireless networks. Given an MOT video analysis task and a practical UAV wireless network, the proposed OSL can provide a set of optimal VE and FEC parameters to encode the video sequence and recover the degraded video. The simulation results demonstrate that the proposed solution keeps MOT algorithms working efficiently and maximizes their inference accuracy over adverse UAV wireless links. In future work, the OSL may be extended to other video analysis tasks and video communication applications to further improve performance.

Declaration of Competing Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

CHINESE JOURNAL OF AERONAUTICS, 2020, Issue 11
