A Real Plug-and-Play Fog: Implementation of Service Placement in Wireless Multimedia Networks

Jianwen Xu, Kaoru Ota, Mianxiong Dong*

Department of Sciences and Informatics, Muroran Institute of Technology, Muroran 0508585, Japan

Abstract: Initially an extension of cloud computing, fog computing has been inspiring new ideas about moving computing tasks to the edge of networks. In fog, we often repeat the procedure of placing services because of the geographical distribution of mobile users. We cannot expect a fixed demand and supply relationship between users and service providers, since users always prefer nearby service with less time delay and transmission consumption. That is, a plug-and-play service mode is what we need in fog. In this paper, we put forward a dynamic placement strategy for fog service to guarantee normal service provision and optimize the Quality of Service (QoS). The simulation results show that our strategy can achieve better performance under metrics including energy consumption and end-to-end latency. Moreover, we design a real Plug-and-Play Fog (PnPF) based on Raspberry Pi and OpenWrt to provide fog services for wireless multimedia networks.

Keywords: wireless multimedia networks; fog computing; service placement; quality of service

I.INTRODUCTION

Fog computing, or fog networking, first proposed by Cisco in 2012 [1], is an architecture that adopts small and medium-sized devices near end users or at the edge of the network to provide substantial capacity for storage, communication, management, and control. Rather than building all the infrastructure in a centralized area of the communication network, fog computing focuses on decentralizing the network structure. As a result, a wide range of issues such as reducing transmission delay, self-organization, and emergency management can be solved in simpler ways with less time and energy cost. Fog also matches the supply and demand of the current Internet of Things (IoT) boom. That is, IoT needs timely responses for millions of sensors, devices, and machines, since outdated information is no longer useful within the massive multimedia big data being generated every second. Instead of setting up one or a few servers or data centers to undertake all the storage and processing tasks, we can allocate the workload to multiple smaller devices acting as fog nodes. Besides dedicated fog devices such as Cisco Unified Computing System (UCS) servers at the edge of the network, routers, switches, wireless access points (WAPs), video surveillance cameras, etc. can also play the role of fog service providers [2]. Therefore, short distance, lightweight computing resources, and mobility support together form an ideal platform for IoT devices and services.

Service placement is a concept used in cloud computing that aims at shifting among different service providers without having to consider implementation, compatibility, integration, and interoperability issues. Usually, the components of a complete service placement process in cloud computing vary according to the complexity of the service or application itself. In [3], Kaisler and Money summarize the main issues of service placement in cloud as acquisition, implementation, security issues, and economics, in order of the difficulty of realization. Acquisition mainly refers to how users choose to change providers when services are offered at different costs, QoS, or failure rates. Implementation is about the balance between supply and demand, that is, how providers satisfy the needs of users as well as set rules or standards. Security and privacy issues are also essential here, including authorization and authentication (by providers themselves or third parties), users' rights (time limit and scope of migration), etc. Economics is about how providers can make more profit by enlarging the scope of services and attracting more users. We take the Amazon Elastic Compute Cloud (Amazon EC2) [4] as an example. As one of the largest cloud computing platforms, EC2 provides an additional paid service for migrating data volumes between Elastic Block Stores (EBSs) of different capacities and costs. The former EBS can also be retained temporarily, at extra expense.

Fog computing has to face a more complicated relationship between users and providers in service placement. As fog users, we choose distributed fog service as a solution precisely because of our requirement for lower latency and cost. Thus, there may exist no fixed relationship between users and providers. In comparison with the cloud, supply and demand here can be loose and provisional. That is, users always prefer a service provider with suitable distance, performance, and price according to the current situation, so a fixed supply and demand relationship may not fit here. As a result, selecting appropriate locations and suitable fog providers for users' current needs is essential in building an efficient fog network.

In this paper, we put forward a dynamic placement strategy in a three-tier wireless multimedia network to provide plug-and-play fog (PnPF) services to mobile users. Our contributions are as follows.

·We study the related work on service placement in traditional Ethernet/wireless networks and identify a suitable direction for solving this problem;

·We design a dynamic service placement strategy for fog computing in a three-tier wireless multimedia network model to reduce latency and energy consumption;

·We carry out simulations to compare the performance of our strategy with existing ones under the same experimental settings. The analysis results show that our strategy can achieve lower latency and energy consumption;

·We design a real Plug-and-Play Fog based on Raspberry Pi 3 Model B and the OpenWrt Project to provide fog services for wireless multimedia networks.

This paper is divided into seven sections. Section II introduces related work in fog computing, wireless multimedia networks, and service placement. Section III designs the three-tier wireless multimedia network and formulates the problems to solve. Section IV proposes a dynamic service placement strategy for fog computing in wireless multimedia networks. Section V carries out simulations and evaluates the performance. Section VI proposes an implementation of PnPF service for wireless multimedia networks. Section VII summarizes all the work.

As an extension of a conference paper, this paper makes three additions. First, we add an introduction to service placement and cite recently published papers in Sections I and II. Second, we propose an optimized service placement algorithm based on the former one and rerun the simulation with a larger number of repetitions; the use ratio of devices is added as a new metric. Third, we add the implementation of plug-and-play fog computing using Raspberry Pi and OpenWrt.

II.RELATED WORK

In this section,we introduce related work about fog computing,wireless multimedia networks,and service placement.

2.1 From cloud to fog

Ever since Bonomi et al. introduced the concept in 2012 [1], fog computing has become a hotspot in both academia and industry. The research team led by Ivan Stojmenovic regards fog computing as an important class of Cyber-Physical System (CPS) for smart things and machine-to-machine applications. In their view, fog exists between the edge and cloud areas while sharing the upstream workload with low latency and cost. They also discuss security and privacy issues in fog computing such as user authentication and authorization and malicious cyber-attacks [5]. Tao et al. try to integrate cloud computing and fog computing into Vehicle-to-Grid (V2G) networks to enable 5G technology in the future. They illustrate a V2G network environment embedded with a three-layer system model including both permanent cloud and temporary cloud to supply high-performance network services to electric vehicle drivers and Internet access devices [6]. Davy et al. summarize and analyze the challenges of achieving Edge-as-a-Service (EaaS) as in cloud architecture. They regard EaaS as a new network interface to provide customized network service to geographically distributed users [7]. Li et al. study the intersection of edge computing, IoT, and deep learning and explore possible new research directions [8][9]. Wu et al. combine fog computing and information-centric networking (ICN) to realize security services for social networks [10]. Li et al. design a manufacturing inspection system using a convolutional neural network (CNN) and fog computing to solve the efficiency problem [11].

2.2 Wireless multimedia network

Wireless multimedia network is a broad concept which includes mesh networks, sensor networks, and other network structures. Recent related research mainly concerns QoS and sustainability. Nan et al. focus on the problem of bandwidth and resource allocation in wireless multimedia social networks (WMSN) and design a distributed strategy using a Stackelberg game model to resolve the competition between desktop users and mobile users in WMSN [12]. Sheng et al. pay attention to cooperative relaying in wireless multimedia networks and propose methods to save energy in transmission and ensure reliability and QoS in practical routing [13]. Hameed et al. design a video quality prediction model and put forward an error correction scheme to prevent packet loss and quality degradation in the compression and transmission of video content [14]. Liu et al. focus on the fast-start delivery of video for future network architectures using a caching method in content-centric networks [15]. Ota et al. survey deep learning for mobile multimedia networks and applications [16].

2.3 Service placement

The concept of service placement has existed for a long time, yet its importance was not widely noticed until cloud computing emerged. As early as 2011, Breitgand et al. from IBM studied the service placement model in federated clouds to maximize profit as well as guarantee QoS according to the Service Level Agreements (SLA). They analyze common problems in minimizing provisioning costs and design an architecture called reservoir to integrate the various components [17]. There is also research on service placement in distributed cloud systems, which can be regarded as a prototype of fog in meeting the urgent needs of latency-sensitive services. Steiner et al. introduce the challenges in research on distributed cloud systems, including latency sensitivity, storage capacity, and the different components of application communication [18]. Zhang et al. study service placement in geographically distributed data centers and design the system architecture for the case of a single service provider and multiple users in competition with each other [19]. Altmann et al. put forward a comprehensive cost model to make service placement decisions on hybrid clouds. In their design, public cloud and private cloud together supply service migration to guarantee QoS and low cost [20]. Wang et al. focus on how to predict the future cost using both offline and online methods in mobile micro clouds. They define the migrations of service in multiple time slots and predict the network conditions within a possible range [21]. Ouyang et al. focus on removing the limitation that user mobility imposes on mobile edge computing in [22]. They regard service placement as the key to settling the problem of the long-term cost budget constraint and apply Lyapunov optimization in designing optimized algorithms. Selimi et al. apply service placement in community networks to implement lightweight micro-clouds. Their heuristic method can significantly reduce the chunk losses in video streaming services provided by micro-clouds [23]. Souza et al. put forward service placement strategies for the cloud and fog computing paradigm. They also design specific resource models for the tasks offloaded between cloud and fog [24].

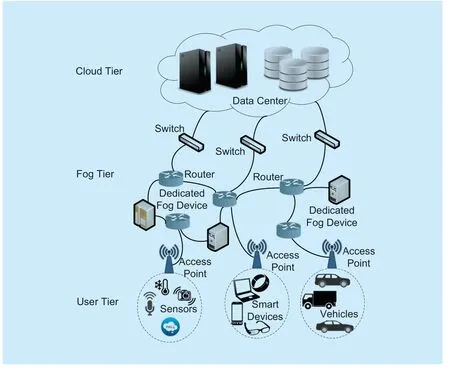

Fig.1.A three-tier fog network model.

III.PROBLEM FORMULATION

In this section,we design a three-tier network model and formulate the problems to solve.

3.1 System outline

As shown in figure 1, a fog network includes the Cloud Tier, the Fog Tier, and the User Tier. The Cloud Tier is the data center tier, which stores all the original data that could be requested by users. The Fog Tier is made up of routers, access points, and dedicated fog devices providing relatively small capacity but real-time and low-cost services.

The User Tier stands for Things such as sensors, smart devices, and vehicles that generate multimedia big data and request storage and processing from the upper tiers. Here we use $C$, $F$ and $U$ to represent the three tiers, in which $F = \{f_1, f_2, f_3, \ldots\}$ and $U = \{u_1, u_2, u_3, \ldots\}$ are the fog and user nodes. In our design, a user $u_i$ may send out requests for services from the fog network. The Cloud Tier mainly provides the necessary support for data query and storage and serves as an alternate service provider to cope with the requests to the Fog Tier.

We consider two service placement models, the time-slot model and the real-time model, to simulate the process of fog service from different aspects. In the time-slot model, we assume that in each time slot, which can be regarded as a round of service placement, each user $u_i$ sends out a task request, and all users together share the limited computing resources in the network. In the real-time model, computing resources in the upper tiers have an occupation time, which means only an idle resource provider can be requested; once the occupation time is over, the resource can be requested again. For users, the next task request is not available until the last one is finished.

Moreover, because the User Tier contains different users such as sensors and portable devices, the task types and data types in transmission also differ. For example, a video camera may have to upload video files without interruption and need more than one dedicated fog device to help handle video streaming, whereas a smartphone may only need to edit some text online, which one non-dedicated fog device can handle.

In summary,our goal is to find an optimal service placement strategy to allocate the computing resources and achieve trouble-free running of fog service in this three-tier wireless network model.

3.2 Performance metrics

To evaluate the performance of placing service in our designed three-tier fog network, we choose latency and energy consumption as the main metrics. End-to-end latency, or one-way delay, refers to the time cost of a packet traveling from one end device to another. In our model, we consider two kinds of latency in service placement, transmission latency and propagation latency, which directly relate to service placement and can show gaps in performance between different strategies more intuitively.

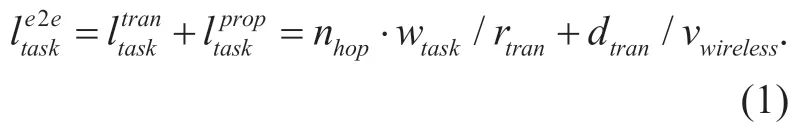

As shown in Equation (1), $n_{hop}$, $w_{task}$ and $d_{tran}$ stand for the number of forwarding hops, the workload, and the distance between the two nodes of a task, respectively. $r_{tran}$ and $v_{wireless}$ are the wireless transmission rate and the wave propagation speed (here we use $c$, the speed of light in air) of the fog provider.

For energy consumption, we apply the linear model of power consumption measurements in [25]. In Equation (2), we consider both the broadcast/point-to-point (bc/p2p) and the send/receive (send/recv) situations. $m$ and $b$ are linear coefficients. In our fog network model, request messages from $u_i$ across different tiers are broadcast, while acknowledgment messages and data packets are point-to-point.
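The two metrics can be illustrated with a minimal Python sketch. Equations (1) and (2) appear only as images here, so the exact forms below are assumptions inferred from the symbol definitions: latency is taken as the sum of a transmission term $n_{hop} w_{task} / r_{tran}$ and a propagation term $d_{tran} / v_{wireless}$, and energy follows the linear form $m \cdot size + b$. The function names and the way $n_{hop}$ enters the transmission term are hypothetical.

```python
SPEED_OF_LIGHT = 3.0e8  # m/s, used for v_wireless as in the text


def end_to_end_latency(n_hop, w_task_bits, d_tran_m, r_tran_bps,
                       v_wireless=SPEED_OF_LIGHT):
    """Assumed form of Equation (1): transmission plus propagation latency."""
    transmission = n_hop * w_task_bits / r_tran_bps  # grows with workload
    propagation = d_tran_m / v_wireless              # independent of workload
    return transmission + propagation


def linear_energy(size_bytes, m, b):
    """Assumed form of Equation (2): linear in message size.

    m and b depend on the case (broadcast or point-to-point, send or
    receive); the caller supplies the pair that applies.
    """
    return m * size_bytes + b
```

Note that the propagation term does not depend on the workload, which is why small requests pay a relatively larger latency cost per MB.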

IV.SERVICE PLACEMENT STRATEGY

In this section, we design a two-way matching strategy based on the dynamic service placement (DSP) strategy in [26] to serve the tasks requested by the User Tier with less time cost and energy consumption.

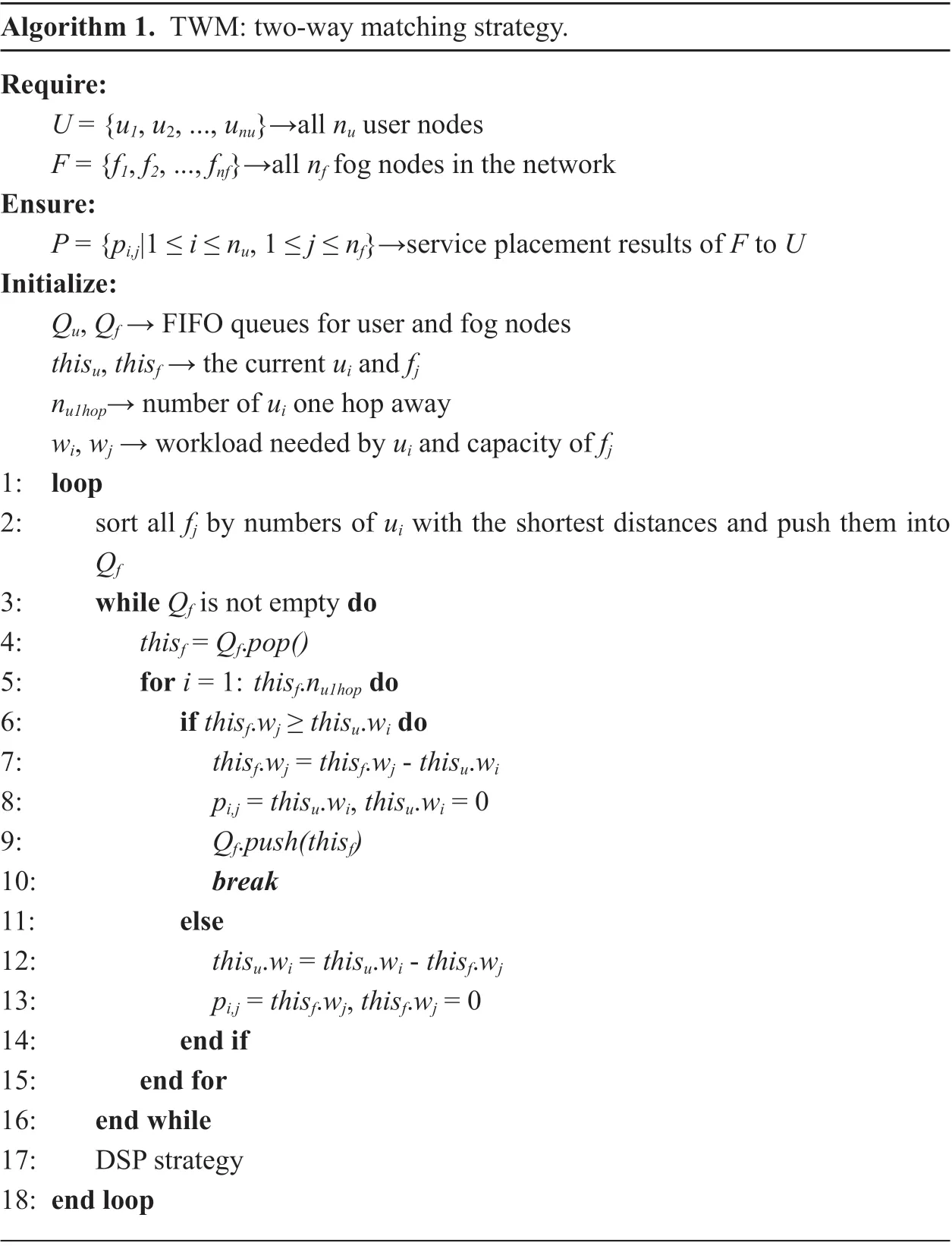

As shown in Algorithm 1, our target here is to find all service placement results between each $u_i$ in $U$ and $f_j$ in $F$. $Q_u$ and $Q_f$ are two first-in-first-out (FIFO) queues that maintain the order of placing service from $F$ to $U$. $this_u$ and $this_f$ stand for the current $u_i$ sending a request and the current $f_j$ providing service, respectively. $w_i$ and $w_j$ are the workload needed by $u_i$ and the workload capacity of $f_j$.

Algorithm 1. TWM: two-way matching strategy.
Require:
    U = {u_1, u_2, ..., u_nu} → all n_u user nodes
    F = {f_1, f_2, ..., f_nf} → all n_f fog nodes in the network
Ensure:
    P = {p_i,j | 1 ≤ i ≤ n_u, 1 ≤ j ≤ n_f} → service placement results of F to U
Initialize:
    Q_u, Q_f → FIFO queues for user and fog nodes
    this_u, this_f → the current u_i and f_j
    n_u1hop → number of u_i one hop away
    w_i, w_j → workload needed by u_i and capacity of f_j
1: loop
2:     sort all f_j by numbers of u_i with the shortest distances and push them into Q_f
3:     while Q_f is not empty do
4:         this_f = Q_f.pop()
5:         for i = 1 : this_f.n_u1hop do
6:             if this_f.w_j ≥ this_u.w_i do
7:                 this_f.w_j = this_f.w_j - this_u.w_i
8:                 p_i,j = this_u.w_i, this_u.w_i = 0
9:                 Q_f.push(this_f)
10:                break
11:            else
12:                this_u.w_i = this_u.w_i - this_f.w_j
13:                p_i,j = this_f.w_j, this_f.w_j = 0
14:            end if
15:        end for
16:    end while
17:    DSP strategy
18: end loop

Based on the original dynamic service placement solution, we apply the idea of two-way matching. Before the DSP process, which lets each $u_i$ look for its service providers, we also consider the opposite situation. That is, from the perspective of the service provider, we suppose all $f_j$ aim at finding the most suitable service customers. In this case, the saved time and energy can serve more $u_i$.

First, in line 2, in each loop, we start by sorting $f_j$ instead of $u_i$ to reach as many local optimums as possible. Here we introduce a new variable $n_{n1hop}$ to represent the number of $u_i$ when deciding the nearest fog node for each one. As a result, the $f_j$ take turns, from small to large, choosing nearby customers.
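The provider-side pass described above can be sketched in Python as follows. This is a simplified illustration under stated assumptions, not a transcription of Algorithm 1: each user is assigned to its nearest fog node by Euclidean distance, fog nodes with fewer nearby users choose first, and each fog node serves its nearby users until its capacity runs out. The queue re-insertion of Algorithm 1 and the subsequent DSP step are omitted, and the names `Node` and `fog_side_pass` are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    x: float
    y: float
    w: float  # users: workload still needed; fog nodes: remaining capacity


def dist(a, b):
    return math.hypot(a.x - b.x, a.y - b.y)


def fog_side_pass(users, fogs):
    """Let each fog node pick nearby users; returns {(user, fog): workload}."""
    nearby = {f.name: [] for f in fogs}
    for u in users:
        nearest = min(fogs, key=lambda f: dist(u, f))
        nearby[nearest.name].append(u)

    placement = {}
    # Fog nodes with the fewest nearby users choose first ("small to large").
    for f in sorted(fogs, key=lambda f: len(nearby[f.name])):
        for u in sorted(nearby[f.name], key=lambda u: dist(u, f)):
            if f.w <= 0:
                break          # capacity exhausted
            if u.w <= 0:
                continue       # user already fully served
            served = min(f.w, u.w)
            f.w -= served
            u.w -= served
            placement[(u.name, f.name)] = served
    return placement
```

Any workload left in `u.w` after this pass would then be handled by the user-side DSP step, which is not shown here.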

The time complexity of Algorithm 1 is $O(n_u^2 + n_f n_{n1hop} + n_u(n_f^2 + n_f))$. For $n_{n1hop}$, we can take the mean value $n_u / n_f$. As a result, the complexity is $O(n_u^2 + n_u n_f^2)$.
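The simplification can be written out step by step, assuming only the mean value $n_{n1hop} = n_u / n_f$ stated above:

```latex
\begin{aligned}
O\!\left(n_u^2 + n_f\, n_{n1hop} + n_u\,(n_f^2 + n_f)\right)
  &= O\!\left(n_u^2 + n_f \cdot \frac{n_u}{n_f} + n_u n_f^2 + n_u n_f\right) \\
  &= O\!\left(n_u^2 + n_u + n_u n_f^2 + n_u n_f\right) \\
  &= O\!\left(n_u^2 + n_u n_f^2\right)
\end{aligned}
```

The last step holds because $n_u \le n_u^2$ and $n_u n_f \le n_u n_f^2$ for $n_u, n_f \ge 1$, so both lower-order terms are absorbed.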

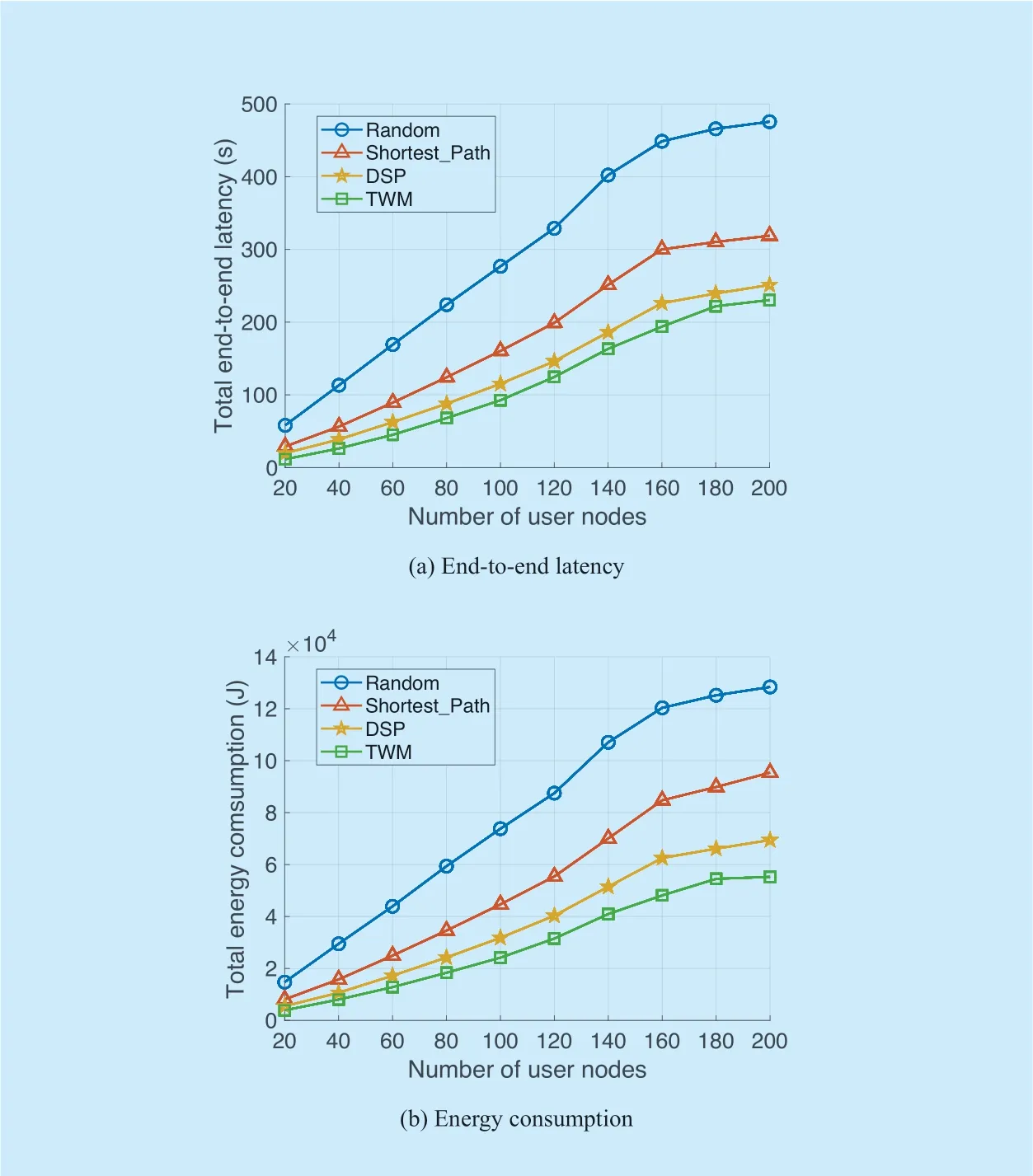

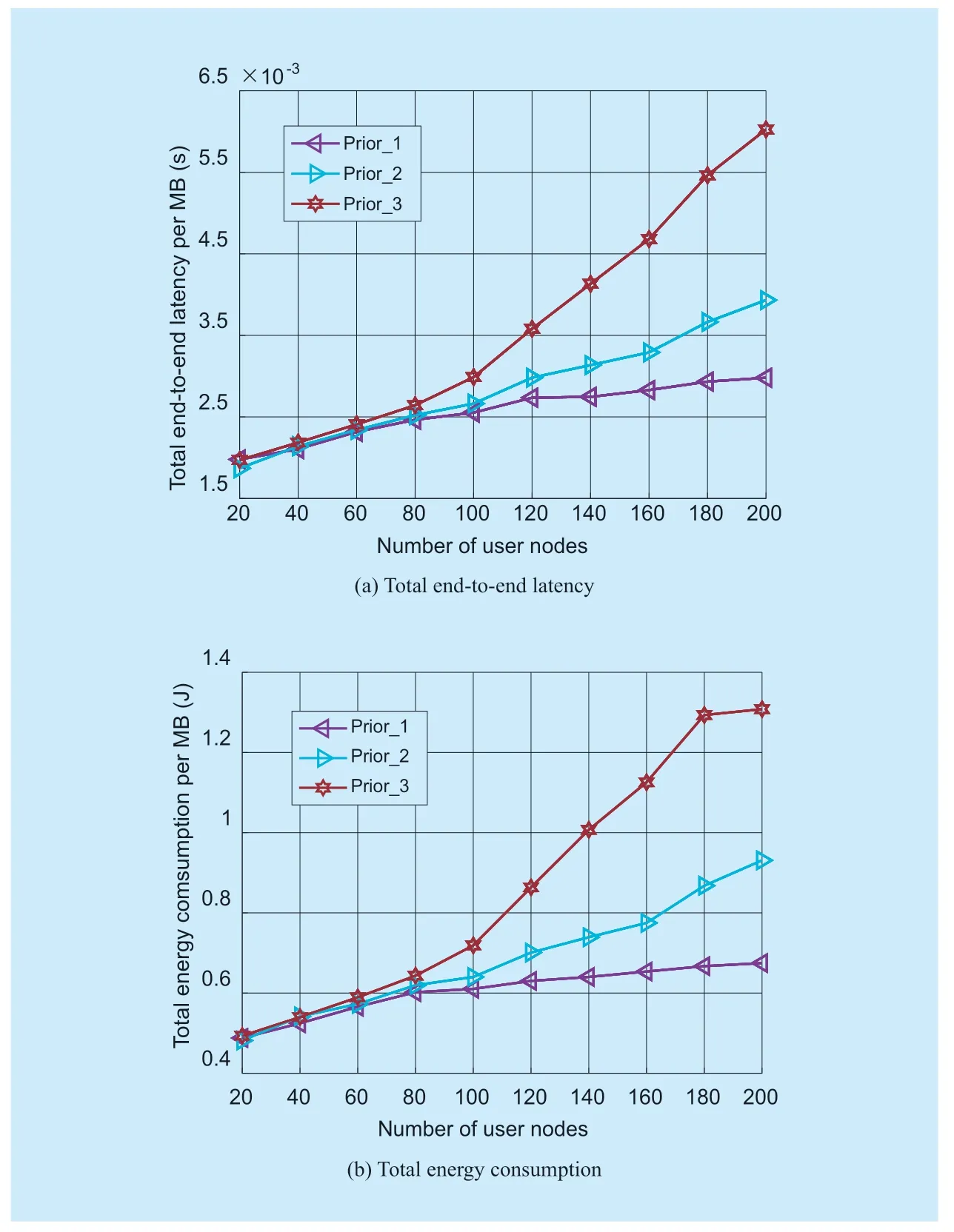

Fig.2.Simulation results of three placement strategies.

Here we consider four content formats of multimedia packets in the experimental simulation: text (1 KB ~ 256 KB), image (512 KB ~ 2 MB), audio (512 KB ~ 5 MB), and video (1 MB ~ 20 MB). Task requests in these formats thus range in size from 1 KB to 20 MB and are differentiated in our placement strategy. Besides, we also take into account three priority groups when sorting the requests from all user nodes.
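As an illustration of this request model, the following Python sketch draws requests with the four size ranges above and sorts them by priority. The size ranges come from the text; the uniform size distribution, the convention that priority 1 is the highest, the conversion 1 MB = 1024 KB, and all names are assumptions made for the example.

```python
import random

# Size ranges in KB, taken from the four content formats in the text.
SIZE_RANGES_KB = {
    "text": (1, 256),
    "image": (512, 2 * 1024),
    "audio": (512, 5 * 1024),
    "video": (1024, 20 * 1024),
}
PRIORITIES = (1, 2, 3)  # assumption: 1 is the highest priority


def make_request(rng):
    """Draw one request; each format is equally likely."""
    fmt = rng.choice(sorted(SIZE_RANGES_KB))
    low, high = SIZE_RANGES_KB[fmt]
    return {
        "format": fmt,
        "size_kb": rng.uniform(low, high),  # assumed uniform within the range
        "priority": rng.choice(PRIORITIES),
    }


def sort_by_priority(requests):
    """Order requests so that higher-priority ones are placed first."""
    return sorted(requests, key=lambda r: r["priority"])
```

For example, `sort_by_priority([make_request(random.Random(0)) for _ in range(5)])` returns five requests ordered by priority group.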

V.SIMULATION AND ANALYSIS

In this section, we carry out simulations to evaluate the performance of our designed service placement strategy. The simulation scenario is a 500 × 500 m² open area in which we set up 30 fog nodes (20 routers and 10 dedicated fog devices). The workload capacities of routers and dedicated devices are {4, 8, 16} MB and {32, 64} MB, respectively. The number of user nodes ranges from 20 to 200 to test latency and energy consumption under different numbers of task requests. We set time slots for request sending and service placing. We repeat each group of simulations with different numbers of users 100 times. The simulation environment is MATLAB R2018b.

5.1 Latency and energy consumption

As shown in figure 2, we compare the simulation results of our designed two-way matching (TWM) strategy with a random method and an existing method originally applied in mobile micro-clouds [18] that uses the idea of the shortest path. The latency and energy consumption here cover fog service only.

First, in figure 2(a), the blue, red, yellow and green broken lines stand for the total end-to-end latency of the random method, the shortest path method, DSP, and the proposed TWM, respectively. All four lines show a similar trend as the number of user nodes increases from 20 to 200: they rise smoothly and then slow down until they stabilize. The overall trend can be explained as follows. When the number of user nodes is relatively small compared to the available fog service, we are able to place suitable service for requests of all workload sizes and priorities without worrying about excessive transmission distances or energy cost. As the demand for service resources continues to grow, supply gradually approaches saturation. Comparing the methods from top to bottom, TWM shows the least latency, and the gap becomes larger as the number of users increases. Since in Section IV we consider the selection processes of both users and providers, service resources may be better utilized.

Second, in figure 2(b), the patterns of the lines are similar to those in figure 2(a). The only difference is that the gap between the blue line (Random) and the red line (Shortest_Path) appears narrower than in the latency result. Setting aside the different scales of the two subfigures, this can be explained by the fact that the shortest path method from cloud computing may not consider geographical distribution, which may drag down its performance in fog computing, especially in energy consumption.

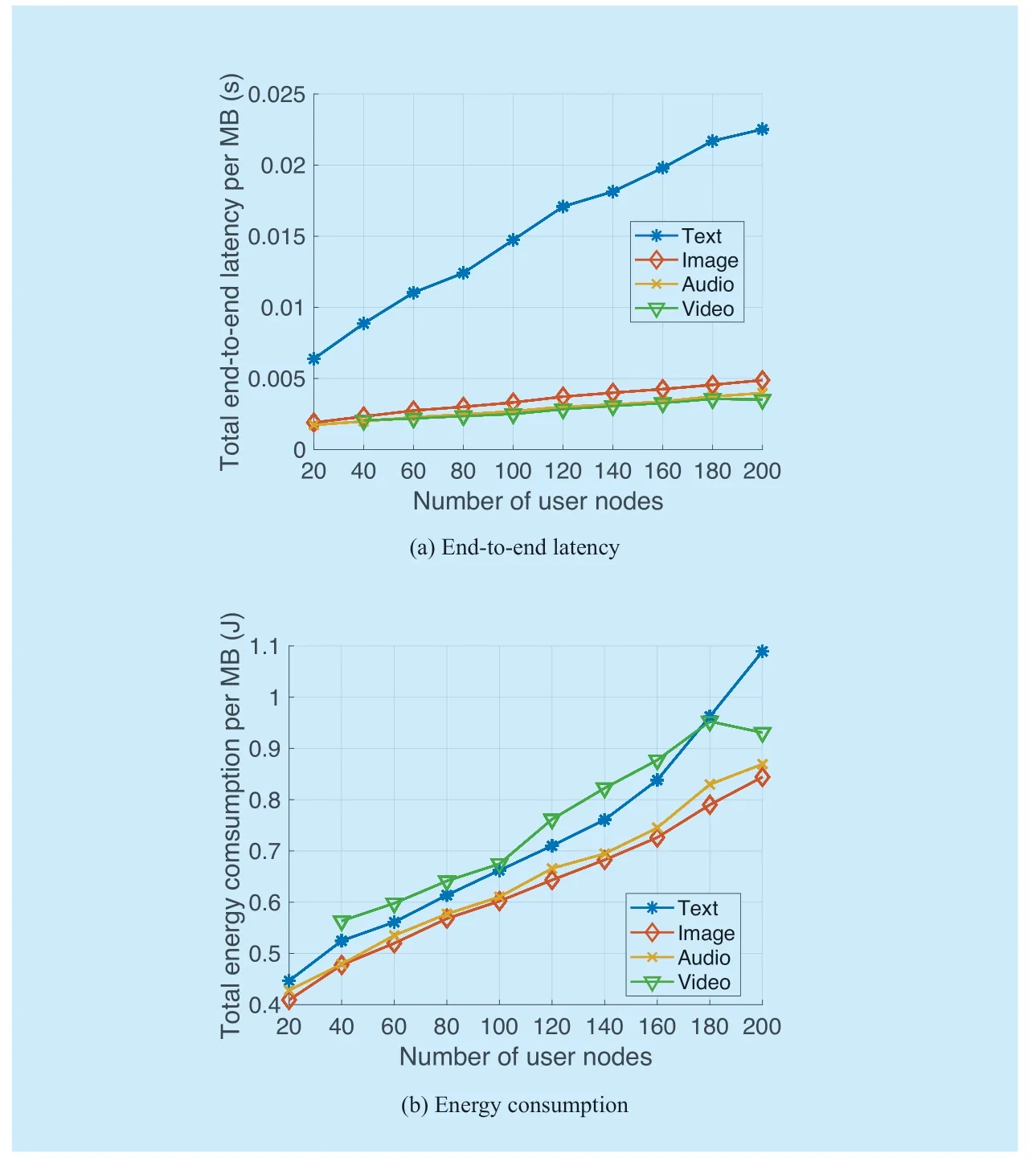

5.2 Results of content formats and priorities

To further evaluate the performance of our placement strategy in detail, we examine how the TWM algorithm treats requests in different multimedia content formats and priorities.

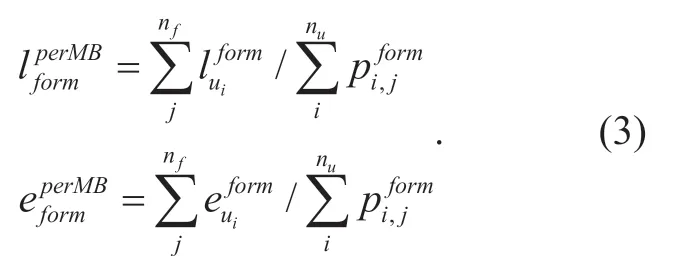

Equation (3) gives the method of calculating the latency and energy cost per MB for the four multimedia content formats. Here we count only the latency and energy cost of the specific content format and the workload being placed to obtain the ratio.
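A minimal Python sketch of this per-MB ratio is given below. Equation (3) is only available as an image, so the form used here, total cost of a format divided by the total workload placed for that format, is an assumption based on the description above; the record layout and the function name are hypothetical.

```python
from collections import defaultdict


def cost_per_mb(records):
    """records: iterable of (content_format, placed_mb, latency, energy).

    Returns {content_format: (latency per MB, energy per MB)}.
    """
    totals = defaultdict(lambda: [0.0, 0.0, 0.0])  # MB, latency, energy
    for fmt, placed_mb, latency, energy in records:
        totals[fmt][0] += placed_mb
        totals[fmt][1] += latency
        totals[fmt][2] += energy
    return {
        fmt: (lat / mb, en / mb)
        for fmt, (mb, lat, en) in totals.items()
        if mb > 0  # skip formats with no placed workload
    }
```

The same computation applies to priority groups by grouping on the priority level instead of the content format.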

As shown in figure 3, we calculate the results of the four multimedia content formats by dividing by the size of the workload (MB). First, in figure 3(a), the blue line of Text, which has the smallest size scope, stays on top from beginning to end as the number of user nodes increases. As shown in Equation (1), the propagation latency, which is part of the total latency, does not depend on the requested workload. Since each multimedia content format is requested with the same probability, it is precisely the small workload size that makes the propagation latency a large extra time cost. Compared to other content formats, to place the same size of service resources for smaller Text requests, transmission distances may increase exponentially. Moreover, the other three content formats show nearly coincident growth trends. Video, with the largest task size scope, is slightly higher than Image and Audio in value.

Then, in figure 3(b), the energy consumption of Text is no longer significantly higher than the others. The two multimedia formats with the middle average file size cost less energy per MB during transmission. As with latency, energy cost also has a part independent of workload size, but it does not produce a gap like the one in figure 3(a). This could be explained by the difference between Equations (1) and (2): the linear coefficient $b$ is a constant, while the propagation latency is related to the transmission distance.

Fig.3.Simulation results of four multimedia content formats.

Fig.4.Simulation results of three priorities.

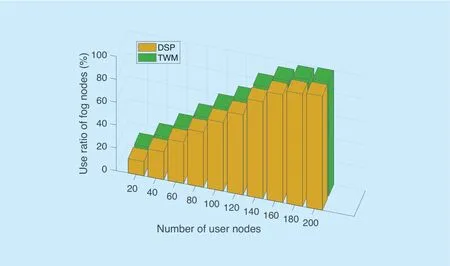

Fig.5.Use ratios of DSP and TWM.

Besides multimedia content formats, we also consider the results of fog service placement under different priorities. The calculation method is the same as in Equation (3). We treat requested tasks at three levels of priority. Figures 4(a) and 4(b) show a similar overall pattern. The results for both latency and energy consumption meet the experimental expectation that high-priority requests are placed with fog service at low latency and energy consumption.

Lastly, in this section, we compare TWM with DSP in terms of the use ratios of all fog nodes. As figure 5 depicts, TWM improves the performance of service placement while keeping the device use ratio relatively unchanged.

VI.THE REAL PLUG-AND-PLAY FOG

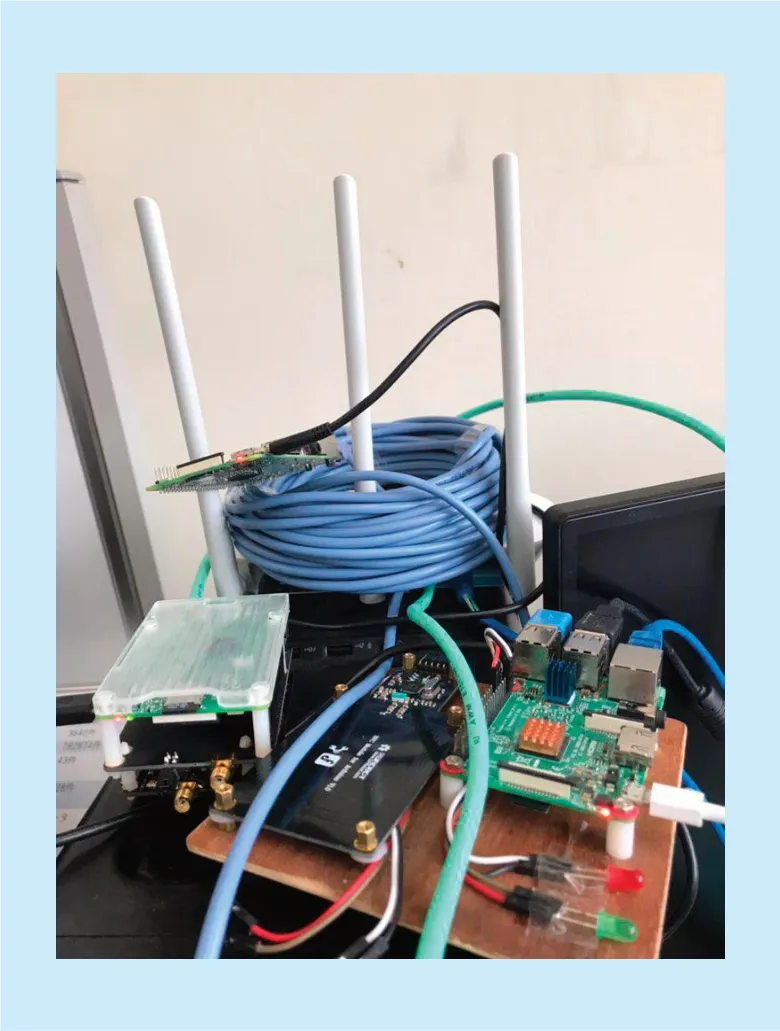

In this section, we focus on the implementation of PnPF using Raspberry Pi 3 Model B (RP3B) [27] and the OpenWrt Project [28].

According to the network model in figure 1, we set one RP3B as a server to store the original multimedia files. Two more RP3Bs act as fog nodes providing computing service to nearby users. The three RP3Bs are connected by wire. We choose OpenWrt 18.06.1 as the embedded operating system for the fog nodes to achieve routing and other computing functions.

A proposed PnPF is shown in figure 6. Here we suppose users in the User Tier send requests for processing multimedia files stored in the Cloud Tier. There are mainly three kinds of processing tasks: image, audio, and video. All multimedia files are handled by FFmpeg [29] for transcoding. We build our experiment software for ARM Linux by cross-compilation.

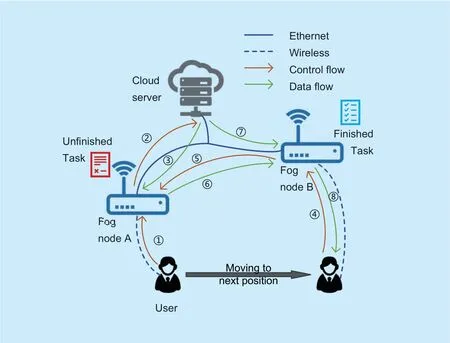

As shown in figure 7, we use curved arrows in different colors to display the control flow and data flow in our design. For convenience, control flows use UDP and data flows use TCP.

Our experimental goal is to simulate the case in which a user moves while requesting fog services, so as to place services dynamically based on the real-time model. That is, rather than continuing to connect to the original node at the risk of a high packet loss rate, or staying in place waiting for the task to finish, we partition the task from the beginning. The process of PnPF service placement is as follows.

·① User sends a request to node A nearby.

·② Node A requests file from cloud server.

·③ Node A downloads the file from the server and processes it.

·④ User moves near Node B and sends message to it.

·⑤ Node B tells node A that it will take over the unfinished task.

·⑥ Node A sends finished parts of the task and the work state information to B.

·⑦ Node B downloads the rest of the file and finishes processing.

·⑧ Node B returns the result to user.

When a request for a multimedia file is received by fog node A, the original video downloaded from the cloud server is cut into several small parts. These parts are processed in order at fog node A. Once the user moves to a place far away from fog node A (we perform service switching based on RSSI values in real time), PnPF chooses a new fog node to take over the unfinished task. That is, the finished parts and the current work state information (e.g. the number of the next part of the task) are transmitted to fog node B over TCP. Once all the parts are done, the fog node currently in service puts the parts together and sends the result back to the user.
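The handover of finished parts and work state can be sketched in Python as below. This is an in-memory illustration only: it assumes that the node with the strongest RSSI is chosen (the text states only that switching is driven by RSSI values), it replaces the TCP transfer with passing an object, and the names `WorkState`, `FogNode`, and `pick_node` are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class WorkState:
    total_parts: int
    next_part: int = 0                         # number of the next part to process
    finished: list = field(default_factory=list)


class FogNode:
    def __init__(self, name):
        self.name = name

    def process(self, state, max_parts):
        """Process up to max_parts parts in order, starting at state.next_part."""
        for _ in range(max_parts):
            if state.next_part >= state.total_parts:
                break
            state.finished.append(f"part{state.next_part}@{self.name}")
            state.next_part += 1
        return state


def pick_node(rssi_dbm):
    """Assumed switching rule: serve from the node with the strongest RSSI."""
    return max(rssi_dbm, key=rssi_dbm.get)
```

For example, node A can process two of five parts, after which `pick_node({"A": -80, "B": -55})` selects B, and B continues from `state.next_part` without redoing the finished parts.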

We set three types of requests for file transcoding in different multimedia content formats: video, audio, and image. For a video file, in ③ of figure 7, we cut the complete stream into clips of 5 seconds after downloading it from the server to node A. At the moment node A receives a message from node B (⑤), all video clips finished at node A are sent to node B, together with the number of the next clip to process. At last, node B concatenates all the video clips in the original order. For an audio file, the length of the clips is 20 seconds. Images are processed without cutting, since the time cost is relatively low.
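One possible way to express the cutting and concatenation steps with FFmpeg is sketched below in Python. The paper does not give the exact commands, so the choice of the segment muxer and the concat demuxer is an assumption, and the file names and helper names are hypothetical. The functions only build the command lines; they can be passed to `subprocess.run` on a machine where FFmpeg is installed.

```python
def split_cmd(src, clip_seconds, pattern):
    """Cut src into clips of about clip_seconds each without re-encoding."""
    return ["ffmpeg", "-i", src, "-c", "copy",
            "-f", "segment", "-segment_time", str(clip_seconds),
            "-reset_timestamps", "1", pattern]


def concat_list(clips):
    """Contents of the list file read by FFmpeg's concat demuxer."""
    return "".join(f"file '{clip}'\n" for clip in clips)


def concat_cmd(list_file, dst):
    """Join the clips named in list_file back into one file, in order."""
    return ["ffmpeg", "-f", "concat", "-safe", "0",
            "-i", list_file, "-c", "copy", dst]
```

With stream copy, clip boundaries fall on keyframes, so clip lengths are only approximately 5 seconds for video or 20 seconds for audio; transcoding each clip would be a separate FFmpeg call between the two steps.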

Fig.6.A PnPF network made up of three RP3Bs.

Fig.7.The process of service placement in PnPF.

The workspace of each single task in PnPF is designed as follows. We use a timestamp (ms) to identify the task, which includes a work status (downloading, transcoding, completed) and storage for temporary results. For example, in a transcoding task, we fetch the clips, process and rename them, and then save them in the storage space. An extra service keeps reading the current work status and displays it in the user interface.
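A task workspace of this kind could be modeled as in the following Python sketch. The three status values come from the text; the class and field names are hypothetical, and temporary results are kept in an in-memory list rather than a storage directory.

```python
import time
from dataclasses import dataclass, field
from enum import Enum


class Status(Enum):
    DOWNLOADING = "downloading"
    TRANSCODING = "transcoding"
    COMPLETED = "completed"


@dataclass
class TaskWorkspace:
    task_id: int                                  # timestamp in milliseconds
    status: Status = Status.DOWNLOADING
    results: list = field(default_factory=list)   # names of temporary result files

    def save_clip(self, index):
        """Record a processed clip under a name derived from the task id."""
        name = f"{self.task_id}_{index:03d}"
        self.results.append(name)
        return name


def new_task():
    """Create a workspace identified by the current time in milliseconds."""
    return TaskWorkspace(task_id=int(time.time() * 1000))
```

A status display service would then only need to read `workspace.status` periodically.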

As a result,with the help of PnPF,we are able to enjoy the portable fog service being placed nearby.

VII.CONCLUSION

In this paper, we focus on the problem of service placement for fog computing in wireless multimedia networks. First, we study the related work on fog computing and service placement methods applied in hybrid cloud services. We design a dynamic service placement strategy to provide fog service to mobile users and serve their requested tasks. Our experimental setup includes requests in four multimedia content formats and three priorities, with different numbers of user nodes. The simulation results show that our proposed strategy can reasonably place service for users in need and achieve better performance in latency and energy consumption compared with existing methods.

In the future, we are going to consider more details in the network model design. There is still a gap between our current model and the actual situation, and our target is to put the idea into practical use. The strategy is also limited to the current experimental setting, so we will include more performance metrics to enrich the existing evaluation dimensions and finally achieve the plug-and-play fog service.

ACKNOWLEDGMENT

This work is partially supported by JSPS KAKENHI Grant Numbers JP16K00117,JP19K20250 and KDDI Foundation.
