基于定期竞争学习的多目标粒子群优化算法

刘明 董明刚 敬超

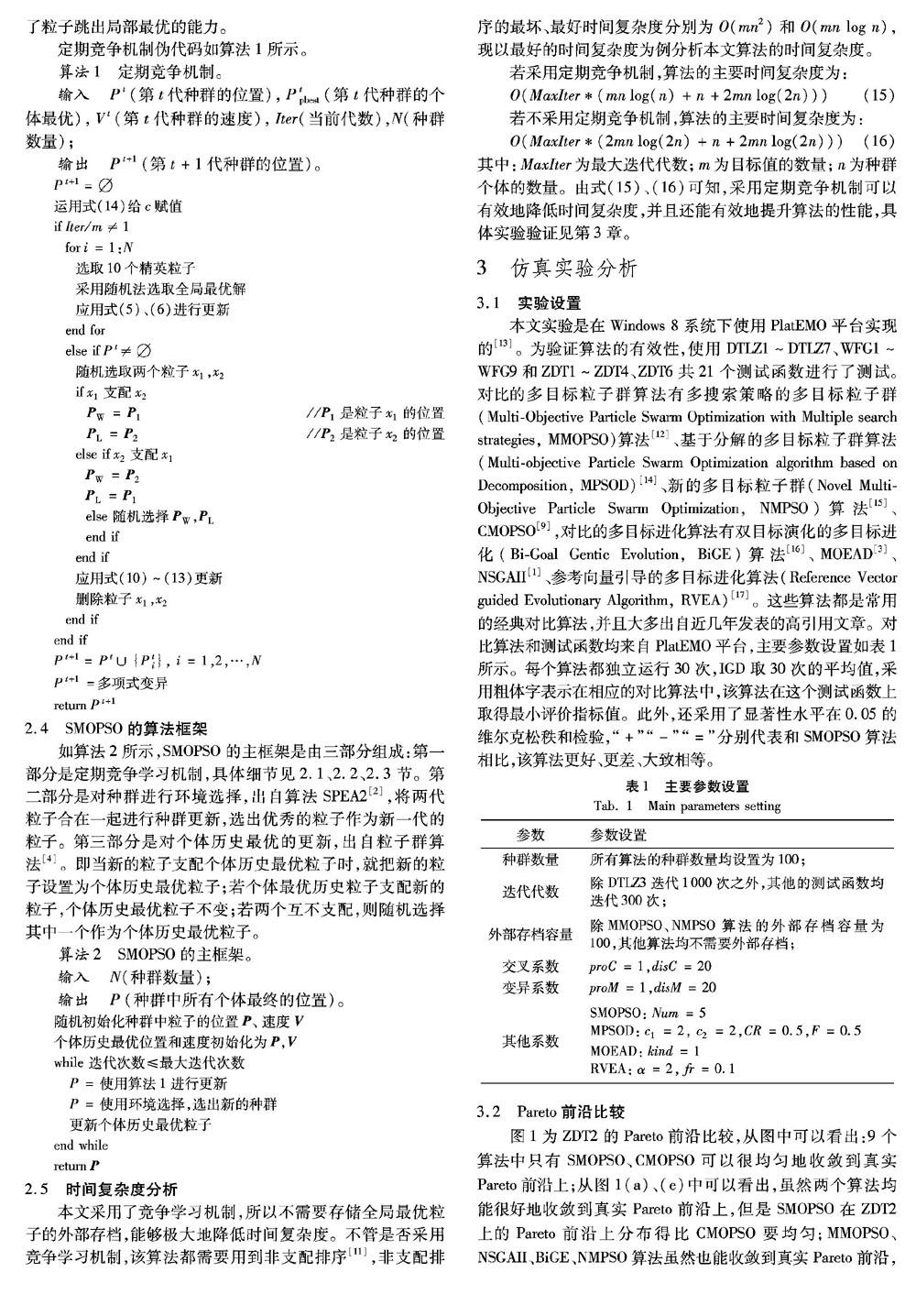

摘 要:为提高种群的多样性和算法的收敛性,提出一种基于定期竞争学习机制的多目标粒子群算法。该算法将多目标粒子群算法和竞争学习机制相结合,即每隔一定迭代代数便使用一次竞争学习机制,很好地保持了种群的多样性;同时,该算法不需要全局最优粒子的外部存档,而是从当前代种群中选取一部分优秀的粒子,再从这些优秀的粒子中随机选取一个作为全局最优粒子,能够有效提升算法的收敛性。将提出的算法与基于分解的多目标粒子群算法(MPSOD)、基于竞争机制且快速收敛的多目标粒子群(CMOPSO)算法、参考向量引导的多目标进化算法(RVEA)等8个算法在21个标准测试函数上进行了比较,结果表明,所提算法的帕累托(Pareto)前沿更加均匀,在世代距离(IGD)上会更加小。

关键词:多目标优化;粒子群优化;定期竞争;竞争学习机制;全局最优选取策略

中图分类号: TP183; TP301.6

文献标志码:A

Abstract: In order to improve the diversity of population and the convergence performance of algorithm, a Scheduled competition learning based Multi-Objective Particle Swarm Optimization (SMOPSO) algorithm was proposed. The multi-objective particle swarm optimization algorithm and the competition learning mechanism were combined and the competition learning mechanism was used in every certain iterations to maintain the diversity of the population. Meanwhile, to improve the convergence of algorithm without using the global best external archive, the elite particles were selected from the current swarm, and then a global best particle was randomly selected from these elite particles. The performance of the proposed algorithm was verified on 21 benchmarks and compared with 8 algorithms, such as Multi-objective Particle Swarm Optimization algorithm based on Decomposition (MPSOD), Competitive Mechanism based multi-Objective Particle Swarm Optimizer (CMOPSO) and Reference Vector guided Evolutionary Algorithm (RVEA). The experimental results prove that the proposed algorithm can get a more uniform Pareto front and a smaller Inverted Generational Distance (IGD).

Key words: multi-objective optimization; Particle Swarm Optimization (PSO); scheduled competition; competitive learning mechanism; global best selection strategy

0 引言

现实生活中普遍存在的优化问题都可以归结为多目标优化问题,而解决多目标优化问题的一个有效途径就是进化算法,常见的多目标进化算法有带精英策略的快速非支配排序遗传算法 (Non-dominated Sort Genetic Algorithm II, NSGAII)[1]、提升Pareto前沿的进化算法 (Strength Pareto Evolutionary Algorithm, SPEA2)[2]、基于分解的多目标进化算法(MultiObjective Evolutionary Algorithm based on Decomposition, MOEAD)[3]。粒子群优化(Particle Swarm Optimization, PSO)算法[4]也属于进化算法之一,最初用来解决单目标优化问题,由于其具有实现简单、计算成本低、效率高等优点,如今也被广泛应用到多目标问题优化上,因此,大量的多目标粒子群算法被提出。

在多目标粒子群算法中,种群的多样性和算法的收敛性是影响算法性能的两个关键因素[5]。为了提高算法的收敛性,Hu等[5]提出了基于并行网格坐标系的自適应多目标粒子群算法,通过平行单元坐标系统反馈回来的信息来进行动态调整,很好地提升了算法的收敛性。Zhang等[6]提出了一种基于竞争机制[7]的多目标粒子群(Competitive Mechanism based multi-Objective Particle Swarm Optimizer, CMOPSO)算法,能够很好地提升算法的收敛性,并且不需要外部存档,而是在每一代种群中选取10个精英粒子作为全局最优粒子集合,再从10个精英粒子中随机选取2个精英粒子,运用竞争机制对2个粒子进行比较,选取优胜的粒子作为全局最优粒子来引导种群的进化。韩敏等[8]提出了基于高斯混沌变异和精英学习的自适应多目标粒子群算法,提出了收敛性贡献这一概念作为自适应参数的依据和精英学习方法,很好地提升了算法的收敛性。为了提升种群的多样性,Cheng等[9]提出了一个有效的多目标粒子群教与学优化(Particle Swarm Optimization and Teaching-Learning-Based Optimization, PSO-TLBO)算法, 即将粒子群算法和教与学算法结合,主要应用粒子群算法来更新种群,同时每隔一定代数便运用一次教与学算法,能很好地保持种群的多样性。Balling[10]提出了Maximin策略,该策略能够自动地“奖励”分散的解,“惩罚”聚集的解,整体偏向于分布分散的解,能很好地提升种群的多样性。

综上所述,学者们在针对多目标粒子群优化算法上取得了大量的优异成果,但多目标粒子群算法由于收敛速度快导致容易陷入局部最优或者早熟这一缺陷仍然没有得到很好地解决,因此提升种群多样性与算法收敛性仍然是一个值得研究的领域,故本文提出了一种基于定期竞争学习的多目标粒子群优化(Scheduled competition learning based Multi-Objective Particle Swarm Optimization, SMOPSO) 算法。

本文的主要工作如下:

1) 提出一种定期竞争学习机制,可以很好地提升种群的多样性。该机制将多目标粒子群算法和竞争学习机制相结合,每隔一定迭代次数[10]便运用竞争学习机制进行一次种群更新,即把种群中的粒子随机两两配对进行比较,失败的粒子将向优胜的粒子学习,优胜的粒子则向其保存的个体历史最优粒子学习,这使得每个粒子都有可能成为全局最优粒子,能很好地提升种群的多样性。

2) 提出一种新的全局最优粒子的选择方式,可以很好地提升算法的收敛性。它采用了基于非支配解排序[17]和拥挤距离[1]的共同排序,选取前10个粒子作为精英粒子[9],然后采取随机法从精英粒子中随机选取一个作为全局最优粒子来引导其他粒子的更新。此外,该方法不需要存储全局最优粒子的外部存档,能极大地降低算法的时间复杂度。

1 相关工作

1.1 PSO算法

4 结语

为提升种群多样性和算法的收敛性,本文提出了一种基于定期竞争机制的多目标粒子群算法,它将多目标粒子群算法与竞争学习机制相结合,既考虑到了种群的多样性,又能兼顾收敛性。首先,利用本文提出的多目标粒子群优化策略,可以很好地提升算法的收敛性,再定期使用竞争学习机制,提升种群的多样性。并且,该算法不需要存储全局最优粒子的外部存档,极大地降低了算法的时间复杂度。经实验验证,本文算法能够在收敛性和多样性之间取得良好的平衡,很好地提升了算法的性能。

SMOPSO算法的未来研究方向如下:1)随着社会的发展,多目标优化问题会变得越来越复杂,将该算法向更多目标方向发展是一个很值得研究的领域;2)可以试着引入局部搜索策略,提高算法的收敛精度,以期获得更好的收敛性,从而进一步提升算法的性能。

致谢:本文算法的实现应用了安徽大学BIMK团队开发的多目标进化算法PlatEMO开源平台。对BIMK团队提供的帮助,在此致以衷心的感谢!

参考文献:

[1] DEB K, PRATAP A, AGARWAL S, et al. A fast and elitist multi-objective genetic algorithm: NSGA-II [J]. IEEE Transactions on Evolutionary Computation, 2002, 6(2):182-197.

[2] LAUMANNS M. SPEA2: improving the strength Pareto evolutionary algorithm, Technical Report Gloriastrasse 35 [R/OL]. [2018-03-09]. https://ci.nii.ac.jp/naid/10017663175.

https://ci.nii.ac.jp/naid/10017663175

[3] ZHANG Q, LI H. MOEA/D: a multiobjective evolutionary algorithm based on decomposition [J]. IEEE Transactions on Evolutionary Computation, 2007, 11(6): 712-731.

[4] KENNEDY J, EBERHART R C. Particle swarm optimization [C]// Proceedings of the 1995 IEEE International Conferenceon Neural Networks. Piscataway: IEEE, 1995: 1942-1948.

[5] HU W, YEN G G. Adaptive multiobjective particle swarm optimization based on parallel cell coordinate system [J]. IEEE Transactions on Evolutionary Computation, 2015, 19(1): 1-18.

[6] ZHANG X, ZHENG X, CHENG R, et al. A competitive mechanism based multi-objective particle swarm optimizer with fast convergence [J]. Information Sciences, 2018, 427: 63-76.

[7] CHENG R, JIN Y. A competitive swarm optimizer for large scale optimization [J]. IEEE Transactions on Cybernetics, 2015, 45(2): 191-204.

[8] 韓敏,何泳.基于高斯混沌变异和精英学习的自适应多目标粒子群算法[J].控制与决策,2016,31(8):1372-1378. (HAN M, HE Y. Adaptive multi-objective particle swarm optimization with Gaussian chaotic mutation and elite learning [J]. Control and Decision,2016,31(8):1372-1378.)

[9] CHENG T, CHEN M, FLEMING P J, et al. An effective PSO-TLBO algorithm for multi-objective optimization [C]// Proceedings of the 2016 IEEE Congress on Evolutionary Computation. Piscataway: IEEE, 2016: 3977-3982.

[10] BALLING R. The maximin fitness function, multi-objective city and regional planning [C]// EMO 2003: Proceedings of the 2003 International Conference on Evolutionary Multi-Criterion Optimization, LNCS 2632. Berlin: Springer, 2003: 1-15.

[11] ZHANG X, TIAN Y, CHENG R, et al. An efficient approach to nondominated sorting for evolutionary multiobjective optimization [J]. IEEE Transactions on Evolutionary Computation, 2015, 19(2): 201-213.

[12] LIN Q, LI J, DU Z, et al. A novel multi-objective particle swarm optimization with multiple search strategies [J]. European Journal of Operational Research, 2015, 247(3): 732-744.

[13] TIAN Y, CHENG R, ZHANG X, et al. PlatEMO: a Matlab platform for evolutionary multi-objective optimization [Educational Forum][J]. IEEE Computational Intelligence Magazine, 2017, 12(4): 73-87.

[14] DAI C, WANG Y, YE M. A new multi-objective particle swarm optimization algorithm based on decomposition [J]. Information Sciences — Informatics and Computer Science, Intelligent Systems, Applications: An International Journal, 2015, 325(C): 541-557.

[15] LIN Q, LIU S, ZHU Q, et al. Particle swarm optimization with a balanceable fitness estimation for many-objective optimization problems [J]. IEEE Transactions on Evolutionary Computation, 2018, 22(1): 32-46.

[16] LI M, YANG S, LIU X. Bi-goal evolution for many-objective optimization problems [J]. Artificial Intelligence, 2015, 228: 45-65.

[17] CHENG R, JIN Y, OLHOFER M, et al. A reference vector guided evolutionary algorithm for many-objective optimization [J]. IEEE Transactions on Evolutionary Computation, 2016, 20(5): 773-791.