An Overview of Interactive Immersive Services

Xiaozhi Yuan, Qingyang Wang, Linfeng Zhang, Li Peng, Xiaojie Zhu, Jinlan Ma, Zhan Liu, Yuxiang Jiang

Institute of Mobile and Terminal Technology, China Telecom Research Institute, Guangzhou 510630, China

Abstract: Immersive services are typical emerging services in the current IMT-2020 network. As networks evolve, real-time interactive applications emerge one after another. This article provides an overview of immersive services that focus on real-time interaction. The scenarios, framework, requirements, key technologies, and issues of interactive immersive services are presented.

Keywords: framework; interactive immersive service; key technology; requirement; scenario

I. INTRODUCTION

Immersive multimedia services are multimedia services that provide users with a highly realistic experience. An immersive multimedia service may involve multiple parties, multiple connections, and the addition or deletion of resources and users within a single communication session [1]. The highly realistic immersive experience is realized by combining multimedia technologies such as perceptual information acquisition, media processing, media transmission, media synchronization and media presentation.

With the rapid development of new multimedia communication technologies and network evolution, future networks will support emerging services including Ultra-HD 4K and 8K video, augmented reality (AR) and virtual reality (VR), and interactive and holographic telepresence for near-real-time communication experiences. For traditional audio and video services, latency and jitter are both important indicators affecting quality of service (QoS), while the requirements on packet loss rate and packet error rate are relatively relaxed. In contrast with traditional quality of experience (QoE), which is generally applicable to regular audio/video services, immersive experience portrays more precisely the extreme and vivid scene experiences brought by emerging interactive network services [2]. These emerging interactive network services are more sensitive to the capabilities provided by the network, for example, bandwidth, end-to-end (E2E) latency, jitter, and synchronization. The global commercial deployment of IMT-2020 networks can satisfy the high-bandwidth, low-latency, and high-reliability requirements of AR/VR applications. Therefore, AR/VR services are developing under the current network architecture on the basis of immersive experience, and are widely applied in education, training, entertainment, medical treatment, industrial manufacturing, and other areas.

Holographic and light field three-dimensional (3D) display technologies extend the boundary of immersive experience to the entire physical space. The ideal naked-eye hologram can break the limitations of head-mounted displays (HMDs) and optical glasses. Haptic services, in turn, provide realistic restoration and real-time interaction in the haptic dimension. The development of sensor technology will help to realize real-time interactive immersive olfactory and gustatory experiences in the future. Moreover, the digital human and the Metaverse, which contain immersive applications with real-time interaction and multi-dimensional perception, are emerging as hot concepts for the next 10 years. Immersive services are gradually changing from immersive experience services to interactive services with real-time perception and interaction of multiple kinds of media information.

In recent years, major operators, equipment manufacturers, research organizations and institutions have carried out in-depth research on immersive services such as VR, AR, holographic communication, and haptic communication. There are many working groups and standardization activities related to immersive services and immersive media in the 3rd Generation Partnership Project (3GPP), the Telecommunication Standardization Sector of ITU (ITU-T), the International Organization for Standardization (ISO), the International Electrotechnical Commission (IEC), and the Institute of Electrical and Electronics Engineers (IEEE). For example, there are research projects related to VR, AR, extended reality (XR) and haptic services in 3GPP [3–10], while there are recommendations related to immersive live experience (ILE) in ITU-T [11–16]. In March 2016, the IEEE Standards Association approved the creation of the IEEE P1918.1 standards working group, responsible for standardization of Tactile Internet related areas [17]. In addition, the IMT-2030 (6G) Promotion Group has already started research on 6G as well as the corresponding scenarios and use cases. Its published reports “White Paper on 6G Typical Scenes and Key Capabilities” [18] and “White Paper on 6G Vision and Candidate Technologies” [19] propose eight typical scenes of 6G: immersive cloud XR, holographic communication, sensory interconnection, intelligent interaction, communication for sensing, proliferation of intelligence, digital twins, and global seamless coverage. Another published report, “White Paper on 6G Network Architecture Vision and Key Technologies Prospect” [20], proposes a framework for an immersive multi-sensory network. Other technical reports related to the requirements and key technologies of immersive services are currently under study.

In 3GPP, several Technical Reports (TR) and Technical Specifications (TS) study interactive services. TR 23.700-60 [3] studies key issues, solutions, and conclusions on the support of advanced media services, e.g., high data rate low latency services, AR/VR/XR services, and tactile/multi-modality communication services. TS 26.118 [4] defines operation points, media profiles and presentation profiles for VR streaming services. TR 22.847 [5] studies new scenarios and identifies use cases and potential requirements for immersive real-time experience involving tactile and multi-modal interactions, and provides a gap analysis against existing requirements and functionalities for supporting tactile and multi-modal communication services. TR 22.987 [6] presents use cases in telecommunication services that are developed by applying haptic technology, and justifies accepting haptic technology in telecommunication services by investigating readiness for its use in the telecommunication system and the level of benefit for the user experience. TR 26.918 [7] identifies potential gaps and relevant interoperability points that may require further work and potential standardization in 3GPP by collecting comprehensive information on VR use cases, existing technologies, and subjective quality. TR 26.928 [8] collects information on XR in the context of 5G radio and network services. The information covers definitions, core technology enablers, a summary of devices and form factors, core use cases, relevant client and network architectures, APIs and media processing functions, key performance indicators and QoE metrics for relevant XR services. TR 26.998 [9] collects information on glass-type AR/MR devices in the context of 5G radio and network services. The information covers formal definitions for the functional structures of AR glasses, core use cases for AR services over 5G, media exchange formats and profiles relevant to the core use cases, necessary content delivery transport protocols and capability exchange mechanisms, etc. TR 26.999 [10] provides reference test material and test results for improved usability of the technologies in TS 26.118 [4], which includes several VR media profiles for video and a single media profile for audio with different configuration options. In addition, the Recommendation ITU-T H.430 series identifies general requirements and high-level functional requirements [11], the general framework and high-level functional architecture [12], service scenarios and several use cases [13], service configuration, media transport protocol and signalling information of MPEG media transport (MMT) for ILE services [14], haptic transmission for ILE systems [15], and reference models for presentation environments [16].

In 2021, a new work item [21] related to interactive immersive services was created by Question 8 of Study Group 16 in ITU-T. This work item focuses on key requirements of interactive immersive services such as holographic remote surgery, multi-user remote collaboration, and immersive navigation. Interactive immersive services are services that involve the collection, processing, and transmission of interactive information to support real-time interactions among immersive service users or objects. Interactive information is the information delivered between event sites and remote sites during immersive interaction, which includes audio, video, text, haptics, etc.

The goal of this article is to provide an overview of interactive immersive services. The scenarios, framework, requirements, key technologies, and issues of interactive immersive services are presented in the following sections. Finally, a general view of interactive immersive services is given in conclusion.

II. SERVICE SCENARIOS

Interactive immersive services have three characteristics: immersive, interactive, and real-time. The service scenarios of interactive immersive services can be divided from different technical perspectives. The scenarios can be further subdivided into a series of specific use cases based on application areas, such as entertainment, education, medicine, training, tourism, and industry. In this article, the scenarios are presented along two dimensions.

2.1 Scenarios Divided by Different Media Presentation Technologies

According to different media presentation technologies, interactive immersive services can be divided into three main scenarios: XR, holographic, and haptic [22,23].

XR refers to all real-and-virtual combined environments and associated human-machine interactions generated by computer technology and wearables. It includes representative forms such as VR, AR, and mixed reality (MR), and the areas interpolated among them [8]. Wearables include HMDs for VR, optical glasses for AR and MR, and handheld mobile devices with location tracking and a camera. XR provides users with a fully immersive experience and real-time interaction through highly restored simulation of the five senses and a highly realistic combination of the virtual and the real. Typical use cases for XR services are immersive online games, 360-degree conference meetings, AR guided assistants, 3D shared experiences, and so on [8].

Holographic presentation is a technology that records the phase and amplitude of light scattered from the surface of objects by interferometry, and then reconstructs the 3D image of the objects by the principle of diffraction. The ideal holographic presentation provides users with 360-degree stereo images without any wearable. Holographic communication is an emerging method that transmits the holographic data of a captured object through the network, restores the stereo image by holographic presentation technology, and lets users interact with it in real time. Typical use cases for holographic services are holographic calls, holographic conferences, holographic medicine, holographic remote training, holographic digital space, and so on [13].

Haptic is a sense perceived by touching an object. It involves tactile senses, which refer to the touching of surfaces, and kinaesthetic senses, or the sensing of movement in the body [6]. Haptic services are any communication-related services that involve delivering haptic information through the network. Combining haptic with visual and auditory senses, haptic services provide users with multi-modality interaction and feedback. Haptic emoticon delivery and customized alerting are typical use cases for haptic services [6].

2.2 Scenarios Divided by Different Types of Interaction

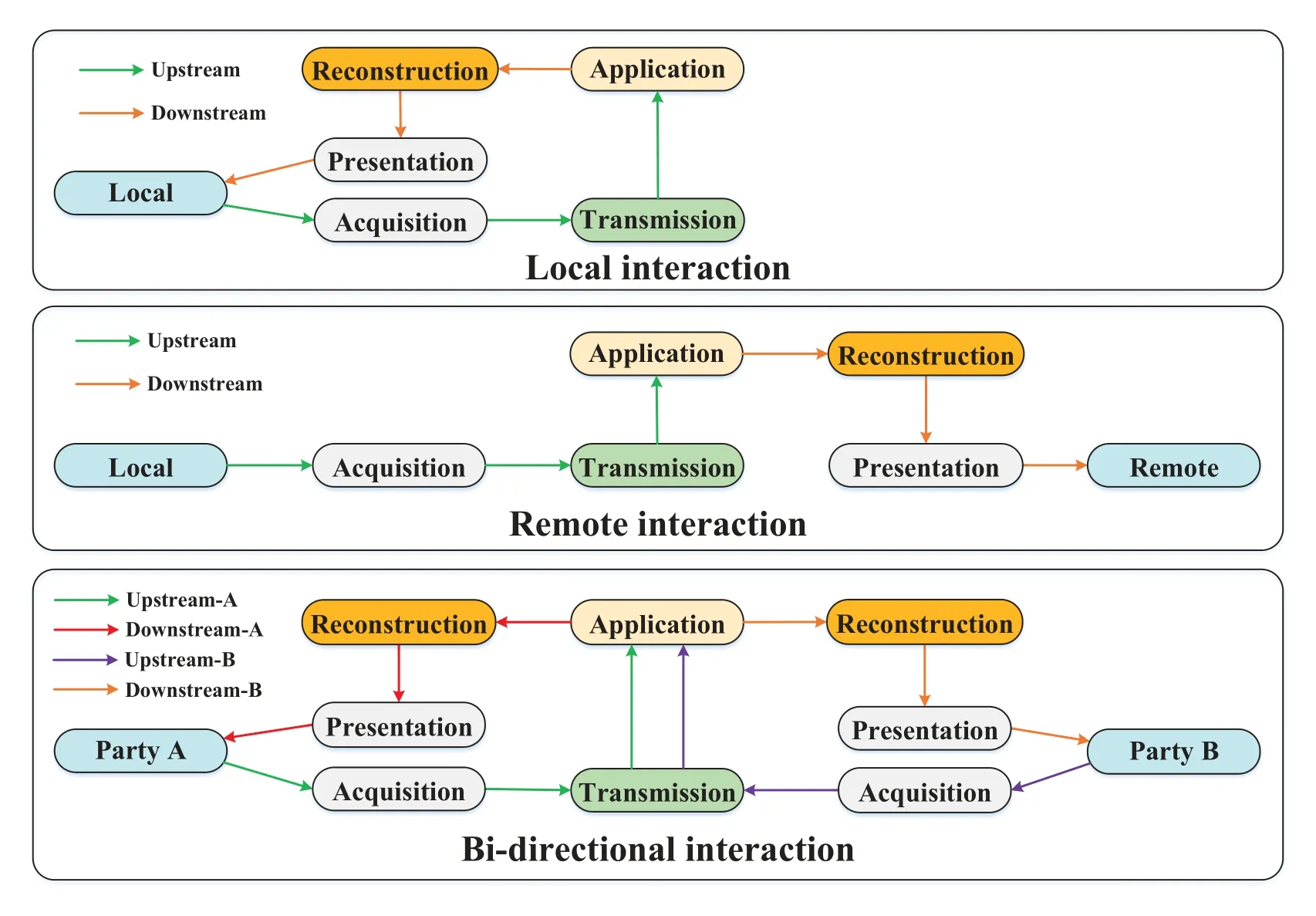

According to different objects and types of interaction, interactive immersive services can be divided into three scenarios: local interaction, remote interaction, and bi-directional interaction, as shown in Figure 1.

Figure 1. Scenarios divided by different types of interaction.

Local interaction. In the scenario of local interaction, acquisition and presentation are both at the local site. The downstream media is rendered and reconstructed at the cloud or edge. Local users interact with the application through the network. Most local XR services belong to local interaction, such as immersive 360-degree video, VR offline games, etc. [7].

Remote interaction. In the scenario of remote interaction, acquisition captures the users, objects and surrounding environment at the local site. After transmission through the network and reconstruction at the cloud or edge, the presentation restores the stereo image. Users at the remote site can see the restored stereo image and interact with it in real time through voice and other means. The typical use case of remote interaction is telepresence [13].

Bi-directional interaction. Bi-directional interaction is an extension of remote interaction. Both communicating parties have acquisition and presentation capabilities. The interactive information of either party is acquired, transmitted through the network, and reconstructed at the cloud or edge of the opposite party. The typical use case of bi-directional interaction is remote control [13]. After the interactive information of the controller is collected and transmitted over the network, it is restored on the operated object in real time by auxiliary devices such as mechanical arms. The changes to the object are captured by acquisition and fed back in real time to the stereo image presented to the controller. The extension of bi-directional interaction is multi-party interaction, including many-to-one remote collaboration and multi-party real-time communication [13].

III. FRAMEWORK

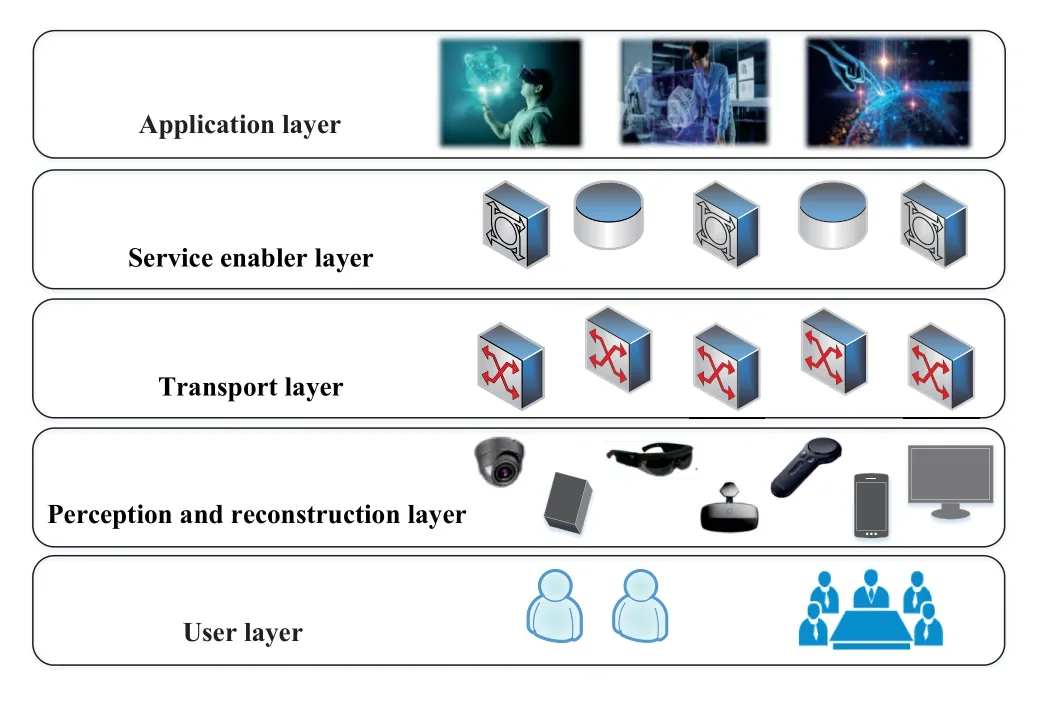

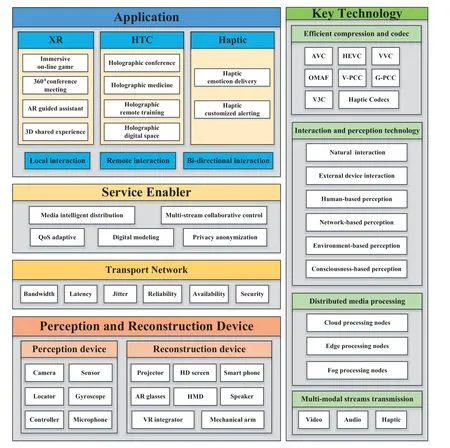

In order to provide users with immersive, multi-dimensional perceptive and real-time interactive applications, interactive information such as video, audio, haptic and text needs to be acquired, processed, transmitted, and reconstructed for interactive immersive services. The framework of interactive immersive services [21] is illustrated in Figure 2. With reference to the framework of the immersive multi-sensory network in the report “White Paper on 6G Network Architecture Vision and Key Technologies Prospect” [20] published by the IMT-2030 (6G) Promotion Group, it consists of an application layer, a service enabler layer, a transport layer, a perception and reconstruction layer, and a user layer. The framework aims to support the real-time interaction and service control of interactive immersive services. The devices in the perception and reconstruction layer capture and represent the interactive information from the user layer. The transport layer transmits uplink interactive media from the perception and reconstruction layer, as well as downlink interactive media from the service enabler layer. The service enabler layer provides service capabilities, such as media intelligent distribution, multi-stream collaborative control, QoS adaptive, digital modeling, and privacy anonymization. These service capabilities are invoked by the application layer to support and develop various applications in accordance with different service requirements.

Figure 2. Framework of interactive immersive services [21].

User layer contains users, objects and surroundings. Compared with traditional audio and video services, the interacted objects of interactive immersive services are all component elements of a specified space, including humans, objects, and scenes, which can be either real or virtual. The objects and scenes involve the real elements of the space and the virtual stereo images reconstructed by computer algorithms [24]. The interactive information, which refers to the information delivered among immersive service users or objects, takes many multimedia forms such as movement, expression, audio, video, text, haptic, and operation [25].

Perception and reconstruction layer consists of a series of perception devices and reconstruction devices. Perception devices mainly include cameras, sensors, locators, gyroscopes, handheld controllers, microphones and so on [26]. The visual, auditory, haptic, and other media information of the user layer is captured, encoded, and pre-processed by perception devices. These devices then convert the interactive information into an upstream for network transmission.

Reconstruction devices include display devices such as laser projectors and high-definition (HD) screens, HMDs such as AR glasses and XR integrated machines, external devices such as smart terminals and speakers, and auxiliary devices such as mechanical arms [26]. These devices decode the downstream processed by the service enabler layer, and restore it to the user layer as the corresponding interactive information.

Transport layer provides routing and delivery capabilities for uplink and downlink media streams. The transport layer also provides QoS guarantees for truly immersive scenarios. For example, the transport layer is recommended to support true hologram transmission at normal human size in some holographic scenarios [27]. Since extremely low latency and deterministic delay are crucial for truly immersive scenarios to avoid motion sickness [28], the transport layer is required to support real-time interaction and accurate data transmission.

Service enabler layer consists of distributed enablers and storage servers. The enablers provide media processing and service control capabilities for top-layer applications. The storage servers are used to store user data, addresses of routing nodes, the distribution of computing resources, and service policies.

Application layer provides visualized, interactive, immersive applications by invoking the enablers of the service enabler layer. Specifically, interactive immersive services, including 3rd party services, invoke the service capabilities of the enablers of the service enabler layer to develop various applications such as immersive cloud XR, holographic communication, haptic communication, and intelligent interaction.

IV. REQUIREMENTS

The scenarios, use cases, and interactive objects of interactive immersive services are more diverse than those of traditional audio and video multimedia services. In addition, interactive immersive services involve multi-dimensional senses and media interaction. Therefore, a variety of corresponding terminal devices are required to provide acquisition and reconstruction capabilities. Furthermore, the large number of media streams generated by interaction requires a high-performance transport network that provides QoS guarantees to ensure real-time interaction. More importantly, media processing, data security, computing resource scheduling, and other requirements of different applications need a series of service-based capabilities working in coordination.

4.1 Multiple Interactive Objects

Traditional 2D multimedia services such as video calls interact with users who are real in the physical world. Interactive immersive services break the limitation of human-to-human interaction in the physical world. The space and area of interaction are expanded to the virtual world and to environments in which the virtual and the real are integrated. The objects of interaction are expanded to all the elements of the whole space or environment, including real humans and objects, and virtual stereo images that are reconstructed from reality or modeled by computer algorithms, such as digital humans. Therefore, interactive immersive services are required to support the integration and interaction of the virtual and the real.

4.2 Multiple Terminal Devices

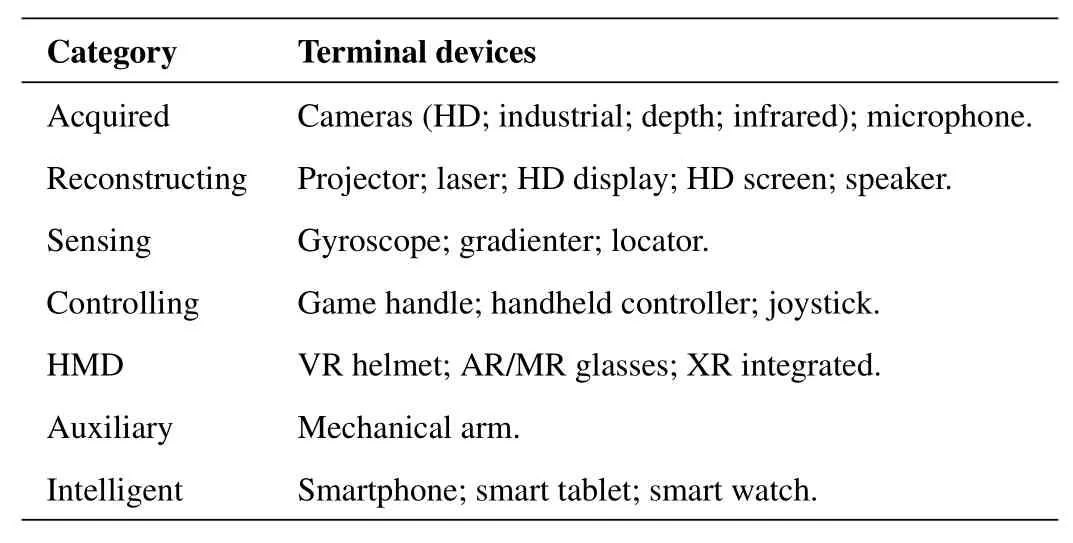

Interactive immersive services have varied use cases and interactive objects. Therefore, corresponding terminal devices are required to provide interactive interfaces, signal acquisition, network access, content presentation, codecs, media pre-processing, format conversion and other functions. Common categories of terminal devices are listed in Table 1. The demands for lightweight, mobile and HD terminal devices are expected to be addressed by cloud computing technology and low-latency multi-access edge computing (MEC) networks.

Table 1. Categories of terminal devices.

4.3 Multi-Dimensional Media Interaction

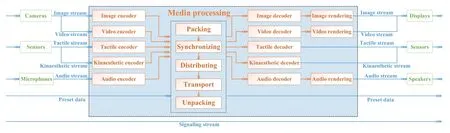

Interactive immersive services support visual, auditory, haptic, olfactory, gustatory, and somatosensory media interactions. Figure 3 shows a typical E2E media process.

Figure 3. Media process.

Encoding. The visual, auditory, and haptic information is acquired by cameras, sensors, and microphones, and converted by the encoders into corresponding media streams such as image streams, video streams, tactile streams, kinaesthetic streams, audio streams, etc. The media streams are converted into standard formats before media processing. Moreover, efficient encoding is important to reduce network bandwidth requirements.

Packing. After further compression, the media streams are integrated and packaged. For example, a TCP/IP packet typically consists of a header, which contains the address information of the sender and receiver, and a data section. During integration and packing, the specific streams that involve biometric information will be modified based on user privacy or security requirements.
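As a minimal sketch of the packing step, the Python snippet below frames one media payload with a small fixed header and checks completeness when unpacking. The header layout (stream ID, sequence number, timestamp, payload length) and the names `pack_media` and `unpack_media` are assumptions made for illustration; they are not a format defined by the framework in [21].

```python
import struct

# Hypothetical header, network byte order, no padding:
# stream id (1 byte), sequence number (4 bytes),
# timestamp in microseconds (8 bytes), payload length (2 bytes).
HEADER = struct.Struct("!BIQH")


def pack_media(stream_id, seq, timestamp_us, payload):
    """Prefix a media payload with the illustrative header."""
    return HEADER.pack(stream_id, seq, timestamp_us, len(payload)) + payload


def unpack_media(packet):
    """Split a packet into header fields and payload; reject incomplete packets."""
    if len(packet) < HEADER.size:
        raise ValueError("packet shorter than header")
    stream_id, seq, timestamp_us, length = HEADER.unpack_from(packet)
    payload = packet[HEADER.size:HEADER.size + length]
    if len(payload) != length:
        raise ValueError("incomplete payload")
    return stream_id, seq, timestamp_us, payload
```

Carrying the payload length in the header is one simple way for a receiver to decide whether a received packet is complete.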

Synchronizing. Strict synchronization is required between concurrent media streams from different cameras and sensors to ensure HD reconstruction and real-time interaction. In addition to traditional signaling streams, audio streams, and video streams, these concurrent streams also include other sensory media streams such as touch and smell. Concurrent streams can share the same QoS policy or be allocated different ones, covering bandwidth, latency, jitter, etc. Strict synchronization of these concurrent streams should be guaranteed at determined intervals among multiple channels.
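One way to picture synchronization among concurrent streams is timestamp alignment within a tolerance window. The sketch below is an assumption for illustration only: the function name `synchronized_groups`, the use of the first stream as the reference, and the nearest-timestamp rule are not taken from the cited work.

```python
def synchronized_groups(streams, tolerance_ms):
    """Group samples across streams that lie within tolerance_ms of a reference.

    streams: dict mapping stream name -> list of (timestamp_ms, sample).
    The first stream in the dict is used as the timing reference.
    Returns a list of (reference_timestamp, {stream name: sample}).
    """
    names = list(streams)
    reference, others = names[0], names[1:]
    groups = []
    for ts, sample in streams[reference]:
        group = {reference: sample}
        for name in others:
            candidates = streams[name]
            if not candidates:
                break
            # Pick the sample in this stream closest in time to the reference.
            nearest_ts, nearest = min(candidates, key=lambda item: abs(item[0] - ts))
            if abs(nearest_ts - ts) > tolerance_ms:
                break  # this stream has nothing close enough; drop the group
            group[name] = nearest
        else:
            # Reached only when every other stream contributed a sample.
            groups.append((ts, group))
    return groups
```

A reference timestamp is kept only when every other stream has a sample close enough to it, which mirrors the requirement that all channels stay aligned at each interval.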

Distributing. The packets are distributed to the transport network by selecting routing nodes based on the destination address and routing algorithm. Furthermore, the packets are distributed to the cloud or edge nodes by coordinating computing resources in accordance with the computation demands of applications.

Transport. The distributed packets are transmitted through the network, which provides satisfactory performance indicators such as high bandwidth, low delay and jitter, and high reliability.

Unpacking. The packets are unpacked on arrival at the opposite side. Because transmission is not perfectly reliable, a small number of packets may be lost on the way. The receiver also needs to determine whether each received packet is complete while unpacking.

Decoding. The unpacked packets are restored to audio, video, and haptic streams by the corresponding decoders. The decoded haptic streams, including tactile streams and kinaesthetic streams, are reconstructed into haptic information by the corresponding sensors.

Rendering. The decoded image, video, and audio streams are rendered in order to improve the definition of video and the fidelity of audio, and are presented to users through screens, displays and speakers. The media streams can be rendered at the cloud or edge depending on the scenario. Cloud computing provides computation and storage resources by deploying servers with powerful computing capability. Edge computing supports in-network computation and low-latency services by being located in proximity to the end users.

4.4 High-Performance Transport Network

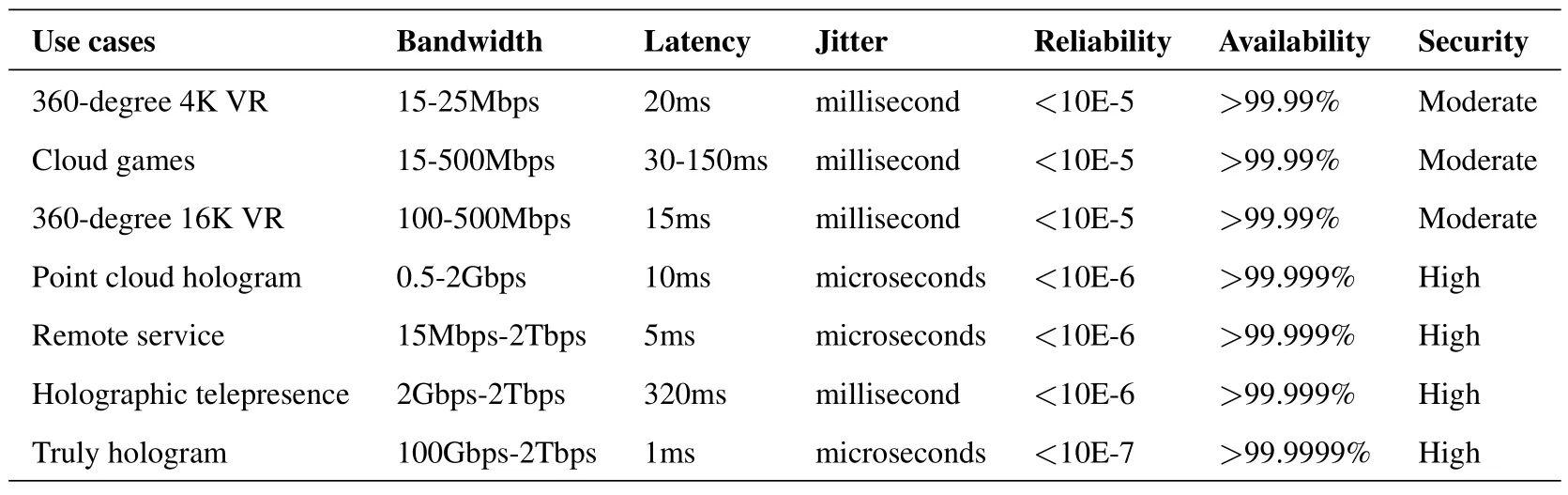

Massive real-time interactive media streams place higher requirements on, and pose challenges to, the performance of the transport network. QoS is defined as the collective effect of service performance, which determines the degree of satisfaction of a user of a service. It is characterized by the combined aspects of performance factors applicable to all services. In terms of interactive communications, QoS parameters are mainly characterized by bandwidth, latency, jitter, reliability, availability, and security. The QoS requirements of some interactive immersive services are listed in Table 2 according to existing research.

Table 2. QoS requirements [29–36] of interactive immersive services.

Ultra-high bandwidth. Immersive XR and holographic services provide users with a fully immersive and ultimate experience involving the distribution and transmission of massive media data. When multiple kinds of sensory information are transmitted in coordination, the amount of data grows with the number of sensory types. Therefore, the communication network is required to provide higher bandwidth. Taking immersive XR at 16K resolution as an example, the required network transmission rate is 0.98 Gbit/s. In holographic communication, a hologram photo is about 7-8 GByte in size, or 56-64 Gbit. The required transmission rate is 1.68-1.92 Tbps [37] for video of the same definition at 30 frames per second. For a hologram, the bandwidth requirement for the media stream is 2.06 Gbps to 1 Tbps [38].
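The holographic figures above follow from simple arithmetic, sketched below in Python. The function name `hologram_rate_tbps` is illustrative, and decimal units (1 Tbit = 1000 Gbit) are assumed.

```python
def hologram_rate_tbps(frame_gbyte, fps=30):
    """Raw bit rate in Tbit/s for hologram frames of frame_gbyte GByte at fps."""
    gbit_per_frame = frame_gbyte * 8        # 1 byte = 8 bits
    return gbit_per_frame * fps / 1000      # assumed decimal units: 1 Tbit = 1000 Gbit


low = hologram_rate_tbps(7)    # 7 GByte per frame
high = hologram_rate_tbps(8)   # 8 GByte per frame
print(f"{low:.2f} to {high:.2f} Tbit/s")
```

With frames of 7 to 8 GByte at 30 frames per second, this reproduces the 1.68-1.92 Tbps range cited from [37].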

Ultra-low latency. The E2E latency of an immersive XR service must be less than 20 ms [8] to avoid dizziness. Holographic remote manipulation usually requires a lower latency of a few milliseconds [37]. The latency of haptic services needs to be kept within 1 ms because the haptic sensitivity of the human body is about 1 ms [39].

Sensitive E2E jitter. The E2E jitter refers to the variation in latency within a flow of packets transmitted E2E across a network from a source to a destination endpoint. The proposed jitter ranges are very sensitive (microseconds), sensitive (milliseconds) and not sensitive [33]. For strongly interactive services such as remote control and true holograms, a jitter of microseconds is required to guarantee real-time interaction.
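Since jitter is described as the variation in latency within a flow, it can be summarized from per-packet latency samples. The sketch below computes two common summaries, the peak-to-peak spread and the mean change between consecutive packets; the choice of these two statistics and the name `jitter_stats` are assumptions, as the source does not prescribe a formula.

```python
def jitter_stats(latencies_ms):
    """Return (peak_to_peak, mean_consecutive_delta) for one flow's latencies."""
    if len(latencies_ms) < 2:
        return 0.0, 0.0
    # Spread between the slowest and fastest packet in the flow.
    peak_to_peak = max(latencies_ms) - min(latencies_ms)
    # Average absolute change in latency between consecutive packets.
    deltas = [abs(b - a) for a, b in zip(latencies_ms, latencies_ms[1:])]
    return float(peak_to_peak), sum(deltas) / len(deltas)
```

For example, `jitter_stats([10, 12, 11, 15])` gives a peak-to-peak spread of 5 ms; whether that is acceptable depends on the sensitivity class of the service.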

High reliability. Reliability is defined as the number of data packets successfully received at the destination relative to the total number of data packets sent. In general, it is expressed as the loss rate of packets transmitted E2E across a network. Traditional VR online video services usually require a maximum failure rate between 10^-3 and 10^-5. Holographic and haptic services require higher reliability, in the range of 10^-6 to 10^-7, as a result of multi-stream collaboration and synchronization.

Extremely high availability. Availability refers to the probability that a system will be operational when a demand is made for service. The availability requirement of immersive XR services is 99.99% [30], and that of holographic services is 99.999% [30]. Haptic services require higher availability because of their higher real-time interactivity. Especially for holographic manipulation services combined with haptic communication, such as holographic remote surgery, the network needs to provide an extremely high availability of 99.9999% [37] to ensure the real-time performance and security of remote surgery.
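To make the availability targets more concrete, they can be converted into a yearly downtime budget. The Python sketch below assumes a 365-day year and treats availability as the fraction of time the service is operational; the function name is illustrative.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # assumption: non-leap year


def downtime_minutes_per_year(availability_percent):
    """Maximum yearly downtime implied by an availability target."""
    return (1 - availability_percent / 100) * MINUTES_PER_YEAR


for target in (99.99, 99.999, 99.9999):
    print(f"{target}% -> {downtime_minutes_per_year(target):.2f} min/year")
```

Under these assumptions, 99.99% allows roughly 52.6 minutes of downtime per year, 99.999% roughly 5.3 minutes, and 99.9999% roughly 32 seconds.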

Security level. Holographic telepresence and haptic manipulation may involve biometric information. For security and privacy reasons, sensitive information should be protected as a high priority in order to avoid leakage and unauthorized access.

4.5 Service-Based Capabilities

Depending on the media processing, data security, computing resource scheduling, and other requirements of different applications, a series of service-based capabilities need to work in coordination [21,40].

Media intelligent distribution. The real-time interactive immersive experience presents new challenges to the high-throughput and ultra-high-speed content distribution capabilities of networks. Firstly, for service control, massive media data needs to be distributed to appropriate or specific processing nodes according to data type, priority, and computing requirements. For example, it is better to perform rendering that involves a large amount of computation on cloud nodes with strong computing power, and to process holographic representations on edge nodes with lower E2E latency [25]. Media codecs can also be distributed to multiple computing nodes in the network for collaborative processing. Secondly, media intelligent distribution is required to provide efficient distribution policies that select appropriate media servers or databases according to the types and attributes of users and the service requirements. For example, a model can be invoked from the most appropriate model database based on its modeling properties, or content can be downloaded from the nearest application server.
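A distribution policy of this kind can be sketched as a simple node-selection rule: among the nodes with enough free compute and within the latency budget, pick the one with the lowest latency. The `Node` fields, the `select_node` function, and the selection rule are hypothetical and serve only to illustrate the cloud versus edge trade-off described above.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    latency_ms: float     # E2E latency from the user to this node
    free_compute: float   # available compute, arbitrary units


def select_node(nodes, required_compute, latency_budget_ms):
    """Pick the lowest-latency node that has enough compute and meets the budget."""
    feasible = [
        n for n in nodes
        if n.free_compute >= required_compute and n.latency_ms <= latency_budget_ms
    ]
    # None signals that no node satisfies both constraints.
    return min(feasible, key=lambda n: n.latency_ms) if feasible else None
```

With one edge node (low latency, little compute) and one cloud node (higher latency, ample compute), a compute-heavy rendering task lands on the cloud node while a latency-critical task lands on the edge node.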

Multi-stream collaborative control. Interactive immersive services involve multi-channel audio streams, video streams, image streams, text streams, control signal streams, haptic streams, holographic streams, etc. Each channel may map to a separate stream with stringent timing requirements to ensure internal consistency. Holographic services in particular are required to support about 800-1000 concurrent streams [32]. When realizing haptic communication, interactive haptic information captured by several sensors is recommended to be transmitted synchronously with video and audio signals [41,42]. When realizing real-time multi-user collaboration, synchronous media representation for multiple users is required. Therefore, interactive immersive services are required to control, in a coordinated way, the establishment, interaction, synchronization, and integration of concurrent media streams based on scenarios and policies.

QoS adaptive. Compared with the QoE of traditional audio and video services [2], the QoE of interactive immersive services depends on both the QoS of the network (such as bandwidth, latency, packet loss, etc.) and the capabilities of the terminal device (such as resolution, frame rate, bit rate, viewing angle, etc.). Combined with AI and big data technology, QoS adaptive is the capability to generate QoS policies that match the service requirements with the real-time network status by collecting the distribution and status of network resources, and to schedule and manage the network and terminal devices based on the new QoS policies to ensure the QoE under different service requirements.

Digital modeling. Digital modeling makes the digital representation of physical entities possible. It is a key capability for realizing fully immersive interaction [43]. Depending on the scenario, interactive immersive services use online modeling based on media data or quick modeling based on model data. Online modeling based on media data is the capability to restore the captured objects with 100% fidelity from media data. It maps physical entities to digital virtual bodies. The amount of media data that needs to be processed in real time is usually massive. Quick modeling based on model data is a process of generating a digital virtual body from another digital virtual body: it uses a pre-set digital model as the base, appends key information about the object, such as its characteristics and attributes, to the digital model, and renders it for presentation. Generally, the amount of media data to be processed in quick modeling is much smaller than in online modeling.

Privacy anonymization. Holographic telepresence and haptic manipulation may involve the collection, transmission, and presentation of biometric information. For privacy reasons [25], the sensitive information of users, including biometric information, user data, location, positioning, and path tracking, is required to be anonymized in most scenarios. In specified authentication or payment scenarios, accurate biometric information such as the fingerprints, faces, and even retinas of users is required to be provided in a secure environment. In addition, to support user personalization, the specified media streams may be added, deleted, replaced, or masked by virtual backgrounds, video filters, mosaics, etc.

V. KEY TECHNOLOGIES

Based on the service requirements above, four key technologies for interactive immersive services are presented below.

5.1 Efficient Compression and Codec Technologies

To realize an immersive multi-sensory experience,multi-dimensional media information needs to be acquired,compressed,transmitted,and reconstructed.For 3D video and holographic reconstruction,the huge amount of raw data consumes corresponding network bandwidth.By using effciient image compression and video codec schemes,the bandwidth requirements of immersive media can be reduced to a certain degree.Therefore,effciient media compression and codec technologies are the key technologies to implement high-realistic interaction.

Common media types include audio, image, video, text, hologram, volumetric, haptic, and signal. Several codec technologies and standards related to interactive immersive services are listed as follows.

5.1.1 AVC,HEVC,VVC

Advanced video coding (AVC) [45, 46] was developed in response to the growing need for higher compression of moving pictures for various applications such as videoconferencing, digital storage media, television broadcasting, Internet streaming, and communication. AVC allows motion video to be manipulated as a form of computer data and to be stored on various storage media, transmitted and received over existing and future networks, and distributed on existing and future broadcasting channels.

High efficiency video coding (HEVC) [47, 48] represents an evolution of the existing video coding. Building on the AVC series of standards, HEVC adds: 1) support for higher bit depths and enhanced chroma formats, including the use of full-resolution chroma; 2) support for scalability, which enables video transmission on networks with varying transmission conditions and other scenarios involving multiple bit rate services; 3) support for multi-view, which enables representation of video content with multiple camera views and optional auxiliary information; 4) support for 3D, which enables joint representation of video content and depth information with multiple camera views.

Versatile video coding (VVC) [50, 51] is a video coding technology with a compression capability that is substantially beyond that of the prior generations of such standards. Key application areas for VVC include ultra-HD video, video with a high dynamic range and wide color gamut, and video for immersive media applications, in addition to the applications that have commonly been addressed by prior video coding standards.

At present, the main codec technologies used for HD video are AVC and HEVC. With the same picture quality guaranteed, the compression ratio of HEVC can be increased by about 30% compared with AVC, up to 600:1. The 122nd ISO/IEC JTC 1/SC 29/WG 11 meeting shows that the compression ratio of VVC can be increased by about 40%, and the image compression ratio can reach 800:1 to 1000:1.
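To give a feel for what these compression ratios mean in practice, the following minimal sketch converts an uncompressed video bit rate into a compressed one. The 4K/60 fps/10-bit/4:2:0 source format is an assumption chosen for illustration and does not come from the cited standards; the ratios 600:1, 800:1, and 1000:1 are the upper-bound figures quoted above, so the results should be read as best-case estimates.

```python
def raw_video_bitrate_bps(width, height, fps, bit_depth, samples_per_pixel=1.5):
    """Uncompressed video bit rate in bit/s.

    samples_per_pixel=1.5 corresponds to 4:2:0 chroma subsampling
    (one luma sample plus two quarter-resolution chroma samples per pixel).
    """
    return width * height * fps * bit_depth * samples_per_pixel


def compressed_bitrate_bps(raw_bps, compression_ratio):
    """Bit rate after compression at the given ratio (e.g. 600 for 600:1)."""
    return raw_bps / compression_ratio


# Assumed source format (illustrative only): 3840x2160, 60 fps, 10-bit, 4:2:0.
raw_4k = raw_video_bitrate_bps(3840, 2160, 60, 10)

if __name__ == "__main__":
    print(f"raw: {raw_4k / 1e9:.2f} Gbit/s")
    for codec, ratio in [("HEVC", 600), ("VVC", 800), ("VVC", 1000)]:
        mbps = compressed_bitrate_bps(raw_4k, ratio) / 1e6
        print(f"{codec} at {ratio}:1 -> {mbps:.2f} Mbit/s")
```

Under these assumptions the raw stream is about 7.46 Gbit/s, and a 600:1 ratio brings it to roughly 12.4 Mbit/s.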

5.1.2 OMAF

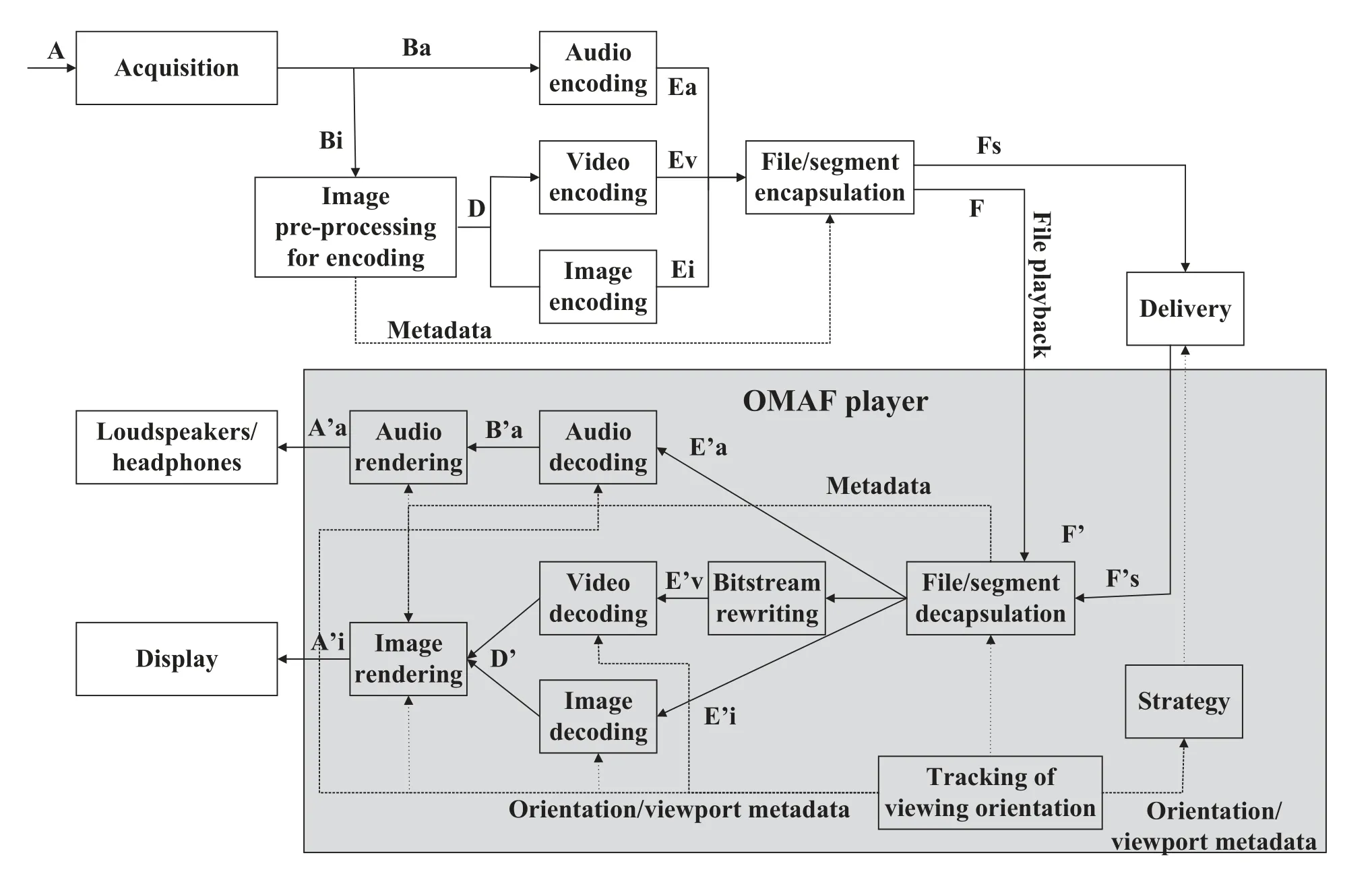

The Moving Picture Experts Group (MPEG) has developed a series of standards (ISO/IEC 23090) for immersive media called MPEG-I, which includes an omnidirectional media format (OMAF). The standard ISO/IEC 23090-2 [44] specifies the omnidirectional media format for coding, storage, delivery, and rendering of omnidirectional media, including video, images, audio, and timed text. Omnidirectional images or video can contain graphics elements generated by computer graphics but encoded as image or video. They are divided into projected, fisheye, and mesh types, which differ in rendering and in preprocessing before encoding. Figure 4 shows a content flow process for omnidirectional media with projected video.

Figure 4. Content flow process for omnidirectional media with projected video [44].

OMAF is the first international standard on immersive media formats. It is compatible with existing standards, including coding, file format, and delivery signaling, and includes metadata information for encoding, projection, packing, and viewport orientation [53].

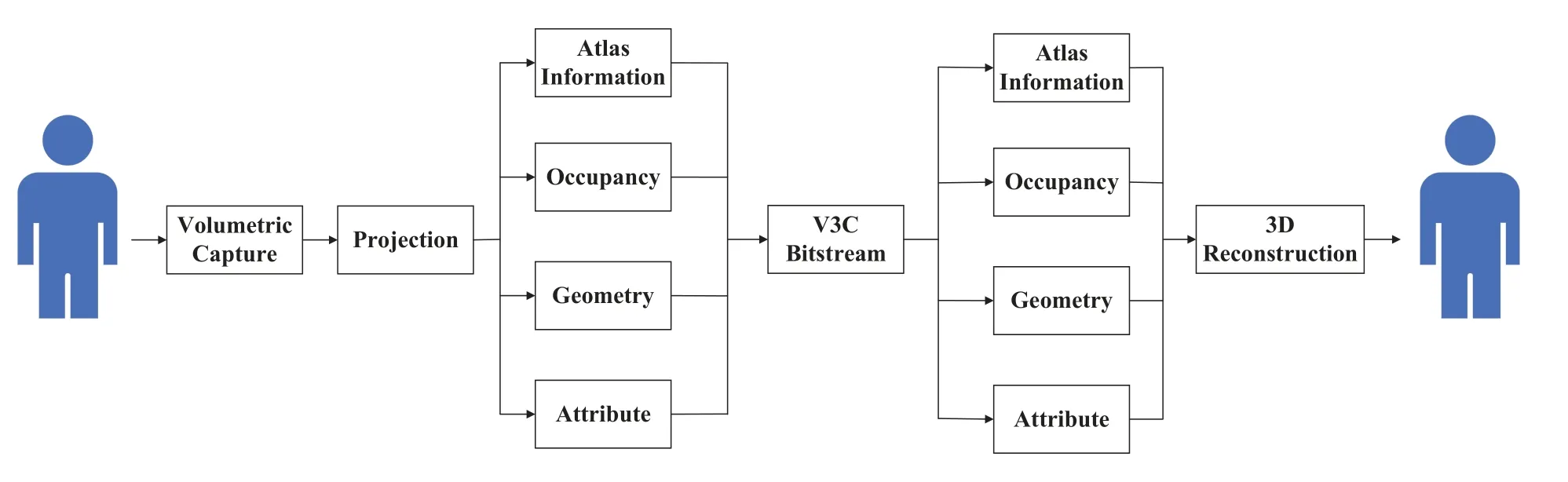

5.1.3 V3C,V-PCC,G-PCC

Visual volumetric video is a sequence of visual volumetric frames and can be represented by a large amount of data, which can be costly in terms of storage and transmission. Volumetric videos differ from regular videos in content format, capturing, streaming, and analytics [54]. The standard ISO/IEC 23090-5 [49] specifies a generic mechanism for visual volumetric video coding (V3C). As shown in Figure 5, visual volumetric frames can be coded by converting the 3D volumetric information into a collection of 2D images and associated data. The converted 2D images can be coded using widely available video and image coding specifications. The coded images and the associated data can then be decoded and used to reconstruct the 3D volumetric information. V3C can be used by applications targeting volumetric content, such as point clouds, immersive video with depth, mesh representations of visual volumetric frames, etc.

Visual volumetric media, such as 3D objects, scenes, and holograms, can be encoded and transmitted in point cloud format [55]. A point cloud is a 3D data representation used in diverse applications associated with immersive media, including VR, AR, immersive telepresence, etc. A point cloud consists of a set of individual 3D points. Each point, in addition to having a 3D position, i.e., a spatial attribute, may also contain a number of other attributes such as color, reflectance, surface normal, etc. Point clouds in their raw format require a huge amount of memory for storage or bandwidth for transmission. For example, a typical dynamic point cloud used for entertainment purposes usually contains about 1 million points per frame, which at 30 frames per second amounts to a total bandwidth of 3.6 Gbps if left uncompressed [56].

Figure 5. Volumetric media conversion [49].
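The 3.6 Gbps figure quoted for a raw dynamic point cloud can be reproduced with a short calculation. The per-point layout used here (three 32-bit floating-point coordinates plus three 8-bit color channels, i.e. 120 bits per point) is an assumption that happens to match the quoted number; the text itself does not state the layout.

```python
def raw_point_cloud_bitrate_bps(points_per_frame, fps, bits_per_point):
    """Uncompressed point cloud bit rate in bit/s."""
    return points_per_frame * fps * bits_per_point


# Assumed per-point layout (not stated in the text):
GEOMETRY_BITS = 3 * 32   # x, y, z as 32-bit floats
COLOR_BITS = 3 * 8       # R, G, B as 8-bit values
BITS_PER_POINT = GEOMETRY_BITS + COLOR_BITS  # 120 bits

rate_bps = raw_point_cloud_bitrate_bps(1_000_000, 30, BITS_PER_POINT)

if __name__ == "__main__":
    print(f"{rate_bps / 1e9} Gbit/s")  # 3.6 Gbit/s under the assumed layout
```

Adding further attributes such as reflectance or surface normals would raise the raw rate proportionally, which is why compression is treated as a prerequisite for transmission.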

MPEG has selected two distinct compression technologies for the point cloud compression (PCC) standardization activities: video-based PCC (V-PCC) [49] and geometry-based PCC (G-PCC) [57]. In addition to V3C, V-PCC is another encoding mechanism based on visual volumetric video. V-PCC is mainly used for dense point clouds, such as holographic digital human bodies that emphasize texture and detail in medical diagnostics, while G-PCC is used for sparse point clouds, such as the reconstruction of remote holographic live performances in large spaces.

5.1.4 Haptic Codecs

In March 2016, the IEEE Standards Association approved the creation of the IEEE P1918.1 standards working group. The core activities of the working group can be classified into three distinct areas: definitions and use cases, haptic codecs, and a reference architectural framework [17]. Among them, the work in IEEE P1918.1.1 (Haptic Codecs for the Tactile Internet) mainly focuses on the standardization of a corresponding tactile codec, but no codec standard has been formally published at present.

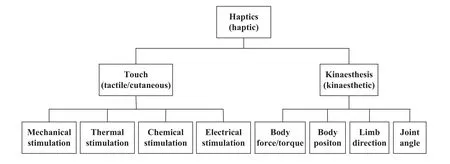

In terms of haptic codecs, the standard ISO 9241-910 [52] considers that haptics consist of touch and kinaesthesis, as shown in Figure 6. Touch includes such diverse stimuli as mechanical, thermal, chemical, and electrical stimulation of the skin. Specific nerves and sensing organs in the skin respond to these stimuli with different spatial and temporal resolutions. Kinaesthesis refers to the position/direction of various parts of the body and the external force/torque applied to them. Therefore, force/torque, position, direction, and angle belong to the category of kinaesthesis.

Figure 6. The components of haptics [52].

IEEE P1918.1.1 considers two compression methods for tactile information: waveform-based representation and compression of tactile signals, and feature extraction for parametric representations and classification [58]. There are also two compression methods for kinaesthetic information: kinaesthetic data reduction based on Weber’s Law, and integration of kinaesthetic data reduction with stability-ensuring control schemes [58]. Currently, IEEE P1918.1.1 is considering Weber’s Law (perceptual deadband based) as the coding standard for kinaesthetic information. However, for tactile information, IEEE P1918.1.1 has no definite coding standard yet.
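The idea behind perceptual deadband coding based on Weber’s Law can be illustrated with a minimal sketch: a new kinaesthetic sample is transmitted only when it differs from the last transmitted value by more than a fixed fraction of that value, and the receiver holds the last received value in between. This is a simplified illustration, not the IEEE P1918.1.1 codec; the deadband parameter `k = 0.1` and the function names are assumptions made for the example.

```python
def deadband_reduce(samples, k=0.1):
    """Return the (index, value) pairs that would be transmitted.

    A sample is sent only if |x - last_sent| > k * |last_sent|,
    i.e. the change exceeds the assumed just-noticeable difference.
    """
    if not samples:
        return []
    sent = [(0, samples[0])]          # the first sample is always sent
    last = samples[0]
    for i, x in enumerate(samples[1:], start=1):
        if abs(x - last) > k * abs(last):
            sent.append((i, x))
            last = x
    return sent


def reconstruct(sent, n):
    """Receiver side: zero-order hold of the last received value."""
    if not sent:
        return []
    out, j, current = [], 0, sent[0][1]
    for i in range(n):
        if j < len(sent) and sent[j][0] == i:
            current = sent[j][1]
            j += 1
        out.append(current)
    return out


# Hypothetical force samples in newtons.
force = [1.0, 1.05, 1.08, 1.2, 1.25, 1.4, 1.41]
packets = deadband_reduce(force, k=0.1)
held = reconstruct(packets, len(force))

if __name__ == "__main__":
    print(packets)  # only 3 of the 7 samples are transmitted
    print(held)
```

In this toy trace the packet rate drops from seven samples to three while every reconstructed value stays within the assumed 10% perceptual threshold of the original.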

5.2 Interaction and Perception Technologies

Low-latency interaction and perception are critical to immersive experience and real-time interaction. For example, to avoid motion sickness caused by HMDs, the motion-to-photon (MTP) latency is required to be less than 20 ms [8] for strong interactive AR/VR applications.

For common immersive systems such as XR, the interactive modes are mainly divided into external device interaction and natural interaction. External device interaction generally refers to the interaction between application systems and physical devices other than terminals, which includes mobile phone interaction, remote control interaction, finger ring interaction, watch interaction, and wristband interaction. Natural interaction refers to interaction with the terminal through the user’s body and speech, which can be divided into voice interaction, gesture interaction, head-movement interaction, eye-movement interaction, and somatosensory interaction.

Traditional perception techniques are mainly based on the perception of optical images, locators, and sensors. With the rapid development of perception technology, future perception tends to integrate communication with perception, and can be divided into human-based perception, environment-based perception, network-based perception, and consciousness-based perception. Human-based perception techniques include face recognition, human feature tracking, and so on. Environment-based perception uses big data and AI technology to get data from Internet of Things (IoT) devices, and combines the location information provided by lidar, base stations, and satellite positioning to get the real-time state of the surrounding environment. Network-based perception obtains information about terminals and devices through changes in network state and QoS. Consciousness-based perception mainly obtains information and variables based on changes in the object’s ideology, and its representative technologies are semantic communication and brain-computer interfaces.

5.3 Distributed Media Processing

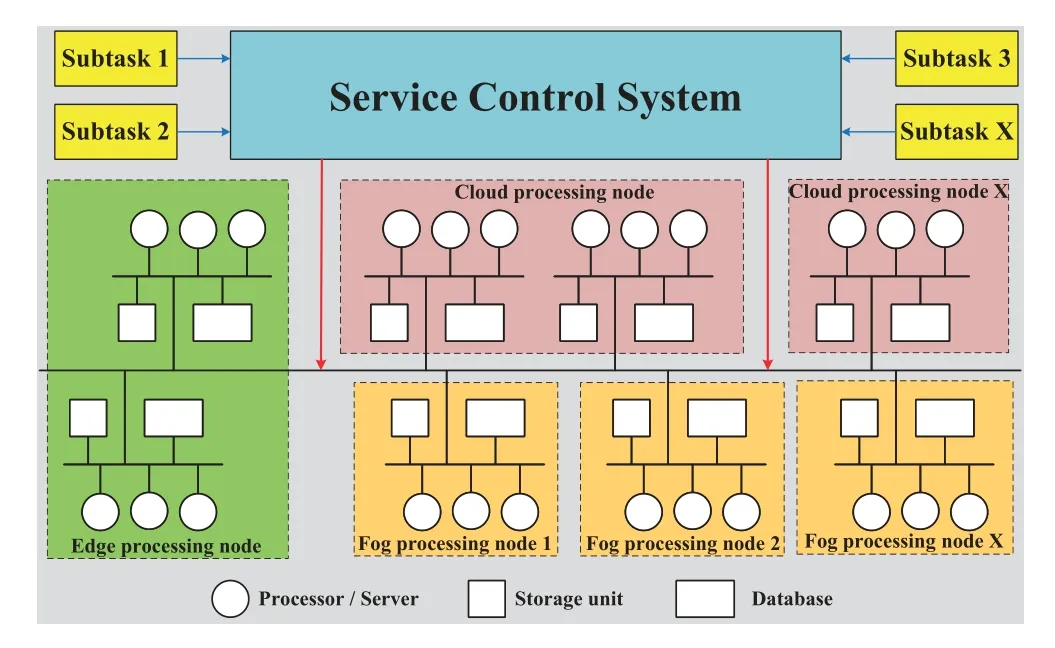

Distributed media processing is the technology that connects multiple servers with different locations or processing functions through networks and completes media data processing tasks under the unified management and coordination of the service control system, as shown in Figure 7.

Figure 7. Distributed media processing.

Combining AI technology with cloud computing, edge computing, fog computing, and the computing resources of terminal devices, distributed media processing can implement the following functions.

- Depending on service requirements, compression and encoding can be accomplished collaboratively by selecting nearby or idle cloud processing nodes and fog processing nodes distributed across network locations.

- Depending on the type and attributes of objects, for online modeling that requires real-time processing of large media data, a series of cloud processing nodes with corresponding computing power can be selected. For quick modeling, generic models can be invoked from a database close to the service point, and modeling can be completed at the edge processing nodes.

- According to service policies, the media data to be integrated and synchronized is handled by multiple processing nodes with corresponding functions.

- Video buffering, pre-rendering, and decoding can be accomplished by terminal devices together with the rendering servers and media decoders of edge nodes.

- Shared files and generic models can be stored in the edge nodes and databases near the user side.
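The node-selection behavior described in the list above can be sketched as a simple filter-and-rank step. This is only an illustration under stated assumptions: the `Node` fields, the `select_node` function, the capacity units, and the latency thresholds are all hypothetical and are not taken from any service control system described in the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    name: str
    tier: str               # "cloud", "edge", "fog", or "terminal"
    latency_ms: float       # network latency from the service point
    free_compute: float     # spare capacity, arbitrary units
    functions: frozenset    # media functions the node offers


def select_node(nodes, function, required_compute, max_latency_ms):
    """Pick the closest node that offers the function and has enough capacity.

    Ties on latency are broken in favor of the node with more spare compute.
    Returns None when no node qualifies.
    """
    candidates = [
        n for n in nodes
        if function in n.functions
        and n.free_compute >= required_compute
        and n.latency_ms <= max_latency_ms
    ]
    if not candidates:
        return None
    return min(candidates, key=lambda n: (n.latency_ms, -n.free_compute))


# Hypothetical deployment.
nodes = [
    Node("cloud-1", "cloud", 30.0, 500.0, frozenset({"modeling", "encoding"})),
    Node("edge-1", "edge", 5.0, 20.0, frozenset({"modeling", "rendering"})),
    Node("fog-1", "fog", 8.0, 10.0, frozenset({"encoding"})),
]

# Online modeling: heavy real-time workload, so only the cloud node has capacity.
online = select_node(nodes, "modeling", required_compute=100.0, max_latency_ms=50.0)
# Quick modeling: light workload, so the nearby edge node is preferred.
quick = select_node(nodes, "modeling", required_compute=5.0, max_latency_ms=10.0)

if __name__ == "__main__":
    print(online.name, quick.name)
```

A real controller would also account for service policies, node load over time, and data locality; the sketch only shows how capacity and proximity can jointly steer heavy modeling to the cloud and light modeling to the edge.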

5.4 Multi-Modal Streams Transmission

XR and tactile services enable multi-modal interactions, which may involve multiple types of media streams such as video, audio, haptic streams, and other sensor data for a more immersive experience. Multi-modal data [3, 5] is defined to describe the input data from different kinds of devices/sensors or the output data to different kinds of destinations that are required for the same task or application.

For a typical multi-modal communication service, there can be different modalities affecting the user experience. These modalities include video and audio media, information perceived by sensors about the environment (e.g., brightness, temperature, humidity, etc.), and haptic data. Furthermore, the multiple modalities can be transmitted at the same time to multiple application servers for further processing in a coordinated manner, in terms of QoS coordination, traffic synchronization, power saving, etc.

3GPP TR 22.847 [5] gives an overview of a multi-modal interactive system. Multi-modal outputs are generated based on the inputs from multiple sources. A modality is a type or representation of information in the multi-modal interactive system. Multi-modal interaction is the process during which information of multiple modalities is exchanged. Modal types consist of motion, sentiment, gesture, etc. Modal representations consist of video, audio, haptics (vibrations or other movements which provide haptic or tactile feelings to a person), etc.

To support multi-modal communication services, a transmission architecture for visual, audible, and haptic signals needs to be constructed [41]. Traditional multi-modal transmission schemes design delivery mechanisms for visual, audio, and haptic streams separately and do not consider the potential correlation among modalities during transmission. As a result, they cannot perform well for multi-modal streams.

An architecture for a cross-modal transmission strategy is illustrated in [42], which contains a simple and efficient delivery mechanism as well as a suitable and effective signal restoration procedure. This is the first work to solve the transmission issue in multi-modal applications by using the correlation among modalities and considering schemes from both stream delivery and received signal processing.

The architecture mainly consists of the human-system interface as the master terminal, the teleoperator as the slave terminal, and the wireless transmission network between them. Specifically, the master terminal (receiver) is composed of a haptic control device for position-orientation input and force-feedback output as well as a video display. The slave terminal (sender) contains haptic perception sensors installed in a mechanical arm as well as a video camera. Moreover, edge computing is used to fulfill the diverse requirements of multi-modal streams on network resources and on communication quality indicators. The edge nodes near the terminals undertake caching and computing tasks during transmission.

VI.KEY ISSUES

The existing IMT-2020 network already supports most immersive services, including VR and AR applications. Data Channel technology enables enhanced IMS networks to support not only VoIP voice and video streams, but also AR communication streams. The technical report of the ITU-T SG13 Focus Group NET-2030 [32] indicates that holographic type communication (HTC) is the typical service for the IMT-2030 future network. In addition, the standards working group IEEE P1918.1 provides a reference framework for the Tactile Internet and related use cases [59]. However, immersive services with high-fidelity immersive experience and real-time interaction pose challenges to network architecture, device performance and mobility, and service key technologies.

6.1 Network Architecture

The existing network architecture cannot satisfy the requirements of HTC and haptic services.

Firstly, HTC requires data transmission rates from Gbps to Tbps, depending on the size of the projected objects [31]. According to the key technical capabilities of the IMT-2020 network given by ITU, the peak rate of enhanced Mobile Broadband (eMBB) is only 20 Gbps, and the user experienced rate is only 0.1 Gbps to 1 Gbps.

Secondly, HTC requires an E2E latency of sub-milliseconds. In theory, the transmission latency of ultra-Reliable Low-Latency Communications (uRLLC) can be as low as 1 ms, but this is almost impossible in long-distance communications due to the physical limitation of the speed of light.

Thirdly, HTC combined with haptics, such as holographic remote surgery, requires lower latency and higher reliability. Transport protocols used by existing networks, such as TCP and UDP, trade off low latency against high reliability and cannot satisfy both.
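The light-speed limitation mentioned above can be quantified with a short calculation of one-way propagation delay. The sketch assumes signal propagation in optical fiber with a refractive index of about 1.47, a commonly used approximation that is not taken from the text, and it ignores queuing, processing, and radio access delays, so real latencies are higher.

```python
C_VACUUM_KM_PER_S = 299_792.458   # speed of light in vacuum
FIBER_REFRACTIVE_INDEX = 1.47     # assumed typical value for silica fiber


def one_way_propagation_ms(distance_km, refractive_index=FIBER_REFRACTIVE_INDEX):
    """One-way propagation delay in milliseconds over the given distance."""
    speed_km_per_s = C_VACUUM_KM_PER_S / refractive_index
    return distance_km / speed_km_per_s * 1000.0


def max_distance_km(latency_budget_ms, refractive_index=FIBER_REFRACTIVE_INDEX):
    """Longest one-way distance reachable within the latency budget."""
    speed_km_per_s = C_VACUUM_KM_PER_S / refractive_index
    return speed_km_per_s * latency_budget_ms / 1000.0


if __name__ == "__main__":
    print(f"1 ms budget   -> {max_distance_km(1.0):.0f} km one way")
    print(f"0.5 ms budget -> {max_distance_km(0.5):.0f} km one way")
    print(f"1000 km link  -> {one_way_propagation_ms(1000):.2f} ms one way")
```

Under these assumptions a 1 ms one-way budget covers only about 200 km of fiber, and a 1000 km link already costs close to 5 ms before any processing, which is why sub-millisecond E2E latency cannot be met over long distances.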

Therefore, an enhanced, future-oriented immersive communication network architecture is required to satisfy the requirements of ultra-high bandwidth, ultra-low latency, and ultra-high reliability for interactive immersive services.

6.2 Device Performance and Mobility

The terminal devices of immersive XR services are typically represented by integrated (all-in-one) VR headsets and AR glasses, which mainly implement functions such as control signal acquisition, video decoding, rendering and reconstruction, network access, user interface provision, and user authentication. VR services build a fully virtual environment with HMDs, while AR and MR services use high-transparency optical glasses or holographic optical glasses to implement virtual overlay. With the development of immersive services toward higher definition, the requirements on resolution, frame rate, degrees of freedom, latency, interactivity, tracking and perception, wearing comfort, vertigo, and other performance indicators of XR devices are increasing. At the same time, more and more mobile service scenarios require lightweight and mobile XR devices.

For holographic services, in theory, holographic display technology can implement not only 360-degree 3D projection, but also interaction between the projected images and users. Ideal holograms, also known as naked-eye holograms, require no wearables. However, current holographic display technology mostly focuses on the optical processing of image rendering and requires the help of projection media such as screens. There is still a considerable gap to the naked-eye hologram. Holographic acquisition and reconstruction devices are usually complex and fixed because of the strict optical environment they need. Moreover, the large amount of collected, transmitted, and reconstructed data and the high computational power needed for processing, rendering, and reconstruction place heavy burdens on devices.

The problem of insufficient terminal device performance can be addressed by cloud rendering in the future. With the help of cloud computing, edge computing, and AI technology, cloud rendering can further reduce costs and on-device computing dependence, and promote lightweight and mobile terminal devices.

6.3 Service Key Technologies

Interactive immersive services involve a variety of application scenarios and require intelligent media distribution, multi-stream collaborative control, digital modeling, and other service-based capabilities to support the strong interaction and deep integration of the digital and physical worlds. The implementation of these service capabilities corresponds to a series of related technologies, such as efficient compression and codec, interaction and perception, and distributed media processing. As more and more new applications and service scenarios emerge, more potential service key technologies will arise. In addition, there are still gaps or technical bottlenecks in the research on some known key technologies.

For example, in terms of compression and codec technologies, there is no formal standard aimed at a true hologram codec. Meanwhile, IEEE P1918.1.1 has done a lot of work on tactile codecs [17, 58, 59], but it has no definite standard yet. For multi-modal services, due to the essential differences among the integrated visual, audio, and haptic signals, the technical challenge is to guarantee the quality of multi-modal services while delivering and processing the collaborative modality signals [41, 42].

Therefore, exploring potential key service technologies, investigating existing key technologies in depth, and promoting the international standardization process in parallel are conducive to improving the whole service system.

VII.CONCLUSION

Interactive immersive services are typical representative services of the future network and the Metaverse. In this article, we describe the definition, service scenarios, framework, service requirements, and key technologies of interactive immersive services. Corresponding to the framework shown in Figure 2, the general view of interactive immersive services is illustrated in Figure 8.

Figure 8. General view of interactive immersive services.

This article also analyzes and discusses the existing key issues of network architecture, device performance, and key technologies in current service development, and gives directions for solving them. Future work will continue with in-depth study of network architecture design, service requirement refinement, and the exploration of potential technologies.
