IoV and Blockchain-Enabled Driving Guidance Strategy in Complex Traffic Environment

Yuchuan Fu, Changle Li*, Tom H. Luan, Yao Zhang

1 State Key Laboratory of Integrated Services Networks, Xidian University, Xi’an 710071, China

2 Research Institute of Smart Transportation, Xidian University, Xi’an 710071, China

3 Northwestern Polytechnical University, Xi’an 710072, China

Abstract: Diversified traffic participants and complex traffic environments (e.g., with roadblocks or road damage) challenge the decision-making accuracy of a single connected and autonomous vehicle (CAV) because of its limited sensing and computing capabilities. Using the Internet of Vehicles (IoV) to share driving rules between CAVs can overcome the limitations of a single CAV, but may also cause privacy and safety issues. To tackle this problem, this paper proposes to combine IoV and blockchain technologies to form an efficient and accurate autonomous guidance strategy. Specifically, we first use reinforcement learning for driving decision learning and give the corresponding driving rule extraction method. Then, an architecture combining IoV and blockchain is designed to ensure secure driving rule sharing. Finally, the shared rules form an effective autonomous driving guidance strategy through driving rules selection and action selection. Extensive simulations show that the proposed strategy performs well in complex traffic environments, mainly in terms of accuracy, safety, and robustness.

Keywords: autonomous driving guidance; blockchain; communication range; Internet of Vehicles; reinforcement learning

I. INTRODUCTION

At present, machine learning performs well in many aspects of connected and autonomous vehicles (CAVs), such as cooperative adaptive cruise control (CACC) and collision avoidance [1–5]. Although CAVs can complete a variety of driving tasks, navigation in complex traffic environments remains difficult and challenges the accuracy and adaptability of their driving decisions [6]. On one hand, the traffic scenarios encountered by a single CAV are limited. On the other hand, current advanced navigation systems can plan the shortest route and identify traffic congestion, but they cannot track all state changes on the road, such as temporary roadblocks or road damage. Therefore, achieving fully autonomous driving requires more than a collection of isolated capabilities of each vehicle.

Thanks to the development of Internet of Things (IoT) technology, the Internet of Vehicles (IoV), an important application in the field of intelligent transportation, enables vehicles not only to talk to each other but also to interact with the driving environment [7–9]. The driving experience and rules learned by vehicles can be shared, which addresses the capability limitations of individual CAVs and helps them make appropriate autonomous decisions in complex traffic scenarios [10]. In addition, autonomous driving strategies can be learned through reinforcement learning (RL).

RL is a powerful machine learning tool for intelligent decision making and control in autonomous driving [11]. Many studies apply RL to learn autonomous driving strategies. Wei et al. [3] and Desjardins et al. [12] propose to use RL for CACC systems. Yu et al. [13] utilize a coordination map and multi-agent reinforcement learning (MARL) for autonomous driving. Xiong et al. [14] apply RL to learn autonomous driving strategies and collision avoidance.

Combining RL and IoV is a promising scheme for learning and sharing driving rules. However, sharing learned driving rules may raise user privacy issues, and malicious nodes may share false information. Therefore, how to learn and extract driving rules, and how to share them securely, is still worth studying. Recently, blockchain technology, with its characteristics of transparency and immutability, has been widely used in the IoT as an effective way to securely store and share data [15–17]. This technology can therefore also be applied to ensure user privacy and data security during the sharing of driving rules.

In light of the existing works, an IoV and blockchain-enabled autonomous driving guidance strategy is proposed to navigate CAVs in complex traffic environments. Specifically, an RL-based algorithm is adopted for learning autonomous driving strategies. Moreover, a driving rule extraction method is developed to obtain the learned driving experience. To ensure the security and reliability of the driving rule sharing process, a blockchain-enabled architecture is proposed. The major contributions of this paper are summarized as follows:

· Driving Rule Sharing Architecture: A blockchain and IoV enabled architecture is proposed to overcome the limited traffic scenarios encountered by a single CAV by safely sharing driving rules between CAVs, which can guide CAVs to make accurate decisions in complex traffic environments.

· RL-based Algorithm: The autonomous driving strategy is learned by an RL-based algorithm, which fully considers factors such as driving safety, traffic participants, and roadblocks.

· Performance Validation: Simulation results demonstrate the effectiveness of the proposal in terms of decision-making accuracy, safety, and robustness.

The remainder of this paper is organized as follows. In Section II, we review related work on RL for autonomous driving and blockchain. Section III gives an overview of the proposal, including scenarios and system components. Section IV discusses the driving rules extraction and sharing process. Section V evaluates the performance of the proposed strategy and presents the simulation results. Finally, Section VI concludes this paper and discusses future work.

II. RELATED WORK

In this section, the existing works on RL-based autonomous driving strategies and blockchain technology for IoV are introduced.

2.1 RL-Based Autonomous Driving

Currently, RL-based algorithms have been widely studied for autonomous driving strategy learning. For urban traffic environments, a modular decision-making algorithm based on RL and a model checker for autonomously navigating intersections is proposed in [18]. The authors in [19] apply deep Q-learning to learn how a vehicle can effectively cross an intersection based on the distance and speed of other vehicles. The success rate of behavior recognition is satisfactory, but the definition of the collision area in that work does not reflect the actual collision situation of the vehicle. Wei et al. [3] propose a supervised reinforcement learning (SRL)-based algorithm for CACC systems to achieve longitudinal vehicle dynamics control. Similarly, Desjardins et al. [12] show how to use RL to develop a vehicle controller for a CACC system. This method can effectively improve the performance of CACC, but the learning method and simulation environment can be improved. In [20], the authors use RL to design a data-driven CACC algorithm for the exclusive bus lane (XBL), which does not rely on accurate knowledge of bus dynamics. Hoel et al. [6] combine planning and deep reinforcement learning to propose a general decision-making framework for autonomous driving, which performs well in two highway scenarios. Xiong et al. [14] use the deep deterministic policy gradient (DDPG) algorithm combined with safety control to achieve autonomous driving and collision avoidance. More recently, Yu et al. treat the collaborative driving of autonomous vehicles as a multi-agent scenario and combine MARL with a coordination map to achieve coordinated driving between vehicles in a highway scenario [13]. Although these methods show satisfactory results and effective strategies, they mainly rely on the capabilities of a single vehicle, and whether they can cope with complex scenarios remains to be verified. In addition, repeating the same calculations on every vehicle wastes resources, and these methods do not take full advantage of vehicle interaction.

2.2 Blockchain for IoV

With the application of IoT technology in vehicular networks, data sharing between vehicles and the surrounding environment (e.g., other vehicles and infrastructure) becomes important and common, which also brings user privacy and data security issues that cannot be ignored. Guo et al. [17] propose a trust access authentication scheme that applies an edge-computing-enabled blockchain network to promote vehicle data sharing. Kang et al. [15] design a data sharing scheme based on blockchain technology and vehicle reputation to ensure high-quality data sharing in the IoV. This scheme has a large advantage over traditional reputation schemes in ensuring the security of data sharing. Both of the above schemes effectively prevent unauthorized data sharing. More recently, the authors of [21] use vehicular blockchain technology to improve the accuracy of vehicle cooperative positioning (VCP) by improving data quality. However, the application scenarios considered by the authors are limited. To address the difficulty vehicles have in evaluating the credibility of received messages in non-trusted traffic environments, Yang et al. propose a decentralized trust management system based on blockchain technology and a Bayesian inference model [22]. In [23], a vehicular blockchain-based collective learning framework is proposed for autonomous driving. This framework overcomes the shortcomings of single-vehicle intelligence and centralized methods. However, the paper does not give a detailed introduction to the application scenarios and driving models. To solve the security problem in vehicular communication systems (VCSs), the authors in [24] propose a heterogeneous framework for dynamic security key management based on blockchain, which performs better than a structure with a central manager. Cheng et al. propose a semi-centralized traffic signal adjustment mode that uses blockchain to adjust traffic signal lights according to the dynamic characteristics of the vehicle [25]. It can be seen that blockchain technology is a promising method for ensuring the security of data sharing in IoV.

These efforts have led to the rapid development of autonomous driving. However, CAVs still face challenges in autonomous navigation in complex traffic environments. Specifically, in most current methods, each CAV independently learns an autonomous driving strategy and then makes decisions. Because the scenarios encountered by a single CAV are limited and the navigation system information is not updated in a timely manner, erroneous decisions are prone to occur in complex traffic environments. This paper therefore takes the research one step further by combining IoV, blockchain, and RL. The learned driving rules can be safely shared, thereby guiding CAVs to make more accurate decisions.

III.SYSTEM DESCRIPTION

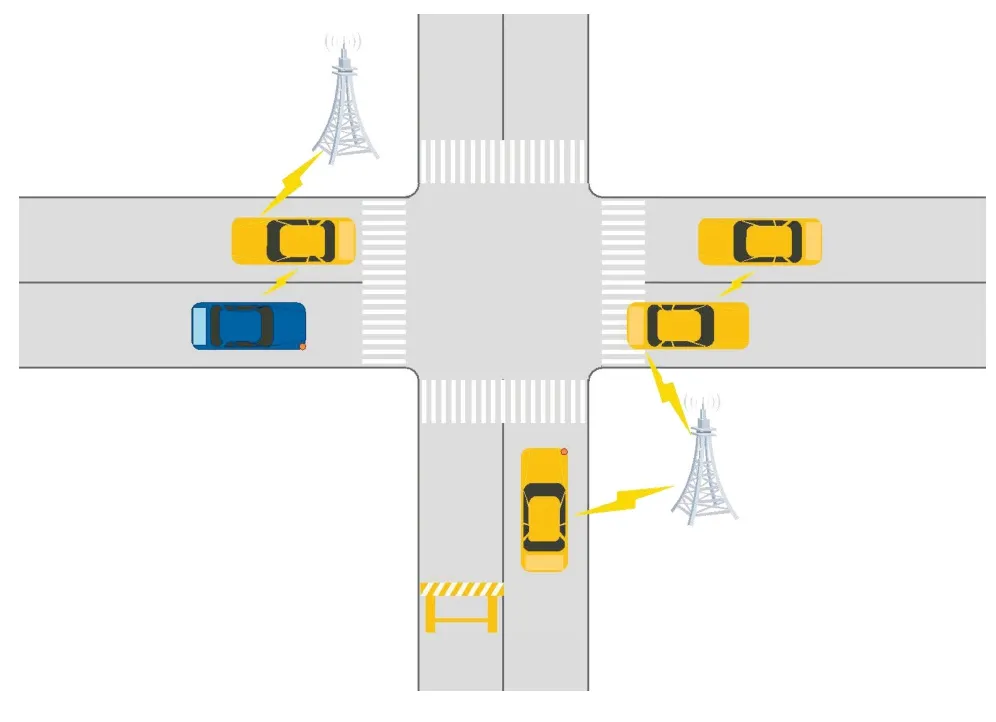

In this section,the traffic scenarios considered in the paper is firstly described,as shown in Figure 1.Then,we carefully analyze important influencing factors and model the scenario as a RL problem.

Figure 1.Illustration of a complex driving scenario considered in this paper,including complex interactions between vehicles,intersections,and roadblocks.

3.1 Scenario Description

As shown in Figure 1, the road layout in the scenario includes two-way lanes, intersections, and roadblocks (long-term or temporary). Traffic participants are mainly CAVs, that is, the object vehicle (OV) and other CAVs. The OV is shown in blue, and the other CAVs are shown in yellow. The set $V = \{v_1, v_2, \ldots, v_n\}$ represents the other CAVs in the scenario, where $n$ is their total number. The set $RB = \{rb_1, rb_2, \ldots, rb_m\}$ represents the roadblocks in the scenario, where $m$ is their total number. In addition, all CAVs travel along the road from the origin to the destination by autonomous driving. The red dot on a CAV indicates its intention to steer.

When a CAV navigates in this scenario, on one hand, objects around the CAV, such as other CAVs and road obstacles, pose a threat to it and thereby compromise the safety of autonomous driving. For example, if the leading vehicle brakes urgently and the following vehicle fails to take effective measures, a traffic accident may occur. Therefore, to ensure driving safety, the vehicle should detect potential dangers in advance and brake appropriately. However, because of the sensor errors and limited computing performance of a CAV, there are varying degrees of uncertainty in sensing and computing, which poses challenges to autonomous driving decisions.

On the other hand, changes in road conditions can affect the effectiveness of the driving behavior of the CAV. For example, suppose a roadblock closes a road. If the CAV relies only on its on-board sensing system to detect the obstacle and then brakes, it may be unable to pass through the road for a long time. Therefore, autonomous driving in complex traffic environments requires appropriate strategies for different situations.

3.2 Modeling Scenarios

The traffic scenario can be modeled as a Markov decision process (MDP), and an RL-based method can be used to learn the autonomous driving strategy of CAVs. The state space, action space, and reward are defined next.

3.2.1 State Space

The state of a traffic participant (the OV or another CAV) at time $t$ consists of three parts and can be expressed as:

$$s_t(t) = \{p_t(t), v_t(t), h_t(t)\},$$

where $p_t(t) = \{x_t(t), y_t(t)\}$ is the position of the CAV, $v_t(t)$ is its velocity, and $h_t(t)$ is its heading. Because a roadblock generally does not move, its state at time $t$ mainly includes position and size:

$$s_r(t) = \{p_r(t), l_r(t), w_r(t)\},$$

where $p_r(t) = \{x_r(t), y_r(t)\}$ is the position of the roadblock, and $l_r(t)$ and $w_r(t)$ are its length and width, respectively. Therefore, the state space at time $t$ contains the state of the OV $s_{OV}(t)$, the states of the other CAVs $s_{v_1}(t), \ldots, s_{v_n}(t)$, and the states of the roadblocks $s_{rb_1}(t), \ldots, s_{rb_m}(t)$, and can be expressed as:

$$s(t) = \{s_{OV}(t), s_{v_1}(t), \ldots, s_{v_n}(t), s_{rb_1}(t), \ldots, s_{rb_m}(t)\}.$$

3.2.2 Action Space

Generally speaking, a CAV completes autonomous driving by controlling acceleration and steering. Therefore, the action space $a(t)$ at time $t$ consists of these two parts and can be expressed as:

$$a(t) = \{a_a(t), a_s(t)\},$$

where $a_a(t)$ and $a_s(t)$ are the acceleration and steering at time $t$, respectively. Overly rapid speed changes cause discomfort to passengers. Therefore, in general, the absolute value of vehicle acceleration should not exceed $4\ \mathrm{m/s^2}$ [26]. In consideration of vehicle size and driving safety, the steering angle is selected within the range $[-40^\circ, 40^\circ]$.

3.2.3 Reward

The design of the reward function affects how effectively a CAV learns an autonomous driving strategy. In this paper, a simple reward function is designed, which simplifies the learning process while ensuring driving safety and compliance with traffic rules.

· Driving safety: A fundamental goal of autonomous driving is to ensure driving safety. Therefore, traffic accidents during driving need to be punished.

· Driving direction: The vehicle needs to drive in the direction indicated by the road; otherwise, it is punished. Here, $\theta(t) > 0$ is related to the heading $h(t)$ of the CAV and denotes the angle between the direction in which the vehicle is traveling and the direction of the road. The parameter $w_2 > 0$ is the weight.

· Road boundary: CAVs should stay in the lane and are penalized when they drive out of the road boundary.

Therefore, the overall reward function is the linear sum of the above three parts: $r(t) = r_1(t) + r_2(t) + r_3(t)$. The parameters $w_1$, $w_2$, and $w_3$ are used to normalize the three rewards, respectively.
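The three-part reward can be illustrated with a short sketch. The exact expressions for the individual terms are not available in this text, so the form below is an assumption chosen only to match the verbal description: a fixed penalty of size `w1` for a collision, a penalty proportional to the heading deviation with weight `w2`, and a fixed penalty of size `w3` for leaving the road. The function name `reward` and its arguments are hypothetical.

```python
def reward(collided: bool, heading_error: float, off_road: bool,
           w1: float = 1.0, w2: float = 1.0, w3: float = 1.0) -> float:
    """Hypothetical reward r(t) = r1(t) + r2(t) + r3(t).

    collided      -- True if a traffic accident occurred at this step
    heading_error -- angle theta(t) between vehicle heading and road direction
    off_road      -- True if the vehicle left the road boundary
    """
    r1 = -w1 if collided else 0.0      # driving safety (assumed fixed penalty)
    r2 = -w2 * abs(heading_error)      # driving direction (assumed linear penalty)
    r3 = -w3 if off_road else 0.0      # road boundary (assumed fixed penalty)
    return r1 + r2 + r3
```

With this form, a step with no accident, no heading deviation, and no boundary violation yields zero reward, and every violation only lowers it, which is one simple way to realise the "punishment" wording used above.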

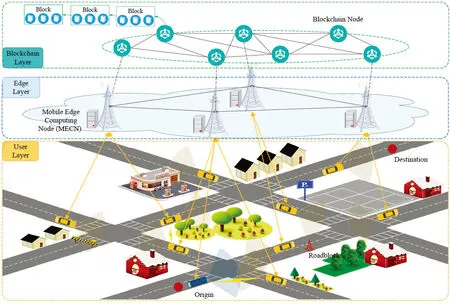

IV. DRIVING GUIDANCE STRATEGY

In this section, the IoV and blockchain-enabled driving guidance strategy is introduced. First, the RL-based individual driving rule learning process is described. Then, the architecture and process of blockchain-based driving rule sharing are described in detail. Finally, how our proposal guides autonomous driving is introduced. The proposed system architecture is shown in Figure 2.

Figure 2. System architecture for autonomous driving guidance.

4.1 Individual Driving Rule Learning

4.1.1 RL-Based Autonomous Driving

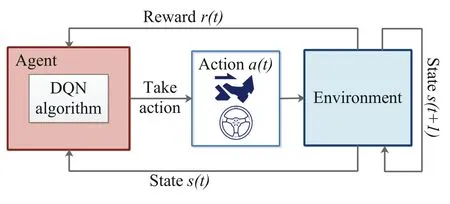

According to the modeled scenario, each CAV uses a deep Q-network (DQN), a typical RL algorithm, to learn autonomous driving strategies [27], as shown in Figure 3. DQN is a value-iteration-based algorithm that combines deep learning with reinforcement learning.

Figure 3. The proposed autonomous driving strategy using DQN.

DQN uses a main network to generate the current Q value and a separate target network to generate the target Q value. For both the main network and the target network, we use a four-layer fully-connected feedforward neural network, which provides a non-linear mapping while keeping complexity low. The underlying reason is that the state space consists mainly of non-image information such as position and velocity. To suit DQN, we discretize the action space. The acceleration $a_a(t)$ is divided into nine levels, represented by the set $\{-4, -3, \ldots, 3, 4\}\ \mathrm{m/s^2}$. The steering $a_s(t)$ is selected from the set $\{-40^\circ, -30^\circ, -20^\circ, \ldots, 30^\circ, 40^\circ\}$. As described above, the restricted action space takes into account passenger comfort and driving safety. During training, DQN is updated in the same way as Q-learning [11]:

$$Q(s,a) \leftarrow Q(s,a) + \alpha\left[r + \gamma \max_{a'} Q(s',a') - Q(s,a)\right],$$

where $\alpha$, $r$, and $\gamma$ are the learning rate, reward, and discount factor, respectively; $\max_{a'} Q(s',a')$ is the optimal future value of the state-action value function; and $Q(s,a)$ is the old value. The loss function is as follows:

$$L(\theta) = \mathbb{E}\left[\left(r + \gamma \max_{a'} Q(s',a';\theta^-) - Q(s,a;\theta)\right)^2\right],$$

where $\theta$ denotes the parameters of the main network, $\theta^-$ denotes the parameters of the target network, and $L(\theta)$ is the mean square error loss.
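The discretized action set and the Q-learning update can be sketched in a few lines of plain Python. This is a minimal sketch, not the authors' implementation: the function names are hypothetical, the joint action is assumed to be the Cartesian product of the acceleration and steering levels, and a list of next-state Q values stands in for the output of the target network. The default learning rate and discount factor follow the values reported in the simulation settings (0.005 and 0.9).

```python
from itertools import product

ACCELERATIONS = list(range(-4, 5))          # m/s^2: -4, -3, ..., 4 (nine levels)
STEERING_ANGLES = list(range(-40, 41, 10))  # degrees: -40, -30, ..., 40 (nine levels)
# Assumption: each discrete action pairs one acceleration with one steering angle.
ACTIONS = list(product(ACCELERATIONS, STEERING_ANGLES))


def td_target(r, next_q_values, gamma=0.9, terminal=False):
    """Target value r + gamma * max_a' Q(s', a'); just r at a terminal state."""
    return r if terminal else r + gamma * max(next_q_values)


def q_update(q_sa, r, next_q_values, alpha=0.005, gamma=0.9, terminal=False):
    """One Q-learning step: Q <- Q + alpha * (target - Q)."""
    return q_sa + alpha * (td_target(r, next_q_values, gamma, terminal) - q_sa)


def squared_td_error(q_sa, r, next_q_values, gamma=0.9, terminal=False):
    """Per-sample squared error; its batch mean is the mean square error loss."""
    return (td_target(r, next_q_values, gamma, terminal) - q_sa) ** 2
```

In a DQN, `q_sa` would come from the main network and `next_q_values` from the target network, and the mean of `squared_td_error` over a replay batch would be minimised by gradient descent rather than applying `q_update` to a table.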

4.1.2 Driving Rule Extraction

Ideally, once a CAV has determined its destination, the task can be performed by autonomous driving. In other words, the CAV’s autonomous driving system can plan driving routes and respond to events during driving, such as performing appropriate turns. It should also be able to recognize various traffic signs and choose the appropriate action. However, in a complex traffic environment, the performance of a single CAV is challenged. First, a single CAV encounters only limited traffic scenarios. In addition, the navigation information may not be updated in real time. Therefore, achieving fully autonomous driving requires sharing driving rules between CAVs to achieve cooperation.

The process of machine learning is opaque, and it is difficult to obtain the driving rules directly. However, a trained reinforcement learning strategy can choose the appropriate action for a given state, which allows driving rules to be extracted in the form:

If the state is $S^*$,

Then execute action $A^*$.

However, such perception-based driving rules may not work in some cases. For example, different locations may require different actions even if the states are similar. To quickly guide a CAV to make accurate decisions in complex environments, map information, destinations, and the state of the environment are combined. The map information contains the exact location of roads and landmarks and can be quickly accessed by each CAV. When a CAV finishes driving on a road section and has encountered a new situation, the corresponding driving rules can be extracted for reference by other CAVs. The driving rule can be extracted as follows:

If the Location $L^*$ is reached,

And the Destination is $D^*$,

And the state is $S^*$,

Then execute action $A^*$,

where Location $L^*$ is a special location, such as the intersection preceding the segment. Combining the above information reduces the perception and judgment effort required for complex environments and tasks.
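The rule template above maps naturally onto a small record type. The following is a minimal sketch under stated assumptions: the class name `DrivingRule`, the field types, and the helper `extract_rule` are hypothetical, and `policy` stands for any callable that returns the action a trained model would choose for a state (for a DQN, the action with the highest Q value).

```python
from dataclasses import dataclass
from typing import Callable, Sequence, Tuple


@dataclass(frozen=True)
class DrivingRule:
    location: str                 # L*: special location, e.g. an intersection id
    destination: str              # D*: driving destination
    state: Tuple[float, ...]      # S*: observed environment state
    action: Tuple[float, float]   # A*: (acceleration in m/s^2, steering in degrees)
    timestamp: float              # time at which the rule was extracted


def extract_rule(policy: Callable[[Sequence[float]], Tuple[float, float]],
                 location: str, destination: str,
                 state: Sequence[float], timestamp: float) -> DrivingRule:
    """Query the trained policy once and freeze the result as an if-then rule."""
    return DrivingRule(location, destination, tuple(state), policy(state), timestamp)
```

For example, a CAV that learned to brake and turn at a blocked segment could call `extract_rule` with the preceding intersection as `location`, producing a rule that another CAV can match by location and destination before it perceives the roadblock itself.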

4.2 Blockchain-Based Driving Rule Sharing

The driving rules learned by each CAV can be shared with other CAVs to form a high-performance autonomous driving strategy. However, whether the information received in such an environment can be trusted is a major issue, because CAVs may share incorrect information for various reasons. Therefore, an architecture combining IoV and blockchain technology is proposed for reliable driving rule sharing.

4.2.1 An Overview of the Proposed Architecture

The proposed architecture is a three-layer structure, including a user layer, an edge layer, and a blockchain layer, as depicted in Figure 2.

The user layer can also be considered a vehicular network layer. CAVs equipped with on-board units (OBUs) obtain services by communicating with mobile edge computing nodes (MECNs), that is, roadside units (RSUs). In this layer, each CAV uses its on-board sensing system to obtain data, performs individual driving rule learning, and uploads the extracted driving rules to the edge layer. In this process, user privacy and data security are important issues, and blockchain is adopted to deal with them. In addition, a CAV that requires autonomous driving guidance interacts with the edge layer and downloads existing driving rules. After selecting the driving rules, the CAV uses them to assist in driving decisions. The detailed driving guidance strategy is described in Subsection 4.3. In particular, it is assumed that the RL models trained by the CAVs have the same structure and the same initialization parameters, so that the rules can be conveniently used by other CAVs.

In the edge layer, each CAV communicates with the nearest MECN to obtain services, that is, data upload and download. MECNs have strong data storage and computing capabilities and can support the operation of the blockchain network. In addition, latency is reduced compared with uploading data to the cloud.

In the blockchain layer, the blockchain network running on MECNs provides distributed information storage and sharing services for authorized CAVs, which can greatly protect user privacy and data security. In addition, all authorized CAVs, especially CAVs with better performance and CAVs that have encountered new scenarios, serve as providers of driving rules. CAVs with poor performance and CAVs unfamiliar with road traffic scenarios are the requesters of driving rules.

The processes of sharing driving rules, selecting driving rules, and driving guidance are described in detail below.

4.2.2 Secure Driving Rule Sharing

In the previous subsection, autonomous driving strategies are learned using the DQN algorithm. Existing work has shown that this method can make accurate driving decisions in some scenarios [18, 28]. However, the sensing and computing capabilities of CAVs may differ, so the accuracy of their decision-making also differs. In addition, in complex scenarios, the performance of a single CAV may be poor because of unfamiliarity with the environment and the limited information obtained. Therefore, a blockchain-enabled driving rule sharing strategy is proposed, which encourages CAVs to use shared driving rules to improve their learning efficiency and guides them to make accurate decisions.

1) Vehicle authorization: Each true and legitimate CAV is authorized by the system and obtains its public and private keys for encryption. For example, $CAV_i$ obtains public key $PK_i$ and private key $SK_i$.

2) Upload of driving rules: Suppose $CAV_i$ has extracted the driving rule $ru_i$ and intends to share it with other CAVs. $CAV_i$ sends a data upload request containing its public key $PK_i$, the hash of the latest block $h(block_{t-1})$ (i.e., the next block to be generated is $block_t$), the driving rule $ru_i$, and a timestamp to the nearest $RSU_m$. The request message is signed with the private key $SK_i$ of $CAV_i$ to ensure that the information has not been tampered with. Therefore, the request message can be expressed as:

$$Request_i = PK_i \,\|\, h(block_{t-1}) \,\|\, ru_i \,\|\, timestamp \,\|\, Sig_{SK_i},$$

where $Sig_{SK_i}$ denotes the signature generated with $SK_i$.

After receiving the request, $RSU_m$ verifies its validity by checking the following: the request comes from an authorized CAV and is digitally signed; the request points to the end of the blockchain; and the driving rule in the request performs a reasonable action, that is, the acceleration and steering angle do not exceed the limits. Once the request is verified, $RSU_m$ sends a response message to $CAV_i$, which carries the public key of $RSU_m$ and is signed with the private key of $RSU_m$.

The symbol $\|$ separates the different fields in a message. Similarly, $CAV_i$ verifies the response after receiving it. If the response is correctly verified, the driving rule can be uploaded. $RSU_m$ then broadcasts the upload record, which is recorded in the blockchain after consensus. Conversely, messages that fail verification are discarded and not forwarded further.
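The upload request and the three checks performed by the RSU can be sketched as follows. This is a simplified illustration, not the scheme's actual cryptography: Python's standard library has no public-key signatures, so an HMAC over a shared secret stands in for the digital signature, and the `authorized` registry maps a vehicle identifier to that secret. All names (`build_upload_request`, `rsu_verify`, the field names) are hypothetical.

```python
import hashlib
import hmac
import json

MAX_ACCEL = 4.0    # m/s^2, acceleration limit from Section 3.2.2
MAX_STEER = 40.0   # degrees, steering limit from Section 3.2.2


def sign(secret: bytes, body: dict) -> str:
    """HMAC-SHA256 over the canonical JSON body (stand-in for a signature)."""
    payload = json.dumps(body, sort_keys=True).encode("utf-8")
    return hmac.new(secret, payload, hashlib.sha256).hexdigest()


def build_upload_request(vehicle_id, secret, prev_block_hash, rule, timestamp):
    """Assemble identity, hash of the latest block, rule, timestamp, signature."""
    body = {"vehicle_id": vehicle_id, "prev_hash": prev_block_hash,
            "rule": rule, "timestamp": timestamp}
    return {**body, "signature": sign(secret, body)}


def rsu_verify(request, authorized, chain_tip_hash):
    """Apply the three checks described above; return True if all pass."""
    body = {k: v for k, v in request.items() if k != "signature"}
    secret = authorized.get(body.get("vehicle_id"))
    if secret is None:                                   # not an authorized CAV
        return False
    if not hmac.compare_digest(sign(secret, body), request.get("signature", "")):
        return False                                     # missing or invalid signature
    if body.get("prev_hash") != chain_tip_hash:          # must point to chain end
        return False
    accel, steer = body["rule"]["action"]                # action must be within limits
    return abs(accel) <= MAX_ACCEL and abs(steer) <= MAX_STEER
```

A real deployment would replace `sign` with an asymmetric signature so that the RSU needs only the vehicle's public key; the order and content of the checks would stay the same.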

Each RSU collects driving rule upload records from CAVs within its communication range. Over a period of time, the primary RSU ($RSU_{leader}$) aggregates the uploaded records, packs them into a new timestamped block that contains the hash of the current block and the hash of the previous block, and broadcasts it to the other RSUs on the blockchain for review and verification. If the other authorized RSUs reach consensus on the block, it is added to the end of the blockchain.
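The way a block links to its predecessor through hashes can be sketched with the standard library. This is an illustrative sketch only: the block layout, the field names, and the use of SHA-256 over a JSON encoding are assumptions, and consensus among RSUs is omitted.

```python
import hashlib
import json


def block_hash(block: dict) -> str:
    """SHA-256 over the block contents (everything except its own hash)."""
    fields = {k: block[k] for k in ("index", "prev_hash", "timestamp", "records")}
    return hashlib.sha256(json.dumps(fields, sort_keys=True).encode("utf-8")).hexdigest()


def genesis_block() -> dict:
    """First block of the chain; it has no real predecessor."""
    block = {"index": 0, "prev_hash": "0" * 64, "timestamp": 0.0, "records": []}
    block["hash"] = block_hash(block)
    return block


def make_block(prev_block: dict, records, timestamp: float) -> dict:
    """Pack the collected upload records into a block linked to prev_block."""
    block = {"index": prev_block["index"] + 1, "prev_hash": prev_block["hash"],
             "timestamp": timestamp, "records": list(records)}
    block["hash"] = block_hash(block)
    return block


def chain_is_valid(chain) -> bool:
    """Check that every block points to its predecessor and matches its own hash."""
    for prev, cur in zip(chain, chain[1:]):
        if cur["prev_hash"] != prev["hash"] or cur["hash"] != block_hash(cur):
            return False
    return True
```

Because each block stores the hash of the previous one, altering a recorded driving rule changes that block's hash and breaks the link to every later block, which is what makes tampering detectable by the verifying RSUs.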

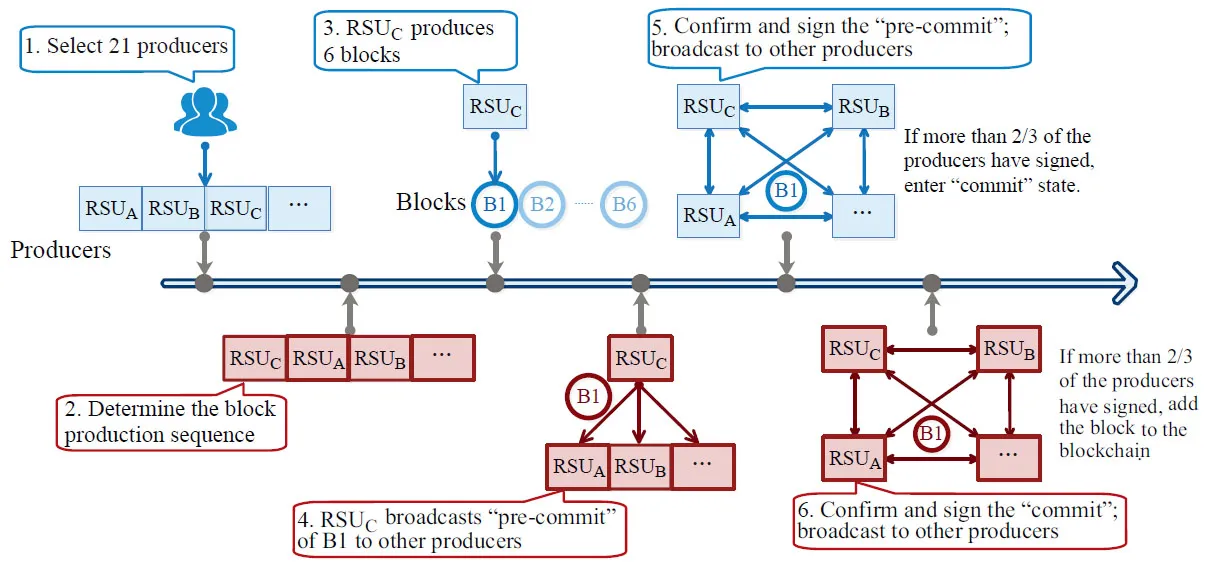

To improve the efficiency of the blockchain and save resources, the consensus algorithm is Byzantine Fault Tolerance-Delegated Proof of Stake (BFT-DPoS). This consensus algorithm takes advantage of Byzantine Fault Tolerance (BFT) and Delegated Proof of Stake (DPoS) to achieve a block generation interval of up to 500 ms [21, 29]. The specific algorithm steps are as follows:

· Select 21 producers from the authorized RSUs by voting;

· Determine the order of block production;

· Each producer produces 6 blocks consecutively at 0.5 s intervals;

· After a producer produces a new block, it broadcasts the block to the remaining 20 producers, and the block enters the “pre-commit” state;

· Producers confirm and sign the status, then broadcast it to the other producers. After more than 2/3 of the producers have signed and passed the block, it changes from “pre-commit” to “commit” status;

· Producers confirm and sign the “commit” status of the block and then broadcast it to the network. After more than 2/3 of the producers have signed the “commit” status, the block is added to the end of the blockchain.

Each block is broadcast immediately after production, as shown in Figure 4. In particular, while the block producer waits 0.5 s to produce the next block, it also receives the confirmation results of the previous block. In other words, the production of a new block and the confirmation of the previous block proceed simultaneously.

Figure 4. Algorithm process of BFT-DPoS.
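The numbers in the steps above imply a signature threshold and a round length that are easy to work out. The sketch below is only an arithmetic illustration under stated assumptions: the threshold is taken relative to all 21 producers, producers take turns in a fixed order, and the final state name `appended` as well as all function names are hypothetical.

```python
NUM_PRODUCERS = 21       # producers elected by voting
BLOCKS_PER_TURN = 6      # consecutive blocks per producer
BLOCK_INTERVAL_S = 0.5   # seconds between blocks


def quorum(n: int = NUM_PRODUCERS) -> int:
    """Smallest number of signatures strictly greater than 2/3 of n."""
    return (2 * n) // 3 + 1


def round_duration_s() -> float:
    """Time for every producer to finish one turn."""
    return NUM_PRODUCERS * BLOCKS_PER_TURN * BLOCK_INTERVAL_S


def producer_for_slot(slot: int, schedule: list) -> str:
    """Producer responsible for the given block slot under a fixed order."""
    return schedule[(slot // BLOCKS_PER_TURN) % len(schedule)]


def next_status(status: str, signatures: int, n: int = NUM_PRODUCERS) -> str:
    """Advance pre-commit -> commit -> appended once the quorum has signed."""
    if signatures < quorum(n):
        return status
    return {"pre-commit": "commit", "commit": "appended"}.get(status, status)
```

With 21 producers, more than 2/3 means at least 15 signatures, and one full round of 21 turns of 6 blocks at 0.5 s takes 63 s.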

3) Request and download of driving rules: Suppose $CAV_j$ needs driving rules shared by other CAVs for autonomous driving guidance. It first accesses the nearby $RSU_n$ ($m$ and $n$ may or may not be equal) to download the latest block in the blockchain and then performs driving rules selection. The specific driving rules selection process is introduced in Subsection 4.3. After $CAV_j$ selects the driving rules that it needs to download, it sends $RSU_n$ a download request that specifies $R_j$, the set of driving rules that $CAV_j$ chooses to download.

After receiving the request, $RSU_n$ first verifies that $CAV_j$ is authorized and then searches the blockchain for the requested data. If the data is not stored in $RSU_n$, the RSU storing the data sends it to $RSU_n$ for sharing; otherwise, the data can be shared directly by $RSU_n$ with $CAV_j$. Specifically, $RSU_n$ uses the public key of $CAV_j$ to encrypt the shared data and provides $CAV_j$ with a one-way access link. Once $CAV_j$ has visited the link and downloaded the data, the link becomes invalid. After downloading the driving rules, $CAV_j$ makes a payment for the shared data and generates a data download record to add to the blockchain, following a process similar to the upload of driving rules.

4.3 Driving Guidance Strategy

When using the driving rules shared by other CAVs for autonomous driving guidance, two issues need attention: driving rules selection and action selection.

4.3.1 Driving Rules Selection

We consider a CAV (such as the OV) that enters an unfamiliar environment where its autonomous driving model is not comprehensive enough. Rather than using its own model or retraining it, the CAV can use driving rules shared by other CAVs to learn suitable strategies. In addition, if a CAV (such as the OV) has insufficient performance to cope with complex traffic environments, it can take advantage of driving rules shared by other CAVs. When the CAV is about to reach the next road section, it can access the nearest RSU to request services and select the appropriate driving rules as a guide. As described above, driving rules uploaded by a CAV include information such as location, timestamp, and destination. The driving rule can be represented as:

$$ru_i = C_i \cup A_i,$$

where $C_i$ denotes the conditional attributes of $ru_i$, including location $L_i$, driving destination $D_i$, state $S_i$, and timestamp $T_i$, and $A_i$ is the action executed by $ru_i$. To choose the appropriate driving rules (and thus the data provider), data filtering is performed from two aspects: similarity and inconsistency.

1) Similarity: The CAV uses several indicators to evaluate its similarity with the shared driving rules, such as the current location, destination, and timestamp. Specifically, the destination can refer to the final position to which the CAV is driving, or it can mean that the planned navigation routes share overlapping sections with the same driving direction. Using these indicators, the CAV chooses the driving rule whose conditional attributes are closest to its own as the guiding strategy.

2) Inconsistency: If there are inconsistencies among the selected driving rules, the older rules are eliminated because their information may be outdated in changing traffic scenarios. For example, a temporary roadblock may have been removed so that the road is accessible again. Inconsistency mainly means that the actions performed by the driving rules conflict. For example, the action of rule $ru_i$ is turning while the action of rule $ru_j$ is going straight. For $ru_i = C_i \cup A_i$ and $ru_j = C_j \cup A_j$, if $C_i = C_j$ but $A_i \neq A_j$, compare $T_i$ and $T_j$ and delete the earlier driving rule.
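The two filtering steps can be sketched as follows. This is a minimal sketch under stated assumptions rather than the paper's procedure: the `Rule` record and all function names are hypothetical, conditions are compared without the timestamp (so that two rules can share a condition yet differ in age), and similarity is scored by exact match on location and destination followed by the Euclidean distance between states, which is one possible metric among many.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass(frozen=True)
class Rule:
    location: str
    destination: str
    state: Tuple[float, ...]
    timestamp: float
    action: Tuple[float, float]   # (acceleration, steering)


def resolve_inconsistency(rules: Sequence[Rule]) -> List[Rule]:
    """For rules with identical conditions, keep only the most recent one."""
    latest = {}
    for r in rules:
        key = (r.location, r.destination, r.state)
        if key not in latest or r.timestamp > latest[key].timestamp:
            latest[key] = r
    return list(latest.values())


def similarity(rule: Rule, location: str, destination: str,
               state: Sequence[float]) -> float:
    """Assumed metric: 0 unless location and destination match, else 1/(1+distance)."""
    if rule.location != location or rule.destination != destination:
        return 0.0
    dist = sum((a - b) ** 2 for a, b in zip(rule.state, state)) ** 0.5
    return 1.0 / (1.0 + dist)


def select_rules(rules, location, destination, state, top_k: int = 3) -> List[Rule]:
    """Drop outdated conflicting rules, then return the top_k most similar ones."""
    scored = [(similarity(r, location, destination, state), r)
              for r in resolve_inconsistency(rules)]
    scored = [(s, r) for s, r in scored if s > 0.0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [r for _, r in scored[:top_k]]
```

In the roadblock example, an older rule that says "turn" and a newer rule that says "go straight" for the same location, destination, and state collapse to the newer rule, reflecting that the roadblock has since been removed.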

4.3.2 Action Selection

After selecting and downloading the required driving rules, the CAV (such as the OV) can use them to generate an overall action space to assist in autonomous driving strategy learning. A simple strategy is adopted for choosing the action to perform. First, the selected driving rules are sorted according to similarity. Then, we take the union of the actions performed by these driving rules to form an overall action space $a^*(t) = [a^*_{min}(t), a^*_{max}(t)]$. It can be inferred that $a^*_{min}(t) > a_{min}(t)$ and $a^*_{max}(t) < a_{max}(t)$, which means $a^*(t) \subset a(t)$. In other words, by using driving rules shared by other CAVs, the optional action space is reduced, which avoids wrong decisions to a certain extent. As described above, the action space $a(t)$ consists of acceleration $a_a(t)$ and steering $a_s(t)$. The OV selects an action from the overall action space $a^*(t)$ and can preferentially select the one with the highest similarity according to the order of the driving rules. Next, the OV uses the overall action space to continue learning its own RL-based autonomous driving model. With this strategy, the OV can automatically navigate to the destination with appropriate speed and heading in the area.
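The reduction of the action space can be sketched as follows. This is an illustrative interpretation, not the authors' code: the overall action space is taken to be the bounding interval of the accelerations and steering angles suggested by the selected rules, restricted to the discrete levels, and the function names are hypothetical.

```python
def reduced_action_space(rule_actions, accel_levels, steer_levels):
    """Discrete actions lying within the range spanned by the rules' actions.

    rule_actions -- list of (acceleration, steering) pairs, most similar rule first
    """
    if not rule_actions:
        # No guidance available: fall back to the full action space.
        return [(a, s) for a in accel_levels for s in steer_levels]
    accels = [a for a, _ in rule_actions]
    steers = [s for _, s in rule_actions]
    a_min, a_max = min(accels), max(accels)
    s_min, s_max = min(steers), max(steers)
    return [(a, s)
            for a in accel_levels if a_min <= a <= a_max
            for s in steer_levels if s_min <= s <= s_max]


def preferred_action(rule_actions):
    """Action of the most similar rule, or None when no rule was selected."""
    return rule_actions[0] if rule_actions else None
```

For instance, if the selected rules suggest braking at 2 m/s² while going straight and holding speed while steering 10°, the 81 joint actions shrink to 6, and continued DQN training only needs to explore that subset.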

V. SIMULATION RESULTS AND DISCUSSIONS

In this section, the performance of the proposed driving guidance strategy in complex traffic environments is demonstrated through simulation in terms of accuracy, safety, and robustness. TensorFlow and MATLAB are adopted as the simulation platforms. First, different simulation scenarios are designed to evaluate the accuracy and safety of our proposal for autonomous driving decisions. Then, the security and robustness of the system with and without blockchain technology are verified.

5.1 Simulation Settings

The simulation scenario mainly includes two-way single lanes, four intersections, CAVs, and roadblocks (long-term or temporary), as shown in Figure 2. Different simulation scenarios are generated by setting different positions and states of roadblocks and different origin-destination (OD) pairs for the OV. All CAVs can use on-board sensors to sense the surrounding environment, obtain status information of other nearby CAVs, and identify roadblocks in the simulation. We add noise to the location information to make the simulation more realistic.

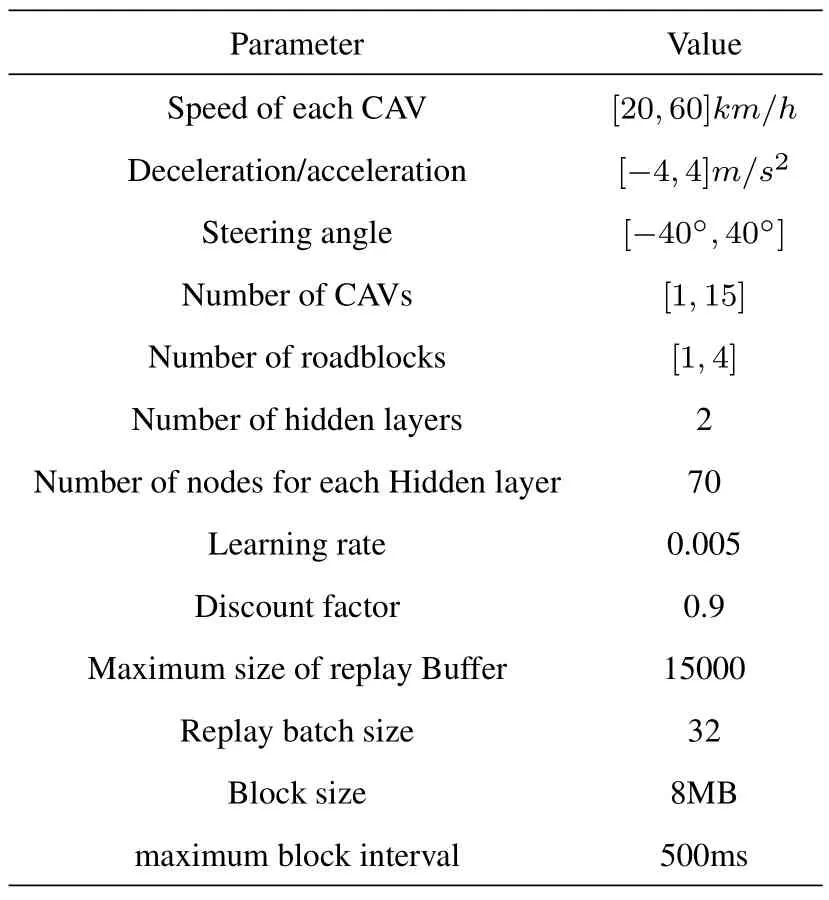

At the beginning of the simulation, all CAVs are randomly assigned an OD pair and travel along the road. The speed of a CAV is between 20 km/h and 60 km/h, and the deceleration/acceleration is between -4 m/s² and 4 m/s². Considering driving safety, the steering angle is selected within the range [-40°, 40°]. The number of CAVs in the simulation is set to 2-10. Long-term or temporary roadblocks appear randomly on the road, and the number of roadblocks is set to 1-4. When a CAV interacts with other vehicles or encounters a roadblock, it needs to respond accordingly, for example by braking or by changing its route with a turn at an intersection. The simulation parameters for DQN are as follows. The main network and the target network in DQN are both four-layer fully connected feedforward neural networks, that is, each has 2 hidden layers with 70 nodes per hidden layer. The learning rate is 0.005, and the discount factor is 0.9. The maximum size of the replay buffer and the replay batch size are set to 15000 and 32, respectively. For the blockchain network, the maximum block interval is 500 ms, and the block size is 8 MB. Table 1 lists the detailed parameter settings.
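To make the DQN settings above concrete, the following sketch shows a replay buffer with the stated capacity and batch size, together with the standard DQN target computed with the stated discount factor. It uses only the Python standard library instead of TensorFlow, and the class and function names are assumptions for illustration, not the authors' implementation.

```python
import random
from collections import deque

# Values stated in the simulation settings.
DISCOUNT = 0.9
BUFFER_SIZE = 15000
BATCH_SIZE = 32
# Listed for reference only; no network is built in this sketch.
HIDDEN_LAYERS = 2
HIDDEN_UNITS = 70
LEARNING_RATE = 0.005


class ReplayBuffer:
    """Fixed-capacity experience replay memory."""

    def __init__(self, capacity=BUFFER_SIZE):
        # A bounded deque drops the oldest transition once it is full.
        self.memory = deque(maxlen=capacity)

    def push(self, state, action, reward, next_state, done):
        self.memory.append((state, action, reward, next_state, done))

    def sample(self, rng, batch_size=BATCH_SIZE):
        # Uniform sampling without replacement.
        return rng.sample(list(self.memory), batch_size)

    def __len__(self):
        return len(self.memory)


def td_target(reward, next_q_values, done, gamma=DISCOUNT):
    """Standard DQN target: r + gamma * max over a' of Q_target(s', a')."""
    if done:
        return reward
    return reward + gamma * max(next_q_values)


rng = random.Random(0)
buffer = ReplayBuffer()
for step in range(20000):  # push more transitions than the buffer can hold
    buffer.push(step, 0, 1.0, step + 1, False)

batch = buffer.sample(rng)
target = td_target(reward=1.0, next_q_values=[0.5, 2.0, -1.0], done=False)
```

After 20000 pushes the buffer holds only the latest 15000 transitions, each sampled batch contains 32 of them, and the example target evaluates to 1.0 + 0.9 × 2.0 = 2.8.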

Table 1. Parameter setting.

Based on the above simulation settings, performance is evaluated in three respects: accuracy, safety, and robustness. Accuracy is the ratio of trips in which the OV successfully travels from origin to destination under the autonomous driving strategy. Safety is the ratio of trips in which an accident occurs while the OV drives under the autonomous driving strategy. Robustness, which can be regarded as an evaluation index of security, measures the influence of malicious nodes on the performance of the driving guidance strategy with and without blockchain technology.

In addition, the following strategies are used to compare simulation performance.

· Our proposal: Driving guidance strategy using DQN and blockchain technology.

· DQN-based algorithm: Each CAV applies DQN for individual driving rule learning and decision making, which means that no driving rules are shared.

· Rule-based algorithm: Each CAV uses driving rules set in advance for individual decisions, which means that no driving rules are shared. In particular, to ensure driving safety, a large safety distance is set to avoid accidents.

· Sharing w/o blockchain: Blockchain technology is not applied while driving rules are shared; all other simulation settings are the same as in our proposal. This strategy is mainly used to evaluate the robustness of our proposal.

5.2 Simulation Results

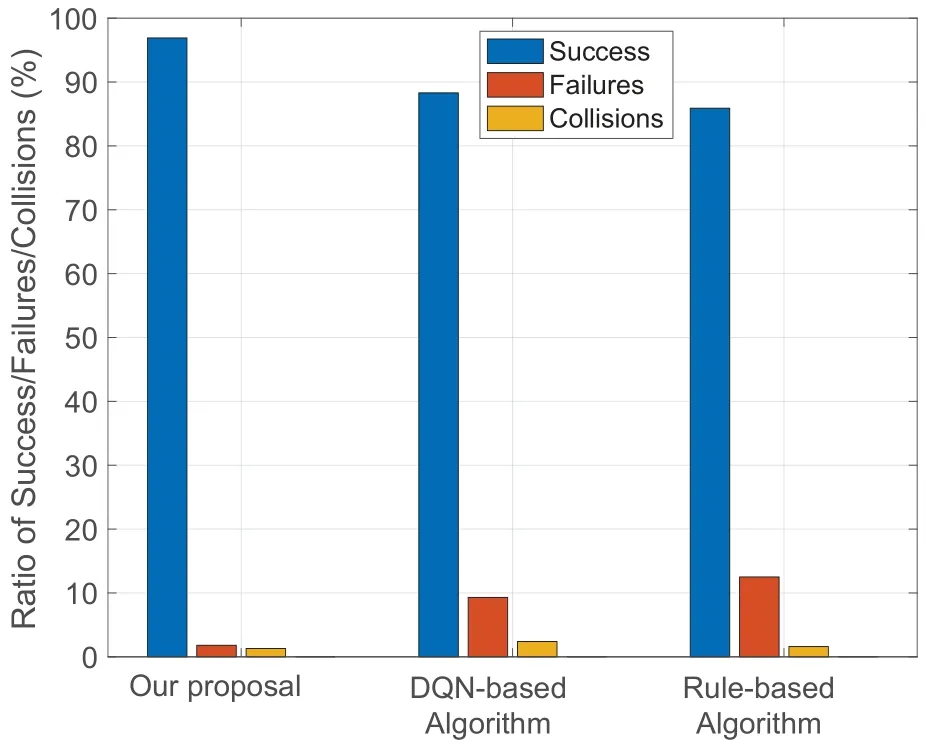

First, we verified the overall performance of our proposal by evaluating the accuracy of the strategy. An accurate decision includes controlling the vehicle acceleration to avoid accidents and controlling the steering angle to determine the driving direction. Therefore, this indicator is divided into three aspects: ratio of success, ratio of failures, and ratio of collisions, which respectively represent the ratio of trips in which the CAV successfully drives from origin to destination, the ratio in which the CAV fails to reach the destination (without an accident), and the ratio in which an accident occurs. The main reason for failure was that the traffic scene changed and the CAV failed to make the correct decision. For example, when a long-term roadblock suddenly appeared, the CAV chose to stop instead of modifying its driving route.
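The three ratios defined above can be computed from per-trip outcomes as in the sketch below. The outcome labels, the function name, and the ten sample trips are hypothetical and serve only to illustrate the definitions.

```python
from collections import Counter

# Hypothetical labels for the three mutually exclusive trip outcomes.
OUTCOMES = ("success", "failure", "collision")


def outcome_ratios(episodes):
    """Return the share of each outcome among the simulated trips."""
    if not episodes:
        raise ValueError("at least one episode is required")
    counts = Counter(episodes)
    unknown = set(counts) - set(OUTCOMES)
    if unknown:
        raise ValueError(f"unknown outcome labels: {sorted(unknown)}")
    total = len(episodes)
    return {name: counts[name] / total for name in OUTCOMES}


# Ten made-up trips: eight arrivals, one stalled trip, one accident.
episodes = ["success"] * 8 + ["failure"] + ["collision"]
ratios = outcome_ratios(episodes)
```

Because every trip ends in exactly one of the three outcomes, the three ratios always sum to one.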

Figure 5 shows the results. Compared with the DQN-based and rule-based algorithms, our proposal has the highest ratio of success (96.9%), which indicates that it makes more accurate autonomous driving decisions in the same simulation scenario. By comparison, the ratios of success of the DQN-based and rule-based algorithms are 90.5% and 86.9%, respectively. Comparing the ratio of failures with the ratio of collisions shows that accidents are not the factor limiting the accuracy of autonomous driving, because the three algorithms cause a similar ratio of accidents in simulation: 1.8% for our proposal, 2.4% for the DQN-based algorithm, and 1.6% for the rule-based algorithm. This shows that sharing driving rules is an effective approach in complex traffic environment. The reason is that, with the DQN-based algorithm, a single CAV cannot learn all the driving strategies, while the rule-based algorithm may not work well in new scenarios because the driving rules set in advance are limited.
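The ratio of failures is not quoted in the text, but it can be derived from the reported numbers if one assumes that success, failure, and collision are the only possible outcomes and therefore sum to 100%. The short calculation below makes that assumption explicit; the derived values are not figures reported by the authors.

```python
# Percentages reported in the text for Figure 5.
reported = {
    "Our proposal": {"success": 96.9, "collision": 1.8},
    "DQN-based": {"success": 90.5, "collision": 2.4},
    "Rule-based": {"success": 86.9, "collision": 1.6},
}

# Assumption: the three outcomes are exhaustive, so failure is the remainder.
failure = {
    name: round(100.0 - r["success"] - r["collision"], 1)
    for name, r in reported.items()
}
```

Under this assumption the ratio of failures is about 1.3% for our proposal, 7.1% for the DQN-based algorithm, and 11.5% for the rule-based algorithm. The gap between the strategies is therefore much wider in failures than in collisions, which is consistent with the observation that accidents are not the main factor behind the differences in accuracy.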

Figure 5. Overall performance evaluation of different strategies.

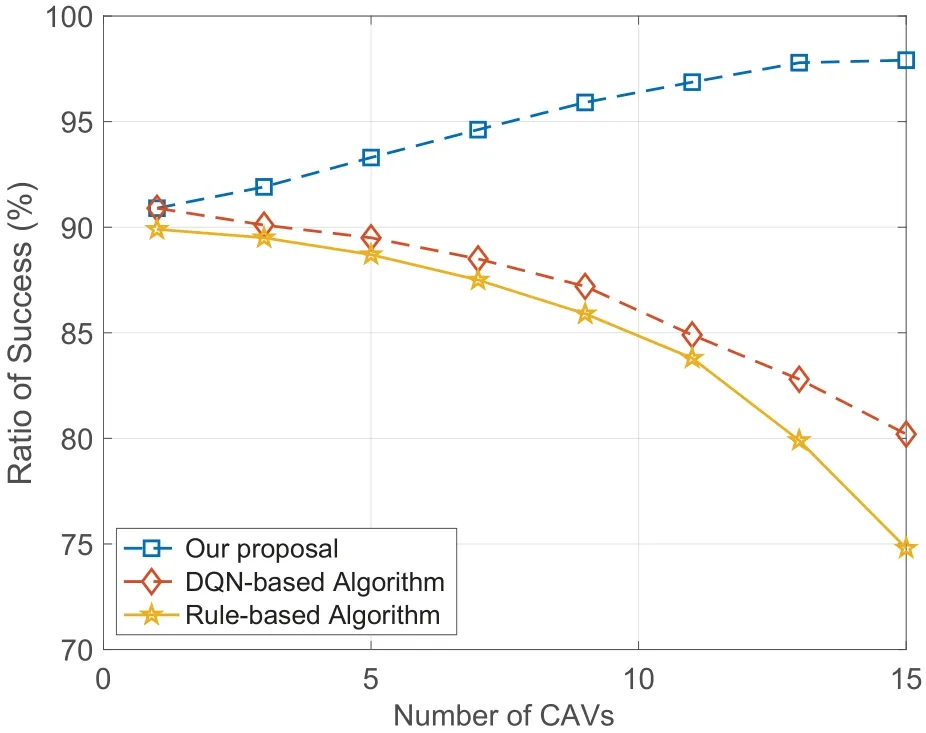

Next, the ratio of success of the different algorithms is evaluated as the number of CAVs changes. It can be seen from Figure 6 that when there is only one CAV in the simulation scenario, the performance of the three algorithms is similar, because in this case none of them shares driving rules. As the number of CAVs increases, the performance of our proposal gradually improves and then becomes relatively stable. The underlying reason is that more CAVs mean more driving rules available for sharing. However, beyond a certain point the shared driving rules are already adequate, and additional CAVs in the scene increase the risk of accidents. For the other two algorithms, an increase in the number of CAVs affects driving safety, so their performance gradually decreases. In particular, the rule-based algorithm is less adaptable to the environment.

Figure 6. Ratio of success vs. number of CAVs.

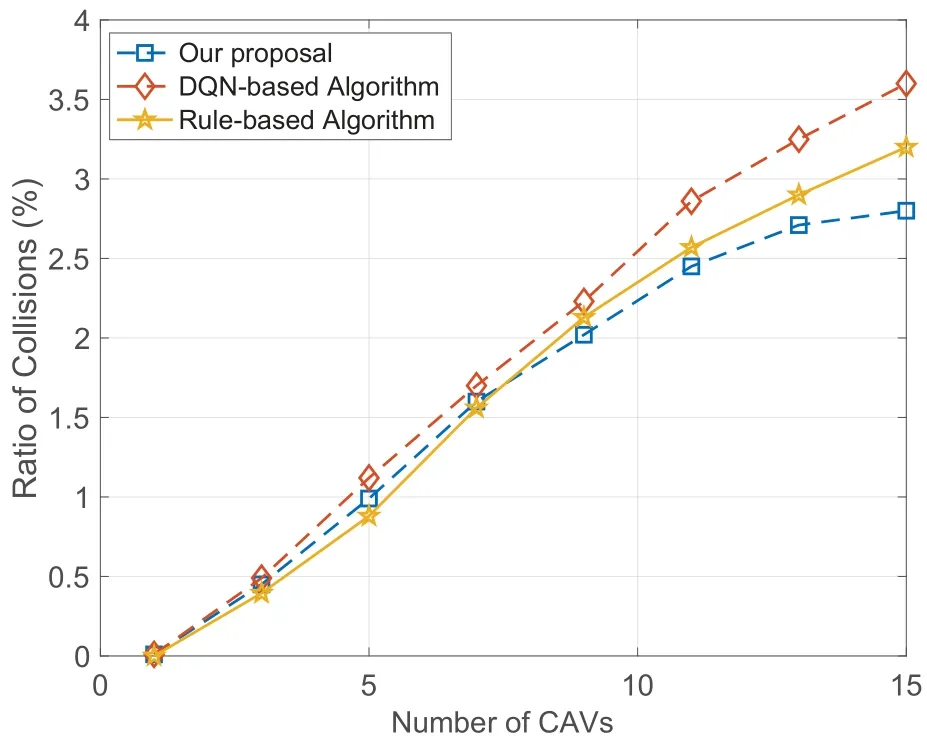

To further determine the effect of the number of CAVs on performance, the ratio of collisions of the different algorithms is evaluated as the number of CAVs changes. The simulation results are depicted in Figure 7. As the number of CAVs increases, the ratio of collisions caused by the three algorithms does not change much. Moreover, the performance of the three algorithms is similar. Combining Figure 5 and Figure 6, it can be concluded that traffic accidents are not the main factor affecting the accuracy of decisions. There are somewhat more accidents when the number of CAVs is large. This is because more interactions between CAVs are required, so it is more likely that some emergency situations cannot be avoided. However, in the complex traffic scenario, the algorithms without driving rule sharing are clearly more inclined to brake to avoid the obstacle when a roadblock is detected. Therefore, when there is a long-term roadblock, CAVs guided by the DQN-based and rule-based algorithms often cannot reach their destination, which reduces the ratio of success.

Figure 7. Ratio of collisions vs. number of CAVs.

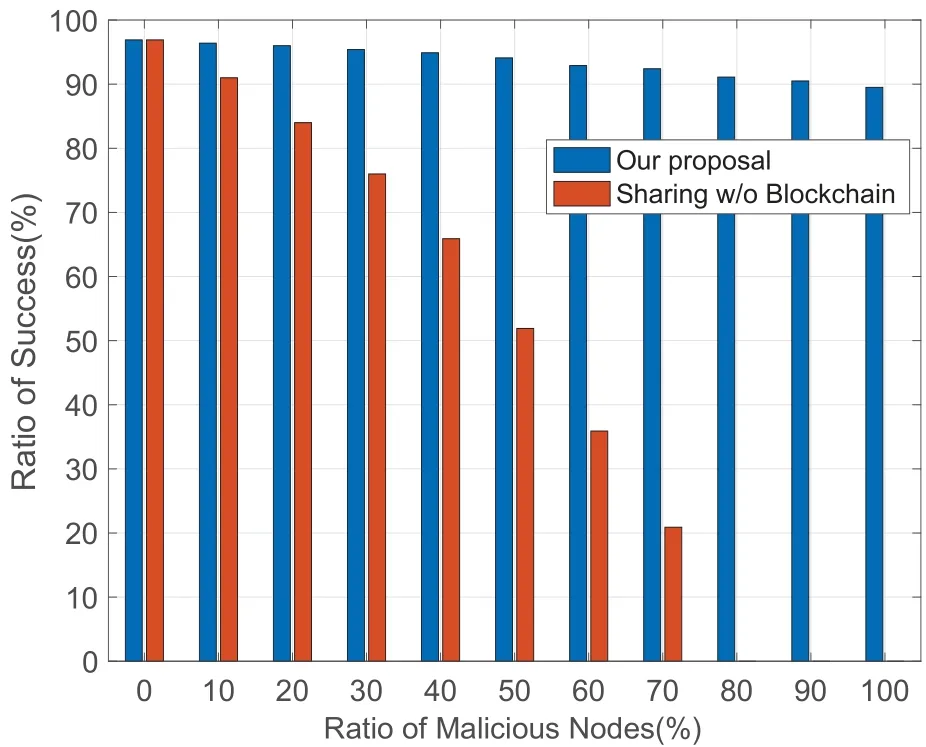

Finally, the impact of blockchain technology on system performance is evaluated by setting different ratios of malicious nodes. In the simulation, the performance of our proposal is compared with that of sharing w/o blockchain, and the results are shown in Figure 8. As the ratio of malicious nodes gradually increases, the performance (ratio of success) of both strategies is affected, because malicious nodes provide wrong driving rules that influence autonomous driving decisions. When the ratio of malicious sharers exceeds 50%, it is difficult for a CAV to make accurate decisions with the shared driving rules, so the performance of the sharing w/o blockchain strategy drops sharply. The performance of our proposal is less affected because blockchain technology is applied: the information recorded on the blockchain cannot be tampered with, which facilitates the tracking of malicious nodes. Without blockchain, malicious nodes are difficult to detect and the shared information may be tampered with, so the performance of the sharing w/o blockchain strategy is difficult to guarantee.
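The tamper-evidence property mentioned above can be illustrated with a minimal hash chain, in which each block stores the hash of its predecessor so that any later modification of a shared rule is detectable. This is a simplified sketch under stated assumptions: the block fields, function names, and sample rules are hypothetical, and consensus, digital signatures, and the paper's three-layer architecture are not modeled.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder predecessor hash for the first block


def block_hash(index, prev_hash, rule, sharer):
    """SHA-256 over a canonical JSON encoding of the block contents."""
    payload = json.dumps(
        {"index": index, "prev_hash": prev_hash, "rule": rule, "sharer": sharer},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_rule(chain, rule, sharer):
    """Append a shared driving rule, linking it to the previous block."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS_HASH
    index = len(chain)
    chain.append({
        "index": index,
        "prev_hash": prev_hash,
        "rule": rule,
        "sharer": sharer,
        "hash": block_hash(index, prev_hash, rule, sharer),
    })


def first_invalid_block(chain):
    """Return the index of the first block that fails verification, or None."""
    prev_hash = GENESIS_HASH
    for block in chain:
        expected = block_hash(
            block["index"], block["prev_hash"], block["rule"], block["sharer"]
        )
        if block["prev_hash"] != prev_hash or block["hash"] != expected:
            return block["index"]
        prev_hash = block["hash"]
    return None


chain = []
append_rule(chain, {"state": "roadblock ahead", "action": "turn at intersection"}, "CAV-1")
append_rule(chain, {"state": "vehicle ahead", "action": "brake"}, "CAV-2")
append_rule(chain, {"state": "clear road", "action": "keep speed"}, "CAV-3")
intact = first_invalid_block(chain)

# A malicious node rewrites a stored rule after it was recorded.
chain[1]["rule"]["action"] = "accelerate"
tampered = first_invalid_block(chain)
```

Before the modification the chain verifies cleanly. Afterwards verification fails at block 1, and the `sharer` field of each block records which node originally submitted the rule, which is the kind of traceability the text refers to.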

Figure 8. Ratio of success vs. ratio of malicious nodes.

In summary, in complex traffic environment, sharing driving rules among CAVs can effectively compensate for the limited decision accuracy of a single CAV. In addition, when driving rules are shared, the use of blockchain technology can ensure the robustness of the system by protecting user privacy and data security.

VI.CONCLUSION

This paper has proposed an autonomous driving guidance strategy that combines IoV, RL, and blockchain technologies for efficient and accurate navigation in complex traffic environment. In particular, we have modeled traffic scenarios and used DQN to learn autonomous driving strategies. Then, a driving rule extraction method that combines state, location, and destination has been proposed. Next, to secure the process of sharing driving rules, a three-layer architecture based on vehicular blockchain technology has been proposed. Based on the shared driving rules, a CAV is able to make accurate driving decisions. Extensive simulation results have shown that the proposed strategy performs well in complex traffic environment in terms of accuracy, safety, and robustness. In future work, we will consider combining incentive mechanisms and reputation evaluation mechanisms to encourage more CAVs to share driving rules while selecting higher-quality information.

ACKNOWLEDGEMENT

This work was supported by the National Natural Science Foundation of China (62231020, 62101401), the Fundamental Research Funds for the Central Universities (ZYTS23178), and the Youth Innovation Team of Shaanxi Universities.
