QoS-Aware Congestion Control with Online Learning

Lei Zhang,Kewei Zhu,Yong Cui,*,Yong Jiang

1 Zhongguancun Laboratory,Beijing 100094,China

2 Department of Computer Science and Technology,Tsinghua University,Beijing 100084,China

Abstract: In emerging applications such as industrial control and autonomous driving,end-to-end deterministic quality of service (QoS) transmission guarantee has become an urgent problem to be solved.Internet congestion control algorithms are essential to the performance of applications.However,existing congestion control schemes follow the best-effort principle of data transmission without the perception of application QoS requirements.To enable data delivery within application QoS constraints,we leverage an online learning mechanism to design Crimson,a novel congestion control algorithm in which each sender continuously observes the gap between current performance and pre-defined QoS.Crimson can change rates adaptively that satisfy application QoS requirements as a result.Across many emulation environments and real-world experiments,our proposed scheme can efficiently balance the different trade-offs between throughput,delay and loss rate.Crimson also achieves consistent performance over a wide range of QoS constraints under diverse network scenarios.

Keywordst:congestion control;quality of service;online learning

I.INTRODUCTION

Applications such as video conference,live,cloud gaming require end-to-end communication with a certain throughput with stringent delay,i.e.,quality of service (QoS) requirements.Quality of service is a control mechanism used to solve network problems such as network congestion,and is used to provide better network service capabilities for specific services.If we fail to achieve application data transmission in line with pre-defined application-specific targets,it will be impossible to achieve comprehensive QoS performance.Pre-defined QoS indicators,including acceptable minimum throughput,maximum delay and packet loss(e.g.,receiving at least 95%of data packets with a delay of less than 200 ms),are used to satisfy the application requirements.

The traditional transmission mechanism only provides best-effort services,and cannot provide any transmission with respect to QoS indicators including throughput,delay and packet loss.New applications such as autonomous driving and industrial control introduce more stringent transmission requirements[1,2],and QoS assurance has become one of the research hotspots in industry and academia.

Congestion control (CC)[3—5] is a fundamental issue for data transmission that has been the focus of attention for more than thirty years.Traditional algorithms follow a best-effort principle of data transmission,and hence there is no guarantee of QoS constraints for any flow.These schemes cannot achieve consistent performance in different network scenarios.For example,Cubic[3] can achieve high throughput in low-loss scenarios,but in high-loss networks,the throughput drops severely.

Recently,many efforts have been made to add different signals in network protocols to allow diverse application flows to perceive specific requirements,but these attempts cannot satisfy the targets in different network scenarios.These algorithms show some limitations.First,the performance of these algorithms varies in time,even in the same network path.For instance,BBR[6] and PCC[7] achieve high throughput in one real-world link but exhibit an evident degradation in the second measurement with lower throughput and higher delay.Second,the performance of existing congestion control schemes is quite unstable across different network paths.Similar observations have been shown in Pantheon[8].In the Colombia-Brazil link,LEDBAT[9] achieves the better performance than other state-of-the-art algorithms,whereas it achieves nearly the lowest throughput than others in the Brazil-Colombia link.

With the diverse requirements of emerging applications,the learning-based congestion control[8,10—14] has been proposed to learn adaptive control policies.These schemes define one objective function representing a single specified trade-off of requirement.However,offline learning algorithms inherently face the generalization problem.Although online learning algorithms perform at least acceptable when facing new network conditions,convergence time is a fundamental problem for them.And beyond that,they are limited to optimize only one objective function while ignoring the diversity of QoS requirements.Therefore,none of the existing schemes can perform well in achieving pre-defined QoS requirements.

In this paper,we first discuss how to leverage learning method to achieve different specified-QoS requirements that impose the upper bounds on delay and packet loss rate and a lower bound on throughput.There are two main challenges in achieving this goal:1) how to achieve consistent QoS performance over a wide range of paths,such as cellular links,highbandwidth wired links,or transoceanic links; 2) how to guarantee the fast and stable convergence to the predefined QoS.

To tackle these challenges,we present Crimson,an online learning-based congestion control to satisfy end-to-end QoS constraints.We present Crimson’s design,its evaluation and discuss its limitations.In summary,we make the following contributions:

・ We present a QoS-driven online learning algorithm to guide the adjustment of sending rate.To avoid the local optimum,we design a flexible multiple dimension gradient algorithm to compute “rate gradient”.To minimize the effects of the gradient offset between throughput and delay on performance,we design a rate guarantee mechanism to adjust the rate gradient.

・ We design a rate control scheme that utilizes the rate gradient from the online learning algorithm to achieve the QoS-specified performance.To tackle the inaccurate measurement and convergence problem,we incorporate domainknowledge to improve rate control to satisfy endto-end QoS constraints.

・ Extensive experiments with Crimson,Cubic,BBR,PCC,Indigo and Vivace,in a controlled environment and real-world links.The results show that Crimson can efficiently balance the fundamental trade-offs between throughput,delay and packet loss.Crimson also achieves consistently high performance over a wide range of QoS constraints under different network scenarios.

The rest of this paper is structured as follows.Section II discusses the state-of-the-art congestion control schemes and their shortcomings.Section III presents the design of Crimson.Section IV describes the Crimson’s implementation and Section V evaluates our approach over a wide range of network scenarios.The discussion of this paper is presented in Section VI.Finally,Section VII concludes the paper.

II.RELATED WORK

Various congestion control schemes regulate their rules by hand-crafted or learning-based mechanisms.These approaches achieve differentiated end-to-end performance under their utility functions or objective functions.The development of congestion control is divided into three stages[15].

At the early stage,congestion control schemes such as DECBit[16] and Tahoe [17] deal with congestion that all flows and users followed.Subsequently,Cubic,NewReno[18] and Vegas[4] became the default deployments.These schemes respond to the feedback of network conditions by increasing and decreasing congestion windows(cwnds)to acknowledgments(ACKs) and losses.The main goal of these solutions is to address the network congestion problem,rather than to meet application QoS requirements.

In the second stage,many recent congestion control algorithms including Copa[19] and BBR are designed based on their understanding of throughput/latency trade-off and have no perception of the QoS requirements.Some other congestion control algorithms,such as Sprout[20] and Verus[21],are endto-end protocols designed for specific network scenarios like cellular network or Wi-Fi network.They depend on parameter setting and have difficulty tracking the link rate accurately.Besides,they also fall short in terms of generalization to various performance or changing network environments.

More recently,in the third stage,some research works propose congestion control schemes with an objective function to guide the process of data transmissions.One objective function represents a single user-specified trade-off of requirement.For example,Remy[10] generates a decision tree guided by an objective function of throughput and delay by offline learning.Indigo[8]offline learns to“imitate”the oracle rules which are constructed with ideal cwnds given by the emulated bottleneck’s bandwidth-delay product(BDP).Orca[14] designs two levels of control which combines Cubic and a learning-based agent.And its agent offline optimizes the factor of updating cwnds for Cubic based on a deep reinforcement learning algorithm.

Online learning-based schemes,such as PCC and Vivace[13],incorporate the utility function to generate a rate-based learning algorithm.PCC’s default utility function involves throughput and loss rate.Vivace adopts a more complex utility function that replaces the absolute value of round-trip time (RTT) with the“RTT gradient”.With carefully engineered objective functions or utility functions,the learning-based algorithms could to a certain extent guarantee some desirable properties (e.g.,low latency,fair convergence).However,these learning-based algorithms cannot provide a guarantee to diverse QoS requirements.

III.DESIGN

In this section,we present here in detail the design of Crimson.We first describe Crimson’s design goal and architecture.Then we depict the QoS-based gradient descent algorithm and rate control mechanism.

3.1 Design Goals

Crimson is designed to an adaptive congestion control to satisfy diverse end-to-end QoS performance.Our design is guided by the two main points as follows:

・ We want to control the sending rate to adapt to network conditions and QoS performance.The policies of congestion control are not handcrafted instead of adaptive to both various network conditions and QoS requirements.Recently,machine learning methods automatically learn policies without pre-defined rules.Among machine learning,most offline learning methods assume that the data follow the same distributions which are not the case for real-world traffic flows.Therefore,we should choose an appropriate learning method to learn from the diverse specified QoS requirements and real-world traffic patterns.

・ QoS performance is particularly important for applications.Hence,we want to control the rate to reach the specified-QoS requirements as soon as possible.Meanwhile,the solution that satisfies end-to-end QoS requirements should reach the specified performance fast and stably.However,it is difficult to achieve the goal by simply applying existing learning algorithms.Therefore,domain-knowledge should be used to guarantee fast and stable convergence.

We argue that these guidelines enable our design to be robust against diverse QoS constraints of applications and to guarantee stable convergence.

3.2 Crimson Architecture

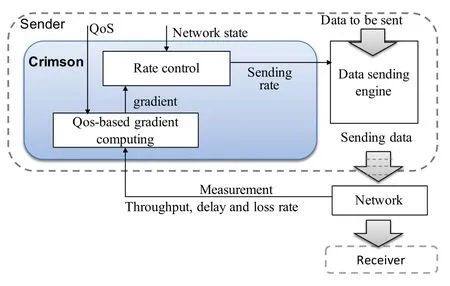

According to the above design goals,we propose a congestion control algorithm based on online learning.As shown in Figure 1,Crimson is composed of a rate-gradient computing module and a rate control algorithm.The gradient learning module is mainly based on QoS-aware gradient descent to learn the rate change.During the rate-gradient learning process,we also solve problems of local optimization and gradient disappearance by different Crimson’s guarantee mechanisms.

Further,the rate control algorithm determines the current sending rate of the sender according to the rate gradient.Generally,the measurement error due to jitter in the network environment will cause the gradient too large or too small.Therefore,we leverage the domain knowledge to correct the abnormal gradient and convergence problem which can ensure the diverse deterministic QoS requirements.

Figure 1.Crimson’s architecture.

3.3 QoS-Based Gradient Descent

At the beginning of Crimson,the applications provide their QoS requirements as the desired max-min bound for each dimensional performance metric,i.e.,a 3−dimensional value Q=(Q1,Q2,Q3).In detail,Q1represents the application requirement of minimum throughput;Q2represents the application requirements of maximum delay; andQ3represents the application requirement of maximum packets loss rate.

Another valueQ′t=(Q′1,Q′2,Q′3) represents the current measurement value under a certain sending rate at timet.The each dimension of measurement valueQ′tis defined as:Q′1represents the average throughput during a Measurement Interval (MI);Q′2represents the 95th percentile delay during the MI;andQ′3represents the observed packet loss rate at the current time.The goal of Crimson is that the value ofQ′meets the value ofQ,which means that the result of measured performance meets the application requirements.In particular,Q′1≥Q1,Q′2≤Q2andQ′3≤Q3.

The optimization objective of the online algorithm is to minimize the loss function,i.e.,the euclidean distance between the specified-QoSQand the current measurementQ′at timet:

If the throughput inQ′does not meet the requirements inQ,the Euclidean distance is calculated.Conversely,if the requirements inQare met,the Euclidean distance is zero.Similarly,the calculation of Euclidean distance for delay and packet loss rate is the same.In congestion control,there is a certain correspondence between the different sending rates and QoS performance.We suppose that the measurementQ′tcan be regraded as a function of “rate gradient”ε,i.e.,Q′t:=f(εt).

To reduce the variance of the estimated valueQ′t,we set an-step average ofLas the loss function in practice.Taking the relationship betweenQtand rate gradientεinto account,the actual loss function is described as:

In practice,if eachQ′iis satisfied the bound ofQi,we regard asf(εt)=Qi.That is to say,we only update gradients when the specified-QoS requirements are not satisfied.

We use a gradient descent algorithm to update theεt.The gradient ofJwith respect to rate gradientεtcan be derived as:

Since it is non-trivial to model the relation between the rate gradientεtand the measurementmtat the timet,we can not easily obtain the analytic expression of the functionf.Here we use a numerical method to approximate▽εtf(εt):

Finally,the rate gradient can be updated by the following formula.

whereαis the learning rate.Additionally,it is difficult to choose the proper learning rateα.An adaptive learning rate algorithm Adam[22]is a first-order optimization algorithm that is less sensitive to the choice of learning rate than the basic gradient descent algorithm.It also has advantages in non-convex optimization problems.Therefore,we combine Adam algorithm to update the gradient▽εtf(εt)in practice.

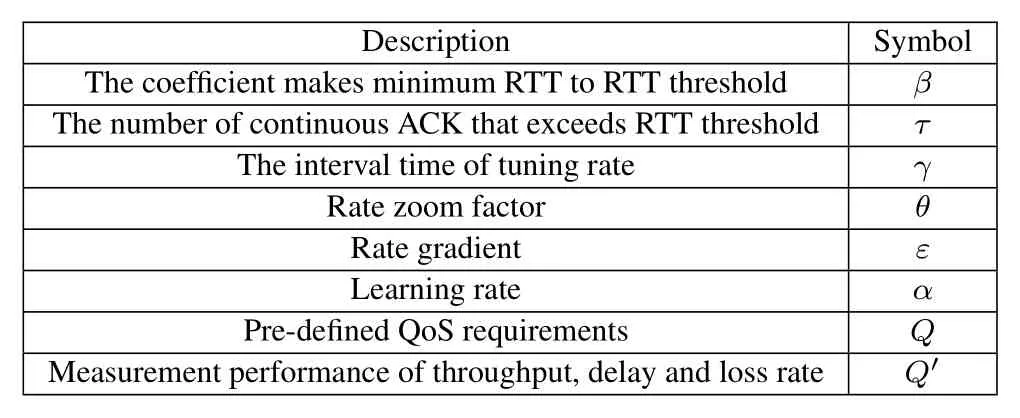

Table 1.Crimson’s parameters.

In the gradient learning process,there is the two problems:

1)Local optimum problem.The calculation of rate gradient in Crimson is not simply using the distance betweenQandQ′,but according to the actual situation,when the throughput does not meet the requirements of Crimson,the gradient is calculated for the throughput.Similarly,the delay only exceeds the corresponding gradient of delay is obtained only when QoS needs.This gradient calculation method not only meets Crimson’s goal,but also avoids local optimum.

2)Vanishing gradient problem.The situation where the local optimum occurs should be the measured performance of“insufficient bandwidth and excessive delay”.At this time,the gradient of throughput and delay will cancel each other.This situation occurs because the network conditions do not meet the current QoS requirements.Hence Crimson maintains a performance point close to QoS.When the network recovers,it will break the balance of the two gradients canceling each other.Once the network conditions satisfy the QoS requirements,Crimson will make the performance reach the target.

3.4 Crimson’s Rate Control Algorithm

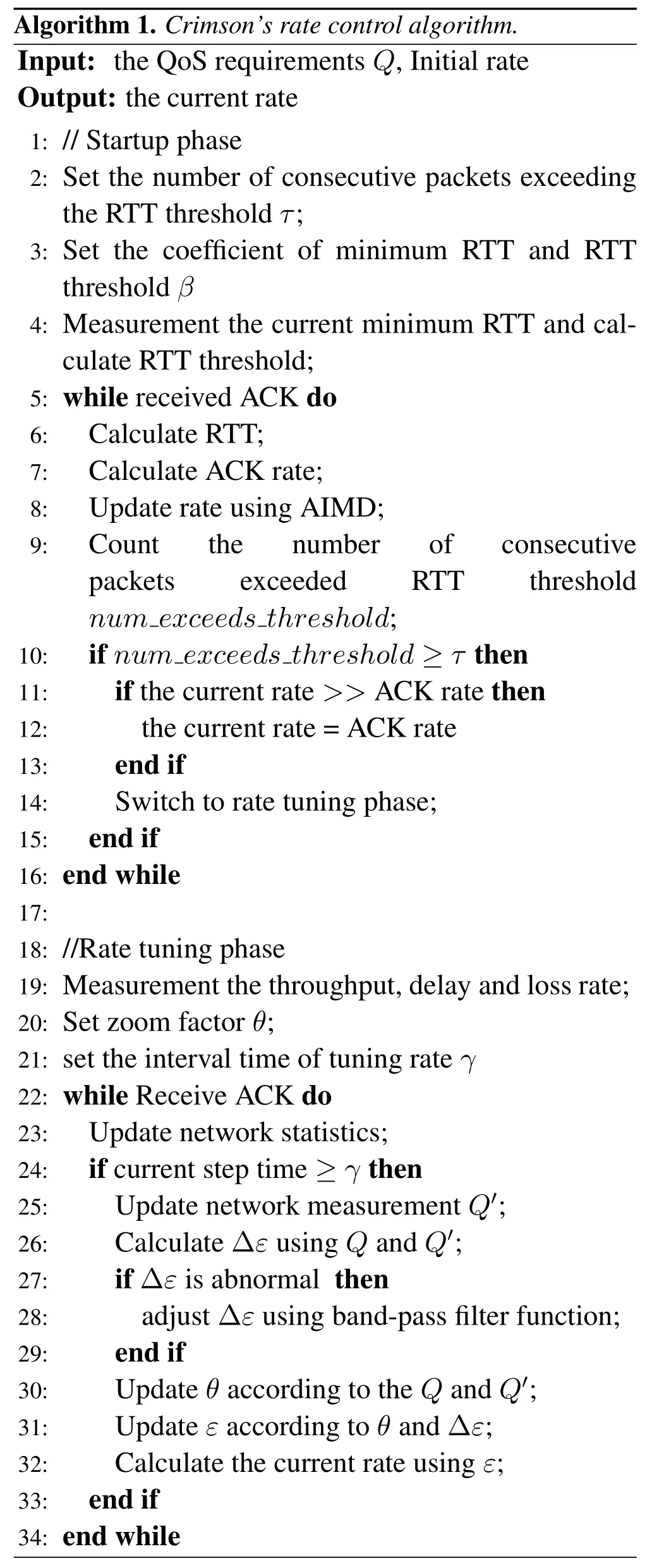

Algorithm 1 summarizes the process of Crimson’s rate control.The rate-control algorithm is divided into two phases,startup and rate tuning phase.Startup phase leverages slow start process to avoid congestion when it just starts,while rate tuning phase is responsible for keeping the performance of Crimson fluctuating near QoS requirements of applications.

Crimson’s rate control begins with a startup phase in which the sender chooses sending rate at the first MI.The startup phase follows an additive increase multiplicative decrease(AIMD)process.In initial process,a default coefficient of minimum RTT and RTT thresholdβis set.Then the RTT threshold is computed as,

If the number of data packets that continuously exceed the RTT threshold exceeds the specified numberτ(see in Table 1),Crimson enters the rate tuning phase.

In rate tuning phase,Crimson’s rate control works by translating the rate gradients to sending rates.Suppose the current sending rate isr.In the next MI,the sender will change the sending rate withr(1+ε).Crimson utilizesεto deduce the direction and extent to which the rate should be changed.

In general,accurate measurements require a long observation time,yet that slows reaction to changing environments.The rate gradient can be excessively high due to unreliable measurements in each MI.For example,from startup phase changing to rate tuning phase,an abnormal gradient value may be computed due to the initial value ofε.To address this problem,we leverage domain-knowledge to introduce a bandpass filter function to adjust ∆ε.

To guarantee the fast and stable convergence,Crimson introduces a“zoom factor”θ.It starts with a conservatively small numberθ0.Thenθis dynamically adjusted according to the gap between measurement and specified-QoS.Intuitively,when the measurement is far away from the specified-QoS,θis set a larger number to achieve fast convergence.Correspondingly,when closing to the target,θis set to a smaller number to ensure stable convergence of Crimson.The sender repeatedly changes the rate in the different direction(increase or decrease)according to the adaptiveθ.

IV.IMPLEMENTATION

In this section,we describe the implementation and interface of Crimson.

4.1 Implementation

We implement a user-space prototype of Crimson based on UDP using Python.In Crimson,the sending and receiving events are implemented by the messagetriggered mechanism.And we change the sender-side congestion control with Crimson.The sender program receives the QoS requirement from applications,and controls the packets sending behavior through data sending engine(see in Figure 1),while the receiver receives packets from the sender and return ACKs.

Algorithm 1.Crimson’s rate control algorithm.Input: the QoS requirements Q,Initial rate Output:the current rate 1: //Startup phase 2: Set the number of consecutive packets exceeding the RTT threshold τ;3: Set the coefficient of minimum RTT and RTT threshold β 4: Measurement the current minimum RTT and calculate RTT threshold;5: while received ACK do 6: Calculate RTT;7: Calculate ACK rate;8: Update rate using AIMD;9: Countthenumberofconsecutive packetsexceededRTTthreshold num threshold;10: if num exceeds threshold ≥τ then 11:if the current rate>>ACK rate then 12:the current rate=ACK rate 13:end if 14:Switch to rate tuning phase;15: end if 16: end while 17:18: //Rate tuning phase 19: Measurement the throughput,delay and loss rate;20: Set zoom factor θ;21: set the interval time of tuning rate γ 22: while Receive ACK do 23: Update network statistics;24: if current step time ≥γ then 25:Update network measurement Q′;26:Calculate ∆ε using Q and Q′;27:if ∆ε is abnormal then 28:adjust ∆ε using band-pass filter function;29:end if 30:Update θ according to the Q and Q′;31:Update ε according to θ and ∆ε;32:Calculate the current rate using ε;33: end if 34: end while exceeds

The implementation of Crimson has two phases,i.e.,the startup and rate tuning phase.The parameters and their descriptions are listed in Table 1.In the startup phase,we leverage AIMD as a basic algorithm and setβ=1.25 andτ=3.That is to say,when three continuous ACKs exceed the RTT threshold,the startup phase ends.In the rate tuning phase,we set the decision intervalγto two minimum RTT.And the sender adjusts the action continuously according to the decision interval until the performance measurement reaches the QoS requirement.Then Crimson keeps the action stable.If the performance does not meet the requirements due to the change of network conditions,Crimson will enter the rate tuning phase again.

4.2 Interface

Due to the diversity of application requirements,Crimson provides the interface for applications to set their specified-QoS requirements for performance or preferences.That allows Crimson to perceive the target value of application performance directly.Depending on the interface,Crimson can optimize the performance towards the specified-QoS indicators,which improves the utilization of limited network resources effectively.

V.EVALUATION

Here,we demonstrate the advantages of Crimson over different TCP and learning-based schemes.In particular,we illustrate Crimson’s consistent high performance in different networks (§5.1),fairness and friendliness (§5.2),overhead of Crimson (§5.3) and real world performance(§5.4).

5.1 Consistent High-completion-rate

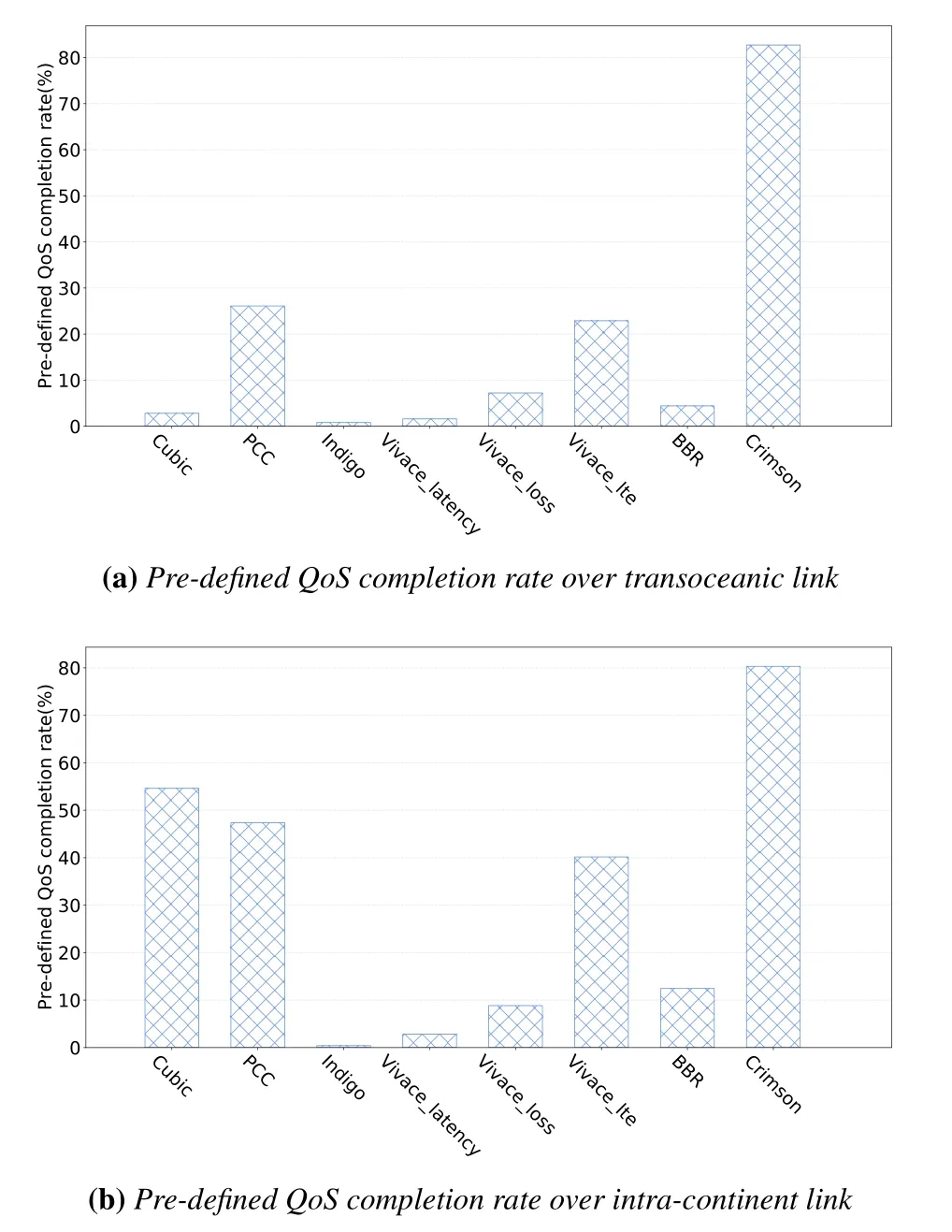

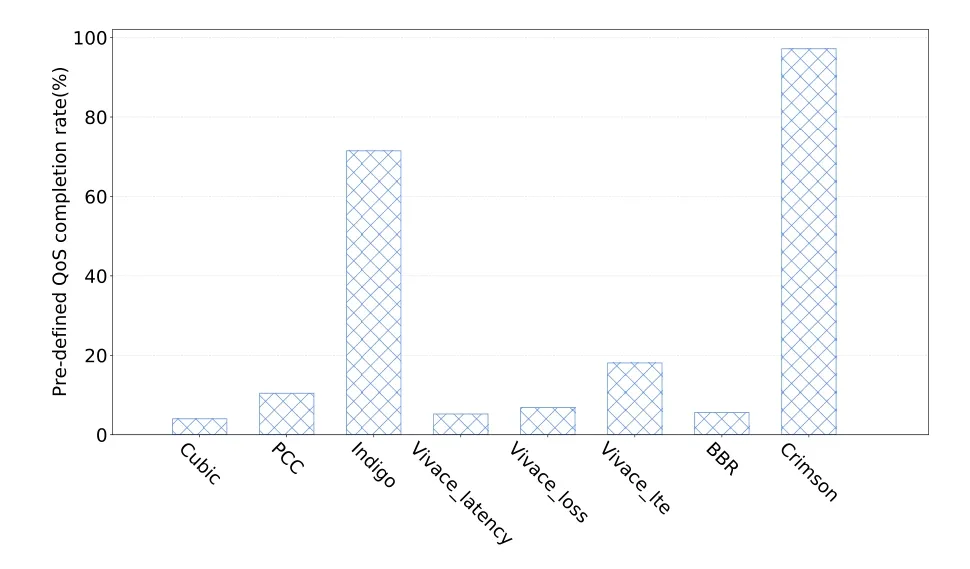

Completion rate means the ratio of the target achieved intervals to all time intervals.We demonstrate that Crimson achieves consistent high completion rate over different network conditions on emulated environments.First we evaluate Crimson over the emulated environments calibrated to the real path from AWS Korea to China(as shown in Figure 2a).This link has an average 77.72 Mbps bandwidth,101 ms minimum RTT and 94 packets drop-tail queue buffer.Crimson achieved target completion rate of around 50% more than that of PCC.The second real path from AWS California to Mexico(as shown in Figure 2b)with average 114.68 Mbps bandwidth,90 ms minimum RTT and 450 packets drop-tail queue buffer.And Crimson achieved target completion rate of around 25% more than that of Cubic.We also test Crimson over an emulated stable link(from Pantheon[8]).The link has an average 12 Mbps stable bandwidth,60 ms minimum RTT and a drop-tail queue with 60 packets of buffer.As shown in Figure 3,Crimson achieves the highest completion rate among all the schemes.Thus,Crimson outperforms other schemes over both stable and dynamic environments.

Figure 2.Pre-defined QoS completion rate over wide-area link.

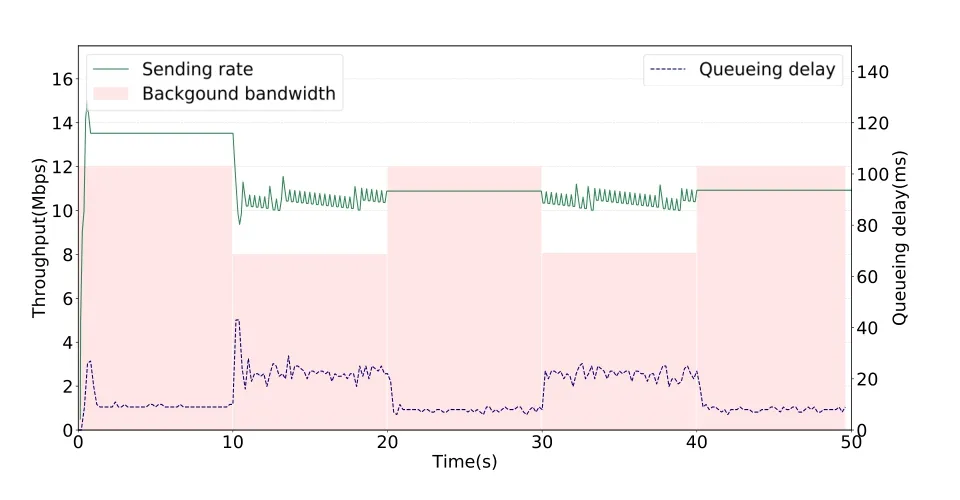

Phase analysis.Figure 4 shows that Crimson enters different phases over time.In the beginning,Crimson enters the startup phase using the slow start algorithm.Then its sending rate increases rapidly within one second.When the RTT of packets increases to the threshold,Crimson enters the rate tuning phase.During the second phase,Crimson keeps sending rate stable when the QoS requirements are satisfied.In contrast,when the requirements are not satisfied,the sending rate of Crimson will fluctuate near the requirements according to the gradient of the performance measurement and the target until the network condition recovers to satisfy the target.

Figure 3.Pre-defined QoS completion rate over stable link.

Figure 4.The behavior of Crimson over dynamic link.

5.2 Fairness and Friendliness

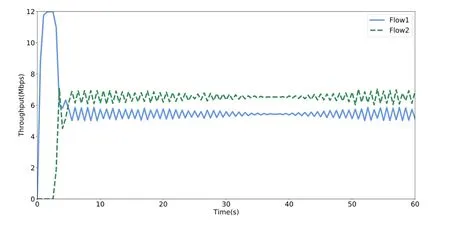

Fairness of Crimson.To understand the behavior of Crimson facing other Crimson flows,we set up two competing Crimson flows with the same target simultaneously and each flow runs continuously for 60 seconds.Figure 5 depicts the fairness property between two Crimson flows with the same QoS requirements.As expected,Crimson can achieve acceptable fairness with other Crimson flows and keep the throughput fluctuating near the pre-defined requirement.

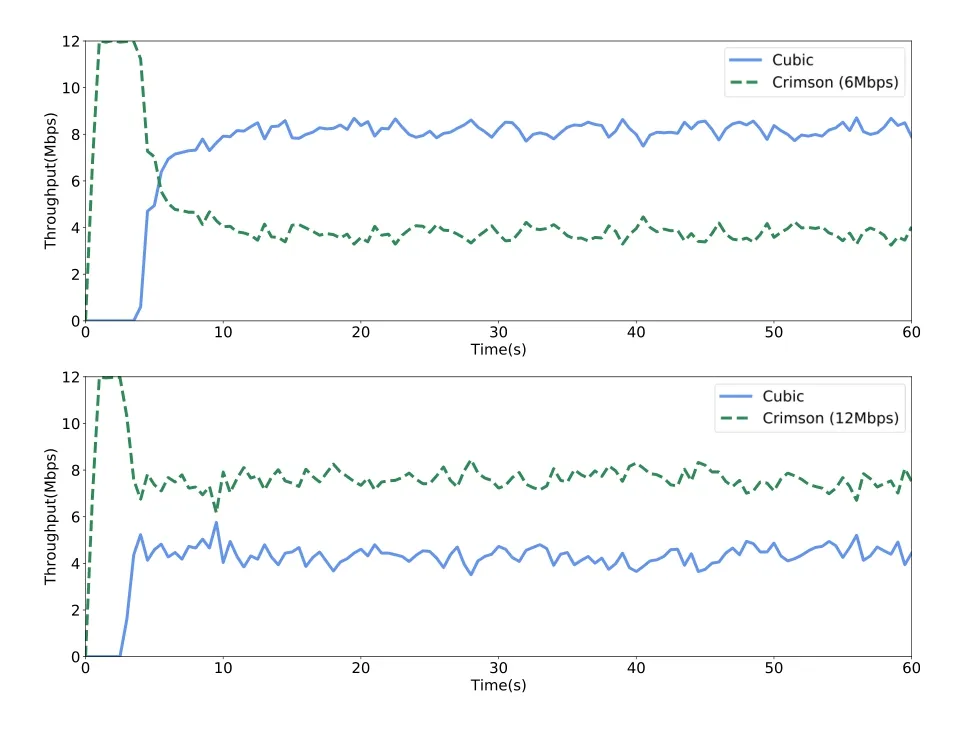

Friendliness with TCP flows.To evaluate the Crimson’s friendliness with TCP flows,we examine different target flows of Crimson competing with Cubic flow on our testbed.We choose Cubic flow as the reference flow on the fact that Cubic is the default deployment in the Linux kernel.In our experiments,we start two flows from the sender to the receiver using Crimson with different requirements.We set up the target of Crimson’s flow with 12 Mbps throughput and 6 Mbps throughput respectively.

The results are shown in Figure 6.We report the throughput of Crimson flow and Cubic flow over time.When competing with Crimson flow with nonpreemptive target,Cubic flow performs more aggressively to occupy more bandwidth of the link.However,if Crimson has an aggressive target,it achieves higher throughput than that of Cubic.Thus the friendliness of Crimson depends on its target setting.

Figure 5.Dynamic behavior of competing Crimson flows with the same pre-defined QoS.

Figure 6.Dynamic behavior of Crimson with different predefined QoS vs.Cubic flow.

5.3 Overhead

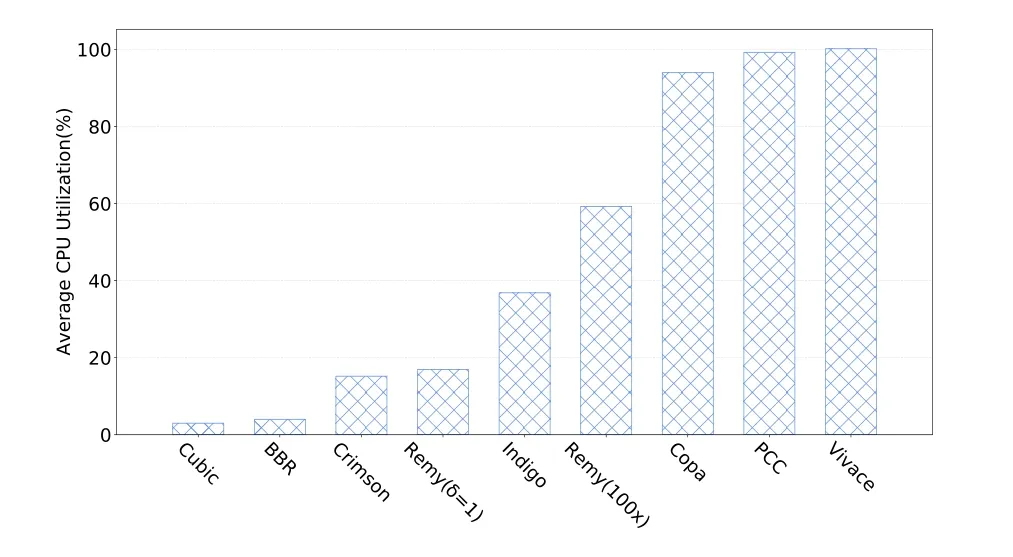

To investigate the overhead of Crimson and compare it with the off-the-shelf congestion controls,we set up an emulated network with 12 Mbps bottleneck link and 60 ms RTT.The experiment sends traffic from the sender to the receiver over 60 seconds for each time.We measure the average CPU utilization of different schemes on the sender.On account of Cubic and BBR implemented in the kernel,we evaluate the CPU utilization of iperf for sending their traffic.

Figure 7.Overhead of Crimson compared with other schemes.

The results are shown in Figure 7.It is worth noting that the overhead of different offline-trained models is not the same due to their different complexity.For example,the overhead of Remy(100x) (provided by[23])is 40%higher than that of Remy(δ=1)(provided by [10]).As we expected,Crimson,similar to Remy(δ=1),achieves a lower overhead than that of other schemes except for BBR and Cubic.But as the calculation of gradient consumes little computing resource,the overhead mainly comes from the process of packet sending and ACK receiving.If we implement Crimson in a more efficient language(such as C or C++language)instead of Python,it could be possible to achieve even lower overhead.

5.4 Real World Performance

We evaluate Crimson over wide-area Internet paths spanning two continents.The receiver is located in a cloud server in the USA.The sender is located inside our campus and connected to the receiver through the wide-area network.

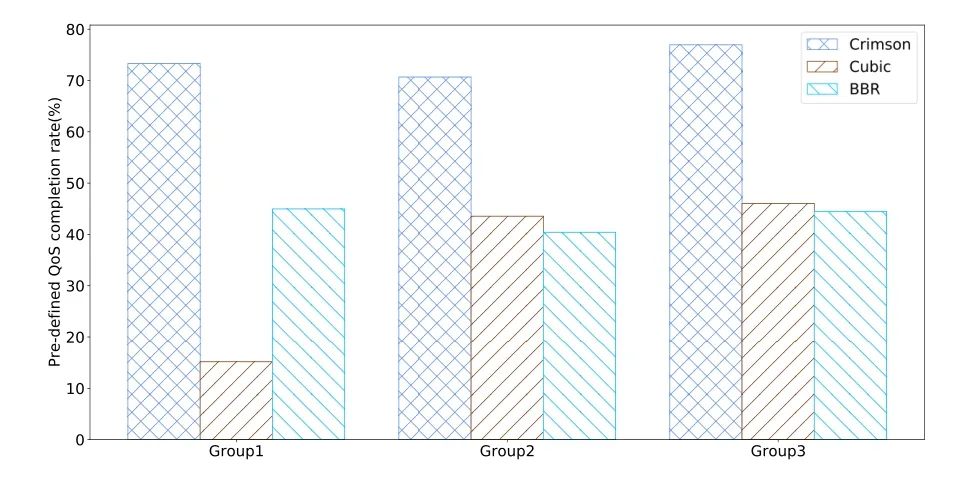

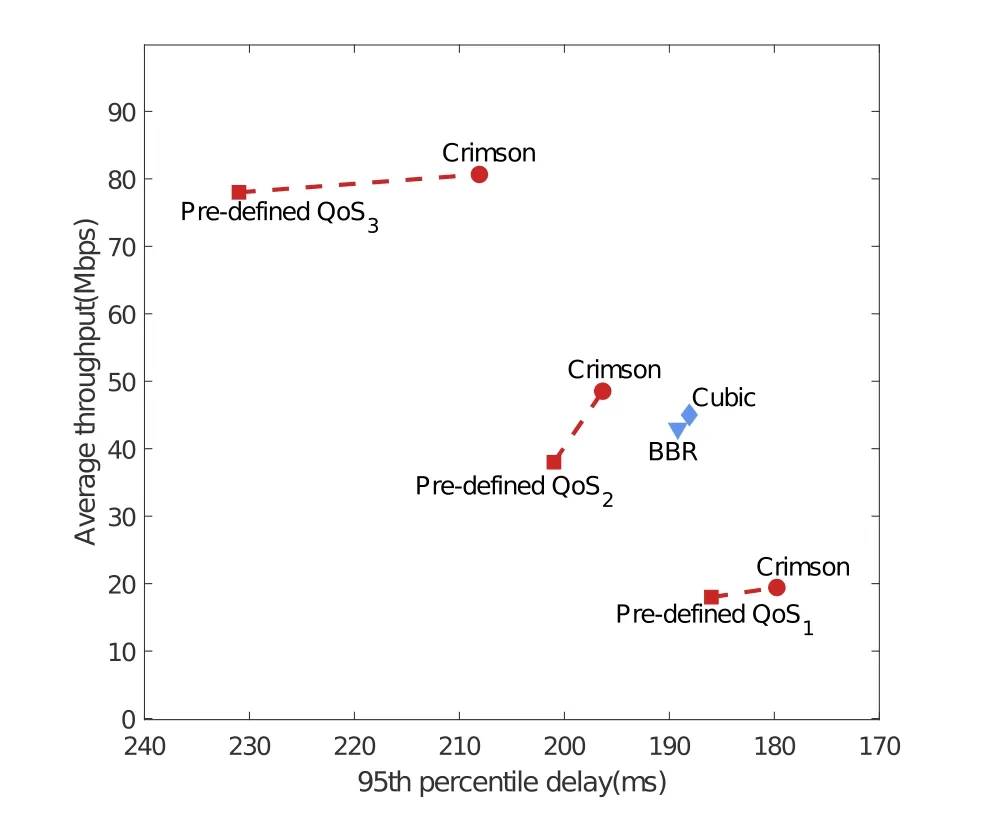

To evaluate the pre-defined QoS completion rate of Crimson,we choose 20 pre-defined QoS for Crimson,then test Crimson,Cubic and BBR at 3 different time of one day and divide them in 3 groups.Intuitively,for a given congestion control scheme,the higher predefined QoS completion rate is obtained,the more application requirements can be satisfied.As shown in Figure 8,at the different time of the day,Crimson achieves a higher pre-defined QoS completion rate than that of Cubic and BBR.That means,Crimson can tune itself to adapt to both network conditions and pre-defined QoS.It also uses network resources more efficiently than that of Cubic and BBR,which have no pre-defined QoS.Furthermore,we test the average performance of Crimson with Cubic and BBR.The result is shown in Figure 9.The average throughput and 95th percentile delay achieved by Crimson are significantly different under three different pre-defined QoS.Guided by pre-defined QoS,Crimson can not only satisfy the corresponding requirement but achieve better performance in both throughput and delay.According to Figure 9,Crimson with pre-defined QoS1achieves lower latency,while Crimson with pre-defined QoS3achieves higher throughput.And the average performance of Crimson satisfies the requirement of the three pre-defined QoS.

Figure 8.Pre-defined QoS completion rate over intercontinent link(CN-USA).

Figure 9.Performance over inter-continent link(CN-USA).

VI.DISCUSSION

Crimson is a QoS-aware congestion control scheme that optimizes its performance according to predefined QoS.Although the performance of Crimson is encouraging,there still remain some issues to be solved.

Coexistence with different pre-defined QoS.A potential concern for Crimson is how high-throughput flows affect low-latency flows.When the bandwidth of the network cannot satisfy all flows,the buffer of the network will be occupied.Thus,the latency of all flows will tend to be the same.As the low-latency flows cannot satisfy their pre-defined QoS,the performance will fluctuate around their pre-defined QoS.

Unreasonable pre-defined QoS.Intuitively,all congestion controls can not achieve the performance that breaks through the limitation of network conditions.When receiving an unreasonable pre-defined QoS,Crimson failed to satisfy the pre-defined QoS certainly.According to Algorithm 1,∆εcannot keep zero,so the sending rate acquired from Crimson will fluctuate near the largest bandwidth of the network environment and fail to converge.To solve the problem,Crimson needs to estimate the feasibility of the predefined QoS and designs a scheme to guarantee convergence when receiving an unreasonable pre-defined QoS.We leave it as our future work.

Multi-dimensional QoS indicators.To ensure QoS indicators,we have selected three key parameters including throughput,delay and loss rate.In addition,we can also add more parameters to the pre-defined QoS,such as jitter and reliability.In Crimson,only a simple extension of the gradient descent algorithm can satisfy the requirements of multi-dimensional QoS indicators.

Fairness problem.Fairness is an important aspect of the congestion control algorithm.Although the proposed algorithm improves the QoS indicators,it should also demonstrate multi-metric fairness in the real world.We leave it in our future work.

VII.CONCLUSION

We proposed Crimson,a congestion control based on an online learning algorithm.Crimson leverages a QoS-based gradient descent algorithm to learn rate gradient according to the gap between the current measurement and pre-defined QoS.Then it can control the sending rate based on the rate gradient to meet applications’ QoS requirements.Extensive experimentation reveals that Crimson efficiently balances different trade-offs between the throughput,delay and loss rate and achieves consistent performance over a wide range of QoS requirements and network scenarios.

ACKNOWLEDGEMENT

This work is supported by the National Natural Science Foundation of China under Grant 62132009 and 61872211.

- China Communications的其它文章

- Quality-Aware Massive Content Delivery in Digital Twin-Enabled Edge Networks

- Edge-Coordinated Energy-Efficient Video Analytics for Digital Twin in 6G

- Unpredictability of Digital Twin for Connected Vehicles

- Endogenous Security-Aware Resource Management for Digital Twin and 6G Edge Intelligence Integrated Smart Park

- Digital Twin-Assisted Knowledge Distillation Framework for Heterogeneous Federated Learning

- Design and Implementation of Secure and Reliable Information Interaction Architecture for Digital Twins