Visual inspection of aircraft skin: Automated pixel-level defect detection by instance segmentation

Meng DING a, Boer WU a, Jun XU a,b, Abdul Nsser KASULE a, Hongfu ZUO a,b

a College of Civil Aviation, Nanjing University of Aeronautics and Astronautics, Nanjing 210016, China

b Key Laboratory of Civil Aircraft Health Monitoring and Intelligent Maintenance of CAAC, Nanjing 210016, China

KEYWORDS Aircraft skin; Automatic non-destructive testing; Defect inspection; Instance segmentation; Machine vision

Abstract Skin defect inspection is one of the most significant tasks in the conventional process of aircraft inspection. This paper proposes a vision-based method of pixel-level defect detection, which is based on the Mask Scoring R-CNN. First, an attention mechanism and a feature fusion module are introduced to improve feature representation. Second, a new classifier head, consisting of four convolutional layers and a fully connected layer, is proposed to reduce the influence of information around the area of the defect. Third, to evaluate the proposed method, a dataset of aircraft skin defects was constructed, containing 276 images with a resolution of 960 × 720 pixels. Experimental results show that the proposed classifier head improves the detection and segmentation accuracy for aircraft skin defect inspection more effectively than the attention mechanism and feature fusion module. Compared with the Mask R-CNN and Mask Scoring R-CNN, the proposed method increased the segmentation precision by approximately 21% and 19.59%, respectively. These results demonstrate that the proposed method performs favorably against the other two methods of pixel-level aircraft skin defect detection.

1. Introduction

According to the civil aviation industry standard of the People’s Republic of China, MH/T 3019-2009 [1], and the related airworthiness standards of civil aviation authorities from other countries [2], skin inspection of civil aircraft by visual testing is a highly significant step for ensuring the structural integrity and airworthiness of aircraft. Aircraft skin inspection can detect surface defects, such as paint detachment, cracks, and corrosion, to prevent the occurrence of potential risks. Even today, visual inspection by airport ground crew is still the most widely used approach for aircraft skin inspection. Nevertheless, human visual inspection has potential problems and deficiencies. First, because the visual inspection of a civil aircraft usually includes 21 position points from the nose to the tail of the aircraft and more than 100 inspection items [3], human visual inspection is time-consuming and inefficient. Second, the results of visual inspection are easily affected by subjective factors of the inspector; for example, fine cracks may not be detected because of inspector fatigue or boredom. Third, several objective factors, such as bad weather or excessively high or low temperature, also reduce the efficiency and accuracy of human visual inspection. Finally, skin inspection of several specific regions at high locations of the aircraft may risk the safety of the inspectors. For these reasons, this paper proposes an automated defect inspection method for aircraft skin, to replace manual inspection.

In the era of Industry 4.0, automatic nondestructive testing using computer vision plays an important role in smart manufacturing, enabling real-time evaluation and monitoring of quality [4]. Automated surface inspection is an application of computer vision to nondestructive testing: it uses computer vision equipment to determine whether defects are present in collected images. Providers of civil aviation operation and maintenance services exploit computer-vision-based systems for automated aircraft skin inspection, to increase the efficiency and safety of the inspection and maintenance processes [5]. Generally, the core technology of an aircraft skin inspection system includes two main components: the hardware platform and the software algorithm. The inspection system requires a hardware platform that has good flexibility and mobility. Fixed-morphology climbing robots and drones are currently used for aircraft skin inspection and can capture clear images of the aircraft skin [6]. Therefore, software algorithms have become the key to upgrading the performance of aircraft skin inspection systems. Following this principle, the work described in this paper aimed to develop an inspection algorithm to detect pixel-level aircraft skin defects.

In the last decade, computer-vision-based skin inspection has been applied in various industrial fields, such as aircraft manufacturing and maintenance [7,8], inspection of products’ surfaces [9,10], and smart agriculture [11]. In general, the existing methods of computer-vision-based skin or surface inspection can be roughly grouped into two categories: those based on traditional methods and those based on deep learning. The former usually use threshold segmentation, based on edges, brightness, and color information, to detect defects. Although the performance of these methods is excellent in controlled background environments and specific brightness conditions, their generalization ability cannot satisfy the requirements of complex real-world scenarios because of the presence of background noise. For example, the segmentation threshold has to be varied for different defects, and it is difficult to design an adaptive segmentation method that is robust to changes in the background environment. In recent years, deep-learning-based methods of defect detection have been attracting increasing attention from both the academic and industrial worlds. These methods are further divided roughly into two categories according to their outputs: classification-based methods, which output the bounding box of the defect, and segmentation-based methods, which output the classification result of each pixel of the defect. Generally, the appearance of an aircraft skin defect is irregular. Therefore, it is not appropriate to label skin defects by using the rectangular bounding boxes obtained by classification-based methods. Because of the variable shape and varying scale of the damage areas, instance segmentation is usually used in automated surface inspection to accurately identify and locate each defect in an image.

Instance segmentation is a subcategory of image segmentation, which can be formulated as the problem of classifying pixels while partitioning individual objects; it performs object detection and semantic segmentation simultaneously. One of the most representative and successful algorithms for instance segmentation is Mask R-CNN, which has excellent generalization ability and is simple to train because it extends the Faster R-CNN detector by adding a mask predictor [12]. Many automated surface inspection approaches that directly use the framework of Mask R-CNN have already shown promising results in various application areas, such as road damage detection [13], manufacturing defect detection [14], fastener damage detection [15], and leather defect segmentation [16].

To provide more reliable and accurate information for the tasks of aircraft skin defect assessment that follow detection, this study is aimed at developing a defect detection method with state-of-the-art performance in the framework of Mask Scoring R-CNN [17], which is an improved version of Mask R-CNN. To the best of our knowledge, this study is the first attempt to improve the framework of Mask Scoring R-CNN to address the problem of aircraft skin defect detection. The proposed method can effectively and automatically detect damaged areas ranging from 40 pixels to 90000 pixels in area. Therefore, it can tackle the existing problems of human visual inspection.

The remainder of this paper is organized as follows. Section 2 discusses the related work. Section 3 presents the details of the proposed method. Section 4 describes and analyzes the experimental results and compares the proposed algorithm with state-of-the-art algorithms. The conclusions are drawn in Section 5.

2. Related works

2.1. Vision-based surface defect inspection

Surface defect inspection is an important research topic with broad applications in the field of computer vision. In real and complex industrial environments, surface defect detection often faces many challenges, such as the large variation in defect scale, the diversity of defect types, and noise interference. It is difficult for traditional methods of defect detection to solve these problems. In recent years, because of the successful application of deep-learning-based methods, represented by the Convolutional Neural Network (CNN), in various fields of computer vision, many methods of defect detection based on deep learning have become widely used in various industrial scenarios. Deep-learning-based methods have become the mainstream of visual defect inspection.

Deitsch et al. used the VGG19 neural network to identify defective photovoltaic module cells in electroluminescence images with a resolution of 300 × 300 pixels [18]. Liang et al. classified code defects of plastic containers in complex backgrounds by using the ShuffleNet network [19]. Cha et al. used a sliding window and a CNN-based classification network to perform the location and recognition of surface defects [20]. Although this method can perform defect location, its speed is slow and its location error is large. Tao et al. proposed a CNN cascading architecture for performing localization and detecting defects in insulators [21]. Zhang et al. used the YOLO detector to locate defects on bridge floors [22]. Although this target detection algorithm can locate a defect, it cannot segment the defect to obtain its shape and size. Generally, the main purpose of vision-based defect inspection is to perform the recognition, location, and size estimation of defects. Therefore, image segmentation methods have been increasingly used for defect detection. For example, Zou et al. proposed a semantic segmentation model for automatic crack detection [23]. However, such methods cannot classify different defects. In contrast to semantic segmentation, instance segmentation algorithms can detect, segment, identify, and locate defects simultaneously. Therefore, this study is aimed at developing a method of defect detection by using instance segmentation.

2.2. Instance segmentation for defect inspection

Instance segmentation can be considered to be a combination of semantic segmentation and object detection. It first detects the different targets in the image (object detection) and then labels each pixel with the category to which it belongs (semantic segmentation) [24]. Generally, semantic segmentation does not distinguish between different instances that belong to the same category, whereas instance segmentation does. Instance segmentation methods fall into two main categories: one-stage approaches, represented by SOLO [25], and two-stage approaches, represented by Mask R-CNN. Both types of algorithms have their advantages. Generally, two-stage instance segmentation has high accuracy but is slightly inferior to the one-stage algorithm in terms of computational speed. Considering the high requirements for segmentation accuracy in aircraft skin damage detection, the two-stage method is chosen as the basic framework in this paper. Mask R-CNN is recognized as the first successful two-stage instance segmentation method [26]. It is an improved version of Faster R-CNN that accomplishes the task of pixel-level object recognition by adding an object mask prediction branch in parallel. One of the advantages of Mask R-CNN is its ease of generalization to other related tasks. Therefore, it is widely used for defect detection tasks in various application areas. One of the latest improvements of Mask R-CNN is Mask Scoring R-CNN, which predicts the Intersection over Union (IoU) between the predicted mask and the ground-truth mask by introducing the Mask-IoU head into the Mask R-CNN framework [17]. Generally, this method outperforms Mask R-CNN because it prioritizes better predictions of masks. Consequently, this paper proposes a high-performance pixel-level method of aircraft skin defect detection by improving the framework of Mask Scoring R-CNN.

Instance segmentation can separate the defect regions and count the number of defects. Recently, instance segmentation has been widely used in surface inspection. Xi et al. presented a method of gear pitting detection based on Mask R-CNN [27]. Compared with applications in other branches of industry, few studies in the literature have focused on aircraft skin inspection [28]. Siegel and Gunatilake designed a method, combining wavelet-based image enhancement with a neural network, to classify the cracks in, and corrosion of, aircraft skin [29]. Alberts and Siegel improved the neural network of this algorithm to distinguish between healthy and cracked regions [30]. To differentiate between cracks and scratches on the aircraft skin, Mumtaz et al. proposed a method that combines discrete cosine transform, contourlet transform, and a dot product classifier [31]. Moreover, several algorithms for aircraft skin inspection using image processing approaches, such as edge detection and Hough transform, have been proposed and tested on specific areas of aircraft, such as the oxygen bay, radome latch, air inlet vent, engine, and pitot probe [6]. In summary, to the best of our knowledge, there is no published work on methods of aircraft skin defect detection using instance segmentation. This paper proposes an approach that uses an improved Mask Scoring R-CNN framework to perform pixel-level defect detection of the aircraft skin.

Compared with the existing research, the main contributions of this paper are as follows:

(1) To learn more significant features for defect detection, an attention mechanism and a feature fusion module are added in series to the framework of the Mask Scoring R-CNN. The feature maps output by the backbone network are sequentially input to the two added modules to enhance the ability to distinguish the defect, ignore redundant invalid information, and fuse features with different scales.

(2) A new classifier head, which contains four convolutional layers and a fully connected layer, is proposed. Experimental results show that the proposed classifier head plays a more important role than the attention mechanism and the feature fusion module in improving the accuracy of defect detection.

(3) To analyze and assess the performance of the proposed method of defect detection, 276 images were collected to construct a dataset of aircraft skin defects. To the best of our knowledge, this is the first public dataset specifically for aircraft skin defect detection.

(4) Based on the first and second contributions, in the framework of Mask Scoring R-CNN, a method of aircraft skin defect detection is proposed. The results of comparative experiments with state-of-the-art methods of instance segmentation validate the effectiveness of the proposed method.

3. Methodology

3.1. Framework of proposed method

As shown in Fig. 1, the proposed framework consists of three parts. The purpose of the first part is to generate feature maps. In this study, because of the limited size of the dataset used, the feature maps were directly extracted by a pre-trained ResNet-50 [32] neural network. The second part is a Region Proposal Network (RPN), which is used to generate proposals that describe areas that may contain a target, which are called Regions of Interest (ROIs). The third part contains three branches. The first branch is the classifier head that is used to regress the bounding box coordinates and classify the associated classes. In the second branch, to segment the object at the pixel level, the mask head is used for the binary mask computation. The final branch is the Mask-IoU head, which is used for mask scoring evaluation. The basic framework of the proposed method is inherited from Mask Scoring R-CNN, which is an improved version of Mask R-CNN with an additional branch (called the Mask-IoU head) for learning the Mask-IoU-aligned mask score. This additional head takes, as input, the concatenation of the feature map from the ROIAlign operation and the predicted mask, and consists of four convolutional layers and three fully connected layers. During the training process, RPN proposals with an IoU greater than 0.5 are used as training samples, and the L2 loss function $J_{maskIoU}$ is used for regressing the Mask-IoU.
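The Mask-IoU regression described above can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the function names and the 0.5 binarization threshold for the predicted mask are assumptions made for illustration, and the network that produces `predicted_iou` is left out.

```python
import numpy as np

def mask_iou(pred_mask, gt_mask):
    """IoU between two boolean masks of the same shape."""
    inter = np.logical_and(pred_mask, gt_mask).sum()
    union = np.logical_or(pred_mask, gt_mask).sum()
    return float(inter) / float(union) if union > 0 else 0.0

def mask_iou_l2_loss(predicted_iou, pred_prob, gt_mask, binarize_at=0.5):
    """Squared error between the head's IoU estimate and the true mask IoU.

    binarize_at is an assumed threshold for turning mask probabilities
    into a binary mask; the text does not specify it.
    """
    target = mask_iou(pred_prob >= binarize_at, gt_mask.astype(bool))
    return (predicted_iou - target) ** 2, target

# Toy example: 4 ground-truth pixels, 6 predicted pixels, 4 of them overlapping.
gt = np.zeros((4, 4), dtype=bool)
gt[1:3, 1:3] = True
prob = np.zeros((4, 4))
prob[1:3, 1:4] = 0.9
loss, target = mask_iou_l2_loss(0.5, prob, gt)
```

In this toy case the regression target is 4/6, so a head that outputs 0.5 is penalized by the squared difference between the two values.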

This paper presents an instance segmentation method of aircraft skin defect detection in the Mask Scoring R-CNN framework. Because of the irregularities of skin defects and to enhance the ability to represent defect features, feature map generation is improved by the addition of an attention mechanism and a feature fusion module. To reduce the influence of the information surrounding proposals, and to improve the positioning accuracy of the defect area and the recognition accuracy of the defect category, a new classifier head is proposed, which contains four convolutional layers and a fully connected layer.

The proposed method as a whole is used for multitask learning, and its loss function can be written as.
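As a sketch only, assuming an unweighted sum over the outputs of the three heads, a multitask loss of this kind would take the following form. Only $J_{maskIoU}$ is named in the text; $J_{cls}$, $J_{box}$, and $J_{mask}$ are illustrative names for the classification, bounding box regression, and mask losses, and any weighting coefficients are unknown.

```latex
J = J_{cls} + J_{box} + J_{mask} + J_{maskIoU}
```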

3.2. Attention mechanism

It is well known that adding an attention mechanism to feature representation helps a network both focus on important features and suppress unnecessary ones. This study used the Convolutional Block Attention Module (CBAM) [33] to increase the representation power of each feature map output by the backbone network. As shown in Fig. 2, CBAM consists of two submodules: the channel attention submodule and the spatial attention submodule.

The channel attention submodule is used to exploit the inter-channel relationship of features. Feature maps with strong discrimination are screened out by the channel attention submodule. Specifically, supposing that the feature map $F \in \mathbb{R}^{m \times n \times C}$ is the output of a ResNet block of the backbone network, a global maximum pooling operation and a global average pooling operation are applied to the feature map of each channel $F_i \in \mathbb{R}^{m \times n}$, $i = 1, 2, \ldots, C$, to generate two feature vectors: the max-pooled feature $M_C = [M_{C1}, M_{C2}, \ldots, M_{CC}]$ and the average-pooled feature $A_C = [A_{C1}, A_{C2}, \ldots, A_{CC}]$, respectively:

$$M_{Ci} = \max(F_i), \qquad A_{Ci} = \mathrm{average}(F_i), \qquad i = 1, 2, \ldots, C$$

where max() denotes the largest element of the matrix and average() denotes the average of all elements of the matrix. The two vectors $M_C$ and $A_C$ are then sent to a multilayer perceptron, which consists of a $C$-dimensional input layer, an $R$-dimensional hidden layer (where $R = C/4$), and a $C$-dimensional output layer. The two new feature vectors output by the multilayer perceptron, $M'_C$ and $A'_C$, are merged using elementwise summation and then normalized by the sigmoid function to obtain the channel attention weight vector $W_C \in \mathbb{R}^{C}$. Each element of the channel attention weight vector is multiplied elementwise by the feature map of the corresponding channel to obtain a new feature representation $F' \in \mathbb{R}^{m \times n \times C}$.
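The channel attention steps above can be traced in a short NumPy sketch. It is illustrative rather than the authors' code: biases are omitted, the ReLU in the hidden layer is an assumption, and the weights `W1` and `W2` are random stand-ins for learned parameters.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def channel_attention(F, W1, W2):
    """F: (m, n, C) feature map; W1: (C, R) and W2: (R, C) shared MLP weights."""
    M_C = F.max(axis=(0, 1))     # global max pooling per channel, shape (C,)
    A_C = F.mean(axis=(0, 1))    # global average pooling per channel, shape (C,)

    def mlp(v):
        # C -> R -> C perceptron; the ReLU hidden activation is assumed.
        return np.maximum(v @ W1, 0.0) @ W2

    W_C = sigmoid(mlp(M_C) + mlp(A_C))   # channel weights in (0, 1)
    return F * W_C, W_C                  # weights broadcast over m and n

rng = np.random.default_rng(0)
m, n, C = 8, 8, 16
R = C // 4                               # hidden size R = C/4, as in the text
F = rng.standard_normal((m, n, C))
W1 = rng.standard_normal((C, R)) * 0.1
W2 = rng.standard_normal((R, C)) * 0.1
F_prime, W_C = channel_attention(F, W1, W2)
```

The same perceptron is applied to both pooled vectors, so the two branches share parameters and differ only in the pooling statistic.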

The spatial attention submodule focuses on the location of the informative part of the image. Its input is $F'$. First, maximum pooling and average pooling operations are used to compute the maximum and average values, respectively, along the channel axis and obtain two matrices: $M_S \in \mathbb{R}^{m \times n}$ and $A_S \in \mathbb{R}^{m \times n}$. Second, these two matrices are concatenated to generate a feature tensor $T_S \in \mathbb{R}^{m \times n \times 2}$. Third, a network consisting of a convolution layer with a 7 × 7 kernel and a sigmoid function is used to generate the final spatial attention weight matrix $W_S \in \mathbb{R}^{m \times n}$. Taking the Hadamard product of each channel $F'^{(i)}$ of $F'$ with $W_S$ yields the output feature map $F'' \in \mathbb{R}^{m \times n \times C}$, which contains more key information in both the spatial and channel domains.
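A matching NumPy sketch of the spatial attention submodule follows. It assumes zero padding so that the 7 × 7 convolution preserves the m × n size, omits the bias, and uses a random kernel in place of learned weights; these choices are illustrative assumptions.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def spatial_attention(F_prime, kernel):
    """F_prime: (m, n, C); kernel: (7, 7, 2) weights of the single conv layer."""
    M_S = F_prime.max(axis=2)              # max over channels, shape (m, n)
    A_S = F_prime.mean(axis=2)             # mean over channels, shape (m, n)
    T_S = np.stack([M_S, A_S], axis=2)     # concatenated tensor, shape (m, n, 2)

    k = kernel.shape[0]
    pad = k // 2
    # Zero padding (assumed) keeps the output at m x n.
    T_pad = np.pad(T_S, ((pad, pad), (pad, pad), (0, 0)))
    m, n = M_S.shape
    logits = np.empty((m, n))
    for i in range(m):
        for j in range(n):
            logits[i, j] = np.sum(T_pad[i:i + k, j:j + k, :] * kernel)

    W_S = sigmoid(logits)                  # spatial weights in (0, 1)
    return F_prime * W_S[:, :, None], W_S  # same weight map for every channel

rng = np.random.default_rng(0)
F_prime = rng.standard_normal((6, 5, 4))
kernel = rng.standard_normal((7, 7, 2)) * 0.1
F_out, W_S = spatial_attention(F_prime, kernel)
```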

3.3. Feature map fusion

In general, images of aircraft skin defects have two typical characteristics. The first is the large variation in the scale of the defect area, and the second is the irregularity of the edges of the defect area. To better detect and segment defects of different scales and shapes, this study adopted a feature map fusion strategy. It is well known that feature maps from the shallow convolutional layers of ResNet include detailed information that is necessary for defect location, and feature maps from the deep convolutional layers include more semantic information that facilitates defect classification. However, the repeated downsampling in the deeper layers causes detailed information, such as very small defects and irregular defect edges, to be lost in high-level feature maps. By combining detailed and semantic information, feature map fusion can enhance the feature representation capability of the network.

3.4. Classifier head

In the framework of Mask Scoring R-CNN, the classifier head, which provides the category of each target and the location of the corresponding bounding box, plays an important role. Specifically, the size of the bounding box is the most important factor in determining the layer whose feature map is used to represent the target feature, and it also affects the accuracy of the defect area segmentation; the predicted object category is used to select the channel of the final target mask. The original classifier head of Mask Scoring R-CNN consists of two fully connected layers in series; these are followed by two parallel fully connected layers, of which one is for object classification and the other is for bounding box regression.

Generally, Fully Connected (FC) layers are more spatially sensitive than convolutional layers and are suitable for determining the class of objects, while convolutional layers are more suitable for localization regression tasks [35]. When the FC layer is connected to the convolutional layer, the information of the convolutional layer first needs to be flattened into a vector in a certain order before being used as the input of the FC layer. Therefore, the FC layer can integrate the local information with category discrimination in the convolutional layer, and is suitable for object classification.

Along this line of thought, our study designed a new network structure for the classifier head to improve its performance. Concretely, four convolutional layers in series are used to re-encode the feature map corresponding to the bounding box. The sizes of the outputs of the convolutional layers are the same. The re-encoded feature map is then mapped to a vector through an FC layer. Finally, two parallel fully connected layers are used: one for object classification and the other for bounding box regression. The details of this new classifier head are shown in Fig. 4. Each convolutional layer is followed by a Rectified Linear Unit (ReLU) activation function.
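The data flow through such a head can be sketched in NumPy as follows. This is a shape-level illustration only: the 3 × 3 kernels, the channel and vector sizes, the background class, and the per-class box outputs are hypothetical choices that the text does not specify, and biases are omitted.

```python
import numpy as np

def conv3x3_relu(x, w):
    """x: (H, W, Cin); w: (3, 3, Cin, Cout). Zero padding keeps H x W unchanged."""
    H, W, _ = x.shape
    xp = np.pad(x, ((1, 1), (1, 1), (0, 0)))
    out = np.zeros((H, W, w.shape[3]))
    for di in range(3):
        for dj in range(3):
            # (H, W, Cin) @ (Cin, Cout) -> (H, W, Cout)
            out += xp[di:di + H, dj:dj + W, :] @ w[di, dj]
    return np.maximum(out, 0.0)            # ReLU after each convolution

def classifier_head(roi_feat, conv_ws, w_fc, w_cls, w_box):
    x = roi_feat
    for w in conv_ws:                      # four convolutions in series
        x = conv3x3_relu(x, w)
    v = x.reshape(-1) @ w_fc               # flatten, then one FC layer
    return v @ w_cls, v @ w_box            # two parallel FC outputs

rng = np.random.default_rng(0)
H, W, C, D, num_classes = 7, 7, 8, 32, 2   # hypothetical sizes; 2 defect types
conv_ws = [rng.standard_normal((3, 3, C, C)) * 0.1 for _ in range(4)]
w_fc = rng.standard_normal((H * W * C, D)) * 0.1
w_cls = rng.standard_normal((D, num_classes + 1)) * 0.1   # +1 background (assumed)
w_box = rng.standard_normal((D, 4 * num_classes)) * 0.1   # 4 offsets per class (assumed)
roi = rng.standard_normal((H, W, C))
cls_logits, box_deltas = classifier_head(roi, conv_ws, w_fc, w_cls, w_box)
```

Because every convolution preserves the spatial size, the ROI feature keeps its layout until the single flattening step before the FC layer.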

4. Experimental results

4.1. Dataset

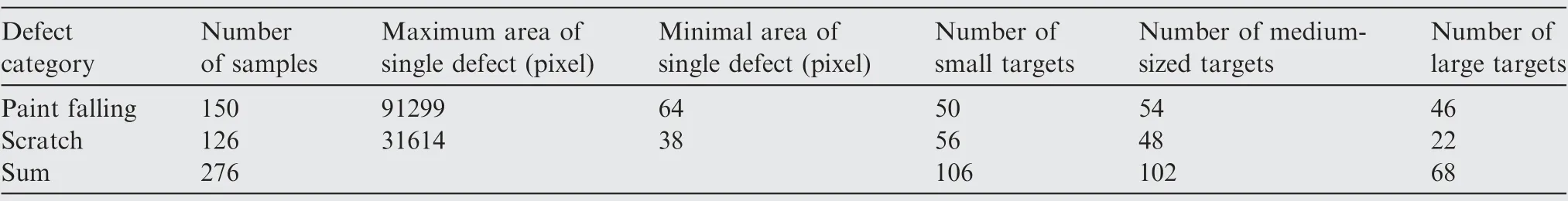

For the vast majority of machine-learning-based algorithms, datasets that offer training and test samples are the foundation of related research work. Nevertheless, until now, there has been no public dataset that could be used to evaluate aircraft skin defect detection methods. Therefore, it was necessary to construct a special dataset for this purpose. The constructed dataset contains 276 images of aircraft skin defects, with a resolution of 960 × 720 pixels, taken on the fuselage, wings, and tail of A320 and B737 aircraft under maintenance. These images, as shown in Fig. 5, were captured manually or by drones and can be divided into two categories according to defect type: there are 150 images of surface paint detachment and 126 images of surface scratches. Details of the dataset are shown in Table 1. Each set of images in the dataset was divided into a training set and a test set with a ratio of 4:1. During the training process, data augmentation techniques, including random flipping and random rotation, were used to prevent overfitting. To facilitate comparison with the Mask R-CNN and Mask Scoring R-CNN, the Labelme software was used to label all samples of the dataset, pixel by pixel, according to the format of the COCO dataset [36].
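For pixel-level labels, flipping and rotation must be applied identically to an image and its mask. The sketch below illustrates this with NumPy; restricting the rotation to multiples of 90 degrees and using a 0.5 flip probability are assumptions, since the text does not state the augmentation parameters.

```python
import numpy as np

def augment(image, mask, rng):
    """Apply the same random flip and rotation to an image and its label mask."""
    if rng.random() < 0.5:                      # random horizontal flip
        image, mask = image[:, ::-1], mask[:, ::-1]
    k = int(rng.integers(0, 4))                 # rotate by k * 90 degrees (assumed)
    return np.rot90(image, k, axes=(0, 1)), np.rot90(mask, k, axes=(0, 1))

rng = np.random.default_rng(0)
image = rng.random((720, 960, 3))               # one 960 x 720 RGB image
mask = np.zeros((720, 960), dtype=bool)
mask[100:200, 300:500] = True                   # toy defect region
aug_image, aug_mask = augment(image, mask, rng)
```

Both transforms only rearrange pixels, so the labeled defect area is unchanged after augmentation.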

4.2. Experimental details

Table 1 Details of dataset used in our experiments.

The proposed method was evaluated according to the Mask Scoring R-CNN benchmark. It was assessed by using the Average Precision (AP) as the evaluation metric. Precision (P) is the proportion of prediction results that are correct, and can be defined as

$$P = \frac{TP}{TP + FP}$$

where True Positives (TP) is the number of predictions that are correctly classified as positive and False Positives (FP) is the number of predictions that are incorrectly classified as positive. A prediction is counted as correct when its IoU with the ground truth is greater than a threshold. The IoU between a predicted region and the ground truth is defined as

$$IoU = \frac{|A \cap B|}{|A \cup B|}$$

where $A$ is the predicted region and $B$ is the ground-truth region.
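For axis-aligned bounding boxes, the IoU is the intersection area divided by the union area, and the precision at a given threshold follows from counting matches. The sketch below is a simplified illustration: it assumes each prediction has already been paired with one ground-truth box, which skips the matching step of a full evaluation, and the helper names are hypothetical.

```python
def box_iou(a, b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def precision(pairs, threshold=0.5):
    """pairs: list of (predicted_box, matched_ground_truth_box)."""
    tp = sum(1 for p, g in pairs if box_iou(p, g) > threshold)
    fp = len(pairs) - tp
    return tp / (tp + fp) if pairs else 0.0

pairs = [
    ((0, 0, 10, 10), (0, 0, 10, 10)),   # identical boxes
    ((0, 0, 10, 10), (5, 0, 15, 10)),   # half overlap: 50 / 150
    ((0, 0, 10, 10), (2, 0, 12, 10)),   # 80 / 120
]
p_at_50 = precision(pairs, threshold=0.5)
```

With a threshold of 0.5, the first and third pairs count as true positives and the second as a false positive.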

AP is averaged over multiple IoU thresholds: the threshold used to decide whether a sample is considered a positive match varies from 0.5 to 0.95 with a step size of 0.05. $AP^{T}_{M}$ and $AP^{T}_{B}$ denote the average precision obtained by using the IoU threshold $T$ to decide whether a predicted Mask (M) or bounding Box (B), respectively, is positive. Therefore, $AP^{T}_{M}$ denotes the accuracy of pixel-level segmentation and $AP^{T}_{B}$ denotes the accuracy of detection, taking the bounding box as output. Moreover, the three values $AP^{S}$, $AP^{M}$, and $AP^{L}$ assess the prediction precision of targets with different scales. $AP^{S}$ is the prediction precision of a small target, whose area is less than $32^2$ pixels. $AP^{M}$ is the prediction precision of a medium-sized target, whose area is between $32^2$ and $96^2$ pixels. $AP^{L}$ is the prediction precision of a large target, whose area is greater than $96^2$ pixels.
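The threshold sweep and the scale categories can be written down in a few lines of Python. This is a sketch with hypothetical helper names; it only encodes the thresholds and area limits stated above and does not compute AP itself.

```python
# IoU thresholds from 0.50 to 0.95 in steps of 0.05 (10 values).
IOU_THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]

def size_bucket(area_px):
    """Scale category of a target from its area in pixels."""
    if area_px < 32 ** 2:
        return "small"
    if area_px <= 96 ** 2:
        return "medium"
    return "large"

def average_over_thresholds(ap_at_threshold):
    """Mean of per-threshold AP values; the argument maps IoU threshold -> AP."""
    return sum(ap_at_threshold[t] for t in IOU_THRESHOLDS) / len(IOU_THRESHOLDS)
```

For instance, a 40-pixel defect falls into the small category, while a 90000-pixel defect falls into the large one.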

The hardware used for network training was a GPU server equipped with an Intel(R) Xeon(R) E5-1620 v4 CPU @ 3.50 GHz with 64 GB of RAM and an NVIDIA GTX 1060 with 6 GB of RAM. The proposed method was implemented in Python 3.6.10. The parameter settings used in the training procedure for the experiments are summarized as follows. The Adam optimizer was used to update the network weights, the initial learning rate was set to 0.001, and the cosine annealing strategy was used for the learning rate update. The backbone network in the experiment was ResNet-50, whose weights had been pre-trained on ImageNet.
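A cosine learning-rate schedule of the kind mentioned above can be sketched as follows. The initial rate of 0.001 comes from the text; the minimum rate of 0 and the absence of restarts or warm-up are assumptions, and `total_steps` is a hypothetical parameter.

```python
import math

def cosine_lr(step, total_steps, lr0=0.001, lr_min=0.0):
    """Learning rate after `step` of `total_steps` updates, decayed along a half cosine."""
    progress = min(step, total_steps) / total_steps
    return lr_min + 0.5 * (lr0 - lr_min) * (1.0 + math.cos(math.pi * progress))

schedule = [cosine_lr(s, 100) for s in range(101)]
```

The rate starts at the initial value, passes through half of it at the midpoint, and reaches the minimum at the final step.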

4.3. Ablation study

The proposed method is based on the Mask Scoring R-CNN, with the addition of the attention mechanism and feature fusion module and the newly designed classifier head. Therefore, the original Mask Scoring R-CNN was used as the baseline in this experiment, to assess the contribution of each added or improved module to the performance of the method.

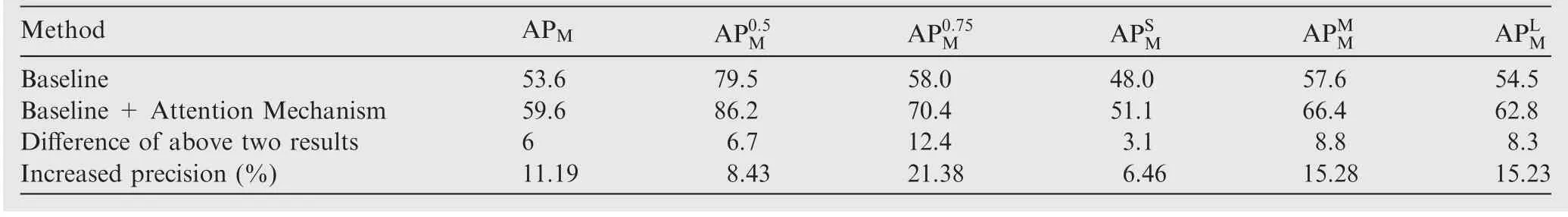

Table 3 Comparison of segmentation performance before and after attention mechanism was added.

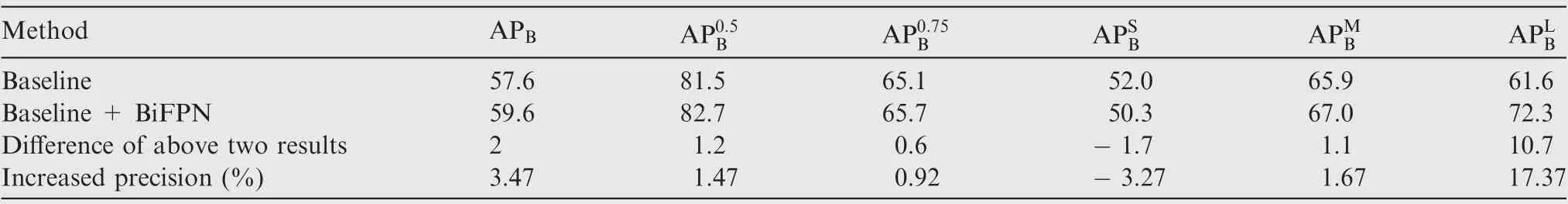

Table 4 Comparison of detection performance before and after feature fusion module was added.

(1) Attention mechanism: Tables 2 and 3 show that the defect detection performance and segmentation performance improved overall after the attention mechanism was added. Specifically, $AP_B$ and $AP_M$ increased by 4.7 and 6, respectively. As shown in Table 3, for the segmentation results represented by the masks, all six values of AP increased after the attention mechanism was added. However, the rightmost column of Table 2 shows that $AP^{L}_{B}$ decreased by 7.8 (corresponding to 12.66%) for the detection result represented by the bounding box. The reason for this result is that, because of the irregularity of the defect area, the bounding box contains a large amount of background information, which reduces its positioning accuracy.

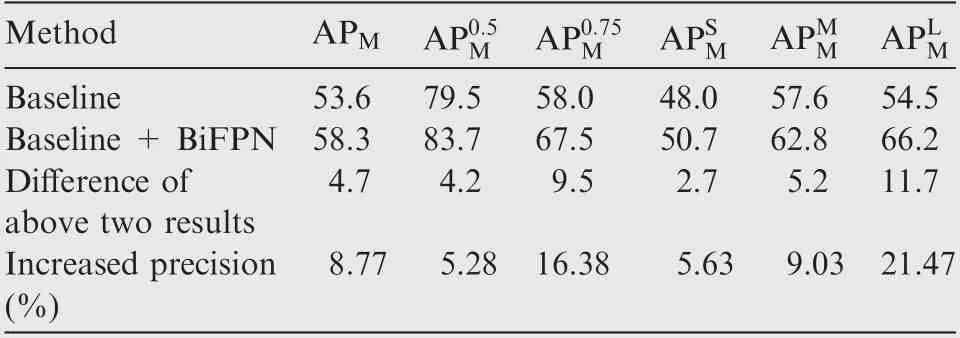

(2) Feature fusion module: Tables 4 and 5 show the following results. First, although introducing the feature fusion module to the framework of Mask Scoring R-CNN improved the defect detection and segmentation performance, this performance improvement is less obvious than that caused by the introduction of the attention mechanism. Second, the feature fusion module increased $AP^{L}_{B}$ and $AP^{L}_{M}$ (representing the detection and segmentation ability for large targets) by 10.7 and 11.7, respectively. In contrast, the feature fusion module reduced $AP^{S}_{B}$ (representing the detection ability for small targets) by 1.7. The changes in $AP^{L}_{B}$ (in Table 2) and $AP^{S}_{B}$ (in Table 4) indicate that the feature fusion module and the attention mechanism are complementary to each other with respect to the detection performance for large targets and small targets.

Table 5 Comparison of segmentation performance before and after feature fusion module was added.

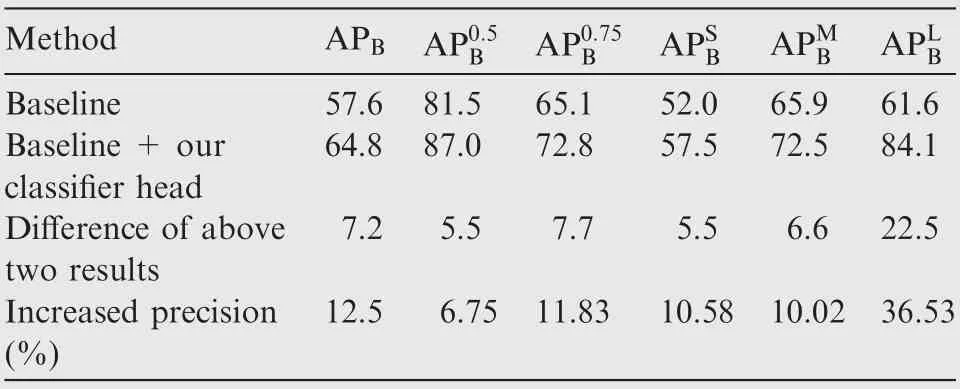

Table 6 Comparison of detection performance before and after improved classifier head was added.

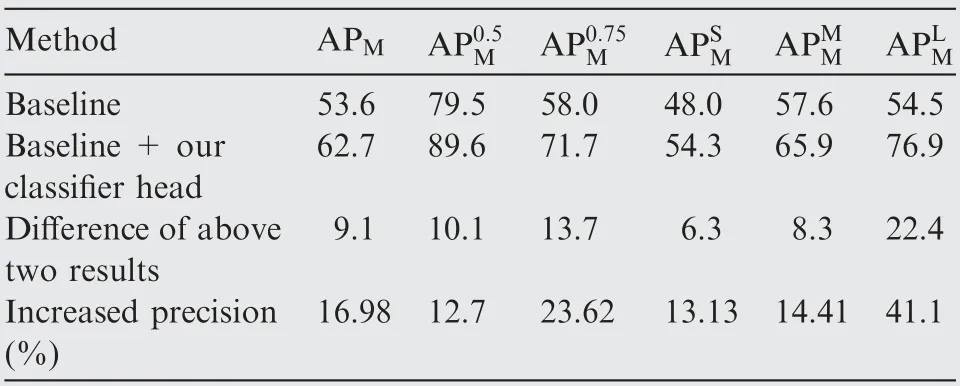

Table 7 Comparison of segmentation performance before and after improved classifier head was added.

(3) Improved classifier head: The proposed method has a new classifier head that uses four convolutional layers instead of two fully connected layers. The results in Tables 6 and 7 show that this classifier head significantly improved the accuracy of surface defect detection and segmentation. By comparing Table 6 with Table 7, it can be observed that the improved classifier head increased $AP_B$ and $AP_M$ by 12.5% and 16.98%, respectively; these increases are greater than those caused by adding the attention mechanism (8.16% and 11.19%) or the feature fusion module (3.47% and 8.77%). Therefore, it can be concluded that the improved classifier head plays the most important role in improving the detection and segmentation accuracy.
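The absolute and relative figures quoted in the ablation study can be cross-checked with simple arithmetic, since a relative change is the absolute change divided by the baseline. The sketch below back-computes the baseline values implied by the attention mechanism results; these are inferences from the rounded numbers in the text, not values read from the tables, and the function name is hypothetical.

```python
def implied_baseline(absolute_change, relative_change_percent):
    """Baseline value implied by an absolute change and its relative size in percent."""
    return absolute_change / (relative_change_percent / 100.0)

# Attention mechanism: +4.7 (8.16%) for box AP and +6 (11.19%) for mask AP.
ap_b_base = implied_baseline(4.7, 8.16)
ap_m_base = implied_baseline(6.0, 11.19)
# Large-target box AP dropped by 7.8, stated as 12.66%.
ap_lb_base = implied_baseline(7.8, 12.66)
```

Under these figures, the implied baselines are roughly 57.6 for box AP and 53.6 for mask AP, so the 12.5% and 16.98% gains of the classifier head would correspond to absolute increases of about 7.2 and 9.1.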

4.4. Comparison with other methods

This section compares the proposed method with two representative instance segmentation methods: Mask R-CNN and Mask Scoring R-CNN. As described above, Mask Scoring R-CNN is an improved version of Mask R-CNN with an added Mask-IoU head, and the proposed method was developed from Mask Scoring R-CNN specifically for the task of aircraft skin defect detection. The following experiment consists of two parts: quantitative and qualitative comparisons.

(1) Quantitative comparison: The results of the quantitative comparison are shown in Fig. 6. It is evident that the proposed method achieved the best performance with respect to all six indexes. Compared with Mask R-CNN, the proposed method increased $AP_B$ and $AP_M$ by approximately 27.66% and 20.49%, respectively. With respect to $AP_B$ and $AP_M$, the experimental results of the proposed method exceeded those of Mask Scoring R-CNN by 21% and 19.59%, respectively. Moreover, by comparison of the six indexes denoting the ability to detect and segment small ($AP^{S}_{B}$, $AP^{S}_{M}$), medium-sized ($AP^{M}_{B}$, $AP^{M}_{M}$), and large ($AP^{L}_{B}$, $AP^{L}_{M}$) targets, it can be observed that the proposed method achieved the best overall performance of the three methods. The ability of the proposed method to detect multiscale targets is very important for the successful detection of aircraft skin defects.

(2) Qualitative comparison: As shown in Fig. 7, six representative images of aircraft skin defects, consisting of three paint detachment samples and three scratch samples, were randomly selected as test samples to compare the detection performance of the three methods. Fig. 7(a) shows the six test images, which contain paint falling and scratch defects. Generally, the shapes of the paint falling area and the scratch area are shown as stripes and blocks respectively. The second column, Fig. 7(b), shows the corresponding ground truth, labeled with Labelme, and the experimental results of Mask R-CNN, Mask Scoring R-CNN, and the proposed method are presented in Fig. 7(c)-Fig. 7(e), respectively. According to the experimental results on test images paint falling 1 and paint falling 2, it is apparent that the segmentation results of Mask R-CNN and Mask Scoring R-CNN are relatively coarse: the edges of the segmented defect area are overly smooth and do not reflect the edge details of the defect regions. In contrast, the results of the proposed method better reflect the defect contour information and better match the ground truth. The comparison results show that the detection accuracy of the proposed method is higher than that of the other two methods. From the results on test samples paint falling 3, scratch 1, and scratch 2, it can be observed that the proposed method performed a more detailed detection of the protruding part of the defect, the edges and corners of the defect are clearly visible, and the segmentation result is more consistent with the ground truth. The test image scratch 3 is strongly affected by light: the defect in the lower left corner (red rectangle in the last row of Fig. 7(a)) has almost the same hue as the background, and it is difficult to distinguish this defect even with the naked eye. Consequently, none of the three methods could fully detect all parts of the defect. However, only the proposed method detected the defect in the upper part of the image. This result demonstrates, to some extent, the robustness of the proposed method.

5. Conclusions

(1) This paper proposes a method of computer-vision-based pixel-level detection of aircraft skin defects. In the framework of Mask Scoring R-CNN, the design of the proposed method introduces a new classifier head, an attention mechanism, and a feature fusion module, to improve the accuracy of defect detection.

(2) The results of ablation experiments demonstrate that the proposed classifier head, which increased $AP_B$ and $AP_M$ by 12.5% and 16.98%, respectively, plays the most important role in improving the detection and segmentation accuracy, compared with the attention mechanism and feature fusion module.

(3) The results of quantitative and qualitative comparison experiments demonstrate that the proposed method significantly outperforms existing methods such as Mask R-CNN and the original Mask Scoring R-CNN.

Declaration of Competing Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Acknowledgements

This study was supported by the National Natural Science Foundation of China (Nos. U2033201 and U1633105).

CHINESE JOURNAL OF AERONAUTICS, 2022, Issue 10
