Generative Trapdoors for Public Key Cryptography Based on Automatic Entropy Optimization

Shuaishuai Zhu,Yiliang Han

1 College of Cryptography Engineering,Engineering University of People’s Armed Police,Xi’an 710086,China

2 Key Laboratory of Network and Information Security under the People’s Armed Police,Xi’an 710086,China

Abstract:Trapdoor is a key component of public key cryptography design which is the essential security foundation of modern cryptography.Normally,the traditional way in designing a trapdoor is to identify a computationally hard problem,such as the NPC problems.So the trapdoor in a public key encryption mechanism turns out to be a type of limited resource.In this paper,we generalize the methodology of adversarial learning model in artificial intelligence and introduce a novel way to conveniently obtain sub-optimal and computationally hard trapdoors based on the automatic information theoretic search technique.The basic routine is constructing a generative architecture to search and discover a probabilistic reversible generator which can correctly encoding and decoding any input messages.The architecture includes a trapdoor generator built on a variational autoencoder(VAE)responsible for searching the appropriate trapdoors satisfying a maximum of entropy,a random message generator yielding random noise,and a dynamic classifier taking the results of the two generator.The evaluation of our construction shows the architecture satisfying basic indistinguishability of outputs under chosen-plaintext attack model(CPA)and high efficiency in generating cheap trapdoors.

Keywords:generative model; public key encryption;indistinguishability model;security model;deep learning

I.INTRODUCTION

With the rising of artificial intelligence(AI)technologies,more and more online applications tend to be smart AI agents,and the working environment of information security science is definitely entering into the AI era.The AI chips and the largely improved bandwidth are new types of resources in AI networks,such as Internet of things,Internet of vehicles and smart city networks.Naturally,the design of cryptography and secure protocols needs to taking the advantage of these resources to introduce new features and improve performance in secure applications.But security basestone of modern cryptography is largely built on manually designed ciphers and their criterions[1–4].

Along with the development of AI applications on generic computing platforms,such as the smart devices,the online smart agents,and the smart networks,new requirements for cryptography design appears to adjust the new application environment,especially for the public key encryption schemes(PKE)which take the key role in secure protocols.We extracted three basic requirements in designing new PKEs.

Firstly,with more operation flow appearing in AI applications than traditional ones,the PKE schemes potentially needs to evolve to easily generate new function breaches.For example,the online smart service needs to respond and accommodate the varieties of customer’s requirements,which may change every day[5,6].The pattern of the secure protocols along with their encapsulated cihpers during new transaction needs to evolve with large probability.

Secondly,with the increase of computing and bandwidth resources,we need to bring down the cost of ciphers by generating a serial of sub-optimal but cheap candidates in each communication instance.One ideal solution is applying the one-time cipher for each transaction session[7,8].But so far,it is impractical to change each cipher configuration because of fixed cipher standard in each scenario,unless the real onetime cipher mode is applied like military and government core database,which costs far more in funds and efforts than the standard ciphers.

Finally,security protocols in new appeared applications,such as mobile networks[5,6],data mining[9],and IoT[10,11],have changed their functioning roles.For a PKE scheme,its function turns from secure communication to data protection during functional operations[11].Thus,we need PKE schemes to be constructed on more brief and well built-in trapdoors than ever before,so as to generate more flexible cryptographic protocols in AI entities evolved systems.

The traditional way of designing a cipher relies on manually constructing the map between a plaintext and its coordinate ciphertext.Potentially weak points and bugs may exist with large probability during the manually designed computing flow.Especially,all the post-quantum PKE ciphers consists of complex components to cover the mathematical map with secure strength of different levels.The trapdoors applied in these PKE ciphers originate from rigid hard problems,such as SVP on a lattice,but the ciphers are not rigidly equal to solving the hard problem,and the simple patching and updating cannot fundamentally eliminate the potential weaknesses.So we need a more direct way to design PKE ciphers explicitly relying a trapdoor that constructed by a hard problem.

One of the fundamental advantages of automatic learning system is the statistically simulate a distribution through its modeling upon structures and parameters.Packs of sub-optimal distributions containing arithmetically latent but practically available trapdoors can be generated.As a simple but effective way,it is well recognized that a learning structure,such as a probability graph model,can automatically find a statistical pattern through input samples,so that we can naturally generate a map that increases the output entropy close to a maximum.Following this inspiration,we design a trapdoor structure with the methodology of automatic learning.

Our contributions of this paper are summarized in three aspects.Firstly,so far as we know,we firstly introduce and make full use of the AI learning methods in generating and managing PKE schemes; Secondly,we discuss the availability and security strength of practically functioning trapdoors extracted from learning models; Thirdly,we automatically generate a serial of PKE ciphers with our new prototype of trapdoors and secure parameter configurations.With these contributions,we try to construct an automatically learning and generating framework to generate suboptimal trapdoors for new types of PKE schemes satisfying the above requirements.

The rest of the paper is organized as follow:In Section II,we make a brief summary of related works;In Section III,we give some necessary preliminaries;In Section IV,we present the detailed description of the generative trapdoor construction applying the variational autoencoder,based on which a new generative PKE is constructed by a simple wrap of the trapdoors;Then,we give our theoretical analysis and quantitative evaluation in Section V and Section VI; Finally,the paper concludes in the last section.

II.RELATED WORKS

2.1 Traditional PKE Design

In traditional PKE design,discovery of a desirable trapdoor is the basic step in scheme construction.After the Diffie-Hellman’s famous routine in new direction of cryptographic design,all the well-known PKE schemes,including RSA[12],ElGamal[13]and McEliece[14],are based on their ingeniously designed trapdoors.The availability and security are built in the graceful mathematical structures of the trapdoors,such as the factoring problem of large numbers,and decoding problem of linear codes.With the theoretical quantum algorithm and the possible availability of quantum computers,researchers refer to designing post-quantum PKE schemes,based on the new trapdoor structures developed from SVP[15,16],LWE[17],RLWE[18]and NTRU[19].But all the above trapdoor instances are mathematically concrete transformation,which cannot satisfy the new requirement of PKE design,such as pattern evolvement,negotiable cryptographic protocols and lower cost of new trapdoor construction,et.al..So researchers begin to explore new tools to design PKE schemes that is more suitable for AI based computing environment.

2.2 Generative Secure Communication

The possibility of designing cryptography schemes with the automatic learning techniques such as machine learning and deep learning is discussed firstly in[20].In the research of PKE based key encapsulation mechanism(KEM),early works focused on how to build secure channels to establish session keys using the method of machine learning[21–23].These automatic approaches cannot directly generate secure KEM protocol instances,and their secure keys cannot evolve during further communication.Then for a long period,the research process seems quite hard in handling automatic learning details such as the discrete data training problem[24],the computation overload problem[25]and a less practical outcome.But in recent years,with the widely application of AI technologies and the development of supporting hardware,pure AI based secure communication is becoming an prospective pattern in the future.In 2014,the wellknown learning model called generative adversarial network(GAN)[26]appeared,and then it is immediately applied to train a map between an arbitrary input and a target output.The optimized map is then naturally be treated as an encryption or an decryption algorithm.Compared with a mathematical concrete algorithm,the map from a GAN is automatically acquired through statistical adjustment during the training phase.In 2016,Google Brain team[27]published their first secure communication model with automatic negotiated encryption scheme whose security is guaranteed by a passive security model in which the adversary is a third party similar passive learner.As in their demonstration,the receiver can decrypt the message(a 16 bits message sampled in a normal distribution)with overwhelming probability,while the adversary cannot avoid approximately 50%of decoding error with overwhelming probability.But in the continues work of[28],the Google brain’s model is found insecure under the attack of stronger adversaries.

From the view of recent works about the combination of AI and security[29,30],although there are obvious advantages following the automatic ways of designing PKE schemes using current AI learning frameworks,but the routine is still before dawn with few available methodologies to acquire the same feathers and required security levels as those of traditional PKE designing routines.Many critical problems in security,efficiency and extended functions need to systematically studied.

III.PRELIMINARIES

3.1 Notations

We noteE(v)as the average entropy observed from variablev,which stands for a vector,a tensor or a coded binary sequence on a computing platform.The functionh(v)is used to compute the entropy of the variablev.Learning models are denoted as the form ofenc(·),dec(·),encoding(·),anddecoding(·),in which elements contained in(·)are their coordinate legal parameters.Matrices,weights and biases are noted with Bolded characters,such as Wencstands for the weights in all learning networks.notes randomly sampling anxfrom the distributionD.

3.2 Trapdoors for Public Key Encryption

Definition 1.Let T be the randomly chosen secret message set,a trapdoor is a cluster of one way maps based on the identical transformation,noted as T:y=f(x,u),u∈T,in which f(·)is a deterministic polynomial-time algorithm,satisfying f−1(y)is a non-deterministic polynomial-time algorithm,while f−1(y,u)is a deterministic polynomial-time algorithm.

Usually,assumingP /=NP,we needf−1(·)as an NP complete problem for a Turing computer.Given the trapdoorT:y=f(x,u),it is computationally hard to recoverxand the secret messageufor a Turing computer,such as the factoring problem,Hamilton loop problem,and linear decoding problem,et.al..So far as we know,each type of existing PKE mechanisms applied in secure communication are basically an encapsulation of a specifically chosen trapdoor whose security relies on the hardness computing the reverse the trapdoor,such as the large number factoring of RSA,the decoding problem in McEliece[31],and the discrete logarithm of ECC[32],et.al..

Definition 2.A generative trapdoor is a special trapdoor based on a statistically approximating map withoverwhelming probability,noted as T:P{y ←limf(x),u∈T}→1.

In the above definition,givenu∈Tandx,the generative trapdoor is acquired by repeatedly sampling in the neighborhood off(x,u),to find a statistical bound off(x),denoted as lim f(x).After the sampling result is steady,we accept the value off(x,u)as a trapdoor instancey.

In this paper,we assume the generative trapdoor is a generalized type of trapdoor,which slightly stretches the restrictions of traditional trapdoors in practical instances.So we can statistically approximate the state with a maximum entropy to generate a serial of trapdoors for the PKE scheme deployment.

3.3 Variational Autoencoder

An autoencoder is a typical generative learning model,which is originally presented to de-noise the codes on a noisy channel.After the generalized applications of deep learning networks,many types of autoencoders with rich functions have been constructed.Here we give a formal one defined in[33].

Definition 3.An autoencoder is a dual structured network,which takes a coded vector x∈{0,1}n as input,and maps to a middle vector y∈{0,1}d by a deterministic function fθ(x)=σ(Wx+b),and then tries to recover x by another deterministic function.

The functionsfandgare parameterized byθ={W,b},θ′={W′,b′},and an activating functionσ.W and W′are usuallyn×dweight matrices,andbandb′are bias vectors to set global filters.The aim of autoencoder optimization is to tuneθ∗,θ′∗by the parameter constrain of equation(1),

in whichNis the scale of x,andLis the loss function for each samplex(i)as the loss function.Usually,we use the squared error.

Then the accuracy of an autoencoder is defined by the probability in equation(2),

Definition 4.In the first map f,if we assume the output of each layer as the parameters of a specific distribution Dµ,and resample a new value byD(µ←σ(Wx+b))as the output of at least one layer,then the model is called variational autoencoder.

For the sake of briefness,in the variational autoencoder of this paper,we letin whichM(x(i))is the mean value andV(x(i))is the variance,we can re-sample the value ofy(i)from the distribution.

3.4 IndistinguishabilitySecurityUnder Chosen-plaintext Attack

The indistinguishability is a basic security requirement for PKE schemes to avoid attacks in cryptographic protocols.We give a basic definition of indistinguishability security under Chosen-plaintext Attack(INDCPA).

Definition 5.The IND-CPA security model is defined by the following game between a challenger C and an adversary A:

Step 1.C initializes a PKE scheme instance pke ←{encrypt(·),decrypt(·),pp,k}and releases its public parameters pp.

Step 2.C generates a challenged PKE key pair{pk∗,sk∗},and makes pk∗public,while keeps sk∗secret.

Step 3.A can arbitrarily choose plaintexts to query their corresponding ciphertexts.

Step 4.C randomly chooses a bit b ←{0,1},and generates c ←encrypt(pk∗,b)as the challenged ciphertext.Then C sends c to A.

Step 5.A outputs a bit b′.If b=b′,A wins the game.

If the advantage for A in the IND-CPA game is defined by,

Definition 6.For any A with bounded computing resources,if the advantage for A to win the game in Definition 5 is a negligible variable o(k),in which k is the secure parameter of a PKE scheme,the scheme satisfies IND-CPA security.

IV.GENERATIVE TRAPDOORS BASED ON VARIATIONAL AUTOENCODER

In this section,we discuss our routine in designing generative trapdoors based on the variational autoencoder.In traditional PKE design,the trapdoor is a manually designed deterministic map and the probabilistic output is normally obtained through later patching upon the original PKE primitive,such as the optimal padding for RSA.In this paper,we construct the trapdoor with more compact and convenient routing with probabilistic output.The basic method is to make a statistical approach toward the input sample set,by applying the feature of an autoencoder in duplicating a given distribution,while deploying a variational output for functionfto generate probabilistic output.We give our details of our generative trapdoor realization,including three aspects:the system architecture,the design of each model,and finally a simple demonstration of trapdoor encapsulation for PKE:gPKE.

4.1 System Architecture

Similar with GAN in finding an unknown map,the basic purpose of our generative model is trying to automatically construct a map between the plaintexts and ciphertexts.Compared with GAN,the major difference is our training objects are messages which are unbearably hard in extracting common features and model generalization.But when we turn our observing object to the single bit,it becomes possible in hiding and recovering its features showed in entropy optimization of the ciphertext.So focusing on the simplest scenario,our architecture consists of a VAE as the generative model,a ciphertext classifier as the adversarial model,which is built in the encoding model in the VAE.

To automatically constructing a trapdoor,the generative model tries its best in conceal the information in the plaintexts by tuning parameters to reach an entropy maximum,while keeping a satisfying accuracy during the reversing of the trapdoor.The adversarial model tries to extract features from the vast output of the generative model to accumulate advantage in distinguish different plaintexts.

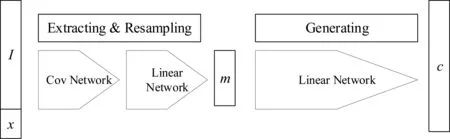

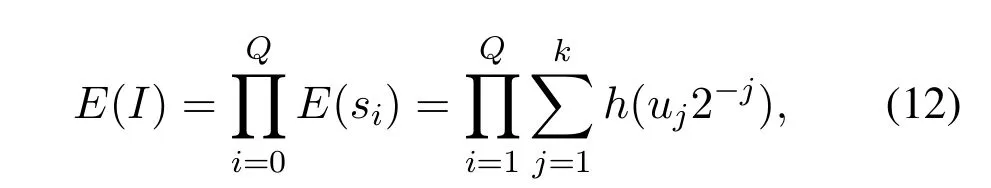

In details,the generative VAE consists of two models:one is the encoding model,which contains a convolutional network(Cov)and a linear network(L1);the other one is the decoding model,which is a linear network(L2)simulating the process of an one-way linear encoding,see Figure 1.The input and output of the VAE are vectors which is coded in float data type with the precision ofk.In the following sections,for the sake of convenience,we only assume the data is coded on binary computing platforms.

Figure 1.System architecture of generative trapdoors.

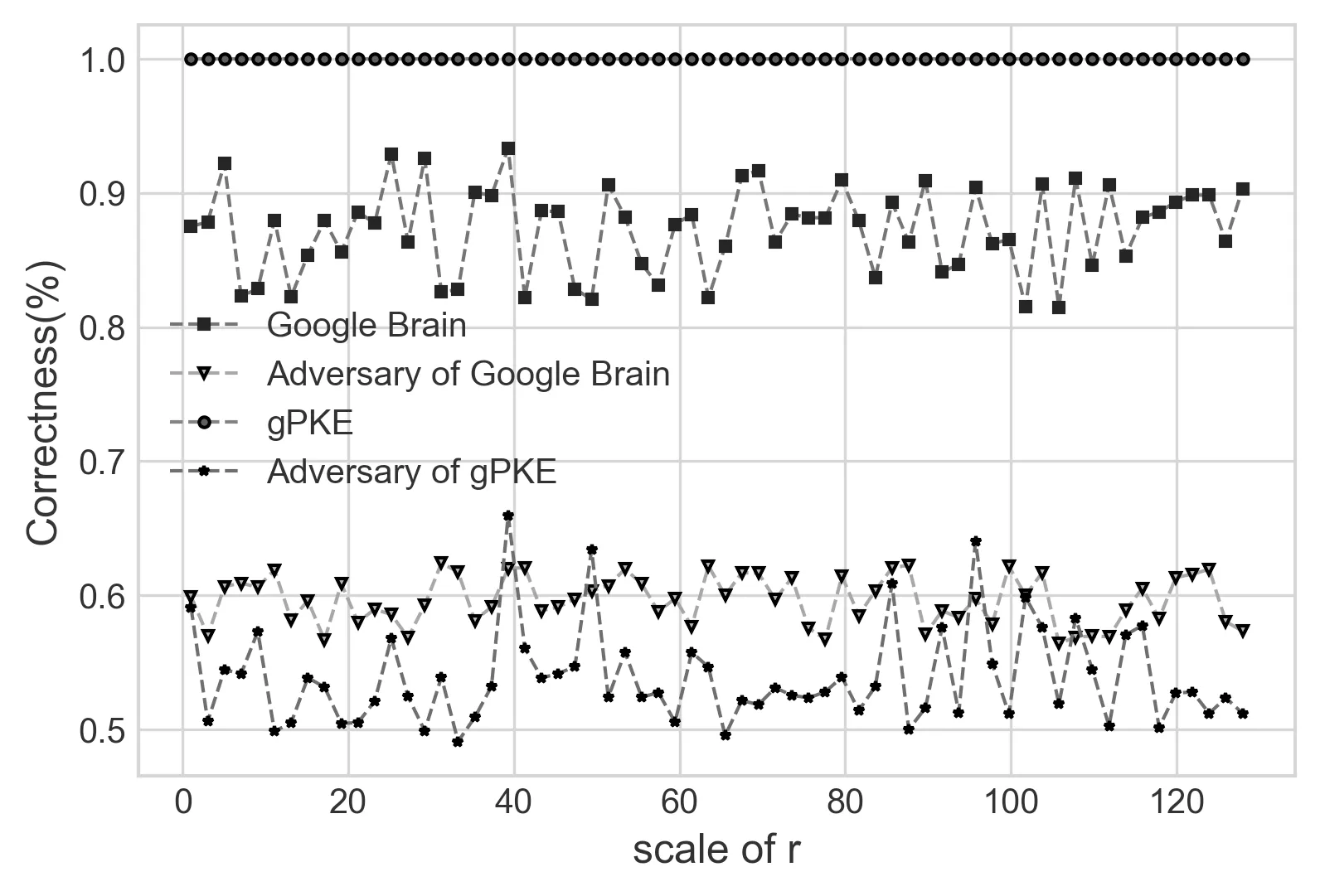

Figure 2.Correctness of recovering the plaintext.

4.2 Trapdoor Modeling

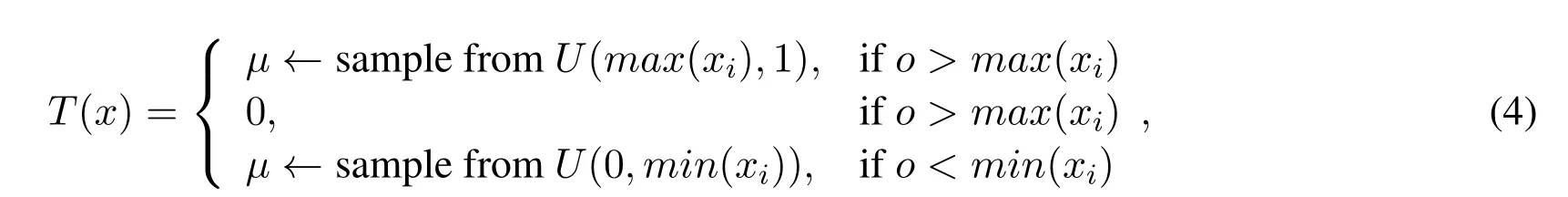

Parameter Initialization.Let the width of input layer ben,and letm∈{0,1}.c∈Rris ar-dimension vector uniformly sampled from(0,1),x∈ Rsis a fixeds-dimension vector,andis a variational padding,satisfyingn=r+s.Letθ={We,be,Wd,bd},in which{We,be}is the encoding parameters,while{Wd,bd}is the decoding parameters.The VAE takesI={c||x}Qwith the scale ofQas its input samples for its generative model,and takesI′={c||x′}Qas the input samples of its adversarial model.Then we construct a functionT(x)for encapsulating vectorxwith equation(4),in whichxiis the coordinate ofxwith the indexiin 0< i < s,andois the output component of tensor coordinate yielded by the previous layer.T(x)is a wrapping vector forxin the decoding model.Then the system initializes a generative model and an adversarial model to automatically generate a trapdoor,satisfying negligibleAdvθ.

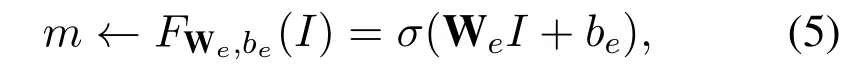

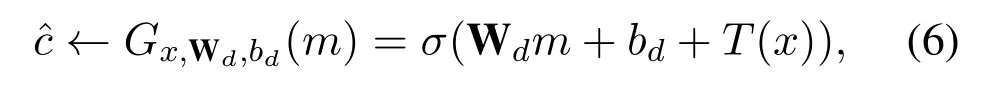

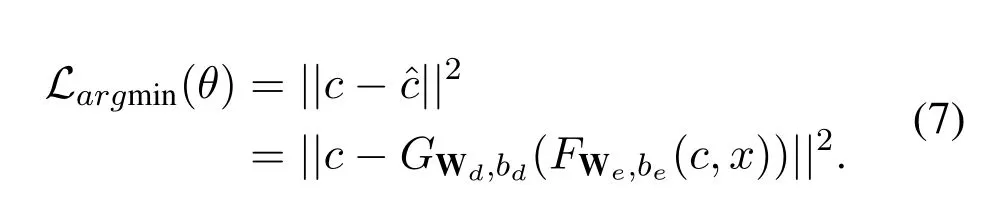

Generative Modeling.TakingIas the input,the encoding model is described as,

while the decoding model is,

in whichσ(·)is a non-linear activating function.Then the VAE trainsθby the following loss function,

Then,tuningθunder the training phase driven byIuntil reaching an overwhelming probability of correctness.Finally,for each samplecinI′,a tag from{0,1}is paired withcaccording to the result of the encoding model.

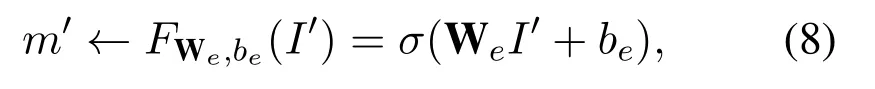

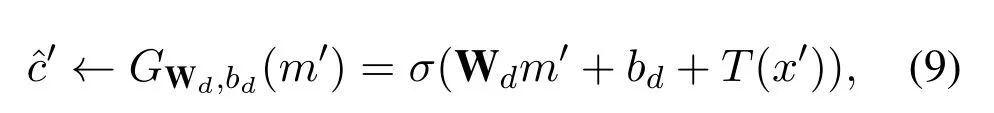

Adversarial Modeling.We need another model with the same parameter settings asθto test the indistinguishability of VAθwithout the knowledge ofx.TakingI′as the input,the encoding model is described as,

while the decoding model is,

in whichσ(·)is a non-linear activating function.Then the VA takes all theas input,and makes predictions of eachm′by the encoding model.If the correctness of the adversarial model shows an significant offset from ideal indistinguishability,such as 50%,orAdvθis non-negligible,The inputIneeds to be re-sampled to construct a new generative model until the significance ofAdvθdisappears.

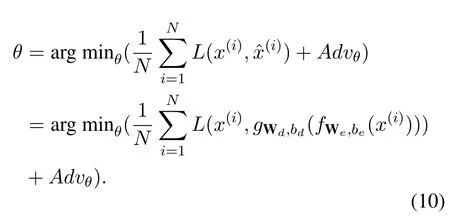

The global parameter of the trapdoor model is described as,

4.3 Trapdoor Encapsulation for Public Key Cryptography

Before applying in any further practical encryption scenarios,the trapdoor model needs to be wrapped into a cryptographic algorithm.Complying with the standard cryptographic primitive model,we encapsulate the trapdoor model into a public key encryption algorithm between two users,Alice and Bob,with the following four steps:

Parameter initialization.Alice randomly picks a secret vectorx∈ Rs,computesT(x),and generates two random sample setsI={c||x}QandI′={c||x′}Q,in whichcandc′arer-dimension vectors uniformly sampled from(0,1).Then Alice constructs an VAEθtrapdoor instance,and takesIas the input to optimizeθ={We,be,Wd,bd}.

Key generation.Let(T(x),Wd,bd)be the public key,and(x,We,be)be the secret key.

Encryption.Takingm∈{0,1}and a public key(T(x),Wd,bd)as the input of decoding modelG,the coding result is computed as.

Decryption.Takingcand(x,We,be)as the input,the encoding modelFoutputs.

Correctness.We briefly analyze the correctness of the above gPKE.As the gPKE is a one-bit prototype,the correctness of the decryption algorithm count on the ability to solve the binary classify problem with the input ofOn decryption,the encoding model computesto recoverm.As the gPKE is built on a dual architecture including the encoding modelFand the decoding modelGafter the generative training.Then according to Definition 3,we have,

in whichc||x∈IandAdvθis the advantage in correctly decodingcwithoutx.

If theAdvθis negligible after adversarial modeling,the correctness of gPKE closely fits the accuracy of the wrapped VAE model in the trapdoor encapsulation.The hyper-parametersnanddin Definition 3 have major influences toward the accuracy,which needs systematic tests to reveal.In this paper,we letd=1 andn=r <384,avoiding the potential low accuracy problem and yielding a high decoding probability close to 1.

As the above PKE scheme is based on a generative trapdoor,we note the scheme as the generative PKE(gPKE).After being optimized,the gPKE can be applied as the long-lived,short-lived or one-time PKE scheme according to the parameter scale of its generative VAE model.

Possible generality in further construction.The generality lies in two ways:further construction upon the gPKE and direct encapsulation of the generative trapdoors.From the angle of PKE design methodology,the gPKE encapsulating our generative trapdoor can work with any type of PKE systems,as long as these PKE systems are designed upon explicit or latent one-way map.But the encapsulation needs different strategies and techniques to obtain different functions.Basically,we can extract the one-way map from the newly appeared PKE schemes,such as the new constructions in identitybased encryption[34],attribute-based encryption[35,36]and searchable encryption[37,38].The security bases of all the realization instances of these PKE primitives are implicitly or explicitly one-way maps,in which the trapdoor messages are the secret key corresponding to either the unique identity or the attribute set.Applying the generative trapdoor,these one-way maps is naturally replaceable with functional consistency.

V.ANALYSIS

5.1 Security Analysis

The security is a major concern for a PKE scheme,whether in the theoretical level or the practical deployment level.In this section,given the public key and structure of the VAE model,we will theoretically analyze the computational hardness in recovering the original message.Then,we will give the illustration of the provable security theorem by solving the INDCPA security problem based on our model of generative trapdoor.

Theorem 1.With the knowledge of decoding model(Wd,bd)of a VAE instance and a sample set I whose scale is bounded by{p,c}2Qk,A can recover an output with negligible entropy decline E(I)−E(m)≤o(k).

Proof.We assume that the input and output are binary coded with the precision ofk.We prove the conclusion by computing the entropy change during the data forwarding in the VAE model.Then we need to observe the output of the linear network of the encoding model,and compute its average entropy change.

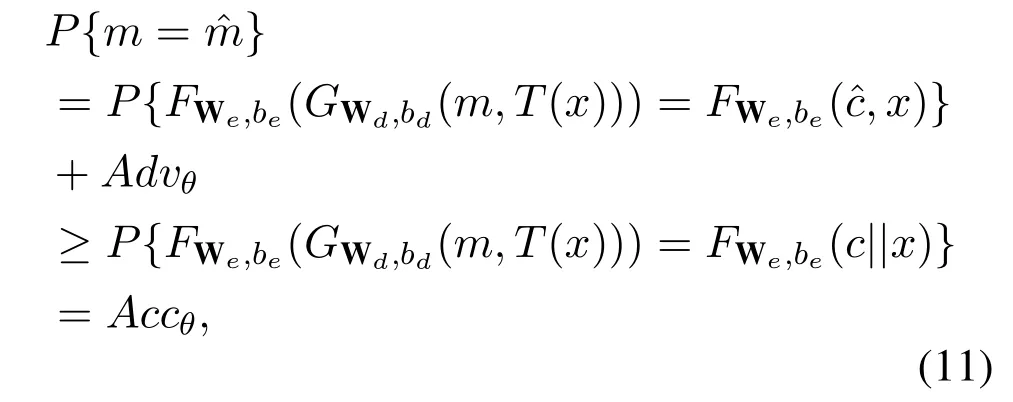

AsIis sampled fromU(0,1),andsi∈I,we can compute the average entropy ofIfrom each sample,

in whichuj∈{0,1}.

In equation(12),for any two samplessiandsj,we have|h(si)−h(sj)|≤2−j·r−k,so for any two sample set instancesI0andI1,|E(I0)−E(I1)|≤(2r)−2Qkholds.Then the average entropy of any randomly sampledIis around the entropy peak with negligible difference.So in the encoding model,we haveE(I)≥E(OL1)with overwhelming probability.

Then,we assume the resampling process would not decline the entropy,we can compute the entropy during the encoding,

Also,we recall that the back-propagation and forwarding in theCovwith loss function of max-entropy and KL divergence cannot decline the average entropy,then,the decline of average entropy in theCovdrops toE(I)−E(OL1)≤(2−j ·r−k)Q.

When we set the output width ofL1 as binary,thenE(I)−E(m)≤(2−j−k)Qwhich is negligible.

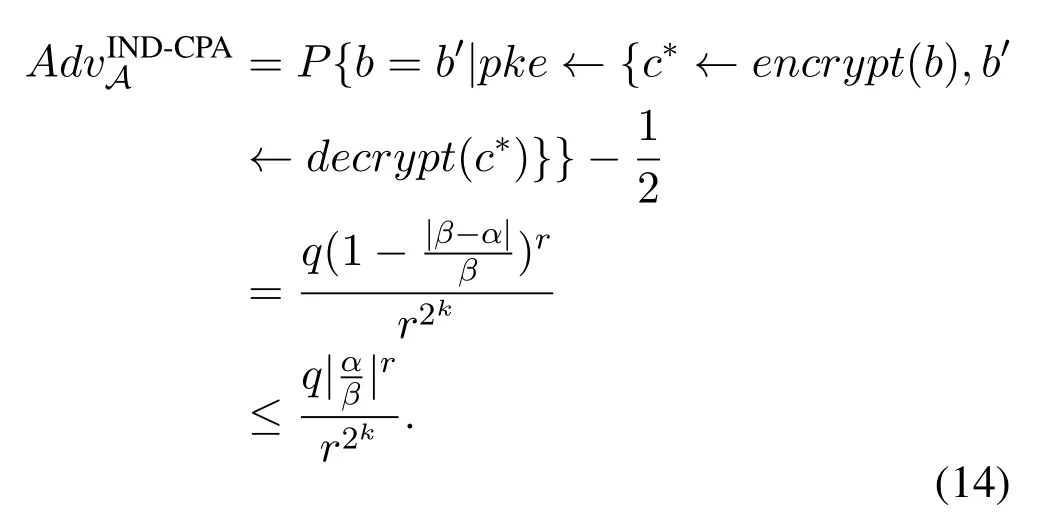

Theorem 2.For a generated model VAEθ with θ={We,be,Wd,bd},the gPKE is IND-CPA secure against any polynomial-time adversary.

Proof.According to Definition 5,we initiate the game to evaluate the advantage of the adversaryA.

Step 1.Crandomly picks a secret vectorx∈Rk,computesT(x),and generates two random sample setsI={c||x}QandI′={c||x′}Q,in whichcandc′arer-dimension vectors uniformly sampled fromU(0,1).ThenCconstructs an VAEθtrapdoor instance,and takesIas the input to optimizeθ={We,be,Wd,bd},and takesI′as the input to verifyθ.Finally,Cinitializes a gPKE scheme instancegpke ←{encrypt(·),decrypt(·),pp}by wrapping the trapdoor.and releases its public parameterspp={k,r,Q}.

Step 2.Cgenerates a challenged PKE key pair{pk∗=(Wd,bd),sk∗=x},and makespk∗public,while keepssk∗secret.

Step 3.Amakes the encryption query by arbitrarily choosingmi∈{0,1},and runningci ←encrypt(pk∗,mi).Arepeats the encryption query forqtimes and constructs a table containing each query result(mi,ci)with the scale ofq,in whichiis the query index,andq ≤2Qk.

Step 4.Crandomly chooses a bitb ←{0,1},and generatesc∗←encrypt(pk∗,b)as the challenged ciphertext.ThenCsendsctoA.

Step 5.Aoutputs a bitb′.Ifb=b′,Awins the game.

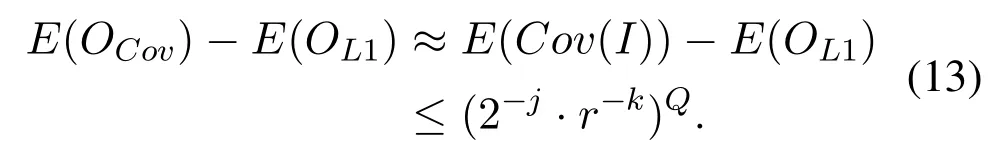

We note the coding in the resampling as 0←(0,α]and 1←(α,1),in whichαis dynamically average output ofCovmodel.For each query,Acomputes aβas the guess ofα.Then,we can compute the advantage ofAin the game,

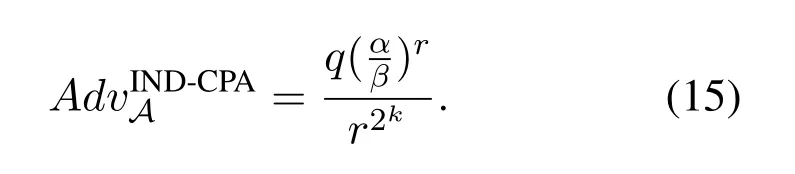

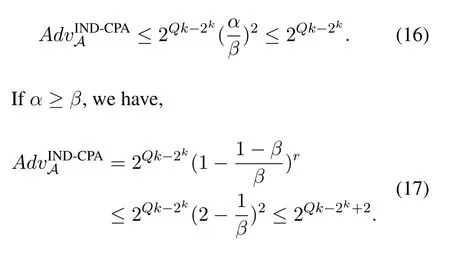

Ifα<β,we have,

Briefly,letr=2,then,

In fact,gPKE parameters satisfyr ≫2 and 2k ≫Qk.

5.2 Efficiency

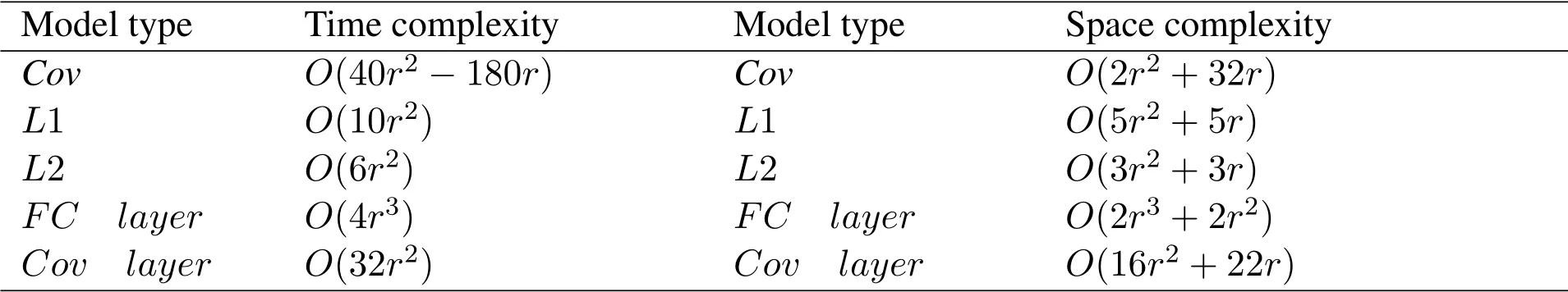

In this section,we analyze the computing complexity ofCov,L1 andL2 of our generative trapdoor model in the phase of training and generating.As a comparison,we analyzed the corresponding components in Google Brain’s model,in which the learning network consists of a 2r ×2r(r=16,32,64,respectedly)full connection linear layer(FC layer)and four 1-D convolutional layers with independent settings.From the comparison in Table 1,our convolutional component has no fundamental advantage over Google Brain’s model,while our linear component is much less complex withr2level.

Table 1.Computing complexity analysis.

VI.EVALUATION

6.1 Configuration

In order to practically evaluate the correctness and running costs of our generative model,we deploy our gPKE with optimized parameters mentioned in Section IV.Briefly,we put all the encoding process and decoding process in one script,because of the training phase is our major efficiency concern in practice.The platform contains a challenger’s process handling the training and decoding phases and a computationally powerful adversary backend handling the encoding process.The challenger’s terminal accepts queriesand generates the coordinate responses according to Definition 5.The adversary’s backend collects all the responses for each plaintext,and builds the guess oracles for the IND-CPA game.

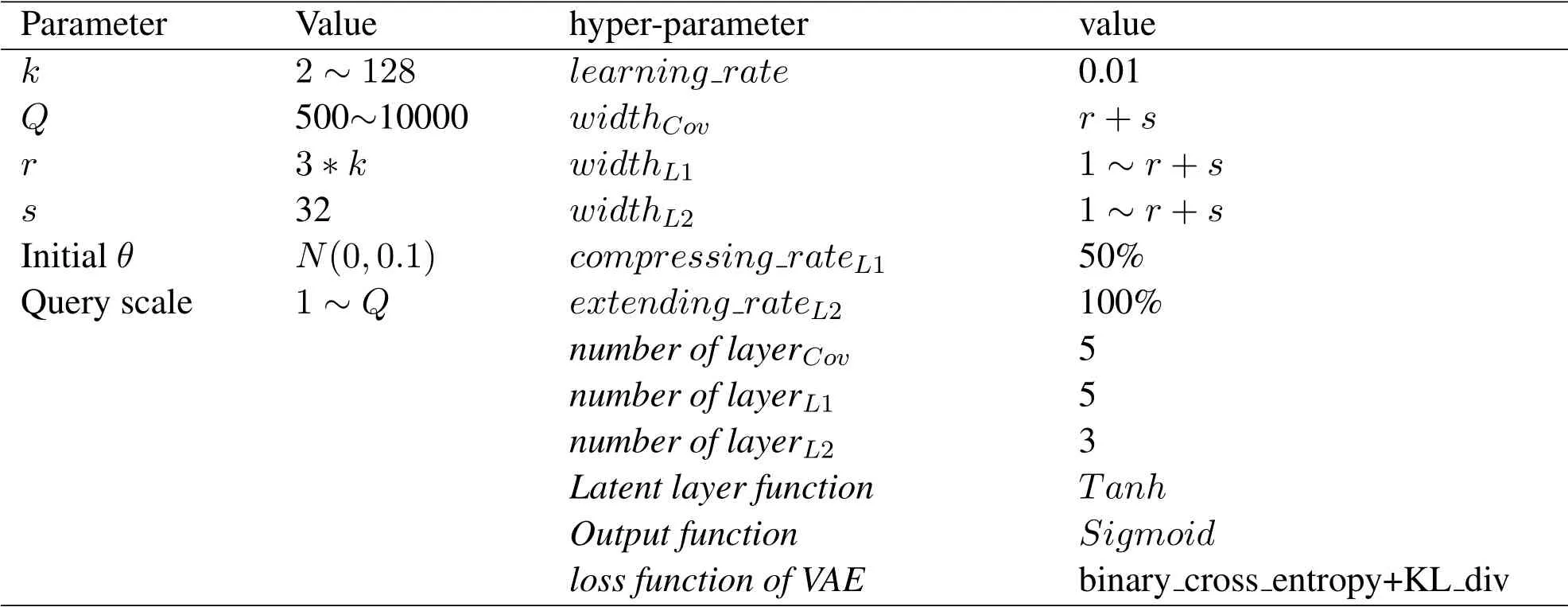

The challenger’s terminal is a Lenovo laptop with Core i7-2430M CPU@2.4GHz and 8GB ram.The trapdoor model’s hyper-parameters and gPKE parameters are listed in Table 2.The adversary’s backend is a server running a 3.4GHz Core i5-7500 CPU and 16GB ram.All scripts are coded with python 3.6 in Spyder 3.1,and the learning models are constructed by pyTorch 1.1.

Table 2.Parameter configuration.

6.2 Results

Correctness.In this section,we demonstrate the correctness comparison of Google Brain’s adversarial model and our model with similar parameter configuration,see Figure 2.The running data showing the correctness of our gPKE scheme is collected in two aspects.Firstly,given a specific plaintext,the probability of the ciphertext be correctly recovered is collected.In our gPKE 1-bit instance,the correctness is 100%,while the Google Brain’s model is around 82%~93%in their 16-bit instance,as they didn’t offer a one bit model without satisfying IND-CPA security.Secondly,under the assumption of a third party adversary,e.g.Eve in Google Brain’s model,the probability for the adversary to recover the chosen plaintext without access to the secret key is collected.

Complying with the semantical proof in Theorem 2 in Section V,the advantage of the adversary in gPKE is obviously lower than the advantage of Eve in Google Brain’s model.But still,we can see the correctness in the IND-CPA game is basically above 0.5,which shows that the advantage of adversary is slightly larger than zero,and the gPKE instances are suboptimal ones.

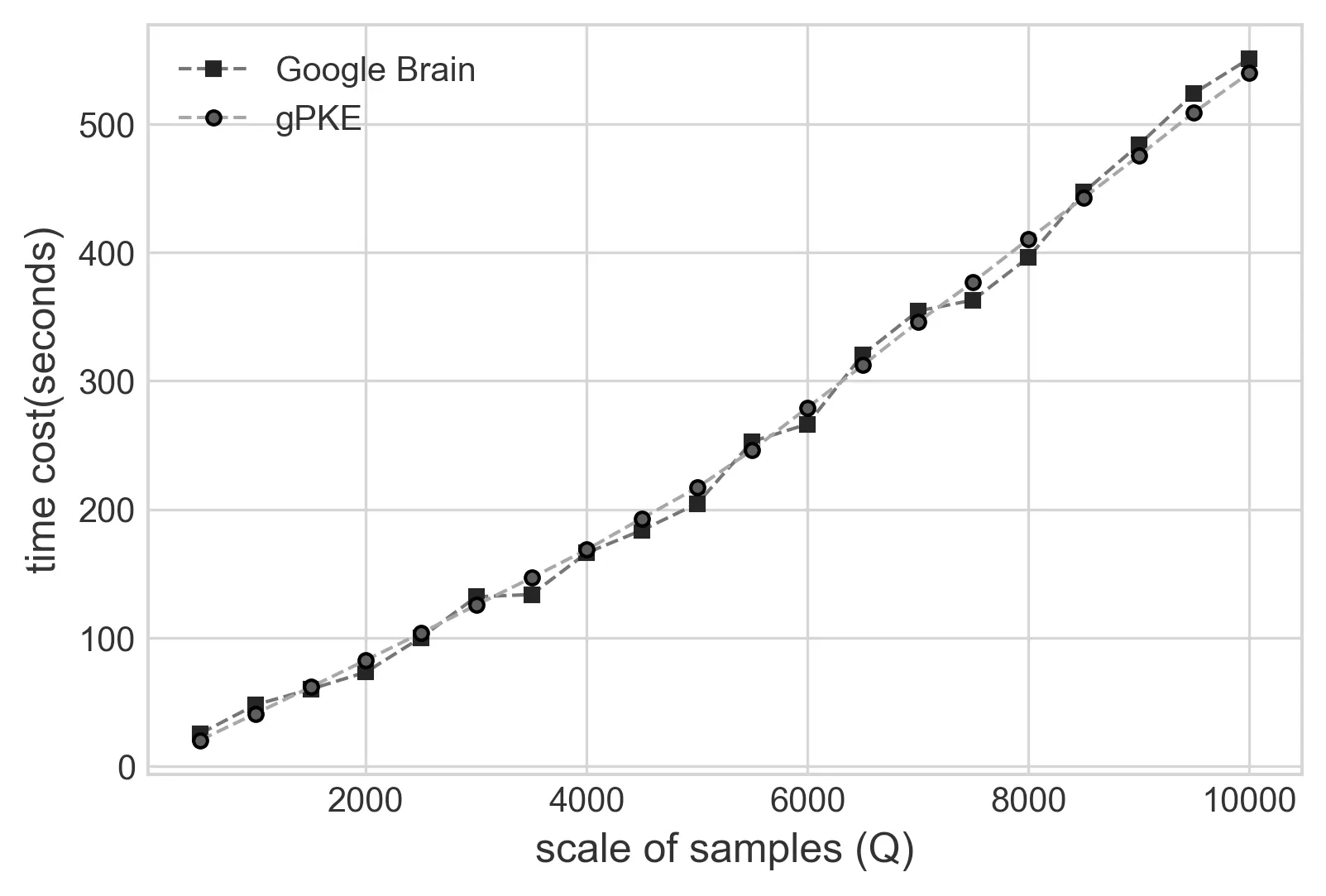

Cost of Training.In the model of Google Brain and our gPKE,the time cost of training is closely related to the scale of the input samples.In Figure 3,we letk=128 and prepared the time cost of training with the scale ofQ.As the time cost of each training step is within negligible turbulence,we set the same training steps in the two models,and observe the tendency with the scale of input samples increasing.On the same computing platform,the time cost results are close to each other with approximately linear increment.But as a matter of fact,gPKE can achieve steady trapdoor parameters with less than 10000 samples,128 batches and 15 training epoches(less than 1172 steps).Then the cost of training is surely acceptable for gPKE with further optimized input parameters.

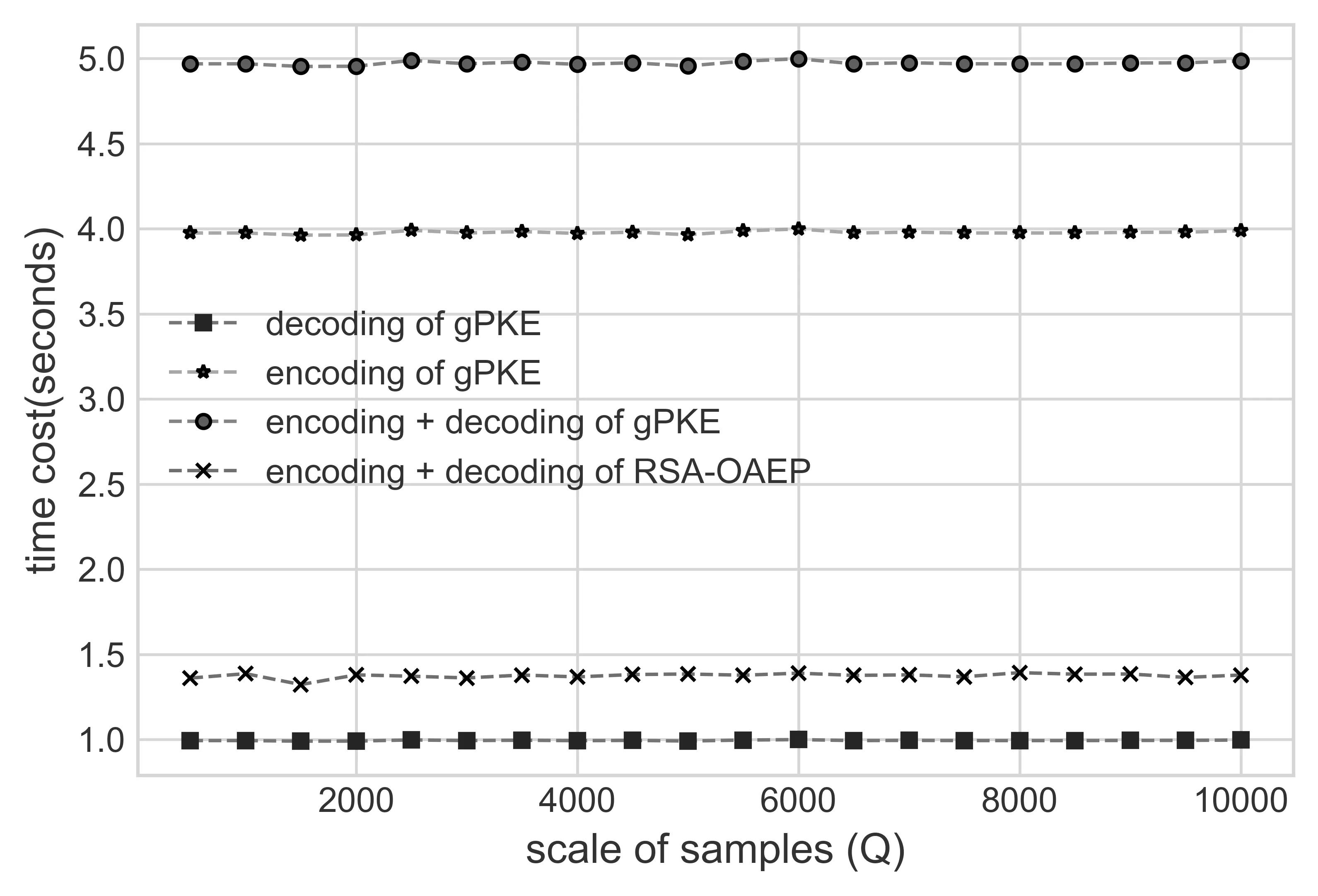

Cost of Encoding and Decoding.Finally,we tested the performance of independent encryption and decryption models of our gPKE.The results are shown in Figure 4 in which each result point is collected from a batch of 1000 single-bit implementations,approximately 1Kbytes input data.From the results,we can see the encoding model(decryption in gPKE)costs almost three times of seconds more than the decoding model(encryption in gPKE),because the data fowarding in the convolutional network costs more CPU cy-cles than in the linear network.Besides,in our optimized version of gPKE,the decoding model(L1)of VAE consists of three linear layers,whileL2 has 5 linear layers.As the efficiency varies on different computing platforms and Google Brain didn’t offer any official results about the time costs,we didn’t demonstrate the time cost of Google Brain’s asymmetric scheme.But their learning model(a 2N ×2Nfully collected network and a convolutional network with four 1-D layers)is more complex than gPKE,such that the processing efficiency tends to be worse than gPKE.As a comparison,we tested the performance of RSA-OAEP in the same computing environment,which showed the gPKE can work well with a comparable degree of computing complexity as RSAOAEP without considering the training cost.Beside,both learning models in encoding and decoding can be largely improved with further coding optimization and more accelerating hardware.Overall,through the test,the performance of gPKE is acceptable in handling the same operations as the classic PKE.

Figure 3.Time cost of training.

Figure 4.Time cost of gPKE instances for 1Kbytes.

In this section,we roughly tested the performance of generating and deployment of the gPKE with relatively conservative parameter and hyper-parameter settings to guarantee the availability of our model.But so far,as we can barely find any convincing standards in AI-based cryptographic development,especially in the aspects of security and functional ability,many features and functions are still unknown and need to make further study in the future.

VII.CONCLUSION

AI based cryptography is a prospective way of modern cryptographic design and analysis.In this paper,we introduced the definition of AI based trapdoor design for the first time,and more specifically,it is a generative learning model in acquiring a desirable trapdoor with maximum entropy.In the model,we constructed a VAE-like combining structure based on a convolutional network and two linear coding networks with an additional resampling layer to obfuscate each output.Then applying the generative trapdoor,we designed a new style of PKE encapsulation,which technically achieved the IND-CPA security,and showed better substitutability and flexibility in practical customization,especially in communication networks consisting of AI agents.

Compared with the existing trapdoor mechanisms,our model is more explainable and practical in its data handling flow and in security mechanism.In the generative model,training phase is the most resource consuming part,in which optimized parameters are generated during the procedure of training steps.In Google Brain’s asymmetric system,Bob needs more than 10000 steps to efficiently correct errors during communication.In gPKE,we only need more than 500 random samples in 1172 training steps to initiate satisfying trapdoor.Through our performance test,the encryption and decryption in the 1-bit instance cost less than 5ms without any algorithm optimization.

There are still many unsolved problems during the construction of our generative model,especially in the performance stability against IND-CPA adversary,and occasionally,the advantage may reach as much as 0.1 out of no reason in the IND-CPA game.Also,the IND-CCA and IND-CCA2 adversaries should be considered to improve the security strength in the future.Besides,the ciphertext extension is a major problem with currentlyrtimes longer than the plaintext.In the future work,we will try to improve the generative model and the construction of gPKE to support elastic input and output,and further improve the efficiency of deployable instance of gPKE.

ACKNOWLEDGEMENT

The authors thank all the reviewers and editors for their valuable comments and work.This paper is supported by the National Natural Science Foundation of China(No.61572521,U1636114),National Key Project of Research and Development Plan(2017YFB0802000),Natural Science Foundation of Shaanxi Province(2021JM-252),Innovative Research Team Project of Engineering University of APF(KYTD201805),Fundamental Research Project of Engineering University of PAP(WJY201910).

- China Communications的其它文章

- Two-Timescale Online Learning of Joint User Association and Resource Scheduling in Dynamic Mobile EdgeComputing

- SHFuzz:A Hybrid Fuzzing Method Assisted by Static Analysis for Binary Programs

- SecIngress:An API Gateway Framework to Secure Cloud Applications Based on N-Variant System

- A Safe and Reliable Heterogeneous Controller Deployment Approach in SDN

- Distributed Asynchronous Learning for Multipath Data Transmission Based on P-DDQN

- A Fast Physical Layer Security-Based Location Privacy Parameter Recommendation Algorithm in 5G IoT