AI Assisted PHY in Future Wireless Systems: Recent Developments and Challenges

Wei Chen, Ruisi He, Gongpu Wang, Jiayi Zhang, Fanggang Wang, Ke Xiong, Bo Ai, Zhangdui Zhong

1 State Key Laboratory of Rail Traffic Control and Safety, Beijing Jiaotong University, Beijing 100044, China

2 School of Computer and Information Technology, Beijing Jiaotong University, Beijing 100044, China

3 School of Electronic and Information Engineering, Beijing Jiaotong University, Beijing 100044, China

Abstract: Nowadays, the rapid development of artificial intelligence (AI) provides a fresh perspective on designing future wireless communication systems. Numerous attempts exploiting AI methods have been carried out, achieving state-of-the-art performance in many different areas of wireless communications. In this article, we present the most recent and insightful developments that demonstrate the potential of AI techniques in different physical layer (PHY) components and applications, including channel characterization, channel coding, intelligent signal identification, channel estimation, new PHY for random access in massive machine-type communication (mMTC), massive multiple-input multiple-output (MIMO) power control, and PHY resource management. Open challenges and potential future directions along this research line are identified and discussed.

Keywords: artificial intelligence, wireless communications, physical layer.

I. INTRODUCTION

Future wireless communication systems are expected to enhance the key capabilities of 5G, providing extremely high throughput (e.g., Tbps), low latency (e.g., μs), and high device density (e.g., 10⁷ devices/km²), and to support full wireless coverage (space-air-ground-underwater) and high mobility [1]. A key enabling technology for designing future wireless systems is artificial intelligence (AI).

AI, especially deep learning (DL), has achieved great success in recent years in domains such as computer vision and natural language processing, where no rigid mathematical model can accurately capture all effects in the signals. Communications includes many different areas of research, and some existing designs already achieve performance close to the theoretical optimum when accurate and tractable mathematical models exist. For complex communication scenarios that are difficult to describe with concrete mathematical models, AI could be a potential solution that provides promising new benefits. In addition, AI has proved to be a powerful tool for solving linear inverse problems [2].

This article focuses on the AI assisted wireless physical layer (PHY), which is a fast-growing area of investigation. We introduce the most recent and insightful developments that demonstrate the state-of-the-art capabilities of AI techniques in channel characterization, channel coding, intelligent signal identification, channel estimation, new PHY for random access in massive machine-type communication (mMTC), massive multiple-input multiple-output (MIMO) power control, and PHY resource management, and we discuss the new challenges brought by AI.

II. CHANNEL CHARACTERIZATION

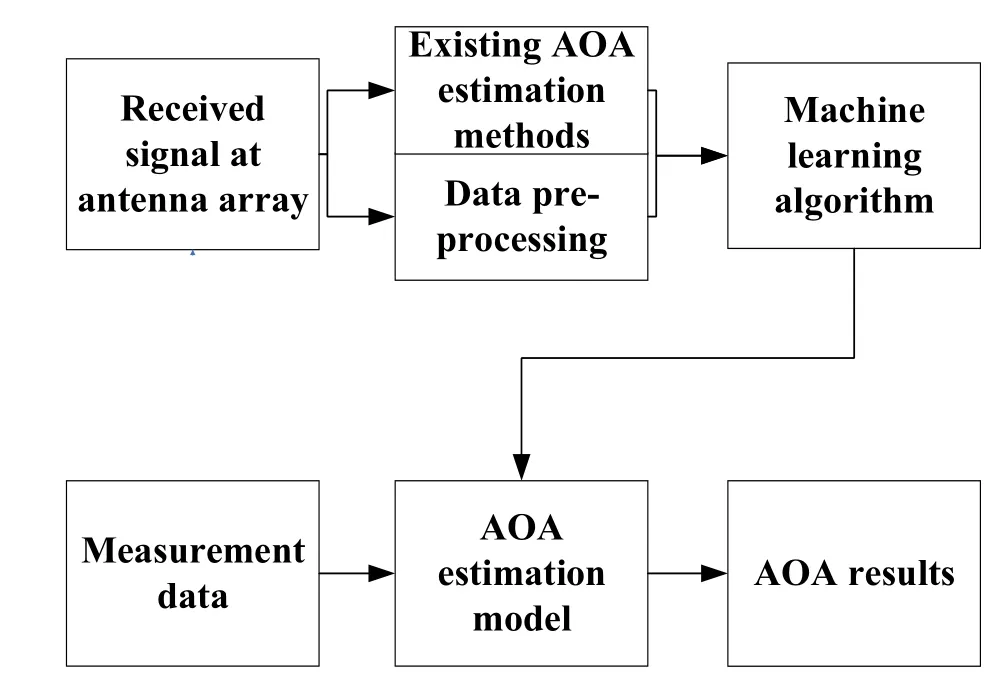

Figure 1. The framework of AI-based channel angle estimation.

AI assisted wireless channel characterization has recently received a lot of attention. For data-driven channel characterization and modeling, data processing capability is the key factor that determines effectiveness and accuracy. Common data processing steps include data collection, extraction, and preprocessing. Specifically, researchers need to collect data from channel measurement campaigns, and noise and abnormal values in the obtained data need to be removed to obtain the effective data needed for channel characterization and modeling. Thanks to their excellent performance in data processing, classification, and regression, AI-based algorithms can serve as an efficient solution for data preprocessing in channel research. In [3], Huang et al. discuss the challenges and opportunities of machine-learning-based data processing techniques for dynamic channel characterization. In addition, AI-based algorithms provide a feasible solution for channel parameter extraction, such as channel angle information. A framework for AI-based angle-of-arrival (AoA) estimation is shown in Figure 1; this framework is expected to improve accuracy compared with traditional algorithms. Because complex environments, varying radio channels, and random noise and interference may have unpredictable effects, AI-based channel data processing and parameter extraction remain quite challenging.
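The data-driven AoA idea of Figure 1 can be illustrated with a toy estimator. The sketch below is not the framework of Figure 1: it assumes a uniform linear array (ULA) of M = 8 elements with half-wavelength spacing and a single far-field source, and it replaces the neural network with k-nearest-neighbour (k-NN) regression on the phases of the sample covariance. The helper names `steering`, `snapshots`, `feature`, and `estimate_aoa`, the SNR, and the training grid are all illustrative assumptions.

```python
import numpy as np

M = 8                              # assumed ULA size, half-wavelength spacing
rng = np.random.default_rng(3)

def steering(theta_deg):
    """ULA steering vector for a source at theta_deg (0 = broadside)."""
    m = np.arange(M)
    return np.exp(-1j * np.pi * m * np.sin(np.deg2rad(theta_deg)))

def snapshots(theta_deg, snr_db=10.0, n_snap=64):
    """Simulated array snapshots: one complex Gaussian source plus white noise."""
    s = (rng.standard_normal(n_snap) + 1j * rng.standard_normal(n_snap)) / np.sqrt(2)
    sigma = 10 ** (-snr_db / 20)
    w = sigma * (rng.standard_normal((M, n_snap))
                 + 1j * rng.standard_normal((M, n_snap))) / np.sqrt(2)
    return np.outer(steering(theta_deg), s) + w

def feature(X):
    """Unit-modulus correlations between element 0 and the others (phase only)."""
    R = X @ X.conj().T / X.shape[1]          # sample covariance
    r = R[0, 1:] / np.abs(R[0, 1:])
    return np.concatenate([r.real, r.imag])

# "Training set": features of simulated snapshots on a 0.5-degree grid
train_angles = np.arange(-60.0, 60.01, 0.5)
train_feats = np.array([feature(snapshots(a)) for a in train_angles])

def estimate_aoa(X, k=3):
    """k-NN regression: average the angle labels of the k closest training features."""
    dist = np.linalg.norm(train_feats - feature(X), axis=1)
    nearest = np.argsort(dist)[:k]
    return float(train_angles[nearest].mean())
```

For example, `estimate_aoa(snapshots(20.0))` should return a value close to 20 degrees. A learned regressor such as a neural network would replace the lookup table, but the pipeline (simulated or measured data, feature extraction, supervised regression) is the same.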

The most widely used AI technique for channel characterization is clustering of multipath components (MPCs) [4], since clustered MPCs are widely observed in radio channels and the cluster structure is widely used in channel modeling [5]. For dynamic channels, AI assisted tracking of MPCs or clusters is of great significance for channel characterization and modeling [6]. Generally, tracking MPCs means identifying the movement/trajectory of each MPC across consecutive snapshots of measurement data, whereas clustering MPCs means finding groups of MPCs that have similar characteristics. Essentially, both tracking and clustering analyze the distribution pattern of MPCs in multiple data domains, and both significantly help improve the accuracy of wireless channel characterization and modeling. Nevertheless, in most existing work, tracking and clustering proceed independently, i.e., MPCs are either tracked first and clustered later, or clustered first and tracked later. As a result, errors in the first stage can degrade the accuracy of the second. More importantly, for time-varying channels, the evolution pattern of MPCs is an important characteristic that needs to be considered during clustering. Hence, instead of clustering MPCs in each snapshot separately, some recent research identifies clusters across consecutive snapshots [7], so that the evolution pattern of MPCs is taken into account during clustering.
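As a hedged illustration of snapshot-wise MPC clustering (not the algorithm of [4] or [7]), the sketch below runs K-means, a common baseline for MPC clustering, on synthetic MPCs described by delay and AoA. The cluster centres and spreads, the per-domain standardisation, and the function name `kmeans` are assumptions chosen for illustration; practical schemes may instead use a dedicated metric such as the multipath component distance rather than the plain Euclidean distance used here.

```python
import numpy as np

def kmeans(X, k, n_init=10, n_iter=100, seed=0):
    """Lloyd's K-means with random restarts; returns the labels of the best run."""
    rng = np.random.default_rng(seed)
    best_labels, best_inertia = None, np.inf
    for _ in range(n_init):
        centers = X[rng.choice(len(X), k, replace=False)]
        for _ in range(n_iter):
            dist = np.linalg.norm(X[:, None, :] - centers[None, :, :], axis=2)
            labels = dist.argmin(axis=1)
            new_centers = np.array([X[labels == j].mean(axis=0) if np.any(labels == j)
                                    else centers[j] for j in range(k)])
            if np.allclose(new_centers, centers):
                break
            centers = new_centers
        # Final assignment consistent with the final centres
        labels = np.linalg.norm(X[:, None, :] - centers[None, :, :], axis=2).argmin(axis=1)
        inertia = np.sum((X - centers[labels]) ** 2)
        if inertia < best_inertia:
            best_labels, best_inertia = labels, inertia
    return best_labels

# Synthetic MPCs (assumed): 3 clusters in (delay [ns], AoA [deg]), 20 MPCs each
rng = np.random.default_rng(0)
true_centres = np.array([[50.0, -30.0], [120.0, 10.0], [200.0, 45.0]])
spread = np.array([5.0, 3.0])
mpcs = np.vstack([c + spread * rng.standard_normal((20, 2)) for c in true_centres])
true_labels = np.repeat(np.arange(3), 20)

# Standardise each domain so delay and angle contribute comparably
features = (mpcs - mpcs.mean(axis=0)) / mpcs.std(axis=0)
labels = kmeans(features, k=3)
```

Tracking-aware schemes such as [7] would add the snapshot index (or MPC velocity) to the feature space so that clusters are formed across snapshots rather than within each one.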

Another application of AI algorithms to wireless channels is intelligent communication scenario identification based on channel features. Scenario identification can generally be treated as a classification problem, which AI algorithms solve well. In [8], Huang et al. propose a line-of-sight (LOS) and non-line-of-sight (NLOS) identification scheme, the first to use angle information to identify LOS/NLOS scenarios, which significantly reduces the error rate. However, current scenario identification is mostly limited to LOS/NLOS recognition, whereas realistic communication scenarios are much more complicated. Therefore, research on scenario identification based on channel data is still insufficient and needs further exploration, and extended techniques could be developed to identify more specific scenarios.
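Since scenario identification is a classification problem, a minimal sketch is a logistic-regression LOS/NLOS classifier. This is not the angle-based scheme of [8]: the two features (Rician K-factor in dB and RMS delay spread in ns) and their Gaussian distributions are invented for illustration, and `predict_los` is a hypothetical helper.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 500
# Synthetic, assumed feature distributions (not measurement data)
k_los, ds_los = rng.normal(8.0, 3.0, n), rng.normal(30.0, 10.0, n)
k_nlos, ds_nlos = rng.normal(-2.0, 3.0, n), rng.normal(80.0, 20.0, n)
X = np.column_stack([np.r_[k_los, k_nlos], np.r_[ds_los, ds_nlos]])
y = np.r_[np.ones(n), np.zeros(n)]             # 1 = LOS, 0 = NLOS

# Standardise features and add a bias column
mu, sd = X.mean(axis=0), X.std(axis=0)
Xb = np.column_stack([np.ones(len(X)), (X - mu) / sd])

# Logistic regression trained by batch gradient descent on the cross-entropy loss
w = np.zeros(Xb.shape[1])
for _ in range(2000):
    p = 1.0 / (1.0 + np.exp(-Xb @ w))
    w -= 0.5 * Xb.T @ (p - y) / len(y)

accuracy = np.mean((Xb @ w > 0) == (y == 1))

def predict_los(k_factor_db, delay_spread_ns):
    """Return True if the (hypothetical) classifier decides LOS."""
    z = np.r_[1.0, (np.array([k_factor_db, delay_spread_ns]) - mu) / sd]
    return bool(z @ w > 0)
```

Extending this to more specific scenarios amounts to replacing the binary logistic model with a multi-class classifier (e.g., softmax regression or a neural network) trained on labelled channel data from each scenario.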

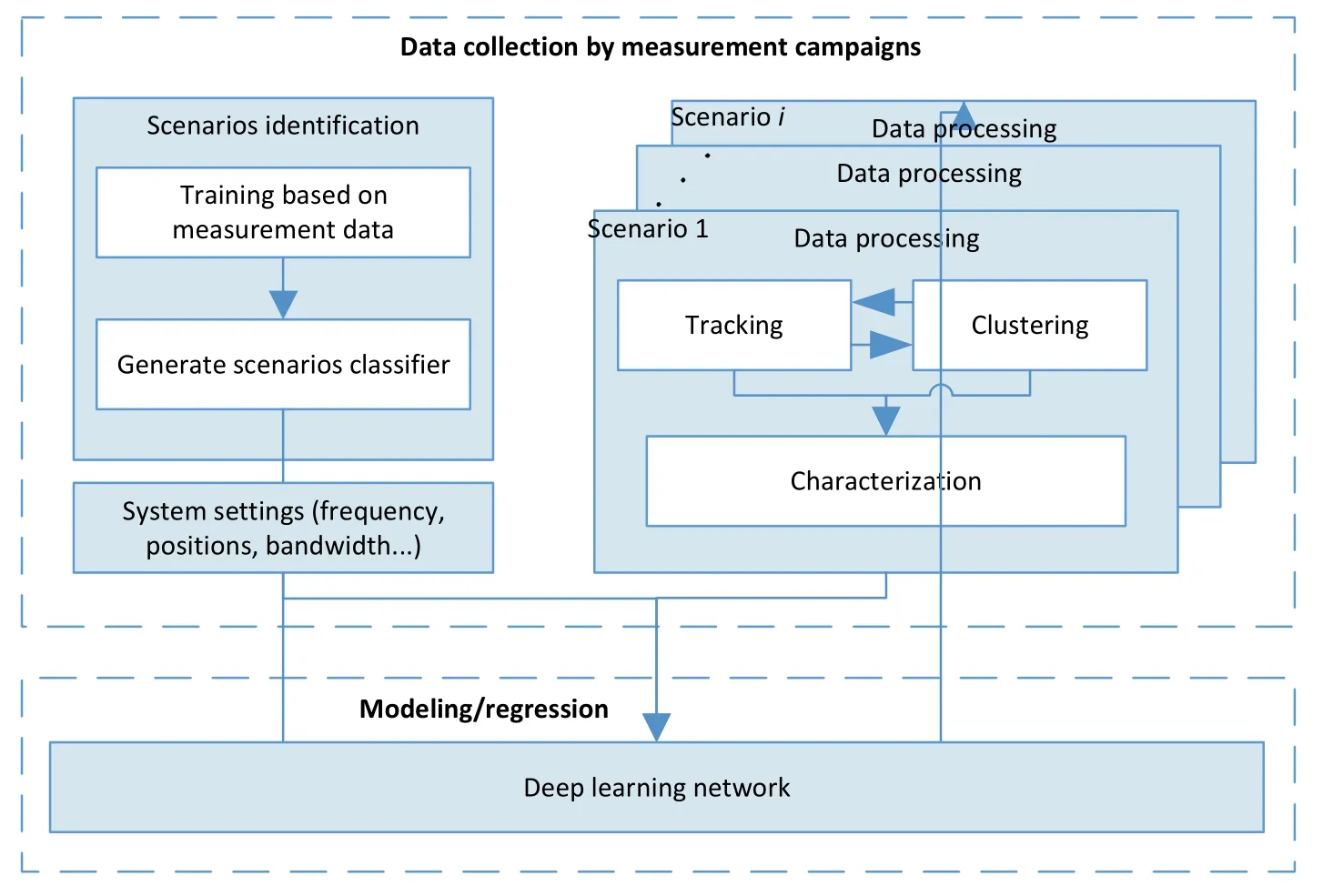

Figure 2. AI-based channel modeling framework in [3].

AI-based channel modeling has also drawn great attention, because massive numbers of access devices, new propagation scenarios, richer frequency bands, and increasing numbers of antenna elements generate large datasets. One expected approach is to use training datasets to build regression models that predict channel characteristics. A universal AI-based channel modeling framework is proposed in [3] and shown in Figure 2. Note that AI-based algorithms are sensitive to the variety of the training data; therefore, performance can be improved by using more training data. One factor limiting the development and application of AI-based channel modeling is the acquisition of training data from representative scenarios. With the continuous expansion of communication scenarios and frequency bands in future wireless systems, it is challenging to carry out a large number of measurement campaigns for each case. In this situation, data acquisition based on simulated channels is a feasible alternative.
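The regression approach can be illustrated, under strong simplifications, by fitting the classical log-distance path-loss model PL(d) = PL0 + 10 n log10(d/d0) + X_σ to data generated from simulated channels, in line with the simulation-based data acquisition mentioned above. The "true" parameters (PL0 = 40 dB, n = 3, σ = 4 dB) are arbitrary assumptions, ordinary least squares stands in for a learned regressor, and `predict_path_loss` is a hypothetical helper.

```python
import numpy as np

rng = np.random.default_rng(2)
d0 = 1.0                                      # reference distance [m]
pl0_true, n_true, sigma_db = 40.0, 3.0, 4.0   # assumed ground truth for the simulation

# Simulated channel data: distances and path loss with log-normal shadowing
d = rng.uniform(10.0, 500.0, 2000)
pl = pl0_true + 10 * n_true * np.log10(d / d0) + rng.normal(0.0, sigma_db, d.size)

# Linear regression on the feature 10*log10(d/d0)
A = np.column_stack([np.ones_like(d), 10 * np.log10(d / d0)])
(pl0_hat, n_hat), *_ = np.linalg.lstsq(A, pl, rcond=None)
sigma_hat = np.std(pl - A @ np.array([pl0_hat, n_hat]), ddof=2)   # shadowing std [dB]

def predict_path_loss(dist_m):
    """Predicted mean path loss [dB] at distance dist_m."""
    return pl0_hat + 10 * n_hat * np.log10(np.asarray(dist_m) / d0)
```

A neural regressor would take richer inputs (frequency, antenna height, scenario descriptors) and predict several channel characteristics at once, but it would be trained and validated on data in the same way.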

III. CHANNEL CODING

PHY transmitter and receiver design typically relies on a mathematical channel model. Guided by coding theory and the principles of modern engineering, Polar, Turbo, and LDPC codes with large block lengths achieve performance close to the Shannon limit over additive white Gaussian noise (AWGN) channels. However, many problems are not yet well solved. Firstly, we may not know the optimal techniques and/or computationally efficient algorithms for a given model. Traditional AWGN capacity-achieving codes such as LDPC and Turbo codes perform poorly with the small block lengths required when encoder/decoder delay is restricted. Current communication systems consist of several individual components, such as channel coding and modulation, and this block-wise approach is not guaranteed to be globally optimal, while jointly optimizing these components is intractable or requires very high computational power. Secondly, accurate channel models and accurate channel state information may not be available. For example, deriving the channel response in molecular communication is challenging, as its reaction-diffusion mechanism involves coupled, non-linear partial differential equations that have no closed-form solution in general.

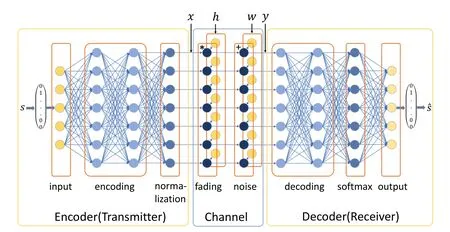

Figure 3. The structure of the channel autoencoder (s, x, h, w, and y denote the digital data, the transmitted symbol vector, the channel response, the noise, and the received symbol vector, respectively).

Most attempts at applying DL in this regime fall into two categories: i) designing a neural decoder for a given canonical encoder, and ii) jointly designing both the neural encoder and decoder, which is also known as the channel autoencoder (AE), as shown in Figure 3. Designing the channel AE is more challenging, partly because the wireless channel must be accounted for during training. One of the challenges arises in the channel model deficit case, where no differentiable channel model is available and the neural network therefore cannot be trained by backpropagating the gradient through the channel layer. In [9], Aoudia and Hoydis propose to train the receiver using the true gradient, and the transmitter using a gradient that is estimated by sampling the channel distribution. The transmitter and receiver are trained alternately until a stopping criterion is satisfied. Another approach is to use a generative adversarial network (GAN) to learn a differentiable generative model of the channel, and to train the channel AE with the learned channel model instead of the real channel. Another recent breakthrough brought by the channel AE is the construction of new codes that meet low-latency requirements. In [10], Jiang et al. design low-latency efficient adaptive robust neural (LEARN) codes using a bidirectional recurrent neural network (RNN), which outperform convolutional codes in the block code setting. The success of DL has also been leveraged in the design of joint source and channel coding (JSCC). The optimality of separate source and channel coding in Shannon's theorem assumes no constraint on the complexity of the design, which cannot be satisfied in practice owing to complexity and delay constraints. In [11], Bourtsoulatze, Kurka, and Gunduz propose an AE-based deep JSCC scheme, the first end-to-end JSCC architecture trained to transmit high-resolution images over AWGN and fading channels.
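To make the structure of Figure 3 concrete, the sketch below implements an untrained forward pass of a channel AE in NumPy: a message index s is one-hot encoded and mapped by a dense encoder to n complex symbols x, normalised to unit average power, passed through a flat-fading channel y = h x + w, and decoded by a small dense network with a softmax over the M messages. The layer sizes, the perfect-CSI equalisation y/h at the receiver, and the random weights are assumptions; in practice the weights would be learned by minimising the cross-entropy computed at the end (e.g., with an autodiff framework), and none of this reproduces the specific designs of [9]-[11].

```python
import numpy as np

rng = np.random.default_rng(0)
M, n, hidden = 16, 7, 32        # messages (4 bits), complex channel uses, hidden width (assumed)

# Hypothetical, untrained weights; training would update these end to end
W_enc = 0.1 * rng.standard_normal((M, 2 * n))
W_d1 = 0.1 * rng.standard_normal((2 * n, hidden))
W_d2 = 0.1 * rng.standard_normal((hidden, M))

def encode(s):
    """Message index -> n complex symbols with energy n per codeword."""
    z = np.eye(M)[s] @ W_enc                          # (batch, 2n) real outputs
    z = np.sqrt(n) * z / np.linalg.norm(z, axis=1, keepdims=True)
    return z[:, :n] + 1j * z[:, n:]

def channel(x, snr_db):
    """Flat Rayleigh block fading plus complex AWGN: y = h x + w."""
    b = x.shape[0]
    h = (rng.standard_normal((b, 1)) + 1j * rng.standard_normal((b, 1))) / np.sqrt(2)
    sigma = 10 ** (-snr_db / 20)
    w = sigma * (rng.standard_normal(x.shape) + 1j * rng.standard_normal(x.shape)) / np.sqrt(2)
    return h * x + w, h

def decode(y, h):
    """Equalise with known h, then one ReLU layer and a softmax over M messages."""
    r = y / h
    a = np.maximum(np.concatenate([r.real, r.imag], axis=1) @ W_d1, 0.0)
    logits = a @ W_d2
    logits -= logits.max(axis=1, keepdims=True)       # numerical stability
    p = np.exp(logits)
    return p / p.sum(axis=1, keepdims=True)

s = rng.integers(0, M, size=8)
x = encode(s)
y, h = channel(x, snr_db=10.0)
probs = decode(y, h)
loss = -np.mean(np.log(probs[np.arange(len(s)), s]))  # cross-entropy training objective
```

With a differentiable channel, gradients of `loss` flow through `channel` back to `W_enc`; in the channel model deficit case, this is exactly the step that [9] replaces with a sampled gradient estimate.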

These recent developments in DL-based channel coding bring new challenges. The propagation characteristics of wireless communications make it quite different from other domains such as computer vision and natural language processing. To obtain an optimal and robust channel coding design for various environments, a natural idea is to use complex models with a large number of parameters. However, computing resources are highly limited in many wireless communication use cases. One promising direction is to design lightweight yet efficient structures by compressing the model and/or inventing new architectures, for example, using automated machine learning. Furthermore, training a DL model becomes more difficult as the network goes deeper and the search space of the problem grows. Communications is a field rich in expert knowledge about how to model problems concretely, and how to inject this expert knowledge into the design of DL models for channel coding is another challenge attracting increasing attention. Model-aided wireless AI offers a potential pathway to efficient architectures.

IV. INTELLIGENT SIGNAL IDENTIFICATION

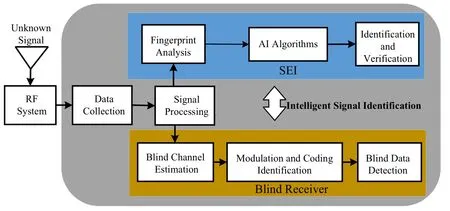

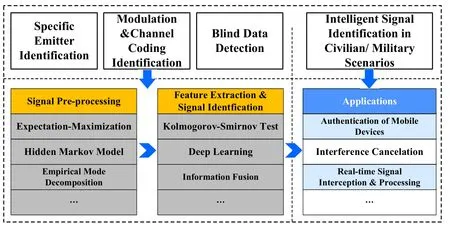

Figure 4. The block diagram of intelligent signal identification.

With the advent of the mobile internet and the internet of things, spectrum scarcity and wireless network security have become major challenges for future mobile communication systems due to the explosive growth of wireless devices and traffic. To address these issues, intelligent signal identification has emerged, which can ensure the security of wireless networks and improve spectrum utilization. Specific emitter identification (SEI) is a technique for identifying the unique transmitter of a given signal by using radio frequency (RF) fingerprints, which originate from imperfections in the transmitter's hardware. In general, the distortions of each transmitter, sometimes called its "DNA", have specific characteristics that can be used to distinguish different emitters with the same modulation parameters. SEI has been applied in many mobile communication systems, especially for security. For example, for mobile device authentication, SEI can build a collection of authenticated wireless devices and implement intrusion detection by comparing an intruder with the stored collection. Another interesting application of SEI is anti-counterfeiting, in which material characteristics from the manufacturing line can be regarded as a specific fingerprint for detecting counterfeit products.
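The enrolment-and-comparison idea behind SEI-based authentication can be sketched with a deliberately simple, single-feature RF fingerprint: the carrier frequency offset (CFO) caused by each transmitter's oscillator imperfection. The registry values, the known all-ones preamble, the tolerance, and the helpers `capture`, `estimate_cfo`, and `identify` are hypothetical; practical SEI uses richer fingerprints and, as discussed in this section, increasingly learned features, and a measured CFO also contains the receiver's own offset.

```python
import numpy as np

rng = np.random.default_rng(5)

def capture(cfo, n=1000, snr_db=20.0):
    """Received all-ones preamble from an emitter with normalised CFO (cycles/sample)."""
    k = np.arange(n)
    sigma = 10 ** (-snr_db / 20)
    noise = sigma * (rng.standard_normal(n) + 1j * rng.standard_normal(n)) / np.sqrt(2)
    return np.exp(1j * (2 * np.pi * cfo * k + rng.uniform(0, 2 * np.pi))) + noise

def estimate_cfo(y):
    """Lag-1 autocorrelation estimator; unambiguous for |cfo| < 0.5."""
    return np.angle(np.sum(y[1:] * np.conj(y[:-1]))) / (2 * np.pi)

# Hypothetical enrolled fingerprints of authenticated devices
REGISTRY = {"dev_A": 0.010, "dev_B": 0.025, "dev_C": -0.015}

def identify(y, tol=0.005):
    """Nearest enrolled device, or 'intruder' if no fingerprint is within tol."""
    f = estimate_cfo(y)
    name, ref = min(REGISTRY.items(), key=lambda item: abs(item[1] - f))
    return name if abs(ref - f) < tol else "intruder"
```

For example, `identify(capture(0.025))` should return `"dev_B"`, while a transmitter whose CFO is far from every enrolled value is flagged as `"intruder"`. A DL-based SEI system replaces the hand-crafted CFO feature with features learned from raw I/Q samples.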

Automatic modulation classification (AMC) and channel coding identification are techniques for determining the modulator and the encoder of a transmitter from the received data when prior information about the transmitter and the channel conditions is unknown. Blind data detection then detects the information bits in combination with these recognition techniques. Recognizing the modulation and channel coding is an essential step before demodulation and signal detection and plays a key role in various civilian and military applications, while blind data detection directly affects the reliability of communication systems. In communication systems, friendly signals should be securely transmitted and received, whereas malicious signals and users should be located, identified, and jammed. In such conditions, advanced intelligent signal identification is required for real-time signal interception and processing, which is important in electronic warfare operations. It can also be applied in commercial systems, such as software defined radio (SDR). Figure 4 describes the process of intelligent signal identification in a communication receiver.

According to the working state of the emitters to be identified, SEI schemes can be categorized into two classes: schemes using features of the transient signal and schemes using features of the steady-state signal. The transient signal is difficult to capture and is sensitive to channel conditions. Thus, researchers mainly focus on the steady-state signal, and many methods have been proposed, such as cumulant-based, higher-order spectrum-based, and other time-frequency feature-based approaches.

Recently, several AI-based SEI schemes have been studied that greatly improve recognition accuracy. Wang et al. [12] have shown that DL for specific emitter identification using convolutional neural networks (CNNs) can achieve higher identification accuracy than traditional methods based on expert-defined features. In [13], the authors proposed an adversarial learning technique based on generative adversarial nets (GANs) for identifying rogue RF transmitters and classifying trusted ones. Widely adopted modulation classification algorithms can be categorized into two classes, i.e., likelihood-based (LB) approaches and feature-based (FB) approaches. In the Bayesian sense, the LB method is optimal; however, it is sensitive to complex electromagnetic environments. The FB method identifies the modulator by extracting features from the received signal, and with properly chosen features it can provide near-optimal classification. Combined with AI algorithms, FB methods are more robust to channel conditions. In [14], DL-based radio signal classification was proposed and evaluated against a rigorous baseline method using higher-order moments and strong boosted gradient tree classification. Lately, channel coding identification using AI has also been investigated. In [15], the authors proposed a novel identification algorithm for space-time block coding (STBC) signals, in which features distinguishing spatial multiplexing (SM) and Alamouti (AL) signals are extracted by a convolutional neural network after the received sequence is preprocessed. Figure 5 describes the methods used in intelligent signal identification, where signals are first preprocessed and then feature extraction and signal identification are applied.
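A classic FB example, shown here as a hedged sketch rather than the method of [14], uses normalised fourth-order cumulants, which are insensitive to a constant carrier phase offset and vanish for Gaussian noise. Assuming unit-power PSK signals with a random phase rotation, the theoretical values of (|C40|, C42) normalised by C21² are (2, -2) for BPSK, (1, -1) for QPSK, and (0, -1) for 8PSK; the sketch classifies by the nearest reference point. The SNR, sample size, and helper names are assumptions.

```python
import numpy as np

rng = np.random.default_rng(6)

def psk(order, n=4000, snr_db=15.0):
    """Unit-power PSK symbols with a random phase offset plus complex AWGN."""
    phase = 2 * np.pi * rng.integers(0, order, n) / order + rng.uniform(0, 2 * np.pi)
    sigma = 10 ** (-snr_db / 20)
    noise = sigma * (rng.standard_normal(n) + 1j * rng.standard_normal(n)) / np.sqrt(2)
    return np.exp(1j * phase) + noise

def cumulant_features(x):
    """Return (|C40|, C42), both normalised by C21^2."""
    x = x - x.mean()
    c20 = np.mean(x ** 2)
    c21 = np.mean(np.abs(x) ** 2)
    c40 = np.mean(x ** 4) - 3 * c20 ** 2
    c42 = np.mean(np.abs(x) ** 4) - np.abs(c20) ** 2 - 2 * c21 ** 2
    return np.array([np.abs(c40), c42]) / c21 ** 2

# Theoretical noise-free feature values for unit-power PSK
REFERENCE = {"BPSK": (2.0, -2.0), "QPSK": (1.0, -1.0), "8PSK": (0.0, -1.0)}

def classify(x):
    """Nearest-reference decision in the (|C40|, C42) feature plane."""
    f = cumulant_features(x)
    return min(REFERENCE, key=lambda m: np.linalg.norm(f - np.array(REFERENCE[m])))
```

Noise shrinks both features by roughly (1 + σ²)⁻², which is why the decision is made by nearest reference rather than by exact matching; AI-based FB methods typically feed such features (or raw samples) to a learned classifier instead of a fixed rule.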

Figure 5. The methods used in intelligent signal identification.

With the development of the communication industry, the electromagnetic environment is becoming more and more complex, which makes signal identification under practical channel conditions more challenging. New recognition methods with high precision, greater robustness, and low complexity are desired. In non-cooperative communication, a receiver cannot obtain prior information about the transmitter, and designing a completely blind receiver that simultaneously estimates the channel conditions, recognizes the modulation and channel coding, and detects the information bits is still very challenging. In SEI techniques based on physical-layer fingerprints, the quality of the receiver used to collect the samples is crucial: a low-precision receiver may introduce new fingerprints of its own, which degrade SEI performance. Thus, it is important to design new identification algorithms that compensate for the performance degradation caused by receiver distortions.

V. CHANNEL ESTIMATION

Channel estimation, a key technology for wireless systems, has attracted much attention over the past decades. Recently, the design and implementation of AI assisted channel estimators has become a hot research topic. During the past three years, two AI algorithms have mainly been exploited to aid channel estimation: expectation maximization (EM) and neural networks (NNs). EM methods are used for blind channel estimation, while NN approaches aim to enhance estimation or detection performance by learning from large amounts of available data.
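As a hedged illustration of EM for blind estimation (a toy model, not a method from the cited works), consider a real scalar channel y_i = h s_i + w_i with unknown, equiprobable BPSK symbols s_i ∈ {-1, +1} and Gaussian noise of known variance σ². Treating the symbols as hidden variables, the E-step computes the posterior mean E[s_i | y_i] = tanh(h y_i / σ²), and the M-step sets h to the average of y_i E[s_i | y_i] (because E[s_i²] = 1). The values h = 0.8 and σ = 0.5 are assumptions; blind estimation leaves a sign ambiguity, which a positive initial guess resolves here.

```python
import numpy as np

rng = np.random.default_rng(11)
h_true, sigma, n = 0.8, 0.5, 5000           # assumed channel gain, noise std, block length
s = rng.choice([-1.0, 1.0], n)              # unknown BPSK symbols (never seen by the estimator)
y = h_true * s + sigma * rng.standard_normal(n)

def em_blind_gain(y, sigma2, h0=0.1, n_iter=100):
    """EM estimate of h from y = h*s + w with equiprobable s in {-1, +1}."""
    h = h0
    for _ in range(n_iter):
        soft_s = np.tanh(h * y / sigma2)    # E-step: posterior mean of each symbol
        h = np.mean(y * soft_s)             # M-step: maximise expected log-likelihood
    return h

h_hat = em_blind_gain(y, sigma ** 2)
```

No pilot symbols are used: the estimator only exploits the known symbol alphabet and noise statistics, which is what makes EM attractive for blind channel estimation.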

Ye et al. investigate a DL-based channel estimator and signal detector for orthogonal frequency division multiplexing (OFDM) systems [16]. They use deep neural networks (DNNs) to estimate channels implicitly and recover the data symbols directly. Inspired by image processing techniques, a channel estimator based on a convolutional neural network (CNN) is introduced in [17], where the channel parameters are treated as a low-resolution image to be refined by a super-resolution network and a denoising image restoration network. Yang et al. take the first step in employing DNNs to estimate doubly selective channels in [18]. Ma and Gao design an end-to-end DNN architecture, composed of a dimensionality reduction network and a reconstruction network that respectively mimic the pilot signals and the channel estimator, for wideband massive MIMO systems in [19]. Compared with traditional channel estimators such as least squares (LS) and linear minimum mean-square error (LMMSE), these AI assisted channel estimators can achieve lower mean-square error (MSE) and lower bit error rate (BER), especially when the channel models are non-linear, non-stationary, or non-Gaussian, or when the number of training symbols is limited [16-18].
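The LS and LMMSE baselines that these AI estimators are compared against can be sketched for one OFDM symbol with pilots on all N subcarriers. The sketch assumes an L-tap channel with a known exponential power delay profile, unit-modulus QPSK pilots, and a known noise variance σ², so that the LMMSE estimate is Ĥ_LMMSE = R_HH (R_HH + σ² I)⁻¹ Ĥ_LS. All parameter values are illustrative.

```python
import numpy as np

rng = np.random.default_rng(4)
N, L = 64, 8                                   # subcarriers, channel taps (assumed)
sigma2 = 10 ** (-10.0 / 10)                    # noise variance for 10 dB SNR
pdp = np.exp(-np.arange(L) / 2.0)
pdp /= pdp.sum()                               # exponential power delay profile, unit total power

F = np.exp(-2j * np.pi * np.outer(np.arange(N), np.arange(L)) / N)   # taps -> subcarriers
R_hh = F @ np.diag(pdp) @ F.conj().T           # frequency-domain channel covariance
W_lmmse = R_hh @ np.linalg.inv(R_hh + sigma2 * np.eye(N))   # valid for unit-modulus pilots

def trial():
    g = np.sqrt(pdp / 2) * (rng.standard_normal(L) + 1j * rng.standard_normal(L))
    H = F @ g                                           # true frequency response
    X = np.exp(1j * np.pi / 2 * rng.integers(0, 4, N))  # QPSK pilots, |X| = 1
    noise = np.sqrt(sigma2 / 2) * (rng.standard_normal(N) + 1j * rng.standard_normal(N))
    H_ls = (X * H + noise) / X                          # LS: per-subcarrier division
    H_lmmse = W_lmmse @ H_ls                            # LMMSE smoothing of the LS estimate
    return np.mean(np.abs(H_ls - H) ** 2), np.mean(np.abs(H_lmmse - H) ** 2)

mse_ls, mse_lmmse = np.mean([trial() for _ in range(200)], axis=0)
```

LS needs no channel statistics and its MSE equals the noise variance here, whereas LMMSE exploits the known covariance R_HH; learned estimators aim to approach or beat LMMSE when such statistics are unknown or the channel is not Gaussian.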

Figure 6. Grant-free RA techniques for mMTC.

Even though these AI assisted channel estimators are claimed to outperform traditional estimators, at least three open challenges must be addressed before they can be widely applied. First, high computational complexity. NN-based channel estimators have a large number of parameters to configure, including the network structure and coefficients, learning rates, and batch size, which must be determined offline. The heavy computational load leads not only to non-negligible time delay but also to large energy consumption. Second, parameter and data sensitivity. Even after these parameters are set, a small change in one parameter can result in a considerable change in the output. Meanwhile, NNs are trained using synthetic data under different channel and transceiver conditions. For instance, different SNRs require different training data, and hence the NN coefficients change with the SNR. Last but not least, the underlying reasons for the performance gain are unclear. So far, there is no theoretical analysis that demonstrates or explains how, why, and under which conditions DL, i.e., DNNs, can achieve lower MSE than LS and LMMSE.

VI. PHY DESIGNS FOR RANDOM ACCESS IN mMTC

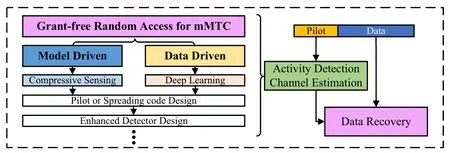

As one of the three major scenarios of 5G, mMTC features a massive number of devices, small packets, low or no mobility, energy constraints, and sporadic transmission, which cannot be supported by the traditional grant-based access method: excessive control packets cause low spectral efficiency, redundant handshaking procedures cause large latency and energy consumption, and the limited number of orthogonal preambles per cell causes serious collisions. Therefore, mMTC raises new challenges for random access (RA) and calls for new PHY designs and receiver algorithms. As a promising alternative, grant-free RA for mMTC has recently attracted significant attention, in which active devices communicate directly with the base station (BS) without waiting for a grant.

Grant-free RA based on modern sparse signal processing creates opportunities for optimized system design tailored to B5G/6G. As shown in Figure 6, active devices simultaneously send their non-orthogonal pilots (for activity detection and channel estimation) and data to the BS. By exploiting the sporadic activity of mMTC devices, active device detection and channel estimation can be formulated as a sparse signal recovery problem. In particular, the BS can conduct a two-phase decoding procedure, where activity detection and channel estimation are jointly performed using the pilots in the first phase, and data recovery is then performed in the second phase using least-squares estimation with the estimated channel state information (CSI) of the active devices. Various research activities have shown drastic improvements for RA, thanks to proper pilot (or spreading code) design, sophisticated receiver algorithms, and richer models that leverage signal structures and prior information beyond sparsity. For example, in [20], Xiao et al. utilize data length diversity to improve receiver performance.
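The two-phase procedure can be sketched as follows, under assumptions made only for illustration: K devices with i.i.d. complex Gaussian pilots of length L, Ka active devices (Ka assumed known to the BS), M BS antennas, unit large-scale fading, and 20 dB SNR. Phase 1 exploits the row-sparse multiple-measurement structure of Y = S H + W with simultaneous orthogonal matching pursuit (SOMP), a simple greedy stand-in for the AMP and sparse Bayesian learning detectors discussed in this section, and phase 2 recovers QPSK data by least squares with the estimated CSI.

```python
import numpy as np

rng = np.random.default_rng(7)
K, Ka, L, M, T = 100, 4, 40, 16, 50      # devices, active, pilot length, antennas, data symbols
sigma = 10 ** (-20.0 / 20)               # 20 dB SNR (assumed)

def cn(*shape):
    """i.i.d. CN(0, 1) samples."""
    return (rng.standard_normal(shape) + 1j * rng.standard_normal(shape)) / np.sqrt(2)

S = cn(L, K) / np.sqrt(L)                # non-orthogonal pilots, unit average column norm
active = np.sort(rng.choice(K, Ka, replace=False))
H = np.zeros((K, M), dtype=complex)
H[active] = cn(Ka, M)                    # row-sparse channel matrix
Y = S @ H + sigma * cn(L, M)             # received pilots: L x M

# Phase 1: joint activity detection and channel estimation with SOMP
support, R = [], Y.copy()
for _ in range(Ka):
    scores = np.linalg.norm(S.conj().T @ R, axis=1)   # correlation energy per device
    scores[np.array(support, dtype=int)] = 0.0        # never pick a device twice
    support.append(int(np.argmax(scores)))
    H_sup = np.linalg.pinv(S[:, support]) @ Y         # LS channel estimate on the support
    R = Y - S[:, support] @ H_sup                     # update the residual
detected = np.sort(support)
H_hat = np.linalg.pinv(S[:, detected]) @ Y           # Ka x M estimated CSI

# Phase 2: LS (zero-forcing) data recovery with the estimated CSI
qpsk = np.array([1 + 1j, 1 - 1j, -1 + 1j, -1 - 1j]) / np.sqrt(2)
X = qpsk[rng.integers(0, 4, (Ka, T))]
Y_data = H[active].T @ X + sigma * cn(M, T)           # received data: M x T
X_hat = np.linalg.pinv(H_hat.T) @ Y_data
X_dec = (np.sign(X_hat.real) + 1j * np.sign(X_hat.imag)) / np.sqrt(2)
ser = np.mean(~np.isclose(X_dec, X))                  # symbol error rate
```

The multiple antennas make the common support of the rows of H much easier to detect than in the single-antenna case, which is the MMV gain exploited by the detectors discussed next.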

Many works consider model-driven solutions, e.g., using approximate message passing (AMP) for activity detection. Furthermore, it has been shown that massive MIMO makes it possible to leverage the multiple-measurement vector (MMV) structure to enhance the detector, which significantly improves activity detection performance. In [21], Chen, Sohrabi, and Yu consider two enhancements of the AMP-based detector: i) when the large-scale fading coefficients of the devices are known to the BS, the detector is designed taking the statistics of the fast fading component into consideration, and ii) when the large-scale fading coefficients are unknown to the BS, the detector is designed considering the statistics of both the large-scale and fast fading components. In addition to AMP, sparse Bayesian learning [22-24], which has superior performance for sparse/low-rank minimization and adapts flexibly to structured signal models, is a promising technique for activity detection with extra domain information.
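For reference, the classical AMP iteration of Donoho, Maleki, and Montanari with a soft-thresholding denoiser can be sketched for a real-valued, single-antenna simplification of activity detection; the statistics-aware denoisers and MMV extensions of [21] are not reproduced. The Gaussian pilot matrix, the threshold rule θ = α‖z‖/√n with α = 1.5, the channel amplitudes, and all sizes are assumptions. The last term of the residual update is the Onsager correction that distinguishes AMP from plain iterative thresholding.

```python
import numpy as np

def soft_threshold(u, theta):
    """Element-wise soft-thresholding denoiser."""
    return np.sign(u) * np.maximum(np.abs(u) - theta, 0.0)

def amp(A, y, alpha=1.5, n_iter=100):
    """AMP with soft thresholding; A is n x N with roughly unit-norm columns."""
    n, N = A.shape
    x = np.zeros(N)
    z = y.copy()
    for _ in range(n_iter):
        theta = alpha * np.linalg.norm(z) / np.sqrt(n)   # threshold tracks the effective noise
        x = soft_threshold(x + A.T @ z, theta)
        # Residual with Onsager term: (number of nonzeros / n) times the previous residual
        z = y - A @ x + z * np.count_nonzero(x) / n
    return x

rng = np.random.default_rng(8)
N, n, Ka = 200, 100, 10                   # devices, pilot length, active devices (assumed)
A = rng.standard_normal((n, N)) / np.sqrt(n)
active = rng.choice(N, Ka, replace=False)
x_true = np.zeros(N)
x_true[active] = rng.choice([-1.0, 1.0], Ka) * rng.uniform(0.5, 1.5, Ka)
y = A @ x_true + 0.01 * rng.standard_normal(n)

x_hat = amp(A, y)
detected = np.sort(np.argsort(-np.abs(x_hat))[:Ka])    # Ka largest magnitudes
```

Replacing `soft_threshold` with an MMSE denoiser matched to the channel and activity statistics, and the scalar entries with M-antenna rows, leads to the detectors analysed in [21].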

Another line of research is the data-driven paradigm. The core of sparse-signal-processing-oriented grant-free RA for mMTC is the sparse signal recovery problem, which has no closed-form solution and is solved by complex iterative algorithms. A direction of growing interest is to employ DL techniques to develop fast yet accurate algorithms for this sparse linear inverse problem. Recently, Li et al. proposed an approach that jointly designs the pilot sequence matrix and the activity detector using an AE [25]. Specifically, the proposed solution consists of an AE and a hard thresholding module. The AE includes two parts: i) the encoder, which imitates the noisy linear measurement procedure, and ii) the decoder, which conducts the sparse reconstruction. Furthermore, by dividing the complex signal into real and imaginary parts and using a standard real-valued AE, the proposed AE can handle complex signals. In [26], Ye et al. apply DL by parameterizing the intractable variational function with a specially designed DNN to incorporate random user activation and symbol spreading. In [27], Bai et al. propose a DL method with a novel block restrictive activation nonlinear unit to capture the block sparse structure in wideband wireless communication systems, which enhances active user detection and channel estimation performance in mMTC. In [28], Chen et al. propose an end-to-end trainable DL architecture with adaptive depth, obtained by adding an extra halting score at each layer, which suits the case where the number of active users varies. These data-driven methods can solve the sparse signal recovery problem with low computational complexity.
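The real-valued treatment of complex signals mentioned for [25] relies on a standard identity: a complex linear measurement y = A x is equivalent to the real system [Re y; Im y] = [[Re A, -Im A], [Im A, Re A]] [Re x; Im x]. The sketch below checks this identity and adds a hard-thresholding activity decision on a real-stacked vector; the threshold value, sizes, and helper names are assumptions, and the AE itself is not shown.

```python
import numpy as np

def to_real_matrix(A):
    """Real-valued equivalent (2n x 2N) of a complex n x N matrix."""
    return np.block([[A.real, -A.imag], [A.imag, A.real]])

def to_real_vector(x):
    """Stack real and imaginary parts: [Re x; Im x]."""
    return np.concatenate([x.real, x.imag])

def hard_threshold_activity(x_real, tau):
    """Declare device k active if |x_k| = sqrt(Re^2 + Im^2) exceeds tau."""
    N = x_real.size // 2
    return np.hypot(x_real[:N], x_real[N:]) > tau

rng = np.random.default_rng(12)
n, N = 12, 30
A = rng.standard_normal((n, N)) + 1j * rng.standard_normal((n, N))
x = np.zeros(N, dtype=complex)
x[[3, 17]] = [1.0 - 0.5j, -0.8j]          # two active devices (illustrative)

y_real = to_real_matrix(A) @ to_real_vector(x)
active = hard_threshold_activity(to_real_vector(x), tau=0.1)
```

In an AE-based detector, the real matrix would be the (learned) encoder weights, the decoder output would replace `to_real_vector(x)`, and the hard-thresholding step would turn the reconstruction into activity decisions.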

While modern RA techniques provide promising gains, further investigation is necessary to understand their true potential in mMTC. One challenge is synchronization. Many existing solutions are only applicable to devices with no mobility, where non-orthogonal pilots are assigned to different devices and the timing advance (TA) is assumed to be available, so that signals from different devices are received at the BS synchronously. Furthermore, unlike scheduled access, RA fails to leverage specific channel and traffic characteristics. Combining RA with data-driven methods could unleash new RA designs, in which the BS learns from data of a specific IoT scenario and exploits this information to enhance device activity detection.

VII. MASSIVE MIMO POWER CONTROL

Massive MIMO, one of the key technologies of 5G mobile systems, essentially groups together a large number of antennas at the transmitter and/or receiver to provide very high spectral and energy efficiency [29]. With so many antennas, one of the major challenges is finding the optimal power control policy in both the downlink and the uplink. For example, uplink power control in massive MIMO systems with classical linear reception is often a non-convex problem that cannot be solved with low complexity [30,31]. Increasing the power of one user may inflict severe interference on other users, and it is challenging to find a real-time, low-complexity power control algorithm for networks with hundreds of antennas and users.

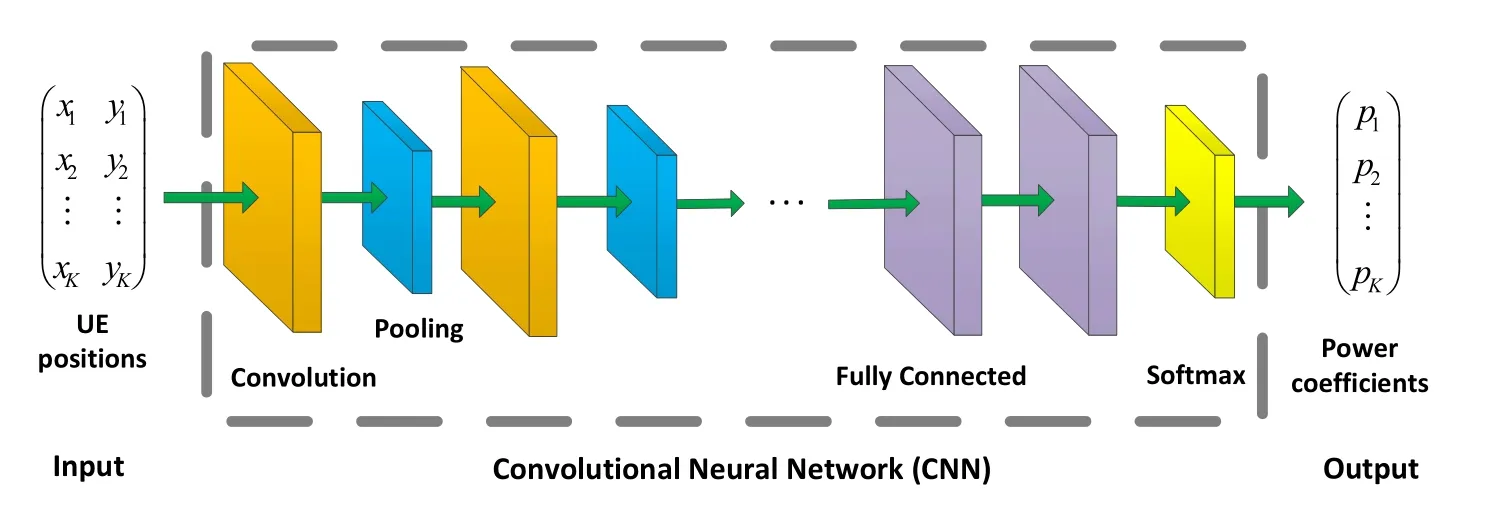

Figure 7. CNN-based power control for massive MIMO systems.

As a machine learning technique, deep learning has attracted much interest for finding optimal power control policies in massive MIMO systems. Basically, optimal power control is a combinatorial search problem. Compared with traditional optimization methods (greedy-like and orthogonal matching pursuit algorithms), deep learning can greatly reduce the computational complexity [32]. Inspired by this, as shown in Figure 7, some recent papers use deep learning to solve the uplink and/or downlink power allocation problem in massive MIMO. For example, the authors in [33] studied the max-sum-rate and max-min power allocation problems in the uplink of massive MIMO systems using artificial neural networks (ANNs), which take the user positions as input and output the power allocation. In [34], a DNN is trained to learn the mapping between user positions and the max-min or max-prod optimal power allocation policies in the downlink of massive MIMO systems, and then predicts the power allocation from the positions of the user equipments (UEs).
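As a hedged sketch of how (position, power) training pairs for such a network might be generated, the code below uses a simplified uplink model that is an assumption, not the setup of [33] or [34]: maximum-ratio (MR) combining with perfect CSI in i.i.d. Rayleigh fading, for which a commonly used closed-form SINR is SINR_k = M p_k β_k / (Σ_j p_j β_j + σ²). Under this model, max-min fairness is achieved by equalising the received powers p_k β_k at the largest value allowed by the weakest user, i.e., p_k = P_max β_min / β_k. The path-loss law, cell radius, and sizes are illustrative.

```python
import numpy as np

rng = np.random.default_rng(9)

def max_min_power(beta, M, p_max, noise=1.0):
    """Max-min fair uplink powers for SINR_k = M p_k b_k / (sum_j p_j b_j + noise)."""
    c = p_max * beta.min()              # common received power, set by the weakest user
    p = c / beta
    sinr = M * p * beta / (np.sum(p * beta) + noise)
    return p, sinr

def sample_users(K, radius=250.0, d_min=10.0):
    """Uniform users in a disc around the BS; beta = (d / 100 m)^-3.7 (assumed, noise-normalised)."""
    r = np.sqrt(rng.uniform(d_min ** 2, radius ** 2, K))
    phi = rng.uniform(0.0, 2 * np.pi, K)
    positions = np.column_stack([r * np.cos(phi), r * np.sin(phi)])
    return positions, (r / 100.0) ** -3.7

# Training pairs: flattened user positions -> max-min optimal power vector
dataset = []
for _ in range(1000):
    pos, beta = sample_users(K=8)
    p, _ = max_min_power(beta, M=64, p_max=1.0)
    dataset.append((pos.ravel(), p))
```

A DNN trained on `dataset` would learn the position-to-power map directly; with more realistic receivers (e.g., MMSE combining) or imperfect CSI, the labels must instead come from an iterative optimizer, which is where the learned approximation saves the most computation.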

However, most previous works assume perfect knowledge of the users' positions and the large-scale fading information. In practice, this information may be inaccurate, and the learned solutions may only reach a local optimum. Moreover, sophisticated receivers and scalability need to be considered in deep learning approaches. Developing robust, low-complexity deep learning techniques for power control in massive MIMO therefore deserves attention. In addition, the performance of different power control objectives in the uplink of massive MIMO with different receivers is of significant interest.

VIII. PHY RESOURCE MANAGEMENT

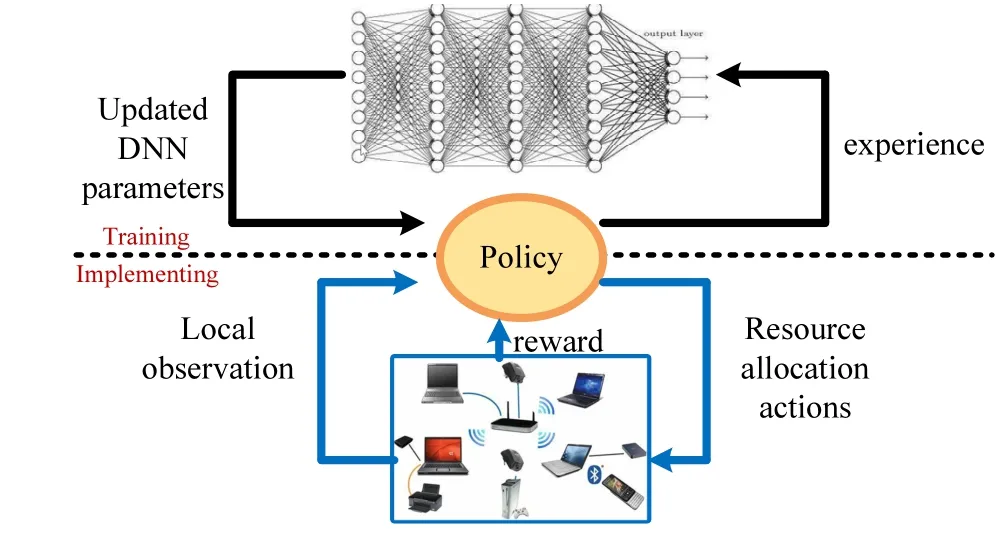

Because communication resources, including frequency band, power, and time, are limited, it is essential to maximize resource utilization efficiency. To this end, various resource allocation schemes have been reported for different system design objectives, such as sum-rate maximization, secrecy rate maximization, power minimization, and energy efficiency maximization [35]. Traditionally, resource allocation has been optimized mainly using convex optimization theory or game theory. These theories are very capable of finding the globally optimal solution or the Nash equilibrium that maximizes physical-layer resource efficiency by solving deterministic optimization problems. However, they rely on mathematical models or statistical assumptions and are sometimes only effective in small-scale, quasi-static networks. In large-scale and dynamic cases, resource allocation problems often involve multiple variables, constraints, and objectives, requiring iterative computation with relatively high complexity, or must be solved frequently to adapt to fast changes in network states, e.g., channel, link topology, and traffic.
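As an example of the convex-optimization approach, sum-rate maximization over parallel channels with noise-normalised gains g_i under a total power budget P has the classical water-filling solution p_i = max(μ - 1/g_i, 0), where the water level μ is chosen so that Σ p_i = P. The sketch finds μ by bisection; the function names and example gains are illustrative.

```python
import numpy as np

def water_filling(gains, p_total, tol=1e-12):
    """Sum-rate-optimal powers p_i = max(mu - 1/g_i, 0) with sum(p) = p_total."""
    inv = 1.0 / np.asarray(gains, dtype=float)      # noise-to-gain ratios
    lo, hi = inv.min(), inv.max() + p_total         # bracket for the water level mu
    while hi - lo > tol:
        mu = 0.5 * (lo + hi)
        if np.maximum(mu - inv, 0.0).sum() > p_total:
            hi = mu
        else:
            lo = mu
    return np.maximum(0.5 * (lo + hi) - inv, 0.0)

def sum_rate(gains, powers):
    """Sum of log2(1 + g_i p_i) in bit/s/Hz."""
    return float(np.sum(np.log2(1.0 + np.asarray(gains) * powers)))

p = water_filling([2.0, 1.0, 0.1], p_total=1.0)
```

Here the weakest channel is switched off and the water level settles at μ = 1.25, giving powers 0.75 and 0.25 for the two stronger channels. Such closed-form or bisection solutions exist only for simple models; with many coupled variables, constraints, and fast-changing network states, the iterative computation becomes the bottleneck described above.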

Recently, researchers have begun to apply DL to PHY resource management in various systems and networks, such as cognitive radio networks and massive MIMO systems. DL provides multi-layer computational models that can learn efficient representations of data at multiple levels of abstraction. These models can uncover hidden patterns of wireless scenarios, such as channel features and traffic patterns, and offer a powerful data-driven approach to problems that lack accurate models or have high computational complexity. It has been demonstrated that DL can achieve significant performance gains over traditional methods. In [36], a DL-based method referred to as RF Learn was presented. It enables the system to extract spectrum knowledge directly from unprocessed I/Q samples in the RF loop, which helps solve the fundamental problems of modulation recognition and OFDM parameter recognition.

In particular, reinforcement learning (RL) combined with deep neural networks, i.e., deep RL, offers a way to handle service requirements that are hard to model exactly and are not amenable to existing optimization approaches [37]. Deep RL can also learn the correspondence between the parameters of a PHY resource allocation optimization problem and its solutions. RL is a powerful tool for sequential decision making: an agent learns to maximize a numerical reward while interacting with an unknown environment, as illustrated in Figure 8. Mathematically, an RL problem is often modeled as a Markov decision process (MDP). The RL framework therefore naturally supports sequential decision making under uncertainty in resource allocation problems, especially in dynamic wireless environments.

Figure 8 depicts a centralized training architecture, in which a central controller gathers experiences from the agents and compiles the reward for deep Q-network (DQN) training. In the implementation, each agent collects a local observation of the wireless network and then uses its local copy of the trained DQN to guide its resource allocation in a distributed manner. To alleviate the impact of environment non-stationarity, the agents may take turns changing their actions, which stabilizes the training.
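As a reference point for the MDP formulation and DQN training mentioned above, the standard objective and DQN loss can be written as follows. The notation (state s, action a, reward r, discount factor γ, network parameters θ, target-network parameters θ⁻, experience buffer 𝒟) is ours and is not taken from [37]; the exact form used in a particular resource allocation scheme may differ.

```latex
\begin{aligned}
&\text{MDP: } (\mathcal{S}, \mathcal{A}, P, r, \gamma), \qquad
\pi^{\star} = \arg\max_{\pi} \; \mathbb{E}_{\pi}\Big[\sum_{t=0}^{\infty} \gamma^{t} r_{t}\Big], \qquad 0 \le \gamma < 1,\\
&y = r + \gamma \max_{a'} Q\big(s', a'; \boldsymbol{\theta}^{-}\big),\\
&\mathcal{L}(\boldsymbol{\theta}) = \mathbb{E}_{(s, a, r, s') \sim \mathcal{D}}\Big[\big(y - Q(s, a; \boldsymbol{\theta})\big)^{2}\Big].
\end{aligned}
```

In a centralized training architecture, the central controller would gather the experiences (s, a, r, s') from the agents into 𝒟, compute the rewards, and minimize this loss. Each agent would then act greedily on its local observation s by choosing the action that maximizes Q(s, a; θ).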

Figure 8. The deep RL training and implementation architecture for resource allocation in wireless networks.
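The centralized-training, distributed-execution loop of Figure 8 can be sketched in a toy form. This is a minimal sketch under explicitly stated assumptions, not the scheme of [37]. Two hypothetical transmitters (`N_AGENTS = 2`) each choose one of `N_CHANNELS = 3` channels, and agents on the same channel collide. The global reward compiled by the controller is the number of collision-free agents. A tabular Q-learning update stands in for DQN training, with no neural network and no target network, so the example runs with the Python standard library only. All names (`observe`, `global_reward`, `train`, `execute`) and hyperparameters are illustrative.

```python
import random
from collections import deque

# Hypothetical toy setting (assumption, not from the article): each agent picks
# one channel; agents sharing a channel collide and get no throughput.
N_AGENTS, N_CHANNELS = 2, 3


def observe(agent, channels):
    """Local observation: the agent's index plus the channels used by the other agents."""
    others = tuple(c for j, c in enumerate(channels) if j != agent)
    return (agent, others)


def global_reward(channels):
    """Reward compiled by the central controller: number of collision-free agents."""
    return sum(1 for c in channels if channels.count(c) == 1)


def greedy(q, obs):
    """Pick the channel with the largest Q-value (ties go to the lowest index)."""
    values = [q.get((obs, a), 0.0) for a in range(N_CHANNELS)]
    return values.index(max(values))


def train(steps=5000, alpha=0.1, gamma=0.5, eps=0.3, batch=8, seed=0):
    """Centralized training: the controller collects experiences and updates one shared Q-table."""
    rng = random.Random(seed)
    q = {}                      # tabular stand-in for the DQN (assumption)
    replay = deque(maxlen=500)  # experiences gathered from the agents by the controller
    channels = [rng.randrange(N_CHANNELS) for _ in range(N_AGENTS)]
    for t in range(steps):
        agent = t % N_AGENTS    # agents take turns: only one changes its action per step
        obs = observe(agent, channels)
        # epsilon-greedy exploration by the acting agent
        action = rng.randrange(N_CHANNELS) if rng.random() < eps else greedy(q, obs)
        channels[agent] = action
        reward = global_reward(channels)
        replay.append((obs, action, reward, observe(agent, channels)))
        # Q-learning update on a minibatch sampled from the experience buffer
        for s, a, r, s_next in rng.sample(list(replay), min(batch, len(replay))):
            target = r + gamma * max(q.get((s_next, b), 0.0) for b in range(N_CHANNELS))
            q[(s, a)] = q.get((s, a), 0.0) + alpha * (target - q.get((s, a), 0.0))
    return q


def execute(q, channels):
    """Distributed execution: each agent acts on its own observation with its local copy of q."""
    local_copies = [dict(q) for _ in range(N_AGENTS)]
    channels = list(channels)
    for agent in range(N_AGENTS):  # round-robin order, as during training
        channels[agent] = greedy(local_copies[agent], observe(agent, channels))
    return channels
```

Calling `execute(train(), [0, 0])` starts both agents on the same channel. With the learned table, the first agent should move off the occupied channel and the second should then avoid the first agent's new channel, giving a collision-free allocation. Round-robin execution is a design choice of this sketch. If both agents applied the same deterministic policy simultaneously from a colliding start, they could keep switching onto the same channel, which is the kind of non-stationarity that turn-taking is meant to mitigate.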

Different deep neural network architectures have different strengths and weaknesses in different applications, and no single architecture is best for all kinds of resource allocation tasks. Wireless resource allocation has its own unique characteristics, which may justify designing dedicated neural network architectures. Moreover, it is still unclear whether existing network architectures best suit the requirements of resource allocation. A theoretical understanding of the convergence properties of neural networks trained for this new purpose, as well as efficient training approaches, is also still largely missing. Additionally, it is extremely hard to build a simulator with fidelity high enough to guarantee that the learned resource allocation policies perform as expected when applied to real wireless systems.

IX.DISCUSSIONS AND CONCLUSIONS

This article presents recent developments in AI-assisted PHY techniques. In recent years, AI, especially DL, has become a powerful enabler and has in many cases been used to realize designs superior to traditional ones. To fully leverage the potential of AI and use these techniques in practice, the following research directions call for further investigation.

New PHY components. AI might lead to a revolutionary PHY design that is distinct from the block-by-block design of traditional communication systems. Hidden structures and features of radio propagation environments could be mined and exploited to boost communication capacity. Correlations in the temporal, spatial and spectral domains could be extracted and exploited to reduce pilot and feedback overhead.

Theoretical developments. AI provides benefits in complex communication scenarios that are difficult to describe with concrete mathematical models. However, the capabilities of AI are still not well understood, so we are not yet able to provide achievable capacity bounds or guarantee robustness for wireless communications. We also lack a theory that could guide the design of a neural network appropriate for a given target task.

Limitations in reality. A large part of the credit for the success of AI goes to the massive amounts of data produced nowadays. However, compared with other fields, e.g., computer vision, we do not have sufficiently large amounts of data in wireless communications. Building worldwide, open-access, real-world datasets could be one solution. It would also be helpful to inject existing expert knowledge into AI designs. Furthermore, computing and storage resources are highly limited in many wireless communication use cases. A promising direction would be to design a unified, lightweight yet efficient structure that addresses various tasks at mobile devices.

Universality of AI-based channel models. Future wireless systems will involve more frequency bands and scenarios, and may require cross-system integration with other existing communication systems such as satellite and maritime communications. All of this will make future wireless systems more complex and diverse, and will also challenge the universality of channel models. In particular, AI-based channel models are sensitive to the diversity of the training data, and it is challenging to collect enough training data in all scenarios. Evolved AI-based channel modeling needs to extract universal propagation mechanisms from datasets covering a limited set of scenarios to meet the growing needs of future wireless systems.

ACKNOWLEDGEMENT

This work is supported by the Fundamental Research Funds for the Central Universities (2020JBM090, 2020JBZD005); the National Key R&D Program of China (2018YFE0207600, 2020YFB1807201); the Key-Area Research and Development Program of Guangdong Province (2019B010157002); the Natural Science Foundation of China (61671046, 61911530216, 6196113039, U1834210); the Beijing Natural Science Foundation (L202019); the State Key Laboratory of Rail Traffic Control and Safety (RCS2021ZZ004, RCS2020ZT010) of Beijing Jiaotong University; the NSFC Outstanding Youth Foundation under Grant 61725101; and the Royal Society Newton Advanced Fellowship under Grant NA191006.
