Computing Power Network: The Architecture of Convergence of Computing and Networking towards 6G Requirement

Xiongyan Tang, Chang Cao, Youxiang Wang, Shuai Zhang, Ying Liu, Mingxuan Li, Tao He

Future Network Research Department, China Unicom Network Technology Research Institute, Beijing 100048, China

Abstract: In the 6G era, service forms with computing power at their core will be ubiquitous in the network. At the same time, collaboration among edge computing, cloud computing and the network is needed to support edge computing services with a strong demand for computing power, so as to optimize resource utilization. On this basis, this article discusses the research background, key techniques and main application scenarios of the computing power network. The discussion shows that the computing power network can effectively meet the multi-level deployment and flexible scheduling needs of future 6G services for computing, storage and network resources, and can adapt to the integration needs of computing power and network in various scenarios, such as user-oriented, government- and enterprise-oriented, and open computing power scenarios.

Keywords: 6G; edge computing; cloud computing; convergence of cloud and network; computing power network

I. INTRODUCTION

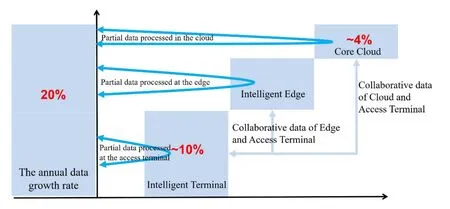

Many operators in North America, Europe and Asia were granted 5G licenses in 2019, marking the formal commercialization of 5G technology. With continuous improvements in mobile communication speed and reliability, the massive increase in connections, and the close combination of 5G with technologies such as cloud computing, big data, the mobile Internet and artificial intelligence, the 6G era is no longer far away. The analysis and prospects of 6G in [1] indicate that 6G will substantially meet future communication needs, including more comprehensive connections, higher transmission rates and more intelligent application solutions. In the 6G era, human beings will step into an intelligent society. Intelligence refers to the sum of knowledge and intellect; mapped to the digital world, it is "data + computing power + algorithm". Algorithms are developed by scientists, massive data comes from people and things in all walks of life, and processing that data requires a large amount of computing power. Computing power, which consists of a large number of computing devices, is therefore the basic platform of intelligence. Research shows that in 2020 intelligence will be dominated by the human brain and supplemented by the computer brain, represented by various intelligent terminals; from 2025 to 2030, the human brain and the computer brain will assist each other, and intelligent manipulators, assisted driving and intelligent manufacturing will appear widely; from 2030 to 2040, society will gradually enter a "machine intelligence" stage in which the human brain defines rules and the computer brain performs tasks automatically, with new service forms such as machine stewards, unmanned driving and unmanned factories appearing widely. At the same time, the total amount of global data keeps growing: it is expected to reach 47 ZB in 2020 and 163 ZB in 2025, with about 20% compound annual growth, most of it coming from Asia Pacific (about 40%), North America (about 25%) and Europe (about 15%). The number of servers installed in global data centers will reach 62 million units in 2020, growing by about 4% per year, and intelligent terminals (including mobile phones, M2M devices, PCs, etc.) will grow at a compound annual rate of about 10%. Because of technology constraints, the computing power of a single chip will approach its peak after the 5 nm node, which makes further growth of both traditional centralized data center computing power and intelligent terminal computing power very challenging [2]. To support the continuous growth of data in the era of machine intelligence, a two-level Access Terminal + Data Center architecture alone cannot meet data processing requirements, and computing power will necessarily spread from the Cloud and the Access Terminal to the Edge. The result is a three-level architecture for data processing: Access Terminal, Edge and Data Center. The processing capacity of the Edge has begun to grow rapidly; in particular, with the comprehensive construction of 5G networks, their large bandwidth and low latency will accelerate the diffusion of computing power demand from the Access Terminal and the Cloud to the Edge [3, 4]. The three-level computing power architecture is shown in Figure 1. Meanwhile, as a resource, computing power shares the universal characteristics of electricity. Similar to grid operators, network operators can build a unified resource-sharing platform that collects and schedules idle computing power resources distributed throughout the network. In this way, users who provide computing power can obtain benefits through the platform, while those who need computing power can obtain it from the network anytime and anywhere, without even needing to know who the providers are. In specific AI applications, computing power can also be combined with algorithms that AI application developers continuously optimize, separating the roles of computing power providers, computing power users and computing power developers and building a virtuous-cycle business model.
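The growth figures quoted above can be cross-checked with the standard compound annual growth rate (CAGR) formula, using only the two endpoints given in the text (47 ZB in 2020 and 163 ZB in 2025, i.e. five years of growth):

```latex
\mathrm{CAGR} = \left(\frac{D_{2025}}{D_{2020}}\right)^{1/5} - 1
             = \left(\frac{163\ \mathrm{ZB}}{47\ \mathrm{ZB}}\right)^{1/5} - 1
             \approx 3.468^{0.2} - 1 \approx 0.28
```

The endpoints alone therefore imply roughly 28% per year, somewhat above the approximately 20% rate quoted, so that rate is best read as a rough figure.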

Figure 1. Three-level computing power architecture of cloud/edge and access terminal.

The computing power network stimulates protocol innovation in network addressing: in addition to addressing based only on network metrics, computing power metrics are introduced. The network can automatically collect information about computing power and resource locations and then, by running a distributed control protocol, address requests to appropriate nodes for service. From the service perspective, users do not care where services are deployed in the network; they only state their own service requirements, including network requirements (such as bandwidth, delay and packet loss) and computing power requirements (such as how much computing power is needed or what kind of computing task must be completed). Through addressing, the network automatically finds the optimal resources that meet these requirements and deploys multiple services in serial or in parallel. Ultimately, the computing power network separates the roles of edge service provider, edge network provider, infrastructure provider and user, and makes the network the intermediary among multi-agent, heterogeneous and widely distributed computing power providers.
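The addressing idea above can be sketched as constraint filtering followed by a choice among the feasible nodes. This is a minimal illustration, assuming each candidate node advertises its path bandwidth, delay, packet loss and spare compute, and that a request states a limit on each. The `Node`/`Request` fields, the units (including TFLOPS as the compute measure) and the tie-breaking rule (lowest delay, then most spare compute) are hypothetical choices, not a protocol defined in the paper.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    # Hypothetical advertisement: network state of the path plus spare compute.
    name: str
    bandwidth_mbps: float
    delay_ms: float
    loss_rate: float
    free_tflops: float


@dataclass
class Request:
    # Service requirements stated by the user: network side and computing side.
    min_bandwidth_mbps: float
    max_delay_ms: float
    max_loss_rate: float
    min_tflops: float


def feasible(node: Node, req: Request) -> bool:
    """A node qualifies only if it meets every network and computing constraint."""
    return (node.bandwidth_mbps >= req.min_bandwidth_mbps
            and node.delay_ms <= req.max_delay_ms
            and node.loss_rate <= req.max_loss_rate
            and node.free_tflops >= req.min_tflops)


def address(nodes: list[Node], req: Request) -> Optional[Node]:
    """Return the feasible node with the lowest delay (ties: most free compute)."""
    candidates = [n for n in nodes if feasible(n, req)]
    if not candidates:
        return None
    return min(candidates, key=lambda n: (n.delay_ms, -n.free_tflops))


if __name__ == "__main__":
    nodes = [
        Node("edge-a", bandwidth_mbps=500, delay_ms=3, loss_rate=1e-4, free_tflops=2),
        Node("edge-b", bandwidth_mbps=800, delay_ms=5, loss_rate=1e-5, free_tflops=20),
        Node("cloud", bandwidth_mbps=1000, delay_ms=25, loss_rate=1e-5, free_tflops=500),
    ]
    req = Request(min_bandwidth_mbps=300, max_delay_ms=10, max_loss_rate=1e-3, min_tflops=10)
    print(address(nodes, req).name)  # edge-b
```

Here the nearest node lacks compute and the cloud is too far, so the request lands on `edge-b`; neither a pure network metric nor a pure compute metric alone would pick it.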

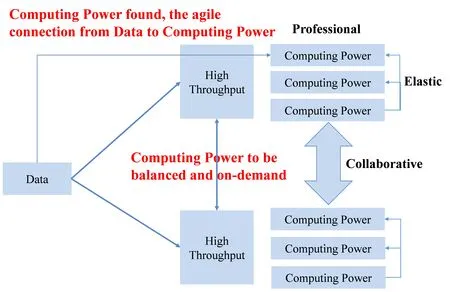

Figure 2. Demand for efficient computing power on the network.

II. EFFICIENT COMPUTING POWER AND COMPUTING POWER NETWORK

The concept of the cloudification network was first introduced in 2009 and has developed continuously with the rise of SDN/NFV technology. A cloudification network is designed for users' access to the cloud and for connections between clouds; it combines network service capabilities with cloud service capabilities to give cloud service users and operators the experience of Integrated Cloud and Network [5–7]. Similar to the development of cloud computing in 2019, with the rapid development of AI technology, efficient computing power will gradually become a key element supporting the development of the intelligent society. The demand for efficient computing power on the network is also increasing in the following three aspects, as shown in Figure 2.

Firstly, efficient computing power requires a professional network. Focusing on dedicated scenarios allows more calculations to be completed with lower power consumption and cost. At present, video and image analysis are relatively mature scenarios with the greatest demand for computing power, and they run through essentially all intelligent scenarios in all industries. Video analysis and processing at the Edge and in the Cloud requires high throughput, which depends on two key indicators: network bandwidth and latency. The larger the bandwidth and the lower the latency, the greater the data throughput. Secondly, efficient computing power requires an elastic network. Elastic data processing, such as over-allocation of computing power, agile provisioning of computing power and elastic 100 ms-level lightweight computing, requires the network to provide agile connection establishment and flexible adjustment of bandwidth and service level between data demands and computing power resources. Thirdly, efficient computing power requires a collaborative network. From cooperation among multiple cores inside a processor, to the "balance of computing power" among multiple servers in data centers, to "on-demand computing power" across the entire network edge, the purpose of collaboration is to make full use of computing power resources, and the bearer network needs to support computing power balancing, traffic scheduling and congestion management among multiple edges and between the edge and the core.

To achieve high-efficiency computing power, a new network architecture with deep integration of "computing + network" is needed to realize high throughput, agile connections from data to computing power, and balanced, on-demand computing power. Because of its close relationship with computing, we call this network architecture the Computing Power Network: a way of organizing the network that flexibly allocates and schedules computing resources among the cloud, network and edge according to the needs of computing services. Through deep "computing + network" integration technology, by combining the urgent worldwide demand for AI services, from individuals to enterprises, with their own network service capabilities and idle IT resources, operators can provide universal AI computing power for the whole of society, making computing power a new generation of universal standardized product after voice, short message, private line and traffic services. The value of this product is higher than that of traffic services, because it can combine users' latency demands with their computing power and computing location demands, take full advantage of operators' 5G network access capabilities, large number of edge computer rooms, wide optical fiber coverage and surplus IT resources, and even be packaged into multi-level, multi-type computing power services for different user levels and needs, ultimately providing powerful "energy" and "nourishment" for the AI society. Especially when AI services are combined with 6G and edge computing in the future, higher requirements will be placed on the network's extremely low delay and on coordination from computing power nodes to the edge and among edge nodes. The computing power network will safeguard the AI + 6G + MEC business model. To achieve this, operators' next-generation networks must also be built according to the requirements of providing computing power services. The convergence of computing and network will become an important trend of network evolution after the convergence of cloud and network.
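The claim that throughput depends on both bandwidth and latency can be made concrete with a standard bound, assuming a window-based transport such as TCP: a single flow can keep at most one window of unacknowledged data in flight per round trip, so its throughput is capped by both the link bandwidth and window / RTT. The 1 MB window and the 1 Gbit/s link below are illustrative assumptions.

```python
def max_throughput_mbps(bandwidth_mbps: float, window_bytes: int, rtt_ms: float) -> float:
    """Upper bound on single-flow throughput for a window-based transport.

    At most `window_bytes` can be unacknowledged per round trip, so the flow
    cannot exceed window / RTT, nor the link bandwidth itself.
    """
    window_limited_mbps = (window_bytes * 8) / (rtt_ms / 1000.0) / 1e6
    return min(bandwidth_mbps, window_limited_mbps)


if __name__ == "__main__":
    window = 1_000_000  # 1 MB window (illustrative)
    for rtt in (40.0, 10.0, 2.0):
        print(rtt, round(max_throughput_mbps(1000.0, window, rtt), 1))
```

With these numbers the flow reaches about 200 Mbit/s at a 40 ms RTT, about 800 Mbit/s at 10 ms, and only hits the 1000 Mbit/s link limit at 2 ms: adding bandwidth alone does not help a far-away flow, which is one reason computing power is pushed toward the Edge.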

Figure 3. Key technical elements of computing power network.

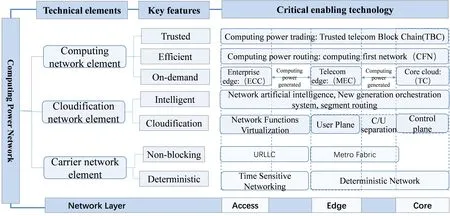

III. THE KEY TECHNIQUES OF COMPUTING POWER NETWORK

The computing power network needs three technical elements: the carrier network, the cloudification network and the computing network, of which the carrier network is the base. In the 5G era, URLLC and End-to-End Deterministic Network technologies are introduced to meet the needs of vertical industries such as VR/AR and industrial computing. Meanwhile, to achieve the goal of a Non-blocking Network, the leaf-spine architecture of the data center needs to be extended to the metropolitan area to build a Metro Fabric [8]. For the cloudification network element, network AI technology will play a major role in the operation and maintenance, management, fault prediction and other aspects of the computing power network, and the network needs to be further cloudified to improve service delivery efficiency. The computing network element mainly covers three aspects: computing power generation, computing power scheduling (routing) and computing power trading. The overall goal is to make the network an infrastructure that provides AI computing power for the whole of society. The key technical elements are shown in Figure 3.

Figure 4. Non-blocking metro fabric architecture.

3.1 Carrier Network Element: Making Network Connections Non-blocking and Deterministic

Ultra-Reliable and Low-Latency Communication (URLLC): Apart from the optical fiber latency caused by transmission distance, at the equipment level the greatest contributor to latency is the Wireless Access Network, whose data processing latency is on the order of milliseconds, up to about 10 ms; next come the Data Communication Network and the Cloud Core Network, with a light-load latency of about 1 ms that can increase by tens of times if congestion occurs; the smallest is Optical Transmission, with a latency of about 100 µs. Therefore, the key latency problems are how to reduce the latency of the Wireless Access Network, how to avoid congestion between the Carrier Network and the Core Network, and how to provide deterministic latency. Although URLLC is often discussed end to end, the URLLC indexes defined in 3GPP RAN TR 38.913 apply only to the 5G RAN; they include a control plane latency of 10 ms, a user plane latency of 0.5 ms, a mobility interruption time of 0 ms and a reliability of 99.999% [9].

Non-blocking Metro Fabric: The main way to achieve low latency in the Data Communication Network is to avoid congestion. As the network shifts from being "voice/broadband service centered" to "cloud/computing service centered", the previous forwarding technology and complex network cannot adapt to the change and need to be reconstructed. The requirement of cloud computing for the DC has evolved from "DC as one computer" to "DCN (Core and Edge) as one computer", and the corresponding Carrier Network also needs to evolve from "DCN as one non-blocking router" to "Metro Fabric as one non-blocking router", as shown in Figure 4, so as to achieve a deterministic network latency of less than 1 ms.
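The URLLC latency breakdown can be turned into a rough end-to-end budget. This sketch assumes the per-segment figures quoted in the text (taking the upper end, about 10 ms, for the wireless access network; about 1 ms for the data communication and cloud core networks under light load; about 0.1 ms for optical transmission), plus the usual rule of thumb of roughly 5 µs per km of fiber propagation. The 100 km distance and the congestion multiplier are illustrative assumptions.

```python
# Per-segment latencies quoted in the text, in ms (light load).
# The wireless access value takes the upper end (~10 ms) of the quoted range.
SEGMENT_MS = {
    "wireless_access": 10.0,
    "data_comm_and_core": 1.0,
    "optical_transmission": 0.1,
}
FIBER_US_PER_KM = 5.0  # propagation in fiber: roughly 5 microseconds per km


def e2e_latency_ms(fiber_km: float, congestion_factor: float = 1.0) -> float:
    """Rough end-to-end latency: equipment segments plus fiber propagation.

    `congestion_factor` scales only the data communication / core segment,
    which the text says can grow by tens of times under congestion.
    """
    equipment = (SEGMENT_MS["wireless_access"]
                 + SEGMENT_MS["data_comm_and_core"] * congestion_factor
                 + SEGMENT_MS["optical_transmission"])
    propagation = fiber_km * FIBER_US_PER_KM / 1000.0
    return equipment + propagation


if __name__ == "__main__":
    print(round(e2e_latency_ms(100), 2))                        # light load, 100 km
    print(round(e2e_latency_ms(100, congestion_factor=20), 2))  # congested core
```

Under these assumptions 100 km of fiber adds only 0.5 ms (about 11.6 ms in total at light load), while a 20× congestion factor on the 1 ms segment pushes the total to about 30.6 ms. This is why the radio segment and congestion avoidance, rather than distance, dominate the budget.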

Deterministic Network: DetNet refers to the ability to provide deterministic service guarantees, in terms of latency, delay jitter, packet loss rate and other indicators, for the services carried within a network domain. DetNet can send multiple copies of a sequenced data flow over multiple paths and eliminate the duplicates at or near the destination. Congestion can be eliminated by regulating packet transmission and allocating sufficient buffer space for critical flows. Because there is no fault detection and recovery cycle, and every packet is replicated and carried to or near its destination, a single random event or a single device failure will not cause any packet loss [10].
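The replicate-and-eliminate behaviour described above corresponds to the packet replication and elimination functions of the IETF DetNet architecture. A minimal sketch, assuming two disjoint paths, integer sequence numbers and simulated per-path losses (all illustrative); the ordering function and the bounded history window that real eliminators keep are not modelled, so the output is simply sorted for display.

```python
from itertools import zip_longest


def replicate(packets, lost_on_a=(), lost_on_b=()):
    """Replication: send each (seq, payload) packet on two disjoint paths.

    `lost_on_a` / `lost_on_b` are sequence numbers dropped on each path,
    simulating independent failures.
    """
    path_a = [p for p in packets if p[0] not in lost_on_a]
    path_b = [p for p in packets if p[0] not in lost_on_b]
    return path_a, path_b


class Eliminator:
    """Elimination: pass the first copy of each sequence number, drop later ones."""

    def __init__(self):
        self.seen = set()

    def receive(self, packet) -> bool:
        seq, _payload = packet
        if seq in self.seen:
            return False  # duplicate that arrived over the other path
        self.seen.add(seq)
        return True


def merge(path_a, path_b):
    """Interleave arrivals from both paths and keep one copy of each packet."""
    elim = Eliminator()
    delivered = []
    for pair in zip_longest(path_a, path_b):
        for pkt in pair:
            if pkt is not None and elim.receive(pkt):
                delivered.append(pkt)
    return sorted(delivered)  # ordering is not modelled; sort by sequence number


if __name__ == "__main__":
    packets = [(seq, f"frame-{seq}") for seq in range(6)]
    a, b = replicate(packets, lost_on_a={2}, lost_on_b={4})
    print([seq for seq, _ in merge(a, b)])  # [0, 1, 2, 3, 4, 5]
```

A loss on either single path is absorbed without any retransmission; only a loss of the same packet on both paths would be visible, which matches the single-failure guarantee stated in the text.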

Time Sensitive Networking (TSN): TSN refers to a set of protocol standards being developed by the TSN Task Group of IEEE 802.1. The standards define time-sensitive mechanisms for Ethernet data transmission that add determinism and reliability to standard Ethernet, so that Ethernet can provide a stable and consistent service level for the transmission of critical data. TSN standards can be applied in many vertical fields, such as industrial and automotive networks, to provide service guarantees for services with determinism and reliability requirements [11].
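One TSN mechanism behind this determinism is the time-aware shaper of IEEE 802.1Qbv, in which a cyclic gate control list opens and closes per-traffic-class transmission gates at scheduled times. A minimal sketch, assuming a hypothetical 1 ms cycle with a 250 µs window reserved for critical traffic class 7; real schedules are configured per port and also account for frame length and guard bands, which this sketch ignores.

```python
# Hypothetical gate control list for one egress port:
# (duration in microseconds, traffic classes whose transmission gate is open).
GATE_CONTROL_LIST = [
    (250, {7}),                    # protected window for critical class 7
    (750, {0, 1, 2, 3, 4, 5, 6}),  # remainder of the cycle for other classes
]
CYCLE_US = sum(duration for duration, _ in GATE_CONTROL_LIST)  # 1000 us


def gate_open(traffic_class: int, t_us: int) -> bool:
    """True if the gate for `traffic_class` is open at absolute time t_us."""
    offset = t_us % CYCLE_US
    for duration, open_classes in GATE_CONTROL_LIST:
        if offset < duration:
            return traffic_class in open_classes
        offset -= duration
    return False


def next_opening(traffic_class: int, t_us: int) -> int:
    """Earliest time >= t_us at which the class may start transmitting."""
    start = t_us - t_us % CYCLE_US  # beginning of the current cycle
    for _ in range(2):  # two cycles always contain the next open window
        for duration, open_classes in GATE_CONTROL_LIST:
            end = start + duration
            if traffic_class in open_classes and end > t_us:
                return max(start, t_us)
            start = end
    raise ValueError(f"traffic class {traffic_class} is never scheduled")


if __name__ == "__main__":
    # A critical frame arriving just after its window closed waits for the next cycle.
    print(next_opening(7, 300))  # 1000
    print(next_opening(0, 100))  # 250
```

Because the schedule is fixed, the worst-case wait of a critical frame is known in advance (at most one cycle in this sketch) instead of depending on how much best-effort traffic happens to be queued.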

Figure 5. Computing first network hosting computing power routing.

3.2 Cloudification Network Element: Continuous Promotion of Intelligent Network and Network Cloudification

Control Plane Intelligence: Along with the network flexibility brought by control and user plane separation, cloudification of control functions and three-level distributed deployment, the complexity of integration, operation and maintenance is rising rapidly, and traditional manual operation and maintenance can hardly keep up. In the future, big data analysis and artificial intelligence will gradually be introduced to replace manual work, but overly complex network protocols will also make AI algorithms harder to apply. Therefore, in addition to control plane intelligence, the protocols and network topology of the forwarding plane also need to be simplified. Intelligence and simplification together form the basis of "Network Automatic Driving".

Network Cloudification: The network control plane includes strong real-time functions (such as reliability, algorithm and protocol processing) and weak real-time functions (such as management and configuration). Weak real-time functions are the first to be cloudified and can be flexibly deployed in the cloud. When strong real-time functions are cloudified, those that need centralized control may be deployed close to the controlled devices, in the network center or an edge cloud, while some real-time distributed control functions will be embedded in the devices in the form of microservices. Such hierarchical cloudification of control functions retains the agility and openness brought by cloudification while meeting real-time system requirements on demand.

3.3 Computing Network Element: Trusted, Efficient and On-demand Network for Computing Services

Figure 6. Application scenario of 5G campus + AI.

On-demand Edge Computing: MEC stands for multi-access edge computing, where the "M" covers multiple access methods such as MBB and WLAN; it can be regarded as a cloud server running specific tasks at the edge of the Telecom Network. MEC provides application developers and content providers with ultra-low latency, high bandwidth and a service environment that can obtain wireless network information in real time, so as to provide differentiated services for terminal users [12]. MEC is deployed at the edge of the Access Ring and Access Aggregation Ring near the Base Station, which brings the content source as close to terminal users as possible and even lets terminals access the content source locally, thus reducing end-to-end service response latency by shortening the data transmission path. At present, more and more specialized fields want industry customization based on the Telecom Network, and MEC can provide open platforms for cooperative service innovation between the telecom industry and vertical industries. The MEC defined by ETSI is a platform with open wireless network capabilities and open operation capabilities; through open APIs, MEC can provide wireless network information, location information, service enabling control and other services to third-party applications running on its platform host. The computing power network provides computing power information exchange and collaborative computing power services between MEC sites via a distributed control plane in the network. It serves MEC businesses, especially as MEC moves towards a micro-edge business mode in the 6G era.

Efficient Computing First Network: In edge computing and even ubiquitous computing scenarios, the computing power resources of a single site are limited, so multi-site cooperation is required. The existing architecture generally relies on a centralized orchestration layer for management and scheduling, which has shortcomings such as weak scalability and poor scheduling performance. The future network architecture needs to support different computing functions so that services can be instantiated in real time at different distances from the client, according to business requirements and network conditions. To solve these problems, Computing First Network (CFN), a new architecture integrating computing and networking based on distributed networks, has emerged. CFN sits above the network layer and is implemented as an overlay. CFN publishes the current computing power status and network status to the network as routing information, and the network routes computing task packets to the appropriate computing nodes to achieve optimal user experience, computing resource utilization and network efficiency. CFN connects distributed computing clusters through CFN routers. Its basic function is routing based on the Service ID and Data ID in a request, while advanced functions include data prefetching and others that improve efficiency and user experience. The way CFN carries computing power routes is shown in Figure 5. In addition, CFN defines a common set of computing interfaces between CFN and services, and uses open routing protocols to discover abstract computing resources and to generate and maintain the topology and routing at the computing resource level [13, 14].
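CFN-style routing on a Service ID can be sketched as a per-service table of instances whose entries are refreshed by computing-status advertisements. This is a minimal illustration under stated assumptions: the table layout, the field names and the cost metric (network delay plus processing time stretched by `1 / (1 - load)`, an M/M/1-style approximation) are hypothetical, since the text does not specify a metric; Data ID handling and prefetching are omitted.

```python
from dataclasses import dataclass, field


@dataclass
class Instance:
    site: str
    net_delay_ms: float     # advertised network status towards this site
    base_compute_ms: float  # processing time of one task when the site is idle
    load: float             # advertised compute utilisation, 0 <= load < 1

    def expected_ms(self) -> float:
        # Hypothetical metric: network delay plus processing time stretched by
        # 1 / (1 - load), an M/M/1-style approximation assumed for illustration.
        return self.net_delay_ms + self.base_compute_ms / (1.0 - self.load)


@dataclass
class CfnRouter:
    # Service ID -> service instances learned from computing-status advertisements.
    routes: dict[str, list[Instance]] = field(default_factory=dict)

    def advertise(self, service_id: str, inst: Instance) -> None:
        """Install or refresh the route to one site for a Service ID."""
        others = [i for i in self.routes.get(service_id, []) if i.site != inst.site]
        self.routes[service_id] = others + [inst]

    def route(self, service_id: str) -> str:
        """Forward to the site with the lowest expected completion time."""
        candidates = [i for i in self.routes.get(service_id, []) if i.load < 1.0]
        if not candidates:
            raise LookupError(f"no usable instance for service {service_id!r}")
        return min(candidates, key=Instance.expected_ms).site


if __name__ == "__main__":
    r = CfnRouter()
    r.advertise("video-analytics", Instance("edge-1", 2.0, 8.0, 0.9))
    r.advertise("video-analytics", Instance("edge-2", 4.0, 8.0, 0.2))
    r.advertise("video-analytics", Instance("cloud", 20.0, 4.0, 0.1))
    print(r.route("video-analytics"))  # edge-2: edge-1 is close but busy
    r.advertise("video-analytics", Instance("edge-1", 2.0, 8.0, 0.1))
    print(r.route("video-analytics"))  # edge-1 once its load drops
```

The design point illustrated is the one the text attributes to CFN: because computing status travels with routing information, the forwarding decision changes as soon as a site's load changes, without a central orchestrator re-planning the placement.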

The core value CFN provides for applications is offloading terminals' computing tasks to appropriate computing nodes, edges or central clouds based on the computing power and algorithms currently available in the network, together with its ability to flexibly match and dynamically schedule computing resources, so as to support service computing requirements and ensure user experience.

Trusted Telecom Block Chain: To apply blockchain technology in the Telecom Network, ITU standards introduce the concept of a "Token" for data or resource sharing and trading. With the development of communication network technology, many services have put forward new requirements for bandwidth and latency. To improve user experience, massive data will increasingly need to be processed locally or at the edge, which reduces network load and achieves lower latency. To open up the computing power of edge computing, operators need to build a trusted platform for sharing and trading computing power. In terms of construction mode, IT platform technology can be combined with blockchain technology, and more efficient means such as consortium (alliance) blockchains can be adopted to better meet supervision and audit needs. In actual deployment, the blockchain platform or application can be installed on edge computing servers to provide blockchain technology and capabilities for different application scenarios.
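To illustrate why a blockchain-style record suits trusted computing power trading, here is a minimal hash-chained ledger built on Python's standard `hashlib`: each block commits to the previous block's hash, so altering a recorded trade breaks verification of the block that follows it. This is a single-node sketch with hypothetical record fields; it has no consensus, signatures or consortium membership, all of which a real consortium chain would need.

```python
import hashlib
import json
from dataclasses import dataclass


@dataclass
class Block:
    index: int
    prev_hash: str
    trade: dict  # hypothetical fields, e.g. {"seller": ..., "buyer": ..., "units": ...}

    def digest(self) -> str:
        # Canonical JSON (sorted keys) so identical content always hashes identically.
        body = json.dumps({"index": self.index, "prev": self.prev_hash, "trade": self.trade},
                          sort_keys=True)
        return hashlib.sha256(body.encode()).hexdigest()


class Ledger:
    def __init__(self):
        self.blocks = [Block(0, "0" * 64, {"genesis": True})]

    def append(self, trade: dict) -> None:
        """Record a trade in a new block linked to the hash of the previous block."""
        prev = self.blocks[-1]
        self.blocks.append(Block(prev.index + 1, prev.digest(), trade))

    def verify(self) -> bool:
        """Every block must reference the current digest of the block before it."""
        return all(cur.prev_hash == prev.digest()
                   for prev, cur in zip(self.blocks, self.blocks[1:]))


if __name__ == "__main__":
    ledger = Ledger()
    ledger.append({"seller": "edge-site-1", "buyer": "app-x", "units": 10})
    ledger.append({"seller": "edge-site-2", "buyer": "app-y", "units": 5})
    print(ledger.verify())  # True
    ledger.blocks[1].trade["units"] = 1000  # tamper with a recorded trade
    print(ledger.verify())  # False
```

Note that tampering with the newest block is not detected by the chain alone, since no later block commits to it; in practice this gap is closed by replicating the chain across consortium members.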

Figure 7. Application scenario of 5G + cloud X.

IV. TYPICAL APPLICATION SCENARIOS OF COMPUTING POWER NETWORK

The computing power network is closely related to the maturity of 5G and AI technology. The first mature application scenarios will mainly be automatic identification and auxiliary decision-making systems built with video and image AI technology. These scenarios place high requirements on network bandwidth, latency and computing power, for example: flexible wireless access for MR/AR devices, cameras, robots and so on; the large-bandwidth network required by HD video transmission; millisecond-level deterministic latency in industrial fields; AI inference capabilities based on image analysis; the computing capabilities required by image rendering; burst and fragmented computing power processing capabilities; and trustworthy computing power processing, so that its value can be quantified and traded. Related application scenarios already exist in operators' 5G ToB and ToC fields.

4.1 Operator ToB Scenario of "5G Campus + AI"

The impetus for enterprises to build 5G campuses comes from video services, including monitoring, intelligent production and automatic driving within the campus, which require more bandwidth and stronger real-time processing capabilities. Firstly, these services have such high data privacy requirements that the original data is not allowed to be uploaded to the public cloud. Secondly, to avoid a significant increase in the cost of private lines and data centers, part of the original data is analyzed and processed locally and stored in the central data center on demand. Thirdly, where outdoor and IT room scenarios coexist, there are requirements on noise and power consumption, such as fanless natural cooling, a wider ambient temperature range and lower power consumption. Finally, some AI applications related to security and production in the enterprise require millisecond-level low latency. The 5G Campus + AI scenario is shown in Figure 6.

Figure 8. Application scenario of computing power trading via computing power token.

At the edge of the enterprise, the U plane function of the Core Network and video AI processing must be supported under limited power consumption and space, and the computing power of multiple edges can be shared to form a resource pool that maximizes computing power efficiency. Some enterprise AI training empowerment services can be provided on the public cloud.

4.2 Operator ToC Scenario of "5G + Cloud X"

The biggest challenges to the Cloud X service experience (shown in Figure 7) are bandwidth and latency. The processing latency of 5G NR and the Core Network is relatively stable, whereas the transmission latency is strongly affected by the network environment (such as congestion) and transmission distance. Taking Cloud VR as an example, the end-to-end latency needs to be less than 40 ms for a good experience and less than 20 ms for an ultimate experience. However, the server and terminal processing latency already exceeds 15 ms, so if the ultimate experience is to be guaranteed, the total latency left for "5G network + transmission + MEC" is less than 5 ms.
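The Cloud VR arithmetic above can be written as a simple latency budget, using only the figures in the text (end-to-end targets of 40 ms and 20 ms, and more than 15 ms of server and terminal processing). Here $T_{\text{net}}$ denotes the share left for "5G network + transmission + MEC"; the symbol names are our own.

```latex
T_{\text{net}} = T_{\text{e2e}} - T_{\text{proc}}, \quad T_{\text{proc}} > 15\ \text{ms}
\;\Longrightarrow\;
\begin{cases}
T_{\text{e2e}} < 40\ \text{ms (good)}: & T_{\text{net}} < 40 - 15 = 25\ \text{ms} \\
T_{\text{e2e}} < 20\ \text{ms (ultimate)}: & T_{\text{net}} < 20 - 15 = 5\ \text{ms}
\end{cases}
```

Because processing already consumes three quarters of the ultimate-experience target, every millisecond of network jitter comes directly out of the 5 ms that remains.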

To ensure the service experience and meet strict latency requirements, a Core Network architecture with C/U separation needs to be considered, in which the U plane moves down to the operator's edge and provides services close to users. Cloud X services rely mainly on GPU computing power, so improving computing power efficiency is important for reducing the service cost per user. To ensure the service experience, it is also necessary to reduce network delay jitter, improve the perception and prediction capability between the service and the network, and actively balance resources according to network status when distributing services.
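Reducing jitter presupposes measuring it. One widely used estimator is the RTP interarrival jitter of RFC 3550: for consecutive packets it takes the change D in transit time (receive time minus send time) and updates a running estimate as J += (|D| - J) / 16. The sketch below applies it to hypothetical send/receive timestamps in milliseconds; using it as the service-network perception signal is an illustrative assumption, not a mechanism specified in the paper.

```python
def interarrival_jitter(send_ms, recv_ms):
    """RFC 3550-style jitter estimate J, updated per packet as J += (|D| - J) / 16.

    D is the difference in transit time (receive - send) between consecutive
    packets; a constant network delay therefore contributes no jitter.
    """
    jitter = 0.0
    prev_transit = None
    for sent, received in zip(send_ms, recv_ms):
        transit = received - sent
        if prev_transit is not None:
            d = abs(transit - prev_transit)
            jitter += (d - jitter) / 16.0
        prev_transit = transit
    return jitter


if __name__ == "__main__":
    sent = [0, 20, 40, 60]
    print(interarrival_jitter(sent, [5, 25, 45, 65]))  # 0.0: steady 5 ms delay
    print(interarrival_jitter(sent, [5, 41, 45, 70]))  # > 0: delay varies
```

The 1/16 gain smooths out single outliers, so a service or controller reading this value sees a sustained change in delay variation rather than one late packet.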

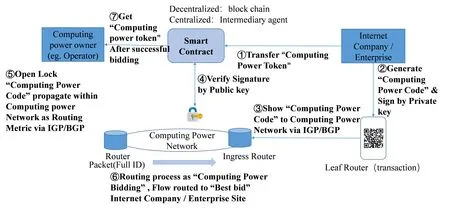

4.3 Opening Computing Power: Operators Providing Tradable Computing Power Tokens

In the intelligent society, tens of thousands of Internet companies are traffic acquirers and computing power consumers, while operators, with their large number of edge computer rooms and the edge computing power available after network cloudification, become the owners of computing power and the leaders of traffic. To adapt to the development of the intelligent society, a new service model that dynamically and quickly matches this supply and demand works as follows: Internet companies and third parties call edge computing power from operators on demand and obtain traffic from the operators' computing power network, saving substantial investment in edge computing assets and traffic acquisition costs; operators, in turn, leverage their edge computer rooms and network cloudification to monetize "network connections and related digital assets (computing and storage)". This new service model, based on the sharing economy of the intelligent society, is shown together with the technical solution in Figure 8.

Note that a "Computing Power Token" is equivalent to the computing power provided by an operator's computing sites: the "Computing Power Token" is a commercial abstraction of the computing power provided by a computing site, while the "Computing Power Code" is its technical abstraction. A "Computing Power Token" is transmitted in the computing power network in the form of a "Computing Power Code".
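The distinction between the commercial "Computing Power Token" and the technical "Computing Power Code" can be illustrated with a hypothetical encoding. The paper does not define a format, so every field below (site ID, compute units, expiry time) and the fixed 16-byte layout built with Python's standard `struct` module are assumptions chosen only to show a token being serialized into a compact code that network elements could carry and decode.

```python
import struct
from dataclasses import dataclass

# Hypothetical wire layout, network byte order:
# site ID (uint32) + compute units (uint64) + expiry in Unix seconds (uint32) = 16 bytes.
CODE_FORMAT = "!IQI"


@dataclass(frozen=True)
class ComputingPowerToken:
    site_id: int     # operator computing site providing the power (hypothetical)
    units: int       # amount of computing power sold, in a hypothetical unit
    expires_at: int  # end of validity, Unix seconds (hypothetical)

    def to_code(self) -> bytes:
        """Technical abstraction: pack the commercial token into a fixed-size code."""
        return struct.pack(CODE_FORMAT, self.site_id, self.units, self.expires_at)

    @classmethod
    def from_code(cls, code: bytes) -> "ComputingPowerToken":
        """Recover the token from a code received in the network."""
        return cls(*struct.unpack(CODE_FORMAT, code))


if __name__ == "__main__":
    token = ComputingPowerToken(site_id=42, units=1000, expires_at=1_700_000_000)
    code = token.to_code()
    print(len(code))                                     # 16
    print(ComputingPowerToken.from_code(code) == token)  # True
```

Keeping the code fixed-size and self-describing by position is one way to make it cheap for forwarding elements to parse; a real format would also need integrity protection, for example a signature bound to the trading ledger.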

V. CONCLUSION

Based on the characteristics of computing power services in the 6G era, this paper discusses the evolution of the network from the Integration of Cloud and Network to the Integration of Computing and Network, and analyzes the computing power network in the 6G era. The computing power network offers operators a new service mode: building on computing power as a universal service, a computing power sharing platform is constructed to connect all computing power providers with high-value customers in various industries that have bandwidth and delay requirements, so as to provide reliable, guaranteed and comprehensive services. The new services enabled by the integration of computing and network are closely tied to network delay, bandwidth cost and public credibility, and operators have clear resource and capability advantages in all three. However, innovation always brings challenges, and the computing power network is no exception; the following issues must be resolved before the final goal can be achieved:

• How can computing power be measured, and how can computing power demand be mapped to business service demand and quality of experience?

• How can protocols be designed or extended so that routing takes computing power parameters into account reasonably and meets business needs?

• How can the computing power information of the whole network be synchronized quickly through collaboration between edge nodes, under the constraints of extremely low delay, low packet loss and other business indicators?

In the future, with the introduction of new technologies and the transformation of equipment, the computing power network will be able to operate as a universal service that service providers can manage well, which is also an important direction for the integration of network and service in the 6G era.

ACKNOWLEDGEMENT

This work was supported by the National Key R&D Program of China under Grant No. 2019YFB1802800.
