Primary User Adversarial Attacks on Deep Learning-Based Spectrum Sensing and the Defense Method

Shilian Zheng,Linhui Ye,Xuanye Wang,Jinyin Chen,3,Huaji Zhou,Caiyi Lou,Zhijin Zhao,Xiaoniu Yang,*

1 Science and Technology on Communication Information Security Control Laboratory, Jiaxing, 314000, China

2 College of Information Engineering, Zhejiang University of Technology, Hangzhou, 310012, China

3 Institute of Cyberspace Security, Zhejiang University of Technology, Hangzhou, 310012, China

4 School of Communication Engineering, Hangzhou Dianzi University, Hangzhou, 310018, China

Abstract: Spectrum sensing models based on deep learning have achieved satisfactory detection performance, but their robustness has not been verified. In this paper, we propose the primary user adversarial attack (PUAA) to verify the robustness of deep learning-based spectrum sensing models. PUAA adds a carefully crafted perturbation to the benign primary user signal, which greatly reduces the probability of detection of the spectrum sensing model. We design three PUAA methods for the black-box scenario. To defend against PUAA, we propose an autoencoder-based defense method named DeepFilter. DeepFilter combines a long short-term memory network and a convolutional neural network, so that it can extract the temporal and local features of the input signal at the same time and achieve effective defense. Extensive experiments are conducted to evaluate the attack effect of the designed PUAA methods and the defense effect of DeepFilter. The results show that the three PUAA methods greatly reduce the probability of detection of the deep learning-based spectrum sensing model, and that DeepFilter can effectively defend against PUAA without affecting the detection performance of the model.

Keywords: spectrum sensing; cognitive radio; deep learning; adversarial attack; autoencoder; defense

I. INTRODUCTION

With the rapid development of communication services such as the Internet of Things [1] and 5G [2], the demand for spectrum is becoming increasingly urgent. However, under the current fixed spectrum allocation policy, the average utilization rate of licensed frequency bands is exceptionally low [3]. The contradiction between the intense demand for spectrum and the low efficiency of spectrum usage has become increasingly prominent. To alleviate this situation, cognitive radio technology has been proposed to dynamically use the spectrum "holes" that the primary user (PU) is temporarily not using, so as to improve spectrum utilization without interfering with the PU [4]. Spectrum sensing [5] is the premise of this opportunistic spectrum usage, and its task is to determine whether a PU signal is present in the current frequency band. Many methods have been proposed for spectrum sensing, such as energy detection [6], cyclostationary feature-based detection [7], eigenvalue-based detection [8] and frequency domain entropy-based detection [9]. These methods mainly rely on manually designed features or decision statistics to make the decision. With the development of deep learning, some recent works [10–12] directly use deep neural networks to learn features from data automatically and complete the spectrum sensing task. In some complex situations, they achieve better detection performance than traditional methods.

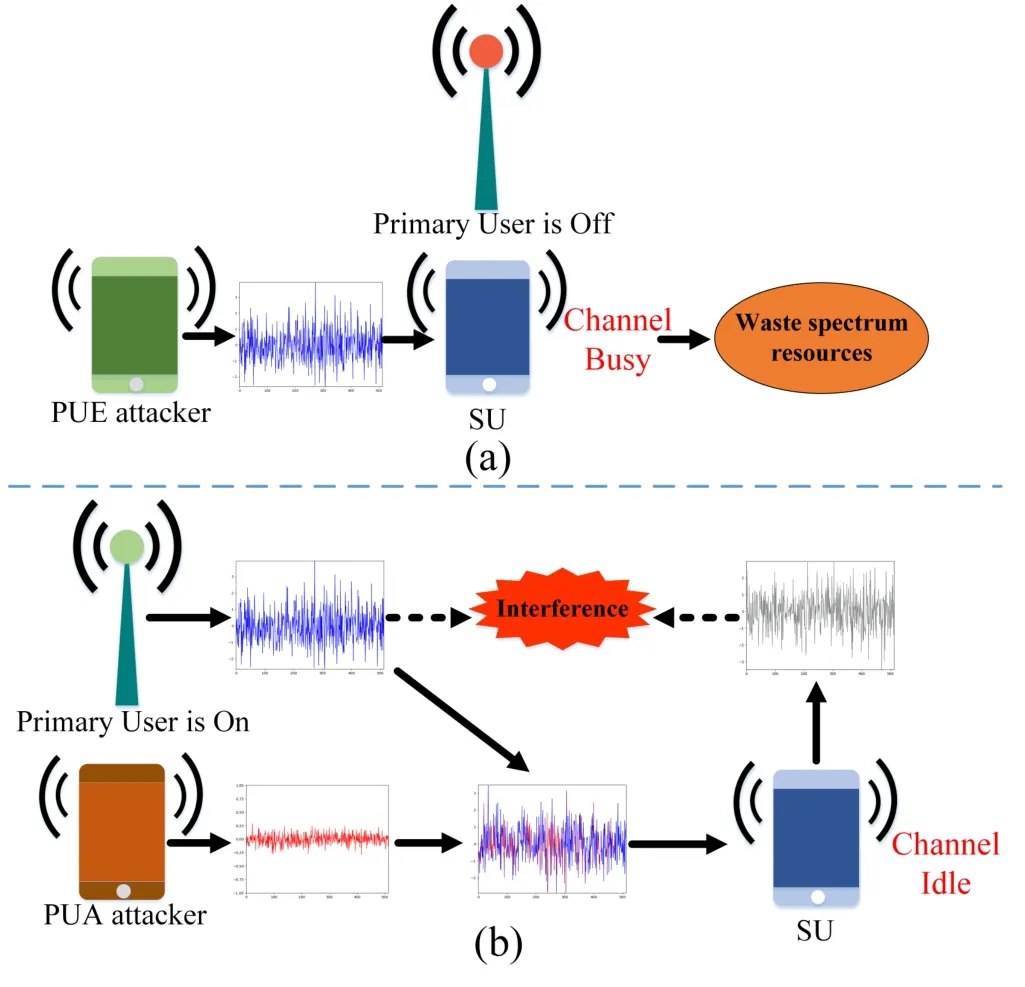

However, spectrum sensing methods are vulnerable to malicious attacks [13–22]. One of the major attacks is the primary user emulation attack (PUEA) [14]. As shown in Figure 1(a), when the PU is not transmitting, the attacker sends a signal with features similar to those of the PU's signal and pretends to be the PU. The secondary user (SU) may then judge the current frequency band to be occupied by the PU and lose the opportunity to use the spectrum hole. PUEA is therefore an attack on the probability of false alarm (Pf) of spectrum sensing, with the purpose of increasing the Pf of the SU. After the attack is carried out, the attacker can use the corresponding spectrum for itself or simply prevent normal SUs from using the spectrum hole, which affects the normal communication services of the SUs and wastes spectrum resources.

Different from PUEA, in this paper we study attacks on spectrum sensing that take place while the PU is transmitting. We call this attack the primary user adversarial attack (PUAA). The attack scenario of PUAA is shown in Figure 1(b): when the PU transmits its signal, the attacker sends an adversarial perturbation, and the signal arriving at the spectrum sensing node is the superposition of the PU's signal and the adversarial perturbation (as well as the background noise). The purpose of the attack is to make the SU miss the PU signal and then access the channel, causing harmful interference to the PU. We use PUAA to verify the robustness of the deep learning-based spectrum sensing model. Robustness here refers to the security of deep learning-based models in adversarial attack scenarios. A model that lacks robustness cannot withstand adversarial attacks: a slight perturbation added to its input can cause a wrong output. We propose specific PUAA methods in this paper. To deal with this kind of attack, we also propose a corresponding defense method to improve the security of deep learning-based spectrum sensing.

Figure 1. Attack scenarios of PUEA and PUAA. (a) PUEA scenario, (b) PUAA scenario.

A. Related Work

Among attacks on spectrum sensing, PUEA [13–17] and the spectrum sensing data falsification attack (SSDF) [18–22] have been extensively studied. As discussed above, in PUEA the malicious user imitates the signal characteristics of the PU, causing the SU to identify the malicious user as the PU and vacate the frequency band in use. In SSDF, malicious attackers send false local information to neighbor nodes or to the fusion center (FC), causing the FC that receives the wrong information to make a wrong decision [23]. Neither PUEA nor SSDF is therefore an adversarial attack. An adversarial attack is one in which the adversary adds carefully crafted perturbations to the input of the deep learning-based spectrum sensing model to make the model misclassify. Research on adversarial attacks against spectrum sensing has mainly focused on the spectrum poisoning attack [24–26]. These works used jamming attacks against the spectrum sensing model to make it predict wrong results, but they did not describe the specific jamming methods adopted. Different from the above works, in this paper we present the concept of PUAA and propose specific PUAA algorithms to study the robustness of the deep learning-based spectrum sensing method [12].

On the other hand, defense methods against PUEA and SSDF in spectrum sensing tasks have been widely studied [27–37]. Because these defense methods target PUEA and SSDF, they cannot effectively defend against adversarial attacks. For the defense against adversarial attacks, Sagduyu et al. [25] proposed a method that deliberately makes a small number of incorrect transmissions to corrupt the training data collected by the adversary. However, this method requires a balance between the performance of the secondary transmitter and the defense effect. Different from this work, we propose an autoencoder-based defense method that does not require any operation from the secondary transmitter. That is to say, the SU does not need to perform incorrect transmissions; it only needs to filter the received signal with the proposed method before spectrum sensing.

B. Contributions and Structure of the Paper

The contributions of this paper are mainly as follows:

• We propose PUAA, an attack aimed at reducing the probability of detection (Pd) of the deep learning-based spectrum sensing model. We design three PUAA methods for the black-box scenario and use them to attack the deep learning-based spectrum sensing model, verifying the vulnerability of the current model.

• We propose an autoencoder-based defense method named DeepFilter. DeepFilter combines the long short-term memory network (LSTM) and the convolutional neural network (CNN). This enables DeepFilter to extract the temporal and local features of the input signal at the same time, so as to achieve a better defense effect against adversarial signals without affecting the performance of the spectrum sensing model.

• We conduct extensive experiments to verify the effectiveness of the proposed attack and defense methods, specifically:

a) We attack the deep learning-based spectrum sensing model with the designed PUAA methods. The experimental results show that the three PUAA methods can significantly reduce the Pd of the model.

b) We explore the influence of the decision boundary of the spectrum sensing model on the attack effect. The experimental results show that even if the decision boundary of the model is changed, PUAA can still attack the spectrum sensing model.

c) We explore the effectiveness of DeepFilter. First, we verify the defense effect of DeepFilter; second, we study the impact of DeepFilter on the spectrum sensing model when it is not attacked; finally, we study the defense effect of DeepFilter against adversarial signals generated by different attack methods. The experimental results show that DeepFilter can defend against PUAA without affecting the detection performance of the spectrum sensing model, and that it can defend against a variety of attacks.

The rest of the paper is organized as follows. In Section II, we discuss the system model and problem formulation. In Section III, we describe the designed PUAA methods. In Section IV, we give a detailed description of the proposed autoencoder-based defense method. Experimental results are shown in Section V and conclusions are drawn in Section VI.

II. SYSTEM MODEL AND PROBLEM FORMULATION

2.1 Spectrum Sensing Based on Deep Learning

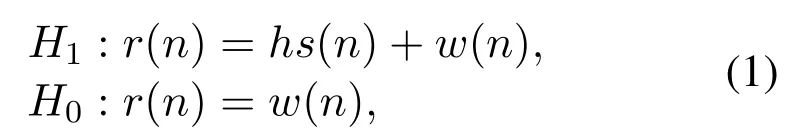

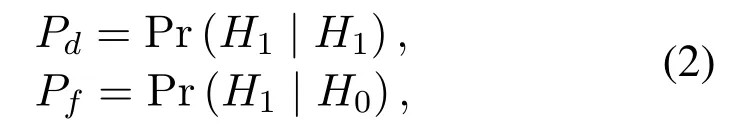

Spectrum sensing can be expressed as a binary hypothesis test problem as follows:

where H1 and H0 indicate that the channel is busy and idle, respectively, s(n) represents the transmitted signal, w(n) represents the noise, r(n) represents the received signal and h represents the channel gain. The performance of spectrum sensing is usually measured by the probability of detection and the probability of false alarm, which are defined as follows:

where Pr(·) represents probability.
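For reference, the two displays described in this subsection can be written in their usual textbook form using the symbols defined above. This is a reconstruction under the assumption that the paper follows the standard formulation: the first block is the binary hypothesis test, and the second defines Pd and Pf as the probability of deciding H1 when H1 or H0, respectively, is true.

```latex
\begin{aligned}
H_0 &: \; r(n) = w(n), \\
H_1 &: \; r(n) = h\,s(n) + w(n),
\end{aligned}
\qquad\qquad
\begin{aligned}
P_d &= \Pr(H_1 \mid H_1), \\
P_f &= \Pr(H_1 \mid H_0).
\end{aligned}
```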

The deep learning-based spectrum sensing model [12] uses the power spectrum of the received signal as input. The power spectrum can be expressed as follows:

where dft(·) represents the discrete Fourier transform, abs(·) takes the absolute value of the signal, and the signal being transformed is r(n) after power normalization. Following the binary hypothesis test described above, this deep learning-based spectrum sensing model treats spectrum sensing as a binary classification problem and uses a CNN to solve it. In the inference stage, the spectrum sensing model makes the following decision:

where tnoise represents the confidence of the noise class in the softmax output, and γ is a threshold (0 < γ < 1). This threshold can be set according to the required probability of false alarm.
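The input computation described in this subsection can be sketched in a few lines of NumPy. This is a minimal sketch under assumptions: the function names `power_normalize` and `power_spectrum` are hypothetical, and squaring the DFT magnitude and dividing by the signal length is one common scaling that the text does not confirm.

```python
import numpy as np


def power_normalize(r):
    """Scale r so that its average power (mean of |r|^2) equals 1."""
    r = np.asarray(r, dtype=complex)
    avg_power = np.mean(np.abs(r) ** 2)
    if avg_power == 0:
        return r  # all-zero input: nothing to normalize
    return r / np.sqrt(avg_power)


def power_spectrum(r):
    """Squared DFT magnitude of the power-normalized signal.

    Dividing by the length N is an assumed scaling, not taken from the paper.
    """
    r_bar = power_normalize(r)
    n = r_bar.size
    return np.abs(np.fft.fft(r_bar)) ** 2 / n
```

With this scaling, Parseval's relation implies that the spectrum of any non-zero signal averages to 1 across bins, so the model input does not depend on the absolute receive power.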

2.2 Problem Formulation

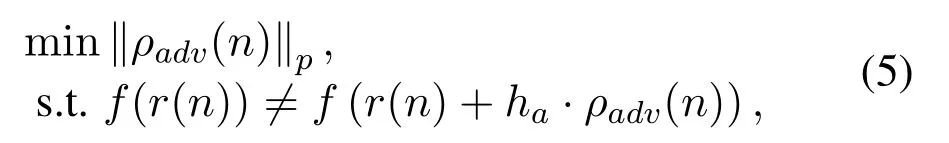

The PUAA on the spectrum sensing task can be expressed as follows:

where ρadv represents the adversarial perturbation, ∥·∥p represents the lp norm, f(·) represents the spectrum sensing model, and ha represents the channel gain. It should be noted that in this paper we assume an additive white Gaussian noise (AWGN) channel. For other channels, the influence of channel propagation needs to be taken into account as a constraint when generating the adversarial perturbation. For the PUAA on the single-node spectrum sensing model, the aim of the attack is to add the minimum perturbation to the benign PU's signal that changes the model's prediction from H1 to H0.

The defense process against adversarial signals can be expressed as follows:

where g(·) represents the signal processing performed by the defense method, r(n)′ represents the processed signal, and radv(n) represents the adversarial signal, expressed as follows:

The defense processes the adversarial signal radv(n) to obtain a signal r(n)′ in which the attack no longer takes effect, so that the spectrum sensing model gives the same prediction for the original signal r(n) and for r(n)′.

III. ATTACK METHOD

We explore the robustness of the spectrum sensing model [12] with PUAA. The model uses the power spectrum as input, and the process of obtaining the power spectrum from the signal is not differentiable. Therefore, we design three PUAA methods based on black-box attack methods to explore the robustness of the spectrum sensing model. These methods do not depend on the gradient of the model.

3.1 PUAA Based on GA

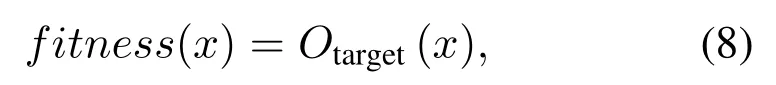

We first design a PUAA method based on the genetic algorithm (GA). GA searches for the optimal solution by simulating the process of natural evolution [38]. We treat the generation of the adversarial signal as an optimization problem and design a GA-based attack method. First, the benign signal is copied until the specified population size is reached, and random noise is added to mutate the copies and generate the primary adversarial signals. These adversarial signals are updated through the crossover and mutation process of GA. Subsequently, the candidate solutions with the highest scores are selected from the updated adversarial signals using the fitness function. The selected candidates are then crossed and mutated to form the new population, and this is repeated until the maximum number of iterations is reached or the desired adversarial signals are generated. Crossover exchanges the values at corresponding positions in two parents, while mutation changes the value at a certain position in the signal. For the fitness function, we use the output of the target class in the last layer of the model, expressed as follows:

where Otarget(·) represents the confidence of the target class output by the model and x represents the model input. In the process of generating adversarial signals, we limit the power of the adversarial perturbation by power normalization:

where N represents the signal length of the adversarial perturbation and P represents the limit on the adversarial perturbation power. That is to say, whenever the power of the adversarial perturbation becomes greater than P during the iterations, it is rescaled by power normalization so that its power does not exceed P.
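The GA loop and the power limit described above can be sketched as follows. This is an illustrative sketch, not the authors' implementation: `toy_noise_confidence` is a hypothetical stand-in for the target-class confidence Otarget(·) of a trained model, real-valued signals are used for brevity, and keeping the single best candidate unchanged in each generation is an assumption. The default population size, elite size, iteration count and mutation rate follow the values reported in Section V.

```python
import numpy as np


def limit_power(rho, p_max):
    """Rescale rho so that its average power does not exceed p_max."""
    power = np.mean(np.abs(rho) ** 2)
    if power > p_max:
        rho = rho * np.sqrt(p_max / power)
    return rho


def toy_noise_confidence(x):
    """Hypothetical fitness: confidence that x contains only noise."""
    peak = np.max(np.abs(np.fft.fft(x)) ** 2) / x.size
    return 1.0 / (1.0 + np.exp(peak - 4.0))


def ga_attack(r, fitness, p_max, pop_size=80, elite=20, iters=100,
              mut_rate=0.005, seed=0):
    """Search for a perturbation rho (power <= p_max) maximizing fitness(r + rho)."""
    rng = np.random.default_rng(seed)
    n = r.size
    # Initial population: small random perturbations of the benign signal.
    pop = np.array([limit_power(0.1 * rng.standard_normal(n), p_max)
                    for _ in range(pop_size)])
    for _ in range(iters):
        scores = np.array([fitness(r + rho) for rho in pop])
        parents = pop[np.argsort(scores)[::-1][:elite]]  # highest scores first
        children = [parents[0].copy()]  # keep the current best unchanged
        while len(children) < pop_size:
            a, b = parents[rng.integers(elite, size=2)]
            child = np.where(rng.random(n) < 0.5, a, b)      # crossover
            mutate = rng.random(n) < mut_rate
            child = child + mutate * rng.standard_normal(n)  # mutation
            children.append(limit_power(child, p_max))
        pop = np.array(children)
    scores = np.array([fitness(r + rho) for rho in pop])
    best = pop[np.argmax(scores)]
    return r + best, best
```

Calling `ga_attack(r, toy_noise_confidence, p_max=np.mean(r ** 2))` mirrors the setting in which the perturbation power may not exceed the power of the benign signal.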

3.2 PUAA Based on CS

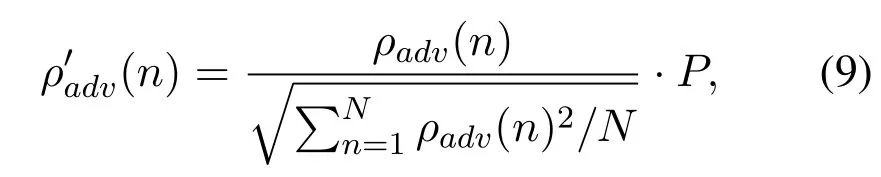

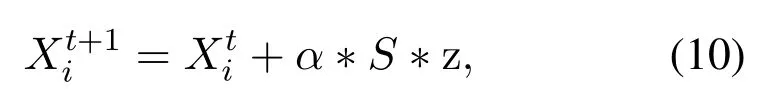

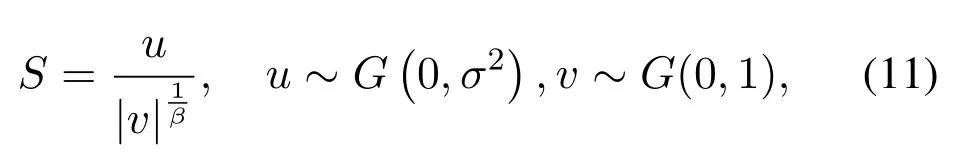

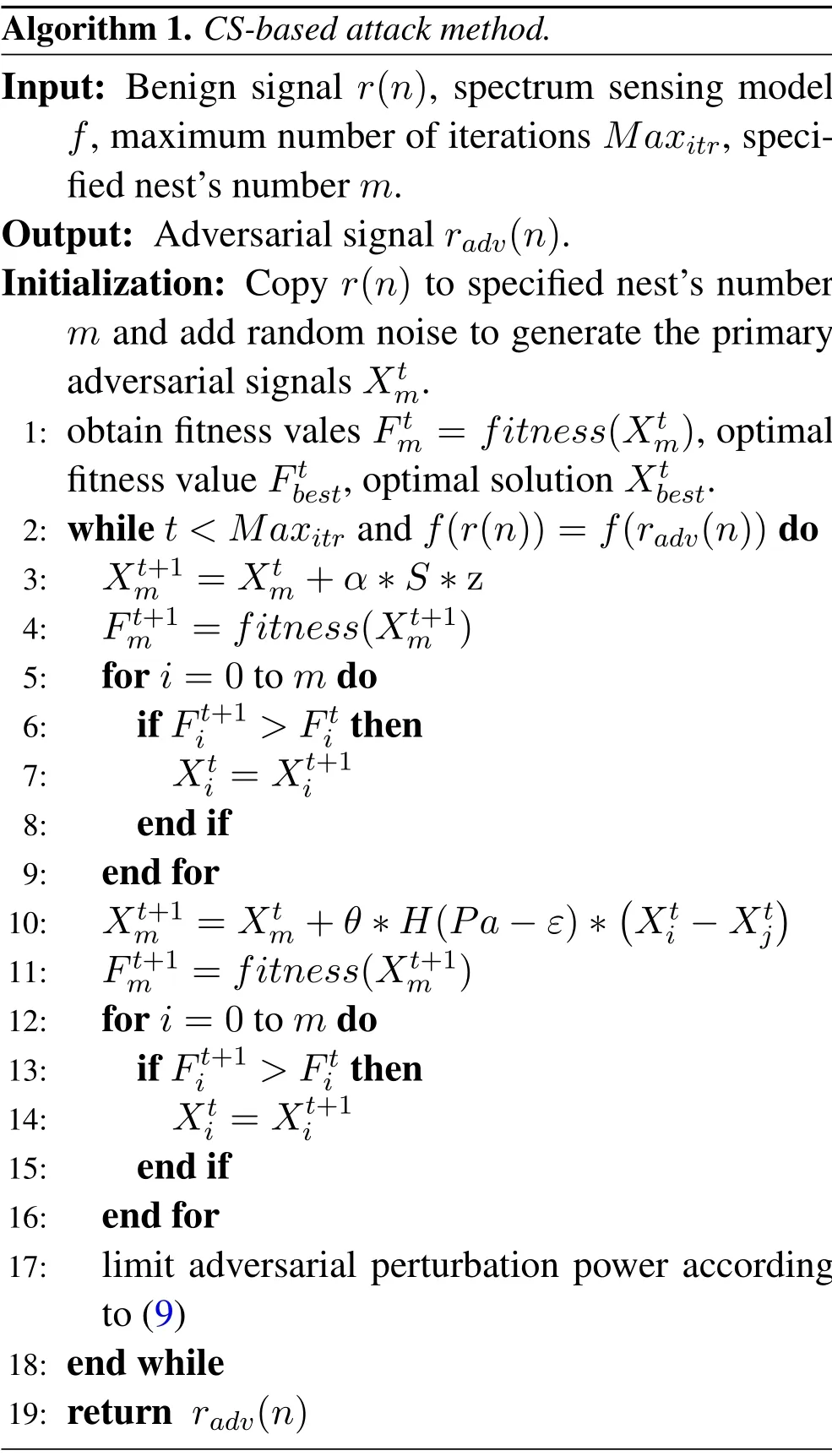

Similar to GA, the cuckoo search algorithm (CS) [39] is also used to construct the adversarial signal. CS is a heuristic algorithm that imitates the brood parasitism of cuckoos in nature. Algorithm 1 shows the process of constructing adversarial signals based on CS. First, the benign signal is copied to the specified number of nests, and the difference between nests is expanded by adding random noise to generate the primary adversarial signals. New solutions are generated through Lévy flight and local random walk. Finally, the old and new solutions are compared through the fitness function and the nests are updated accordingly. We again use fitness(x) as the fitness function and use (9) to limit the power of the adversarial perturbation while generating adversarial signals. Lévy flight can be expressed as follows:

where the left-hand side is the new solution and the solution on the right-hand side is the old one, α is the step size scaling factor, z is an array of standard normal random numbers with the same dimension as the solution, and S is the step size:

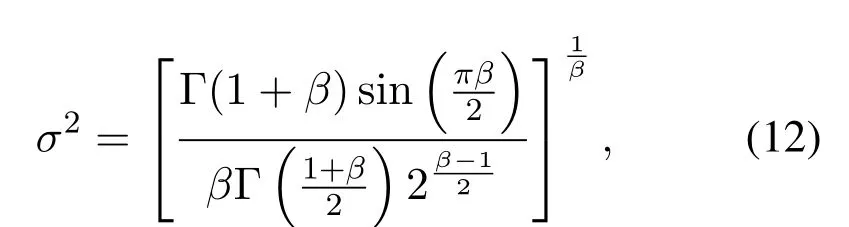

u and v are two variables that obey the Gaussian distribution, β is a hyperparameter, and σ2 is calculated through the following formula:

Algorithm 1. CS-based attack method.
Input: Benign signal r(n), spectrum sensing model f, maximum number of iterations Maxitr, specified number of nests m.
Output: Adversarial signal radv(n).
Initialization: Copy r(n) to the specified number of nests m and add random noise to generate the primary adversarial signals Xtm.
1: obtain fitness values Ftm = fitness(Xtm), optimal fitness value Ftbest, optimal solution Xtbest.
2: while t

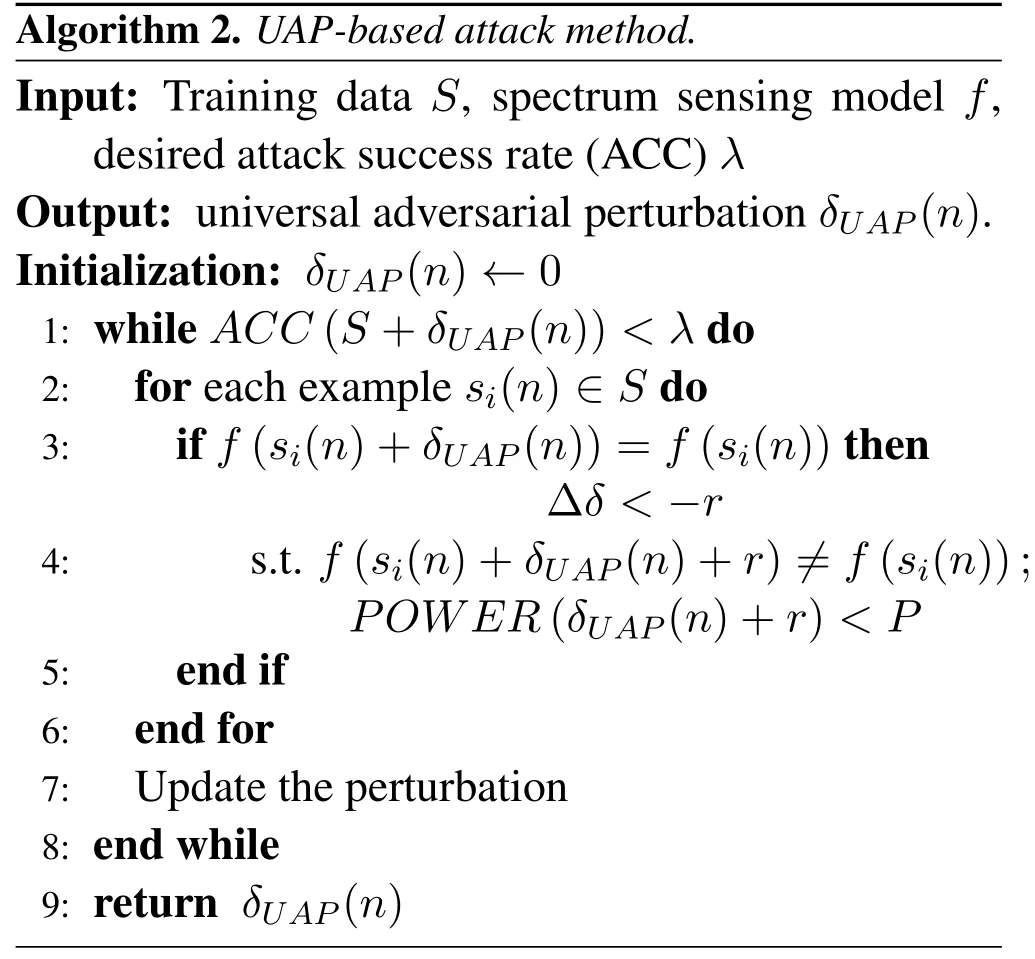

Algorithm 2. UAP-based attack method.
Input: Training data S, spectrum sensing model f, desired attack success rate (ACC) λ.
Output: Universal adversarial perturbation δUAP(n).
Initialization: δUAP(n) ← 0
1: while ACC(S+δUAP(n)) < λ do
2: for each example si(n) ∈ S do
3: if f(si(n)+δUAP(n)) = f(si(n)) then
4: ∆δ ← r s.t. f(si(n)+δUAP(n)+r) ≠ f(si(n)); POWER(δUAP(n)+r)

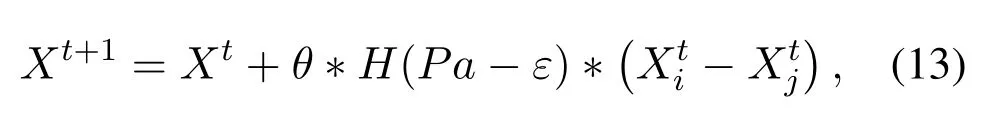

where θ and ε are random numbers that obey a uniform distribution, H(x) is a jump function, and Xi and Xj are any other two nests.
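The two update rules of this subsection can be sketched as below. This is a sketch under assumptions: the step size uses Mantegna's algorithm, which matches the description of Gaussian variables u and v and the hyperparameter β; scaling the step by the distance to the best nest `x_best` follows a common cuckoo search implementation and may differ from the paper's exact update; and `pa` is taken to be the parameter Pa reported in Section V. The function names are hypothetical.

```python
import math

import numpy as np


def levy_sigma(beta):
    """Standard deviation of u in Mantegna's algorithm (v has unit variance)."""
    num = math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
    den = math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2)
    return (num / den) ** (1 / beta)


def levy_flight(x, x_best, alpha=0.2, beta=1.5, rng=None):
    """Global move: heavy-tailed step S = u / |v|^(1/beta), scaled by alpha."""
    rng = np.random.default_rng() if rng is None else rng
    u = rng.normal(0.0, levy_sigma(beta), size=x.shape)
    v = rng.normal(0.0, 1.0, size=x.shape)
    step = u / np.abs(v) ** (1 / beta)
    z = rng.standard_normal(x.shape)
    # Assumed form: the move is scaled by the distance to the best nest.
    return x + alpha * step * (x - x_best) * z


def local_random_walk(x, x_i, x_j, pa=0.25, rng=None):
    """Local move: x + theta * H(pa - eps) * (x_i - x_j), with H a unit step."""
    rng = np.random.default_rng() if rng is None else rng
    theta = rng.random(x.shape)
    eps = rng.random(x.shape)
    jump = (pa - eps > 0).astype(float)  # H(pa - eps)
    return x + theta * jump * (x_i - x_j)
```

In an attack loop, each candidate produced by these moves would be power-limited and kept only if its fitness improves on the nest it replaces.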

3.3 PUAA Based on UAP

In addition to the above two attack methods, we also study the robustness of the spectrum sensing model through PUAA based on the universal adversarial perturbation (UAP) [40]. The process of constructing the UAP is shown in Algorithm 2. Training the spectrum sensing model can be regarded as making the model adapt to the data distribution of the training set. After training is completed, the spectrum sensing model can correctly predict the benign signals in the test set because the data distribution of the test set is usually the same as, or close to, that of the training set. From Algorithm 2, constructing the UAP can be understood as looking for a single perturbation that destroys the data distribution of the data set. More specifically, once the constructed UAP is superimposed on the data set, the data deviate from the original data distribution as much as possible. Therefore, a benign signal with the UAP superimposed no longer belongs to the data distribution of the original test set, and the spectrum sensing model predicts a wrong result.

IV. DEFENSE METHOD
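The outer loop of the UAP construction can be sketched as follows. The inner step that finds the extra perturbation r is not fully recoverable from Algorithm 2 as reproduced here, so this sketch substitutes a simple random search and keeps the lowest-power candidate that flips the prediction; that substitution, the function names and all default values are assumptions made for illustration. `model` is any callable returning a class label.

```python
import numpy as np


def limit_power(delta, p_max):
    """Rescale delta so that its average power does not exceed p_max."""
    power = np.mean(delta ** 2)
    return delta * np.sqrt(p_max / power) if power > p_max else delta


def fooling_rate(model, signals, delta):
    """Fraction of signals whose prediction changes once delta is added."""
    return float(np.mean([model(s + delta) != model(s) for s in signals]))


def build_uap(model, signals, p_max, target_rate=0.8, max_epochs=20,
              tries=50, scale=0.5, seed=0):
    """Accumulate one perturbation that fools the model on many signals."""
    rng = np.random.default_rng(seed)
    delta = np.zeros_like(signals[0])
    for _ in range(max_epochs):
        if fooling_rate(model, signals, delta) >= target_rate:
            break
        for s in signals:
            if model(s + delta) != model(s):
                continue  # this sample is already fooled
            # Assumed inner step: random search for an extra perturbation r
            # that flips the prediction for this sample.
            candidates = scale * rng.standard_normal((tries, s.size))
            flips = [r for r in candidates
                     if model(s + delta + r) != model(s)]
            if flips:
                r = min(flips, key=lambda c: np.mean(c ** 2))
                delta = limit_power(delta + r, p_max)
    return delta
```

The bound `max_epochs` guarantees termination even when the requested fooling rate cannot be reached under the power limit.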

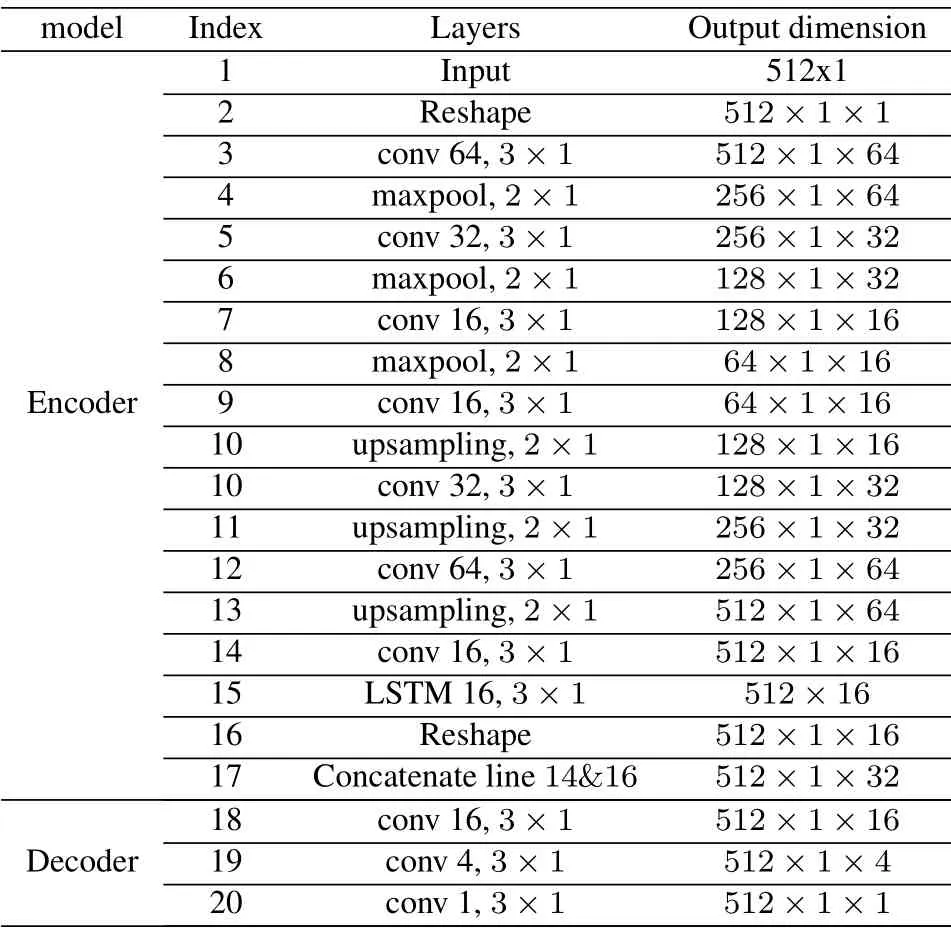

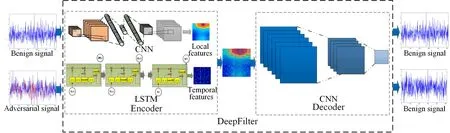

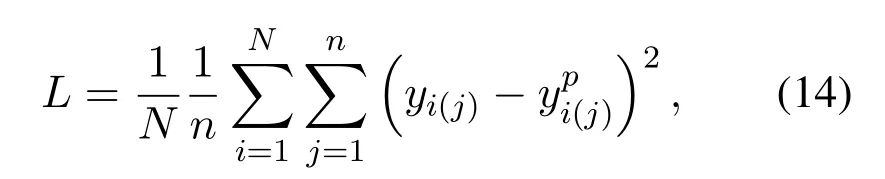

The proposed defense framework based on DeepFilter is shown in Figure 2. DeepFilter is built on the autoencoder structure [41] and consists of an encoder and a decoder; its specific structure is shown in Table 1. CNNs are widely used in autoencoders [42–44]. Different from previous work, the autoencoder we construct needs to process radio signals. In order to reconstruct the signal as faithfully as possible and achieve an effective defense, the LSTM [45] and the CNN are combined so that the encoder can extract both the temporal and the local features of the input signal. As shown in Figure 2, the encoder first extracts local and temporal features of the input signal through the CNN and the LSTM, and then concatenates the extracted features as the input of the decoder. The decoder recovers the original signal from the features extracted by the encoder. The loss function is the mean square error, i.e.,

$$Loss_{MSE} = \frac{1}{Nn}\sum_{i=1}^{N}\sum_{j=1}^{n}\left(y_i(j) - y_i^p(j)\right)^2$$

where $N$ is the number of training samples, $n$ represents the length of the signal, $y_i(j)$ represents the real data of the $i$-th signal at the $j$-th position, and $y_i^p(j)$ represents the model's prediction for the $j$-th position of the $i$-th signal.

Table 1. The constructed DeepFilter network structure.

Figure 2. Defense framework based on DeepFilter. DeepFilter is composed of an encoder and a decoder. The encoder extracts the local and temporal features, and the decoder reconstructs the signal according to the extracted features. DeepFilter does not affect benign signals and restores adversarial signals to benign signals.

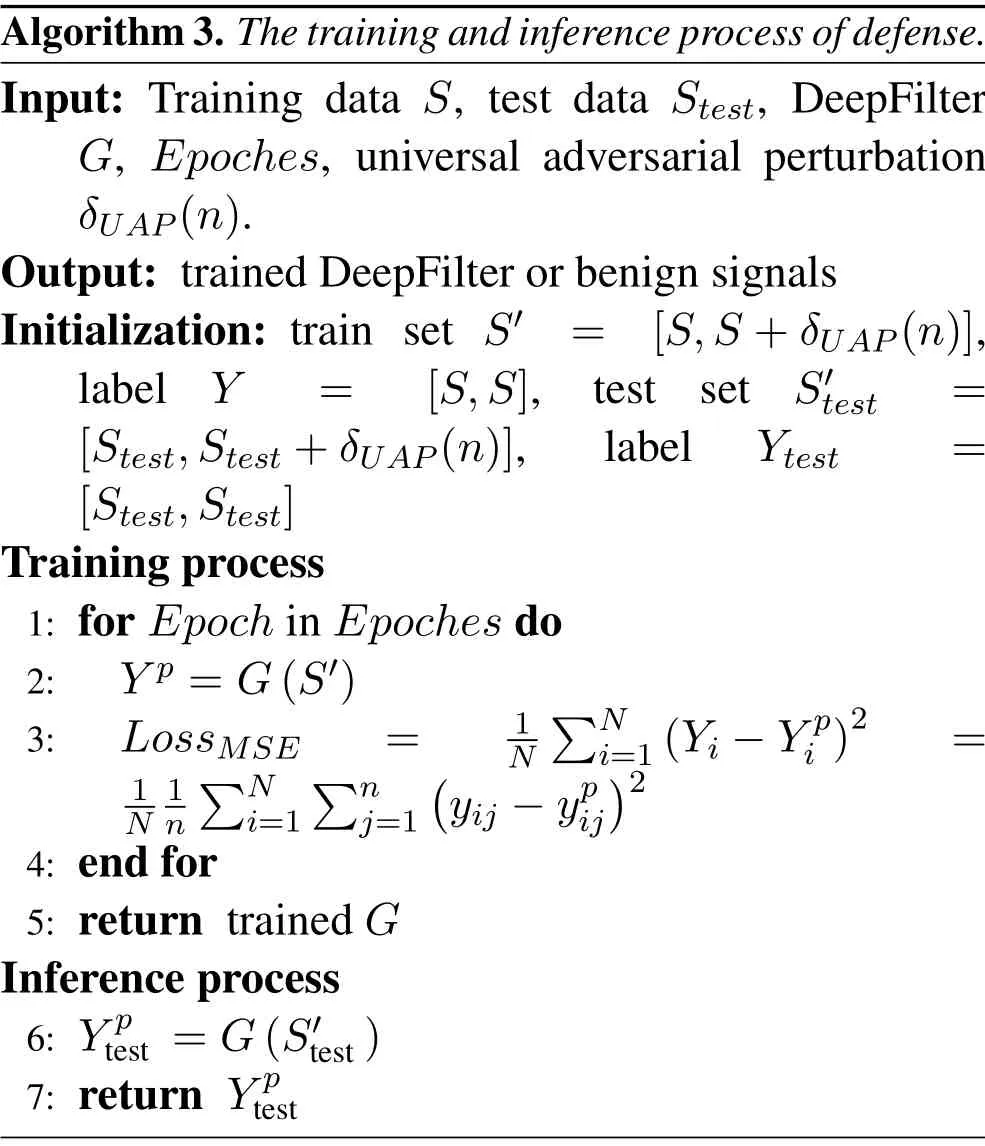

Algorithm 3. The training and inference process of the defense.
Input: Training data S, test data Stest, DeepFilter G, Epoches, universal adversarial perturbation δUAP(n).
Output: Trained DeepFilter or benign signals.
Initialization: train set S′ = [S, S+δUAP(n)], label Y = [S, S], test set S′test = [Stest, Stest+δUAP(n)], label Ytest = [Stest, Stest]
Training process
1: for Epoch in Epoches do
2: $Y^p = G(S')$
3: $Loss_{MSE} = \frac{1}{N}\sum_{i=1}^{N}(Y_i - Y_i^p)^2 = \frac{1}{Nn}\sum_{i=1}^{N}\sum_{j=1}^{n}(y_{ij} - y_{ij}^p)^2$
4: end for
5: return trained G
Inference process
6: $Y^p_{test} = G(S'_{test})$
7: return $Y^p_{test}$

The training and inference process of DeepFilter is shown in Algorithm 3. It should be noted that we only use the UAP-based adversarial signals, benign signals and AWGN to train DeepFilter; no other adversarial perturbation is used for training. In the inference process, DeepFilter filters the input adversarial signal, thereby defending against it.
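The data preparation and loss in Algorithm 3 can be sketched without committing to a network architecture. In this minimal sketch the function names are hypothetical, signals are stored as rows of a real-valued array, and the autoencoder itself is left out; any model mapping inputs to reconstructions could be trained against these targets.

```python
import numpy as np


def build_training_pairs(benign, delta_uap):
    """Inputs are [S, S + delta]; targets are [S, S].

    Both the benign and the perturbed copy of a signal are mapped to the
    same benign target, so the filter learns to leave benign signals alone
    and to strip the perturbation from adversarial ones.
    """
    inputs = np.concatenate([benign, benign + delta_uap], axis=0)
    targets = np.concatenate([benign, benign], axis=0)
    return inputs, targets


def mse_loss(y_true, y_pred):
    """Mean square error averaged over N samples and n positions."""
    return float(np.mean((y_true - y_pred) ** 2))
```

As a sanity check, a filter that returns its input unchanged incurs a loss equal to half the average power of the perturbation, since only the perturbed half of the inputs differs from its target.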

V. EXPERIMENTAL RESULTS

5.1 Data Generation and Experimental Setup

The data used are similar to those in [12]. The generated signals include 8 modulation types: BPSK, QPSK, 16QAM, PAM4, CPFSK, GFSK, 64QAM and 8PSK. The SNR of each type of signal ranges from -20 dB to 20 dB in steps of 2 dB. The length of each signal is 256. For each modulation type and each SNR, 1000 samples are generated as training data and 500 samples as test data. The noise data in the training set are AWGN with the same length of 256, and the number of noise samples is the same as the number of signal samples.
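One way to produce samples on this SNR grid is sketched below for QPSK only. This is a simplified illustration, not the generation procedure of [12]: it assumes one sample per symbol with no pulse shaping, complex baseband signals, and hypothetical function names.

```python
import numpy as np

SNR_GRID_DB = np.arange(-20, 22, 2)  # -20 dB to 20 dB in 2 dB steps
SIGNAL_LENGTH = 256


def qpsk_symbols(n, rng):
    """Unit-power QPSK symbols, one sample per symbol (no pulse shaping)."""
    bits = rng.integers(0, 2, size=(n, 2)) * 2 - 1  # values in {-1, +1}
    return (bits[:, 0] + 1j * bits[:, 1]) / np.sqrt(2)


def add_awgn(signal, snr_db, rng):
    """Add complex white Gaussian noise so the result has the requested SNR."""
    signal_power = np.mean(np.abs(signal) ** 2)
    noise_power = signal_power / 10 ** (snr_db / 10)
    noise = np.sqrt(noise_power / 2) * (
        rng.standard_normal(signal.shape)
        + 1j * rng.standard_normal(signal.shape))
    return signal + noise


def make_qpsk_set(samples_per_snr, rng):
    """Return an array of shape (len(SNR_GRID_DB) * samples_per_snr, 256)."""
    rows = [add_awgn(qpsk_symbols(SIGNAL_LENGTH, rng), snr, rng)
            for snr in SNR_GRID_DB for _ in range(samples_per_snr)]
    return np.array(rows)
```

With `samples_per_snr=1000` this yields the per-modulation training count stated above; the other seven modulation types would need their own symbol generators.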

The experiments were conducted on a server equipped with an Intel Xeon 6420 CPU (2.6 GHz, 18 cores), a Tesla V100 GPU (32 GiB), 16 GiB of DDR4-RECC 2666 memory, Ubuntu 16.04, Python 3.6 and Keras 2.2.4. The training process of the spectrum sensing model is the same as in [12]. For the GA-based PUAA method, the hyperparameters are set as follows: the number of iterations is 100, the population size is 80, the elite population size is 20, and the mutation rate is 0.005. For the CS-based attack method, the number of iterations is 100, the population size is 80, Pa is 0.25, α is 0.2 and β is 1.5. To enable DeepFilter to defend against adversarial signals, we train it on adversarial signals in addition to the normal data set, as shown in Algorithm 3. The UAP is added to the normal data set to form the adversarial data set, whose ground-truth labels are the corresponding normal signals. DeepFilter is trained with a batch size of 512 for 30 epochs, using SGD with a momentum factor of 0.9 and an initial learning rate of 0.01.

5.2 Attack Result

5.2.1 Attack Results of Different PUAA Methods

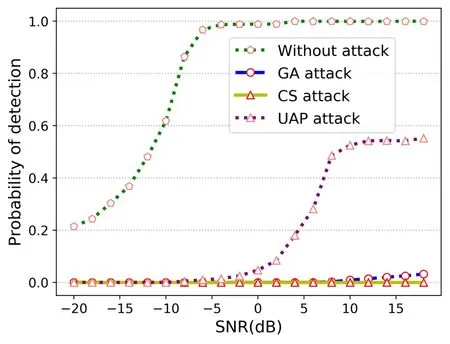

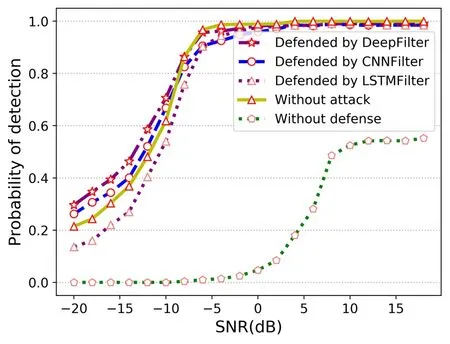

We attack the deep learning-based spectrum sensing model [12] with the three designed PUAA methods, which aim to change the model's prediction for a benign input signal from H1 to H0. In all three attack methods, the power of the adversarial perturbation added to the benign signal is limited so that it does not exceed the power of the benign signal. When making its decision, the spectrum sensing model sets the decision boundary parameter γ according to a given Pf. In this experiment, we do not consider the impact of Pf on the attack effect and fix Pf at 0.1. The experimental results are shown in Figure 3, which shows the influence of the adversarial signals generated by the three methods on Pd. The results show that the deep learning-based spectrum sensing method can be successfully attacked in the black-box scenario. Among the three methods, UAP has the weakest attack effect; even so, it reduces Pd at high SNR by more than 40% compared with the unattacked model. The GA-based and CS-based attack methods reduce the model's Pd to almost 0%. This shows that the deep learning-based spectrum sensing model is not robust enough: it has a security vulnerability and cannot withstand PUAA.

Figure 3. Attack results of different attack methods.

5.2.2 Influence of Pf on Attack Effect

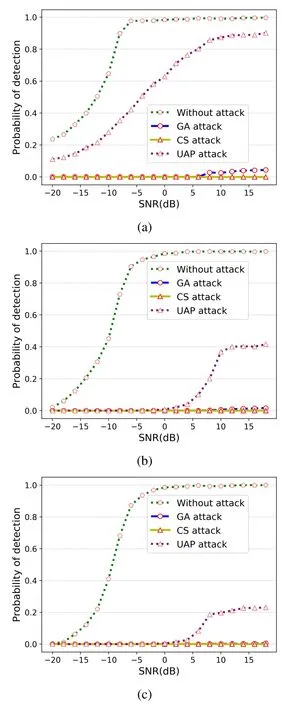

The decision boundary of the spectrum sensing model is set by Pf. In this experiment, we explore the influence of Pf on the attack effect, in order to investigate whether adjusting Pf can be used to improve the robustness of the model. We carry out experiments with Pf of 0.2, 0.01 and 0.001. The experimental results are shown in Figure 4, from which we can see that the higher the Pf, the weaker the attack effect of each attack method. Moreover, Pf has a greater impact on the attack effect of the UAP, but little effect on the adversarial signals generated by the GA and CS algorithms. According to our analysis, if Pf is higher, γ is lower, the model is less likely to predict the signal as H0, and it is more difficult to attack. This is why a higher Pf leads to a weaker attack effect on Pd. The UAP is more sensitive to Pf because it realizes the attack with a single perturbation that makes the model mispredict as many signals in the training set as possible, which makes it more sensitive to the decision boundary of the model. The other two methods generate a separate adversarial perturbation for each sample, so the decision boundary has less influence on them. Nevertheless, although the UAP attack can be mitigated to a certain extent by increasing Pf, doing so sacrifices the false alarm performance of the model. What is more, changing the decision boundary cannot defend against the GA-based and CS-based attack methods. Therefore, the robustness of the model cannot in general be improved by changing Pf.

Figure 4. Influence of different Pf on attack effect. (a) Pf = 0.2, (b) Pf = 0.01, (c) Pf = 0.001.

5.2.3 The Impact of Power Limitation on Attack Effect

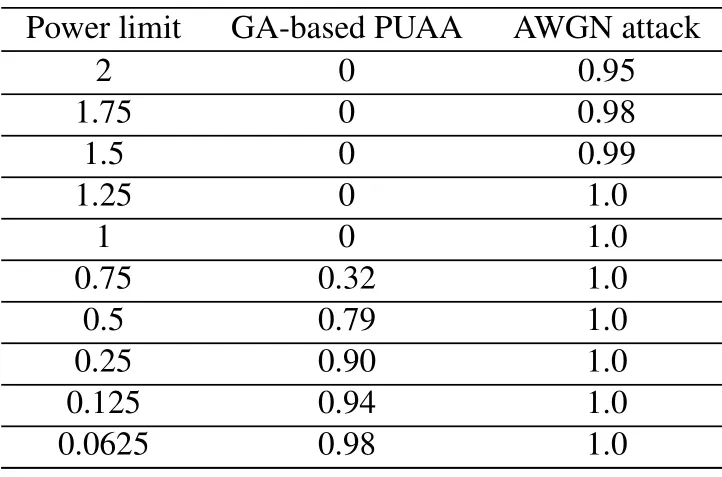

In the above experiments, the power of the adversarial perturbation is limited so that it does not exceed the power of the benign signal. That is to say, if the power of the benign signal is P, the power of the added adversarial perturbation will not exceed P. In this section, we explore the impact of different power limits on the effect of the GA-based PUAA, with Pf set to 0.001 and an SNR of 18 dB. In addition, we compare the attack effect of PUAA with the traditional AWGN attack, i.e., using AWGN as the attacking signal. The Pd of the spectrum sensing model after the attack under different power limits is shown in Table 2. The power limit values in the table represent the ratio of the adversarial perturbation power to the benign signal power. For example, 0.75 means that the power of the adversarial perturbation does not exceed 0.75 * P. The table shows that once the power limit of the adversarial perturbation falls below 1, the smaller its power, the lower the attack success rate. For AWGN, a power limit of 1.5 reduces the Pd of the spectrum sensing model by only 0.01. When both attacks reduce the Pd of the model by 0.02, the power of AWGN is 28 times larger than that of the adversarial perturbation generated by the GA-based PUAA method. This shows that the adversarial perturbation is different from AWGN, and that its attack effect is much stronger than that of AWGN at the same power.

Table 2. Influence of power limitation on attack effect.

5.3 Defense Result

In this section, we explore the defense effect of DeepFilter on adversarial signals, its impact on model performance, and its defense effectiveness against other attack methods. The experiments were conducted with Pf set to 0.1.

5.3.1 Defense Effect on UAP

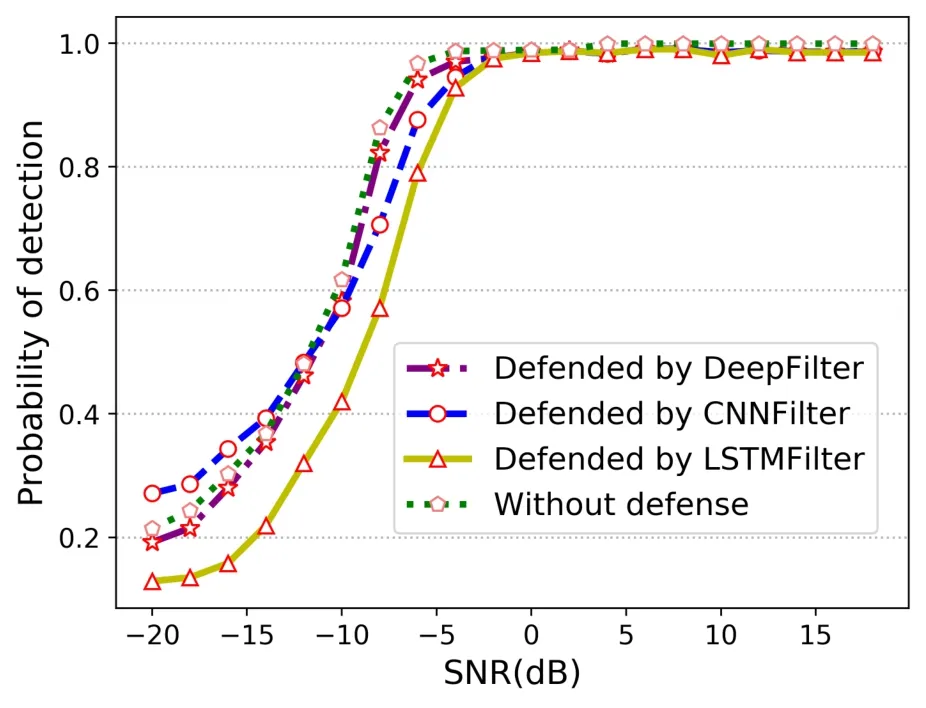

To verify the defense ability of the defense method, we reconstruct the signal through DeepFilter. We compare the defense effect of DeepFilter with an autoencoder based on CNN (CNNFilter) and an autoencoder based on LSTM (LSTMFilter). The encoder structures of CNNFilter and LSTMFilter are the same as the CNN and the LSTM in DeepFilter's encoder, respectively. The decoder structure of CNNFilter and LSTMFilter is the same as the decoder in DeepFilter. The adversarial signals processed by these autoencoders are fed into the spectrum sensing model to verify the effectiveness of the defense. The experimental results are shown in Figure 5. DeepFilter has a better defense effect than CNNFilter and LSTMFilter, which benefits from the fact that DeepFilter can extract better features than either of them. We further observe that, after the adversarial signal is processed by DeepFilter or CNNFilter at low SNR, the model's Pd is higher than its Pd when it is not attacked. This is because these two autoencoders over-process the adversarial signals: while removing part of the adversarial perturbation, they also remove part of the normal noise, so that the Pd of the model improves to a certain extent. The LSTM-based autoencoder behaves in the opposite way: it under-processes the adversarial signals, so the Pd of the model is reduced.

Figure 5. The defense effect of different methods on UAP.

5.3.2 Impact on the Spectrum Sensing Model's Performance

In a real-world defense process, the defender does not know whether the received signal is an adversarial signal or a benign signal. Therefore, DeepFilter is required not to affect received benign signals. We explore the impact of DeepFilter on benign signals and provide the effects of CNNFilter and LSTMFilter on benign signals for comparison. The experimental results are shown in Figure 6. Although CNNFilter improves the Pd of the spectrum sensing model at low SNR, the Pf of the model reaches 0.276, far greater than the preset value of 0.1. LSTMFilter reduces the Pd of the model, but its Pf is 0.106, which is closer to the preset value. Because DeepFilter combines the feature extraction capabilities of the CNN and LSTM networks, it can extract the local and temporal features of the signal while altering the original signal as little as possible. With DeepFilter (Pf = 0.114), both Pd and Pf therefore stay close to the values obtained without defense. This shows that DeepFilter does not noticeably affect the performance of the spectrum sensing model when it is not attacked.

Figure 6. Impact on model performance. DeepFilter: Pf = 0.114, CNNFilter: Pf = 0.276, LSTMFilter: Pf = 0.106.

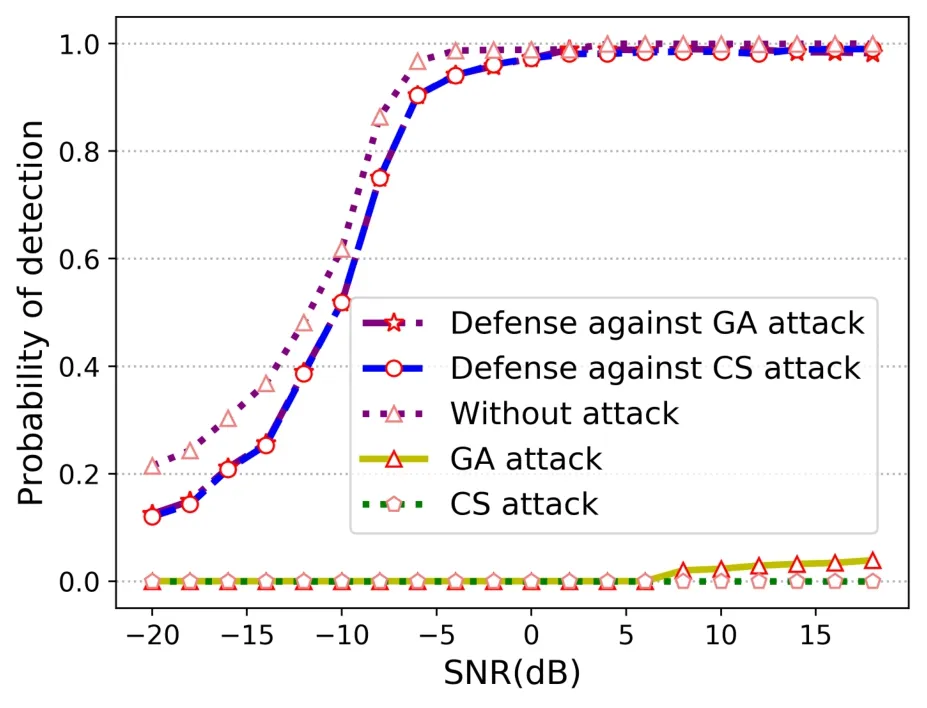

5.3.3 Defense Effect on GA-Based and CS-Based Attack Methods

We use adversarial signals generated by the UAP-based attack method to train DeepFilter. In an actual attack, the adversary may attack the model through other attack methods, which requires DeepFilter to defend against adversarial signals generated by different attack methods. Therefore, we explore the defense effect of DeepFilter against different attack methods. We generate adversarial signals through the GA-based and CS-based attack methods. The generated adversarial signals are processed by DeepFilter and then fed into the model for prediction. The experimental results are shown in Figure 7. The Pd of the model is greatly improved after the adversarial signal is processed by DeepFilter, and it is very close to the Pd of the model when it is not attacked. This shows that DeepFilter can effectively deal with adversarial signals generated by different attack methods. It should be noted that DeepFilter is trained only on the adversarial signals generated by the UAP-based PUAA method, yet it can also defend against the GA-based and CS-based PUAA attacks. This shows that prior information about one attack method is enough to defend against multiple attack methods, and that prior information about all possible attack methods is not needed.

Figure 7. Defense effect on GA-based and CS-based attack methods.

VI. CONCLUSION

We have studied the robustness of the deep learning-based spectrum sensing method and designed three PUAA methods. The experimental results show that these attack methods can successfully attack the spectrum sensing model. We have also verified that the model cannot defend against PUAA by changing Pf. This confirms that the deep learning-based spectrum sensing model is vulnerable to PUAA. To improve the security of the spectrum sensing model against PUAA, we have proposed DeepFilter. Experimental results show that DeepFilter achieves effective defense against adversarial attacks without affecting the performance of the model, and that it can defend against adversarial signals generated by different attack methods.

However, we did not conduct experiments in over-the-air scenarios. In addition, we did not consider the impact of changes in the spectrum environment on the attack and defense performance. In a dynamic spectrum environment, the Cognitive Learning Framework (CL) [46] can adapt to a dynamic environment and dynamic tasks, and the effects of the proposed PUAA and defense methods on CL in such an environment are worth exploring. In future work, we will extend the experiments to physical scenarios and explore the influence of different channels on the attack effect. Moreover, we will explore the attack effect of the proposed PUAA and the defense effect of the proposed defense method in the dynamic spectrum environment.

ACKNOWLEDGEMENT

This research was supported by the National Natural Science Foundation of China under Grant No. 62072406, No. U19B2016, No. U20B2038 and No. 61871398, the Natural Science Foundation of Zhejiang Province under Grant No. LY19F020025, and the Major Special Funding for "Science and Technology Innovation 2025" in Ningbo under Grant No. 2018B10063.
