An Overview of Wireless Communication Technology Using Deep Learning

Jiyu Jiao, Xuehong Sun 2,*, Liang Fang, Jiafeng Lyu

1 School of Physics and Electronic-Electrical Engineering, Ningxia University, Ningxia 750021, China

2 School of Information Engineering, Ningxia University, Ningxia 750021, China

Abstract: With the development of 5G, future wireless communication networks are becoming increasingly intelligent. To meet new service demands such as ultra-heterogeneous networks, diverse communication scenarios, large numbers of antenna elements, and large bandwidths, new theories and technologies for intelligent communication have been widely studied. Among them, Deep Learning (DL) is a powerful technology in artificial intelligence (AI): a DL model is trained to iteratively update its parameters toward optimal values. This paper reviews the latest research progress of DL in intelligent communication, focusing on five scenarios: Cognitive Radio (CR), Edge Computing (EC), Channel Measurement (CM), End-to-end Encoder/Decoder (EED), and Visible Light Communication (VLC). Prospects and challenges for future research and development are also discussed.

Keywords: artificial intelligence; wireless communication; deep learning; cognitive radio; edge computing; channel measurement; end-to-end encoder and decoder; visible light communication

I. INTRODUCTION

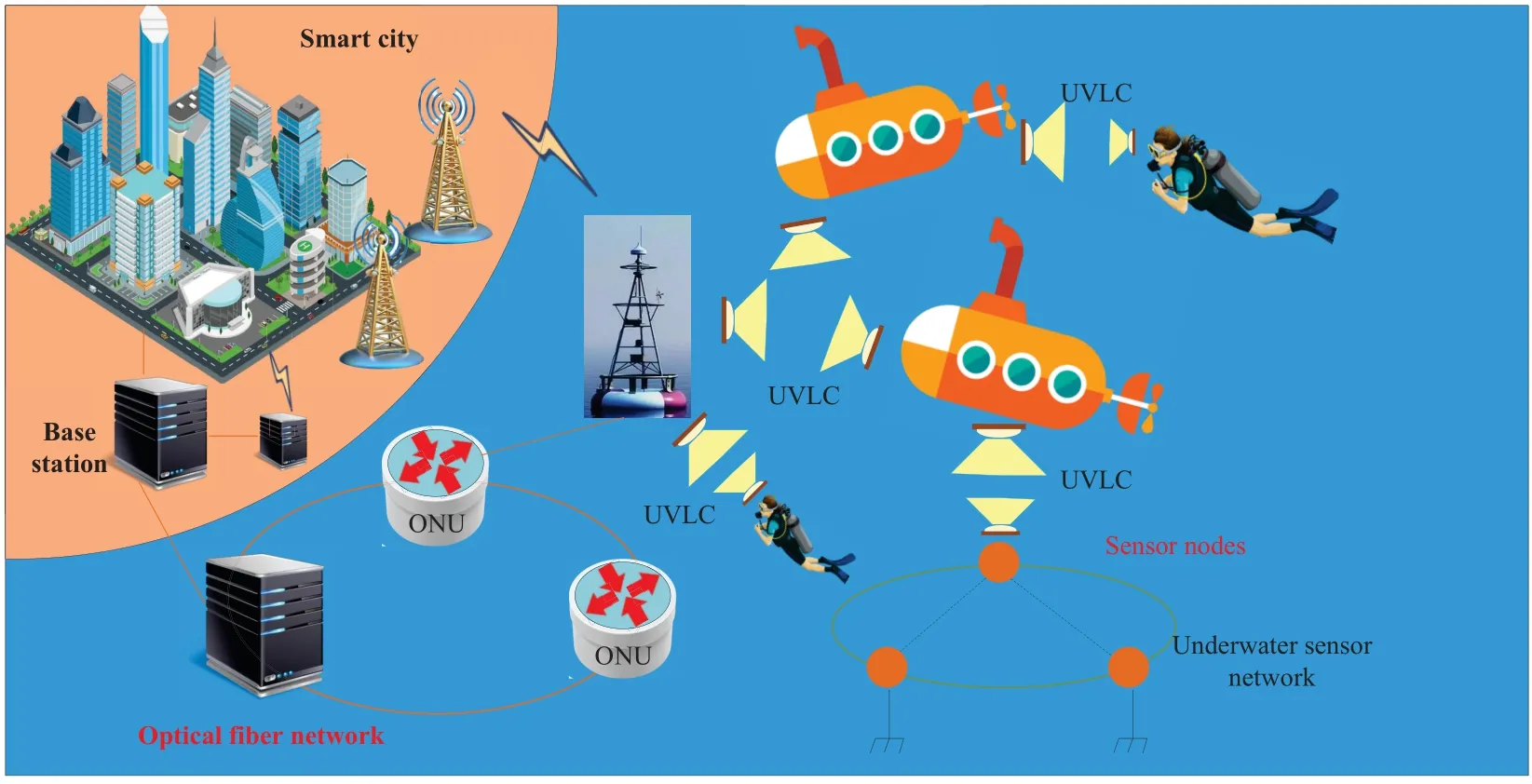

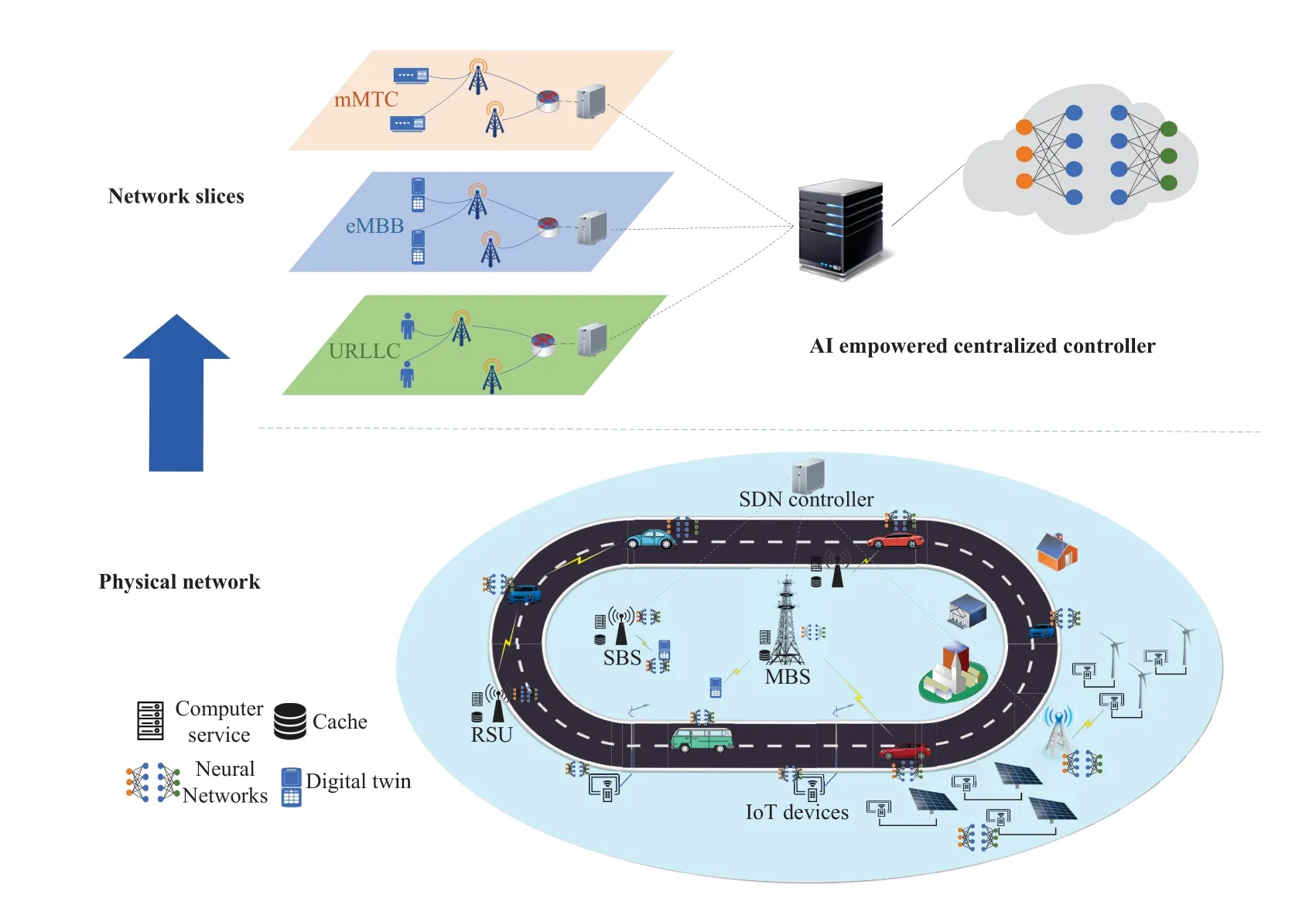

The maturity and commercialization of 5G are driving the spread of all kinds of intelligent communication terminals and traffic-intensive applications, and the Internet of Everything is imminent. This brings great challenges to wireless system design in areas such as spectrum sensing, spectrum efficiency, low-latency transmission, computing power, and high throughput. Intelligent wireless networks with self-organization, self-configuration, and self-recovery functions are therefore an inevitable trend for accomplishing tasks that are difficult for humans to pre-program. 5G has three main application scenarios: Enhanced Mobile Broadband (eMBB), Ultra-Reliable Low-Latency Communication (URLLC) [1], and Massive Machine-Type Communication (mMTC) [2]. Different users have different needs and network requirements, so a more flexible and adaptive network is needed to provide services with different Quality of Service (QoS). Cognitive Radio (CR), Edge Computing (EC), Channel Measurement (CM), End-to-end Encoder/Decoder (EED), and Visible Light Communication (VLC) are promising technologies in 5G that provide lower latency and more flexible services.

Today, the sheer volume of information, the explosion of knowledge, and the speed of transmission constantly challenge the way people think and learn. Faced with rapidly changing technologies and knowledge, people need better ways to organize their thinking and construct meaning in order to understand knowledge more deeply. Against this background, the rapid development of artificial intelligence (AI) in recent years has brought new ideas for solving the difficulties encountered in developing wireless communication. DL has played an important role in the development of AI; it builds a deep understanding of the data rather than relying on rote memorization and simple application. It can learn hidden rules directly from massive data and then use these rules to make predictions or decisions.

As a key technology for future wireless networks, CR allows secondary users to use the idle spectrum of licensed primary users for opportunistic communication, which is a promising way to cope with the shortage of spectrum resources [3]. Spectrum sensing is a basic task of CR; its purpose is to obtain cognitive information about the use of licensed spectrum and the presence of primary users in a specific geographic area. When a primary user becomes active, the cognitive user must detect its presence with high probability and, within a certain period of time, vacate the channel or reduce its transmission power. Spectrum sharing lets different radio systems share spectrum resources, which is attractive given the current scarcity of spectrum. As key technologies in CR, Modulation Recognition (MR) and Frequency Hopping Recognition (FHR) play important roles in signal detection and classification, helping to improve both the security of communication systems and the utilization of spectrum resources. This is critical for both civilian and military applications.

To relieve the burden on cloud computing and the high latency of conventional networks, EC provides storage and computing capabilities at the edge of the mobile network, close to the user [4, 5]. EC was originally designed to bring computation closer to the data source. Its applications run on the edge side and respond faster, meeting industry's basic needs for real-time services, application intelligence, security, and privacy protection. EC sits between physical entities and industrial connections, or directly on top of the physical entities. EC therefore offers low latency, reduced energy consumption of the whole system, massive data processing, and location awareness, which greatly improves the user experience.

In recent years, DL has become a hot topic in CM [6]. It already outperforms some of the latest traditional communication algorithms in several tasks, such as channel estimation [7], channel prediction [8], and channel state information (CSI) feedback [9]. Channel estimation produces a mathematical representation of the channel's influence on the input signal, and a good channel estimator is one that minimizes the estimation error. Through channel estimation, the receiver obtains an estimate of the channel impulse response. Modern wireless communication systems make extensive use of channel information, and acquiring accurate CSI plays an important role in them. Channel prediction becomes important when the reported CSI may become outdated because of channel fluctuations; it can improve operations performed at the transmitter or receiver, such as adaptive coding and modulation, decoding, channel equalization, and antenna beamforming. CSI describes the attenuation of the signal on each transmission path, i.e., the value of each element of the channel gain matrix H, which reflects effects such as signal scattering, environmental attenuation, and distance attenuation. CSI feedback lets the communication system adapt to the current channel conditions, providing a basis for highly reliable, high-rate communication in multi-antenna systems.
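As a concrete baseline for “minimizing the estimation error”, the sketch below estimates a single complex channel gain from known pilot symbols by least squares (LS). It is a minimal sketch under assumed conditions (one antenna, flat fading, additive white Gaussian noise, unit-energy QPSK pilots); `ls_channel_estimate` and `simulate_pilots` are hypothetical names, and this is a classical estimator, not one of the DL methods cited above.

```python
import cmath
import random


def ls_channel_estimate(pilots, received):
    """Least-squares estimate of a flat-fading gain h from y_k = h * x_k + n_k.

    Minimizing sum_k |y_k - h * x_k|^2 over h gives
    h_hat = sum_k conj(x_k) * y_k / sum_k |x_k|^2.
    """
    num = sum(x.conjugate() * y for x, y in zip(pilots, received))
    den = sum(abs(x) ** 2 for x in pilots)
    return num / den


def simulate_pilots(h, num_pilots=64, noise_std=0.1, seed=0):
    """Hypothetical link: unit-energy QPSK pilots through gain h plus complex AWGN."""
    rng = random.Random(seed)
    pilots = [cmath.exp(1j * (cmath.pi / 4 + cmath.pi / 2 * rng.randrange(4)))
              for _ in range(num_pilots)]
    received = [h * x + complex(rng.gauss(0.0, noise_std), rng.gauss(0.0, noise_std))
                for x in pilots]
    return pilots, received


h_true = 0.8 * cmath.exp(0.3j)
pilots, received = simulate_pilots(h_true)
h_hat = ls_channel_estimate(pilots, received)
```

Because the pilots have unit energy, the LS estimate averages the noise over all pilots, so its error shrinks as the number of pilots grows.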

Modern communication is built on signal processing algorithms that have a relatively complete foundation in statistics and information theory, and many of them can be proved optimal under their model assumptions. In a real communication system, however, most modules are nonlinear and can only be approximately described by these algorithms. Existing communication systems are designed modularly: the processing chain is divided into a series of sub-modules, each with an independent function, such as source coding, channel coding, modulation, channel estimation, and channel equalization. This design is simpler in practical engineering, but it cannot guarantee end-to-end optimality, and realizing an integrated end-to-end system with traditional methods is very complex. The development of DL has made autoencoder-based communication system design a new option. A neural network (NN) learns the data distribution from a large number of training samples and then predicts results, so it can jointly optimize the end-to-end system and can outperform existing methods. DL-based end-to-end communication system models fall into two categories: those with a deterministic channel model and those with an unknown channel model.

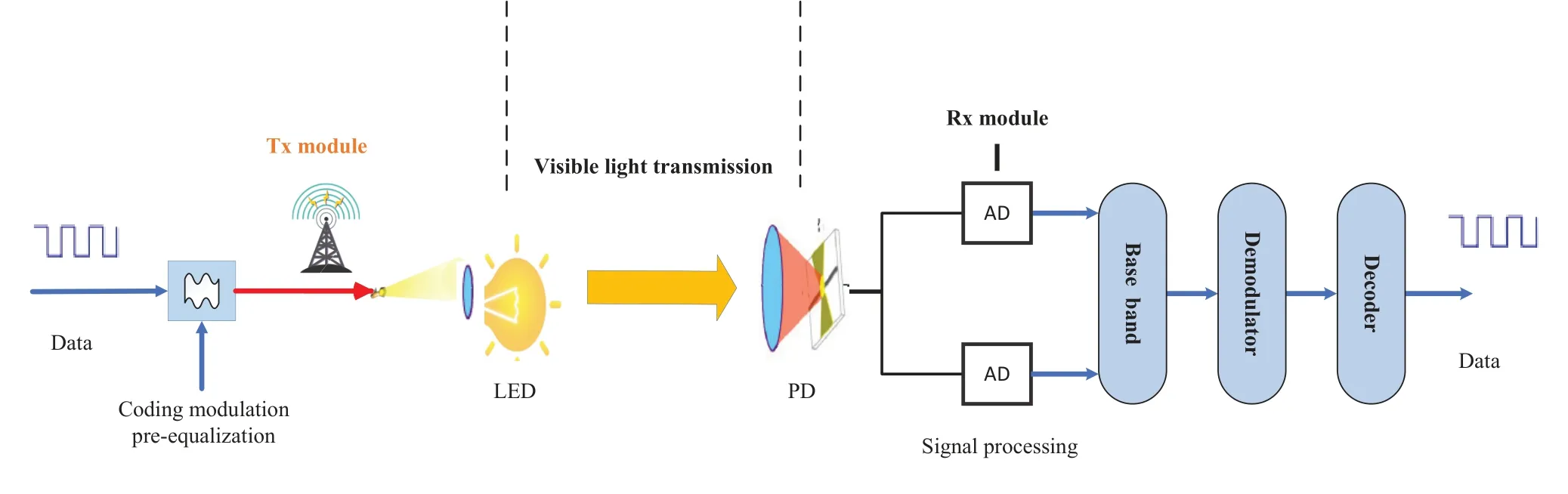

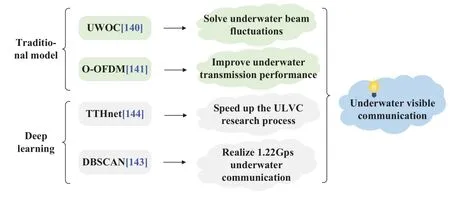

VLC transmits information using visible light sources such as Light Emitting Diodes (LEDs), which emit light signals whose intensity changes too quickly to be perceived by the naked eye. It is a promising research field in modern communication. Besides expanding the spectrum resources available for mobile communication, it offers high speed, large capacity, security, and energy savings. Existing 5G and envisioned 6G RF communication solutions are energy-intensive, whereas LEDs for lighting and display are already ubiquitous, so VLC could be used to achieve universal light-based interconnection in the future. Innovative applications such as Light Fidelity (Li-Fi), light positioning, and smart home systems show that integrating VLC with lighting or displays is practical, suggesting that Li-Fi may lead to major technological change. As part of AI, DL has shown great potential in nonlinear mitigation, which will greatly promote VLC research. This paper introduces the latest research progress of DL in VLC, including Underwater Visible Light Communication (UVLC), nonlinear mitigation algorithms, and jitter compensation.

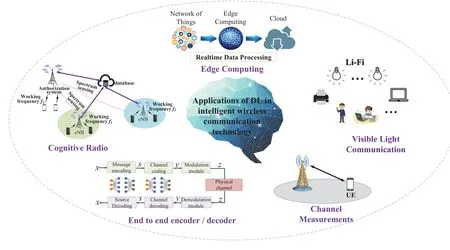

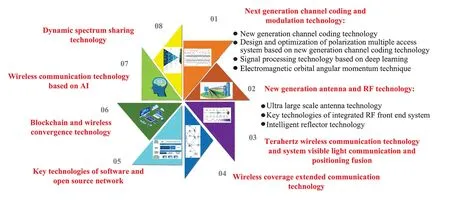

This paper reviews the application of DL in intelligent wireless communication networks and explains the value of combining DL with wireless communication networks from two aspects: the development of DL and its common algorithms. We summarize the applications of DL to spectrum management, edge-side application inference, and wireless transmission in wireless communication networks (corresponding to CR, EC, and CM/EED, respectively). Beyond traditional wireless communication, DL is also widely used in VLC. For convenience, this paper therefore classifies the application scenarios of DL in wireless communication networks as shown in Figure 1, mainly including CR, EC, CM, EED, and VLC. We then analyze and summarize their principles, applicability, design methods, advantages, and disadvantages in solving wireless communication problems. Finally, in light of the existing limitations, this paper points out future development trends and research directions for intelligent wireless communication technology, hoping to provide a reference for follow-up research in the field.

Figure 1. Application of DL in intelligent wireless communication technology.

The rest of this paper is organized as follows. Section II introduces the development of DL and several common DL algorithms. Sections III, IV, V, VI, and VII review the applications of DL in CR, EC, CM, EED, and VLC, respectively. Finally, Section VIII concludes the paper and summarizes future work on DL in intelligent communication.

II. DEEP LEARNING

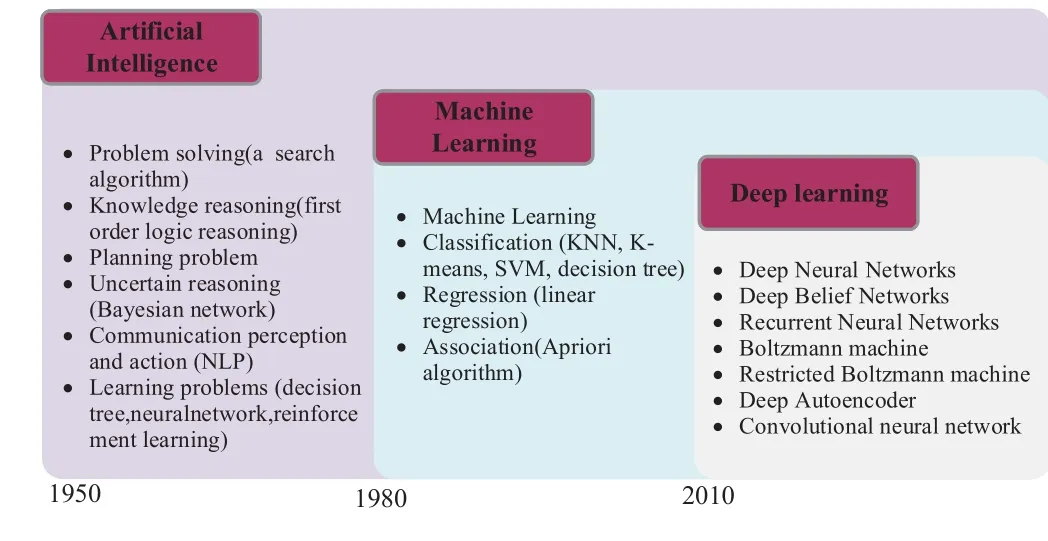

DL refers to machine learning (ML) algorithms that use multi-layer neural networks. As shown in Figure 2, DL is a newer field of ML research whose motivation is to build neural networks that simulate the human brain's mechanisms for analyzing and interpreting data. DL combines low-level features to form more abstract high-level representations (attribute categories or features), thereby discovering distributed feature representations of the data. The term “deep” in DL refers to the depth of the network, whereas an artificial neural network (ANN) can be shallow.

Figure 2. The relationship among AI, ML, and DL.

2.1 Development of DL

2.1.1 Basic Idea of DL

Suppose we have a system $S$ with $n$ layers $(S_1, \ldots, S_n)$, an input $I$, and an output $O$, which can be written as $I \rightarrow S_1 \rightarrow S_2 \rightarrow \cdots \rightarrow S_n \rightarrow O$. If the output $O$ equals the input $I$, no information is lost as $I$ passes through the system, which means no information is lost at any layer $S_i$; that is, the output of each layer $S_i$ is another representation of the original information. In DL, we want to learn features automatically. Suppose we have a set of inputs $I$ (such as images or text) and design a system $S$ with $n$ layers. By tuning the system's parameters so that its output is still the input $I$, we automatically obtain a series of hierarchical features of $I$, namely $S_1, \ldots, S_n$. The idea, then, is to stack multiple layers so that the output of one layer serves as the input of the next; in this way, the input information is represented hierarchically.
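The lossless-stacking idea can be illustrated with a toy system in which every layer $S_i$ is an invertible affine map, a hypothetical choice that guarantees no information is lost. Each intermediate output is another representation of $I$, and undoing the layers in reverse order recovers $I$ exactly. Real DL layers are learned and nonlinear, so this sketch only shows the layer-to-layer bookkeeping; `affine_layer`, `encode`, and `decode` are illustrative names.

```python
from typing import Callable, List, Tuple

# Each hypothetical layer is a (forward, inverse) pair of scalar maps.
Layer = Tuple[Callable[[float], float], Callable[[float], float]]


def affine_layer(a: float, b: float) -> Layer:
    """Invertible layer s(x) = a*x + b with a != 0, so no information is lost."""
    if a == 0:
        raise ValueError("a must be non-zero for the layer to be invertible")
    return (lambda x: a * x + b, lambda y: (y - b) / a)


def encode(layers: List[Layer], x: float) -> List[float]:
    """Pass x through S1..Sn; the output of each layer is the input of the next."""
    reps = []
    for forward, _ in layers:
        x = forward(x)
        reps.append(x)  # reps[i] is the representation produced by layer i+1
    return reps


def decode(layers: List[Layer], y: float) -> float:
    """Undo the layers in reverse order to recover the original input."""
    for _, inverse in reversed(layers):
        y = inverse(y)
    return y


system = [affine_layer(2.0, 1.0), affine_layer(-0.5, 3.0), affine_layer(4.0, -2.0)]
reps = encode(system, 1.5)   # hierarchical representations of I = 1.5
```

Here the input 1.5 is represented as 4.0, 1.0, and 2.0 at successive layers, and decoding the last representation returns 1.5.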

Shallow learning was the first wave of ML. In the late 1980s, the invention of the Back Propagation (BP) algorithm [10] for artificial neural networks set off a wave of ML based on statistical models. BP was found to let an ANN learn statistical regularities from a large number of training samples and thereby predict unknown events. This statistics-based approach has many advantages over earlier systems based on hand-crafted rules. Although such an ANN is also called a Multi-layer Perceptron (MLP), it is actually a shallow model containing only one layer of hidden nodes. In the 1990s, a variety of shallow ML models were proposed, such as the Support Vector Machine (SVM), the Maximum Entropy Model (MEM), Logistic Regression (LR), and Boosting. These models can basically be regarded as having one layer of hidden nodes (such as SVM and Boosting) or none (such as LR).

DL is the second wave of ML. In 2006, Geoffrey Hinton, a professor at the University of Toronto in Canada, and his team published a paper that set off a wave of DL in academia and industry. Ref. [11] makes two main points: an ANN with multiple hidden layers has excellent feature learning capacity, and the learned features describe the data more essentially, which greatly helps visualization or classification; and the difficulty of training a deep neural network (DNN) can be effectively overcome by “layer-by-layer initialization”. In Ref. [11], layer-by-layer initialization is achieved through unsupervised learning.

The limitation of shallow algorithms lies in their limited capacity to represent complex functions with limited samples and computing units, which to some extent limits their ability to generalize on complex classification problems. DL can approximate complicated functions by learning a deep nonlinear network structure, express a distributed representation of the input data, and demonstrate a powerful capacity to learn the essential characteristics of a data set from relatively few samples.

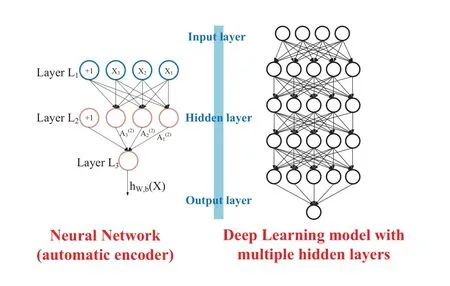

As shown in Figure 3, DL and traditional neural networks have similarities as well as many differences. They are similar in that DL adopts the same hierarchical structure as an NN: a multi-layer network consisting of an input layer, multiple hidden layers, and an output layer. Only nodes in adjacent layers are connected; there are no connections within a layer or across non-adjacent layers. Each layer can be regarded as a logistic regression model. This hierarchical structure is relatively close to the structure of the human brain. Traditional NNs are trained with BP, a gradient descent method, whereas DL as a whole uses a layer-wise training mechanism. The reason is that if BP is applied to a deep network with more than 7 layers, the residual (error signal) propagated back to the first layer becomes too small; this is the so-called gradient diffusion (vanishing gradient) problem.
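The gradient diffusion described above can be seen with a quick calculation. In a chain of sigmoid units, backpropagation multiplies the error by $w \cdot \sigma'(z)$ at every layer, and $\sigma'(z) \le 0.25$. The sketch below assumes, for illustration only, unit weights and zero pre-activations at every layer (the best case for $\sigma'$), so the factor reaching the first layer is $0.25^n$; the function names are hypothetical.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_prime(z: float) -> float:
    s = sigmoid(z)
    return s * (1.0 - s)  # maximum value 0.25, reached at z = 0


def first_layer_gradient_scale(depth: int, weight: float = 1.0, z: float = 0.0) -> float:
    """Factor by which the output error is scaled on its way back to layer 1.

    Assumes (hypothetically) the same weight and pre-activation at every layer.
    """
    scale = 1.0
    for _ in range(depth):
        scale *= weight * sigmoid_prime(z)
    return scale


for depth in (1, 3, 7, 10):
    print(depth, first_layer_gradient_scale(depth))
```

Even in this best case, a 7-layer chain scales the error by $0.25^7 \approx 6.1 \times 10^{-5}$, which is why the first layers barely learn under plain BP.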

Figure 3. Simple model of DL and traditional NN.

2.1.2 DL Training Process

If all layers are trained simultaneously, the time complexity is too high. If only one layer is trained at a time, the error propagates from layer to layer. This leads to the opposite problem from supervised learning: because a deep network has so many neurons and parameters, it underfits severely. In 2006, Hinton proposed an effective method for building a multi-layer NN from unsupervised data. The network is built one layer at a time, so that only a single-layer network is trained at each step; after all layers have been trained, Hinton uses the wake-sleep algorithm for tuning. The weights of all layers except the topmost one are made bidirectional, so that the topmost layer remains a single-layer NN while the other layers become a graphical model. Upward weights are used for “cognition” and downward weights for “generation”. All weights are then tuned with the wake-sleep algorithm. Unifying cognition and generation ensures that the generated top-layer representation can recover the bottom-layer nodes as accurately as possible.

DL training thus consists of two processes. The first is bottom-up unsupervised learning: unlabeled data are used to train the parameters of each layer in turn. This step can be regarded as an unsupervised training or feature learning process. The second is top-down supervised learning: labeled data are used for training, and errors are propagated from the top down to fine-tune the network.

2.2 Examples of DL

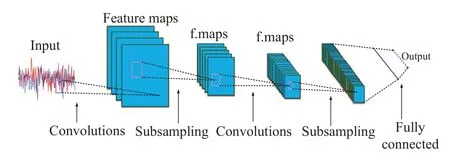

2.2.1 Convolutional Neural Network

Convolutional Neural Network (CNN) is a kind of ANN and one of the typical deep feedforward neural networks. CNNs have become a research hotspot in speech analysis and image recognition and are now widely used in communication. The weight-sharing structure of a CNN makes it more similar to a biological NN and reduces both the complexity of the network model and the number of weights. Traditional ML algorithms rely on separate, hand-designed methods to extract features, whereas a CNN learns its filters by itself, meaning that the network extracts features automatically. Figure 4 shows the different convolutional layers used for various feature extraction tasks.
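To see why weight sharing reduces the number of weights, the sketch below counts the parameters of a fully connected layer and of a convolutional layer that produce the same output volume. The sizes (a 28 × 28 single-channel input, eight 3 × 3 kernels, no padding) and the function names are hypothetical.

```python
def dense_params(in_h, in_w, in_c, out_h, out_w, out_c):
    """Weights + biases of a fully connected layer between two feature volumes."""
    n_in = in_h * in_w * in_c
    n_out = out_h * out_w * out_c
    return n_in * n_out + n_out


def conv_params(k_h, k_w, in_c, out_c):
    """Weights + biases of a convolutional layer: one k_h x k_w x in_c kernel per
    output map, shared across every spatial position, plus one bias per map."""
    return k_h * k_w * in_c * out_c + out_c


# 28x28x1 input -> 8 output maps of 26x26 ("valid" 3x3 convolution)
dense = dense_params(28, 28, 1, 26, 26, 8)
conv = conv_params(3, 3, 1, 8)
```

The convolutional layer needs 80 parameters against roughly 4.2 million for the dense layer, because each kernel is reused at every position of the input.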

Figure 4. A typical CNN architecture [4].

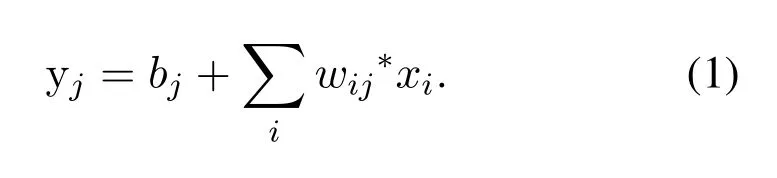

The input of the convolution stage is a three-dimensional array composed of $n_1$ two-dimensional feature maps of size $n_2 \times n_3$; each feature map is denoted $x_i$. The output $y$ of this stage is also a three-dimensional array, composed of $m_1$ feature maps of size $m_2 \times m_3$. In the convolution stage, the weight connecting input feature map $x_i$ to output feature map $y_j$ is denoted $w_{ij}$; it is a trainable convolution kernel [12] of size $K_2 \times K_3$. The output feature map can be expressed as follows:

$$y_j = b_j + \sum_{i} w_{ij} * x_i \qquad (1)$$

In this equation, $*$ is the two-dimensional discrete convolution operator, and $b_j$ is a trainable bias (offset) parameter.
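The convolution stage described above (each output map is a bias plus the sum of the input maps convolved with their kernels) can be sketched in plain Python. This is a minimal, unoptimized sketch assuming “valid” convolution with stride 1 and no padding, so $m_2 = n_2 - K_2 + 1$ and $m_3 = n_3 - K_3 + 1$, and nested lists for feature maps; the function names are hypothetical. It flips the kernel, as true discrete convolution requires; many DL libraries actually compute cross-correlation, which differs only by this flip.

```python
from typing import List

Matrix = List[List[float]]


def conv2d_valid(x: Matrix, k: Matrix) -> Matrix:
    """2-D discrete convolution of map x with kernel k, 'valid' region only."""
    kh, kw = len(k), len(k[0])
    oh, ow = len(x) - kh + 1, len(x[0]) - kw + 1
    out = [[0.0] * ow for _ in range(oh)]
    for r in range(oh):
        for c in range(ow):
            acc = 0.0
            for u in range(kh):
                for v in range(kw):
                    # Flipped kernel index: true convolution, not cross-correlation.
                    acc += x[r + u][c + v] * k[kh - 1 - u][kw - 1 - v]
            out[r][c] = acc
    return out


def conv_stage(xs: List[Matrix], w: List[List[Matrix]], b: List[float]) -> List[Matrix]:
    """y_j = b_j + sum_i w_ij * x_i for every output map j.

    w[i][j] is the K2 x K3 kernel connecting input map i to output map j.
    """
    outputs = []
    for j in range(len(b)):
        y = None
        for i, x in enumerate(xs):
            term = conv2d_valid(x, w[i][j])
            y = term if y is None else [[a + t for a, t in zip(ra, rt)]
                                        for ra, rt in zip(y, term)]
        outputs.append([[v + b[j] for v in row] for row in y])
    return outputs
```

For example, convolving a 3 × 3 map with a 2 × 2 kernel yields a 2 × 2 output map, and with two input maps each output map sums both contributions before the bias is added.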

In the nonlinear stage, the features obtained in the convolution stage are screened according to certain principles, usually by a nonlinear transformation that avoids the insufficient expressive power of linear models. The features extracted in the convolution stage are taken as input and mapped nonlinearly, $R = h(y)$. Traditional CNNs use saturating nonlinear functions such as sigmoid, tanh, or softsign [13]. In recent years, most CNNs have used the non-saturating function ReLU [14]. In gradient-descent training, ReLU converges faster than the traditional saturating functions, so the whole network also trains much faster than with traditional methods [14]. The four nonlinear functions are as follows:

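Sigmoid: $f(x) = \frac{1}{1 + e^{-x}} \qquad (2)$

Tanh: $f(x) = \tanh(x) = \frac{e^{x} - e^{-x}}{e^{x} + e^{-x}} \qquad (3)$

Softsign: $f(x) = \frac{x}{1 + |x|} \qquad (4)$

ReLU: $f(x) = \max(0, x) \qquad (5)$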
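The four nonlinear functions listed above, together with their derivatives, can be written directly. The derivatives make the saturation argument concrete: for large $|x|$ the sigmoid, tanh, and softsign derivatives shrink toward zero, while the ReLU derivative stays 1 for any positive input. The function names are illustrative, and the ReLU derivative at $x = 0$ is set to 0 by convention.

```python
import math


def sigmoid(x: float) -> float:
    # Numerically stable form: avoids overflow in exp for large |x|.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def softsign(x: float) -> float:
    return x / (1.0 + abs(x))


def relu(x: float) -> float:
    return max(0.0, x)


# tanh is available directly as math.tanh.

# Derivatives used by gradient descent.
def d_sigmoid(x: float) -> float:
    s = sigmoid(x)
    return s * (1.0 - s)


def d_tanh(x: float) -> float:
    return 1.0 - math.tanh(x) ** 2


def d_softsign(x: float) -> float:
    return 1.0 / (1.0 + abs(x)) ** 2


def d_relu(x: float) -> float:
    return 1.0 if x > 0 else 0.0  # value at x = 0 is a convention


for x in (0.5, 5.0, 20.0):
    print(x, d_sigmoid(x), d_tanh(x), d_softsign(x), d_relu(x))
```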

In the subsampling stage, each feature map is processed independently, usually with either average pooling or max pooling. Pooling reduces the resolution of the output feature map while preserving the features described by the high-resolution map well. Some CNNs eliminate the subsampling stage entirely and instead reduce the resolution by setting the stride of the convolution kernel window to more than 1 in the convolution stage [15].
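Max and average pooling over one feature map can be sketched as follows, assuming a square window and no padding; `pool2d` and `output_size` are hypothetical names. The same `output_size` formula also gives the reduced resolution of a convolution with stride greater than 1, the alternative mentioned above.

```python
def output_size(n: int, window: int, stride: int) -> int:
    """Output length along one axis for a window sliding with the given stride (no padding)."""
    return (n - window) // stride + 1


def pool2d(fmap, size=2, stride=2, mode="max"):
    """Pool a single feature map (a list of rows) with a size x size window."""
    if mode not in ("max", "average"):
        raise ValueError("mode must be 'max' or 'average'")
    rows = output_size(len(fmap), size, stride)
    cols = output_size(len(fmap[0]), size, stride)
    out = []
    for r in range(rows):
        row = []
        for c in range(cols):
            window = [fmap[r * stride + u][c * stride + v]
                      for u in range(size) for v in range(size)]
            row.append(max(window) if mode == "max" else sum(window) / len(window))
        out.append(row)
    return out


fmap = [[1, 2, 5, 6],
        [3, 4, 7, 8],
        [9, 10, 13, 14],
        [11, 12, 15, 16]]
```

With a 2 × 2 window and stride 2, the 4 × 4 map above is reduced to 2 × 2: max pooling keeps the largest value of each block, and average pooling keeps its mean.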

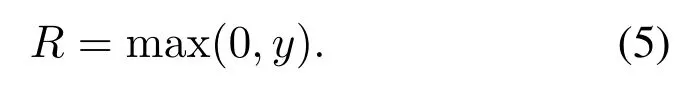

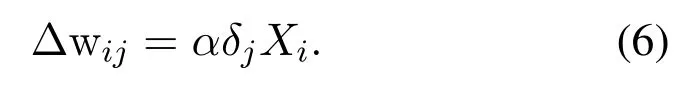

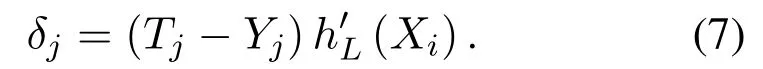

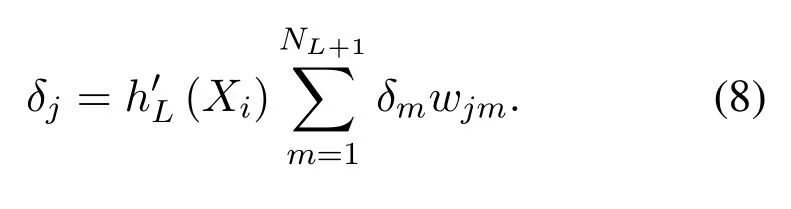

For any layer $L$ of the convolutional network, the update equation [16] for the weight $w_{ij}$ between the $i$-th input feature $X_i$ and the $j$-th output feature $Y_j$ is as follows:

When layer $L$ is the last layer of the convolutional network, $\delta_j$ is as follows:

In this equation, $T_j$ is the $j$-th expected label, $h'(x)$ is the derivative of the nonlinear mapping function, and $j = 1, 2, \ldots, N_L$. When layer $L$ in Eq. (6) is not the last layer and layer $L+1$ is the next layer, $\delta_j$ is as follows:

In this equation, $N_{L+1}$ is the number of output features of layer $L+1$, with $m = 1, 2, \ldots, N_{L+1}$; $W_{jm}$ is the weight between the $j$-th output of layer $L$ (which is the $j$-th input of layer $L+1$) and the $m$-th output of layer $L+1$.
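The structure of the delta rule described above (an output-layer $\delta_j$ built from the label error and $h'$, and a hidden-layer $\delta_j$ built from the next layer's deltas weighted by $W_{jm}$) can be sketched for a small fully connected network. This is a hedged analogue rather than the convolutional update of Ref. [16]: it assumes squared-error loss, sigmoid nonlinearities, no biases, and the update $w_{ij} \leftarrow w_{ij} - \eta\,\delta_j x_i$; sign conventions for $\delta_j$ vary between texts, and all names are hypothetical.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(W1, W2, x):
    """x -> hidden h -> output y; W1[i][j]: input i to hidden j, W2[j][m]: hidden j to output m."""
    h = [sigmoid(sum(W1[i][j] * x[i] for i in range(len(x)))) for j in range(len(W1[0]))]
    y = [sigmoid(sum(W2[j][m] * h[j] for j in range(len(h)))) for m in range(len(W2[0]))]
    return h, y


def loss(W1, W2, x, t):
    """Squared error E = 1/2 * sum_m (y_m - T_m)^2."""
    _, y = forward(W1, W2, x)
    return 0.5 * sum((ym - tm) ** 2 for ym, tm in zip(y, t))


def gradients(W1, W2, x, t):
    h, y = forward(W1, W2, x)
    # Last layer: delta_m = (y_m - T_m) * h'(net_m); for the sigmoid, h' = y (1 - y).
    d_out = [(y[m] - t[m]) * y[m] * (1.0 - y[m]) for m in range(len(y))]
    # Hidden layer: delta_j = h'(net_j) * sum_m delta_m * W_jm (deltas of the next layer).
    d_hid = [h[j] * (1.0 - h[j]) * sum(d_out[m] * W2[j][m] for m in range(len(y)))
             for j in range(len(h))]
    # dE/dw = delta of the receiving unit times the activation feeding that weight.
    g1 = [[d_hid[j] * x[i] for j in range(len(h))] for i in range(len(x))]
    g2 = [[d_out[m] * h[j] for m in range(len(y))] for j in range(len(h))]
    return g1, g2


def sgd_step(W1, W2, x, t, lr=0.1):
    """w <- w - lr * dE/dw for every weight."""
    g1, g2 = gradients(W1, W2, x, t)
    W1 = [[w - lr * g for w, g in zip(rw, rg)] for rw, rg in zip(W1, g1)]
    W2 = [[w - lr * g for w, g in zip(rw, rg)] for rw, rg in zip(W2, g2)]
    return W1, W2


rng = random.Random(1)
W1 = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(2)]  # 2 inputs -> 3 hidden
W2 = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(3)]  # 3 hidden -> 2 outputs
x, t = [0.5, -1.0], [1.0, 0.0]
```

A finite-difference check of one weight against `gradients` is a simple way to confirm that the deltas are propagated correctly.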

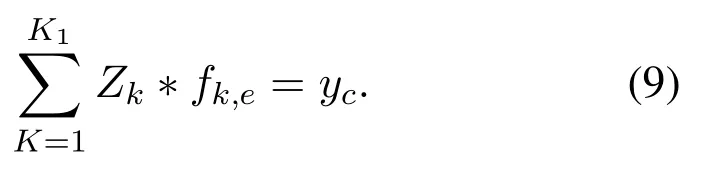

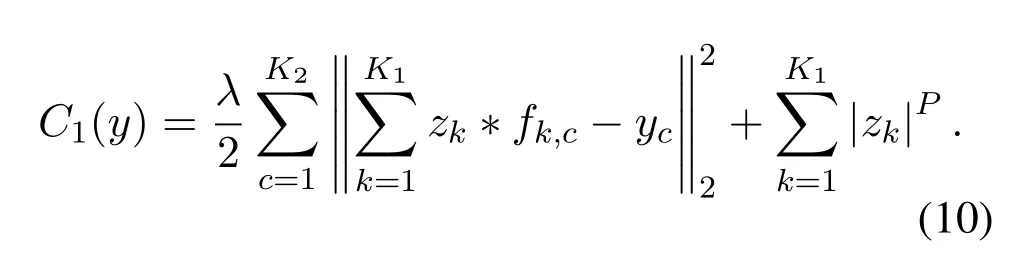

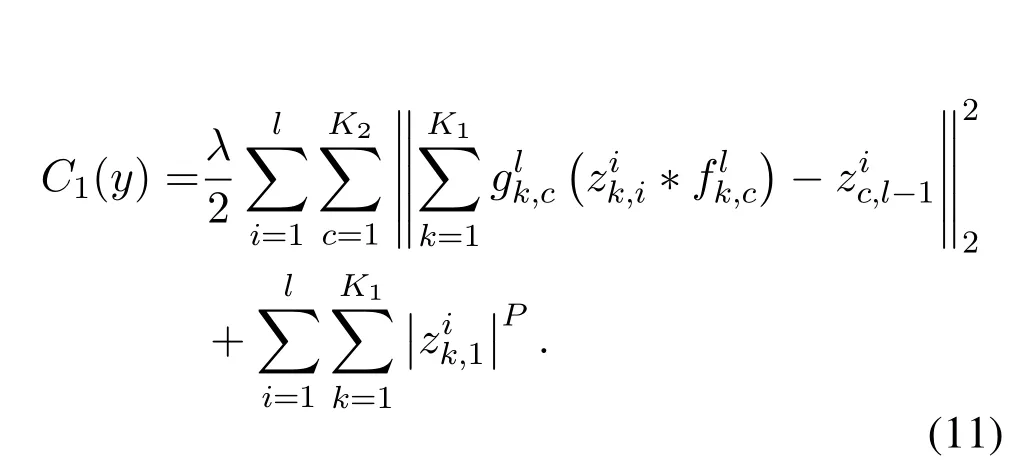

Next, we briefly introduce a typical deep feedback network: the deconvolution network. A deconvolution network is a regularization method that sparsely decomposes and reconstructs signals using learned priors. The input signal $y$ consists of $K_0$ feature channels $y_1, y_2, \ldots, y_{K_0}$, and any channel $y_c$ can be regarded as the sum of the convolutions of $K_1$ hidden-layer feature maps $z_k$ with the filter bank $f_{k,c}$:

$$y_c = \sum_{k=1}^{K_1} z_k * f_{k,c} \qquad (9)$$

Since Eq. (9) is underdetermined (the feature maps contain more unknowns than there are equations), obtaining a unique solution requires a regularization term on the feature maps $z_k$ that drives them toward sparsity. The cost function is therefore:

$$C_1(y) = \frac{\lambda}{2}\sum_{c=1}^{K_0}\Big\|\sum_{k=1}^{K_1} z_k * f_{k,c} - y_c\Big\|_2^2 + \sum_{k=1}^{K_1}\|z_k\|_p^p \qquad (10)$$

In this equation, the first term is the error between the input image and its reconstruction; the second term is the sparsity of the feature maps, measured by the $p$-norm, with $p$ generally set to 1; and $\lambda$ is the weight coefficient balancing reconstruction error against feature-map sparsity. Stacking multiple single-layer deconvolution networks yields the deconvolution network, in which the cost function of layer $l$ is the sum of the single-layer costs over all input signals $i$ of the current layer:

$$C_l(y) = \frac{\lambda}{2}\sum_{i}\sum_{c=1}^{K_{l-1}}\Big\|\sum_{k=1}^{K_l} g^{l}_{k,c}\,\big(z^{i}_{k,l} * f^{l}_{k,c}\big) - z^{i}_{c,l-1}\Big\|_2^2 + \sum_{i}\sum_{k=1}^{K_l}\big\|z^{i}_{k,l}\big\|_p^p \qquad (11)$$

In this equation, the first term is the error between the previous layer's feature maps (the reconstruction target) and their reconstruction from the current layer, where $z^{i}_{k,l}$ is a feature map of the current layer, $f^{l}_{k,c}$ is the filter bank of the current layer, $z^{i}_{c,l-1}$ is a feature map of the previous layer, and $g^{l}_{k,c}$ is a fixed binary matrix indicating the connections between input and output feature maps in the same layer. The first layer is generally assumed to be fully connected and the following layers sparsely connected. The second term is the sparsity of the feature maps, and $\lambda$ is the weight coefficient balancing reconstruction error against feature-map sparsity.
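To see how the single-layer cost trades reconstruction error against sparsity, the sketch below evaluates it for one-dimensional signals with $p = 1$. The 1-D setting, the “valid” convolution (so each feature map is longer than the signal by the filter length minus one), and the function names are simplifying assumptions; the sketch only evaluates the cost and does not solve for the sparse feature maps.

```python
def conv1d_valid(z, f):
    """1-D discrete convolution (flipped filter), 'valid' region: len(z) - len(f) + 1 outputs."""
    m = len(f)
    return [sum(z[n + u] * f[m - 1 - u] for u in range(m)) for n in range(len(z) - m + 1)]


def deconv_cost(y, z, f, lam, p=1):
    """Single-layer cost: lam/2 * reconstruction error + sum of |z|^p.

    y[c]    -- input channel c (K0 channels)
    z[k]    -- feature map k (K1 maps)
    f[k][c] -- filter linking feature map k to channel c
    """
    recon_err = 0.0
    for c, yc in enumerate(y):
        recon = [0.0] * len(yc)
        for k, zk in enumerate(z):
            recon = [r + v for r, v in zip(recon, conv1d_valid(zk, f[k][c]))]
        recon_err += sum((r - target) ** 2 for r, target in zip(recon, yc))
    sparsity = sum(abs(v) ** p for zk in z for v in zk)
    return 0.5 * lam * recon_err + sparsity
```

For the target $y = (1, 2, 3)$ with a two-tap filter of ones, the sparse code $z = (0, 1, 1, 2)$ reconstructs $y$ exactly, so only the sparsity term (4.0) remains, while the all-zero code pays only the reconstruction term.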

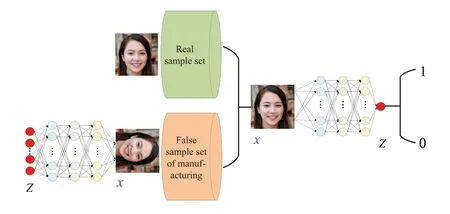

2.2.2 Generative Adversarial Nets

A Generative Adversarial Net (GAN) contains two NNs. One is the generator G, which takes random noise as input and tries to produce samples that are as close as possible to the target data. The other is the discriminator D, which receives both real data and the data produced by the generator and tries to distinguish between them, i.e., to maximize the gap between generated and real data. The original GAN theory does not require G and D to be NNs; they only need to fit the corresponding generating and discriminating functions. In practice, however, deep neural networks are generally used as G and D. A successful GAN application requires a good training method; otherwise, because NN models have so many degrees of freedom, the output may be unsatisfactory. The typical GAN model structure is shown in Figure 5.
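In the original GAN formulation, the adversarial game is written as the minimax problem $\min_G \max_D V(D,G)$ with $V(D,G) = \mathbb{E}_{x}[\log D(x)] + \mathbb{E}_{z}[\log(1 - D(G(z)))]$. The sketch below only evaluates the empirical $V$ and the two players' losses from discriminator outputs (probabilities in $(0, 1)$ that a sample is real); it is not a training loop, and the function names and example outputs are hypothetical.

```python
import math


def gan_value(d_real, d_fake):
    """Empirical V(D, G) = mean log D(x) + mean log(1 - D(G(z)))."""
    real_term = sum(math.log(d) for d in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - d) for d in d_fake) / len(d_fake)
    return real_term + fake_term


def discriminator_loss(d_real, d_fake):
    """D maximizes V, so the quantity it minimizes is -V."""
    return -gan_value(d_real, d_fake)


def generator_loss(d_fake):
    """G minimizes V; only the term that depends on G matters."""
    return sum(math.log(1.0 - d) for d in d_fake) / len(d_fake)


confused = gan_value([0.5, 0.5], [0.5, 0.5])    # D cannot tell real from fake
confident = gan_value([0.9, 0.8], [0.1, 0.2])   # D separates them well
```

When the generator matches the data distribution, the best discriminator outputs 0.5 everywhere and $V = -\log 4$, which is the `confused` case.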

Figure 5. Typical GAN model.

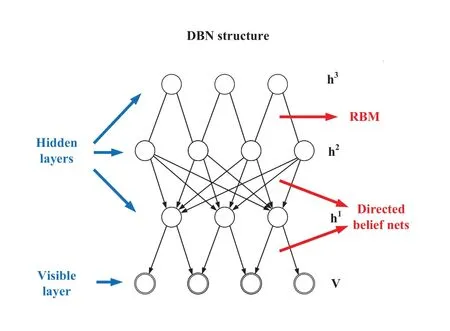

2.2.3 Deep Belief Networks

A Deep Belief Network (DBN) is composed of multiple layers of neurons, which are divided into visible neurons and hidden neurons. Visible neurons receive the input, and hidden neurons extract features; hidden neurons are therefore also called feature detectors. The connections between the top two layers are undirected and form an associative memory, while the lower layers are linked by directed connections between adjacent layers. The bottom layer represents the data vector, with each neuron representing one dimension of it. Restricted Boltzmann Machines (RBMs) are the building blocks of a DBN, and a DBN is trained layer by layer: in each layer, the hidden layer is inferred from the data vector and is then treated as the data vector of the next layer. In fact, each RBM can be used on its own as a clusterer. The DBN network structure is shown in Figure 6.
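The layer-by-layer scheme, in which the hidden layer inferred from one RBM becomes the data vector of the next, can be sketched as an upward inference pass through a stack of RBMs. The layer widths and the untrained random parameters are hypothetical, and the per-layer RBM training that would normally happen before moving up the stack is omitted.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def hidden_probs(v, W, c):
    """p(h_j = 1 | v) for one RBM with weights W[i][j] and hidden biases c[j]."""
    return [sigmoid(c[j] + sum(v[i] * W[i][j] for i in range(len(v))))
            for j in range(len(c))]


def dbn_upward_pass(v, rbms):
    """Infer each hidden layer from the layer below; its output becomes the
    'data vector' of the next RBM in the stack."""
    layers = [list(v)]
    for W, c in rbms:
        v = hidden_probs(v, W, c)
        layers.append(v)
    return layers


def random_rbm(n_in, n_out, rng):
    """Hypothetical untrained RBM parameters: small random weights, zero biases."""
    W = [[rng.gauss(0.0, 0.1) for _ in range(n_out)] for _ in range(n_in)]
    return W, [0.0] * n_out


rng = random.Random(0)
sizes = [6, 4, 2]  # hypothetical widths: data layer, then two hidden layers
rbms = [random_rbm(n_in, n_out, rng) for n_in, n_out in zip(sizes, sizes[1:])]
layers = dbn_upward_pass([1, 0, 1, 1, 0, 0], rbms)
```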

Figure 6. DBN network structure.

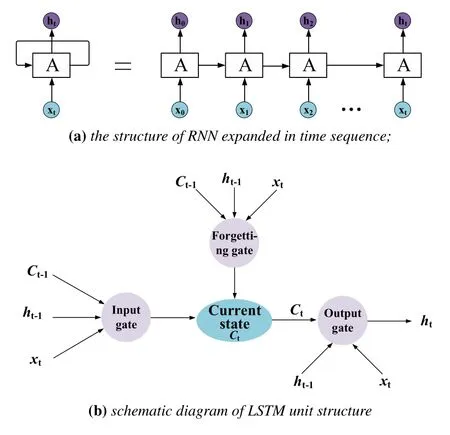

2.2.4 Recurrent Neural Networks

A Recurrent Neural Network (RNN) was originally designed to model the relationship between the current output of a sequence and previous information. An RNN remembers previous information and uses it to influence the output of subsequent nodes. An RNN can be regarded as the same NN copied over and over, once per time step. In practice, a truly infinite unrolling is impossible, so for optimization the network is unrolled over a finite number of time steps.
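The “same NN copied at every step” view can be sketched with a scalar RNN, $h_t = \tanh(w_x x_t + w_h h_{t-1} + b)$, unrolled over a finite input sequence. The scalar state, the weights, and the function name are hypothetical simplifications.

```python
import math


def rnn_forward(xs, w_x, w_h, b, h0=0.0):
    """Scalar RNN: h_t = tanh(w_x * x_t + w_h * h_{t-1} + b).

    The same weights are reused at every time step (one cell copied along the
    sequence), and unrolling stops at the end of the finite input.
    """
    h = h0
    states = []
    for x in xs:
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states


# An impulse at t = 0 keeps influencing later outputs through the recurrent weight w_h.
states = rnn_forward([1.0, 0.0, 0.0, 0.0], w_x=1.0, w_h=0.5, b=0.0)
```

The states decay after the impulse but stay non-zero, showing how earlier inputs influence later outputs.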

Long Short-Term Memory (LSTM) was proposed to handle long-term dependencies, in which the gap between useful information and the point where it is needed varies in length. LSTM is a special network structure with three “gates” that let information selectively affect the state at each time step of the NN. A gate combines a sigmoid NN layer with an element-wise multiplication. It is called a gate because the fully connected layer, which uses sigmoid as its activation function, outputs a value between 0 and 1 describing how much of the current input can pass through. The RNN network structure is shown in Figure 7.
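A single step of an LSTM cell with the three gates described above can be sketched with scalar states. This follows the common formulation with forget, input, and output gates plus a tanh candidate; the parameter layout (a dictionary of `(w_x, w_h, b)` triples) and the function name are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, params):
    """One time step of a scalar LSTM cell.

    params maps each gate name to a hypothetical (w_x, w_h, b) triple.
    """
    def pre_activation(name):
        w_x, w_h, b = params[name]
        return w_x * x + w_h * h_prev + b

    f = sigmoid(pre_activation("forget"))   # fraction of the old cell state to keep
    i = sigmoid(pre_activation("input"))    # fraction of the candidate to write
    o = sigmoid(pre_activation("output"))   # fraction of the cell state to expose
    g = math.tanh(pre_activation("candidate"))
    c = f * c_prev + i * g                  # gating = element-wise (here scalar) product
    h = o * math.tanh(c)
    return h, c


# Forget gate held open and input gate held shut: the cell state is carried over almost unchanged.
keep = {"forget": (0.0, 0.0, 10.0), "input": (0.0, 0.0, -10.0),
        "output": (0.0, 0.0, 0.0), "candidate": (1.0, 0.0, 0.0)}
h, c = lstm_step(5.0, 0.0, 2.0, keep)
```

Reversing the two biases (forget gate shut, input gate open) makes the cell overwrite its state with the new candidate instead.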

Figure 7. RNN network structure.

2.2.5 Restricted Boltzmann Machine

An RBM is a stochastic NN model with a two-layer structure, symmetric connections, and no self-feedback: the two layers are fully connected to each other, and there are no connections within a layer. The first layer, called the visible layer, consists of visible neurons and receives the training data. The other layer, called the hidden layer, consists of hidden neurons and acts as a feature detector. Unlike a DNN, an RBM does not distinguish between forward and backward directions: the state of the visible layer can act on the hidden layer, and the state of the hidden layer can likewise act on the visible layer. Training an RBM amounts to finding the probability distribution that best generates the training samples.
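Finding a distribution that generates the training samples is commonly approximated with contrastive divergence, a standard RBM training method that the text above does not name explicitly. The sketch below trains a small binary RBM with one step of contrastive divergence (CD-1); the sizes, learning rate, and data are hypothetical. The same weight matrix is used in both directions, reflecting that an RBM does not distinguish forward from reverse.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


class RBM:
    """Binary RBM: visible v, hidden h, symmetric weights W[i][j], no intra-layer links."""

    def __init__(self, n_visible, n_hidden, seed=0):
        self.rng = random.Random(seed)
        self.W = [[self.rng.gauss(0.0, 0.1) for _ in range(n_hidden)] for _ in range(n_visible)]
        self.b = [0.0] * n_visible  # visible biases
        self.c = [0.0] * n_hidden   # hidden biases

    def p_h(self, v):
        """Visible -> hidden: p(h_j = 1 | v)."""
        return [sigmoid(self.c[j] + sum(v[i] * self.W[i][j] for i in range(len(v))))
                for j in range(len(self.c))]

    def p_v(self, h):
        """Hidden -> visible with the same weights: p(v_i = 1 | h)."""
        return [sigmoid(self.b[i] + sum(h[j] * self.W[i][j] for j in range(len(h))))
                for i in range(len(self.b))]

    def sample(self, probs):
        return [1.0 if self.rng.random() < p else 0.0 for p in probs]

    def cd1_update(self, v0, lr=0.1):
        """One CD-1 step: data statistics minus one-step reconstruction statistics."""
        ph0 = self.p_h(v0)
        h0 = self.sample(ph0)
        v1 = self.p_v(h0)  # reconstruction (probabilities)
        ph1 = self.p_h(v1)
        for i in range(len(v0)):
            for j in range(len(ph0)):
                self.W[i][j] += lr * (v0[i] * ph0[j] - v1[i] * ph1[j])
        self.b = [b + lr * (x - r) for b, x, r in zip(self.b, v0, v1)]
        self.c = [c + lr * (p0 - p1) for c, p0, p1 in zip(self.c, ph0, ph1)]

    def reconstruction_error(self, v):
        r = self.p_v(self.p_h(v))
        return sum((a - b) ** 2 for a, b in zip(v, r))


rbm = RBM(n_visible=6, n_hidden=3, seed=0)
data = [[1.0, 1.0, 1.0, 0.0, 0.0, 0.0], [0.0, 0.0, 0.0, 1.0, 1.0, 1.0]]
before = sum(rbm.reconstruction_error(v) for v in data)
for _ in range(500):
    for v in data:
        rbm.cd1_update(v)
after = sum(rbm.reconstruction_error(v) for v in data)
```

Reconstruction error is only a rough progress indicator for CD training, but on this toy data it drops as the RBM learns the two patterns.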

2.2.6 Boltzmann Machine

A Boltzmann Machine (BM) is a stochastic NN named after the Boltzmann distribution, which governs the probabilities of its states. A BM is a network of symmetrically connected, neuron-like units that randomly decide whether to turn on or off, introducing statistical probability into the state changes of neurons. The equilibrium state of the network obeys the Boltzmann distribution, and the network operates according to a simulated annealing algorithm. Built on the discrete Hopfield network, the BM combines the advantages of multi-layer feedforward NNs and discrete Hopfield networks in network structure, learning algorithm, and dynamic operation mechanism. It can learn and can find the optimal solution through simulated annealing, but it takes longer to train than a BP network.
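The stochastic switching and simulated annealing described above can be sketched for a tiny BM with energy $E(s) = -\sum_{i<j} W_{ij} s_i s_j - \sum_i \theta_i s_i$. Each unit turns on with probability $1/(1 + e^{-\Delta E_i / T})$, where $\Delta E_i$ is its energy gap, and the temperature $T$ is lowered gradually. The network, cooling schedule, and function names are hypothetical, and BM learning itself is not shown.

```python
import itertools
import math
import random


def energy(s, W, theta):
    """E(s) = -sum_{i<j} W[i][j] s_i s_j - sum_i theta[i] s_i (W symmetric, zero diagonal)."""
    n = len(s)
    pair = sum(W[i][j] * s[i] * s[j] for i in range(n) for j in range(i + 1, n))
    return -pair - sum(t * x for t, x in zip(theta, s))


def p_on(gap, T):
    """Probability that a unit turns on, given its energy gap and temperature T (stable form)."""
    x = gap / T
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def anneal(W, theta, t_start=5.0, t_end=0.05, steps=200, sweeps=10, seed=0):
    """Stochastic unit updates while the temperature decreases geometrically."""
    rng = random.Random(seed)
    n = len(theta)
    s = [rng.randint(0, 1) for _ in range(n)]
    ratio = (t_end / t_start) ** (1.0 / (steps - 1))
    T = t_start
    for _ in range(steps):
        for _ in range(sweeps):
            for i in range(n):
                # Energy gap E(s_i = 0) - E(s_i = 1)
                gap = sum(W[i][j] * s[j] for j in range(n) if j != i) + theta[i]
                s[i] = 1 if rng.random() < p_on(gap, T) else 0
        T *= ratio
    return s


def brute_force_minimum(W, theta):
    """Exhaustive search over all 2^n states (feasible only for tiny networks)."""
    return min(itertools.product((0, 1), repeat=len(theta)),
               key=lambda s: energy(s, W, theta))


W = [[0.0, 2.0], [2.0, 0.0]]
theta = [-0.5, -0.5]
state = anneal(W, theta)
best = brute_force_minimum(W, theta)
```

In this network the all-off state is a local minimum; the high early temperatures let the search escape it and settle in the global minimum where both units are on.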

2.2.7 Deep Autoencoder

The general idea of a Deep Autoencoder (DA) is to use the hidden layers of an NN as an encoder and a decoder. The input data is encoded and then decoded by the hidden layers, and the output layer should reproduce the input as closely as possible. One advantage of this approach is that the hidden layer captures features of the input data that preserve its information. Compared with PCA, a DA can be understood as adding several hidden layers. There are two main ways to implement a DA: with a fully connected NN or with a CNN.
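The link between an autoencoder and PCA can be seen in the simplest case: a linear autoencoder with one hidden unit and tied encoder/decoder weights, trained by stochastic gradient descent on squared reconstruction error, converges to the principal direction of the data. The data, learning rate, and function names below are hypothetical; a DA would instead stack several nonlinear hidden layers.

```python
def train_linear_autoencoder(data, w, lr=0.01, epochs=2000):
    """Tied-weight linear autoencoder with one hidden unit:
    encoder z = w . x, decoder x_hat = z * w; SGD on ||x - x_hat||^2."""
    w = list(w)
    for _ in range(epochs):
        for x in data:
            z = sum(wi * xi for wi, xi in zip(w, x))          # code (hidden unit)
            r = [xi - z * wi for xi, wi in zip(x, w)]          # reconstruction residual
            wr = sum(wi * ri for wi, ri in zip(w, r))
            # Gradient of ||x - w (w . x)||^2 with respect to w
            grad = [-2.0 * (z * ri + wr * xi) for ri, xi in zip(r, x)]
            w = [wi - lr * gi for wi, gi in zip(w, grad)]
    return w


def reconstruction_error(data, w):
    total = 0.0
    for x in data:
        z = sum(wi * xi for wi, xi in zip(w, x))
        total += sum((xi - z * wi) ** 2 for xi, wi in zip(x, w))
    return total / len(data)


# Hypothetical data on the line spanned by u = (0.6, 0.8), its principal direction
u = (0.6, 0.8)
data = [(t * u[0], t * u[1]) for t in (-2.0, -1.0, 1.0, 2.0)]
w = train_linear_autoencoder(data, w=(1.0, 0.0))
```

Starting from $(1, 0)$, the weight vector rotates onto $u$ with unit length, so the one-unit code reconstructs the data almost perfectly, just as projecting onto the first principal component would.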

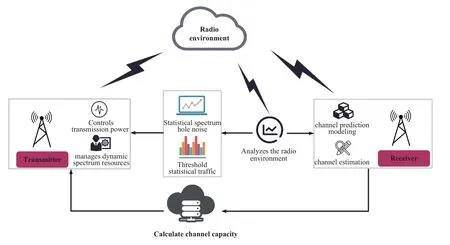

III. APPLICATION OF DL IN COGNITIVE RADIO

CR can perceive its environment and learn and adapt to environmental changes. It also offers highly reliable communication quality and reconfigurable system function modules. Through learning and understanding, it adaptively adjusts its internal communication mechanisms and changes specific wireless operating parameters (such as power, carrier modulation, and coding) in real time to adapt to the external wireless environment, so that it can independently find and use idle spectrum. It can help users choose the most suitable service for wireless transmission and can even delay or initiate transmission according to existing or upcoming wireless resources. The general CR architecture is shown in Figure 8; radio environment analysis is usually the part enhanced by DL. In this section, we introduce the application of DL in CR, focusing on spectrum sensing, MR, and FHR as key technologies in CR, and explain how DL can help solve problems in CR.

Figure 8. A general CR architecture.

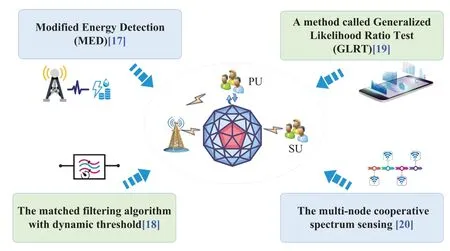

3.1 Spectrum Sensing

CR can detect idle spectrum in real time and implement intelligent spectrum management strategies, which greatly improves communication efficiency. Spectrum sensing is one of the key technologies in CR systems: it senses the spectrum holes in a frequency band so that available bands can be allocated accurately and promptly, improving spectrum utilization. Researchers at many well-known institutions in China and abroad have therefore studied spectrum sensing extensively, with fruitful results. As shown in Figure 9, several references have proposed detection methods for spectrum sensing and studied their performance [17–20].

Figure 9. Some detection methods on spectrum sensing technology.

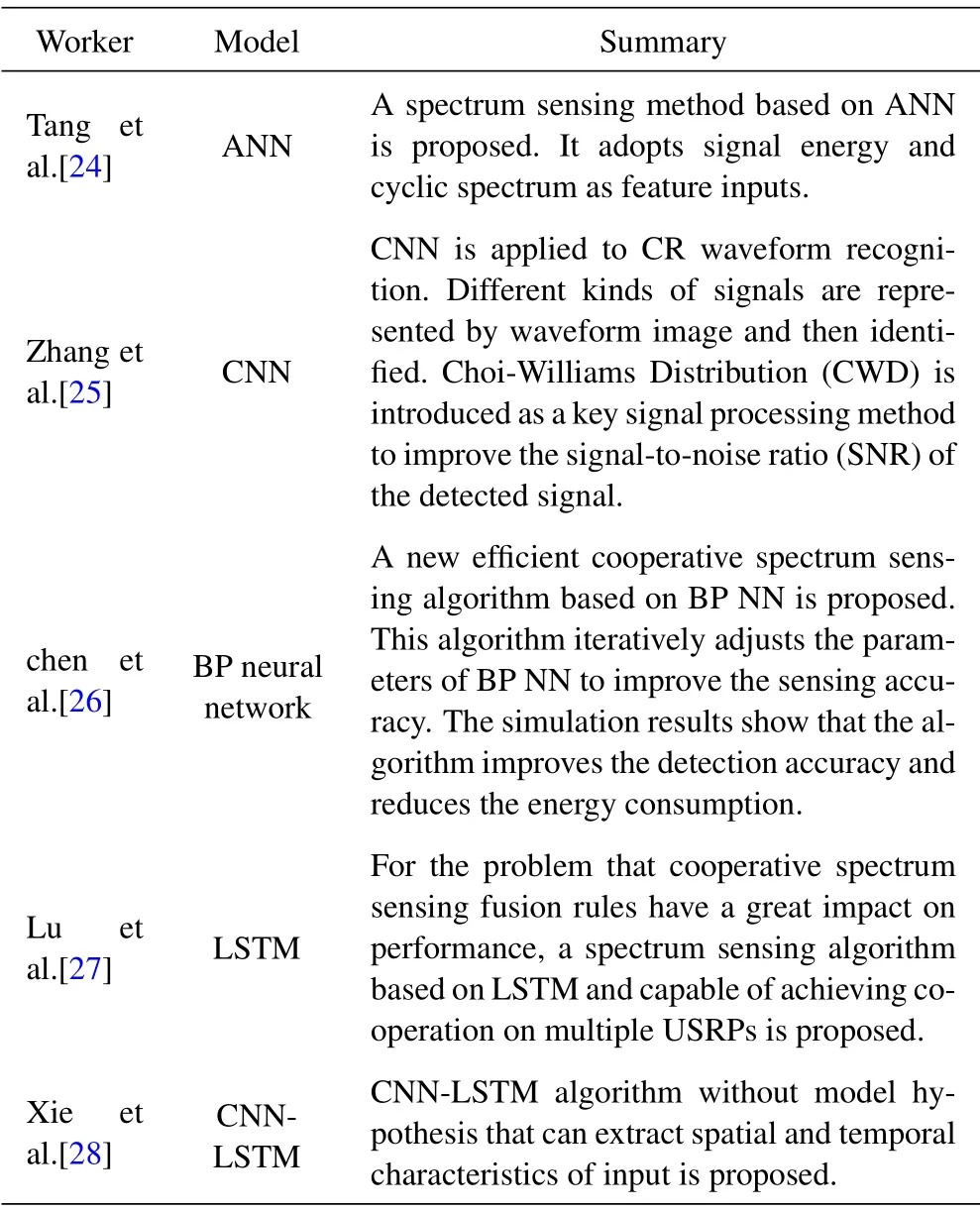

Research on spectrum sensing has found that, with the advent of big data and growing computing power, traditional spectrum sensing algorithms cannot effectively exploit the information in the channel: their detection performance is poor at low SNR, some have high training complexity, and they cannot meet the intelligence requirements of CR systems. DL has therefore been applied to spectrum sensing in CR. Table 1 summarizes some DL applications in spectrum sensing.

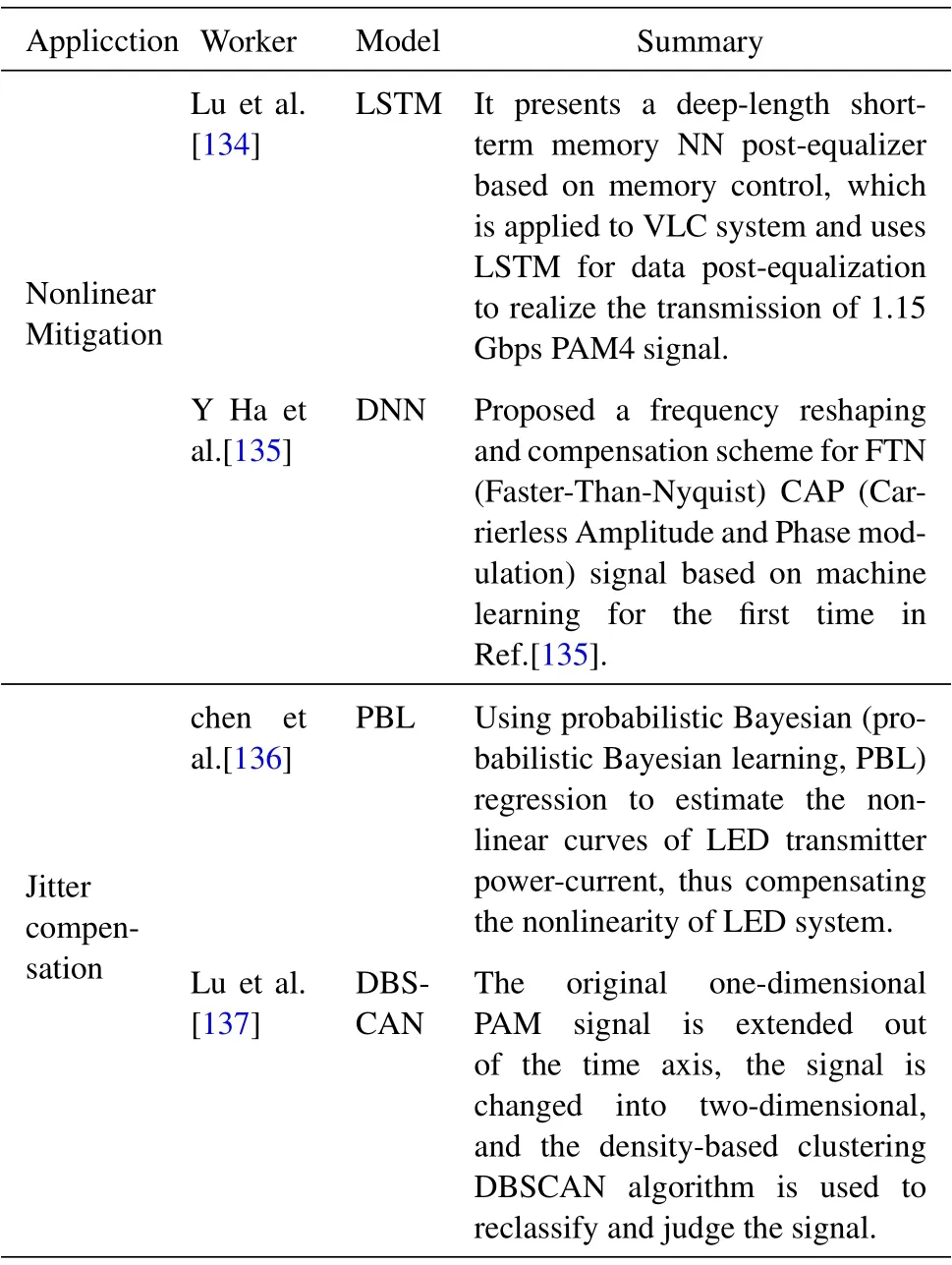

Table 1. Summary of recent DL applications in spectrum sensing.

In Ref. [21], the NN is trained with the Levenberg-Marquardt algorithm for single-channel and multichannel energy detectors. The algorithm solves a set of objective functions with a minimal number of iterations and improves the efficiency of the cognitive radio network (CRN). In addition, the system proposed in Ref. [21] consists of intelligent units, trained as an ANN, that fuse all the data for use by secondary users. Detection probability and false-alarm probability are the basic indexes used to evaluate the overall performance of the designed system.
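As background for the two performance indexes, the sketch below implements a plain (non-DL) energy detector and estimates its detection probability $P_d$ and false-alarm probability $P_{fa}$ by Monte Carlo simulation. The real-valued BPSK primary-user signal, the AWGN model, the block length, and the threshold are illustrative assumptions, not the setup of Ref. [21]; the function names are hypothetical.

```python
import random


def energy_statistic(samples):
    """Test statistic of an energy detector: T = (1/N) * sum r_k^2."""
    return sum(r * r for r in samples) / len(samples)


def simulate_block(n, noise_std, amp, pu_active, rng):
    """Hypothetical real-valued block: BPSK symbols of amplitude amp (when the primary
    user is active) plus white Gaussian noise."""
    return [(amp * rng.choice((-1.0, 1.0)) if pu_active else 0.0) + rng.gauss(0.0, noise_std)
            for _ in range(n)]


def estimate_pd_pfa(threshold, n=64, noise_std=1.0, amp=0.5, trials=1000, seed=0):
    """Monte Carlo estimates of P_d (PU present, T > threshold)
    and P_fa (PU absent, T > threshold)."""
    rng = random.Random(seed)
    false_alarms = sum(energy_statistic(simulate_block(n, noise_std, amp, False, rng)) > threshold
                       for _ in range(trials))
    detections = sum(energy_statistic(simulate_block(n, noise_std, amp, True, rng)) > threshold
                     for _ in range(trials))
    return detections / trials, false_alarms / trials


# SNR = amp^2 / noise_std^2 = 0.25 (about -6 dB), i.e. a low-SNR case
pd, pfa = estimate_pd_pfa(threshold=1.2)
```

Raising the threshold lowers the false-alarm probability at the cost of detection probability. At low SNR the distributions of $T$ with and without the primary user overlap heavily, which is the weakness of energy detection that the CNN approach of Ref. [22] targets.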

In Ref. [22], to overcome the difficulty that traditional energy detection has in detecting primary-user signals at low SNR, a CNN is used to improve low-SNR performance, using National Instruments USRP-2900 (Universal Software Radio Peripheral) devices. The CNN-based spectrum sensing method is shown to achieve higher accuracy than energy detection at low SNR; CNNs usually extract two-dimensional image features better than other ML algorithms.

Existing spectrum sensing systems still rely on manual operation, and their data mining lacks depth and breadth, even though they face large amounts of historical data, multiple heterogeneous and unlabeled data types, and multidimensional integration platforms. To address this, Ref. [23] proposes a spectrum sensing system based on a composite NN architecture. The system has three layers, namely a spectrum sensing layer, a data processing layer, and a situation analysis layer, which together support bottom-layer data processing and high-dimensional spectrum analysis. Ref. [23] also designs an intelligent audio service type analysis module and proposes an MC BLSTM that applies DL to audio data to achieve automatic semantic recognition and pressure recognition.

Applying DL to spectrum sensing still faces many challenges. DL offers many models, and different models suit different scenarios. For each model, whether simple signal processing can be combined with it remains to be verified. A key factor in the success of DL is a large amount of training data. Classifying sensed signals also requires feature extraction, so which features of the sensed signals should be extracted will be a focus of research. Finally, evaluating DL-based spectrum sensing only with DL model metrics, or only with traditional spectrum sensing criteria, is one-sided, so more targeted evaluation methods are needed.

3.2 Spectrum Sharing

Spectrum sharing is a key technology in CR, and Dynamic Spectrum Sharing (DSS) is central to improving spectrum utilization efficiency. DSS allocates spectrum resources dynamically and flexibly among different communication technologies operating in the same frequency band. This is important for achieving wide 5G coverage using low frequency bands. Blockchain is an emerging technology being vigorously developed around the world. It relies on cryptographic principles and consensus mechanisms and is key to ensuring fairness, credibility, co-construction and sharing in 6G networks. It can also address problems such as the lack of security and trust mechanisms in dynamic spectrum sharing systems.

Consider a dynamic spectrum network with $n_s$ spectrum providers $S=\{S_i \mid i=1,\dots,n_s\}$ and $n_d$ spectrum demanders $D=\{D_i \mid i=1,\dots,n_d\}$. The $i$-th spectrum provider has total spectrum resources $W_i$ $(i=1,\dots,n_s)$. Its own spectrum demand $d_i^{s}(t)$ follows a Poisson distribution with parameter $\lambda_i^{s}$ $(i=1,\dots,n_s)$. The spectrum demand $d_i^{d}(t)$ of the $i$-th spectrum demander follows a Poisson distribution with parameter $\lambda_i^{d}$ $(i=1,\dots,n_d)$. All spectrum demands are assumed to be independent. To guarantee its own service quality, spectrum provider $S_i$ $(i=1,\dots,n_s)$ usually serves its own demand first. Therefore, the spectrum resource $R_i(t)$ $(i=1,\dots,n_s)$ that it can actually share is

$$R_i(t)=\max\{W_i-d_i^{s}(t),\,0\}.$$
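The provider-side model can be explored with a short simulation. This Python sketch assumes that a provider shares exactly what is left of its total resource after serving its own Poisson-distributed demand, that is max(W_i - d_i(t), 0), and that all demands are independent. The resource totals, Poisson rates and network size are hypothetical values chosen only for illustration.

```python
import math
import random


def poisson(rng, lam):
    """Draw a Poisson variate with Knuth's multiplication method (small lam)."""
    limit = math.exp(-lam)
    k, p = 0, 1.0
    while True:
        p *= rng.random()
        if p <= limit:
            return k
        k += 1


def shareable(total, own_demand):
    """Assumed rule: a provider serves its own demand first and shares the rest."""
    return max(total - own_demand, 0)


def simulate(totals, lam_s, lam_d, slots=2000, seed=11):
    """Average shareable supply, total demand and served demand per time slot."""
    rng = random.Random(seed)
    supply_sum = demand_sum = served_sum = 0
    for _ in range(slots):
        supply = sum(shareable(w, poisson(rng, lam)) for w, lam in zip(totals, lam_s))
        demand = sum(poisson(rng, lam) for lam in lam_d)
        supply_sum += supply
        demand_sum += demand
        served_sum += min(supply, demand)
    return supply_sum / slots, demand_sum / slots, served_sum / slots


# Hypothetical network: 3 providers and 4 demanders.
supply, demand, served = simulate(totals=[10, 8, 12], lam_s=[4, 5, 6], lam_d=[3, 2, 4, 5])
print(f"mean shareable = {supply:.2f}, mean demand = {demand:.2f}, mean served = {served:.2f}")
```

A DSS scheme, learned or otherwise, would then decide how the shareable supply in each slot is divided among the demanders.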

Based on current research, DL can achieve very strong AI capabilities after learning from training samples, but it cannot understand “logical relationships”. This is where blockchain technology has an advantage. Because a blockchain is a management technology for process data, all data recorded on a chain are arranged in a time-based logical sequence. If the data on a blockchain are used as training samples in this logical order, DL can learn from them and thus “learn” more abstract concepts. So although blockchain and DL appear quite different, blockchain has unique features that allow it to supply training data for DL. In addition, the smart contract mechanism of a blockchain can be connected to DL, either by triggering smart contracts with DL outputs or by using smart contract results as DL inputs. As shown in Figure 10, introducing smart contracts, EC and AI gives the blockchain an advantage in resource sharing.

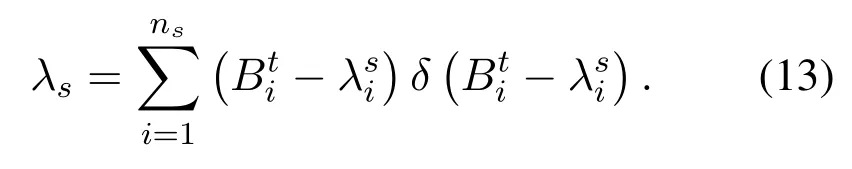

With the popularization of 5G and the continuous growth of bandwidth demand, traditional algorithms can hardly meet current needs for spectrum resource sharing. Researchers have therefore integrated blockchain with spectrum resource management and applied DL to spectrum sharing. Table 2 summarizes representative recent work applying blockchain and DL to spectrum sharing.

Table 2. Summary of spectrum sharing technology.

Attackers can obtain private information recorded on a blockchain through data mining algorithms. To address this privacy leakage problem in consortium chains, Ref.[34] proposes a solution that does not restrict transaction functions. Spectrum Sensing Data Falsification (SSDF) attacks can seriously degrade CR performance, so resource sharing must be accompanied by real-time handling of security issues. This requires a robust CR architecture and protocol that guarantee the integrity of data shared among cognitive users. Therefore, Ref.[35] proposes a spectrum sharing protocol based on an active blockchain, which uses the blockchain to provide security against SSDF attacks.

To protect the confidentiality of spectrum sharing transmissions against eavesdropping, Ref.[30] proposes an opportunistic interference framework assisted by source cooperation. Ref.[36] studies eavesdropping attacks on a priority-based 5G spectrum sharing system and analyzes its security performance through the secrecy outage probability. The results show that the lower-priority transmission can help the higher-priority system achieve better security performance. Ref.[31] proposes a blockchain-based framework for secure and efficient spectrum sharing between coexisting human-to-human and machine-to-machine communications in 5G heterogeneous networks.
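The secrecy outage probability can be illustrated on the simplest wiretap setting. The Python sketch below assumes a single legitimate link and a single eavesdropper link with independent Rayleigh fading, so both instantaneous SNRs are exponentially distributed. It compares a Monte Carlo estimate with the known closed form for this case. It does not model the priority-based sharing system of Ref.[36], and the average SNRs and target secrecy rate are illustrative.

```python
import math
import random


def sop_monte_carlo(snr_b, snr_e, rate, trials=100_000, seed=7):
    """Fraction of fading draws whose secrecy capacity is below `rate` (bit/s/Hz)."""
    rng = random.Random(seed)
    outages = 0
    for _ in range(trials):
        g_b = rng.expovariate(1 / snr_b)  # Rayleigh fading -> exponential SNR
        g_e = rng.expovariate(1 / snr_e)
        c_s = max(math.log2(1 + g_b) - math.log2(1 + g_e), 0.0)
        outages += c_s < rate
    return outages / trials


def sop_closed_form(snr_b, snr_e, rate):
    """P_so = 1 - snr_b / (snr_b + 2^R * snr_e) * exp(-(2^R - 1) / snr_b), R > 0."""
    k = 2 ** rate
    return 1 - snr_b / (snr_b + k * snr_e) * math.exp(-(k - 1) / snr_b)


# Main link at 10 dB, eavesdropper at 0 dB, target secrecy rate 1 bit/s/Hz.
print(sop_monte_carlo(10, 1, 1.0), sop_closed_form(10, 1, 1.0))
```

Raising the eavesdropper's average SNR increases the outage probability, which is the quantity that schemes such as cooperative interference try to push down.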

In the context of scarce spectrum resources, AI is needed to allow new “secondary” systems to access unused resources of the primary system in places where coordination is impossible, without modifying the primary system.ML is a promising way to identify the primary system and adjust channel access appropriately.

Ref.[32] studies the ability of feedforward DL networks, LSTMs and RNNs to detect primary-user communication patterns. Spectrum monitoring is key to efficient spectrum sharing. Ref.[37] proposes using neural networks to identify various radio technologies and to classify signals associated with interference. It uses CNNs for signal classification, training six well-known CNN models on ten kinds of signals.
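Detecting a primary user's communication pattern can be framed as predicting the next slot's occupancy from a window of past slots. The Python sketch below uses a single-layer logistic model trained by stochastic gradient descent as a minimal stand-in for the feedforward networks compared in Ref.[32]. The periodic busy/idle pattern, window length and learning rate are hypothetical.

```python
import math


def occupancy(length=300, busy=3, idle=2):
    """Hypothetical periodic primary-user activity: 1 = busy slot, 0 = idle slot."""
    cycle = [1] * busy + [0] * idle
    return [cycle[t % len(cycle)] for t in range(length)]


def windows(seq, w):
    """(past w slots, next slot) training pairs."""
    return [(seq[t - w:t], seq[t]) for t in range(w, len(seq))]


def sigmoid(z):
    z = max(min(z, 30.0), -30.0)  # clamp to avoid overflow in math.exp
    return 1 / (1 + math.exp(-z))


def train(pairs, w, lr=0.5, epochs=50):
    """Stochastic gradient descent on the logistic (cross-entropy) loss."""
    weights, bias = [0.0] * w, 0.0
    for _ in range(epochs):
        for x, y in pairs:
            p = sigmoid(bias + sum(wi * xi for wi, xi in zip(weights, x)))
            g = p - y  # derivative of the log-loss with respect to the logit
            weights = [wi - lr * g * xi for wi, xi in zip(weights, x)]
            bias -= lr * g
    return weights, bias


def predict(model, x):
    weights, bias = model
    return int(bias + sum(wi * xi for wi, xi in zip(weights, x)) > 0)


pairs = windows(occupancy(), w=5)
model = train(pairs[:200], w=5)
test = pairs[200:]
accuracy = sum(predict(model, x) == y for x, y in test) / len(test)
print(f"next-slot prediction accuracy: {accuracy:.2f}")
```

A deterministic pattern like this one is easy; LSTMs and RNNs become attractive when the primary user's activity has longer memory or random dwell times.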

Ref.[33] proposes a technique for DSS that combines 5G-enabled bidirectional cognitive DL nodes with long short-term memory (LSTM) networks. It adopts cyclic-prefix orthogonal frequency division multiplexing (CP-OFDM) for joint spectrum allocation and management. Ref.[38] discusses the potential, advantages and challenges of applying blockchain and AI to DSS in 6G and beyond. Using an example of DSS in a DRS architecture, its simulation results show that Deep Reinforcement Learning (DRL) is effective at maximizing users' profit margins. It also discusses solutions that reduce blockchain overhead and facilitate access to AI training data.
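The role of the cyclic prefix in CP-OFDM can be shown in a few lines. The Python sketch below uses a naive DFT, QPSK symbols on 8 subcarriers, a 2-sample prefix and a hypothetical 3-tap multipath channel. It illustrates the standard mechanism only, not the spectrum allocation scheme of Ref.[33]. When the prefix is at least as long as the channel memory, linear convolution becomes circular over the retained samples, so each subcarrier can be equalized with a single complex division.

```python
import cmath


def dft(x, inverse=False):
    """Naive O(n^2) DFT; the inverse includes the 1/n scaling."""
    n = len(x)
    sign = 1 if inverse else -1
    out = [sum(x[m] * cmath.exp(sign * 2j * cmath.pi * k * m / n) for m in range(n))
           for k in range(n)]
    return [v / n for v in out] if inverse else out


def ofdm_tx(symbols, cp_len):
    """IDFT to the time domain, then prepend the last cp_len samples."""
    time = dft(symbols, inverse=True)
    return time[len(time) - cp_len:] + time


def multipath(tx, taps):
    """Linear convolution with a short channel, truncated to the frame length."""
    return [sum(taps[l] * tx[n - l] for l in range(len(taps)) if n >= l)
            for n in range(len(tx))]


def ofdm_rx(rx, cp_len, taps, n_sub):
    """Drop the prefix, DFT, and apply a one-tap equalizer per subcarrier."""
    freq = dft(rx[cp_len:cp_len + n_sub])
    h = dft(taps + [0] * (n_sub - len(taps)))  # channel frequency response
    return [y / hk for y, hk in zip(freq, h)]


qpsk = [1 + 1j, 1 - 1j, -1 + 1j, -1 - 1j, 1 + 1j, -1 - 1j, 1 - 1j, -1 + 1j]
taps = [1.0, 0.5, 0.2]  # hypothetical 3-tap channel (memory of 2 samples)
for cp in (2, 0):
    est = ofdm_rx(multipath(ofdm_tx(qpsk, cp), taps), cp, taps, len(qpsk))
    err = max(abs(a - b) for a, b in zip(est, qpsk))
    print(f"cp_len={cp}: max symbol error = {err:.2e}")
```

With `cp_len=0` the same one-tap equalizer no longer recovers the symbols, because the channel's memory spills across the block boundary.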

To sum up, despite the rapid growth of broadband services and increasingly demanding user quality-of-experience requirements, current radio spectrum resources are far from efficiently utilized. Real radio networks commonly suffer from inefficient resource management and a shortage of spectrum resources. These limitations restrict the development and application of radio communication, and applying DL to spectrum sharing has become a promising way to overcome them.

Multiple-input multiple-output (MIMO) is regarded as one of the most promising technologies in 5G wireless communication systems and has bright prospects for future dynamic spectrum access[39]. Combining beamforming-based CR with DL can fully exploit DL's ability to handle multidimensional data structures. DL network models come in many types, and for spectrum sensing an urgent open question is how to build a network with a reasonable structure and a simple hierarchy. This requires further study of how DL and spectrum sensing can be combined, and Liu et al.[40] have made some efforts in this direction. In CR, users who violate the rules or are outright malicious may modify their air interface to emulate primary users. RF fingerprinting aims to identify a transmitter by characterizing device-specific features present in its analog signals. The emergence of adversarial machine learning means that future spectrum sensing research must also address robustness against such attacks.

3.3 Modulation Recognition

MR is one of the key CR technologies in communications, and it has several practical applications. In cooperative communication scenarios, MR is usually used in adaptive modulation systems, where the traditional modulation module is replaced by an adaptive one. Depending on the channel state, the adaptive modulation module dynamically selects a modulation mode from a pool of candidate modulation types to meet transmission requirements. In non-cooperative systems, it is important to detect friendly signals and demodulate them securely. Because the receiver usually does not know the modulation mode of the received signal, MR is used to determine it, which is a key step before demodulation.

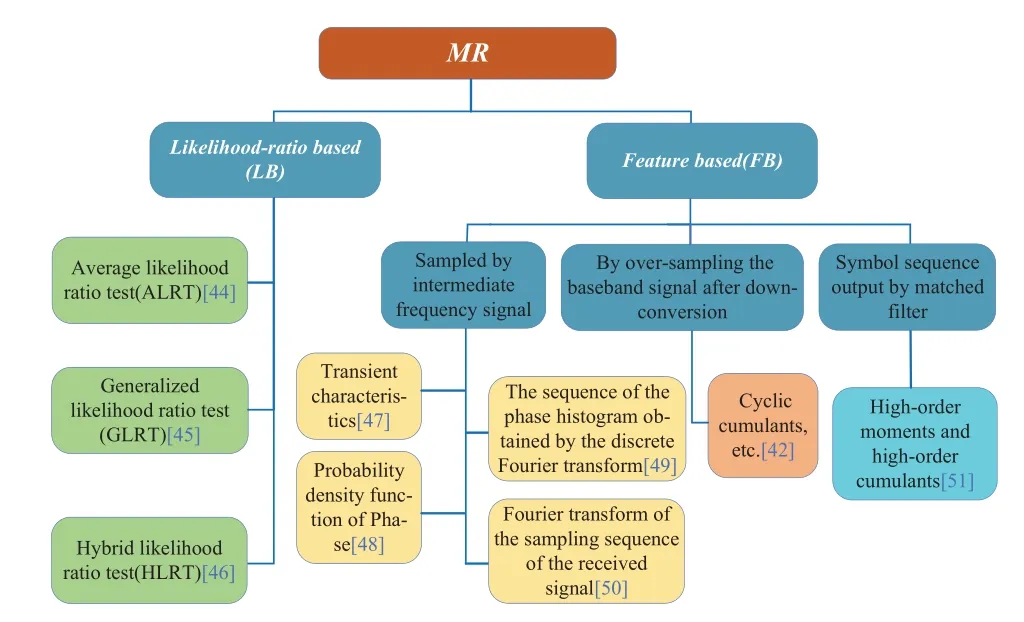

With the development of network function virtualization and software-defined networking, information technology is gradually moving from dedicated systems to general-purpose ones. In a software-defined radio system, MR allows the digital signal processing module at the receiving antenna to configure its demodulation dynamically according to the recognized modulation of the received signal. This improves the flexibility of the module in meeting design requirements[41, 42]. In multimedia transmission, the network must adjust the transmission rate dynamically according to the service in order to meet QoS requirements[43], and the rate can be adjusted by changing the modulation mode. The receiver then uses MR to follow the modulation changes of the adaptive modulation system, providing lower-layer support for upper-layer applications. As shown in Figure 11, MR methods can be divided into likelihood-based (LB) and feature-based (FB) MR[42,44–51].

Figure 11. Research on Classification of MR.

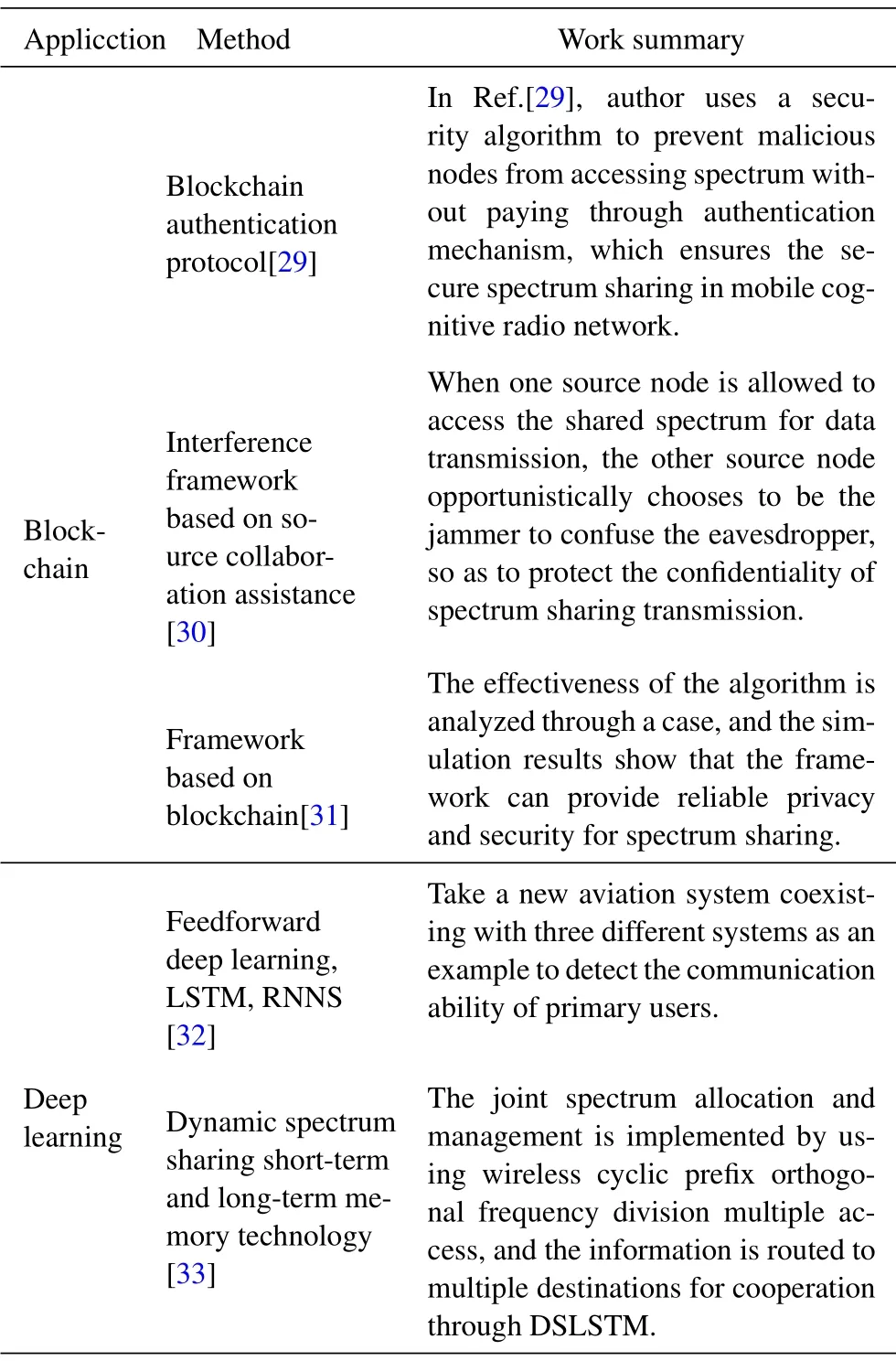

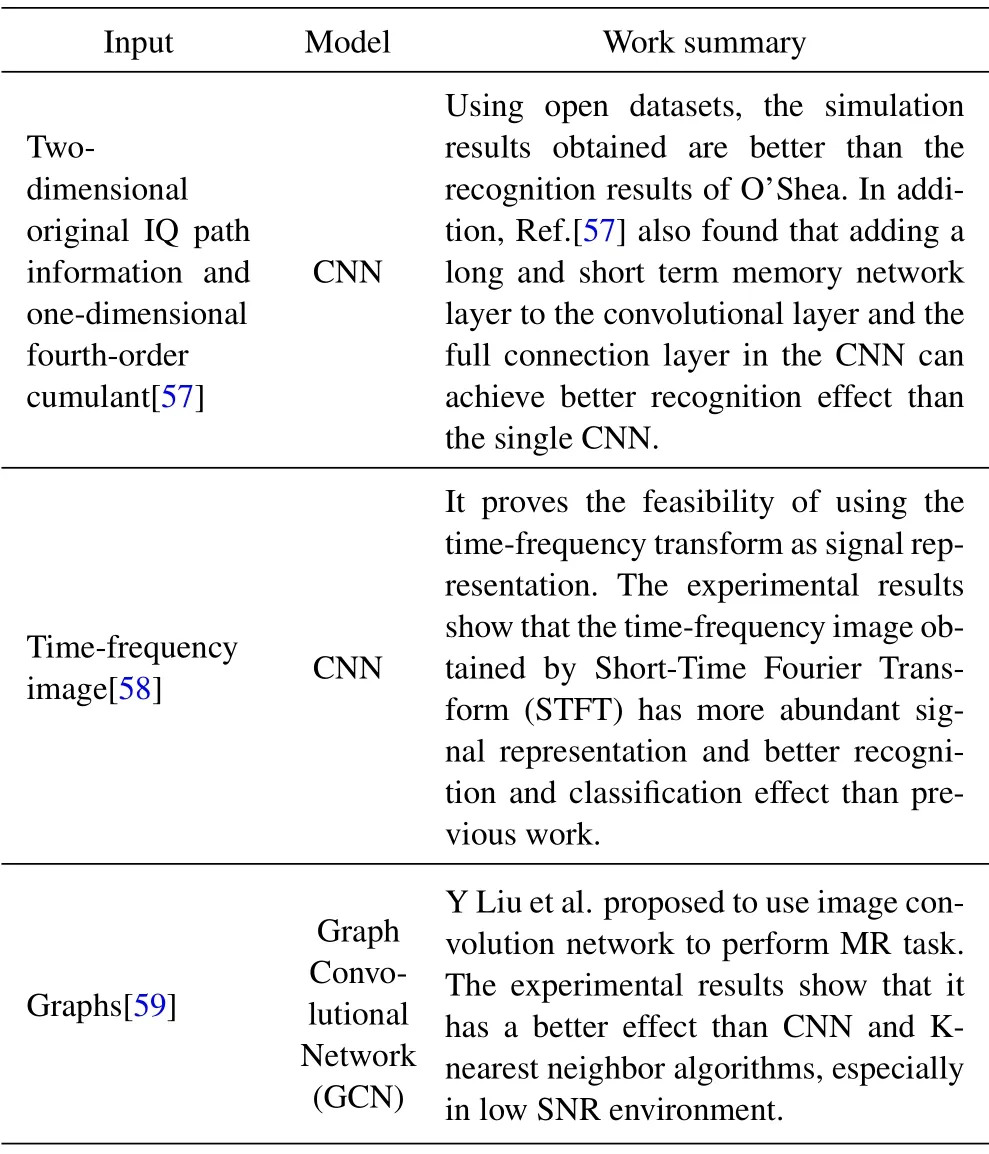

In traditional feature-based MR, ML is usually used as the classifier, taking signal features as input and producing the modulation mode as output[52]. With the rapid development of DL in recent years, many DL-based MR methods have been proposed. These methods usually adopt more complex NNs that perform feature extraction and classification simultaneously, and they show properties and performance different from those of traditional methods. Table 3 summarizes some DL applications in MR.

Table 3. Summary of recent DL applications in MR.
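The traditional feature-based pipeline described above (hand-crafted feature in, modulation label out) can be sketched with the normalized fourth-order cumulant $C_{42}$. This is a classic MR feature whose theoretical values for noise-free, equiprobable symbols are -2 for BPSK, -1 for QPSK and -0.68 for 16QAM. The Python sketch below pairs it with a nearest-reference-value rule in place of a trained ML classifier. The sample count, 20 dB SNR and random seed are assumptions.

```python
import cmath
import math
import random

CONSTELLATIONS = {
    "BPSK": [1, -1],
    "QPSK": [cmath.exp(1j * math.pi * (2 * k + 1) / 4) for k in range(4)],
    "16QAM": [complex(a, b) for a in (-3, -1, 1, 3) for b in (-3, -1, 1, 3)],
}
REFERENCE_C42 = {"BPSK": -2.0, "QPSK": -1.0, "16QAM": -0.68}


def c42(samples):
    """Normalized fourth-order cumulant C42 of zero-mean complex samples."""
    n = len(samples)
    m20 = sum(s * s for s in samples) / n
    m21 = sum(abs(s) ** 2 for s in samples) / n
    m42 = sum(abs(s) ** 4 for s in samples) / n
    return (m42 - abs(m20) ** 2 - 2 * m21 ** 2) / m21 ** 2


def received(mod, n=4000, snr_db=20.0, seed=3):
    """Random symbols of one modulation plus complex Gaussian noise."""
    rng = random.Random(seed)
    points = CONSTELLATIONS[mod]
    p_sig = sum(abs(p) ** 2 for p in points) / len(points)
    sd = math.sqrt(p_sig / 10 ** (snr_db / 10) / 2)
    return [rng.choice(points) + complex(rng.gauss(0, sd), rng.gauss(0, sd))
            for _ in range(n)]


def classify(samples):
    """Pick the modulation whose reference C42 is closest to the estimate."""
    value = c42(samples)
    return min(REFERENCE_C42, key=lambda m: abs(REFERENCE_C42[m] - value))


for mod in CONSTELLATIONS:
    x = received(mod)
    print(mod, round(c42(x), 3), classify(x))
```

Noise pulls the estimated cumulants toward zero, which is one reason hand-crafted features degrade at low SNR and why DL methods that learn features directly from raw samples are attractive.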

O’Shea et al.[53] outlined the application of DL to radio signal processing and used the GNU Radio platform to generate a public MR dataset containing 11 modulation types at 20 SNR levels from -20 dB to 18 dB. The dataset provides a reliable benchmark for MR researchers and a common yardstick against which techniques can be quickly compared. Subsequently, O’Shea et al.[54] fed the raw two-channel IQ samples of the dataset directly into a CNN without any manual feature extraction, and successfully recognized and classified 3 analog and 8 digital modulation types.

In Ref.[55], the effects of convolutional kernel size, network depth and the number of neurons per layer on MR performance are studied. A CNN, a residual network, an Inception network and a convolutional long short-term deep neural network (CLDNN) are used for recognition and classification. The experimental results show that the proposed network structures can accomplish the recognition and classification task and that MR performance is not limited by network depth. In the same year, Karra et al.[56] designed a hierarchical DNN that recognizes not only the modulation type but also the data type and the modulation order of the signal, achieving good simulation results.

Shi et al.[60] studied scenarios encountered in real wireless communication environments rather than only the usual idealized assumptions, such as the presence of interfering or spoofing signals and unknown modulation types. Using an open dataset, they designed and simulated these scenarios and obtained good classification results.

These studies show that DL attracts attention because it can learn the implicit high-dimensional mapping from input to output directly from large amounts of sample data. It does not require manual feature extraction and has strong representational capacity. However, in current research, the features extracted by the convolutional layers of DNNs lack clear physical meaning, network design and improvement still rely on experience, and no unified standard has emerged. DL-based MR therefore merits further research.

It should be noted that DL has proved vulnerable to adversarial machine learning techniques, and DL-based radio signal MR is particularly susceptible to adversarial attacks. Sadeghi et al.[61] carried out white-box and black-box attacks on a CNN-based MR network. The results show that these attacks can greatly degrade classification performance with extremely small input perturbations. Compared with conventional jamming (in which the attacker only transmits random noise), such adversarial attacks require less transmission power and therefore deserve close attention in future research.
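Why tiny perturbations suffice can be seen on a toy linear classifier. The Python sketch below applies the fast gradient sign method (FGSM), a standard white-box attack, to a hypothetical 256-dimensional linear model and compares it with random-sign noise of the same per-sample amplitude. It is not the CNN-based attack of Sadeghi et al.[61]; the dimension, margin and budget `eps` are assumptions. Because the FGSM step is aligned with the model weights, its effect on the logit grows with the L1 norm of the weights, whereas random noise largely averages out.

```python
import random


def score(w, x):
    """Logit of a linear classifier: positive -> class A, negative -> class B."""
    return sum(wi * xi for wi, xi in zip(w, x))


def sign(v):
    return (v > 0) - (v < 0)


def fgsm_demo(d=256, eps=0.05, margin=5.0, seed=0):
    rng = random.Random(seed)
    w = [rng.gauss(0, 1) for _ in range(d)]
    norm2 = sum(wi * wi for wi in w)
    x = [margin * wi / norm2 for wi in w]  # clean input with logit == margin
    # FGSM: step of size eps along the sign of the gradient that lowers the
    # class-A logit; for a linear model that gradient is simply -w.
    x_adv = [xi - eps * sign(wi) for xi, wi in zip(x, w)]
    # Baseline: random +/-eps noise with the same L-infinity budget.
    x_rand = [xi + eps * rng.choice((-1, 1)) for xi in x]
    return score(w, x), score(w, x_adv), score(w, x_rand)


clean, adversarial, noisy = fgsm_demo()
print(f"clean {clean:.2f}, FGSM {adversarial:.2f}, random noise {noisy:.2f}")
```

The same budget that barely moves the logit when spent on random noise flips the decision when spent along the gradient, which mirrors the power advantage of adversarial attacks over conventional jamming.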

3.4 Frequency Hopping Recognition

Frequency hopping communication offers strong resistance to fading and jamming, high confidentiality, low probability of interception and strong networking capability, and it is widely used in military and civilian communications[62]. However, these advantages also create problems for non-cooperative reception. For example, in radio monitoring and in military communication reconnaissance and countermeasures, the receiver lacks prior information such as the hopping pattern, hopping period and modulation mode. How to detect and receive frequency hopping signals effectively, extract their features, and then recognize and sort them therefore remains a difficult and active research topic.

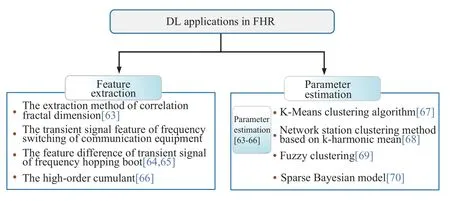

At present, research on frequency hopping classification and recognition falls into two main categories, as shown in Figure 12: sorting based on feature extraction[63–66] and sorting based on parameter estimation[67–70]. The latter uses a classifier to sort frequency hopping signals once parameters such as the hopping period, hopping times and power have been estimated.
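The parameter-estimation route can be illustrated by estimating the hop period and hop frequencies of a clean synthetic frequency hopping signal. The estimate uses the dominant DFT bin of consecutive short windows, in effect a coarse spectrogram. The Python sketch below assumes noise-free complex tones that sit exactly on DFT bins, hop boundaries aligned with the analysis windows, and a hypothetical 8-hop pattern. Real receivers face noise, unknown timing and spectral leakage.

```python
import cmath
import statistics

N_WIN = 32                              # analysis window length (samples)
HOP_LEN = 64                            # true dwell time per hop (samples)
PATTERN = [3, 11, 6, 14, 1, 9, 5, 12]   # hypothetical hop frequencies (DFT bins)


def fh_signal(pattern, hop_len, n_win):
    """Concatenate complex tones; tone k completes k cycles per n_win samples."""
    return [cmath.exp(2j * cmath.pi * k * n / n_win)
            for i, k in enumerate(pattern)
            for n in range(i * hop_len, (i + 1) * hop_len)]


def dominant_bin(frame):
    """Index of the largest-magnitude DFT bin of one window."""
    n = len(frame)
    mags = [abs(sum(frame[m] * cmath.exp(-2j * cmath.pi * k * m / n) for m in range(n)))
            for k in range(n)]
    return max(range(n), key=mags.__getitem__)


def estimate_hops(x, n_win):
    """Estimate the hop period (samples) and hop sequence from bin changes."""
    bins = [dominant_bin(x[i:i + n_win]) for i in range(0, len(x) - n_win + 1, n_win)]
    changes = [i for i in range(1, len(bins)) if bins[i] != bins[i - 1]]
    gaps = [b - a for a, b in zip(changes, changes[1:])]
    hop_period = statistics.median(gaps) * n_win
    hops = [bins[0]] + [bins[i] for i in changes]
    return hop_period, hops


period, hops = estimate_hops(fh_signal(PATTERN, HOP_LEN, N_WIN), N_WIN)
print(period, hops)  # -> 64.0 [3, 11, 6, 14, 1, 9, 5, 12]
```

Once parameters such as these are estimated for every received emitter, a classifier can group hops that share the same period and timing into separate networks.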

Figure 12. DL applications in FHR.

To recognize superimposed transmission modes in reconfigurable wireless terminals based on software-defined radio, Ref.[71] proposes a pattern recognition method that uses time-frequency distributions as a nonlinear signal processing technique. The work focuses on distinguishing the two modes (FH-CDMA and DS-CDMA) associated with two standards (Bluetooth and IEEE 802.11b) in an indoor environment. Two features are studied in Ref.[71]: the standard deviation of the instantaneous frequency and the maximum duration of the signal. Multiple hypothesis tests are carried out on the classified signals, and four classes are studied in particular. The selected classifiers are a feedforward backpropagation neural network (FFBPNN) and an SVM. The experimental results show that the method performs well, and a comparison of two distributions and two classifiers indicates that the Choi-Williams (CW) distribution combined with the SVM gives the best classification performance.
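The first feature in Ref.[71], the standard deviation of the instantaneous frequency, separates hopping from non-hopping signals because the instantaneous frequency of a hopping signal jumps between carriers. The Python sketch below estimates instantaneous frequency from phase differences of consecutive complex samples rather than from the time-frequency distributions used in Ref.[71]. The sampling rate, tone frequencies and dwell length are hypothetical, and the signals are noise-free.

```python
import cmath
import math
import statistics

FS = 2000.0  # hypothetical sampling rate (Hz)


def tones(freqs_hz, samples_each, fs=FS):
    """Phase-continuous complex signal that dwells on each frequency in turn."""
    out, phase = [], 0.0
    for f in freqs_hz:
        for _ in range(samples_each):
            out.append(cmath.exp(1j * phase))
            phase += 2 * math.pi * f / fs
    return out


def instantaneous_freq(x, fs=FS):
    """Phase-difference estimate of instantaneous frequency in Hz (|f| < fs/2)."""
    return [cmath.phase(x[n + 1] * x[n].conjugate()) * fs / (2 * math.pi)
            for n in range(len(x) - 1)]


def if_std(x, fs=FS):
    """Population standard deviation of the instantaneous frequency (Hz)."""
    return statistics.pstdev(instantaneous_freq(x, fs))


fixed = tones([250.0] * 4, 100)                      # single carrier, no hopping
hopping = tones([-300.0, 200.0, -100.0, 400.0], 100)
print(f"IF std: fixed {if_std(fixed):.3f} Hz, hopping {if_std(hopping):.1f} Hz")
```

In practice, noise adds spread to both cases, so the decision threshold on this feature would be set from training data, for example by the FFBPNN or SVM classifiers mentioned above.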

For the recognition of frequency hopping modulation, Ref.[72] proposes a method based on texture features of the time-frequency energy spectrum. After parameter optimization, an SVM is used to train on, classify and recognize the preprocessed feature sets. The experimental results show that the multidimensional feature vectors extracted by this method are stable, sufficient and separable, especially at low SNR, and that the recognition accuracy of frequency hopping modulation at various SNRs exceeds that of previous work. In Ref.[73], a CNN-based frequency hopping MR system is proposed to address the difficulty of feature extraction and the limited representational power of hand-designed features. First, the frequency hopping signal is transformed into a two-dimensional time-frequency diagram, and the background noise is filtered with a Wiener filter. This turns the modulation recognition problem into an image recognition problem. Then, the per-hop time-frequency images, extracted by connected-domain detection and bilinear interpolation, are used to train an 11-layer CNN. The experimental results show that this network accomplishes the recognition and classification task: the method in Ref.[73] identifies 8 kinds of frequency hopping modulation signals well, with an average recognition rate higher than that of previous work.
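Bilinear interpolation, used in Ref.[73] to bring each per-hop time-frequency image to a common size, can be written in a few lines. The Python sketch below resizes a 2-D list on a corner-aligned grid, which is one common convention among several. The patch and target sizes are hypothetical, and this is not the authors' implementation.

```python
def bilinear_resize(img, out_h, out_w):
    """Resize a 2-D list with bilinear interpolation (corner-aligned grid)."""
    in_h, in_w = len(img), len(img[0])
    out = []
    for i in range(out_h):
        # Map the output row to a fractional input row.
        y = i * (in_h - 1) / (out_h - 1) if out_h > 1 else 0.0
        y0 = int(y)
        y1 = min(y0 + 1, in_h - 1)
        dy = y - y0
        row = []
        for j in range(out_w):
            # Map the output column to a fractional input column.
            x = j * (in_w - 1) / (out_w - 1) if out_w > 1 else 0.0
            x0 = int(x)
            x1 = min(x0 + 1, in_w - 1)
            dx = x - x0
            # Interpolate along x on the two neighbouring rows, then along y.
            top = img[y0][x0] * (1 - dx) + img[y0][x1] * dx
            bottom = img[y1][x0] * (1 - dx) + img[y1][x1] * dx
            row.append(top * (1 - dy) + bottom * dy)
        out.append(row)
    return out


patch = [[0, 1], [2, 3]]
for row in bilinear_resize(patch, 3, 3):
    print(row)
```

In a pipeline like the one described, every extracted hop image would be resized to the same fixed input size (for example 32 x 32, a hypothetical choice) before being fed to the CNN.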

Research on applying DL to CR began within the last decade, and most results have appeared in the last three years. From the perspective of CR, a neural network can be regarded as a decision-maker that adapts to complicated channel environments. From the perspective of DL, the ideas behind most classic CR algorithms survive in the data preprocessing stage of the neural network. A popular feature of DL is that it lets users apply complex models in a relatively simple way and still obtain good results. There are many types of DL models, and the CNN alone has many variants, such as LeNet, AlexNet, ResNet and VGG, each of which has performed well on its own tasks. However, theoretical derivation is still insufficient, and there is no solid theoretical basis for choosing a suitable network structure. The combination of DL and CR has gained breadth, but it still needs to achieve depth.

IV.APPLICATION OF DL IN EC

EC is a computing paradigm that performs computation at the edge of the network and is a new computing mode for the era of the Internet of Everything[74]. AI technology, represented by DL, gives every EC node the ability to compute and make decisions. The continuing integration of EC and AI promotes the development of edge intelligence. AI uses EC infrastructure to achieve more efficient ML models, while AI technology can further unlock the value of the massive data generated at the network edge. This allows more complex intelligent applications to be processed at the edge and meets the needs of agile connectivity, real-time services, data optimization, application intelligence, and security and privacy protection.

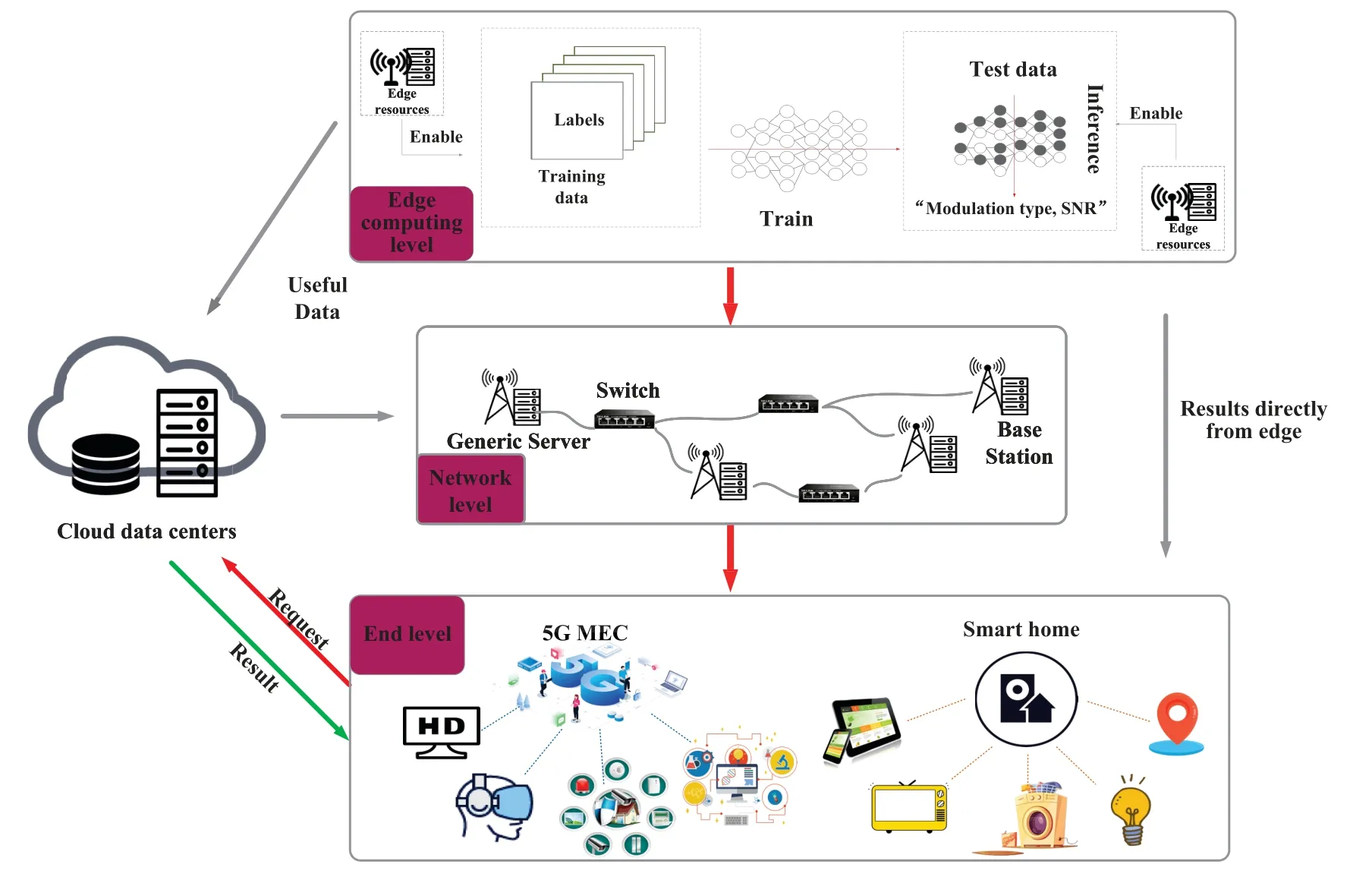

Mobile edge computing (MEC) in hierarchical intelligent communication is shown in Figure 13. Devices in EC generally play one of three roles: terminal device, edge server or cloud server. In the industrial Internet of Things and similar scenarios, terminal devices must operate in complex electromagnetic environments and at extreme temperatures and humidity, which makes it very difficult to extract valid data from heavily interfered measurements[75]. DL suits this kind of scenario and is a very effective data processing tool for IoT applications. Traditional ML methods require effective features to be extracted in advance and then learn from those features, so their accuracy depends heavily on the quality of feature extraction. DL, by contrast, does not require hand-crafted features. For example, a typical CNN can be trained, or used to produce results, by feeding raw data directly into the network. CNNs have been widely used in image processing, speech processing and other fields. However, CNN processing involves large amounts of data, and in the industrial IoT and similar scenarios it is difficult for CNNs to meet requirements for low delay, high reliability and low power consumption. To address these challenges, researchers have proposed EC-based data processing architectures in recent years. EC combines the complementary strengths of cloud computing and local computing. It schedules the computing resources of network edge devices, reduces communication and computing costs with cloud servers, achieves distributed data processing, and combines AI with EC[76].

Figure 13. MEC in hierarchical intelligent communication.

4.1 Smart Home

A smart home integrates buildings, household appliances, network communication, equipment automation and system architecture within the residential space, combining services and management into a whole. It uses structured cabling, network communication, security protection, automatic control, and audio and video technologies to integrate home-related facilities. It builds an efficient management system for residential facilities and household schedules, improves home safety, convenience, comfort and aesthetics, and achieves an environmentally friendly, energy-saving living environment. Through IoT and mobile Internet technologies, a smart home connects appliances and devices to provide security control, lighting control, water and electricity control, network control, health monitoring and other functions, offering an efficient, comfortable, safe, convenient and environmentally friendly living space. Because homes involve privacy issues, EC is a good choice for providing computing resources and decisions, especially for computation-intensive DL applications.

Ref.[77] proposes an automated home/enterprise monitoring system hosted on an edge server of a Network Function Virtualization (NFV) platform, which learns online from streaming data from nearby homes and businesses. With recent progress in DL, ubiquitous wireless signals can also be used for intelligent interaction between people and devices[78]. Many people, especially deaf and hard-of-hearing people, use sign language as their first or second language, and more and more people are learning and using American Sign Language (ASL). Against this background, Ref.[79] uses WiFi to recognize sign language gestures, with CSI as input and a CNN as the classifier. Experiments show that this method recognizes 276 gestures involving the head, arms, hands and fingers with high precision. Considering the correlation between signal changes and human movement, Ref.[80] proposes a WiFi-based, device-free spatial sensing system for activity recognition that combines CNN and LSTM to recognize different gestures and activities. Experiments show that the DL-based framework can integrate hidden features in the temporal and spatial dimensions and thus achieves high recognition accuracy. Ref.[81] proposes a semi-supervised DRL model for the remote control of home equipment such as lighting and televisions[82]. The model uses both labeled and unlabeled data and is applied to indoor positioning based on Bluetooth Low Energy signal strength. Such edge intelligence solutions can be widely used in smart homes, for example in intrusion detection, gesture-based interaction and fall detection[83].

4.2 Edge Intelligence Requirements of 5G MEC

With the advent of 5G, applications such as ultra-high-definition video, VR/AR, smart security and telemedicine are processed at the edge. This creates high demand for AI computing power and also breaks down the current, relatively independent edge intelligence inference architecture, in which terminals, network edge nodes and cloud data centers are handled separately. Current edge intelligence research focuses on how to make full use of the resources of terminal devices, network edge nodes and cloud data centers, optimize the overall training and inference performance of AI models, and build more efficient ML models[84]. As edge nodes of operators' mobile networks, 5G MEC nodes are responsible for steering traffic from 5G terminals and interacting with cloud data centers. They are not only important network elements for operators to promote ICT convergence, but also key nodes for collaborative intelligence among terminals, network and cloud. However, the existing MEC reference architecture focuses on opening cloud computing and network capabilities to application developers and content providers at the network edge to provide a general IT service environment, and it lacks support for edge intelligence and network intelligence.

The combination of 5G MEC and AI involves two kinds of requirements: user service requirements and platform operation requirements. User requirements mainly concern providing AI service capabilities to third-party applications running on the MEC platform. From the operator's perspective, MEC is the end point of the mobile communication network. In the current MEC architecture, edge scheduling of user and service data is achieved by deploying the traffic-offloading User Plane Function (UPF) at the edge. MEC also provides life-cycle management of the infrastructure and platform services required by upper-layer applications. The training and inference of ML models is transparent to the platform as a whole, so only a single service-specific AI capability can be introduced for a given scenario, and each AI application must build its own model. As the operator's gateway for ICT convergence, MEC upgrades the network-edge infrastructure with IT capabilities and provides users with high-quality, high-bandwidth, low-latency platform services. Building on this, AI-based collaboration between multiple edge nodes and core nodes, and the provision of adaptive AI platform services, are the functions the MEC platform needs to add in order to offer AI platform services to third-party applications.

To ensure improved QoE for users, 5G and beyond networks require URLLC. AI-based EC and caching designs have been widely studied and are recognized as promising technologies that can effectively guarantee low-latency, reliable content acquisition while reducing redundant network traffic and improving QoE[85]. In particular, the use of DL algorithms in these designs has been investigated, and the performance of recent EC schemes has been analyzed. In addition, edge caching, EC and edge AI have great potential in 5G and B5G networks, enabling emerging technologies that could transform how we live and work every day[86]. Leading companies worldwide have invested heavily in this area, focusing mainly on the benefits of EC and edge AI[87]. Furthermore, most 5G and beyond networks are expected to rely on decentralized, infrastructure-free communication in which devices cooperate directly over spontaneous D2D connections[88].

With the continuous development of the 5G Internet of Things (IoT) and communication technology, mobile tasks involving large amounts of data create a huge demand for DL-based recognition and classification. Offloading tasks to remote infrastructure such as a cloud platform inevitably introduces offloading transmission delay. Ref.[89] therefore proposes a heuristic offloading method (HOM), in which the offloading framework for DL edge services is built on a Centralized Unit (CU)-Distributed Unit (DU) architecture. Analysis and evaluation of the offloading time show that the method is feasible and minimizes the total transmission delay.
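The trade-off behind such offloading decisions can be captured with a textbook delay model. Local execution costs computation time only, while offloading adds upload time and, for a distant cloud, an extra network round trip. The Python sketch below is not the HOM algorithm of Ref.[89]; the task size, CPU speeds, uplink rates and round-trip time are hypothetical values.

```python
def local_delay(cycles, cpu_hz):
    """Time to run the task on the terminal itself (s)."""
    return cycles / cpu_hz


def offload_delay(bits, uplink_bps, cycles, cpu_hz, rtt_s=0.0):
    """Upload time + remote computation time + extra round trip (s)."""
    return bits / uplink_bps + cycles / cpu_hz + rtt_s


def best_site(task, device, edge, cloud):
    """Return the execution site with the smallest delay and all candidate delays."""
    delays = {
        "device": local_delay(task["cycles"], device["cpu_hz"]),
        "edge": offload_delay(task["bits"], edge["uplink_bps"], task["cycles"], edge["cpu_hz"]),
        "cloud": offload_delay(task["bits"], cloud["uplink_bps"], task["cycles"],
                               cloud["cpu_hz"], cloud["rtt_s"]),
    }
    return min(delays, key=delays.get), delays


# Hypothetical parameters: a 2-Gcycle DL inference on a 0.5 MB input.
device = {"cpu_hz": 1e9}
edge = {"cpu_hz": 10e9, "uplink_bps": 100e6}
cloud = {"cpu_hz": 50e9, "uplink_bps": 100e6, "rtt_s": 0.3}
site, delays = best_site({"cycles": 2e9, "bits": 4e6}, device, edge, cloud)
print(site, {k: round(v, 3) for k, v in delays.items()})
```

With these numbers the edge wins because it avoids both the slow terminal CPU and the long cloud round trip; a very light task would instead stay on the device.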

To give the EC system ultra-reliable, low-latency communication and fault-tolerant services, Ref.[90] considers a DL architecture for user association and builds a digital twin of the network environment on a central server for offline training of the algorithm. It also proposes a low-complexity optimization algorithm for a given user association, and experiments show that this algorithm saves a large amount of energy compared with the baseline. The proposed scheme achieves lower normalized energy consumption and lower computational complexity. To improve mobile Web AR applications in 5G networks, Ref.[91] designs an edge-assisted distributed DNN collaborative computing scheme. The evaluation shows that the scheme significantly reduces response time and brings clear benefits. Ref.[91] also develops an application that provides AR instance retrieval and recommendation; users only need to visit a predefined URL to experience the AR service.

The EC applications introduced above share a common theme: complex DL tasks. DL has been shown to provide excellent performance, but these applications require real-time operation and may require privacy protection, so inference or training must be performed at the edge. To meet their latency requirements, different system architectures for fast DL inference have been proposed. The three main architectures are: on-device computation, in which the network model is executed on the terminal device; edge-server-based computation, in which data from terminal devices are sent to one or more edge servers for computation; and joint computation among terminal devices, edge servers and the cloud. This article has introduced work on edge resource management and offloading in the latter two architectures.
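Joint computation is often realized by splitting a DNN: the first layers run on the device, the intermediate tensor is uploaded, and the remaining layers run on the edge server. The Python sketch below searches every split point with a simple additive delay model. The per-layer workloads, output sizes, processor speeds and uplink rate are hypothetical, and the model ignores queuing and the download of results.

```python
def best_split(layer_flops, out_bits, input_bits, dev_flops, edge_flops, uplink_bps):
    """Return (k, delay): layers[:k] run on the device, layers[k:] on the edge."""
    n = len(layer_flops)
    best = None
    for k in range(n + 1):
        device_t = sum(layer_flops[:k]) / dev_flops
        sent = input_bits if k == 0 else out_bits[k - 1]  # tensor crossing the link
        upload_t = 0.0 if k == n else sent / uplink_bps   # nothing sent if all local
        edge_t = sum(layer_flops[k:]) / edge_flops
        total = device_t + upload_t + edge_t
        if best is None or total < best[1]:
            best = (k, total)
    return best


# Hypothetical 4-layer network: early layers are cheap and shrink the data.
layer_flops = [1e8, 2e8, 8e8, 8e8]
out_bits = [2e6, 4e5, 1e5, 8e3]
k, delay = best_split(layer_flops, out_bits, input_bits=4.8e6,
                      dev_flops=1e9, edge_flops=1e10, uplink_bps=4e6)
print(f"split after layer {k}, end-to-end delay {delay:.3f} s")
```

Here neither extreme is best: sending the raw input is slow over the narrow uplink, while running everything locally is slow on the weak device, so the search settles on an intermediate split.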

Deploying DL at the edge still faces many challenges, whether the model runs on terminal devices, on edge servers, or jointly across terminal devices, edge servers and the cloud. We now discuss some open challenges. The first is at the system level: reducing inference delay, minimizing energy consumption and migrating DL workloads across the edge. The second concerns the relationship between software-defined networking (SDN) and network function virtualization (NFV). As DL becomes increasingly popular, traffic carrying DL data will appear on edge networks, raising questions such as how to realize SDN for such traffic, how to manage it with NFV, and how to guarantee the QoS it requires. The third is the management and scheduling of EC resources. EC brings new challenges: an edge server serving terminal devices in a limited geographical area may receive fewer and less varied requests. Statistical multiplexing therefore cannot be relied upon, and new load analysis and request dispatching mechanisms are required; the allocation of computing resources can also be combined with steering of the traffic flowing from terminal devices to edge servers. Most existing research treats proximity as the main factor in traffic steering decisions. The fourth is DL benchmarking on edge devices. Researchers and developers who hope to deploy DL on edge devices find it difficult to choose a suitable neural network model because item-by-item comparisons on the target hardware are lacking. Most current work concentrates on powerful servers or smartphones; as DL and EC become popular, DL performance on heterogeneous hardware needs to be compared and better understood. The last challenge is privacy and security. Although most privacy problems have been studied in the context of general distributed machine learning, those results may still be valuable for research on EC, because EC involves smaller user sets and more specialized DL models.
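To illustrate why proximity alone can be a poor steering criterion once server load is taken into account, the following sketch dispatches a new flow to the edge server with the smallest propagation plus queueing delay. Each server is modeled as an M/M/1 queue; this model, the function names, and the server names, delays and rates are hypothetical assumptions, not a mechanism taken from the works surveyed here.

```python
def mm1_sojourn(arrival_rate, service_rate):
    """Mean time in an M/M/1 system, 1/(mu - lambda); infinite if the queue is unstable."""
    if arrival_rate >= service_rate:
        return float("inf")
    return 1.0 / (service_rate - arrival_rate)


def dispatch(request_rate, servers):
    """Pick the edge server with the smallest propagation + queueing delay.

    servers maps a name to (propagation_delay_s, current_load_rps, service_rate_rps);
    the new flow's request_rate is added to the candidate's current load.
    """
    def expected_delay(name):
        prop, load, mu = servers[name]
        return prop + mm1_sojourn(load + request_rate, mu)
    return min(servers, key=expected_delay)


# Hypothetical snapshot: the nearby server is almost saturated.
servers = {
    "near": (0.002, 95.0, 100.0),
    "far": (0.020, 20.0, 100.0),
}
by_proximity = min(servers, key=lambda n: servers[n][0])
by_load_and_proximity = dispatch(2.0, servers)
print(by_proximity, by_load_and_proximity)  # near far
```

Here the nearby server would add about 0.335 s of delay against about 0.033 s for the distant one, so a load-aware rule steers the flow away from the closest server.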

V.APPLICATION OF DL IN CM

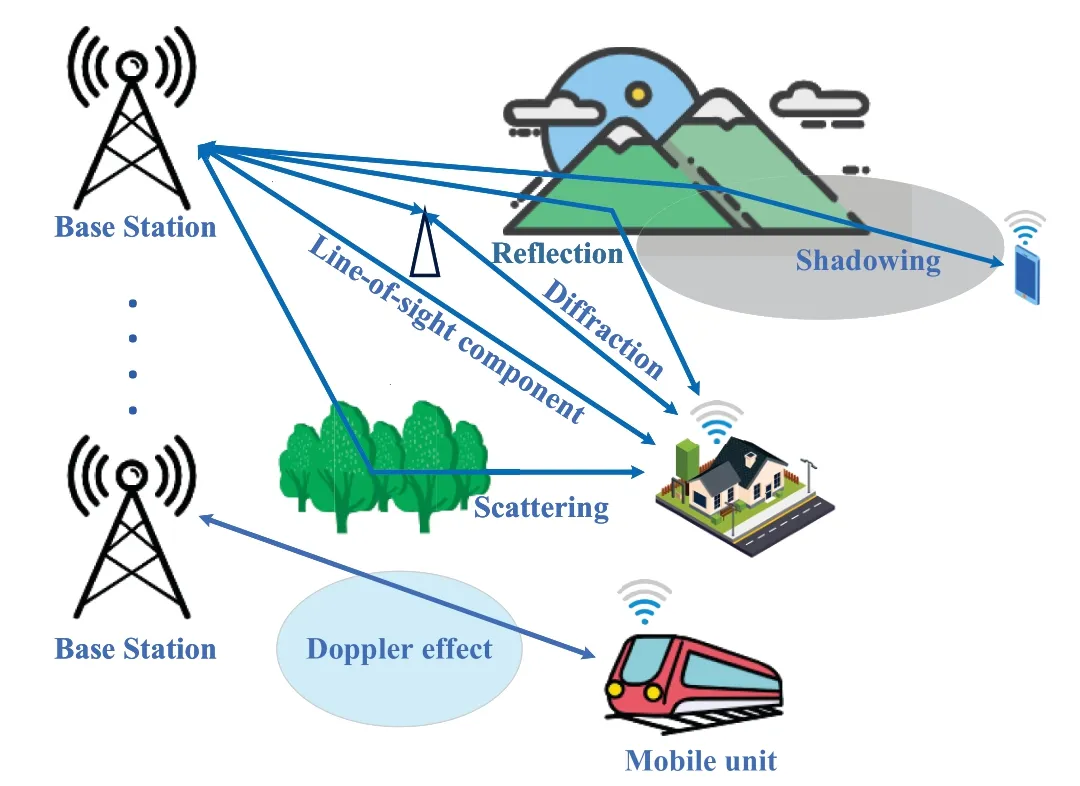

CM, also called channel sounding, uses statistical methods to analyze channel parameters in order to obtain parameters that accurately describe the channel characteristics. The main goal of wireless CM is to measure the large-scale and small-scale properties of the channel, thereby characterizing it in several respects, such as multipath delay, Doppler spread and power fading[92]. Wireless signals inevitably suffer from path loss, scattering and fading, as shown in Figure 14, and these effects are usually quantified by CSI, one of the most basic concepts in wireless communication. Specifically, CSI refers to the known channel properties of a radio link: it represents the combined effect of path loss, scattering, diffraction, fading, shadowing and other phenomena as the signal propagates through the air from the transmitter to the receiver. Obtaining accurate CSI is essential for the performance experienced by mobile users, and the accuracy of the available CSI determines which physical-layer parameters and schemes a wireless communication system can deploy.

Figure 14. Wireless channel propagation mode.
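As a concrete illustration of how a single CSI value bundles large-scale and small-scale effects, the sketch below builds a narrowband channel coefficient from log-distance path loss with log-normal shadowing (large scale) and Rayleigh fading (small scale). The model choice, the function names and all parameter values (reference loss, path-loss exponent, shadowing spread) are illustrative assumptions rather than values taken from Ref.[92].

```python
import math
import random


def path_loss_db(d_m, pl0_db=40.0, d0_m=1.0, exponent=3.0):
    """Large-scale log-distance path loss PL(d) = PL(d0) + 10*n*log10(d/d0) in dB.
    The reference loss, reference distance and exponent are illustrative values."""
    return pl0_db + 10.0 * exponent * math.log10(d_m / d0_m)


def channel_coefficient(d_m, shadow_std_db=8.0, rng=random):
    """Narrowband complex channel coefficient combining
    - path loss and log-normal shadowing (large scale), and
    - Rayleigh fading, i.e. a CN(0, 1) term (small scale)."""
    shadow_db = rng.gauss(0.0, shadow_std_db)
    large_scale_gain = 10.0 ** (-(path_loss_db(d_m) + shadow_db) / 10.0)
    small_scale = complex(rng.gauss(0.0, math.sqrt(0.5)), rng.gauss(0.0, math.sqrt(0.5)))
    return math.sqrt(large_scale_gain) * small_scale


rng = random.Random(0)
h = channel_coefficient(50.0, rng=rng)
print(f"|h|^2 = {abs(h) ** 2:.3e}, phase = {math.atan2(h.imag, h.real):.2f} rad")
```

The magnitude of h carries the path loss, shadowing and fading, while its phase carries the random small-scale phase rotation; a receiver that knows h therefore knows the combined effect of the propagation mechanisms in Figure 14 on that link.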

One way to obtain accurate CSI is to estimate it at the receiver and feed the estimate back to the transmitter. However, when the fed-back CSI becomes outdated because the wireless channel fluctuates (owing to its time-varying nature, the relative movement of the user, etc.), system performance suffers. Channel prediction techniques address this by predicting future CSI from CSI observed at past time instants. As DL continues to penetrate traditional communication systems, it has outperformed some of the most advanced traditional algorithms in channel estimation, channel prediction and CSI feedback, achieving remarkable performance improvements.

5.1 Channel Estimation

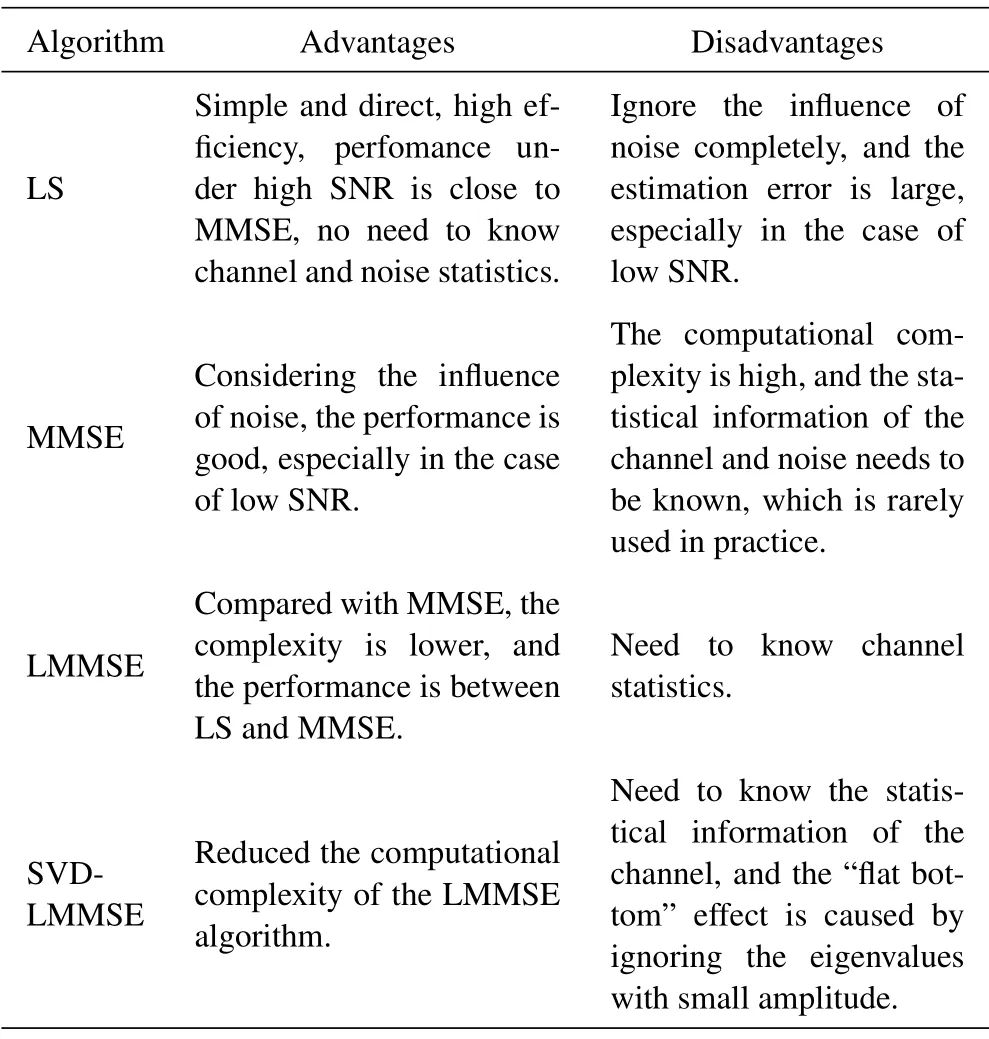

Channel estimation is the process of recovering the channel state from the received data using prior or statistical information; accurate CSI is obtained in this way. There are three main classes of channel estimation algorithms: pilot-based channel estimation[93], blind channel estimation[94] and semi-blind channel estimation[95]. The classical estimation criteria are the least squares (LS) criterion[96] and the minimum mean square error (MMSE) criterion[97]. In addition, many improved schemes build on these classical algorithms, such as linear minimum mean square error (LMMSE) estimation[98] and singular value decomposition (SVD) based algorithms[99]. Table 4 lists the advantages and disadvantages of these four algorithms.

Table 4. Advantages and disadvantages of various channel estimation algorithms.
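The gap between the LS and LMMSE criteria can be reproduced with a small simulation. The sketch below assumes QPSK pilots on every subcarrier, independent Rayleigh channel coefficients and known channel and noise variances; under these simplifying assumptions the LMMSE estimator reduces to a per-subcarrier scalar gain applied to the LS estimate. The function names are hypothetical. With var_h = 1 and var_n = 0.5 the theoretical MSEs are 0.5 for LS and var_h*var_n/(var_h + var_n) = 1/3 for LMMSE.

```python
import cmath
import math
import random


def ls_estimate(y, x):
    """Least-squares estimate on each pilot subcarrier: H_ls[k] = Y[k] / X[k]."""
    return [yk / xk for yk, xk in zip(y, x)]


def lmmse_shrink(h_ls, x, var_h, var_n):
    """Per-subcarrier LMMSE estimate from the LS estimate.

    Simplification: zero-mean channel with variance var_h on every subcarrier and
    no correlation between subcarriers, so the LMMSE matrix reduces to a scalar
    gain var_h / (var_h + var_n / |X[k]|^2)."""
    return [var_h / (var_h + var_n / abs(xk) ** 2) * hk for hk, xk in zip(h_ls, x)]


def mse(est, ref):
    return sum(abs(a - b) ** 2 for a, b in zip(est, ref)) / len(ref)


def cn(rng, var):
    """One sample of a circularly symmetric complex Gaussian CN(0, var)."""
    s = math.sqrt(var / 2)
    return complex(rng.gauss(0, s), rng.gauss(0, s))


rng = random.Random(0)
n_pilots, var_h, var_n = 2000, 1.0, 0.5  # SNR of about 3 dB
x = [cmath.exp(1j * math.pi / 4 * (2 * rng.randrange(4) + 1)) for _ in range(n_pilots)]  # QPSK pilots
h = [cn(rng, var_h) for _ in range(n_pilots)]
y = [hk * xk + cn(rng, var_n) for hk, xk in zip(h, x)]

h_ls = ls_estimate(y, x)
h_lmmse = lmmse_shrink(h_ls, x, var_h, var_n)
mse_ls, mse_lmmse = mse(h_ls, h), mse(h_lmmse, h)
print(f"LS MSE = {mse_ls:.3f}, LMMSE MSE = {mse_lmmse:.3f}")
```

The LMMSE gain needs var_h and var_n to be known, which is the kind of statistical knowledge that the DL-based estimators discussed next aim to do without.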

As computing resources have grown, DL methods for channel estimation have been proposed to further reduce estimation complexity; in addition, such methods essentially do not require noise statistics during estimation.

Ref.[100] proposes a DL-based estimator for time-varying Rayleigh fading channels. Because the channel estimator is built, trained and tested as an NN, it can dynamically track the channel state without prior knowledge of the channel model or its statistical characteristics. Simulation results show that the proposed NN estimator achieves better mean square error (MSE) performance than traditional algorithms and some DL-based structures. In Ref.[101], DL is used to estimate the channel of a downlink pilot-based OFDM system, and ResNet, a channel estimation method based on residual learning, is introduced. Its competitive estimation error, together with its compact and flexible network structure, makes it a promising channel estimation method.
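The idea that an estimator can be learned from data without noise statistics can be illustrated in its simplest form. In the sketch below a single trainable weight stands in for the NN (an assumption made purely for brevity; it is not the architecture of Ref.[100] or Ref.[101]). It is fitted by gradient descent on pairs of LS estimates and true channels, and it approaches the LMMSE gain var_h/(var_h + var_n) = 2/3 without being given either variance. The training data and function names are hypothetical.

```python
import math
import random


def cn(rng, var):
    """One sample of CN(0, var)."""
    s = math.sqrt(var / 2)
    return complex(rng.gauss(0, s), rng.gauss(0, s))


def train_weight(pairs, lr=0.05, epochs=200):
    """Fit a real weight w minimising mean |w * h_ls - h|^2 by full-batch gradient descent.

    pairs: (h_ls, h_true) training samples; no channel or noise statistics are used.
    Gradient of |w*a - h|^2 with respect to real w is 2 * Re(conj(w*a - h) * a)."""
    w = 1.0
    for _ in range(epochs):
        grad = sum(2 * ((w * a - h).conjugate() * a).real for a, h in pairs) / len(pairs)
        w -= lr * grad
    return w


# Hypothetical training set: unit-power channel, pilots X = 1, noise variance 0.5.
rng = random.Random(1)
pairs = []
for _ in range(2000):
    h = cn(rng, 1.0)
    pairs.append((h + cn(rng, 0.5), h))  # (LS estimate, true channel)

w = train_weight(pairs)
print(f"learned w = {w:.3f}, LMMSE gain = {1.0 / 1.5:.3f}")
```

A real DL estimator replaces the single weight with a deep network so that it can also exploit correlation across time and frequency, but the training principle (minimize MSE against known channels) is the same.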

In Ref.[102], Soltani, Mehran et al. treat the time-frequency response of a fast-fading channel as a two-dimensional image and the channel values at the pilot positions as a low-resolution version of that image, and they estimate the channel with a super-resolution (SR) network cascaded with a denoising image restoration (IR) network. The method performs comparably to MMSE estimation when the channel statistics are fully known, and better than approximate linear MMSE estimation. Because vehicular channels are time-varying, reliable channel estimation is regarded as a key challenge for ensuring system performance. Ref.[103] studies the IEEE 802.11p specification and proposes a DL-based STA-DNN scheme, which combines the classical spectral temporal averaging (STA) channel estimator with a DNN to capture more of the time and frequency correlation between channel samples, and thus to track channel variations more accurately in both the time and frequency domains. Compared with the auto-encoder DNN (AE-DNN) based channel estimation scheme for IEEE 802.11p[104], the optimized STA-DNN architecture reduces computational complexity by at least 55.74%.
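The "low-resolution image" view of Ref.[102] can be made concrete: the channel values at the pilot positions form a coarse grid that must be upsampled to the full time-frequency grid. The sketch below performs this upsampling with plain bilinear interpolation, a conventional baseline and not the SR/IR networks of Ref.[102]; the grid size, pilot spacing, toy channel and function names are hypothetical. Because the toy channel is bilinear in subcarrier and symbol index, the interpolation is exact here; for real fast-fading channels it is not, and that residual error is what a learned SR network tries to reduce.

```python
def interp_1d(values, positions, length):
    """Linear interpolation of samples known at sorted `positions` onto indices 0..length-1.
    Assumes the first and last indices are pilot positions (no extrapolation)."""
    assert positions[0] == 0 and positions[-1] == length - 1
    out = []
    for i in range(length):
        for j in range(len(positions) - 1):
            p0, p1 = positions[j], positions[j + 1]
            if p0 <= i <= p1:
                t = (i - p0) / (p1 - p0)
                out.append((1 - t) * values[j] + t * values[j + 1])
                break
    return out


def upsample_pilot_image(pilots, f_pos, t_pos, n_sub, n_sym):
    """Bilinear upsampling of a low-resolution pilot 'image' to the full time-frequency grid.

    pilots[a][b] is the estimate at subcarrier f_pos[a] and OFDM symbol t_pos[b];
    the result grid[k][t] covers all n_sub subcarriers and n_sym symbols."""
    # 1) along frequency, for every pilot-bearing symbol
    per_symbol = [interp_1d([row[b] for row in pilots], f_pos, n_sub) for b in range(len(t_pos))]
    # 2) along time, for every subcarrier
    return [interp_1d([col[k] for col in per_symbol], t_pos, n_sym) for k in range(n_sub)]


def true_h(k, t):
    """Illustrative channel, bilinear in subcarrier index k and symbol index t."""
    return 1 + 0.1 * k + 0.05j * t + 0.01 * k * t


# Toy example: 13 subcarriers x 7 symbols, pilots every 4 subcarriers and every 3 symbols.
f_pos, t_pos = [0, 4, 8, 12], [0, 3, 6]
pilots = [[true_h(k, t) for t in t_pos] for k in f_pos]
grid = upsample_pilot_image(pilots, f_pos, t_pos, 13, 7)
max_err = max(abs(grid[k][t] - true_h(k, t)) for k in range(13) for t in range(7))
print(f"max interpolation error: {max_err:.2e}")
```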

Ref.[105] treats the channel matrix as a two-dimensional natural image and uses learned denoising-based approximate message passing (LDAMP), which embeds a denoising CNN (DnCNN) into an iterative sparse signal recovery algorithm, to estimate the beamspace millimeter-wave channel of a massive MIMO system. Ref.[106] proposes a DNN for calibrating the channel between the uplink (UL) and downlink directions. In the initial training stage, the DNN is trained on UL and downlink channel measurements; the trained DNN and the instantaneous UL channel estimate are then used to infer the downlink channel. This shows that DL-based methods have significant potential for nonlinear channel estimation in massive MIMO systems. Ref.[107] considers two common fading scenarios: quasi-static block fading and time-varying fading. For quasi-static block fading, a deep autoencoder built from CNNs is used to develop new techniques for MIMO channel estimation and pilot design; for time-varying fading, a new channel estimation method combining an RNN and a CNN is proposed. Ref.[108] develops a DL-based channel estimation framework for massive MIMO systems with 1-bit ADCs. It derives the structure and length of pilot sequences that guarantee the existence of a mapping from the quantized measurements to the channels, and proves that the number of pilots needed for this mapping to exist decreases as the number of antennas grows. The results show that massive MIMO channels can be estimated efficiently with only a few pilots.
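The difficulty addressed in Ref.[108] is easy to see from what a 1-bit ADC keeps: only the signs of the real and imaginary parts of each received sample. The sketch below applies such a quantizer to a hypothetical 8-antenna pilot observation and confirms that scaling the received signal leaves the output unchanged, so per-sample amplitude information is lost and the channel must be inferred from sign patterns across antennas and pilot symbols. The zero-maps-to-+1 convention, the channel values and the function name are assumptions.

```python
import random


def one_bit_adc(samples):
    """1-bit quantisation of complex baseband samples: sign(Re) + j*sign(Im).
    Convention assumed here: a value of exactly 0 maps to +1."""
    def sgn(v):
        return 1.0 if v >= 0 else -1.0
    return [complex(sgn(s.real), sgn(s.imag)) for s in samples]


# Hypothetical receiver: 8 antennas observe one pilot symbol x = 1 through channel h plus noise.
rng = random.Random(2)
h = [complex(rng.gauss(0, 0.7), rng.gauss(0, 0.7)) for _ in range(8)]
y = [hk + complex(rng.gauss(0, 0.1), rng.gauss(0, 0.1)) for hk in h]
q = one_bit_adc(y)
print(q)
# Amplitude is lost: scaling the received signal does not change the quantised output.
assert one_bit_adc([3 * v for v in y]) == q
```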

5.2 Channel Prediction

Because wireless channels are time-varying, it is difficult to obtain CSI that is still valid when it is used. Traditional channel estimation algorithms therefore struggle with channel time variation in mobile scenarios, and CSI must be predicted accurately and in time to obtain its value at future instants. Channel prediction estimates future CSI from known channel information by means of suitable algorithms or models. Current channel prediction techniques fall into two groups: linear and nonlinear prediction. Linear prediction mainly comprises algorithms based on the statistical tap-delay-line model, represented by the autoregressive (AR)[109] and autoregressive moving average (ARMA) models. For complex, fast-fading time-varying channels, simple linear prediction no longer performs well. Consequently, nonlinear prediction models such as projection approximation subspace tracking (PAST), SVM, Markov models and NNs have gradually become key research topics.
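A linear AR predictor of the kind cited above can be sketched in a few lines. The example below fits an AR(2) model by least squares and iterates it forward. The signal (the real part of a single Doppler-shifted path, which satisfies x[n] = 2cos(omega)x[n-1] - x[n-2] exactly), the Doppler value and the function names are illustrative assumptions.

```python
import math


def fit_ar2(x):
    """Least-squares AR(2) fit x[n] ~ a1*x[n-1] + a2*x[n-2] for real-valued samples.
    Solves the 2x2 normal equations [[r11, r12], [r12, r22]] [a1, a2] = [b1, b2]."""
    idx = range(2, len(x))
    r11 = sum(x[n - 1] * x[n - 1] for n in idx)
    r12 = sum(x[n - 1] * x[n - 2] for n in idx)
    r22 = sum(x[n - 2] * x[n - 2] for n in idx)
    b1 = sum(x[n] * x[n - 1] for n in idx)
    b2 = sum(x[n] * x[n - 2] for n in idx)
    det = r11 * r22 - r12 * r12
    return (b1 * r22 - b2 * r12) / det, (r11 * b2 - r12 * b1) / det


def predict_ar2(x, a1, a2, steps):
    """Run the fitted recursion `steps` samples beyond the last observation."""
    hist, out = list(x[-2:]), []
    for _ in range(steps):
        nxt = a1 * hist[-1] + a2 * hist[-2]
        out.append(nxt)
        hist.append(nxt)
    return out


# Illustrative observation: real part of a single Doppler-shifted path,
# with normalised Doppler frequency 0.05 cycles per sample.
omega = 2 * math.pi * 0.05
past = [math.cos(omega * n + 0.3) for n in range(40)]
a1, a2 = fit_ar2(past)
future = predict_ar2(past, a1, a2, 5)
truth = [math.cos(omega * n + 0.3) for n in range(40, 45)]
print(f"a1 = {a1:.4f} (2cos(omega) = {2 * math.cos(omega):.4f}), a2 = {a2:.4f}")
```

In this noiseless single-path case the fit recovers a1 = 2cos(omega) and a2 = -1 and the prediction is essentially exact; with many paths, noise and fast fading a low-order linear model no longer captures the channel dynamics, which motivates the nonlinear predictors mentioned above.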

In recent years, with the development of ML research, DL has been increasingly applied to channel prediction. Ref.[110] proposes a wireless channel modeling framework based on big data and ML. The model is built on artificial neural networks (ANNs), including a feedforward neural network (FNN) and a radial basis function neural network (RBF-NN), and predicts the received power, root-mean-square (RMS) statistics and other important channel characteristics. Compared with existing deterministic and stochastic channel modeling methods, the proposed model achieves a good balance among accuracy, complexity and flexibility. The analyzed and verified simulation results show that ML algorithms can be a powerful analytical tool for future measurement-based wireless channel modeling. Ref.[111] proposes a channel model that uses atmospheric information to predict the channel attenuation of Q-band B5G/6G satellite-to-ground wireless communication systems at any specific time. The model is data-driven and based on either an MLP or an LSTM. Its accuracy is measured by the cumulative distribution function (CDF) of the absolute error and by the MSE between the predicted and the measured channel attenuation, and its complexity is evaluated by the training time, loading time and testing time of the DL network. The resulting satellite atmospheric prediction model agrees with the channel attenuation obtained in actual CM.
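The two accuracy measures used in Ref.[111], the CDF of the absolute error and the MSE, are straightforward to compute once predicted and measured attenuation series are available. The sketch below does so with an empirical CDF; the function names are hypothetical, and the attenuation values are made up for illustration and do not come from Ref.[111].

```python
def mse(pred, meas):
    """Mean squared error between predicted and measured attenuation (dB^2)."""
    return sum((p - m) ** 2 for p, m in zip(pred, meas)) / len(meas)


def abs_error_cdf(pred, meas):
    """Empirical CDF of the absolute error: list of (e_i, F(e_i)) with F = i/N."""
    errors = sorted(abs(p - m) for p, m in zip(pred, meas))
    n = len(errors)
    return [(e, (i + 1) / n) for i, e in enumerate(errors)]


def error_quantile(cdf, prob):
    """Smallest absolute error e with F(e) >= prob (e.g. prob = 0.9 for the 90th percentile)."""
    for e, f in cdf:
        if f >= prob:
            return e
    return cdf[-1][0]


# Made-up attenuation values in dB, for illustration only.
measured = [2.0, 3.5, 1.25, 4.75]
predicted = [2.25, 3.0, 1.25, 5.75]
cdf = abs_error_cdf(predicted, measured)
print("MSE:", mse(predicted, measured))
print("CDF points:", cdf)
print("median absolute error:", error_quantile(cdf, 0.5))
```

The CDF shows how the error is distributed across samples (here half of the predictions are within 0.25 dB), which a single MSE value cannot reveal.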

Ref.[112] develops a wireless communication framework based on deep multimodal learning for channel prediction in massive MIMO systems. It exploits various combinations of modalities and fusion levels and makes full use of the MSI in communication systems to improve system performance. The framework can effectively exploit the constructive and complementary information in multimodal sensing data to assist wireless communication. Building on the classical Kalman filter (KF) and recently proposed RNNs, Ref.[113] studies long-range prediction (LRP) of fading channels. Two LRP methods are proposed, multi-step prediction (MSP) and fading signal processing (FSP): MSP uses a multi-step recurrent network to flexibly adjust the number of prediction steps, while FSP reduces the sampling rate before the fading signal is fed into the predictor. The results show that the RNN predictor performs comparably to the KF predictor while avoiding the modeling and parameter estimation difficulties of the KF predictor.
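For reference, the KF baseline against which the RNN predictor of Ref.[113] is compared can be sketched for the simplest case. The example below assumes a real-valued first-order Gauss-Markov (AR(1)) fading model with known coefficient a, process noise variance q and observation noise variance r, and obtains multi-step predictions as a^s times the filtered estimate; the model, all values and the function names are illustrative assumptions. The need to know a, q and r illustrates the modeling and parameter-estimation burden that the RNN predictor avoids.

```python
import math
import random


def kalman_ar1(observations, a, q, r):
    """Scalar Kalman filter for the Gauss-Markov channel model
        h[n] = a*h[n-1] + w[n],  w ~ N(0, q)   (state)
        y[n] = h[n] + v[n],      v ~ N(0, r)   (noisy channel estimate)
    Returns the filtered estimates h_hat[n]."""
    h_hat, p = 0.0, q / (1 - a * a)  # start from the stationary prior
    estimates = []
    for y in observations:
        h_pred, p_pred = a * h_hat, a * a * p + q  # time update
        k = p_pred / (p_pred + r)                  # Kalman gain
        h_hat = h_pred + k * (y - h_pred)          # measurement update
        p = (1 - k) * p_pred
        estimates.append(h_hat)
    return estimates


def predict_multi_step(h_hat, a, steps):
    """s-step-ahead prediction for the AR(1) model: E[h[n+s] | y[..n]] = a**s * h_hat[n]."""
    return [a ** s * h_hat for s in range(1, steps + 1)]


# Illustrative real-valued simulation with unit channel power.
rng = random.Random(3)
a, r = 0.99, 0.1
q = 1 - a * a
h, truth, obs = 0.0, [], []
for _ in range(5000):
    h = a * h + rng.gauss(0, math.sqrt(q))
    truth.append(h)
    obs.append(h + rng.gauss(0, math.sqrt(r)))

est = kalman_ar1(obs, a, q, r)
mse_obs = sum((o - t) ** 2 for o, t in zip(obs, truth)) / len(truth)
mse_kf = sum((e - t) ** 2 for e, t in zip(est, truth)) / len(truth)
print(f"raw MSE {mse_obs:.3f}, KF MSE {mse_kf:.3f}")
print("next 3 predictions:", predict_multi_step(est[-1], a, 3))
```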

5.3 Channel State Information Feedback

Massive multiple-input multiple-output (MIMO) technology has attracted great interest in recent years as a key technology of 5G wireless communication systems, a candidate technology for 6G, and a key technology of next-generation WiFi (802.11ax/ay) systems. However, the performance advantage of massive MIMO depends mainly on the base station (BS) obtaining accurate downlink CSI, which has a significant impact on the performance of the whole system. Efficient acquisition of downlink CSI at the BS has therefore become both a hot topic and a difficult problem in recent years. Traditional CSI feedback is mainly codebook-based. However, because an FDD massive MIMO system has a large number of BS antennas, the codebook dimension must grow with the number of antennas, and the resulting difficulty of codebook design makes codebook-based CSI feedback hard to apply.
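The scaling problem of codebook-based feedback can be illustrated with a discrete Fourier transform (DFT) codebook, assumed here purely for illustration (standardized codebooks are more elaborate). The receiver selects the codeword with the largest beamforming gain and feeds back only its index; the sketch shows that the number of codewords, the feedback bits and the stored weights all grow with the number of BS antennas. The function names and the oversampling factor are hypothetical.

```python
import cmath
import math


def dft_codebook(n_tx, oversample=1):
    """Unit-norm beams of an (oversampled) DFT codebook for a uniform linear array.
    Returns n_tx * oversample codewords, each a list of n_tx complex weights."""
    size = n_tx * oversample
    return [[cmath.exp(2j * math.pi * m * i / size) / math.sqrt(n_tx) for m in range(n_tx)]
            for i in range(size)]


def select_codeword(h, codebook):
    """Index of the codeword w maximising the beamforming gain |w^H h|;
    only this index is fed back to the BS instead of the full channel h."""
    def gain(w):
        return abs(sum(wm.conjugate() * hm for wm, hm in zip(w, h)))
    return max(range(len(codebook)), key=lambda i: gain(codebook[i]))


def feedback_bits(codebook):
    return math.ceil(math.log2(len(codebook)))


# A channel that is exactly aligned with beam 3 of an 8-antenna DFT codebook.
cb8 = dft_codebook(8)
h = [cmath.exp(2j * math.pi * m * 3 / 8) for m in range(8)]
print("selected index:", select_codeword(h, cb8))
# Codebook size, feedback bits and stored weights all grow with the antenna count.
for n_tx in (8, 32, 128):
    cb = dft_codebook(n_tx, oversample=4)
    print(n_tx, "antennas:", len(cb), "codewords,", feedback_bits(cb), "bits,",
          len(cb) * n_tx, "stored weights")
```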

In recent years, DL-based image compression methods have clearly surpassed traditional algorithms. Inspired by this, Ref.[114] introduces DL into the CSI feedback problem and develops CsiNet, a novel CSI sensing and recovery mechanism that learns to exploit channel structure effectively from training samples. CsiNet learns a transformation from CSI to a near-optimal number of representations (codewords) and the inverse transformation from codewords back to CSI. Experimental results show that CsiNet can recover CSI with significantly better reconstruction quality than existing compressed sensing (CS) based methods; even at compression ratios so low that CS-based methods fail, CsiNet retains effective beamforming gain. However, CsiNet reconstructs each CSI instance independently and ignores the temporal correlation of time-varying channels. Ref.[115] improves the architecture by exploiting temporal correlation and develops a real-time end-to-end CSI feedback framework called CsiNet-LSTM, which extends CsiNet with a long short-term memory (LSTM) network, a typical RNN. Compared with CsiNet, CsiNet-LSTM is markedly more robust as the compression ratio is reduced and supports real-time, scalable CSI feedback without significant overhead. Ref.[116] presents the design of an AI-enabled channel feedback system based on actual measurements. Considering the CSI noise caused by imperfect channel estimation, Ref.[117] proposes a new DNN structure, AnciNet, for compressing and feeding back noisy CSI with limited bandwidth in massive multiple-input multiple-output (m-MIMO) systems. AnciNet extracts noise-free features from noisy CSI samples, thereby compressing the fed-back CSI efficiently and effectively removing the noise from m-MIMO CSI.
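CSI feedback schemes are usually compared by their compression ratio and by the normalized MSE (NMSE) of the reconstructed CSI. The sketch below shows both for a fixed, non-learned encoder/decoder pair, an assumption for illustration and not CsiNet: the encoder transforms a frequency-domain channel to the delay domain and keeps only the first few taps, and the decoder zero-pads and transforms back. CsiNet instead learns the encoder and decoder from training samples. The 32-subcarrier, 4-tap channel and the function names are hypothetical.

```python
import cmath
import math


def dft(x):
    n = len(x)
    return [sum(x[m] * cmath.exp(-2j * math.pi * k * m / n) for m in range(n)) for k in range(n)]


def idft(x):
    n = len(x)
    return [sum(x[k] * cmath.exp(2j * math.pi * k * m / n) for k in range(n)) / n for m in range(n)]


def compress(h_freq, keep):
    """Encoder of a non-learned baseline: go to the delay domain and keep the first `keep` taps."""
    return idft(h_freq)[:keep]


def decompress(code, n):
    """Decoder: zero-pad the kept taps and return to the frequency domain."""
    return dft(code + [0j] * (n - len(code)))


def nmse(h, h_hat):
    """Normalised MSE ||h - h_hat||^2 / ||h||^2, the usual CSI-feedback metric."""
    return sum(abs(a - b) ** 2 for a, b in zip(h, h_hat)) / sum(abs(a) ** 2 for a in h)


# Illustrative 32-subcarrier channel with 4 delay taps.
n = 32
taps = [1.0, 0.5j, -0.3, 0.1 + 0.1j] + [0j] * (n - 4)
h_freq = dft(taps)
for keep in (8, 2):
    h_rec = decompress(compress(h_freq, keep), n)
    print(f"compression ratio {keep}/{n}: NMSE = {nmse(h_freq, h_rec):.3e}")
```

Keeping 8 of 32 taps loses nothing because all channel energy lies in the first 4 taps (NMSE at numerical precision), whereas keeping 2 taps discards the energy of the third and fourth taps and gives NMSE = 0.11/1.36, about 0.081 (roughly -10.9 dB).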

The application of DL to wireless CM is a new field. Although some studies have achieved good results, several technical challenges merit further discussion. The first is the acquisition of experimental data: DL algorithms rely on big data, and if a large amount of data reflecting real conditions cannot be provided, the network will not outperform traditional algorithms in practice. Second, many DL algorithms have many network layers and therefore a greatly increased number of parameters; a model that occupies a large amount of memory affects the operation of the device and is unsuitable for mobile devices. Third, current DL-based algorithms are usually obtained by modifying an existing network structure to suit the characteristics of one's own data. Although such general-purpose networks save considerable design time, training them demands substantial resources and thus considerable computing power. To reduce the computing resources required for training while preserving system performance, it is necessary to design dedicated network models for CM. In addition, DL is effectively a black box: we cannot derive compact mathematical formulas or underlying theory to characterize the performance of DL-based algorithms. Such formulas and theory would help us understand the efficiency of an algorithm and would form the basis for improving network models and developing more efficient DL-based algorithms. Moreover, because the network parameters are updated automatically and their evolution cannot be observed directly, it is uncertain whether a DL-based CM algorithm achieves optimal performance.
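The memory concern can be quantified with simple arithmetic. The sketch below counts the weights and biases of a fully connected network and converts them to storage. The layer widths describe a hypothetical MLP operating on a 64-antenna, 32-subcarrier channel and are not taken from any cited work; the function names are also hypothetical, and activations and training-time state are not counted.

```python
def dense_params(layer_widths):
    """Weights plus biases of a fully connected network with the given layer widths."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(layer_widths, layer_widths[1:]))


def memory_mib(n_params, bytes_per_param=4):
    """Storage for the parameters alone (float32 by default), in MiB."""
    return n_params * bytes_per_param / 2 ** 20


# Hypothetical MLP mapping a 64-antenna x 32-subcarrier channel (real and imaginary
# parts, 4096 inputs) through two hidden layers of width 2048 back to 4096 outputs.
widths = [4096, 2048, 2048, 4096]
p = dense_params(widths)
print(f"{p:,} parameters: {memory_mib(p):.1f} MiB in float32, "
      f"{memory_mib(p, 1):.1f} MiB with 8-bit weights")
```

Even this modest three-layer network has about 21 million parameters, roughly 80 MiB in float32, which illustrates why parameter-heavy models are hard to deploy on mobile devices.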

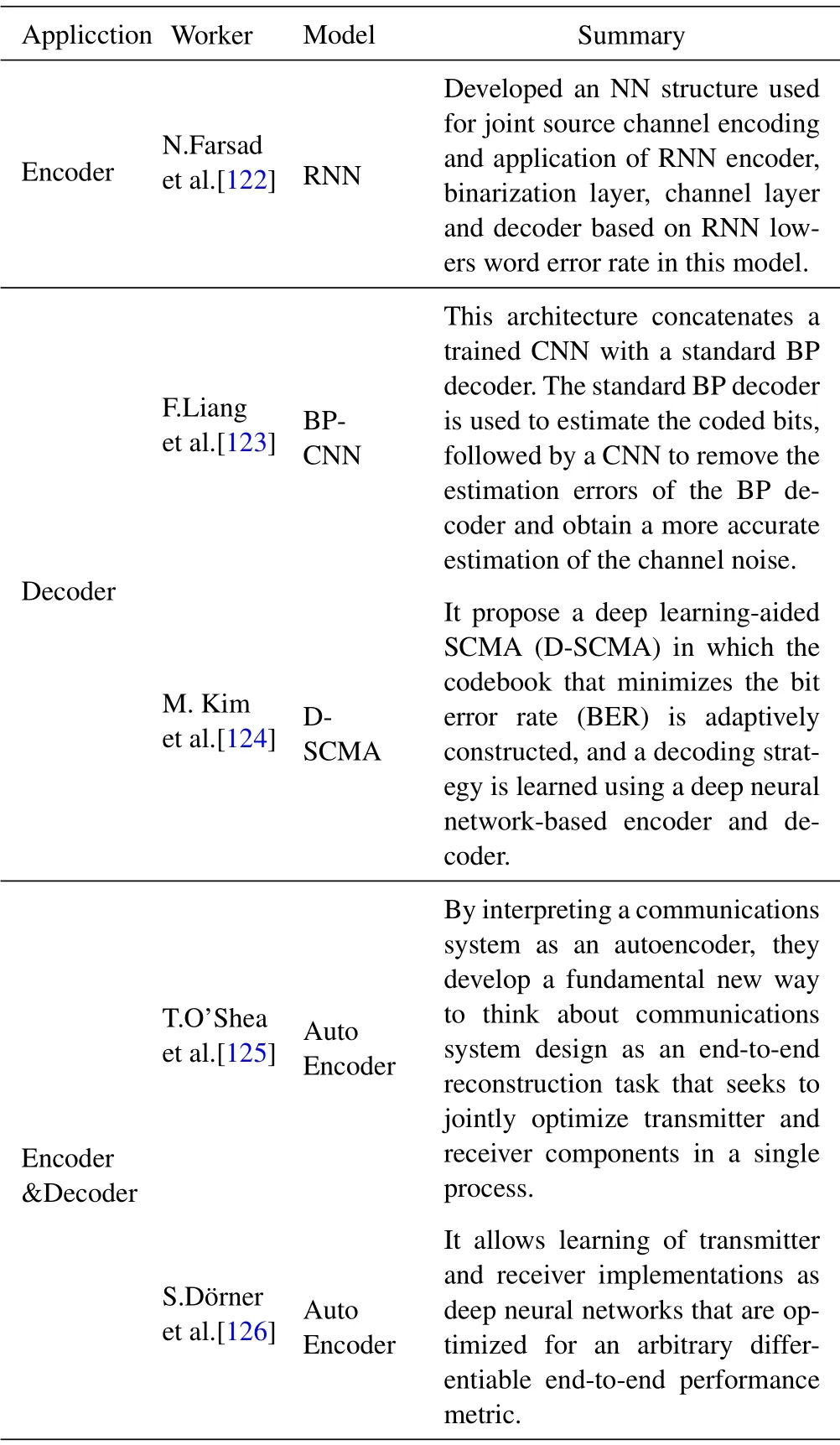

VI.APPLICATION OF DL IN END TO END ENCODER AND DECODER