Haze removal for UAV reconnaissance images using layered scattering model

Huang Yuqingᵃ, Ding Wenruiᵇ,ᶜ, Li Hongguangᵇ,*

ᵃ School of Electronic and Information Engineering, Beihang University, Beijing 100083, China

ᵇ Research Institute of Unmanned Aerial Vehicle, Beihang University, Beijing 100083, China

ᶜ Collaborative Innovation Center of Geospatial Technology, Wuhan 430079, China

Keywords: Atmosphere scattering model; Bayesian classification; Haze concentration; Image restoration; Layered scattering model; UAV

During unmanned aerial vehicle (UAV) reconnaissance missions in the middle and lower troposphere, reconnaissance images are blurred and degraded by aerosol scattering under fog, haze and other weather conditions, which reduces image contrast and color fidelity. Considering the characteristics of UAVs, this paper proposes a new algorithm for dehazing UAV reconnaissance images based on a layered scattering model. Starting from the atmosphere scattering model and using the imaging distance, squint angle and other metadata acquired by the UAV, we extend the original model into a layered scattering model for dehazing. Considering the relationship between wavelength and extinction coefficient, the airlight intensity and the extinction coefficients of the model are calculated, and the restored images are finally obtained. In addition, a method based on Bayesian classification is used to classify the haze concentration of each image, avoiding manual grading. We then evaluate the haze removal results by both subjective and objective criteria. The experimental results show that, compared with the original image, the comprehensive index of the image restored by our method increases by 282.84%, which shows that our method achieves an excellent dehazing effect.

1. Introduction

In recent years, the use of unmanned aerial vehicles (UAVs) for target recognition, surveying and mapping, and geological disaster prevention and control has become a research hotspot in the UAV field. UAV reconnaissance missions rely mainly on high-quality reconnaissance images. However, deteriorating air quality has led to a higher incidence of long-lasting haze. When medium-altitude UAVs perform reconnaissance missions, aerosols in the atmosphere scatter light in haze and mist, reducing air transparency and rapidly worsening visibility. Haze seriously blurs and degrades the reconnaissance images, reducing image contrast and color fidelity and causing severe distortion and loss of image features. As a result, targets are difficult to observe and recognize. Therefore, removing haze from UAV reconnaissance images has high research and practical value.

At present, image haze removal methods can be divided into two categories: hazy image enhancement based on image processing, and hazy image restoration based on a physical model. Image enhancement methods, which disregard the cause of degradation, can increase image contrast and strengthen edge details, but cause some loss of salient information. Image restoration methods instead establish a physical model of the degradation of hazy images and invert the degradation process to obtain high-quality haze-free images. Because they are based on a physical model, their haze removal effect is more natural, with less information loss.

Hazy image enhancement methods based on image processing include histogram equalization [1–3], homomorphic filtering [4], wavelet transform [5], the Retinex algorithm [6] and so on. Two typical and effective methods are contrast limited adaptive histogram equalization and Retinex. In Ref. 2, an algorithm called contrast limited adaptive histogram equalization (CLAHE) is proposed. It limits the height of the local histogram: the part of the histogram above a set threshold is clipped and redistributed evenly over the whole grayscale range, so that the total histogram area remains unchanged. This effectively suppresses noise and reduces distortion. In Ref. 7, building on CLAHE, edge information is enhanced by calculating the saliency map of the white-balanced image. Retinex is a model of color constancy with the properties of color invariance and dynamic range compression, and is applied to image texture enhancement, color preservation and other tasks. Haze-free images obtained by the Retinex algorithm have relatively high local contrast and little color distortion. In Ref. 6, based on Retinex theory, the atmospheric light is assumed to vary smoothly over the whole image in order to estimate the transmission, and the atmospheric light is estimated by Gaussian filtering. The method achieves good results.
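The clip-and-redistribute step of CLAHE described above can be sketched in a few lines of Python. This is a minimal single-pass illustration with a hypothetical helper `clip_histogram`; a full CLAHE implementation also divides the image into tiles, equalizes each tile and interpolates between tiles, and often repeats the redistribution because bins can exceed the limit again once the excess is added back.

```python
import numpy as np

def clip_histogram(hist, clip_limit):
    """Clip histogram bins at clip_limit and spread the excess evenly over all bins.

    Single pass only: after redistribution some bins may exceed clip_limit again.
    """
    hist = np.asarray(hist, dtype=float)
    excess = np.sum(np.maximum(hist - clip_limit, 0.0))  # area above the threshold
    clipped = np.minimum(hist, clip_limit)               # cut every bin at the limit
    return clipped + excess / hist.size                  # total area is preserved

# Example: a peaked 4-bin histogram clipped at 4
print(clip_histogram([10, 0, 0, 2], 4))
```

The even redistribution is what keeps the sum of the histogram, and therefore the cumulative mapping range used for equalization, unchanged.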

Hazy image restoration methods based on a physical model include methods based on partial differential equations [8,9], depth relationships [10–13], prior information [14–16] and so on. Methods based on partial differential equations can effectively correct border areas and greatly improve the visual effect. In Ref. 8, an image energy optimization model is established from the atmospheric scattering model, and a partial differential equation of image depth and gradient is derived. The method reduces the uncertainty of image restoration, but it requires the atmospheric scattering coefficient and the image depth information to be adjusted gradually, which depends on interactive operation. Methods based on depth relationships use clear and hazy images to calculate the depth relationship of each point and combine it with the atmosphere scattering model to restore the image. In Ref. 17, an image haze removal method via depth-based contrast stretching transform (DCST) is proposed; it is simple and has good real-time performance. However, methods based on depth relationships generally have certain limitations, and it is difficult for them to satisfy real-time processing requirements. Methods based on prior information can obtain higher contrast and a better visual effect, and image quality can be further improved by average filtering, median filtering and other means. However, they suffer from problems such as color over-saturation and complex computation. The most commonly used physical model is the atmosphere scattering model proposed by McCartney, which is detailed in Section 2.

In addition, owing to the characteristics of UAVs, the distance between the imaging device and the imaging target is very long. The aerosol concentration around the imaging device differs greatly from that around the imaging target, leading to different extinction coefficients in the two regions. Most of the above methods are designed for general outdoor hazy images and are of limited applicability to UAV reconnaissance images.

Considering the characteristics of UAVs, in this paper we propose a novel method for UAV reconnaissance image haze removal based on a layered scattering model. We improve the original model using the imaging distance, angles and other UAV metadata. Then, considering the relationship between extinction coefficient and wavelength, we calculate the atmospheric light and extinction coefficients of the layered model to obtain the restored image. In addition, a classification method based on the Naïve Bayes Classifier is proposed to classify the haze concentration of each image, avoiding manual grading.

The remaining sections are organized as follows: Section 2 introduces the background and principle of the atmosphere scattering model; Section 3 describes our improved physical model; Section 4 describes the proposed method in detail; Section 5 presents the experimental results and analysis; Section 6 concludes the paper.

2. Background

Methods based on a physical model give a more natural haze removal effect with less information loss. One of the most typical models is the atmospheric scattering model [18]. In 1975, based on Mie scattering theory, McCartney proposed that the imaging process could be described by two mechanisms: direct attenuation and atmospheric light imaging (see Fig. 1).

According to the atmospheric scattering model of hazy weather, the hazy image obtained by the imaging equipment can be represented as [11–15]

$$I(x) = J(x)\,e^{-\sigma d(x)} + A\left(1 - e^{-\sigma d(x)}\right) \qquad (1)$$

where I is the observed image intensity, J the scene radiance, A the global atmospheric light, d the imaging distance, and σ the extinction coefficient.

In Eq. (1), the first term represents direct attenuation. Owing to scattering and absorption by atmospheric particles, part of the light reflected from the object surface is lost, and the rest is transmitted directly to the imaging equipment; its intensity decreases exponentially with propagation distance. The second term represents atmospheric light imaging, called airlight. Because atmospheric particles scatter light, the atmosphere behaves like a light source, and the atmospheric light intensity increases gradually with propagation distance.
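To make the two terms of Eq. (1) concrete, the following Python sketch evaluates the model for a dark pixel at increasing distances. The function name `hazy_image` and the numbers are illustrative, not taken from the paper. As d grows, the transmission e^(−σd) vanishes and the observed intensity approaches the atmospheric light A, which is why distant scenery looks washed out.

```python
import numpy as np

def hazy_image(J, A, sigma, d):
    """Eq. (1): I = J * exp(-sigma * d) + A * (1 - exp(-sigma * d))."""
    t = np.exp(-sigma * np.asarray(d, dtype=float))  # transmission e^(-sigma d)
    direct_attenuation = J * t                        # first term
    airlight = A * (1.0 - t)                          # second term
    return direct_attenuation + airlight

# A dark pixel (J = 0.1) under atmospheric light A = 0.9, sigma = 0.5 per km
for d_km in (0.0, 1.0, 5.0, 20.0):
    print(f"d = {d_km:4.1f} km -> I = {float(hazy_image(0.1, 0.9, 0.5, d_km)):.4f}")
```

The printed intensities are 0.1000, 0.4148, 0.8343 and 0.9000: the pixel brightens from its true value toward A as the direct term decays and the airlight term grows.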

In physical-model-based methods, haze removal essentially amounts to estimating the parameters of the atmospheric scattering model. The model contains three unknown parameters, so the problem is ill-posed. Haze removal methods proposed in recent years use the image itself to construct constraints on the scene transmission or to establish depth assumptions. Early on, Tan [14] maximized the local contrast of the restored image according to the haze concentration changes in the image to obtain a haze-free image. However, images recovered by this method often show color over-saturation. Fattal [15], assuming that the transmission and the surface shading are locally uncorrelated, estimated the medium transmission and the scene albedo. However, this method needs sufficient color information and relies on statistics, so it is difficult to obtain credible restored images under thick haze. To address these problems, He et al. [16] proposed a haze removal method based on the dark channel prior. They derived the dark channel prior from a large collection of images; it can also be used to detect the most haze-opaque regions. From this prior, the transmission is obtained to restore the image. However, when the intensity of the scene target is similar to the atmospheric light, the dark channel prior fails.

In addition, Narasimhan et al. [19,20] pointed out that the above atmospheric model assumes single scattering, a homogeneous atmospheric medium and an extinction coefficient independent of wavelength. Therefore, the model does not apply to long-range imaging; in other words, the atmosphere scattering model is not appropriate for the haze removal of UAV reconnaissance images. The distance between the imaging device and the imaging target is very long, and the atmosphere around the UAV platform differs greatly from that around the object. The single constant extinction coefficient σ in Eq. (1) is therefore not enough to describe the UAV imaging process.

Therefore, this paper proposes a novel improved physical model for UAV reconnaissance images, which is detailed in the next section.

Fig. 1 Atmosphere scattering model.

3. Layered scattering model

In Ref. 21, based on McCartney's scattering model, the atmospheric turbidity is calculated for different atmospheric heights. As can be seen from Fig. 2, haze commonly lies around an altitude of 2 km, whereas the flight height of a UAV is commonly much greater. The atmospheric turbidity around the UAV therefore differs from that around the object, and the atmospheric medium between them is inhomogeneous, so a single extinction coefficient cannot describe the imaging process. Therefore, based on the characteristics of UAV imaging, we propose a two-layer scattering model in which the atmosphere inside each layer is assumed homogeneous and each layer has its own extinction coefficient. That is, the global atmosphere is inhomogeneous, and the extinction coefficients of the two layers differ.

As mentioned in Section 2, the original atmospheric scattering model is represented by Eq. (1).

The imaging model for images acquired by a UAV platform is shown in Fig. 3. The platform is a long distance from the imaging object, and there is a haze boundary in between; above and below the boundary the extinction coefficients are different. d is the imaging distance, d′ is the distance between the object and the haze boundary along the line of sight, and θ is the angle between the incident light and the horizontal plane. The height of the boundary can be calculated from d′ and θ. σ1 is the extinction coefficient below the haze boundary, and σ2 is the extinction coefficient above it. Both extinction coefficients are considered to depend on wavelength.

The imaging process can be described by an incident light attenuation model and an atmospheric light imaging model. The total irradiance received by the sensor is composed of two parts:

$$E(d,\lambda) = E_r(d,\lambda) + E_a(d,\lambda) \qquad (2)$$

where E_r(d,λ) is the irradiance of the object's incident light after the attenuation process, E_a(d,λ) the irradiance of the other incident light (airlight) during the imaging process, λ the wavelength of visible light and d the imaging distance.

Fig. 2 Meteorological range for various turbidity values.

Fig. 3 Image formation model for images acquired by a UAV platform.

The incident light is the light radiated or reflected by the object, as shown in Fig. 4. The object surface is located at x = 0, the imaging equipment at x = d, and the atmospheric medium lies between 0 and d.

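The beam is considered to pass through an infinitesimally thin sheet of thickness dx. The fractional change of the irradiance of the incident light E_r at location x can be written as

$$\frac{\mathrm{d}E_r(x,\lambda)}{E_r(x,\lambda)} = -\sigma(x,\lambda)\,\mathrm{d}x, \qquad \sigma(x,\lambda) = \begin{cases} \sigma_1(\lambda), & 0 \le x \le d' \\ \sigma_2(\lambda), & d' < x \le d \end{cases} \qquad (3)$$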

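After integrating both sides of Eq. (3) over 0–d′ and d′–d, we get

$$E_r(d,\lambda) = E_{r,0}(\lambda)\,e^{-\sigma_1(\lambda)\,d' - \sigma_2(\lambda)\,(d - d')} \qquad (4)$$

where E_{r,0}(λ) is the irradiance at the source x = 0.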
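Under the two-layer assumption, the single transmission e^(−σd) of Eq. (1) is replaced by the attenuation obtained by integrating Eq. (3) over 0–d′ and d′–d. The Python sketch below computes it, together with d′ from the boundary height. The relation d′ = h / sin θ assumes flat ground and a straight line of sight at elevation angle θ; the function names and numbers are hypothetical.

```python
import math

def in_haze_path_length(h, theta_deg):
    """d' = h / sin(theta): slant path from the object up to the haze boundary.

    Assumes flat ground and a straight line of sight at elevation angle theta.
    """
    return h / math.sin(math.radians(theta_deg))

def layered_transmission(sigma1, sigma2, d, d_prime):
    """E_r(d) / E_r,0 for two layers: exp(-sigma1 * d' - sigma2 * (d - d'))."""
    if not 0.0 <= d_prime <= d:
        raise ValueError("d' must lie between 0 and d")
    return math.exp(-sigma1 * d_prime - sigma2 * (d - d_prime))

# Hypothetical values: boundary 1.5 km above the object, theta = 30 deg, slant range 6 km,
# sigma1 = 0.8 per km inside the haze layer, sigma2 = 0.1 per km above it.
d_prime = in_haze_path_length(1.5, 30.0)          # about 3.0 km
t = layered_transmission(0.8, 0.1, 6.0, d_prime)  # about exp(-2.7), i.e. 0.067
```

When σ1 = σ2 the expression collapses to e^(−σd), so the single-layer model of Eq. (1) is the special case of a homogeneous atmosphere.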

Atmospheric light is formed by the scattering of atmospheric particles, which behave as a light source. In the atmospheric medium, the transmitted light includes sunlight, diffuse ground radiation and diffuse sky radiation. Atmospheric light is one of the most important causes of image degradation: its intensity increases with imaging distance, leading to more severe degradation of image quality.

As shown in Fig. 5, the environmental illumination along the imaging direction is assumed constant but unknown in intensity, spectrum and direction. The cone of solid angle dω subtended by the UAV platform and truncated by the object at distance d can be viewed as a light source. At distance x, the infinitesimal volume is dV = dω x² dx, and its intensity in the imaging direction is

Fig. 4 Incident light attenuation model.

Fig. 5 Airlight model.

where the proportionality constant k accounts for the nature of the illumination and the form of the scattering function. The element dV can be considered as a source with intensity dI_a(x,λ). After attenuation by the medium, the irradiance received by the imaging equipment is

Then the radiance of dV can be obtained from its irradiance as

Substituting Eq. (5), we obtain the following relationship:

After integrating both sides of Eq. (8) over 0–d′ and d′–d, we get

Therefore, Eq. (9) can be rewritten as

Then the received irradiance of atmospheric light can be expressed as

Finally, the incident light attenuation and the atmospheric light imaging are combined; together they make up the total irradiance received by the sensor:

Eq. (13) is our novel atmospheric degradation model. It can be reformulated in terms of the image as

4. Proposed algorithm

4.1. Algorithm flow

To handle features of UAV imaging such as the long imaging distance, this paper proposes a novel physics-based haze removal method that uses UAV metadata such as imaging distance and angle. The algorithm flowchart is shown in Fig. 6. The method consists of four parts: (1) the haze concentration of the image is graded before dehazing; (2) the extinction coefficients of the model are calculated from the relationship among extinction coefficient, wavelength and visibility; (3) the atmospheric light is estimated from pixel intensities; (4) the haze-free image is restored based on the layered scattering model.

4.2. Algorithm principle

4.2.1. Classification of haze concentration of image

Automatic haze detection is the prerequisite of intelligent haze removal. The large number of reconnaissance images received from the UAV platform include images with and without haze, at different haze concentrations, which makes manual classification laborious. In addition, we use the haze concentration grades to verify that our algorithm can remove haze from images of different haze concentrations. Therefore, judging the grade of haze concentration is a critical issue.

The haze concentration of each image is graded before dehazing by a Naïve Bayes Classifier. The concentration is graded on a scale of 1–5 in ascending order, where Level 1 signifies no haze and Level 5 the heaviest haze. Several manually graded images are used as the training set to generate a reasonable classifier. Image features such as intensity, contrast, edge contours, texture and hue are then extracted and fed to the Naïve Bayes Classifier as a feature vector to determine the haze concentration category.

The Naïve Bayes Classifier [22,23] has stable classification performance and a solid mathematical foundation, and requires few parameters, which makes it simple and feasible. Based on Bayes' theorem and the assumption that features are conditionally independent, the algorithm first learns the joint probability distribution of input and output from the given training set. Then, for a given input, it uses Bayes' theorem to output the class with the maximum posterior probability. The flowchart of Naïve Bayes classification is shown in Fig. 7.

The input feature vector of the proposed classification method contains the saturation component and the intensity component of the hue–saturation–intensity (HSI) color space. The HSI model builds on two crucial facts: (1) the intensity component is independent of color information; (2) the hue and saturation components are closely related to human color perception. These characteristics make the model suitable for detecting and analyzing color characteristics. Therefore, we select the saturation and intensity components of the HSI color space as features of UAV reconnaissance images.
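The saturation and intensity components used as features can be computed from RGB with the standard HSI conversion formulas I = (R + G + B)/3 and S = 1 − 3·min(R, G, B)/(R + G + B). The sketch below assumes RGB values scaled to [0, 1]. How the paper aggregates per-pixel values into scalar features is not stated, so the use of image means in the example is an assumption, and the helper name is hypothetical.

```python
import numpy as np

def hsi_saturation_intensity(rgb):
    """Per-pixel HSI saturation S and intensity I for an RGB array in [0, 1].

    I = (R + G + B) / 3
    S = 1 - 3 * min(R, G, B) / (R + G + B), defined as 0 for black pixels.
    """
    rgb = np.asarray(rgb, dtype=float)
    total = rgb.sum(axis=-1)
    intensity = total / 3.0
    min_channel = rgb.min(axis=-1)
    # Suppress the 0/0 warning for black pixels; np.where then sets S = 0 there.
    with np.errstate(divide="ignore", invalid="ignore"):
        saturation = np.where(total > 0, 1.0 - 3.0 * min_channel / total, 0.0)
    return saturation, intensity

# Example: a 1 x 3 image (gray, pure red, black) reduced to a two-element feature vector
img = np.array([[[0.5, 0.5, 0.5], [1.0, 0.0, 0.0], [0.0, 0.0, 0.0]]])
S, I = hsi_saturation_intensity(img)
features = np.array([S.mean(), I.mean()])
```

Gray pixels get S = 0 regardless of brightness, which is why saturation tends to fall as haze washes colors toward the gray atmospheric light.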

Fig. 6 Flowchart of algorithm.

Fig. 7 Flowchart of Naïve Bayes.

In this paper, the classification method is implemented in the following steps:

(1) Preparation stage. The characteristic attributes are defined and divided appropriately, and several unclassified samples are graded manually to form a training set. The feature vector consists of the following features: the saturation and intensity components of the HSI model, the blur degree, contrast and intensity of the hazy image, and the contrast of the dark channel image. We first choose 50 UAV reconnaissance images as the training set and grade them manually on the scale of 1–5.

(2) Classifier training stage. The classifier is trained by calculating the frequency of each category among the training samples and estimating the conditional probability of each feature given each category.

(3) Application stage. Unclassified samples are input into the classifier to be graded. The haze concentration of each image is classified as Level 1, Level 2, Level 3, Level 4 or Level 5.

The above classification method judges the haze concentration automatically, which avoids manual grading, saves time and increases processing efficiency.
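The training and application stages can be sketched as a small Naïve Bayes classifier in Python. Because the features are continuous, this sketch models each feature's class-conditional distribution as a Gaussian, which is an assumption: the paper does not state how the likelihoods are estimated. The class name, the two-feature vectors (mean saturation, mean intensity) and the toy training data are hypothetical.

```python
import numpy as np

class GaussianNaiveBayes:
    """Naive Bayes classifier with Gaussian class-conditional likelihoods (assumed)."""

    def fit(self, X, y, var_floor=1e-6):
        X = np.asarray(X, dtype=float)
        y = np.asarray(y)
        self.classes_ = np.unique(y)
        # Prior P(level): relative frequency of each level among training samples
        self.log_prior_ = np.log([np.mean(y == c) for c in self.classes_])
        # Per-level mean and variance of each feature, for P(feature | level)
        self.mean_ = np.array([X[y == c].mean(axis=0) for c in self.classes_])
        self.var_ = np.array([X[y == c].var(axis=0) for c in self.classes_]) + var_floor
        return self

    def predict(self, X):
        X = np.asarray(X, dtype=float)
        diff = X[:, None, :] - self.mean_[None, :, :]  # (samples, levels, features)
        # Sum of per-feature Gaussian log-likelihoods (conditional independence)
        log_lik = -0.5 * np.sum(np.log(2 * np.pi * self.var_)[None] + diff ** 2 / self.var_[None], axis=2)
        # Level with the maximum posterior probability
        return self.classes_[np.argmax(log_lik + self.log_prior_[None, :], axis=1)]

# Hypothetical training data: [mean saturation, mean intensity] per image
X_train = np.array([[0.50, 0.40], [0.55, 0.38],   # Level 1
                    [0.30, 0.60], [0.28, 0.62],   # Level 3
                    [0.10, 0.80], [0.12, 0.78]])  # Level 5
y_train = np.array([1, 1, 3, 3, 5, 5])
clf = GaussianNaiveBayes().fit(X_train, y_train)
pred = clf.predict([[0.52, 0.41], [0.11, 0.79]])
```

Working in log space avoids underflow when many features are multiplied, and the small variance floor keeps a feature that happens to be constant within one level from producing a division by zero.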

4.2.2. Parameter estimation

As mentioned in Ref. 24, the relationship among extinction coefficient, visibility and wavelength can be described by Eq. (15); the specific relationship is shown in Table 1.

where V is the visibility and α is the extinction coefficient.
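The exact form of Eq. (15) and Table 1 should be taken from Ref. 24. As a stand-in, the sketch below uses one widely cited empirical form: the Koschmieder relation α = 3.912/V (based on a 2% contrast threshold) scaled by (λ/550 nm)^(−q), with the exponent q taken piecewise from the Kim model. Both the formula and the q values are assumptions here; they illustrate how a single visibility estimate yields a different extinction coefficient for each color channel. The function name and the channel wavelengths are hypothetical.

```python
def extinction_coefficient(visibility_km, wavelength_nm):
    """Extinction coefficient (1/km) from visibility V (km) and wavelength (nm).

    Assumed form: alpha = (3.912 / V) * (wavelength / 550) ** (-q),
    with q from the Kim model (piecewise in V).
    """
    v = float(visibility_km)
    if v <= 0:
        raise ValueError("visibility must be positive")
    if v > 50:
        q = 1.6
    elif v > 6:
        q = 1.3
    elif v > 1:
        q = 0.16 * v + 0.34
    elif v > 0.5:
        q = v - 0.5
    else:
        q = 0.0  # dense fog: extinction treated as wavelength independent
    return (3.912 / v) * (wavelength_nm / 550.0) ** (-q)

# Hypothetical representative wavelengths for the R, G and B channels
alpha_rgb = [extinction_coefficient(10.0, lam) for lam in (650.0, 550.0, 450.0)]
```

With q > 0, shorter wavelengths are attenuated more strongly, so the blue channel receives the largest coefficient; in very thick haze (q = 0) all channels share one value.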

Common visibility observation methods include visual methods and measuring methods, but both have problems. Visual methods are highly subjective and lack scientific rigor, while measuring methods have small sampling areas and require costly equipment. In addition, some scholars have proposed measuring visibility from visual features of images, which agrees better with human perception and has achieved some success.

Therefore, the extinction coefficient can be calculated from the visibility, d′ can be calculated from the height of the haze boundary and UAV metadata, and the estimation of airlight is described in Section 4.2.3. The unknown parameters of the layered scattering model thus reduce to the visibility and the height of the haze boundary. To estimate them, we choose several hazy reconnaissance images and enumerate the two parameters over a reasonable range. For each parameter pair, we input the parameters into the layered model to obtain a restored image; we then manually choose the optimal restored image and record the two corresponding parameters. At the same time, the original image is converted from the RGB model to the HSI model. Through experiments, we find an approximately linear relation between the height of the haze boundary and the intensity component, and between the visibility and the saturation component: in general, intensity decreases as the height of the haze boundary increases, and saturation increases with visibility. The fitting equations are obtained by fitting analysis and are continually corrected as the number of experiments grows. They are then plugged into the layered scattering model to obtain the final restored image.

The equations obtained by linear fitting are

Table 1 Relationship of extinction coefficient and visibility.

where h is the height of the haze boundary, and I_intensity and S_saturation are the intensity and saturation components of the HSI model, respectively. θ is the angle between the incident light and the horizontal plane, which can be obtained from the UAV metadata. The distance d′ can be calculated from θ and the height h of the haze boundary, and the extinction coefficients can be calculated from V and Table 1. This method is simple, convenient and accurate.
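The fitting step can be illustrated with an ordinary least-squares line. The calibration pairs below are made up purely to show the mechanics and are not the paper's data; they follow the reported trend that the best haze-boundary height decreases as image intensity increases. The helper names are hypothetical, and the same `fit_linear` call would be applied to the visibility and saturation pairs.

```python
import numpy as np

def fit_linear(x, y):
    """Least-squares fit of y = a * x + b; returns the slope a and intercept b."""
    a, b = np.polyfit(np.asarray(x, dtype=float), np.asarray(y, dtype=float), deg=1)
    return float(a), float(b)

# Hypothetical calibration data from the enumeration step: mean HSI intensity of
# each hazy image and the haze-boundary height h (km) of its best restoration.
mean_intensity = [0.45, 0.55, 0.65, 0.75]
best_height_km = [2.0, 1.6, 1.2, 0.8]
a_h, b_h = fit_linear(mean_intensity, best_height_km)  # negative slope

def boundary_height(intensity):
    """Predict h for a new image from its mean intensity with the fitted line."""
    return a_h * intensity + b_h
```

Refitting as more manually chosen optimal restorations become available corresponds to the continual correction of the fitting equations described above.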

4.2.3. Estimation of atmospheric light

In Ref. 16, the concept of the dark channel is defined. The dark channel of an arbitrary image J is

$$J^{\mathrm{dark}}(x) = \min_{y \in \Omega(x)} \left( \min_{c \in \{r,g,b\}} J^{c}(y) \right)$$

where Ω(x) is a local patch centered at x and J^c is a color channel of J.

Similar to the method of Ref. 16, we pick the top 0.1% brightest pixels in the dark channel. Among these pixels, the pixel with the highest intensity in the original image I is selected as the atmospheric light A.
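The dark channel and the atmospheric-light selection described above can be sketched with NumPy alone. The 15 × 15 patch size follows the choice commonly used with Ref. 16 but is an assumption here, as are the edge padding at image borders and the use of the channel sum as "intensity" when picking the brightest candidate. The function names are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def dark_channel(img, patch=15):
    """Dark channel: min over color channels, then min over a patch x patch window."""
    if patch % 2 == 0:
        raise ValueError("patch size must be odd")
    min_rgb = img.min(axis=2)                        # min over c in {r, g, b}
    r = patch // 2
    padded = np.pad(min_rgb, r, mode="edge")         # replicate borders
    windows = sliding_window_view(padded, (patch, patch))
    return windows.min(axis=(2, 3))                  # min over y in Omega(x)

def atmospheric_light(img, patch=15, top_fraction=0.001):
    """Pick A among the top 0.1% brightest dark-channel pixels."""
    dark = dark_channel(img, patch)
    n = max(1, int(round(dark.size * top_fraction)))
    idx = np.argpartition(dark.ravel(), -n)[-n:]     # indices of the n largest values
    candidates = img.reshape(-1, 3)[idx]
    # Among the candidates, take the pixel with the highest intensity in I
    return candidates[np.argmax(candidates.sum(axis=1))]
```

Restricting the search to bright dark-channel pixels keeps small white objects, which are bright in I but not in the dark channel, from being mistaken for the atmospheric light.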

4.2.4. Restoration of the image

According to Eq. (14), the restored image can be obtained as follows:

where the imaging distance d is known from the UAV metadata.
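The restoration step can be sketched as follows, assuming that Eq. (14) keeps the form of Eq. (1) with the single transmission replaced by the two-layer one, I = J·t + A·(1 − t) with t = exp(−σ1·d′ − σ2·(d − d′)), and inverting it per color channel. The lower bound t_min = 0.1 (borrowed from common dark-channel practice), the scalar per-image d and the function name are also assumptions.

```python
import numpy as np

def restore(I, A, sigma1, sigma2, d, d_prime, t_min=0.1):
    """Recover J from I = J*t + A*(1 - t) with a two-layer transmission (assumed form).

    I: H x W x 3 hazy image in [0, 1]; A, sigma1, sigma2: per-channel arrays of length 3;
    d, d_prime: scalar slant range and in-haze path length (same unit as 1/sigma).
    """
    I = np.asarray(I, dtype=float)
    A = np.asarray(A, dtype=float)
    t = np.exp(-np.asarray(sigma1, dtype=float) * d_prime
               - np.asarray(sigma2, dtype=float) * (d - d_prime))  # shape (3,)
    t = np.maximum(t, t_min)   # keep noise from exploding where t is tiny
    J = (I - A) / t + A        # invert the degradation model per channel
    return np.clip(J, 0.0, 1.0)
```

Because σ1 and σ2 are per-channel arrays, the wavelength dependence of extinction enters the restoration directly, and a longer in-haze path d′ lowers t and strengthens the correction.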

5. Presentation of results

To test the validity of the haze removal algorithm, the restored images need to be evaluated. Existing image evaluation methods mainly include subjective and objective evaluation. Subjective evaluation is strongly influenced by personal factors, which makes the results unreliable. According to their need for reference information, objective methods can be divided into full-reference, reduced-reference and no-reference methods. Full-reference and reduced-reference methods need reference images, which are difficult to obtain for UAVs; therefore, we choose a no-reference method. In Ref. 25, the authors constructed a no-reference assessment system to evaluate the effect of haze removal on visual perception. Following this assessment system, we evaluate the haze removal effect by edge detection, the color naturalness index (CNI) and the color colorfulness index (CCI) to demonstrate the efficiency of the algorithm.

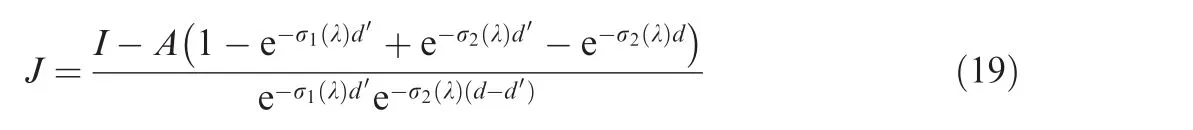

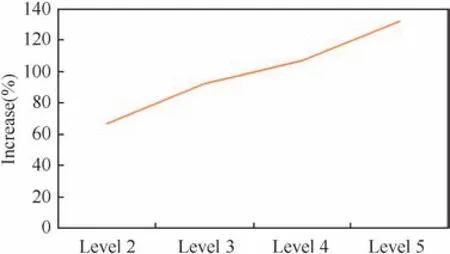

In addition, we compare our method with other typical haze removal methods and present the restoration results. The test data set consists of UAV reconnaissance images of 1392 × 1040 pixels, and the experimental platform is Microsoft Visual Studio 2010. Fig. 8 shows the experimental results for 7 images from the data set. Fig. 8(a) shows the original images, and Fig. 8(b)–(h) show the haze removal results of CLAHE [2], adaptive contrast enhancement [26], multi-scale Retinex, average filtering [27], Gaussian filtering, the dark channel prior algorithm [16] and our proposed method. Using the classification method described above, the haze concentrations of the 7 images are graded as follows: the 5th and 7th images belong to Level 2; the 1st and 2nd images belong to Level 3; the 6th image belongs to Level 4; the 3rd and 4th images belong to Level 5. As shown in Fig. 8, the images dehazed by the other methods show a certain degree of distortion, with heavier colors and over-saturation, and are prone to halo effects. Moreover, the other methods are based only on the original atmosphere scattering model or on image processing, and are not suited to haze removal of UAV reconnaissance images. Our method is better grounded in the imaging physics, obtains a better effect and more faithfully recreates the real scene of UAV reconnaissance images.

We first use standard deviation and information entropy to evaluate the haze removal effect quantitatively. The standard deviation reflects the spread of pixel values, while the information entropy reflects the average information content of the image. The evaluation results are shown in Table 2. Both metrics change greatly after dehazing, which demonstrates the haze removal effect.
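Both statistics are straightforward to compute. The sketch below assumes they are evaluated on an 8-bit grayscale version of each image, with entropy measured in bits from the 256-bin gray-level histogram; the paper does not specify how color is handled, and the function name is hypothetical.

```python
import numpy as np

def std_and_entropy(gray_u8):
    """Standard deviation and Shannon entropy (bits) of an 8-bit grayscale image."""
    g = np.asarray(gray_u8, dtype=np.uint8)
    hist = np.bincount(g.ravel(), minlength=256).astype(float)
    p = hist / hist.sum()          # gray-level probabilities
    p = p[p > 0]                   # 0 * log(0) is taken as 0
    entropy = -np.sum(p * np.log2(p))
    return float(g.std()), float(entropy)
```

A uniform gray image scores 0 on both measures, while an image split evenly between black and white has a standard deviation of 127.5 and an entropy of 1 bit; haze compresses the gray-level range, so both values typically rise after dehazing.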

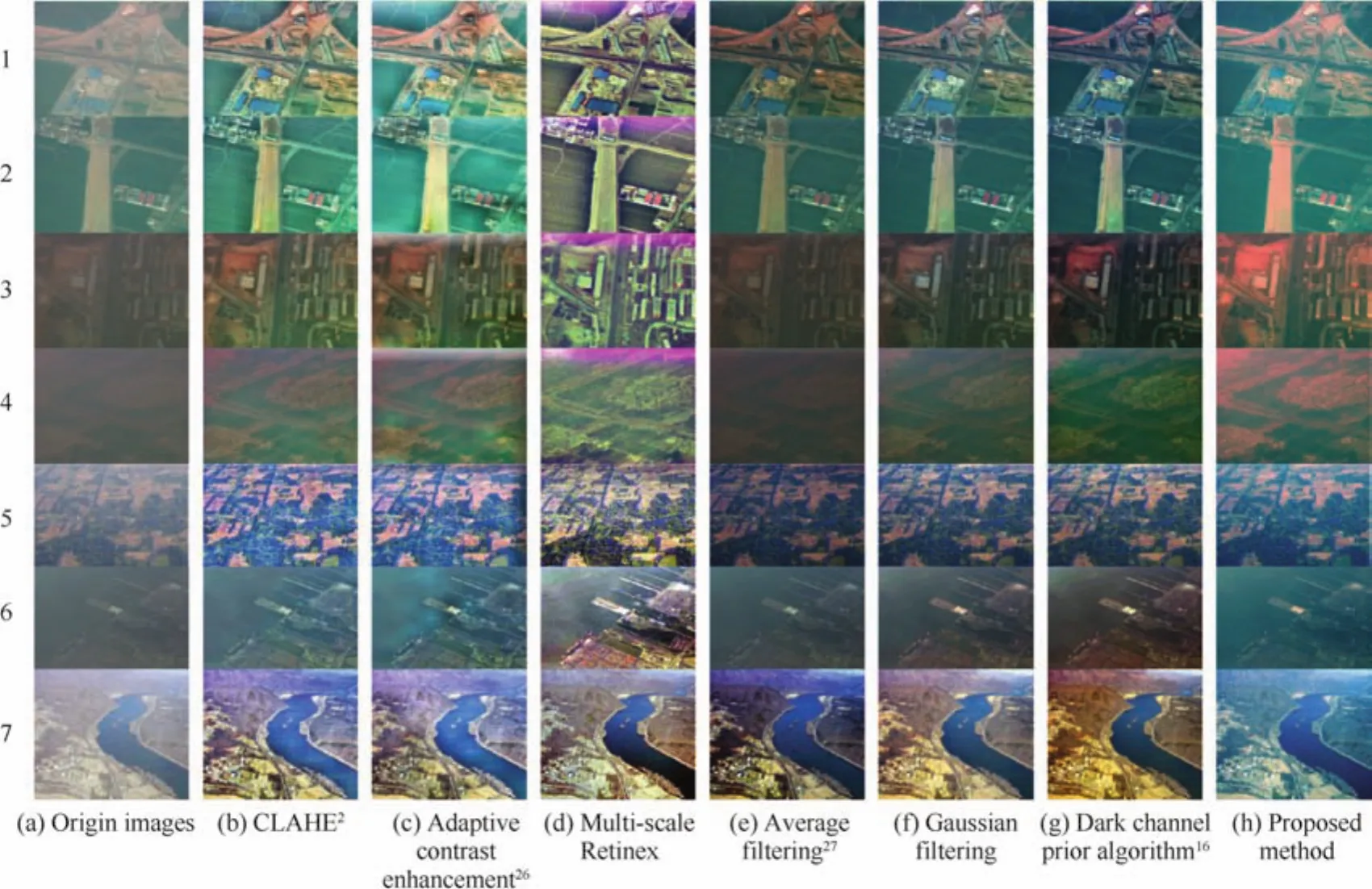

Compared with traditional edge detection operators, the Canny operator has a better detection effect, so it is adopted for edge detection on the images in Fig. 8. The detection results are shown in Fig. 9. More valid edges are detected in the images dehazed by our algorithm than in those of the other methods, except for the multi-scale Retinex method. This is because multi-scale Retinex over-enhances noise, which increases the number of noise pixels. The edge detection results show that our method can improve the clarity and resolution of the image.

CNI reflects whether the colors of an image are real and natural. As defined in Refs. 28–30, CNI ranges from 0 to 1, and the closer the value is to 1, the more natural the image. CNI suits the characteristics of UAV reconnaissance images. The CNI value N_image is calculated as follows:

Table 2 Standard deviation and information entropy before and after dehazing.

Fig. 8 Dehazing results of different methods.

(1) Convert the image from RGB color space to CIELUV space.

(2) Calculate the three components: hue, saturation and luminance.

(3) Threshold the saturation and luminance components: pixels with saturation greater than 0.1 and luminance between 20 and 80 are retained.

(4) According to the hue value, classify the image pixels into three categories: “skin” pixels have hue 25–70, “grass” pixels 95–135, and “sky” pixels 180–260.

(5) For “skin”, “grass” and “sky” pixels, calculate the average saturation of each type and record it as S_average^skin, S_average^grass and S_average^sky. At the same time, count the number of pixels of each type and record the counts as n_skin, n_grass and n_sky.

(6) Calculate the CNI value of each of the three pixel types:

(7) Calculate the final CNI value:
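Steps (3)–(7) can be sketched as follows, starting from CIELUV luminance, hue (in degrees) and saturation arrays; the color-space conversion of steps (1)–(2) is assumed to be done beforehand. The per-class Gaussian constants (0.76/0.52 for skin, 0.81/0.53 for grass, 0.43/0.22 for sky) and the pixel-count-weighted average in step (7) follow the CNI definition as it is commonly attributed to Huang et al.; they are an assumption here and should be checked against Refs. 28–30. Returning 0 when no pixel survives the thresholds is likewise a hypothetical convention.

```python
import numpy as np

# Assumed (mean, std) of the average saturation for each pixel class
CNI_PARAMS = {"skin": (0.76, 0.52), "grass": (0.81, 0.53), "sky": (0.43, 0.22)}
HUE_RANGES = {"skin": (25, 70), "grass": (95, 135), "sky": (180, 260)}

def color_naturalness_index(L, H, S):
    """CNI from CIELUV luminance L (0-100), hue H (degrees) and saturation S."""
    L, H, S = (np.asarray(a, dtype=float).ravel() for a in (L, H, S))
    keep = (S > 0.1) & (L >= 20) & (L <= 80)            # step (3)
    num, den = 0.0, 0
    for name, (lo, hi) in HUE_RANGES.items():           # step (4)
        mask = keep & (H >= lo) & (H <= hi)
        n = int(mask.sum())                             # n_skin, n_grass, n_sky
        if n == 0:
            continue
        s_avg = S[mask].mean()                          # step (5)
        mu, sd = CNI_PARAMS[name]
        n_class = np.exp(-0.5 * ((s_avg - mu) / sd) ** 2)  # step (6)
        num += n * n_class
        den += n
    return float(num / den) if den else 0.0             # step (7): count-weighted mean
```

A class whose average saturation matches its assumed natural mean scores 1, and the score decays smoothly as the saturation drifts away, so over-saturated restorations are penalized as well as washed-out ones.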

CCI reflects how vivid the colors of an image are; it is defined in Refs. 31,32. The CCI value C_k can be calculated by

where S_k is the average saturation and σ_k its standard deviation. We use this index to evaluate the dehazed UAV reconnaissance images. Because CCI depends on image content, it is usually used to compare color richness for the same scene and objects processed by different haze removal methods.
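With S_k and σ_k defined as above, a minimal sketch assuming the commonly used additive form C_k = S_k + σ_k, computed over the saturation values of one image (the function name is hypothetical):

```python
import numpy as np

def color_colorfulness_index(saturation):
    """CCI of one image: mean saturation plus its standard deviation (assumed form)."""
    s = np.asarray(saturation, dtype=float).ravel()
    return float(s.mean() + s.std())
```

Both a higher average saturation and a wider spread of saturation raise the score, which is why the index is compared only across versions of the same scene.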

As shown in Table 3, the values of both CNI and CCI improve significantly after dehazing.

To evaluate the haze removal effect comprehensively, the four criteria are normalized and summed to obtain a comprehensive evaluation index, using the range transformation:

where n is the number of decision indexes and m the number of tested methods. The decision matrix is $X = [x_{ij}]_{m \times n}$, and the transformed (normalized) matrix is $Y = [y_{ij}]_{m \times n}$.
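The range transformation and summation can be sketched as below, assuming the usual min–max form for indexes where larger is better, y_ij = (x_ij − min_i x_ij)/(max_i x_ij − min_i x_ij), with the comprehensive index of method i taken as Σ_j y_ij. The exact normalization behind Table 4 should be checked against the paper; for instance, under this form a row that is worst on every index maps to 0. The function name is hypothetical.

```python
import numpy as np

def comprehensive_index(X):
    """Range-transform each column of the m x n decision matrix X to [0, 1]
    (benefit-type indexes: larger is better) and sum across the n indexes."""
    X = np.asarray(X, dtype=float)
    col_min = X.min(axis=0)
    col_range = X.max(axis=0) - col_min
    col_range[col_range == 0] = 1.0   # a constant column maps to all zeros
    Y = (X - col_min) / col_range     # y_ij in [0, 1]
    return Y.sum(axis=1), Y

# Example: 3 methods (rows) scored on 2 indexes (columns)
scores, Y = comprehensive_index([[1, 10], [3, 30], [2, 20]])
```

Normalizing each column first keeps indexes with large numeric ranges, such as standard deviation, from dominating bounded ones such as CNI.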

Table 3 CNI and CCI before and after dehazing.

Fig. 9 Edge detection with different methods.

The final evaluation results are shown in Table 4. UAV reconnaissance images from the data set are tested, and their haze concentrations are graded by the classification method described above. The curve in Fig. 10 shows the percentage increase of the comprehensive evaluation index after dehazing for 4 levels: the heavier the haze in the original image, the larger the improvement from haze removal. The histogram in Fig. 11 compares the comprehensive evaluation index of different methods: the darker blue bars are the average comprehensive evaluation index of each method, and the lighter blue bars are the percentage increase of the comprehensive evaluation index for each method. Compared with the original image, the comprehensive index of the image restored by our method increases by 282.84%, higher than the 221.97% increase achieved by the dark channel prior method. Our method is clearly superior to the other methods.

Fig. 10 Percentage increase of comprehensive evaluation index after dehazing.

6. Conclusions

Based on an analysis of the atmospheric scattering model, a novel physics-based layered scattering model is proposed in this paper. Experimental verification leads to the following conclusions:

(1) Based on the imaging characteristics of UAVs, the original atmospheric scattering model is improved, and the novel layered scattering model is well suited to UAV reconnaissance images.

(2) The method achieves good haze removal performance. Compared with the original image, the comprehensive index of the image restored by our method increases by 282.84%, clearly superior to the other methods.

Table 4 Comprehensive evaluation index by different methods.

Fig. 11 Comparison of comprehensive evaluation index.

Analysis and comparison of the experimental data show that the proposed method greatly increases image clarity and has application potential; in the future, it can be extended to dehazing image sequences.

Acknowledgement

This work was supported by the National Natural Science Foundation of China (No. 61450008).

1. Stark AJ, Fitzgerald WJ. An alternative algorithm for adaptive histogram equalization. Graphical Models Image Process 1996;58(2):180–5.

2. Reza AM. Realization of the contrast limited adaptive histogram equalization (CLAHE) for real-time image enhancement. J VLSI Signal Proc 2004;38(1):35–44.

3. Zhai YS, Liu XM, Tu YY, Chen YN. An improved fog-degraded image clearness algorithm. J Dalian Maritime Univ 2007;33(3):55–8.

4. Seow MJ, Asari VK. Ratio rule and homomorphic filter for enhancement of digital colour image. Neurocomputing 2006;69(7–9):954–8.

5. Fabrizio R. An image enhancement technique combining sharpening and noise reduction. IEEE Trans Instrum Meas 2002;51(4):824–8.

6.Zhou J,Zhou F.Single image dehazing motivated by Retinex theory[C]//2013 2nd international symposium on instrumentation and measurement,sensor network and automation(IMSNA);2003 Dec 23–24;Toronto,ON,Canada.Piscataway,NJ:IEEE Press;2013.p.243–7.

7.Ansia S,Aswathy AL.Single image haze removal using white balancing and saliency map.Proc Comput Sci2015;46:12–9.

8.Sun YB,Xiao L,Wei ZH.Method of defogging image of outdoor scenes based on pde.J Syst Simul2007;19(16):3739–44.

9.Zhai YS,Liu XM,Tu YY.Contrast enhancement algorithm for fog-degraded image based on fuzzy logic.Comput Appl2008;28(3):662–4.

10. Oakley JP. Improving image quality in poor visibility conditions using a physical model for contrast degradation. IEEE Trans Image Process 1998;7(2):167–79.

11. Narasimhan SG, Nayar SK. Chromatic framework for vision in bad weather. In: IEEE conference on computer vision & pattern recognition; 2000 Jun 13–15; Hilton Head Island, SC, USA. Piscataway, NJ: IEEE Press; 2000. p. 598–605.

12. Narasimhan SG, Nayar SK. Vision and the atmosphere. Int J Comput Vision 2002;48(3):233–54.

13. Kopf J, Neubert B, Chen B. Deep photo: model-based photograph enhancement and viewing. ACM Trans Graphics 2008;27(5):32–9.

14. Tan RT. Visibility in bad weather from a single image. In: 2008 IEEE conference on computer vision and pattern recognition; 2008 Jun 23–28; Anchorage, AK, USA. Piscataway, NJ: IEEE Press; 2008. p. 1–8.

15. Fattal R. Single image dehazing. ACM Trans Graphics 2008;27(3):1–9.

16. He K, Sun J, Tang X. Single image haze removal using dark channel prior. In: 2009 IEEE conference on computer vision and pattern recognition; 2009 Jun 20–25; Miami, FL, USA. Piscataway, NJ: IEEE Press; 2009. p. 1956–63.

17. Liu Q, Chen MY, Zhou DH. Single image haze removal via depth-based contrast stretching transform. Sci China 2014;58(1):1–17.

18. McCartney EJ. Optics of the atmosphere: scattering by molecules and particles. IEEE J Quantum Electron 1976;24(7):76–7.

19. Narasimhan SG, Nayar SK. Removing weather effects from monochrome images. In: IEEE computer society conference on computer vision and pattern recognition; 2001. Piscataway, NJ: IEEE Press; 2001. p. II-186–II-193.

20. Narasimhan SG, Nayar SK. Contrast restoration of weather degraded images. IEEE Trans Pattern Anal Mach Intell 2003;25(6):713–24.

21. Preetham AJ, Shirley P, Smits B. A practical analytic model for daylight. In: Proceedings of the 26th annual conference on computer graphics and interactive techniques; Los Angeles, USA. New York: ACM Press/Addison-Wesley Publishing Co.; 1999. p. 91–100.

22. Li H. Statistical learning methods. Beijing: Tsinghua University Press; 2012. p. 47–53.

23. PhoenixZq. Naïve Bayes classification [updated 2014 Feb 07; cited 2015 July 14]. Available from: http://www.cnblogs.com/phoenixzq/p/3539619.html.

24. Mandich D. A comparison between free space optics and 70 GHz short haul links behavior based on propagation model and measured data. In: 2004 7th European conference on wireless technology; 2004 Oct 11–12; Amsterdam, Netherlands. Piscataway, NJ: IEEE Press; 2004. p. 85–8.

25. Fan G. Objective assessment method for the clearness effect of image defogging algorithm. Acta Automatica Sinica 2012;38(9):1410–9.

26. Image shop. Image enhancement of local adaptive auto tone/contrast [updated 2013 Oct 30; cited 2015 July 14]. Available from: http://www.cnblogs.com/Imageshop/p/3395968.html.

27. Liu Q, Chen M, Zhou D. Fast haze removal from a single image. In: 2013 25th Chinese control and decision conference (CCDC); 2013 May 25–27; Guiyang, China. Piscataway, NJ: IEEE Press; 2013. p. 3780–5.

28. Hanumantharaju MC, Ravishankar M, Rameshbabu DR. Natural color image enhancement based on modified multiscale Retinex algorithm and performance evaluation using wavelet energy. Adv Intell Syst Comput 2014;235:83–92.

29. Yendrikhovski ASN, Blommaert FJJ, Ridder HD. Perceptually optimal color reproduction. Proc SPIE Int Soc Opt Eng 1998;3299:274–81.

30. Huang K, Wu Z, Wang Q. Image enhancement based on the statistics of visual representation. Image Vis Comput 2005;23(1):51–7.

31. Li Q, Zheng NN, Zhang XT. A simple calibration approach for camera on-based vehicle. Robot 2003;25:626–30.

32. Jourlin M, Pinoli JC. Logarithmic image processing: The mathematical and physical framework for the representation and processing of transmitted images. Advances in Imaging & Electron Physics 2001;115(1):129–96.

Received 14 July 2015; revised 23 October 2015; accepted 15 December 2015

Available online 23 February 2016

© 2016 Chinese Society of Aeronautics and Astronautics. Published by Elsevier Ltd. This is an open access article under the CC BY-NC-ND license (http://creativecommons.org/licenses/by-nc-nd/4.0/).

*Corresponding author. Tel.: +86 10 82339906.

E-mail address: lihongguang@buaa.edu.cn (H. Li).

Peer review under responsibility of Editorial Committee of CJA.

Huang Yuqing received the B.S. degree in Communication Engineering from Beijing University of Posts and Telecommunications in 2014. She is now pursuing the M.S. degree at Beihang University. Her research interests include computer vision and machine learning.

Ding Wenrui received the B.S. degree in computer science from Beihang University in 1994. Since 1994 she has been a faculty member at Beihang University, where she is now a professor and deputy general designer of UAV systems. Her research interests include data link, computer vision, and artificial intelligence.

CHINESE JOURNAL OF AERONAUTICS, 2016, Issue 2

