Probability Method of Denoising Diffusion Based on Rough Sets

SHE Zhiyong1, GUO Xiaoxin2, FENG Yueping2, ZHANG Dongpo1

(1. School of Information Network Security, Xinjiang University of Political Science and Law, Tumxuk 844000, Xinjiang Uygur Autonomous Region, China;

2. College of Computer Science and Technology, Jilin University, Changchun 130012, China)

Abstract: Based on the non-Markov chain denoising diffusion implicit model (DDIM), we propose a denoising diffusion probability method based on rough sets. Rough set theory is used to partition the original sampling sequence into equivalence classes. The upper and lower approximation sets and the roughness of each subsequence are then constructed on the original sequence, and an effective subsequence for the non-Markov chain DDIM is obtained when the roughness is minimal. Comparative experiments against the denoising diffusion probability model (DDPM) and DDIM show that the sequence obtained by the proposed method is an effective subsequence, and that sampling on this sequence is more efficient than DDPM sampling.

Keywords: rough set; denoising diffusion probability model; non-Markov chain denoising diffusion probability model; Markov chain

CLC number: TP391.4 Document code: A Article ID: 1671-5489(2024)02-0339-08

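Diffusion models[1] are widely used in computer vision, natural language processing, temporal data modeling, and other fields. From the high level of detail to the diversity of the generated examples, they have shown strong generative ability. Diffusion models have been applied to a wide range of generative modeling tasks, such as image generation[2], image super-resolution[3-4], image inpainting[5], image editing[6], and image-to-image translation[7]. In addition, the latent representations learned by diffusion models have been used in discriminative tasks such as image segmentation[8-9], classification[10], and anomaly detection[11], which confirms the broad applicability of diffusion models.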

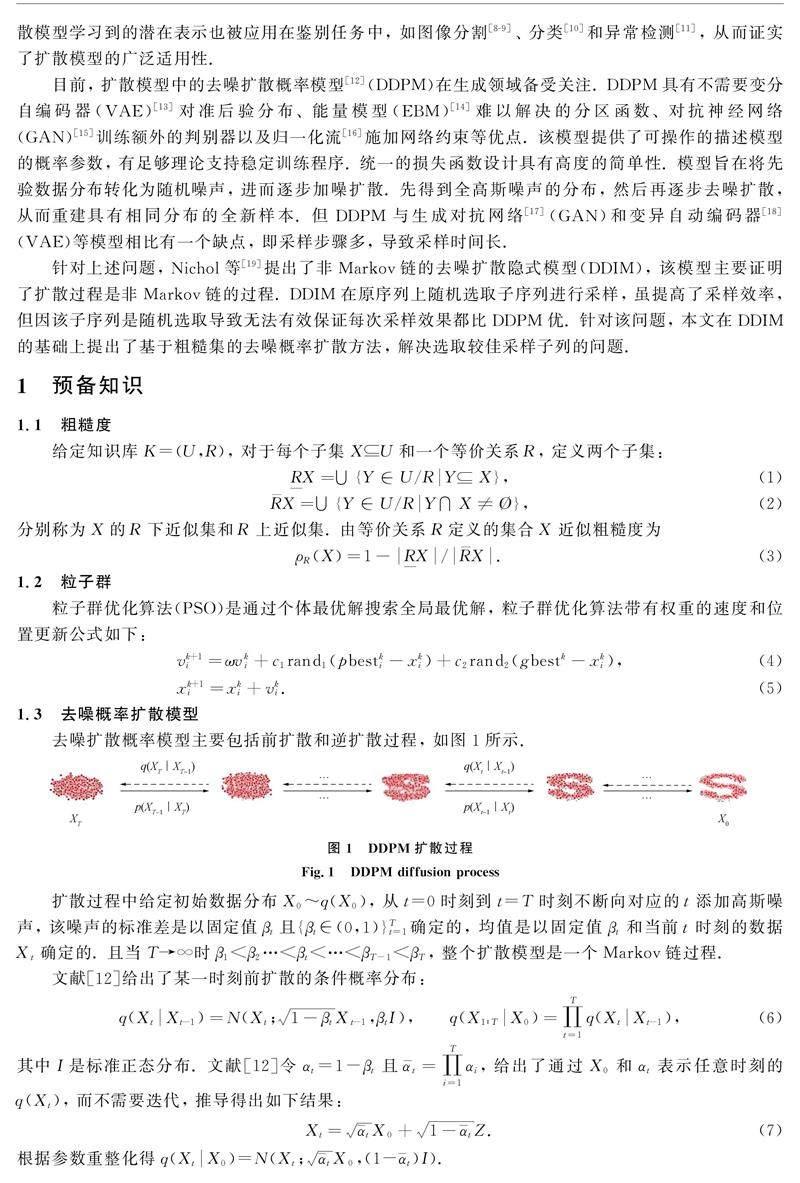

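Among diffusion models, the denoising diffusion probability model (DDPM)[12] has attracted particular attention in the generative field. DDPM does not need to align posterior distributions as variational autoencoders (VAE)[13] do, to handle the intractable partition function of energy-based models (EBM)[14], to train an additional discriminator as generative adversarial networks (GAN)[15] do, or to impose network constraints as normalizing flows[16] do. The model provides tractable probabilistic parameters for describing the model, has sufficient theoretical support for a stable training procedure, and uses a unified loss function whose design is very simple. The model diffuses the prior data distribution by adding noise step by step until it becomes a fully Gaussian noise distribution, and then denoises step by step to reconstruct new samples with the same distribution. Compared with models such as GAN[17] and VAE[18], however, DDPM has one drawback: it requires many sampling steps, which leads to long sampling times.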

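To address this problem, Nichol et al.[19] proposed the non-Markov chain denoising diffusion implicit model (DDIM), which mainly shows that the diffusion process can be a non-Markov chain process. DDIM samples on a subsequence selected at random from the original sequence. This improves sampling efficiency, but because the subsequence is chosen at random, there is no guarantee that every sampling result is better than that of DDPM. To address this issue, this paper builds on DDIM and proposes a denoising diffusion probability method based on rough sets, which solves the problem of selecting a better sampling subsequence.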

1 Preliminaries

1.1 Roughness
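As a reminder of the quantities this subsection is named for, the sketch below computes the lower approximation, the upper approximation, and the roughness of a subset under an equivalence partition, using the standard Pawlak definitions from rough set theory. The function names, the toy universe of 12 timesteps, and the partition into blocks of three are illustrative assumptions, not the construction used in this paper.

```python
from fractions import Fraction


def approximations(partition, target):
    """Return (lower, upper) approximations of `target` under `partition`."""
    target = set(target)
    lower, upper = set(), set()
    for block in partition:
        block = set(block)
        if block <= target:   # block lies entirely inside the target
            lower |= block
        if block & target:    # block overlaps the target
            upper |= block
    return lower, upper


def roughness(partition, target):
    """Pawlak roughness: 1 - |lower| / |upper| (taken as 0 for an empty target)."""
    lower, upper = approximations(partition, target)
    if not upper:
        return Fraction(0)
    return 1 - Fraction(len(lower), len(upper))


# Hypothetical toy data: timesteps 1..12 split into equivalence classes of size 3.
partition = [set(range(i, i + 3)) for i in range(1, 13, 3)]
subsequence = {2, 3, 4, 5, 6, 7}

lower, upper = approximations(partition, subsequence)
rho = roughness(partition, subsequence)
print(sorted(lower), sorted(upper), rho)
```

A roughness of 0 means the subset is exactly a union of equivalence classes, so a lower value indicates a subset that the partition describes more precisely.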

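DDPM, DDIM, and the proposed method are each trained with a U-net network to the same loss value. Figure 5 compares the sampling results of DDPM on the sequence {t100,t99,t98,…,t2,t1} with those of DDIM and the proposed method on the sequence {t75,t74,t73,…,t35,t34,t33}. The FID (Fréchet inception distance) values of DDPM, DDIM, and the proposed method are listed in Table 3.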
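To make the two sequences concrete, the following minimal sketch runs the deterministic DDIM update (eta = 0) along a decreasing list of timesteps. The linear beta schedule, the function names, and the `eps_model` callable that stands in for the trained U-net are assumptions made for illustration; the paper does not give these details.

```python
import math


def make_alpha_bar(T=100, beta_start=1e-4, beta_end=0.02):
    """Cumulative products of (1 - beta_t); index 0 is clean data (alpha_bar = 1)."""
    alpha_bar = [1.0]
    for i in range(T):
        beta = beta_start + (beta_end - beta_start) * i / (T - 1)  # assumed linear schedule
        alpha_bar.append(alpha_bar[-1] * (1.0 - beta))
    return alpha_bar


def ddim_sample(x, tau, alpha_bar, eps_model):
    """Deterministic DDIM update (eta = 0) along a decreasing timestep list tau."""
    steps = list(tau) + [0]  # finish at t = 0, the clean sample
    for t, t_prev in zip(steps[:-1], steps[1:]):
        eps = eps_model(x, t)  # one network evaluation per step
        a_t, a_prev = alpha_bar[t], alpha_bar[t_prev]
        # Predict the clean sample, then move it to the noise level of t_prev.
        x0_pred = [(xi - math.sqrt(1 - a_t) * ei) / math.sqrt(a_t)
                   for xi, ei in zip(x, eps)]
        x = [math.sqrt(a_prev) * x0i + math.sqrt(1 - a_prev) * ei
             for x0i, ei in zip(x0_pred, eps)]
    return x


full_sequence = list(range(100, 0, -1))  # t100, ..., t1: 100 network evaluations
sub_sequence = list(range(75, 32, -1))   # t75, ..., t33: 43 network evaluations
```

The cost of sampling is dominated by the number of calls to `eps_model`, which is why sampling on the 43-step subsequence is expected to be cheaper than on the 100-step original sequence.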

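Figure 5 shows three face images sampled by DDPM on the original sequence and three face images sampled by each of DDIM and the proposed algorithm on the obtained subsequence. A visual comparison of the images in Figure 5 shows that DDIM and the proposed algorithm on the obtained subsequence sample about as well as DDPM on the original sequence. This indicates that the subsequence obtained by the proposed algorithm is an effective subsequence with similar sampling quality. Table 3 uses FID to quantify the quality of the images generated by the three algorithms; the smaller the FID, the better the image quality. As Table 3 shows, the FID values of all three algorithms are relatively small, indicating that all three generate images of good quality. The FID of the images generated by the proposed algorithm is close to those of DDIM and DDPM, which further indicates that the obtained subsequence is an effective subsequence with similar sampling quality.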
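For reference, FID is commonly defined as the Fréchet distance between two Gaussians fitted to Inception features of real and generated images. The symbols below ($\mu_r, \Sigma_r$ for the feature mean and covariance of real images, $\mu_g, \Sigma_g$ for generated images) are notation introduced here for illustration; the paper does not state which implementation or feature layer it used.

```latex
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2
  + \operatorname{Tr}\!\left( \Sigma_r + \Sigma_g - 2 \left( \Sigma_r \Sigma_g \right)^{1/2} \right)
```

Identical feature statistics give an FID of 0, which is why a smaller value indicates generated images that are closer to the real data.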

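To verify that the subsequence obtained by the proposed algorithm is a better sampling subsequence, Figure 6 compares the sampling results of DDIM on three randomly selected subsequences and on the subsequence obtained in this paper with the sampling results of the proposed algorithm. The FID values of the sampling results in Figure 6 are listed in Table 4.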

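To demonstrate the advantage of sampling on the subsequence obtained by the proposed algorithm, the three random subsequences in Figure 6 all have the same length. Comparing DDIM on the three random subsequences with DDIM and the proposed algorithm on the obtained subsequence shows that sampling on the obtained subsequence is clearly better than sampling on the three random subsequences. This indicates that the obtained sampling subsequence is not only effective but also gives better sampling quality. In Table 4, the FID of DDIM and the proposed method on the obtained subsequence is clearly lower than the FID of DDIM on the random subsequences, which further indicates that the subsequence obtained by the proposed method is a better sampling subsequence.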
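The random baselines in this comparison can be sketched as follows: draw timesteps without replacement from the original sequence and sort them in decreasing order. The helper name, the seeds, and the choice to match the length of the obtained subsequence (43 steps) are assumptions for illustration; the paper states only that the three random subsequences have equal length.

```python
import random


def random_subsequence(T, length, seed=None):
    """Pick `length` distinct timesteps from 1..T and order them for sampling."""
    rng = random.Random(seed)
    steps = rng.sample(range(1, T + 1), length)
    return sorted(steps, reverse=True)  # sampling runs from high noise to low noise


obtained = list(range(75, 32, -1))  # t75, ..., t33
baselines = [random_subsequence(100, len(obtained), seed=s) for s in range(3)]

for b in baselines:
    print(len(b), b[:5], "...")
```

Fixing the length keeps the number of network evaluations equal across subsequences, so differences in FID reflect the choice of timesteps and not the sampling budget.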

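As Figure 5 and Table 3 show, DDPM on the original sequence samples about as well as the proposed method on the obtained subsequence, but in theory DDPM is less efficient, because DDPM samples on the original sequence while the proposed method samples on a subsequence. The sampling times of DDPM were 103, 104, and 102 s, and those of the proposed method were 21, 22, and 20 s. These results show that sampling with the proposed method on the obtained subsequence is more than 4 times as efficient as sampling with DDPM on the original sequence (the mean times, 103 s and 21 s, give a ratio of about 4.9), so the proposed method is markedly more efficient than DDPM.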
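The "more than 4 times" figure can be checked directly from the reported timings. The short calculation below uses only the six numbers given above; the variable names are illustrative, and the bounds are computed without assuming which DDPM run pairs with which run of the proposed method.

```python
from statistics import mean

ddpm_times = [103, 104, 102]  # seconds, DDPM on the original sequence
ours_times = [21, 22, 20]     # seconds, proposed method on the obtained subsequence

speedup_of_means = mean(ddpm_times) / mean(ours_times)  # 103 / 21, about 4.90
lowest_ratio = min(ddpm_times) / max(ours_times)        # 102 / 22, about 4.64
highest_ratio = max(ddpm_times) / min(ours_times)       # 104 / 20 = 5.20

print(f"{speedup_of_means:.2f} {lowest_ratio:.2f} {highest_ratio:.2f}")
```

Even the least favorable pairing of runs gives a ratio above 4, which is consistent with the claim in the text.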

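In summary, building on DDIM, this paper combines rough sets with the particle swarm optimization algorithm to obtain a better sampling subsequence. The experiments show three things. First, images generated on the sampling subsequence obtained by the proposed method are similar in quality to DDPM samples, so the obtained subsequence is an effective subsequence. Second, the sampling results of DDIM and the proposed method on the obtained subsequence are clearly better than those of DDIM on several random subsequences, so the obtained effective subsequence is a better subsequence; this also shows that DDIM sampling on random subsequences is unstable. Third, comparing the sampling time of the proposed method on the obtained subsequence with that of DDPM on the original sequence shows that the proposed method samples markedly more efficiently than DDPM.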

References

[1]SOHL-DICKSTEIN J, WEISS E, MAHESWARANATHAN N, et al. Deep Unsupervised Learning Using Nonequilibrium Thermodynamics [C]//International Conference on Machine Learning. [S.l.]: PMLR, 2015: 2256-2265.

[2]LU C, ZHOU Y H, BAO F, et al. DPM-Solver: A Fast ODE Solver for Diffusion Probabilistic Model Sampling in Around 10 Steps [J]. Advances in Neural Information Processing Systems, 2022, 35: 5775-5787.

[3]KAWAR B, ELAD M, ERMON S, et al. Denoising Diffusion Restoration Models [J]. Advances in Neural Information Processing Systems, 2022, 35: 23593-23606.

[4]SAHARIA C, HO J, CHAN W, et al. Image Super-resolution via Iterative Refinement [J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2022, 45(4): 4713-4726.

[5]LUGMAYR A, DANELLJAN M, ROMERO A, et al. RePaint: Inpainting Using Denoising Diffusion Probabilistic Models [C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Piscataway, NJ: IEEE, 2022: 11461-11471.

[6]AVRAHAMI O, LISCHINSKI D, FRIED O. Blended Diffusion for Text-Driven Editing of Natural Images [C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Piscataway, NJ: IEEE, 2022: 18208-18218.

[7]SAHARIA C, CHAN W, CHANG H W, et al. Palette: Image-to-Image Diffusion Models [C]//ACM SIGGRAPH 2022 Conference Proceedings. New York: ACM, 2022: 1-10.

[8]ROMBACH R, BLATTMANN A, LORENZ D, et al. High-Resolution Image Synthesis with Latent Diffusion Models [C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Piscataway, NJ: IEEE, 2022: 10684-10695.

[9]LI M Y, LIN J, MENG C L, et al. Efficient Spatially Sparse Inference for Conditional GANs and Diffusion Models [J]. Advances in Neural Information Processing Systems, 2022, 35: 28858-28873.

[10]MIKUNI V, NACHMAN B. Score-Based Generative Models for Calorimeter Shower Simulation [EB/OL]. (2022-06-17)[2023-02-10]. https://arxiv.org/abs/2206.11898.

[11]PINAYA W H L, GRAHAM M S, GRAY R, et al. Fast Unsupervised Brain Anomaly Detection and Segmentation with Diffusion Models [C]//International Conference on Medical Image Computing and Computer-Assisted Intervention. Berlin: Springer, 2022: 705-714.

[12]HO J, JAIN A, ABBEEL P. Denoising Diffusion Probabilistic Models [J]. Advances in Neural Information Processing Systems, 2020, 33: 6840-6851.

[13]GUU K, HASHIMOTO T B, OREN Y, et al. Generating Sentences by Editing Prototypes [J]. Transactions of the Association for Computational Linguistics, 2018, 6: 437-450.

[14]YU L T, SONG Y, SONG J M, et al. Training Deep Energy-Based Models with f-Divergence Minimization [C]//International Conference on Machine Learning. [S.l.]: PMLR, 2020: 10957-10967.

[15]CRESWELL A, WHITE T, DUMOULIN V, et al. Generative Adversarial Networks: An Overview [J]. IEEE Signal Processing Magazine, 2018, 35(1): 53-65.

[16]GARCÍA G G, CASAS P, FERNÁNDEZ A, et al. On the Usage of Generative Models for Network Anomaly Detection in Multivariate Time-Series [J]. ACM SIGMETRICS Performance Evaluation Review, 2021, 48(4): 49-52.

[17]PEI H Z, REN K, YANG Y Q, et al. Towards Generating Real-World Time Series Data [C]//2021 IEEE International Conference on Data Mining (ICDM). Piscataway, NJ: IEEE, 2021: 469-478.

[18]LIANG D W, KRISHNAN R G, HOFFMAN M D, et al. Variational Autoencoders for Collaborative Filtering [C]//Proceedings of the 2018 World Wide Web Conference. New York: ACM, 2018: 689-698.

[19]NICHOL A Q, DHARIWAL P. Improved Denoising Diffusion Probabilistic Models [C]//International Conference on Machine Learning. [S.l.]: PMLR, 2021: 8162-8171.

[20]SHE Z Y, DUAN C, ZHANG L. An Image Segmentation Algorithm Using Variable Precision Least Square Rough Entropy [J]. Computer Engineering & Science, 2019, 41(4): 657-664. (in Chinese)

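(Responsible editor: HAN Xiao)

Received: 2023-04-18.

First author: SHE Zhiyong (born 1990), male, Han ethnicity, master's degree, lecturer, research interests in image processing and intelligent decision-making, E-mail: szy@xjzfu.edu.cn.

Corresponding author: FENG Yueping (born 1958), female, Han ethnicity, Ph.D., professor, research interests in computer graphics and image processing, E-mail: fengyp@jlu.edu.cn.

Funding: National Natural Science Foundation of China (Grant No. 82071995), Key Research and Development Project of the Jilin Province Science and Technology Development Plan (Grant No. 20220201141GX), and President's Fund of Xinjiang University of Political Science and Law (Grant Nos. XZZK2021002, XZZK2022008).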