Collaborative positioning for swarms: A brief survey of vision, LiDAR and wireless sensors based methods

Zeyu Li, Chnghui Jing, Xioo Gu, Ying Xu, Feng Zhou, Jinhui Cui

a College of Geodesy and Geomatics, Shandong University of Science and Technology, Qingdao, 266590, China

b Geoloc Laboratory, Université Gustave Eiffel, Paris, 77454, France

c School of Automation, Guangdong University of Technology, Guangzhou, 510006, China

Keywords: Collaborative positioning; Vision; LiDAR; Wireless sensors; Sensor fusion

ABSTRACT As positioning sensors, edge computation power, and communication technologies continue to develop, a moving agent can now sense its surroundings and communicate with other agents. By receiving spatial information from both its environment and other agents, an agent can use various methods and sensor types to localize itself. With its high flexibility and robustness, collaborative positioning has become a widely used method in both military and civilian applications. This paper introduces the fundamental concepts and applications of collaborative positioning, and reviews recent progress in the field based on cameras, LiDAR (Light Detection and Ranging), wireless sensors, and their integration. The paper compares the current methods with respect to their sensor type, summarizes their main paradigms, and analyzes their evaluation experiments. Finally, the paper discusses the main challenges and open issues that require further research.

1.Introduction

With their advantages of flexibility, fault tolerance, low individual memory, and high scalability, swarms (sets of agents with communication links) can perform various tasks to cover a certain area in time- or cost-constrained scenarios. Typical swarm types include UAVs (Unmanned Aerial Vehicles) [1], UGVs (Unmanned Ground Vehicles) [2], USVs (Unmanned Surface Vehicles) [3], UUVs (Unmanned Underwater Vehicles) [4], as well as their combinations [5,6]. In specific military scenarios, helicopters, tanks, and soldiers equipped with positioning technology may also form a swarm to perform military tasks such as reconnaissance, transportation, search and rescue, and firepower attacks [7]. Satellites can generate multiple constraints for localization by using inter-satellite links and ground stations. In missile guidance, a cooperative strategy may be used to track maneuvering targets [8]. The efficiency, robustness, and accuracy of a swarm are often the main focus. Numerous official organizations have established projects or organized competitions for swarms. Since 2016, DARPA has proposed and continuously modified the concept of Mosaic Warfare [9], which has become an important achievement of the US military in rebuilding its military system and combat methods. It emphasizes dynamic, cooperative, and highly autonomous operation. To achieve this goal, autonomous, accurate, and stable cooperative positioning is paramount. Other notable projects and competitions include the CODE (Collaborative Operations in Denied Environments) project [10] and the Subterranean Challenge [11], which aim to enhance the automation level of swarms. China has held the Unmanned Ground System Final Challenge since 2016 [12]. In 2021, it added a section focused on collaborative tasks. In the civilian field, individuals with smartphones can form another type of swarm to perform collaborative tasks, such as face-to-face AR (Augmented Reality)/VR (Virtual Reality) games and applications. Many startup companies have focused on swarm-based technology. For instance, Niantic Labs proposed cloud-sourcing positioning and mapping to enhance user experience for AR/VR applications [13]. Huawei has developed an AR Engine to support AR games for multiple face-to-face players [14].

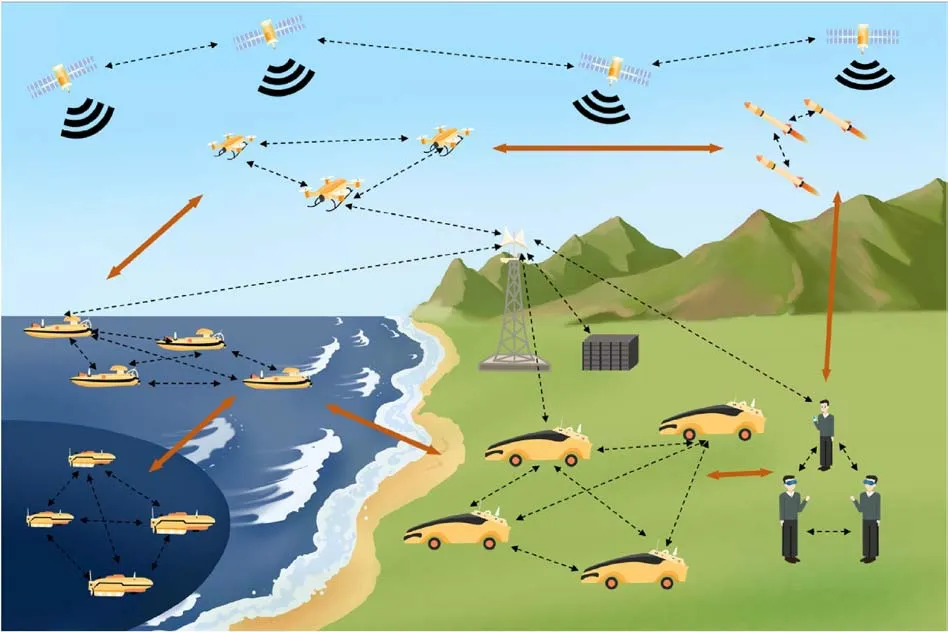

As shown in Fig. 1, swarms have achieved full coverage of land, water surfaces, underwater areas, and airspace [15]. Swarms have, to a certain degree, become a part of our daily lives. UAVs, UGVs, USVs, and UUVs can form a hierarchical wireless network to perform tasks in parallel. A small group of agents may form a swarm, and multiple groups of swarms may form a major swarm. Information may be exchanged between or within swarms, as illustrated by red and black arrows, respectively.

Fig.1.Possible applications of swarms.

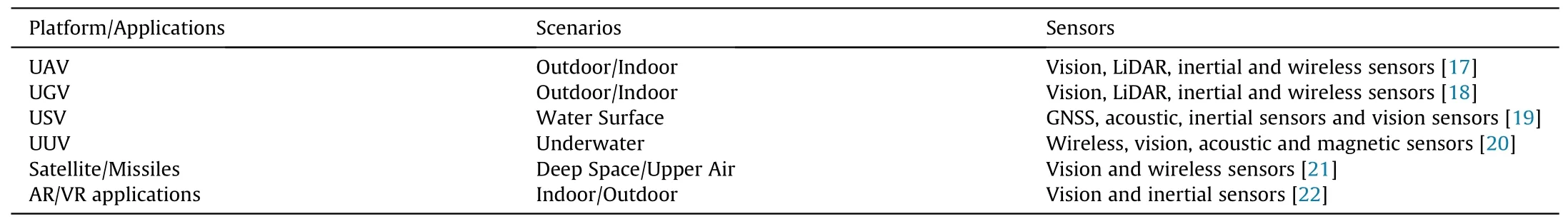

Although different types of swarms are assigned to different tasks with several related sensors, Table 1 indicates that vision, LiDAR, and wireless sensors are the mainstream sensors. For UAVs and UGVs, vision, LiDAR, inertial, and wireless sensors such as UWB (Ultra-Wide Band) are common, and some of these have been commercialized. For example, UAV swarms can be used to conduct surveying tasks [16]. Due to environmental limitations, USVs mainly use GNSS (Global Navigation Satellite System) or acoustic sensors for localization, and may use inertial and vision sensors to avoid obstacles in offshore areas. UUVs may use wireless communication with an anchor on the surface to localize themselves, as underwater environments are very complex. Additionally, a number of small UUVs may use vision, acoustic, or magnetic sensors for localization. Space and weight constraints limit sensor installation for satellites and missiles; therefore, vision and wireless sensors are the most suitable. Similarly, AR/VR equipment needs to be lightweight, so vision and inertial sensors are commonly installed in AR/VR HMDs (Helmet-Mounted Displays) or smartphones.

Positioning information plays a crucial role in enabling swarms to conduct tasks. The sensors listed in Table 1 can provide spatial information from the surroundings or other agents, which can be used for collaborative positioning. As illustrated in Fig. 2, collaborative positioning is the fundamental component of collaborative mapping and collaborative SLAM. The single-agent positioning module provides basic pose or position information. With additional observations from other agents in the swarm, this solution can be further enhanced by collaborative positioning. Collaborative mapping and collaborative SLAM need such positioning information for mapping in unknown environments. Collaborative positioning is supported by sensor, communication, estimation, control, and edge computing technologies. It is divided into three categories based on the main sensors, namely vision-based methods, LiDAR-based methods, and wireless sensor-based methods. For vision- and LiDAR-based methods, data association of observations (e.g., feature matching) and parameter estimation for positioning solutions (e.g., pose estimation) are the two main components. For wireless sensor-based methods, ranging measurement (e.g., getting the distance) and parameter estimation (e.g., position estimation) are the two main components. The positioning information supports multiple tasks such as exploration, obstacle avoidance, reconnaissance, patrol, and search and rescue.
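The two components named for wireless sensor-based methods, ranging measurement and position estimation, can be illustrated with a minimal sketch. It assumes a 2D scene, noise-free ranges, and neighbours at known positions; the function name `trilaterate` and the example coordinates are hypothetical and are not taken from any of the reviewed systems.

```python
import math

def trilaterate(anchors, ranges, guess=(1.0, 1.0), iters=50):
    """Gauss-Newton estimate of a 2D position from ranges to known anchors."""
    x, y = guess
    for _ in range(iters):
        # Accumulate the 2x2 normal equations (J^T J) step = J^T residual.
        a11 = a12 = a22 = b1 = b2 = 0.0
        for (ax, ay), measured in zip(anchors, ranges):
            dx, dy = x - ax, y - ay
            predicted = math.hypot(dx, dy) or 1e-9  # avoid division by zero
            jx, jy = dx / predicted, dy / predicted  # gradient of the range
            res = measured - predicted
            a11 += jx * jx
            a12 += jx * jy
            a22 += jy * jy
            b1 += jx * res
            b2 += jy * res
        det = a11 * a22 - a12 * a12
        if abs(det) < 1e-12:  # degenerate geometry: all range gradients parallel
            break
        step_x = (a22 * b1 - a12 * b2) / det
        step_y = (a11 * b2 - a12 * b1) / det
        x, y = x + step_x, y + step_y
        if math.hypot(step_x, step_y) < 1e-10:
            break
    return x, y

# Hypothetical example: three neighbours at known positions, agent truly at (3, 4).
anchors = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)]
ranges = [math.dist(a, (3.0, 4.0)) for a in anchors]
estimate = trilaterate(anchors, ranges)
```

In practice the ranges are noisy and the neighbours' positions are themselves uncertain, so a filter or a joint optimization would likely replace this plain least-squares step.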

Table 1 The summarization of different swarms, scenarios and related sensors.

Fig.2.The shared technologies, concepts and applications of collaborative positioning.

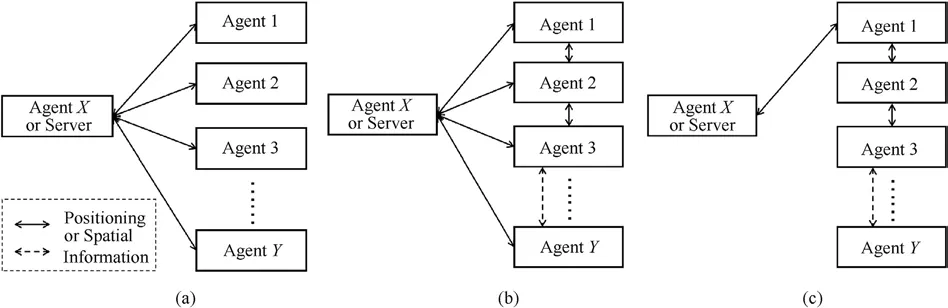

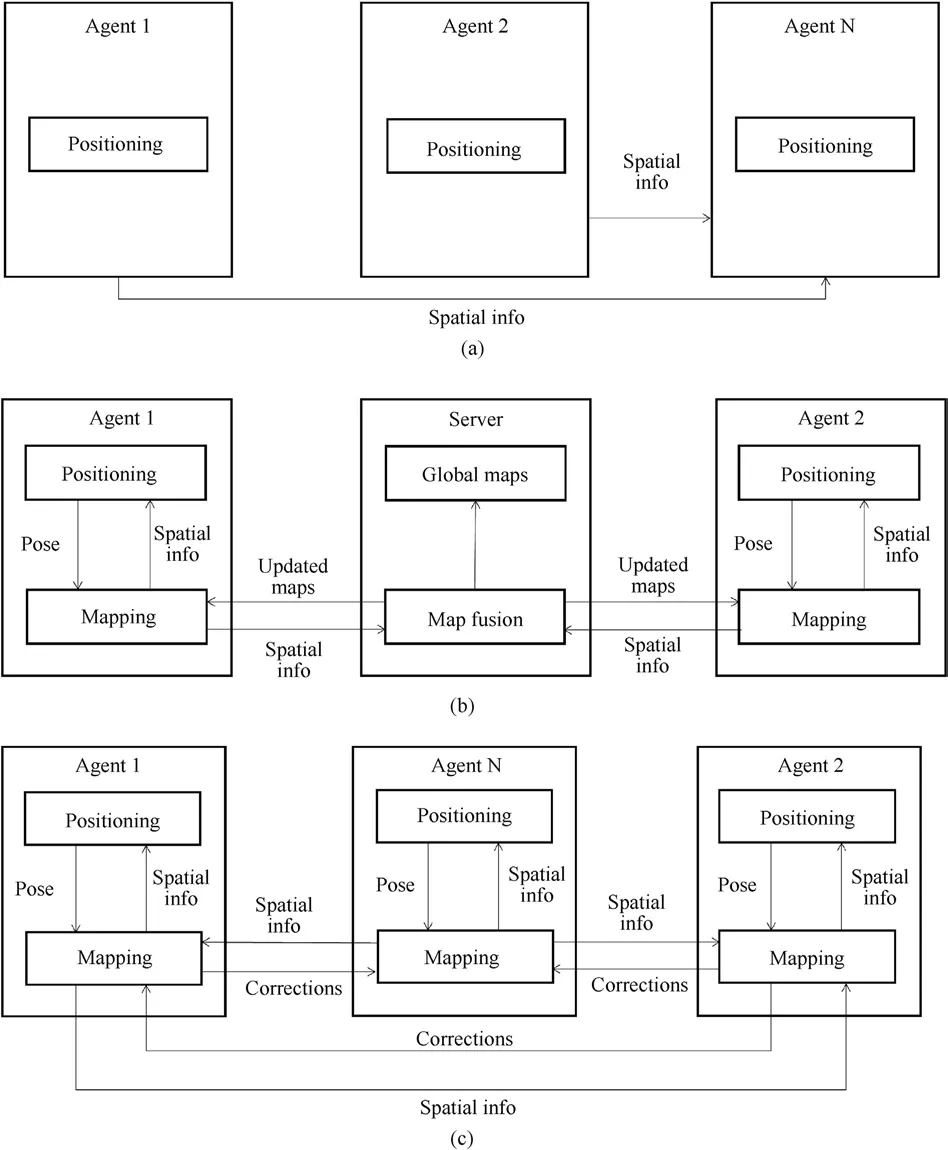

The communication and processing modes for swarms vary based on the type of platforms or tasks. As depicted in Fig. 3, agents can communicate through direct or indirect links, which can provide spatial information for positioning. Indirect positioning information can be obtained from the central agent or server, while direct information can be obtained when a direct link between robots is established. The topological structure of collaborative positioning can be categorized into three types: centralized, decentralized, and hybrid. In the centralized type, a single server or agent acts as the platform that receives all other positioning or spatial information, and then sends the processed positioning solution to all other agents to update their poses. This type is the most common hardware infrastructure, but its reliability is weak, since the whole system stops working if the server or central agent fails, and its capacity is constrained by limited computation power and communication bandwidth. In the decentralized type, on the other hand, all or some of the agents can send positioning information to, or receive it from, the others. Fig. 3(b) illustrates a case in which all agents can communicate with each other, while Fig. 3(c) shows a case in which each agent can only communicate with two nearby agents. This type of topology is more robust, as it has no single point of failure, but its computational and communication requirements may increase. Finally, the hybrid type combines the centralized and decentralized types, providing a balance between reliability and scalability.
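The trade-off between the structures in Fig. 3 can be made concrete with a small sketch. It models each topology as an undirected link set and checks whether the remaining agents stay connected after one agent fails. The helper names are hypothetical, and the ring is only one assumed way to realize the "two nearby agents" case of Fig. 3(c).

```python
def centralized(n, server=0):
    """Star topology: every agent links only to the server."""
    return {(min(server, i), max(server, i)) for i in range(n) if i != server}

def fully_connected(n):
    """De-centralized structure A: every pair of agents is linked."""
    return {(i, j) for i in range(n) for j in range(i + 1, n)}

def ring(n):
    """De-centralized structure B (assumed ring): two neighbours per agent."""
    return {(min(i, (i + 1) % n), max(i, (i + 1) % n)) for i in range(n)}

def survives_failure(n, links, failed):
    """True if the remaining agents are still connected after one fails."""
    alive = [i for i in range(n) if i != failed]
    adj = {i: set() for i in alive}
    for a, b in links:
        if a != failed and b != failed:
            adj[a].add(b)
            adj[b].add(a)
    seen, stack = set(), [alive[0]]
    while stack:  # depth-first search over the surviving links
        u = stack.pop()
        if u not in seen:
            seen.add(u)
            stack.extend(adj[u] - seen)
    return len(seen) == len(alive)

n = 5
link_counts = {
    "centralized": len(centralized(n)),
    "fully_connected": len(fully_connected(n)),
    "ring": len(ring(n)),
}
```

With five agents the star needs the fewest links but is disconnected when the server fails, whereas the ring and the fully connected graph tolerate any single failure at the cost of more links or longer relay paths.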

Building on the aforementioned concepts, applications, sensors, and topological structures, this paper reviews vision-based methods, LiDAR-based methods, wireless sensor-based methods, and their integration. The key components of these methods are identified, categorized, and explained. A total of 60 papers about collaborative positioning are reviewed, and the methods in each category are compared within their sensor type. Finally, the challenges and open issues are summarized for readers.

This paper is organized as follows:

(1) Section 1 introduces the background and motivation of the paper, as well as the scope and objectives of the review.
(2) Section 2 describes the related review articles about collaborative positioning and states their differences from this paper.
(3) Section 3 provides the keywords used for searching vision, LiDAR, and wireless sensor based collaborative positioning.
(4) Sections 4, 5, 6, and 7 briefly review the progress of collaborative positioning based on the above-mentioned sensors.
(5) Section 8 discusses the challenges and open issues related to collaborative positioning.
(6) Section 9 presents concluding remarks.

Fig.3.The topological structure for collaborative positioning: (a) Centralized structure; (b) De-centralized structure A; (c) De-centralized structure B.

2.Related works

Fukuda et al. [23] proposed a cell structure based self-organized robot system in 1989, which is considered one of the earliest works on swarm systems. According to available information, the earliest cooperative positioning algorithm dates back to 1994, when a robot swarm achieved self-localization using a move-and-stop approach [24]. In 2002, vision and LiDAR were loosely integrated to perform positioning and mapping [25]. More recently, the author of a study emphasized that navigation performance is a crucial indicator of swarm autonomy [26]. With the advancement in computational power and sensing technologies for swarms, numerous modern swarm systems have been proposed, and several review papers have focused on different aspects of collaborative positioning.

Pascacio et al. [27] conducted a review of collaborative positioning systems for indoor environments based on wireless sensor technology and positioning algorithms. The three most commonly used technologies were Wi-Fi, Ultra-Wide Band (UWB), and Bluetooth. The predominant collaborative positioning techniques included Received Signal Strength Indication (RSSI), fingerprinting, and Time of Arrival (ToA) or Time of Flight (ToF). The main methods used were Particle Filters (PF), belief propagation, the Extended Kalman Filter (EKF), and Least Squares. In a similar vein, Ref. [15] reviewed relative positioning methods, evaluated existing challenges, and proposed rough solutions for robot swarms, with a particular emphasis on the UWB-based positioning method. The authors proposed a fully distributed relative positioning method from a conceptual perspective. In Ref. [28], a brief survey was conducted on distributed estimation and perception using ranging information from a specific wireless sensor.
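As a rough illustration of how the RSSI and ToF techniques mentioned above yield a range, the sketch below uses the standard log-distance path-loss model and a two-way time-of-flight exchange. The reference power of -40 dBm at 1 m and the path-loss exponent of 2 are assumed placeholder values, not figures from the reviewed papers; both must be calibrated for a real environment.

```python
C = 299_792_458.0  # speed of light in m/s

def rssi_to_distance(rssi_dbm, rssi_at_d0=-40.0, path_loss_exp=2.0, d0=1.0):
    """Invert RSSI(d) = RSSI(d0) - 10 * n * log10(d / d0) for d."""
    return d0 * 10 ** ((rssi_at_d0 - rssi_dbm) / (10.0 * path_loss_exp))

def two_way_tof_distance(t_round_s, t_reply_s):
    """Range from a two-way exchange: half the round trip minus the reply delay."""
    return C * (t_round_s - t_reply_s) / 2.0

# Hypothetical readings: -60 dBm, and a round trip to a tag 10 m away
# that waits 1 microsecond before replying.
d_rssi = rssi_to_distance(-60.0)
d_tof = two_way_tof_distance(2 * 10.0 / C + 1e-6, 1e-6)
```

RSSI ranges degrade quickly with multipath and obstruction, which is one reason time-based UWB ranging is often preferred when hardware allows.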

As mentioned, vision and LiDAR sensors are the most frequently used to sense surroundings, particularly for collaborative mapping or SLAM, which are extensions of collaborative positioning. Several related papers have been published. In Ref. [29], the authors reviewed several map fusion methods that directly impact the quality of collaborative positioning for occupancy grid maps, feature-based maps, and topological maps. The paper showed that occupancy grid maps can be merged using various methods such as probability, optimization, feature-based, and Hough-transform-based methods. For feature-based maps, fusion methods can be divided into point, line, and plane feature-based methods. Topological maps are less intuitive than occupancy grid maps, so fusion methods for topological maps are rare. Map structure, geometry, and agent path are the main cues for topological map fusion. Zou et al. [17] reviewed representative collaborative vision-based SLAM systems from 2009 to 2019, dividing them into filter-based and keyframe-based approaches. The paper described the key components of collaborative pose estimation, collaborative mapping, loop closure, feature detection and matching, and outlier detection. However, most papers cited in that review focused on small environments. In this paper, we include more papers that discuss large-scale environments, providing a broader review. In Ref. [30], the authors reviewed the basic components of collaborative SLAM with various sensors, including wireless, vision, and LiDAR sensors. They also described developments in loop closure and estimation algorithms in collaborative SLAM before 2021, which makes it the most relevant reference for our paper. Although parts of their paper are similar to our review, it only focuses on collaborative SLAM rather than collaborative positioning. Additionally, most of the papers it reviews are vision-based collaborative SLAM methods. Therefore, our review provides a more systematic survey from the perspective of sensor types.

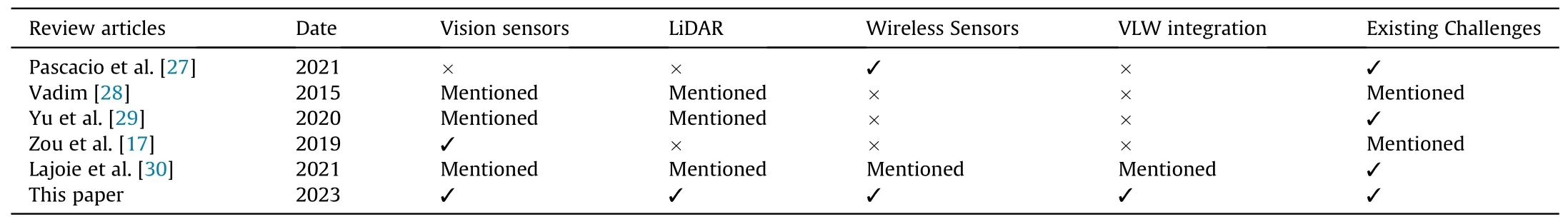

To the best of our knowledge, there is no systematic review available for LiDAR-based positioning of multiple agents. Table 2 summarizes the differences between the existing review articles and this paper. While our paper is the only one that provides a systematic review of collaborative positioning based on vision, LiDAR, wireless sensors, and their integration (i.e., Vision/LiDAR/Wireless Sensor integration, VLW integration), we recommend the papers listed in Table 2 to readers who would like to conduct research on any specific aspect of collaborative positioning.

3.Article selection process

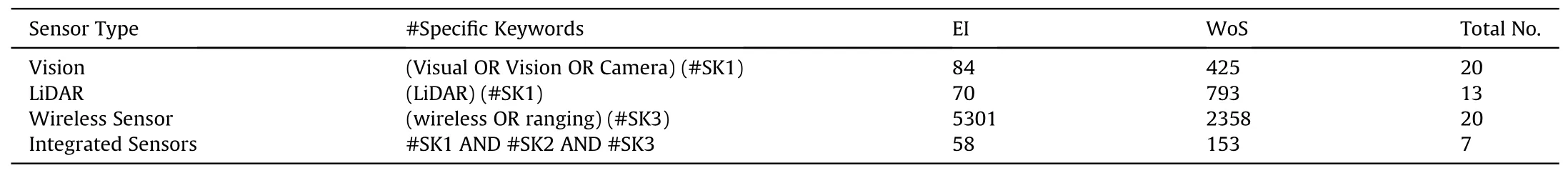

The general combination of keywords used in our literature search was ((Collabora* OR Coopera* OR Distribute* OR Crowdsourc* OR Distrib*) AND (Position* OR Track* OR Locati* OR Locali* OR Navigat*) AND # Specific Keywords). Table 3 presents the specific keywords used for each sensor type. We gathered papers from two sources: first, the extensive readings and experiences of the authors, and second, online searches using the keywords for vision, LiDAR, wireless sensors, and their integration. We limited our review to journal and top conference papers published from 2017 to 2022, and the numbers of relevant papers from Engineering Village and Web of Science are included in Table 3. We reviewed a total of 22, 13, 20, and 7 papers for vision, LiDAR, wireless sensors, and their integration, respectively. Since wireless sensor-based collaborative positioning is naturally a relative positioning method, there were many papers on this topic. However, we highlighted only 20 selected papers in its section due to space constraints. Table 3 shows that only a small number of papers in each category were within the scope of our review. Among them, the number of papers related to localization using wireless sensors was the highest of the four categories, while the number of papers on integration was the smallest, suggesting the challenges of integrating multiple sensors for collaborative positioning.

4.Vision-based collaborative positioning

Vision-based collaborative positioning can be divided into target-based and overlap-based methods, as shown in Fig. 4. In the former, targets such as markers and LEDs are tracked through vision sensors to obtain relative range and bearing information. With a certain mathematical model, such as an imaging model [31], the relative positions of platforms can be estimated. Usually, the agent does not have a mapping module. In the latter, the agent or server usually needs to fuse sub-maps from different agents to indirectly update the positions. Overlap-based methods can be further divided into centralized and decentralized types. The advantage of a centralized model is that the server has higher computational power to handle complex data and scenarios, but the swarm size and the operating area of the controlled agents are limited because the server's computational power is finite. Compared with centralized swarms, decentralized swarms have a higher self-adaptation ability, as each agent does not have to communicate with one centralized agent. The target-based method is more suitable for engineering applications, as it is relatively simple and easy to implement, while the overlap-based method is the mainstream in the academic field but faces many challenges.

Table 2 Comparison between existing review articles and this paper.

Table 3 Keywords for searching papers.

Fig.4.Main paradigms of vision based collaborative positioning methods: (a) Target-based method; (b) Overlap-based method: Centralized collaborative SLAM or collaborative mapping; (c) Overlap-based method: De-centralized collaborative SLAM or collaborative mapping.

4.1.Target-based method

Target-based methods have been applied in various collaborative positioning systems, particularly in engineering applications. For example, a swarm of UAVs with limited computation power was proposed in Ref. [32] for functioning in windy outdoor environments or pit mines. Each UAV carried a detectable marker with a circular pattern, and relative localization was conducted by detecting the pattern and observing its size. The error of relative positioning was also analyzed. Similarly, active ultraviolet LED markers were used in Ref. [33] to estimate distances between two UAVs, and suggestions about full pose estimation were given. In Ref. [34], ground vehicles were tagged with markers, and the UAVs tracked these tags to estimate scale and reconstruct maps on the ground. A leader-follower cooperative positioning system was proposed in Ref. [35] using laser tracking, where the laser beam from the leader was tracked by visual sensors, and an improved kernel correlation tracking algorithm was proposed to improve recognition and tracking rates. In Ref. [31], three spherical underwater robots equipped with Time of Flight (ToF) cameras and IMUs were used, and a Kalman filter was applied to minimize errors. The special shapes of the robots made them easy to detect, and the experiments showed higher feasibility in underwater environments as the available distance became larger.

All the systems mentioned above are of the decentralized type, as there is no major server or agent involved. However, it is possible for a server to observe all the agents carrying targets and send control commands to them. In that case, the computational ability to track multiple targets needs to be considered.
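The idea of recovering relative range and bearing from the apparent size of a known marker can be sketched with an ideal pinhole camera. This is a simplified illustration, not the estimator of Ref. [32]: it assumes a fronto-parallel marker, no lens distortion, and hypothetical intrinsics (`focal_px`, `cx_px`).

```python
import math

def marker_depth_and_bearing(center_u_px, diameter_px, focal_px, cx_px,
                             marker_diameter_m):
    """Depth along the optical axis and horizontal bearing of a marker."""
    # Similar triangles: diameter_px / focal_px = marker_diameter_m / depth.
    depth_m = focal_px * marker_diameter_m / diameter_px
    # Horizontal angle between the optical axis and the ray to the marker.
    bearing_rad = math.atan2(center_u_px - cx_px, focal_px)
    return depth_m, bearing_rad

def relative_position(depth_m, bearing_rad):
    """(lateral, forward) offset of the marker in the camera frame."""
    return depth_m * math.tan(bearing_rad), depth_m

# Hypothetical detection: a 0.2 m marker seen 40 px wide, 100 px right of
# the principal point, with an 800 px focal length.
depth, bearing = marker_depth_and_bearing(420.0, 40.0, 800.0, 320.0, 0.2)
lateral, forward = relative_position(depth, bearing)
```

Because depth is inversely proportional to the measured pixel diameter, a one-pixel detection error matters far more at long range, which is consistent with the error analysis mentioned for such systems.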

4.2.Overlap-based method

Collaborative SLAM and collaborative mapping are the two main forms of the overlap-based method. In collaborative SLAM or mapping, the positioning module provides pose information for mapping, and the spatial information contained in the maps helps agents with positioning, as shown in Fig. 4. Therefore, both of them involve collaborative positioning from a conceptual viewpoint, as mentioned in Section 1.

Since the positioning cannot be directly corrected without aligning the maps in overlap-based methods, map fusion is a crucial step in such approaches. Map fusion, which is similar to loop closure in single-agent SLAM, aims to accurately fuse the maps of other agents to update poses. The difference between map fusion and loop closure lies in the sources of the maps or submaps. Loop closure fuses different parts of a self-generated map, whereas map fusion fuses maps from multiple agents. In the inter-agent map fusion process, correspondence establishment, mismatch removal, and optimization are typically conducted in an agent or a server, as illustrated in Fig. 5.

Correspondence establishment is the first step in finding the connections among submaps using spatial information such as images, features, or maps. The typical components in agent maps are feature points with 3D information. There are two main branches for finding correspondences: handcrafted features and deep learning-based features. A comparison between them can be found in Ref. [36]. The role of deep learning-based features in aiding visual SLAM is described in Ref. [37], where it is shown that they can improve the performance of feature matching, loop closing, and map fusion. One of the most frequently used pipelines involving the above-mentioned features is the Bag of Words (BoW) [38] based method, which finds the nearest image and establishes correspondences using a certain feature. BoW can be trained using handcrafted or deep learning features from appearances [39].
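The BoW retrieval step can be sketched as follows. This is a deliberately simplified illustration: descriptors are plain float tuples, the vocabulary is given rather than trained, and plain normalized word counts stand in for the TF-IDF weighting that production BoW libraries typically use. All names and data are hypothetical.

```python
import math
from collections import Counter

def quantize(desc, vocab):
    """Index of the visual word (vocabulary centroid) closest to a descriptor."""
    return min(range(len(vocab)),
               key=lambda k: sum((a - b) ** 2 for a, b in zip(desc, vocab[k])))

def bow_vector(descs, vocab):
    """L2-normalized histogram of visual words, stored sparsely as a dict."""
    counts = Counter(quantize(d, vocab) for d in descs)
    norm = math.sqrt(sum(c * c for c in counts.values())) or 1.0
    return {word: c / norm for word, c in counts.items()}

def similarity(a, b):
    """Cosine similarity of two normalized sparse BoW vectors."""
    return sum(v * b.get(word, 0.0) for word, v in a.items())

def nearest_keyframe(query_descs, keyframes, vocab):
    """Name of the stored keyframe whose BoW vector best matches the query."""
    q = bow_vector(query_descs, vocab)
    return max(keyframes,
               key=lambda name: similarity(q, bow_vector(keyframes[name], vocab)))

# Hypothetical 2D descriptors and a three-word vocabulary.
vocab = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
keyframes = {
    "kf_a": [(0.1, 0.0), (0.9, 0.1), (1.0, 0.0)],
    "kf_b": [(0.0, 0.9), (0.1, 1.0), (0.0, 0.1)],
}
query = [(0.95, 0.0), (1.1, 0.05), (0.05, 0.05)]
best = nearest_keyframe(query, keyframes, vocab)
```

The retrieved keyframe only provides a candidate; descriptor-level matching and a consistency check are still needed before the two maps are linked.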

Incorrect correspondences, or outliers, may exist among the putative correspondences because of factors such as viewpoint changes and illumination. Therefore, mismatch removal or a consistency check is necessary. For consistency-based methods, the basic principle is to find the consistency between two maps. Representative methods include the RANSAC-based method [40]. Graph optimization methods are commonly used for parameter estimation. Mismatches can be modeled as outlying observations in the estimation process, and the optimization process minimizes error terms to obtain global maps. The most common error term is the re-projection error. Global maps can provide correction information for all agents, allowing their positions or poses to be updated.
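A RANSAC-style consistency check can be sketched on a toy problem: aligning two 2D point sets with a rigid transform while rejecting wrong correspondences. The sketch is an assumption-laden simplification of the visual case; it uses a Euclidean point residual in place of the re-projection error, and the tolerance, iteration count, and data are hypothetical.

```python
import math
import random

def fit_rigid_2d(src, dst):
    """Least-squares rotation angle and translation mapping src onto dst."""
    n = len(src)
    sx, sy = sum(p[0] for p in src) / n, sum(p[1] for p in src) / n
    dx, dy = sum(q[0] for q in dst) / n, sum(q[1] for q in dst) / n
    a = b = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        px, py, qx, qy = px - sx, py - sy, qx - dx, qy - dy
        a += px * qx + py * qy  # proportional to cos(theta)
        b += px * qy - py * qx  # proportional to sin(theta)
    theta = math.atan2(b, a)
    c, s = math.cos(theta), math.sin(theta)
    return theta, dx - (c * sx - s * sy), dy - (s * sx + c * sy)

def apply(theta, tx, ty, p):
    c, s = math.cos(theta), math.sin(theta)
    return (c * p[0] - s * p[1] + tx, s * p[0] + c * p[1] + ty)

def ransac_rigid_2d(src, dst, tol=0.05, iters=200, seed=0):
    """Return (model, inlier indices) for putative correspondences src[i] -> dst[i]."""
    rng = random.Random(seed)
    idx = range(len(src))
    best = []
    for _ in range(iters):
        i, j = rng.sample(idx, 2)  # minimal sample for a 2D rigid transform
        model = fit_rigid_2d([src[i], src[j]], [dst[i], dst[j]])
        inliers = [k for k in idx
                   if math.dist(apply(*model, src[k]), dst[k]) < tol]
        if len(inliers) > len(best):
            best = inliers
    # Refit on all inliers of the best hypothesis.
    model = fit_rigid_2d([src[k] for k in best], [dst[k] for k in best])
    return model, best

# Hypothetical data: six matches, two of which (indices 1 and 4) are wrong.
src = [(0, 0), (1, 0), (0, 1), (2, 1), (1, 2), (3, 3)]
dst = [apply(0.5, 1.0, -2.0, p) for p in src]
dst[1] = (dst[1][0] + 5.0, dst[1][1] - 3.0)
dst[4] = (dst[4][0] - 4.0, dst[4][1] + 6.0)
model, inliers = ransac_rigid_2d(src, dst)
```

The surviving inliers are what a subsequent graph optimization would use as constraints; robust cost functions can additionally down-weight any mismatches that slip through.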

Numerous overlap-based collaborative positioning methods have been proposed. PTAMM [41], proposed in 2008, used a hand-held camera and a wearable camera to share a global map. Modern methods such as CoSLAM [42], CCM-SLAM [43], CVISLAM [44], and CSFM [45] have been reviewed in Ref. [17]. Many recent systems follow centralized structures, such as COVINS [46], where more than 10 UAVs collaborated to build a global map and estimate poses by transferring feature points to a centralized or remote cloud server. Map fusion is done using a standard multistage pipeline similar to the process shown in Fig. 5, where a keyframe is sent to the server to find its nearest keyframe by BoW, followed by the establishment of accurate 3D-2D correspondences after mismatch removal. Finally, pose graph optimization is conducted to generate a global map. Zhang et al. [47] proposed an active collaborative SLAM system based on a monocular camera for large-scale environments that actively finds better places for loop closing. The main components of SLAM, including pose estimation, re-localization, and map fusion, are similar to those in Ref. [46]. In Ref. [48], a cloud server was used to receive RGB-D keyframes from two robots for global dense mapping. A cloud-based SLAM architecture and protocol were proposed to significantly reduce bandwidth usage, where all depth and color images from the RGB-D camera were compressed to the PNG format for transmission, and corrected poses of keyframes were returned to the robots to update their poses.
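The effect of pose graph optimization after a map fusion constraint is found can be illustrated with a one-dimensional toy problem. This is only a sketch: real systems optimize full 6-DoF poses with nonlinear solvers, whereas here poses are scalars, all edges are equally weighted, and the solver is a small hand-written Gaussian elimination. The edge values are hypothetical.

```python
def solve_linear(A, b):
    """Gaussian elimination with partial pivoting for a small dense system."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in reversed(range(n)):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x

def optimize_pose_graph_1d(num_poses, edges):
    """edges: (i, j, z) with z measuring x_j - x_i; pose 0 is fixed at 0."""
    m = num_poses - 1
    H = [[0.0] * m for _ in range(m)]
    g = [0.0] * m
    for i, j, z in edges:
        # Residual x_j - x_i - z has Jacobian -1 w.r.t. x_i and +1 w.r.t. x_j.
        terms = [(k, s) for k, s in ((i, -1.0), (j, 1.0)) if k != 0]
        for a, sa in terms:
            g[a - 1] += sa * z
            for b, sb in terms:
                H[a - 1][b - 1] += sa * sb
    return [0.0] + solve_linear(H, g)

# Three odometry edges summing to 3.0 and one closure constraint of 2.7.
edges = [(0, 1, 1.1), (1, 2, 1.0), (2, 3, 0.9), (0, 3, 2.7)]
poses = optimize_pose_graph_1d(4, edges)
```

The 0.3 discrepancy between the odometry chain and the closure constraint is spread evenly over the four equally weighted edges, which is the same mechanism by which a fused global map corrects every agent's trajectory.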

Few systems are of the decentralized type. One such system is described in Ref. [49], where a decentralized collaborative UAV mapping system is proposed, utilizing a Distributed Ledger Technology (DLT) based network. In this system, each UAV can manage a synchronized database of confirmed transactions, which has several advantages compared to centralized systems. For instance, the map can be shared among the swarm even if a UAV joins or leaves, without the need for communication with a central station.

Fig.5.Basic paradigm of vision based map fusion.

Various strategies have been proposed to enhance the accuracy and robustness of map fusion in collaborative mapping systems. For instance, in Ref. [50], the authors addressed the re-localization and map fusion problems in a multi-UAV SLAM system. The re-localization process was split into two stages: coarse re-localization on the server and fine re-localization on the UAV. Moreover, hierarchical clustering was utilized to prioritize the submaps to be merged. Similarly, in Ref. [51], a visual-inertial collaborative SLAM system for AR/VR applications improved the map fusion algorithm by using a heuristic approach based on the co-visible relationships among keyframes.

4.3.Deep learning assisted method

Deep learning methods can be utilized to improve the accuracy and robustness of collaborative positioning systems by aiding correspondence establishment and absolute/relative pose determination. Experiments have shown that deep learning methods outperform their handcrafted counterparts in these tasks. However, most deep learning-based positioning systems currently only work for a single agent, and their application in collaborative positioning is limited. In Ref. [52], for example, data for self-supervised training was generated from pixel position and depth information, which came from other auxiliary sensors such as LiDAR, UWB, and IMU. With the trained network, the monocular camera was then able to estimate the positions of other agents. Furthermore, the use of DNNs can also improve the performance of map fusion. In Ref. [53], Chang et al. proposed Kimera-Multi, a distributed multi-robot metric-semantic system that uses deep learning-based methods for loop closure detection, outlier rejection, and pose graph optimization. However, the semantic module is only loosely coupled with the collaborative positioning system and is limited to generating global meshes.

4.4.Communication and memory burden

Researchers have also addressed the problem of data exchange in collaborative positioning. One approach, proposed in Ref. [54], was to use frame coding methods, including depth coding and inter-view coding, to reduce the communication burden in collaborative visual SLAM. Another study [55] conducted collaborative SLAM between two agents using a monocular camera and a ranging tag module. Only poses and ranges were transferred between the two agents, which reduced the data exchange size and computation load. Additionally, the authors of Ref. [56] gave special consideration to the pose graph optimization problem while minimizing the data exchange size among decentralized robots in a large robot team. They compressed the data using semantic information. To address memory management issues, Zhu et al. [57] proposed a dense, distributed RGB-D camera based collaborative positioning system. They used a compact Octomap [58] to reduce data storage requirements and a memory management strategy to reduce the time required to search through previously visited locations. The memory space was divided into three parts based on the estimated possibility of a loop using a discrete Bayesian method, which reduced the complexity of searching for candidates.

4.5.System-level design

Numerous papers have proposed the design of vision-based collaborative positioning systems with different focuses. For example, in Ref. [59], an adaptive collaborative visual-inertial SLAM system was proposed, which could adapt to different camera types such as monocular, stereo, or RGB-D cameras. The system measured the confidence of poses based on scene geometry consistency and pose observability, achieving higher accuracy in experiments on EuRoC datasets [60]. In Ref. [61], Karrer and Margarita proposed a visual-inertial collaborative SLAM system where keyframes containing keypoint positions, keypoint descriptors, and spatial information were communicated through a keyframe buffer in the server. Once a loop was detected and matched, global optimization using feature matching was conducted, and the optimized poses were returned to the agents. DOOR-SLAM [62] followed a similar framework, but used a place recognition module to transfer the brief descriptor of the whole keyframe and a geometric verification submodule to ensure correct data association. In Ref. [63], a pipeline for collaborative AR assembly in industry supported by multiple visual SLAM systems was presented. It started with scene cognition using multi-view acquisition of industrial environments to provide images for multiple users. Then, pose recovery was derived from different views of AR operators to get the global coordinates. Finally, each agent ran a VIO system to carry out the assembly operation.

4.6.Summary

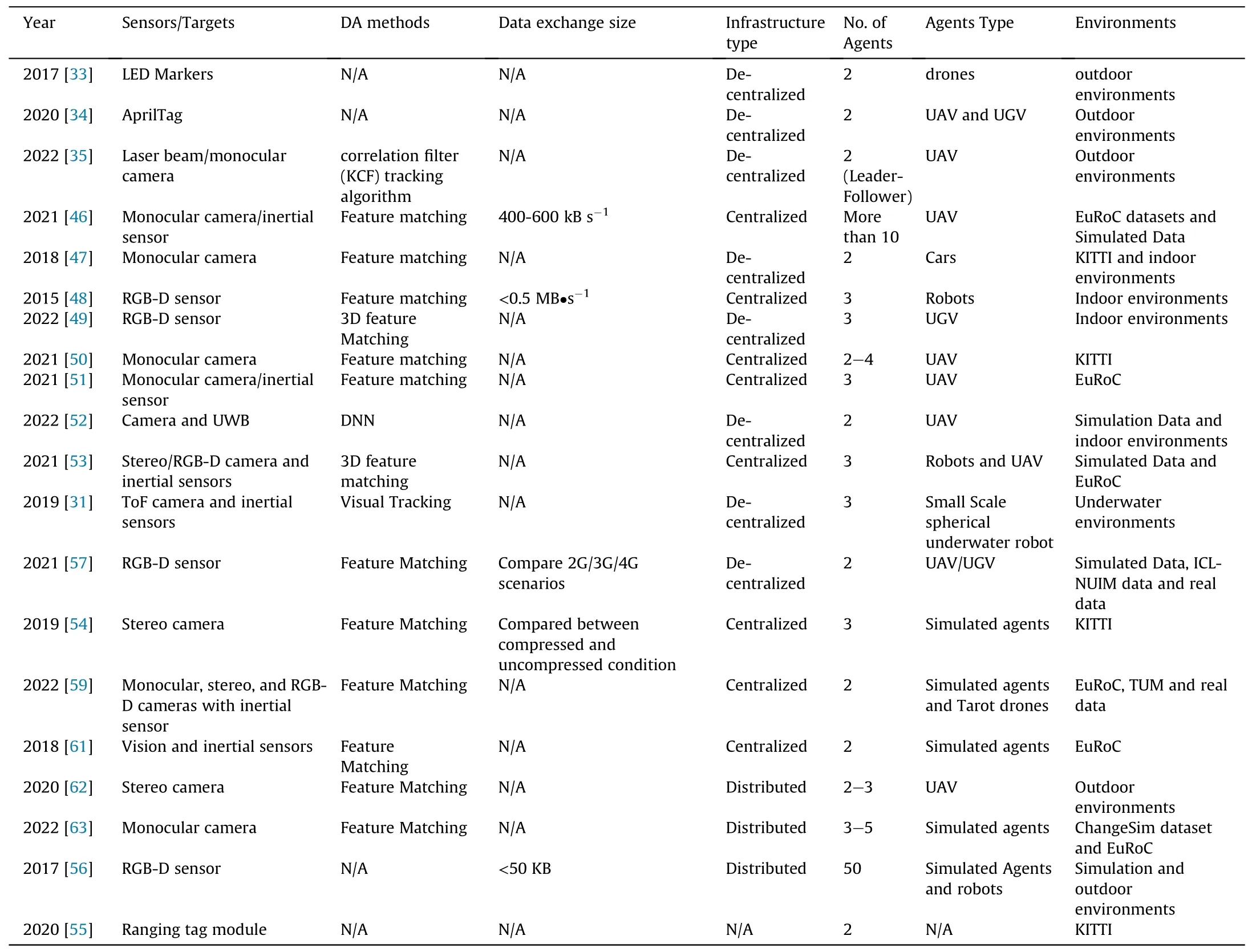

Table 4 provides a summary of current vision-based collaborative positioning methods. These methods use vision sensors, including monocular, stereo, and RGB-D cameras. Other sensors, such as inertial sensors, are also helpful in determining the position of the agent. However, all the reviewed papers only use handcrafted feature matching algorithms, which is surprising given the prevalence of deep learning techniques in computer vision research. Few papers analyze the communication bandwidth required for a collaborative system, which is a critical consideration for practical applications. Centralized and decentralized systems have both been developed, with demonstrations ranging from two to fifty agents. However, most papers only use a small number of agents due to the difficulty and expense of obtaining a large number of agents. The most frequently used dataset is KITTI [64], which is designed for autonomous driving applications. The majority of agents in the reviewed papers are UAVs, which demonstrates their potential for complex tasks. Collaborative positioning can be applied in various environments, including indoor, outdoor, and underwater scenarios. However, USV scenarios are currently lacking due to the difficulty of acquiring features in offshore areas with many texture-less regions, especially in deep-sea environments.

5.LiDAR-based collaborative positioning

LiDAR-based collaborative positioning is closely related to collaborative SLAM or mapping, as LiDAR sensors can directly sense the distance and bearing information of the surrounding environment. Recent research progress in this area can be divided into two categories: map fusion and system-level design. However, there are fewer papers available on LiDAR-based collaborative positioning compared to its vision-based counterpart.

Table 4 Summary of current vision based collaborative positioning methods.

5.1.LiDAR-based map fusion

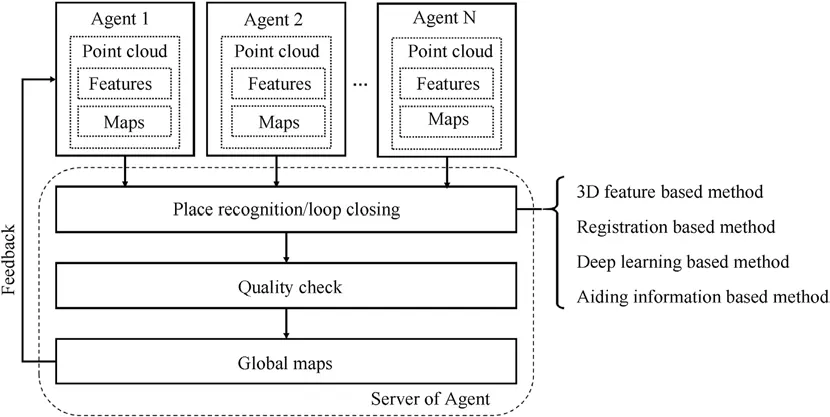

The basic map fusion mechanism for LiDAR-based collaborative positioning is similar to that of vision-based collaborative positioning, as illustrated in Fig. 6. Raw data from each agent consists of point clouds from LiDAR sensors, which may include extracted 3D features. The map can be derived from these features [78], but it should be noted that in some cases, the map for each agent is a global map with more coverage rather than selected sparse features. Map fusion can be achieved using four categories of methods: 3D feature-based methods [79], registration-based methods [80], deep learning-based methods [81], and aiding information-based methods [82]. For 3D features, the methods can be further divided into local 3D features, which encode the structure within a small local area using a fixed-size vector, similar to 2D features used in vision-based methods, and global 3D features, which can describe the entire point cloud of a scene. Registration-based methods use two sets of point clouds with overlapping areas as inputs and establish correspondences between them using a certain transformation model (e.g., rigid transformation) to align the point clouds. Representative methods include NDT [83] and ICP [84]. One advantage of using point clouds directly for map fusion is that no feature extraction is required. However, a significant drawback is that point clouds can be large and can consume a lot of memory on the agents. Learning-based methods, such as LocNet [85] and PointNet [86], use Deep Neural Networks (DNNs) to represent the local or global area of point clouds. Another type of learning-based method uses DNNs to detect geometric objects, which provides a rough relationship between two sets of point clouds. A review paper discussing these methods is available in Ref. [87]. Aiding information from other sensors, such as intensity, can also be used [82]. Hybrid methods that combine the above-mentioned algorithms have been proposed recently to improve map fusion performance, such as the semantic-NDT method developed by Zaganidis et al. [88].
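To make the registration-based idea concrete, the following is a minimal sketch of an ICP-style loop, reduced to 2D and written with the Python standard library only. It is an illustration under simplifying assumptions (brute-force nearest-neighbour association, no outlier rejection, a fixed number of iterations), not the implementation used in any of the cited works; the function names `fit_rigid_2d`, `transform`, and `icp_2d` are hypothetical.

```python
import math

def fit_rigid_2d(src, dst):
    """Least-squares rotation angle and translation mapping paired src points onto dst."""
    n = len(src)
    sx = sum(p[0] for p in src) / n
    sy = sum(p[1] for p in src) / n
    dx = sum(q[0] for q in dst) / n
    dy = sum(q[1] for q in dst) / n
    dot = cross = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        ax, ay = px - sx, py - sy          # centred source point
        bx, by = qx - dx, qy - dy          # centred target point
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx
    theta = math.atan2(cross, dot)         # optimal rotation angle
    c, s = math.cos(theta), math.sin(theta)
    return theta, dx - (c * sx - s * sy), dy - (s * sx + c * sy)

def transform(points, theta, tx, ty):
    """Apply a rigid transformation (rotation theta, translation tx, ty) to 2D points."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in points]

def icp_2d(src, dst, iterations=20):
    """Align src to dst. Returns (aligned points, (theta, tx, ty)) for the total transform."""
    cur = list(src)
    for _ in range(iterations):
        # Data association: nearest neighbour in dst for every current point.
        matched = [min(dst, key=lambda q: (q[0] - p[0]) ** 2 + (q[1] - p[1]) ** 2)
                   for p in cur]
        # Alignment: closed-form rigid fit to the current correspondences.
        cur = transform(cur, *fit_rigid_2d(cur, matched))
    # cur is an exact rigid image of src, so one more fit recovers the total transform.
    return cur, fit_rigid_2d(src, cur)
```

As with ICP in general, this sketch only converges to the correct alignment when the initial misalignment is small relative to the point spacing, which is why the hybrid methods above first obtain a coarse transformation from features.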

Several researchers have attempted to enhance the robustness, accuracy, and efficiency of map fusion through various approaches. One such method is the hybrid approach, which combines the strengths of different techniques. For instance, in Ref. [65], the ISS (Intrinsic Shape Signatures) feature [89] and FPFH (Fast Point Feature Histograms) descriptor [90] were employed to obtain the initial transformation parameters R and t, while a modified ICP with a kd-tree was used for precise matching. Collaborative 3D mapping for heterogeneous robots (such as a UAV and a UGV with different sensors) was considered in Ref. [66]. In this case, the point clouds from the UAV and UGV were coarsely matched based on their positions from GPS (Global Positioning System) and IMU, and then feature matching on related images provided accurate matches. The rigid transformation between the two point clouds could then be determined after a voting scheme-based mismatch removal. To address the problem of false map fusion, Zhang et al. [68] developed a two-stage method that modeled the poses of each agent as a pose graph. A χ² test based on the intra- and inter-agent pose loops was then introduced to reject false loops. Similarly, Ref. [70] tackled the issue of large view changes between UAV and UGV through a segmentation-based matching method, followed by obtaining the accurate pose in a coarse-to-fine manner.
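The idea of rejecting false loops with a χ² test can be sketched as follows. This is a simplified 2D illustration, not the exact test of Ref. [68]: it assumes that the relative poses around a loop are given as (x, y, θ) tuples, that the accumulated loop error has a diagonal covariance with hypothetical standard deviations `sigmas`, and that a 95% confidence level is used. The names `compose` and `loop_is_consistent` are made up for this example.

```python
import math

# 95% quantile of the chi-square distribution with 3 degrees of freedom (x, y, theta).
CHI2_95_3DOF = 7.815

def compose(a, b):
    """Compose two SE(2) poses (x, y, theta): pose a followed by relative pose b."""
    ax, ay, at = a
    bx, by, bt = b
    c, s = math.cos(at), math.sin(at)
    theta = math.atan2(math.sin(at + bt), math.cos(at + bt))  # wrap to (-pi, pi]
    return (ax + c * bx - s * by, ay + s * bx + c * by, theta)

def loop_is_consistent(edges, sigmas, threshold=CHI2_95_3DOF):
    """Chain the relative poses around a loop and test the closure error.

    A correct loop composes to the identity pose, so the composed pose itself
    is the residual. Returns (accepted, squared Mahalanobis distance).
    """
    pose = (0.0, 0.0, 0.0)
    for edge in edges:
        pose = compose(pose, edge)
    d2 = sum((r / s) ** 2 for r, s in zip(pose, sigmas))
    return d2 <= threshold, d2
```

A loop made of intra-agent odometry edges plus one candidate inter-agent edge would be accepted only if its closure error is statistically compatible with the assumed noise; a false inter-agent match typically produces a large closure error and is rejected.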

5.2.System-level design

When developing a LiDAR-based collaborative positioning system, several factors must be considered, including computation burden, memory, robustness, and accuracy. In Ref. [67], the authors fused IMU and LiDAR data to obtain the pose for each agent, and added control network constraints from Ground Control Points (GCPs) to construct a non-linear least squares optimization problem for higher accuracy. Since the accuracy of GCPs was high, incorporating them improved the accuracy of the positioning solutions. However, determining the positions of GCPs required manual intervention.

LiDAR-based swarms are capable of high-accuracy mapping, although this is relatively rare due to the weight and size limitations of most small robot teams. In Ref. [72], a parent robot equipped with a laser scanner and a total station and two child robots with markers formed a real-time autonomous swarm for 3D reconstruction of large-scale environments. The agents' motion was designed based on the geometric structure of the markers, and after the two child robots moved, the parent robot could determine their positions using the total station. The parent robot then moved, and its position was determined by the two child robots. This process was repeated until all areas were covered. Unlike other collaborative positioning systems, this approach did not require submap fusion, as the measurements from the total station were very accurate.

Fig.6.Basic paradigm of LiDAR based map fusion.

Collaborative positioning systems based on consumer-level equipment have been proposed to enable fully automatic swarm-based mapping. Ref. [73] proposed a centralized online LiDAR-based multi-robot SLAM system, which used the SegMap descriptor [91] to build an incremental sparse pose graph for optimization. Sequential and place recognition constraints were added to further constrain the graph. Another system, MUC-LOAM [75], was a feature-based multi-robot LiDAR odometry and mapping framework that focused on the uncertainty problem. The covariance of features was quantified by a handcrafted function, and the uncertainty of poses was evaluated by a Bayesian Neural Network (BNN). The experiments showed improved robustness and accuracy. In Ref. [77], DiSCo-SLAM was proposed as a distributed multi-robot SLAM system, which used the Scan Context descriptor to establish correspondences and transfer spatial information efficiently. The poses of agents were corrected by a two-stage global and local optimization framework for map fusion. First, a global-to-local coordinate transformation was established; then a local pose graph optimization was conducted to remove outliers and refine poses. Zhong et al. [76] proposed DCL-SLAM, a fully distributed collaborative LiDAR SLAM framework with minimal information exchange. Similar to the work of Ref. [77], it used LiDAR-Iris descriptors as the main features to establish correspondences in the distributed loop closure module and back-end module. The communication messages were specially designed to improve computation and communication efficiency, following the strategy that only useful information is transferred between agents. The shared messages include a global descriptor for each keyframe, a filtered point cloud with its pose if a loop candidate is found, and the matching result if verification passes. The transfer of the latter two messages is conditional, which reduces redundant communication.

Swarm systems in challenging environments have also been explored. For search and rescue tasks in forest environments, the work of Ref. [71] proposed a centralized IMU/LiDAR/altimeter-based UAV swarm that could perform onboard sensing, estimation, and planning tasks. Object-level map fusion was performed by using the local configuration of a group of trees as a distinctive feature, and cycle-consistent multiway matching was used to eliminate incorrect matches. Li et al. [69] proposed a multiple-vehicle swarm system for autonomous driving scenarios. Using point clouds as inputs, the connections between the pose graphs of different vehicles were built by a registration-based method to construct map fusion constraints. The system showed good performance in city and country road scenarios. However, in degraded scenarios, such as forest roads with repetitive trees and highways with few surrounding references, the positioning accuracy is affected. For underground environments, a centralized multi-robot LiDAR SLAM architecture for large-scale, perceptually degraded subterranean environments was proposed [74]. Each agent has an accurate LiDAR/vision-based front-end and a local map constructor. A server is equipped with a loop closure corrector that uses an incremental consistent measurement set maximization mechanism to ensure correctness.

5.3.Summary

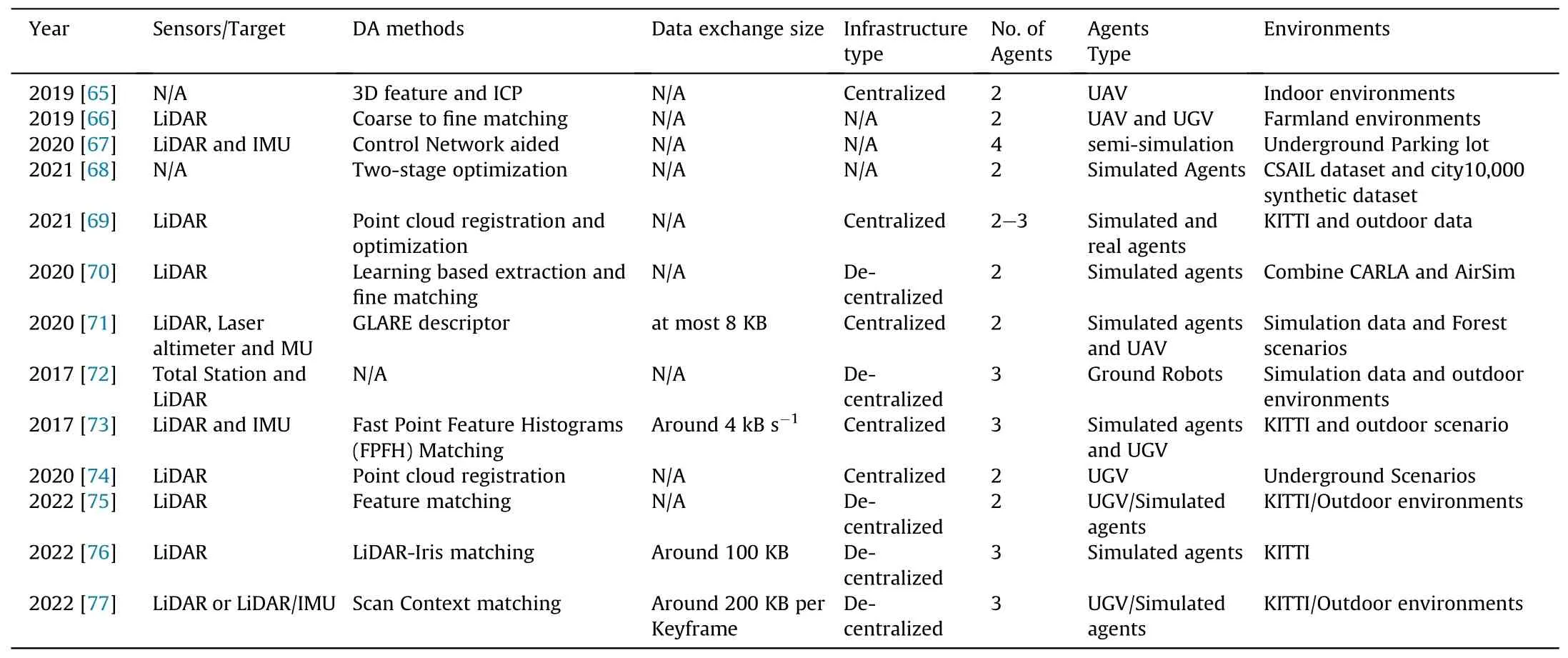

From Table 5, it can be observed that the majority of previous research used only two agents in experiments, with UAVs and UGVs as the common platforms. KITTI is the most frequently used dataset for demonstrations. Although there are fewer papers on LiDAR-based collaborative positioning than on vision-based collaborative positioning, different data association algorithms have been developed to improve accuracy and robustness. Various 3D features, including GLARE (Geometric Landmark Relations) and the FPFH descriptor, have been used to establish correspondences between different maps, and point set (point cloud) registration is an alternative method for this purpose. While most papers do not analyze the size of data exchange, the amount of data exchanged in LiDAR-based collaborative positioning is similar to that of vision-based collaborative positioning, mainly due to the usage of 3D feature descriptors.

Table 5 Summary of current LiDAR based collaborative positioning methods.

6.Wireless based collaborative positioning

Wireless sensor-based cooperative positioning is another commonly used method for collaborative positioning. One common approach to acquire absolute positioning information is to adopt a Global Navigation Satellite System (GNSS) receiver, which is able to provide Positioning, Navigation, and Timing (PNT) services [92]. However, GNSS receivers have limited accuracy and stability due to their signal structure, and they may not function properly in the presence of electromagnetic interference or obstructions. To address these issues, range-based relative positioning sensors such as range radios can be adopted to improve positioning accuracy and reliability [93].

When a GNSS receiver is available, it can provide absolute positioning for each swarm unit. In addition, on-board Inertial Measurement Units (IMUs) and ranging measurement devices such as WiFi, Zigbee, and UWB can be tightly integrated with the GNSS receiver to provide relative positioning services, and the relative coordinate system can be mapped into the absolute coordinate system provided by GNSS sensors [94,95]. To improve the absolute positioning accuracy, optimal projection algorithms can be used. However, when the swarm is in a GNSS-denied environment, other sensors such as IMUs and ranging measurement devices can be utilized to maintain relative positioning. For absolute positioning, algorithms based on statistical signal processing and machine learning can be deployed [96-98]. For instance, Qi et al. [98] formulated the measurements from UWB, IMU, and GPS as a global optimization problem of a nonconvex function to obtain the positioning solution.

Without collaboration, sensors such as GNSS receivers and IMUs obtain measurements on each node, with no mutual information exchange among the member nodes, and each node's positioning information is calculated locally. To achieve collaboration, the swarm must satisfy the following requirements: each node has a unique integer identifier and can discover, range to, and communicate with nearby nodes. The real-time distance between each pair of nodes can be measured using techniques such as Angle Of Arrival (AOA) [99], Time Of Arrival (TOA) [100], Time Difference Of Arrival (TDOA) [101], and Received Signal Strength Indicator (RSSI) [102]. Collaborative positioning can then be performed using the Euclidean distance matrix constructed from all estimated relative distances.

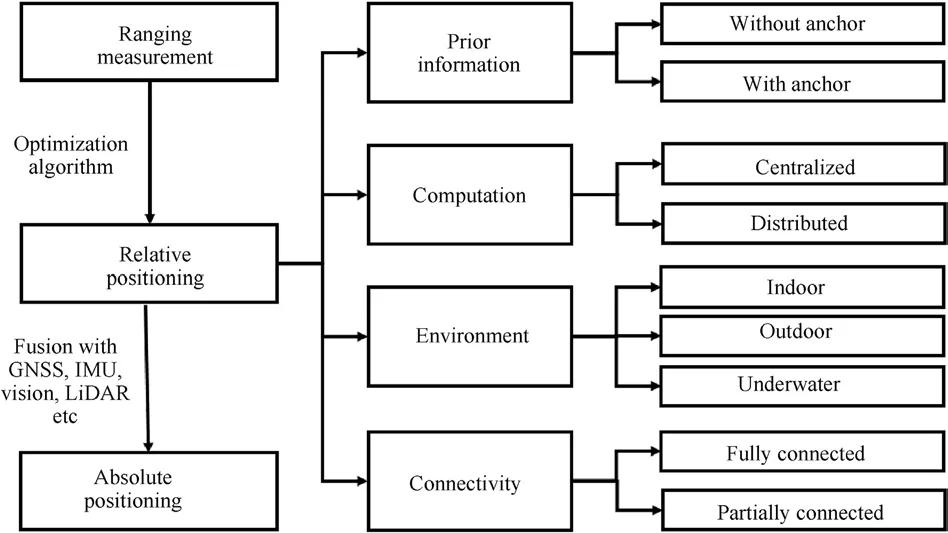

Fig. 7 illustrates the three main steps involved in wireless sensor-based collaborative localization: ranging measurement, relative positioning, and absolute positioning. Optimization algorithms such as Least Squares Estimation and pose graph estimation are used to calculate the positioning solution using observations from the ranging measurement, enabling relative positioning between agents. Range radios used for measuring relative distance can be integrated tightly or loosely with other sensors to obtain the absolute position. Relative positioning can be divided based on four dimensions: prior information, computation, environments, and connectivity.
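As an illustration of least squares estimation from ranging measurements, the sketch below locates one node in 2D from its ranges to nodes with known positions, using Gauss-Newton iterations. It is a minimal example under assumed conditions (2D geometry, at least three non-collinear reference nodes, unweighted residuals); the function name `locate_by_ranges` and its parameters are hypothetical and not taken from any cited work.

```python
import math

def locate_by_ranges(anchors, ranges, guess=(0.0, 0.0), iters=20):
    """Gauss-Newton least squares for a 2D position from ranges to known points."""
    x, y = guess
    for _ in range(iters):
        # Accumulate the 2x2 normal equations (J^T J) d = J^T r.
        a11 = a12 = a22 = g1 = g2 = 0.0
        for (ax, ay), rng in zip(anchors, ranges):
            dx, dy = x - ax, y - ay
            pred = math.hypot(dx, dy)
            if pred == 0.0:
                continue  # Jacobian undefined exactly at a reference node
            jx, jy = dx / pred, dy / pred   # d(range)/d(x, y)
            res = rng - pred                # measured minus predicted range
            a11 += jx * jx
            a12 += jx * jy
            a22 += jy * jy
            g1 += jx * res
            g2 += jy * res
        det = a11 * a22 - a12 * a12
        if abs(det) < 1e-12:
            break  # degenerate geometry, e.g. collinear reference nodes
        x += (a22 * g1 - a12 * g2) / det
        y += (a11 * g2 - a12 * g1) / det
    return x, y
```

In a swarm, the same residual form (measured range minus predicted range) would appear once per ranging link, with the positions of all nodes as unknowns.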

The current development of wireless sensor-based methods can be divided into seven aspects.In the following section, we will provide a brief review of related papers on each aspect.

6.1.Improved ranging methods

For missions requiring high accuracy, TOA is a widely used method. In this approach, timing tags or pseudo-range measurements are exchanged between the nodes via communication links. Since the signal propagation speed is known, the relative distance can be calculated by measuring the signal propagation time. However, the signal propagation time is influenced by factors such as clock error, space disturbance, and device delay, which need to be separated from the aggregated delays. The accuracy of the ranging measurement is thus determined by the parameter estimation error.

Fig.7.The basic paradigm of wireless sensor based collaborative positioning.

Similar to GNSS, a one-way centralized network is a viable option for ranging measurements. One notable example is the Locata pseudolite system [103], which can achieve centimeter-level positioning accuracy. In this system, the positions of the base stations are fixed and known, allowing the clock error between the master and slave stations to be decoupled from the one-way communication link. Furthermore, if the positions of the system members are fixed but unknown, traditional Two-Way Ranging (TWR) [104] can be used to estimate clock error parameters and ranging parameters.

Because of the fixed positions, the signal transmission delays of the forward links and reverse links are equal. The clock error can be modeled by a linear model or a second-order polynomial, turning the ranging problem into a parameter estimation problem. Numerous statistical signal processing algorithms have been proposed to solve this problem. An affine model has been suggested to linearize the observation equation. After a few observation timestamps are collected locally, least squares (LS) estimation algorithms based on this model can be applied [105]. Alternatively, the Kalman Filter (KF) can be applied if the clock model and ranging model are expressed by the state equation and observation equation, respectively [106,107].
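The joint estimation of range and clock parameters for fixed nodes can be sketched with a linear clock model and ordinary least squares. The example below is an illustrative simplification, not the algorithm of Refs. [104,105]: it assumes node A's clock is the reference, node B's clock reads true time plus an offset that grows linearly (offset plus drift times time), device delays are already calibrated out, and each exchange yields four timestamps (t1, t4 on A's clock; t2, t3 on B's clock). The names `fit_line` and `twr_estimate` are hypothetical.

```python
C = 299_792_458.0  # speed of light in m/s

def fit_line(ts, ys):
    """Ordinary least-squares fit ys ~ a + b * ts; returns (a, b)."""
    n = len(ts)
    mt = sum(ts) / n
    my = sum(ys) / n
    b = (sum((t - mt) * (y - my) for t, y in zip(ts, ys))
         / sum((t - mt) ** 2 for t in ts))
    return my - b * mt, b

def twr_estimate(exchanges):
    """Estimate (distance_m, clock_offset_s, clock_drift) from two-way exchanges.

    exchanges: list of (t1, t2, t3, t4). A transmits at t1, B receives at t2 and
    replies at t3, A receives at t4.
    """
    mids, offsets, tofs, replies = [], [], [], []
    for t1, t2, t3, t4 in exchanges:
        fwd, rev = t2 - t1, t4 - t3      # forward and reverse apparent delays
        tofs.append((fwd + rev) / 2)     # clock offset cancels in the sum
        offsets.append((fwd - rev) / 2)  # propagation delay cancels in the difference
        mids.append((t1 + t4) / 2)       # time at which that offset applies
        replies.append(t3 - t2)          # reply delay measured on B's clock
    offset0, drift = fit_line(mids, offsets)
    # The drift accumulated during the reply delay biases each time of flight
    # by -drift * reply / 2; add it back before averaging.
    corrected = [tof + drift * rep / 2 for tof, rep in zip(tofs, replies)]
    return C * sum(corrected) / len(corrected), offset0, drift
```

The sum and difference of the forward and reverse delays separate the propagation time from the clock offset only because both links have the same delay, which is the fixed-position assumption stated above.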

Furthermore, in systems where member nodes are in motion and their positions and velocities are known in advance, the signal transmission delay for each single forward or reverse link can be directly calculated using relative kinematic theory. For example, the GNSS inter-satellite link achieves sub-nanosecond level clock synchronization by using the prior information provided by the ephemeris [108]. However, if all the member nodes move arbitrarily, the signal transmission delays of the forward and reverse links may not be equal, making the parameter estimation methods mentioned above infeasible due to an increasing number of unknown parameters. Although the joint ranging and clock synchronization process can still be considered a parameter estimation problem, it cannot meet the requirement of full column rank. In Ref. [109], both the forward and reverse links were represented by the same Taylor expansion, and the transmission time instants of the slave node were approximated using the reception timing tags of the master node. Such a model can meet the requirement of full column rank and is applicable to low-velocity scenarios.

6.2.Ranging information communication

Efficient transfer of ranging information is crucial for large-scale and highly mobile swarms. Delays in transferring such information can lead to outdated positioning data and task failures. To address this challenge, Shan et al. [110] proposed a universal Ultra-Wideband (UWB) protocol that facilitates efficient transfer of wireless ranging information and other data in dynamic and dense swarms. The protocol adjusts the ranging frequency in real time based on the changing distance between each pair of nodes, thereby enabling adaptive ranging frequency and efficient transfer of ranging information.

6.3.With anchor nodes

After obtaining the ranging measurements between each pair of nodes, collaborative positioning can be achieved through Multidimensional Scaling (MDS). The MDS-based approaches for collaborative positioning were summarized in Ref. [111]. As a method for reducing dimensionality, MDS takes a Euclidean distance matrix as input and maps a set of nodes onto an n-dimensional space. When accurate information about anchor nodes is available, one can apply a roto-translation transformation after the MDS computation to improve the accuracy of localization by superimposing the estimated coordinates over the anchors [112]. Alternatively, by modifying the cost functions or adding constraints, the information of anchor nodes can be used in the minimization process [113,114]. These approaches are typically implemented in a centralized network, where all the ranging data is processed at a central processing unit. In Ref. [115], a distributed algorithm was proposed for MDS-based positioning on each member node with limited exchange of information with its neighbors. Additionally, the network with anchor nodes can be designed as a tree structure, where the anchor nodes are accurately synchronized and broadcast data frames at desired time slots. In Ref. [116], a distributed time division multiple access scheme for the anchor nodes was proposed, and the positions were calculated based on gradient descent/ascent methods. Moreover, the number and positions of anchor nodes can have an impact on the positioning accuracy. Therefore, to simultaneously localize multiple targets using anchors as shared sensors, anchor selection and placement should also be considered [117,118].
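A minimal sketch of classical MDS followed by a roto-translation onto anchors is given below. It is written for 2D with the standard library only and makes several assumptions that are not from the cited works: the distance matrix is complete and noise-free enough for power iteration to converge, at least three non-collinear anchors are available (needed to resolve the mirror ambiguity), and the helper names `classical_mds_2d`, `_fit_rigid`, and `align_to_anchors` are hypothetical.

```python
import math

def classical_mds_2d(dist, iters=200):
    """Classical MDS: relative 2D coordinates from a full pairwise distance matrix."""
    n = len(dist)
    d2 = [[dist[i][j] ** 2 for j in range(n)] for i in range(n)]
    row = [sum(r) / n for r in d2]
    tot = sum(row) / n
    # Double centring: B = -1/2 * J * D^2 * J (Gram matrix of centred coordinates).
    b = [[-0.5 * (d2[i][j] - row[i] - row[j] + tot) for j in range(n)] for i in range(n)]
    coords = [[0.0, 0.0] for _ in range(n)]
    for axis in range(2):
        v = [float(i + 1) for i in range(n)]  # deterministic start vector
        for _ in range(iters):                # power iteration for the top eigenvector
            w = [sum(b[i][j] * v[j] for j in range(n)) for i in range(n)]
            norm = math.sqrt(sum(x * x for x in w))
            v = [x / norm for x in w]
        lam = sum(v[i] * sum(b[i][j] * v[j] for j in range(n)) for i in range(n))
        for i in range(n):
            coords[i][axis] = math.sqrt(max(lam, 0.0)) * v[i]
        # Deflate so the next pass finds the second eigenvector.
        b = [[b[i][j] - lam * v[i] * v[j] for j in range(n)] for i in range(n)]
    return coords

def _fit_rigid(src, dst):
    """Closed-form 2D rotation + translation mapping src onto dst; returns (map, error)."""
    n = len(src)
    cs = [sum(p[k] for p in src) / n for k in range(2)]
    cd = [sum(q[k] for q in dst) / n for k in range(2)]
    dot = cross = 0.0
    for p, q in zip(src, dst):
        ax, ay = p[0] - cs[0], p[1] - cs[1]
        bx, by = q[0] - cd[0], q[1] - cd[1]
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx
    th = math.atan2(cross, dot)
    c, s = math.cos(th), math.sin(th)

    def apply(p):
        return (c * (p[0] - cs[0]) - s * (p[1] - cs[1]) + cd[0],
                s * (p[0] - cs[0]) + c * (p[1] - cs[1]) + cd[1])

    err = sum((apply(p)[0] - q[0]) ** 2 + (apply(p)[1] - q[1]) ** 2
              for p, q in zip(src, dst))
    return apply, err

def align_to_anchors(coords, anchors):
    """Map relative MDS coordinates into the anchor frame.

    anchors: dict {node index: known (x, y)}. Both mirror images are tried
    because MDS output is only defined up to rotation, translation, and reflection.
    """
    idx = sorted(anchors)
    best = None
    for flip in (1.0, -1.0):
        cand = [(x, flip * y) for x, y in coords]
        apply, err = _fit_rigid([cand[i] for i in idx], [anchors[i] for i in idx])
        if best is None or err < best[0]:
            best = (err, [apply(p) for p in cand])
    return best[1]
```

The first function corresponds to relative positioning, and the second to the step that superimposes the estimated coordinates over the anchors to obtain absolute positions.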

6.4.Velocity-assisted

Incorporating relative velocity information into positioning is a crucial step towards more accurate localization. One approach is to combine relative velocity measurements with relative position to build an Extended Kalman Filter (EKF) estimator, as proposed in Ref. [119]. In Ref. [120], a distributed consensus filter was presented that utilizes measurements of both relative position and velocity. Similarly, in Ref. [121], the authors presented a distributed KF method that integrates measurements to estimate the target state. Another method that combines velocity and ranging measurements is based on MDS. In Ref. [122], the authors proposed vMDS, a non-convex optimization method that utilizes velocity and ranging measurements. An improved Broyden-Fletcher-Goldfarb-Shanno (BFGS) algorithm was also introduced to solve the framework, outperforming vMDS in both accuracy and convergence speed. In Ref. [123], Li et al. proposed a vanilla EKF algorithm for UWB localization, utilizing an augmented state vector with acceleration bias in all three axes for updates in the EKF algorithm.
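How velocity measurements enter a filter alongside position measurements can be illustrated with a deliberately simplified stand-in: a linear Kalman filter on a 1D relative state, rather than the EKF formulations of the cited works. The sketch assumes a constant-velocity motion model, independent noise on the position and velocity measurements (so they can be applied as sequential scalar updates), and hypothetical noise parameters `q`, `r_pos`, and `r_vel`.

```python
def kf_step(x, P, z_pos, z_vel, dt, q=1e-4, r_pos=0.01, r_vel=0.01):
    """One predict/update cycle for the state x = [relative position, relative velocity]."""
    p, v = x
    # Predict with a constant-velocity model, F = [[1, dt], [0, 1]], Q = q * I.
    p = p + v * dt
    p11 = P[0][0] + dt * (P[0][1] + P[1][0]) + dt * dt * P[1][1] + q
    p12 = P[0][1] + dt * P[1][1]
    p22 = P[1][1] + q
    # Sequential scalar updates: first the position, then the velocity measurement.
    for h, z, r in (((1.0, 0.0), z_pos, r_pos), ((0.0, 1.0), z_vel, r_vel)):
        ph1 = p11 * h[0] + p12 * h[1]       # first entry of P h^T
        ph2 = p12 * h[0] + p22 * h[1]       # second entry of P h^T
        s = h[0] * ph1 + h[1] * ph2 + r     # innovation variance
        k1, k2 = ph1 / s, ph2 / s           # Kalman gain
        innov = z - (h[0] * p + h[1] * v)
        p, v = p + k1 * innov, v + k2 * innov
        p11, p12, p22 = p11 - k1 * ph1, p12 - k1 * ph2, p22 - k2 * ph2
    return [p, v], [[p11, p12], [p12, p22]]
```

With range-only measurements in 2D or 3D the measurement function becomes nonlinear, which is where the EKF linearization used in the cited works would replace the fixed measurement vectors `h` of this sketch.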

6.5.Graph theory

To enhance the accuracy of collaborative positioning in the absence of prior information, extra information such as velocity and anchor nodes can be incorporated. The connectivity topology can also serve as an additional source of information by collecting ranging information among the system members through integrated communication and navigation signals. In Ref. [124], a gradient-based local optimization method for rigidity was proposed, although constraints between member nodes at different times were not discussed. In Ref. [125], both distance and direction constraints were considered, and graph rigidity theory was used to analyze the localizability conditions of the algorithm. Furthermore, the relationship between distance and time in TOA measurements provides an invariant equality involving both position and clock information. In Ref. [126], the authors presented a new perspective based on rigidity, in which the clock synchronization process can be regarded as clock rigidity. By combining distance and clock rigidity, a joint position-clock estimation algorithm was proposed.
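The role of graph rigidity in localizability can be illustrated with the standard rank test on the rigidity matrix: in 2D, a framework with n nodes is infinitesimally rigid when the matrix built from its ranging links has rank 2n - 3. The sketch below is a generic textbook check for distance constraints only, not the algorithm of Refs. [124-126]; the function names are hypothetical, and the tolerance `tol` is an assumed value.

```python
def matrix_rank(rows, tol=1e-9):
    """Rank via Gaussian elimination with partial pivoting."""
    m = [list(r) for r in rows]
    ncols = len(m[0]) if m else 0
    rank = 0
    for col in range(ncols):
        pivot = max(range(rank, len(m)), key=lambda i: abs(m[i][col]), default=None)
        if pivot is None or abs(m[pivot][col]) < tol:
            continue
        m[rank], m[pivot] = m[pivot], m[rank]
        for i in range(rank + 1, len(m)):
            f = m[i][col] / m[rank][col]
            for j in range(col, ncols):
                m[i][j] -= f * m[rank][j]
        rank += 1
    return rank

def is_infinitesimally_rigid_2d(positions, edges):
    """True if the distance-constrained framework has only trivial motions in 2D."""
    n = len(positions)
    rows = []
    for i, j in edges:
        dx = positions[i][0] - positions[j][0]
        dy = positions[i][1] - positions[j][1]
        row = [0.0] * (2 * n)
        row[2 * i], row[2 * i + 1] = dx, dy      # derivative w.r.t. node i
        row[2 * j], row[2 * j + 1] = -dx, -dy    # derivative w.r.t. node j
        rows.append(row)
    # Three trivial motions remain in the plane: two translations and one rotation.
    return matrix_rank(rows) == 2 * n - 3
```

For example, four nodes connected in a ring can still flex, whereas adding one diagonal ranging link makes the formation rigid, so its shape is fixed by the measured distances.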

6.6.All-nodes in motion

Compared to networks that require anchor nodes, systems with all nodes in motion have wider application potential. In fact, networks with anchor nodes can be viewed as a special case of anchorless networks. In such scenarios, batch filters can be used, requiring a series of measurements over a short period of time. Furthermore, assuming that clock parameters are constant during this period and that relative motion can be fitted using a Maclaurin series, LS-based estimators can be used with timing measurements [127] or pseudo-range measurements [128] obtained through peer-to-peer ranging measurements. However, the problem can be simplified if other positioning sensors are used, such as GNSS and Inertial Navigation Systems (INS). In Ref. [129], a collaborative localization method for UAVs was proposed using a distributed event-triggered EKF method that shared INS data, GNSS pseudoranges, and signal-of-opportunity pseudoranges.

6.7.Deep learning

Different from conventional methods, machine learning techniques are able to perform localization by capturing all probable range values instead of a single estimate of each distance. Therefore, they could be implemented in some particular scenarios. In Ref. [97], the authors adopted dimensionality reduction techniques to map the range measurements to low-dimensional features, and then obtained the generative model using density estimation algorithms. In Ref. [130], in order to achieve high-accuracy localization with RSS-based fingerprints, a novel kernel method was proposed to select beacons optimally. In Ref. [131], an estimator that combines a Convolutional Neural Network (CNN) and Long Short-Term Memory (LSTM) was proposed to classify Line-Of-Sight (LOS) and Non-Line-Of-Sight (NLOS) signals before performing localization. Although collaborative localization benefits from machine learning techniques, current applications are limited by the large computing resources required. Machine learning could improve the localization performance in scenarios such as satellite formation flying, indoor positioning, and robot systems, since massive training data could be collected to perform supervised learning. However, in scenarios where member nodes move randomly, deep learning techniques are not effective. In addition, the Wireless Insite® software could be used to provide 3D ray-tracing for the analysis of site-specific radio wave propagation and wireless communication systems. By combining this software with a particular map, the user is able to obtain an evaluation environment with a series of channel state information data in both the time domain and the frequency domain. Numerous public fingerprinting datasets are also provided for indoor positioning; some of the representative works are [132]: UJI 1-2, LIB 1-2 (collected at Universitat Jaume I, Spain), MAN 1-2 (collected at University of Mannheim, Germany), TUT 1-7 (collected at Tampere University, Finland), and UTSIndoorLoc (collected at University of Technology Sydney, Australia). Moreover, the existing datasets for indoor-outdoor positioning are summarized in Ref. [133].

6.8.Summary

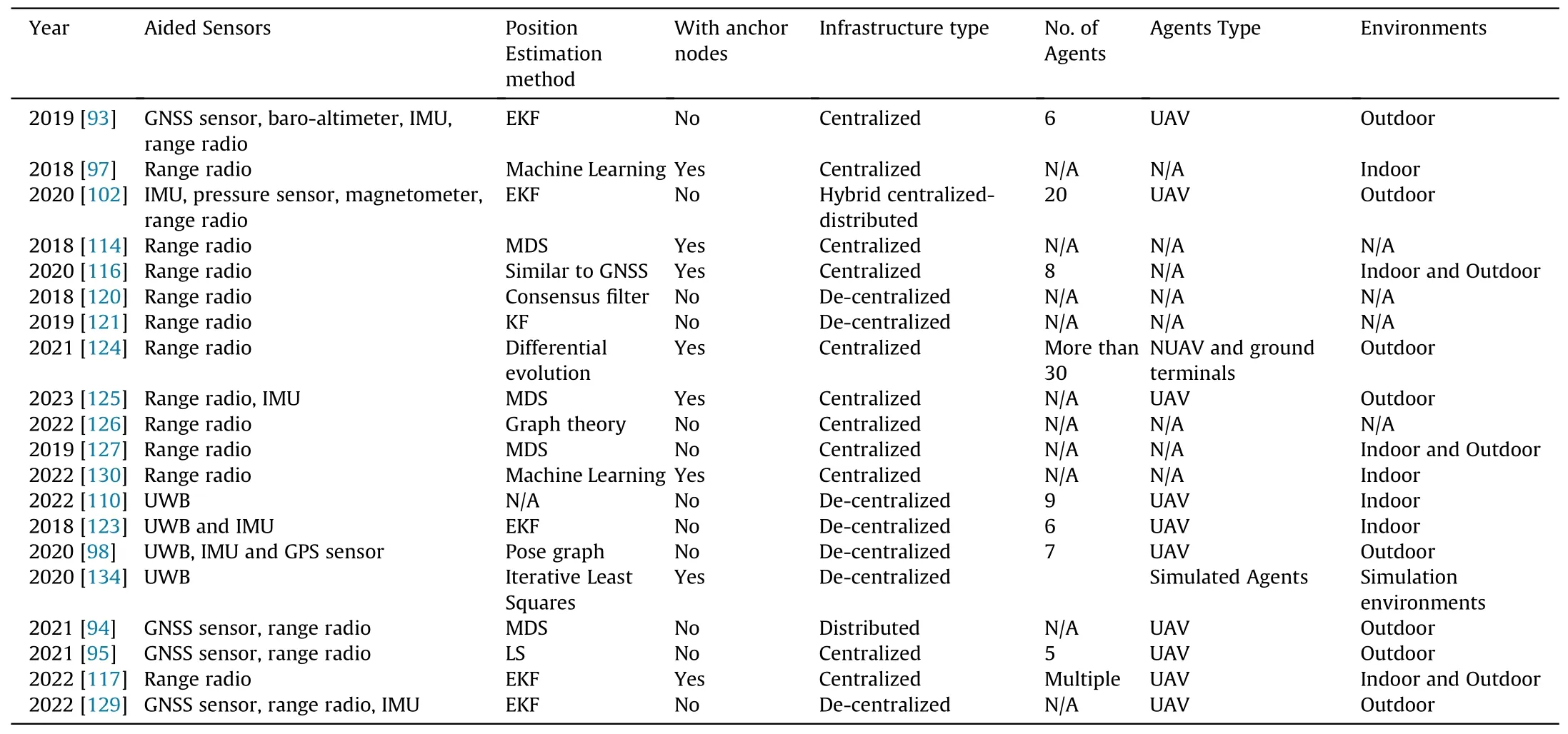

Table 6 summarizes and compares the literature mentioned above. In many scenarios, the key components of collaborative localization are relative positioning and autonomous positioning. Wireless range radio is often used to measure relative distance, followed by autonomous and relative positioning. When fused with other sensors like GNSS receivers, the relative position space can be mapped to the absolute position space for high-precision absolute positioning. These wireless sensor-based approaches can also be combined with GNSS and IMU to achieve robust and stable performance. While traditional methods remain the primary approach for collaborative positioning, machine learning-based methods have been used as a new paradigm for parameter estimation. The number of agents can be up to 30, which is generally larger than in vision- and LiDAR-based methods. It should be noted that some papers focused on theoretical aspects and did not have a fully operational collaborative positioning system, and thus the experiments do not specify a clear number of agents. As with the other two categories, UAVs are the main type of agents, and experiments are conducted in both indoor and outdoor environments.

7.Vision, LiDAR and wireless integrated collaborative positioning method

Collaborative positioning methods based on a single sensor have limitations due to the characteristics of the sensor. Normal vision sensors, for example, cannot capture useful information in textureless and poorly illuminated environments. Although LiDAR measurements are generally more accurate, LiDAR loses color information, and the accuracy can be affected by rain and fog. Signals from wireless sensors can be easily affected by environmental factors, such as the NLOS effect, and may rely on external infrastructure or anchors, which constrains their applicable range. Therefore, the integration of the three types of sensors makes it possible to compensate for their individual weaknesses and expand their scope of application.


Table 6 Summary of current wireless sensor based collaborative positioning methods.

Table 7 Summary of current integration based collaborative positioning methods.

The integration of camera and LiDAR has become popular in many scenarios such as autonomous driving due to their complementarity in odometry-based pose estimation. One of the earliest works can be traced back to 2006, when P. Newman et al. used images and point clouds for loop detection and closing [25]. While many single-agent methods have been proposed, multiple-agent methods are relatively few [135,136]. In Ref. [136], the pose of each agent was estimated by tightly coupled visual-LiDAR odometry, and the pose graph from the camera on the UAV and the LiDAR on the UGV was jointly optimized to provide more accurate poses for the global map. David et al. [137] proposed an onboard positioning system that could perform vision- and LiDAR-based odometry separately. Odometry constraints, loop-closure constraints, and GPS constraints were integrated into a pose graph for accurate poses. In the work of Ref. [138], an agent was equipped with a UWB module that generated relative positioning information between two agents. The signal was loosely coupled with visual-inertial positioning by adding a residual term in the optimization process.

The loosely coupled integration system is relatively less complex, as the raw observations are not directly used in pose estimation. In Ref. [139], visual information was loosely integrated with point clouds from LiDAR. The visual information was only used for loop detection, and a RANSAC-based approach was used to detect and remove inconsistent loops. Wang et al. [140] used maps from a UAV as a reference, and a UGV calculated its pose and updated the map simultaneously.

Research on the integration of visual information and wireless signals such as UWB is also an active field. In Ref. [141], a UGV equipped with LiDAR and UWB and a UAV equipped with a VIO system and UWB formed a heterogeneous multi-agent system. Relative positioning was achieved by pose graph optimization using relative measurements from UWB and VIO poses. Similarly, Hao et al. [142] proposed a relative positioning method based on a loosely coupled UWB and VIO system. The VIO module inside UAV α provided a relative positioning solution for itself, while another UAV β was detected and tracked by UAV α. The UWB measurements provided their relative distance, and the poses from VIO and the distance from UWB formed the constraints used to optimize relative poses. Therefore, the poses of UAV α and UAV β could be determined accurately.

Table 7 indicates that the number of papers focusing on the integration of vision, LiDAR, and wireless sensors is relatively low compared to those that use single-sensor-based methods. This suggests that integrating multiple sensors on multiple agents is a complex task. Based on the analysis, the most popular sensor combinations are: (1) vision and LiDAR sensors; (2) UWB and other sensors. Most researchers use two agents for demonstration purposes, and feature matching remains the primary method for establishing connections between different maps from different agents. However, in some cases, a combination of feature matching and point set registration can improve the robustness of the system.

8.Challenges and open issues

Based on the analysis of the development of collaborative positioning, several challenges and open issues can be identified. However, it should be noted that some of these challenges and open issues are similar to those for single-agent positioning, such as robustness and accuracy, reliability and integrity, and the exploration of new perception sensors. This section will focus more on the challenges and open issues specific to collaborative positioning.

8.1.Limited and unstable data communication

Although modern communication technologies such as 5G can alleviate the pressure on data transfer, the efficiency issue still needs to be taken into consideration, especially when dealing with a large number of agents (e.g., 100 agents) or large-scale environments. Few papers focus on the data transmission problem, as shown in Sections 3, 4, and 5. How to minimize the data size and maximize the effective information, especially from image and LiDAR data, remains an open issue. For collaborative positioning based on vision and LiDAR, the key problem is the simplified representation of local maps. For example, point clouds covering an area of 1 square kilometer can reach the GB level. Semantic maps are one applicable method for extracting useful spatial information. There have been many attempts to address this problem. For instance, in the work of Ref. [71], an object-based representation was proposed for low-bandwidth communication requirements in a forest scenario. In Ref. [143], instead of exchanging full map data between all robots, data association between two agents was performed only by a NetVLAD [144] global descriptor. Once image matching succeeds, only the data required for relative pose estimation is sent, which reduces the size of data exchange.

The general principle of training deep neural networks (DNNs) is to find distinctive and informative features in the data. However, how to define and detect such features in collaborative maps remains an open challenge. With appropriate DNNs, the size of maps can be reduced to the MB or even KB level, further reducing the need for transferring spatial information. But the retained maps should be able to support modules in collaborative positioning. Therefore, controlling the semantic information extraction process becomes crucial. If the semantic information extraction is too strict, it may result in significant loss of useful information, leading to difficulties in map fusion. Conversely, if it is too loose, the compression may not be significant. Thus, finding the optimal balance between these two extremes requires further exploration by researchers.

Collaborative positioning using wireless sensors faces challenges when it comes to stable communication links and large bandwidth in large-scale swarms or environments.Despite some pioneering works on message design [110] and communication infrastructure [134] for large swarms, there is limited research on the impact of unstable communication links in swarms and how positioning algorithms can address this issue.More research is needed to understand and mitigate the effects of unstable communication links on collaborative positioning using wireless sensors.

8.2.Computation burden for agents

For decentralized and partially centralized collaborative positioning systems, real-time performance is limited by power consumption and computational ability. Therefore, the positioning algorithm must not be too complex. In terms of data size, the requirements of vision- and LiDAR-based methods are much larger than those of wireless sensor-based methods. However, there have been few papers addressing this problem. One approach is DDF-SAM [146], which contains local optimizer, communication, and neighborhood optimizer modules. A hard-constrained factor graph is proposed to enhance the robustness to agent failure. This approach achieved low computational cost and communication bandwidth requirements, while being robust to node failure and changes in network topology. Another approach is DDF-SAM 2.0 [147], which combines both local and neighborhood information into a single incremental Bayes tree solver to further reduce the computational burden. However, there has been no comprehensive and systematic research on how to reduce the computation burden of the main agent in a centralized swarm.

8.3.Robustness and accuracy

Robustness and accuracy are common problems in positioning systems.Many papers have focused on improving these aspects in single-agent positioning systems, and this will continue to be a concern in collaborative positioning [148].

There are two categories of common issues for vision-based collaborative positioning: factors that have a direct influence on data association [149], such as blurring, weak texture or structure, illumination changes, dynamic objects, large viewpoint changes, weather changes, and season changes, which can cause incorrect data association; and factors related to the estimation process for poses. In a large-scale environment, a large proportion of objects are far away from the cameras, which can make it difficult to obtain enough constraints for pose estimation even under normal conditions. To improve the robustness and accuracy of vision-based collaborative positioning, advanced feature matching or registration methods, deep learning methods, mismatch removal, and proper pipeline design of map fusion can be used to enhance the data association and optimal estimation. Data-driven methods can also be leveraged to address the latter issue. For instance, newly proposed NeRF-based SLAM approaches such as NICE-SLAM [150] can provide a different perspective compared to traditional methods. They optimize the loss on photometric observations without feature extraction and matching, and can achieve high-level mapping solutions, as fully dense maps can be optimized.

LiDAR-based collaborative positioning may face several unfavorable factors. One is degraded structures (e.g., tunnels or environments with no references), which cause consecutive LiDAR scans to be almost identical, so that differences along one dimension are lost. Therefore, multi-sensor fusion-based methods that incorporate observations from inertial, vision, or wireless sensors may be more feasible than LiDAR-only methods in this challenging scenario. This highlights the high academic value of collaborative positioning in complex environments. Another factor is time synchronization with other sensors. While time difference estimation between LiDAR and other sensors on a single platform has been well researched, the time calibration problem in collaborative positioning systems, which must treat the constraints from all agents as a whole, is still an open issue. These constraints include agent-level and sensor-level information.
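The loss of one dimension in a degraded structure can be illustrated with a small numerical sketch. It assumes a point-to-plane scan-matching formulation, in which the translation block of the Gauss-Newton information matrix is the sum of n n^T over the surface normals n; this formulation, the function names, and the threshold are illustrative assumptions and are not taken from any of the surveyed methods.

```python
import numpy as np

def translation_information(normals):
    """Translation block of a point-to-plane Gauss-Newton Hessian: sum of n n^T."""
    n = np.asarray(normals, dtype=float)
    return n.T @ n

def degenerate_directions(normals, ratio_threshold=1e-3):
    """Return eigenvalues and the directions that are poorly constrained.

    A direction is flagged when its eigenvalue is below
    ratio_threshold * largest eigenvalue (hypothetical heuristic).
    """
    H = translation_information(normals)
    eigvals, eigvecs = np.linalg.eigh(H)  # eigenvalues in ascending order
    mask = eigvals < ratio_threshold * eigvals[-1]
    return eigvals, eigvecs[:, mask]

# Synthetic tunnel along the x axis: every wall normal lies in the y-z plane.
angles = np.linspace(0.0, 2.0 * np.pi, 200, endpoint=False)
tunnel_normals = np.stack(
    [np.zeros_like(angles), np.cos(angles), np.sin(angles)], axis=1
)
eigvals, weak = degenerate_directions(tunnel_normals)
print("eigenvalues:", eigvals)
print("unconstrained direction(s):", weak.T)

# A box-like room has normals along all three axes, so nothing is flagged.
room_normals = np.vstack([np.eye(3), -np.eye(3)])
_, weak_room = degenerate_directions(room_normals)
print("room degenerate directions:", weak_room.shape[1])
```

In the tunnel case the only flagged direction is the tunnel axis, which is where an additional constraint from an inertial, vision, or wireless sensor would be needed.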

For wireless sensor-based collaborative positioning, there are several challenging factors that can affect its robustness and accuracy, including space disturbance, electromagnetic interference, and obstacle occlusion. These factors can lead to two outcomes: degradation of ranging accuracy and invisible communication links. To address the former, one possible approach is classification, which can be used to filter out unusable data, and statistical signal processing algorithms can be adopted to improve ranging accuracy. For invisible communication links, a feasible solution is to estimate missing data using past information. Once the missing links are completed by the estimated data, traditional collaborative localization methods can be applied. Alternatively, optimization algorithms can be used to perform positioning using partial information.
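Positioning from partial information can be sketched as a range-based least-squares problem in which invisible links are simply masked out. The example below is a minimal Gauss-Newton solver under assumed conditions (known neighbor positions, noise-free ranges, missing links marked as NaN); the function name and the data are hypothetical and do not reproduce any surveyed algorithm.

```python
import numpy as np

def locate_with_partial_ranges(anchors, ranges, x0, iterations=20):
    """Gauss-Newton position estimate that ignores missing (NaN) ranges."""
    anchors = np.asarray(anchors, dtype=float)
    ranges = np.asarray(ranges, dtype=float)
    valid = ~np.isnan(ranges)  # visible links only
    if valid.sum() < anchors.shape[1] + 1:
        raise ValueError("not enough visible links for a unique position")
    a, r = anchors[valid], ranges[valid]
    x = np.asarray(x0, dtype=float).copy()
    for _ in range(iterations):
        diff = x - a
        dist = np.linalg.norm(diff, axis=1)
        J = diff / dist[:, None]   # derivative of each distance w.r.t. x
        residual = r - dist        # measured minus predicted range
        step, *_ = np.linalg.lstsq(J, residual, rcond=None)
        x = x + step
        if np.linalg.norm(step) < 1e-9:
            break
    return x

# Five neighbors with known positions; two links are occluded.
anchors = np.array([[0.0, 0.0], [10.0, 0.0], [0.0, 10.0], [10.0, 10.0], [5.0, 12.0]])
true_position = np.array([3.0, 4.0])
ranges = np.linalg.norm(anchors - true_position, axis=1)
ranges[[3, 4]] = np.nan  # invisible communication links

estimate = locate_with_partial_ranges(anchors, ranges, x0=[5.0, 5.0])
print("estimated position:", estimate)
```

With real measurements the remaining ranges would be noisy, and the geometry of the visible links would determine how much accuracy is lost.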

8.4.Reliability and integrity

Reliability can be assessed in terms of internal and external reliability to measure the performance of outlier detection in the observation domain. Integrity, on the other hand, reflects the level of trust in the positioning domain. While there has been a considerable amount of research on the reliability and integrity of single-agent multi-sensor fusion, the same cannot be said for collaborative positioning due to various factors. Although certain principles underlying wireless sensor-based collaborative positioning are similar to those of GNSS, vision- and LiDAR-based collaborative positioning involves multiple steps in a pipeline, making uncertainty propagation unclear. Furthermore, nonlinear and non-Gaussian noise is often present, making traditional reliability analysis and integrity monitoring methods less effective. One promising approach is to use deep neural networks (DNNs) to learn the positioning error model by testing various scenarios. Several large-scale datasets, including Cityscapes [151] (city data), TartanAir [152] (simulated data), and UAV data [153], have been used to verify the correctness and compare the performance of many positioning methods. However, it remains challenging to define the differences between cases and establish error rules that apply universally.
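For readers less familiar with reliability theory, outlier detection in the observation domain can be sketched with the classical normalized-residual (w-test) procedure for a linear Gaussian model. This is a textbook illustration under assumed conditions (uncorrelated observations with equal, known standard deviation); the critical value 3.29 and non-centrality parameter 4.13 are conventional choices assumed here, and the data are invented.

```python
import numpy as np

def w_test(A, y, sigma, critical=3.29):
    """Normalized residuals for y = A x + e with e ~ N(0, sigma^2 I).

    Returns the w statistics, the redundancy numbers, and the index of the
    most suspicious observation (or None if no |w| exceeds `critical`).
    Assumes every observation has non-zero redundancy.
    """
    A = np.asarray(A, dtype=float)
    y = np.asarray(y, dtype=float)
    hat = A @ np.linalg.solve(A.T @ A, A.T)  # projection onto the model
    R = np.eye(len(y)) - hat                 # residual-forming matrix
    v = R @ y                                # least-squares residuals
    r = np.diag(R)                           # redundancy numbers
    w = v / (sigma * np.sqrt(r))
    worst = int(np.argmax(np.abs(w)))
    suspect = worst if abs(w[worst]) > critical else None
    return w, r, suspect

def minimal_detectable_bias(r, sigma, delta0=4.13):
    """Internal reliability: smallest bias detectable in each observation."""
    return sigma * delta0 / np.sqrt(r)

# Six repeated measurements of one quantity; the last one is biased.
A = np.ones((6, 1))
y = np.array([10.02, 9.98, 10.01, 9.99, 10.00, 10.60])
sigma = 0.02

w, r, suspect = w_test(A, y, sigma)
mdb = minimal_detectable_bias(r, sigma)
print("suspect observation index:", suspect)
print("minimal detectable bias per observation:", mdb)
```

Only the observation with the largest |w| is flagged because a single outlier inflates all residuals. The sketch relies on linearity and Gaussian noise, which is exactly where the multi-step vision and LiDAR pipelines discussed above depart from the classical setting.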

8.5.Exploration on new perception sensors

As positioning sensors become increasingly lightweight, inexpensive, and low-power, new sensors such as event cameras and solid-state LiDAR are emerging. Many researchers have developed new positioning methods using these sensors. However, collaborative positioning based on these new sensors is still uncommon due to the differences in the characteristics of their observations compared to traditional sensors. For example, event flows from event cameras differ from traditional camera frames, making traditional data association algorithms impractical. Thus, collaborative positioning using these sensors requires further exploration to bridge this gap.

8.6.Active collaborative positioning

Active positioning, also known as active SLAM, is an important extension to collaborative positioning since it allows for efficient and safe exploration of unknown environments. Active collaborative mapping is a by-product of active positioning, as mapping cannot be implemented without positioning information. One advantage of active collaborative positioning is that the motion of the agent may contribute to positioning in terms of accuracy and robustness. For example, if the camera on one agent can control its viewing angle, it can always face a texture-rich area to keep long feature tracks, which is helpful for positioning. Pioneering work on active positioning of multiple robots includes that of Kai et al. [154] and Dong et al. [155]. Although they used different sensors, the efficiency and completeness of map reconstruction were the main focus of both. The former modeled the task as a bipartite graph matching problem, while the latter formulated it as an optimization problem. A different work was proposed by Hui et al. [47]. Although path planning was added to modify the ORB-SLAM system for active loop closure, each robot ran standalone without considering the robots as a whole. However, the relationship between collaborative positioning and collaborative exploration still lacks comprehensive research, especially on how to control the exploration to improve the accuracy and robustness of collaborative positioning.
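To make the bipartite matching view concrete, the following sketch assigns each robot to one exploration target so that the total travel distance is minimal. It is only a toy instance of bipartite matching solved by exhaustive search; the cost function, the names, and the coordinates are assumptions for illustration and do not reproduce the formulation of [154].

```python
from itertools import permutations
import math

def assign_targets(robots, targets):
    """One-to-one robot-to-target assignment with minimal total distance.

    Exhaustive search over permutations, so it is suitable only for small
    swarms. Requires len(targets) >= len(robots).
    """
    best_cost, best_assignment = math.inf, None
    for perm in permutations(range(len(targets)), len(robots)):
        cost = sum(math.dist(robots[i], targets[j]) for i, j in enumerate(perm))
        if cost < best_cost:
            best_cost, best_assignment = cost, perm
    return best_assignment, best_cost

robots = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)]
targets = [(9.0, 1.0), (1.0, 9.0), (1.0, 1.0)]  # hypothetical frontier points

assignment, cost = assign_targets(robots, targets)
for robot_index, target_index in enumerate(assignment):
    print(f"robot {robot_index} -> target {target_index}")
print("total distance:", cost)
```

For larger swarms a polynomial-time matching algorithm would replace the exhaustive search. A positioning-aware variant could add terms to the cost that reward targets expected to yield good inter-agent constraints, which is the open question raised above.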

8.7.Collaborative positioning dataset and framework

Positioning datasets usually focus on a single agent [156]. To simulate scenarios for multi-agent positioning, single-agent datasets such as KITTI can be divided into multiple parts. However, this kind of simulation is only suitable for method validation, and is far from being applicable to real applications. Multi-agent datasets based on wireless sensors are mainly concentrated in the fields of indoor positioning and robot systems, which rely on known and well-calibrated anchors. The main difficulty in developing multi-agent datasets is their higher cost compared to single-agent datasets. One economical way to generate such datasets is through computer graphics simulation and certain mathematical functional models. For example, TartanAir [152] used photo-realistic simulation environments that include moving objects, changing light, and various weather conditions. This allows the generation of multi-modal sensor data including RGB, camera poses, and LiDAR data. Going further, different moving platforms and wireless data can be added, and the loss of communication can be considered. Developing more diverse datasets for collaborative positioning in different scenarios, agent sizes, and anchor types is a promising direction for future work.
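Dividing a single-agent sequence into multiple parts, as mentioned above, can be sketched as follows. The helper splits a sequence of frame indices into one contiguous segment per simulated agent, with a shared overlap between neighboring segments so that inter-agent data association remains possible; the function name, the overlap scheme, and the frame counts are hypothetical choices, not a procedure prescribed by any dataset.

```python
import math

def split_sequence(num_frames, num_agents, overlap):
    """Split frames [0, num_frames) into overlapping half-open segments.

    Returns a list of (start, end) index pairs, one per simulated agent.
    Neighboring segments share `overlap` frames.
    """
    if num_agents < 1 or overlap < 0:
        raise ValueError("num_agents must be >= 1 and overlap >= 0")
    length = math.ceil((num_frames + (num_agents - 1) * overlap) / num_agents)
    if overlap >= length:
        raise ValueError("overlap must be shorter than a segment")
    segments = []
    for k in range(num_agents):
        start = k * (length - overlap)
        end = min(start + length, num_frames)
        segments.append((start, end))
    return segments

# A 1000-frame sequence shared among three simulated agents.
segments = split_sequence(num_frames=1000, num_agents=3, overlap=100)
for agent, (start, end) in enumerate(segments):
    print(f"agent {agent}: frames {start}..{end - 1}")
```

Because all segments come from one platform and one sensor, such a split cannot reproduce communication loss, heterogeneous sensors, or simultaneous motion, which is why it serves method validation only.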

The collaborative positioning community currently lacks an off-the-shelf framework that includes fundamental components such as communication links between agents. If a general framework were open-sourced and provided as a platform, the community would be able to focus more on algorithm development.

9.Conclusions

This paper provides a brief review of recent developments in collaborative positioning based on vision, LiDAR, and wireless sensors, as well as their integration. The authors summarize and discuss the recent progress, key components, main paradigms, and experimental details for collaborative positioning for each sensor type. They note that UAVs are the primary platform for collaborative positioning, while research on USVs and satellite swarms is still lacking. They also highlight map fusion as a critical component for vision- and LiDAR-based collaborative positioning. For wireless sensor-based methods, the authors point out that various aspects need to be taken into consideration, such as ranging measurements, estimation methods, and motion scenarios. While deep learning-based methods have shown promise in handling complex scenarios, they still require further exploration. Finally, the paper identifies and describes various challenges and open issues, including both engineering aspects (e.g., communication, computation burden, dataset, framework, and new sensors) and theoretical aspects (e.g., robustness, accuracy, reliability, integrity, and active collaborative positioning), which need to be addressed in further research in this field.

This paper presents a concise overview of collaborative positioning based on vision, LiDAR, and wireless sensors, highlighting recent developments and key components. The review includes a selection of representative research papers, including preprints, to present the latest progress in the field. To improve readability, complex theoretical equations are not included. The authors aim to provide a panoramic reference for scholars with expertise in vision, LiDAR, and wireless sensor-based positioning to initiate further research on collaborative positioning.

Declaration of competing interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Acknowledgements

The authors would like to acknowledge the National Natural Science Foundation of China (Grant No. 62101138), the Shandong Natural Science Foundation (Grant No. ZR2021QD148), the Guangdong Natural Science Foundation (Grant No. 2022A1515012573), and the Guangzhou Basic and Applied Basic Research Project (Grant No. 202102020701) for providing funds for publishing this paper.
