Meibomian glands segmentation in infrared images with limited annotation

Jia-Wen Lin, Ling-Jie Lin, Feng Lu, Tai-Chen Lai, Jing Zou, Lin-Ling Guo, Zhi-Ming Lin, Li Li4

1College of Computer and Data Science, Fuzhou University, Fuzhou 350108, Fujian Province, China

2Fujian Provincial Key Laboratory of Networking Computing and Intelligent Information Processing, Fuzhou University, Fuzhou 350108, Fujian Province, China

3Shengli Clinical Medical College of Fujian Medical University, Fuzhou 350001, Fujian Province, China

4Department of Ophthalmology, Fujian Provincial Hospital South Branch, Fujian Provincial Hospital, Fuzhou 350001, Fujian Province, China

Abstract

● KEYWORDS: infrared meibomian glands images; meibomian gland dysfunction; meibomian glands segmentation; weak supervision; scribbled annotation

INTRODUCTION

The meibomian glands (MGs) are a series of large sebaceous glands that extend along the eyelid margin behind the eyelashes. They produce and secrete lipids called meibum, which constitute the lipid layer of the tear film. This lipid layer is crucial for maintaining the health and integrity of the ocular surface and is an essential component of the tear film functional unit[1]. Meibomian gland dysfunction (MGD) is a chronic and diffuse condition affecting MGs and is considered the primary cause of various ocular diseases, including dry eye and blepharitis[2]. Epidemiological studies have demonstrated a global prevalence of MGD exceeding 35.9%, with variations among different racial groups ranging from 21.2% to 71.0%[3]. Infrared imaging has emerged as an effective clinical technique for assessing the morphological characteristics of the MGs[4]. Ophthalmologists use these images to observe and analyze various MG features, providing valuable insights for diagnosing MGD[5]. However, relying solely on visual observation and clinical experience is subjective and yields relatively low reproducibility.

In response to these challenges, Koh et al[6] and Liang et al[7] investigated methods for distinguishing healthy images from unhealthy ones, while Fu et al[8] proposed a classification model based on color deconvolution and a transformer structure. Furthermore, leveraging advancements in deep learning and medical image processing[9], Prabhu et al[10] used the U-Net architecture for MG segmentation, while Khan et al[11] introduced an adversarial learning-based approach to enhance segmentation accuracy. Lin et al[12] introduced an improved U-Net++ model for MGs segmentation, yielding remarkable results. Despite these advancements, many existing MGs segmentation approaches face limitations in clinical application because they rely heavily on a substantial amount of fully annotated label data. Manual annotation can be exceedingly time-consuming and labor-intensive, considering the considerable number and close arrangement of glands. Even for experienced ophthalmologists, the process takes an average of 5 to 8 min per image[13].

Building upon these approaches, this study investigates the use of scribble annotation to achieve satisfactory automatic segmentation of MGs in infrared images. The proposed model is anticipated to substantially alleviate the manual labeling burden while maintaining segmentation performance. This advancement is expected to enhance the diagnostic efficiency of MGD and facilitate the broader application of medical image processing.

MATERIALS AND METHODS

Ethical Approval

This study received approval from the Ethics Committee of Fujian Provincial Hospital (K2020-03-124) and adhered to the principles outlined in the Declaration of Helsinki. All subjects were duly informed and consented to participate in this study.

Acquisition and Pretreatment of Infrared MG Images

The infrared MG images used in this study were provided by the Department of Ophthalmology, Fujian Provincial Hospital. These images were captured using an ocular surface comprehensive analyzer (Keratograph 5M). The dataset comprised 138 patients with dry eyes (276 eyes) from January 2020 to June 2021. Because everting the lower eyelid is technically challenging, particularly given the absence of a substantial tarsal plate compared with the upper eyelid, MG images of the lower lid often exhibit uneven focus and partial exposure. Consequently, in line with established research practice[17], our study concentrates exclusively on upper eyelid images. A batch cropping process was executed to eliminate potential confounding information, resulting in images with dimensions of 740×350 pixels (Figure 1A). The contrast enhancement mode was employed to accentuate the visualization of the MGs. Images presenting issues such as eyelash occlusion, incomplete coverage, excessive blurriness, and substantial glare were excluded, leaving 203 images.

Construction of Dataset

Two datasets were developed for model training and validation: an MGs scribbled dataset and a fully annotated dataset. Preprocessed images were annotated by three experienced ophthalmologists, each possessing over one year of clinical experience. Annotation was conducted using the polygon tool in the Labelme software, delineating the meibomian region and the individual glands. In instances of annotation discrepancies, a fourth senior ophthalmologist was consulted to make the final adjudication. The fully annotated results are illustrated in Figure 1B. The meibomian region mask was multiplied with the cropped original image to eliminate interference, such as eyelashes, resulting in the final input image (Figure 1C). To alleviate annotation pressure, we constructed the scribbled dataset from the fully annotated dataset, drawing inspiration from the skeletonization algorithm. The morphological skeletonization algorithm was employed to extract intermediate lines representing the foreground and background regions, simulating manually generated scribble annotations (Figure 1D). After expert verification, the scribble annotations generated by our method exhibited no discernible difference from scribbles manually annotated by experts. The dataset used in this study comprises 203 sets of images, partitioned into training, validation, and test sets with a ratio of 7:1:2. The training and validation sets were employed for model training and parameter tuning, whereas the test set was used to validate the proposed method.
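The scribble simulation described above can be sketched as follows. This is a minimal illustration rather than the authors' code: it assumes binary gland and meibomian-region masks stored as NumPy arrays, uses Zhang-Suen thinning as one possible morphological skeletonization, and the function names and the value 255 for unlabeled pixels are hypothetical choices.

```python
import numpy as np

UNLABELED = 255  # hypothetical value marking pixels without a scribble


def zhang_suen_skeleton(mask):
    """Thin a binary mask to a roughly one-pixel-wide skeleton (Zhang-Suen)."""
    img = np.pad(np.asarray(mask, dtype=np.uint8), 1)  # zero border
    changed = True
    while changed:
        changed = False
        for step in (0, 1):
            # Neighbours P2..P9, clockwise, starting from the pixel above.
            p2, p3 = img[:-2, 1:-1], img[:-2, 2:]
            p4, p5 = img[1:-1, 2:], img[2:, 2:]
            p6, p7 = img[2:, 1:-1], img[2:, :-2]
            p8, p9 = img[1:-1, :-2], img[:-2, :-2]
            nbrs = [p2, p3, p4, p5, p6, p7, p8, p9]
            # b: number of foreground neighbours.
            b = sum(n.astype(int) for n in nbrs)
            # a: number of 0 -> 1 transitions around the pixel.
            a = sum(((nbrs[k] == 0) & (nbrs[(k + 1) % 8] == 1)).astype(int)
                    for k in range(8))
            if step == 0:
                cond = (p2 * p4 * p6 == 0) & (p4 * p6 * p8 == 0)
            else:
                cond = (p2 * p4 * p8 == 0) & (p2 * p6 * p8 == 0)
            remove = (img[1:-1, 1:-1] == 1) & (b >= 2) & (b <= 6) & (a == 1) & cond
            if remove.any():
                img[1:-1, 1:-1][remove] = 0  # delete all flagged pixels at once
                changed = True
    return img[1:-1, 1:-1].astype(bool)


def make_scribbles(gland_mask, region_mask):
    """Return a label map: 1 = gland scribble, 0 = background scribble."""
    gland = np.asarray(gland_mask, dtype=bool)
    background = np.asarray(region_mask, dtype=bool) & ~gland
    scribble = np.full(gland.shape, UNLABELED, dtype=np.uint8)
    scribble[zhang_suen_skeleton(background)] = 0
    scribble[zhang_suen_skeleton(gland)] = 1
    return scribble
```

Only the skeleton pixels carry labels, so the vast majority of the image remains unlabeled, which is the setting the rest of the method is designed for.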

Figure 1 Dataset of infrared MGs images. A: Cropped image; B: Full annotation, meibomian region (red) and the MGs (green); C: Input image; D: Scribble annotation, meibomian region (red) and the MGs (green). MGs: Meibomian glands.

Model for MGs Segmentation in Eyelids

Within the framework of weakly supervised learning, we introduce a rectified scribble-supervised gland segmentation (RSSGS) model (Figure 2). This model uses the U-Net architecture as the segmentation network and integrates three key strategies: temporal ensemble prediction (TEP), uncertainty estimation, and transformation consistency constraint. Each image $x$, paired with its corresponding scribble label, serves as input to the model. The proposed network is trained with cross-entropy loss for scribble pixels as follows:

$$L_{sp} = -\frac{1}{|\Omega_s|} \sum_{i \in \Omega_s} \sum_{c=1}^{M} S_{c,i} \log P_{c,i} \tag{1}$$

where $S_{c,i}$ and $P_{c,i}$ represent the scribble label and the model's predicted probability for the $i$-th pixel in the $c$-th class, respectively. $\Omega_s$ denotes the set of scribble pixels. $|\Omega_s|$ and $M$ represent the total number of scribble pixels and classes, respectively.
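The scribble-pixel loss, a cross-entropy averaged over annotated pixels only, might be sketched as below. This is an illustrative NumPy version under stated assumptions: `probs` holds softmax outputs of shape (M, H, W), scribble labels are integer class indices, and 255 is a hypothetical marker for unlabeled pixels.

```python
import numpy as np


def scribble_ce_loss(probs, scribble, unlabeled=255, eps=1e-8):
    """Cross-entropy over scribble pixels only.

    probs:    (M, H, W) class probabilities.
    scribble: (H, W) integer labels in [0, M) or `unlabeled`.
    """
    labeled = scribble != unlabeled
    if not labeled.any():
        return 0.0
    rows, cols = np.nonzero(labeled)
    classes = scribble[rows, cols]
    # With one-hot labels, the inner sum over classes reduces to the
    # log-probability of the annotated class.
    picked = probs[classes, rows, cols]
    return float(-np.log(picked + eps).mean())
```

Unlabeled pixels contribute nothing to this term, which is why the pseudo-label mechanism described next is needed to supervise them.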

For unlabeled pixels, we used the exponential moving average (EMA) of the model's predictions to generate pseudo-labels, offering initial segmentations for the unlabeled regions. Recognizing the potential disruption caused by unreliable labels, we integrated uncertainty estimation, which quantifies the confidence level associated with each pseudo-label. Furthermore, we introduced a transformation consistency constraint strategy to enforce uniformity in the model's predictions, resulting in more reliable and accurate segmentation.

Temporal Ensemble Prediction

To address the lack of supervision for unlabeled pixels, we drew inspiration from Scribble2Label[15] and introduced the TEP strategy. We used the EMA[18] technique to combine historical and current predictions throughout network training. This process results in an ensemble prediction that encompasses knowledge learned in previous iterations, as shown in equation (2).

where $y$ represents the average prediction value, $y_0 = p_1$, $\alpha$ is the adaptive ensemble momentum (refer to equation 4), and $n$ denotes the number of averaged predictions. To ensure efficient integration and reduce computational expense, we update the pseudo-labels only every $\gamma$ cycles and set $\gamma$ to 5.
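The temporal ensemble can be illustrated with a short sketch. It assumes, as one plausible reading of the definitions above, that the momentum α weights the current prediction and 1 − α weights the running average; the class name and its interface are hypothetical.

```python
import numpy as np


class TemporalEnsemble:
    """Running average of predictions, refreshed every `interval` cycles."""

    def __init__(self, interval=5):
        self.interval = interval  # gamma in the text
        self.y = None             # ensemble prediction
        self.n = 0                # number of averaged predictions

    def update(self, epoch, p, alpha):
        """Fold prediction `p` into the ensemble if this epoch is an update epoch."""
        if epoch % self.interval != 0:
            return self.y
        p = np.asarray(p, dtype=float)
        if self.y is None:
            self.y = p.copy()  # y_0 = p_1
        else:
            # Assumed form: alpha weights the current prediction.
            self.y = alpha * p + (1.0 - alpha) * self.y
        self.n += 1
        return self.y
```

Because `alpha` may be a scalar or a per-pixel array, the same update also accommodates a momentum that varies across the image.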

Uncertainty Estimation

One limitation of the TEP strategy is that it treats reliable and unreliable predictions equally when generating pseudo-labels. However, unreliable predictions can have a negative impact on network optimization. Therefore, it is crucial to mitigate their effects.

Inspired by uncertainty theory[19], we introduced an uncertainty graph that evaluates the uncertainty in the model predictions.The uncertainty graph can be represented as follows:

where $K$ is the number of forward passes the model performs on each image during training and is set to 2 in our approach. $P_k$ represents the model output for the $k$-th pass. Adding random Gaussian noise to the input images induces variability in the model's output for each pass, which makes it possible to estimate the uncertainty graph.

Subsequently, we introduce an adaptive ensemble momentum mapping that varies for each pixel on the basis of the uncertainty graph $U$ to guide the generation of pseudo-labels. This momentum mapping $\alpha$ is defined as follows:

where $\lambda = 0.6$. By incorporating the adaptive weight $\alpha$, we dynamically adjust the influence of each pixel's prediction on the basis of its associated uncertainty. This enables us to prioritize confident predictions while down-weighting the contributions of uncertain predictions during the pseudo-label update.
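The following sketch illustrates how an uncertainty graph and a per-pixel momentum could be computed. The concrete formulas are assumptions made for illustration only: uncertainty is taken as the normalized entropy of the mean prediction over K noisy passes, and the momentum as α = λ(1 − U). The `model` argument is a hypothetical callable mapping an (H, W) image to (M, H, W) class probabilities, and `noise_std` is likewise a hypothetical parameter.

```python
import numpy as np


def uncertainty_map(model, image, K=2, noise_std=0.1, rng=None, eps=1e-8):
    """Per-pixel uncertainty in [0, 1] from K passes on noise-perturbed inputs."""
    rng = np.random.default_rng() if rng is None else rng
    passes = [model(image + rng.normal(0.0, noise_std, image.shape))
              for _ in range(K)]
    mean_p = np.mean(passes, axis=0)  # (M, H, W)
    # Assumed uncertainty measure: entropy of the mean prediction,
    # normalized by log(M) so that a uniform prediction scores 1.
    entropy = -(mean_p * np.log(mean_p + eps)).sum(axis=0)
    return np.clip(entropy / np.log(mean_p.shape[0]), 0.0, 1.0)


def adaptive_momentum(U, lam=0.6):
    """Assumed mapping: confident pixels (small U) receive momentum close to lam."""
    return lam * (1.0 - U)
```

Under these assumptions, a pixel whose prediction is stable and confident across the noisy passes updates the ensemble quickly, while an ambiguous pixel barely changes it.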

Additionally, we define a confidence threshold for label filtering. This threshold enables us to remove unreliable predictions and retain those deemed more reliable for network optimization. The label filtering function is represented by equation (5):

where $\tau$ represents the confidence threshold, set at 0.8, $\delta$ denotes the grayscale value of unlabeled pixels, and $z$ represents the grayscale value of scribbled pixels.
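Label filtering can be sketched as below. The reading assumed here is that a pixel receives the arg-max class of the ensemble prediction only when its maximum probability exceeds τ, that it is otherwise marked with the unlabeled value δ, and that scribbled pixels always keep their annotated class; δ = 255 and the function name are hypothetical.

```python
import numpy as np


def filter_pseudo_labels(y, scribble, tau=0.8, delta=255):
    """Build pseudo-labels from an ensemble prediction.

    y:        (M, H, W) ensemble probabilities.
    scribble: (H, W) integer labels, with `delta` marking unlabeled pixels.
    """
    y = np.asarray(y, dtype=float)
    scribble = np.asarray(scribble)
    # Keep the predicted class only where the model is confident enough.
    z = np.where(y.max(axis=0) > tau, y.argmax(axis=0), delta)
    # Scribbled pixels keep their expert annotation (assumption).
    annotated = scribble != delta
    z[annotated] = scribble[annotated]
    return z
```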

With reliable pseudo-labels in hand, we optimize the network on the unlabeled pixels. The loss function for unlabeled pixels is defined in equation (6).

$$L_{up} = -\frac{1}{|\Omega_u|} \sum_{i \in \Omega_u} \sum_{c=1}^{M} Z_{c,i} \log P_{c,i} \tag{6}$$

where $Z_{c,i}$ and $P_{c,i}$ represent the pseudo-label and the model's predicted probability for the $i$-th pixel in the $c$-th class. $\Omega_u$ denotes the set of unlabeled pixels, and $|\Omega_u|$ represents the total number of unlabeled pixels. $L_{up}$ also excludes pixels that remain unlabeled in the pseudo-labels. Because the initially generated pseudo-labels are unreliable, $L_{up}$ is applied for model optimization only after the $E$-th epoch, with $E$ set to 100 in our approach.
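A possible implementation of the unlabeled-pixel loss, including the warm-up period and the exclusion of filtered pixels, is sketched below. It assumes integer pseudo-labels with 255 as the hypothetical unlabeled value, and it interprets "after the E-th epoch" as epochs strictly greater than E.

```python
import numpy as np


def unlabeled_loss(probs, pseudo, scribble, epoch,
                   start_epoch=100, delta=255, eps=1e-8):
    """Cross-entropy against pseudo-labels on pixels without a scribble.

    probs:    (M, H, W) class probabilities.
    pseudo:   (H, W) pseudo-labels, `delta` where filtered out.
    scribble: (H, W) scribble labels, `delta` where unlabeled.
    """
    if epoch <= start_epoch:
        return 0.0  # pseudo-labels are not yet trusted
    unlabeled = scribble == delta
    valid = unlabeled & (pseudo != delta)  # drop pixels rejected by filtering
    if not valid.any():
        return 0.0
    rows, cols = np.nonzero(valid)
    picked = probs[pseudo[rows, cols], rows, cols]
    # Normalized by the total number of unlabeled pixels, as in the text.
    return float(-np.log(picked + eps).sum() / unlabeled.sum())
```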

Transformation Equivariance Constraint

In weakly supervised learning, applying a transformation such as flipping, rotation, or scaling to an input image is expected to transform the segmentation outcome in the same way. This property, known as transformation consistency, is shown in equation (7). This consistency is crucial for maintaining spatial coherence and stability in the model's predictions, even with limited annotated data.

$$F(T(I)) = T(F(I)) \tag{7}$$

where $I$ is the input image, $T$ is the transform function, and $F$ is the segmentation network.

We impose constraints on these transformations through the cosine similarity loss $L_{tc}$ as follows:

where $\|\cdot\|$ denotes the L2 norm.
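The consistency term can be sketched with a horizontal flip as the transformation. The exact form of the loss is an assumption: here it is taken as one minus the cosine similarity between the prediction on the transformed image and the transformed prediction on the original image. `model` is a hypothetical callable returning (M, H, W) probabilities.

```python
import numpy as np


def hflip(x):
    """Horizontal flip; works for (H, W) images and (M, H, W) predictions."""
    return np.flip(x, axis=-1)


def transformation_consistency_loss(model, image, transform=hflip, eps=1e-8):
    """Assumed loss: 1 - cosine similarity between F(T(I)) and T(F(I))."""
    a = model(transform(image)).ravel()  # F(T(I))
    b = transform(model(image)).ravel()  # T(F(I))
    cosine = np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b) + eps)
    return float(1.0 - cosine)
```

A network that is perfectly equivariant to the chosen transformation yields a loss of approximately zero, whereas position-dependent behaviour is penalized.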

The RSSGS framework optimizes the model using a weighted sum of three losses: the scribble pixel loss ($L_{sp}$), the unlabeled pixel loss ($L_{up}$), and the transformation consistency constraint ($L_{tc}$), as shown in equation (9):

$$L = L_{sp} + \lambda_u L_{up} + \lambda_t L_{tc} \tag{9}$$

Here, $\lambda_u = 0.5$, and the parameter $\lambda_t$ is a weight function, as illustrated in equation (10). This weight function dynamically adjusts the magnitude of the transformation consistency loss according to the number of training cycles, so that the consistency term does not affect the network prematurely.

where $t$ denotes the current number of training cycles, while $t_{max}$ represents the maximum number of training cycles.
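The combination of the three losses might look like the sketch below. The schedule for the consistency weight is an assumption: a Gaussian ramp-up, exp(−5(1 − t/t_max)²), which is a common choice in consistency-based training and is used here only as a stand-in for the paper's weight function.

```python
import math


def consistency_weight(t, t_max):
    """Assumed ramp-up: small early in training, approaching 1 at t_max."""
    phase = 1.0 - min(max(t / t_max, 0.0), 1.0)
    return math.exp(-5.0 * phase * phase)


def total_loss(l_sp, l_up, l_tc, t, t_max, lambda_u=0.5):
    """Weighted sum of scribble, unlabeled, and consistency losses."""
    return l_sp + lambda_u * l_up + consistency_weight(t, t_max) * l_tc
```

With this schedule the consistency term contributes less than 1% of its final weight at the start of training, which matches the stated goal of avoiding a premature influence on the network.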

RESULTS

Following the criteria outlined in the pertinent literature[20], we employed accuracy (Acc), Dice coefficient (Dice), and intersection over union (IoU) as evaluation metrics for our model. Training ran for 300 epochs on all datasets with a batch size of eight. The RAdam optimizer was used with an initial learning rate of 0.0003. To bolster the credibility of our experimental outcomes, a 5-fold cross-validation approach was implemented. Our comparative analysis assesses our method against state-of-the-art techniques for medical image segmentation, covering models trained with either full annotation or scribble annotation.
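For reference, the three metrics can be computed for a binary gland mask as sketched below. The function name is hypothetical, and the convention of returning 1.0 for Dice and IoU when both masks are empty is an assumption.

```python
import numpy as np


def segmentation_metrics(pred, target):
    """Return (accuracy, Dice, IoU) for two binary masks of equal shape."""
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    tp = int((pred & target).sum())
    fp = int((pred & ~target).sum())
    fn = int((~pred & target).sum())
    tn = int((~pred & ~target).sum())
    acc = (tp + tn) / pred.size
    denom = tp + fp + fn
    dice = 2 * tp / (2 * tp + fp + fn) if denom else 1.0
    iou = tp / denom if denom else 1.0
    return acc, dice, iou
```

Because gland pixels occupy a minority of each image, Acc is dominated by true negatives, so Dice and IoU are the more discriminating of the three.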

Segmentation Results

Figure 3 displays the segmentation outcomes of our method (Figure 3D) and the U-Net (full annotation) approach (Figure 3C) on MGs in infrared images. Both methods accurately segment MGs within non-edge regions, effectively capturing their irregular shapes. However, in edge regions, particularly in ambiguous areas, the U-Net[21] exhibits some segmentation errors, whereas our approach maintains commendable performance, possibly owing to the reliable pseudo-labels.

Outperformance Compared with Other Methods

We conducted a comparative analysis of our method against mainstream medical image segmentation approaches employing scribble labels. The methods considered include the minimization of the regularized loss method (PCE)[22], the Gated CRF loss-based method (Gated CRF)[23], the Mumford-Shah loss-based method (M-S)[24], an efficient method for deep neural network (Pseudo-Label)[25], the dual-branch network method (Dual-branch Net)[26], and Scribble2Label[15].

As shown in Table 1[21-28], our method achieves an Acc, Dice, and IoU of 93.54%, 78.02%, and 64.18%, respectively. It outperforms the other weakly supervised methods across all metrics, improving on the strongest of them, Scribble2Label, by 0.76% in Acc, 2.06% in Dice, and 2.69% in IoU. In a broader comparison with models trained using full labels, including U-Net, skip-connections-based U-Net++[27], and full-scale connected U-Net3+[28], our approach is only slightly less effective than U-Net++, with reductions of 0.8% in Acc, 1.9% in Dice, and 3.1% in IoU. Importantly, our method achieves an 80% reduction in annotation costs, which shows that the performance loss remains small even with substantial cost cutting. In summary, our method consistently exhibits strong MG segmentation performance.

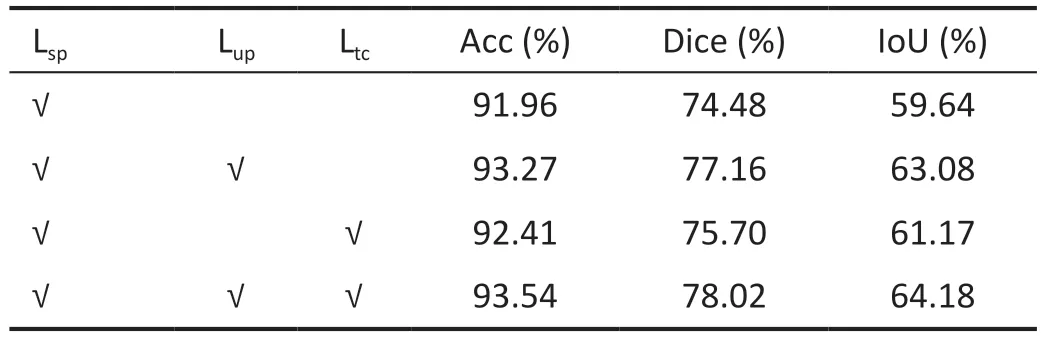

Ablation Study

In this section, we conduct an ablation study to analyze the roles of uncertainty estimation ($L_{up}$) and the transformation equivariance constraint ($L_{tc}$). All experiments were conducted on scribble datasets, with U-Net used to evaluate the results. Table 2 presents the improvements associated with each component. The results indicate that each proposed component effectively enhances model performance when applied individually. Moreover, there is a synergistic effect when the components are employed together. Specifically, their combined use resulted in an improvement of 1.58% in Acc, 3.54% in Dice, and 4.54% in IoU.

Table 1 Performance comparisons with other state-of-the-art methods

DISCUSSION

The quality of the scribble annotations substantially influences the model's performance. To assess the robustness of our method, we conducted experiments using scribble annotations of varying quality. Specifically, we randomly retained 10%, 30%, 50%, 70%, and 90% of the scribble pixels and used them as input for the experiment. As shown in Table 3, the model's performance diminished as the proportion of scribble annotations decreased. For instance, at a 10% proportion, the model achieved Acc, Dice, and IoU of 92.61%, 75.76%, and 61.23%, respectively. These values surpassed those of many methods employing the 100% proportion, with only marginal decreases of 0.93%, 2.26%, and 2.88% compared with the results obtained with the 100% proportion. This observation underscores the effectiveness of the proposed method in maintaining high performance even with reduced scribble annotation proportions.
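The random retention of scribble pixels used in this robustness experiment could be implemented as in the sketch below. It assumes a label map in which 255 (a hypothetical value) marks unlabeled pixels and that pixels are sampled uniformly across all classes.

```python
import numpy as np


def subsample_scribble(scribble, keep_ratio, unlabeled=255, rng=None):
    """Randomly keep `keep_ratio` of the scribble pixels; the rest become unlabeled."""
    rng = np.random.default_rng() if rng is None else rng
    out = np.array(scribble, copy=True)
    idx = np.flatnonzero(out != unlabeled)  # flat indices of scribble pixels
    n_drop = idx.size - int(round(keep_ratio * idx.size))
    drop = rng.choice(idx, size=n_drop, replace=False)
    out.flat[drop] = unlabeled
    return out
```

For example, `subsample_scribble(scribble, 0.1)` simulates the 10% setting while leaving the original annotation untouched.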

Table 2 Ablation study on the effectiveness of different components

Table 3 Quantitative results with various amounts of scribbles

Our method demonstrates strong performance in the challenging task of MGs segmentation with sparse annotation. It outperforms the latest weakly supervised segmentation methods and achieves segmentation results comparable with those of fully supervised methods while substantially reducing annotation costs. Notably, it even surpasses the fully supervised U-Net in specific details, such as ambiguous edge regions (Figure 3). Its robustness is evident when faced with varying levels of scribble annotation; even under a 10% setting, the achieved Acc, Dice, and IoU decreased only marginally, by 0.93%, 2.26%, and 2.88%, respectively, compared to the 100% setting. Confronted with the limited supervision that arises from a scarcity of labeled data, we address the unique aspects of infrared MG images, such as the glands' elongated and densely distributed characteristics. We introduce a temporal ensemble-based approach for generating pseudo-labels to augment label information, incorporating uncertainty estimation and consistency constraints to enhance label reliability. Our method effectively compensates for the limited information provided by scribble annotation, facilitating the automation of infrared MGs image analysis. This, in turn, provides valuable assistance to ophthalmologists in making accurate diagnoses.

Furthermore, a time efficiency test was conducted in which three experts annotated 100 MG images using the scribble annotation method. The average time spent on scribble annotation (100%) for one image was approximately 1.2 min. This contrasts starkly with the average of 5 to 8 min required to fully label each image, indicating a substantial reduction in annotation burden.
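Taking the reported timings at face value, the relative saving in annotation time per image can be bounded with a back-of-the-envelope check, under the assumption that annotation cost is proportional to annotation time:

```latex
\[
1 - \frac{1.2}{5} = 0.76, \qquad 1 - \frac{1.2}{8} = 0.85
\]
```

Scribble annotation therefore saves roughly 76% to 85% of the per-image labeling time, which is consistent with the 80% reduction in annotation costs reported in the Results.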

Despite the promising outcomes, our study has some limitations. First, our investigation was limited to the infrared MGs dataset. The approach itself is not specific to this dataset, but its performance elsewhere has not yet been evaluated. In future research, we aim to explore the performance of our approach on a more diverse range of datasets, evaluating its effectiveness in clinical practice and fostering a deeper integration of deep learning and medical imaging, ultimately enhancing artificial intelligence's capacity to serve humanity more effectively. Second, concerning segmentation results, morphological parameters can be computed to derive various characteristics of individual glands, such as gland dropout, tortuosity, and total gland count. Using these morphological parameters can offer valuable support to ophthalmologists in making accurate clinical diagnoses and designing appropriate treatment plans.

In conclusion, this study successfully addressed the segmentation task with limited labeled data, demonstrating remarkable performance. It promises substantial benefits for both physicians and patients.

ACKNOWLEDGEMENTS

Foundations: Supported by Natural Science Foundation of Fujian Province (No.2020J011084); Fujian Province Technology and Economy Integration Service Platform (No.2023XRH001); Fuzhou-Xiamen-Quanzhou National Independent Innovation Demonstration Zone Collaborative Innovation Platform (No.2022FX5).

Conflicts of Interest: Lin JW, None; Lin LJ, None; Lu F, None; Lai TC, None; Zou J, None; Gou LL, None; Lin ZM, None; Li L, None.

International Journal of Ophthalmology, 2024, Issue 3
