Leveraging open source software and programming best practices for sustainable web applications in support of marine data sharing: the BCO-DMO case

SUN Miao, ZUO Changsheng*, YIN Yue, Robert C. Groman, Adam Shepherd

1. National Marine Data and Information Service, Tianjin 300171, P.R. China;

2. Biological and Chemical Oceanography Data Management Office, Woods Hole Oceanographic Institution, Woods Hole, MA 02543, USA

Abstract: The Biological and Chemical Oceanography Data Management Office (BCO-DMO), a project funded by the National Science Foundation (NSF) of the United States of America, uses Drupal as a content management system (CMS) to organize and serve marine metadata and data from a subset of NSF funded and other projects. Metadata is essential for making data (in our case oceanographic data) discoverable, retrievable, and reusable. While most metadata must come directly from the data contributor, metadata from NSF funded research can be obtained from the NSF website. This paper describes our approach of leveraging open source software and programming best practices for sustainable BCO-DMO web applications. We chose to set up an intermediate website and supporting database, called the NSF Tracker website, between the NSF and the BCO-DMO websites. It is implemented using Drupal and is used to acquire and store NSF metadata and to pre-populate the BCO-DMO metadata database. During the development of the NSF Tracker website, we used carefully selected Drupal contributed modules, developed custom modules, and followed the concept of loose coupling between the NSF website and the BCO-DMO website. To achieve loose coupling of the two websites, we decided to pass data between them by using web services. This way, neither website needed to know how the other stored or retrieved data. The new version benefited from using Drupal modules, the Drupal Form Application Programming Interface (API), jQuery, Ajax and PHP components. This approach improved the security, flexibility and sustainability of the NSF Tracker implementation.

Keywords: Metadata, NSF, database, dynamic website, Drupal, loose coupling, marine data management, marine data sharing

Introduction

It is essential for a website to provide up-to-date and dynamic content to engage its community of visitors. However, for older, static websites this proved costly in time spent on maintenance and updates. Out of this need grew a new breed of software called content management systems (CMS), focused on the development and maintenance of websites. There are many examples, including WordPress[1], Joomla[2] and Drupal[3]. Drupal[4] is a powerful CMS and is especially appealing when one needs to handle complex content. Drupal is an open source and highly modular CMS. The Drupal community provides strong support via frequent enhancements to ensure Drupal moves forward with new features. Independently developed modules are made available by Drupal users. These modules often add specialized or enhanced features to the standard Drupal distribution. The modules are available through the Drupal organization website and can save new users of the Drupal CMS from having to develop their own custom modules. Modules take advantage of the basic Drupal tool set and its ability to use custom PHP code to add new capabilities and tools.

For example, Papanicolaou A. and David G. Heckel[5] explain how they used custom modules for bioinformaticians. Klaus Purer[6], using Drupal’s Services module, developed a web service client module. He also applied his custom module to a translation web services site and demonstrated its robustness. Many other researchers also use Drupal to manage and organize their data or build websites. The website developed by the US Government’s White House[7] is based on Drupal.

The Drupal content management system uses an integrated database and database management system (DBMS), such as MySQL or PostgreSQL, to store all the information, content, and data it manages, including access control configuration, web content, descriptions of views, PHP code used to control input, output, and display of content, and, in BCO-DMO’s case, all the metadata about the marine data they manage. Drupal’s database abstraction layer isolates the implementation details of the DBMS from Drupal’s use of the database. This enables the user to choose which DBMS to use without needing to alter Drupal code.

The Biological and Chemical Oceanography Data Management Office (BCO-DMO) is a U.S. National Science Foundation (NSF) funded project. Its staff members work with investigators to serve data online from research projects funded by the Biological and Chemical Oceanography Sections and the Division of Polar Programs Antarctic Organisms & Ecosystems Program at the U.S. National Science Foundation. BCO-DMO manages data using a combination of people and computing resources. The people include the investigators who collect or generate the data and information, the people who process the data, and the BCO-DMO data managers who help organize the data and make it web accessible. The computing resources include a combination of data and web servers and software to make data and information discoverable, available online for visualizing, and downloadable.[8]

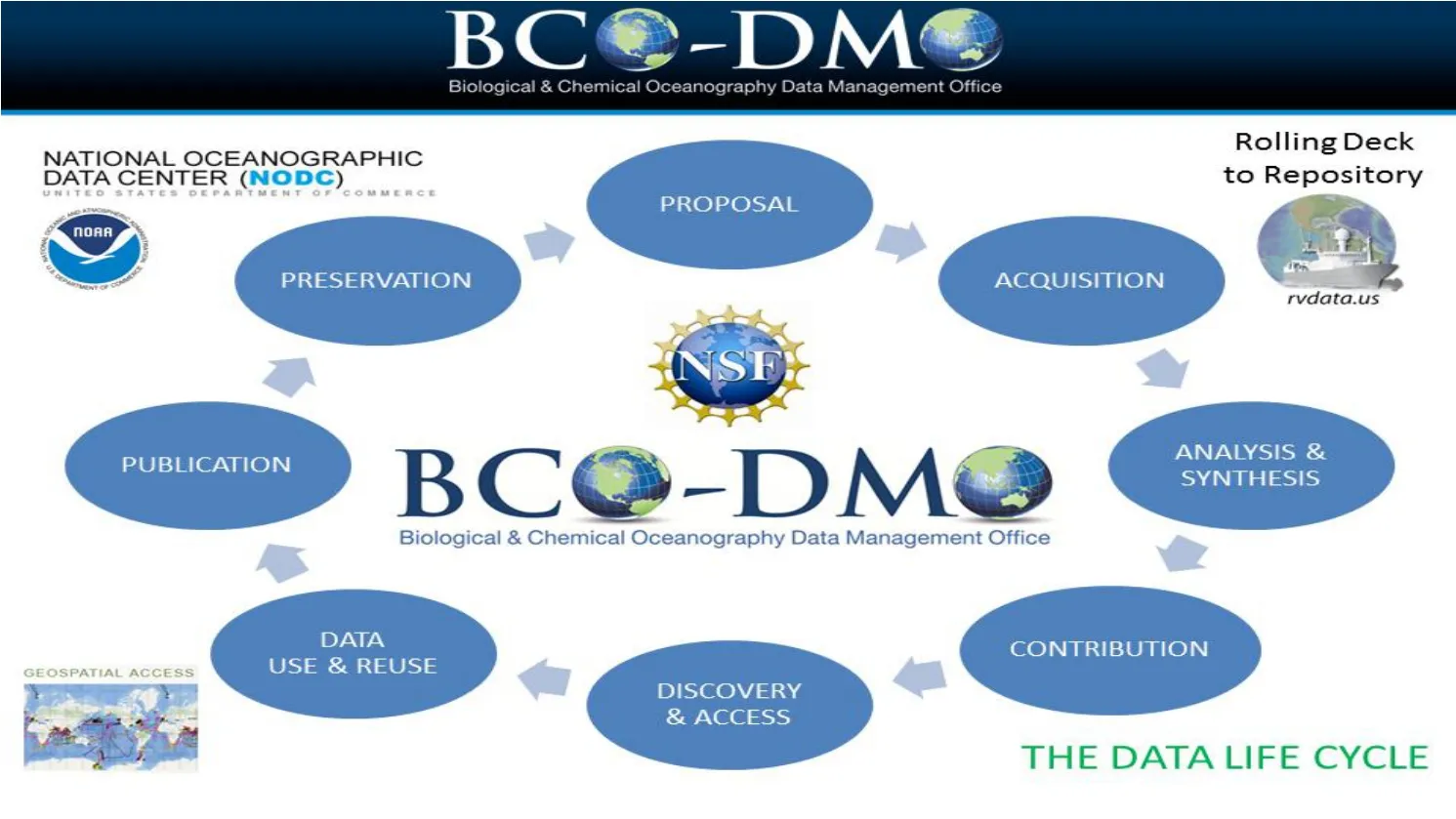

BCO-DMO’s data stewardship philosophy encompasses all phases of the data life cycle from "proposal to preservation". BCO-DMO’s view of the data life cycle (Fig.1) includes the following eight steps: science proposal writing; data acquisition; analysis and synthesis; data contribution; discovery and access; data use and reuse; publication; and preservation.

Fig.1 Data Life Cycle

BCO-DMO Data Managers work closely with the investigators to ensure that the metadata needed for data discovery, use, and reuse are collected and preserved. This includes information about the design of the experiment, data acquisition details including instruments used, and data processing steps taken to convert field measurements into final, useful data. Once the data are contributed to BCO-DMO, the BCO-DMO Data Manager works closely with the data contributor(s) to determine the best way to organize and display the data. This process starts early in the data life cycle, preferably at the start of the scientists’ project, even before any data are collected. These tasks are labor intensive, but this approach results in higher quality data that can be found and reused effectively and accurately by others.

BCO-DMO’s software and hardware architecture consists of a Drupal-based website containing metadata and web content providing Data Manager and public web access, geospatial access provided by MapServer[9] and JavaScript-based libraries (such as OpenLayers[10] and ExtJS[11]), the JGOFS/GLOBEC data management system[12,13], and other software components. The organization of these components is summarized in our Architectural Overview (Fig.2).

BCO-DMO uses Drupal as a CMS to organize and serve metadata for NSF and other organizations, making oceanographic data discoverable and retrievable online. These data include biological abundance, meteorological, nutrient, pH, carbonate, PAR, sea surface temperature, heat and momentum flux, sediment composition, trace metals, primary production, pigment concentration measurements, and other data from the laboratory, model results, and the field.

1 Overall design

1.1 Motivation for NSF Tracker

Extracting and collecting these metadata is time consuming and can prove tedious to the contributing scientists. Consequently, BCO-DMO takes every opportunity to minimize the burden on the investigators. One way they have accomplished this is to take advantage of the publicly available information that NSF provides about funded science projects. This information is web accessible and can be used to obtain much of the basic, project-specific information that contributors used to send to BCO-DMO by completing a form. In practice, BCO-DMO opted to collect and organize the metadata available from NSF into a separate NSF Tracker Drupal-based database (hereafter called the NSF Tracker database) and website. This metadata is then added to BCO-DMO’s Drupal-based metadata database (hereafter called the BCO-DMO database) and website after appropriate review.

Originally, BCO-DMO built its websites with Drupal version 6 (Drupal 6). However, after the websites were upgraded to a higher Drupal version, the old NSF Tracker application no longer worked. Its failure was due in part to the tight coupling between the database containing the local copy of the metadata downloaded from the NSF website and the BCO-DMO database. A second cause of the failure was that the original implementation used a form written in HTML and JavaScript outside of the Drupal 6 environment. This form was used by the BCO-DMO data managers to review the NSF metadata, make any necessary changes and updates, and then confirm the information for addition to the BCO-DMO database. This form attempted to communicate directly with the BCO-DMO database. As a result, the stability and efficiency of the original approach were low: every time the NSF Tracker database was upgraded to a new Drupal version, the HTML form had to be manually upgraded as well. In addition, attempting to communicate directly with the BCO-DMO database was a potential security threat to that database. Thus, BCO-DMO needed a better approach to capture the NSF metadata, give the data managers the opportunity to update the content, and then commit the update to the BCO-DMO website. BCO-DMO decided to build a new intermediate website, replacing the old NSF Tracker database and separate HTML form with a database and a locally written Drupal-based module, to improve the stability, flexibility, and efficiency of communicating with and updating the BCO-DMO database.

1.2 New design of NSF Tracker application

Drupal is a highly modular CMS, and it is important to make intelligent use of contributed modules and of the ability to write custom modules. BCO-DMO redesigned the Drupal-based NSF Tracker database and the NSF Tracker application by first addressing three questions: which modules to use to import metadata from the NSF organization’s website, how best to access the metadata in the BCO-DMO database, and how to implement a form that is pre-populated with a combination of the NSF metadata and the BCO-DMO database’s metadata (Fig.3).

Fig.3 NSF Tracker Overall Design

Fig.4 New implementation of the data entry form combining information from the NSF website, the BCO-DMO website, and the Data Manager’s additions and changes

To import the information from the NSF website, BCO-DMO chose the Migrate module[14] in Drupal 7 instead of the Node Import module[15] used in Drupal 6. This approach improved the process since it took into account the reality that the NSF organization’s website was always changing as new projects were funded. Furthermore, the Node Import module was not supported in Drupal 7. Since the NSF website did not yet provide web services to its award data, it was necessary to manually download the data as a comma-separated flat file. This information can be directly incorporated into the NSF Tracker database using the Migrate module.

In the original approach, the NSF Tracker form communicated with the BCO-DMO database using SQL commands. The original BCO-DMO database was a directly accessed MySQL database. In the new approach, the BCO-DMO database, which contains all the metadata describing the data BCO-DMO manages, is held in a separate Drupal-based database. This approach enables several new features because Drupal supports them natively. These features include content search, creation of Resource Description Framework (RDF)[16] records, and overall better integration of “normal” text-based web content and the metadata content. BCO-DMO built the separate NSF Tracker database and website using Drupal, with the help of a custom module called nsf_tracker, extending a class of Drupal’s Migrate module and using the Services module to offer services.

BCO-DMO chose a Representational State Transfer (REST)[17] Application Programming Interface (API) to expose data from the BCO-DMO website. In this way, the NSF Tracker application and any future applications that rely on the metadata now have a consistent delivery mechanism for accessing the metadata. Furthermore, by doing this, BCO-DMO moved a dependency on the specifics of the database to the access protocol of HTTP. This abstraction of the underlying database of the BCO-DMO website isolates the application from the choice of database engine. The REST API structure changes less often than the NSF Tracker database, the BCO-DMO database, or the Drupal software. To access the existing information from the BCO-DMO database, BCO-DMO chose to use the Services module[18], which is an implementation of REST within Drupal, on both the NSF Tracker website and the BCO-DMO website to enable intercommunication between these two websites.

In addition, BCO-DMO added new capabilities to the replacement custom, dynamic form within Drupal to provide a better user experience through more data entry options for the data managers. By using the Services module and the REST API, BCO-DMO was able to improve the efficiency of their work. The new custom form makes full use of the Drupal themes and of the Migrate and Services modules. By combining the modules and approaches mentioned above, BCO-DMO was able to implement the concept of “Loose Coupling”[19] between the NSF website and the BCO-DMO website.

1.3 Key technologies used by BCO-DMO

BCO-DMO used both contributed modules and custom modules on the NSF Tracker website to implement its functionality. These include the following:

(a) Migrate module: BCO-DMO extended the Migrate module’s function by developing a new class to more easily import downloaded data from the NSF organization’s website and translate the data into a recognizable format.

(b) Custom module: Using Drupal’s Form API, jQuery and Ajax, BCO-DMO created a new dynamic form with more options and additional flexibility by creating a custom module called nsf_tracker. The form can be used by data managers to modify and pre-populate the data for inclusion in the BCO-DMO database.

(c) Services module: The Services module was used to access the data from the BCO-DMO database. By using the Services module on both the NSF Tracker and BCO-DMO websites, BCO-DMO could make Ajax calls to request data and pre-populate the data fields based on both data managers’ selections and the existing data in the BCO-DMO database.

2 The implementation of new NSF Tracker approach

2.1 Set up empty Drupal 7 website

This section describes in some detail how the Drupal website was set up. It is included as the Drupal learning curve is steep and the authors felt this level of detailed description would be beneficial to others new to Drupal.

On the website computer, BCO-DMO set up Drupal 7 by using a script file. After BCO-DMO installed the website, Drupal’s core modules were set up. Based on their needs, BCO-DMO used the “drush dl” command to download contributed modules from the Drupal organization’s website and the “drush en” command to enable the downloaded modules. Next, BCO-DMO created the nsf_tracker module, stored within the Drupal sites/all/modules/custom directory (Fig.5). The downloaded modules are all third-party modules identified as “contrib” modules and stored in their own /contrib sub-directory. The nsf_tracker custom module was created based on what we want Drupal to do. Drupal regards all modules except the Drupal core modules as contributed modules. The difference is where they come from. Following best practices, modules coming from the Drupal community are put under a separate “contrib” directory. Modules developed locally are put under a “custom” or “features” directory. The features directory also contains custom modules, but they are designed to work with the Features contributed module for deploying Drupal configuration as code. This concept is important when a Drupal site needs to be moved or replicated in another environment. For BCO-DMO, it is important to be able to replicate the NSF Tracker website for testing new code or bug fixes. Features provide the capability to package up a Drupal site’s configuration settings that otherwise cannot be set upon installation, as they may pertain to contributed and custom modules.

Fig.5 Extension of Migrate module

2.2 System implementation

On one occasion, the NSF organization’s website changed its online presentation of the award metadata, resulting in a change in the structure of the downloaded files. By creating an NSF-specific content type in Drupal, BCO-DMO was able to use Drupal fields to capture the structured information from the downloaded NSF data files. Field names in the Drupal content type are mapped to the columns of the downloaded NSF data[20]. BCO-DMO uses an extension to the Migrate module to handle these downloaded data files automatically, that is, without having to manually reformat the files.
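The column-to-field mapping described above can be illustrated with a minimal sketch. It is written in Python for brevity rather than as Drupal/PHP code, and it is not the authors’ implementation: the column headers, the field names, and the helper `map_rows` are all hypothetical stand-ins chosen for illustration.

```python
import csv
import io

# Hypothetical mapping from downloaded CSV column headers to content-type
# field names. Both sides are illustrative assumptions, not the actual
# NSF export layout or the BCO-DMO schema.
COLUMN_TO_FIELD = {
    "AwardNumber": "field_award_number",
    "Title": "title",
    "PrincipalInvestigator": "field_pi_name",
    "PIEmailAddress": "field_pi_email",
}


def map_rows(csv_text):
    """Translate each CSV row into a dict keyed by content-type field name."""
    reader = csv.DictReader(io.StringIO(csv_text))
    missing = [c for c in COLUMN_TO_FIELD if c not in (reader.fieldnames or [])]
    if missing:
        # A changed download layout is reported rather than silently ignored.
        raise ValueError(f"missing expected columns: {missing}")
    # Columns that are not in the mapping (e.g. "State" below) are dropped.
    return [
        {field: row[column].strip() for column, field in COLUMN_TO_FIELD.items()}
        for row in reader
    ]


sample = (
    "AwardNumber,Title,PrincipalInvestigator,PIEmailAddress,State\n"
    "0000001,Example award,Jane Doe,jdoe@example.org,MA\n"
)
records = map_rows(sample)
```

Keeping the mapping in one table means that a change in the downloaded file structure only requires the table to be edited, which is the kind of isolation the content-type approach aims for.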

2.2.1 NSF Tracker data manager HTML form

The custom form (Fig.4) is used to pre-populate the metadata values for entry into the BCO-DMO website. Since the original form’s information went directly into the BCO-DMO database, it was a security problem waiting to happen. The original form was written in HTML and JavaScript, outside of Drupal. BCO-DMO rewrote it within the Drupal framework by using the Form API, jQuery and Ajax and, at the same time, added new features to the form. As this form is dynamic, it did require extra effort to implement.

Two main attributes of this implementation are:

(a) Data in the form come from three sources. Some of the data are from the BCO-DMO database, some of the values are from the NSF organization’s website via the intermediate NSF Tracker database, and the remaining data are from data managers’ modifications. Data coming from the BCO-DMO database can reduce the data managers’ edit time by eliminating the need to type in values. The possible values are presented as dropdown lists. Because the BCO-DMO website exposes a REST API for accessing its data, the NSF Tracker form can make Ajax requests for those data. Due to cross-domain browser restrictions, a second REST API was implemented on the NSF Tracker website, which in turn accesses the BCO-DMO data via the BCO-DMO REST API. This enables the NSF Tracker data entry form to call its local REST API to retrieve local data as well as remote BCO-DMO data. This improves sustainability, as different client browsers vary in the way they handle cross-domain issues. The BCO-DMO REST API abstracts access to its data and the NSF Tracker REST API abstracts access to its local data. This issue could have been addressed by enabling cross-origin resource sharing (CORS) support, but it is unclear whether using CORS would have been the most sustainable decision, as the domains for the BCO-DMO, staging and development environments of both BCO-DMO and NSF Tracker are mutable. We acknowledge that we have not thoroughly investigated CORS support as a viable option.

(b) The award information from the NSF organization’s website is not all formatted the same. For instance, some projects do not have co-principal investigators (co-PIs), while some awards have more than one co-PI. The nsf_tracker module creates the form, and its pre-populated values are based on the information captured from the NSF Tracker database and on the information already stored in the BCO-DMO database. Information is double checked via jQuery and Ajax calls that search the BCO-DMO database. If some of the new information is already in the BCO-DMO database, such as the email address of the principal investigator, data managers can decide whether to use the existing information or update these records based on the new information from the NSF organization’s website.

By providing as much detailed information as possible, the dynamic form gives data managers more options so they do not have to fill in the fields manually. In this way, the efficiency and accuracy of their work were improved.
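The two attributes above can be sketched as follows, in Python for illustration only. The function names, the field names, the `;` delimiter for co-PI names, and the choice to default to the existing BCO-DMO value are all assumptions made for this sketch; the source states only that data managers decide between existing and new values.

```python
def build_form_defaults(nsf_record, bcodmo_record, manager_edits=None):
    """Return {field: {"value": ..., "options": [...]}} for a pre-populated form."""
    manager_edits = manager_edits or {}
    form = {}
    for field in sorted(set(nsf_record) | set(bcodmo_record) | set(manager_edits)):
        # Candidate values for a dropdown: existing BCO-DMO value first,
        # then the newly downloaded NSF value (assumed ordering).
        options = []
        for source in (bcodmo_record, nsf_record):
            value = source.get(field)
            if value and value not in options:
                options.append(value)
        # Precedence: explicit manager edit, else first candidate, else blank.
        if field in manager_edits:
            value = manager_edits[field]
        else:
            value = options[0] if options else ""
        form[field] = {"value": value, "options": options}
    return form


def split_co_pis(raw):
    """Awards may list zero, one, or several co-PIs; return a clean list."""
    return [name.strip() for name in raw.split(";") if name.strip()]


nsf = {"pi_email": "new@example.org", "title": "Example award"}
bco = {"pi_email": "old@example.org"}
form = build_form_defaults(nsf, bco)
```

Here `form["pi_email"]` offers both addresses as options, so a data manager can keep the stored one or pass `{"pi_email": "new@example.org"}` as `manager_edits` to adopt the new one.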

2.2.2 Migrate Module Implementation

The NSF Tracker database is updated every two weeks to keep track of new data on the NSF organization’s website. The Drupal Migrate module is used to capture the NSF metadata as it provides a flexible framework for migrating content into Drupal from other sources. Furthermore, the Migrate module can easily be extended for migrating different kinds of objects such as files, nodes and so on.[21]

The following two steps describe how BCO-DMO keeps the local NSF Tracker database up to date:

First, a new content type for storing the NSF information formatted as flat files is created. New content is then imported from each of the NSF downloaded files. Using an extension of the Migrate module’s DynamicMigration class, the newly downloaded files are uploaded to the NSF Tracker database by creating a content type instance, which the Migrate module detects, resulting in an updated set of NSF awards. (Duplicate entries are automatically avoided because the custom Migration class specifies the primary key to be the Award number.) This extension class removes the need for data managers to modify files by hand, as required by the previous approach, which used the Node Import module.

Second, the custom class NSFAwardMigration extends the original class DynamicMigration (Fig.5). This lets the information within the uploaded files go directly into the related fields of the content type. This enhancement accomplished two goals: (a) it stored these metadata files in the NSF Tracker database; and (b) it automatically matched the files’ content with the fields of the content type.
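The duplicate-avoidance behaviour noted above, keying each imported record on the Award number, can be sketched in a few lines of Python. This is a simplified illustration of the idea, not the Migrate module’s mechanism or the NSFAwardMigration class; the function `import_awards`, the in-memory `store`, and the field name `award_number` are hypothetical.

```python
def import_awards(store, rows, key="award_number"):
    """Insert rows into store (a dict keyed by award number); skip known keys.

    Returns the list of award numbers that were newly added.
    """
    added = []
    for row in rows:
        award_id = row[key]
        if award_id in store:
            continue  # imported in an earlier run, so no duplicate is created
        store[award_id] = dict(row)
        added.append(award_id)
    return added


store = {}
first_run = import_awards(store, [
    {"award_number": "0000001", "title": "Example award A"},
    {"award_number": "0000002", "title": "Example award B"},
])
# A later download overlaps with the earlier one; only the new award is added.
second_run = import_awards(store, [
    {"award_number": "0000002", "title": "Example award B"},
    {"award_number": "0000003", "title": "Example award C"},
])
```

Because the import is keyed on a stable identifier, the same download can be processed repeatedly (for example, on the biweekly schedule) without manual clean-up of the file.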

2.2.3 Services module on both the NSF Tracker website and the BCO-DMO website

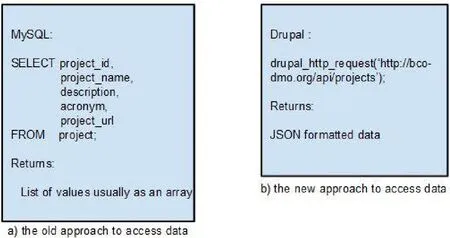

The Drupal Services module provides a standardized solution for integrating external applications with Drupal. As described above, we use REST APIs to allow a Drupal 7 site to provide web services for multiple interfaces while using the same callback code.[22] The Services module provides a standardized API for Drupal to get and send data to a remote application. Fig.6 compares the old and new coding approaches.

Fig.6 Comparison of old and new approaches to accessing data

Within the NSF Tracker website we used jQuery and Ajax to implement a dynamic form. By using Ajax, BCO-DMO used functions to talk to the website server and obtain data from the BCO-DMO database. However, because browsers restrict cross-domain requests, in part to guard against attacks such as cross-site request forgery (CSRF)[23], Ajax calls from the form cannot talk to the third-party database directly. Thus, BCO-DMO installed the Drupal Services module on both the NSF Tracker website and BCO-DMO website servers. The NSF Tracker website’s client side sends Ajax calls to the Services module on the NSF Tracker server side. This Services module talks to the Services module on the BCO-DMO server side via the Drupal drupal_http_request() call. The Services module on the BCO-DMO server side then sends JSON-formatted data back to the Services module on the NSF Tracker server side. Finally, the NSF Tracker server side sends what it received from the BCO-DMO database back to the NSF Tracker client side, which pre-populates the custom form fields with the obtained data. After the Data Manager finalizes all the data in the form that they want to save into the BCO-DMO database, the Services module on the BCO-DMO website server side is used to acquire the JSON-formatted data and save the finalized data into the BCO-DMO database. The NSF Tracker form does not update the NSF Tracker database.
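The request path described above (browser form, then local NSF Tracker service, then remote BCO-DMO service, with JSON relayed back) can be mimicked with a small, network-free Python sketch. All function names, the `people/jdoe` path, and the payload shape are hypothetical; in the actual system these roles are played by the Services module on each server and by drupal_http_request().

```python
import json


def bcodmo_service(path):
    """Stand-in for the remote BCO-DMO REST endpoint; returns a JSON string."""
    people = {"people/jdoe": {"name": "Jane Doe", "email": "jdoe@example.org"}}
    return json.dumps(people.get(path, {}))


def tracker_service(path, fetch_remote=bcodmo_service):
    """Local endpoint on the NSF Tracker server that the form's Ajax calls hit.

    It forwards the request server-to-server and relays the JSON payload back,
    so the browser only ever talks to its own origin.
    """
    payload = json.loads(fetch_remote(path))
    return json.dumps({"source": "bcodmo", "data": payload})


def prepopulate(form, path, call_local=tracker_service):
    """Client-side step: copy returned values into empty form fields only."""
    data = json.loads(call_local(path))["data"]
    for field, value in data.items():
        if not form.get(field):
            form[field] = value
    return form


filled = prepopulate({"name": "", "email": "kept@example.org"}, "people/jdoe")
```

Passing the remote call in as a parameter (`fetch_remote`) reflects the loose coupling discussed in the text: the local service depends only on the shape of the JSON it receives, not on how the remote site stores its data.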

3 Conclusion

We applied the concept of loose coupling to the process of building the NSF Tracker database and its connection to the BCO-DMO database. Using this approach enabled BCO-DMO to accomplish a dynamic connection between the data available from the NSF organization’s website and the information presented from the BCO-DMO website. The major steps in this process included:

(a) Setting up a Drupal 7 NSF Tracker website and redesigning it based on the “loose coupling” concept, thereby accomplishing a “loose coupling” between the NSF Tracker database and the NSF organization’s and the BCO-DMO websites.

(b) Using the Drupal Form API to develop a custom dynamic form within Drupal instead of using an HTML-based form outside of the Drupal environment. In the long run this approach is more robust; it will be upgraded whenever a website is upgraded and can make use of the resources provided by the Drupal community. By using and customizing this dynamic form within Drupal, the efficiency of BCO-DMO’s data managers is improved. An additional benefit is improved security of the websites.

(c) Developing a new Migrate module class to extend the original class’s function. By doing this, the NSF Tracker website could then handle data files automatically and match the file’s content to a Drupal content type’s fields. Matched information from the original NSF data files can go directly into the custom form.

(d) Using the Drupal Services module on both the NSF Tracker and BCO-DMO websites: The form’s data no longer communicates with or goes into the BCO-DMO database directly. This improves the security of the database and also makes full use of the existing data from the BCO-DMO database.

Drupal is a powerful and modular CMS that handled BCO-DMO’s data capture and processing requirements. It provided enough flexibility to enable BCO-DMO to customize its own modules and to extend the contributed modules’ functions to accomplish the management of NSF supplied metadata.

Marine Science Bulletin, 2023, Issue 1
