A novel strategy for fine-grained semantic verification of civil aviation radiotelephony read-backs

Guimin JIA, Junxian LI

a Tianjin Key Lab for Advanced Signal Processing, Civil Aviation University of China, Tianjin 300300, China

b College of Electronic Information and Automation, Civil Aviation University of China, Tianjin 300300, China

KEYWORDS Attention mechanism; BiLSTM; Interaction layer; Radiotelephony read-backs; Semantic verification

Abstract During flight, the pilot must read back each instruction issued by the controller, and the controller must confirm the read-back, which helps ensure the safety of air transportation. However, fatigue, tension, negligence and other human factors may prevent the controller from noticing read-back errors in time, which is a serious hidden danger for civil aviation safety. This paper proposes a novel strategy for fine-grained semantic verification of radiotelephony read-backs that introduces an interaction layer and an attention mechanism at the output of a BiLSTM model. Compared with the traditional two-channel verification strategy, which connects the BiLSTM output vectors to obtain an overall semantic representation of each sentence, the added interaction layer yields a fine-grained representation of the semantic matching relation. With the added attention layer, the new strategy can capture the latent semantic relation between read-backs and instructions, which makes it applicable to the non-standard diction and abbreviated read-backs found in real radiotelephony communications. Extensive experiments show that the proposed strategy is more effective than the traditional method for read-back checking, and its average test accuracy on the Chinese ATC radiotelephony read-backs corpus reaches 93.03%.

1.Introduction

Radiotelephony communication is one of the main means of communication in civil air transportation. To ensure that aircraft operate safely, Air Traffic Controllers (ATCs) and pilots must communicate accurately. During a flight, ATCs give different instructions to the flight crew depending on the flight phase, weather conditions, etc. The flight crew must also keep in touch with the ATCs at each flight stage to confirm that information has been received and to report the flight status. Correct information exchange between ATCs and flight crews is therefore crucial in civil aviation transportation.

Radiotelephony communication in civil aviation air traffic control is a professional semi-artificial language built on natural language [1]. To standardize the language of radiotelephony communication, the International Civil Aviation Organization (ICAO) and the Civil Aviation Administration of China have stipulated language standards and imposed strict requirements on ATCs and flight crews [2,3]. Over the past few decades, civil aviation technology has developed greatly, and accidents caused by communication equipment failure have decreased. In actual flight, however, safety incidents caused by human errors in radiotelephony communication, such as inaccurate read-backs, misunderstanding, non-standard diction and incomplete content, still occur from time to time [4–6]. For example, in 2018 a JAL B787-9 aircraft made a runway incursion at Pudong airport because the air traffic controller did not notice a read-back error.

Although a double check is required in radiotelephony communication, these human errors are often hard for ATCs and pilots to notice in time because of fatigue, heavy pressure, fast speech and flat, low intonation. These problems commonly result in read-back errors in civil aviation radiotelephony communications [7]. With the rapid development of the civil aviation transportation industry, the work pressure on controllers and pilots is increasing and human errors are more likely to occur, which can in turn cause pilots' operational errors and serious safety problems. This paper therefore focuses on a strategy to automatically verify the consistency of ATC instructions and pilot read-backs (ATC-pilot sentence pairs).

Sentence matching is an important task in many fields of natural language processing, such as question answering systems [8,9], machine translation and dialogue systems [10–12]. In recent years, algorithms based on deep neural networks have achieved encouraging results in semantic analysis and matching [13–16]. Long Short-Term Memory (LSTM) is a recurrent deep network with a linear chain structure that is widely used to extract semantic features of sentences when building language models. LSTM can learn historical information [17,18]; however, it attends to forward information and neglects backward information. Bi-Directional Long Short-Term Memory (BiLSTM) addresses this problem and further improves the learning ability of LSTM [19–21]. Since the Transformer model was proposed in 2017, the attention mechanism has attracted extensive research interest [22–24]. In natural language processing, the attention mechanism was first applied to machine translation [25] and has now become an important way to model sentence meaning [26,27]. More recently, pre-trained models such as Bidirectional Encoder Representations from Transformers (BERT) and Generative Pre-trained Transformer (GPT) have been proposed, all of which use the attention-based Transformer structure as the feature extractor. Pre-trained models have set new state-of-the-art results on various Natural Language Processing (NLP) tasks, including question answering, sentence classification [28] and text matching [29,30]. These developments provide valuable references for checking read-back errors.

Recently, several read-back checking models that adopt a two-channel sentence matching scheme have been proposed on the basis of related research in natural language processing. These traditional two-channel models can only extract a semantic vector of the entire sentence for matching ATC-pilot sentence pairs; they cannot extract semantic interaction information at the word level. However, this more fine-grained semantic information is particularly important when verifying the consistency of ATC instructions and pilot read-backs. Research and analysis on attention mechanisms and pre-training for this task are also lacking.

We therefore propose a novel strategy that introduces an interaction layer and an attention mechanism on top of BiLSTM. The segmented words of each sentence pair are represented with word2vec embeddings. A conventional BiLSTM encodes the word information, and the interaction layer then performs semantic interaction, from which word-level semantic matching features are obtained. A memory-based attention layer assigns different weights to the semantic features output by the interaction layer, which yields more robust latent semantic matching information than the traditional read-back checking strategy.

2.BiLSTM model

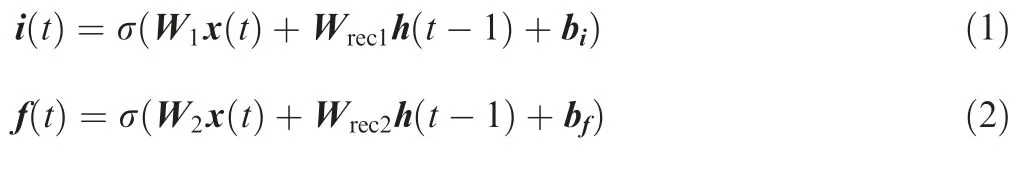

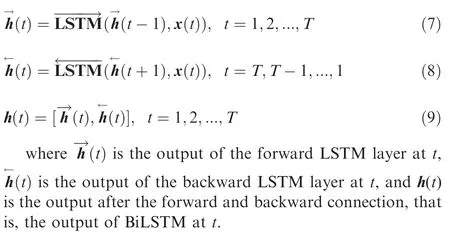

LSTM evolved from the RNN and mitigates the vanishing or exploding gradients that may occur when an RNN processes long sequences [31,32]. LSTM has therefore been widely used to process long time-series data and has achieved high performance. The core structure of LSTM is composed of an input gate, a forget gate, an output gate and a memory unit. The memory unit is the core of the LSTM network and stores historical information over a certain period. X = {x(1), ..., x(t), ..., x(T)} is the input sequence, and x(t) is the word vector of the t-th word. h(t) is the hidden layer output at time t. o(t), f(t), i(t) and c(t) are the state vectors of the output gate, forget gate, input gate and memory unit at time t, respectively. The transition functions of LSTM are shown as

where W_i and W_i^rec (i = 1, 2, 3, 4) are the input connections and recurrent connections of the output gate, forget gate, input gate and memory unit, respectively; tanh(·) and σ(·) are activation functions; and b_i, b_f, b_o and b_l are biases.
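For reference, the standard LSTM recurrences can be written with the symbols defined above. This is a sketch of the textbook formulation and not necessarily the exact form used in the paper: the mapping of indices 1 to 4 onto the output gate, forget gate, input gate and memory unit follows the order in which they are listed, and the candidate state $\tilde{c}^{(t)}$ and the element-wise product $\odot$ are notation introduced here.

```latex
\begin{aligned}
o^{(t)} &= \sigma\left(W_1 x^{(t)} + W_1^{rec} h^{(t-1)} + b_o\right) \\
f^{(t)} &= \sigma\left(W_2 x^{(t)} + W_2^{rec} h^{(t-1)} + b_f\right) \\
i^{(t)} &= \sigma\left(W_3 x^{(t)} + W_3^{rec} h^{(t-1)} + b_i\right) \\
\tilde{c}^{(t)} &= \tanh\left(W_4 x^{(t)} + W_4^{rec} h^{(t-1)} + b_l\right) \\
c^{(t)} &= f^{(t)} \odot c^{(t-1)} + i^{(t)} \odot \tilde{c}^{(t)} \\
h^{(t)} &= o^{(t)} \odot \tanh\left(c^{(t)}\right)
\end{aligned}
```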

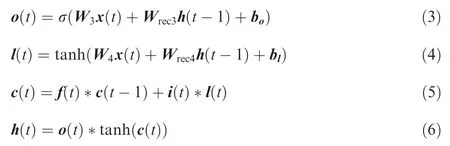

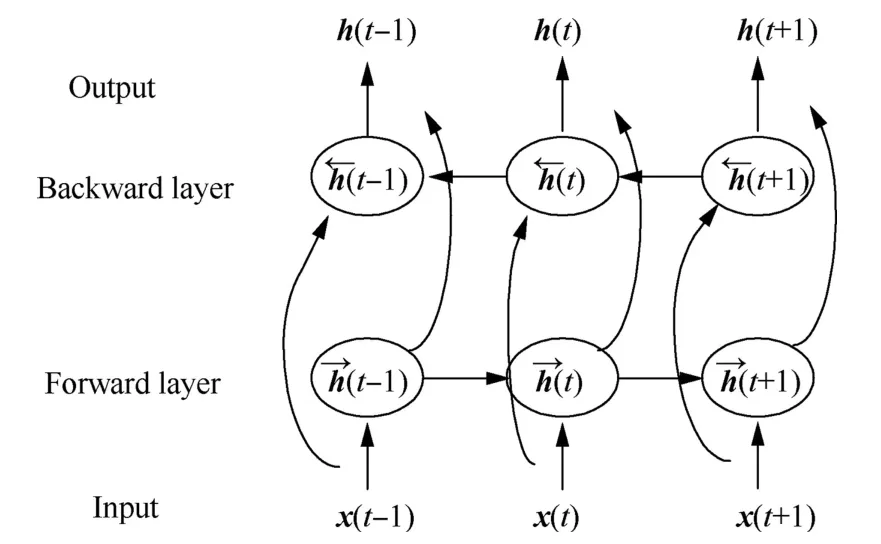

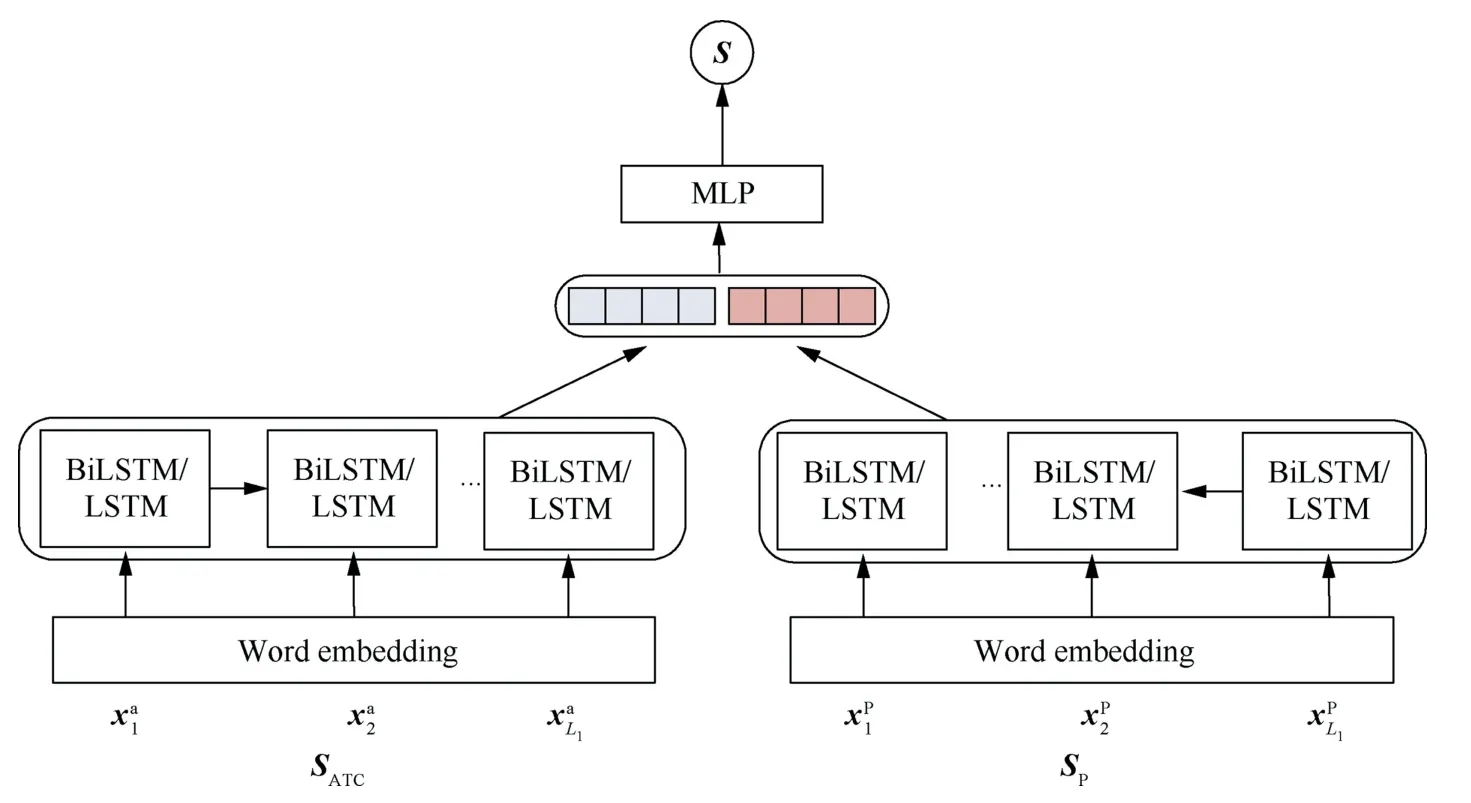

Although LSTM can learn historical information, it is limited to forward information and cannot use backward information. BiLSTM further improves the learning ability of LSTM. Its structure is shown in Fig. 1: a BiLSTM is composed of two LSTMs running in opposite directions.

Fig.1 BiLSTM structure.

The final output of the BiLSTM network is determined by the outputs of these two LSTMs.
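A minimal NumPy sketch of how two LSTMs running in opposite directions can be combined is given below. It assumes that the per-step outputs of the two directions are concatenated, which is one common choice; the text does not confirm which combination the paper uses. The helper names (`init_lstm`, `run_lstm`, `run_bilstm`) and all dimensions are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def init_lstm(input_dim, hidden_dim, rng):
    """Random parameters for one LSTM direction (hypothetical helper).

    The four gate blocks are stacked row-wise in the order:
    input gate, forget gate, output gate, candidate state.
    """
    return {
        "W": rng.normal(scale=0.1, size=(4 * hidden_dim, input_dim)),
        "U": rng.normal(scale=0.1, size=(4 * hidden_dim, hidden_dim)),
        "b": np.zeros(4 * hidden_dim),
    }

def run_lstm(params, xs):
    """Run an LSTM over xs of shape (T, input_dim); return (T, hidden_dim)."""
    hidden_dim = params["U"].shape[1]
    h = np.zeros(hidden_dim)
    c = np.zeros(hidden_dim)
    outputs = []
    for x in xs:
        z = params["W"] @ x + params["U"] @ h + params["b"]
        i, f, o, g = np.split(z, 4)
        i, f, o, g = sigmoid(i), sigmoid(f), sigmoid(o), np.tanh(g)
        c = f * c + i * g          # update the memory unit
        h = o * np.tanh(c)         # hidden output at this time step
        outputs.append(h)
    return np.stack(outputs)

def run_bilstm(fwd, bwd, xs):
    """Concatenate forward and backward hidden states per time step (assumed)."""
    h_fwd = run_lstm(fwd, xs)
    # Process the reversed sequence, then flip back to the original time order.
    h_bwd = run_lstm(bwd, xs[::-1])[::-1]
    return np.concatenate([h_fwd, h_bwd], axis=1)

rng = np.random.default_rng(0)
xs = rng.normal(size=(5, 8))       # 5 words, 8-dimensional word vectors
fwd = init_lstm(8, 4, rng)
bwd = init_lstm(8, 4, rng)
h = run_bilstm(fwd, bwd, xs)
print(h.shape)
```

With concatenation, each time step carries both the left-to-right and the right-to-left context, so the output width is twice the hidden size of a single direction.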

3.Our new strategy

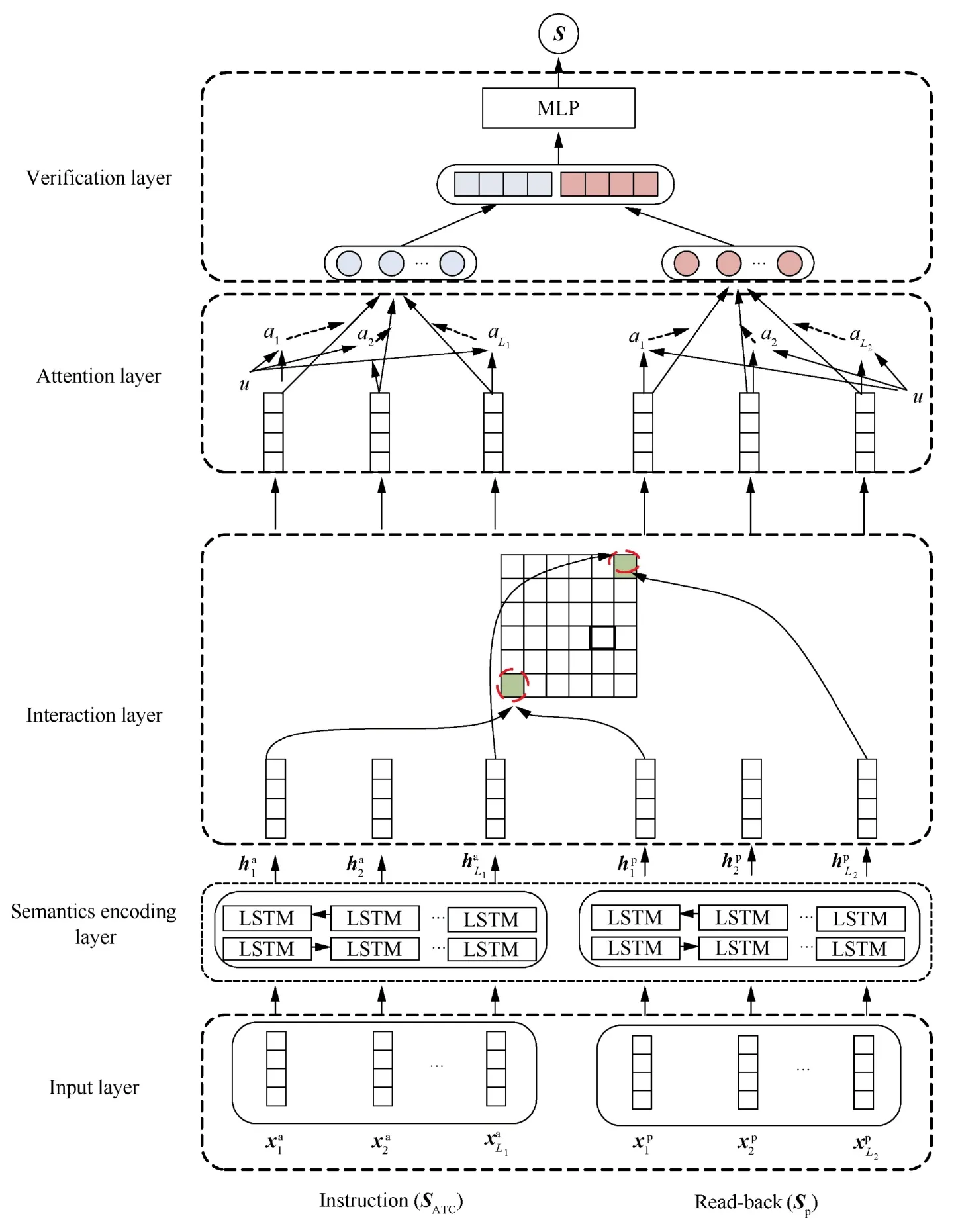

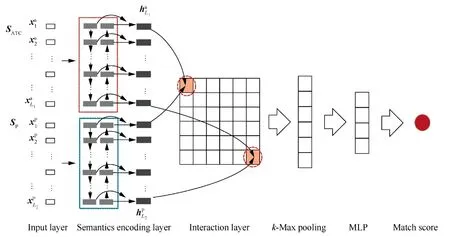

The BiLSTM network extracts the basic bidirectional semantics of radiotelephony communications. The interaction layer then captures word-level semantic relations between the sentences, and the attention layer dynamically assigns different weights to the resulting interactive semantic features. The proposed strategy (named INT-ATT-BiLSTM) is shown in Fig. 2, and its structure is elaborated as follows:

Fig.2 Structure of proposed INT-ATT-BiLSTM model for read-backs verification.

Step 1. The words of the instructions (S_ATC) and the read-backs (S_p) are mapped into sequences of word vectors by a word embedding method, with one word vector for the i-th word of the instruction and one for the j-th word of the read-back. Since only key information is repeated during radiotelephony communications, S_p is usually shorter than S_ATC. The relation among the words is also weaker than in common language modeling tasks. In our new model, each segmented word is encoded as a word2vec vector.

Step 2. The word vectors of S_ATC and S_p are fed separately into parallel BiLSTM networks to extract the basic sentence-level semantic features of the controller instructions and pilot read-backs; the outputs are h_a and h_p, respectively.

Step 3. To represent word-level context information, h_a and h_p are combined by a tensor network to generate a matching matrix [33].
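One common way to realise such a tensor-network interaction is a bilinear form per slice, where entry (i, j) of slice k equals h_a[i]ᵀ M_k h_p[j]. The sketch below assumes this form; the function name `interaction_tensor`, the slice count and the dimensions are hypothetical, and the paper's actual interaction layer may differ (for example by adding a linear term, bias or non-linearity).

```python
import numpy as np

def interaction_tensor(h_a, h_p, M):
    """Word-level bilinear interaction (assumed form).

    h_a: (m, d) BiLSTM outputs for the instruction words
    h_p: (n, d) BiLSTM outputs for the read-back words
    M:   (k, d, d) trainable interaction slices
    Returns a (k, m, n) stack of matching matrices.
    """
    return np.einsum("id,kde,je->kij", h_a, M, h_p)

rng = np.random.default_rng(0)
h_a = rng.normal(size=(6, 8))   # 6 instruction words
h_p = rng.normal(size=(4, 8))   # 4 read-back words
M = rng.normal(size=(3, 8, 8))  # 3 interaction slices
match = interaction_tensor(h_a, h_p, M)
print(match.shape)
```

Each entry scores one instruction word against one read-back word, which is the word-level information that a single sentence vector per channel cannot expose.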

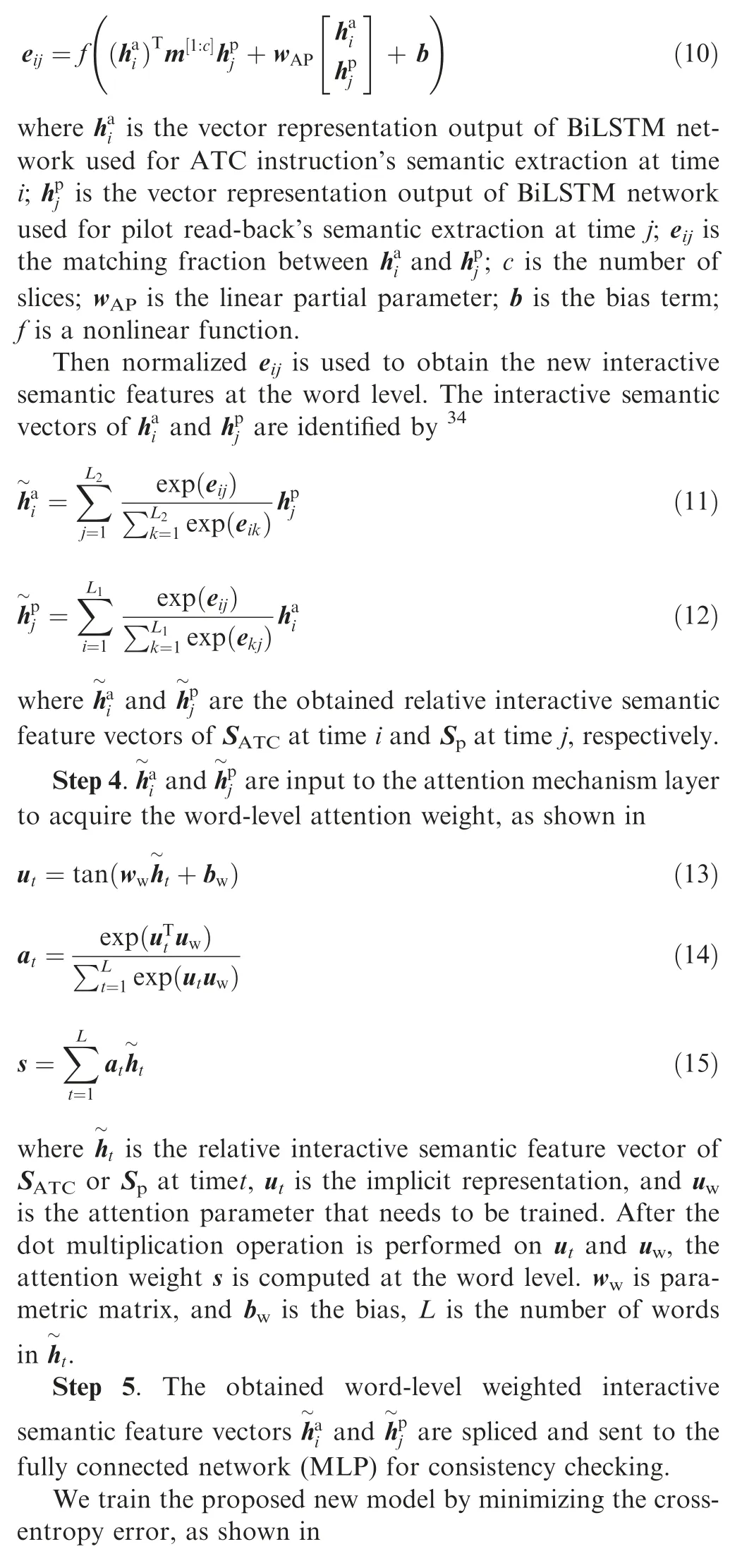

where θ represents all trainable parameters in the model, n represents the total number of ATC-pilot sentence pairs used in training, y_i represents the true label (0 or 1) of the i-th sample, and s_i represents the matching score between S_ATC and S_p produced by the trained model.

4.Experiments and analysis

To analyze the performance of the proposed strategy for semantic consistency verification of read-backs, the experiments in this section are organized as follows: first, experiments are conducted on the traditional two-channel checking model; second, to verify the effectiveness of the interaction layer and attention layer for our task, extensive ablation and comparison experiments are conducted; third, the influence of the relative position of the interaction layer and the attention layer is tested; finally, the new strategy is compared with state-of-the-art models on our task.

4.1.Experimental corpus

To analyze the performance of our new strategy, we established two experimental civil aviation radiotelephony communication corpora: one in Chinese (CATC corpus) and the other in English (EATC corpus). The corpora are built from actual recordings of ATC-pilot communications and from professional books used for civil aviation radiotelephony communication training. We invited professional ATCs to listen to the recordings and transcribe them, as the recordings contain many proper nouns and the speech is much faster than in everyday communication. Because improper read-backs occur rarely, we selected and designed different types of improper read-backs according to investigations of communication problems in civil aviation.

Sentence pairs of read-backs (S_ATC-P) are used to conduct these experiments. In the CATC corpus, 2500 S_ATC-P pairs are annotated as semantically consistent and 2500 as semantically inconsistent, for a total of 5000 samples. In the EATC corpus, 1500 English S_ATC-P pairs are semantically consistent and 1000 are semantically inconsistent, for a total of 2500 samples.

4.2.Parameter setting

To avoid over-fitting, we set the learning rate to 0.001 and the dropout rate to 0.3 on the CATC corpus, and the learning rate to 0.001 and the dropout rate to 0.1 on the EATC corpus. The Adam method is used for model optimization and the batch size is 300. The CATC corpus and the EATC corpus are each divided into a training set, a validation set and a test set with a ratio of 8:1:1. For each experiment, the three sets are drawn randomly from the full set of samples. For traditional models, we use the default parameter settings from their original papers or implementations.
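As an illustration of the random 8:1:1 partition, the following sketch splits placeholder corpora of the stated sizes. The function name `split_8_1_1` and the use of integer indices in place of real sentence pairs are assumptions made for the example.

```python
import random

def split_8_1_1(samples, seed):
    """Shuffle the samples and split them into train/validation/test at 8:1:1."""
    rng = random.Random(seed)
    shuffled = list(samples)
    rng.shuffle(shuffled)
    n = len(shuffled)
    n_train = n * 8 // 10
    n_val = n // 10
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]
    return train, val, test

# Placeholder corpora: indices stand in for the annotated sentence pairs.
catc = list(range(5000))
eatc = list(range(2500))
for name, corpus in (("CATC", catc), ("EATC", eatc)):
    train, val, test = split_8_1_1(corpus, seed=0)
    print(name, len(train), len(val), len(test))
# CATC 4000 500 500
# EATC 2000 250 250
```

Changing `seed` for each repetition gives a fresh random partition per experiment.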

Each experiment is repeated twenty times with random training and testing. Average test accuracy (Acc), F1 score (F1) and Mean Squared Error (MSE) are used to evaluate the performance of the checking model [35]. Although the EATC corpus is smaller than the CATC corpus, which may affect model training and checking accuracy, this paper concentrates on comparing different checking strategies, so results only need to be compared within the same corpus.
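The three metrics can be computed as in the sketch below. It assumes that predictions are obtained by thresholding the matching score at 0.5, that F1 is computed for the consistent class (label 1), and that MSE is taken between the matching scores and the 0/1 labels; none of these details is stated in the text, and the function name `evaluate` is hypothetical.

```python
def evaluate(scores, labels, threshold=0.5):
    """Return (accuracy, F1, MSE) for one run.

    scores: matching scores in [0, 1]; labels: true labels (0 or 1).
    The 0.5 threshold and the score-vs-label MSE are assumptions.
    """
    preds = [1 if s >= threshold else 0 for s in scores]
    n = len(labels)
    tp = sum(1 for p, y in zip(preds, labels) if p == 1 and y == 1)
    fp = sum(1 for p, y in zip(preds, labels) if p == 1 and y == 0)
    fn = sum(1 for p, y in zip(preds, labels) if p == 0 and y == 1)
    acc = sum(1 for p, y in zip(preds, labels) if p == y) / n
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    mse = sum((s - y) ** 2 for s, y in zip(scores, labels)) / n
    return acc, f1, mse

# Toy run with four sentence pairs (made-up scores, for illustration only).
acc, f1, mse = evaluate([0.9, 0.2, 0.6, 0.4], [1, 0, 0, 1])
print(acc, f1, round(mse, 4))
```

Averaging the per-run accuracy over the twenty repetitions gives the average test accuracy reported in the tables.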

4.3.Experimental results based on traditional checking model

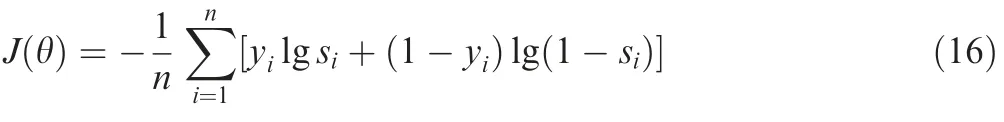

To provide a baseline for the proposed strategy, experiments were conducted with the traditional read-back checking model (Two-Channel model) [36,37]. The structure of the traditional two-channel model is shown in Fig. 3. The S_ATC-P pairs are preprocessed in the same way, but the outputs of the BiLSTM/LSTM models are fed directly into the MLP layer to compute the relatedness of h_a and h_p, without any interaction or attention mechanism.

Fig.3 Procedure of traditional checking model (Two-Channel model).

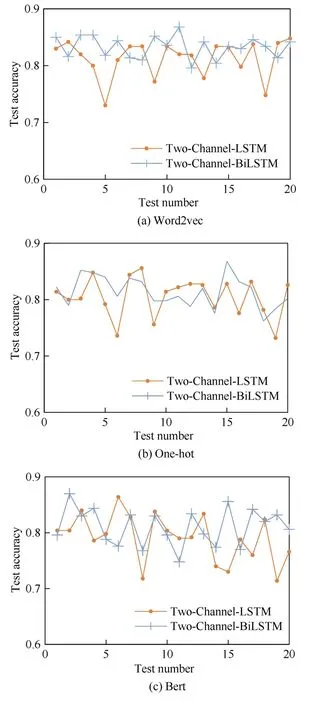

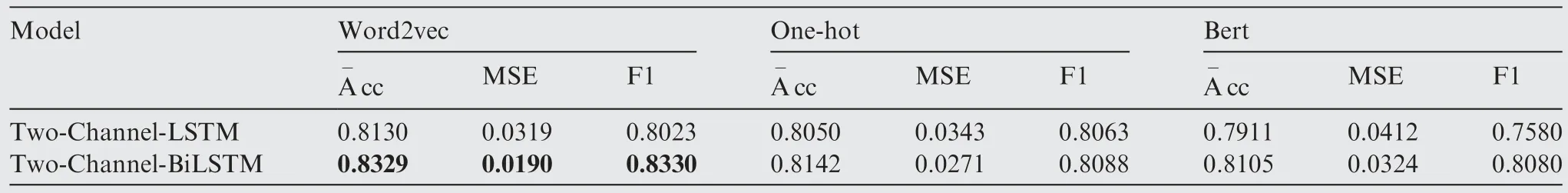

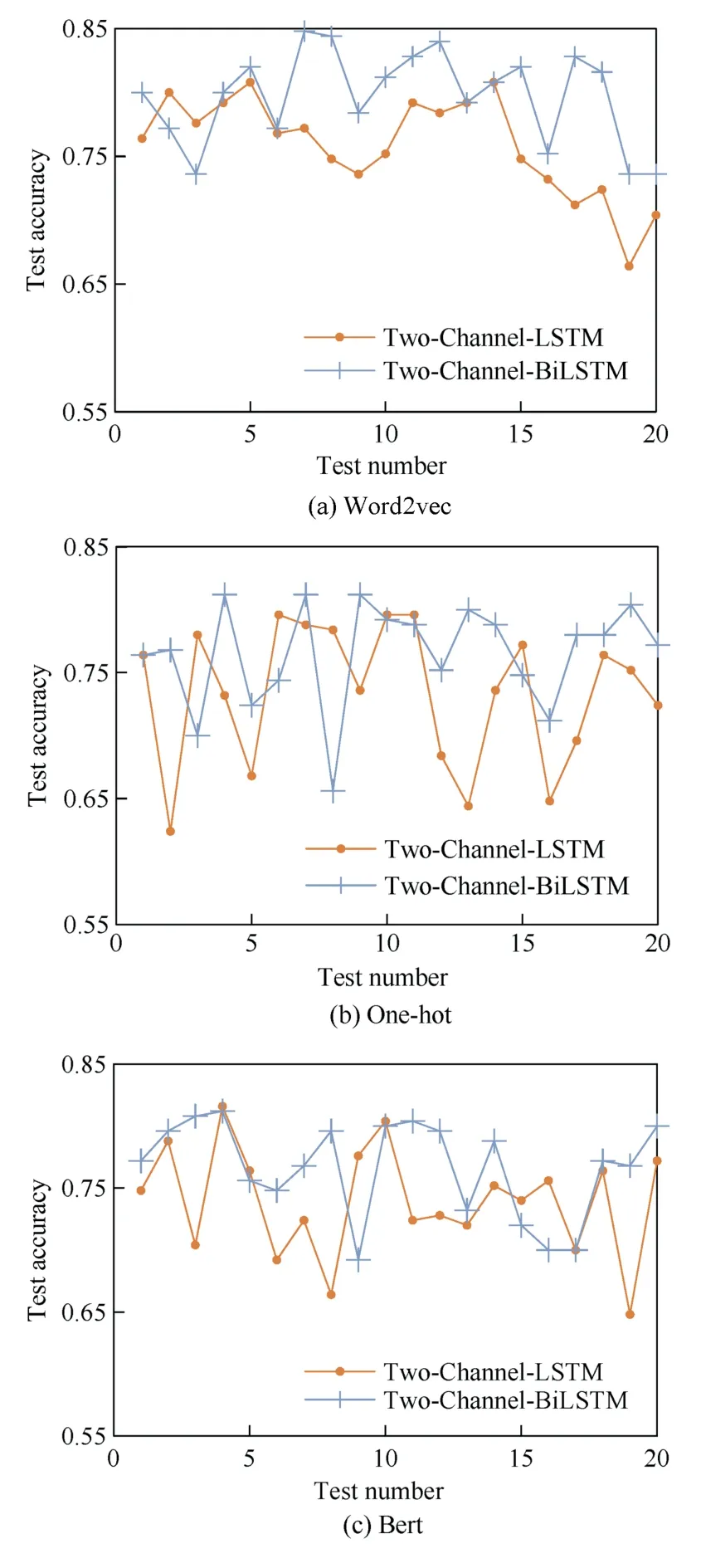

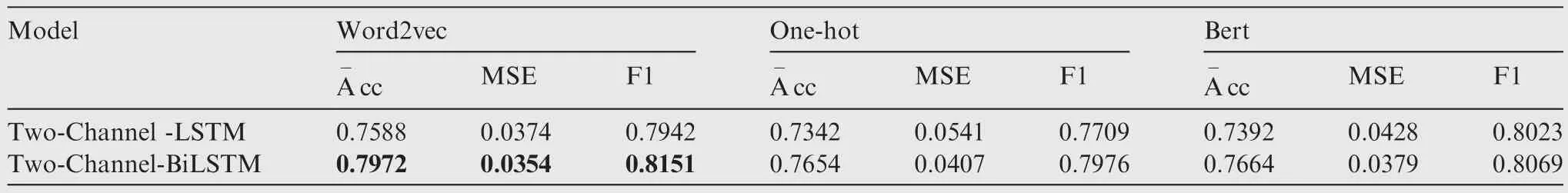

Three word embedding methods, namely word2vec, one-hot and BERT, are compared in the experiment. The comparative results of Two-Channel-LSTM and Two-Channel-BiLSTM on the CATC corpus are shown in Fig. 4 and Table 1. Experiments were also conducted on the EATC corpus, and the comparative results are shown in Fig. 5 and Table 2.

Fig.4 Test accuracy of Two-Channel-LSTM and Two-Channel-BiLSTM on CATC corpus.

Table 1 Comparative experiment results of Two-Channel model on CATC corpus.

Fig.5 Test accuracy of Two-Channel-LSTM and Two-Channel-BiLSTM on EATC corpus.

Table 2 Comparative experiment results of Two-Channel model on EATC corpus.

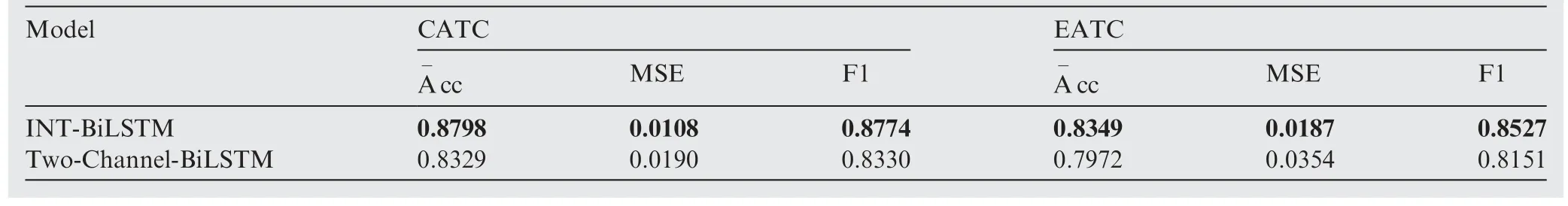

Table 3 Comparative experiment results of INT-BiLSTM and Two-Channel-BiLSTM on two corpora.

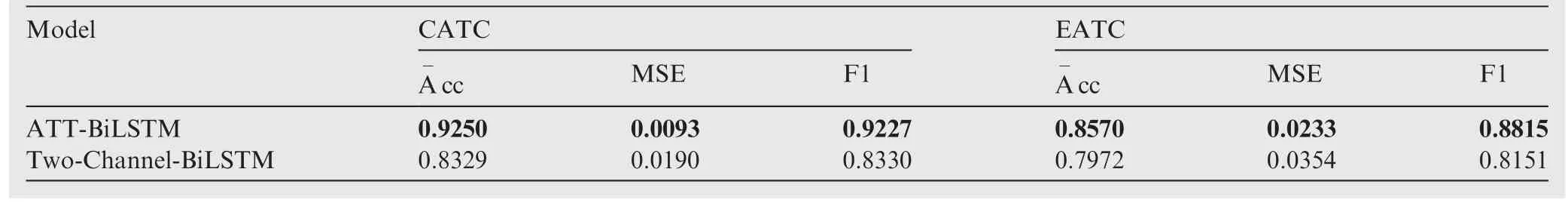

Table 4 Comparative experiment results of Two-Channel-BiLSTM and ATT-BiLSTM on two corpora.

From Fig. 4, Fig. 5, Table 1 and Table 2, we can see that the traditional two-channel checking model based on BiLSTM (Two-Channel-BiLSTM) has lower MSE, higher Acc and higher F1 than the one based on LSTM (Two-Channel-LSTM). This indicates that BiLSTM is better at semantic extraction, so we use BiLSTM in the proposed checking strategy. Moreover, word2vec performs much better than the other two word vectors, as it captures additional word-level semantic information well [38]. We therefore use the word2vec embedding method in the following experiments. The results above serve as a baseline for the novel strategy proposed in this paper.

4.4.Experimental results of proposed new strategy

To further verify the effectiveness of the interaction layer and attention layer for our task, ablation and comparison experiments are conducted as follows: (A) only the interaction layer is introduced into the Two-Channel-BiLSTM model (named INT-BiLSTM); (B) only the attention layer is introduced into the Two-Channel-BiLSTM model (named ATT-BiLSTM); (C) the proposed novel strategy (INT-ATT-BiLSTM); (D) the positions of the interaction layer and attention layer are swapped (named ATT-INT-BiLSTM); (E) comparative experiments are conducted with state-of-the-art models. The experiments are conducted separately, with the parameter settings described in Section 4.2 and the datasets described in Section 4.1.

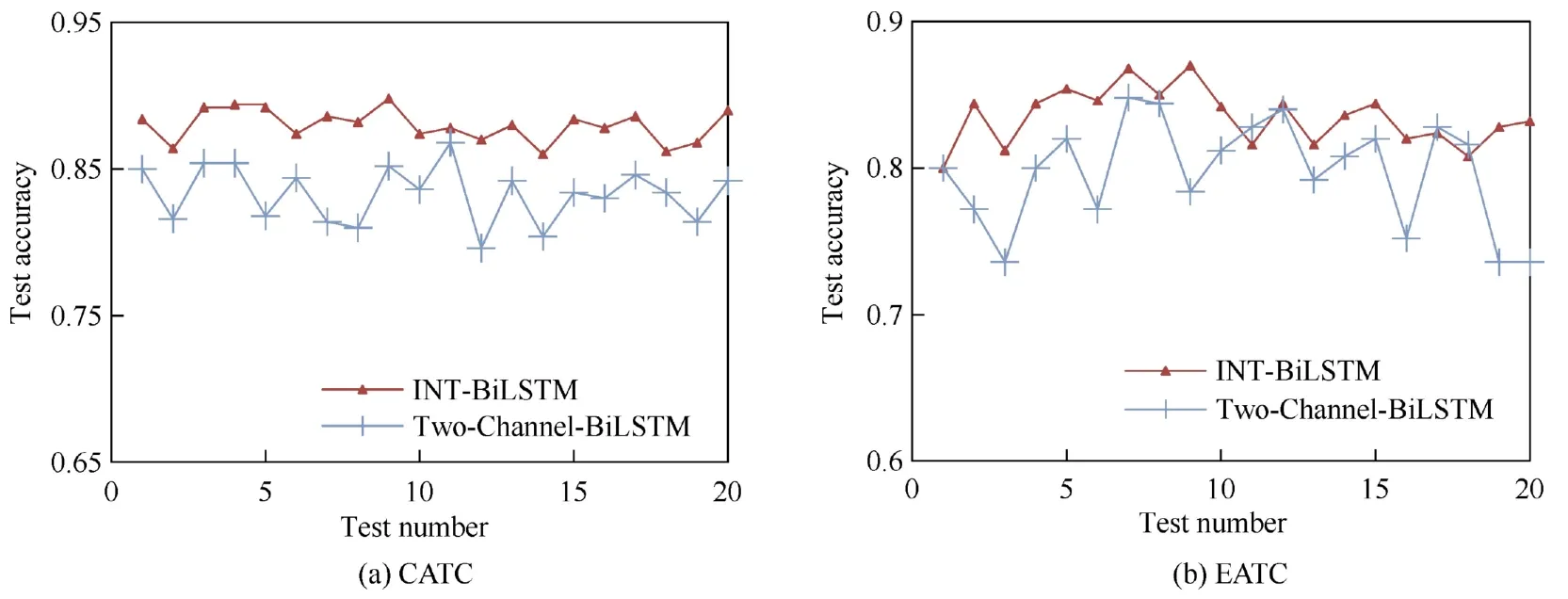

(1) When only the interaction layer is added, the structure of INT-BiLSTM is as shown in Fig. 6, and the comparative results of INT-BiLSTM and Two-Channel-BiLSTM on the CATC and EATC corpora are shown in Fig. 7 and Table 3.

Fig.6 Structure of INT-BiLSTM.

Fig.7 Test accuracy of INT-BiLSTM and Two-Channel-BiLSTM on two corpora.

From Fig. 7 and Table 3, we can see that introducing the interaction layer clearly improves the checking accuracy of radiotelephony read-backs over the Two-Channel-BiLSTM model. INT-BiLSTM also has lower MSE and higher F1 than the traditional Two-Channel-BiLSTM checking model. This indicates that the added interaction layer improves read-back verification, because it takes into account more word-level semantic relation information of the S_ATC-P pairs.

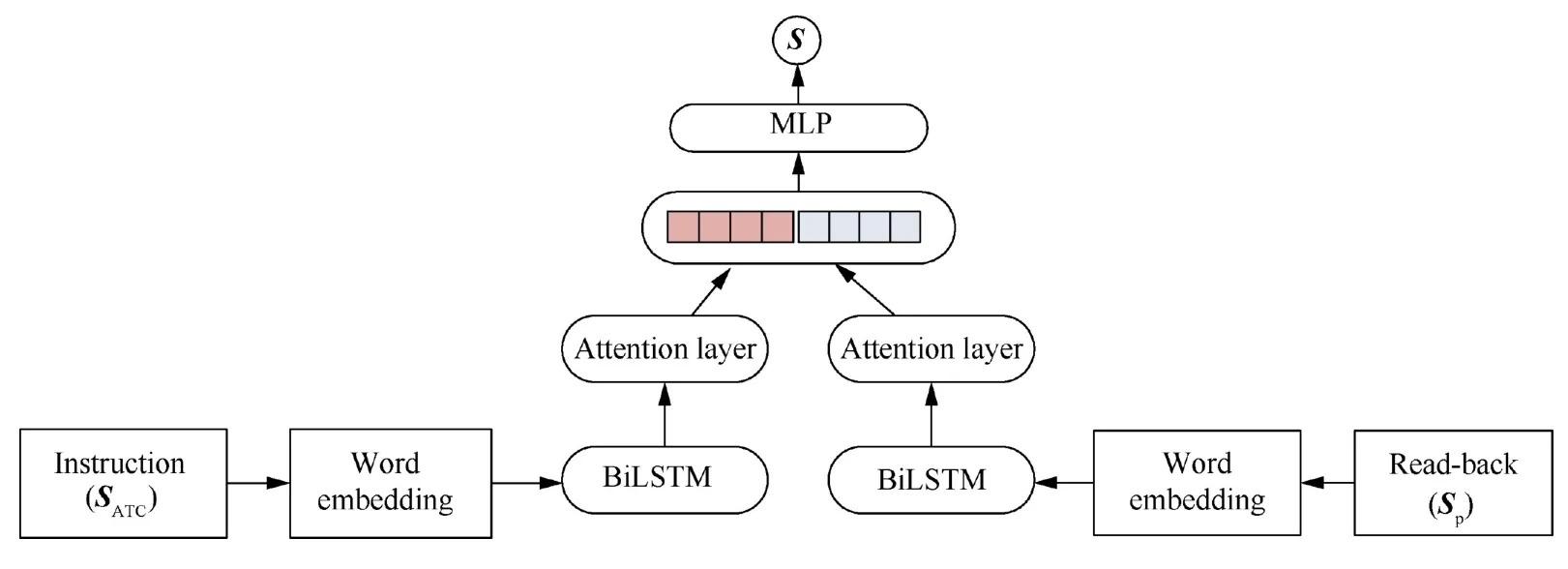

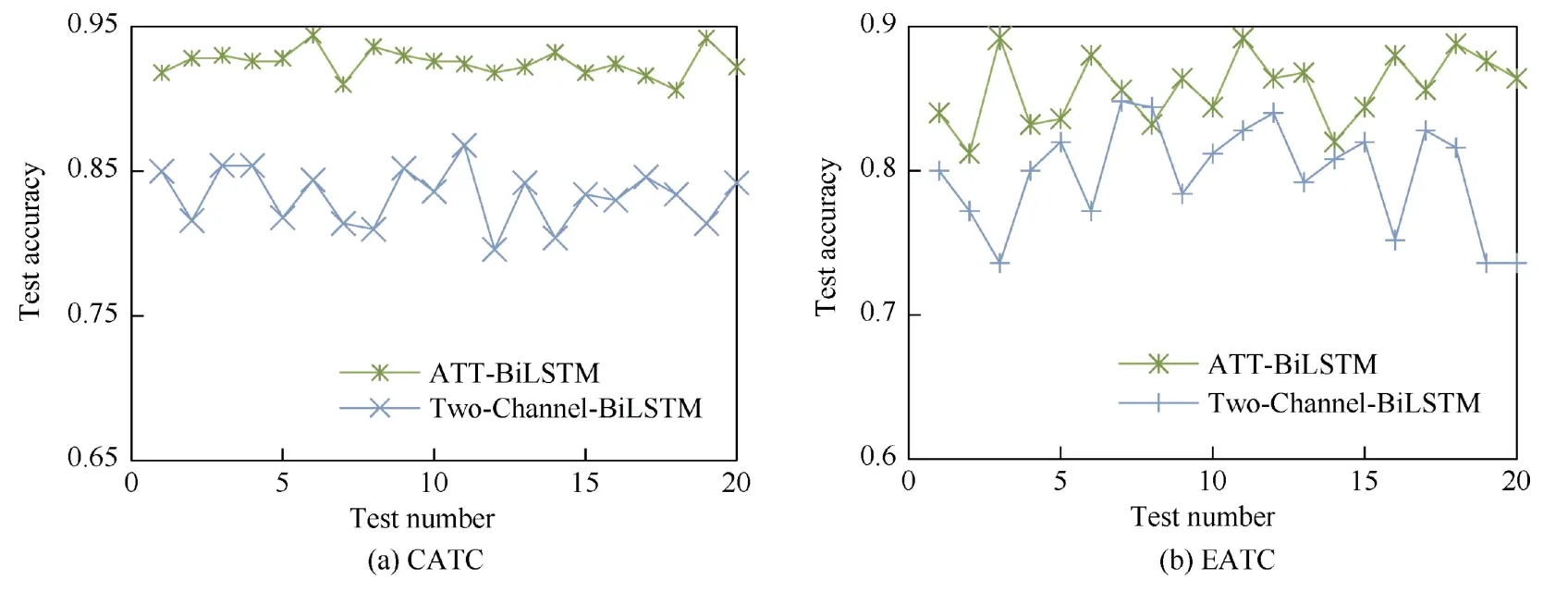

(2) When only the attention layer is added to the Two-Channel-BiLSTM model, the structure of the ATT-BiLSTM model is as shown in Fig. 8, and the comparative results of ATT-BiLSTM and Two-Channel-BiLSTM on the CATC and EATC corpora are shown in Fig. 9 and Table 4.

Fig.8 Structure of ATT-BiLSTM model.

Fig.9 Test accuracy of ATT-BiLSTM and Two-Channel-BiLSTM on two corpora.

As Fig. 9 and Table 4 indicate, introducing the attention layer clearly improves the results over the traditional two-channel BiLSTM checking model; for instance, Acc on the CATC corpus increases by 0.1. This confirms that the attention layer helps obtain robust semantic information by giving different focus to the information output by the BiLSTM hidden layer.
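The idea of giving different focus to the BiLSTM hidden outputs can be sketched as a generic additive attention pooling. This is an illustration only, not the memory-based attention layer of the paper, whose exact form is not given here; the function name `attention_pool` and the parameters `W` and `v` are hypothetical.

```python
import numpy as np

def attention_pool(features, W, v):
    """Weight each time step and return the weighted sum (generic sketch).

    features: (T, d) hidden outputs, W: (a, d), v: (a,)
    Returns (context of shape (d,), weights of shape (T,)).
    """
    scores = np.tanh(features @ W.T) @ v   # one relevance score per time step
    scores = scores - scores.max()         # stabilise the softmax numerically
    weights = np.exp(scores) / np.exp(scores).sum()
    return weights @ features, weights

rng = np.random.default_rng(0)
features = rng.normal(size=(5, 8))   # 5 time steps of 8-dimensional features
W = rng.normal(size=(6, 8))
v = rng.normal(size=6)
context, weights = attention_pool(features, W, v)
print(context.shape, weights.sum())
```

Time steps with higher scores contribute more to the pooled vector, whereas the plain two-channel model treats the BiLSTM output without such weighting.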

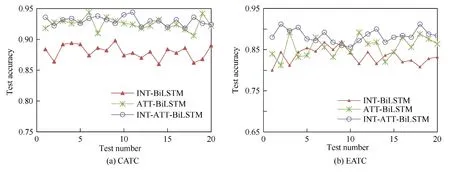

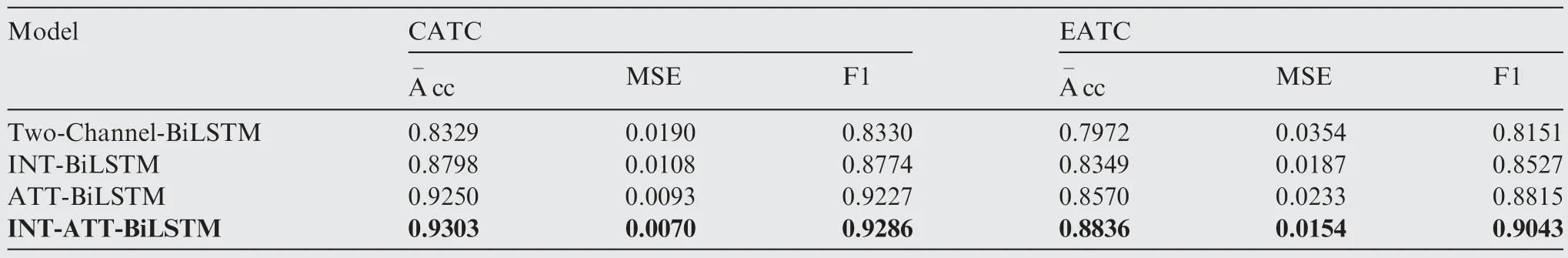

Fig.10 Comparative test accuracy of INT-ATT-BiLSTM,ATT-BiLSTM and INT-BiLSTM on two corpora.

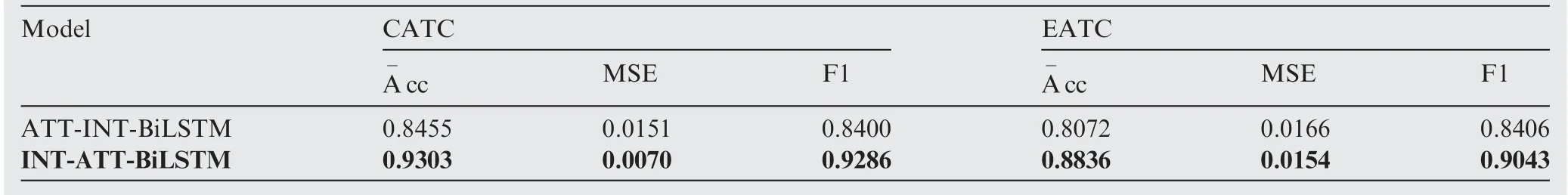

Table 5 Comparative experiment results of four models on two corpora.

Table 6 Comparative experiment results of ATT-INT-BiLSTM and INT-ATT-BiLSTM on two corpora.

Table 7 Comparative experiment results with baseline models on two corpora.

Fig. 10 and Table 5 show that the INT-ATT-BiLSTM model has the smallest fluctuation in test accuracy and that its results are clearly better than those of the other three models, which means that the proposed strategy is more robust and better suited to the read-back checking task. The ATT-BiLSTM model achieves the second-best results, which shows that the attention layer contributes more to checking performance and combines well with the interaction layer in the proposed strategy to extract more important interactive matching information.

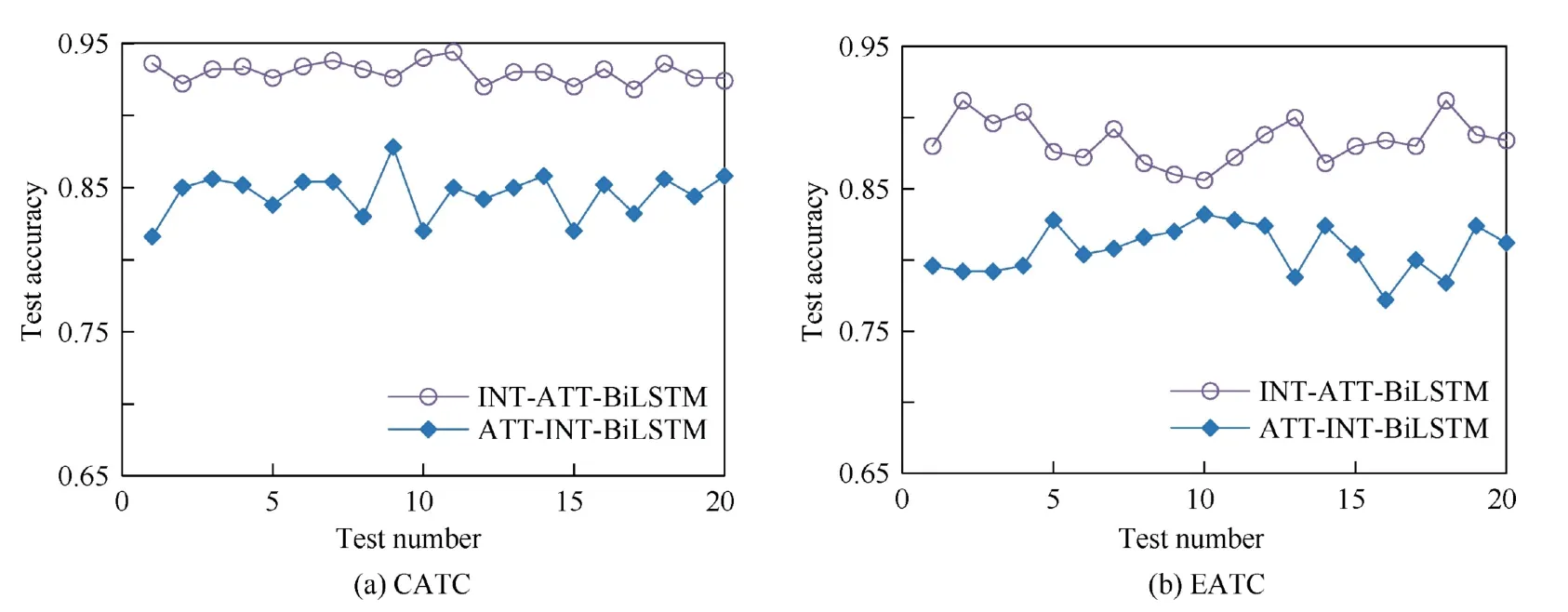

(4) To analyze the effect of the position of the interaction layer on our task, we swap the order of the attention layer and the interaction layer and compare the performance of the INT-ATT-BiLSTM and ATT-INT-BiLSTM models. The experimental results of the two models on the CATC and EATC corpora are shown in Fig. 11 and Table 6.

Fig.11 Test accuracy of ATT-INT-BiLSTM and INT-ATT-BiLSTM on two corpora.

From Fig. 11 and Table 6, we can see that the test accuracies of the ATT-INT-BiLSTM model do not exceed those of the INT-ATT-BiLSTM model. The primary reason is that placing the interaction layer after the attention layer discards some important word-level interaction information between the sentence pairs: radiotelephony communications are relatively short, and word-level interaction information is particularly important for read-back verification. INT-ATT-BiLSTM can thus model consecutive and short-distance semantics well and is more suitable for our task.

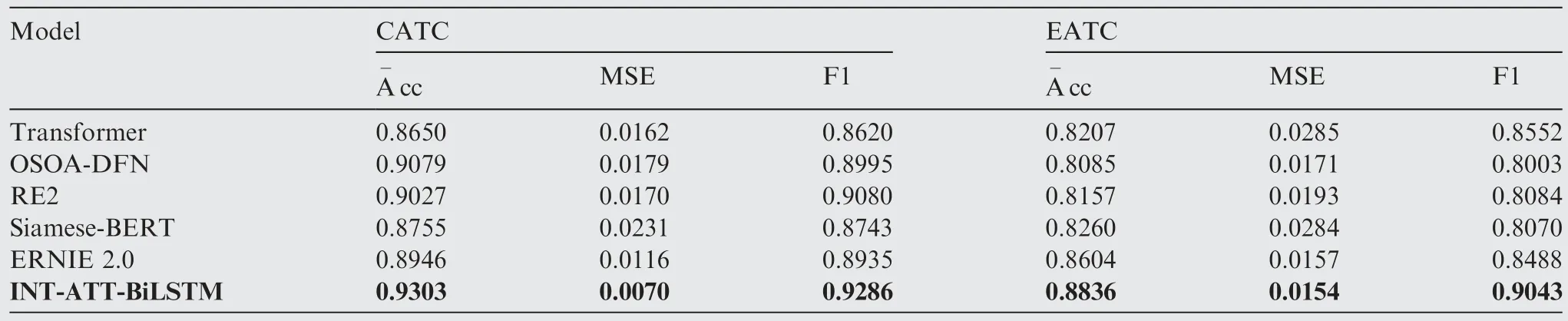

(5) To further demonstrate the performance of our model, we compare our new method (INT-ATT-BiLSTM) with five state-of-the-art models referenced in the Introduction. Transformer [23] is a network architecture based solely on attention mechanisms. Siamese-BERT [29] and ERNIE 2.0 [30] are both pre-training frameworks for text matching and language understanding. OSOA-DFN [15] and RE2 [16] are both attention-based models for text matching. We compare these models with the proposed method on our task. The comparative results on the CATC and EATC corpora are shown in Fig. 12 and Table 7.

Fig.12 Comparative test accuracy with baseline models on two corpora.

Our new method (INT-ATT-BiLSTM) performs best and significantly outperforms the other models on both datasets. The experimental analysis is as follows: (A) Siamese-BERT and ERNIE 2.0 both use the Transformer as feature extractor and lack interactive matching of semantic features, which is particularly important for our task. (B) The inputs of Siamese-BERT and ERNIE 2.0 are word vectors trained on general datasets, which cannot accurately represent proper nouns in the field of radiotelephony communication. (C) RE2 does not use an attention mechanism to weight the features in the fusion layer, which limits its capacity to use global context to enhance the semantic information after sentence interaction. (D) The overall model structures and attention mechanisms of these baseline models are relatively complex and difficult to train fully on the existing civil aviation radiotelephony communication corpora.

5.Conclusions

To verify radiotelephony read-backs in Chinese and English intelligently and automatically, a novel strategy based on BiLSTM is proposed that introduces attention and interaction mechanisms. From the experimental results and analysis, we draw the following conclusions:

(1) Adding the word-level interaction layer and the attention layer clearly improves the performance of the checking model on both the EATC and CATC corpora. The proposed strategy is better at extracting the interactive semantic features of radiotelephony communications and at representing the similarity of the read-backs.

(2) The traditional two-channel checking model is improved by adding the interaction layer after the BiLSTM layer, which makes full use of the word-level semantic relation information of read-back sentence pairs. In addition, BiLSTM better balances the semantic expression of earlier and later words, so the semantic feature of the whole sentence is more robust than that extracted by the two-channel model.

(3) The attention layer dynamically assigns different weights to different semantic feature vectors, which enables the new strategy to work more effectively with the MLP. As a result, the quantitative similarities produced by the MLP are more distinctive than those of the two-channel model.

(4) By combining the interaction layer and the attention layer, the proposed checking model extracts fine-grained matching semantic information more robustly. Sentence pairs that interact before the attention layer combine better with it to capture the word-level interaction information between short sentence pairs, which further improves the checking accuracy of read-backs.

(5) For natural language processing tasks with small datasets, such as radiotelephony communication, pre-trained models are difficult to train fully. The overall structure of our proposed method and its attention mechanism are relatively simple, and it obtains better experimental results on the task of verifying the consistency of ATC instructions and pilot read-backs.

Declaration of Competing Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Acknowledgements

This research is jointly supported by the Tianjin Natural Science Foundation of China ("Research on the Key Issues of Situational Cognition and Intelligent Early-warning for Civil Aviation Radiotelephony Communication"), the Fundamental Research Funds for the Central Universities, China (No. 3122019058), and the Project Funds for Civil Aviation, China (No. H01420210285).

CHINESE JOURNAL OF AERONAUTICS, 2022, Issue 12
