Surrounding vehicles’ behavior prediction for intelligent vehicles based on Att-BiLSTM

Yunqing Gao, Juping Zhu, and Hongbo Gao

¹Department of Automation, University of Science and Technology of China, Hefei 230022, China;

²Institute of Advanced Technology, University of Science and Technology of China, Hefei 230088, China

Abstract: A method for predicting the behavior of surrounding vehicles is presented for intelligent vehicles. The behavior of surrounding vehicles is hard to predict because of the significant uncertainty of vehicle driving and environmental changes. The method adopts a bidirectional long short-term memory (BiLSTM) model combined with an encoder to preserve memory over long training sequences. By building an attention mechanism on top of the BiLSTM, the method weights different pieces of information according to their importance, which helps the encoder retain information over long sequences. The resulting attention-based bidirectional LSTM (Att-BiLSTM) model improves the accuracy and effectiveness of surrounding-vehicle behavior prediction.

Keywords: behavior prediction; attention mechanism; long short-term memory (LSTM); intelligent vehicle

1 Introduction

Intelligent driving is one of the vital application fields of artificial intelligence (AI); it can increase traffic speed, relieve traffic pressure, and significantly improve people’s lives [1]. To plan a safe driving path, an intelligent vehicle must predict the future driving behavior of surrounding vehicles in advance.

Many studies have sought accurate and fast behavior prediction. Classical methods fall into three types: physical models, intention models, and interaction models [2]. The physical model relies only on the vehicle’s motion model and ignores both the interaction with other vehicles on the road and the constraints of traffic rules [3]. The intention model takes traffic rules into account but still ignores interaction with other vehicles. Moreover, it lacks qualitative constraints on time, so it cannot adapt to roads with other topological structures. Prediction with the intention model may therefore cause an intelligent vehicle to plan a highly unreasonable trajectory or even collide with other vehicles [4]. To overcome these shortcomings, the interaction model considers both traffic rules and the interaction with other road participants; it supports longer-term prediction than the physical model and is more stable than intention-based prediction. Its main disadvantage is the relatively large computational cost of modeling the relationships among multiple vehicles [5]. Fortunately, with the spread of AI and the rapid development of deep learning in recent years, intelligent vehicle behavior prediction has gained a more powerful tool. Because of their good performance in complex, realistic scenes, deep learning methods have become increasingly important.

Deep neural networks have been applied to many machine learning tasks and have successfully learned representations of various practical situations [6,7]. Recurrent neural networks (RNNs) are widely used to analyze time series data. LSTM, a popular RNN variant, has performed well in a range of vehicle behavior prediction tasks [8]. BiLSTM, which combines a forward LSTM and a backward LSTM, has also been widely studied and applied [9].

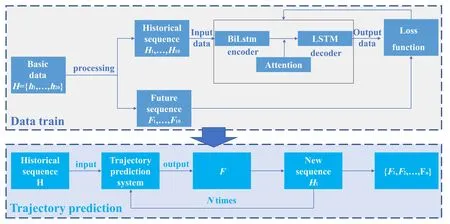

To address these problems, we present a method that adds an attention mechanism to a bidirectional LSTM encoder-decoder and assigns different weights to different states according to their importance during training. This addresses the problem that the encoder forgets information when the input sequence is too long. An overview of the algorithm is shown in Fig. 1. The historical sequence dataset is fed into the BiLSTM model, which is trained by backpropagating the loss function. The trained model then uses the first 10 time steps to predict the 11th, and so on, to obtain the prediction result.

BiLSTM and Att-BiLSTM are existing methods; we apply them to intelligent driving and extend the original research. The experimental results and analysis of surrounding-vehicle behavior prediction show that Att-BiLSTM performs well in the field of intelligent driving. In addition, we add an encoder-decoder structure on top of Att-BiLSTM, which is a further contribution of this work.

The main contributions of this study are summarized as follows:

(ⅰ) A BiLSTM model combined with an encoder is adopted to preserve memory over long training sequences.

(ⅱ) On this basis, an attention mechanism is added so that different states are weighted according to their importance.

Fig. 1. Att-BiLSTM overview.

(ⅲ) An Att-BiLSTM model is designed to improve the accuracy and effectiveness of surrounding-vehicle behavior prediction.

The rest of this work is organized as follows: Section 2 reviews related work. Section 3 describes in detail the Att-BiLSTM model built on LSTM and the attention mechanism. Experimental results are presented in Section 4. Finally, Section 5 concludes this work and outlines future work.

2 Related work

Ondruska and Posner [10] used an LSTM with range-sensor measurements as input to track the positions of objects, and Khosroshahi et al. [11] used an LSTM with trajectory data as input to identify driver intention. However, neither work addressed the limitation that the model input has a fixed number of time steps.

Jeong et al. [12] proposed an LSTM-based RNN algorithm to predict the motion of vehicles around multi-lane turning intersections. Ref. [13] designed a deep-learning vehicle trajectory algorithm in which an LSTM encoder analyzes past trajectory patterns and an LSTM decoder generates future trajectory sequences. The structure uses beam search to generate the K most probable trajectory candidates on an occupancy grid map, keeping the K locally optimal candidates from the decoder output. Refs. [14,15] presented a long-term prediction method based on a recurrent neural network with gated units, in which a deep neural network with LSTM and GRU structures analyzes the temporal and spatial characteristics of the past trajectory. This system, which generates the future trajectories of other traffic participants through sequence learning, can be used at different prediction levels. However, LSTM models can still suffer from vanishing gradients, which makes them hard to train on long time series. In Ref. [16], a trajectory prediction model based on spatio-temporal LSTM (ST-LSTM) was designed, which embeds spatial interaction into the LSTM model to implicitly measure the interaction between adjacent vehicles. In addition, a shortcut connection between the input and output of two consecutive LSTM layers is introduced to deal with vanishing gradients. An algorithm for long-term trajectory prediction of surrounding vehicles was designed using a dual LSTM network [17], which effectively improved prediction accuracy in strongly interactive driving environments. Compared with traditional trajectory matching and manual feature selection methods, this method automatically learns high-level spatio-temporal features of driver behavior from naturalistic driving data through sequence learning. Refs. [18,19] proposed a coupled LSTM model that effectively uses the historical trajectory of the target vehicle and information about the surrounding environment to predict the vehicle’s future trajectory. This method predicts the observable motion intention of the vehicle, builds a grid around the target vehicle, and extracts the latent spatial and intention information of adjacent vehicles. However, these works did not consider that the information in each time period of the input sequence contributes differently to the final prediction. For example, within one input sequence the target vehicle may attempt a lane change early on and then return to car-following later. It is therefore necessary to weight each time period according to its contribution to the final prediction. Motivated by this, we present a surrounding-vehicle behavior prediction method for intelligent vehicles based on Att-BiLSTM.

3 Behavior prediction model based on LSTM and the attention mechanism

In this section, we first introduce the RNN algorithm, then the LSTM network and the attention mechanism, and finally the Att-BiLSTM algorithm.

3.1 RNN algorithm

An RNN is a recurrent neural network that applies the same function to every input, and each output depends on the previous computation: the generated output is copied and fed back into the network. In other neural networks, such as feedforward networks, all inputs are processed independently of each other, whereas in an RNN the inputs are interrelated. In many real-world tasks, the network’s output depends on both the current input and previous outputs.

Given an input sequence $x_{1:T} = (x_1, x_2, \ldots, x_t, \ldots, x_T)$, the RNN updates the activity value $h_t$ of the hidden layer, which has a feedback edge, by the following formula:

$$h_t = f(h_{t-1}, x_t) \tag{1}$$

where $h_0 = 0$ and $f(\cdot)$ is a nonlinear function, which can be a feedforward network. Fig. 2 shows an example of an RNN, where the “delayer” is a virtual unit that records the most recent (or several recent) activity values of the neurons.
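As a minimal sketch of the recurrence above (with $h_0 = 0$), the code below unrolls an RNN over a sequence of scalar inputs. It assumes, purely for illustration, that $f$ is the common choice $\tanh(u\,h_{t-1} + w\,x_t + b)$ with hypothetical scalar parameters `u`, `w`, and `b`; the paper does not specify $f$.

```python
import math


def rnn_unroll(xs, u, w, b):
    """Unroll h_t = f(h_{t-1}, x_t), assuming f = tanh(u*h + w*x + b)."""
    h = 0.0  # h_0 = 0
    hs = []
    for x in xs:
        # The same function is applied at every step; the new state
        # depends on the current input and on the previous state.
        h = math.tanh(u * h + w * x + b)
        hs.append(h)
    return hs


# Hypothetical parameter values, chosen only to exercise the function.
states = rnn_unroll([1.0, 0.5, -0.2], u=0.8, w=1.2, b=0.0)
```

Because the same parameters are reused at every step, the state at step $t$ carries information from all earlier inputs, which is the property that LSTM gating later refines.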

3.2 LSTM network

LSTM was designed specifically to overcome the inability of RNNs to handle long-range dependencies. Fig. 3 shows the recurrent unit structure of the LSTM network, which introduces a gating mechanism to control the path along which information flows. Denote the input gate as $i_t$, the forget gate as $f_t$, and the output gate as $o_t$.

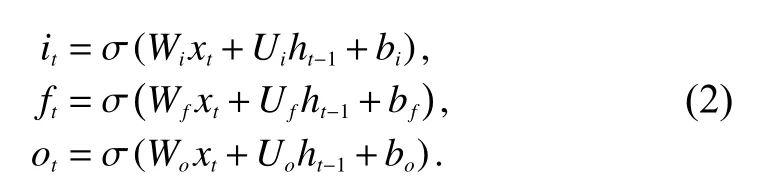

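The gates in the LSTM network are “soft” gates whose values lie between 0 and 1, meaning that information passes through in a certain proportion. The three gates are computed as shown in Eq. (2):

$$
\begin{aligned}
i_t &= \sigma(W_i x_t + U_i h_{t-1} + b_i),\\
f_t &= \sigma(W_f x_t + U_f h_{t-1} + b_f),\\
o_t &= \sigma(W_o x_t + U_o h_{t-1} + b_o),
\end{aligned} \tag{2}
$$

where $\sigma(\cdot)$ is the logistic sigmoid function, $x_t$ is the current input, $h_{t-1}$ is the external state at the previous time step, and $W_*$, $U_*$, and $b_*$ are learnable parameters.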

Fig. 2. Recurrent neural network.

Fig. 3. The recurrent unit structure of the LSTM network.

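The input gate $i_t$ controls how much information from the candidate state $\tilde{c}_t$ is stored at the current time step. The forget gate $f_t$ controls how much of the previous internal state $c_{t-1}$ is retained, selectively deleting some information from the memory cell and passing the rest on to the next step. The output gate $o_t$ controls how much information the internal state $c_t$ outputs to the external state $h_t$ at the current time step, as shown in Eq. (3):

$$
\begin{aligned}
\tilde{c}_t &= \tanh(W_c x_t + U_c h_{t-1} + b_c),\\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t,\\
h_t &= o_t \odot \tanh(c_t),
\end{aligned} \tag{3}
$$

where $\odot$ denotes the element-wise product.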
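The gate and state updates of Eqs. (2) and (3) can be sketched for one time step in plain Python. The parameter layout, a dict holding hypothetical entries `W_g`, `U_g`, and `b_g` for each gate `g` in `i`, `f`, `o` and for the candidate `c`, is an assumption for illustration; a practical model (the paper uses PyTorch) would rely on a tensor library rather than nested lists.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def matvec(M, v):
    # Dense matrix-vector product on nested lists.
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def affine(p, name, x, h_prev):
    # W_name x_t + U_name h_{t-1} + b_name
    wx = matvec(p["W_" + name], x)
    uh = matvec(p["U_" + name], h_prev)
    return [w + u + bias for w, u, bias in zip(wx, uh, p["b_" + name])]


def lstm_step(x, h_prev, c_prev, p):
    i = [sigmoid(z) for z in affine(p, "i", x, h_prev)]            # input gate
    f = [sigmoid(z) for z in affine(p, "f", x, h_prev)]            # forget gate
    o = [sigmoid(z) for z in affine(p, "o", x, h_prev)]            # output gate
    c_tilde = [math.tanh(z) for z in affine(p, "c", x, h_prev)]    # candidate state
    # Keep part of the old memory (forget gate), add part of the candidate (input gate).
    c = [ft * cp + it * ct for ft, cp, it, ct in zip(f, c_prev, i, c_tilde)]
    # Expose part of the memory as the external state (output gate).
    h = [ot * math.tanh(ct) for ot, ct in zip(o, c)]
    return h, c


def zero_params(n_in, n_hid):
    # All-zero parameters: every gate outputs 0.5 and the candidate is 0.
    p = {}
    for g in "ifoc":
        p["W_" + g] = [[0.0] * n_in for _ in range(n_hid)]
        p["U_" + g] = [[0.0] * n_hid for _ in range(n_hid)]
        p["b_" + g] = [0.0] * n_hid
    return p
```

With all-zero parameters every gate equals 0.5 and the candidate is 0, so one step simply halves the previous cell state; this makes the roles of the forget and output gates easy to check by hand.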

The LSTM network is an improved form of RNN that makes it easier for the network to remember past data. LSTM mitigates the vanishing gradient problem of RNNs. Trained with backpropagation, LSTM is suitable for classifying, processing, and predicting time series with unknown time lags.

3.3 Attention mechanism

The amount of information a neural network can store is called its network capacity. Generally, the capacity of a group of neurons is proportional to the number of neurons and the complexity of the network. Storing more information therefore requires more neurons or a more complex network, which multiplies the number of network parameters.

When a neural network has to process a large amount of input information, we can borrow the attention mechanism of the human brain and process only the key information, improving the network’s efficiency. Let $X = [x_1, \ldots, x_N]$ denote $N$ pieces of input information. To save computing resources, instead of feeding all $N$ pieces into the network, only the task-related information is selected from $X$ and fed into the network.

Computing attention involves two steps: first, computing the attention distribution over all input information; second, computing the weighted average of the input information according to that distribution.

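Given a query $q$ and taking the keys and values to be the input itself (Key = Value = X), the attention distribution is obtained as follows:

$$\alpha_i = \mathrm{softmax}\big(s(x_i, q)\big) = \frac{\exp\big(s(x_i, q)\big)}{\sum_{j=1}^{N} \exp\big(s(x_j, q)\big)} \tag{4}$$

where $\alpha_i$ is the attention distribution (a probability distribution) and $s(x_i, q)$ is the attention scoring function. Common scoring functions include:

$$
\begin{aligned}
\text{additive model:}\quad & s(x_i, q) = v^{\top}\tanh(W x_i + U q),\\
\text{dot-product model:}\quad & s(x_i, q) = x_i^{\top} q,\\
\text{scaled dot-product model:}\quad & s(x_i, q) = \frac{x_i^{\top} q}{\sqrt{D}},\\
\text{bilinear model:}\quad & s(x_i, q) = x_i^{\top} W q,
\end{aligned}
$$

where $W$, $U$, and $v$ are learnable parameters and $D$ is the dimension of the input vectors.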

The attention distribution $\alpha_i$ can be interpreted as the degree to which the $i$-th piece of information is attended to, given a task-related query $q$. We use a “soft” information selection mechanism to summarize the input information; soft attention is computed as follows:

$$\mathrm{att}(X, q) = \sum_{i=1}^{N} \alpha_i x_i \tag{5}$$
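The two steps of soft attention (attention distribution, then weighted average) can be sketched as follows. The scaled dot-product score $s(x_i, q) = x_i^{\top} q / \sqrt{D}$ is an assumed choice for illustration, since the text does not say which scoring function is used; keys and values are both taken to be the inputs $X$.

```python
import math


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def soft_attention(X, q):
    """X: list of N input vectors (keys = values = X); q: query vector of the same size."""
    dim = len(q)
    # Step 1: score every input against the query (scaled dot product, assumed),
    # then normalize the scores into the attention distribution alpha.
    scores = [sum(a * b for a, b in zip(x, q)) / math.sqrt(dim) for x in X]
    alpha = softmax(scores)
    # Step 2: soft attention = alpha-weighted average of the inputs.
    context = [sum(a * x[k] for a, x in zip(alpha, X)) for k in range(dim)]
    return alpha, context
```

A query aligned with one input shifts most of the weight onto that input, while a zero query spreads the weight uniformly, so the context vector becomes the plain average of the inputs.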

Other variants, such as hard attention and key-value attention, are not described here. The attention mechanism is generally used as a component of a neural network, mainly to filter the input and select the relevant information.

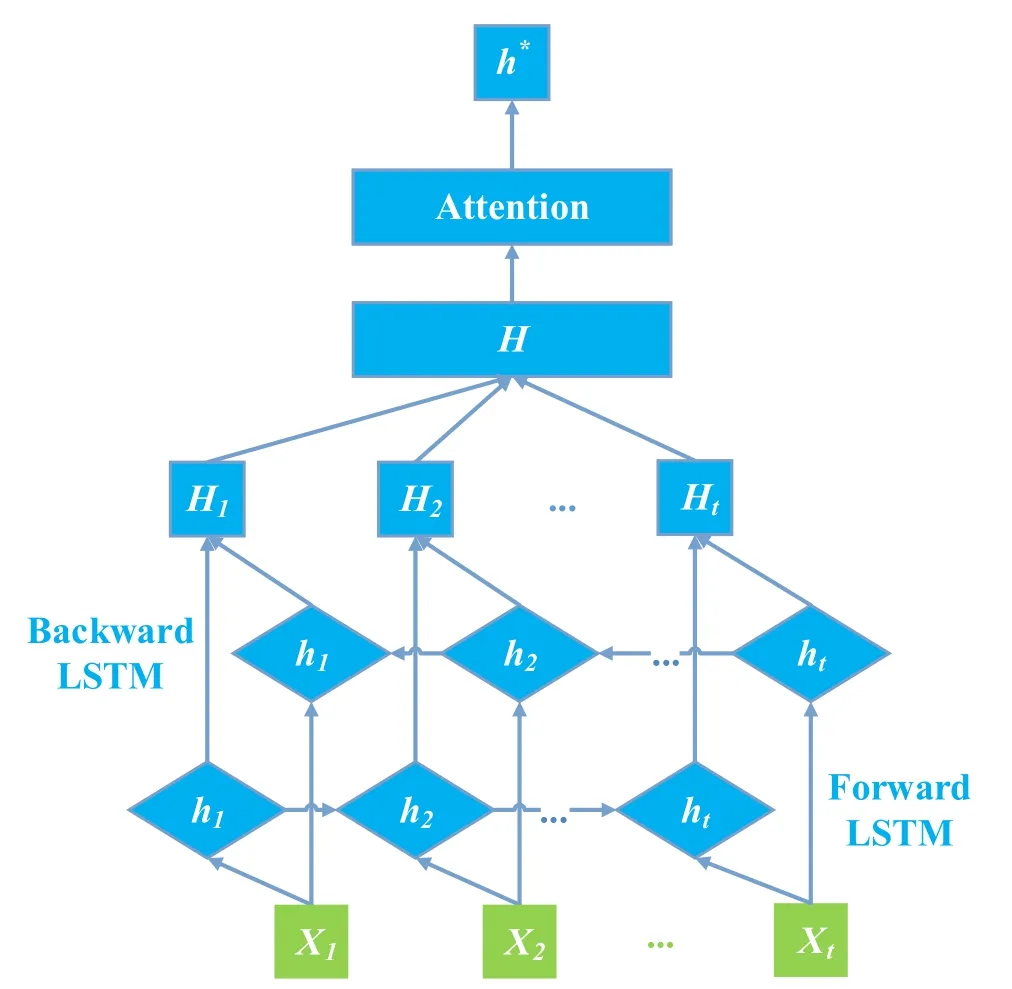

3.4 Att-BiLSTM with encoder-decoder structure

A BiLSTM consists of two LSTM layers that receive the same input but pass information in opposite directions. An encoder-decoder structure is adopted to preserve memory over long training sequences. The encoder is a BiLSTM: given the input $x_t$ at time $t$, the BiLSTM encoder produces the output vector $h_t$ as shown in Eq. (6), where $\overrightarrow{h}_t$ denotes the forward output, $\overleftarrow{h}_t$ denotes the backward output, and $\oplus$ denotes element-wise addition:

$$
\begin{aligned}
\overrightarrow{h}_t &= \mathrm{LSTM}\big(x_t, \overrightarrow{h}_{t-1}\big),\\
\overleftarrow{h}_t &= \mathrm{LSTM}\big(x_t, \overleftarrow{h}_{t+1}\big),\\
h_t &= \overrightarrow{h}_t \oplus \overleftarrow{h}_t.
\end{aligned} \tag{6}
$$
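A minimal sketch of the bidirectional encoder of Eq. (6): one LSTM runs forward over the sequence, another runs over the reversed sequence, the backward outputs are realigned in time, and the two outputs at each step are added element-wise. To stay short, it uses scalar inputs and a one-dimensional hidden state; the parameter dicts `fwd` and `bwd` (gate name mapped to a hypothetical `(w, u, b)` triple) are illustrative assumptions, not the paper’s configuration.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h, c, p):
    # Scalar LSTM cell; p maps each gate name to (w, u, b) for w*x + u*h + b.
    pre = {g: p[g][0] * x + p[g][1] * h + p[g][2] for g in "ifoc"}
    i, f, o = sigmoid(pre["i"]), sigmoid(pre["f"]), sigmoid(pre["o"])
    c = f * c + i * math.tanh(pre["c"])
    return o * math.tanh(c), c


def run_lstm(xs, p):
    h, c, out = 0.0, 0.0, []
    for x in xs:
        h, c = lstm_step(x, h, c, p)
        out.append(h)
    return out


def bilstm_encode(xs, fwd, bwd):
    forward = run_lstm(xs, fwd)                # forward outputs for t = 1..T
    backward = run_lstm(xs[::-1], bwd)[::-1]   # backward outputs, realigned to t = 1..T
    # Element-wise addition of the two directions at each time step.
    return [hf + hb for hf, hb in zip(forward, backward)]


# Hypothetical shared parameters for both directions.
params = {g: (0.5, 0.3, 0.1) for g in "ifoc"}
encoded = bilstm_encode([1.0, -0.5, 2.0], params, params)
```

Passing identical parameters for `fwd` and `bwd` makes the encoding of a reversed sequence equal to the reversed encoding, which is a quick check that the backward outputs are aligned with the correct time steps.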

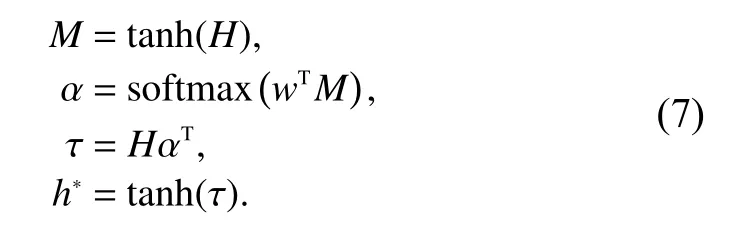

In an encoder-decoder structure, the longer the input sequence, the less information about it the encoder’s intermediate vector can retain. Adding an attention mechanism lets the decoder look beyond the hidden state at the encoder’s last time step and select the most relevant hidden states from all time steps of the input sequence. Accordingly, at every decoding step our model uses the encoder’s hidden vectors from all time steps and, through attention, focuses on the most relevant input time steps. The encoder outputs are fed to the attention mechanism. Let $H = [h_1, h_2, \ldots, h_T]$ be the matrix of BiLSTM outputs over $T$ time steps. Attention is computed as follows:

$$
\begin{aligned}
M &= \tanh(H),\\
\alpha &= \mathrm{softmax}\big(w^{\top} M\big),\\
r &= H \alpha^{\top},\\
h^{*} &= \tanh(r),
\end{aligned} \tag{7}
$$

where $w$ is a trainable parameter vector and $\alpha$ holds the attention weights of the $T$ time steps.

The resulting vector $h^{*}$ is input to the decoder to obtain the prediction result. The whole process is shown in Fig. 4.
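The attention step can be sketched as below. It assumes the attention layer commonly used in Att-BiLSTM models, $M = \tanh(H)$, $\alpha = \mathrm{softmax}(w^{\top} M)$, $r = H\alpha^{\top}$, $h^{*} = \tanh(r)$, with a trainable vector `w`; the paper’s exact formula is not recoverable from the text, so treat this as an illustration rather than the authors’ implementation.

```python
import math


def attention_pool(H, w):
    """H: list of T encoder outputs (each a d-dim list); w: d-dim weight vector.

    Returns (alpha, h_star), where h_star is the vector passed to the decoder."""
    # M = tanh(H), applied element-wise to every hidden vector.
    M = [[math.tanh(v) for v in h] for h in H]
    # One score per time step (w^T m_t), normalized over time with a softmax.
    scores = [sum(wk * mk for wk, mk in zip(w, m)) for m in M]
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    z = sum(exps)
    alpha = [e / z for e in exps]
    # r = H alpha^T: attention-weighted sum of the hidden vectors.
    d = len(H[0])
    r = [sum(a * h[k] for a, h in zip(alpha, H)) for k in range(d)]
    # h* = tanh(r)
    return alpha, [math.tanh(v) for v in r]
```

With `w` set to zeros every time step gets the same weight, so $h^{*}$ reduces to $\tanh$ of the mean hidden vector; a trained `w` instead concentrates the weight on the time steps most useful for the prediction.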

4 Experiment and result analysis

4.1 Datasets

In our experiments, the proposed model is tested on the public Next Generation Simulation (NGSIM) dataset, which has been widely used in existing studies [20-22]. NGSIM provides detailed vehicle trajectory data collected by the U.S. Federal Highway Administration and contains four subsets from different scenes: US-101, I-80, Lankershim Boulevard, and Peachtree Street. The first two record vehicle trajectories on expressways, whereas the last two record vehicle trajectories on urban roads. US-101, collected on U.S. Highway 101 in Los Angeles, California, is selected as the primary dataset; the procedure for the other subsets is similar.

The vehicle trajectory features in the NGSIM dataset include the global and local coordinates of each vehicle, together with essential information such as speed, acceleration, and the distances to the preceding and following vehicles. The US-101 study area consists of five main lanes and two auxiliary lanes. The trajectory data give the precise position of each vehicle in the study area every 0.1 s, from which its exact lane position and its position relative to other vehicles can be obtained.

4.2 Datasets preprocessing

First, the US-101 subset of the NGSIM dataset is extracted with the Python library pandas, and the training and test sets for this experiment are then constructed as follows:

(ⅰ) Sort all vehicle IDs in ascending order.

(ⅱ) Sort each vehicle’s records by total number of frames in ascending order. Because vehicle IDs are reused for different vehicles entering the recording area, only the record with the smallest total number of frames is kept for each vehicle ID, which yields 3108 trajectories.

(ⅲ) Trajectories differ in their total number of frames. Since positions are recorded every 0.1 s, trajectories with fewer than 60 frames (6 s) are discarded and only the first 60 frames of longer trajectories are kept, which finally yields 2100 trajectories.

(ⅳ) 2000 trajectories form the training set, from which samples are drawn randomly during training; the remaining 100 trajectories form the test set.
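Steps (ⅰ) to (ⅳ) can be sketched as follows. The paper performs them with pandas; plain Python is used here only to keep the sketch dependency-free. The record keys follow NGSIM column names (`Vehicle_ID`, `Total_Frames`, `Frame_ID`, `Local_X`, `Local_Y`), while holding out the last 100 trajectories as the test set is an assumption, since the text does not say which 100 are held out.

```python
import random
from collections import defaultdict


def build_trajectories(rows, n_frames=60):
    """Steps (i)-(iii): one fixed-length list of (x, y) points per vehicle ID."""
    # Group records by (vehicle ID, total frame count); a reused ID shows up
    # as several groups with different Total_Frames values.
    groups = defaultdict(list)
    for r in rows:
        groups[(r["Vehicle_ID"], r["Total_Frames"])].append(r)
    # Steps (i)-(ii): visit IDs in ascending order and keep, for each ID,
    # the group with the smallest total number of frames.
    chosen = {}
    for vid, total in sorted(groups):
        chosen.setdefault(vid, groups[(vid, total)])
    trajectories = []
    for vid in sorted(chosen):
        track = sorted(chosen[vid], key=lambda r: r["Frame_ID"])  # 0.1 s per frame
        # Step (iii): discard short tracks, truncate long ones to n_frames frames.
        if len(track) < n_frames:
            continue
        trajectories.append([(r["Local_X"], r["Local_Y"]) for r in track[:n_frames]])
    return trajectories


def split_train_test(trajectories, n_test=100, seed=0):
    """Step (iv): hold out the last n_test trajectories (assumed) and shuffle the rest."""
    if not 0 < n_test < len(trajectories):
        raise ValueError("n_test must be between 1 and len(trajectories) - 1")
    train, test = list(trajectories[:-n_test]), list(trajectories[-n_test:])
    random.Random(seed).shuffle(train)
    return train, test
```

On the full US-101 extract, this procedure would be expected to reproduce the counts reported above (3108 IDs after step (ⅱ), 2100 trajectories after step (ⅲ)) only if the same filtering conventions are used.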

4.3 Model training

Fig. 4. Attention mechanism-based BiLSTM.

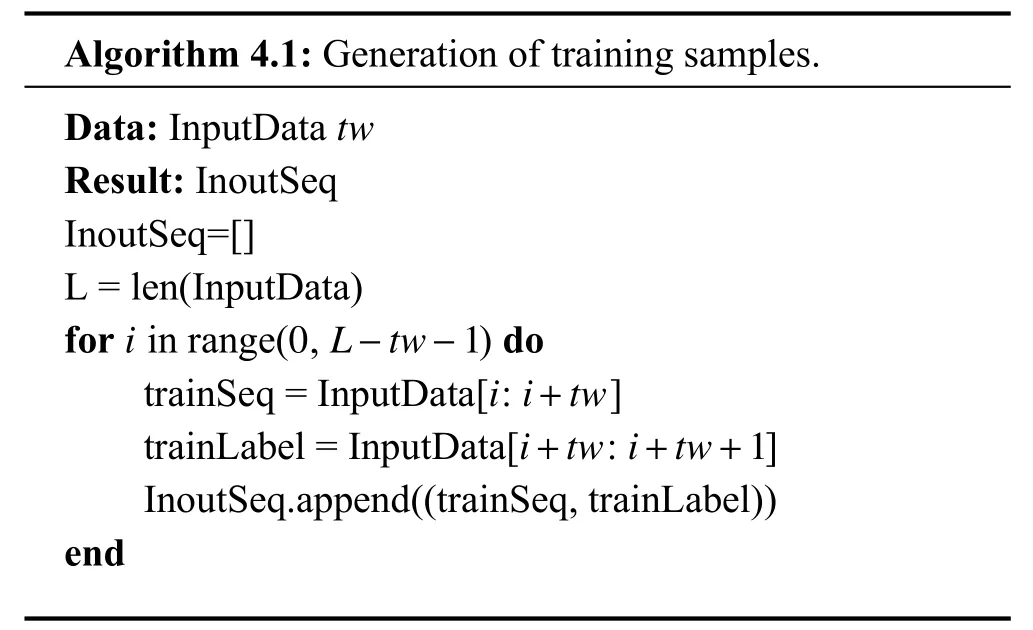

After this processing, each training sample contains 10 time steps of historical information and 1 time step of future trajectory information. The procedure is given in Algorithm 4.1.
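Turning each 60-frame trajectory into samples with 10 steps of history and 1 step of future can be sketched as below. That the window slides forward by one frame (giving 50 samples per trajectory) is an assumption; the text does not state the stride.

```python
def make_samples(trajectory, history=10, horizon=1):
    """Split one trajectory (list of (x, y) points) into (past, future) pairs.

    Assumes a stride of one frame between consecutive windows."""
    samples = []
    for start in range(len(trajectory) - history - horizon + 1):
        past = trajectory[start:start + history]                        # model input
        future = trajectory[start + history:start + history + horizon]  # target
        samples.append((past, future))
    return samples


# A hypothetical 60-frame trajectory moving along x.
demo_traj = [(float(i), 0.0) for i in range(60)]
demo_samples = make_samples(demo_traj)
```

A larger stride would give fewer, less correlated samples per trajectory; a stride of one simply uses every possible window.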

In Algorithm 4.1, InputData denotes the input data and $t_w$ denotes the length of the encoder input sequence, i.e., 10. The hidden state dimension of both the encoder and the decoder is 128. The model is implemented in PyTorch and trained with the Adam optimizer at a learning rate of 0.001. Since the model’s task is to predict vehicle trajectories, the loss function is the mean square error between the predicted future trajectory and the ground-truth trajectory, as shown in Eq. (8):

$$L = \frac{1}{N}\sum_{i=1}^{N}\Big[\big(x_{\mathrm{pre}}^{i} - x_{\mathrm{Tr}}^{i}\big)^2 + \big(y_{\mathrm{pre}}^{i} - y_{\mathrm{Tr}}^{i}\big)^2\Big] \tag{8}$$

where $N$ is the number of samples, $(x_{\mathrm{pre}}, y_{\mathrm{pre}})$ is the predicted trajectory coordinate, and $(x_{\mathrm{Tr}}, y_{\mathrm{Tr}})$ is the true trajectory coordinate.
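The mean square error loss of Eq. (8) can be computed as follows for a batch of predicted and true $(x, y)$ points. Averaging the squared Euclidean error over the $N$ samples is an assumption about the normalization. PyTorch’s `torch.nn.MSELoss` with its default `reduction='mean'` instead averages over every coordinate, which divides the same sum by $2N$ for 2-D points.

```python
def mse_loss(pred, true):
    """Mean over samples of the squared Euclidean error between (x, y) points."""
    if len(pred) != len(true) or not pred:
        raise ValueError("pred and true must be non-empty and of equal length")
    total = sum(
        (xp - xt) ** 2 + (yp - yt) ** 2
        for (xp, yp), (xt, yt) in zip(pred, true)
    )
    return total / len(pred)
```

For example, one prediction off by $(3, 4)$ and one exact prediction give $(9 + 16 + 0)/2 = 12.5$.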

Fig. 5 shows the losses of the final model on the training and test sets. Both losses decrease steadily as the number of iterations increases and finally stabilize, which indicates that the model converges quickly and can be validated soon after training.

4.4 Experiment results and analysis

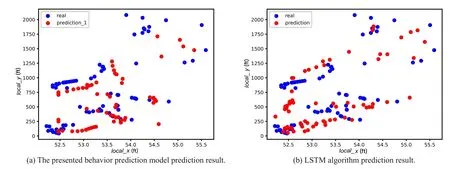

We compared the proposed Att-BiLSTM with the classical LSTM prediction method and plotted the results with Python’s matplotlib library. For two specific vehicles (IDs 3000 and 2000), the prediction results are shown in Figs. 6 and 7, where Figs. 6a and 7a show the results of Att-BiLSTM and Figs. 6b and 7b show those of LSTM. Blue points denote the actual trajectory and red points the predicted trajectory. In “prediction” and “prediction_1”, the data of the previous 10 time steps are input to predict the next time step, and this is repeated along the trajectory. In “prediction_2”, the first 10 time steps are used to predict the following 10 time steps in a single run. The figures show that Att-BiLSTM clearly outperforms the LSTM prediction method. The algorithm predicts well both simple trajectories and complex trajectories with multiple changes of direction. Although the predicted trajectory deviates somewhat in position, its overall direction remains accurate.

Fig. 5. Loss curves of the training and test sets.

Fig. 6. Prediction results for the vehicle with ID 30000.

Fig. 7. Prediction results for the vehicle with ID 20000.

To evaluate the algorithm quantitatively, we compare it with the classical LSTM prediction algorithm on the test set. The average displacement error (ADE) is used as the evaluation metric; it is defined as the mean Euclidean distance between the predicted coordinates of the target vehicle at the future time steps and the true values, i.e.,

$$\mathrm{ADE} = \frac{1}{N}\sum_{i=1}^{N}\sqrt{\big(x_{\mathrm{pre}}^{i} - x_{\mathrm{Tr}}^{i}\big)^2 + \big(y_{\mathrm{pre}}^{i} - y_{\mathrm{Tr}}^{i}\big)^2} \tag{9}$$

where $N$ is the number of samples, $(x_{\mathrm{pre}}, y_{\mathrm{pre}})$ is the predicted trajectory coordinate, and $(x_{\mathrm{Tr}}, y_{\mathrm{Tr}})$ is the true trajectory coordinate. The basic prediction process uses 10 historical time steps of real data to predict one future time step. To predict multiple future time steps, each predicted value is appended to the last 9 points of the previous 10-step window to form a new 10-step window, which serves as the input for the next prediction.
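The recursive multi-step procedure and the ADE metric can be sketched as follows. `predict_next` stands for any trained one-step model that maps a 10-point window to the next point; here a hypothetical constant-velocity predictor replaces the Att-BiLSTM so that the example runs without a trained network.

```python
import math


def rolling_forecast(history, predict_next, steps):
    """Recursive multi-step prediction with a fixed-length sliding window.

    history: the last observed (x, y) points (10 in the paper's setting);
    predict_next: one-step model mapping a window to the next point."""
    window = list(history)
    preds = []
    for _ in range(steps):
        nxt = predict_next(window)
        preds.append(nxt)
        # Keep the last len-1 points and append the new prediction.
        window = window[1:] + [nxt]
    return preds


def ade(pred, true):
    """Average displacement error: mean Euclidean distance over the N points."""
    total = sum(math.hypot(xp - xt, yp - yt) for (xp, yp), (xt, yt) in zip(pred, true))
    return total / len(pred)


def constant_velocity(window):
    # Hypothetical stand-in model: extrapolate the last displacement.
    (x1, y1), (x2, y2) = window[-2], window[-1]
    return (2 * x2 - x1, 2 * y2 - y1)


hist = [(float(t), 0.5 * t) for t in range(10)]
future = rolling_forecast(hist, constant_velocity, steps=3)
```

Because each prediction is fed back as input, errors compound over the horizon, which is consistent with the decline in accuracy at longer prediction time steps reported below.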

Table 1 reports the ADE for different prediction time steps. Under the same prediction time step, Att-BiLSTM outperforms the classical LSTM. As the prediction time step increases, prediction accuracy declines, but the predicted direction of the target vehicle’s trajectory remains accurate. In Fig. 8, prediction_1 predicts one time step into the future.

Table 1. Average displacement error (ADE) results.

Fig. 8. Comparison of different forecasting time series.

Table 2. Influence of different historical sequence lengths on the prediction results.

Table 2 shows how the length of the historical sequence affects the prediction results. A longer input history is not necessarily better for predicting future trajectories: if the history is too long, prediction accuracy may decrease, so an appropriate sequence length should be chosen when predicting the future trajectories of surrounding vehicles. Although the ADE of some settings is very small, the corresponding trajectory plots differ considerably, which suggests possible overfitting.

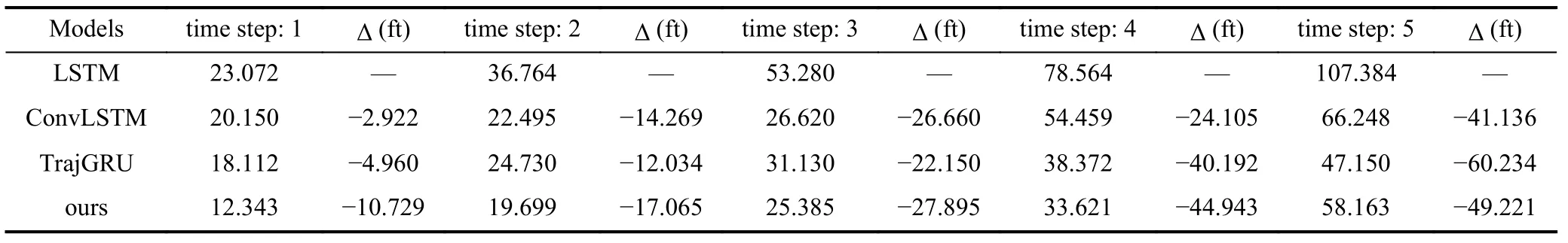

4.5 Experimental comparative analysis

Beyond the comparison with LSTM, we carried out a further comparative experiment against ConvLSTM and TrajGRU. The LSTM baseline stacks multiple LSTM units and connects them over time. ConvLSTM has convolutional structures in both the input-to-state and state-to-state transitions, and an encoding-forecasting structure is formed by stacking multiple ConvLSTM layers. It consists of two networks, an encoding network and a forecasting network, and the initial states of the forecasting network are copied from the last state of the encoding network [23]. TrajGRU uses the current input and the previous state to generate a local neighborhood set for each location at each timestamp, so that it learns the connection topology through the parameters of a subnetwork. The model inserts downsampling and upsampling layers between the RNN layers, implemented by strided convolution and deconvolution [24].

Both ConvLSTM and TrajGRU are classical algorithms for spatiotemporal sequence prediction, with good performance and strong adaptability. The TrajGRU study also proposed a comprehensive benchmark for assessing whether a new algorithm is state of the art (SOTA). Comparing against these models therefore highlights the advantages of the presented algorithm.

Table 3 lists the performance of different models, including ours, on the NGSIM dataset. For each model, we compute the mean square error between the predicted and true coordinates at each of the next 1 to 5 time steps, and we analyze the improvement of the other models relative to the LSTM baseline. Overall, our model predicts better than the other models under the same conditions.

Based on Table 3, Fig. 9 plots, for each model, the mean square error between the predicted and true results (vertical axis) against the future prediction time step (horizontal axis). LSTM performs worst. Our model works best when the prediction time step is small, while ConvLSTM and TrajGRU are comparable to each other. At a prediction time step of 3, the error of ConvLSTM approaches that of our model; at 4, the error of TrajGRU approaches ours; and at 5, TrajGRU is more accurate than our model. Nevertheless, our model’s total accuracy over the first five prediction steps is still higher than that of the other models. In short, at small prediction time steps our model combines the advantages of ConvLSTM and TrajGRU.

Table 3. Performance of different models.

Fig. 9. Mean square error between the predicted and true results for each model.

Fig. 10. Effect of increasing the prediction time step on the error of each model.

Fig. 10 shows more intuitively how increasing the prediction time step affects the error of each model. Increasing the prediction time step has little influence on LSTM and TrajGRU but some influence on ConvLSTM and our model. Specifically, the error of ConvLSTM fluctuates strongly as the prediction time step increases from 3 to 4, and the error of our model fluctuates slightly from 4 to 5. Nevertheless, our model retains a clear advantage in overall error.

5 Conclusions and future work

This work investigates the problem of predicting the behavior of surrounding vehicles. By introducing an attention mechanism into the encoder-decoder of a bidirectional long short-term memory network and weighting different states according to their importance during training, we address the problem that the encoder forgets information when the input sequence is too long. Detailed comparisons demonstrate the high accuracy of the method.

The method accounts for the different contributions that each time period of the input sequence makes to the final prediction result. However, issues such as complex environments and multi-vehicle interaction remain to be studied in future work.

Acknowledgements

This work was supported by the National Natural Science Foundation of China (U20A20225, U2013601), the Fundamental Research Funds for the Central Universities, the Natural Science Foundation of Hefei (2021032), the Key Research and Development Plan of Anhui Province (202004a05020058), the CAAI-Huawei MindSpore Open Fund, and the University of Science and Technology of China-NIO Intelligent Electric Vehicle Joint Project.

Conflict of interest

The authors declare that they have no conflict of interest.

Biographies

Yunqing Gao received his B.S. degree in Automation from the University of Science and Technology of China (USTC) in 2020. He is currently pursuing an M.S. degree in Control Engineering at USTC. His research interests include automatic control and trajectory prediction.

Hongbo Gao received his Ph.D. degree from Beihang University in 2016. He is currently an associate professor in the Department of Automation at the University of Science and Technology of China. He is the author or coauthor of over 40 journal papers and the co-holder of 10 patent applications. His research interests include unmanned system platforms, robotics, machine learning, decision support systems, and intelligent driving.
