Computer-Assisted Real-Time Rice Variety Learning Using Deep Learning Network

Pandia Rajan Jeyaraj, Siva Prakash Asokan, Edward Rajan Samuel Nadar

Experimental Technique


Owing to the inconsistency of rice varieties, the agricultural industry faces an important challenge in grading and classifying rice with the traditional grading system. The existing grading system is manual, and its reliance on visual inspection places stress and strain on human inspectors. Automated rice grading has been proposed as a promising research area in computer vision. In this study, an accurate, non-contact and cost-effective deep learning-based rice grading system was developed based on rice appearance and characteristics. The proposed system provides real-time processing using an NI-myRIO with a high-resolution camera and a user interface. First, we trained the network on a public rice dataset to extract discriminative rice features. Second, using transfer learning, the pre-trained network was used to locate the region of interest by extracting a feature map. The proposed deep learning model was tested on two public standard datasets and a prototype real-time scanning system. Using the AlexNet architecture, we obtained an average accuracy of 98.2% with 97.6% sensitivity and 96.4% specificity. To validate the real-time performance of the proposed rice grading classification system, various performance indices were calculated and compared with those of existing classifiers. Both simulation and real-time experimental evaluations confirmed the robustness and reliability of the proposed rice grading system.

deep learning algorithm; rice defect classification; computer vision; agriculture; automated visual grading system

The economies of many countries depend largely on the agricultural sector. For sustainable development, crop production and supply have increased exponentially (Ismail and Malik, 2022). Staple crops such as rice make a predominant contribution to the human food supply and to livelihoods (Hu et al, 2020). The growing demand for high-quality rice calls for advanced technology in agriculture. Intelligent, computer-assisted, artificial intelligence-based farming technology adopted by developing countries can deliver higher yield, effective agricultural management, accurate monitoring and reduced water use (Zareiforoush et al, 2016; Marimuthu and Roomi, 2017; Sun et al, 2020; Xiao et al, 2020; Su et al, 2021).

The primary objectives of implementing computer-assisted technology in the agricultural sector are to increase the yield of high-quality rice, reduce processing time and raise market prices (Shamim Hossain et al, 2019). Population and economic growth demand higher production and faster processing of agricultural crops, which an advanced, automated rice industry can provide. To achieve better farming and accurate paddy processing, agricultural methods and innovative research have together provided solutions for better automated farming and processing to meet global crop demand (Kucuk et al, 2016; Sun et al, 2017; Jeyaraj and Nadar, 2020a; Zeng and Li, 2020; Abinaya et al, 2021; Chen et al, 2021).

The paddy industry depends heavily on a large labour force, and grading paddy rice by physical inspection is a laborious process (Yang et al, 2021). The agricultural workforce is steadily decreasing, resulting in a labour shortage in the sector (Kaur et al, 2018). Paddy rice defects such as black spots, wrinkling and breakage must be identified in the rice industry. Implementing computer vision technology can provide accurate defect identification, increased productivity and good-quality rice for consumers (Alfred et al, 2021). Automated grading of paddy rice thus helps deliver good-quality, high-yielding rice to society. Hence, these three major defects should be addressed by advanced intelligent processing (Jo et al, 2020).

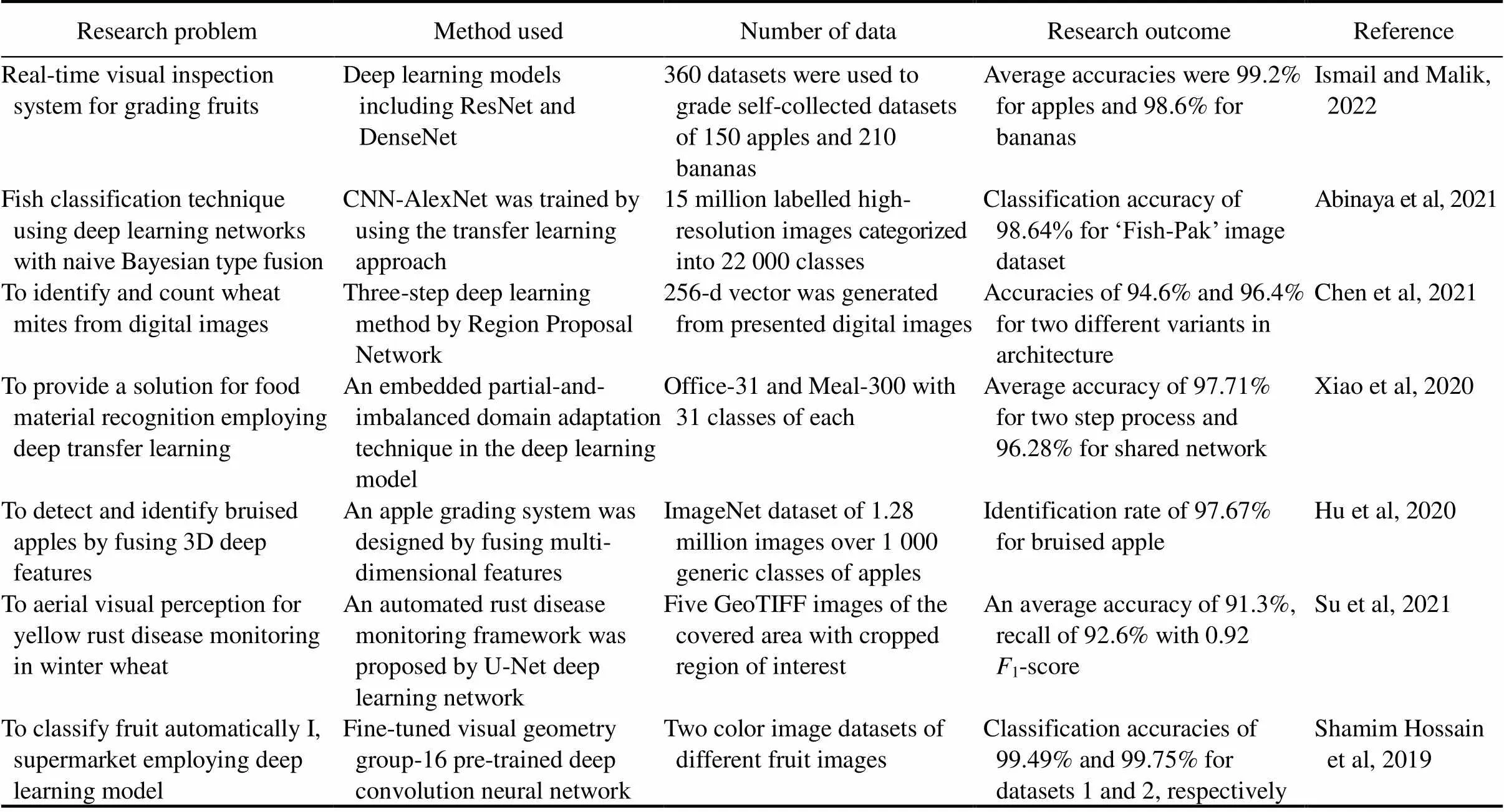

Table 1. Summary of literature reviews similar to rice defect classification.

CNN, Convolutional neural network.

In current practice, rice classification has not utilized any feature extraction algorithms. Guerrero et al (2017) used spatial and temporal features, such as texture and colour appearance descriptors, to detect crops. Recent advances in deep learning algorithms that extract deep features offer a possible solution. Applications such as plant disease classification (Zhang et al, 2020), weed detection (Jeyaraj and Nadar, 2019), production estimation (Kuo et al, 2016) and farmer assistance (Wu et al, 2019) have already emerged. Hence, we adopt a deep learning model for paddy rice defect identification and for the development of the grading industry. Table 1 summarizes notable studies related to rice defect classification, comparing each research problem with the method used to solve it and the research outcome.

Manual grading and quality control depend on trained human inspectors (Ren et al, 2017; Jeyaraj and Nadar, 2020b; Xu et al, 2021). In the study by Koklu et al (2021), the dataset comprised a total of 75 000 grain images, 15 000 from each of five rice varieties. Classification models were created using deep neural network (DNN) algorithms, and the reported classification accuracies were 99.87% for an artificial neural network, 99.95% for a DNN and 100% for a convolutional neural network (CNN). Automating the rice industry with deep learning models has improved grading accuracy, but real-time processing has not been considered (Nandi et al, 2016; Singh and Chaudhury, 2016; Yang et al, 2017; Mittal et al, 2019; Cao et al, 2021).

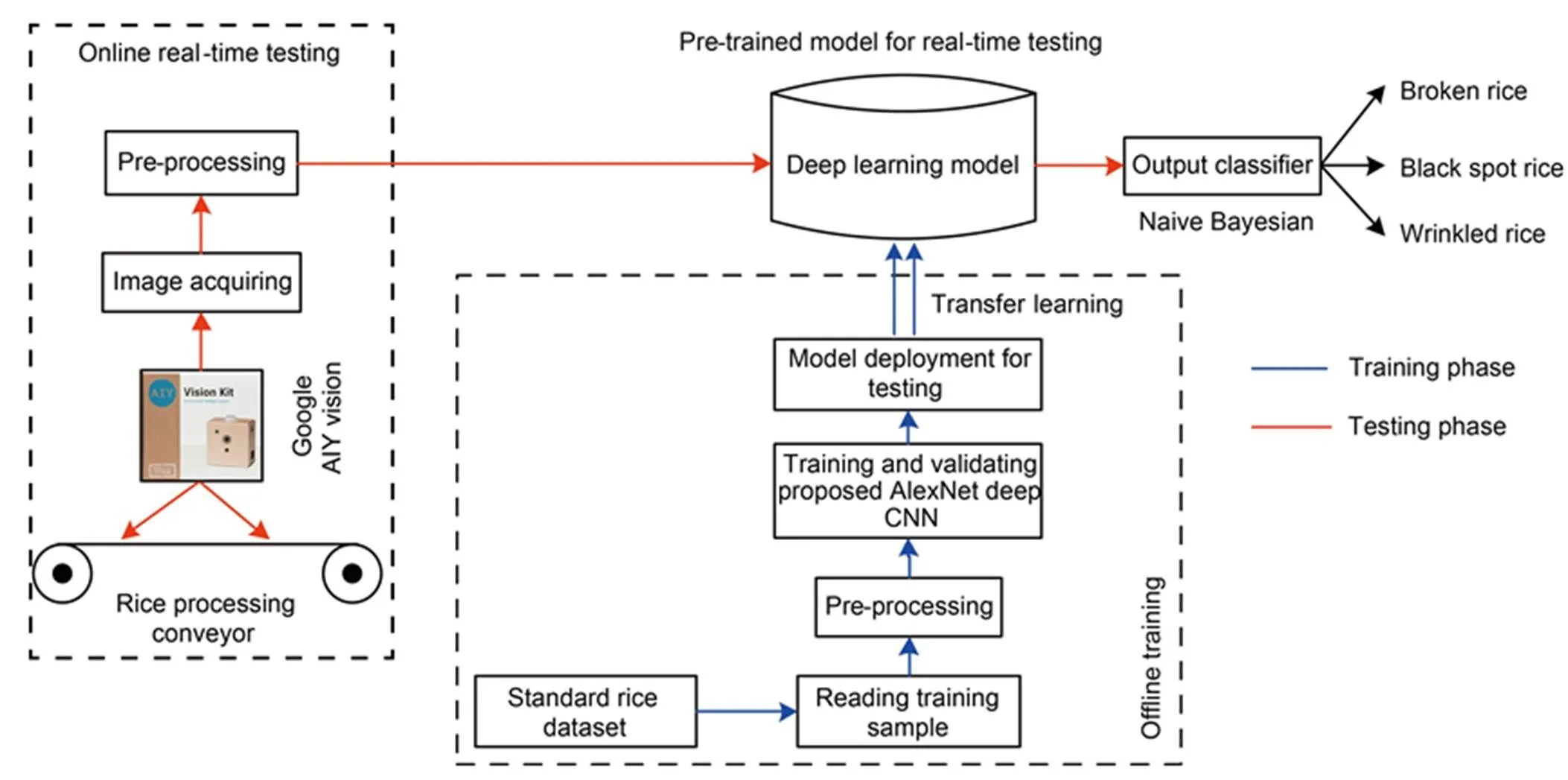

In this study, we developed an automated visual grading system for paddy rice quality control. The proposed deep learning model was designed using a cycle-consistent AlexNet architecture, which can extract distinctive high-level features. To validate the proposed deep learning model, its performance was compared with that of existing grading algorithms such as support vector machine (SVM), GoogleNet and ResNet-152. The proposed model learned features automatically rather than relying on handcrafted features, and it did not depend on the type of images used for training and testing. The overall block diagram of the proposed real-time rice quality analysis system is given in Fig. 1.

The main contribution of this study is as follows: we designed and developed a computer vision system for real-time identification and classification of paddy rice defects by visual inspection. To detect rice defects, we used an AlexNet-based CNN with the wide and deep learning capacity to detect highly discriminative features in rice. Because it is built on a low-cost Google AIY vision kit, the proposed system can be used in both offline and online (real-time) modes.

Fig. 1. Block diagram of proposed real-time rice defect classification system.

AIY, Artificial intelligence yourself; CNN, Convolutional neural network.

MATERIALS AND METHODS

Materials considered for proposed rice defect classification system design

We present a detailed description of the materials used to develop the proposed system and of the datasets used to train the AlexNet deep learning architecture with a novel transfer learning approach.

The proposed real-time rice defect classification system consisted of four main components: 1) a computer vision-enabled Google AIY vision camera, 2) a National Instruments (NI)-myRIO module for deep learning model implementation, 3) deployment of the proposed model by transfer learning with pre-training and testing, and 4) a user-end display for rice defect visualization. For the proposed system, several offline training runs were carried out on the rice dataset, followed by testing with the corresponding validation.

To develop the real-time rice classification system, the proposed deep learning model was trained and deployed by transfer learning using two standard datasets. The first is the Kaggle labelled rice dataset of Vietnam, which contains 107 orientations of 5 rice varieties; the second is the Data-world dataset of Asian species, consisting of 750 rice images of 4 major varieties in different orientations.

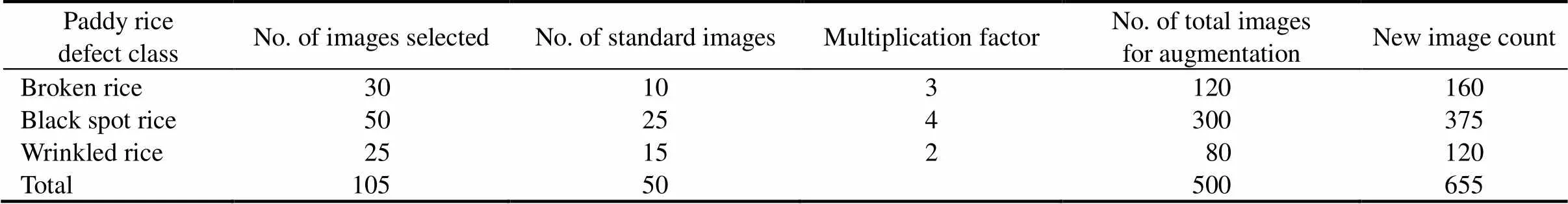

Table 2. Training image dataset in detailed description.

CASC, Comprehensive Automation for Speciality Crops.

For testing and validation, we used the Comprehensive Automation for Speciality Crops (CASC) rice dataset. The associated research identifies the major defects in rice as breakage, black spots due to starch reduction, and wrinkling due to bacterial infection. Each training image was clipped to 180 × 180 pixels, and each image taken at a different orientation was labelled with definite ground truth. Each dataset contained varying numbers of healthy and defective rice images. Table 2 shows the total numbers of healthy and defective rice images, together with the number of orientations used to collect the samples. The dataset was prone to defect class imbalance within each image category, and data augmentation was used to address this imbalance. Fig. 2 shows sample images from the standard Kaggle training dataset.
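The clipping step can be illustrated with a short Python sketch. It assumes the crop is taken from the centre of each image (the text states only the 180 × 180 target size) and represents an image as a plain list of pixel rows so that it runs without third-party libraries; `center_crop` is a hypothetical helper name.

```python
def center_crop(image, size=180):
    """Return the central size x size window of a 2-D image (list of rows).

    Hypothetical helper: centring the crop is an assumption, the text only
    gives the 180 x 180 output size.
    """
    height, width = len(image), len(image[0])
    if height < size or width < size:
        raise ValueError(f"image {height}x{width} is smaller than {size}x{size}")
    top = (height - size) // 2
    left = (width - size) // 2
    return [row[left:left + size] for row in image[top:top + size]]


# Toy 200 x 240 "image" whose pixel value records its (row, column) position.
toy = [[(r, c) for c in range(240)] for r in range(200)]
crop = center_crop(toy)
print(len(crop), len(crop[0]), crop[0][0])  # 180 180 (10, 30)
```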

Fig. 2. Sample image instances of Kaggle rice dataset.

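To improve the generalization of the proposed deep learning architecture, data augmentation was applied using random flipping, with transformation parameters drawn from a probability distribution. From a group of paddy images $P$, a particular class of paddy images $C_i$ was selected. The number of images in each class, $N(C_i)$, was compared with the standard target number of images, $N_T$, to determine a multiplication factor (MF). The MF determines how many images are reproduced within the same defect class, each carrying the corresponding class label.

Let the rotation angle $\theta$ of an image $I(C_i)$ and the image translation vector $\mathbf{t}$ be predefined by the probability distribution of the same defect class, as given by:

$$P = \{C_1, C_2, C_3, \dots, C_n\} \tag{1}$$

$$\mathrm{MF} = \left\{ \mathrm{MF}_i = \left| 1 - \frac{N(C_i)}{N_T} \right|, \; i \in (1, 2, 3, \dots, n) \right\} \tag{2}$$

$$A(C_i) = \left\{ \mathcal{T}\big(I(C_i), \theta, \mathbf{t}\big) \right\} \tag{3}$$

where $\mathcal{T}$ applies the rotation $\theta$ and the translation $\mathbf{t}$ to image $I(C_i)$, and $A(C_i)$ is the resulting set of augmented images for class $C_i$.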

In the proposed feature extraction method, we used a standard target of 50 images per paddy rice defect class. Table 3 lists the total number of augmented images for each defect together with the standard training image counts. The new image dataset obtained by data augmentation was then used to train the proposed deep learning network.
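The multiplication factor of Equation 2 and the flip, rotation and translation augmentation can be sketched as follows. This is an illustrative Python sketch under stated assumptions, not the authors' code: images are lists of pixel rows, rotations are restricted to multiples of 90° and translations to one pixel, and the number of extra images per class is assumed to be MF × N_T = |N_T − N(C_i)|, where N(C_i) is the class size and N_T the standard target of 50 images. All function names are hypothetical.

```python
import random


def multiplication_factor(n_class: int, n_target: int = 50) -> float:
    """MF_i = |1 - N(C_i) / N_T| (Equation 2)."""
    return abs(1 - n_class / n_target)


def images_to_generate(n_class: int, n_target: int = 50) -> int:
    # Assumption: MF scaled by N_T is read as the number of extra images a
    # class needs, i.e. |N_T - N(C_i)|; only under-represented classes grow.
    if n_class >= n_target:
        return 0
    return round(multiplication_factor(n_class, n_target) * n_target)


def rotate90(img):
    """Rotate a 2-D list of pixels by 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def translate(img, dx, dy, fill=0):
    """Shift pixels right by dx and down by dy, filling vacated pixels."""
    h, w = len(img), len(img[0])
    out = [[fill] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            sx, sy = x - dx, y - dy
            if 0 <= sx < w and 0 <= sy < h:
                out[y][x] = img[sy][sx]
    return out


def augment(img, rng):
    """Random horizontal flip, rotation by a multiple of 90 deg, 1-pixel shift."""
    if rng.random() < 0.5:
        img = [row[::-1] for row in img]
    for _ in range(rng.randrange(4)):
        img = rotate90(img)
    return translate(img, rng.randint(-1, 1), rng.randint(-1, 1))


def balance_class(images, n_target=50, seed=0):
    """Top a defect class up to n_target images with augmented copies."""
    rng = random.Random(seed)
    extra = images_to_generate(len(images), n_target)
    return images + [augment(rng.choice(images), rng) for _ in range(extra)]


broken = [[[i, 0, 0], [0, 1, 0], [0, 0, 1]] for i in range(20)]  # 20 toy 3x3 images
print(round(multiplication_factor(20), 2), images_to_generate(20))  # 0.6 30
print(len(balance_class(broken)))  # 50
```

Seeding the generator keeps the augmented set reproducible between training runs.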

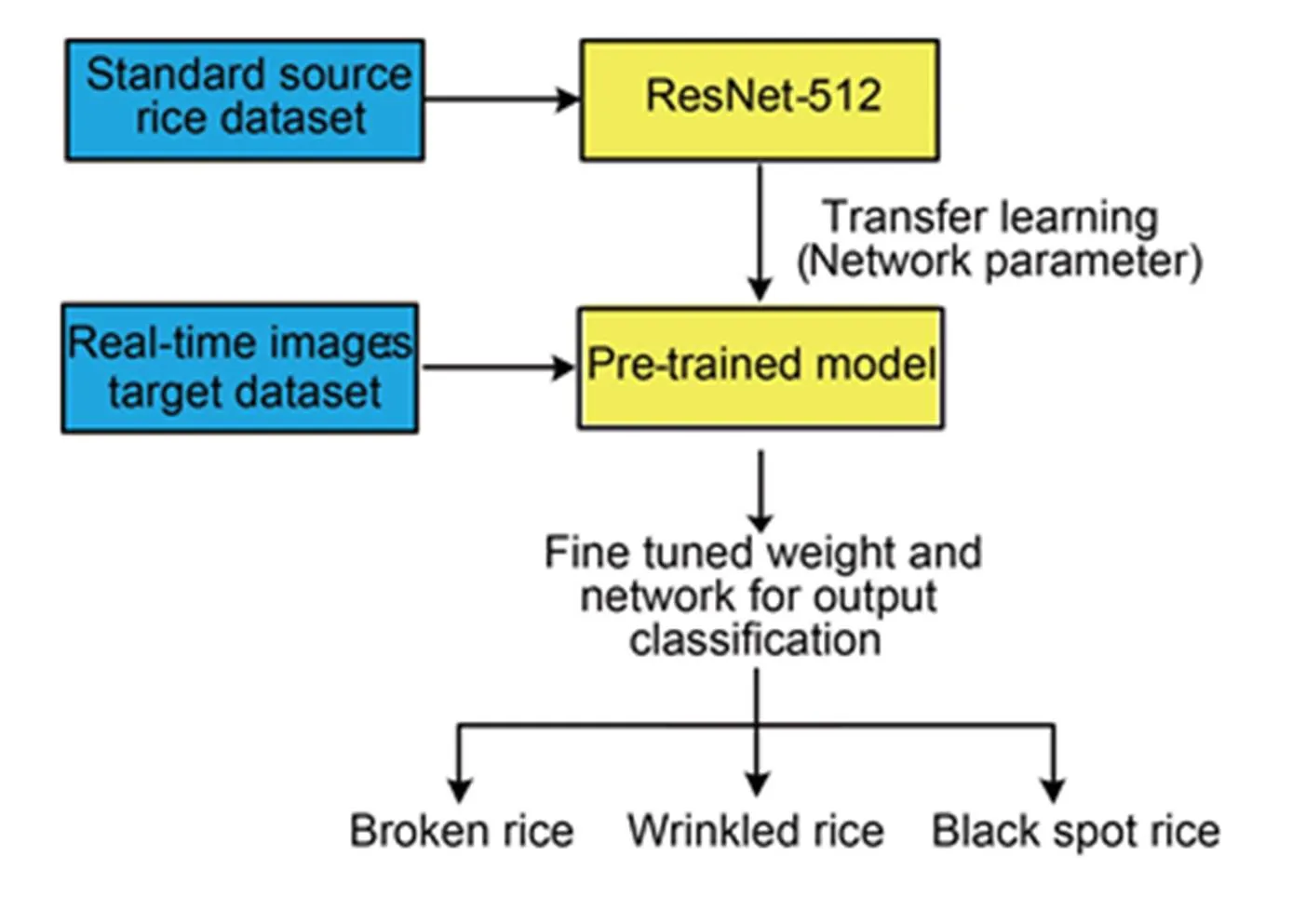

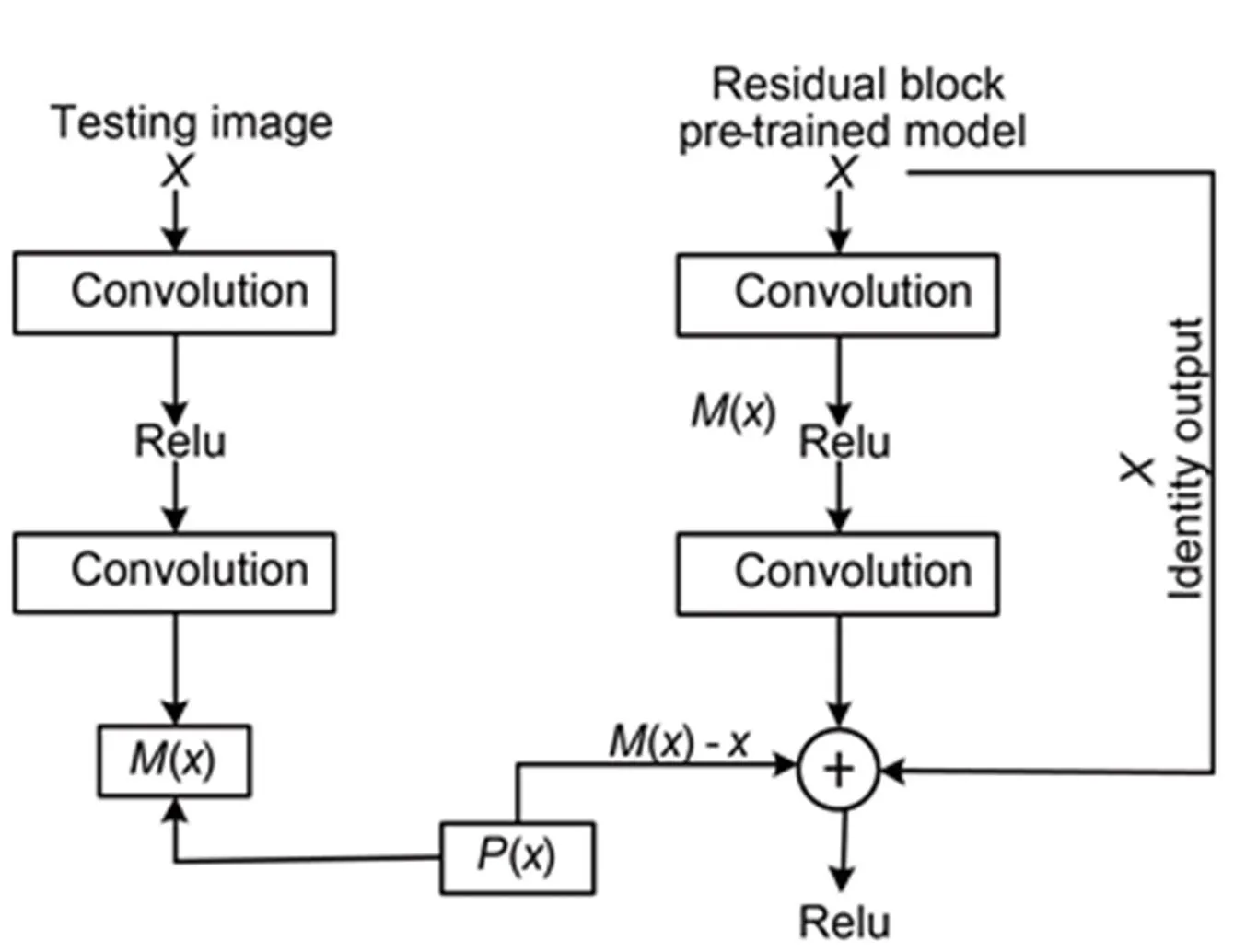

Fig. 3. Proposed transfer learning and deep learning model for pre-training.

Proposed method

To detect and recognize paddy rice defects, it is important to extract discriminative features from rice images. Pre-training for deep feature extraction and fine-tuning are key aspects of the proposed method, and both are achieved by applying transfer learning strategies in the network pre-training process.

Table 3. Paddy rice defect dataset image augmentation in detail.

Data augmentation was used to reduce the need for a large set of training images. ResNet-512 was fine-tuned on the generalized Kaggle paddy rice dataset, and data augmentation reduced the amount of data needed for this training. The pre-training process using the transfer learning strategy is shown in Fig. 3. Fine-tuning in the transfer learning process was completed by fixing the weights of the other layers for rice defect classification.

Residual units avoid the network degradation problem caused by stacking many learning layers in the proposed deep learning architecture. The learning depth determines the features extracted by each processing layer for detecting rice defects in the presented dataset, and feature extraction capacity increases with the number of stacked residual layers. For feature extraction from the rice dataset, we used a 512-layer residual network, i.e., ResNet-512, to extract dominant features and increase real-time defect detection accuracy. The residual unit model for pre-training is illustrated in Fig. 3.

Each residual function is expressed as:

$$\mathcal{F}(\mathbf{x}) = \mathcal{H}(\mathbf{x}) - \mathbf{x} \tag{4}$$

where $\mathcal{F}(\mathbf{x})$ denotes the residual, $\mathcal{H}(\mathbf{x})$ is the underlying mapping whose weights are updated in the pre-training process, and $\mathbf{x}$ denotes the input images. Equation 4 was used for residual learning. Fig. 4 illustrates the features extracted by the proposed ResNet-512 learning. During transfer learning, uninformative features were suppressed by the residual function and by a squeeze-and-excitation (SE) function. To detect marginal differences between rice defects, the SE function was embedded in ResNet-512 for high-quality feature extraction and transfer learning.

Fig. 4. Concept of proposed model pre-training for feature extraction by ResNet-512 (residual learning).

In Fig. 4, the symbols denote the paddy image, the residual, the number of input images and the vector form of the input images.
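To make Equation 4 and the SE recalibration concrete, here is a minimal pure-Python sketch of one SE-residual unit. It assumes the standard squeeze-and-excitation design (global average pooling, a two-layer fully connected bottleneck with ReLU and sigmoid, then channel-wise scaling) applied to the residual branch before the identity shortcut is added, so the output is H(x) = F(x) + x. The branch function `halve` and the weights are hypothetical stand-ins for trained convolution layers, not the ResNet-512 used in the paper.

```python
import math


def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))


def matvec(W, v):
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def squeeze_excite(channels, W1, W2):
    """SE block: channels is a list of C feature maps (H x W lists).
    W1 is (C/r) x C and W2 is C x (C/r); r is the reduction ratio."""
    # Squeeze: global average pooling gives one descriptor per channel.
    s = [sum(map(sum, fm)) / (len(fm) * len(fm[0])) for fm in channels]
    # Excitation: FC -> ReLU -> FC -> sigmoid gives a gate in (0, 1) per channel.
    hidden = [max(0.0, a) for a in matvec(W1, s)]
    gates = [sigmoid(a) for a in matvec(W2, hidden)]
    # Scale: reweight every pixel of a channel by that channel's gate.
    scaled = [[[g * p for p in row] for row in fm] for g, fm in zip(gates, channels)]
    return scaled, gates


def se_residual_unit(x, branch, W1, W2):
    """H(x) = F(x) + x, where F is the residual branch recalibrated by SE."""
    fx, _ = squeeze_excite(branch(x), W1, W2)
    return [[[f + a for f, a in zip(fr, xr)] for fr, xr in zip(fc, xc)]
            for fc, xc in zip(fx, x)]


def halve(t):
    """Hypothetical stand-in for the convolution layers of the residual branch."""
    return [[[0.5 * p for p in row] for row in ch] for ch in t]


x = [[[1.0, 1.0], [1.0, 1.0]], [[2.0, 2.0], [2.0, 2.0]]]  # 2 channels, 2x2 each
W1, W2 = [[1.0, 1.0]], [[1.0], [-1.0]]                   # reduction ratio r = 2
y = se_residual_unit(x, halve, W1, W2)
```

With a residual branch that outputs zeros, the unit reduces to the identity mapping, which is why stacking such units does not degrade a deep network.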

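Because of data imbalance, a re-weighting scheme was used to improve the loss function. The updated loss function $CB(\mathbf{p}, y)$ is given by Equation 5:

$$CB(\mathbf{p}, y) = \frac{1}{E_{n_y}} \, \mathcal{L}(\mathbf{p}, y) = \frac{1 - \beta}{1 - \beta^{n_y}} \, \mathcal{L}(\mathbf{p}, y) \tag{5}$$

where $\beta \in [0, 1)$ is a hyperparameter, $n_y$ is the number of samples in the ground-truth class $y$, $E_{n_y}$ is the effective number of samples of that class, and $\mathcal{L}(\mathbf{p}, y)$ is the loss for prediction $\mathbf{p}$. The weighting term $1/E_{n_y}$ adjusts for class imbalance in the image dataset. A class-balanced focal loss adds a modulating factor that reduces the relative loss of well-classified samples and focuses training on difficult data points, as given by Equation 6:

where $CB_{\mathrm{focal}}(Z, y)$ is the focal balanced loss and $Z$ is the target-domain prediction.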

The sigmoidal modulating factor is

$$\sigma(Z) = \frac{1}{1 + \exp(-Z)} \tag{7}$$

where the model output is $Z = (z_1, z_2, z_3, \dots, z_n)$ for $n$ classes of rice data.
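As an illustration of Equations 5 to 7, the sketch below assumes the widely used class-balanced sigmoid focal loss: the effective-number weight of Equation 5 multiplies a per-class sigmoid (Equation 7) focal term, the sum over classes of −(1 − p_i)^γ log p_i, where p_i is the probability of the correct one-vs-rest decision for class i. The values β = 0.999 and γ = 2 and the class counts are assumptions for illustration; the authors' exact Equation 6 may differ.

```python
import math


def stable_sigmoid(z: float) -> float:
    """Equation 7, sigma(z) = 1 / (1 + exp(-z)), written to avoid overflow."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def class_balance_weight(n_y: int, beta: float) -> float:
    """1 / E_{n_y} = (1 - beta) / (1 - beta ** n_y) from Equation 5."""
    return (1.0 - beta) / (1.0 - beta ** n_y)


def cb_focal_loss(logits, y, class_counts, beta=0.999, gamma=2.0):
    """Class-balanced sigmoid focal loss for one image with true class y."""
    loss = 0.0
    for i, z in enumerate(logits):
        # Probability of the correct one-vs-rest decision for class i.
        p_t = stable_sigmoid(z if i == y else -z)
        # (1 - p_t) ** gamma down-weights easy, confidently correct cases.
        loss += -((1.0 - p_t) ** gamma) * math.log(p_t)
    return class_balance_weight(class_counts[y], beta) * loss


counts = [400, 120, 60, 30]  # hypothetical images per class: healthy, broken, black spot, wrinkled
print(cb_focal_loss([3.0, -2.0, -1.5, -2.5], y=0, class_counts=counts))
print(cb_focal_loss([-1.0, -2.0, -1.5, 0.5], y=3, class_counts=counts))
```

A rare class (small n_y) receives a larger weight, and the (1 − p)^γ factor shrinks the loss of confidently correct predictions, so training concentrates on hard, under-represented defects.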

In the proposed real-time rice defect classification system, a pre-trained, fully connected AlexNet was used. Using a novel transfer learning approach, AlexNet captures the variation in the real-time acquired images. This AlexNet had already been pre-trained on the Kaggle-labelled image dataset with ResNet-512 feature extraction, which makes the proposed deep learning algorithm an efficient feature extractor. The proposed architecture is also easy to customize, computationally simple and robust owing to transfer learning.

The proposed pre-trained AlexNet consists of seven convolution and five pooling layers, together with two fully connected layers and a SoftMax output classification layer. Fig. 5 shows the proposed deep learning network for real-time rice defect classification. Real-time rice images are first labelled and compressed to 228 × 228 × 4 pixels for processing by the pre-trained AlexNet. Each input image is convolved with 18 filters of 12 × 12 × 4 pixels with a stride of 5, which transforms the input into a 48 × 48 × 78 pixel feature map. A max-pooling operation follows the convolution, using 56 filters of 2 × 2 × 12 size with a stride of 3.
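Layer sizes of this kind follow the usual rule for a convolution or pooling window of size K with stride S and padding P on a W-pixel input: floor((W − K + 2P)/S) + 1, while the depth of a convolution output equals the number of filters. The helper below is a minimal sketch of that rule; as a check it reproduces the first stage of the classic AlexNet (227 × 227 input, 96 filters of 11 × 11 with stride 4, giving 55 × 55 × 96, then 3 × 3 pooling with stride 2), not the modified network described here.

```python
def conv_output_size(size: int, kernel: int, stride: int, padding: int = 0) -> int:
    """Spatial size after a convolution or pooling window: floor((W - K + 2P) / S) + 1."""
    if stride < 1 or kernel > size + 2 * padding:
        raise ValueError("kernel does not fit the (padded) input or stride < 1")
    return (size - kernel + 2 * padding) // stride + 1


# Classic AlexNet first stage: 227 x 227 input, 11 x 11 kernels with stride 4,
# then 3 x 3 max pooling with stride 2.
conv1 = conv_output_size(227, kernel=11, stride=4)
pool1 = conv_output_size(conv1, kernel=3, stride=2)
print(conv1, pool1)  # 55 27
```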

Pooling identifies the dominant (maximum) pixel within each window and thereby retains the most discriminative feature. This pooling operation enables the proposed AlexNet to detect highly discriminative rice features learned from the training dataset. The convolution and max-pooling operations reduce computational complexity and overfitting, and the convolution layers reduce the image dimension to 4 × 4 × 3. A further convolution layer reduces the images to provide a feature map for classification by the fully connected layer. Using the features extracted from the presented real-time input image, the fully connected layer decides the output defect.
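Max pooling as described above can be sketched in a few lines of Python. This is a generic illustration on a single-channel feature map stored as a list of rows; the window and stride values are example choices rather than the paper's settings.

```python
def max_pool2d(image, window=2, stride=2):
    """Keep the maximum (dominant) pixel of each window x window patch."""
    h, w = len(image), len(image[0])
    return [
        [max(image[y + dy][x + dx] for dy in range(window) for dx in range(window))
         for x in range(0, w - window + 1, stride)]
        for y in range(0, h - window + 1, stride)
    ]


feature_map = [[1, 3, 2, 0],
               [4, 2, 1, 5],
               [0, 1, 7, 2],
               [3, 2, 1, 1]]
print(max_pool2d(feature_map))  # [[4, 5], [3, 7]]
```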

Fig. 5. Architecture of proposed deep learning network with Naive Bayesian layer.

Transfer learning keeps the trained network unaltered by the presented input images, which provides a robust architecture for testing and validation. The confidence score indicates the output class membership and is normalized by the SoftMax layer. The SoftMax layer assigns the decision to the highest-probability class, which is obtained by a Naive Bayesian classifier. Each pre-trained network performs multiple feature fusion, and each multiclass segment is computed in parallel. In this real-time defect detection system, the Naive Bayesian classifier was used to fuse the confidence scores computed by the pre-trained AlexNet architecture. The Naive Bayesian layer increases the confidence score of each image by encapsulating it in a probability distribution.

The probability distribution is given by Equation 8:

$$P(R \mid C) = \frac{P(C \mid R)\, P(R)}{P(C)} \tag{8}$$

Here, $P(C \mid R)$ is the likelihood of the evidence given the rice defect class, $P(R)$ is the prior probability of the rice defect, $P(C)$ is the evidence, and $P(R \mid C)$ is the posterior probability of the rice defect, which is generated by the Naive Bayesian classifier. The prior probability $P(R)$ and the evidence parameter $P(C)$ are computed for each presented image. Hence, the posterior probability $P(R \mid C)$ gives a confidence score for rice defect classification.
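One way to read the Naive Bayesian fusion step is to apply Equation 8 to several SoftMax outputs under a conditional-independence assumption. The sketch below is that interpretation, not the authors' implementation: each model's SoftMax vector is taken as P(R | C_j) for its evidence C_j, Equation 8 turns it into a likelihood proportional to P(R | C_j)/P(R), and the fused posterior is the normalised product. The uniform prior and the logits are hypothetical.

```python
import math


def softmax(logits):
    """Normalise raw scores into class probabilities (the SoftMax layer)."""
    m = max(logits)  # subtracting the max avoids overflow in exp
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def naive_bayes_fusion(posteriors, prior):
    """Fuse per-model class posteriors P(R | C_j) with Equation 8.

    Assuming the evidences C_1..C_k are conditionally independent given R,
    P(C_j | R) is proportional to P(R | C_j) / P(R), so
    P(R | C_1..C_k) is proportional to prod_j P(R | C_j) / P(R) ** (k - 1).
    """
    k = len(posteriors)
    unnormalised = []
    for r, p_r in enumerate(prior):
        product = 1.0
        for q in posteriors:
            product *= q[r]
        unnormalised.append(product / p_r ** (k - 1))
    evidence = sum(unnormalised)  # plays the role of P(C) in Equation 8
    return [u / evidence for u in unnormalised]


uniform = [1 / 3] * 3  # hypothetical prior over three defect classes
fused = naive_bayes_fusion([softmax([2.0, 0.5, 0.1]), softmax([1.5, 1.0, -0.5])], uniform)
print([round(p, 3) for p in fused])
```

When the individual models agree, the fused posterior is sharper than either input; with a single model it returns that model's distribution unchanged.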

In this study, the network learning rate was set to 0.0075. The focal balanced loss function (Equation 5) was used to adjust the network learning rate, and the learning rate was updated dynamically with the dataset index. During training, the learning rate was gradually reduced by 10%‒15% per epoch to set the final weights and biases for each epoch. For each training dataset, 25 epochs were run with a momentum of 0.85. For the experimental simulations, the prototype computer configuration consisted of a 4.2 GHz CPU with 16 GB memory and a 64-bit Windows 10.1 operating system, with an inbuilt NVIDIA GTx 1100xi processor running TensorFlow.
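The training schedule above (initial learning rate 0.0075, 25 epochs, momentum 0.85, a 10% to 15% reduction per epoch) can be sketched with a per-epoch step decay and the classical momentum update. The 10% decay value and the single update per epoch in the toy loop are assumptions; in practice a framework optimizer, such as TensorFlow's SGD with momentum, would replace this hand-written version.

```python
def step_decay_schedule(initial_lr=0.0075, decay=0.10, epochs=25):
    """Learning rate per epoch, multiplied by (1 - decay) after every epoch.
    The text gives a 10%-15% reduction; 10% is an assumed value here."""
    return [initial_lr * (1.0 - decay) ** epoch for epoch in range(epochs)]


def sgd_momentum_step(weights, grads, velocity, lr, momentum=0.85):
    """Classical momentum update: v <- momentum * v - lr * g, then w <- w + v."""
    velocity = [momentum * v - lr * g for v, g in zip(velocity, grads)]
    weights = [w + v for w, v in zip(weights, velocity)]
    return weights, velocity


schedule = step_decay_schedule()
w, v = [1.0], [0.0]
for lr in schedule:  # toy: one update per epoch on the loss f(w) = w ** 2
    w, v = sgd_momentum_step(w, [2.0 * w[0]], v, lr)
print(len(schedule), schedule[0], round(schedule[-1], 6))
```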

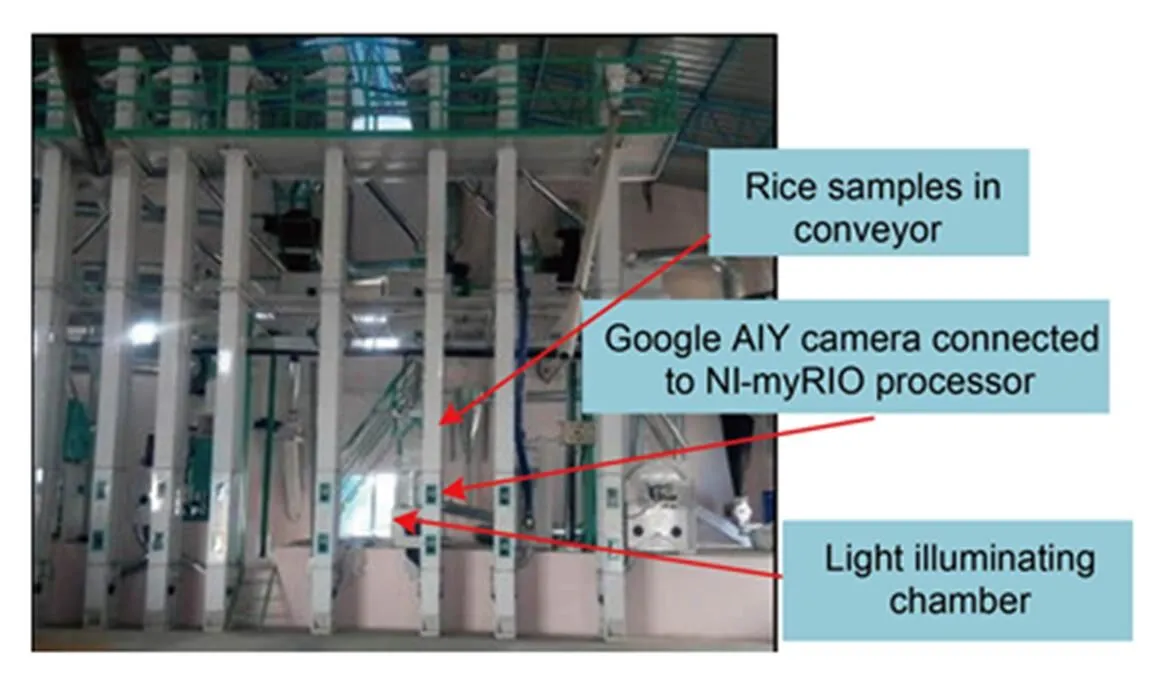

Fig. 6. Proposed real-time automated rice defect monitoring system: Prototype Model.

AIY, Artificial intelligence yourself.

Proposed real-time prototype model

Using the pre-trained deep learning model, a prototype was built for real-time testing. The prototype hardware consists of an NI-myRIO processor with a Google AIY vision module embedded at the front end to acquire real-time images. The NI-myRIO processor is connected to a high-resolution Logitech camera and enclosed by a bonnet with a highly uniform illuminating surface to provide accurate vision during scanning. The controlled lighting gives the system a uniform visual effect and light distribution. For output visualization, a touch screen is connected to the NI-myRIO digital pins. Fig. 6 shows the proposed real-time prototype system for automated rice defect monitoring.

RESULTS AND DISCUSSION

The classification performance of the proposed real-time automated system was verified using the Kaggle Vietnam rice dataset and the Data-world Asian dataset. For performance validation, different broken rice sample images were considered, and 150 images of broken rice were used in this study. To validate performance under unbalanced class variation, different rice samples from the Data-world Asian dataset were used, so that testing was carried out with unequal numbers of images per class.

In image augmentation, both rotational and translational variations were considered for images in the dataset. Image augmentation was used to validate defect classification accuracy by comparison with other conventional methods. The proposed deep learning classifier was validated using the confusion matrix, which was computed for the rice defect dataset in both the pre-training and testing phases for the standard and real-time datasets. Fig. 7 shows the confusion matrices for the training and testing rice image datasets, respectively. In each confusion matrix, rows correspond to target values and columns to the final predicted outputs.
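The confusion-matrix layout described above, with target classes along rows and predicted classes along columns, can be computed with a short Python sketch. The four class names and the label lists are hypothetical examples.

```python
def confusion_matrix(y_true, y_pred, classes):
    """Rows are target (true) classes, columns are predicted classes."""
    index = {c: i for i, c in enumerate(classes)}
    matrix = [[0] * len(classes) for _ in classes]
    for target, predicted in zip(y_true, y_pred):
        matrix[index[target]][index[predicted]] += 1
    return matrix


classes = ["healthy", "broken", "black_spot", "wrinkled"]
y_true = ["healthy", "broken", "broken", "black_spot", "wrinkled", "healthy"]
y_pred = ["healthy", "broken", "healthy", "black_spot", "wrinkled", "healthy"]
for name, row in zip(classes, confusion_matrix(y_true, y_pred, classes)):
    print(f"{name:>10}", row)
```

The diagonal holds correct predictions, so one misclassified broken grain shows up as the off-diagonal 1 in the "broken" row.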

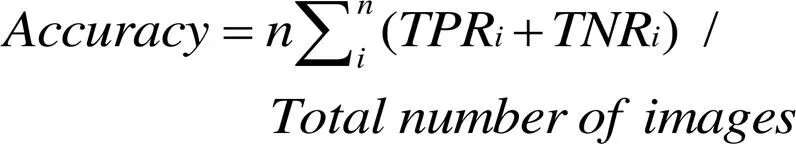

Performance index

The performance of the proposed deep AlexNet was evaluated in two phases. First, the pre-trained model was tested offline on the standard Kaggle Vietnam and Data-world Asian datasets using the TensorFlow package. Real-time performance was then validated on the developed prototype model. Ten-fold cross-validation was used to evaluate broken, wrinkled and black spot rice.
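A minimal sketch of ten-fold cross-validation index generation is shown below. It uses plain shuffled k-fold splitting; whether the authors stratified the folds by defect class is not stated, so that choice is left open here. The 150-image example simply reuses the number of broken-rice images given in the text.

```python
import random


def k_fold_splits(n_samples, k=10, seed=0):
    """Yield (train_indices, test_indices) for k-fold cross-validation.
    Every sample appears in exactly one test fold."""
    order = list(range(n_samples))
    random.Random(seed).shuffle(order)
    folds = [order[i::k] for i in range(k)]
    for i in range(k):
        train = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train, folds[i]


splits = list(k_fold_splits(150, k=10))
print([len(test) for _, test in splits])
```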

For validation, performance indices including accuracy, sensitivity (true positive rate, TPR), specificity (true negative rate, TNR), area under the receiver operating characteristic (ROC) curve, and F1-score were used. Sensitivity measures the ability of the proposed AlexNet to correctly detect all positive (defective) instances in the presented image set, whereas specificity measures the ability of the classifier to correctly identify negative instances. The parameters used to evaluate the performance are given in Equations 9‒12.

$$F_1 = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} \tag{12}$$

The equations also use the number of class labels, and FNR and FPR refer to the false negative rate and false positive rate, respectively.
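The following sketch computes the standard definitions of these indices from binary confusion-matrix counts (TP, FP, TN, FN): sensitivity = TP/(TP + FN), specificity = TN/(TN + FP), precision = TP/(TP + FP), and F1 as in Equation 12. The counts in the example are hypothetical; with several classes, such indices are typically computed per class and then averaged, and the authors' exact averaging in Equations 9 to 11 is not assumed here.

```python
def classification_metrics(tp, fp, tn, fn):
    """Standard performance indices from binary confusion-matrix counts."""
    sensitivity = tp / (tp + fn)  # true positive rate (recall)
    specificity = tn / (tn + fp)  # true negative rate
    precision = tp / (tp + fp)
    return {
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
        "sensitivity": sensitivity,
        "specificity": specificity,
        "fnr": 1.0 - sensitivity,  # false negative rate
        "fpr": 1.0 - specificity,  # false positive rate
        "precision": precision,
        "f1": 2 * precision * sensitivity / (precision + sensitivity),  # Equation 12
    }


metrics = classification_metrics(tp=90, fp=10, tn=80, fn=20)  # hypothetical counts
print({name: round(value, 3) for name, value in metrics.items()})
```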

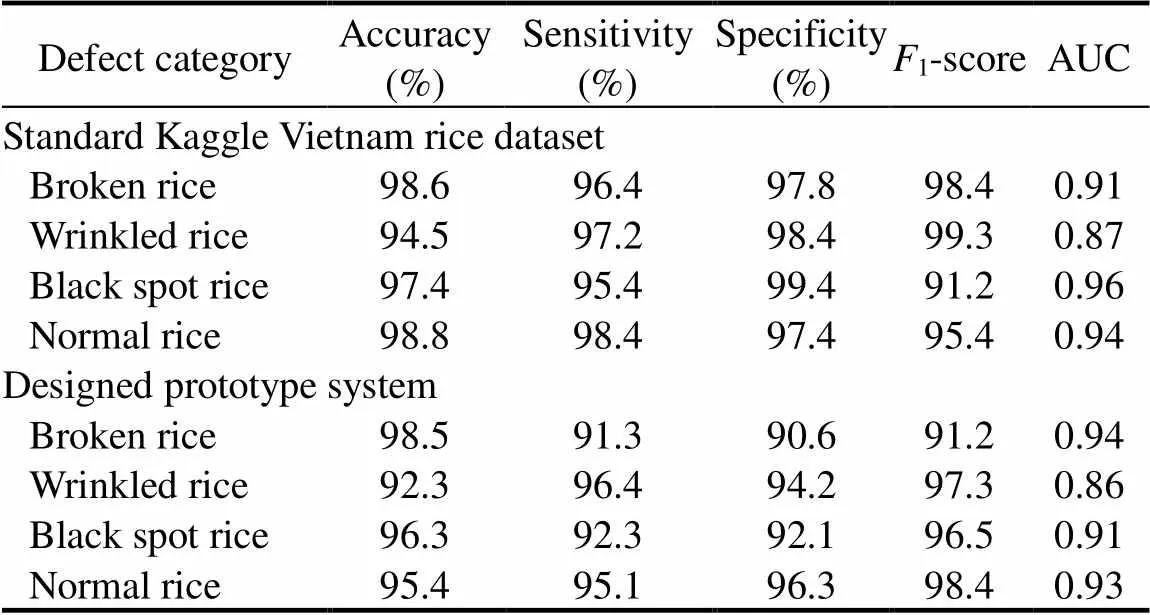

Table 4. Experimental results on the Kaggle Vietnam rice training dataset and on real-time images acquired with the designed prototype system.

AUC, Area under curve.

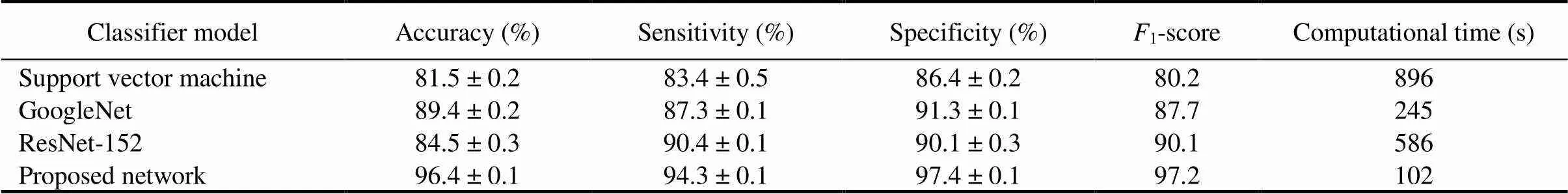

Table 4 shows the performance indices obtained by applying the proposed deep AlexNet model to the standard Kaggle Vietnam dataset and the acquired real-time dataset. The accuracy in detecting broken rice defects was 98.5% with the designed prototype system, higher than that of the ResNet-152 classifier. Table 5 compares the performance of the proposed network with that of other classifiers such as SVM, GoogleNet and ResNet-152. The computational time of the proposed network was shorter than that of the other classifiers.

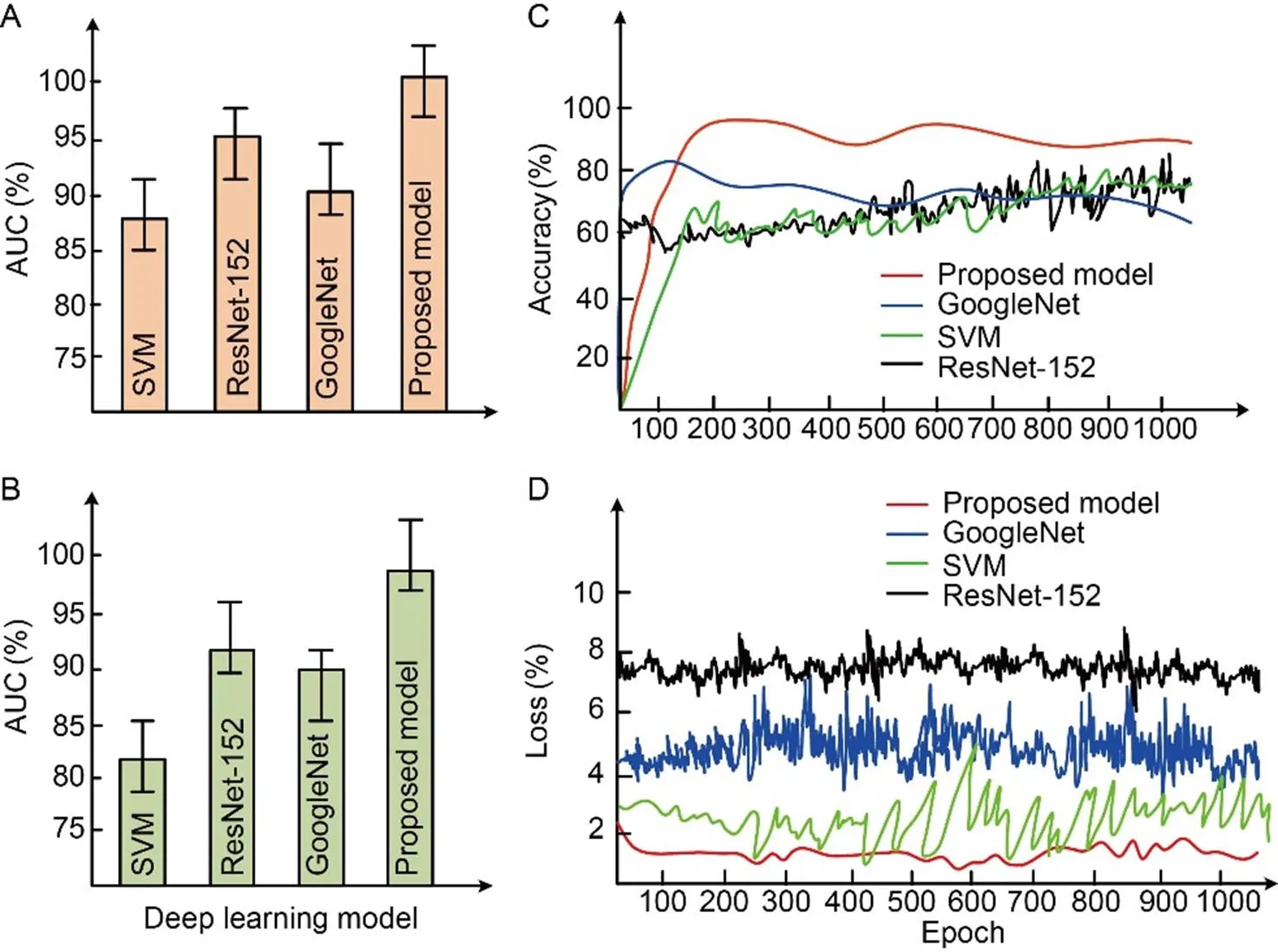

Overall, the proposed AlexNet achieved better accuracy in classifying real-time images, which we attribute to the novel transfer learning-based pre-training. The specificity of the proposed AlexNet deep CNN model was 97.4%, and this high specificity supports the effectiveness of the learned prior features and the abstract feature extraction of the proposed network. Fig. 8-A shows the area under curve (AUC) bar plot for the training dataset, and Fig. 8-B shows the AUC for the real-time dataset obtained with the prototype model. The pre-trained model showed no confusion in detecting wrinkled rice across different orientations. A comparison of ROC values shows that the proposed AlexNet deep CNN classifier was more robust than the GoogleNet architecture for wrinkle and black spot defects on the rice surface.
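AUC values like those compared in Fig. 8 can be computed without tracing the ROC curve explicitly, because the AUC equals the probability that a randomly chosen positive sample receives a higher score than a randomly chosen negative one. The quadratic-time sketch below uses that equivalence; the labels and scores are hypothetical, and a library routine would be preferable for large datasets.

```python
def roc_auc(labels, scores):
    """Area under the ROC curve via the rank (Mann-Whitney) formulation:
    the probability that a random positive scores above a random negative,
    counting ties as one half. Labels are 1 (positive) or 0 (negative)."""
    pos = [s for label, s in zip(labels, scores) if label == 1]
    neg = [s for label, s in zip(labels, scores) if label == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative sample")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


print(roc_auc([1, 1, 0, 0], [0.9, 0.4, 0.6, 0.1]))  # 0.75
```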

Results after augmentation

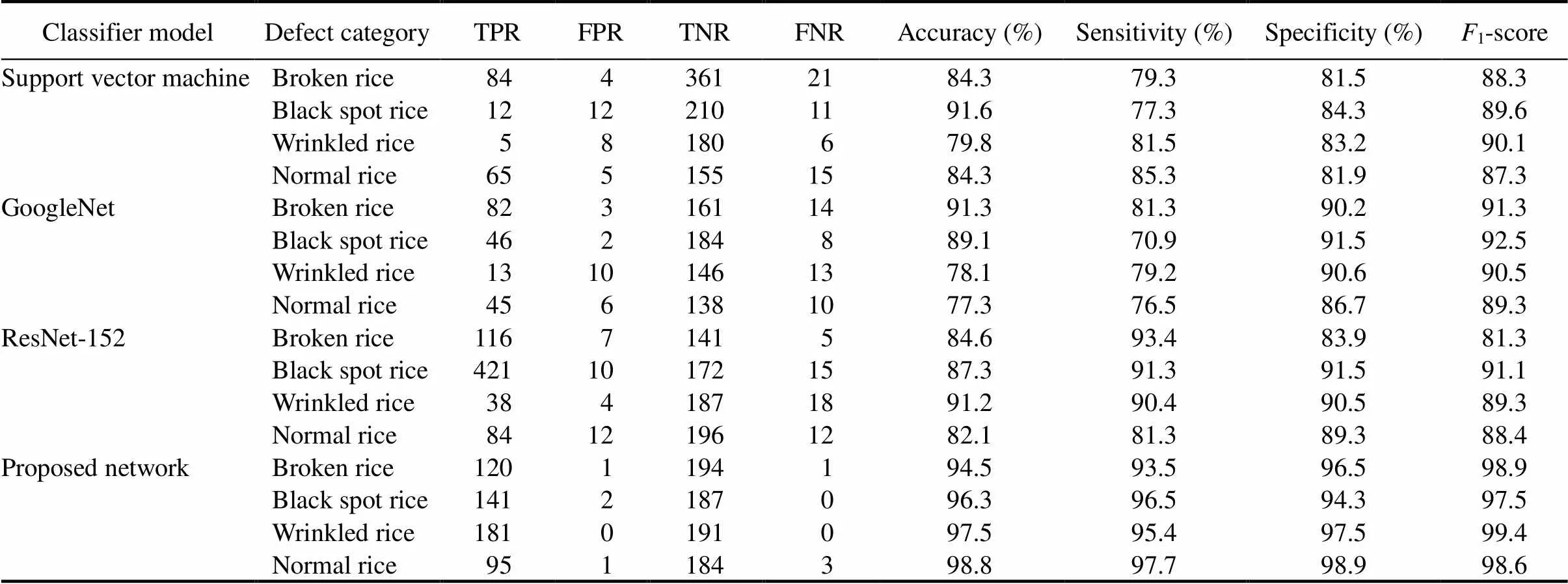

The real-time rice images were augmented for more abstract feature extraction. The entire acquired dataset was converted into binary vectors for the confusion matrix: in the real-time acquired dataset, output classes such as defect free, broken rice and black spot rice were treated as positive samples, and the other classes as negative samples. Table 6 compares all performance indices, in percentage, for real-time rice samples obtained with the proposed deep learning algorithm and other classifiers. The pre-trained model with data augmentation reduced training loss. Fig. 8-C compares the training accuracy, and Fig. 8-D compares the training loss, of the proposed classifier and other conventional classifiers. The loss of the proposed deep learning algorithm clearly decreases as the number of training epochs increases (Fig. 8-D). The proposed AlexNet deep CNN classified paddy rice defects accurately and generated labels predicting rice defects with less computational time than the other classifiers.
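The positive/negative grouping described above can be sketched as a binarization step followed by counting. The class names are hypothetical string labels for the groups named in the text. One consequence of this grouping is worth noting: confusing two positive classes (for example, broken predicted as black spot) still counts as a true positive, so per-class metrics remain necessary to expose such errors.

```python
POSITIVE = {"defect_free", "broken", "black_spot"}  # positive classes named in the text


def binarize(labels, positive=POSITIVE):
    """Map multiclass labels to 1 (positive group) or 0 (all other classes)."""
    return [1 if label in positive else 0 for label in labels]


def binary_counts(y_true, y_pred, positive=POSITIVE):
    """Return (tp, fp, tn, fn) after collapsing both label lists to two groups."""
    t, p = binarize(y_true, positive), binarize(y_pred, positive)
    tp = sum(1 for a, b in zip(t, p) if a == 1 and b == 1)
    fp = sum(1 for a, b in zip(t, p) if a == 0 and b == 1)
    tn = sum(1 for a, b in zip(t, p) if a == 0 and b == 0)
    fn = sum(1 for a, b in zip(t, p) if a == 1 and b == 0)
    return tp, fp, tn, fn


y_true = ["broken", "wrinkled", "defect_free", "wrinkled", "black_spot"]
y_pred = ["broken", "broken", "defect_free", "wrinkled", "wrinkled"]
print(binary_counts(y_true, y_pred))  # (2, 1, 1, 1)
```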

Fig. 7. Confusion matrix for training and testing for proposed deep AlexNet.

A, Training confusion matrix. B, Testing confusion matrix.

Table 5. Performance comparison of proposed network with other classifier networks for rice defect Kaggle dataset.

Values are Mean ± SE (n = 3).

The accuracy of the proposed prototype model exceeds that of existing conventional classification models. The proposed system is non-destructive and works with a fast-moving conveyor, so various rice defects can be monitored and separated from normal rice. The process is automated and can acquire multiple orientations of fast-moving rice samples on the conveyor belt. The proposed system is low-cost, highly reliable and highly beneficial to the agricultural sector for producing high-quality paddy rice for consumers. Hence, the proposed automated deep learning-based real-time rice defect analysis system can make a significant contribution to farmers and the agricultural sector.

Fig. 8. Results of proposed model.

A, AUC bar plot comparison between various classifiers and proposed classifier for Kaggle training dataset. B, AUC bar plot comparison between various classifiers and proposed classifier for real-time testing. C, Training accuracy comparison for proposed classifier and conventional classifiers. D, Training loss comparison between proposed classifier and conventional classifiers. AUC, Area under curve; SVM, Support vector machine.

CONCLUSIONS

This study proposed an automated deep learning method to identify rice defects in the rice processing industry based on their outer defects or appearance. Using the transfer learning approach, the proposed AlexNet deep CNN constructs highly discriminative features before being applied to the real-time dataset. This pre-training process, together with the class-balanced focal loss, reduced the effect of class imbalance in training and validation. Real-time quantitative experiments were conducted using a prototype model built from commercial hardware, and the real-time performance was analyzed using various performance indices. The validation accuracy of the augmented AlexNet CNN was 98.8%, with a specificity of 98.9% and a sensitivity of 97.7% to rice defects. These results show that the proposed AlexNet deep CNN achieved the highest accuracy on the test classes and real-time samples among the compared deep learning algorithms.

Table 6. Comparison of performance indices for real-time rice sample and other classifiers with augmentation.

TPR, True positive rate; FPR, False positive rate; TNR, True negative rate; FNR, False negative rate.

Moreover, the proposed method clearly visualized rice defects, and its defect identification was compared with other standard deep learning models. The proposed method performed better in rice defect identification and classification on unbalanced image data than existing methods such as SVM, the GoogleNet CNN model and the ResNet-152 embedded stacked encoder. The use of transfer learning with data augmentation demonstrated the reliability and robustness of the method even with unbalanced images acquired in real time. Hence, the proposed system can help farmers and agricultural workers deliver good-quality paddy rice.

ACKNOWLEDGEMENTS

We thank the Indian National Academy of Science, New Delhi for providing research fellowship in the Department of Electrical Engineering, Indian Institute of Technology, New Delhi and Department of Electrical and Electronics Engineering, Mepco Schlenk Engineering College, Sivakasi, India for providing the necessary research facilities.

Abinaya N S, Susan D, Rakesh Kumar S. 2021. Naive Bayesian fusion based deep learning networks for multisegmented classification of fishes in aquaculture industries., 61: 101248.

Alfred R, Obit J H, Chin C P Y, Haviluddin H, Lim Y. 2021. Towards paddy rice smart farming: A review on big data, machine learning, and rice production tasks., 9: 50358–50380.

Cao J J, Sun T, Zhang W R, Zhong M, Huang B, Zhou G M, Chai X J. 2021. An automated zizania quality grading method based on deep classification model., 183: 106004.

Chen P, Li W L, Yao S J, Ma C, Zhang J, Wang B, Zheng C H, Xie C J, Liang D. 2021. Recognition and counting of wheat mites in wheat fields by a three-step deep learning method., 437: 21–30.

Guerrero J M, Ruz J J, Pajares G. 2017. Crop rows and weeds detection in maize fields applying a computer vision system based on geometry., 142: 461–472.

Hu Z L, Tang J S, Zhang P, Jiang J F. 2020. Deep learning for the identification of bruised apples by fusing 3D deep features for apple grading systems., 145: 106922.

Ismail N, Malik O A. 2022. Real-time visual inspection system for grading fruits using computer vision and deep learning techniques., 9(1): 24–37.

Jeyaraj P R, Nadar E R S. 2019. Deep Boltzmann machine algorithm for accurate medical image analysis for classification of cancerous region., 1(3): 85–90.

Jeyaraj P R, Nadar E R S. 2020a. High-performance dynamic magnetic resonance image reconstruction and synthesis employing deep feature learning convolutional networks., 30(2): 380–390.

Jeyaraj P R, Nadar E R S. 2020b. Dynamic image reconstruction and synthesis framework using deep learning algorithm., 14(7): 1219–1226.

Jo H W, Lee S, Park E, Lim C H, Song C, Lee H, Ko Y, Cha S, Yoon H, Lee W K. 2020. Deep learning applications on multitemporal SAR (Sentinel-1) image classification using confined labeled data: The case of detecting rice paddy in South Korea., 58(11): 7589–7601.

Kaur H, Sawhney B K, Jawandha S K. 2018. Evaluation of plum fruit maturity by image processing techniques., 55(8): 3008–3015.

Koklu M, Cinar I, Taspinar Y S. 2021. Classification of rice varieties with deep learning methods., 187: 106285.

Kucuk Ç, Taşkın G, Erten E. 2016. Paddy-rice phenology classification based on machine-learning methods using multitemporal co-polar X-band SAR images., 9(6): 2509–2519.

Kuo T Y, Chung C L, Chen S Y, Lin H G, Kuo Y F. 2016. Identifying rice grains using image analysis and sparse-representation-based classification., 127: 716–725.

Marimuthu S, Roomi S M M. 2017. Particle swarm optimized fuzzy model for the classification of banana ripeness., 17(15): 4903–4915.

Mittal S, Dutta M K, Issac A. 2019. Non-destructive image processing based system for assessment of rice quality and defects for classification according to inferred commercial value., 148: 106969.

Nandi C S, Tudu B P, Koley C. 2016. A machine vision technique for grading of harvested mangoes based on maturity and quality., 16(16): 6387–6396.

Ren S Q, He K M, Girshick R, Sun J. 2017. Faster R-CNN: Towards real-time object detection with region proposal networks., 39(6): 1137–1149.

Shamim Hossain M, Al-Hammadi M, Muhammad G. 2019. Automatic fruit classification using deep learning for industrial applications., 15(2): 1027–1034.

Singh K R, Chaudhury S. 2016. Efficient technique for rice grain classification using back-propagation neural network and wavelet decomposition., 10(8): 780–787.

Su J Y, Yi D W, Su B F, Mi Z W, Liu C J, Hu X P, Xu X M, Guo L, Chen W H. 2021. Aerial visual perception in smart farming: Field study of wheat yellow rust monitoring., 17(3): 2242–2249.

Sun B Y, Yuan N Z, Zhao Z. 2020. A hybrid demosaicking algorithm for area scan industrial camera based on fuzzy edge strength and residual interpolation., 16(6): 4038–4048.

Sun J, Lu X Z, Mao H P, Jin X M, Wu X H. 2017. A method for rapid identification of rice origin by hyperspectral imaging technology., 40(1): e12297.

Wu L H, Liu Z C, Bera T, Ding H J, Langley D A, Jenkins-Barnes A, Furlanello C, Maggio V, Tong W D, Xu J. 2019. A deep learning model to recognize food contaminating beetle species based on elytra fragments., 166: 105002.

Xiao G Y, Wu Q, Chen H, Da D, Guo J Z, Gong Z G. 2020. A deep transfer learning solution for food material recognition using electronic scales., 16(4): 2290–2300.

Xu X L, Li W S, Duan Q L. 2021. Transfer learning and SE-ResNet152 networks-based for small-scale unbalanced fish species identification., 180: 105878.

Yang H J, Pan B, Li N, Wang W, Zhang J, Zhang X L. 2021. A systematic method for spatio-temporal phenology estimation of paddy rice using time series Sentinel-1 images., 259: 112394.

Yang S, Zhu Q B, Huang M, Qin J W. 2017. Hyperspectral image-based variety discrimination of maize seeds by using a multi-model strategy coupled with unsupervised joint skewness-based wavelength selection algorithm., 10(2): 424–433.

Zareiforoush H, Minaei S, Alizadeh M R, Banakar A, Samani B H. 2016. Design, development and performance evaluation of an automatic control system for rice whitening machine based on computer vision and fuzzy logic., 124: 14–22.

Zeng W H, Li M. 2020. Crop leaf disease recognition based on Self-Attention convolutional neural network., 172: 105341.

Zhang M, Zhang H Q, Li X Y, Liu Y, Cai Y T, Lin H. 2020. Classification of paddy rice using a stacked generalization approach and the spectral mixture method based on MODIS time series., 13: 2264–2275.

Copyright © 2022, China National Rice Research Institute. Hosting by Elsevier B V

This is an open access article under the CC BY-NC-ND license (http://creativecommons.org/licenses/by-nc-nd/4.0/)

Peer review under responsibility of China National Rice Research Institute

http://dx.doi.org/

18 September 2021;

15 February 2022

Pandia Rajan Jeyaraj (pandiarajan@mepcoeng.ac.in)

(Managing Editor: Wu Yawen)
