Application of artificial intelligence-driven endoscopic screening and diagnosis of gastric cancer

Yu-Jer Hsiao, Yuan-Chih Wen, Wei-Yi Lai, Yi-Ying Lin, Yi-Ping Yang, Yueh Chien, Aliaksandr A Yarmishyn, De-Kuang Hwang, Tai-Chi Lin, Yun-Chia Chang, Ting-Yi Lin, Kao-Jung Chang, Shih-Hwa Chiou, Ying-Chun Jheng

Abstract The landscape of gastrointestinal endoscopy continues to evolve as new technologies and techniques become available. The advent of image-enhanced and magnifying endoscopy has marked a step toward perfecting the endoscopic screening and diagnosis of gastric lesions. Simultaneously, with the development of convolutional neural networks, artificial intelligence (AI) has made unprecedented breakthroughs in medical imaging, including ongoing trials of computer-aided detection of colorectal polyps and gastrointestinal bleeding. In the past five years, applications of AI systems in gastric cancer have also emerged. With AI's efficient computational power and learning capacity, endoscopists can improve their diagnostic accuracy and avoid missing or mischaracterizing gastric neoplastic changes. So far, several AI systems incorporating both traditional and novel endoscopy technologies have been developed for various purposes, with most achieving an accuracy of more than 80%. However, their feasibility, effectiveness and safety in clinical practice remain to be seen, as no clinical trials have yet been conducted. Nonetheless, AI-assisted endoscopy points toward more accurate and sensitive approaches to the early detection, treatment guidance and prognosis prediction of gastric lesions. This review summarizes the current status of AI applications in gastric cancer and pinpoints directions for future research and clinical implementation from a clinical perspective.

Key Words: Artificial intelligence; Diagnostic; Therapeutic; Endoscopy; Gastric cancer; Gastritis

INTRODUCTION

Diagnostic and therapeutic endoscopy plays a major role in the management of gastric cancer (GC). Endoscopy is the mainstay for the diagnosis and treatment of early adenocarcinoma and related lesions and for the palliation of advanced cancer[1-5]. GC, the fifth most common cancer and the third leading cause of cancer-related death worldwide, affects more than one million people and causes approximately 780000 deaths annually[6-10]. Continued development in endoscopy aims to improve its quality indicators. These developments include higher-resolution and magnifying endoscopy, chromoendoscopy and optical techniques based on modulation of the light source, such as narrow-band imaging (NBI), fluorescence endoscopy and elastic scattering spectroscopy[11-13]. New tissue sampling methods that stratify a patient's cancer risk are also being developed to reduce the burden on patients and clinicians during endoscopy.

Statistically, the relative 5-year survival rate of GC is less than 40%[7,9,14,15], often attributed to the late onset of symptoms and delayed diagnosis[10]. Although early diagnosis is difficult because most patients are asymptomatic in the early stage, the stage at diagnosis largely determines the patient's prognosis[16,17]. In other words, endoscopic detection of GC at an earlier stage is the most effective way to reduce recurrence and prolong patient survival. Early diagnosis of GC also creates the opportunity for minimally invasive therapy such as endoscopic mucosal resection or endoscopic submucosal dissection[18-20]. The 5-year survival rate was reported to be more than 90% among patients whose GC was detected at an early stage[21-23]. Yet the false-negative rate of esophagogastroduodenoscopy, the current standard diagnostic procedure, for detecting GC was reported to be between 4.6% and 25.8%[24-29]. Among the common diagnostic methods, esophagogastroduodenoscopy is the preferred modality for patients with suspected GC, while lymph node dissection, endoscopic ultrasonography and computed tomography are combined to stage the tumor[30,31]. The differential diagnosis between GC and gastritis, the prediction of the horizontal extent of GC and the characterization of its depth of invasion, together with the subtle early abnormalities of GC, the aggressive malignancy of advanced disease and the heavy workload of image analysis, pose substantial challenges for endoscopists[32-34]. Because diagnostic ability varies widely among endoscopists, long-term training and experience do not guarantee consistent and accurate diagnosis[35-37].

In recent years, artificial intelligence (AI) has attracted considerable attention in various medical fields, including skin cancer classification[38-41], diagnosis in radiation oncology[42-45] and analysis of brain magnetic resonance imaging[46-49]. Although AI applications have shown impressive accuracy and sensitivity in identifying and characterizing imaging abnormalities, their improved sensitivity also means that subtle changes of uncertain significance are detected[50,51]. For example, in the analysis of brain magnetic resonance imaging, despite the promise of early diagnosis with machine learning, the relationship between subtle parenchymal brain alterations detected by AI and neurological outcomes is unknown in the absence of a well-defined abnormality[52]. Consequently, the use of AI in diagnostic imaging across medical fields is still undergoing extensive evaluation.

In the field of gastroenterology, AI applications have been reported in capsule endoscopy[53-56] and in the detection, localization and segmentation of colonic polyps[57-59]. In the late 2010s in particular, interest in applying AI to GC grew rapidly. AI has been shown to provide better diagnostic capabilities, although further validation and extension are necessary to improve the quality and interpretability of these systems. An AI system's quality is often described with the statistical measures of sensitivity, specificity, positive predictive value and accuracy.
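To make these measures concrete, the following Python sketch computes them from a binary confusion matrix (for example, cancer vs non-cancer images). The counts are hypothetical, chosen only for illustration, and do not come from any study cited in this review.

```python
from dataclasses import dataclass


@dataclass
class ConfusionMatrix:
    """Counts for a binary task, e.g. 'cancer' vs 'non-cancer' images."""
    tp: int  # cancer images the system flagged
    fp: int  # non-cancer images the system flagged
    tn: int  # non-cancer images the system passed
    fn: int  # cancer images the system missed

    def sensitivity(self) -> float:
        # Fraction of true cancers that were flagged
        return self.tp / (self.tp + self.fn)

    def specificity(self) -> float:
        # Fraction of non-cancers that were correctly passed
        return self.tn / (self.tn + self.fp)

    def ppv(self) -> float:
        # Positive predictive value: fraction of flagged images that are truly cancer
        return self.tp / (self.tp + self.fp)

    def accuracy(self) -> float:
        # Fraction of all images classified correctly
        return (self.tp + self.tn) / (self.tp + self.fp + self.tn + self.fn)


# Hypothetical counts for illustration only
cm = ConfusionMatrix(tp=90, fp=30, tn=170, fn=10)
for name in ("sensitivity", "specificity", "ppv", "accuracy"):
    print(f"{name}: {getattr(cm, name)():.3f}")
```

Note that the four measures can diverge sharply for the same system, which is why a single figure such as accuracy rarely describes a system fully.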

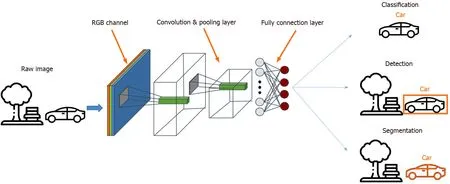

Among the different AI models, the convolutional neural network (CNN) is the most commonly used in medical imaging[60,61], as it allows the detection, segmentation and classification of image patterns[62] (Figure 1). A CNN applies the mathematical operation of convolution to recognize patterns directly from raw image pixels and then classifies the images on the basis of those patterns. Since the 7-layer LeNet-5 was pioneered by LeCun et al in 1998, CNN architectures have developed rapidly. Other widely used CNNs today include AlexNet (2012), with a top-5 error rate of about 15.3% on the ImageNet challenge; the 22-layer GoogLeNet (2014), with a 6.67% error rate but only 4 million parameters; the 19-layer Visual Geometry Group (VGG) Net (2014), with a 7.3% error rate and 138 million parameters; and Microsoft's ResNet (2015), with an error rate of 3.6%, which can be trained with as many as 152 layers[63-65]. While scholars have lauded AI for its potential and performance, some have questioned its generalizability and its role in the holistic assessment of gastric abnormalities.
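As a minimal illustration of what a single convolutional layer computes, the Python sketch below slides a 3 × 3 kernel over a toy grayscale image and applies a ReLU activation. The image and the vertical-edge kernel are hypothetical; a real CNN learns its kernel weights from training data and stacks many such layers with pooling before a classification head. Like most deep learning frameworks, the sketch implements "convolution" as cross-correlation, without flipping the kernel.

```python
def conv2d(image, kernel):
    """Valid (no padding, stride 1) 2D cross-correlation of one channel."""
    kh, kw = len(kernel), len(kernel[0])
    oh = len(image) - kh + 1
    ow = len(image[0]) - kw + 1
    out = [[0] * ow for _ in range(oh)]
    for i in range(oh):
        for j in range(ow):
            # Weighted sum of the kh x kw pixel patch under the kernel
            out[i][j] = sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh)
                for dj in range(kw)
            )
    return out


def relu(feature_map):
    """Keep positive responses, zero out the rest."""
    return [[max(0, v) for v in row] for row in feature_map]


# Hypothetical 4 x 5 image: dark region on the left, bright region on the right
image = [
    [0, 0, 0, 9, 9],
    [0, 0, 0, 9, 9],
    [0, 0, 0, 9, 9],
    [0, 0, 0, 9, 9],
]
# Hypothetical kernel that responds to dark-to-bright vertical edges
kernel = [[-1, 0, 1], [-1, 0, 1], [-1, 0, 1]]

print(relu(conv2d(image, kernel)))  # prints [[0, 27, 27], [0, 27, 27]]
```

The resulting feature map is large only where the edge lies, which is the sense in which early CNN layers "recognize patterns" before deeper layers combine them.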

At the beginning of an AI-assisted diagnostic imaging revolution, we have to anticipate and meticulously assess the potential perils in the context of AI's capabilities to ensure its effective and safe incorporation into clinical practice[66]. In this paper, we review the current status of AI applications in screening and diagnosing GC, focusing on two broad categories: the identification of pathogenic infection and the qualitative diagnosis of GC. Finally, we consider directions for further research and for the future introduction of AI into clinical practice.

IDENTIFICATION OF HELICOBACTER PYLORI INFECTION

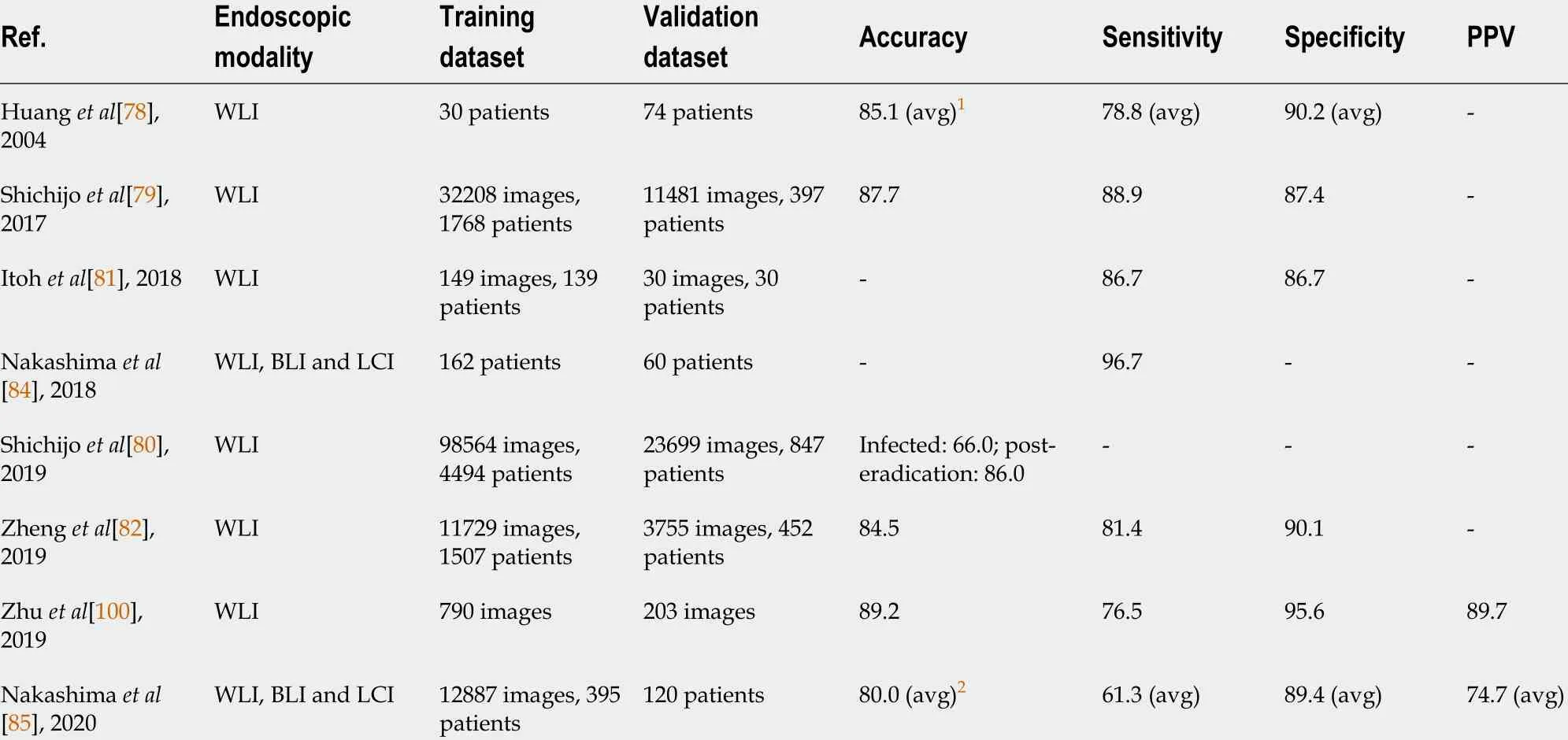

AI applications in identifying pathogenic infections have been widely explored[67] (Table 1). Infection of the gastric epithelium with Helicobacter pylori (H. pylori) is associated with functional dyspepsia, peptic ulcers, mucosal atrophy, intestinal metaplasia, atrophic gastritis and GC[68,69]. Because most gastric malignancies correlate with H. pylori infection, identifying H. pylori infection at an early stage is essential for preventing H. pylori-aggravated comorbidities[70-74]. Although physicians usually use the 13C-urea breath test to diagnose H. pylori, most subclinical H. pylori infections still rely on time-consuming and invasive biopsy examination to avoid the risk of a false-negative diagnosis. Moreover, the Kyoto Classification, the current gold standard for classifying H. pylori severity, requires examiners to assess lesions with the naked eye. Such subjective judgment usually comes with interoperator bias[75-77]. Compelled by this ambiguity, researchers have turned to AI to devise a next-generation, semi-automated standard examination protocol.

Table 1 Summary of artificial intelligence applications in predicting Helicobacter pylori infection

Figure 1 The convolutional neural network model. A convolutional neural network consists of an input layer, a few hidden layers and an output layer. It is commonly applied in medical imaging through the detection, segmentation and classification of image patterns.

As early as 2004, before CNNs took the lead in machine-assisted image diagnosis, Huang et al[78] deployed a refined feature selection neural network to process endoscopic images and return the probability and severity of H. pylori infection. By training the network with endoscopic images from 30 patients, including crops of the antrum, body and cardia of the stomach, they established an algorithm that achieved an average of 78.8% sensitivity, 90.2% specificity and 85.1% accuracy in an independent cohort of 74 patients' images. This overall prediction accuracy was better than that of young physicians and fellows, who scored 68.4% and 78.4%, respectively. It was the first model to demonstrate the potential of computer-aided diagnosis of H. pylori infection from endoscopic images.

Since the introduction of the 7-layer LeNet-5 by LeCun et al in 1998, CNNs have gradually taken over the field of medical image processing. To name a few examples, Shichijo et al[79] used 32208 images of 735 H. pylori-positive and 1015 H. pylori-negative cases to develop an H. pylori-identifying AI system based on the 22-layer GoogLeNet architecture. The sensitivity, specificity, accuracy and time consumption were 81.9%, 83.4%, 83.1% and 198 s for the first CNN and 88.9%, 87.4%, 87.7% and 194 s for the second CNN, respectively, compared with 79.0%, 83.2%, 82.4% and 230 min for the endoscopists. Later, again using GoogLeNet, Shichijo et al[80] developed another system that further classified H. pylori status as current infection, post-eradication or current noninfection, obtaining accuracies of 48%, 84% and 80%, respectively. In this system, the CNN was trained with 98564 images from 4494 patients and tested with 23699 images from 847 independent cases. Itoh et al[81] also developed a CNN based on GoogLeNet, trained with 149 endoscopic images from 139 patients and tested with 30 images from 30 patients, which detected and diagnosed H. pylori infection with a sensitivity and specificity of 86.7% each. Additionally, Zheng et al[82] reported the use of the ResNet CNN architecture in 2019, achieving a sensitivity, specificity and accuracy of 81.4%, 90.1% and 84.5%, respectively. In this study, the system was trained with 11729 images from 1507 patients and tested with 3755 images from 452 patients, using the 50-layer ResNet-50 (Microsoft) CNN and the PyTorch (Facebook) deep learning framework.

Recently, AI has also been applied to linked color imaging (LCI) and blue laser imaging, two novel image-enhanced endoscopy technologies[83], where it has been used to diagnose[84] and classify[85] H. pylori infection with improved performance. In 2018, Nakashima et al[84] developed a system, using a training set of 162 patients and a test set of 60 patients, that diagnosed H. pylori infection with areas under the curve (AUCs) of 0.96 and 0.95 and a sensitivity of 96.7% for both blue laser imaging-bright and LCI, respectively. This performance is superior to that of systems using conventional white light imaging (WLI), which achieved an AUC of 0.66 in that study and the sensitivities reported in the other studies above, as well as to that of experienced endoscopists. A 2020 study by the same team also showed that combining deep learning with image-enhanced endoscopy yields more accurate classification of H. pylori infection status (uninfected, infected and post-eradication). That system was trained with 6639 WLI and 6248 LCI images from 395 patients and tested with images from 120 patients[85].
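The AUC values quoted above summarize how well a system ranks infected cases above uninfected ones across all decision thresholds: 0.5 corresponds to chance and 1.0 to perfect separation. A minimal Python sketch of the rank-based (Mann-Whitney) estimate of the AUC is shown below; the scores are hypothetical model outputs, not data from any cited study.

```python
def auc_mann_whitney(pos_scores, neg_scores):
    """AUC as the probability that a randomly chosen positive case scores higher
    than a randomly chosen negative case (ties count as one half)."""
    if not pos_scores or not neg_scores:
        raise ValueError("need at least one positive and one negative score")
    wins = 0.0
    for p in pos_scores:
        for n in neg_scores:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos_scores) * len(neg_scores))


# Hypothetical predicted probabilities of H. pylori infection
infected = [0.9, 0.8, 0.4]
uninfected = [0.7, 0.3, 0.2]
print(auc_mann_whitney(infected, uninfected))
```

Here 8 of the 9 infected-uninfected pairs are ranked correctly, giving an AUC of 8/9. The pairwise loop is quadratic; for large test sets a sort-based rank computation gives the same value more efficiently.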

However, some limitations of AI in identifying H. pylori remain to be overcome. First, the histological time frame, especially for eradicated infection, was not considered in the AI systems[86]. Second, in all existing systems, both the training and test datasets were obtained from a single center. Continued and more rigorous external validation, using more diverse sources of images and endoscopes, is necessary to evaluate each system's generalizability[87,88]. Additionally, the CNN architectures used remain limited to a few existing models (mostly GoogLeNet and a few ResNet). Further technical refinements may overcome the current limitations faced by endoscopists and open the possibility of a system that distinguishes between H. pylori-infected and H. pylori-eradicated patients, identifies different parts of the stomach (cardia, body, angle and pylorus) and provides real-time evaluation of H. pylori infection. These considerations will be vital for its future implementation in clinical practice.

DETECTION OF GC

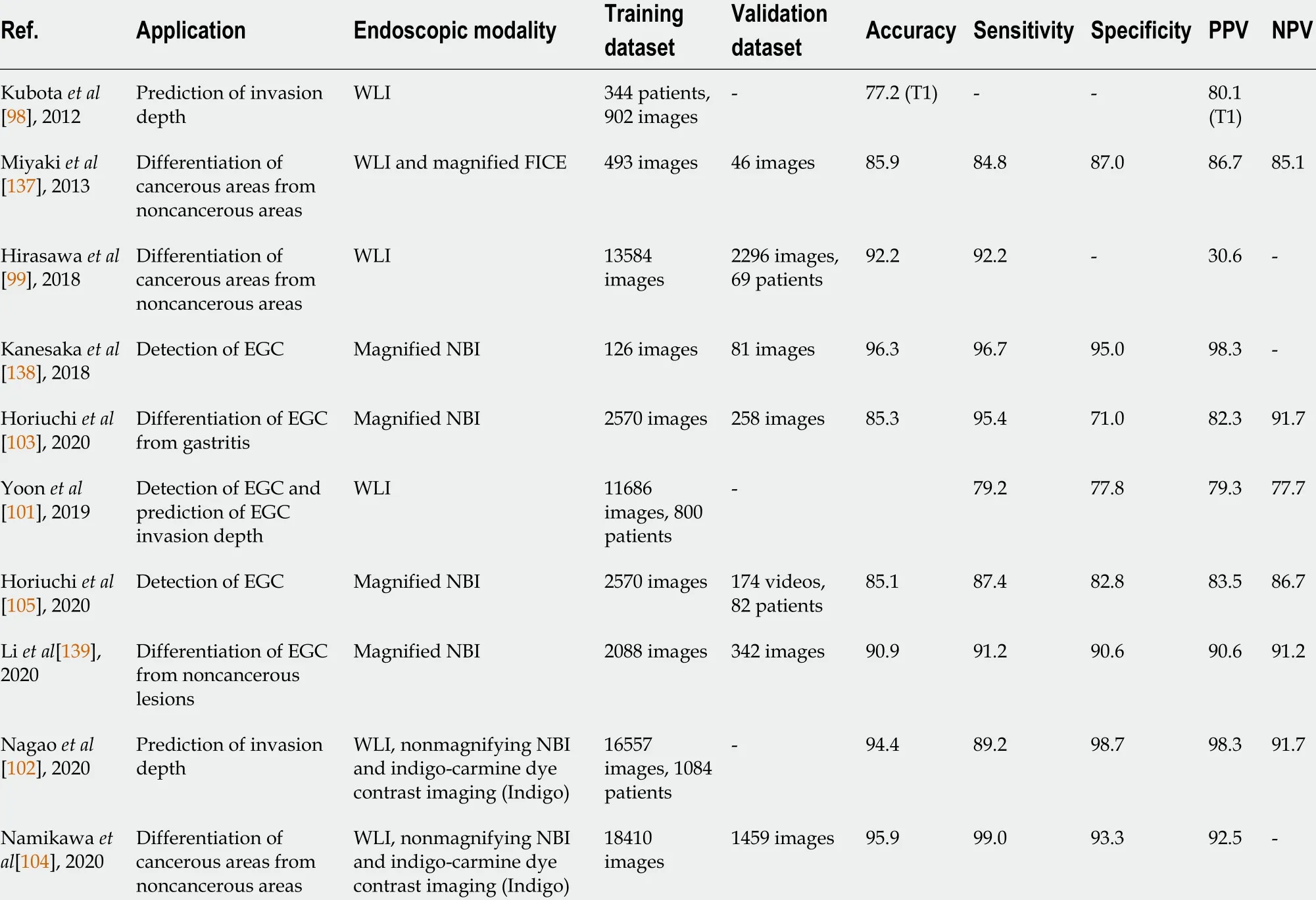

Besides H. pylori infection, computer-aided pattern recognition with endoscopic images has also been applied to diagnose the depth of wall invasion (Table 2). An accurate diagnosis of invasion depth and subsequent staging is the basis for determining the appropriate treatment modality, especially for suspected early GCs (EGCs)[89-91]. According to the 7th edition of the TNM classification of tumors[92,93], EGC comprises tumors confined to the mucosa (T1a) and tumors invading the submucosa (T1b). While endoscopic ultrasonography is useful for T-staging of GC because it delineates each layer of the gastric wall[94,95], conventional endoscopy is arguably still superior to endoscopic ultrasonography for T-staging of EGC[96,97]. However, there remains room to increase its accuracy, for example by utilizing AI. In 2012, Kubota et al[98] first explored such a system, reporting a relatively high sensitivity of 68.9% and 63.6% for T1a and T1b GCs and the highest accuracy in early tumors: 77.2% for T1 tumors, compared with 49.1%, 51.0% and 55.3% for T2, T3 and T4 tumors, respectively. Another CNN system, developed by Hirasawa et al[99], achieved a high sensitivity of 92.2%, though at the expense of a low positive predictive value (30.6%). Zhu et al[100] later demonstrated a CNN computer-aided diagnosis (CNN-CAD) system that achieved much higher accuracy (by 17.25%; 95% confidence interval: 11.63-22.59) and specificity (by 32.21%; 95% confidence interval: 26.78-37.44) than human endoscopists. These preliminary findings suggest that AI is a potentially helpful tool for EGC detection and point toward developing AI systems that can differentiate between malignant and benign lesions.
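A high sensitivity paired with a low positive predictive value, as reported for the Hirasawa et al system, is not contradictory. Positive predictive value depends not only on sensitivity and specificity but also on the proportion of truly cancerous cases among everything the system examines: when non-cancerous images vastly outnumber cancerous ones, even a modest false-positive rate produces many false alarms. The Python sketch below applies Bayes' theorem with hypothetical sensitivity, specificity and prevalence values; it is an illustration of the relationship, not a reconstruction of any cited study.

```python
def ppv_from_rates(sensitivity, specificity, prevalence):
    """Positive predictive value via Bayes' theorem.

    prevalence: fraction of examined images/lesions that are truly cancer.
    """
    true_pos = sensitivity * prevalence
    false_pos = (1.0 - specificity) * (1.0 - prevalence)
    return true_pos / (true_pos + false_pos)


# Hypothetical operating point applied to two different case mixes
for prev in (0.05, 0.5):
    print(f"prevalence {prev:.2f}: PPV {ppv_from_rates(0.92, 0.90, prev):.3f}")
```

With the same detector, the positive predictive value falls from about 0.90 to about 0.33 when the share of cancerous cases drops from one half to one in twenty, so positive predictive values reported on differently composed test sets are not directly comparable.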

Table 2 Summary of artificial intelligence applications in prediction of invasion depth and differentiation of cancerous areas from noncancerous areas

Because differences in EGC depth are subtle and difficult to discern in endoscopic images, Yoon et al[101] argued that more sophisticated image classification methods, rather than conventional CNN models alone, are required. The team developed a system that classifies endoscopic images as EGC (T1a or T1b) or non-EGC. The system combined a CNN-based VGG-16 network pretrained on ImageNet with a novel method based on a weighted sum of gradient-weighted class activation mapping. It focused on learning the visual features of EGC regions rather than those of other gastric textures, achieving both a high accuracy of 91.0% and a high AUC of 0.981. In another study in 2020, Nagao et al[102] used the state-of-the-art ResNet50 CNN architecture to develop a system trained on images taken from different angles and distances. This system predicted the invasion depth of GC with an image-based accuracy as high as 94.5%.
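For readers unfamiliar with gradient-weighted class activation mapping (Grad-CAM), the Python sketch below shows the standard formulation of Selvaraju et al: each feature map of a late convolutional layer is weighted by the spatially averaged gradient of the class score with respect to that map, the weighted maps are summed, and a ReLU keeps only regions that increase the class score. This is the generic technique, not Yoon et al's weighted-sum variant, whose details are not given here. The tiny feature maps and gradients are hypothetical; in practice they would be captured from a trained network through the framework's automatic differentiation.

```python
def grad_cam(feature_maps, gradients):
    """Standard Grad-CAM heat map.

    feature_maps, gradients: lists of K channels, each an H x W list of lists,
    where gradients[k] holds d(class score) / d(feature_maps[k]).
    """
    h, w = len(feature_maps[0]), len(feature_maps[0][0])
    # Channel weight = global average of that channel's gradient
    weights = [sum(sum(row) for row in g) / (h * w) for g in gradients]
    cam = [[0.0] * w for _ in range(h)]
    for alpha, fmap in zip(weights, feature_maps):
        for i in range(h):
            for j in range(w):
                cam[i][j] += alpha * fmap[i][j]
    # ReLU: keep only locations that push the class score up
    return [[max(0.0, v) for v in row] for row in cam]


# Hypothetical 2-channel, 2 x 2 activations and gradients
feature_maps = [
    [[1.0, 0.0], [0.0, 0.0]],  # channel 1 fires at the top-left location
    [[0.0, 0.0], [0.0, 1.0]],  # channel 2 fires at the bottom-right location
]
gradients = [
    [[0.5, 0.5], [0.5, 0.5]],      # channel 1 supports the class
    [[-1.0, -1.0], [-1.0, -1.0]],  # channel 2 argues against the class
]
print(grad_cam(feature_maps, gradients))
```

The resulting map highlights only the top-left location, showing how such maps indicate which image regions drove a prediction; upsampled to the input size, they are what lets a system be checked for attending to the lesion rather than to surrounding gastric texture.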

However, when these AI systems are used to diagnose invasion depth, distinguishing superficially depressed, differentiated-type intramucosal cancers from gastritis remains a challenge. The diagnostic accuracy for invasion depth is largely affected by histological characteristics. For instance, the system developed by Yoon et al[101] achieved an accuracy of 77.1% for differentiated-type tumors compared with 65.5% for undifferentiated-type tumors. Horiuchi et al[103] developed another system that differentiated EGC from gastritis using magnifying endoscopy with NBI (M-NBI). The system achieved an accuracy of 85.3%, with a sensitivity and negative predictive value of 95.4% and 91.7%, respectively. Another attempt was made by Namikawa et al[104], who developed a system trained with gastritis images and tested it on classifying GC and gastric ulcers. Continued development of AI systems that account for histological differentiation type will inform the future of AI-assisted differentiation of T1a from T1b GC, and of T1a and T1b cancers from later-stage GC.

To bring AI-assisted systems one step closer to real-time clinical application, video-based systems have also been explored. In 2020, Horiuchi et al[105] applied a video-based system and achieved an accuracy of 85.1% in distinguishing EGC from noncancerous lesions, comparable to that of their image-based system. Built on a CNN-CAD system, it was trained with 2570 images (1492 cancerous and 1078 noncancerous) and tested with 174 videos. This preliminary success of a video-based CNN-CAD system points to the potential of real-time AI-assisted diagnosis as a promising technique for clinicians to detect EGC in the future. Early detection of GC allows early endoscopic resection, in line with the treatment guidelines promoted by the Japanese Gastric Cancer Association since 2014[106].

DISCUSSION

Over the past decade, AI has shown its potential in diagnosing GC and in amplifying the capacities of human endoscopists. Although the diagnosis of GC requires a holistic set of assessments, AI is applicable and helpful for some of them. A system that detects GC with high sensitivity, regardless of its accuracy in determining invasion depth, could greatly assist physicians in deciding whether biopsy and endoscopic submucosal dissection are necessary. Other diagnostic procedures could also be explored with AI in the near future. For example, macroscopic characteristics such as the "nonextension signs" commonly used to distinguish between SM1 and SM2 invasion depths of GC[107] have yet to be explored with AI.

Clinically, there are also distinct markers that endoscopists use to evaluate changes in the gastric surface and color. Recognizing markers such as changes in light reflection and spontaneous bleeding is a clinical skill[108,109] that AI could potentially learn and interpret. In clinical practice, antiperistaltic agents are suggested for polyethersulfone preparation, and indigo carmine chromoendoscopy could help diagnose elevated superficial lesions with an irregular surface pattern[110]; the efficacy of these measures could be evaluated with real-time AI endoscopy in the near future.

Although several studies have applied AI to different types of endoscopy, ranging from WLI to LCI to blue laser imaging, future work could further extend AI to NBI and other nonconventional endoscopies. For instance, endocytoscopy with NBI has shown higher diagnostic accuracy than M-NBI [78.8% (76.4%-83.0%) vs 72.2% (69.3%-73.6%), P < 0.0001][111]. An AI system trained with WLI images and tested with NBI images would also have clinical significance[112,113]. The Magnifying Endoscopy Simple Diagnostic Algorithm for EGC, proposed in 2016, set out a systematic approach to magnifying endoscopy that starts with WLI: if a suspicious lesion is detected, M-NBI should be performed to determine whether the lesion is cancerous or noncancerous[114]. Because switching from WLI to M-NBI is therefore critical for diagnosis under this algorithm, future AI systems could be designed to account for such changes.

We identify the following common limitations among the AI systems developed over the past decade. First, there is a common lack of high-quality datasets for machine learning development, a problem faced in clinical practice even without AI[115]. At the same time, some studies reported that low-quality images increase the chance of misdiagnosis by the AI system[116], and most studies excluded large numbers of poor-quality images[99-101]. Several studies have also called for cross-validation in multicenter observational studies to detect the overfitting and spectrum bias that can be expected in deep image classification models[117-119]. Some authors have argued that the AI systems they developed are institution-specific and that validation with datasets from external sources is necessary[103,105]. In this regard, multicenter studies have been widely used in other medical fields to evaluate deep learning systems[120-122], though there have been no such studies in the field of GC.
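One way to approximate the external validation called for above, when images from several centers are available, is leave-one-center-out evaluation: the model is trained on all centers but one and tested on the held-out center, so each test score reflects an institution the model has never seen. The Python sketch below only builds the splits; the record fields are hypothetical. It also checks that no patient contributes images to both the training and test sets, since the studies above use many images per patient and such images are likely to be highly correlated.

```python
from typing import Iterator, List, NamedTuple, Tuple


class Image(NamedTuple):
    image_id: str
    patient_id: str
    center_id: str


def leave_one_center_out(
    records: List[Image],
) -> Iterator[Tuple[str, List[str], List[str]]]:
    """Yield (held-out center, train image IDs, test image IDs) for each center."""
    centers = sorted({r.center_id for r in records})
    for held_out in centers:
        test = [r for r in records if r.center_id == held_out]
        train = [r for r in records if r.center_id != held_out]
        # Guard against leakage: a patient must not appear on both sides
        overlap = {r.patient_id for r in train} & {r.patient_id for r in test}
        if overlap:
            raise ValueError(f"patients in both splits: {sorted(overlap)}")
        yield held_out, [r.image_id for r in train], [r.image_id for r in test]


# Hypothetical records from three centers
records = [
    Image("img1", "p1", "A"),
    Image("img2", "p1", "A"),
    Image("img3", "p2", "B"),
    Image("img4", "p3", "C"),
    Image("img5", "p3", "C"),
]
for center, train_ids, test_ids in leave_one_center_out(records):
    print(center, train_ids, test_ids)
```

Reporting the spread of scores across held-out centers, not only their mean, gives a direct view of how institution-specific a system is.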

Another remaining challenge is imbalanced class distribution, a common classification problem in which the distribution of samples across the known classes is biased or skewed. For example, in the study reported by Hirasawa et al[99] in 2018, there were fewer samples of later-stage cancers (only 32.5% of samples were T2-T4 cancers) than of early cancers (67.5% of samples). Such imbalance poses a challenge because most machine learning algorithms are designed on the assumption of roughly equal numbers of samples per class[123]. When a class has too few samples in the training dataset, the model tends to misclassify its members as one of the majority classes. This can result in poor predictive performance, especially for the minority class, and subsequently in an increased overall misdiagnosis rate. In an AI model for GC staging, for example, misdiagnosing late-stage cancer as gastritis or a nonmalignant lesion would have dangerous implications[124-126] if the AI system were used alone for diagnosis. In most cases, however, advanced-stage GC may already have metastasized to other parts of the body[127-129], so a diagnosis based on the AI system alone is unlikely. Nonetheless, the technical problem of imbalanced classification should not be overlooked when developing AI systems for such medical applications. Other reviews have discussed how AI models can be modified to recognize targets regardless of how frequent or rare they are, minimizing the possibility of misdiagnosis[130,131].
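A common and simple mitigation, shown here as a generic sketch rather than the approach of any cited study, is to weight the training loss by inverse class frequency so that errors on the minority class cost more. The Python example below uses the "balanced" weighting formula n_samples / (n_classes × count), the same formula scikit-learn uses for class_weight="balanced", on a hypothetical 1000-image dataset with the 67.5%/32.5% early/late split mentioned above, and applies it in a weighted cross-entropy loss. Oversampling and focal loss are alternative remedies.

```python
import math
from collections import Counter


def balanced_class_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {c: n / (k * cnt) for c, cnt in counts.items()}


def weighted_cross_entropy(probs, label, weights):
    """Cross-entropy of one prediction, scaled by the weight of its true class.

    probs: dict mapping class name -> predicted probability.
    """
    return -weights[label] * math.log(probs[label])


# Hypothetical dataset: 675 early-stage and 325 later-stage images
labels = ["early"] * 675 + ["late"] * 325
weights = balanced_class_weights(labels)
print(weights)

# The same confidence on the true class costs more for the minority class
early_loss = weighted_cross_entropy({"early": 0.6, "late": 0.4}, "early", weights)
late_loss = weighted_cross_entropy({"early": 0.4, "late": 0.6}, "late", weights)
print(early_loss, late_loss)
```

With these weights, each class contributes the same total weight (500) to the loss, so the model is no longer rewarded simply for favoring the majority class.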

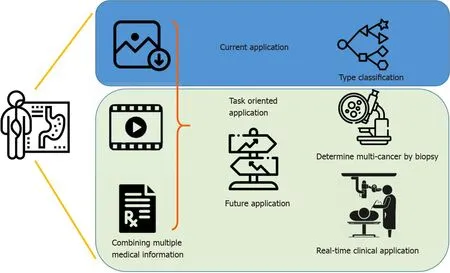

Overall, the potential for AI applications in GC is extensive yet highly specific. In an upcoming era of AI-assisted diagnosis, endoscopists can look forward to the continued development of new systems for varying purposes that combine image information, medical history and laboratory data (Figure 2). AI systems are task-specific and unlikely to generalize[132,133], so it is misleading to compare a single statistical performance measure across different AI systems. The value of an AI system depends on the role it is intended to play in clinical practice. For example, a high positive predictive value is desirable in a system that determines which multicancer to send for biopsy, whereas high sensitivity suffices for a system that helps less experienced endoscopists differentiate cancerous from noncancerous clinical signs. In the foreseeable future, AI can be incorporated into the differential diagnosis of the malignancy and stage of gastric lesions using various endoscopic technologies and techniques.
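The point that the desirable metric depends on a system's role can be made concrete by choosing the decision threshold per use case. The Python sketch below scans candidate thresholds on hypothetical validation scores, from strictest to most lenient, and returns the strictest threshold that still meets a target sensitivity together with the positive predictive value at that threshold. The scores, labels and targets are illustrative assumptions, not values from any cited system.

```python
def metrics_at(threshold, scores, labels):
    """Sensitivity and PPV when every score >= threshold is called positive."""
    tp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 1)
    fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
    fn = sum(1 for s, y in zip(scores, labels) if s < threshold and y == 1)
    sensitivity = tp / (tp + fn)
    ppv = tp / (tp + fp) if tp + fp else float("nan")
    return sensitivity, ppv


def threshold_for_sensitivity(scores, labels, target):
    """Strictest threshold reaching the target sensitivity, or None."""
    if 1 not in labels:
        raise ValueError("need at least one positive case")
    for t in sorted(set(scores), reverse=True):
        sensitivity, ppv = metrics_at(t, scores, labels)
        if sensitivity >= target:
            return t, sensitivity, ppv
    return None


# Hypothetical validation scores (1 = cancer, 0 = non-cancer)
scores = [0.95, 0.9, 0.8, 0.7, 0.6, 0.5, 0.4, 0.3]
labels = [1, 1, 0, 1, 0, 0, 1, 0]

print(threshold_for_sensitivity(scores, labels, 1.0))  # screening: miss nothing
print(threshold_for_sensitivity(scores, labels, 0.5))  # biopsy triage: fewer false alarms
```

Demanding full sensitivity forces a lenient threshold (0.4) at which only 4 of 7 flagged cases are cancers, whereas accepting 50% sensitivity allows a strict threshold (0.9) with no false positives. The same trained model can therefore serve either role, and reported metrics should be read together with the operating point.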

CONCLUSION

Overall, the application of AI in gastroenterology is in its infancy. At present, several retrospective models applied to both images and videos, using both WLI and NBI endoscopy, have been reported to outperform experienced endoscopists on the same tasks. However, no clinical trials have yet been conducted. In contrast to the ongoing trials for detecting colorectal polyps[134-136], AI applications in GC and its corresponding diagnostic methods are still preliminary. The limitations of existing efforts underline the importance of continued research in the field, which can go a long way toward quicker, more accurate and more precise evaluation of GC risk. While we have witnessed rapid and steep growth in the past decade, future studies are needed to streamline the machine learning process and to define the role of AI in the computer-aided diagnosis of H. pylori infection and GC in real-life clinical scenarios.

Figure 2 Current status and future research directions for the implementation of artificial intelligence-assisted endoscopy in clinical practice. In an upcoming era of artificial intelligence-assisted diagnosis, endoscopists can look forward to the continued development of new artificial intelligence systems for varying purposes. From determining multicancer via biopsy to real-time endoscopy, artificial intelligence has the potential to help physicians improve their diagnostic accuracy.

World Journal of Gastroenterology, 2021, Issue 22