Automatic detection model for pest damage symptoms on rice canopy based on improved RetinaNet

Yao Qing1, Gu Jiale1, Lyu Jun1, Guo Longjun1, Tang Jian2※, Yang Baojun2, Xu Weigen3

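(1. School of Information Science and Technology, Zhejiang Sci-Tech University, Hangzhou 310018, China; 2. State Key Laboratory of Rice Biology, China National Rice Research Institute, Hangzhou 310006, China; 3. Zhejiang Provincial General Station of Plant Protection, Quarantine and Pesticide Management, Hangzhou 310020, China)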

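In China, the current field survey of pest damage symptoms on the rice canopy requires forecasting technicians to go into the paddy fields and visually assess the occurrence of damage symptoms. This manual survey is subjective, inefficient, and labor-intensive. In recent years, many researchers have begun to use deep learning to identify plant disease and pest damage symptoms, but most studies focus on identifying disease and pest types on a single plant or a single leaf. In this study, images of damage symptoms caused by Cnaphalocrocis medinalis and Chilo suppressalis were collected from rice canopies covering multiple hills of plants, and an automatic detection model for pest damage symptoms on the rice canopy based on an improved RetinaNet was proposed. The model uses ResNeXt101 as the feature extraction network and Group Normalization (GN) as the normalization method, and modifies the structure of the Feature Pyramid Network (FPN). The improved RetinaNet model achieved a mean average precision of 93.76% in detecting the damage symptom regions of the two pests, providing a theoretical basis and technical support for intelligent monitoring of rice pest damage symptoms.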

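image processing; algorithms; automatic detection; rice canopy; damage symptom images; Cnaphalocrocis medinalis; Chilo suppressalis; RetinaNet model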

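0 Introduction

Rice is one of the most important food crops in China. Rice diseases and pests are numerous, widely distributed, and highly destructive, and cause heavy yield and economic losses every year[1]. Accurate forecasting of rice diseases and pests is therefore a prerequisite for formulating sound control measures and reducing economic losses. Most current forecasting methods in China still require grassroots forecasting technicians to survey the fields and to assess and diagnose the occurrence of diseases and pests by eye (including their types, numbers, and severity levels). Such manual field surveys are laborious, subjective, inefficient, and not real-time. Fast, convenient, and intelligent survey methods and tools for rice diseases and pests are therefore urgently needed.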

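With the growing application of image processing and machine learning in many fields, real-time monitoring and intelligent diagnosis of crop diseases and pests from various kinds of imagery has become a research hotspot in recent years. Many studies have reported the recognition of agricultural disease and pest damage symptoms based on traditional image pattern recognition[2-6]. The main approach is to obtain the damaged regions by background segmentation, extract image features from these regions, and then train classifiers on the feature vectors to identify the type of damage symptom. Traditional pattern recognition methods generally achieve high accuracy on a limited number of classes and limited test sets. However, crop diseases and pests in natural environments involve complex image backgrounds and biological diversity. Traditional pattern recognition methods are not robust and generalize poorly, so these results cannot be widely applied to field forecasting of crop diseases and pests.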

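In recent years, deep learning methods have performed well in image recognition and object detection tasks[7-9]. Their biggest difference from traditional pattern recognition is that deep learning models extract features from images automatically, layer by layer, and contain thousands upon thousands of parameters. Many researchers have begun to use deep learning to identify disease and pest damage symptoms[10-17]. For different diseases of multiple plant species, Sladojevic et al.[10], Mohanty et al.[11], and Ferentinos[12] used convolutional neural network (CNN) models to identify from a dozen or so to more than fifty diseases, all with good recognition results. Liu et al.[13], Ashqar et al.[14], and Brahimi et al.[15] used CNN models to identify different diseases on the leaves of a single plant species and achieved high recognition rates. Liu et al.[16] used a CNN to identify rice sheath blight with an accuracy of 97%. Wang et al.[17] used a CNN to study the severity of apple leaf diseases and obtained an accuracy of 90.4%. Deep learning not only avoids background removal and hand-crafted feature design, but also yields more robust recognition models by training on large numbers of samples.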

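In rice disease and pest forecasting, diseases and pests generally need to be identified and diagnosed on multiple plants or hills of rice within a certain area. The methods above, which identify disease and pest types only on a single plant or a single leaf, cannot meet this need. This study uses deep learning object detection to develop an automatic detection model for the damage symptoms of two pests, Cnaphalocrocis medinalis and Chilo suppressalis, on the rice canopy. The results will provide data support for the intelligent survey and monitoring of the damage symptoms of these two pests on multiple rice plants and hills.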

1 Materials and methods

1.1 Image acquisition and dataset construction

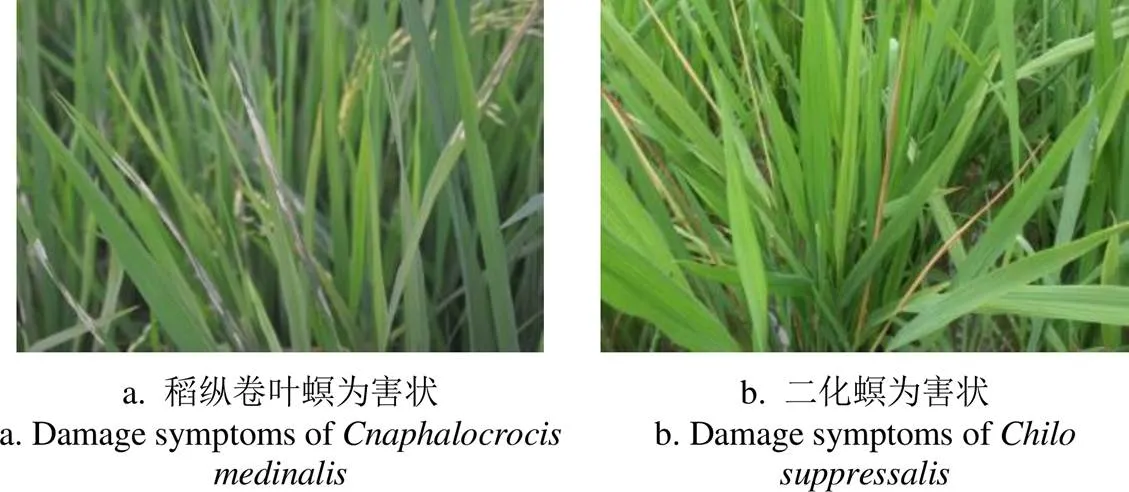

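A digital camera (Sony DSC-QX100, 20.2 megapixels) was used to collect rice canopy images of damage symptoms caused by Cnaphalocrocis medinalis and Chilo suppressalis on different rice varieties at different growth stages (Fig. 1). The image size was 4 288×2 848 pixels, and the dataset is summarized in Table 1. The training set was labeled with the annotation tool LabelImg. The coordinates and label of each bounding box were written to XML files to build a dataset in PASCAL VOC[18] format.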

Fig. 1 Images of damage symptoms caused by two pests on rice canopy

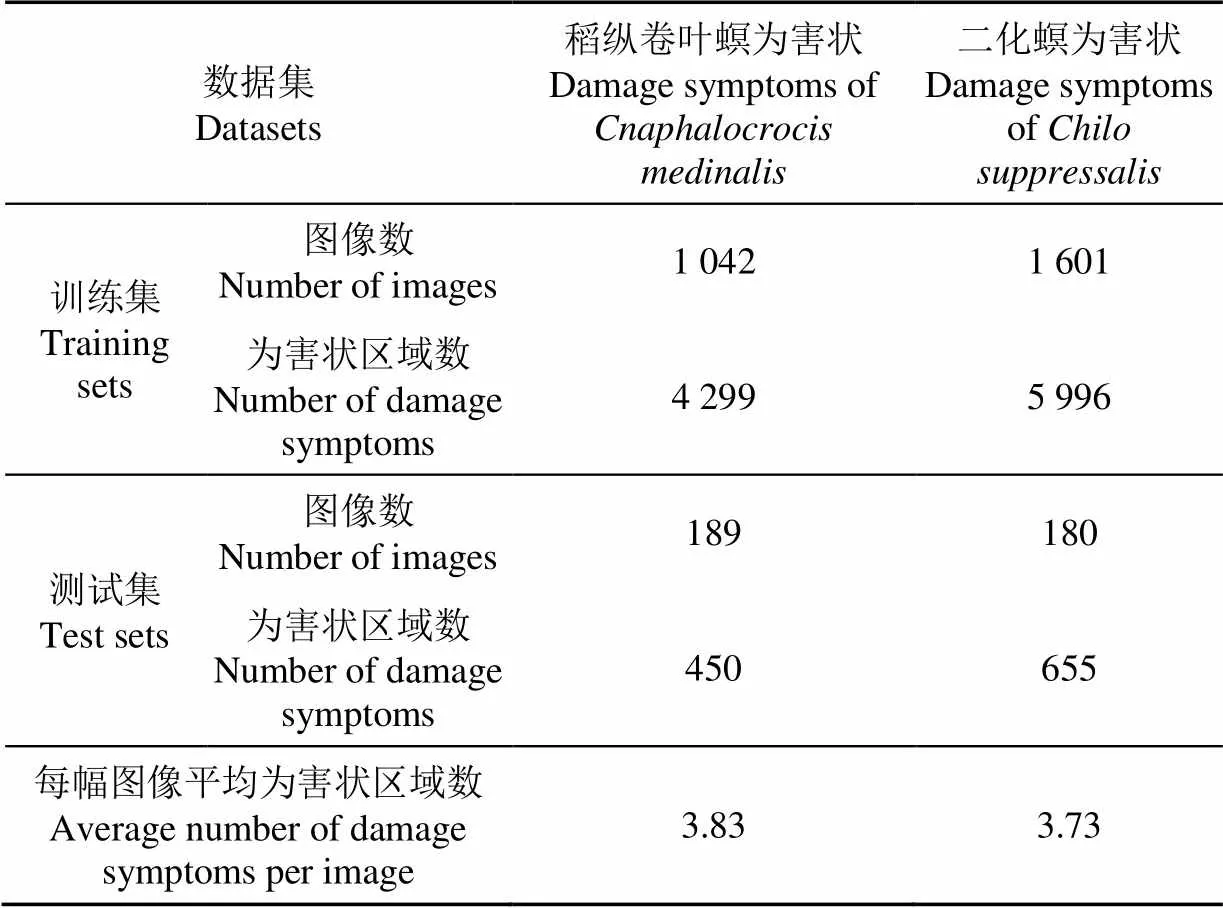

Table 1 Image dataset of damage symptoms caused by two pests on rice canopy
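The annotation step described above stores each bounding box in a PASCAL VOC style XML file. The following is a minimal sketch of how such a file could be read back, using only the Python standard library. The file name, the label string `R-CM`, and the box coordinates in `SAMPLE_XML` are illustrative assumptions, not values taken from the dataset.

```python
import xml.etree.ElementTree as ET

# Hypothetical annotation in PASCAL VOC layout; the file name, the label
# "R-CM" and the box coordinates are illustrative, not from the dataset.
SAMPLE_XML = """<annotation>
  <filename>canopy_0001.jpg</filename>
  <size><width>4288</width><height>2848</height><depth>3</depth></size>
  <object>
    <name>R-CM</name>
    <bndbox><xmin>120</xmin><ymin>340</ymin><xmax>410</xmax><ymax>505</ymax></bndbox>
  </object>
</annotation>"""


def parse_voc(xml_text):
    """Return (filename, (width, height), [(label, xmin, ymin, xmax, ymax), ...])."""
    root = ET.fromstring(xml_text)
    size = root.find("size")
    width = int(size.findtext("width"))
    height = int(size.findtext("height"))
    boxes = []
    for obj in root.iter("object"):
        bndbox = obj.find("bndbox")
        coords = tuple(int(bndbox.findtext(tag)) for tag in ("xmin", "ymin", "xmax", "ymax"))
        boxes.append((obj.findtext("name"),) + coords)
    return root.findtext("filename"), (width, height), boxes


filename, size, boxes = parse_voc(SAMPLE_XML)
print(filename, size, boxes)
```

Each image would typically have one such file, with one `object` element per labeled damage symptom region.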

1.2 Image data augmentation

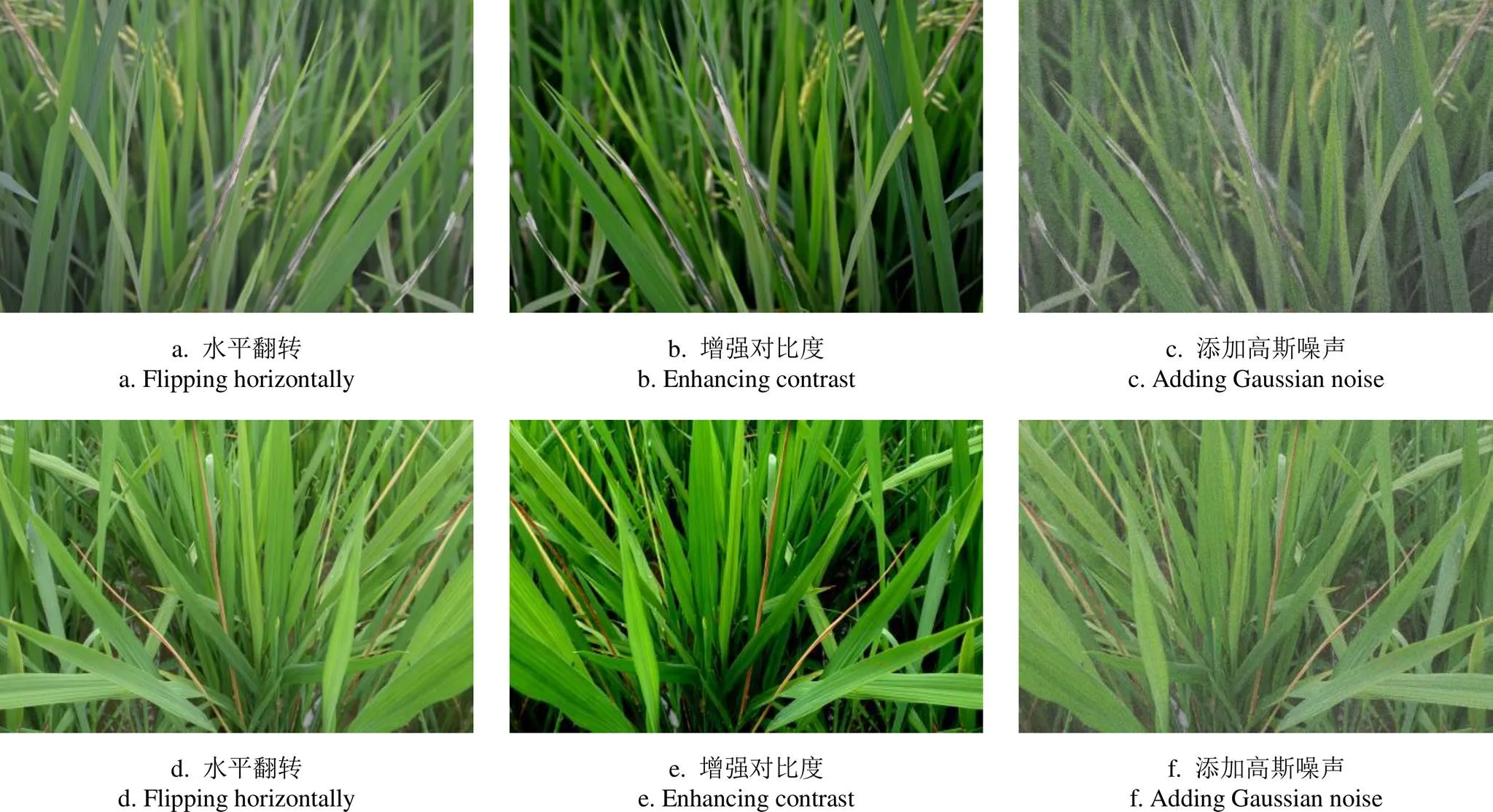

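To improve the robustness and generalization ability of the detection model, the images in the training set were augmented by horizontal flipping, contrast enhancement, and the addition of Gaussian noise (Fig. 2), which increased the number of training samples to 4 times the original.

Note: Fig. 2a-Fig. 2c are augmented images of Cnaphalocrocis medinalis damage symptoms; Fig. 2d-Fig. 2f are augmented images of Chilo suppressalis damage symptoms.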
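The three augmentations can be sketched with NumPy as follows. This is an illustration under stated assumptions, not the authors' code: the contrast factor, the noise standard deviation, and the box convention (pixel-edge coordinates `(xmin, ymin, xmax, ymax)`) are all hypothetical choices, and the "4 times" figure is read here as the original image plus one copy per transform.

```python
import numpy as np


def flip_horizontal(image, boxes):
    """Mirror an H x W x C image left-right and remap (xmin, ymin, xmax, ymax) boxes."""
    width = image.shape[1]
    flipped = image[:, ::-1, :].copy()
    new_boxes = [(width - xmax, ymin, width - xmin, ymax)
                 for xmin, ymin, xmax, ymax in boxes]
    return flipped, new_boxes


def enhance_contrast(image, factor=1.5):
    """Stretch pixel values around the image mean; factor > 1 increases contrast."""
    mean = image.mean()
    out = (image.astype(np.float32) - mean) * factor + mean
    return np.clip(out, 0, 255).astype(np.uint8)


def add_gaussian_noise(image, sigma=10.0, seed=0):
    """Add zero-mean Gaussian noise with standard deviation sigma (in grey levels)."""
    rng = np.random.default_rng(seed)
    noisy = image.astype(np.float32) + rng.normal(0.0, sigma, image.shape)
    return np.clip(noisy, 0, 255).astype(np.uint8)


def augment(image, boxes):
    """Return the original plus three augmented copies, i.e. 4 samples per image."""
    flipped, flipped_boxes = flip_horizontal(image, boxes)
    return [
        (image, boxes),
        (flipped, flipped_boxes),
        (enhance_contrast(image), boxes),      # boxes unchanged: geometry is preserved
        (add_gaussian_noise(image), boxes),    # boxes unchanged: geometry is preserved
    ]
```

Only the flip changes the geometry, so it is the only transform that has to rewrite the bounding boxes.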

1.3 Automatic detection model for pest damage symptoms on rice canopy

1.3.1 Network framework of the detection model based on improved RetinaNet

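Deep-learning object detection models fall into two main categories. The first is two-stage methods based on region proposals (Region Proposal Network, RPN): the first stage generates a set of candidate regions by region proposal, and the second stage extracts features from the candidate regions for classification and bounding-box regression. Classic models include R-CNN[19], Fast R-CNN[20], and Faster R-CNN[21]. The second is regression-based single-stage methods, which do not generate candidate regions but extract features directly from the input image and then perform classification and bounding-box regression. Classic models include the YOLO series[22-24], SSD[25], and RetinaNet[26].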

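RetinaNet is a single-stage object detection framework proposed by Lin et al.[26] in 2017. It combines ResNet[27], a Feature Pyramid Network (FPN)[28], and two Fully Convolutional Network (FCN)[29] subnetworks. By using focal loss, a modified cross-entropy, as its loss function, RetinaNet addresses the problem that positive and negative sample regions in object detection are severely imbalanced and the loss is easily dominated by the large number of negative samples. RetinaNet performs well in small-object detection.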

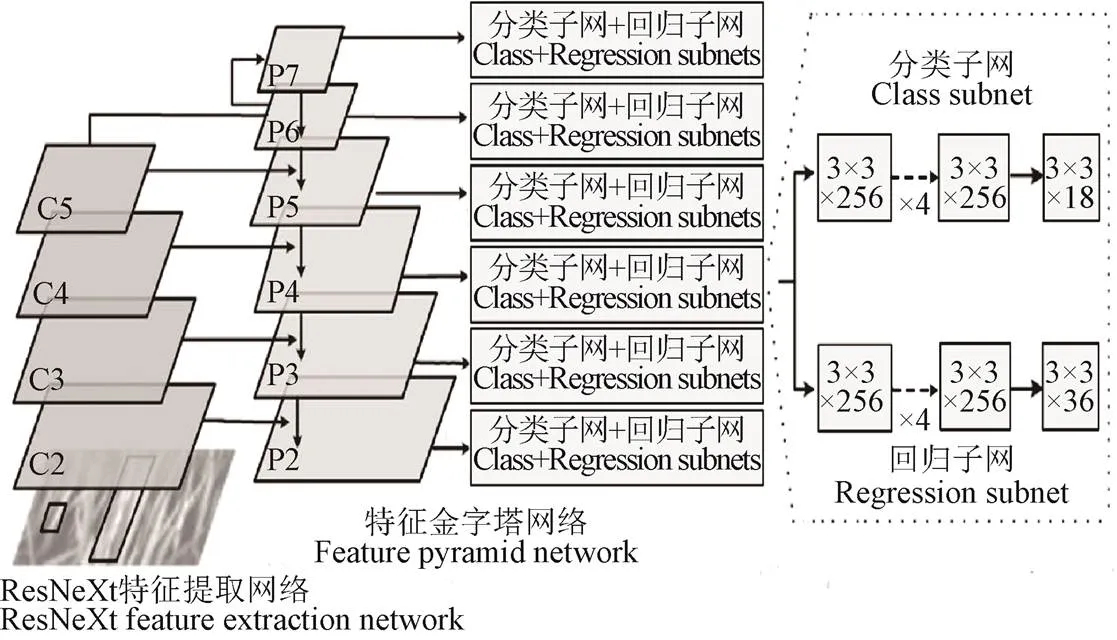

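Because the damage symptom regions of rice pests vary considerably in size and occupy only a small pixel area in the image, locating and identifying the target regions is difficult. This study adopts RetinaNet as the detection framework for pest damage symptoms on the rice canopy and modifies it as follows: the ResNet feature extraction network is replaced with ResNeXt, the FPN structure is improved, focal loss is retained as the loss function, and Group Normalization (GN) is used for normalization. The network structure is shown in Fig. 3.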

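Note: C2-C5 are the outputs of the convolutional layers of the ResNeXt feature extraction network; P2-P7 are the outputs of the convolutional layers of the feature pyramid network; 3×3 is the convolution kernel size; 18, 36, and 256 are the numbers of output channels of the convolutional layers; ×4 means that the convolutional layer with a 3×3 kernel and 256 output channels is repeated 4 times.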

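1.3.2 Feature extraction network

Feature extraction is an important step in object detection. Different features affect the detection results, and the number of features affects the memory use, speed, and performance of the detector. Feature extraction networks in deep learning automatically extract thousands upon thousands of feature parameters from an image. Commonly used feature extraction networks include AlexNet[30], VGGNet[31], and GoogLeNet[32].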

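To solve the accuracy degradation that arises when training deep networks, He et al.[27] proposed the deep residual network, which uses identity mappings so that stacked layers fit a residual mapping. ResNeXt[33] builds on the deep residual network by aggregating branches of identical structure, each a stack of conv1×1 and conv3×3 layers, as shown in Fig. 4. The 256-dimensional input first passes through a conv1×1 layer that reduces the dimensionality and thus the cost of the subsequent convolutions, then through 32 groups of parallel conv3×3 layers that further extract target features, and finally through a conv1×1 layer that restores the dimensionality before the skip connection is applied. Compared with the residual network, ResNeXt is more modular and has fewer hyperparameters, which makes the model easier to port. At a comparable parameter count, it also achieves higher accuracy. This study uses ResNeXt as the feature extraction network for pest damage symptoms on the rice canopy.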
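To make the cost argument concrete, the sketch below counts the convolution weights of the block described above (256 channels in, reduced to 128, a 3×3 convolution split into 32 groups, then restored to 256) and compares it with the same block without grouping. Bias terms and normalization parameters are left out, and the helper names `conv_weights` and `block_weights` are hypothetical.

```python
def conv_weights(in_ch, out_ch, kernel, groups=1):
    """Number of weights in a 2-D convolution (no bias).

    With grouping, each output channel only sees in_ch // groups input channels.
    """
    if in_ch % groups or out_ch % groups:
        raise ValueError("channel counts must be divisible by groups")
    return kernel * kernel * (in_ch // groups) * out_ch


def block_weights(channels=256, width=128, groups=32):
    """Weights of a conv1x1 -> grouped conv3x3 -> conv1x1 bottleneck block."""
    reduce_1x1 = conv_weights(channels, width, 1)
    grouped_3x3 = conv_weights(width, width, 3, groups=groups)
    restore_1x1 = conv_weights(width, channels, 1)
    return reduce_1x1 + grouped_3x3 + restore_1x1


print(block_weights(groups=32))  # grouped 3x3 convolution, as in Fig. 4
print(block_weights(groups=1))   # same block with an ordinary 3x3 convolution
```

Under these assumptions the grouped 3×3 layer needs 32 times fewer weights than an ungrouped one of the same width, which is what lets ResNeXt widen the block at a comparable parameter budget.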

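1.3.3 Normalization method

To speed up model convergence and alleviate vanishing gradients in deep networks, input data with different scales need to be normalized before the convolution is computed. The main normalization methods in deep learning include Batch Normalization (BN)[34], Layer Normalization (LN)[35], and Instance Normalization (IN)[36].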

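Note: 1×1 and 3×3 are the convolution kernel sizes; 128 and 256 are the numbers of output channels of the convolutional layers; group = 32 denotes 32 groups of parallel stacked convolutional layers; "+" denotes the skip connection.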

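The ResNeXt model uses BN for normalization. Because the statistics computed by BN layers are biased when a convolutional neural network is trained with small batches, Wu et al.[37] proposed Group Normalization (GN), which divides the channels into groups and computes the mean and variance within each group, removing the bias caused by small-batch normalization. To improve the training and convergence speed of the deep convolutional neural network, this study replaces the BN layers in ResNeXt with GN layers.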
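The grouping step can be sketched in NumPy as below. This is a minimal illustration of the normalization itself. The learnable per-channel scale and shift that a GN layer normally carries are omitted, and the group count used in the paper is not stated in this text, so `num_groups` is left as a free parameter.

```python
import numpy as np


def group_norm(x, num_groups, eps=1e-5):
    """Normalize an (N, C, H, W) array within each group of C // num_groups channels.

    Statistics are computed per sample and per group, so the result does not
    depend on the batch size (unlike batch normalization).
    """
    n, c, h, w = x.shape
    if c % num_groups != 0:
        raise ValueError("channel count must be divisible by num_groups")
    grouped = x.reshape(n, num_groups, c // num_groups, h, w)
    mean = grouped.mean(axis=(2, 3, 4), keepdims=True)
    var = grouped.var(axis=(2, 3, 4), keepdims=True)
    normalized = (grouped - mean) / np.sqrt(var + eps)
    return normalized.reshape(n, c, h, w)
```

Because no statistic is shared across samples, normalizing a batch of one image gives the same result for that image as normalizing it inside a larger batch.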

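1.3.4 Improved feature pyramid network (FPN)

RetinaNet uses an FPN, which detects small objects well. However, because the damage symptom regions on the rice canopy vary greatly in size and shape, tests showed that some target regions were still missed. To reduce missed and false detections of damage symptom regions, this study improves the original FPN structure (Fig. 3). In the figure, C2-C5 are the outputs of the convolutional layers of the ResNeXt feature extraction network, and P2-P7 are the outputs of the convolutional layers of the feature pyramid network, each with 256 channels. P7 is obtained by applying a 3×3 convolution with stride 2 to P6, and P6 is obtained by applying a 3×3 convolution with stride 2 to the dimension-reduced C5. P7 is upsampled and laterally connected with P6, the result is upsampled again, and this is laterally connected with C5 to give P5. P4-P2 are each generated by upsampling the lower-resolution, semantically stronger feature map of the level above and laterally connecting it with the dimension-reduced C4-C2.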
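The merge order described above can be traced with a shape-level NumPy sketch. Several simplifications are assumptions made for illustration only: the inputs are taken to be already reduced to a common channel count, the 3×3 stride-2 convolutions are replaced by plain 2× subsampling, upsampling is nearest-neighbour, and the lateral connection is modelled as element-wise addition as in the standard FPN. The function names are hypothetical.

```python
import numpy as np


def upsample2x(x):
    """Nearest-neighbour 2x upsampling of a (C, H, W) feature map."""
    return x.repeat(2, axis=1).repeat(2, axis=2)


def downsample2x(x):
    """Stand-in for a 3x3, stride-2 convolution: keep every second row and column."""
    return x[:, ::2, ::2]


def improved_fpn(c2, c3, c4, c5):
    """Follow the merge order in the text; inputs share one channel count."""
    p6 = downsample2x(c5)          # P6 from the reduced C5
    p7 = downsample2x(p6)          # P7 from P6
    m6 = upsample2x(p7) + p6       # P7 upsampled and merged with P6
    p5 = upsample2x(m6) + c5       # result upsampled and merged with C5
    p4 = upsample2x(p5) + c4       # top-down pathway continues to P2
    p3 = upsample2x(p4) + c3
    p2 = upsample2x(p3) + c2
    return {"P2": p2, "P3": p3, "P4": p4, "P5": p5, "P6": p6, "P7": p7}


# Example with 4 channels (the paper uses 256) and a hypothetical 512 x 512 input,
# for which C2..C5 have strides 4, 8, 16 and 32.
channels = 4
c2, c3, c4, c5 = (np.ones((channels, s, s), dtype=np.float32) for s in (128, 64, 32, 16))
levels = improved_fpn(c2, c3, c4, c5)
for name, feature in levels.items():
    print(name, feature.shape)
```

The point of the sketch is the data flow: compared with the original FPN, information from P7 and P6 is fed back into P5 and therefore into every finer level.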

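1.3.5 Focal loss function

In rice canopy images of pest damage symptoms, most of the image is rice background and the damage symptom regions occupy only a small area, so background negative samples and target-region positive samples are extremely imbalanced during detection. The focal loss (FL) function proposed by Lin et al.[26] in RetinaNet, a cross-entropy loss with a dynamically adjustable scaling factor, FL(p), shown in Eq. (1), addresses this severe imbalance between positive and negative sample regions in object detection.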
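For reference, the focal loss as published by Lin et al.[26] has the form $FL(p_t) = -\alpha_t (1 - p_t)^{\gamma} \log(p_t)$, where $p_t$ is the predicted probability of the true class. The sketch below evaluates it for a single binary prediction. The defaults `gamma=2.0` and `alpha=0.25` are the values reported in that paper. Whether this study used the same settings is not stated here, so they should be read as assumptions.

```python
import math


def cross_entropy(p, y):
    """Binary cross-entropy for one prediction; p is the predicted P(positive)."""
    p_t = p if y == 1 else 1.0 - p
    return -math.log(min(max(p_t, 1e-12), 1.0))


def focal_loss(p, y, gamma=2.0, alpha=0.25):
    """Focal loss for one prediction: cross-entropy scaled by alpha_t * (1 - p_t) ** gamma."""
    p_t = p if y == 1 else 1.0 - p
    alpha_t = alpha if y == 1 else 1.0 - alpha
    p_t = min(max(p_t, 1e-12), 1.0)
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)


# An easy background sample (confidently predicted as negative) is down-weighted
# far more strongly than a hard positive sample that the model gets wrong.
easy_negative = focal_loss(0.01, 0) / cross_entropy(0.01, 0)
hard_positive = focal_loss(0.10, 1) / cross_entropy(0.10, 1)
print(easy_negative, hard_positive)
```

With `gamma = 0` and `alpha_t = 1` the expression reduces to ordinary cross-entropy, which is why focal loss is described as a rescaled cross-entropy.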

1.4 Evaluation methods

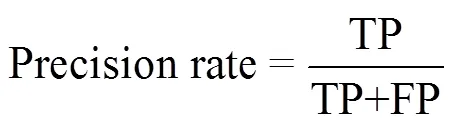

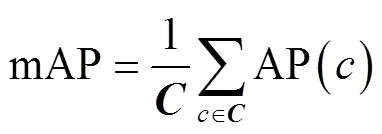

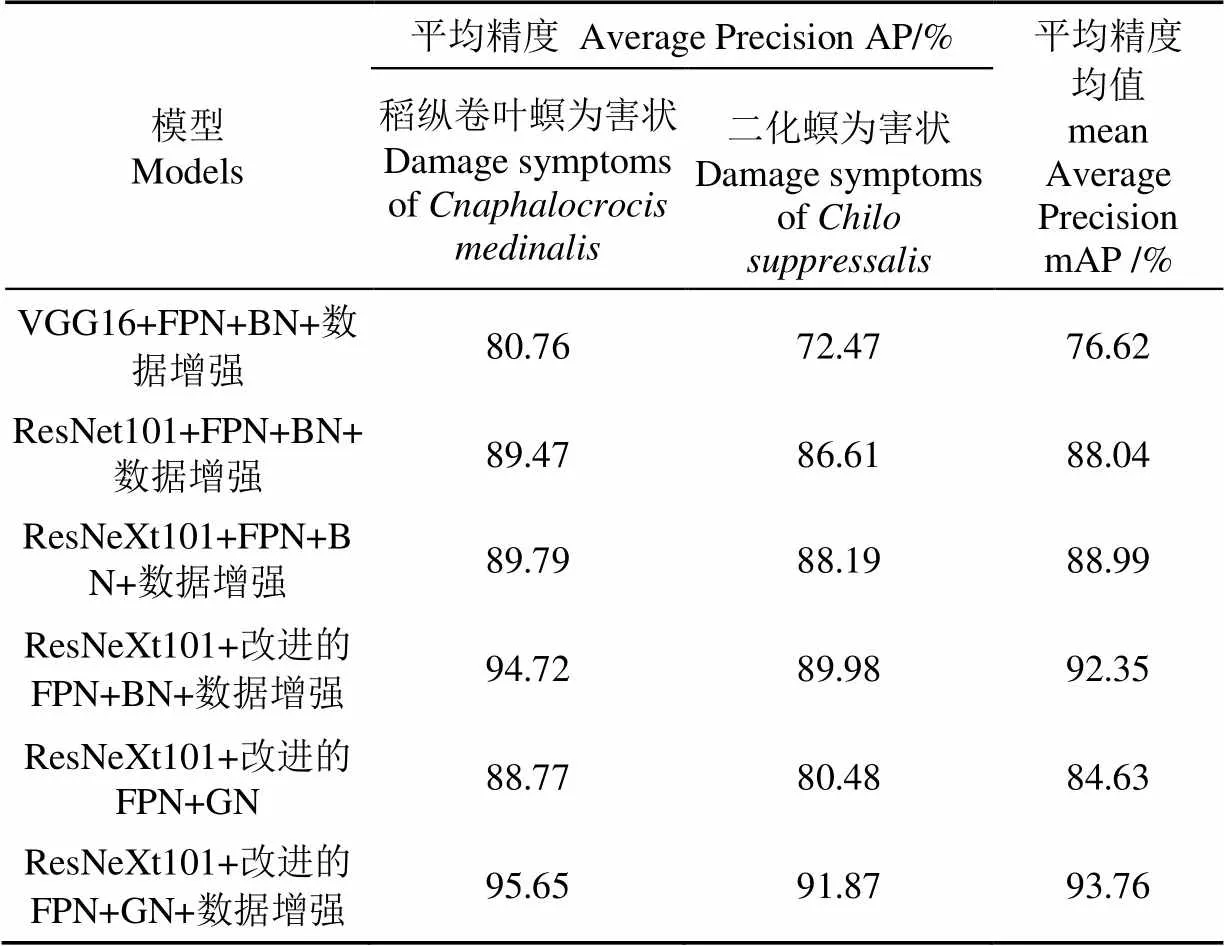

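To evaluate the effectiveness of the proposed detection model for pest damage symptoms on the rice canopy, the precision-recall (PR) curve, average precision (AP), and mean average precision (mAP) were used as evaluation metrics.

The precision rate and recall rate of the PR curve are given by Eq. (2) and Eq. (3).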

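where TP is the number of correct detections for a given class, FP is the number of incorrect detections, and FN is the number of targets that were not detected.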
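With TP, FP, and FN defined as above, the standard definitions are $Precision = TP / (TP + FP)$ and $Recall = TP / (TP + FN)$. A minimal sketch follows. The function name and the convention of returning 0.0 when a denominator is zero are assumptions made for illustration.

```python
def precision_recall(tp, fp, fn):
    """Precision and recall for one class from detection counts.

    tp: correct detections, fp: incorrect detections, fn: missed targets.
    Returns 0.0 for a ratio whose denominator is zero.
    """
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Hypothetical counts: 8 correct boxes, 2 false alarms, 4 missed regions.
precision, recall = precision_recall(tp=8, fp=2, fn=4)
print(precision, recall)
```

Sweeping the confidence threshold of the detector and recomputing this pair at each threshold traces out the PR curve.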

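AP measures the average precision for a single class and is computed as the integral of precision over recall, as shown in Eq. (4).

where the class symbol denotes a particular class.

mAP is the mean of the AP values over all classes, as shown in Eq. (5).

where the set symbol denotes the set of all classes.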
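In practice the integral of precision over recall is approximated from a ranked list of detections. The sketch below uses the area under the monotonically non-increasing precision envelope, which is one common convention. The exact interpolation used in this study is not stated, so this choice is an assumption, as are the function names and the toy detections.

```python
def average_precision(scores, is_true_positive, num_ground_truth):
    """Area under the precision-recall curve for one class.

    scores: confidence of each detection; is_true_positive: whether each
    detection matched a ground-truth region; num_ground_truth: number of
    labeled regions of this class.
    """
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    recalls, precisions = [], []
    for i in order:
        if is_true_positive[i]:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_ground_truth)
        precisions.append(tp / (tp + fp))
    # Replace each precision by the best precision at any higher recall.
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    ap, previous_recall = 0.0, 0.0
    for recall, precision in zip(recalls, precisions):
        ap += (recall - previous_recall) * precision
        previous_recall = recall
    return ap


def mean_average_precision(ap_per_class):
    """Mean of the per-class AP values."""
    return sum(ap_per_class.values()) / len(ap_per_class)


# Toy example: four detections, three of them correct, four labeled regions.
print(average_precision([0.9, 0.8, 0.7, 0.6], [True, False, True, True], 4))

# The two per-class AP values reported in the English abstract (in percent).
print(mean_average_precision({"pest_1": 95.65, "pest_2": 91.87}))
```

Averaging the two reported per-class AP values reproduces the reported mAP of 93.76%.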

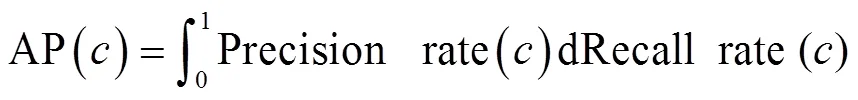

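1.5 Comparison of different models

To verify the effect of the proposed model on detecting pest damage symptoms on the rice canopy, six models were built within the RetinaNet framework, covering VGG16, ResNet101, and ResNeXt101 as feature extraction networks, the FPN before and after improvement, different normalization methods, and training with and without image data augmentation. The six models were used to detect the damage symptoms of the two pests on the rice canopy, and their detection results were compared (Table 2).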

Table 2 Detection results of six models under the RetinaNet framework for damage symptoms of two pests on rice canopy

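2 Results and analysis

2.1 Model running environment

All models were trained and tested on a desktop computer with an Intel i5-6500 CPU and an NVIDIA GTX 1080Ti GPU, running Ubuntu 16.04 and the PyTorch deep learning framework.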

2.2 PR curves of different models and analysis

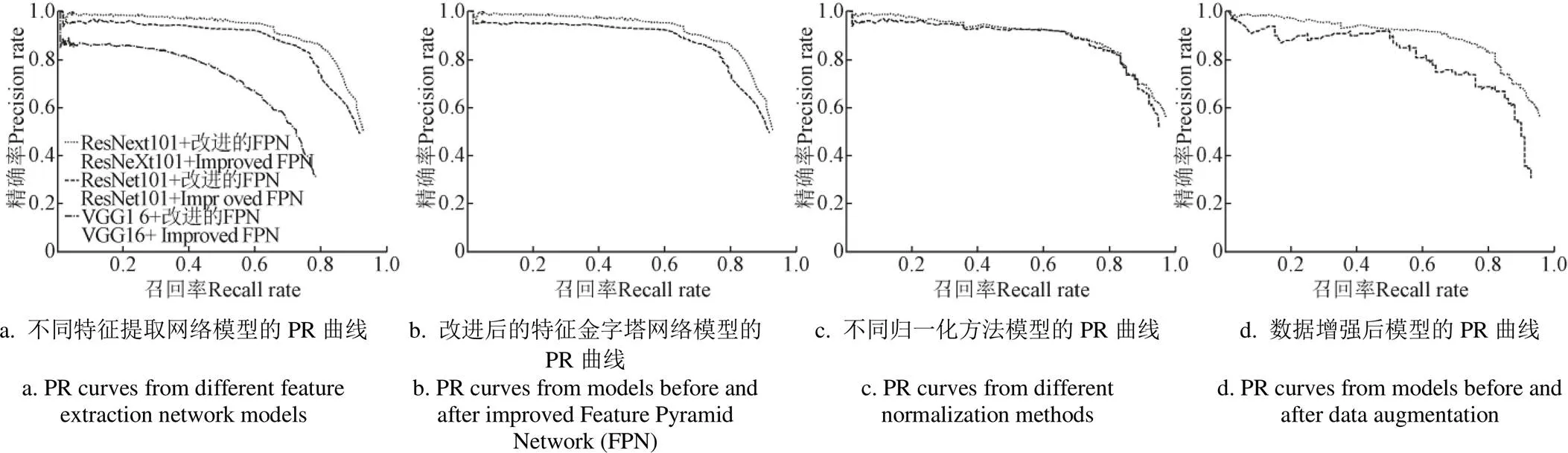

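The proposed detection model and the other five models were tested on the Cnaphalocrocis medinalis and Chilo suppressalis damage symptoms in the test-set images, and PR curves were plotted with the Python Matplotlib library (Fig. 5). Fig. 5a shows the PR curves obtained with the three feature extraction networks VGG16, ResNet101, and ResNeXt101. At the same recall, the ResNeXt101-based model achieved higher precision. Fig. 5b shows the PR curves obtained with the improved FPN. The overall performance of the model improved after the FPN was modified, and the miss rate decreased further. Fig. 5c shows the PR curves obtained with BN and GN. At the same recall, the GN-based model achieved slightly higher precision. Fig. 5d shows the PR curves obtained after data augmentation. The model trained with data augmentation achieved markedly higher precision.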

Fig. 5 Precision-recall curves of six different models

2.3 Average precision (AP) and mean average precision (mAP) of different models and analysis

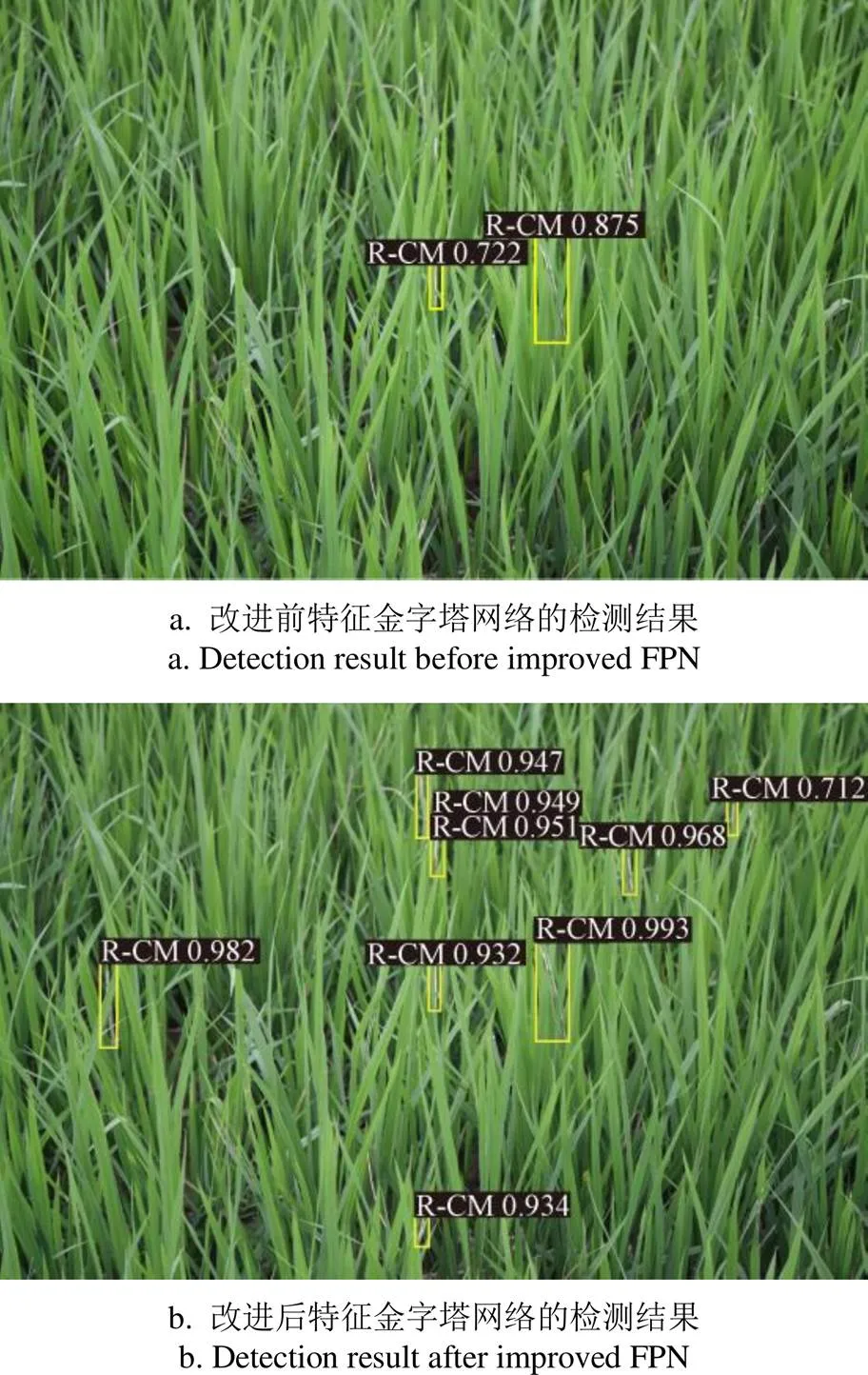

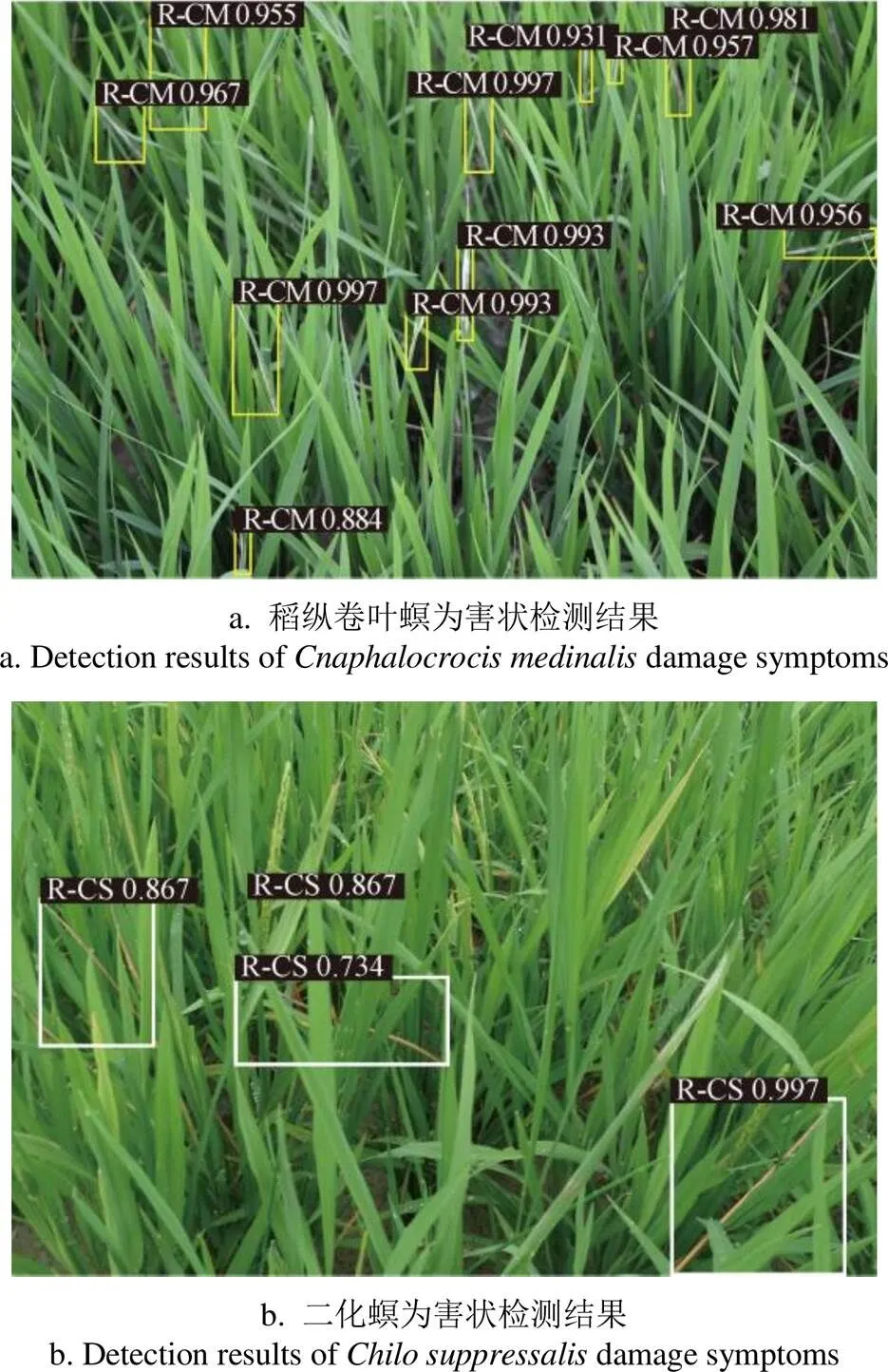

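The AP and mAP values of the six models on the test samples are listed in Table 2. The mAP of the ResNeXt101-based model for the two pests' damage symptoms was 12.37% higher than that of the VGG16-based model and 0.95% higher than that of the ResNet101-based model, indicating that ResNeXt101 extracts features better than VGG16 and ResNet101. With the improved FPN, the AP for Cnaphalocrocis medinalis damage symptoms was 4.93% higher than before the improvement and the mAP was 3.36% higher, indicating that the improved FPN helps the model detect damage symptom regions (Fig. 6). Replacing batch normalization (BN) with group normalization (GN) raised both the AP and mAP values to some extent. Compared with the model trained without data augmentation, the model trained with data augmentation had a 9.13% higher mAP, indicating that data augmentation markedly improves the generalization ability of the model. The proposed model, which combines ResNeXt101, the improved FPN, GN, and data augmentation, therefore detects the damage symptoms of the two pests on the rice canopy better than the other five models (Fig. 7).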

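Note: Boxes indicate the damage symptom regions detected by the model; R-CM on a box denotes Cnaphalocrocis medinalis damage symptoms; the number is the probability of the region being identified as Cnaphalocrocis medinalis damage symptoms.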

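In the same environment, the improved model takes about 0.56 s on average to process one image, which is sufficient for the task of detecting pest damage symptoms on the rice canopy.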

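Note: Boxes indicate the damage symptom regions detected by the model; R-CM on a box denotes Cnaphalocrocis medinalis damage symptoms and R-CS denotes Chilo suppressalis damage symptoms; the numbers are the probabilities of the regions being identified as the respective pest damage symptoms.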

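3 Conclusions

This study proposed an automatic detection model for pest damage symptoms on the rice canopy based on an improved RetinaNet. It can provide a theoretical basis for field disease and pest patrols and precision pesticide spraying by plant protection unmanned aerial vehicles, and lays a foundation for the subsequent intelligent forecasting of diseases and pests in paddy fields.

1) The RetinaNet object detection framework was adopted, with ResNeXt101 as the feature extraction network, an improved Feature Pyramid Network (FPN) structure, and Group Normalization (GN) as the normalization method, which improved the robustness and accuracy of the detection model for pest damage symptoms on the rice canopy.

2) The improved RetinaNet model achieved a mean average precision (mAP) of 93.76% in detecting the damage symptom regions of Cnaphalocrocis medinalis and Chilo suppressalis, higher than the other five models. This result provides reliable data for the field survey and forecasting of the damage symptoms of Cnaphalocrocis medinalis and Chilo suppressalis.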

[1] Wang Yanqing. Analysis on the occurrence and trend of rice pests and diseases in China in recent years[J]. Chinese Agricultural Science Bulletin, 2006, 22(2): 343-347. (in Chinese with English abstract)

[2] Zheng Zhixiong, Qi Long, Ma Xu, et al. Classification method of rice leaf blast disease based on hyperspectral imaging technique[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2013, 29(19): 138-144. (in Chinese with English abstract)

[3] Guan Zexin, Tang Jian, Yang Baojun, et al. Image-based rice disease identification method[J]. Chinese Journal of Rice Science, 2010, 24(5): 497-502. (in Chinese with English abstract)

[4] Yang Xinwei, Tan Feng. Research on rice disease recognition and processing based on Bayesian classifier[J]. Journal of Heilongjiang Bayi Agricultural University, 2012, 24(3): 64-67. (in Chinese with English abstract)

[5] Lu Yang, Shao Qing, Zhang Nan, et al. Study on pretreatment methods for rice blast disease image recognition[J]. Journal of Heilongjiang Bayi Agricultural University, 2011, 23(4): 64-67. (in Chinese with English abstract)

[6] Shi Fengmei, Zhao Kaicai, Meng Qinglin, et al. Image segmentation of rice blast in rice based on support vector machine[J]. Journal of Northeast Agricultural University, 2013, 44(2): 128-135. (in Chinese with English abstract)

[7] Alotaibi A, Mahmood A. Deep face liveness detection based on nonlinear diffusion using convolution neural network[J]. Signal, Image and Video Processing, 2017, 11(4): 713-720.

[8] Bautista C M, Dy C A, Mañalac M I, et al. Convolutional neural network for vehicle detection in low resolution traffic videos[C]//2016 IEEE Region 10 Symposium (TENSYMP). Bali, Indonesia, IEEE, 2016: 277-281.

[9] Tajbakhsh N, Shin J Y, Gurudu S R, et al. Convolutional neural networks for medical image analysis: Full training or fine tuning?[J]. IEEE Transactions on Medical Imaging, 2016, 35(5): 1299-1312.

[10] Sladojevic S, Arsenovic M, Anderla A, et al. Deep neural networks based recognition of plant diseases by leaf image classification[J]. Computational Intelligence and Neuroscience, 2016: 1-11, [2016-06-22], http://www.ncbi.nlm.nih.gov/pmc/articles/PMC4934169/pdf/CIN2016-3289801.pdf.

[11] Mohanty S P, Hughes D P, Salathé M. Using deep learning for image-based plant disease detection[EB/OL]. 2016, https://arxiv.org/abs/1604.03169.

[12] Ferentinos K P. Deep learning models for plant disease detection and diagnosis[J]. Computers and Electronics in Agriculture, 2018, 145: 311-318.

[13] Liu Bin, Zhang Yun, He DongJian, et al. Identification of apple leaf diseases based on deep convolutional neural networks[J]. Symmetry, 2017, 10(1): 11-26.

[14] Ashqar B A M, Abu-Naser S S. Image-based tomato leaves diseases detection using deep learning[J]. International Journal of Engineering Research, 2019, 2(12): 10-16.

[15] Brahimi M, Boukhalfa K, Moussaoui A. Deep learning for tomato diseases: Classification and symptoms visualization[J]. Applied Artificial Intelligence, 2017, 31(4): 299-315.

[16] Liu Tingting, Wang Ting, Hu Lin. Image recognition of rice sheath blight based on convolutional neural network[J]. Chinese Journal of Rice Science, 2019, 33(1): 90-94. (in Chinese with English abstract)

[17] Wang Guan, Sun Yu, Wang Jianxin. Automatic image-based plant disease severity estimation using deep learning[J]. Computational Intelligence and Neuroscience, 2017: 1-8, [2017-07-05], http://doi.org/10.1155/2017/2917536.

[18] Everingham M, Van Gool L, Williams C K I, et al. The PASCAL Visual Object Classes (VOC) challenge[J]. International Journal of Computer Vision, 2010, 88(2): 303-338.

[19] Girshick R, Donahue J, Darrell T, et al. Rich feature hierarchies for accurate object detection and semantic segmentation[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, USA, 2014: 580-587.

[20] Girshick R. Fast R-CNN[C]//Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 2015: 1440-1448.

[21] Ren Shaoqing, He Kaiming, Girshick R, et al. Faster R-CNN: Towards real-time object detection with region proposal networks[C]//Advances in Neural Information Processing Systems, Montreal, Canada, 2015: 91-99.

[22] Redmon J, Divvala S, Girshick R, et al. You only look once: Unified, real-time object detection[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, USA, 2016: 779-788.

[23] Redmon J, Farhadi A. YOLO9000: Better, faster, stronger[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, USA, 2017: 7263-7271.

[24] Redmon J, Farhadi A. Yolov3: An incremental improvement[EB/OL]. 2018, [2018-04-08], https://arxiv.org/abs/1804.02767.

[25] Liu Wei, Anguelov D, Erhan D, et al. SSD: Single shot multibox detector[C]//European Conference on Computer Vision, Amsterdam, The Netherlands, 2016: 21-37.

[26] Lin Tsungyi, Goyal P, Girshick R, et al. Focal loss for dense object detection[C]//Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 2017: 2980-2988.

[27] He Kaiming, Zhang Xiangyu, Ren Shaoqing, et al. Deep residual learning for image recognition[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, USA, 2016: 770-778.

[28] Lin Tsungyi, Dollár P, Girshick R, et al. Feature pyramid networks for object detection[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, USA, 2017: 2117-2125.

[29] Long J, Shelhamer E, Darrell T. Fully convolutional networks for semantic segmentation[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, USA, 2015: 3431-3440.

[30] Krizhevsky A, Sutskever I, Hinton G E. ImageNet classification with deep convolutional neural networks[C]// Advances in Neural Information Processing Systems, Lake Tahoe, USA, 2012: 1097-1105.

[31] Simonyan K, Zisserman A. Very deep convolutional networks for large-scale image recognition[EB/OL]. 2014, [2014-09-04], https://arxiv.org/abs/1409.1556.

[32] Szegedy C, Liu Wei, Jia Yangqing, et al. Going deeper with convolutions[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, USA, 2015: 1-9.

[33] Xie Saining, Girshick R, Dollár P, et al. Aggregated residual transformations for deep neural networks[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, USA, 2017: 1492-1500.

[34] Ioffe S, Szegedy C. Batch normalization: Accelerating deep network training by reducing internal covariate shift[C]// International Conference on Machine Learning, Lille, France, 2015: 448-456.

[35] Ba J L, Kiros J R, Hinton G E. Layer normalization[EB/OL]. 2016, [2016-07-21], https://arxiv.org/abs/1607.06450.

[36] Ulyanov D, Vedaldi A, Lempitsky V. Instance normalization: The missing ingredient for fast stylization[EB/OL]. 2016, [2016-07-27], https://arxiv.org/abs/1607.08022.

[37] Wu Yuxin, He Kaiming. Group normalization[C]// Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 2018: 3-19.

Automatic detection model for pest damage symptoms on rice canopy based on improved RetinaNet

Yao Qing1, Gu Jiale1, Lyu Jun1, Guo Longjun1, Tang Jian2※, Yang Baojun2, Xu Weigen3

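(1. School of Information Science and Technology, Zhejiang Sci-Tech University, Hangzhou 310016, China; 2. State Key Laboratory of Rice Biology, China National Rice Research Institute, Hangzhou 311400, China; 3. Zhejiang Provincial General Station of Plant Protection, Quarantine and Pesticide Management, Hangzhou 310020, China)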

In China, current field surveys of pest damage symptoms on the rice canopy rely mainly on forecasting technicians, who estimate the sizes and numbers of damage symptoms by visual inspection in order to assess the damage level of pests in paddy fields. This manual survey method is subjective, time-consuming, and labor-intensive. In this study, an improved RetinaNet model was proposed to automatically detect the damage symptom regions of two pests (Cnaphalocrocis medinalis and Chilo suppressalis) on the rice canopy. The model was composed of one ResNeXt network, an improved feature pyramid network, and two fully convolutional networks (a classification subnet and a regression subnet). ResNeXt101 and Group Normalization were used as the feature extraction network and the normalization method, respectively. The feature pyramid network was improved to achieve a higher detection rate of pest damage symptoms, and the focal loss function was adopted. All images were divided into a training set and a testing set. The training images were augmented by horizontal flipping, contrast enhancement, and the addition of Gaussian noise to prevent overfitting. The damage symptom regions in the training images were manually labeled with the labeling tool LabelImg. Six RetinaNet models, based respectively on VGG16, ResNet101, ResNeXt101, data augmentation, the improved feature pyramid network, and different normalization methods, were developed and trained on the training set, and were tested on the same testing set. Precision-recall curves, average precisions, and mean average precisions of the six models were calculated to evaluate their detection of pest damage symptoms. All models were trained and tested under the deep learning framework PyTorch on the operating system Ubuntu 16.04. The precision-recall curves showed that the improved RetinaNet model achieved higher precision at the same recall than the other five models. The mean average precision of the model based on ResNeXt101 was 12.37% higher than that of the model based on VGG16 and 0.95% higher than that of the model based on ResNet101, meaning that ResNeXt101 extracted the features of pest damage symptoms on the rice canopy more effectively than VGG16 and ResNet101. The average precision of the model based on the improved feature pyramid network increased by 4.93% in the detection of Cnaphalocrocis medinalis damage symptoms, and the mean average precision increased by 3.36% in the detection of the damage symptoms of the two pests. After data augmentation, the mean average precision of the improved model increased by 9.13%, meaning that data augmentation significantly improved the generalization ability of the model. The improved RetinaNet model based on ResNeXt101, the improved feature pyramid network, group normalization, and data augmentation achieved an average precision of 95.65% in the detection of Cnaphalocrocis medinalis damage symptoms and 91.87% in the detection of Chilo suppressalis damage symptoms. The mean average precision of damage symptom detection for the two pests reached 93.76%. These results showed that the improved RetinaNet model improved the detection accuracy and robustness for pest damage symptoms on the rice canopy. It took 0.56 s on average to detect one image using the improved RetinaNet model, which could meet the requirements of real-time detection of pest damage symptoms on the rice canopy. The improved RetinaNet model and its results would provide field survey data for forecasting the damage symptoms of Cnaphalocrocis medinalis and Chilo suppressalis on the rice canopy. It could be applied to precision pesticide spraying and pest damage symptom patrols by unmanned aerial vehicles, and would help realize intelligent forecasting and monitoring of rice pests, reduce manpower costs, and improve the efficiency and accuracy of field surveys of pest damage symptoms on the rice canopy.

image processing; algorithms; automatic testing; rice canopy; damage symptom image; Cnaphalocrocis medinalis; Chilo suppressalis; RetinaNet model

Yao Qing, Gu Jiale, Lyu Jun, et al. Automatic detection model for pest damage symptoms on rice canopy based on improved RetinaNet[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2020, 36(15): 182-188. (in Chinese with English abstract) doi:10.11975/j.issn.1002-6819.2020.15.023 http://www.tcsae.org

2019-12-13

2020-04-07

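National "863" Program of China (2013AA102402); Zhejiang Provincial Public Welfare Project (LGN18C140007); Natural Science Foundation of Zhejiang Province (Y20C140024)

Yao Qing, PhD, Professor, research interests: image processing and intelligent diagnosis of agricultural diseases and pests. Email: q-yao@zstu.edu.cn

Tang Jian, Research Fellow, research interests: intelligent forecasting technology for agricultural diseases and pests. Email: tangjian@caas.cn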

10.11975/j.issn.1002-6819.2020.15.023

TP391

A

1002-6819(2020)-15-0182-07