Adaptive Application Offloading Decision and Transmission Scheduling for Mobile Cloud Computing

Junyi Wang, Jie Peng, Yanheng Wei, Didi Liu, Jielin Fu

1 Guangxi Key Lab of Wireless Wideband Communication & Signal Processing, Guilin 541004, China

2 Shanghai Astronomical Observatory, Chinese Academy of Sciences, Shanghai 200000, China

3 School of Telecommunication Engineering Xi’an University, Xi’an 710049, China

4 Sci. and Tech. on Info. Transmission and Dissemination in Communication Networks Lab., Shijiazhuang 050000, China

* Corresponding author, email: fujielin@gmail.com

I. INTRODUCTION

As smart mobile devices gain enormous popularity, more and more new mobile applications, such as face recognition, large-scale 3D games and augmented reality, are emerging and attracting great attention [1, 2]. These applications are typically user-interactive and resource-hungry: they require fast responses and consume much energy. Although hardware technology has advanced in recent years, mobile devices remain resource-constrained, with limited computation resources and limited battery lifetime due to their physical size. The tension between resource-hungry applications and resource-constrained mobile devices therefore poses a significant challenge for future mobile platform development [3].

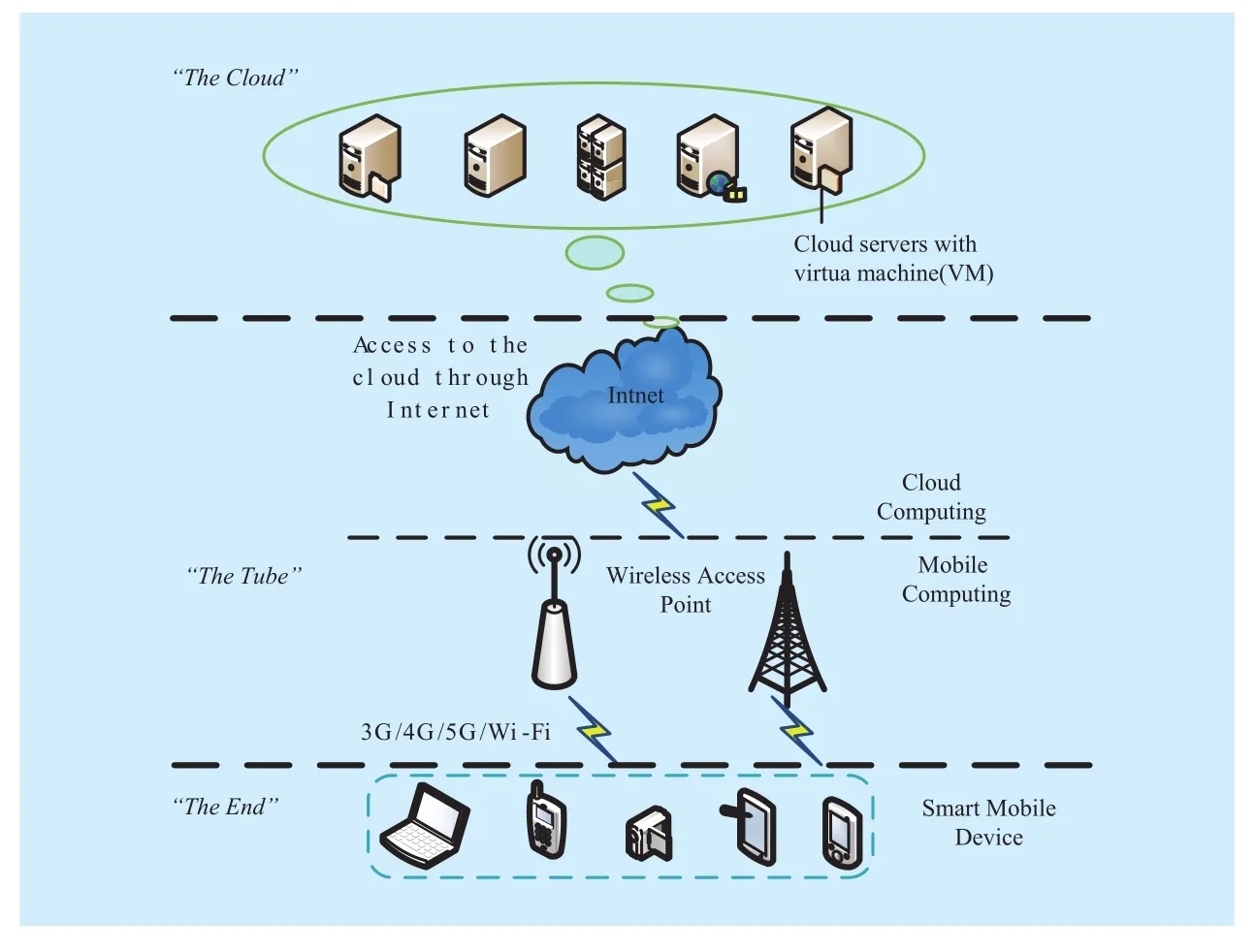

With the rapid deployment of broadband wireless networks, the newly emerging mobile cloud computing [4] is envisioned as a promising solution to this tension. Mobile cloud computing integrates cloud computing into the mobile environment: mobile devices access cloud resources through wireless access technologies together with the Internet [5]. In general, mobile cloud computing consists of three parts: ‘the end’, ‘the tube’ and ‘the cloud’ [6]. As illustrated in Figure 1, vendors (such as Amazon Elastic Compute Cloud (EC2), Amazon Virtual Private Cloud (VPC) and PacHosting) provide computing resources (such as servers, storage and software) to registered users. A mobile device can thus remotely offload applications that it cannot run, or that would be costly to run locally, to an appropriate cloud [7]. Unlike cloud computing in wired networks, however, mobile cloud computing faces mobile-specific challenges arising from the unique characteristics of mobile networks, which have severe resource constraints (such as limited bandwidth and communication latency) and vary frequently [8]. When a large amount of data is transferred over a wireless network, the network delay may increase significantly and become intolerable. An adaptive application offloading decision and wireless transmission schedule are therefore needed for the mobile device to decide whether to offload an application task and, if so, which cloud to choose.

In this paper, we design and implement an adaptive application offloading policy for multiple offloading target clouds.

Many research efforts have been dedicated to offloading data- or computation-intensive programs from resource-poor mobile devices to optimize application execution cost (energy and response time). For energy saving, [9] proposed a dynamic offloading algorithm based on Lyapunov optimization theory to identify offloading components, without considering the impact of the varying wireless channel on the offloading algorithm. [10] investigated a theoretical framework and derived a threshold policy for energy-optimal mobile cloud computing under a stochastic wireless channel; however, their work did not evaluate performance on real computation-intensive applications. For response time saving, [11] implemented and evaluated mechanisms for offloading computationally intensive Java programs to remote servers using the Ibis middleware. Because their work depends on a specific software package, their methods cannot easily be extended to other execution environments. To the best of our knowledge, only a few works address energy and response time at the same time. Among them, [12] proposed an offloading framework that considers response time and energy consumption simultaneously and analyzed numerical results; however, no theoretical analysis of their offloading model was given. Building on these studies, we develop a broadly applicable offloading model that optimizes both key performance indexes at the same time. The offloading problem is solved using Lyapunov optimization, and its performance is analyzed.

Specifically, for stochastic application workloads, which mainly depend on user needs, we develop a customizable cost model that allows the mobile user to adjust the weights of application response time and energy consumption according to battery lifetime, wireless channel conditions, mobile CPU speed, cloud resources and so on. The execution targets include multiple clouds that can offer different resources to the mobile device on any slot t, which improves the execution efficiency of real-time applications. In mobile cloud computing, a mobile device can access different clouds through different wireless access networks, such as 3G/4G/5G and Wi-Fi. Based on the customizable cost model, we propose an adaptive offloading policy (comprising an offloading decision and transmission scheduling) that determines whether to offload an application remotely and which cloud to choose so as to decrease application execution cost. The main results and contributions of this paper are summarized as follows:

Fig. 1 A basic framework of the mobile cloud computing

● General offloading model formulation: We formulate an offloading model for stochastic applications (i.e., both the input computation data size and the computation size of an application are stochastic) under a general network environment, taking into account both the response time and the energy consumption of applications. The model has good universality and scalability.

● Adaptive offloading decision and transmission scheduling scheme: Based on Lyapunov optimization theory, we obtain an optimal offloading policy with low complexity, and we analyze its performance.

● Application offloading reference: We run several simulation experiments based on real network settings. The results show that, compared with executing all applications locally on the mobile device, the mobile device saves 68.557% of the average response time and 67.095% of the average energy consumption. Moreover, the statistics of application offloading probability can serve as a reference for real application offloading on mobile devices, reducing the overhead of the offloading policy itself.

The rest of this paper is organized as follows. Section II describes related works. Section III gives the problem statement and model formulation. Section IV details the implementation of dynamic offloading. Section V presents the performance analysis of the offloading policy. Section VI shows the simulation results and numerical analysis, and Section VII concludes the paper.

II. RELATED WORKS

Many research works have investigated offloading data- or computation-intensive programs from resource-poor mobile devices. These works focus on different factors (such as bandwidth and routing) and different objectives (such as energy consumption and response time). According to their research goals, they can be roughly classified into three categories.

1) Works on energy saving: Extending battery lifetime is one of the most crucial design objectives for mobile devices because of their limited battery capacity. [13] presented a taxonomy study of energy-aware high performance computing and built an energy model to approximate the energy consumption of offloading. [14] studied the feasibility of mobile computation offloading, giving a method to evaluate the costs of both off-clones and back-clones in terms of bandwidth and energy consumption. [15] proposed an encoding scheme for mobile cloud computing networks that achieves lower energy consumption by lowering the power consumption of the CPU and the wireless network interface card. [16, 17] studied novel routing methods that transmit data effectively to optimize nodes' energy. All of the above works aim at maximizing the battery lifetime of mobile devices.

2) Works on response time saving: Application responsiveness is important, especially for real-time and user-interactive applications. [18] developed an offloading middleware that provides a runtime offloading service to improve the responsiveness of mobile devices. [19] used an exhaustive search algorithm that examines all possible application partitions to find an optimal offloading partition; such partitioning methods perform well for small applications. [20] proposed an effective virtual machine placement algorithm to reduce cloud service response time in a wireless mesh environment. [21] studied the multi-user computation partitioning problem, which jointly considers partitioning the computations of multiple users and scheduling the offloaded computations on cloud resources. [22]-[24] studied transmission mechanisms and protocols that solve data transfer problems for real-time applications; in mobile cloud computing, fast and effective data transmission is important. All of these works aim to minimize the average application completion time on mobile devices.

3) Works on energy and time saving: Energy consumption and response time are the two key performance indexes of mobile applications. However, only a few works have addressed both simultaneously. [25] implemented a framework named ThinkAir, which lets developers easily migrate their application workloads to the cloud. [26] proposed a game-theoretic approach that achieves efficient computation offloading to optimize execution costs.

All these works study energy consumption and response time in mobile cloud computing in different ways and provide important references for our work. Building on them, we develop our adaptive application offloading model from a different perspective.

III. MODEL FORMULATION

In this paper, we investigate an adaptive application offloading policy for mobile cloud computing, in which a mobile device can offload compute-intensive applications to one of multiple available clouds through various available wireless networks. The key characteristics of the offloading model and its formulation are described below.

3.1 Network description

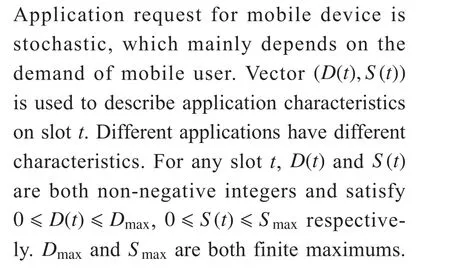

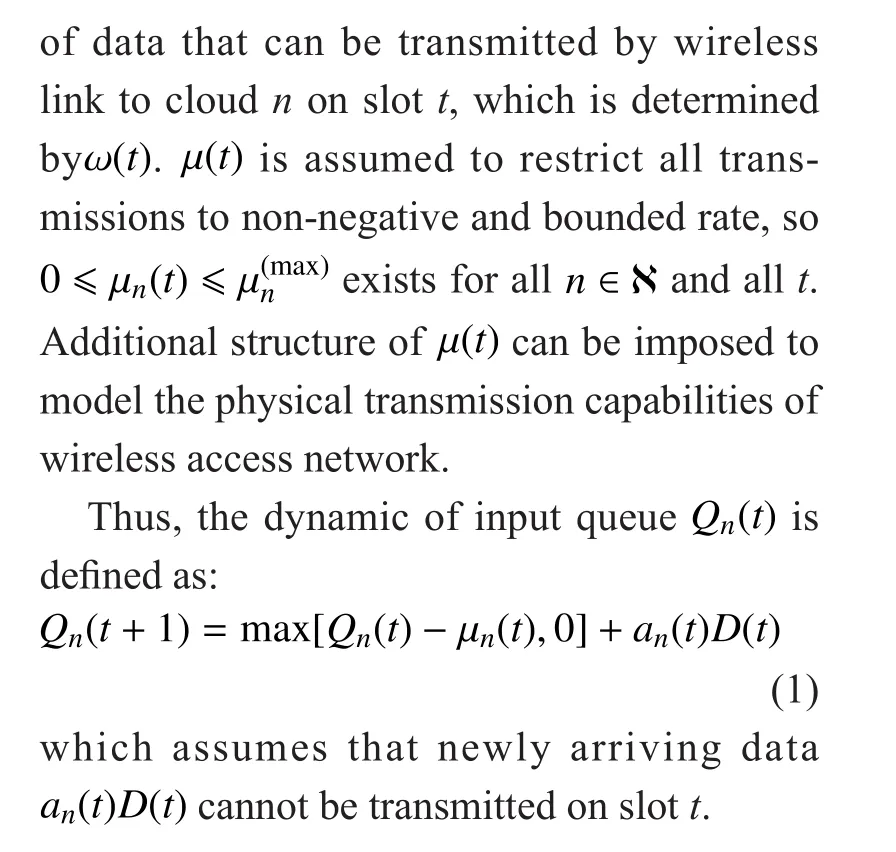

The network scenario, illustrated in Figure 2, contains one mobile device and multiple target clouds. To simplify the offloading model, we divide time into slots of fixed size. We assume that a 3G/4G/5G network is available at all locations, while the availability of Wi-Fi depends on location. The data transmission rate of each wireless network changes from slot to slot. When an application execution request arrives on the mobile device on slot t, the controller on the mobile device must decide whether to offload the application to a cloud, based on the current network state, the application itself, the available cloud resources and so on, in order to improve user experience and reduce energy consumption simultaneously. The available clouds form a set indexed by n. If more than one cloud is available on slot t, the controller must further choose the best one. Applications with a small input computation data size (i.e., the program code and data) and a large computation size (i.e., the total number of CPU cycles) are best suited to offloading. Application component partitioning is not considered.
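The slotted network model above can be sketched as follows. This is a minimal illustration, not the authors' simulator: the exponential rate distributions, the Wi-Fi availability probability of 0.7, and the names `SlotState` and `sample_slot_state` are all hypothetical; only the orders of magnitude of the mean rates (10 Kb/s cellular, $10^3$ Kb/s Wi-Fi) are taken from Section VI.

```python
import random
from dataclasses import dataclass
from typing import Dict


@dataclass
class SlotState:
    """Network state seen by the controller at the start of one slot (hypothetical)."""
    rates_kbps: Dict[str, float]  # available access network -> rate in this slot


def sample_slot_state(rng: random.Random,
                      cellular_mean_kbps: float = 10.0,
                      wifi_mean_kbps: float = 1000.0,
                      wifi_availability: float = 0.7) -> SlotState:
    """Draw one slot's network state under assumed distributions.

    The cellular network (3G/4G/5G) is always present, while Wi-Fi is present
    only with probability `wifi_availability`, a stand-in for location
    dependence. Rates are redrawn every slot to model time variation.
    """
    rates = {"cellular": rng.expovariate(1.0 / cellular_mean_kbps)}
    if rng.random() < wifi_availability:
        rates["wifi"] = rng.expovariate(1.0 / wifi_mean_kbps)
    return SlotState(rates_kbps=rates)


if __name__ == "__main__":
    rng = random.Random(1)
    for t in range(3):
        print(t, sample_slot_state(rng).rates_kbps)
```

Redrawing every rate on every slot keeps the sketch memoryless, which matches the per-slot decision making described in Section IV; a trace-driven rate process could replace `expovariate` without changing the interface.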

3.2 Offloading model

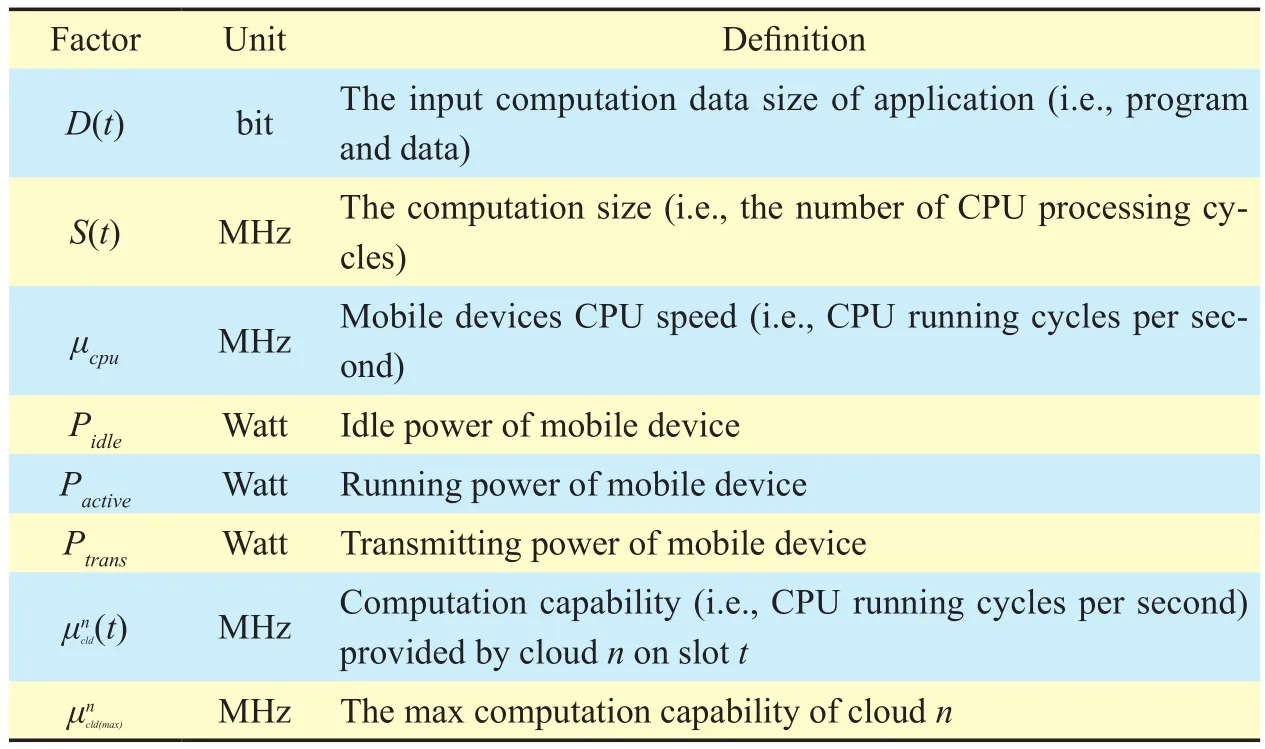

For the convenience of presentation, some parameters are listed in Table I.

3.2.1 Application generation and offloading decision

Fig. 2 Network operation topology for application offloading

Table I Notation table

3.3 Problem formulation

On slot t, the mobile device first needs to estimate the application execution cost (including response time and execution energy). Given the application offloading decision, the execution time is given by:

estimated execution time when the application is executed locally on the mobile device. The first term on the right-hand side of (2) is the offloading response time (including transmission time and running time), and the second term is the response time of local execution. Since an application that is offloaded can be offloaded to only one cloud, the response time is given, depending on the value of the offloading decision vector, either by the first term of (2) if the application is offloaded or by the second term if it is executed locally. Here we neglect the propagation delay of the input code and data. We also ignore the time spent transmitting the result data from the cloud back to the mobile device, because the size of data returning from cloud is always

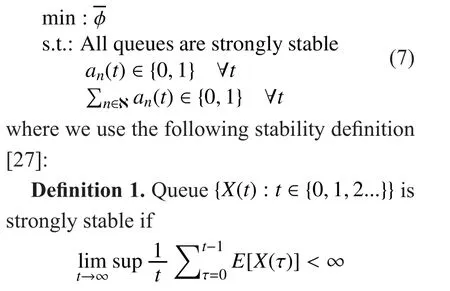
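The two-term structure of (2) can be illustrated with a small sketch. Since equation (2) and Table I are not reproduced here, the forms below are assumptions: transmission time is taken as input data size divided by link rate, running time as CPU cycles divided by the executing processor's clock rate, and the energy terms and the weighted-sum cost with weight `alpha` are hypothetical stand-ins for the paper's customizable cost model. All function and parameter names are illustrative.

```python
from typing import Dict, Optional, Tuple


def execution_cost(data_kbit: float, cycles: float, choice: Optional[str],
                   f_local_hz: float, f_cloud_hz: Dict[str, float],
                   rate_kbps: Dict[str, float], p_run_w: float,
                   p_tx_w: float, p_idle_w: float) -> Tuple[float, float]:
    """Return (response time in s, mobile energy in J) for one decision.

    `choice` is None for local execution, or the name of the single cloud the
    application is offloaded to (the decision vector is one-hot). As in the
    text, propagation delay and result download are ignored.
    """
    if choice is None:
        t_local = cycles / f_local_hz          # local-execution term of (2)
        return t_local, p_run_w * t_local      # CPU busy for the whole run
    t_tx = data_kbit / rate_kbps[choice]       # upload input code and data
    t_run = cycles / f_cloud_hz[choice]        # remote running time
    # Assumption: the device transmits during upload and idles while waiting.
    return t_tx + t_run, p_tx_w * t_tx + p_idle_w * t_run


def weighted_cost(time_s: float, energy_j: float, alpha: float) -> float:
    """Hypothetical weighted-sum cost; alpha trades response time vs energy."""
    return alpha * time_s + (1.0 - alpha) * energy_j
```

Returning time and energy separately keeps the weighting decision in one place, so a user-adjustable weight (the role the text gives to the customizable cost model) only changes `weighted_cost`.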

Based on the problem description and modeling above, the objective is to solve:

Intuitively, this means that a queue is strongly stable if its average backlog is finite.
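For reference, the standard definition of strong stability used in Lyapunov optimization [28] can be written as follows, where $Q_n(t)$ denotes the backlog of queue $n$ on slot $t$ (this symbol is assumed, since the paper's own notation is not reproduced here):

```latex
\limsup_{T \to \infty} \frac{1}{T} \sum_{t=0}^{T-1} \mathbb{E}\{\lvert Q_n(t) \rvert\} < \infty
```

The network as a whole is strongly stable when every queue $n$ satisfies this condition.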

IV. DYNAMIC APPLICATION OFFLOADING POLICY

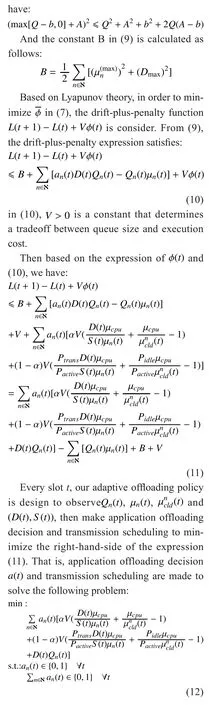

The above problem (7) can be cast as a stochastic network optimization problem. Earlier work [9] used Lyapunov optimization theory to show that queueing naturally fits the application offloading problem. Rather than solving problem (7) directly, which would require a priori knowledge of the application, the queue backlogs and so on, we use Lyapunov optimization theory, which only requires knowledge of the current network state and queue backlogs [28]. This reduces the complexity of solving the offloading problem [9].

4.1 Lyapunov optimization

where we omit the terms on slot t that do not depend on the decision variable $a_n(t)$, such as the constant $B$.

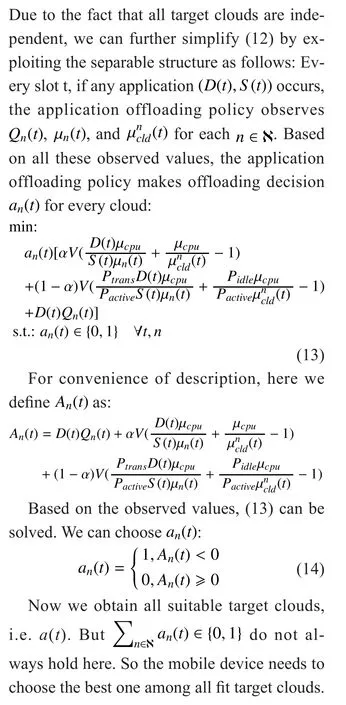

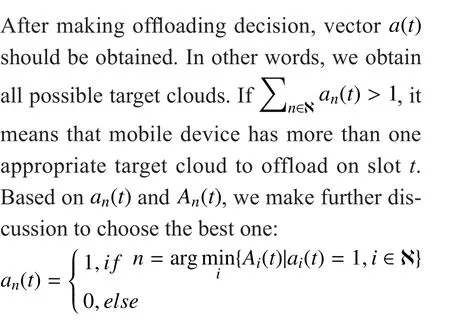
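The standard drift-plus-penalty construction from [28], on which this kind of analysis is built, can be summarized as follows. The notation is generic and assumed rather than taken from the paper's missing equations: $Q_n(t)$ is the backlog of queue $n$, $A_n(t)$ the data admitted to it, $b_n(t)$ the data it can transmit on slot $t$, $c(t)$ the per-slot execution cost and $V$ the control weight.

```latex
\begin{aligned}
Q_n(t+1) &= \max\{Q_n(t) - b_n(t),\, 0\} + A_n(t), \\
L(\mathbf{Q}(t)) &= \tfrac{1}{2}\sum_{n} Q_n(t)^2, \\
\Delta(\mathbf{Q}(t)) &= \mathbb{E}\{L(\mathbf{Q}(t+1)) - L(\mathbf{Q}(t)) \mid \mathbf{Q}(t)\}, \\
\Delta(\mathbf{Q}(t)) + V\,\mathbb{E}\{c(t) \mid \mathbf{Q}(t)\}
  &\le B + V\,\mathbb{E}\{c(t) \mid \mathbf{Q}(t)\}
  + \sum_{n} Q_n(t)\,\mathbb{E}\{A_n(t) - b_n(t) \mid \mathbf{Q}(t)\},
\end{aligned}
```

where $B$ is any constant satisfying $B \ge \tfrac{1}{2}\sum_n \mathbb{E}\{A_n(t)^2 + b_n(t)^2 \mid \mathbf{Q}(t)\}$ for all $t$. The bound follows from $Q_n(t+1)^2 \le Q_n(t)^2 + A_n(t)^2 + b_n(t)^2 + 2Q_n(t)\,(A_n(t) - b_n(t))$. Minimizing the right-hand side greedily on each slot, using only the current state and backlogs, is what removes the need for a priori statistics.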

4.2 Offloading decision

4.3 Transmission scheduling

In summary, with Lyapunov optimization there is no need to obtain future or historical network state values, whose collection may add delay and energy consumption. The complexity of solving the problem depends only on the structure of the decision space (the set of all possible decision variables). The offloading decision set in this paper contains a finite number of possible control actions, so the offloading policy can simply evaluate the objective for each option and choose the best one. The Lyapunov technique therefore has low complexity for this kind of problem.
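Because the decision set is finite, the per-slot decision reduces to scoring each option and taking the minimum. The sketch below is a hypothetical drift-plus-penalty rule in the spirit of Section IV, not the paper's exact objective (whose equations are not reproduced here): local execution scores $V$ times its cost, offloading to cloud $n$ scores $V$ times its cost plus $Q_n(t)$ times the admitted data, and queues then follow $Q_n(t+1)=\max\{Q_n(t)-b_n(t),0\}+A_n(t)$. The names `choose_action` and `update_queues` and the cost values in the example are illustrative.

```python
from typing import Dict, Optional, Tuple


def choose_action(queues_kbit: Dict[str, float], data_kbit: float,
                  cost_local: float, cost_offload: Dict[str, float],
                  v: float) -> Tuple[Optional[str], float]:
    """Pick the option with the smallest drift-plus-penalty score.

    Local execution admits nothing to any queue, so its score is V * cost.
    Offloading to cloud n admits data_kbit to queue n, so its score is
    V * cost_n + Q_n * data_kbit. Only clouds available on this slot (the keys
    of cost_offload) are considered; None means "execute locally".
    """
    best_choice: Optional[str] = None
    best_score = v * cost_local
    for cloud, cost in cost_offload.items():
        score = v * cost + queues_kbit[cloud] * data_kbit
        if score < best_score:
            best_choice, best_score = cloud, score
    return best_choice, best_score


def update_queues(queues_kbit: Dict[str, float], choice: Optional[str],
                  data_kbit: float,
                  served_kbit: Dict[str, float]) -> Dict[str, float]:
    """Apply Q_n(t+1) = max(Q_n(t) - b_n(t), 0) + A_n(t) to every queue."""
    updated = {}
    for cloud, backlog in queues_kbit.items():
        arrival = data_kbit if cloud == choice else 0.0
        updated[cloud] = max(backlog - served_kbit.get(cloud, 0.0), 0.0) + arrival
    return updated


if __name__ == "__main__":
    queues = {"cloud0": 0.0, "cloud1": 0.0}
    # Hypothetical per-option costs for one slot.
    choice, _ = choose_action(queues, 800.0, 10.0,
                              {"cloud0": 82.6, "cloud1": 6.4}, v=0.98)
    print(choice)  # cloud1
    queues = update_queues(queues, choice, 800.0, {"cloud0": 0.0, "cloud1": 0.0})
    print(queues)  # {'cloud0': 0.0, 'cloud1': 800.0}
```

With empty queues the cheapest cloud wins; once queue 1 holds any backlog, the $Q_n(t)$ term in this example outweighs the cost advantage and pushes the decision back toward local execution, which is how the rule keeps the queues stable.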

V. PERFORMANCE ANALYSIS

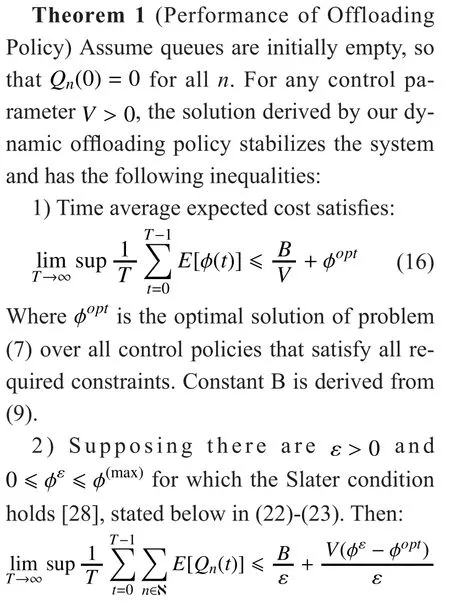

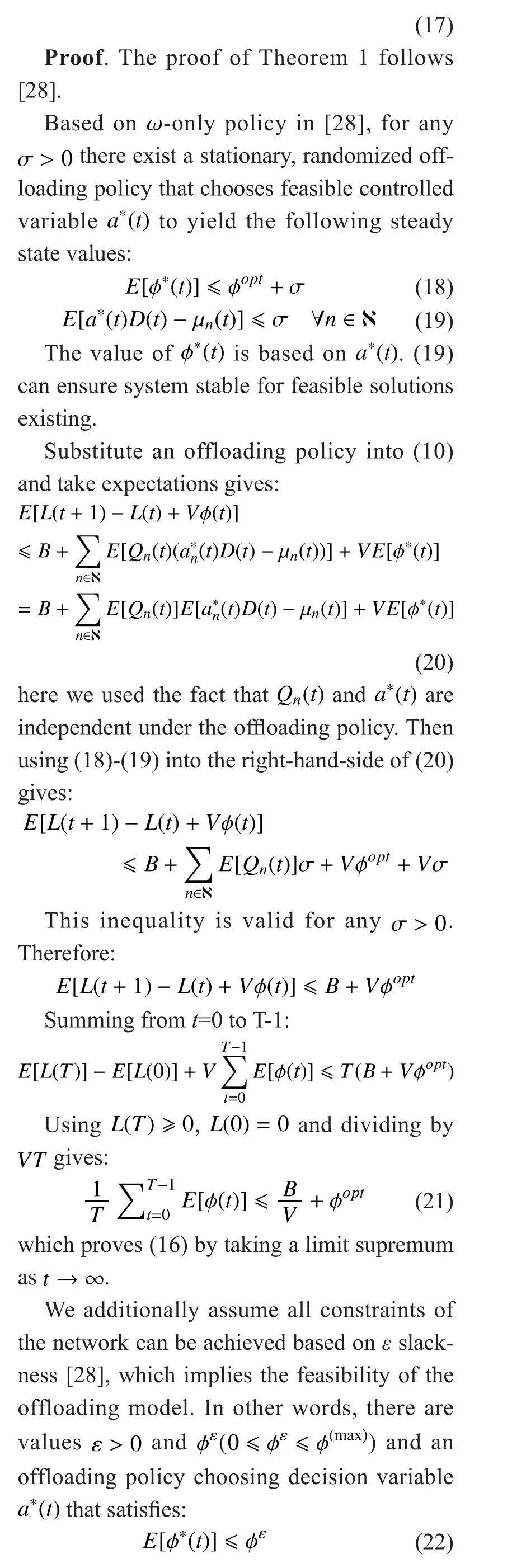

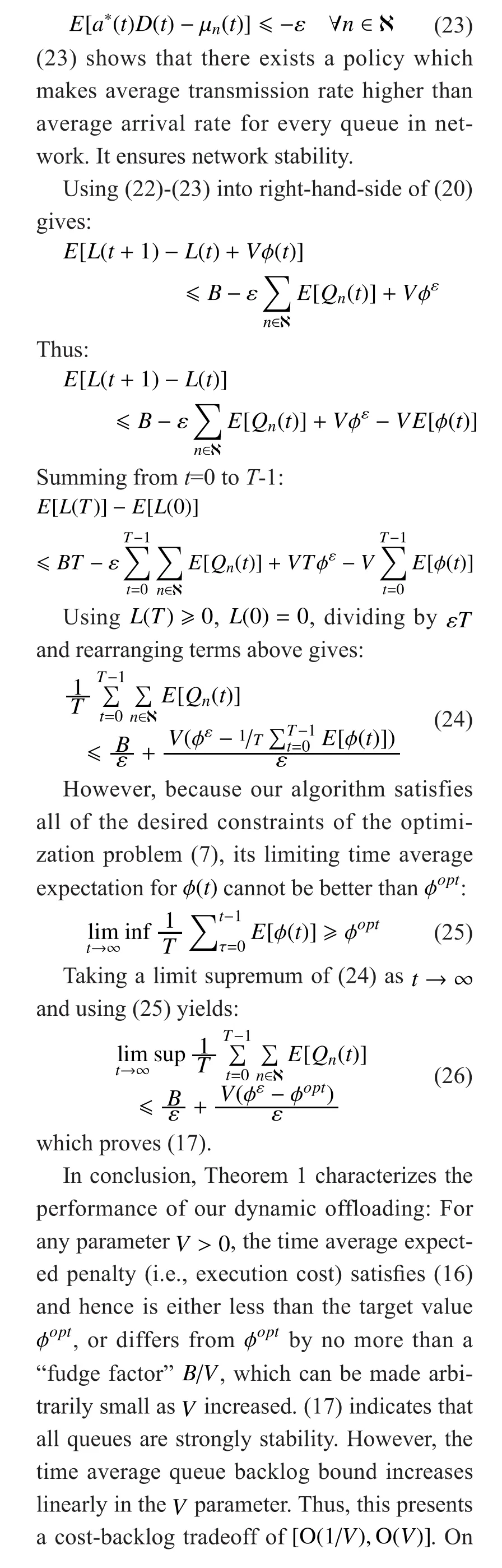

Based on Lyapunov optimization theory, the following theorem details the performance analysis of the adaptive application offloading policy.

On the other hand, it can also be seen that our offloading policy maintains the stability of the queues while driving the time-average cost close to its optimum value. These results confirm the effectiveness of our application offloading policy. In the next section, we further verify the offloading policy by simulation.
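Since the theorem itself is not reproduced here, the general form of the bounds that drift-plus-penalty policies achieve under the usual i.i.d. and slack assumptions [28] is recalled below for orientation. The symbols are generic assumptions: $c(t)$ is the per-slot cost, $c^{*}$ the optimal time-average cost, $B$ the drift constant and $V$ the control weight.

```latex
\begin{aligned}
\limsup_{T\to\infty} \frac{1}{T}\sum_{t=0}^{T-1} \mathbb{E}\{c(t)\} &\le c^{*} + \frac{B}{V}, \\
\limsup_{T\to\infty} \frac{1}{T}\sum_{t=0}^{T-1} \sum_{n} \mathbb{E}\{Q_n(t)\} &= O(V).
\end{aligned}
```

A larger $V$ tightens the cost gap at the price of longer queues, which is the $[O(1/V), O(V)]$ trade-off typical of this technique.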

VI. SIMULATION RESULTS

In this section, we conduct several experiments in OMNeT++ to evaluate our offloading policy. The simulation settings are as follows. There are one mobile device and two remote clouds in a wireless network scenario similar to Figure 2. The mobile device is equipped with a 1-GHz processor and an IEEE 802.11 b/g Wi-Fi interface (taking the HTC Nexus One as an example). Its idle power, running power and transmitting power are 0.886 W, 1.539 W and 2.262 W, respectively. Each cloud is simulated by a resource pool [12]: cloud 0 contains 1000 concurrent servers, each with a 3.9-GHz Intel processor, and cloud 1 contains 10 concurrent servers, each with a 1.8-GHz Intel processor. For wireless access, 3G and Wi-Fi networks are available throughout the network. To distinguish the two, the mobile device offloads applications to cloud 0 only through the 3G network and to cloud 1 only through the Wi-Fi network. The transmission rates of 3G and Wi-Fi are on the order of 10 Kb/s and $10^3$ Kb/s, respectively [9]. The real-time applications generated by the mobile device are modeled as a random process; their input computation data size and computation size are on the order of $10^2$ KB and 10 GHz, respectively. The control weights are V = 0.98 and α = 0.55. These settings are adopted only for exposition; the analysis in the previous section does not depend on them.
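A back-of-the-envelope calculation with these settings helps explain the results below. It assumes that a computation size of order 10 GHz means $10^{10}$ CPU cycles, that 1 KB = 8 Kb (so $10^2$ KB = 800 Kb), and that energy is power multiplied by duration; these interpretations are ours, not stated in the setup.

```latex
\begin{aligned}
T_{\text{local}} &= \frac{10^{10}\ \text{cycles}}{10^{9}\ \text{Hz}} = 10\ \text{s}, &
E_{\text{local}} &= 1.539\ \text{W} \times 10\ \text{s} = 15.39\ \text{J}, \\
T_{\text{tx}}^{\text{Wi-Fi}} &= \frac{800\ \text{Kb}}{10^{3}\ \text{Kb/s}} = 0.8\ \text{s}, &
E_{\text{tx}}^{\text{Wi-Fi}} &= 2.262\ \text{W} \times 0.8\ \text{s} \approx 1.81\ \text{J}, \\
T_{\text{tx}}^{\text{3G}} &= \frac{800\ \text{Kb}}{10\ \text{Kb/s}} = 80\ \text{s}, &
E_{\text{tx}}^{\text{3G}} &= 2.262\ \text{W} \times 80\ \text{s} = 180.96\ \text{J}, \\
T_{\text{run}}^{\text{cloud 1}} &= \frac{10^{10}}{1.8 \times 10^{9}} \approx 5.56\ \text{s}, &
T_{\text{run}}^{\text{cloud 0}} &= \frac{10^{10}}{3.9 \times 10^{9}} \approx 2.56\ \text{s}.
\end{aligned}
```

Under these assumptions, offloading to cloud 1 over Wi-Fi takes about 0.8 + 5.56 ≈ 6.36 s versus 10 s locally, while the 3G upload alone (80 s) far exceeds local execution time even though cloud 0 is faster. This is consistent with the observation in Section 6.1 that far more applications are offloaded through Wi-Fi than through 3G.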

6.1 Performance evaluation of adaptive offloading policy

To gain insight into our offloading policy, we compare it with a baseline scheme in which all applications are executed locally on the mobile device (i.e., no offloading and no scheduling). For convenience, this baseline is called the ‘L scheme’.

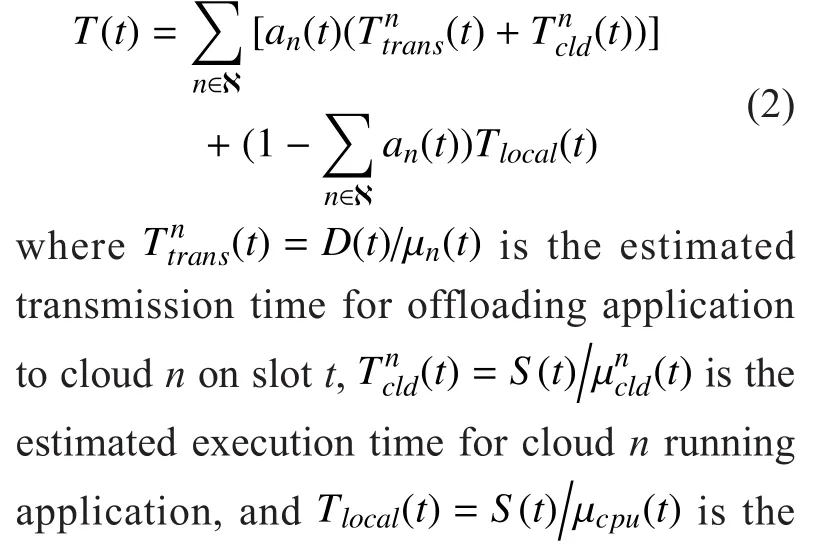

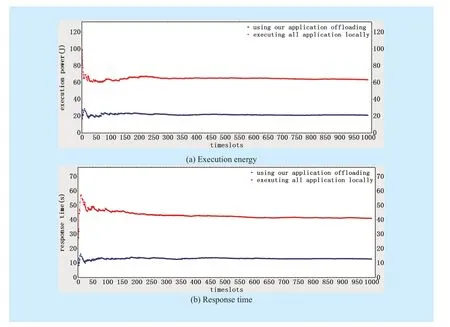

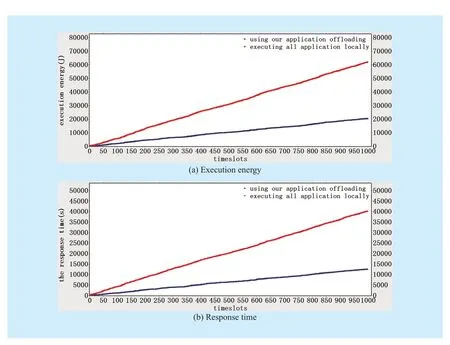

We first compare the mobile device’s execution cost under the offloading policy and the L scheme. Figure 3(a) and Figure 3(b) compare the time-averaged energy consumption and response time, respectively. The offloading policy saves about 68.557% of the average response time and 67.095% of the average energy, which shows that it is effective.

Figure 4(a) and Figure 4(b) further compare the time-accumulated energy consumption and response time, respectively, between the offloading policy and the L scheme. They clearly show that our offloading policy can extend the battery lifetime of the mobile device and improve the mobile user experience at the same time. In summary, application offloading is indeed helpful for resource-limited mobile devices running sophisticated real-time applications.

We next show the impact of the offloading policy on queue backlogs in the above wireless network scenario. On every slot t, the input computation data of an application to be uploaded to cloud 0 is buffered in queue 0; similarly, the input computation data of an application to be uploaded to cloud 1 is buffered in queue 1. Figure 5(a) and Figure 5(b) show the dynamics of the backlogs of queue 0 and queue 1, respectively. Both queue lengths remain finite over all slots, which indicates that the queues are stable (i.e., the network is stable). Meanwhile, the simulation statistics show that 26 applications in total are offloaded to cloud 0 and 814 to cloud 1, i.e., most applications are sent through the Wi-Fi network. This indicates that the cost of migrating input computation data between the mobile device and the clouds over wireless links dominates the overall execution cost. These results further confirm the effectiveness of our offloading decision algorithm and transmission scheduling scheme.

6.2 Offloading reference for various kinds of application

Fig. 3 Comparison of time-average execution cost between our offloading and L scheme

Fig. 4 Comparison of time-accumulated execution cost between our offloading and L scheme

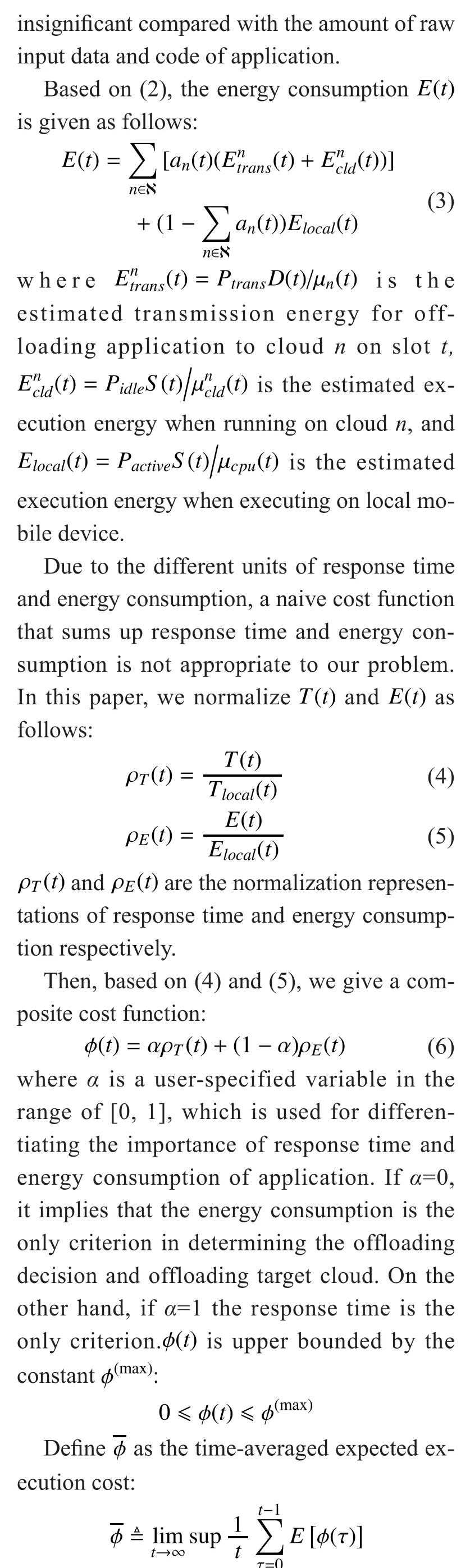

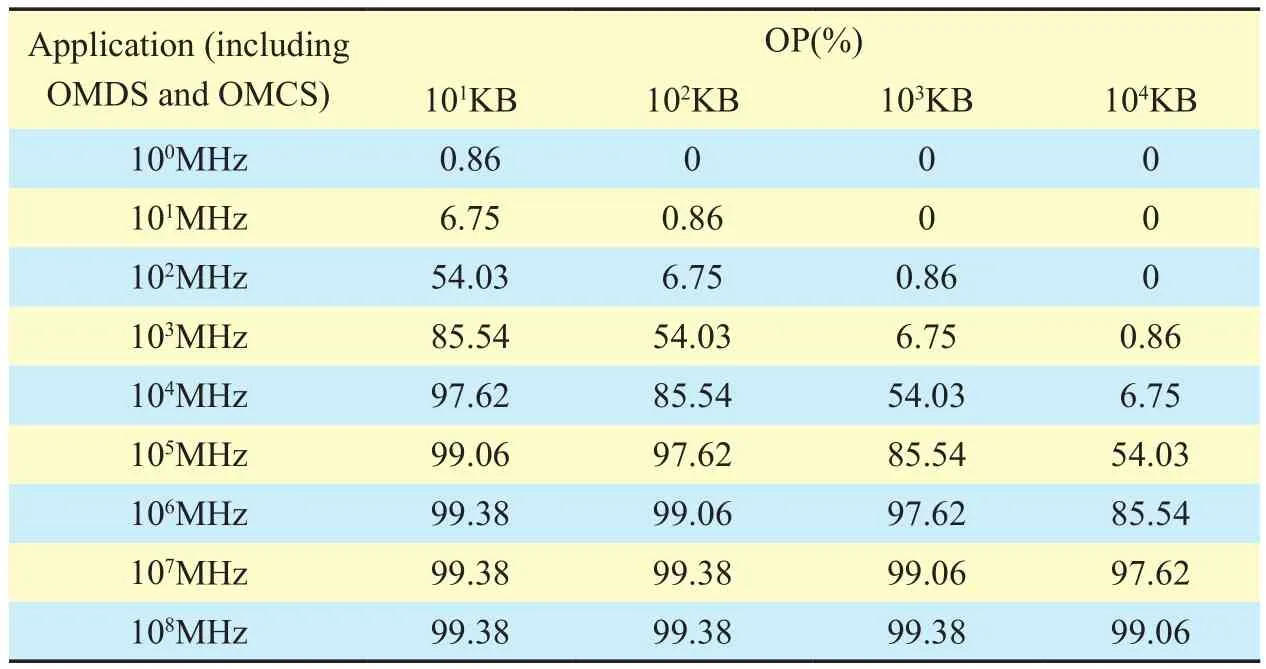

Table II Offloading probability for all kinds of applications under our network environment

The numerical results above demonstrate that our offloading policy is effective for the kind of application whose average offloading probability is 85.54% (obtained from the above simulation). To investigate the behavior of the adaptive offloading policy for general applications, we next run simulations for different applications. The results are summarized in Table II, which uses the following abbreviations: OMDS, the order of magnitude of data size; OP, the offloading probability of an application; and OMCS, the order of magnitude of computation size.

From Table II, we can draw three conclusions. (1) For a fixed input computation data size, the offloading probability increases with the computation size. This is because, as the computation size increases, the mobile device prefers to use cloud computing via computation offloading to mitigate the heavy cost of local computing. (2) For a fixed computation size, the offloading probability decreases as the input computation data size increases, because a larger data size incurs a higher overhead for offloading via wireless communication. (3) When the ratio of the computation size to the input computation data size is no less than $10^4$ (where the offloading probability is 54.03%), the proposed offloading policy works well, since, according to the theoretical analysis above, a low offloading probability indicates a poorly performing offloading policy.

In fact, these statistical results can help the implementation of offloading software on mobile devices while reducing the running overhead of offloading itself. When programmers design an offloading program, they can embed statistics collected under various network environments, similar to Table II, into the program. Then, when the mobile device needs to handle a real-time application, these statistics provide a reference for the offloading decision, reducing the overhead of running the offloading policy itself.
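The table-driven shortcut described above can be sketched as a lookup keyed by the orders of magnitude of data size and computation size. The sketch is hypothetical: the function names, the 0.5 threshold, the choice of KB and GHz as the units behind OMDS and OMCS, and the fallback behavior are assumptions. The only table entry used (85.54% for the Section VI workload of about $10^2$ KB and 10 GHz) comes from this paper; a real deployment would fill the table with statistics like those in Table II.

```python
import math
from typing import Dict, Optional, Tuple

# (OMDS, OMCS) -> measured offloading probability; filled from statistics
# such as Table II (hypothetical structure).
OffloadTable = Dict[Tuple[int, int], float]


def order_of_magnitude(x: float) -> int:
    """Integer order of magnitude of a positive value, e.g. 250 -> 2."""
    return math.floor(math.log10(x))


def reference_hint(table: OffloadTable, data_kb: float, computation_ghz: float,
                   threshold: float = 0.5) -> Optional[bool]:
    """Return a quick offload hint from precomputed statistics.

    True/False when the table has an entry for this application class;
    None when it does not, meaning the full per-slot policy should run.
    """
    key = (order_of_magnitude(data_kb), order_of_magnitude(computation_ghz))
    probability = table.get(key)
    if probability is None:
        return None
    return probability >= threshold


if __name__ == "__main__":
    table: OffloadTable = {(2, 1): 0.8554}  # Section VI workload
    print(reference_hint(table, data_kb=100.0, computation_ghz=10.0))   # True
    print(reference_hint(table, data_kb=5000.0, computation_ghz=10.0))  # None
```

Returning `None` rather than guessing for unseen application classes keeps the shortcut conservative: the table only short-circuits decisions it has statistics for.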

VII. CONCLUSIONS

Saving energy and responding quickly are becoming increasingly important in mobile computing, owing to the challenge of running computationally intensive applications on resource-constrained mobile devices. In this paper, we have designed and implemented an adaptive application offloading policy. Unlike previous works, which consider only one target cloud, our offloading policy supports multiple offloading target clouds. Numerical results show that the mobile device can save about 68.557% of the average response time and 67.095% of the average energy consumption.

Our future work includes three aspects. First, we will implement an offloading policy for multiple mobile devices and target clouds in mobile cloud computing. Second, we plan to investigate application partitioning schemes for some special applications. Finally, we will study the overhead caused by the offloading policy itself.

ACKNOWLEDGEMENTS

The authors would like to thank the reviewers for their detailed reviews and constructive comments, which have helped to improve the quality of this paper. This work was supported by the National Natural Science Foundation of China (Grant No. 61261017, No. 61571143 and No. 61561014); Guangxi Natural Science Foundation (2013GXNSFAA019334 and 2014GXNSFAA118387); Key Laboratory of Cognitive Radio and Information Processing, Ministry of Education (No. CRKL150112); Guangxi Key Lab of Wireless Wideband Communication & Signal Processing (GXKL0614202, GXKL0614101 and GXKL061501); Sci. and Tech. on Info. Transmission and Dissemination in Communication Networks Lab (No. ITD-U14008/KX142600015); and Graduate Student Research Innovation Project of Guilin University of Electronic Technology (YJCXS201523).

[1] T Soyata, R Muraleedharan, C Funai, et al., “Cloud-vision: Real-time face recognition using a mobile-cloudlet-cloud acceleration architecture”, Proceedings of Symposium on Computers and Communications, pp. 59-66, 2012.

[2] J Cohen, “Embedded speech recognition applications in mobile phones: Status, trends, and challenges”, Proceedings of IEEE International Conference on Acoustics, Speech and Signal Processing, pp. 5352-5355, 2008.

[3] E Cuervo, A Balasubramanian, D Cho, et al., “MAUI: Making smartphones last longer with code offload”, Proceedings of the 8th International Conference on Mobile Systems, Applications and Services, pp. 49-62, 2010.

[4] C Gallen, “Mobile cloud computing subscribers to total nearly one billion by 2014”, http://www.abiresearche.com/press/1484-Mobile+Cloud+-Computing+Subscribers+to+Total+Nearly+One+Billion+by+2014.

[5] L Lei, Z.D Zhong, K Zheng, et al., “Challenges on wireless heterogeneous networks for mobile cloud computing”, IEEE Wireless Communications, vol. 20, no. 3, pp. 34-44, 2013.

[6] J Zhao, “How to embrace mobile cloud computing for operators”, http://www.cnii.com.cn/incloud/2015-02/12/content_1533469.htm.

[7] Y Lu, S.P Li, H.F Shen, et al., “Virtualized screen: A third element for cloud-mobile convergence”, IEEE MultiMedia, vol. 18, no. 2, pp. 4-11, 2011.

[8] H.T Dinh, C Lee, D Niyato, “A survey of mobile cloud computing: Architecture, applications, and approaches”, Wireless Communications and Mobile Computing, vol. 13, no. 18, pp. 1587-1611, 2013.

[9] D Huang, P Wang, D Niyato, “A dynamic offloading algorithm for mobile computing”, IEEE Transactions on Wireless Communications, vol. 11, no. 6, pp. 1991-1995, 2012.

[10] W.W Zhang, Y.G Wen, K Guan, et al., “Energy-optimal mobile cloud computing under stochastic wireless channel”, IEEE Transactions on Wireless Communications, vol. 12, no. 9, pp. 4569-4581, 2013.

[11] R Kemp, N Palmer, T Kielmann, et al., “Eyedentify: Multimedia cyber foraging from a smartphone”, Proceedings of the 11th IEEE International Symposium on Multimedia, pp. 14-16, 2009.

[12] Y.D Lin, T Edward, Y.C Lai, et al., “Time-and-energy aware computation offloading in handheld devices to coprocessors and clouds”, IEEE Systems Journal, vol. 9, no. 2, pp. 393-405, 2015.

[13] C Cai, L Wang, S U Khan, et al., “Energy-aware high performance computing: A taxonomy study”, Proceedings of the 17th IEEE International Conference on Parallel and Distributed Systems, pp. 7-9, 2011.

[14] M V Barbera, S Kosta, A Mei, et al., “To offload or not to offload? The bandwidth and energy costs of mobile cloud computing”, Proceedings of IEEE INFOCOM, pp. 14-19, 2013.

[15] E Boyun, L Hyunwoo, L Choonhwa, “Power-aware remote display protocol for mobile cloud”, Proceedings of International Conference on Information Science and Applications (ICISA), pp. 1-3, 2014.

[16] D.G Zhang, K Zheng, T Zhang, “A novel multicast routing method with minimum transmission for WSN of cloud computing service”, Soft Computing, vol. 19, no. 7, pp. 1817-1827, 2015.

[17] D Zhang, G Li, K Zheng, “An energy-balanced routing method based on forward-aware factor for wireless sensor network”, IEEE Transactions on Industrial Informatics, vol. 10, no. 1, pp. 766-773, 2014.

[18] S Ou, K Yang, J Zhang, “An effective offloading middleware for pervasive services on mobile devices”, Pervasive and Mobile Computing, vol. 3, no. 4, pp. 362-385, 2007.

[19] I Giurgiu, O Riva, I Krivulev, et al., “Calling the cloud: Enabling mobile phones as interfaces to cloud applications”, Proceedings of the 10th ACM/IFIP/USENIX International Conference on Middleware, pp. 1-20, 2009.

[20] D Chang, G Xu, L Hu, et al., “A network-aware virtual machine placement algorithm in mobile cloud computing environment”, Proceedings of IEEE Wireless Communications and Networking Conference Workshops (WCNCW), pp. 117-122, 2013.

[21] L Yang, J Cao, H Cheng, et al., “Multi-user computation partitioning for latency sensitive mobile cloud applications”, IEEE Transactions on Computers, vol. 64, no. 8, pp. 2253-2266, 2015.

[22] D Zhang, X Song, X Wang, “New agent-based proactive migration method and system for big data environment (BDE)”, Engineering Computations, vol. 32, no. 8, pp. 2443-2466, 2015.

[23] D Zhang, K Zheng, D Zhao, “Novel quick start (QS) method for optimization of TCP”, Wireless Networks, vol. 22, no. 1, pp. 211-222, 2016.

[24] D Zhang, “A new approach and system for attentive mobile learning based on seamless migration”, Applied Intelligence, vol. 36, no. 1, pp. 75-89, 2012.

[25] S Kosta, A Aucinas, P Hui, et al., “ThinkAir: Dynamic resource allocation and parallel execution in the cloud for mobile code offloading”, Proceedings of IEEE INFOCOM, pp. 945-953, 2012.

[26] X Chen, “Decentralized computation offloading game for mobile cloud computing”, IEEE Transactions on Parallel and Distributed Systems, vol. 26, no. 4, pp. 974-983, 2014.

[27] L Georgiadis, M Neely, L Tassiulas, “Resource allocation and cross-layer control in wireless networks”, Foundations and Trends in Networking, 2006.

[28] M Neely, “Stochastic network optimization with application to communication and queueing systems”, Morgan & Claypool, 2010.

Journal: China Communications
