Object Tracking Using a Particle Filter with SURF Feature

Shinfeng D. Lin, Yu-Ting Jiang, and Jia-Jen Lin

Abstract—In this paper, a novel object tracking method based on a particle filter and the speeded up robust feature (SURF) is proposed, which uses both color and SURF features. The SURF feature makes the tracking result more robust, while particle selection saves computation time. In addition, we select the matched SURF points that are suitable for calculating the SURF weight. Because color, spatial, and SURF features are all adopted, this method is more robust than the traditional color-based appearance model. Experimental results on challenging sequences demonstrate robust and accurate tracking. Moreover, the proposed method outperforms other methods when objects of similar color intersect and when the object is partially occluded.

Index Terms—Object tracking, occlusion, particle filter, SURF feature.

1. Introduction

Object tracking is an important task in many computer vision applications, e.g., video analysis, intelligent vehicles, surveillance systems, robot vision, and human-computer interaction. It has received much attention in the past decade. Although object tracking has been well studied in computer vision, it remains challenging under varying illumination, noise, scene changes, cluttered backgrounds, occlusion, and similar colors. Therefore, developing a robust object tracking method is essential. In recent years, research on object tracking has focused on two categories: tracking with a non-stationary camera and tracking with a stationary camera.

1.1 Object Tracking with Non-Stationary Camera

In this category, the tracking methods do not need background information, so they have a wide range of applications. Haner and Gu [1] proposed a visual object tracker that combines foreground/background feature points with mean shift. The tracker uses a random sample consensus (RANSAC) cost function combined with the mean shift motion estimate and dynamically updates the sets of foreground and background features. In [2], the tracking algorithm is based on speeded up robust feature (SURF) key points and superpixels: SURF features are used to match points between two frames, and a superpixel lattice is used to obtain more usable key points for tracking and to construct a weight map. In [3], the appearance model of the tracker is updated adaptively by using multiple instance learning (MIL) to train the appearance classifier, which makes the method more robust.

1.2 Object Tracking with Stationary Camera

In this category, the tracking method runs under a stationary camera. To obtain a priori information about the position of a moving object, background subtraction methods are usually employed. In [4], color and motion information are combined to detect the foreground object. Once the foreground object has been detected, various features of its behavior can be extracted; based on these features, the system estimates the degree of danger and warns the user if needed. Zhu et al. [5] proposed object tracking based on a particle filter that exploits the structured environment to overcome cluttered backgrounds. In this method, the movements of objects are constrained by the structured environment. If the environment is simple, the method can improve the tracking rate; if the environment is too complex to analyze, the tracking rate decreases. That is, the method depends on the scene conditions of the sequence. In [6], Jhu et al. proposed multiple object tracking via a particle filter based on foreground extraction, using color and spatial similarities as features. In addition, particle refinement keeps the tracking accuracy high with fewer particles. However, if the colors of objects are too similar, the classifier may fail, and the foreground extraction may be affected by occlusion and similar colors. Therefore, under occlusion and similar colors, tracking that relies on spatial and color similarities may lose accuracy.

In this paper, a novel method based on a particle filter and the SURF feature is proposed to solve the tracking problems of scene change, partial occlusion, and similar colors. To reduce the influence of the background, only the foreground information extracted by the classifier is considered. The original color feature is replaced by the combination of color and SURF features. To save time and improve accuracy, we first select the particles from which SURF features are extracted. After point matching, the correctly matched SURF points are chosen, and these points make the estimated center more accurate. In addition, each particle is weighted using color, spatial, and SURF similarities. Therefore, our method improves both robustness and tracking accuracy. Experimental results on challenging sequences demonstrate the robustness and accuracy of the proposed method.

The remainder of this article is organized as follows. The proposed object tracking scheme is presented in Section 2. Section 3 demonstrates the experimental results. Finally, the conclusions are drawn in Section 4.

2. Proposed Method

The general procedure of object tracking via a particle filter can be divided into two parts: feature extraction and particle weighting. The proposed method uses two features: the color histogram and the SURF feature [7], [8]. Fig. 1 illustrates the flowchart of the proposed method. The color histogram feature follows the original framework of [6], and adding SURF features makes the tracking results more robust. The target object is selected manually. Fig. 2 shows the detailed steps of the classification. We first cluster the foreground and background by K-means, and the clustering result is then used as training data for the classifier. Each randomly sampled particle is classified into foreground or background. We extract the foreground to calculate the color histogram as the color feature, and SURF points are extracted as a second feature. In addition, each particle is moved to a new position (the center of its foreground) to provide the spatial feature. The weight of each particle is calculated by considering spatial, color, and key-point features. Finally, the object position is estimated as the weighted sum over all particles.
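The clustering and color-feature steps above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the paper does not name its classifier, so a nearest-centroid rule over the two K-means cluster centers stands in for it, and deciding which cluster is the foreground is left to the caller. The names `kmeans2`, `classify`, and `color_histogram`, the deterministic initialization, and the 8-bins-per-channel RGB histogram are all assumptions.

```python
def _dist2(a, b):
    """Squared Euclidean distance between two color tuples."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def _mean(points):
    """Component-wise mean of a non-empty list of color tuples."""
    n = len(points)
    return tuple(sum(p[k] for p in points) / n for k in range(len(points[0])))

def kmeans2(pixels, iters=20):
    """Plain K-means with k = 2 over RGB tuples (hypothetical helper).
    Deterministic init: the first pixel and the pixel farthest from it."""
    c0 = pixels[0]
    c1 = max(pixels, key=lambda p: _dist2(p, c0))
    centroids = [tuple(map(float, c0)), tuple(map(float, c1))]
    labels = [0] * len(pixels)
    for _ in range(iters):
        # Assignment step: nearest centroid for every pixel.
        labels = [0 if _dist2(p, centroids[0]) <= _dist2(p, centroids[1]) else 1
                  for p in pixels]
        # Update step: recompute the mean color of each non-empty cluster.
        for k in (0, 1):
            members = [p for p, lab in zip(pixels, labels) if lab == k]
            if members:
                centroids[k] = _mean(members)
    return centroids, labels

def classify(pixel, centroids):
    """Nearest-centroid label: an assumed stand-in for the paper's classifier."""
    return min(range(len(centroids)), key=lambda k: _dist2(pixel, centroids[k]))

def color_histogram(pixels, bins=8):
    """Normalized joint RGB histogram with bins**3 cells for 8-bit channels."""
    hist = [0.0] * (bins ** 3)
    for r, g, b in pixels:
        idx = (r * bins // 256) * bins * bins + (g * bins // 256) * bins + (b * bins // 256)
        hist[idx] += 1
    total = sum(hist)
    return [h / total for h in hist] if total else hist
```

In use, the pixels of a sampled particle that `classify` assigns to the foreground cluster would be passed to `color_histogram` to form that particle's color feature.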

Fig. 1. Flowchart of the proposed method.

Fig. 2. Flowchart of classification.

2.1 Particle Selection

Usually, the tracked object does not move much between two consecutive frames of a video. Based on this small inter-frame motion, we select particles before extracting SURF points. A circle of radius $R$ is drawn around the object center in the previous frame. Let $O_i^{t-1}$ denote the center of object $i$ in frame $t-1$, and let $x_j^t$ denote the center of the $j$th particle in frame $t$. Equation (1) describes the particle selection step, and Fig. 3 illustrates it.

$$\left\| x_j^t - O_i^{t-1} \right\|_2 < R \qquad (1)$$

If the particle center lies inside the circle, i.e., its distance to the object center in the previous frame is less than $R$, the particle is used for SURF key-point extraction. Because SURF points are extracted only for the selected particles, this step saves computation time and also improves tracking accuracy.
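The selection test can be sketched in a few lines. This is an illustrative sketch only: the function name `select_particles` is hypothetical, the paper does not report the value of $R$, and the radius and Gaussian particle spread in the usage part are arbitrary placeholders.

```python
import math

def select_particles(particle_centers, prev_center, radius):
    """Indices of particles whose center lies strictly inside the circle of the
    given radius around the object center from frame t-1 (the test of Eq. (1))."""
    cx, cy = prev_center
    return [j for j, (x, y) in enumerate(particle_centers)
            if math.hypot(x - cx, y - cy) < radius]

if __name__ == "__main__":
    import random
    random.seed(1)
    prev = (120.0, 80.0)  # placeholder object center in frame t-1
    # Placeholder particles drawn around the previous center.
    particles = [(random.gauss(prev[0], 20), random.gauss(prev[1], 20)) for _ in range(50)]
    kept = select_particles(particles, prev, radius=25.0)
    print(f"{len(kept)} of {len(particles)} particles kept for SURF extraction")
```

Only the particles in `kept` would then go through SURF extraction, which is where the time saving comes from.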

Fig. 3. Particle selection: (a) before selection (b) after selection.

2.2 Locating Region of Foreground SURF Feature

In our proposed method, the position of the object is given in the first frame. The object is modeled as a rectangular patch $P$ with parameter vector $(x_c, y_c, h, w)$, where $(x_c, y_c)$ is the center of the rectangle, $h$ is its height, and $w$ is its width. The key points within patch $P$ can be used to represent the tracked object. Note, however, that some key points of the object may lie on the border of rectangle $P$. If we expand the extraction region for the foreground SURF feature, more points are retained. Therefore, we extract the foreground SURF feature within a patch $P_1$ that is larger than the object region $P$ and has the same center as $P$, as shown in Fig. 4. $P_1$ is obtained by enlarging $P$ with a horizontal margin $d_x$ and a vertical margin $d_y$, both set to 15 pixels in our tracking work.
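The enlarged extraction patch can be sketched as below. The paper does not show exactly how $d_x$ and $d_y$ enter the patch size, so this sketch assumes each margin is added on both sides of $P$ and clips the result to the image; `expanded_patch` and `keypoints_in_patch` are hypothetical names.

```python
def expanded_patch(xc, yc, w, h, dx=15, dy=15, img_w=None, img_h=None):
    """Corners (x0, y0, x1, y1) of patch P1: same center as P, enlarged by dx on
    the left and right and by dy on the top and bottom (assumed per-side margins),
    optionally clipped to the image bounds."""
    x0, x1 = xc - w / 2 - dx, xc + w / 2 + dx
    y0, y1 = yc - h / 2 - dy, yc + h / 2 + dy
    if img_w is not None:
        x0, x1 = max(0, x0), min(img_w, x1)
    if img_h is not None:
        y0, y1 = max(0, y0), min(img_h, y1)
    return x0, y0, x1, y1

def keypoints_in_patch(keypoints, box):
    """Keep the (x, y) key points that fall inside the box (borders included)."""
    x0, y0, x1, y1 = box
    return [(x, y) for (x, y) in keypoints if x0 <= x <= x1 and y0 <= y <= y1]
```

For a 40×60 patch centered at (100, 100), a key point at (130, 140) lies outside $P$ (x from 80 to 120, y from 70 to 130) but inside $P_1$, which is the kind of border point the enlargement is meant to retain.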

Fig. 4. Object patch P (the red rectangle) in image.

2.3 Selection of Matched SURF Feature

In this subsection, we describe how matched SURF features are selected. This step increases the accuracy of the matched points and hence of the tracking result. After point matching, each matched pair has a match distance: the shorter the match distance, the more similar the two points. Let $O_{i,l}$ denote key point $l$ of object $i$, and let $x_{j,l}$ denote key point $l$ of particle $j$. If the match distance $d(O_{i,l}, x_{j,l})$ is less than a threshold, the key point is used. This selection is described as follows:

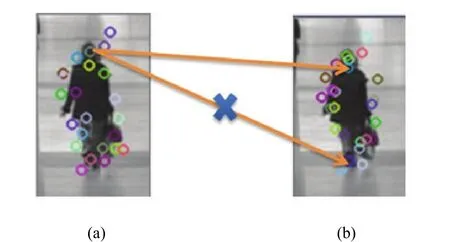
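The match-distance test described above can be sketched with brute-force nearest-neighbour matching. This is a sketch under assumptions: the paper does not specify the matching strategy, the distance measure, or the threshold value, so Euclidean distance between descriptor vectors (e.g., 64-dimensional SURF descriptors from any SURF implementation), nearest-neighbour matching, and the parameter `max_dist` are all illustrative choices, and `match_descriptors` is a hypothetical name.

```python
import math

def match_descriptors(obj_desc, part_desc, max_dist):
    """Brute-force nearest-neighbour matching of descriptor vectors.
    Returns (l_obj, l_part, dist) for each object key point whose nearest
    particle key point is closer than max_dist (the threshold test above)."""
    matches = []
    if not part_desc:
        return matches
    for l, d_obj in enumerate(obj_desc):
        # Euclidean distance from this object descriptor to every particle descriptor.
        dists = [math.dist(d_obj, d_part) for d_part in part_desc]
        m = min(range(len(dists)), key=dists.__getitem__)
        if dists[m] < max_dist:
            matches.append((l, m, dists[m]))
    return matches
```

The retained triples carry both the key-point indices, needed for the position check that follows, and the match distances, which later feed the SURF weight.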

After selecting the matched key points with high similarity, we keep only the correct ones. For the same object, the relative positions of matched points should not differ much. Equation (3) requires the relative positions of matched SURF features to differ by less than a threshold (15 pixels). This constraint prevents incorrectly matched points from being used. The selection of matched SURF features is shown in Fig. 5.
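Because Eq. (3) itself is not reproduced here, the following is only one possible reading of the relative-position check: each matched pair's displacement is compared with the median displacement of all pairs, and pairs deviating by 15 pixels or more are rejected. The median reference and the name `filter_by_relative_position` are assumptions; only the 15-pixel threshold comes from the text.

```python
import math
from statistics import median

def filter_by_relative_position(obj_pts, part_pts, thresh=15.0):
    """Indices of matched pairs whose displacement stays within `thresh` pixels
    of the median displacement of all pairs (an assumed reading of Eq. (3))."""
    disp = [(px - ox, py - oy) for (ox, oy), (px, py) in zip(obj_pts, part_pts)]
    if not disp:
        return []
    # Median displacement: a robust estimate of the object's overall motion.
    ref_x = median(d[0] for d in disp)
    ref_y = median(d[1] for d in disp)
    return [k for k, (dx, dy) in enumerate(disp)
            if math.hypot(dx - ref_x, dy - ref_y) < thresh]
```

A median is used so that a few wrong matches cannot drag the reference motion toward themselves, which is the failure mode the "×" points in Fig. 5 illustrate.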

Fig. 5. Selection of matched SURF features: matched points between (a) and (b); points marked with “×” are incorrect matches.

2.4 Weighting of Particles

Adding the SURF feature improves the performance of our method. The weight of each particle is calculated from the spatial, color, and matched SURF point similarities. The spatial similarity is based on the Euclidean distance between the center of each particle (after refinement) and the center estimated at frame $t-1$. The color similarity between two color histograms is defined by the histogram intersection. The matched point similarity is computed from the match distance between the particle's key points and the tracked target's key points.
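The three similarities can be sketched as follows. Histogram intersection is the standard sum of bin-wise minima. The paper does not state how the Euclidean distance or the match distances are turned into similarities, so the Gaussian kernel, the exponential mapping, and the parameters `sigma` and `scale` are assumptions, as are the function names.

```python
import math

def histogram_intersection(h1, h2):
    """Color similarity: sum of bin-wise minima of two normalized histograms, in [0, 1]."""
    return sum(min(a, b) for a, b in zip(h1, h2))

def spatial_similarity(particle_center, prev_center, sigma=10.0):
    """Gaussian of the Euclidean distance between a refined particle center and
    the center estimated at frame t-1 (kernel and sigma are assumptions)."""
    d = math.dist(particle_center, prev_center)
    return math.exp(-d * d / (2 * sigma * sigma))

def surf_similarity(match_dists, scale=0.2):
    """Similarity from the match distances of a particle's retained SURF points:
    exp(-mean distance / scale), or 0 when nothing matched (both assumptions)."""
    if not match_dists:
        return 0.0
    return math.exp(-sum(match_dists) / len(match_dists) / scale)
```

All three return values in [0, 1], with larger meaning more similar, so they can be combined into particle weights without rescaling.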

Finally, we obtain the new object center at frame $t$ as shown in (6):

$$\hat{O}^t = \sum_{j} \left[ \alpha\, w_{1,j}^t + (1-\alpha)\, w_{2,j}^t \right] x_j^t \qquad (6)$$

where $x_j^t$ is the center of particle $j$ in frame $t$, and $\alpha$, set to 0.5 in our framework, balances the color feature against the SURF feature. $w_{1,j}^t$ denotes the first weight, i.e., the color-similarity weight of particle $j$ in frame $t$, and $w_{2,j}^t$ denotes the second weight, i.e., the SURF-point similarity weight of particle $j$ in frame $t$.
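The final estimate can be sketched as a weighted sum of particle centers. This sketch assumes that Eq. (6) blends the color weight and the SURF weight as $\alpha w_1 + (1-\alpha) w_2$, that each weight set is normalized over the particles, and that the first weight mixes color and spatial similarity multiplicatively; none of these details is confirmed by the text. The fallback to the color weights when no particle has SURF matches is likewise a design choice of this sketch, and `estimate_center` is a hypothetical name.

```python
def normalize(ws):
    """Scale weights to sum to 1; None if they are all zero."""
    total = sum(ws)
    return [w / total for w in ws] if total > 0 else None

def estimate_center(centers, color_sim, spatial_sim, surf_sim, alpha=0.5):
    """Weighted-sum center estimate, assuming Eq. (6) combines normalized
    weights as alpha * w1 + (1 - alpha) * w2."""
    n = len(centers)
    # Assumption: the first (color) weight mixes color and spatial similarity.
    w1 = normalize([c * s for c, s in zip(color_sim, spatial_sim)]) or [1.0 / n] * n
    # If no particle has SURF matches, fall back to the color weights.
    w2 = normalize(list(surf_sim)) or w1
    w = [alpha * a + (1 - alpha) * b for a, b in zip(w1, w2)]
    x = sum(wj * cx for wj, (cx, _) in zip(w, centers))
    y = sum(wj * cy for wj, (_, cy) in zip(w, centers))
    return (x, y), w
```

With $\alpha = 0.5$, two particles with equal color weights but SURF matches only on the first one end up weighted 0.75 and 0.25, so the estimate moves toward the particle that the SURF evidence supports.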

3. Experimental Results

This section presents the results of the proposed method and compares them with other existing methods. The following experiments demonstrate the robustness and effectiveness of the proposed method.

The proposed method is implemented in C on a Windows 7 platform with an i7 3.4 GHz CPU and 4 GB of RAM. We test our method on several sequences with different conditions and challenges.

To validate the proposed method, we conduct experiments on benchmark sequences. The Euclidean distance and the average error mean are adopted to evaluate the performance fairly. The Euclidean distance is defined as

$$E = \sqrt{(C_x - GT_x)^2 + (C_y - GT_y)^2} \qquad (7)$$

where $(GT_x, GT_y)$ denotes the ground-truth center and $(C_x, C_y)$ is the coordinate of the predicted center.

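The average error mean is defined as

$$\bar{E} = \frac{1}{T} \sum_{j=1}^{T} \frac{1}{N} \sum_{I=1}^{N} E_{I,j} \qquad (8)$$

where $I$ is the frame index, $N$ is the maximum frame number, $j$ is the index of the test run, $T$ is the total number of test runs, and $E_{I,j}$ is the Euclidean distance (7) in frame $I$ of run $j$.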
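The two evaluation measures can be sketched as below, assuming the per-frame errors of all runs are stored as a list of $T$ runs, each holding $N$ frame errors, and that Eq. (8) averages over both frames and runs. The function names are hypothetical, and `per_frame_mean` is an extra helper for plotting an error curve over frames, not a measure defined in the paper.

```python
import math

def euclidean_error(pred, gt):
    """Eq. (7): distance between the predicted center (Cx, Cy) and the ground truth."""
    return math.dist(pred, gt)

def average_error_mean(errors):
    """errors[j][I] is the error in frame I of test run j; average over
    all N frames and all T runs (assumed form of Eq. (8))."""
    T = len(errors)
    N = len(errors[0])
    return sum(sum(run) for run in errors) / (T * N)

def per_frame_mean(errors):
    """Hypothetical helper: error of each frame averaged over the T runs."""
    return [sum(col) / len(col) for col in zip(*errors)]
```

Averaging over several runs matters here because a particle filter samples particles randomly, so a single run can be unrepresentative.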

3.1 PETS2001 Database

PETS2001 is a popular surveillance benchmark that provides ground-truth object trajectories for performance evaluation. Its resolution is 768×576 pixels, and the frame rate is down-sampled to 5 fps. The tracking result with 50 particles is shown in Fig. 6; the frame numbers are 2121, 2149, 2255, 2292, 2301, 2326, and 2363 from left to right. The object moves from the bottom right to the top left. Fig. 7 compares our method with [5] and [6] from the 2100th to the 2571st frame. Because our method includes the SURF feature, it performs better than [6] when the person walks past a pole. After the 2300th frame, the object moves into a cluttered background, where the proposed method is more robust than [5].

Fig. 6. Tracking results of our proposed method.

Fig. 7. Comparison of tracking accuracy in PETS2001 sequence.

Note that the number of particles is 50 for our method and 150 for [5] and [6]. Experimental results demonstrate that our method is more robust than [5] and [6] with fewer particles.

3.2 Karl-Wilhelm-Straße Database

We test two vehicle-tracking sequences with a resolution of 768×576 pixels at 25 fps, which monitor the traffic on the same road during heavy snowfall and under accumulated snow. These two sequences are referred to as Winter Traffic and Snow Traffic in the following discussion.

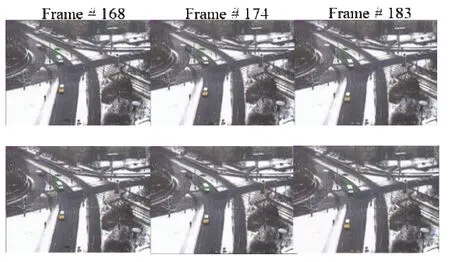

For the Winter Traffic sequence, we track a white van driving along a curved road with snow-covered surroundings. Note that the color histogram and SURF similarity are used in the observation model. This sequence challenges the robustness of tracking algorithms against background clutter. Even when the foreground classification is poor, our method tracks the white van well owing to the SURF feature. The comparison is shown in Fig. 8. Note that our result using 50 particles is better than that of [6] using 150 particles.

For the Snow Traffic sequence, we track a white car moving along a curved road in the snow, where snow noise affects the tracking results. The tracking results of [6] and our method are shown in Fig. 9, using 150 and 50 particles, respectively. Both trackers track the car continuously; however, our method is more accurate.

Fig. 8. Tracking results of [6] (first row) and the proposed method (second row) in Winter Traffic sequence.

Fig. 9. Tracking results of [6] (first row) and the proposed method (second row) in Snow Traffic sequence.

3.3 CAVIAR Database

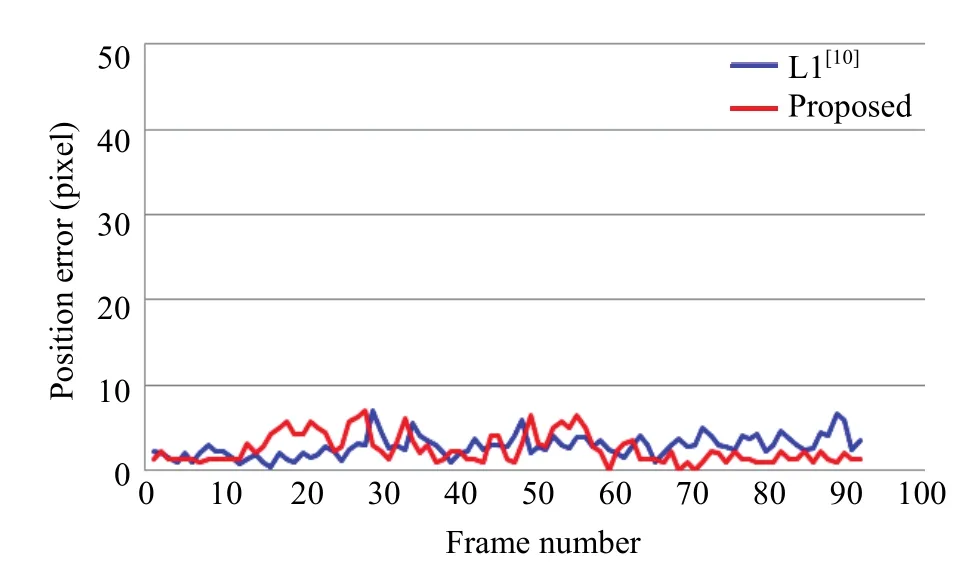

We also use another database, CAVIAR, in our experiments. The OneLeaveShopReenter2cor sequence, with a resolution of 384×288 pixels at 25 fps, is tested. In this video, the background color is similar to that of the woman's pants, and the colors of the man's T-shirt and pants are similar to that of the woman's coat. Our target is to track the woman, who undergoes partial occlusion. Some tracking results are given in Fig. 10. The L1 tracker [10] is affected when the woman is partially occluded by the man at the 225th frame, whereas our tracker is more robust to partial occlusion. As shown in Fig. 11, the position error of our method is smaller than that of the L1 tracker, which demonstrates the advantage of the proposed method.

Fig. 10. Tracking results of the L1 tracker [10] (first row) and the proposed method (second row) in the OneLeaveShopReenter2cor sequence.

Fig. 11. Comparison of tracking accuracy in the OneLeaveShopReenter2cor sequence.

3.4 Girl Sequence

The Girl sequence is used to compare our method with [6], [9], and [10]. The sequence resolution is 128×96 pixels and the frame rate is 25 fps. Our target is the girl's face, which is occluded when a man's face passes in front of it. Fig. 12 and Fig. 13 compare the tracking accuracy. Fig. 13 shows that our tracking result is not very good between the 15th and 27th frames, where the occlusion occurs: because our method combines the color histogram and the SURF feature, the color histogram feature affects the tracking result. After the 57th frame, the proposed method is more robust than that of [10]. The tracking result again shows that our method is robust, while the other tracker is affected when the man's face passes across the girl's face.

Fig. 12. Tracking results of [9] (first row), [6] (second row) and the proposed method (third row) in Girl sequence.

Fig. 13. Comparison of tracking accuracy in Girl sequence.

4. Conclusion

A novel tracking method based on the particle filter and the SURF feature has been proposed. The method can be summarized as follows. First, particles are randomly sampled, and those near the previous object center are selected for SURF key-point extraction. Second, after SURF points are matched, the correctly matched SURF features are chosen; this step effectively improves the accuracy of our method. Third, each particle is weighted by the spatial-color mixture appearance and the SURF feature, which makes the method more robust. Experimental results on challenging sequences demonstrate robust and accurate tracking. Moreover, the proposed method outperforms other methods when objects of similar color intersect and when the object is partially occluded.

[1] S. Haner and I. Y.-H. Gu, “Combining foreground/background feature points and anisotropic mean shift for enhanced visual object tracking,” in Proc. of Int. Conf. on Pattern Recognition, Istanbul, 2010, pp. 3488-3491.

[2] Y. Liu, W. Zhou, H. Yin, and N. Yu, “Tracking based on SURF and superpixel,” in Proc. of Int. Conf. on Image and Graphics, Hefei, 2011, pp. 714-719.

[3] B. Babenko, M. H. Yang, and S. Belongie, “Robust object tracking with online multiple instance learning,” IEEE Trans. on Pattern Analysis and Machine Intelligence, vol. 33, no. 8, pp. 1619-1632, 2011.

[4] C.-Y. Fang, W.-T. Hsiao, H.-H. Hsieh, and S.-W. Chen, “A danger estimation system for infants based on a foreground dynamic probability model,” Journal of Electronic Science and Technology, vol. 10, no. 2, pp. 142-148, 2012.

[5] J. Zhu, Y. Lao, and F. Zheng, “Object tracking in structured environments for video surveillance applications,” IEEE Trans. on Circuits and Systems for Video Technology, vol. 20, no. 2, pp. 223-235, 2010.

[6] Y. Jhu, C. Chuang, and S. D. Lin, “Multiple object tracking via particle filter based on foreground extraction,” in Proc. of the 25th IPPR Conf. on Computer Vision, Graphics, and Image Processing, Nantou, 2012.

[7] H. Bay, A. Ess, T. Tuytelaars, and L. Van Gool, “SURF: speeded-up robust features,” Computer Vision and Image Understanding, vol. 110, no. 3, pp. 346-359, 2008.

[8] H. Bay, T. Tuytelaars, and L. Van Gool, “SURF: Speeded up robust features,” in Proc. of European Conf. on Computer Vision, Graz, 2006, pp. 404-417.

[9] Y. Qi and Y. Wang, “Visual tracking with double-layer particle filter,” in Proc. of the 11th Int. Conf. on Signal Processing, Beijing, 2012, pp. 1127-1130.

[10] X. Mei and H. Ling, “Robust visual tracking and vehicle classification via sparse representation,” IEEE Trans. on Pattern Analysis and Machine Intelligence, vol. 33, no. 11, pp. 2259-2272, 2011.

Shinfeng D. Lin received his Ph.D. degree in electrical engineering from Mississippi State University in 1991. He is currently a professor and the Dean with the Department of Computer Science and Information Engineering, College of Science and Engineering, National Dong Hwa University, Taiwan. His research interests include image processing, image/video compression, and information security.

Yu-Ting Jiang was born in Taiwan in 1987. She received the B.S. degree from the Department of Computer Science, National Pingtung University of Education, Taiwan in 2010. In 2013, she received the M.S. degree from the Department of Computer Science and Information Engineering, National Dong Hwa University, Taiwan. She currently works in Hsinchu. Her research interests lie in image processing and computer vision.

Jia-Jen Lin was born in Taiwan in 1989. She received the B.S. degree from the Department of Computer Science and Information Engineering, National Dong Hwa University, Taiwan in 2012. She is currently pursuing the M.S. degree at National Dong Hwa University. Her research interests include computer vision and digital image processing.

Manuscript received January 10, 2014; revised March 28, 2014. This work was supported by the NSC under Grant No. NSC 101-2221-E-259-032-MY3.

S. D. Lin is with the Department of Computer Science and Information Engineering, National Dong Hwa University, Hualien 97401 (Corresponding author e-mail: david@mail.ndhu.edu.tw).

Y.-T. Jiang and J.-J. Lin are with the Department of Computer Science and Information Engineering, National Dong Hwa University, Hualien 97401 (e-mail: 610021036@ems.ndhu.edu.tw; 610121001@ems.ndhu.edu.tw).

Color versions of one or more of the figures in this paper are available online at http://www.journal.uestc.edu.cn.

Digital Object Identifier: 10.3969/j.issn.1674-862X.2014.03.018

Journal of Electronic Science and Technology, 2014, Issue 3
