Xenobiotic effects in cancer-related pathways: high-throughput screening and proof of concept in animal models

Ursula E. Schumacher

(Suzhou Xishan Zhongke Drug Research & Development Co., Ltd.

1336 Wuzhong Avenue, Suzhou 215104, Jiangsu, China, Uschumacher@hotmail.com)

1 Evolutionary Biology in the classical and in the modern sense

Charles Robert Darwin, an English naturalist, established the theory of evolution[4]: that all species of life have descended over time from common ancestors, with the struggle for existence shaping biological diversity through a process he called natural selection. He postulated that one species can turn into another (transmutation) and that Homo sapiens and apes share the same ancestors. He established an evolutionary tree and postulated that the species that survive are those with the better properties in the struggle for life.

In the 1960s Prof. George C. Williams of the State University of New York vigorously opposed the theory of group selection[5]. The work of Williams in this area, along with that of Hamilton, Smith and others, led to the development of a gene-centric view of evolution. The gene-centered view of evolution, also known as gene selection theory or selfish gene theory, postulates that evolution occurs through the differential survival of competing genes, increasing the frequency of those alleles whose phenotypic effects successfully promote their own propagation. This is in contrast to the organism-centered viewpoint historically adopted by biologists.

Fig.1 Concordance of human and animal toxicities and adverse effects[13].

2 The poor Concordance of Human and Animal Toxicities

Around the year 2000, this new gene-centric view of life and disease prompted many studies on the usefulness of predicting pharmacological and toxicological effects in humans from animal models. The best-known paper is by Olson et al. (2000)[6], which summarises the results of a survey of multinational pharmaceutical companies that was also reviewed in an ILSI (International Life Sciences Institute) workshop in April 1999. This study considered only cases where human toxicities of drugs were found in clinical studies and then compared them with the respective effects in animals: only 7% of human toxicities were found in rodents, with the non-rodents correlating better at 27% concordance with human toxicities. The opposite case, where toxicities were found in animals but not in humans, was not considered. The highest incidence of overall concordance was seen in haematological, gastrointestinal and cardiovascular toxicity. Although this study still reported an overall concordance rate of 71%, other authors paint a much darker picture of the prediction of human drug toxicities from animal testing, as summarized in January 2009 by Niall Shanks et al. in their book and review paper[7,8].
There the authors cite the US Secretary of Health: “Currently, nine out of ten experimental drugs fail in clinical studies because we cannot accurately predict how they will behave in people based on laboratory and animal studies”[10]. Further, they cite[11] that only 4 of 24 toxicities were found in animal data first, and that in only 6 of 114 cases did clinical toxicities have animal correlates[12]. Even bioavailability in animals does not correlate at all with bioavailability in humans. Greaves et al.[13] also found that the correlation for human toxicities found in animals is much better than that for animal toxicities found in humans (false positives). The concordance is much better for haematological, gastrointestinal and cardiovascular toxicities than for others, for which concordance is less than 50% (Figure 1a). However, the type of adverse reactions observed is generally very different in animals than in humans (Figure 1b), as has been shown for a large number of drugs for many types of diseases.

3 Toxicity Testing in the 21st Century

Since the full genome of James D. Watson (one of the co-discoverers of the structure of DNA) was sequenced in 2007[14], a pharmacological paradigm shift has taken place, first in the understanding of diseases and second in the way pharmaceutical companies plan and conduct their research. For the past several decades, drug discovery had focused primarily on a limited number of families of ‘druggable’ genes against which medicinal chemists could readily develop compounds with a desired biochemical effect. These targets were usually exhaustively investigated, with dozens or even hundreds of related publications often available, before huge investments in discovery programmes began.

Today, that comfort level is often missing for the huge number of suspected genomics-based targets. Although the genomics approach will undoubtedly increase the probability of developing novel therapies, the limited knowledge currently available will increase (and already has increased) the risk and the failure rate of early-stage drug development projects.

To exploit the completed human genome sequence effectively and competitively for potential drug targets, technologies capable of identifying, validating and prioritizing thousands of genes will have to be incorporated in order to select the most promising targets. The estimated 35,000 genes in the human genome, as well as the multiple splice variants of many mRNAs, mandate that these technologies be higher in throughput than most current technologies, as it will be impossible to develop the traditional depth of knowledge about each target[15]. Importantly, no single technology will be sufficient to generate all of the necessary information, and knowledge from several approaches must be integrated to select the best new targets for drug development.

Similarly, the needs of safety evaluation for potential drug candidates or xenobiotics from the environment can no longer be met by simple animal testing based on lethality. Biological effects on genes, whether via mutation or via up- or downregulation of expression, may result in serious health effects that do not lead to lethality or birth defects in animals, but do so in humans or even only in certain human subpopulations.

The National Research Council of the National Academy of Sciences in the US, in its report “Toxicity Testing in the 21st Century: a Vision and a Strategy”, has therefore proposed that toxicity testing should become less reliant on apical endpoints from whole-animal tests (e.g. cancer incidence, impaired reproduction, and developmental anomalies) and eventually rely instead on quantitative dose-response models based on information from in vitro assays and in vivo biomarkers, which can be used to screen large numbers of chemicals[16].

This approach to toxicity testing is based on the understanding that traditional endpoints are the ultimate result of a series of cellular and sub-cellular responses to toxic chemicals. Toxic chemicals trigger toxicity pathways, which trigger a chain of biological events at multiple scales that ultimately lead to adverse human health effects. For this approach to be successful, however, it must build on advances in multiple disciplines to extend toxicity testing beyond whole-animal testing towards a more holistic and systematic understanding of human health effects at multiple scales of biological organization, from gene to cell to tissue to organ to the entire human body.

The NRC report noted the limitations of traditional toxicity testing that relies primarily on adverse biologic responses in homogeneous groups of animals exposed to high doses of a test agent. It questioned the relevance of such animal studies for the assessment of risks to heterogeneous human populations exposed at much lower concentrations.

The NRC report proposed that toxicity testing should instead take advantage of developments in genomics, cellular biology, bioinformatics and other fields in order to enable risk assessment based upon toxicity pathways.

In response, the Tox 21 Initiative[17] was formed in the US, in which EPA (Computational Toxicology Program), NIEHS (National Toxicology Program), NHGRI (National Human Genome Research Institute) and FDA teamed up to share HTS (high-throughput screening) in vitro and sequencing resources, with the aim of testing a total of about 10,000 chemicals with an average of about 200 HTS assays each in order to screen toxicity and efficacy pathways.

EPA's initiative is called ToxCast and all their data are published on their website[18].

Technological platforms that range from nucleotide chemistry and molecular and cell imaging to proteomics, bioinformatics and model organisms are applied in parallel to generate a multi-dimensional profile of those genes that respond to xenobiotics. Future challenges will be to integrate, analyse and interpret large sets of diverse data types to better understand the role of genes in biological pathways.

In order to increase throughput, technology has been developed that uses microtiter plates of decreasing volume per well (96 wells, 384 wells, 1536 wells), pipetting robots and laser scanners for plate reading.

A new method to further miniaturize the assays is based on microfluidics[19] (as also used in ink-jet technologies). It lowers reagent usage and thus costs, while at the same time speeding up fluid dosing and thus shortening assay duration. Many treatment technologies for cells, eggs and embryos have thus been miniaturized, as have detection technologies such as capillary electrophoresis, qPCR and ELISA.

Microfluidics also offers the possibility of overcoming a disadvantage of conventional in vitro tests, which are effectively anaerobic, since dissolved oxygen is consumed in less than 2 hours and no new oxygen is added. In the body, cells are usually surrounded by other cells in constantly perfused tissue. This can now be simulated on microfluidic chips, where cells (e.g. brain or lung cells) are grown on a synthetic scaffold made from silicon in a 3D sandwich structure, in which the cells are exposed to conditions resembling their natural environment, so that they can also build their own blood vessels and fulfil their dedicated function, e.g. breathing in the case of lung cells.

NCATS (part of the National Institutes of Health, NIH), FDA and DARPA in the US are cooperating on the development of microfluidic chips that simulate 9 different organs of the human body[20]. These microfluidic chips are then to be validated and accepted by FDA and EPA as screening tools for xenobiotics with regard to potential health risks to specific organs.

In Europe, a similar mammoth project called SEURAT[21] started in 2011, financed partly by the Commission of the European Union. In Europe a complete ban on cosmetics testing on animals takes full effect in 2013, which puts Europe in desperate need of efficient non-animal safety testing.

How, then, can a functional genomics approach deliver reliable and quantifiable information about whether and how a xenobiotic harms humans and/or living organisms in the environment?

Without claiming to be a review, this paper describes some of the most recent approaches to bridging the gap between raw genomic information and a new, modernized measure of toxicity. To demonstrate one possible and practical way, ‘Xenobiotic Effects in Cancer related Pathways’ has been chosen as an example.

4 Towards a new metric in toxicology

This first raises the question: what is toxicity? The Wikipedia answer is: “Toxicity is the degree to which a substance can damage an organism. Toxicity can refer to the effect on a whole organism, such as an animal, bacterium, or plant, as well as the effect on a substructure of the organism, such as a cell (cytotoxicity) or an organ such as the liver (hepatotoxicity).” This is followed by a discussion of the importance of the dose (amount and duration) and of all the difficulties in comparing lethal doses (e.g. LD50) between species. Wikipedia also lists different types of toxicity and the attempts to classify toxicity either by similarity in chemical structure (e.g. PCBs) or by the target organs affected (e.g. hepatotoxicity, reproductive toxicity). In many cases the two classifications correlate, but not in all.

Since 2007 the US FDA and other regulatory authorities have required bioavailability testing, and both toxicokinetics (TK) and pharmacokinetics are mandatory for estimating the absorption, hepatic clearance, plasma protein binding and metabolism of the drug under investigation. Since 2011 regulatory authorities no longer accept repeated-dose studies in animals without TK data, and clarification of the “mode of action” of a xenobiotic is becoming more and more important with regard to both efficacy and toxicity. For cancer drugs the FDA would not accept an IND filing without a detailed description of the “modes of action”.

This has led to a modified view of pharmacokinetics: if a xenobiotic causes vascular injury in the heart, then only the concentration in the heart matters. This multiple-compartment kinetic assessment is called physiologically based pharmacokinetics or PBPK[22,23].

Comparing the toxicities of various xenobiotics in one particular animal model therefore has to take into account differences in gastrointestinal absorption, hepatic clearance, plasma protein binding, tissue distribution etc., all of which differ between chemicals.

Given all these differences, difficulties and uncertainties in highly developed organisms such as mammals and humans, it seems desirable to abandon the traditional view associated with the word toxicity. Instead, the widely varying effects that xenobiotics have at the cellular level would be defined by their particular molecular interactions with biological pathways, and used to predict the adverse outcome in organs and on health.

Both in the US and in Europe, the scientific societies and communities are very clear that, in modernizing toxicology, the goal is not to use novel methods to predict lethality, as is now a regulatory requirement in single-dose, repeated-dose, reproductive and other toxicity testing, since this metric cannot reflect health effects under normal conditions. Instead, a new metric has to be found.

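The parameters of the immune clonal selection were set as follows: the initial population size was set to 3 times the number of classes, the clone size was twice the initial population size, the mutation probability was P1=0.6, the crossover probability was P2=0.9, and the remaining parameters were chosen by cross-validation. The accuracy thresholds were α=0.45 and β=0.80. The algorithm terminated when the affinity had not improved for 5 consecutive iterations, and the classification error rate was estimated using cross-validation and the t-test[13].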

Nevertheless, there has been an attempt to predict, among other endpoints, lethal outcomes in reproduction[24] (divided into male and female fertility and developmental toxicity). From more than 500 bioassays, 36 assays involving 8 genes/receptors (nuclear receptors, both steroidal and non-steroidal; androgen receptors AR; estrogen receptors ERα ER1; peroxisome proliferator-activated receptors PPARα, PPARγ; pregnane X receptor NR1I2; GPCR; CYP) were chosen on the basis of their univariate association with reproductive toxicities in animals (rat) from the ToxRef database, using different data sets for validation than for calibration. Using linear discriminant analysis and fivefold cross-validation, a robust and stable predictive model was produced that is capable of identifying rodent reproductive toxicants with training and test cross-validation balanced accuracies of 77% ± 2% and 74% ± 5% (mean ± SEM), respectively. This example demonstrates that the prediction of lethality and other reproductive endpoints is possible, although the new metric of toxicity should be able to predict the totality of all changes in genes and proteins in humans that xenobiotics may cause in any of the biological pathways involved.
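The balanced accuracy and the mean ± SEM quoted above can be illustrated with a minimal sketch. It assumes binary labels (1 = reproductive toxicant, 0 = non-toxicant); the labels and per-fold values below are invented for illustration and are not data from [24], and the classifier itself (linear discriminant analysis) is not reproduced here.

```python
from math import sqrt
from statistics import mean, stdev


def balanced_accuracy(y_true, y_pred):
    """Mean of sensitivity and specificity for binary labels (1 = toxicant)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    pos = sum(1 for t in y_true if t == 1)
    neg = len(y_true) - pos
    if pos == 0 or neg == 0:
        raise ValueError("both classes must be present")
    return 0.5 * (tp / pos + tn / neg)


def mean_and_sem(values):
    """Mean and standard error of the mean over cross-validation folds."""
    return mean(values), stdev(values) / sqrt(len(values))


# Hypothetical true labels and predictions for one cross-validation fold.
y_true = [1, 1, 1, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 0, 0, 0, 1, 0]
fold_score = balanced_accuracy(y_true, y_pred)

# Hypothetical balanced accuracies from five folds.
avg, sem = mean_and_sem([0.70, 0.75, 0.72, 0.78, 0.74])
```

Balanced accuracy is a natural choice when toxicants are much rarer than non-toxicants, because a model that labels everything as negative then scores only 50%.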

5 Dosimetry as the tool to estimate human internal exposure and response from in vitro assays AC50 [IVIVE]

A paper published by Barbara Wetmore et al.[25] has received considerable attention and honors. It describes how high-throughput in vitro screening data (AC50, the concentration producing half-maximal activity) can be used, by integrating “dosimetry” (plasma binding, hepatic and metabolic clearance etc. assays), to predict human internal exposure, genetic changes and adverse outcome. It has been pointed out that in vitro assay effect concentrations (AC50) are by themselves quantitatively meaningless for risk assessment if they do not adequately take into account the pharmacokinetic behaviour in humans of the drug candidate or environmental chemical under investigation. Accounting for this behaviour is called in vitro to in vivo extrapolation (IVIVE).

This has been further detailed[26] by using physiologically based pharmacokinetic (PBPK) information to better predict the internal exposure in specific organs, e.g. the liver. Primary hepatocytes have been used in perfused 3D sandwich in vitro structures, and the “free” xenobiotic as well as the plasma-protein-bound, cell-membrane-bound and metabolized portions of the xenobiotic are recorded when estimating the internal exposure in humans along with cellular effects on receptors, genes or proteins.
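The in vitro to in vivo extrapolation described above can be sketched numerically. The following is a minimal illustration under a simplified steady-state assumption (renal filtration of the unbound fraction plus well-stirred hepatic clearance, complete oral absorption); it is not claimed to be the exact model of [25], and all function names, parameter names and values are hypothetical.

```python
def steady_state_conc_um(dose_mg_per_kg_day, mol_weight_g_per_mol, fub,
                         clint_l_per_day_kg, gfr_l_per_day_kg,
                         q_liver_l_per_day_kg):
    """Steady-state plasma concentration (micromolar) at a constant dose rate.

    Assumed model: total clearance = GFR * fub + well-stirred hepatic
    clearance, with 100% oral absorption. fub is the fraction unbound
    in plasma; clearances and flows are per kg body weight.
    """
    if not 0.0 < fub <= 1.0:
        raise ValueError("fub must be in (0, 1]")
    renal = gfr_l_per_day_kg * fub
    hepatic = (q_liver_l_per_day_kg * fub * clint_l_per_day_kg) / (
        q_liver_l_per_day_kg + fub * clint_l_per_day_kg)
    css_mg_per_l = dose_mg_per_kg_day / (renal + hepatic)
    # mg/L divided by g/mol gives mmol/L; times 1000 gives micromol/L.
    return css_mg_per_l / mol_weight_g_per_mol * 1000.0


def oral_equivalent_dose(ac50_um, css_um_at_unit_dose):
    """Dose (mg/kg/day) whose steady-state plasma level equals the AC50.

    Relies on linear kinetics: Css scales proportionally with dose.
    """
    return ac50_um / css_um_at_unit_dose


# Hypothetical chemical: 1 mg/kg/day, MW 250 g/mol, 10% unbound in plasma.
css = steady_state_conc_um(1.0, 250.0, 0.1, 100.0, 2.6, 30.0)
dose = oral_equivalent_dose(10.0, css)  # hypothetical AC50 of 10 micromolar
```

The resulting oral equivalent dose can then be compared with estimated human exposure: an AC50 that looks potent in vitro may correspond to a dose far above realistic exposure once clearance and protein binding are included, and vice versa.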

6 Xenobiotic Effects in Cancer related Pathways

Perturbations by xenobiotics often sweep changes across the whole transcriptome because of highly interconnected networks. Consequently, the entirety of changes needs to be examined to make a judgement about the integral adverse outcome. Whole-genome microarray analysis provides broad information on the response of biological systems to xenobiotic exposure, but is not practical when thousands of drug candidates and chemicals need to be evaluated at multiple doses and time points, as well as across different tissues, species and life stages.

A useful alternative approach is to identify a focused set of genes that can give insight into systems-level responses and that can be scaled to the evaluation of thousands of chemicals and diverse biological contexts.

Recently, a computational approach was published by Judson et al.[2] in which a list of “sentinel” genes was derived that is suitable for detecting the tumorigenic potential of drug candidates and chemicals in in vitro assays.

7 Highly interconnected networks and the use of evidence-weighted functional linkage network (FLN) log-likelihood scores for the choice of cancer “sentinel genes”

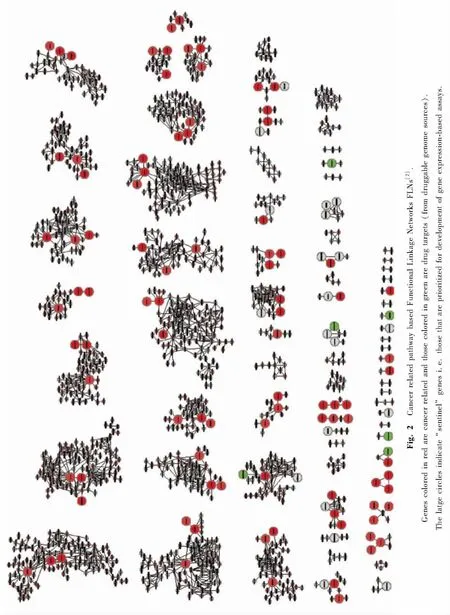

Judson et al. have used the functional linkage network method of Linghu et al.[3] to map literature data (22 388 609 links for 21 657 genes) on gene-gene and protein-protein interactions, mass spectrometry, shared protein domains etc. from GO, and co-expression from large expression data sets, to KEGG and Ingenuity cancer-related pathways.

The following pathways are involved: 14-3-3-mediated Signaling, BMP signaling pathway, ERK/MAPK Signaling, G-Protein Coupled Receptor Signaling, Hypoxia Signaling in the Cardiovascular System, PI3K/AKT Signaling, VDR/RXR Activation, Valine, Leucine and Isoleucine Degradation, cAMP-mediated Signaling, Adipocytokine signaling pathway, Bladder cancer, Butanoate metabolism, Colorectal cancer, Endometrial cancer, Non-small cell lung cancer, PPAR signaling pathway, Pancreatic cancer, Prion disease, Prostate cancer, Small cell lung cancer, Synthesis and degradation of ketone bodies, Thyroid cancer, Tryptophan metabolism, Valine, Wnt signaling Pathway.

Of these, modules with fewer than 10 hits (at significance level 0.01) were excluded. The remaining genes were given a score equal to the number of CTD (Comparative Toxicogenomics Database) chemical hits, plus a bonus of 100 if they were known to have a cancer association.

This process yielded 178 unique expression assay target candidate genes, listed in Tab.1.

A method of target prioritization for gene expression assays has been applied to the cancer-related module set, as shown in Figure 1. First, modules have been prioritized based on the number of chemicals with expression hits from CTD, and those modules with few or no hits have been discarded.

Two thresholds have been used, one for the number of hits and the other for the p-value of significance for a hit. Next, within the selected modules, many genes are only rarely perturbed, so these are also discarded. Additionally, some chemicals hit too many genes (promiscuous chemicals), and these chemicals are discarded from further calculations. Of the remaining genes, added priority has been given to those with known cancer associations. Finally, to select sets of genes within any given module, preference has been given to those that are functionally as different as possible. This difference is quantified as the FLN score relating the genes.

Pairs of genes with low FLN scores would be selected before pairs with high scores. Because some genes occur in multiple modules, a final set of assay targets is selected after merging overlaps. To illustrate this approach, modules, and genes within modules, with fewer than 10 chemical hits from CTD (of type “expression”, out of 42 000) at the 0.01 significance level were excluded. Chemicals are discarded if they are promiscuous, defined as hitting more than 10 genes in the module or more than 10% of the genes in the module, whichever is larger. The remaining genes were given a score equal to the number of CTD chemical hits, plus a bonus of 100 if they have a known cancer association. The top-scoring set of at most 10 genes in each module was then ranked by their summed FLN scores with each other, and the lowest-scoring (most dissimilar) 1 to 3 genes per module were selected. This process yielded a set of 178 unique expression assay target candidate genes. This gene list is chosen for HTS analysis of xenobiotics regarding potential cancer risks.
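The per-module selection steps described above can be sketched as follows. This is an illustrative reading of the procedure, not the implementation of [2]: the function name, the data layout (a mapping from gene to the set of chemicals with expression hits) and the toy data are assumptions, and the module-level significance filtering is omitted.

```python
from collections import Counter


def select_sentinels(gene_hits, cancer_genes, fln_score, min_hits=10,
                     promiscuity_floor=10, top_n=10, n_select=3, bonus=100):
    """Pick up to n_select functionally dissimilar, frequently hit genes.

    gene_hits: dict mapping gene -> set of chemicals with expression hits.
    fln_score: callable (gene_a, gene_b) -> FLN score (higher = more similar).
    """
    # Promiscuous chemicals hit more than max(floor, 10% of module genes).
    limit = max(promiscuity_floor, 0.10 * len(gene_hits))
    per_chemical = Counter(c for chems in gene_hits.values() for c in chems)
    promiscuous = {c for c, n in per_chemical.items() if n > limit}

    # Score = number of remaining chemical hits + bonus for cancer genes;
    # rarely perturbed genes (fewer than min_hits) are dropped.
    scores = {}
    for gene, chems in gene_hits.items():
        kept = set(chems) - promiscuous
        if len(kept) >= min_hits:
            scores[gene] = len(kept) + (bonus if gene in cancer_genes else 0)

    # Keep the top-scoring genes (ties broken by name for reproducibility).
    top = sorted(scores, key=lambda g: (-scores[g], g))[:top_n]

    def summed_fln(gene):
        return sum(fln_score(gene, other) for other in top if other != gene)

    # Lowest summed FLN score = most dissimilar to the other candidates.
    return sorted(top, key=lambda g: (summed_fln(g), g))[:n_select]


# Toy module with invented genes A-D and chemicals c1-c4.
pair_scores = {frozenset(("A", "B")): 5.0, frozenset(("A", "C")): 1.0,
               frozenset(("B", "C")): 0.5}


def toy_fln(a, b):
    return pair_scores.get(frozenset((a, b)), 0.0)


hits = {"A": {"c1", "c2", "c3"}, "B": {"c1", "c2"}, "C": {"c3", "c4"},
        "D": {"c1"}}
sentinels = select_sentinels(hits, {"B"}, toy_fln, min_hits=2, n_select=2)
```

In the toy module, gene D is dropped as rarely perturbed, and C and B are chosen over A because their summed FLN scores with the other candidates are lowest. Merging the per-module selections into one unique gene list would be a simple set union across modules.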

8 Validation of the cancer-relatedness of these 178 sentinel genes with an independent set of Whole Genome Microarray data

The utility of this target selection approach was tested by assessing the performance of these target genes against an independent set of microarray data. A small number of target genes, termed “sentinel genes”, were selected for assay development and then validated by checking whether a chemical perturbs a pathway module, by looking for patterns of differential expression in whole-genome microarray data.

This property of the target assay genes was tested using the Iconix data set[27], which consists of microarray data for 40 rodent non-genotoxic hepatocarcinogens and 86 chemicals negative for rodent liver tumors.

Differentially expressed genes (DEGs) for each chemical were examined and the data transformed into the same format as reported by CTD. Genes were mapped from rat to human before matching with the FLN modules, using the HomoloGene database. There were a total of 5633 genes for which human homologs were found. Only 128 of the 178 sentinel genes were mapped to this data set.

Tab.1 Cancer-related pathway-based functional linkage network (FLN) derived “sentinel” genes for HTS screening of xenobiotics regarding potential cancer risks[2].

As with the analysis of chemical-module interaction scores based on CTD chemical-gene expression information, chemical-module interaction p-values were calculated for all chemical-module pairs from the Iconix DEG data. The distribution of module hits was similar to that seen using the CTD chemical-gene expression information. Figure 2 illustrates the performance of the sentinel assay set, in which all modules with FLN threshold from 2 to 10 are included. Recall that the selection process for sentinel genes for assay development was based on data from CTD. Here, the usefulness of these sentinel genes was tested using the independent Iconix whole-genome microarray data set. The data showed that as modules get larger, the fraction of interactions recovered grows, and for most modules above about 50 genes in size, almost all chemical-module interactions are found using just the sentinel genes.

The graph (Fig.3) shows the performance distributions at the 3 significance thresholds; for the majority of modules, 100% of the true chemical-module interactions were found using the sentinel genes. In the left-hand group of bars, corresponding to chemical-module hits at the p=0.05 level, the tall bar at the right shows that for 70% of the modules, 100% of the chemicals that interact with the module also cause one of the sentinel genes to be differentially expressed. For another 10% of the modules, 95~99% of the chemicals hitting the module also hit one of the sentinel genes; for another 2% of the modules, 90~94% of chemicals hitting the module also hit a sentinel gene; and so on. Moving to the right, the fraction of chemicals hitting the module and also hitting one of the sentinel genes rises as the stringency for chemical-module interactions is increased. This provides strong support for the usefulness of the 178 target genes in detecting carcinogenic xenobiotics and for the target gene selection procedure.
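The quantity summarized in these distributions, the fraction of a module's interacting chemicals that also perturb at least one sentinel gene, can be sketched as below. The function names, data layout and toy data (genes G1 to G4, chemicals chemA to chemD) are invented for illustration and do not come from [2] or the Iconix data set.

```python
def recovered_fraction(module_chemicals, chemical_degs, sentinel_genes):
    """Fraction of a module's chemicals that hit at least one sentinel gene.

    module_chemicals: set of chemicals with a significant module interaction.
    chemical_degs: dict mapping chemical -> set of differentially expressed genes.
    sentinel_genes: set of sentinel genes belonging to the module.
    """
    if not module_chemicals:
        raise ValueError("module has no interacting chemicals")
    detected = [c for c in module_chemicals
                if chemical_degs.get(c, set()) & sentinel_genes]
    return len(detected) / len(module_chemicals)


def share_fully_recovered(fractions):
    """Share of modules for which every interaction was recovered."""
    return sum(1 for f in fractions if f == 1.0) / len(fractions)


# Toy data: DEGs per chemical and two modules sharing the same chemicals.
degs = {"chemA": {"G1", "G3"}, "chemB": {"G4"}, "chemC": {"G4"},
        "chemD": {"G2"}}
module_1 = recovered_fraction({"chemA", "chemB", "chemC", "chemD"},
                              degs, {"G1", "G2"})
module_2 = recovered_fraction({"chemA", "chemD"}, degs, {"G1", "G2"})
fully = share_fully_recovered([module_1, module_2])
```

Binning the per-module fractions (100%, 95~99%, 90~94% and so on) at each significance threshold would give bar heights of the kind described for Fig.3.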

9 Outlook

On 4 September 2012 the US National Research Council published a report[28] with the title “Science for environmental protection: The Road ahead”, in which the NRC urged the US EPA to implement the new toxicity testing strategy in regulatory practice and to give a timeline for the implementation.

Fig.3 Distribution of chemical-module hits recovered using sentinel genes[2]. Each of the 3 blocks shows the results for a different level of statistical significance for chemical-module interactions. Within each block, the heights of the bars give the fraction of modules for which the fraction of true chemical-module pairs designated on the x-axis is detected using the sentinel genes. For instance, in the left-most block 70% of the modules have all chemical interactions detected, 10% of modules have 95%~99% of interactions detected, and so on. In the third block (p=0.001), for about 90% of modules all module-chemical interactions are recovered using just the sentinel genes. Perfect detection of all chemical-module interactions would result in a single bar at x=1 and y=1.

The Endocrine Disruptor Screening Program initiative EDSP21 has already published its schedule[29,30], and Tox 21 will probably soon follow:

○ Within the next 2 years: HTS in vitro assays become mandatory. Only if these are positive does the current Tier 1 testing (the short-term animal and in vitro testing) need to be performed.

○ From year 2 to year 5: the current short-term animal testing and the current in vitro testing will be replaced with validated in vitro HTS assays.

○ After 5 years: there will be a full replacement of in vivo screening assays (Tier 1) by validated in vitro HTS assays. Only if a reliable risk assessment is not possible on the basis of these results shall animal testing be performed (Tier 2). This animal testing will then, however, include many more species and many biomarkers.

Fig.4 Characterizing the Exposome[31]. The exposome represents the combined exposures from all sources that reach the internal environment. Examples of toxicologically important exposome classes are shown. Biomarkers, such as those measured in blood and urine, can be used to characterize the exposome.

Future broadening of practical implementations of suitable HTS assays and of statistical data evaluation models for deriving reliable estimates of human internal exposure and effects from in vitro assays, together with weight-of-evidence validations, will help to improve expertise. Not only will the drug development and public health communities benefit from this; it should also lead to a better understanding of the entirety of human exposures, now called the exposome (Fig.4)[31].

[1] Krewski D., “Toxicity Testing in the 21st Century: A Vision and a Strategy”, Committee on Toxicity Testing and Assessment of Environmental Agents. National Academies Press, Washington, DC, USA (2007).

[2] R.S. Judson, H.M. Mortensen, I. Shah, T. Knudsen, F. Elloumi, “Using Pathway Modules as Targets for Assay Development in Xenobiotic Screening”, Mol. Biosyst., 8[2], 531-542 (2012).

[3] B. Linghu, E. Snitkin, Z. Hu, Y. Xia, C. DeLisi, “Genomewide prioritization of disease genes and identification of disease-disease associations from an integrated human functional linkage network”, Genome Biology, 2009, 10(9), R91.

[4] Desmond, Adrian; Moore, James (1991). “Darwin”. London: Michael Joseph, Penguin Group. ISBN 0-7181-3430-3.

[5] Williams, G.C. (1957). “Pleiotropy, Natural Selection, and the Evolution of Senescence”. Evolution 11(4): 398-411. doi:10.2307/2406060. JSTOR 2406060.

[6] Olson H, Betton G, Robinson D, Thomas K, Monro A, Kolaja G, Lilly P, Sanders J, Sipes G, Bracken W, Dorato M, Van Deun K, Smith P, Berger B, Heller A., “Concordance of the toxicity of pharmaceuticals in humans and in animals.” Regul Toxicol Pharmacol. 2000 Aug; 32(1): 56-67. Review.

[7] N. Shanks, C.R. Greek, “Animal Models in the Light of Evolution”. Boca Raton: BrownWalker Press; 2009. 443 pages, ISBN-10: 1599425025; ISBN-13: 9781599425023.

[8] Shanks N., Greek C.R., Greek J., “Are animal models predictive for humans?” Philosophy, Ethics and Humanities in Medicine 2009, 4:2. doi:10.1186/1747-5341-4-2.

[9] FDA Issues Advice to Make Earliest Stages Of Clinical Drug Development More Efficient [http://www.fda.gov/bbs/topics/news/2006/NEW01296.html].

[10] Heywood R, “Clinical Toxicity-Could it have been predicted? Post-marketing experience.” Animal Toxicity Studies: Their Relevance for Man 1990: 57-67.

[11] Spriet-Pourra C, Auriche M, “Drug Withdrawal from Sale.” New York, 2nd edition. 1994.

[12] Grass GM, Sinko PJ. “Physiologically-based pharmacokinetic simulation modeling”. Adv Drug Deliv Rev. 2002 Mar 31; 54(3): 433-5.

[13] Greaves, P., Williams, A., and Eve, M. 2004. “First dose of potential new medicines in humans: How animals help.” Nat. Rev. Drug Discov. 3: 226-236.

[14] Wade, Nicholas (2007-05-31). “Genome of DNA Pioneer Is Deciphered”. The New York Times. http://www.nytimes.com/2007/05/31/science/31cnd_gene.html?em&ex=1180843200&en=19e1d55639350b73&ei=5087%0A.

[15] Kramer R., Cohen D., “Functional genomics to new drug targets”, Nature Reviews Drug Discovery, Vol. 3, Nov 2004, 965-972.

[16] National Research Council (NRC). (2007). Toxicity Testing in the 21st Century: A Vision and A Strategy. National Academies Press, Washington, DC.

[17] Collins FS, Gray GM, Bucher JR. 2008. Transforming Environmental Health Protection. Science 319(5865): 906-907.

[18] http://epa.gov/ncct/toxcast/

[19] http://fluidicmems.com/whats-microfluidics/

[20] http://www.ncats.nih.gov/files/factsheet-tissue-chip.pdf

[21] “Safety Evaluation Ultimately Replacing Animal Testing (SEURAT)”, 5-year EU-FP7 Research Project started Jan 2011.

[22] U.S. Environmental Protection Agency (EPA). (2006). Approaches for the Application of Physiologically Based Pharmacokinetic (PBPK) Models and Supporting Data in Risk Assessment (Final Report). Washington, DC. EPA/600/R-05/043F.

[23] World Health Organization. (2010). International Programme on Chemical Safety Harmonization Project. Characterization and Application of Physiologically Based Pharmacokinetic Models in Risk Assessment. Harmonization Project Document No. 9. World Health Organization, Geneva, Switzerland.

[24] Martin M.T., Knudsen T.B., Reif D.M., et al., “Predictive Model of Reproductive Toxicity from ToxCast High Throughput Screening”, Biology of Reproduction, Vol. 85, 327-339 (2011).

[25] Wetmore B.A., Wambaugh J.F., Ferguson S.S., et al., “Integration of Dosimetry, Exposure, and High-Throughput Screening Data in Chemical Toxicity Assessment”, Toxicological Sciences 125(1), 157-174 (2012).

[26] McLanahan E.D., El-Masri H., Sweeney A., et al., “Physiologically Based Pharmacokinetic Model Use in Risk Assessment - Why Being Published Is Not Enough”, Toxicological Sciences 126(1), 5-15 (2012).

[27] Fielden M.R., Brennan R. and Gollub J., “A gene expression biomarker provides early prediction and mechanistic assessment of hepatic tumor induction by nongenotoxic chemicals”, Toxicol. Sci., 2007, 99(1), 90-100.

[28] National Research Council, “Science for Environmental Protection: The Road Ahead”, National Academies Press 2012, ISBN 978-0-309-26489-1.

[29] U.S. EPA, Endocrine Disruptor Screening Program for the 21st Century (EDSP21 Work Plan), Summary Overview, September 30, 2011.

[30] Office of Chemical Safety & Pollution Prevention, Endocrine Disruptor Screening Program Comprehensive Management Plan, June 2012.

[31] Rappaport S.M., Smith M.T., “Environment and disease risks”, Science 330(6003): 460-461 (2010).