Progressive Fusion Network Based on Infrared Light Field Equipment for Infrared Image Enhancement

Yong Ma, Xinya Wang, Wenjing Gao, You Du, Jun Huang, and Fan Fan

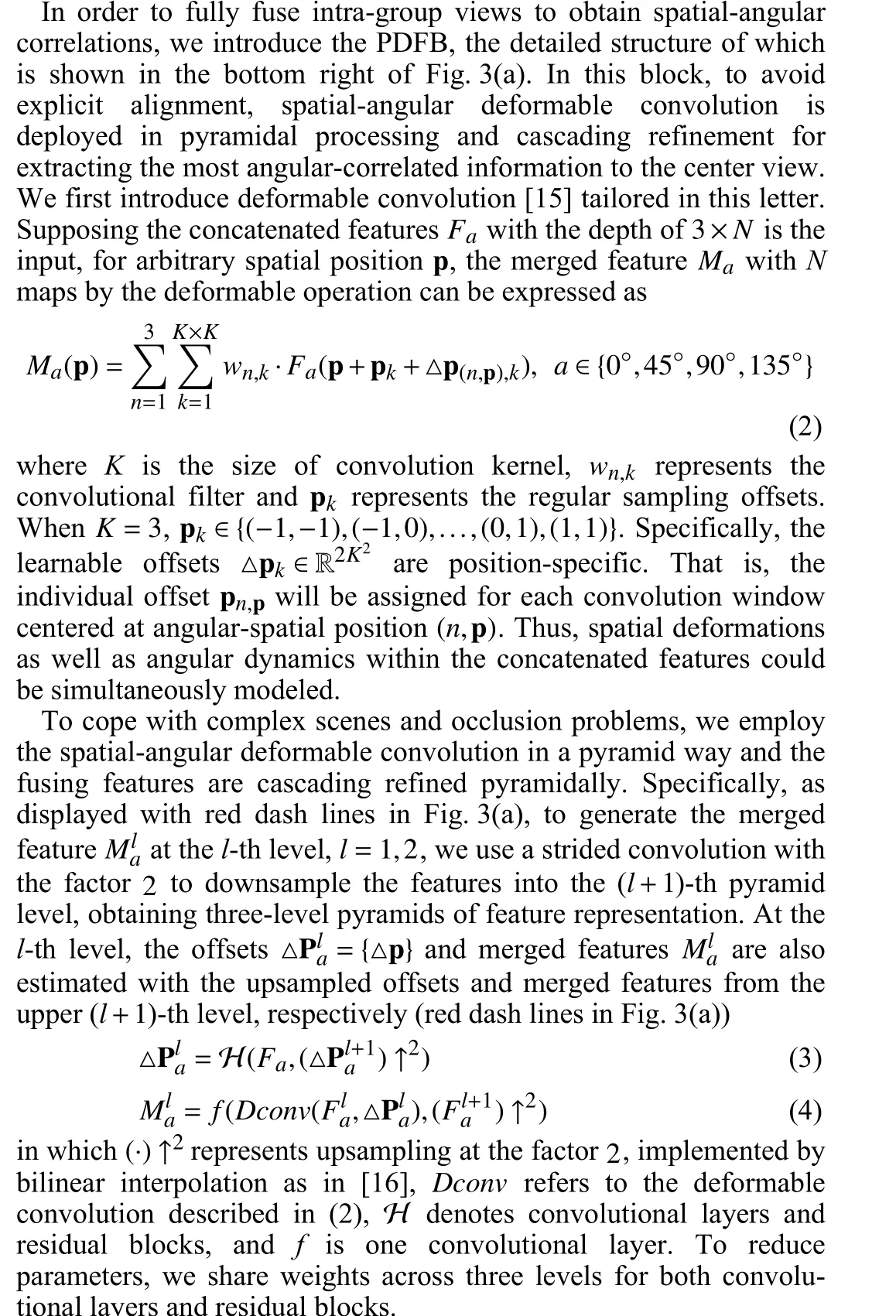

Dear Editor,

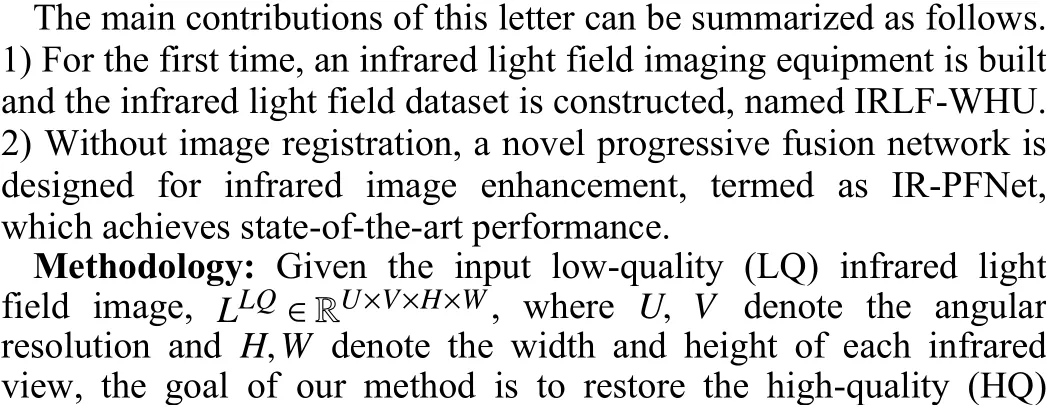

Infrared imaging, although generally of low quality, plays an important role in security surveillance and target detection. In this letter, we improve the quality of infrared images by combining hardware and software. To this end, an infrared light field imaging enhancement system is built for the first time, including a 3×3 infrared light field imaging device, a large-scale infrared light field dataset (IRLF-WHU), and a progressive fusion network for infrared image enhancement (IR-PFNet). The proposed algorithm leverages the rich angular views in an infrared light field image to explore and fuse auxiliary information for infrared image enhancement. Given an infrared light field image, the multiple views are first divided into four groups according to the angle, and each group contains parallax shifts along the same direction. Because strong spatial-angular correlations exist within each group, we customize a progressive pyramid deformable fusion (PPDF) module for intra-group fusion without explicit alignment. In the PPDF module, the deformation and parallax are modeled in a progressive pyramid manner. To integrate the supplementary information from all directions, we further propose a recurrent attention fusion (RAF) module, which constructs an attention fusion block to learn the residual recurrently and provides several intermediate results for multi-supervision. Experiments on our proposed IRLF-WHU dataset demonstrate that IR-PFNet achieves state-of-the-art performance under different degradations. The dataset is available at: https://github.com/wxywhu/IRLF-WHU, and the code is available at: https://github.com/wxywhu/IR-PFNet.

Infrared imaging, which captures the thermal radiation emitted by objects, is an important technology in many fields, such as surveillance systems, security monitoring, and military target detection [1]-[3]. To improve the quality of infrared imaging, various methods have been developed for either the imaging system or the infrared images themselves. A few methods are dedicated to improving the infrared detectors [4]-[6], which are usually expensive and not suitable for wide application. Other algorithms improve infrared image quality as post-processing techniques. Based on the convolutional neural network (CNN), some approaches were proposed for single thermal image enhancement [7], [8]. Several methods also registered and fused multiple infrared images for quality enhancement [9], [10]. However, the former relies on a single input only and thus has limited performance. The performance of the latter depends heavily on image registration, which is itself a difficult problem. Therefore, it is desirable to combine software and hardware techniques to design an efficient system for infrared image enhancement. To address this issue, we design infrared light field imaging equipment and a progressive fusion algorithm to enhance the quality of infrared images.

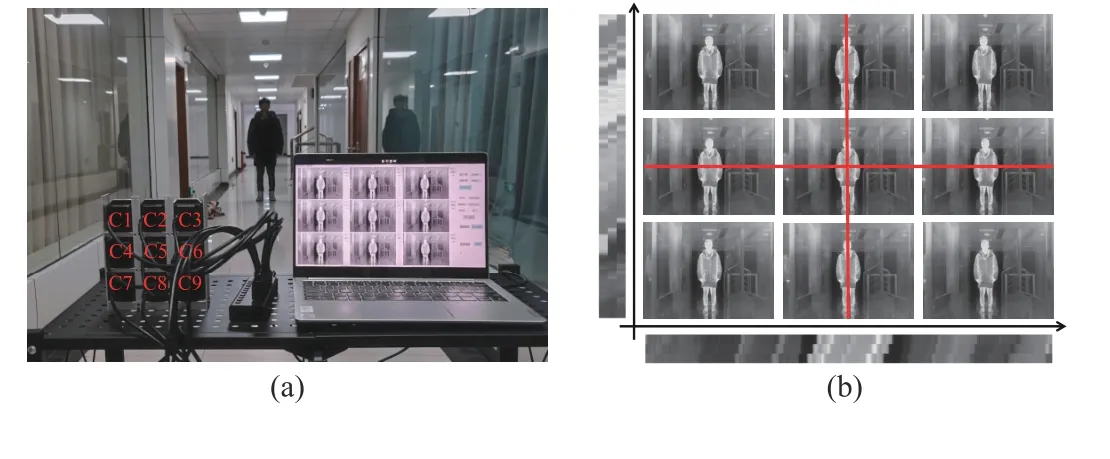

Light field imaging, which records different angular views as sub-aperture images, is well developed for RGB images. The strong correlations among sub-aperture images provide abundant supplementary information, which has proven beneficial for super-resolution (SR) techniques [11]-[13]. Motivated by this advantage, we design infrared light field imaging equipment composed of nine infrared cameras arranged in a regular 3×3 grid. As shown in Fig. 1, in one shot, the sub-aperture images captured by the device exhibit sub-pixel shifts in fixed directions relative to the central image. We therefore expect to use the rich angular information to improve the quality of the central view. Based on this equipment, we construct an infrared light field dataset covering four scene types: building, people, car, and others. The whole dataset contains 1132 infrared light field images, each of size 3×3×384×280 pixels.

Fig. 1. Infrared light field imaging equipment. (a) The designed equipment. This device is composed of 9 infrared cameras arranged in a regular grid of 3×3. (b) The infrared sub-images. To show differences between sub-images, we extracted lines horizontally (bottom of sub-images) and vertically (left of sub-images) along the red lines for visualization.

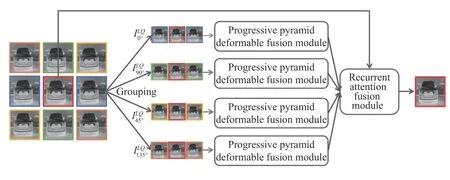

Considering the rich angular information in infrared light field images, we customize a novel progressive fusion network for central infrared image enhancement, termed IR-PFNet. As shown in Fig. 2, we divide the infrared sub-images into four groups according to the direction. Each group is fed into the PPDF module for intra-group fusion, which avoids explicit alignment. Subsequently, the intermediate features from different directions are used in the RAF module to provide auxiliary information for the central infrared image. In this way, IR-PFNet makes full use of the rich angular information to improve the quality of the central image.
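The grouping step can be sketched in a few lines of Python. This is a minimal illustration, assuming the four directions are the horizontal, vertical, diagonal, and anti-diagonal lines through the central view of the 3×3 grid, so that each group holds three views. The function name `group_views_by_direction` and the (row, column) indexing are hypothetical and are not taken from the released code.

```python
from typing import Dict, List, Tuple

View = Tuple[int, int]  # (row, col) index into the camera grid


def group_views_by_direction(grid_size: int = 3) -> Dict[str, List[View]]:
    """Split a square grid of views into four directional groups.

    Assumption: every group is a straight line of views passing through
    the central view, so the central view appears in all four groups.
    """
    c = grid_size // 2  # index of the central row/column
    return {
        "horizontal": [(c, j) for j in range(grid_size)],
        "vertical": [(i, c) for i in range(grid_size)],
        "diagonal": [(i, i) for i in range(grid_size)],
        "anti_diagonal": [(i, grid_size - 1 - i) for i in range(grid_size)],
    }


groups = group_views_by_direction()
for name, views in groups.items():
    print(name, views)
```

Under this assumption every one of the nine views is used at least once, and the central view is shared by all groups, which matches the goal of enhancing the central image.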

Fig. 2. The whole network of our proposed IR-PFNet.

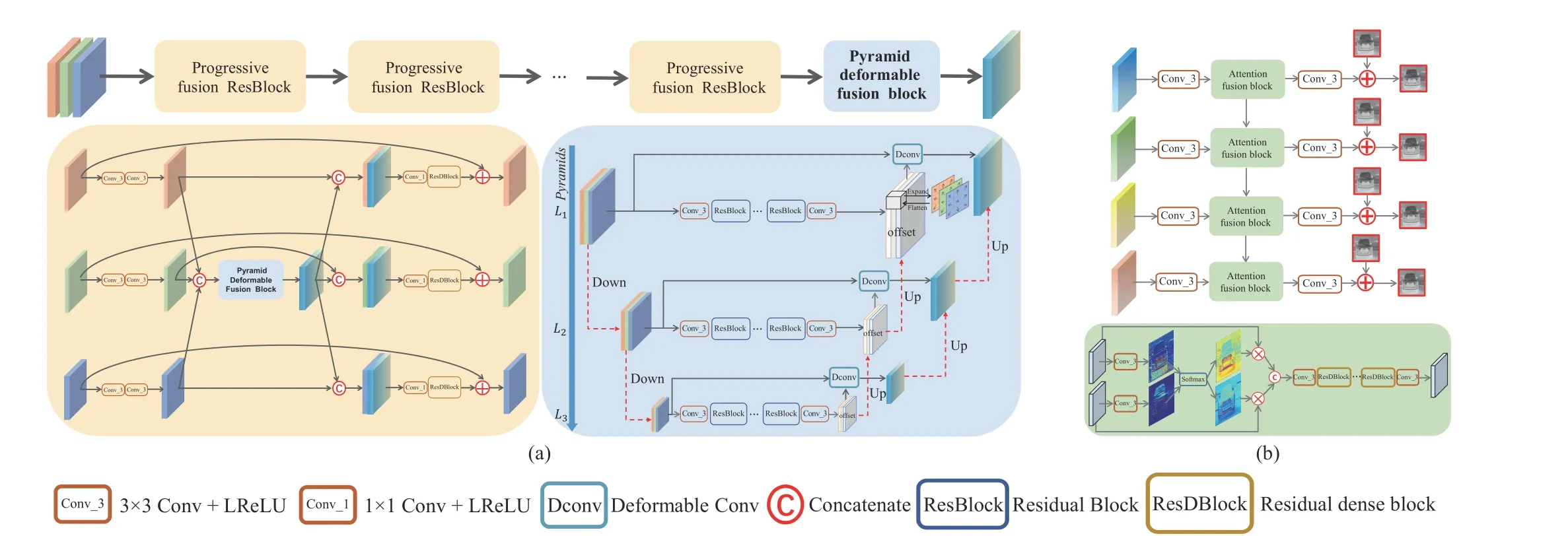

Fig. 3. The detailed architecture in our IR-PFNet. (a) PPDF module. This module consists of a series of PFRBs followed by a PDFB; (b) RAF module.

where θ represents the parameters of our method. Note that in this letter we focus on improving the quality of the central infrared view, although IR-PFNet could process any sub-aperture infrared view thanks to its flexible network design.

Network design: As illustrated in Fig. 2, IR-PFNet groups the input LQ infrared light field image into four stacks according to the direction. Taking one stack containing sub-pixel shifts along a specific direction as input, the PPDF module is designed to fully extract spatial information and fuse the angular information, capturing intra-group spatial-parallax correlations. Hence, we employ a progressive fusion structure in which the deep features are gradually extracted and fused. For the angular information fusion, since regular convolution cannot capture the spatial deformation and angular dynamics across multiple views well, we use spatial-angular deformable convolution to learn the kernel offsets, avoiding explicit alignment. Specifically, the spatial-angular deformable convolution is deployed in a pyramid structure to model the deformation at multiple levels and obtain the information most angularly correlated to the center view. After acquiring the supplementary information from different directions, the RAF module with its attention fusion block integrates all auxiliary information for the central image in a recurrent way, which benefits our task by enabling the model to pay different attention across angles.
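What lets a deformable convolution avoid explicit alignment is that each kernel tap reads the input at a learned, fractional offset. The following sketch shows only that sampling step, using bilinear interpolation on a single-channel map. It illustrates generic deformable sampling, not the spatial-angular variant used in IR-PFNet; the names `bilinear_sample` and `deformable_tap_sum`, the fixed offsets, and the zero padding outside the image are assumptions made for illustration.

```python
import math
from typing import List, Sequence, Tuple

Image = List[List[float]]


def bilinear_sample(img: Image, y: float, x: float) -> float:
    """Sample img at fractional (y, x); positions outside the image count as 0."""
    h, w = len(img), len(img[0])
    y0, x0 = math.floor(y), math.floor(x)
    wy, wx = y - y0, x - x0
    value = 0.0
    for dy, sy in ((0, 1.0 - wy), (1, wy)):
        for dx, sx in ((0, 1.0 - wx), (1, wx)):
            yy, xx = y0 + dy, x0 + dx
            if 0 <= yy < h and 0 <= xx < w:
                value += sy * sx * img[yy][xx]
    return value


def deformable_tap_sum(
    img: Image,
    center: Tuple[int, int],
    weights: Sequence[float],
    offsets: Sequence[Tuple[float, float]],
) -> float:
    """One output value of a 3x3 deformable convolution.

    weights: 9 kernel weights in row-major order.
    offsets: 9 (dy, dx) offsets, one per kernel tap (learned in a real network).
    """
    cy, cx = center
    taps = [(ky, kx) for ky in (-1, 0, 1) for kx in (-1, 0, 1)]
    return sum(
        w * bilinear_sample(img, cy + ky + oy, cx + kx + ox)
        for w, (ky, kx), (oy, ox) in zip(weights, taps, offsets)
    )


img = [[float(r * 4 + c) for c in range(4)] for r in range(4)]
center_only = [0.0] * 4 + [1.0] + [0.0] * 4  # kernel that keeps only the center tap
half_pixel_right = [(0.0, 0.5)] * 9          # every tap shifted half a pixel to the right
shifted = deformable_tap_sum(img, (1, 1), center_only, half_pixel_right)
print(shifted)
```

With the center-only kernel, the result is the input value halfway between two neighboring pixels, which is how a sub-pixel parallax shift can be compensated without a separate registration stage.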

1) Progressive pyramid deformable fusion: As shown in Fig. 3(a), a series of progressive fusion residual blocks (PFRBs) are deployed in the PPDF module to fully extract intra-group spatial-parallax correlations. After that, the learned features from each direction are merged by a pyramid deformable fusion block (PDFB).

We show the detailed structure of the PFRB in the bottom left of Fig. 3(a). It takes the sub-views as input in three paths. Sharing the same insight as [14], we first apply two 3×3 convolutional layers to extract self-independent features with N maps from each infrared view. These feature maps are then concatenated and merged into one part with a depth of 3×N. Next, we feed the concatenated features into the PDFB, intending to fuse the features that are most angularly correlated to the center view. After that, the fused feature is concatenated to all the previous maps, and we adopt one 1×1 convolutional layer followed by a dense residual block to extract both spatial and angular information for residual learning. In the PFRB, we share weights across paths for both the convolutional layers and the residual blocks.

2) Recurrent attention fusion: After obtaining the most spatial-angular correlated features from different directions with the PPDF module, we design the RAF module to integrate all auxiliary information for the central image. As depicted in Fig. 3(b), in light of the complementarity of the input features from different directions, a recurrent structure is adopted in the RAF module to fuse the features step by step. At each step, the merged feature is first processed by one 3×3 convolutional layer. We then use the attention fusion block to fuse two features from different directions. The output of the attention fusion block is fed back to itself in the next step and simultaneously sent into one 3×3 convolutional layer to learn the residual information for the HQ infrared image.

Concretely, the attention fusion block, displayed at the bottom of Fig. 3(b), is expected to benefit our task by enabling the model to pay different attention across angles. Taking two angular features as input, we adopt a 3×3 convolutional layer to generate a 2D feature map for each of them. To produce the attention masks, the Softmax function is applied to the two maps along the angular dimension. Since angular attention is supposed to work as a guide for efficiently integrating angular features, we apply each attention mask to its input feature through element-wise multiplication. Then, the weighted features are concatenated and sent into deep feature extraction blocks, which consist of four dense residual blocks with two 3×3 convolutional layers at the head and tail positions.
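The masking step of the attention fusion block can be sketched as follows. This is a simplified illustration, assuming single-channel features and taking the two 2D score maps as given rather than producing them with a 3×3 convolution. The names `angular_softmax` and `apply_mask` are hypothetical.

```python
import math
from typing import List, Tuple

Map2D = List[List[float]]


def angular_softmax(score_a: Map2D, score_b: Map2D) -> Tuple[Map2D, Map2D]:
    """Per-pixel softmax across two score maps, i.e. along the angular dimension."""
    mask_a: Map2D = []
    mask_b: Map2D = []
    for row_a, row_b in zip(score_a, score_b):
        out_a, out_b = [], []
        for a, b in zip(row_a, row_b):
            m = max(a, b)  # subtract the maximum for numerical stability
            ea, eb = math.exp(a - m), math.exp(b - m)
            out_a.append(ea / (ea + eb))
            out_b.append(eb / (ea + eb))
        mask_a.append(out_a)
        mask_b.append(out_b)
    return mask_a, mask_b


def apply_mask(feature: Map2D, mask: Map2D) -> Map2D:
    """Element-wise multiplication of a feature map by its attention mask."""
    return [[f * m for f, m in zip(f_row, m_row)] for f_row, m_row in zip(feature, mask)]


# Stand-ins for the 2D maps that a 3x3 convolution would produce.
score_a = [[0.0, 2.0], [1.0, -1.0]]
score_b = [[0.0, 0.0], [1.0, 1.0]]
mask_a, mask_b = angular_softmax(score_a, score_b)

feature_a = [[1.0, 1.0], [1.0, 1.0]]
weighted_a = apply_mask(feature_a, mask_a)
print(weighted_a)
```

Because the two masks sum to one at every pixel, the block trades off the two directions locally instead of applying one global weight per direction.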

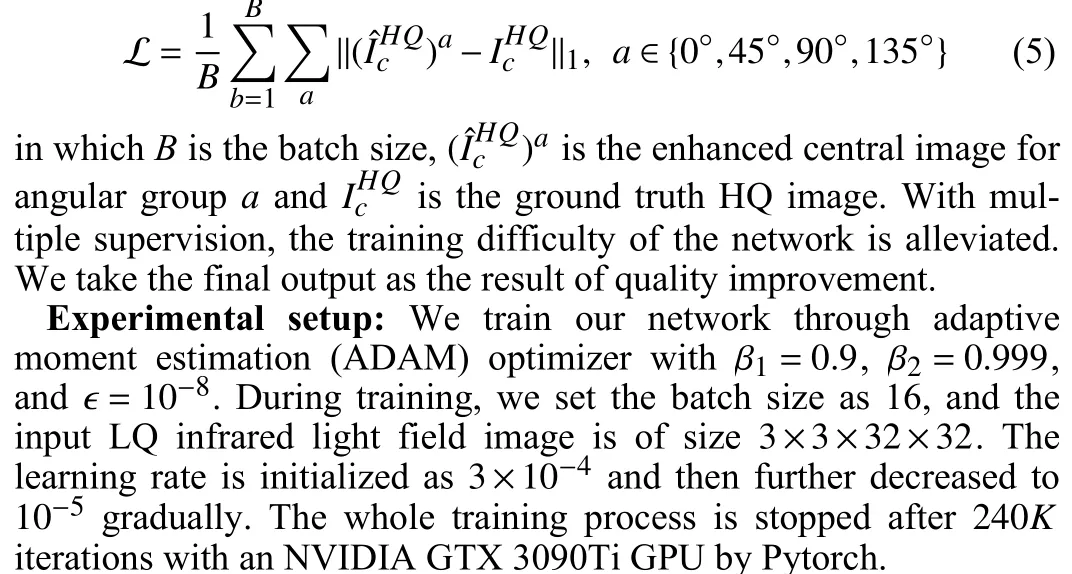

Loss function: Since our method integrates auxiliary information in a recurrent way in the RAF module, as demonstrated in Fig. 3(b), we adopt a multi-supervised approach to train the whole network, which can be formulated as

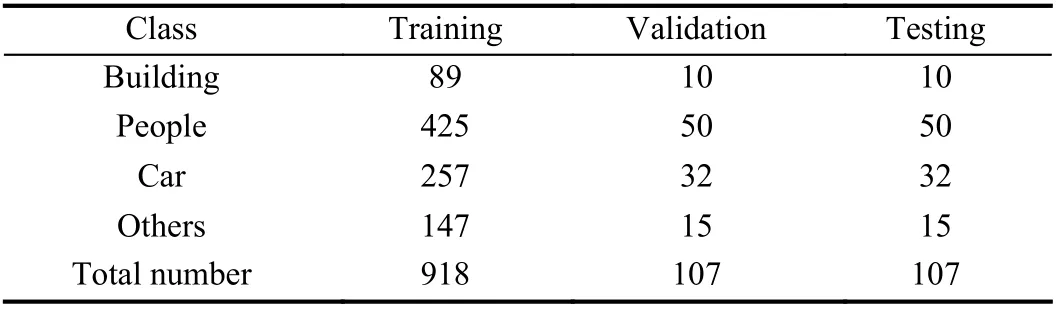
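Because the RAF module emits one intermediate result per recurrent step, multi-supervision amounts to penalizing every intermediate result against the same HQ target. The sketch below shows one plausible form, assuming a mean absolute error per step and equal weights. Both choices, as well as the name `multi_supervised_loss`, are assumptions for illustration and may differ from the formulation used in this letter.

```python
from typing import List, Optional, Sequence

Image = List[List[float]]


def mean_abs_error(pred: Image, target: Image) -> float:
    """Mean absolute difference between two images of the same size."""
    total, count = 0.0, 0
    for p_row, t_row in zip(pred, target):
        for p, t in zip(p_row, t_row):
            total += abs(p - t)
            count += 1
    return total / count


def multi_supervised_loss(
    intermediates: Sequence[Image],
    target: Image,
    weights: Optional[Sequence[float]] = None,
) -> float:
    """Weighted sum of per-step losses (assumed: L1 distance, equal weights)."""
    if weights is None:
        weights = [1.0] * len(intermediates)
    return sum(w * mean_abs_error(pred, target) for w, pred in zip(weights, intermediates))


target = [[1.0, 2.0], [3.0, 4.0]]
step_outputs = [
    [[0.0, 2.0], [3.0, 4.0]],  # early step: one pixel is off by 1
    [[1.0, 2.0], [3.0, 4.0]],  # final step: matches the target
]
loss = multi_supervised_loss(step_outputs, target)
print(loss)
```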

Previous studies on image enhancement [8], [13], [14] were rarely developed for infrared images. The lack of standard, large-scale infrared image datasets limits the development of the field. In this letter, we divide our proposed IRLF-WHU dataset into training, validation, and testing sets according to Table 1. For quantitative evaluation, the original images serve as ground-truth HQ images, and we generate the LQ infrared image arrays by applying degradation operations such as reducing details, blurring, and adding noise.

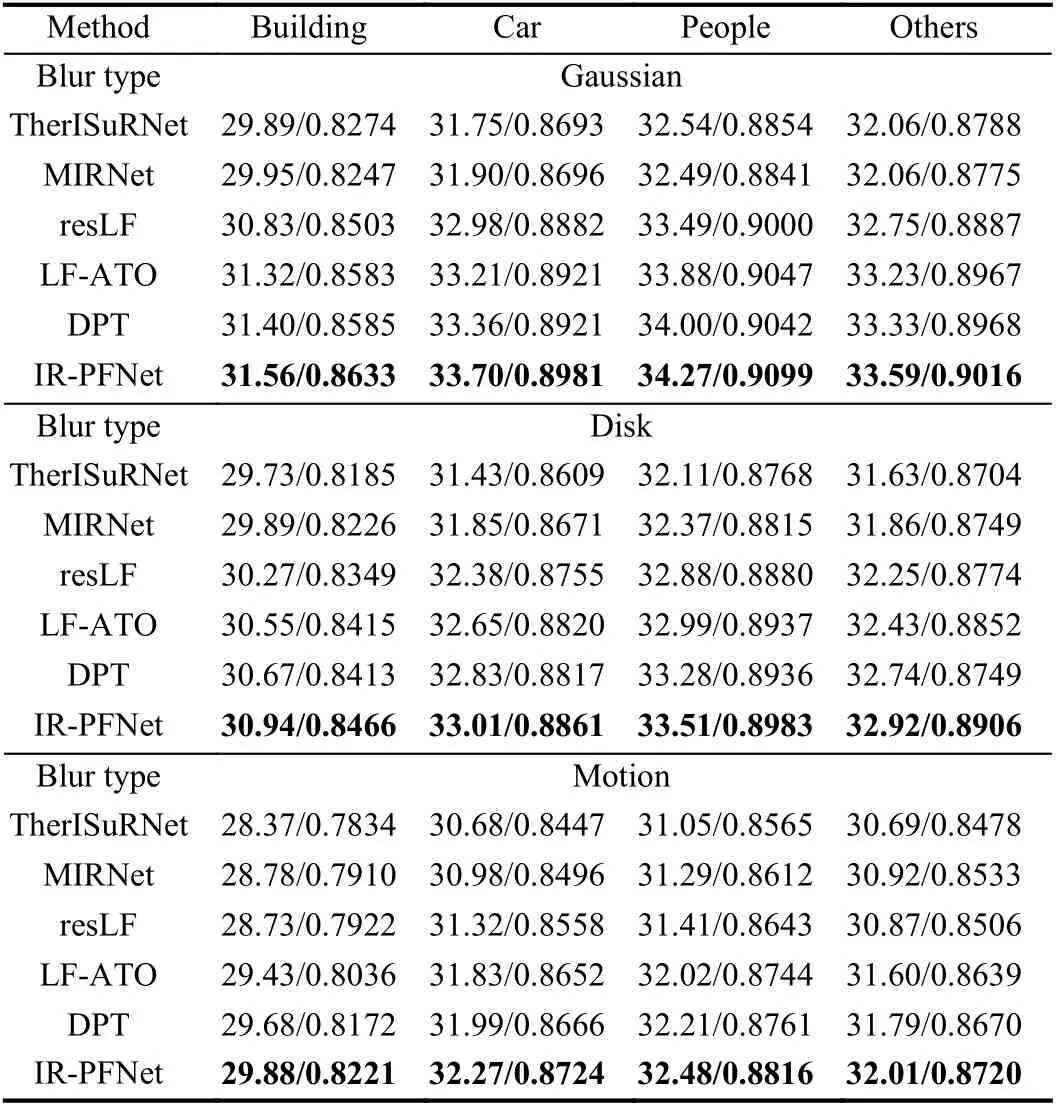

Since we are the first to design an infrared light field device, the methods available for comparison are either single image enhancement or light field image enhancement algorithms. We therefore compare our proposed method with five algorithms, including two state-of-the-art deep single image enhancement methods, i.e., TherISuRNet [8] and MIRNet [17], and three advanced deep light field image enhancement methods, i.e., resLF [13], LF-ATO [12], and DPT [11]. For a fair comparison, these methods are trained from scratch on the same dataset to achieve their best performance.

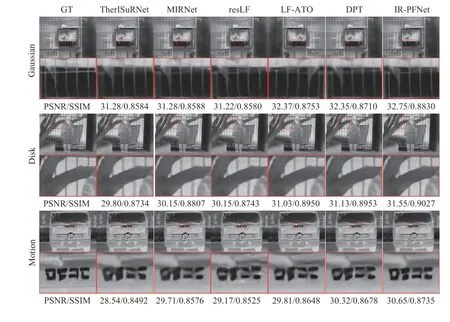

Experiments on different blur degradations: We consider three kinds of blur that commonly occur in infrared images, i.e., Gaussian blur, disk blur, and motion blur. Specifically, the Gaussian blur kernel is of size 7×7 with a width of 0.6, and the radius of the disk blur is 2.5. For the motion blur, we set the angle to 10 degrees and the motion displacement to 10 pixels. To produce LQ images, we first blur the HQ image and then apply bicubic downsampling and upsampling with a scale factor of 4 to reduce details. Small white Gaussian noise with standard deviation is also added to model real LQ degradation.
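As an illustration of the Gaussian blur setting, the sketch below builds a normalized 7×7 kernel. It assumes that the stated width of 0.6 is the standard deviation of the Gaussian in pixels, which the text does not spell out; the function name `gaussian_kernel` is hypothetical. The disk blur, motion blur, and bicubic resampling steps are not shown.

```python
import math
from typing import List


def gaussian_kernel(size: int = 7, sigma: float = 0.6) -> List[List[float]]:
    """Return a size x size Gaussian kernel whose entries sum to 1.

    Assumption: `sigma` is the standard deviation in pixels and `size` is odd.
    """
    half = size // 2
    raw = [
        [math.exp(-(y * y + x * x) / (2.0 * sigma * sigma)) for x in range(-half, half + 1)]
        for y in range(-half, half + 1)
    ]
    total = sum(sum(row) for row in raw)
    return [[v / total for v in row] for row in raw]


kernel = gaussian_kernel(7, 0.6)
print(round(kernel[3][3], 4))  # weight of the central tap
```

With such a small standard deviation, most of the weight stays on the central tap and its immediate neighbors, so this blur is mild compared with the disk and motion blurs.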

The quantitative results on different blur types are reported in Table 2. As can be observed, our proposed IR-PFNet achieves the best performance in terms of average peak signal-to-noise ratio (PSNR) and structural similarity (SSIM) for all scenes under the different blur degradations. More specifically, our method outperforms the second-best method by an average of 0.2 dB in PSNR. We note that IR-PFNet takes angular information from different directions into consideration, unlike DPT [11], which uses the whole light field image as input, and thereby extracts spatial-angular correlations more fully. While multiple views are also grouped in resLF [13] and LF-ATO [12], IR-PFNet integrates the angular information in a pyramid manner and thus further improves the quality of infrared images.
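For reference, PSNR follows the standard definition 10·log10(peak² / MSE). The sketch below computes it for two images stored as nested lists, assuming an 8-bit intensity range with a peak value of 255. The peak value and the function name `psnr` are assumptions, since the evaluation code is not reproduced here. SSIM is not shown.

```python
import math
from typing import List

Image = List[List[float]]


def psnr(reference: Image, restored: Image, peak: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB between two images of equal size."""
    squared_error, count = 0.0, 0
    for ref_row, res_row in zip(reference, restored):
        for r, s in zip(ref_row, res_row):
            squared_error += (r - s) ** 2
            count += 1
    mse = squared_error / count
    if mse == 0.0:
        return math.inf  # identical images
    return 10.0 * math.log10(peak * peak / mse)


hq = [[100.0, 120.0], [140.0, 160.0]]
enhanced = [[101.0, 121.0], [141.0, 161.0]]  # every pixel is off by one gray level
print(psnr(hq, enhanced))
```

Since PSNR is logarithmic in the mean squared error, a gain of 0.2 dB corresponds to a reduction of the mean squared error by roughly 4.5%.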

Table 1. Division of the Proposed IRLF-WHU Dataset

Table 2. Quantitative Results (PSNR/SSIM) on Different Blur Types

Fig. 4 provides the qualitative results on three test images blurred by different degradations. Although image quality enhancement can reasonably reduce the blur artifacts, the resulting images are often over-smoothed and lack details. Compared with the single image enhancement methods (TherISuRNet and MIRNet), the light field image enhancement methods produce better results with the help of auxiliary views. Overall, IR-PFNet restores sharper structural details and is more robust to blur degradations because it explores spatial-angular information more effectively.

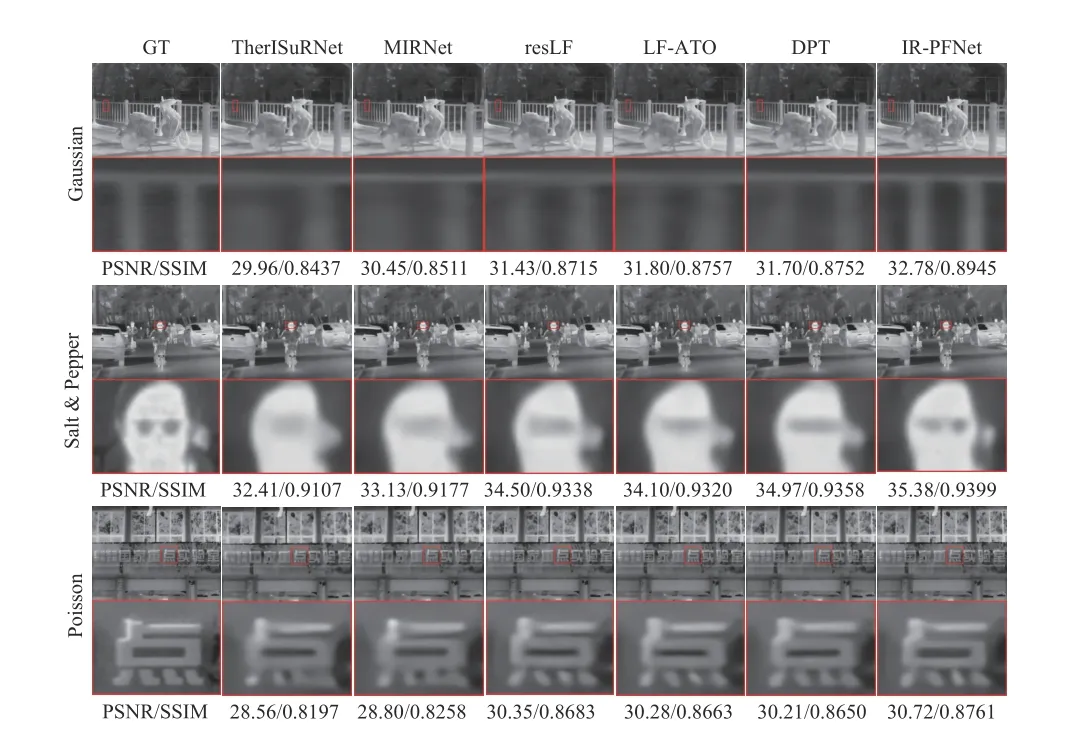

Experiments on different noise degradations: We also conduct experiments on degradation by different types of noise, including salt-and-pepper noise with density 0.06, additive white Gaussian noise with zero mean and standard deviation 10, and Poisson noise whose level is determined by the brightness of the input image. After adding noise, we use bicubic downsampling and upsampling with a scale factor of 4 to reduce details, and blur the HQ images with a Gaussian kernel of size 5×5 and width 0.5 to produce the LQ images.
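Two of the noise models can be sketched with the Python standard library alone. The sketch assumes an 8-bit intensity range of [0, 255], that salt and pepper pixels are equally likely, and that noisy values are clipped back to the valid range; none of these details are stated in the text, and the function names are hypothetical. Poisson noise is not shown.

```python
import random
from typing import List, Optional

Image = List[List[float]]


def add_salt_and_pepper(
    img: Image,
    density: float = 0.06,
    rng: Optional[random.Random] = None,
    low: float = 0.0,
    high: float = 255.0,
) -> Image:
    """Replace roughly `density` of the pixels by `low` or `high` with equal chance."""
    if rng is None:
        rng = random.Random()
    out = []
    for row in img:
        new_row = []
        for v in row:
            if rng.random() < density:
                new_row.append(high if rng.random() < 0.5 else low)
            else:
                new_row.append(v)
        out.append(new_row)
    return out


def add_gaussian_noise(
    img: Image,
    std: float = 10.0,
    rng: Optional[random.Random] = None,
    low: float = 0.0,
    high: float = 255.0,
) -> Image:
    """Add zero-mean Gaussian noise and clip the result to [low, high]."""
    if rng is None:
        rng = random.Random()
    return [[min(high, max(low, v + rng.gauss(0.0, std))) for v in row] for row in img]


rng = random.Random(0)  # fixed seed so the example is repeatable
flat = [[128.0] * 100 for _ in range(100)]
salted = add_salt_and_pepper(flat, 0.06, rng)
noisy = add_gaussian_noise(flat, 10.0, rng)
changed = sum(v != 128.0 for row in salted for v in row)
print(changed / 10000.0)  # fraction of corrupted pixels, close to the density
```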

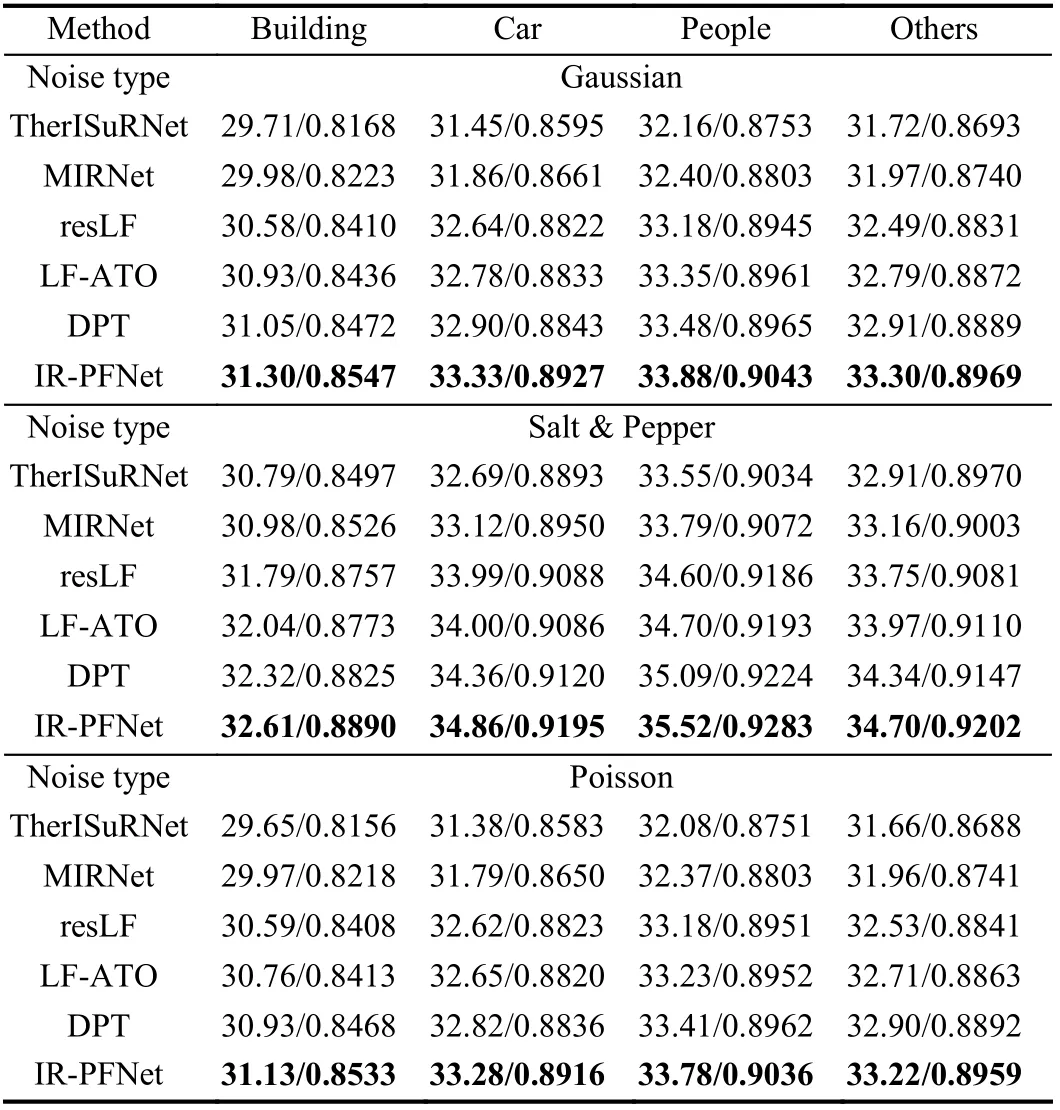

Table 3 reports the PSNR and SSIM results for different types of noise on the test set. As shown in Table 3, IR-PFNet is superior in all scenes degraded by different types of noise. Owing to the progressive pyramid integration and recurrent attention fusion in our proposed model, IR-PFNet can fully exploit spatial-angular information, leading to better enhancement results.

Table 3. Quantitative Results (PSNR/SSIM) on Different Noise Types

Fig. 4. Qualitative results of three blur types.

Fig. 5 shows the enhanced images produced by all compared methods for three different types of noise. From the visual results, we can see that most other methods generate blurry or misleading structures. In contrast, our method reconstructs clear and correct textures that are closer to the ground truth, demonstrating higher reconstruction quality.

Conclusion: In this work, we improve the quality of infrared images with our proposed infrared light field imaging enhancement system. We first build 3×3 infrared light field imaging equipment and collect a large-scale infrared light field dataset, namely IRLF-WHU. Relying on this dataset, we tailor IR-PFNet to exploit spatial-angular correlations and integrate the auxiliary information for the final result. Specifically, spatial-angular correlations are fused by progressive fusion residual blocks coupled with pyramid deformable convolution, which avoids registration. The attention fusion module is deployed in a recurrent manner to integrate features from all directions. Based on these two fusion modules, IR-PFNet achieves better results than state-of-the-art approaches.

Fig. 5. Results of three noise types generated by competing methods.

IEEE/CAA Journal of Automatica Sinica, 2022, Issue 9
