Application of endoscopic ultrasonography for detecting esophageal lesions based on convolutional neural network

Gao-Shuang Liu, Pei-Yun Huang, Min-Li Wen, Shuai-Shuai Zhuang, Jie Hua, Xiao-Pu He

Abstract

Key Words: Endoscopic ultrasonography; Convolutional neural network; Esophageal lesion; Automatically; Classify; Identify

INTRODUCTION

Endoscopic ultrasonography (EUS) is a mature and widely used invasive examination technology. It combines ultrasound and endoscopy: a miniature high-frequency ultrasound probe is mounted on the tip of the endoscope to directly observe gastrointestinal mucosal lesions once the endoscope enters the body. It can be used for preoperative staging of digestive tract tumors[1], estimating the invasion depth and range[2], assessing the nature of submucosal lesions of the digestive tract wall[3-4], and diagnosing bilio-pancreatic system tumors[5-6]. The esophagus is the narrowest part of the digestive tract, and its diameter is not uniform. EUS can be used for high-precision evaluation of the esophagus. At present, common lesions of the esophagus include submucosal tumors (including leiomyoma, stromal tumor, and lipoma), precancerous lesions, and esophageal cancer.

China has a high incidence of esophageal cancer, and the current 5-year survival rate of esophageal cancer is 15%-25%[7-10]. A 13.5-year follow-up study has shown that 74% of patients with high-grade intraepithelial neoplasia (HGIN) have developed esophageal cancer[11]. Surgical and endoscopic resection (ER) has been approved as the standard treatment for esophageal HGIN, and endoscopic therapy has become a widely used method because of its safety, efficiency, and low risk of complications[12-13]. Compared with surgical treatment, endoscopic therapy can reduce complications and preserve esophageal function[9]. EUS plays an important role in the evaluation of esophageal lesions and in the determination of the need for surgery.

With the increasing clinical use of endoscopic ultrasonography, more and more endoscopic physicians are needed. However, endoscopic ultrasonography differs from other ultrasound techniques in that the ultrasonic examination is performed on the basis of digestive endoscopy; therefore, the examiners should be professional endoscopic physicians with ultrasonic training. In addition, with the increase in chronic diseases, ageing populations, and dwindling resources, we need to shift to models that can intelligently extract, analyze, interpret, and understand increasingly complex data. The rapidly evolving field of machine learning is key to this paradigm shift. Artificial intelligence (AI) is a mathematical predictive technology that automatically learns and recognizes data patterns. Deep learning is an AI algorithm and an advanced machine learning method using neural networks[14]. It aims to build a neural network that mimics the brain's mechanisms for interpreting data, such as images, sounds, and text. Deep learning combines low-level features to form more abstract high-level representations (attribute categories or features) and thereby discovers distributed feature representations of data. It is often used in AI algorithms and has been applied to medical diagnosis[15-18]. Deep learning has already been studied in ultrasound, including the classification of thyroid nodules[19-20], the diagnosis of liver diseases[21], the identification of benign and malignant breast masses[22], the imaging interpretation of carotid artery intima media thickness[23], and the prediction of pancreatic intraductal papillary mucinous tumors[5]. However, no research on the identification and classification of esophageal lesions by endoscopic ultrasonography has been reported.

This study aimed to construct a framework of deep learning network and study the application of deep learning in esophageal EUS in identifying the origin of submucosal lesions and defining the scope of esophageal lesions.

MATERIALS AND METHODS

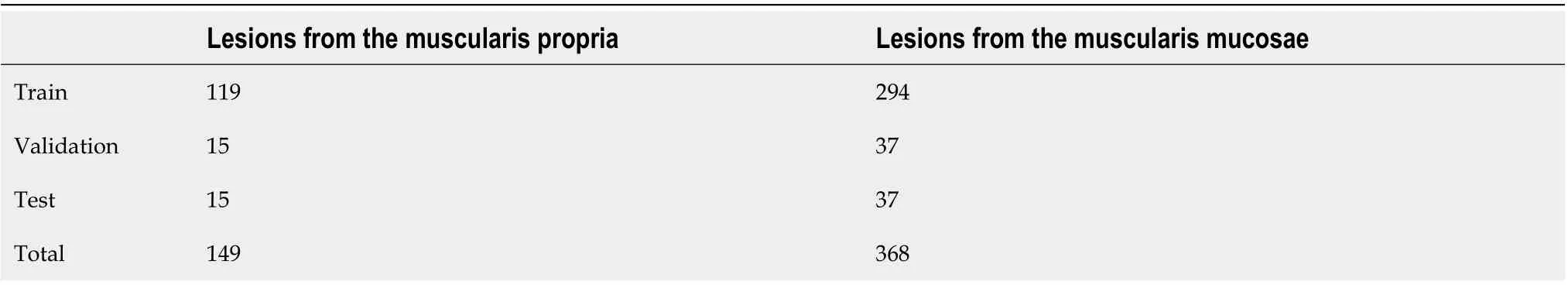

The method proposed in this paper included the following two parts: (1) A location module, an object detection network that locates the main feature regions of the image for the subsequent classification task; and (2) A classification module, a conventional classification convolutional neural network that classifies the image patches cut out by the object detection network. In the evaluation phase, all the metrics were calculated based on the ten-fold cross-validation results. The dataset was divided into training (80%), testing (10%), and validation (10%) datasets. The detailed data statistics distribution from the esophageal lesion database is shown in Tables 1 and 2.
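The 80%/10%/10% division described above can be sketched as follows. This is a minimal illustration, not the authors' code: the function name `split_dataset`, the fixed seed, and the image-level (rather than patient-level) shuffling are assumptions, and the count of 1670 images is taken from the Research methods summary of this article.

```python
import random

def split_dataset(items, train_pct=80, test_pct=10, seed=0):
    """Shuffle items and split them into train/test/validation lists.

    Percentages are integers so the split sizes avoid float rounding.
    Whatever remains after train and test goes to validation.
    """
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # seeded for reproducibility
    n = len(shuffled)
    n_train = n * train_pct // 100
    n_test = n * test_pct // 100
    train = shuffled[:n_train]
    test = shuffled[n_train:n_train + n_test]
    val = shuffled[n_train + n_test:]
    return train, test, val

# Hypothetical usage: image indices 0..1669 stand in for the image files.
train, test, val = split_dataset(range(1670))
print(len(train), len(test), len(val))
```

In practice, images from the same patient would usually be kept in the same subset to avoid leakage; the source does not state how this was handled.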

Location module

For the endoscopic ultrasound images, classifying the whole image was not appropriate because background information can introduce irrelevant features and affect the decision of the model. Therefore, the core area of the image was located first and then used to classify the image[24]. In this study, the RetinaNet object detection network was used. RetinaNet is a one-stage object detection method. It uses the focal loss cost function in the classification branch, which addresses the imbalance between positive and negative samples in one-stage object detection.
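To illustrate how focal loss down-weights easy examples, the sketch below computes the binary focal loss for a single prediction. The values `gamma=2.0` and `alpha=0.25` are the defaults commonly reported for RetinaNet and are assumptions here, since the article does not state the values used.

```python
import math

def focal_loss(p, y, gamma=2.0, alpha=0.25):
    """Binary focal loss for one prediction.

    p: predicted probability of the positive class (0..1)
    y: ground-truth label, 1 (positive) or 0 (negative)
    gamma, alpha: assumed hyperparameters (not given in the article)
    """
    p = min(max(p, 1e-7), 1.0 - 1e-7)          # clip to avoid log(0)
    p_t = p if y == 1 else 1.0 - p              # probability of the true class
    alpha_t = alpha if y == 1 else 1.0 - alpha  # class-balancing weight
    # (1 - p_t) ** gamma shrinks the loss of well-classified examples
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)

easy = focal_loss(0.9, 1)  # confident and correct: small loss
hard = focal_loss(0.1, 1)  # confident and wrong: large loss
print(easy, hard)
```

With `gamma=0` the modulating factor disappears and the expression reduces to alpha-weighted cross-entropy, which is why the many easy background anchors dominate less as `gamma` grows.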

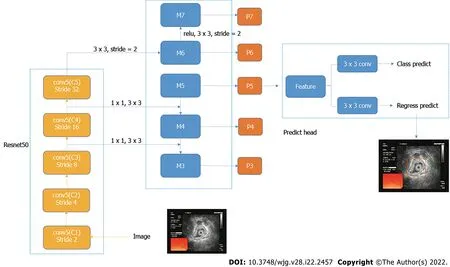

The ResNet50 network was used in the feature extraction part of RetinaNet, and a feature pyramid network (FPN) structure was added; that is, a top-down pathway with lateral connections was adopted to detect objects of different scales. Finally, the features obtained were fed to the classification branch and the regression branch for prediction. The classification branch was retained because multitask training can improve the accuracy of the model and because its output can be compared experimentally with that of the classification network (Figure 1).

For object detection anchor setting, this study adopted the default anchor setting methods, with anchor sizes of 32, 64, 128, 256, and 512 and aspect ratios of 1:2, 2:1, and 1:1.
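The anchor configuration above can be enumerated with a short sketch. It assumes the ratio means height divided by width and that each anchor keeps the area `size * size`; the extra per-level scale multipliers used by some RetinaNet implementations are not modeled, and the function name is hypothetical.

```python
import math

ANCHOR_SIZES = [32, 64, 128, 256, 512]
ASPECT_RATIOS = [0.5, 1.0, 2.0]  # assumed to mean height / width

def anchor_shapes(sizes=ANCHOR_SIZES, ratios=ASPECT_RATIOS):
    """Return (width, height) for every size/ratio combination.

    Each anchor keeps the area size * size while its shape follows the ratio.
    """
    shapes = []
    for size in sizes:
        area = float(size * size)
        for ratio in ratios:
            width = math.sqrt(area / ratio)
            height = width * ratio
            shapes.append((width, height))
    return shapes

shapes = anchor_shapes()
for width, height in shapes[:3]:
    print(round(width, 1), round(height, 1))
```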

Classification module

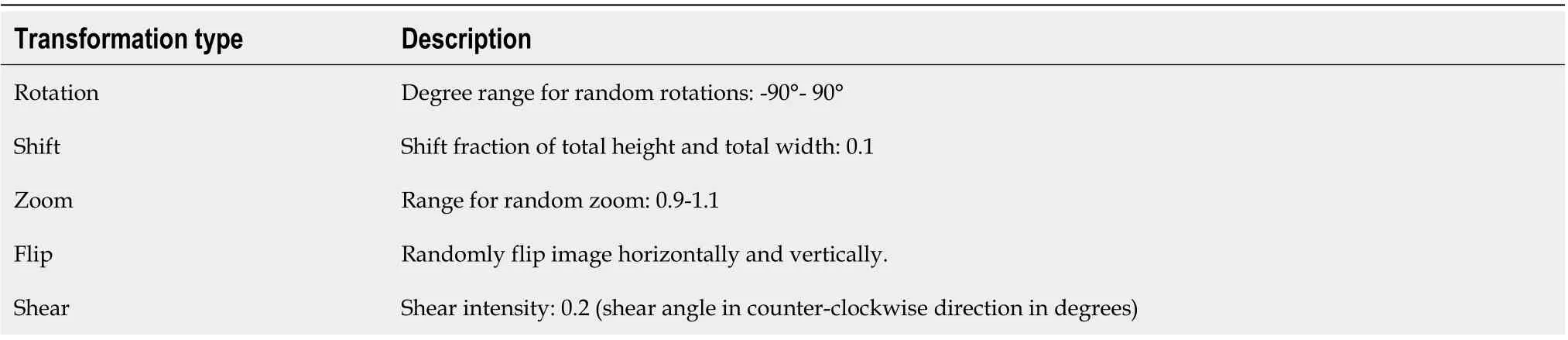

The object detection module cut out the core image block of each image and resized it to 224 × 224. Image augmentation was then used to create additional training images artificially through single or combined processing operations, such as random rotation, shifts, shear, and flips, to reduce over-fitting and improve the generalization of the network (Table 3).
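The crop-and-resize step can be sketched in plain Python as below. Nearest-neighbor sampling and the box convention `(x1, y1, x2, y2)` with exclusive right and bottom edges are assumptions; the article does not state the interpolation method, and a real pipeline would normally use an image library.

```python
def crop_and_resize(image, box, out_size=224):
    """Crop box from a 2D image (list of rows) and resize to out_size x out_size.

    box: (x1, y1, x2, y2) in pixels, x2 and y2 exclusive (assumed convention).
    Uses nearest-neighbor sampling (an assumption, chosen for simplicity).
    """
    x1, y1, x2, y2 = box
    crop_h, crop_w = y2 - y1, x2 - x1
    out = []
    for r in range(out_size):
        src_r = y1 + (r * crop_h) // out_size  # source row for output row r
        row = [image[src_r][x1 + (c * crop_w) // out_size]
               for c in range(out_size)]
        out.append(row)
    return out

# Toy 10 x 10 image whose pixel value encodes its position (row * 10 + col).
image = [[r * 10 + c for c in range(10)] for r in range(10)]
patch = crop_and_resize(image, box=(2, 3, 6, 8))
print(len(patch), len(patch[0]))
```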

The classification convolutional neural network was VGGNet. Compared with earlier networks, VGGNet uses small convolution kernels and more layers, which achieves better classification accuracy (ACC). The classification network was pre-trained on the ImageNet dataset. The last three fully connected layers of the network were removed, and two new fully connected layers were added, with 256 and 5 neurons, respectively (5 is the number of classes in the dataset used in this paper; Figure 2).
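As a rough size check on the replacement head, the sketch below counts the trainable parameters of the two added fully connected layers. It assumes the final VGG convolutional feature map for a 224 × 224 input is 7 × 7 × 512 and that it is flattened before the first new layer; the article does not say whether flattening or pooling was used, so the numbers are illustrative only.

```python
def dense_params(n_in, n_out):
    """Parameters of one fully connected layer: weights plus biases."""
    return n_in * n_out + n_out

# Assumed shape of the last VGG convolutional output for a 224 x 224 input.
FEATURE_MAP = (7, 7, 512)
flat_features = FEATURE_MAP[0] * FEATURE_MAP[1] * FEATURE_MAP[2]

hidden_units = 256  # first added layer (from the article)
num_classes = 5     # second added layer (from the article)

head_params = (dense_params(flat_features, hidden_units)
               + dense_params(hidden_units, num_classes))
print(flat_features, head_params)
```

Under these assumptions nearly all head parameters sit in the 256-unit layer, which is far smaller than the original VGG fully connected layers it replaces.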

Experiment

During the experiment, the GPU was an NVIDIA GeForce GTX 1070 Ti. All the code was written in Python 3.6, and Keras was used as the deep learning library.

In the object detection experiment, the smooth L1 cost function was used for regression, and the focal loss cost function was used for classification. Adam was used as the optimizer, and the learning rate, momentum, and weight decay were set to 1e-5, 0.9, and 0.0005, respectively. The short side of the input image was rescaled to 800, and the long side was rescaled in equal proportion, up to a maximum of 1333.
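The rescaling rule (short side to 800, long side capped at 1333) can be written as a small helper. The function name and the rounding of the output size are assumptions made for illustration.

```python
def rescale_size(width, height, min_side=800, max_side=1333):
    """Return (new_width, new_height, scale) keeping the aspect ratio.

    The short side is scaled to min_side unless that would push the
    long side beyond max_side, in which case the long side is capped.
    """
    short_side = min(width, height)
    long_side = max(width, height)
    scale = min_side / short_side
    if long_side * scale > max_side:
        scale = max_side / long_side
    return round(width * scale), round(height * scale), scale

print(rescale_size(640, 480))    # short side reaches 800
print(rescale_size(1920, 1080))  # long side is capped at 1333
```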

Table 1 Statistics distribution from esophageal lesion database

Table 2 Statistics distribution from esophageal submucosal lesion database

Table 3 Data augmentation parameter

In the classification experiment, the cross-entropy loss function was used. Stochastic gradient descent (SGD) was used as the optimizer, with an initial learning rate of 1e-3. Whenever the loss stopped decreasing, the learning rate was multiplied by a factor of 0.5 until it reached 1e-6. The batch size was set to 16.
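The learning-rate schedule described above can be simulated with a few lines of Python. The `patience` parameter (how many non-improving epochs to tolerate before halving) is a hypothetical knob that the article does not specify; the sketch uses 1.

```python
def reduce_on_plateau(losses, initial_lr=1e-3, factor=0.5,
                      min_lr=1e-6, patience=1):
    """Return the learning rate in effect after each epoch's loss.

    The rate is multiplied by factor whenever the loss has failed to
    improve for patience consecutive epochs, and never drops below min_lr.
    patience is an assumed parameter, not taken from the article.
    """
    lr = initial_lr
    best = float("inf")
    wait = 0
    history = []
    for loss in losses:
        if loss < best:
            best = loss
            wait = 0
        else:
            wait += 1
            if wait >= patience:
                lr = max(lr * factor, min_lr)
                wait = 0
        history.append(lr)
    return history

history = reduce_on_plateau([1.0, 0.9, 0.9, 0.8, 0.85])
print(history)
```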

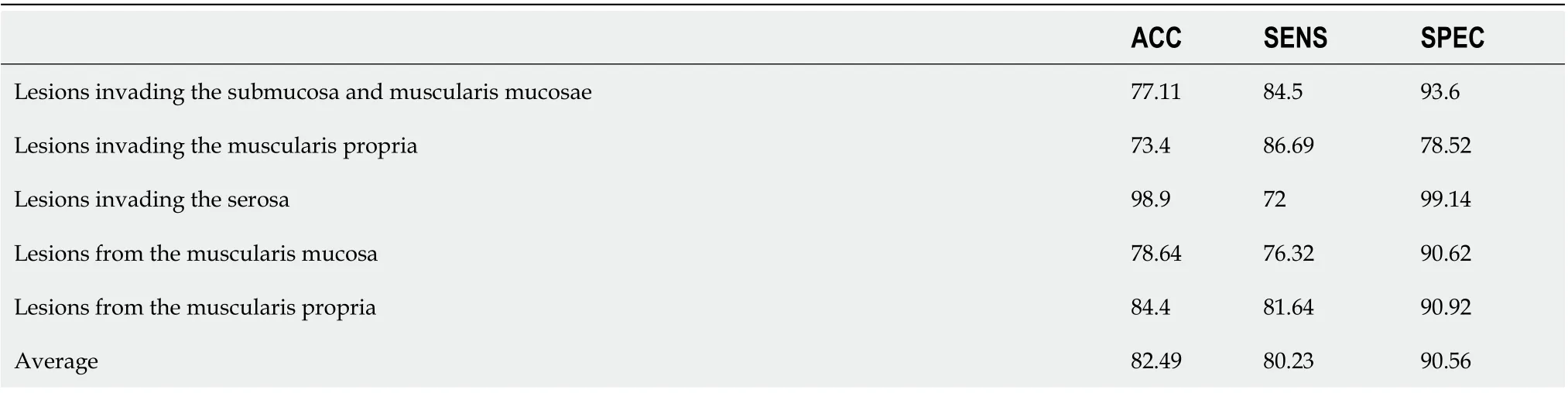

Both quantitative and qualitative results of the proposed method are reported. A confusion matrix is a table that is often used to describe the performance of a classification model. Each row of the confusion matrix represents the instances of an actual class, and each column represents the instances of a predicted class. The diagonal values represent the number of correct classifications for each class, and the off-diagonal values represent the number of confusions between each pair of classes. As shown in Figure 3, the ACC of the classification network is higher than that of the classification branch of the object detection network when the latter is used directly for classification prediction. The classification ACC for the muscularis propria and muscularis mucosae increased significantly.
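A confusion matrix with the row and column convention described above can be built as follows. The labels in the usage example are made-up toy data, not results from this study.

```python
def confusion_matrix(actual, predicted, n_classes):
    """Build an n_classes x n_classes matrix.

    Rows index the actual class and columns the predicted class,
    so correct classifications accumulate on the diagonal.
    """
    matrix = [[0] * n_classes for _ in range(n_classes)]
    for a, p in zip(actual, predicted):
        matrix[a][p] += 1
    return matrix

# Toy labels for three classes (illustrative only).
actual = [0, 0, 1, 1, 2, 2, 2]
predicted = [0, 1, 1, 1, 2, 0, 2]
matrix = confusion_matrix(actual, predicted, n_classes=3)
for row in matrix:
    print(row)
```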

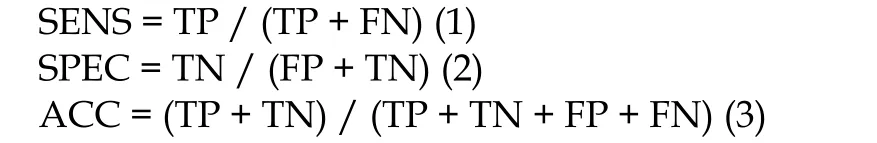

The classification performance was measured using sensitivity (SENS), specificity (SPEC), and ACC. Sensitivity measures how well a classifier recognizes positive examples, specificity measures how well it recognizes negative examples, and ACC is the proportion of correct decisions made by the classifier. SENS, SPEC, and ACC were defined by equations (1)-(3):

$$\mathrm{SENS} = \frac{TP}{TP + FN} \qquad (1)$$

$$\mathrm{SPEC} = \frac{TN}{TN + FP} \qquad (2)$$

$$\mathrm{ACC} = \frac{TP + TN}{TP + TN + FP + FN} \qquad (3)$$

where TP is the number of positive elements predicted as positive; TN is the number of negative elements predicted as negative; FP is the number of negative elements predicted as positive; and FN is the number of positive elements predicted as negative.
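For a multi-class problem such as this one, TP, TN, FP, and FN are usually counted per class in a one-vs-rest fashion. The sketch below applies the standard definitions of sensitivity, specificity, and accuracy to one class of a confusion matrix (rows are actual classes, columns are predicted classes). The one-vs-rest reading and the toy matrix are assumptions, not data from the study.

```python
def per_class_metrics(matrix, cls):
    """Return (sensitivity, specificity, accuracy) for class cls, one-vs-rest.

    matrix[a][p] counts samples of actual class a predicted as class p.
    """
    total = sum(sum(row) for row in matrix)
    tp = matrix[cls][cls]
    fn = sum(matrix[cls]) - tp                 # actual cls, predicted other
    fp = sum(row[cls] for row in matrix) - tp  # actual other, predicted cls
    tn = total - tp - fn - fp
    sens = tp / (tp + fn) if (tp + fn) else 0.0
    spec = tn / (tn + fp) if (tn + fp) else 0.0
    acc = (tp + tn) / total if total else 0.0
    return sens, spec, acc

# Toy 3-class confusion matrix (illustrative only).
toy_matrix = [[8, 2, 0],
              [1, 7, 2],
              [0, 1, 9]]
sens, spec, acc = per_class_metrics(toy_matrix, cls=0)
print(sens, spec, acc)
```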

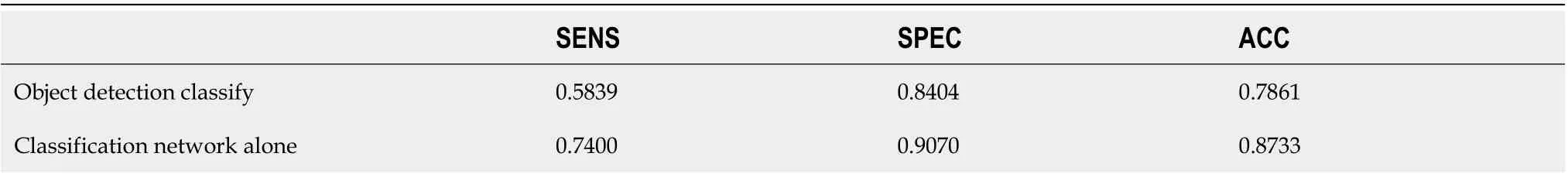

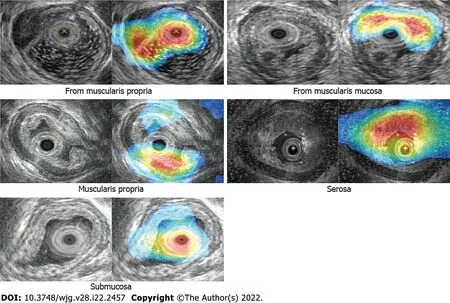

As shown in Table 4, the classification network outperforms the classification branch of the object detection network in SENS, SPEC, and ACC. Class activation maps (CAM) can be used to obtain the discriminative image regions using a convolutional neural network and identify a specific class in the image. Therefore, a CAM can identify the regions in the image that were relevant to this class. The results of CAM are shown in Figure 4.
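The core computation of a class activation map is a weighted sum of the final convolutional feature maps, using the weights that connect each map to the target class. The sketch below shows that sum on toy data; it follows the original CAM formulation, which assumes global average pooling before the output layer. Whether the authors used this formulation or a gradient-based variant is not stated in the article.

```python
def class_activation_map(feature_maps, class_weights):
    """Weighted sum of K feature maps for one target class.

    feature_maps: list of K 2D lists, each H x W
    class_weights: K weights linking each feature map to the target class
    """
    height = len(feature_maps[0])
    width = len(feature_maps[0][0])
    cam = [[0.0] * width for _ in range(height)]
    for fmap, weight in zip(feature_maps, class_weights):
        for r in range(height):
            for c in range(width):
                cam[r][c] += weight * fmap[r][c]
    return cam

# Two toy 2 x 2 feature maps and their weights for one class.
maps = [[[1.0, 0.0], [0.0, 0.0]],
        [[0.0, 0.0], [0.0, 2.0]]]
cam = class_activation_map(maps, class_weights=[0.5, 2.0])
print(cam)
```

The resulting map is normally upsampled to the input resolution and overlaid on the image as a heat map, so that high values mark the regions that contributed most to the predicted class.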

Table 4 Comparative sensitivity, specificity, and accuracy results of classification branch of object detection network and classification network alone

Figure 1 Structure of object detection network.

RESULTS

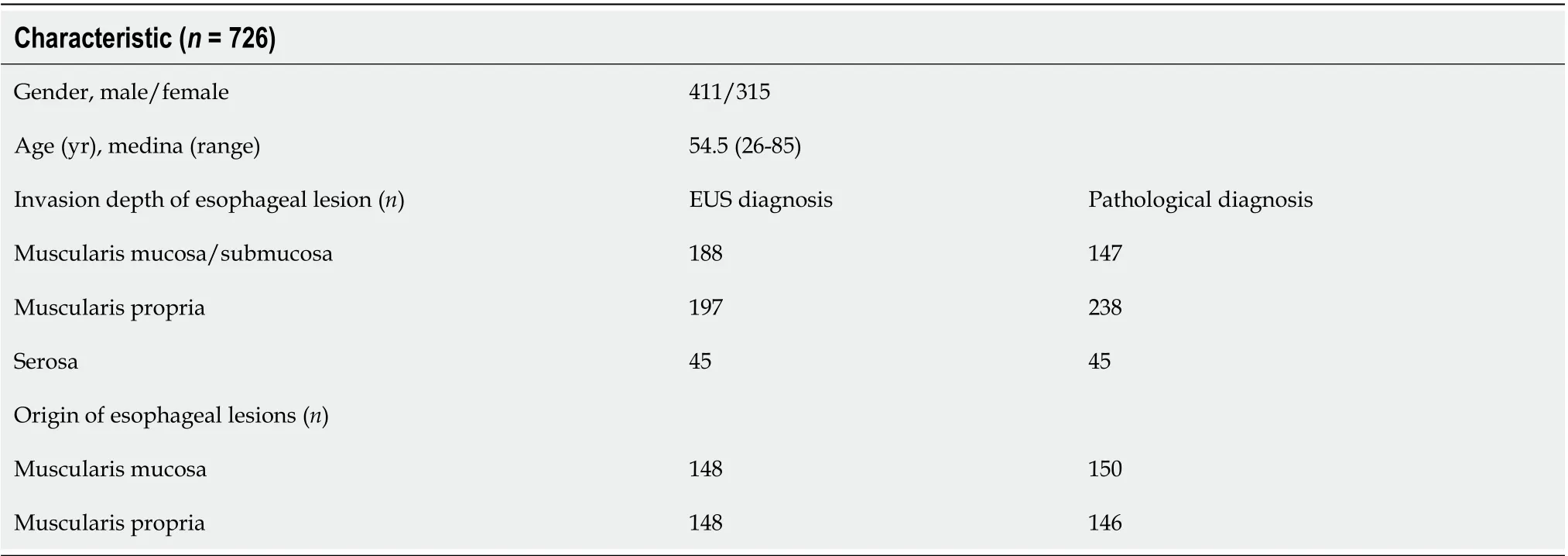

A total of 1115 patients were included in this analysis, including 694 males and 421 females. The age ranged from 24 to 88 years, with the majority of patients aged over 45 years. In this study, patients who underwent surgery or endoscopic treatment were followed for pathology, and 726 of them were compared for ultrasound diagnosis and endoscopic pathology. In the diagnosis of endoscopic ultrasonography, lesions invading the muscularis propria were not detected in 41 patients, and the misdiagnosis rate was approximately 9.5%. In addition, 36 patients showed pathologic invasion of the superficial muscularis propria. All patients with serosal invasion were treated by surgery, and the ACC rate was 100%. For the detection of submucosal lesions by EUS, the ACC was approximately 99.3% (Table 5).

Table 6 shows the ACC, SENS, and SPEC of each category in this study. The ACC of invasion of the serosa was 98.9%, and that of invasion of the muscularis propria and muscularis mucosa and submucosa was 73.4% and 77.11%, respectively. In submucosal lesions, the correct identification rate was 78.64% and 84.4%, respectively. The images of lesions originating from the muscularis mucosa were easily confused with the images of lesions invading the muscularis mucosa and submucosa.

DISCUSSION

Traditionally, benign and malignant esophageal lesions have mostly been treated by surgery or chemoradiotherapy, but the surgical trauma and sequelae are relatively severe. With the development of gastrointestinal endoscopy in recent years, some lesions can be treated endoscopically, for example by ER, particularly submucosal dissection, which has become the standard treatment for esophageal mucosal cancer[25-27]. In Japan, ER of esophageal cancer is indicated for tumors located in the mucosal epithelium (T1A-EP, which is equal to Tis in situ in the TNM stage[28]) or the proper mucosa (T1A-LPM) because the incidence of lymph node metastasis is low (≤ 5%)[29]. The recommended diagnostic methods are endoscopic ultrasonography[30] and magnifying endoscopy with narrow-band imaging (ME-NBI)[31].

Table 5 Comparison of preoperative enhanced ultrasound diagnosis and postoperative pathological diagnosis in patients

Table 6 Results of proposed network in esophageal lesion database, %

Figure 2 Structure of classification network.

Figure 3 Confusion matrix results of object detection methods (A) and classify methods (B).

Figure 4 Class activation map results.

Endoscopic ultrasonography plays an important role in the diagnosis and treatment of benign and malignant esophageal lesions. Xu et al[3] compared postoperative histological diagnosis with preoperative EUS diagnosis in patients with esophageal leiomyoma, and the diagnostic ACC of EUS was 88.6%. No treatment-related complications occurred in patients undergoing ER, and no recurrence was found in follow-up examination. In addition, Rana et al[32] found that small-probe EUS could predict the response to endoscopic dilation by describing the degree of involvement in benign esophageal stenosis and measuring the thickness of the wall. Therefore, EUS plays an important role in the diagnosis of digestive tract diseases. In this study, after follow-up pathology, 726 of the patients were compared with endoscopic pathology. The misdiagnosis rate of endoscopic diagnosis in the lesion invasion range was approximately 9.5%; 41 patients did not show lesion invasion to the muscularis propria, but 36 of them pathologically showed invasion to the superficial muscularis propria. The patients with invasion of the tunica adventitia were all treated by surgery with an ACC rate of 100%. For the examination of submucosal lesions, the ACC of EUS was approximately 99.3%. The results of this study showed that endoscopic ultrasonography had a high ACC rate for the origin of submucosal lesions, and the misdiagnosis rate was slightly higher in the evaluation of the invasion scope of lesions. Misdiagnosis might be due to differences in the operating and diagnostic skills of endoscopists, poor ultrasound probe image clarity, and indistinct lesions. However, when the depth of infiltration was underestimated, the disadvantage was limited because ER combined with additional chemoradiotherapy and surgical treatment could achieve good therapeutic effects[33-34]. Nevertheless, EUS is difficult for nonspecialist endoscopists to use. Therefore, a more advanced measurement method is necessary to help nonspecialists achieve diagnostic quality comparable to that of specialists without special techniques.

Deep learning is a new technique to evaluate image information objectively. In recent years, with the application of deep learning in various medical fields, remarkable progress has been made. AI is also becoming popular in ultrasonic examination. Kuwahara et al[5] predicted benign and malignant intraductal papillary mucinous tumors of the pancreas (IPMNs) by studying their endoscopic ultrasonography images. The results showed that the SENS, SPEC, and ACC of AI for diagnosing malignant probability were 95.7%, 92.6%, and 94.0%, respectively, which were higher than those of human diagnosis. Although few studies have been conducted on AI in endoscopic ultrasonography, diagnosis based on deep learning has shown its advantages. In this study, we found that a deep learning algorithm could be used to clarify the invasion scope and origin of lesions in endoscopic ultrasound images. In addition, VGGNet was used to perform classification tasks, and multiple superimposed filters were used to increase the nonlinearity of the whole function and reduce the number of parameters. The overall ACC, SENS, and SPEC were 82.49%, 80.23%, and 90.56%, respectively. However, in this study, the images of lesions invading the muscularis mucosa and submucosa were easily confused with the images of lesions originating from the muscularis mucosa, which may be caused by the lack of evident stratification due to tube wall compression or by the excessive noise in the ultrasound images caused by small lesions. No study has been conducted on the application of deep learning-based endoscopic ultrasonography in the esophagus or gastrointestinal tract, and the network framework applicable to endoscopic ultrasonography needs further screening and testing.

This study has some limitations. First, the use of different probes and operators led to uneven image quality in the endoscopic ultrasound images, which may explain the low ACC in this study. Second, although the endoscopic ultrasonography probe could show seven layers of the tube wall, layers 1-3 were classified into one category because they could not be clearly resolved in most images. Finally, the number of endoscopic ultrasound images was small, and all images were obtained from one center. Therefore, in a follow-up study, we will obtain multi-center samples to increase the sample size and improve the image quality.

CONCLUSION

This study is the first to recognize esophageal endoscopic ultrasonography images through deep learning, which can automatically identify the lesion invasion depth and lesion source of submucosal tumors and classify such tumors, thereby achieving good ACC. In future studies, this method can provide guidance and help to clinical endoscopists.

ARTICLE HIGHLIGHTS

Research background

The esophagus is the narrowest part of the digestive tract and its diameter is not uniform. Endoscopic ultrasonography (EUS) can be used for high-precision evaluation of the esophagus. With the popularization of endoscopic ultrasonography in clinic, more and more endoscopic physicians are needed. The rapidly evolving field of machine learning is key to this paradigm shift.

Research motivation

Endoscopic ultrasonography is different from other ultrasound modalities in that ultrasonic examination is performed on the basis of digestive endoscopy, so the examiners should be professional endoscopic physicians with ultrasonic training. And as we face the interplay of increasing chronic diseases, ageing populations, and dwindling resources, we need to shift to models that can intelligently extract, analyze, interpret, and understand increasingly complex data. However, the research on the identification and classification of esophageal lesions by endoscopic ultrasonography has not yet been found.

Research objectives

The purpose of this study was to construct a framework of deep learning network to study the application of deep learning in esophageal EUS in identifying the origin of submucosal lesions and defining the scope of esophageal lesions.

Research methods

A total of 1670 white-light images were used to train and validate the convolutional neural network (CNN) system. In the study, VGGNet was used to perform classification tasks, and multiple superimposed filters were used to increase the nonlinearity of the whole function and reduce the number of parameters.

Research results

A total of 1115 patients were included in this analysis, including 694 males and 421 females. The overall accuracy, sensitivity, and specificity were 82.49%, 80.23%, and 90.56%, respectively. The images of lesions originating from the muscularis mucosa were easily confused with the images of lesions invading the muscularis mucosa and submucosa.

Research conclusions

This study constructed a CNN system which can automatically identify the lesion invasion depth and the lesion source of submucosal tumors, and classify them, achieving good accuracy.

Research perspectives

In the future of medicine, artificial intelligence will reduce the workload of medical staff and make targeted tests more accurate, and in future studies, it can provide guidance and help to clinical endoscopists.

FOOTNOTES

Author contributions: Liu GS and He XP proposed the conception and design; He XP and Hua J were responsible for administrative support; Hua J and Huang PY provided the study materials or patients; Liu GS, He XP, Huang PY, and Zhuang SS collected and compiled the data; Wen ML did the data analysis and interpretation; all authors participated in manuscript writing and approved the final version of manuscript.

Supported by the Natural Science Foundation of Jiangsu, No. BK20171508.

Institutional review board statement: The study was approved by the Ethics Committee of the First Affiliated Hospital of Nanjing Medical University (No. 2019-SR-448).

Informed consent statement: Informed consent for upper gastrointestinal endoscopy (UGE) was obtained from all cases.

Conflict-of-interest statement: The authors have no conflicts of interest to disclose.

Data sharing statement: No additional data are available.

Open-Access: This article is an open-access article that was selected by an in-house editor and fully peer-reviewed by external reviewers. It is distributed in accordance with the Creative Commons Attribution NonCommercial (CC BY-NC 4.0) license, which permits others to distribute, remix, adapt, build upon this work non-commercially, and license their derivative works on different terms, provided the original work is properly cited and the use is noncommercial. See: http://creativecommons.org/Licenses/by-nc/4.0/

Country/Territory of origin: China

ORCID number: Gao-Shuang Liu 0000-0001-8450-7308; Pei-Yun Huang 0000-0003-1070-0724; Min-Li Wen 0000-0002-7945-9302; Shuai-Shuai Zhuang 0000-0003-3382-0008; Jie Hua 0000-0003-1243-0596; Xiao-Pu He 0000-0002-9915-5584.

S-Editor: Wu YXJ

L-Editor: Wang TQ

P-Editor: Wu YXJ

World Journal of Gastroenterology, 2022, Issue 22
