Homotopy based optimal configuration space reduction for anytime robotic motion planning

Yang LIU, Zheng ZHENG, Fangyun QIN

School of Automation Science and Electrical Engineering, Beihang University, Beijing 100191083, China

KEYWORDS Collision avoidance;Homotopy;Motion planning;Rapidly-exploring Random Tree (RRT);Robots

Abstract Anytime sampling-based motion planning algorithms are widely used in practical applications due to limited real-time computing resources.The algorithm quickly finds feasible paths and incrementally improves them to the optimal ones. However, anytime sampling-based algorithms bring a paradox in convergence speed since finding a better path helps prune useless candidates but also introduces unrecognized useless candidates by sampling.Based on the words of homotopy classes, we propose a Homotopy class Informed Preprocessor (HIP) to break the paradox by providing extra information. By comparing the words of path candidates, HIP can reveal wasteful edges of the sampling-based graph before finding a better path. The experimental results obtained in many test scenarios show that HIP improves the convergence speed of anytime sampling-based algorithms.

1. Introduction

Motion planning aims to find a dynamically collision-free path that connects an initial state to a goal state.Any robot motion planning algorithm intended for practical use must operate within limited real-time computing resources. This setup prefers ‘‘anytime” algorithms that quickly find some feasible but not necessarily optimal paths and then incrementally improves them to the optimality over time.1,2One practical approach towards robotic motion planning is anytime sampling-based planning featuring simplicity and theoretical proofs,3-6which is widely used in autonomous vehicles1,7and one-arm planning.2,6,8-10The basic idea is to randomly sample the continuous planning domain to construct the discrete graph representation of a configuration space so that the motion planning problem is converted to an optimal path computation on the graph. With tailored anytime strategies involved,1,11-13sampling-based algorithms can be executed in an anytime fashion. The anytime sampling-based planning algorithm class7includes RRT*,3,13-15PRM,*3RRG*,3FMT*,16BIT*,6,8etc. If used with a tailored anytime strategy, RRT1,11and PRM12,17also belong to the class.In motion planning,finding the optimal path in continuous configuration space with polyhedral obstacles is an NP-hard problem.18As the size of the discrete state space grows exponentially with the number of dimensions, the sampling-based approach is unable to obtain even satisfactory solutions within a limited time in highdimensional spaces.19On the other hand, anytime planning procedures usually apply to real-time situations,requiring that these algorithms obtain a satisfactory solution with limited time.Thus,there is a contradiction between the NP-hard problem and the time limitation.

One effective strategy to alleviate the contradiction is the branch and bound design paradigm, which aims to reduce the redundant searching space in the graph. The method of applying the design paradigm to motion planning is to design heuristics focusing on a subset of a workspace.18Namely, the elements in the subset are periodically removed when the heuristic cost of an element is worse than the current solution.Progress has been made based on the graph pruning strategy.Karaman et al.1applied graph pruning to an anytime version of RRT*that improves solutions during execution.They used the current vertex cost plus a heuristic estimate of the cost from the vertex to the goal so as to periodically remove states from the tree that cannot improve the current solution.Arslan and Tsiotras used techniques of a graph structure and Lifelong Planning A* (LPA*)20in the RRT# algorithm to prune the existing graph.21Each existing state is given an LPA*-style key, updated after the addition of each new state. Only keys that are less than the current best solution are updated, and only up-to-date keys are available for connection with newly drawn samples. Gammell et al. proposed Informed RRT*,13which involves an A* style heuristic to prune nodes that fall outside the elliptical boundary by estimating the upper bound of the path. A new addition to the informed strategy is batch informed tree,6,8,22which approximates the configuration space by utilizing batches of samples to define edge-implicit Random Geometric Graph (RGG23). Additionally, a BIT*-based anytime motion planning algorithm for dynamic environments is proposed. Dynamic constraints have been taken into consideration and the optimal planned path is feasible for implementation.7

However,these algorithms face a problem for their anytime property. For the exploiting process of the sampling-based approach, a vast number of states need to be sampled to find the one that shortens the current best path. Each additional sample increases the aggregate cost of collision checking on edges connecting to nearby status.Unfortunately,most efforts are performed on states with no opportunity to be part of an optimal path. The paradox lies in that only finding the path better than the current best path can result in the anytime approach recognizing that some states are nonoptimal candidates. Conversely, obtaining a better path requires more sampling,which introduces more useless candidates.Therefore,the exploiting process of sampling-based planning is timeconsuming for a better path, which typically leads to the slow convergence of the anytime approach.24To break the paradox,we need to obtain extra information before a path is constructed. One promising approach is to use a preprocessor to assist the exploiting process. If the preprocessor can identify some nodes that do not belong to the optimal path during an exploiting process, the fraction of edges undergoing collision checking would be significantly reduced. In this way,focusing more computational resources on the space that needs to be exploited makes the algorithm converge faster to the optimal solution.In short,a subset of configuration space containing the optimal path obtained by a preprocessor could alleviate the paradox that anytime algorithms suffer. Therefore, a research question arises: How can the configuration space in the preprocessing phase be refined to improve the convergence speed?

Mainly two types of preprocessors, swamp hierarchy and goal bounding, have been proposed to address this question.Swamp hierarchy reduces the configuration space by identifying and excluding dead-ends (Dead-ends are the area where there is no pathway to the goal except back out via the entrances). It can reduce search costs without compromising search optimality.25-27However,this method can only be used in grid-based planning algorithms instead of sampling-based ones. The second method, geometric containers,28is a general class of approaches to problems that can be mapped to a metric space. The search space of a planning problem can be reduced by extracting geometric information from a given layout of the graph. Moreover, the precomputed information is encapsulated into geometric objects(containers)in the preprocessing phase. Rabin combines geometric containers with the A-star and jump point search plus algorithm.29The preprocessor improves these algorithms nearly 10 times faster.However,a huge search space leads to high time consumption of preprocessing.The overhead scale of geometric containers is large for the anytime sampling-based method.

Anytime motion planning problem usually requires a solution in limited time or even real time,such as a replanning task for UAV.30-34With a preprocessor involved,a sampling-based planning algorithm is expected to give a better-quality solution within limited time.Although the existing two types of preprocessors can help constrain searching space, Swamp hierarchy cannot be applied to sampling-based motion planning directly,and geometric containers require rather long time for a satisfied result. Therefore, a new preprocessor is needed to help provide a high-quality result for time-limited practical applications. We study the research question from the view of topology based on the words of homotopy class,35which can improve the convergence speed of all sampling-based algorithms. In group theory, a word is any written product of group elements and their inverses. Moreover, every path has its word as the label, which can describe the homotopy class where the path belongs.Therefore,the word could be regarded as the homotopy invariant in topology.36Here,the homotopic invariant indicates that two paths satisfy the relation of homotopy equivalence if they have the same word.Since path homotopy invariance is based on feasible paths that have the same initiate and goal states,it is difficult to be applied to unfinished paths.The introduction of the word in group theory can apply homotopy invariance to unfinished paths by specifying the access order of sampling states.36Thus the word of homotopy class could serve as a new heuristic to accelerate the convergence speed of sampling-based methods. Bhattacharya et al.35introduced the word of homotopy class for a suitable presentation of paths and applied it to a task in which boats with booms in skimming operations are utilized for cleaning oil spills or removing debris on the surface of the water.They proposed automated solutions by constructing presentations of fundamental groups and solved the word problem in such groups.35,37The difference lies in that we aim to find the homotopy class containing the optimal path, while Bhattacharya’s work is aimed to find the local optimal path based on a given homotopy class.

In this work, based on the word of the homotopy class,configuration space is restrained by the Homotopy class Informed Preprocessor (HIP) for sampling-based algorithms.The core idea is partitioning words of all feasible paths from start to goal into two collections by a specific graph under the bound of pruning criteria. One collection containing the optimal path in R2is the optimal collection, and the other is not. Here, the word is a feature to indicate the access order of sampling states. The optimal collection of words constrains some specific orders of edges consisting of the optimal path.Specifically, once the word of an incomplete path is different from the word of any homotopy class in the optimal collection,this path could be abandoned for its inconsistent access order.The specific graph consists of all optimal paths with different words from start to goal in grid-based configuration space.Finding all optimal paths with different words in grids is time-consuming tasks. Fortunately, we found a time acceptable algorithm to obtain these paths and their words. The designed algorithm is based on the Jump Point Search (JPS)algorithm,38which is widely employed in areas such as robotics and video games.29,39JPS successfully deals with symmetric redundancy.40Here,symmetry,in this case,is shown as a path (or a path segment) sharing the same start and goal,having the same length and otherwise identical except for the order in which movement occurs.

Based on the construction of words and the specific graph, we present a preprocessing algorithm named Homotopy class Informed Preprocessor (HIP), which can be used as the preprocessor for sampling-based algorithms. It can reduce the search space fast to improve the convergence speed with a little overhead cost, and it can be directly applied to all sampling-based algorithms. HIP combines the benefits of homotopy invariants and sampling-based techniques to reduce the aggregate cost of collision checking to increase convergence speed.

The contributions of this paper are listed as follows:

(A) Based on the jump point search algorithm,we propose a multiple jump point search algorithm. The algorithm could obtain the graph consisting of all optimal paths in the discrete space. The graph is utilized to determine which homotopy class belongs to the optimal collection,and the optimal collection provides evaluative information on shrinking the search space.

(B) Based on the words of homotopy classes from the focused partition, we propose a Homotopy class Informed Preprocessor (HIP). The preprocessor is able to check whether words of path candidates belong to the optimal collection,which reveals that wasteful edges of the sampling-based graph before a better path are obtained.Moreover,the empty word is intended to indicate the optimal collection, which can significantly reduce the computational overhead of words comparison.

The structure of this paper is listed as follows.In Section 2,the problem statement is introduced, including objective, preliminary and mathematical symbols.In Section 3,the methodology of the preprocessor solving the research question is introduced. The experimental setup and results with related discussion are presented in Section 4 and Section 5, respectively.Concluding remarks are stated in Section 6.The appendix presents a detailed proof procedure.

2. Problem statement

2.1. Objective

In this work,a preprocessor is designed to obtain the subset of the configuration space containing the optimal path. According to path homotopy invariance,35a partition of the configuration space consists of two parts.One part contains the word of the optimal path in R2(referred to as the optimal collection)and the other does not. The partition shows that the sampled graph contains the component of the optimal path. In addition, words in the optimal collection constrain the sequence order of the path. With the guide of the access order, many unfinished redundant paths could be pruned before they become path candidates.

2.2. Preliminary

In this part, we introduce fundamental definitions and concepts of the planning problem, topology and set theory for the following statement.

2.2.1. Principle

A planning problem3is defined as a triplet (Wfree,winit,wgoal).LetW=.{(x,y)|x∈[0,X],y∈[0,Y]} be the configuration space, a.k.a, scenario, whereX,Y∈R+andW⊂R2. LetWobsbe the obstacle region, which is an open set, and denote the obstacle-free space asWfree=.cl(W{Wobs),where cl(·)represents the closure of a set.Here,=. means that the equation is a definition. The initial conditionwinitbelongs toWfree, as does the goal condition thatwgoal∈Wfree.

Based on the problem definition, a feasible path and an optimal path are formalized as follows:

A feasible path σ:[0,1]→R2is a sequence of states,where σ(0)=.winit, σ(1)=.wgoaland σ(r)∈Wfreefor allr∈[0,1].

An optimal path σ*:[0,1]→R2is the feasible path satisfying σ*=.argmin{s(σ):σ ∈∑}, where ∑is the set of all feasible paths ands(·):∑→R≥0is a chosen cost function.

2.2.2. Partition, class and collection

In mathematics,a partition of a set is an expression of a set as a disjoint union of subsets.A class used in mathematics mainly represents a synonym for the term‘‘set”for denoting arbitrary collections of objects possessing some definite property of indication①For example, homotopy class is the set with respect to the homotopy relation.. In this paper, ‘‘collection” is regarded as the set whose element is a set of other objects②For example, a collection of homotopy classes is the set that its elements are classes which are the sets of paths with respect to homotopy relations..

2.2.3. Homotopy

A homotopy between two continuous functionsfandgfrom a topological spaceXto a topological spaceYis defined to be a continuous functionH:X×[0,1]→Yfrom the product of the spaceXwith the unit interval[0,1]toYsuch that,ifx∈X,thenH(x,0)=f(x) andH(x,1)=g(x).36The two bold paths shown in Fig. 1 are homotopic relative to their endpoints.The light trace represents one possible homotopy.

Fig. 1 An example of homotopy relation.

2.2.4. Homotopy classes of feasible paths

The definition of a homotopy between two arbitrary paths with the start and goal fixed is a deformation that one path could be continuously deformed into the other without intersecting any obstacle. These continuously deformed paths are called homotopic, and these paths belong to the same homotopy class. The homotopy class of a feasible path σ is denoted as[σ].35Here,Fig.2 gives an example of homotopy classes.In Fig.2(a),homotopy continuously warps one path into another(from σ0to σ3).Therefore, they belong to the same homotopy class. In Fig. 2(b), the image of the path cannot be continuously warped over a hole in R2, which causes a discontinuity.In this case,the two paths are not homotopic.These two paths belong to different homotopy classes.4

2.2.5. Word of a path

In group theory, a word is any written product of group elements and their inverses. Moreover, every path has a word to label its homotopy class. To define the word of a path, we listed construction in the configuration space R2-O,③means Euclidean plane punctured by obstacles.35,41whereOis the set of obstacles, and the word of a path is defined based on the construction.

(1) Place representative points inside theith connected component of the obstacles Oi⊂O.

(2) Construct nonintersecting rays from the representative points, denoted as r1,r2,...,rn.

Given a path,suppose that there is a node tracing the path.Once the node crosses a ray,we push the ray(ri)as an element into the word. Clockwise direction and anti-clockwise direction are opposite elements, denoted asandrirespectively.Fig. 3 gives an example to show constructed rays, and the word of the feasible path is

2.2.6. Word of a homotopy class

The word of a feasible path σ is a complete homotopy invariant for paths connecting the same set of points, i.e.,σ1,σ2:[0,1]→R2-Oare homotopic if and only if word(σ1)=word(σ2), where σi(0)=winit, σi(1)=wgoal.35

The word of a homotopy class is equal to the word of a feasible path if and only if σ belongs to all homotopically equivalent paths of the homotopy class [σ].

2.3. Preview of symbols

For ease of presentation, symbols used in the remaining part are introduced as follows:

●σ: A feasible path.

●σ*: The optimal path.

●σgrid: A feasible path in grid scenario.

●[σ]: A homotopy class contains the path σ.④If a feasible path could be deformed into σ without intersecting any obstacle, then the feasible path belongs to [σ].

●[σ*]: The homotopy class including the optimal path, short for the optimal homotopy class.

●∑: The set of all feasible paths.

●||σ||: The distance of a feasible path.

●C(·): The collection of homotopy classes that satisfy some criteria.⑤i.e., C(||σ||≤bound) is the collection of all homotopy classes that contain the path with its length shorter than the bound value.

●word(σ): The word of a feasible path.

●word([σ]):The word of a homotopy class,which is equal to word(σ).

It is worth noting that for narrative clearance, we suppose that there only exists one optimal path that is not limited.Otherwise, [σ*] may contain multiple optimal paths belonging to different homotopy classesandare two different paths that satisfy the optimal property).

3. Methodology of a homotopy class informed preprocessor

In this section,we elaborate on our proposed Homotopy class Informed Preprocessor (HIP) algorithm. Section 3.1 presents the methodology of the algorithm which contains 3 steps.The detail of each step is clarified in Sections 3.2-3.4,respectively.

3.1. Framework of methodology

The objective of the HIP algorithm is to find the subset of the configuration space containing the optimal path and prune path candidates, which fall outside the subset. Three procedures are explored to obtain this subset, which are listed as follows:

Step 1.Use homotopic invariants to obtain disjoint homotopy classes as a partition. Each homotopy class is a set of feasible paths which satisfy homotopy relation.

Step 2.Use the Multiple Jump Point Search(Multiple JPS)algorithm to obtain all-shortest paths in a discrete grid space as the criteria of the collection. Then the collection of homotopy classes containing the optimal path σ*could be deduced, a.k.a., the optimal collection.

Step 3.Construct words of the homotopy classes of the optimal collection. Based on the word requirement, the sampling-based algorithm can prune the path candidates not satisfying the requirement.

Fig. 2 Demonstration of homotopy classes.

Fig. 3 Word of a feasible path.

Specifically, Step 1 obtains disjoint classes, denoted as[σ]1,[σ]2,...,[σ]nwith ∪i=1,2,..,nσi=∑ and σi∩σj=Ø fori,j∈[1,n]. Step 2 obtains the set(s) containing the optimal path(s), which is referred to as the optimal collectionC(·).Here, the criteria are constraints of the collection definition calculated by the Multiple JPS algorithm. Step 3 constructs words of homotopy classes belonging to the optimal collection.These words can filter out invalid path candidates through words matching. With reasonable construction, words matching can be transformed to string length matching, which reduces a large amount of calculation.

In the following three subsections, we elaborate on each step of our methodology.Step 1,Step 2 and Step 3 are clarified in Sections 3.2-3.4, respectively.

3.2. Role of homotopy classes

Anytime sampling-based algorithms can iteratively fetch the minimum cost path represented in the current sampling graph.The asymptotic optimal property is guaranteed by a sufficient sampling process.3The optimal path is denoted as,wherekis the iteration of the observed optimal.

The typical process of an anytime algorithm to generate the sequence of optimal pathsis as follows,with Fig. 4 as an example. First, the algorithm yields an initial feasible path with a long path distance (see Fig. 4 (a)). With iterations, the shape of paths jumps over obstacles from one to another, denoted as the process of path jumping. During this process, the algorithm gradually improves the path. The more iterations, the better path generated (see Figs. 4 (b) and (c)).Finally, a good enough result is obtained (see Fig. 4 (d)).

The process of path jumping can be characterized by a homotopy class. According to the property of the homotopy class introduced in Section 2.2.4, we explain the path jumping process from the aspect of homotopy class. First, the algorithm yields a ‘‘bad” path that does not belong to the optimal homotopy collection(see Fig.4(a)).With iterations,when the shape of paths jumps over the obstacle,the homotopy class of the path improves significantly(see Figs.4(b)and(c)).Finally,the algorithm finds the optimal homotopy class and a better result can be exploited (see Fig. 4 (d)). Therefore, the homotopy class is able to describe path jumping and then obtain an optimal partition.

3.3. Deduction of optimal collection

The purpose of this subsection is to obtain the collection of homotopy classes containing the optimal path. The basic idea is listed as follows. The set of all feasible paths ∑could be divided into disjoint homotopy classes.35In each homotopy class, there exists a local optimal path. The optimal path σ*is among these local optimal paths. If the boundary of path distance is given, we can filter out some homotopy classes whose local optimal path is worse than the boundary. Thus,we just need to exploit the remaining homotopy classes,which are regarded as the optimal collection.In practice,it is computationally expensive to calculate these local optimal paths.Therefore, we transform the configuration space into discrete grids to approximate distances of local optimal paths, and the boundary is set as(the shortest path in discrete grids).Technically,we map all the shortest paths to the homotopy classes which they belong to, and the optimal collection consists of these mapped homotopy classes.

In this paper, the Multiple JPS algorithm is applied to obtain all optimal paths in discrete grid space to deduce the collection of homotopy classes containing σ*. Multiple JPS originated from JPS algorithm,and the difference is that Multiple JPS obtains all feasible paths and JPS obtains only one.The deduction procedure calculates the word of the path obtained from Multiple JPS. The homotopy classes by which these words of paths are represented constitute the optimal collection, as shown in Fig. 5. JPS algorithm38reduces the redundant search space by a pruning strategy since there are several equivalent paths⑥Equivalent paths are different paths with the same path distance.in a basic grid.⑦The basic grid is a grid of squares covering the entire scene.In Refs. 38,39,the straight first path and diagonal first path are proposed,and the diagonal first is selected as the only available type of path. For Multiple JPS, both types of paths are utilized to deduce the collection of homotopy classes containing σ*, and other equivalent paths are regarded as invalid paths (see Fig. 6). So, many redundant shortest paths are eliminated.

Fig. 4 A typical process of an anytime algorithm to generate the sequence of optimal paths.

Fig. 5 An example of subsets construction.

Fig.6 Two available types of paths for Multiple JPS(The yellow path and the orange path are a straight first path and a diagonal first path, respectively. Other paths that contain the blue segment are invalid.).

For the subsequent process of deducing the collection,availableare required.⑧Available σ represents straight first paths and diagonal first paths introduced in Fig. 6.For convenience, we require an algorithm to generate the subgraph such that the edges of each availablebelong to this subgraphG(V,E), wherewithirepresenting the index of the optimal path andnthe number of allMultiple JPS is the same as JPS when obtaining the first optimal pathThe distance ofis regarded as a boundary. Then, candidate nodes whose cost values are worse thanin the open list of JPS are filtered by the boundary. The nodes whose cost value ispreserve, and edges of other available optimal paths can be found by exploiting these preserved nodes.Finally, the graph consisting of edges of available optimal paths is obtained.

In practice, changing from a continuous domain to a discrete domain will result in the loss of precision, which may affect the content of the optimal collection deduced by the Multiple JPS. On one hand, the resolution of the discretization, such as the size of the discrete grid, could influence the deduction of the optimal collection. On the other hand, the position of the reference point in the grid⑨The reference point is the corresponding coordinate of the discrete grid in the continuous domain space.can also have an influence on the deduction.Notably,in some cases,the homotopy class[σ*] is lost because of the rules of grid sampling. To overcome this problem,proper grid size should be selected and a loose boundary is required to ensure that the deduced optimal collection contains [σ*]. Here, grid size, denoted assize,refers to the side length of a basic grid and the loose boundary is a path distance that is longer thanConsidering that the change of the reference point is limited to the grid, the influence on the path length is limited to the influence of one grid.That means the path may go through one more grid than σ*. The worst case is that the path moves diagonally on the additional grid.Therefore, we set the distance of optimal pathsize as the loose boundary to filter candidate nodes of the JPS algorithm.

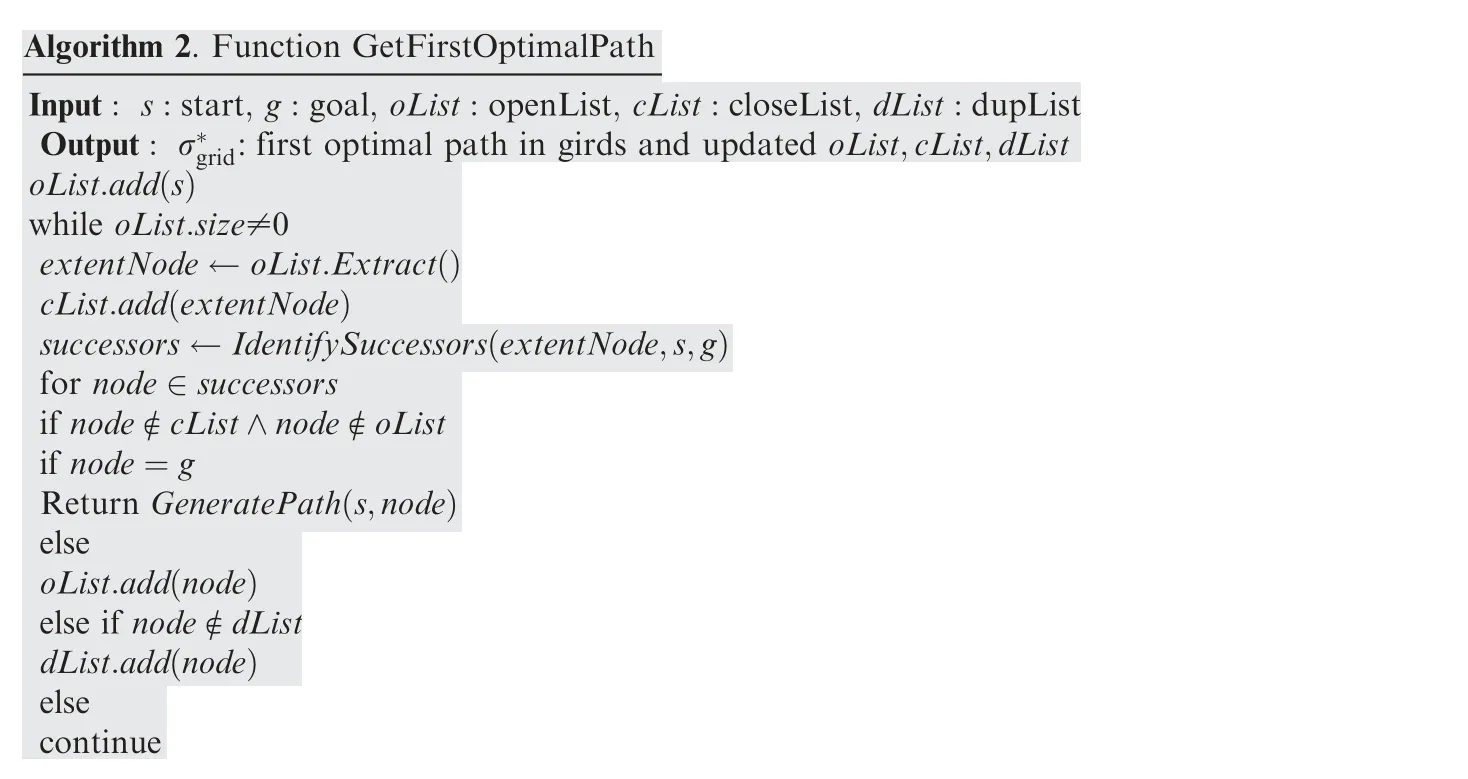

Algorithm 1 consists of three procedures. The first procedure aims to obtain an optimal path in a grid-sampling scenario,which is described in Algorithm 2 in detail.The calculation is almost the same as JPS38except that an open list,a close list and a duplicated list are collected for the following procedures.The meaning of the open list and the close list are consistent with those of JPS algorithm. For the duplicated list, it aims to record other optimal path segment candidates, specifically,the straight first path. Here the straight is consistent with the description of JPS,38which has the opposite meaning to the diagonal. The second procedure introduced in Algorithm 3 explores other optimal paths from the open list until there is no available node in the open list to extract. Finally, the last procedure introduced in Algorithm 4 exploits the duplicated list to find straight first optimal paths, for these paths may belong to different homotopy classes.

Algorithm 1. Multiple JPS Input: s:start, g:goal, oList:openList, cList:closeList, dList:dupList, size:grid size Output: G(V,E): graph of multiple optimal path in grids oList,cList,dList ←φ,φ,φ G(V,E)←φ σ*grid ←GetFirstOptimalPath(s,g,oList,cList,dList)boundary ←■■■2■×size+||σ*grid||G.add(σ*grid)SearchOpenList(s,g,boundary,oList,cList,dList,G)SearchDupList(s,g,boundary,oList,cList,dList,G)Return graph;

Algorithm 2. Function GetFirstOptimalPath Input: s:start, g:goal, oList:openList, cList:closeList, dList:dupList Output: σ*grid:first optimal path in girds and updated oList,cList,dList oList.add(s)while oList.size≠0 extentNode ←oList.Extract()cList.add(extentNode)successors ←IdentifySuccessors(extentNode,s,g)for node ∈successors if node ∉cList ∧node ∉oList if node=g Return GeneratePath(s,node)else oList.add(node)else if node ∉dList dList.add(node)else continue

It is worth noting that theIdentifySuccessorsfunction is exactly the same as JPS algorithm proposed.38TheExtractfunction means popping the node whose cost is the minimal in the open list. TheGeneratePathfunction obtains the path from the first parameter to the second one.the‘‘bad”incomplete path,nonintersecting rays for constructing words should not intersect with edges of the graph obtained in Section 3.3. Once the word of an incomplete path is different from that of any homotopy class in the collection,this incomplete path could be pruned. Furthermore, with the

Algorithm 3. Function SearchOpenList Input: s:start, g:goal, l:boundary, oList:openList, cList:closeList, dList:dupList,G(V,E):graph of multiple optimal paths in grids Output: updated oList,cList,dList,G(V,E)oList ←{node:node ∈oList ∧node.cost ≤l}while oList.size≠0 extentNode ←oList.Extract()cList.add(extentNode)successors ←{node:node ∈IdentifySuccessors(extentNode,s,g)∧node.cost ≤l}for node ∈successors if node=g ∨node ∈V from G(V,E)G.add(GeneratePath(s,node))else if node ∉cList ∧node ∉oList oList.add(node)else if node.cost ≤l ∧node ∉dList dList.add(node)else continue

Algorithm 4. Function SearchDupList Input: s:start, g:goal, l:boundary, oList:openList, cList:closeList, dList:dupList,G(V,E):graph of multiple optimal paths in grids Output: updated oList,cList,dList,G(V,E)dList ←{node:node ∈dList ∧node.cost ≤l}while dList.size≠0 extentNode ←dList.Extract()cList.add(extentNode)successors ←{node:node ∈IdentifySuccessors(extentNode,s,g)∧node.cost ≤l}for node ∈successors if node=g ∨node ∈V from G(V,E)G.add(GeneratePath(s,node))

3.4. Path pruning strategy based on words of paths

The definition of homotopy is based on a full path σ. Here,‘‘full” is used to distinguish incomplete paths that go from the same start configuration but do not reach the same target configuration.On one hand,we aim to figure out which incomplete path should be pruned by homotopy.On the other hand,only by supplementing the incomplete path to a full one can homotopy work. This paradox means that homotopy cannot indicate incomplete paths that should be pruned, but the words of homotopy classes are able to indicate such paths.

Our target in this subsection is to prune invalid path candidates. The word is designed to indicate the incomplete path that does not belong to the optimal collection. To indicate elaborate construction of words, all different available homotopy classes belonging tohave the same word, a.k.a, the empty word. The empty word implies that we do not need to memorize available words and match specific ones for pruning. The key idea is that an incomplete path should be pruned if its word length is greater than zero. For a single-query sampling-based algorithm (e.g.,RRT* and FMT*), once a new steering edge added into the tree intersects a ray, the word of an incomplete path containing this steering edge is not zero. Thus this new steering edge and its corresponding sampling configuration could be pruned.

Recall that the word is defined by rays, as described in Section 2.2.5. The construction of rays consists of two steps.

Step 1.Place representative points inside theith connected component of the obstacles Oi⊂O.

Setp 2.Construct nonintersecting rays from the representative points, denoted as r1,r2,...,rn.

For Step 1,we choose a geometric center as the representative points.If the center does not locate in the obstacle,then a random point inside the obstacle is selected. For Step 2, we construct word rays to avoid intersecting with any edge of the graph. First, the line connecting the start position and the goal position is regarded as the baseline.Then for each representative point,two directions are available for rays that are perpendicular to the baseline. The direction that does not intersect any path is selected as the ray direction.If there exists an overlapped ray, move the representative points away from the geometric center. If a ray intersects with both directions of the edges, this representative point should be omitted.

Algorithm 5 shows the pseudocode for constructing rays of the word.We use the center of the obstacle to check if it is necessary to construct the ray. Based on the fact that the ellipse generated by the informed heuristic (see Algorithm 5, line 6)is exactly an admissible boundary for potential shorter paths(see Fig. 7), no path candidate could break the ellipse boundary. Otherwise, the cost of the new candidate would be worse than the current best path.Here,we name this admissible sampling domain as the informed workspace. According to the description of Algorithm 6, these rays point to the outside of the ellipse. Therefore, the elliptical boundary has already removed those unqualified path candidates before these rays work, and we do not need to check these rays anymore. Further, we give an assurance that although the obstacle with its center outside the ellipse is omitted, the collection represented by the empty word will not change (For detailed proof, see Lemma 1 in Appendix A).

Lemma 1.Let parallel rays are constructed for each obstacle.If the obstacle with the center outside the ellipse is omitted, the lettersofword([σ])wouldnotchange,whereword([σ])∈C(||σ||≤||σ*grid||).

Algorithm 6. Function FindRay Input:lRay:one direction ray, rRay:the opposite direction ray,G(V,E):graph of multiple optimal paths in grids Output: ray: available ray if lRay not cross edge ∈E from G(V,E)Return lRay else if rRay not cross edge ∈E from G(V,E)Return rRay else Return φ

In Algorithm 6,we check two candidates of rays with opposite directions and pick up an available one.If there is no available candidate, then this representative point is omitted. Although the number of rays may be less than the number of obstacles that could lead to incomplete homotopy invariants,this design makes all different available homotopy classes have the same word, a.k.a, the empty word. Moreover, we give an assurance that the empty word could be a sufficient and necessary condition to determine if it belongs to the optimal collection (For detailed proof, see Theorem 1 in Appendix A).

Theorem 1.According to the construction procedure of the word described in Algorithm 5 and Algorithm 6, the empty word is a sufficient and necessary condition to determine if it belongs to the optimal collection of homotopy classes(C(||σ||≤||σ*grid||)).

Algorithm 5. Function SearchDupList Input: s:start, g:goal, l:boundary, obstacles:set of obstacles,G(V,E):graph of multiple optimal paths in grids Output: rayList: list of rays rayList ←φ initDirection ←Vector(g-s)leftDirection ←Vector(initDirection.Y,-initDirection.X)rightDirection ←-leftDirection for obstacle ∈obstacles if Straight disance from s through obstacle.center to g is longer than l continue else if ∀ray ∈rayList s.t. obstacle.center ∉ray ∧obstacle.center ∈obstacle basePoint ←obstacle.center else basePoint ←randomPoint s.t. randomPoint ∈obstacle ∧randomPoint ∉ray,∀ray ∈rayList lRay ←ConstructRay(basePoint,leftDirection)rRay ←ConstructRay(basePoint,rightDirection)ray ←FindRay(lRay,rRay,G)if ray≠NULL rayList.add(ray)Return rayList;

Fig. 7 Informed workspace

Fig. 8 Performance comparison between original algorithms and HIP involved algorithms

Table 1 Statistics for original algorithms and HIP involved algorithms.

4. Experimental setup

In this section,we describe the experimental setup in detail.We first introduce a brief overview of the simulation setup, and then present experiment configurations about parameter settings of scenarios and algorithms.Finally,the evaluation measure is described as the guideline to investigate the advantage of HIP numerically.

To make the description clearer,we recall the following definitions and concepts:

(A) The HIP involved algorithm refers to the motion planning algorithm with its scenario preprocessed by the HIP algorithm.

(B) The informed workspace represents the ellipse area whose focal points are the start and goal position of the scenario, and the value of the major axis equals the maximum distance of paths in this scenario calculated by the HIP involved algorithm (see Fig. 7 and Algorithm 5, line 6)⑩Here the lbest in Fig.7 equals to the distance of the first feasible path obtained by an anytime algorithm.

(C) Convergent solution denotes the optimal result of anytime algorithm obtained before time out.

To validate the effectiveness and efficiency of our proposed HIP algorithm, RRT and RRT*, which are widely used with good performance in different scenarios, are selected as baseline algorithms. We perform HIP involved algorithms and baseline algorithms⑪All experimental algorithms are RRT, RRT*, HIP involved RRT and HIP involved RRT*.in 100 stochastic simulation scenarios with various obstacle distributions. In addition, we repeat our algorithms 20 times in each scenario to avoid randomness and obtain statistical results. Meanwhile, during each algorithm execution round,we save all feasible paths with their distance and generating time. To compare each optimal path in different scenarios,we use the min-max normalization to normalize the distance of the path.

wherex=(x1,x2,...,xn) represents distances of all paths obtained in a scenario from 20 times execution andziis theith normalized distance. For each scenario, we take the distance of the shortest path as min(x) and the longest path as max(x) in normalization.

4.1. Experimental configuration

100 randomly generated scenarios are used for the experiment.The range of all scenarios is set to 100×100 with 5-15 obstacles randomly distributed.Here,obstacles consist of circles and polygons with random size. In each scenario, there always exists a feasible path and an optimal path.

As for parameter settings of baseline algorithms, a biased sampling strategy and a step length parameter are taken into consideration.The probability of biased sampling ζ flows from 0 to 0.3. The step length λ is chosen from 5 to 20. Through comparison,we find that the average of the variance of 20 path results in each scenario is the lowest when λ=10 and ζ=0.05.The lowest variance means that the results of the comparison experiment are least affected by random interference. Therefore, we choose λ=10 and ζ=0.05 as the experimental parameter setting.

Considering the influence of the parameter for HIP, the critical parameter is the step size of the Multiple JPS algorithm.If the step size is too large,the low resolution may cause the preprocessor to miss the optimal word (word(σ*)) and finally lose the optimal path.On the other hand,if the step size goes too small, HIP will obtain the same result at the cost of more calculations. In our experiment, we try 0.25, 0.5, 1 and 2 and choose 1 as the experimental parameter.All simulations were coded in C#and performed on the Windows 10 platform,with I5-4300 Intel CPU and 8G DDR3 RAM.

4.2. Evaluation measure

For the planning problem,the series of solutions found by the anytime algorithm finally converges to the optimal path. We use convergence speed to compare the performance of each algorithm with and without HIP.Specifically,we compare distances of paths obtained from the HIP involved algorithm and baseline algorithm at some specific time thresholds. Here,thresholds are set from 0 to 10 seconds with an interval of 1 second. Then, we divide the collected data into thresholdbased groups for comparison. For example, we select a scenario and execute RRT*and HIP involved RRT*with 10 seconds. Then, we obtain two series of paths⑫For anytime algorithm, a new path is generated based on the old one,iteratively.So we could obtain a series of paths with their distance monotonically decreasing.with a timestamp.⑬Timestamp is the time when the path is generated.We compare the quality of the best results in these two series when the algorithms run to specified time thresholdst,wheret=1,2,3,...,10.To illustrate the effectiveness of the algorithm in different scenarios, we also use the complexity of a scenario, which is defined by the proportion of the obstacle area in the informed workspace. Moreover, 3 typical scenarios are selected to show convergence improvement in more dense timestamps. Finally, the preprocessing time of each simulation is collected to demonstrate the overhead of the HIP algorithm.

5. Experimental results

This section evaluates the effectiveness of HIP quantitatively through experiments and simulations. Based on the evaluation measure introduced in Section 4, we first present a result overview of performance comparison. A total of 8000 simulations are executed to demonstrate the validation of the HIP algorithm. Then, we demonstrate the effectiveness of HIP in detail by a discussion on specific scenarios.These scenarios have complex narrow corridors, which are challenging to sampling-based algorithms. Finally, we discuss the overhead of HIP based on collected experimental data.

5.1. Statistical validity

Two versions of algorithms,the original and the HIP involved,are prepared for comparison. For each algorithm, 2000 simulations are executed based on experimental setup parameters in Section 4.1. For each simulation, we collect the length of the optimal path obtained by the anytime algorithm between 1 second and 10 seconds at 1-second intervals.The normalized path lengths of two versions with the same runtime are compared to evaluate the convergence speed. The experimental results grouped by running time are presented in Fig. 8. As shown in the figure, the horizontal axis in the two subfigures represents the query time of paths, and the vertical axis represents the normalized path length obtained by two versions of algorithms. Green and yellow boxes denote the original algorithm and HIP involved algorithm,respectively.The triangles in each box represent the mean value of the normalized distance.Detailed statistical data presented in Fig.8 is listed in Table 1.It is clearly shown in the figure and the table that, at the same query time, the median and mean value of paths’ distance obtained by the original version are longer than that preprocessed by HIP, which indicates that HIP accelerates the convergence of the anytime algorithm to the optimal path.Meanwhile, HIP decreases the variance of the results and gets more stable results in practice. In general, the median, mean,and ariance of the RRT experiment result decrease from 0.0624 to 0.0283, 0.1345 to 0.0417 and 0.0318 to 0.0019,respectively. For RRT*, the median, mean, and variance decrease from 0.0189 to 0.0150, 0.0740 to 0.0359 and 0.0201 to 0.0031, respectively.

To demonstrate the improvement degree in different scenarios, performance graphs are presented in Fig. 9 according to the complexity of the scenario.The proportion of the obstacle area in the informed workspace, named as the obstacle ratio,is used to indicate the complexity.In the figure,the horizontal axis represents scenario complexity, and the vertical axis represents the average normalized distance of converged paths.⑭Here the l best(l need to be italic and best need to be in subscript)in Fig.7 equals to the distance of the first path obtained by anytime algorithm.Every ‘‘+” symbol in the graph represents an averaged⑮All experimental algorithm are RRT,RRT*, HIP involved RRT and HIP involved RRT*.normalized distance of converged paths in a specific scenario. The closer the image of the probability density locates to the left, the better the algorithm’s performance is.HIP involved algorithms improve the performance with lower variance.

Fig. 9 Scenario obstacle ratio distribution based performance comparison between original algorithms and HIP involved algorithms

5.2. Illustrative scenarios

To demonstrate the effectiveness of the HIP algorithm clearer,3 scenario cases are selected to show the performance in Fig. 10. These scenarios have many narrow or twisted corridors connecting different parts of free space, which is a challenge for sampling-based planning algorithms. For every scenario, each version of the algorithms is executed 100 times.Consistent with the previous box plot, the horizontal axis is the query time of paths,and the vertical axis is the normalized path length obtained by two versions of the algorithms. The only difference is that the time scale in the horizontal axis is different, because the time required for the convergence of each scenario is different. From the experiment result, we can see that when the algorithm running time exceeds a small threshold, the HIP involved algorithm has better performance in the mean,the median and the variance.Moreover,the mean and median value of HIP involved algorithm change more drastically in the early period,which means that the HIP algorithm accelerates the convergence speed of planning algorithms.

Fig. 10 Typical scenarios and performance comparison

Here, we show an example of how HIP prunes search spaces in Fig.11.Fig.11(a)represents the expanding tree generated by the original RRT* algorithm, and Fig. 11(b) represents HIP involved result. In the figure, the expanding tree consists of nodes and edges in green, and rays of words are bold segments labeled in orange. Rays in the figure show how to reveal unrecognized useless samples and edges.In general, HIP preprocessor prunes the useless search space, which would consume lots of computational resources in the original algorithm.In this way,focusing more computational resources on the space that needs to be exploited makes the algorithm achieve higher precision in the same running time. HIP indicates the optimal collection as the empty word, which is regarded as a necessary criterion for the optimal path.The criterion is that no edge could go across any ray.If the edge contains the new sampling against the criteria, the HIP involved algorithm is able to abandon the new sampling. Therefore,useless sampling is avoided, and better performance is achieved.

Fig. 11 Expanding trees generated by original RRT* and HIP involved RRT*

Fig. 12 PDF of preprocessing time

5.3. Overhead analysis

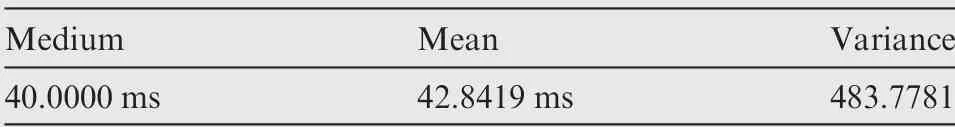

Last but not least,it is common knowledge that any preprocessor has an overhead problem. Any preprocessor gains its benefit with time consumption. For this reason, in Fig. 10(b), the HIP involved algorithm performs worse with 0.4 seconds. On one hand, in this scenario, the preprocessor consumes 63.25 milliseconds on average, which influences the path distance in a short time. On the other hand, the subsequent running result shows that the benefits brought by the HIP algorithm are well worth the effort. To present the time consumption of HIP,the Probability Density Function(PDF)of the preprocessing time for 100 previously generated scenarios is illustrated in Fig. 12. A kernel-density estimation using Gaussian kernels is utilized to estimate the distribution of preprocessing time. Statistics are listed in Table 2. The average execution time of HIP is 42.84 ms, which is acceptable to deal with anytime problems.

In general,HIP performs better than the original version at each comparative moment.Even though Fig.10(b)shows that the preprocessing version is not as good as the original one due to overhead at the first moment,HIP performs better in terms of the medium, mean and variance in the following moments.From the experimental statistics above,HIP can improve convergence speed with an overhead of approximately 42 milliseconds for preprocessing.

Table 2 Statistics of preprocessing time for all experimental scenarios.

6. Conclusions

Sampling-based anytime algorithms face a problem in finding a better path to prune useless candidates while introducing unrecognized useless candidates by sampling. In this paper,we propose a Homotopy class Informed Preprocessor (HIP)to break the paradox. HIP is able to check whether words of path candidates belong to the optimal collection. In this way, HIP reveals wasteful edges of the sampling-based graph before a better path is obtained. To obtain the optimal collection, the multiple jump point search algorithm is proposed to provide extra information for pruning the search space. After implementing HIP on RRT and RRT* algorithms, the experimental result obtained in many random scenarios presents a smaller medium and variance. Compared with original algorithms, HIP involved algorithms give a better solution during the same running time.Therefore,HIP can greatly improve the convergence speed of anytime sampling-based algorithms.Implementations to other sampling-based algorithms and design of construction for words are open avenues for further research.

Declaration of Competing Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Acknowledgements

This work was co-supported by the National Natural Science Foundation of China (Nos. 61772055, Grant 61872169, the Technical Foundation Project of Ministry of Industry and Information Technology of China (No. JSZL2016601B003),and Equipment Preliminary R&D Project of China (No.41402020102).

Appendix A.

Lemma 1.Let parallel rays be constructed for each obstacle. If the obstacle with the center outside the ellipse is omitted, the lettersofword([σ])wouldnotchange,whereword([σ])∈C(||σ||≤

Proof.Recall that some important symbols are defined as follows:

●σ: A feasible path;

●[σ]: A homotopy class contains the path σ;

●||σ||: The distance of a feasible path;

●word(σ): The word of a feasible path;

●word([σ]):The word of a homotopy class,which is equal to word(σ).

According to Bhattacharya’s work35,37,this construction of word is a complete homotopy invariant for trajectories connecting the same set of points. That is,σ1,σ2:[0,1]→R2-Owith σi(0)=winit, σi(1)=wgoalare homotopic if and only if word(σ1)=word(σ2). For those omitted obstacles,their ray will not intersect any path according to construction rules from Algorithm 18. The reason is listed as follows:

For any rayrstarting from an omitted obstacle, its direction is perpendicular to the baseline from the start position to goal position and pointing to the outside of the ellipse.Let a feasible path σ′intersect withr.Then there exists a waypoint of σ′located outside the boundary of an ellipse,⑯The boundary of an ellipse equals to ||σ*grid||.which i mpliesThen, clearly, for ∀σ whereword(σ′)≠word([σ]). Compared to σ,word(σ′) contains the letter constructed byr. Therefore, the letter coming fromrdoes not belong to ∀word([σ]), where[σ]∈C(||σ||≤||σ*grid||). Letters of word([σ]) would not change if the obstacle with the center outside the ellipse is omitted.

Theorem 1.According to the construction procedure of the word described in Algorithm 5 and Algorithm 6, the empty word is a sufficient and necessary condition to determine if it belongs to the optimal collection of homotopy classes (C(||σ||≤||σ*grid||)).

Proof.Let parallel rays be constructed for each obstacle.According to Bhattacharya’s work37,this construction of word is a complete homotopy invariant for trajectories connecting the same set of points.That is, σ1,σ2:[0,1]→R2-O withσi(0)=winit, σi(1)=wgoalare homotopic if and only ifword(σ1)=word(σ2).According to Lemma1,omitted obstacles and their ray will not influence words of homotopy classesbelonging to C(||σ||Collecting all letters in the word,denoted asΩ,satisfies C(||σ||≤Then,erase all of theseletters from each available word in this scenario.

(Sufficiency.) Clearly, letters of word(σ) that satisfyall belong to Ω. Once all the letters in Ω are erased, word(σ) would be the empty word. Thus, if word(σ) is empty, then [σ] belongs to

(Necessity.) Let [σ] belong toand then letters from word(σ) must belong to Ω. Once all the letters in Ω are erased, no letter belongs to word(σ). Therefore,word(σ) is an empty word.

CHINESE JOURNAL OF AERONAUTICS2021年1期

CHINESE JOURNAL OF AERONAUTICS2021年1期

- CHINESE JOURNAL OF AERONAUTICS的其它文章

- Tangling and instability effect analysis of initial in-plane/out-of-plane angles on electrodynamic tether deployment under gravity gradient

- Aerodynamic periodicity of transient aerodynamic forces of flexible plunging airfoils

- Effects of swirl brake axial arrangement on the leakage performance and rotor stability of labyrinth seals

- Experimental and computational investigation of hybrid formation flight for aerodynamic gain at transonic speed

- Tomography-like flow visualization of a hypersonic inward-turning inlet

- Hypersonic reentry trajectory planning by using hybrid fractional-order particle swarm optimization and gravitational search algorithm