Artificial Intelligence Applications in the Development of Autonomous Vehicles: A Survey

Yifang Ma, Zhenyu Wang, Hong Yang, and Lin Yang

Abstract— The advancement of artificial intelligence (AI) has greatly stimulated the development and deployment of autonomous vehicles (AVs) in the transportation industry. Fueled by big data from various sensing devices and advanced computing resources, AI has become an essential component of AVs for perceiving the surrounding environment and making appropriate decisions in motion. To achieve the goal of full automation (i.e., self-driving), it is important to understand how AI works in AV systems. Existing research has made great efforts to investigate different aspects of applying AI in AV development. However, few studies have offered the research community a thorough examination of current practices in implementing AI in AVs. Thus, this paper aims to narrow the gap by providing a comprehensive survey of key studies in this research avenue. Specifically, it analyzes their use of AI in supporting the primary applications in AVs: 1) perception; 2) localization and mapping; and 3) decision making. It investigates current practices to understand how AI can be used and what challenges and issues are associated with its implementation. Based on the exploration of current practices and technology advances, this paper further provides insights into potential opportunities regarding the use of AI in conjunction with other emerging technologies: 1) high definition maps, big data, and high performance computing; 2) augmented reality (AR)/virtual reality (VR) enhanced simulation platforms; and 3) 5G communication for connected AVs. This paper is expected to offer a quick reference for researchers interested in understanding the use of AI in AV research.

I. Introduction

THE rapid development of autonomous vehicles (AVs) has drawn great attention worldwide in recent years. AVs are anticipated to innovate modern transportation systems and address many long-standing transportation challenges related to congestion, safety, parking, energy conservation, etc. Arguably, many AV technologies have seen considerable advancements in laboratory tests, closed-track tests, and public road tests, bringing AVs closer to real-world applications. Such advancements have benefited greatly from significant investments and promotion by numerous stakeholders such as transportation agencies, information technology (IT) giants (e.g., Google, Baidu, etc.), transportation network companies (e.g., Uber, DiDi, etc.), automobile manufacturers (e.g., Tesla, General Motors, Volvo, etc.), chip/semiconductor makers (e.g., Intel, Nvidia, Qualcomm, etc.), and so forth. Nonetheless, the concept of AVs or self-driving vehicles is not new. It is the recent major strides in artificial intelligence (AI), together with innovative data collection and processing technologies, that have driven AV research to unprecedented heights.

The prevailing wisdom of earlier (semi-)automated driving concepts was closely tied to advanced driver assistance systems (ADAS) that assist drivers in the driving process. These systems support applications such as lane departure warning and blind spot alerting. They aim to automate, adapt, and improve some of the vehicle systems for enhanced safety by reducing errors associated with human drivers [1]. Since drivers are still required to perform various driving tasks, these systems are considered the lower levels (Levels 1 and 2) of automation according to the classification of the Society of Automotive Engineers (SAE) [2], [3]. As human driver involvement is reduced, vehicle systems will proceed to Level 3 (conditional automation), Level 4 (high automation), and ultimately Level 5 (full automation). At Level 5, a vehicle is expected to drive itself under all environmental conditions. This truly self-driving level requires the vehicle to operate with the capabilities of “perceiving”, “thinking”, and “reasoning” like a human driver. AI advancements in recent years have made AI a natural fit for meeting such requirements in AVs. In particular, the success of AI in sophisticated applications such as AlphaGo has significantly promoted research on leveraging AI in AV development. Especially, the advent of deep learning (DL) has enabled many studies to tackle challenging issues in AVs, for example, accurately recognizing and locating obstacles on roads and making appropriate decisions (e.g., controlling the steering wheel and acceleration/deceleration).

Overall, it has been shown that various AI approaches can provide promising solutions for AVs in recognizing the environment and propelling the vehicle with appropriate decision making. A few studies have reviewed the applications of AI in a specific component of AV development, for example, perception [4], motion planning [5], decision making [6], and safety validation [3], [7], [8]. Nonetheless, there is still no comprehensive review of the state of the art, the progress, and the lessons learned from AI applications in supporting AVs, especially in recent years since AlphaGo defeated human Go masters. A thorough investigation of the methodological evolution of AI approaches, their issues and challenges, and future opportunities can help practitioners and researchers deploy, improve, and/or extend many of the current achievements in a timely manner.

Therefore, this paper aims to provide a survey of contemporary practices on how AI approaches have been involved in AV-related research in recent years, to explore the challenging problems, and to identify promising future research directions. To fulfill these goals, this paper starts with a description of the framework for performing the literature review and then moves on to a critical descriptive analysis of existing studies. It then summarizes current practices of using AI for AV development. This is followed by a synthesis of major challenges, issues, and needs regarding current AI approaches in AV applications. Based on this investigation, the paper further provides suggestions on potential opportunities and future research directions. Finally, the conclusion is presented.

II. Preparation for Literature Review

In order to conduct a comprehensive review of existing research efforts, we performed an initial exploratory search of published work in the following critical sources: 1) Web of Science (WoS); 2) Scopus; 3) IEEE Xplore; and 4) Google Scholar (GS). The search keywords combined the thematic words “Autonomous vehicles”, “AVs”, OR “Self-driving” AND “Artificial intelligence”, “Machine learning”, “Deep learning”, “Reinforcement learning”, OR “Neural network”. Subsequently, we defined search criteria to filter and select the key papers for a comprehensive review. The key criteria included keywords related to the research topic, work published in English, peer-reviewed papers published by March 2019, and access to full papers. Depending on the data source, the keywords were matched against article titles, abstracts, metadata, etc. Next, five research questions (RQs) were developed:

1) RQ1: How are existing research papers structured?

2) RQ2: What are the main focuses of the papers?

3) RQ3: What AI approaches are applied in the papers?

4) RQ4: What are the main issues and challenges in applying AI approaches?

5) RQ5: What are the future opportunities of AI approaches in conjunction with other emerging technologies?

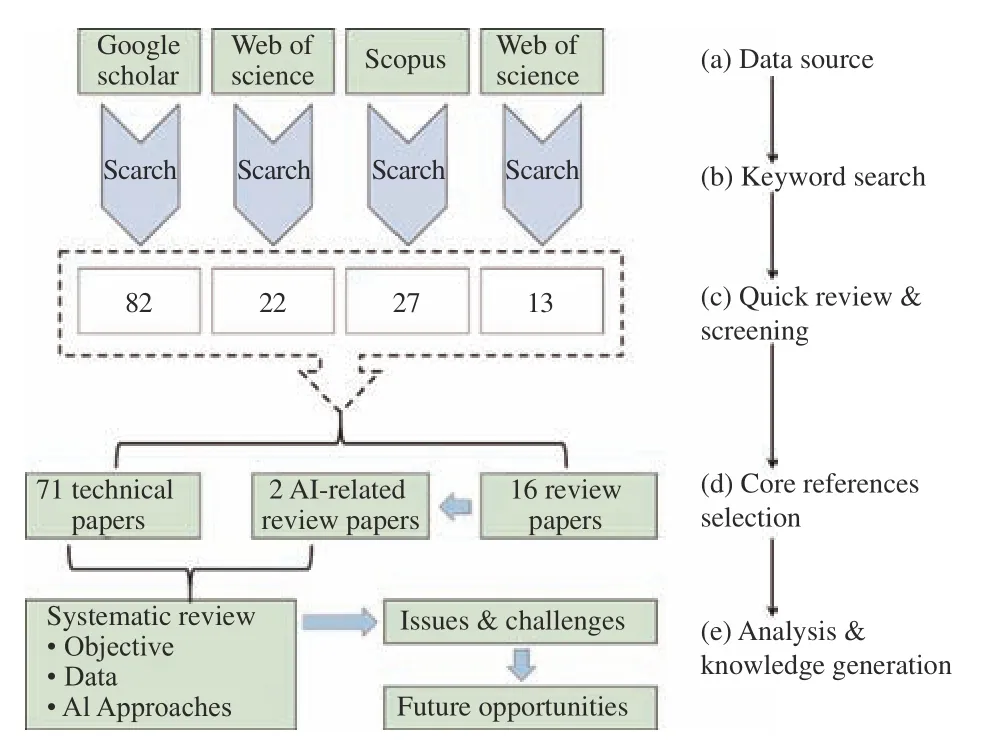

Fig. 1. Schematic of literature identification and research plan.

As shown in Fig. 1, key relevant studies published by March 2019 were identified. An initial set of 71 technical publications was identified, and 16 review papers pertinent to AVs were also sourced. Some publications were excluded based on content analysis because they did not specifically deal with AI approaches. Then, two reviews focused on AI approaches in AV applications were identified. Specifically, Zitzewitz [9] investigated the application of neural networks (NN) in AVs but did not focus on other promising and influential AI approaches such as machine learning (ML) approaches (e.g., support vector machine (SVM)), DL approaches (e.g., long short-term memory (LSTM)), and reinforcement learning (RL) approaches (e.g., deep Q-network (DQN)). This review is not publicly available. Later, Shafaei et al. [7] specifically focused on safety issues raised by uncertainty in ML approaches: 1) incompleteness of training data; 2) distributional shift; 3) differences between training and operational environments; and 4) uncertainty of prediction. Despite these efforts, there is still no comprehensive examination that can adequately address the above research questions. Thus, we conducted a systematic review by identifying and discussing key components (objective, data, AI approaches), their issues and challenges, and future opportunities. The identified key publications are summarized and ordered by year in the Appendix.

III. Descriptive Analysis

One way to characterize a particular state of the art is to conduct a descriptive analysis of factual data, independent of the content of the items. Although limited, information on the temporal, geographical, AI approach, and application distributions can be of great importance in understanding the current state of the art. Fig. 2 shows the overall trend of studies on AI approaches in various AV applications.

As shown in Fig. 2, publications on AI approaches in AV applications are on an upward trend, with 2018 being the peak year with 29 identified publications. Six publications were identified in the first quarter of 2019, and additional publications focusing on AI approaches in AV applications can be expected in the remainder of the year.

Fig. 2. Studies on AI approaches in the development of AVs (Note: data of 2019 include 1st quarter only).

To examine the geographical distribution, we sorted the identified publications by the nationality of the corresponding authors. Most publications involved corresponding authors from the United States (21 publications), followed by China (18), European countries (16), and other countries (16). Many publications involve international collaboration.

Considering the AV application distribution, 38 papers mainly examined the applications of AI approaches in AV perception, followed by studies primarily concerning decision making (31) and localization and mapping (2). One reason is that perception of the surrounding environment is a fundamental requirement for AV applications, and mature, classical image recognition approaches (mainly ML and DL approaches) have been widely applied in many other areas. It should be noted that AV-related studies that do not focus on perception or on localization and mapping often acquire direct vehicle states such as location and speed from simulation platforms as model inputs, rather than deriving them from images or 3D point clouds in a perception stage.

Finally, the key AI approaches used in existing studies were examined. Seventeen technical publications used ML approaches. Forty-six technical papers introduced DL approaches, including convolutional neural networks (CNN), LSTM, and deep belief networks (DBN), for applications such as vehicle perception, automatic parking, and direct decision making. Meanwhile, eight technical publications used RL approaches, mainly to imitate human-like decision making.

IV. Current Practices of Using AI for AVs

A. Training AI for Perception, Localization, and Mapping

The generalized structure of the AV driving system for recognizing objects on roads includes two primary components: 1) a perception module that provides detection and tracking information about the surroundings, such as vehicles, pedestrians, and traffic signs, based on inputs collected from various types of sensors such as radar, light detection and ranging (LIDAR), or cameras; and 2) a localization and mapping module that estimates the state of the AV relative to its environment, for example, the distance of the AV to other vehicles, its position on the map, and its relative speed.

1) Perception Algorithms

In this paper, perception is considered as an AV’s use of sensors to continuously scan and monitor the environment, similar to human vision and other senses. Based on the required output and goal, existing perception algorithms can be grouped into two categories: a) mediated perception, which develops detailed maps of the AV’s surroundings by analyzing distances to vehicles, pedestrians, trees, road markings, etc.; and b) direct perception, which provides integrated scene understanding and decision making. Mediated perception uses AI approaches such as CNN to detect single or multiple objects. One of the most classical perception tasks mastered by AI approaches is traffic sign recognition. The accuracy of AI approaches such as deep neural networks (DNN) has reached 99.46%, outperforming human recognition in some tests [10]. Related tasks such as detecting lanes and traffic lights have also achieved accuracies of a similar level when CNN model structures are applied. For example, Li et al. [11] proposed combining road knowledge and fuzzy logic rules to detect roads for the vision navigation of AVs. Later, Li et al. [12] proposed an adaptive randomized Hough transform algorithm (ARHT) to detect lanes and demonstrated its validity against genetic algorithm-based lane detection for AVs. Petrovskaya and Thrun [13] combined a Rao-Blackwellized particle filter and a Bayesian network to detect and track moving vehicles with Stanford’s autonomous driving robot Junior. Fagnant and Kockelman [14] proposed a CNN approach that can detect more than 9000 objects in real time at 40–70 frames per second (fps) with a mean accuracy of nearly 80%, making it capable of detecting almost all objects necessary for automotive tasks in video from an onboard camera.
Such approaches use techniques such as edge detection and saliency analysis to extract high-level features for identifying objects. Additional classifiers such as support vector machines (SVM) have been introduced to further classify such CNN-learned features. For example, Zeng et al. [15] introduced an extreme learning machine to classify deep perceptual features extracted via CNN and achieved a competitive recognition performance of 99.54% on the German traffic sign benchmark data.
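Lane detection with the Hough transform, as in [12], rests on a voting idea: every edge pixel votes for all lines that could pass through it, and lane markings emerge as peaks in the accumulator. The Python sketch below implements the standard (deterministic) Hough transform, not the adaptive randomized variant (ARHT) of [12]. It assumes edge pixels have already been extracted, and the function name `hough_lines` and its parameters (`theta_steps`, `rho_res`, `top_k`) are illustrative choices.

```python
import math
from collections import Counter


def hough_lines(edge_points, theta_steps=180, rho_res=1.0, top_k=1):
    """Standard Hough transform for straight lines.

    Each edge pixel (x, y) votes for every line x*cos(theta) + y*sin(theta) = rho
    passing through it; the most-voted (rho, theta) bins are returned.
    """
    votes = Counter()
    for x, y in edge_points:
        for t in range(theta_steps):
            theta = math.pi * t / theta_steps  # theta sampled in [0, pi)
            rho = x * math.cos(theta) + y * math.sin(theta)
            votes[(round(rho / rho_res), t)] += 1  # quantize rho into bins
    # Convert the strongest bins back to (rho, theta, vote_count)
    return [
        (r_bin * rho_res, math.pi * t / theta_steps, count)
        for (r_bin, t), count in votes.most_common(top_k)
    ]


# Hypothetical edge pixels lying on the vertical line x = 5 (e.g., a lane marking)
edges = [(5, y) for y in range(10)]
rho, theta, count = hough_lines(edges)[0]
print(rho, round(theta, 3), count)
```

A randomized variant such as ARHT reduces this exhaustive voting cost by sampling point pairs instead of letting every pixel vote for every angle.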

Unlike single-object perception, multi-task object perception imposes knowledge sharing while solving multiple correlated tasks simultaneously, which can boost the performance of some or even all of the tasks. For example, Chu et al. [16] introduced region-of-interest voting to implement multi-task object detection based on CNN and validated the proposed approach on the KITTI and PASCAL2007 vehicle datasets. Meanwhile, Chen et al. [17] introduced a Cartesian product-based multi-task combination to simultaneously optimize object detection and object distance prediction, taking full advantage of the dependency between these tasks.
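Multi-task perception is commonly trained by minimizing a weighted sum of per-task losses computed on shared features, so that gradients from one task shape the representation used by the other. The Python sketch below assumes a detection (classification) term and a distance-regression term, echoing the task pair in [17]. The weight `lam`, the smooth-L1 choice, and all function names are illustrative assumptions, not the formulations of [16] or [17].

```python
import math


def cross_entropy(logits, label):
    """Softmax cross-entropy for one object's class logits (numerically stable)."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum - logits[label]


def smooth_l1(pred, target, beta=1.0):
    """Smooth L1 (Huber-style) loss for distance regression."""
    d = abs(pred - target)
    return 0.5 * d * d / beta if d < beta else d - 0.5 * beta


def multitask_loss(logits, label, dist_pred, dist_true, lam=0.5):
    """Joint objective: detection term + lam * distance term (shared backbone assumed)."""
    return cross_entropy(logits, label) + lam * smooth_l1(dist_pred, dist_true)


# Hypothetical outputs of two heads sharing one backbone, for a single object
print(multitask_loss([2.0, 0.5, -1.0], label=0, dist_pred=23.0, dist_true=25.0))
```

Because both terms are differentiated with respect to the same shared parameters, improving distance prediction can also change the features available to detection, which is the knowledge sharing described above.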

In addition to detecting objects such as vehicles and traffic signs, semantic segmentation of the drivable road surface plays an important role in AV perception. Semantic segmentation links each pixel in an image to a class label such as pedestrian, vehicle, or road, helping AVs better understand the context of the environment. For example, John et al. [18] used a special CNN encoder-decoder architecture. After the input image was processed through the network, a pixel-wise classification was computed to determine the label of each pixel. The approach reported a prediction accuracy of 88% for cars and 96% for roads.
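The final pixel-wise classification step can be illustrated directly: each pixel receives the label with the highest class score, and a per-class accuracy (the kind of figure reported in [18]) is the share of ground-truth pixels of that class labeled correctly. The Python sketch below uses plain nested lists. The class indices, the toy score maps, and this particular accuracy definition are assumptions, since [18] may define its metric differently.

```python
def segment(scores):
    """Pixel-wise argmax: scores[r][c] is a list of per-class scores for pixel (r, c)."""
    return [[max(range(len(px)), key=px.__getitem__) for px in row] for row in scores]


def per_class_accuracy(pred, truth, num_classes):
    """Fraction of ground-truth pixels of each class that are predicted correctly."""
    correct = [0] * num_classes
    total = [0] * num_classes
    for pred_row, true_row in zip(pred, truth):
        for p, t in zip(pred_row, true_row):
            total[t] += 1
            correct[t] += int(p == t)
    return [c / n if n else float("nan") for c, n in zip(correct, total)]


ROAD, CAR = 0, 1  # hypothetical class indices
scores = [
    [[0.9, 0.1], [0.8, 0.2], [0.3, 0.7]],
    [[0.6, 0.4], [0.4, 0.6], [0.2, 0.8]],
]
truth = [[ROAD, ROAD, CAR], [ROAD, ROAD, CAR]]
pred = segment(scores)
print(pred, per_class_accuracy(pred, truth, num_classes=2))
```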

As for direct perception, AVs complete part of the mapping-related computation (for example, determining the AV’s current angle on the road and the distance to surrounding vehicles and lane markings) but do not create a complete local map or any detailed trajectory plans. Thus, direct perception skips the majority of the localization and mapping stage and directly outputs the steering angle and vehicle speed. Despite their involvement in decision making, studies on direct perception are counted in the perception group, as shown in Fig. 2. For example, Chen et al. [19] used TORCS image data as input and developed a DNN to determine an AV’s steering angle and velocity. Furthermore, the same perception system was tested on videos and images from the KITTI database, showing that the system could recognize lane configurations and transitions in the real world. Similarly, Bojarski et al. [20], [21] proposed PilotNet, a CNN framework consisting of one normalization layer, five convolutional layers, and three fully connected layers, which trains AVs to steer on the road with camera images as the input and steering parameters as the output.
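To make the PilotNet description concrete, the Python sketch below traces feature-map sizes through the convolutional stack using the valid-convolution formula out = (in - kernel) // stride + 1. The layer settings are assumptions that follow the configuration commonly cited for PilotNet, since the text above gives only the layer counts: a 66 x 200 input, three 5 x 5 stride-2 convolutions with 24, 36, and 48 filters, two 3 x 3 stride-1 convolutions with 64 filters, and fully connected layers of 100, 50, and 10 units feeding one steering output.

```python
def conv_out(size, kernel, stride):
    """Output length of a 'valid' (unpadded) convolution along one axis."""
    return (size - kernel) // stride + 1


# Assumed PilotNet-style conv layers: (filters, kernel, stride).
# The normalization layer that precedes them does not change the shape.
CONV_LAYERS = [(24, 5, 2), (36, 5, 2), (48, 5, 2), (64, 3, 1), (64, 3, 1)]
FC_UNITS = [100, 50, 10, 1]  # three hidden FC layers, then the steering output


def trace_shapes(height=66, width=200, channels=3):
    """Return (H, W, C) after each conv layer and the flattened feature size."""
    shapes = []
    h, w, c = height, width, channels
    for filters, k, s in CONV_LAYERS:
        h, w, c = conv_out(h, k, s), conv_out(w, k, s), filters
        shapes.append((h, w, c))
    return shapes, h * w * c


shapes, flat = trace_shapes()
for shape in shapes:
    print(shape)
print("flattened:", flat, "-> FC", FC_UNITS)
```

The trace shows why such a network is small enough for onboard use: five convolutions compress the camera image into a short feature vector before the fully connected layers regress the steering command.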

2) Localization and Mapping

Localization and mapping have evolved from stationary, indoor mapping for mobile robot applications to outdoor, dynamic, high-speed localization and mapping for AVs. This problem is also known as simultaneous localization and mapping (SLAM).

Many prototype vehicles, such as the Google, Uber, and Navya Arma AVs, have used a priori mapping methods. These methods consist of pre-driving specific roads and collecting detailed sensor data, such as 3D images and highly accurate GPS information. The resulting detailed maps are stored in large databases so that a vehicle can drive autonomously on those specific roads. Localization is performed by observing similarities between the a priori map and the current sensor data, whereas obstacle detection is achieved by observing discrepancies between them. For example, Alcantarilla et al. [22] fused data from GPS, inertial odometry, and cameras as the input of SLAM to estimate the vehicle trajectory and a sparse 3D scene reconstruction. Image pairs were aligned based on similarity and further used to detect potential street-view changes. In addition, assuming that objects recognized by the AV’s sensors lie on the ground plane, Vishnukumar et al. [23] estimated car distances with camera and LIDAR signals from the KITTI dataset. Given the low resolution of the input images, a two-CNN system was used, with one CNN for close-range (2–25 m) and another for far-range (15–55 m) object detection. The outputs of the two CNNs were then combined to estimate the final distance projection.
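The a priori map principle (localize by similarity, detect obstacles by discrepancy) can be sketched on a toy 2D occupancy grid. The Python below searches integer grid offsets for the shift that best aligns the current scan with the stored map, then reports scan cells the map does not explain as obstacle candidates. The grid representation, the brute-force search window, and all names are illustrative assumptions; practical systems match much richer 3D data and estimate continuous poses.

```python
def best_offset(prior_map, scan, search=3):
    """Find the integer (dx, dy) shift that maximizes overlap of scan with the prior map."""
    best, best_score = (0, 0), -1
    for dx in range(-search, search + 1):
        for dy in range(-search, search + 1):
            score = sum((x + dx, y + dy) in prior_map for x, y in scan)
            if score > best_score:
                best, best_score = (dx, dy), score
    return best, best_score


def discrepancies(prior_map, scan, offset):
    """Occupied scan cells (in map coordinates) that the prior map does not explain."""
    dx, dy = offset
    return {(x + dx, y + dy) for x, y in scan} - prior_map


# Hypothetical prior map: a wall along y = 0 and a pole at (4, 3)
prior = {(x, 0) for x in range(10)} | {(4, 3)}
# Current scan in vehicle coordinates: the same scene shifted by (-2, -1), plus a new object
scan = {(x - 2, -1) for x in range(10)} | {(2, 2), (5, 4)}
offset, score = best_offset(prior, scan)
print(offset, score, discrepancies(prior, scan, offset))
```

The recovered offset plays the role of localization, and the leftover cell is the discrepancy that would be treated as a potential obstacle.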

B. Training AI for Decision Making

Given learned information about the surroundings and the vehicle states (velocity and steering angle), decision-making applications such as automatic parking, path planning, and car following have been investigated.

Automated parking is automated driving in the restricted scenario of parking with low-speed maneuvering. Beyond semi-automated parking using ultrasonic sensors or radars, automated parking using camera, radar, or LIDAR sensors has been investigated [24], where potential applications including 3D point cloud-based vehicle/pedestrian detection, parking slot marking recognition, and free space identification are discussed. Meanwhile, Notomista and Botsch [25] proposed a two-stage random forest-based classifier to assist autonomous parking and validated the approach during the Audi Autonomous Driving Cup, a college-level contest.

Conventional path planning methods have been widely explored. These methods typically include artificial potential field, distance control algorithm, bumper event approach, wall-following, sliding mode control, Dijkstra, stereo block matching, Voronoi diagram, SLAM, vector field histogram, rapidly exploring random tree, curvature velocity, lane curvature, dynamic window, and tangent graph [26]. However, most of these approaches are time-consuming and relatively difficult to implement on real robot platforms. Thus, AI-based approaches such as NN, genetic algorithms (GA), simulated annealing (SA), and fuzzy logic [11] have been introduced and have achieved relatively high performance. For example, Hardy and Campbell [27] introduced an obstacle trajectory clustering algorithm and simultaneously optimized multiple continuous contingency paths for AVs. Al-Hasan and Vachtsevanos [28] proposed an intelligent learning support machine that learns from previous path planning experiences to make optimized path planning decisions at high speeds in natural environments. Meanwhile, Chen et al. [29] combined fuzzy SVM and a general regression neural network to support path planning in off-road environments. Sales and Correa [30] used NN to perceive surrounding environments and proposed an adaptive finite state machine for navigating AVs in urban road environments. Similarly, Akermi et al. [31] proposed a sliding mode control approach for path planning of AVs, in which an inner radial basis function neural network (RBFNN) and a fuzzy logic system were found to handle uncertainties and mismatched disturbances well in simulation scenarios.
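Dijkstra’s algorithm, one of the conventional planners listed above, is compact enough to sketch directly. The Python below finds a shortest 4-connected path on a hypothetical occupancy grid with unit step costs using a priority queue. The grid encoding and function name are illustrative assumptions, and real AV planners must additionally respect vehicle kinematics and moving obstacles.

```python
import heapq


def dijkstra_grid(grid, start, goal):
    """Shortest path on a grid where grid[r][c] == 1 marks an obstacle.

    Returns (cost, path), or (inf, []) if the goal is unreachable.
    """
    rows, cols = len(grid), len(grid[0])
    dist = {start: 0}
    parent = {}
    frontier = [(0, start)]
    while frontier:
        d, (r, c) = heapq.heappop(frontier)
        if (r, c) == goal:
            # Walk parent links back to the start to recover the path
            path = [goal]
            while path[-1] != start:
                path.append(parent[path[-1]])
            return d, path[::-1]
        if d > dist[(r, c)]:
            continue  # stale queue entry superseded by a shorter one
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                nd = d + 1  # unit cost per move
                if nd < dist.get((nr, nc), float("inf")):
                    dist[(nr, nc)] = nd
                    parent[(nr, nc)] = (r, c)
                    heapq.heappush(frontier, (nd, (nr, nc)))
    return float("inf"), []


grid = [
    [0, 0, 0, 0],
    [1, 1, 0, 1],
    [0, 0, 0, 0],
]
cost, path = dijkstra_grid(grid, (0, 0), (2, 0))
print(cost, path)
```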

Compared with conventional car-following models, such as the linear model, distance inverse model, memory function model, expected distance model, and physiological-psychological model, AI-based car-following models are prominent and have outstanding advantages in dealing with nonlinear problems through algorithms such as CNN, RL, and inverse RL (IRL). For example, Dai et al. [32] proposed using RL to control the longitudinal behavior of AVs. Later studies predicted and tracked the trajectories of surrounding vehicles (especially human-driven ones) to support safer car-following behavior. For example, Gong and Du [33] introduced a curve matching learning algorithm to predict the trajectories of leading human-driven vehicles, facilitating cooperative platoon control for mixed traffic flow including AVs and human-driven vehicles. Meanwhile, ML/DL approaches can also directly use images and vehicle metrics such as speed, lateral information, and steering angle as input, and output the speed and steering angle to perform car-following behavior. For example, Onieva et al. [34] combined fuzzy logic and a genetic algorithm for lateral control of the steering wheel. Li et al. [35] used abstractions of road conditions as input, and the vehicle made driving decisions based on the speed and steering output from a six-layer NN. Chen et al. [36] proposed a rough-set NN that learns car-following decisions from excellent human drivers. Tests in virtual urban traffic simulations showed that the proposed approach achieved faster convergence and higher decision accuracy than a conventional NN. RL and IRL can gradually learn from surrounding environments, with the benefit of using a reward function that evaluates how AVs ought to behave under different environments. For example, Gao et al. [37] introduced IRL to estimate the reward function of each driver and demonstrated its efficiency via simulation in a highway environment.
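The RL formulation of car following hinges on two pieces: a reward that scores each outcome, and an update rule that propagates rewards into action values. The Python sketch below pairs a hand-designed reward (penalizing deviation from a constant-time-headway gap and harsh acceleration, with a large collision penalty) with the standard tabular Q-learning update. The reward shape, its weights, the 1.5 s headway, the discrete actions, and all names are illustrative assumptions, not the designs of [32] or [37]; IRL would instead estimate reward weights from observed human driving.

```python
from collections import defaultdict


def car_following_reward(gap_m, speed_mps, accel_mps2,
                         headway_s=1.5, standstill_gap_m=2.0, accel_weight=0.1):
    """Hypothetical reward: track a time-headway gap, drive smoothly, never collide."""
    if gap_m <= 0.0:
        return -100.0  # collision
    desired_gap = standstill_gap_m + headway_s * speed_mps
    gap_error = (gap_m - desired_gap) / desired_gap  # relative gap error
    return -(gap_error ** 2) - accel_weight * accel_mps2 ** 2


def q_update(q, state, action, reward, next_state, actions, alpha=0.1, gamma=0.95):
    """One tabular Q-learning step: Q <- Q + alpha * (r + gamma * max_a' Q(s', a') - Q)."""
    best_next = max(q[(next_state, a)] for a in actions)
    td_target = reward + gamma * best_next
    q[(state, action)] += alpha * (td_target - q[(state, action)])
    return q[(state, action)]


ACTIONS = ("brake", "hold", "accelerate")  # hypothetical discrete actions
q = defaultdict(float)  # unseen state-action pairs start at 0
r = car_following_reward(gap_m=20.0, speed_mps=10.0, accel_mps2=-1.0)
print(r, q_update(q, "gap_ok", "brake", r, "gap_ok", ACTIONS))
```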

After a multilayer perceptron was used to guide vehicle steering [38], various AI approaches such as CNN, recurrent NN (RNN), LSTM, and RL have been introduced. For example, Eraqi et al. [39] introduced a convolutional LSTM that learns from camera images to decide steering wheel angles. Validation on the comma.ai dataset showed that the proposed approach can outperform CNN and residual neural network (ResNet) models. Beyond simulation, real-world tests have been conducted by Nvidia: Bojarski et al. [21] proposed PilotNet to output steering angles given road images as input, and road tests demonstrated that PilotNet can perform lane keeping regardless of the presence of lane markings.

Further, cooperative negotiation among following AVs has also been examined and found to help improve control performance. Information can pass between leading and following AVs to support more efficient decisions [40], [41]. For example, Kim et al. [40] investigated the impact of cooperative perception and the related see-through/lifted-seat/satellite views among leading and following vehicles. Given the extended perception range, situation awareness on roads can be improved. The augmented perception and situation awareness capability can contribute to better autonomous driving in terms of decision making and planning, such as early lane changing and motion planning. In addition, safety issues such as intrusion detection have been explored [42].
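A core geometric step in sharing perception between a leading and a following vehicle is re-expressing the leader’s detections in the follower’s coordinate frame, after which they can be merged with the follower’s own detections. The 2D Python sketch below assumes the follower already knows the leader’s relative pose (x, y, heading), for example from V2V messages or its own tracking. The function names and the 1 m duplicate-merging radius are hypothetical.

```python
import math


def to_follower_frame(leader_pose, detections):
    """Rigidly transform (x, y) detections from the leader's frame to the follower's.

    leader_pose = (x, y, heading_rad) of the leader, expressed in the follower frame.
    """
    lx, ly, yaw = leader_pose
    c, s = math.cos(yaw), math.sin(yaw)
    return [(lx + c * x - s * y, ly + s * x + c * y) for x, y in detections]


def merge_detections(own, shared, radius_m=1.0):
    """Union of detections, dropping shared ones that duplicate an own detection."""
    merged = list(own)
    for p in shared:
        if all(math.dist(p, q) > radius_m for q in own):
            merged.append(p)
    return merged


# Hypothetical scene: the leader is 20 m ahead in the same lane and sees a stopped car
# 15 m ahead of itself, which is occluded from the follower's own sensors.
leader = (20.0, 0.0, 0.0)
own = [(20.0, 0.0)]  # the follower can only see the leader itself
shared = to_follower_frame(leader, [(15.0, 0.0)])
print(merge_detections(own, shared))
```

The merged list contains an object 35 m ahead that the follower could not observe directly, which is the extended perception range that enables earlier lane changes or braking.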

C. Current Evaluation Practices

Current evaluation practices can be categorized into three types: 1) dataset based; 2) simulation based; and 3) field test based. Given public datasets such as the German traffic sign dataset and the KITTI dataset, the performance of AI-based approaches was examined. Meanwhile, simulation-based practices used software such as MATLAB/Simulink, TORCS, and CarSim to simulate traffic scenarios and vehicle movements. However, there is a large gap between such simulated scenarios and real-world scenarios because hidden aspects such as inclement weather, the market penetration rate of AVs, and human-driven vehicles are ignored. In addition, directly using vehicle states archived in simulation, such as operating speed and distance to other vehicles, ignores the perception errors of AVs. Finally, only a few recent approaches have been validated in simplified real-world scenarios, such as driving tests on university campus roads [20], [21].

V. Major Challenges for AI-Driven AV Applications

A. Sensor Issues Affecting the Input of AI Approaches

The success of AI approaches largely relies on the quality of the sensor data used as input. Sensors used in AV applications fall into three main categories: self-sensing, localization, and surrounding-sensing. Self-sensing uses proprioceptive sensors to measure the current state of the ego-vehicle, including the AV’s velocity, acceleration, and steering angle. Proprioceptive information is commonly determined using pre-installed measurement units, such as odometers and inertial measurement units (IMUs). Localization, using external sensors such as GPS or dead reckoning from IMU readings, determines an AV’s global and local positions. Lastly, surrounding-sensing uses exteroceptive sensors to perceive road markings, road slope, traffic signs, weather conditions, and the state (position, velocity, acceleration, etc.) of obstacles (e.g., other surrounding vehicles). Furthermore, proprioceptive and exteroceptive sensors can be categorized as active or passive sensors. Active sensors emit energy in the form of electromagnetic or acoustic waves; examples include sonar, radar, and LIDAR. On the other hand, passive sensors perceive electromagnetic waves in the environment; examples include light-based and infrared cameras. Detailed applications of sensors used in AVs are listed in Table I. The most frequently used sensors are camera vision, LIDAR, radar, and sonar sensors.

Cameras are among the most critical components for perception. Typically, the spatial resolution of a camera in AVs ranges from 0.3 to two megapixels. A camera can generate a video stream at 10–30 fps and capture important objects such as traffic lights, traffic signs, obstacles, etc., in real time [4]. A LIDAR system scans the surrounding environment periodically and generates multiple measurement points. This “cloud” of points can be further processed to compute a 3D map of the surrounding environment. Besides cameras and LIDAR, radar and ultrasonic sensors are also widely used to detect obstacles. Their detection areas can be short-range and wide-angle, mid-range and wide-angle, or long-range and narrow-angle [43]. It should be noted that most AVs integrate multiple types of sensors for two important reasons. First, fusing data from multiple sensors improves overall perception accuracy. For example, a LIDAR system can quickly detect regions of interest, and a camera system can then apply highly accurate object detection algorithms to further analyze these regions. Second, different layers of sensors with overlapping sensing areas provide additional redundancy and robustness to ensure high reliability. For example, Jo et al. [44] fused data from cameras and LIDAR sensors and achieved better results under poor lighting conditions.
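The first reason for fusion (better accuracy) can be seen in the simplest fusion rule: combining independent, unbiased estimates of the same quantity by inverse-variance weighting yields a variance no larger than that of any single input. The Python sketch below is a generic illustration, assuming independent errors with known variances; the sensor readings are hypothetical, and this is not the fusion scheme of [44].

```python
def fuse(estimates):
    """Inverse-variance weighted fusion of independent (value, variance) estimates."""
    weights = [1.0 / var for _, var in estimates]
    total = sum(weights)
    value = sum(w * x for w, (x, _) in zip(weights, estimates)) / total
    return value, 1.0 / total  # fused variance = 1 / sum(1 / var_i)


# Hypothetical range to the same obstacle: LIDAR (precise) and radar (noisier)
lidar = (24.8, 0.01)  # metres, variance in m^2
radar = (25.4, 0.25)
print(fuse([lidar, radar]))
```

The fused estimate leans toward the more precise LIDAR reading while its variance drops slightly below the LIDAR variance alone; Kalman filters apply the same weighting recursively over time.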

Abnormal conditions such as severe weather degrade the precision and accuracy of sensor outputs, which can be (partially) addressed by some of the aforementioned sensor fusion technologies. For example, perception under poor weather conditions such as snow, heavy rain, and fog is an important AV research topic, as these scenarios continue to prove problematic even for human drivers. In snowy conditions, both vision-based and LIDAR-based systems have been found to have extreme difficulties. The “heaviness” or density of the snow has been found to affect LIDAR beams and cause reflections off snowflakes that lead to “phantom obstacles” [45]. Several approaches, such as fusion of camera, LIDAR, and radar sensors [45] and taillight recognition [46], have been proposed. Perception is also problematic under other environmental conditions, such as complex urban areas and unknown environments. As such, Chen et al. [36] introduced rough set theory to deal with possible noise and outliers.
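One generic way to suppress snowflake returns before perception is a radius outlier filter: isolated LIDAR points with too few neighbors are discarded, while returns from solid objects, which form dense clusters, survive. The Python sketch below illustrates this idea under that assumption; it is not the method of [45]. The radius and neighbor threshold are hypothetical tuning parameters, and the brute-force O(n^2) neighbor search would be replaced by a spatial index such as a k-d tree in practice.

```python
import math


def radius_outlier_filter(points, radius=0.5, min_neighbors=2):
    """Keep 3D points that have at least `min_neighbors` other points within `radius`."""
    kept = []
    for i, p in enumerate(points):
        neighbors = sum(
            1 for j, q in enumerate(points) if j != i and math.dist(p, q) <= radius
        )
        if neighbors >= min_neighbors:
            kept.append(p)
    return kept


# Hypothetical scan: a compact cluster (a car's rear) plus two isolated snow returns
car = [(10.0, 0.0, 0.5), (10.0, 0.2, 0.5), (10.0, 0.4, 0.5), (10.0, 0.2, 0.7)]
snow = [(4.0, 1.5, 1.2), (6.5, -0.8, 1.9)]
print(radius_outlier_filter(car + snow))
```

A filter this simple cannot separate dense snowfall from small real objects, which is one reason the fusion approaches cited above are pursued.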

TABLE I Different Sensors Used in AV Development

As shown in Table I, the sensors chosen by different stakeholders differ noticeably. Several key questions should be answered in determining the priority of specific sensors. For example, will the sensor need to work under severe weather conditions? Should the AV sensors be cost-effective at the expense of some accuracy? In practice, for example, the AVs in the DARPA Urban Challenge were generally outfitted with multiple expensive LIDAR and radar sensors but lacked sonar sensors, since the challenge did not focus on low-speed, precise automated parking (e.g., parallel parking). In contrast, many commercial vehicles, such as the Tesla Model S and the Mercedes-Benz S-Class, include ultrasonic (sonar) sensors for automated parking but no LIDAR, to minimize cost. Infrared cameras, which are often used to detect pedestrians and other obstacles at night, are predominantly found on commercial vehicles [47].

In short, differences in sensor configurations lead to heterogeneous datasets for serving AI approaches. The quality and reliability of different sensor data should also be considered. Therefore, when designing an AI approach, issues associated with sensor inputs, such as data availability and data quality, need to be thoroughly examined.

B. Complexity and Uncertainty

AVs are complex systems that involve many perception and decision-making tasks. Implementing AI approaches for these tasks unavoidably involves uncertainty. In general, the uncertainty associated with AI approaches can be grouped into two categories: 1) uncertainty induced by data issues: almost all data collected by sensor systems contain noise that can introduce unpredictable errors into the inputs of AI models; and 2) uncertainty introduced by the implemented models [63].

As discussed in the previous section, the sensors used in AV development may not work reliably under different conditions. Sensor failures or abnormal operating states thus induce uncertainty in perceiving the environment. To reduce such uncertainty, fault detection and isolation methods have been used in some research. For example, Pous et al. [64] applied analytical redundancy and nonlinear transformation methods to assess sensor metrics for identifying faulty or deviant sensor measurements. Shafaei et al. [7] considered RL to determine abnormal inputs due to missing data, distribution shift, and environmental changes.
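Analytical redundancy compares a sensor’s reading with a value predicted from other, independent measurements and flags the sensor when the residual stays large. The Python sketch below is a simplified illustration of that idea, assuming wheel-odometry speed as the monitored signal and GPS-derived speed as the redundant estimate. The threshold, the persistence window, and all names are hypothetical rather than taken from [64].

```python
def detect_faults(measured, reference, threshold=1.0, persistence=3):
    """Flag episodes where |measured - reference| exceeds `threshold`
    for at least `persistence` consecutive samples (ignoring single glitches).

    Returns the index at which each fault episode is first confirmed.
    """
    alarms, run = [], 0
    for k, (m, r) in enumerate(zip(measured, reference)):
        run = run + 1 if abs(m - r) > threshold else 0
        if run == persistence:  # confirm once per episode
            alarms.append(k)
    return alarms


# Hypothetical speeds (m/s): a one-sample glitch at step 3, then wheel slip from step 5
odometry = [10.0, 10.1, 10.0, 13.0, 10.1, 12.5, 12.9, 13.2, 13.4, 10.2]
gps_speed = [10.0, 10.0, 10.1, 10.0, 10.0, 10.1, 10.0, 10.1, 10.0, 10.1]
print(detect_faults(odometry, gps_speed))
```

The persistence window trades detection delay against false alarms: the isolated glitch is ignored, while the sustained deviation is confirmed after three samples.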

The uncertainties brought by AI models are rooted in their functional requirements. A major assumption in AI algorithms is that the training data collected from sensors always meet the needs of the algorithms. In addition, the models are assumed to capture the operational environment continuously. These assumptions are often not guaranteed in real-world AV operations, because the operational environment is highly unpredictable and changes dynamically [7].
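One simple way to check at run time whether the training-data assumption still holds is to compare feature statistics between training and operational data. The sketch below is a deliberately crude mean-shift check, only one of many possible shift detectors; the feature names, the example values, and the 3-standard-deviation limit are hypothetical.

```python
from statistics import mean, stdev
from typing import Dict, List

def mean_shift_scores(train: Dict[str, List[float]], test: Dict[str, List[float]]) -> Dict[str, float]:
    """Distance of each feature's test mean from its training mean, in training-std units."""
    scores = {}
    for name, train_values in train.items():
        mu, sigma = mean(train_values), stdev(train_values)
        gap = abs(mean(test[name]) - mu)
        if sigma > 0:
            scores[name] = gap / sigma
        else:  # feature was constant in training: any change counts as a shift
            scores[name] = 0.0 if gap == 0 else float("inf")
    return scores

def shifted_features(train: Dict[str, List[float]], test: Dict[str, List[float]],
                     limit: float = 3.0) -> List[str]:
    """Features whose test mean lies more than `limit` training stds away."""
    return [name for name, s in mean_shift_scores(train, test).items() if s > limit]

# Hypothetical per-frame features: suburban training data vs. urban operational data
train = {"pedestrian_count": [0, 1, 0, 1, 0, 1], "speed_mps": [20, 22, 21, 23, 22, 20]}
test = {"pedestrian_count": [8, 10, 9, 11], "speed_mps": [21, 22, 20, 23]}
print(shifted_features(train, test))  # ['pedestrian_count']
```

A mean comparison misses shifts that preserve the mean (for example, a change in variance or in rare events), so it would at most complement, not replace, model-level uncertainty estimates.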

In addition, complexity and uncertainty can arise in connected AVs from malicious attacks, which can be launched from arbitrary locations and do not require physical access to the vehicles. Thus, malicious attack and intrusion detection is critical for connected AVs. AI approaches such as NN and fuzzy logic have been explored for external communication systems [42], and more efficient AI approaches are expected to be needed to handle the more complex scenarios that arise when many AVs are connected.
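To illustrate how fuzzy logic can grade incoming messages instead of applying hard accept/reject rules, the sketch below scores a received vehicle-to-vehicle (V2V) message for plausibility. It is not the approach of [42]: the one-dimensional message fields, the ramp-shaped membership functions, and their breakpoints are assumptions chosen for illustration.

```python
def ramp(x: float, low: float, high: float) -> float:
    """Fuzzy membership rising linearly from 0 at `low` to 1 at `high`."""
    if x <= low:
        return 0.0
    if x >= high:
        return 1.0
    return (x - low) / (high - low)

def suspicion(prev_pos: float, pos: float, speed: float, accel: float, dt: float) -> float:
    """Degree (0..1) to which a received V2V message looks implausible.

    prev_pos, pos: positions (m) along the lane from consecutive messages;
    speed: reported speed (m/s); accel: reported acceleration (m/s^2); dt: s.
    """
    jump_error = abs(abs(pos - prev_pos) - speed * dt)  # position change vs. claimed speed
    inconsistent_motion = ramp(jump_error, 1.0, 5.0)
    implausible_accel = ramp(abs(accel), 4.0, 10.0)
    return max(inconsistent_motion, implausible_accel)  # fuzzy OR (maximum)

print(suspicion(0.0, 2.0, 20.0, 0.5, 0.1))  # consistent message -> 0.0
print(suspicion(0.0, 5.0, 20.0, 0.0, 0.1))  # 3 m mismatch -> 0.5
```

Taking the maximum implements fuzzy OR, so a message is suspicious if either its motion is inconsistent or its reported acceleration is physically implausible; a detector built on top could threshold or accumulate this degree over time.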

C. Complex Model Tuning Issues

Because of their relative simplicity, some AV studies have primarily used ML approaches such as particle filters, random forests, and SVM. Given the many successful applications of DL approaches in other transportation areas such as traffic flow prediction, some researchers have explored emerging AI approaches such as CNN, LSTM, and DBN [23], [65]–[67]. However, several issues remain when using advanced learning algorithms for real-time AV decision making. First, DL and RL approaches often employ more complex model structures, so parameter calibration is computationally expensive. There is currently little guidance on selecting model hyper-parameters such as the number of hidden layers, the number of hidden units, and their initial weight values. As a result, end users must design a suitable tuning strategy through costly trial and error, for example when training a model that decides steering-wheel angles. Second, supervised learning algorithms such as SVM and NN cannot learn well from unlabeled environments. Moreover, the uncertainty issue mentioned earlier becomes especially severe when the training data differ significantly from the testing data, which is expected in real-world traffic conditions. Training an AV to perceive its surroundings in suburban/rural areas and then testing it in complex urban scenarios can raise safety issues because of scenarios not covered in training (e.g., the presence of pedestrians). Chen et al. [36] used rough sets to reduce the influence of noisy and uncertain data on NN models, but learning to handle unexpected scenes such as mobile work zones remains an issue. Last but not least, the transferability of models trained on specific scenarios remains a challenge for applying AI approaches across all AV applications.
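Random search is one simple, commonly used alternative to manual trial and error for the hyper-parameters listed above. The sketch below samples configurations of hidden layers, hidden units, and initial weight scale. `validation_loss` is a hypothetical synthetic stand-in for training a steering-angle model and measuring its validation error, so the search space and the loss values are illustrative only.

```python
import math
import random
from typing import Callable, Dict, List, Tuple

SEARCH_SPACE: Dict[str, List[float]] = {
    "hidden_layers": [1, 2, 3, 4, 5],
    "hidden_units": [16, 32, 64, 128, 256],
    "init_scale": [0.01, 0.1, 1.0],
}

def validation_loss(cfg: Dict[str, float]) -> float:
    """Hypothetical stand-in: in practice, train the model with `cfg` and
    return its validation error. This synthetic surface is minimized at
    3 layers, 64 units, and an initial weight scale of 0.1."""
    return ((cfg["hidden_layers"] - 3) ** 2 * 0.1
            + (math.log2(cfg["hidden_units"]) - 6) ** 2 * 0.05
            + abs(math.log10(cfg["init_scale"]) + 1) * 0.2)

def random_search(space: Dict[str, List[float]],
                  objective: Callable[[Dict[str, float]], float],
                  trials: int = 30, seed: int = 0) -> Tuple[Dict[str, float], float]:
    """Evaluate `trials` randomly sampled configurations and keep the best one."""
    rng = random.Random(seed)
    best_cfg, best_loss = None, math.inf
    for _ in range(trials):
        cfg = {name: rng.choice(values) for name, values in space.items()}
        loss = objective(cfg)
        if loss < best_loss:
            best_cfg, best_loss = cfg, loss
    return best_cfg, best_loss

best_cfg, best_loss = random_search(SEARCH_SPACE, validation_loss)
print(best_cfg, best_loss)
```

Each trial is independent, so trials can be distributed across machines; the remaining cost is one full training run per configuration, which is what makes tuning DL and RL models expensive.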

To learn from drivers and extract key factors, RL approaches have also been explored [37], [68]–[75]. RL represents vehicle metrics as states and defines rewards and policies to control AV behavior. Using RL requires knowledge of the reward function, which must be carefully designed. An alternative is to learn the optimal driving strategy from demonstrations of the desired driving behavior. For example, Isele et al. [72] proposed a deep RL framework for navigating AVs through intersections with occlusions, in which a deep Q-network learns the relationship between inputs and rewards. Although expert driving behavior can be approximately recovered with such approaches, the mapping between the optimal policy/reward and the features is ambiguous. Special attention is needed to learn complicated driving behavior with preferences for certain actions. In short, more effort is needed to enhance AI approaches for specific autonomous applications and desired maneuvers.
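The state/reward/policy structure described above can be illustrated with tabular Q-learning, the non-deep predecessor of the deep Q-network. The environment below is a hypothetical toy, not the setup of Isele et al. [72]: the AV can "creep" forward or "go", and going is assumed to end in a collision (reward -1) unless the vehicle has crept far enough to see past the occlusion (reward +1); each creep step costs 0.05. The learning rate, discount factor, and exploration rate are placeholder values.

```python
import random
from typing import List, Tuple

CREEP, GO = 0, 1
ACTIONS = ("creep", "go")
N_STATES = 3  # creep positions; the view past the occlusion is clear only at state 2

def step(state: int, action: int) -> Tuple[int, float, bool]:
    """Hypothetical toy occluded-intersection model: (next_state, reward, done)."""
    if action == GO:
        return state, (1.0 if state == N_STATES - 1 else -1.0), True
    return min(state + 1, N_STATES - 1), -0.05, False

def q_learning(episodes: int = 500, alpha: float = 0.5, gamma: float = 0.9,
               epsilon: float = 0.2, seed: int = 0) -> List[List[float]]:
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        s = 0
        for _ in range(20):  # cap the episode length
            if rng.random() < epsilon:
                a = rng.randrange(2)  # explore
            else:
                a = max((CREEP, GO), key=lambda act: q[s][act])  # exploit
            s2, r, done = step(s, a)
            target = r if done else r + gamma * max(q[s2])
            q[s][a] += alpha * (target - q[s][a])  # temporal-difference update
            s = s2
            if done:
                break
    return q

def greedy_rollout(q: List[List[float]], max_steps: int = 10) -> List[str]:
    """Follow the learned greedy policy from the start state."""
    s, plan = 0, []
    for _ in range(max_steps):
        a = max((CREEP, GO), key=lambda act: q[s][act])
        plan.append(ACTIONS[a])
        s, _, done = step(s, a)
        if done:
            break
    return plan

print(greedy_rollout(q_learning()))
```

Replacing the table `q` with a neural network that maps sensor inputs to action values gives the deep Q-network variant; the update target r + gamma * max Q(s', .) stays the same. The example also shows why reward design matters: changing the creep cost or the collision penalty changes which behavior is learned.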

D. Solving the Hardware Problem

AI implementations in AV applications are computationally demanding and therefore rely heavily on the computing devices [4]. Diverse computing architectures have been proposed, including multicore central processing unit (CPU) systems, heterogeneous systems, and distributed systems. Multiple computing devices are usually integrated into an AV system. For example, the AV designed by Carnegie Mellon University deployed four Intel Extreme Processor QX9300s on mini-ITX motherboards equipped with CUDA-compatible graphics processing units (GPUs) [76]. Meanwhile, BMW deployed a standard personal computer (PC) and a real-time embedded computer (RTEPC) [77]: the PC fused sensor outputs to perceive the surrounding environment, and the RTEPC was connected to the actuators for steering, braking, and throttle control [77]. While these hardware systems have been successfully applied in real-time autonomous driving, their field-test performance (measured by accuracy, throughput, latency, power, etc.) and cost (measured by price) remain noncompetitive for commercial deployment. Hence, further effort is needed to advance hardware implementations that address both the technical challenges and the market needs of AI applications in AV development.

The major challenges for such computing devices concern their underlying processors: CPUs, GPUs, and field-programmable gate arrays (FPGAs). GPUs were originally designed for computer graphics and image processing, e.g., to run high-resolution 3D computer games. With the emergence of DL, GPUs have drawn wide attention because their inherently parallel structure achieves substantially higher efficiency than CPUs when processing large volumes of data in parallel. They are therefore considered promising devices for implementing AI approaches in AV applications. Nonetheless, GPUs often consume considerable energy, which adds load to the power system and complicates heat dissipation. Great efforts have been made toward the commercial application of GPUs. For instance, NVIDIA released its advanced mobile processor Tegra X1, which integrates a 256-core Maxwell GPU and a 4-core ARM CPU [4]. Tegra X1 has already been deployed in NVIDIA’s DRIVE PX Auto-Pilot platform for autonomous driving.

On the other hand, an FPGA is a reconfigurable integrated circuit that can implement diverse digital logic functions. FPGAs can be programmed with application-specific computing architectures for different purposes, which reduces non-recurring engineering cost. This yields higher computing efficiency and lower energy consumption than CPUs/GPUs designed for general-purpose computing. For instance, Altera released its Arria 10 FPGAs, manufactured in 20 nm technology, which consume up to 40 percent less power than the previous generation of FPGAs. Meanwhile, their built-in digital signal processing (DSP) units enable wide applications such as radar design and motor control. Table II compares the energy efficiency of CPU, GPU, and FPGA accelerators for AlexNet, suggesting that the FPGA outperforms the CPU and GPU in energy efficiency, measured as throughput over power. New system architectures have also been developed to facilitate efficient implementation of DL approaches. For instance, Google proposed the tensor processing unit (TPU) and reported that it runs on average 15 to 30 times faster than its contemporary CPUs and GPUs [78], with about 30 to 80 times higher TOPS/Watt [79]. Nevertheless, large gaps remain in hardware for supporting real-time AI implementations in large-scale commercial AV applications.
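The energy-efficiency measure used in Table II, throughput over power, can be written explicitly. Below, T denotes throughput (e.g., in GOP/s), P denotes power in watts, and η is the resulting efficiency; these symbols are introduced here for illustration and are not taken from Table II. TOPS/Watt, the metric cited for the TPU [79], is the same ratio with T expressed in tera-operations per second.

```latex
\eta = \frac{T}{P}\ \left[\frac{\mathrm{GOP/s}}{\mathrm{W}}\right],
\qquad
\frac{\eta_{\mathrm{FPGA}}}{\eta_{\mathrm{GPU}}}
  = \frac{T_{\mathrm{FPGA}}}{T_{\mathrm{GPU}}}\cdot\frac{P_{\mathrm{GPU}}}{P_{\mathrm{FPGA}}}
```

The ratio form shows that a device with lower raw throughput can still be more energy efficient if its power draw is lower by a larger factor, which is how an FPGA can trail a GPU in throughput yet lead in Table II.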

TABLE II Comparison of CPU, GPU, and FPGA Accelerators for CNN

VI. Opportunities and Future Research

Beyond examining the issues of AI use in AV systems in the existing literature (e.g., the studies shown in Table III), this paper also explores potential opportunities and research directions that deserve further investigation.

A. Emerging Real-Time High-Definition Maps Associated With Big Data and HPC

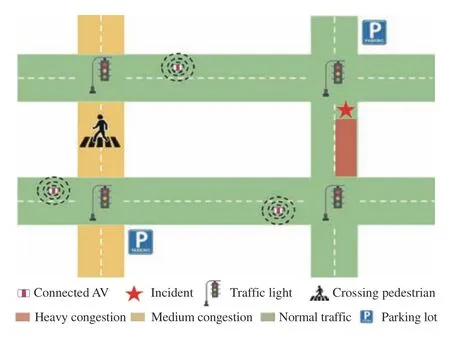

With the development of sensor technologies, many data sources are becoming available, providing opportunities to revisit the perception and decision making of AVs in diverse environments. As illustrated in Fig. 3, AVs can collect high-frequency data via sensors such as radar, LIDAR, and cameras to better perceive their surroundings. Such big data have shown potential to improve AV performance under complicated conditions [44]. Meanwhile, the development of high performance computing (HPC) devices helps accelerate the commercial implementation of complex AI algorithms on AVs.

Fig. 3. AV driving with HD maps.

Given the incorporation of such big data, HPC, and other relevant information including infrastructure sensor measurements, a promising application is real-time high-definition (HD) maps that serve as a key input to AI approaches, interact with AVs, and reflect real-world scenarios. HD mapping startups have turned to crowdsourcing, sought to lower the cost of HD mapping software and hardware, or focused solely on technical services for HD map development. Diverse crowdsourcing approaches have been introduced to construct HD maps. For example, lvl5 applies computer vision technology and encourages Uber drivers to use its app Payver to collect data, mainly by recording videos in exchange for rewards [84]. AVs can then use such HD maps as an input to AI approaches and augment functions such as high-precision localization, environment perception, planning and decision making, and real-time cloud navigation services. For example, AVs can quickly acquire information on traffic signal lights and surrounding vehicles via HD maps, then use DL and/or RL approaches to implement direct perception and decision making at reduced software and hardware cost. In addition, AVs can make informed path-planning decisions when driving in unfamiliar environments. As shown in Fig. 3, AVs can quickly select a suitable parking lot by taking information such as road congestion and distance into consideration, and drive toward the destination assisted by markings in the HD map. Thus, an efficient and valid solution for building real-time digital maps is expected to leverage the value of big data and HPC and to significantly improve the quality of data serving as input to AI approaches, further increasing the performance of AVs.
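The congestion-aware parking-lot selection described above can be illustrated with a shortest-path search. This is a minimal sketch, not a method from [84] or from any HD-map product: it assumes the HD map has been reduced to a hypothetical road graph whose edges carry a length and a congestion multiplier (1.0 for free flow), and it uses Dijkstra's algorithm to rank candidate lots by congestion-weighted travel cost.

```python
import heapq
import math
from typing import Dict, List, Optional, Tuple

# node -> [(neighbor, length_m, congestion_factor)], with congestion_factor >= 1.0
Graph = Dict[str, List[Tuple[str, float, float]]]

def dijkstra(graph: Graph, source: str) -> Dict[str, float]:
    """Lowest congestion-weighted cost from `source` to every reachable node."""
    cost = {source: 0.0}
    heap = [(0.0, source)]
    while heap:
        c, node = heapq.heappop(heap)
        if c > cost.get(node, math.inf):
            continue  # stale heap entry
        for nbr, length_m, congestion in graph.get(node, []):
            new_cost = c + length_m * congestion  # congestion inflates the edge cost
            if new_cost < cost.get(nbr, math.inf):
                cost[nbr] = new_cost
                heapq.heappush(heap, (new_cost, nbr))
    return cost

def choose_parking_lot(graph: Graph, source: str, lots: List[str]) -> Optional[str]:
    """Pick the reachable lot with the lowest congestion-weighted cost."""
    cost = dijkstra(graph, source)
    reachable = [lot for lot in lots if lot in cost]
    return min(reachable, key=cost.__getitem__) if reachable else None

road_graph: Graph = {
    "car": [("A", 200, 1.0), ("B", 150, 3.0)],  # the road to B is heavily congested
    "A": [("lot_north", 300, 1.0)],
    "B": [("lot_east", 100, 1.0)],
}
print(choose_parking_lot(road_graph, "car", ["lot_north", "lot_east"]))  # lot_north
```

In this toy graph, lot_east is closer in distance (250 m versus 500 m), but its congested approach raises its cost to 550, so lot_north is chosen. A real-time HD map would update the congestion factors continuously, and the search would simply be rerun.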

B. Enhanced Simulation Testbed with AR/VR

Testing various AI approaches for AV applications is time-consuming and expensive. This creates opportunities for leveraging simulation models to generate extensive data and test the developed AI algorithms. Much of the existing simulation-based AV research uses platforms such as MATLAB and CarSim to simulate AVs and road environments. However, such simulation testbeds lack interaction with components such as crossing pedestrians and drivers of ordinary vehicles. These low-fidelity testbeds cannot generate the high-quality, realistic data needed to train AI models to make better decisions in various scenarios. For example, the gap between the reactions of simulated pedestrians/drivers and real human actions becomes crucial when analyzing the potential safety impacts of the designed AI algorithms. Alternatively, it is promising to leverage augmented reality (AR)/virtual reality (VR) to capture realistic human behaviors for training AI algorithms and estimating the potential safety issues of AVs [85], [86]. For example, researchers can simulate a scenario in which AVs drive through a downtown area with many pedestrians wearing VR/AR devices. This creates rich data for training AI algorithms to understand such scenes. The following questions may benefit from more realistic simulation results: How should an AI algorithm perceive such complex surroundings and recommend decisions such as yielding to crossing pedestrians, identifying aggressive ordinary vehicles, and path planning? How should AVs learn from people’s behavior and evolve their AI approaches to reduce collision risk? In summary, an accelerated simulation platform such as the one in Fig. 4 is beneficial for developing extensive scenarios to test AI approaches prior to field implementation.

Fig. 4. Enhanced simulation platform for accelerated testing.

In general, mixed reality (MR) prototyping with AR and VR provides a safe testing platform for evaluating the performance of AI algorithms adopted by AVs, which are yet to be perfected. For example, researchers have used MR to study human-machine teaming and have successfully paired humans with autonomous aerial vehicles [87]. The level of realism can vary and can be improved with geo-specific terrains. AR/VR can also be used to test risky scenarios without exposing humans to danger and to optimize the relevant AI approaches for AVs. In particular, this may facilitate the use of RL for AVs to imitate human drivers’ decision making. For example, AVs can use RL approaches to interact with actual pedestrians/drivers wearing AR/VR headsets and optimize their steering angles and velocity to reduce collision risk. Human participants’ behavior can be collected and used as training/testing data to support AI-based AV decision making toward enhanced safety and operational performance. Many of the data issues associated with the use of AI algorithms are expected to be mitigated with AR/VR-generated simulation data.
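The text does not specify how collision risk would be quantified in such RL experiments. One common surrogate is time-to-collision (TTC), and the sketch below shows how it could be turned into a reward term. The penalty shape and the 2 s critical threshold are assumptions for illustration, not values from [87] or the other cited studies.

```python
import math

def time_to_collision(gap_m: float, ego_speed: float, obstacle_speed: float) -> float:
    """TTC (s) for an ego vehicle closing on an obstacle ahead on the same path.

    obstacle_speed is the obstacle's speed component along the ego path
    (0 for a pedestrian standing or crossing in front). Returns inf if the
    gap is not closing.
    """
    closing_speed = ego_speed - obstacle_speed
    return gap_m / closing_speed if closing_speed > 0 else math.inf

def risk_penalty(ttc: float, critical_s: float = 2.0) -> float:
    """Hypothetical reward term: 0 when TTC >= critical_s, falling to -1 as TTC -> 0."""
    return 0.0 if ttc >= critical_s else -(critical_s - ttc) / critical_s

# Pedestrian in the lane 10 m ahead of an AV travelling at 10 m/s
ttc = time_to_collision(10.0, 10.0, 0.0)
print(ttc, risk_penalty(ttc))  # 1.0 -0.5
```

With AR/VR participants, the gap and speeds would come from tracked human motion rather than scripted agents, which is precisely the data the simulated testbeds above lack.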

C. 5G Connected AVs

Connected AVs (e.g., autonomous truck platoons) can implement cooperative perception and decision making and achieve better performance [40], [41]. Communication technologies such as dedicated short-range communications (DSRC) lack the bandwidth to guarantee high-data-rate links and are prone to malicious attacks. The sub-6 GHz bands used by fourth-generation long term evolution-advanced (4G LTE-A) systems are highly congested, leaving limited capacity for AVs. Such limited communication capability can impede the implementation of AI algorithms that require large volumes of real-time data collection and transmission. This has motivated the 5G community to leverage the underutilized mmWave bands of 10–300 GHz [88]. It is well known that mmWave bands suffer from high path loss and penetration loss, which have hindered their wide application. However, recent studies have shown the improved potential of mmWave bands by taking advantage of directional transmission, beamforming, and denser base stations [88]. This will be important for providing reliable and sufficient connectivity for AVs.
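The "high path loss" of mmWave bands can be made concrete with the free-space (Friis) path-loss formula, FSPL = 20 log10(4πdf/c). The sketch below compares 3.5 GHz and 28 GHz at 100 m; both frequencies and the distance are example values chosen for illustration, and penetration loss, which the text also mentions, is not modeled.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s

def fspl_db(distance_m: float, freq_hz: float) -> float:
    """Free-space path loss between isotropic antennas (Friis), in dB."""
    return 20 * math.log10(4 * math.pi * distance_m * freq_hz / SPEED_OF_LIGHT)

# Example bands chosen for illustration: 3.5 GHz (sub-6 GHz) vs. 28 GHz (mmWave)
for f in (3.5e9, 28e9):
    print(f"{f / 1e9:5.1f} GHz at 100 m: {fspl_db(100, f):6.1f} dB")
extra = fspl_db(100, 28e9) - fspl_db(100, 3.5e9)
print(f"extra loss: {extra:.2f} dB")  # 20*log10(8) = 18.06 dB
```

The 8-fold increase in frequency costs 20 log10(8) ≈ 18 dB regardless of distance. This loss arises because an isotropic antenna's effective aperture shrinks with wavelength; the same shrinkage lets many more antenna elements fit into a given area, which is why directional transmission and beamforming can recover much of it.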

Given the abundant bandwidth, multi-Gbps links have been highlighted as necessary to enable 5G communication among AVs [89]. With 5G communication, large volumes of data collected by sensors such as radar and LIDAR are expected to be transmitted with relatively low latency. Thus, 5G-connected AVs are expected to better support AI algorithms for environment sensing, perception, and decision making [88]. As illustrated in Fig. 5, an obstacle (incident) in the left lane is perceived by an AV, so the following AVs can acquire and respond to this safety information faster with their built-in algorithms. Each AV serves as an intelligent agent that negotiates with the others, so a platoon of AVs communicating via 5G technology can constitute a multi-agent system. Several challenging issues must still be addressed to implement such cooperative AVs. For example, which AI approach should be used to select the optimal beam to compensate for the high path loss? How should AVs build network routes and transmit messages between each other? How should AVs make cooperative decisions such as lane changing, deceleration, and acceleration? How should a multi-agent system composed of AV agents apply AI approaches to learn from the environment and negotiate among agents? Upcoming 5G applications will undoubtedly stimulate further thinking about these questions in AV development.
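For the beam-selection question above, an exhaustive sweep over a fixed codebook is the classical, non-learning baseline; AI-based approaches would aim to predict the best beam index from side information (e.g., vehicle position) instead of testing every beam. The sketch assumes a 16-element uniform linear array with half-wavelength spacing, a single line-of-sight path, and a DFT-style codebook; none of these details come from [88] or [89].

```python
import cmath
import math
from typing import List, Tuple

def steering_vector(n: int, sin_theta: float) -> List[complex]:
    """Response of an n-element, half-wavelength-spaced ULA to a plane wave from theta."""
    return [cmath.exp(1j * math.pi * k * sin_theta) for k in range(n)]

def dft_codebook(n: int) -> Tuple[List[List[complex]], List[float]]:
    """n unit-norm beams whose pointing directions are uniformly spaced in sin(theta)."""
    directions = [-1 + (2 * i + 1) / n for i in range(n)]
    beams = [[x / math.sqrt(n) for x in steering_vector(n, s)] for s in directions]
    return beams, directions

def beam_gain(weights: List[complex], sin_theta: float) -> float:
    """Power gain |w^H a(theta)|^2; equals n when the beam points exactly at the user."""
    a = steering_vector(len(weights), sin_theta)
    return abs(sum(w.conjugate() * x for w, x in zip(weights, a))) ** 2

def select_beam(codebook: List[List[complex]], sin_theta: float) -> Tuple[int, float]:
    """Exhaustive beam sweep: try every codeword and keep the strongest."""
    gains = [beam_gain(w, sin_theta) for w in codebook]
    best = max(range(len(gains)), key=gains.__getitem__)
    return best, gains[best]

codebook, directions = dft_codebook(16)
index, gain = select_beam(codebook, directions[5])
print(index, round(10 * math.log10(gain), 2))  # 5 12.04
```

The matched beam yields an array gain of 10 log10(16) ≈ 12 dB, which offsets part of the extra free-space loss at mmWave frequencies. The sweep's cost grows with the codebook size, and that overhead is what a learned beam predictor would try to avoid in fast-moving platoons.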

Fig. 5. Connected AVs with 5G communication.

VII. Conclusions

Many studies on AI-related work have been published in the AV research community. Despite substantial research efforts, challenging problems remain in using AI to support AVs’ perception, localization and mapping, and decision making. Many AI approaches, including ML, DL, and RL solutions, have been applied to help AVs better sense their surroundings and make human-like decisions in situations such as car following, steering, and path planning. However, such applications have been inherently limited by data availability and data quality, complexity and uncertainty, complex model tuning, and hardware restrictions, and therefore continued effort is needed in these avenues. This paper has provided a summary of current practices in leveraging AI for AV development. More importantly, it has discussed some of the challenging issues in using AI to meet the functional needs of AVs. Future efforts that can help augment the use of AI for AV development have been identified in the context of emerging technologies: 1) big data, HPC, and high-resolution digital maps for enhanced data collection and processing; 2) AR/VR-enhanced platforms for constructing accelerated test scenarios; and 3) 5G for low-latency connections among AVs. These research directions hold considerable promise for the development of AVs. Combined with refinements of AI approaches, more opportunities can be expected to emerge and offer new insights into commercial and widespread AV applications in the real world. The business, economic, and social impacts accompanying the evolution of AI and AVs therefore also deserve special attention.

Acknowledgements

The authors thank Prof. Li Da Xu at Old Dominion University (ODU) and Prof. MengChu Zhou at New Jersey Institute of Technology (NJIT) for their comments and suggestions, which greatly helped develop this paper.

APPENDIX

TABLE III Summary of Identified Key References


IEEE/CAA Journal of Automatica Sinica, 2020, Issue 2
