An Integration Testing Framework and Evaluation Metric for Vulnerability Mining Methods

Jin Li, Jinfu Chen*, Minhuan Huang, Minmin Zhou, Wanggen Xie, Zhifeng Zeng, Shujie Chen, Zufa Zhang

National Key Laboratory of Science and Technology on Information System Security, Beijing Institute of System Engineering, Beijing, 000, China

School of Computer Science and Communication Engineering, Jiangsu University, Zhenjiang, 03, China

I. INTRODUCTION

A vulnerability is a functional or security logic defect in a system, covering any factor that may threaten or damage the security of a computer system. It can also be regarded as a deficiency in the hardware, software, protocol implementation, or security policy of a computer system [1]. Software vulnerabilities usually arise from intentional or unintentional errors made during software design and implementation, which leave the system potentially insecure. Vulnerabilities can be divided into functional logic vulnerabilities and security logic vulnerabilities [2]. A functional logic vulnerability adversely affects the normal function of the software, for example by producing erroneous execution results or an erroneous execution process. A security logic vulnerability usually does not affect the normal function of the software. However, once an attacker successfully exploits it, the software may run incorrectly or even execute malicious code. Examples include buffer overflow vulnerabilities, cross-site scripting (XSS) vulnerabilities, and SQL injection vulnerabilities [3][4].

Nowadays, the main factors that affect the security of information systems are the loopholes in operating systems, application software, and communication security protocols [5]. By exploiting these vulnerabilities, attackers can attack an information system and cause varying degrees of damage. At the same time, as society adopts information technology in all areas, a large variety of application software has emerged, and its quality varies greatly. Moreover, most software is not rigorously tested or reviewed before delivery and contains a large number of potentially serious, exploitable vulnerabilities [6][7]. These vulnerabilities in applications are the biggest security risk to information systems. For a variety of reasons, the existence of vulnerabilities is inevitable. Once attackers find a serious vulnerability, they are likely to exploit it to gain unauthorized access and threaten or damage the computer system. Discovering and repairing these loopholes before attackers exploit them can effectively reduce the threat on the network [8][9]. Therefore, it is of great significance to explore and analyze system security loopholes. Research on loopholes is divided into two parts: vulnerability mining and vulnerability analysis. Vulnerability mining aims to find as many potential vulnerabilities in software as possible by combining various technologies and tools. Vulnerability analysis examines the details of the discovered loopholes in depth and paves the way for exploiting and remediating them [10][11].

Therefore, a more accurate and complete analysis and evaluation of these tools and methods is necessary. In this paper, we propose an evaluation metric for mining methods and design an integration testing framework based on different software environments and scenarios. The framework tests, evaluates, and compares typical existing vulnerability detection methods and tools, and generates testing analysis reports. The final testing results can guide practitioners in selecting the most appropriate and effective methods or tools for real vulnerability detection work.

The remainder of this paper is organized as follows. Typical software vulnerability detection methods are described in Section II. Software vulnerability detection tools are introduced in Section III. The evaluation metric is presented in Section IV. The integration testing framework is presented in Section V. The results of our empirical studies and experimental analysis are reported in Section VI. The conclusion and future work are presented in Section VII.

II. SOFTWARE VULNERABILITIES DETECTION METHODS

The mainstream techniques for detecting software vulnerabilities currently include fuzzing, security scanning, static analysis, binary comparison, and dynamic analysis. These vulnerability detection methods are introduced in detail below.

2.1 Fuzzing

Fuzzing [12] is a security testing approach that injects invalid or random inputs into a program in order to trigger unexpected behavior and identify errors and potential vulnerabilities. There is no single best fuzzing method, because fuzzing has no precise rules; its efficiency depends on the creativity of the author. The key idea is to generate tests that are able to crash the target and to choose appropriate tools to monitor the process. Fuzzed data can be generated in several ways. It can be generated randomly by modifying correct data, without any knowledge of the application details. This method is known as black-box fuzzing and was the first fuzzing concept. White-box fuzzing, on the other hand, generates tests assuming complete knowledge of the application code and behavior. The third type, gray-box fuzzing, stands between the two and aims to take advantage of both, using only minimal knowledge of the target application’s behavior. It is thus regarded as the most appropriate method.
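The black-box variant described above can be illustrated with a minimal Python sketch. The target `toy_parser`, the seed bytes, and the helper names `mutate` and `fuzz` are hypothetical and chosen only for illustration; treating any raised exception as a crash stands in for the monitoring tool.

```python
import random


def mutate(seed: bytes, rng: random.Random, max_flips: int = 4) -> bytes:
    """Return a copy of seed with a few randomly chosen bytes overwritten."""
    data = bytearray(seed)
    for _ in range(rng.randint(1, max_flips)):
        pos = rng.randrange(len(data))
        data[pos] = rng.randrange(256)
    return bytes(data)


def toy_parser(data: bytes) -> int:
    """Hypothetical target: the first byte declares the payload length."""
    declared = data[0]
    payload = data[1:]
    if declared > len(payload):
        # Stands in for a crash such as an out-of-bounds read.
        raise IndexError("declared length exceeds payload")
    return sum(payload[:declared])


def fuzz(target, seed: bytes, iterations: int, rng_seed: int = 0):
    """Run target on mutated inputs and collect the inputs that raise."""
    rng = random.Random(rng_seed)
    crashes = []
    for _ in range(iterations):
        sample = mutate(seed, rng)
        try:
            target(sample)
        except Exception as exc:  # the monitor: any exception counts as a crash
            crashes.append((sample, type(exc).__name__))
    return crashes


# The seed is a valid input: it declares 3 payload bytes and carries 3.
crashes = fuzz(toy_parser, seed=b"\x03abc", iterations=500)
```

No knowledge of the parser is used when generating inputs; a gray-box fuzzer would additionally feed back some observation of the target, such as coverage, to steer the mutations.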

Bekrar et al. [12] suggested in their paper that fuzz testing is the most effective way to identify software vulnerabilities. It allows the robustness of the software to be tested against invalid inputs that play on implementation limits or data boundaries.

A high number of random combinations of such inputs are sent to the system through its interfaces. Although fuzzing was identified as a fast technique which detects real errors, the paper also enumerated some drawbacks that if corrected can improve its efficiency.

The main drawbacks of fuzz testing include poor coverage, which causes many errors to be missed, and the uneven quality of the generated tests. Enhancing fuzzing with advanced approaches such as data tainting and coverage analysis would improve its efficiency.

2.2 Security scanning technology

Security scanning, also known as vulnerability assessment, is another method for detecting vulnerabilities in software applications. Vulnerability scanning checks the port information of a computer to find out whether an exploitable vulnerability exists on a port [13]. The results only indicate which attacks are possible and which ports may be exploited and invaded by hackers [14]. In essence, it is a security evaluation of the computer system, and vulnerability scanning technology is built upon port scanning technology. From the perspective of intrusion analysis and vulnerability collection, the vast majority of these technologies target a particular network service, and in particular a specific port.

The principle of vulnerability scanning is to check whether known security vulnerabilities exist on the target host by initiating a variety of simulated attacks. After port scanning, we obtain the open ports on the target host and the network services running on them. The next task is to match this information against the vulnerability database provided by the network vulnerability scanning system in order to find any security vulnerability that meets the matching conditions. Security scanning technology is by now very mature [15].
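The matching step described above can be sketched as follows. This is a simplified illustration only: the scan result is given as data rather than collected from a live host, and the `Service` record, the `VULN_DB` entries, and the `EXAMPLE-…` identifiers are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Service:
    port: int
    name: str
    version: str


# Hypothetical vulnerability database:
# (service name, affected version) -> vulnerability identifier.
VULN_DB = {
    ("ftpd", "1.0"): "EXAMPLE-0001",
    ("httpd", "2.2"): "EXAMPLE-0002",
}


def match_vulnerabilities(services, vuln_db):
    """Return (port, service, identifier) for every service found in the database."""
    findings = []
    for svc in services:
        vuln_id = vuln_db.get((svc.name, svc.version))
        if vuln_id is not None:
            findings.append((svc.port, svc.name, vuln_id))
    return findings


# Stand-in for the output of a port scan: open ports and the services on them.
scan_result = [
    Service(21, "ftpd", "1.0"),
    Service(22, "sshd", "9.0"),
    Service(80, "httpd", "2.4"),
]
findings = match_vulnerabilities(scan_result, VULN_DB)
```

Only the FTP service matches here, because the HTTP service runs a version that the hypothetical database does not list. This also shows why such a scanner can only report known vulnerabilities.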

A security scanner is a program that automatically detects remote or local host security vulnerabilities by collecting information from the system. The security scanner uses simulated attacks to check for known security vulnerabilities that may exist in the target. The target can be any of a variety of objects, such as workstations, servers, switches, and databases. In general, the security scanner provides the system administrator with a thorough and reliable security analysis report based on the scan results, which serves as an important basis for improving the overall level of network security. Among the various targets, security scanning technology has been applied effectively to vulnerability detection in web applications.

Black-box web application vulnerability scanners are automated tools that probe web applications for security vulnerabilities, without access to source code used to build the applications. Though there are some intrinsic limitations in black box tools, in comparison with code walkthrough, automated source code analysis tools, and procedures carried out by red teams, automated black-box tools also have advantages. Black-box scanners simulate the external attacks from hackers. They also provide cost-effective methods for detecting a range of important vulnerabilities and may configure and test defenses such as web application firewalls. The effectiveness of blackbox web scanners is directly related to the web developers’ ability and interest in detecting vulnerabilities. This paper [16] measures its effectiveness around the following factors:

• Elapsed scanning time

• Scanner-generated network traffic

• Scanners for vulnerability detection

• False positive performance.

We therefore focused primarily on the detection performance of the scanners as a group of different vulnerability classifications. We observed that no individual scanner always had the best performance in every vulnerability classification. Often, scanners with a leading detection rate in one vulnerability category lagged in other categories. For example, the leading scanner in both the XSS and SQL Injection categories was among the bottom three in detecting Session Management vulnerabilities, while the leader for Session Vulnerabilities lagged in XSS and SQLI. This makes us believe that scanner vendors may benefit from a cross-vendor pollination as a community. Reiterating briefly from the false positive results, we did find that scanners with high detection rates were able to effectively control false positives, and that scanners with low detection rates could produce many false positives.

2.3 Static analysis

In recent years, static analysis technology has rapidly developed from early lexical analysis to formal verification method and its detection capability has also been improved [17]. It is an effective detection technique that can be used in detecting vulnerabilities in software application to improve the security of the software.

The techniques currently used in static code analysis include lexical analysis, type inference, theorem proving, data flow analysis, model checking, and symbolic execution. The key aspect of static analysis is that the code of the program under test is not executed; loopholes are detected through direct analysis of the code. It is simple and fast, and can be used effectively to detect bugs in code. Continued in-depth research on static analysis algorithms, models, and tools has made it a more powerful technique. The first static analysis tool, FlexeLint, dates from the 1980s and used pattern matching to identify defects. In recent years, a number of complex and powerful static analysis tools have appeared. However, few studies have analyzed and compared different static analysis tools, which hinders their promotion and application.

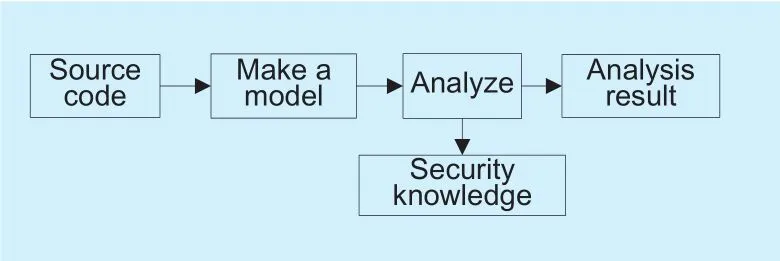

Static detection techniques analyze the binary code or source code of application programs using program analysis techniques. They scan the source code of the tested program and infer its behavior from its syntax and semantics in order to analyze its characteristics. Finally, exceptions that may result in errors are detected. The static analysis process is shown in Figure 1.

Fig. 1. Static analysis flow chart.

Wagner et al. developed a system for detecting buffer overflows statically in C programs [18]. The approach treats C strings as an abstract data type accessed through the library routines and models buffers as pairs of integer ranges (size and current length), while the detection problem is formulated as an integer constraint problem. The library functions are modeled in terms of how they modify the size and length of strings. By trading precision for scalability their implemented tool gives both false positives and false negatives.
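A much simplified Python sketch of the range-pair idea follows. It is not the implementation of [18]: the class names, the two modeled library routines, and the convention that string length counts the terminating NUL are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Range:
    lo: int
    hi: int


@dataclass
class Buffer:
    alloc: Range   # possible allocated sizes in bytes
    length: Range  # possible string lengths, counting the terminating NUL


def model_strcpy(dst: Buffer, src: Buffer) -> None:
    """strcpy(dst, src): dst may now hold any length src could have."""
    dst.length = src.length


def model_strncpy(dst: Buffer, src: Buffer, n: int) -> None:
    """strncpy(dst, src, n): at most n bytes are written."""
    dst.length = Range(min(src.length.lo, n), min(src.length.hi, n))


def may_overflow(buf: Buffer) -> bool:
    """Flag a possible overflow when the longest string can exceed the smallest allocation."""
    return buf.length.hi > buf.alloc.lo


user_input = Buffer(alloc=Range(256, 256), length=Range(1, 256))

small = Buffer(alloc=Range(16, 16), length=Range(1, 1))
model_strcpy(small, user_input)
unsafe = may_overflow(small)

bounded = Buffer(alloc=Range(16, 16), length=Range(1, 1))
model_strncpy(bounded, user_input, 16)
safe = not may_overflow(bounded)
```

Because only ranges are tracked, a warning is raised whenever an overflow is possible for some value in the range, which is one source of the false positives mentioned above.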

2.4 Binary comparison

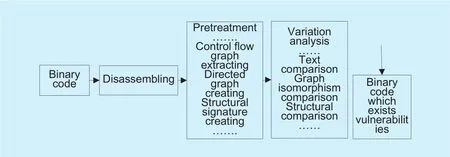

The binary comparison technique, also known as the patch comparison technique, is mainly used to exploit known vulnerabilities. In a certain sense, it can be considered a vulnerability analysis technology: it locates and finds the cause of a vulnerability by comparing the original binary files with the patched ones. Security personnel can locate the vulnerable code through patch comparison and then use simple data flow analysis to quickly obtain attack code that exploits the vulnerability. Binary comparison can quickly locate the differences between the code before and after the patch is released, and thus where the original program was modified. That is to say, it can precisely locate the code that contains the vulnerability in the original program, and it also provides important clues for the subsequent exploitation of the vulnerability [19]. Therefore, the binary comparison analysis technique is the basic method against the rapid exploitation of vulnerabilities. The common implementation process of the binary code comparison technique is summarized in Figure 2.

The primary goal of binary code comparison is to find the differences in the program before and after the patch. Which differences are detected, and how they are located, depends on the specific implementation.
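As a minimal illustration of locating such differences, the sketch below compares two byte strings with Python's standard `difflib.SequenceMatcher`. Real patch comparison tools typically work on disassembled functions or control flow graphs rather than raw bytes; the two byte strings and the function name `changed_regions` are hypothetical.

```python
from difflib import SequenceMatcher


def changed_regions(original: bytes, patched: bytes):
    """Return the non-equal opcodes (tag, i1, i2, j1, j2) between two byte strings."""
    matcher = SequenceMatcher(None, original, patched, autojunk=False)
    return [
        (tag, i1, i2, j1, j2)
        for tag, i1, i2, j1, j2 in matcher.get_opcodes()
        if tag != "equal"
    ]


# Hypothetical code bytes before and after a patch; only one byte differs.
original = bytes.fromhex("5589e583ec10e8aabbccddc9c3")
patched = bytes.fromhex("5589e583ec20e8aabbccddc9c3")

regions = changed_regions(original, patched)
for tag, i1, i2, j1, j2 in regions:
    print(f"{tag}: original[{i1}:{i2}]={original[i1:i2].hex()} "
          f"patched[{j1}:{j2}]={patched[j1:j2].hex()}")
```

The reported offsets point the analyst at the modified location, which is the starting point for the data flow analysis described above.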

Integer-based vulnerabilities are extremely dangerous bugs in programs written in languages such as C/C++. However, very few software security tools can efficiently detect and accurately locate such vulnerabilities in practice. In addition, previous methods largely depended on source code analysis and recompilation, which are impractical when protecting a program without source code.

Fig. 2. Flow chart of binary comparison technology implementation.

Chen et al. [20] proposed the design, implementation, and evaluation of BRICK (Binary Run-time Integer-based vulnerability Checker), a tool for run-time detection and location of integer-based vulnerabilities. Given an integer-based vulnerability exploit, BRICK catches the value that falls outside the range of its corresponding type, finds the root cause of the vulnerability, and finally locates the vulnerable code and issues a warning, based on its checking scheme. BRICK is implemented on top of the dynamic binary instrumentation framework Valgrind and its type inference plug-in, Catchconv. Preliminary experimental results are promising: BRICK can detect and locate most integer-based vulnerabilities in real software, with very few false positives and false negatives.
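The core check, whether a value falls outside the range of its type, can be sketched in a few lines of Python. This is not BRICK's checking scheme, which operates on instrumented binaries; the type table, the function `check_value`, and the source locations are hypothetical.

```python
# Value ranges for a few fixed-width integer types.
INT_RANGES = {
    "int8": (-2**7, 2**7 - 1),
    "uint8": (0, 2**8 - 1),
    "int32": (-2**31, 2**31 - 1),
    "uint32": (0, 2**32 - 1),
}


def check_value(type_name: str, value: int, location: str):
    """Return a warning string if value does not fit in type_name, else None."""
    lo, hi = INT_RANGES[type_name]
    if lo <= value <= hi:
        return None
    return f"{location}: value {value} outside {type_name} range [{lo}, {hi}]"


# A size computation that exceeds the largest int32 by one.
warning = check_value("int32", (2**31 - 1) + 1, "alloc.c:42")
ok = check_value("uint8", 255, "parse.c:7")
```

In a real tool, the difficult parts are inferring the intended type of each value in a binary and tracing the out-of-range value back to its root cause; the sketch assumes both are already known.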

2.5 Dynamic analysis

Dynamic analysis is a dynamic detection technique. It runs the target program in a debugger and observes the running state of the program, its memory usage, and the register values to identify potential problems and find bugs. Dynamic analysis addresses both code flow and data flow: by setting breakpoints, it dynamically traces the code flow of the target program to detect defective function calls and their parameters, and it analyzes data flow bidirectionally. By constructing special data, it can trigger potential errors and analyze the results. Dynamic analysis requires a debugger; SoftIce, OllyDbg, and WinDbg are powerful dynamic tracing debuggers.

Dynamic detection technology for computer security vulnerabilities detects program defects while leaving the source code unchanged. It places requirements on the running environment, so the execution environment of the program needs to be modified. Buffer overflow vulnerabilities are usually detected with dynamic analysis techniques.

Despite previous efforts to audit software manually and automatically, buffer overruns are still being discovered in programs in use. A dynamic bounds checker detects buffer overruns in erroneous software before they occur and thereby prevents attacks from corrupting the integrity of the system. Dynamic buffer overrun detectors have not been adopted widely because they either (1) cannot guard against all buffer overrun attacks, (2) break existing code, or (3) incur too high an overhead. A practical detector called CRED (C Range Error Detector) has been proposed that avoids each of these deficiencies. CRED finds all buffer overrun attacks because it directly checks the bounds of memory accesses.

Unlike the original referent-object based bounds-checking technique, CRED does not break existing code because it uses a novel solution to support program manipulation of out-of-bounds addresses. Finally, by restricting the bounds checks to strings in a program, CRED’s overhead is greatly reduced without sacrificing protection in the reported experiments.

CRED is implemented as an extension of the GNU C compiler version 3.3.1. The simplicity of its design makes possible a robust implementation that has been tested on over 20 open-source programs, comprising over 1.2 million lines of C code. CRED proved effective in detecting buffer overrun attacks on programs with known vulnerabilities, and is the only tool found to guard against a test bed of 20 different buffer overflow attacks [19]. Finding overruns only on strings imposes an overhead of less than 26% for 14 of the programs, and an overhead of up to 130% for the remaining six, while the previous state-of-the-art bounds checker by Jones and Kelly breaks 60% of the programs and is 12 times slower. Incorporating well-known techniques for optimizing bounds checking into CRED could lead to further performance improvements.

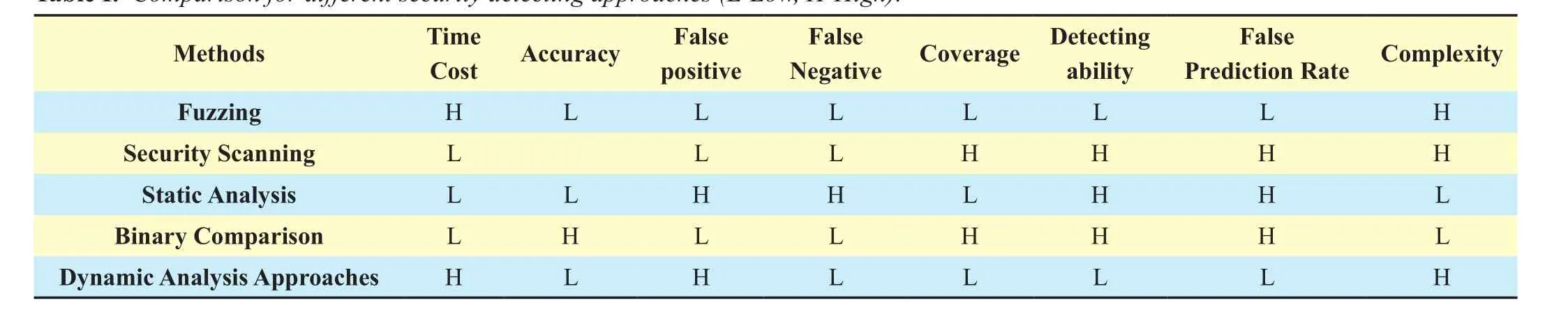

Table I summarizes the vulnerability detection methods and their effectiveness with respect to several evaluation metrics.

Table I. Comparison for different security detecting approaches (L-Low, H-High).

III. SOFTWARE VULNERABILITY DETECTION TOOLS

3.1 Software assurance tools

There are many software assurance (SA) tools on the market. Some are commercially available; others are open source. Some of the tools are designed to be used during a particular phase of the system development life cycle. These tools also support a variety of features. The purpose of the tool survey is to help answer the following questions:

❖ What are the common features among tools of the same type?

❖ Where are the gaps in capabilities among the same types of tools?

❖ How could a purchaser know which tool is the right one for their level of software security assurance?

❖ Should developers of tools provide some kind of “assurance label” to their product that defines what their tool can and cannot do?

❖ What might an “assurance label” look like (for example, on the back of a source code scanner software package)?

There are many ways to classify software assurance tools. One classification is “where” in the software development life cycle the tool is used:

❖ Requirements capture, design, specification tools

❖ Software design/modeling verification tools

❖ Implementation or production testing tools

❖ Operational testing tools

This paper [20] focuses on SA tools used during software implementation and production. Some tools specialize in identifying vulnerabilities within a specific type of application. The taxonomy used for the survey of SA tools was derived from the Defense Information Systems Agency’s (DISA) “Application Security Assessment Tool Market Survey,” Version 3.0, July 29, 2004 [21]. This taxonomy is also used to identify SA functions.

3.2 Static analysis techniques and tools for software vulnerability

The advent of computer systems and the ever-increasing number of software applications make information security a concern for end users. In general, software security problems are caused by software vulnerabilities; these may stem from flaws in developer designs, deficiencies in the programming language itself, or back doors that developers left behind. According to statistics, the vast majority of hacking attacks are caused by software vulnerabilities. Network worms and Trojans are powerful malware that exploit flaws in software to compromise computers, resulting in information disclosure, slow systems, or even total collapse of the computer system. Users are generally concerned with how to locate these loopholes during software development and use, and how to fix them. There are many ways to improve software security [22], such as raising the security awareness of programmers, using a strict development model to design software, and running software in a safe environment. In recent years, however, the most efficient technique for analyzing software vulnerabilities has been static analysis [23]. Static analysis does not require running the program; it detects loopholes through direct analysis of the code.

Static analysis is simple and fast, and can be effective in finding bugs in code. Therefore, many software analysis tools are designed and implemented with static analysis technology. The first static analysis tool was FlexeLint in the 1980s, which used pattern matching to identify defects. Recently, a number of complex and very powerful static analysis tools have been developed. Some researchers have evaluated their security by looking at some software vulnerabilities.

However, few studies have analyzed and compared different static analysis tools, which hinders the promotion and application of these tools. Generally, no tool can find all the defects in the software under test. Each tool has different strengths in finding vulnerabilities [24]. Jaspan and Chen et al. designed a coverage model of the defects and selected several complementary tools for the model [25-27], which can improve performance to some extent. Static analysis technology has many advantages, but some software vulnerabilities cannot be found by static analysis.

In this paper, we selected some well-known, publicly available static analysis tools. The study focuses on identifying the static analysis functionality provided by the tools and surveying the underlying supporting technology. We discuss commonly used static analysis techniques and tools, and then compare these tools from a technical point of view. After that, we analyze the characteristics of these tools through experiments. Finally, we propose an efficient method for software vulnerability detection that incorporates dynamic analysis.

3.3 Static analysis tools

We present eight widely used open source static analysis tools for software vulnerabilities, which were chosen for our analysis and comparison. The main features of these tools are first described briefly and then compared from a technical perspective.

3.3.1 SPLINT

SPLINT (Secure Programming Lint) [16] is an extension of the LCLINT tool for detecting buffer overflows and other security threats. It employs several lightweight static analyses. SPLINT relies on annotations to perform interprocedural analysis. It models control flow and loop structures using heuristics.

3.3.2 FindBugs

FindBugs [20] is an open source static detection tool that checks class or JAR files. It detects potential problems by comparing the binary code with a set of defect patterns. FindBugs does not find loopholes by analyzing the form and structure of class files, but by using the visitor pattern. The tool currently contains about 50 error pattern detectors.

3.3.3 PMD

PMD is an open source, rule-based static detection tool. It scans Java source code to find potential problems, such as incorrect code, duplicate code, overly complicated code, and code that could be further optimized. PMD includes a default rule set and also allows users to develop and apply new rules.

3.3.4 FlawFinder

FlawFinder is an open source static analysis tool based on lexical analysis. It maintains a vulnerability database, reads the database contents, and scans the code against them in the shortest possible time. It can quickly report existing problems in the code, ranked by vulnerability level.
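The general idea of a lexical scan against a rule database can be sketched as follows. This is not FlawFinder's implementation or rule set: the `RULES` table, its risk levels, and the function `scan` are hypothetical and serve only to illustrate the technique.

```python
import re

# Hypothetical rule database: function name -> risk level (higher is worse).
RULES = {"gets": 5, "strcpy": 4, "sprintf": 4, "strcat": 4, "strncpy": 1}

# An identifier followed by an opening parenthesis, i.e. something that looks like a call.
CALL_RE = re.compile(r"\b([A-Za-z_]\w*)\s*\(")


def scan(source: str, min_level: int = 0):
    """Return (level, line number, function name) hits, riskiest first."""
    hits = []
    for lineno, line in enumerate(source.splitlines(), start=1):
        for match in CALL_RE.finditer(line):
            name = match.group(1)
            level = RULES.get(name)
            if level is not None and level >= min_level:
                hits.append((level, lineno, name))
    # Sort by descending risk level, then by line number.
    return sorted(hits, key=lambda h: (-h[0], h[1]))


c_source = """\
char buf[16];
gets(buf);
strncpy(dst, src, n);
strcpy(buf, argv[1]);
"""
hits = scan(c_source)
high_risk = scan(c_source, min_level=4)
```

Because the scan only looks at tokens, it is fast but cannot tell whether a flagged call is actually reachable or unsafe, and it would also flag names that appear inside comments or string literals.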

3.3.5 Cppcheck

Cppcheck is a static code analysis tool for C and C++ code. It can also check non-standard code. It supports the vast majority of static checks and performs them at the source code level, and its checks are very strict.

Table II compares the tools from a technical point of view.

3.4 Dynamic analysis tools

Dynamic analysis is an important part of vulnerability detection technology and an integral component of vulnerability discovery and exploitation. In this paper, we present a detailed description of dynamic vulnerability detection technology and detection tools, and then analyze and compare these tools.

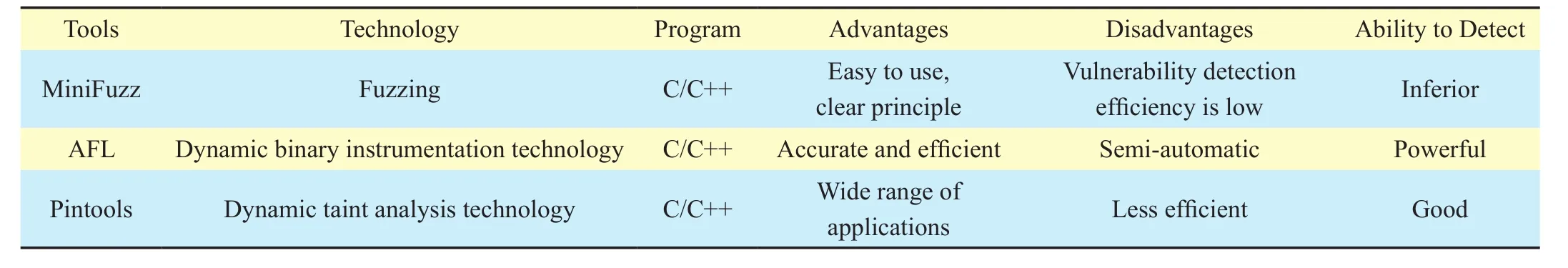

3.4.1 MiniFuzz

MiniFuzz is a dynamic vulnerability detection tool designed for fuzzing that runs on Windows systems. It works by applying a number of random changes to a seed file to produce temporary test files, which are then fed to the target program. When the target program behaves abnormally, we can conclude that the test file has triggered an exception in the program.

Table II. Comparison for these different tools.

MiniFuzz is a fuzz testing tool that generates test cases entirely at random. For each test case, the target process is created and then destroyed again. Restarting the process consumes a lot of time, which makes testing very slow. However, the tool is able to detect vulnerabilities as long as there is enough time.

3.4.2 AFL (American Fuzzy Lop)

AFL-fuzz is a fuzz testing tool for detecting vulnerabilities in file formats and protocols on the Linux platform, while WinAFL is the Windows version of AFL-fuzz. AFL uses dynamic binary instrumentation to insert analysis code into the target program during execution in order to monitor it in real time. Once the target code segment has been determined, the tool loops through “execute”, “restore the register state from before the code segment executed”, and “execute” again, repeating this cycle throughout testing, so that it can achieve automated dynamic vulnerability testing.

Dynamic binary instrumentation removes the dependence on source code during vulnerability detection, so that programs with separated code and data can be detected effectively. However, dynamic binary instrumentation prevents the program from running at full speed. To compensate, the offset address of the sensitive function in the program must be located manually, which makes WinAFL semi-automatic.

By locating the offset address of the critical code segment of the tested program, the target program needs to be started only once; each time, we only need to restore the register values and modify the parameters passed when the program executes the code segment. As a result, the program can be re-executed from that function. This method improves the efficiency of program execution and speeds up vulnerability detection.

3.4.3 Pintools

Pin is proprietary software developed by Intel that is available free of charge for non-commercial use, together with a set of reference tools. It was initially designed as a tool for computer architecture analysis, but its flexible APIs and active community (called “Pinheads”) have produced a variety of tools for security, simulation, and parallel program analysis.

The tools created with Pin are called Pintools and can be used to perform program analysis on user-space applications in Linux and Windows. As a dynamic binary vulnerability detection tool, a Pintool applies dynamic taint analysis and allows arbitrary code (written in C or C++) to be inserted anywhere in an executable in order to examine compiled binaries at run time. Therefore, the source code does not need to be recompiled, and programs that generate code dynamically are also supported. The tool can also use symbolic execution. The variety of technical options available makes implementation easy and highly efficient. Thanks to Intel’s continuous support, Pin has good adaptability and remains an active tool.

Table III compares the tools from a technical point of view.

IV. EVALUATION METRIC

To analyze vulnerability mining methods, an evaluation metric is proposed in this study. The main software vulnerability mining techniques are evaluated based on their characteristics. The evaluation method takes into account the following factors: vulnerability detection capability, total time cost, accuracy, false positive rate, recall rate, resource overhead, utilization effect, and so on. Below we describe some of the evaluation factors that the proposed metric employs in analyzing software vulnerability mining methods.

4.1 Main evaluation factors

Definition 1 Detection capability: refers to the ability of a vulnerability mining method to detect vulnerabilities. This is usually represented by the number of vulnerabilities discovered.

Table III. The comparison for dynamic analysis tools.

The detection capability of a vulnerability mining method mainly refers to the number of vulnerabilities that the method can find. The more loopholes a mining method can find, the stronger its detection capability and the higher its detection efficiency. Leaving aside detection accuracy and the false positive rate, we consider only the proportion of vulnerabilities found out of the total number of vulnerabilities in the software. Because large software often contains more vulnerabilities than small software, we do not use the absolute number of vulnerabilities mined. For the analysis of vulnerability mining techniques, detection capability is one of the most important indicators, since vulnerabilities must be detected before any subsequent operations can be carried out.

Calculation formula: the total number of vulnerabilities detected / the total number of vulnerabilities existing in the software.

Implementation steps: (1) Get the total number of vulnerabilities in the vulnerability sample by manually mining the sample for vulnerabilities.

(2) For static analysis methods, first select the mining method, then select the test case. After the run, the total number of vulnerabilities detected is displayed in the results.

(3) For dynamic analysis methods, there are two cases. One is to test multiple target programs at once: first count the number of detected vulnerabilities, and then count the total number of tested programs. The other is to test only one target program at a time: first count the number of abnormal situations, and then count the total number of tests.

Definition 2 False positive rate: refers to the probability that the vulnerability mining technology reports incorrect vulnerabilities. The false positive rate is calculated as the ratio between the number of negative events wrongly categorized as positive (false positives) and the total number of detected vulnerabilities.

Security-oriented static analysis tools often produce large amounts of redundant or useless information, so the reported vulnerabilities cannot be used directly in practice. If the false positive rate of the test results is high, it not only affects the reliability of the results but also causes a lot of unnecessary trouble for software developers, who need to take the time to look for false reports in the huge test results. Therefore, we should reduce the false positive rate as much as possible and improve the detection efficiency of mining methods.

Calculation formula: the number of falsely detected vulnerabilities / the total number of vulnerabilities detected.

Implementation steps: (1) For static analysis methods, the total number of vulnerabilities detected is shown directly in the execution results.

(2) The number of falsely detected vulnerabilities can be determined by comparing the test results with the vulnerabilities known to exist in the vulnerability sample.

(3) For dynamic analysis methods, the false positive rate is calculated by supplying different input data several times and checking whether the test results are consistent with the expected results.

Definition 3 Recall rate: refers to the level of accuracy of vulnerability detection by a vulnerability mining technology. The higher the degree of accuracy of a vulnerability mining technology, the more credible the detection results; therefore, testers and developers can spend less extra effort in mining vulnerabilities, thereby improving efficiency as a whole.

Calculation formula: the correct number of vulnerabilities detected / the total number of vulnerabilities existing in the software.

Implementation steps: (1) Get the total number of vulnerabilities in the sample by mining the sample manually.

(2) For static analysis methods, the number of correctly detected vulnerabilities can be determined by comparing the test results with the vulnerabilities known from the test cases.

(3) For dynamic analysis methods, the test results can only show whether an exception is detected or whether the program crashes. Therefore, the accuracy is obtained in the same way as the false positive rate.
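Definitions 1 to 3 all rest on the same comparison between what a method reports and what is known to exist in the sample. The following Python 3 sketch shows one way this comparison might be carried out. The function name `evaluate_findings` and the identifiers used for vulnerabilities (`V1`, `X1`, and so on) are illustrative assumptions, not part of the evaluation platform.

```python
def evaluate_findings(reported, known):
    """Compare reported findings with the known vulnerabilities of a sample.

    Both arguments are collections of hashable vulnerability identifiers
    (hypothetical here; any stable identifier such as a location would do).
    """
    reported, known = set(reported), set(known)
    if not known:
        raise ValueError("the sample must contain at least one known vulnerability")
    correct = reported & known      # reported and really present
    false_pos = reported - known    # reported but not present in the sample
    return {
        # Definition 1: detected / existing
        "detection_capability": len(reported) / len(known),
        # Definition 2: falsely detected / detected
        "false_positive_rate": len(false_pos) / len(reported) if reported else 0.0,
        # Definition 3: correctly detected / existing
        "recall": len(correct) / len(known),
    }


# Hypothetical sample: 15 known vulnerabilities, 10 reports, 8 of them correct.
known = {"V%d" % i for i in range(1, 16)}
reported = ["V%d" % i for i in range(1, 9)] + ["X1", "X2"]
metrics = evaluate_findings(reported, known)
print(metrics)
```

Using sets keeps the comparison independent of the order in which a tool lists its findings and ignores duplicate reports of the same vulnerability.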

Definition 4 Resource overhead: refers to the specific environment and resources required when the vulnerability mining technology is used to detect vulnerabilities.

Computer resources generally include computer hardware resources, computer peripheral resources, as well as software and various electronic data resources. In discussing the resource environment needed for vulnerability mining, we mainly consider CPU occupancy and memory utilization. Different vulnerability mining methods require different amounts of system resources at run time, such as CPU usage and memory utilization. Therefore, the lower the run-time CPU and memory footprint, the smaller we consider the resource overhead of the vulnerability mining approach to be.

Calculation formula: (CPU usage rate + memory usage rate) / 2

Implementation steps: During program execution, the CPU usage and memory usage of the program are viewed through the task manager, or by using monitoring software such as CPU-Z, HWiNFO32.
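As a minimal sketch of this calculation, assume that the usage rates have been read off the monitoring tool several times during a run and written down as fractions between 0 and 1. The function name `resource_overhead` and the sample values are hypothetical; averaging the samples over the run is an assumption, since the text only gives the formula for a single pair of readings.

```python
from statistics import mean


def resource_overhead(cpu_samples, mem_samples):
    """Average the CPU and memory usage rates observed during a run and
    combine them with equal weight, following the calculation formula."""
    for value in list(cpu_samples) + list(mem_samples):
        if not 0.0 <= value <= 1.0:
            raise ValueError("usage rates must be fractions between 0 and 1")
    return (mean(cpu_samples) + mean(mem_samples)) / 2


# Hypothetical readings taken at three points during one run.
overhead = resource_overhead([0.25, 0.35, 0.30], [0.20, 0.20, 0.20])
print(round(overhead, 2))
```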

Definition 5 Time overhead: refers to the time required to discover vulnerabilities, which is also called the time cost.

The time cost of vulnerability discovery is an effective measure of the efficiency of a vulnerability mining method. The shorter the time required, the faster the vulnerability detection and the higher the efficiency; otherwise, the efficiency is lower.

Calculation formula: (end time - start time of the executed mining method) / the maximum time used by all mining methods.

Implementation steps: After selecting the appropriate vulnerability mining method and the corresponding vulnerability samples, we record the start time and end time of the program, calculate the time difference, and finally calculate the time overhead.
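The two steps, timing a run and normalizing by the slowest method, can be sketched as follows. The names `timed_run` and `time_overheads` and the method labels are assumptions made for illustration; a mining method is represented here by any zero-argument callable.

```python
import time


def timed_run(run_method):
    """Run a mining method (any zero-argument callable) and return the elapsed seconds."""
    start = time.perf_counter()
    run_method()
    return time.perf_counter() - start


def time_overheads(elapsed_by_method):
    """Divide each method's elapsed time by the longest time among all methods."""
    max_time = max(elapsed_by_method.values())
    if max_time <= 0:
        raise ValueError("at least one method must take a positive amount of time")
    return {name: elapsed / max_time for name, elapsed in elapsed_by_method.items()}


# Hypothetical elapsed times in seconds for three methods on the same samples.
overheads = time_overheads({"method_a": 50.0, "method_b": 60.0, "method_c": 30.0})
print(overheads)
```

With this normalization the slowest method always receives a time overhead of 1, and every other method receives a value between 0 and 1.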

4.2 Evaluation model and formula

Based on the evaluation factors, an evaluation model is designed to evaluate vulnerability mining methods quantitatively. The quantitative evaluation draws on a larger set of evaluation factors: detection capability (C), false positive rate (F), recall rate (A), time cost (T), resource overhead (R), application domains (P), utilization effect (U), technical requirements (E), advantages (V), and shortcomings (D).

According to the evaluation factors listed above, an evaluation model is designed: VEM = {C, F, A, T, R, P, U, E, V, D}.

Definition and verification of formula: Based on the evaluation model VEM, the quantitative evaluation formula is designed to reflect the effect of mining methods. Let VMM represent the set of vulnerability mining methods, and let EFF(vmm_i) represent the evaluation score of any mining method vmm_i ∈ VMM. The basic quantitative evaluation formula can be expressed as EFF(vmm_i) = Γ(C, F, A, T, R, P, U, E, V, D), where Γ represents a computation function that can usually be represented by a weighted sum, that is:

Γ(C, F, A, T, R, P, U, E, V, D) = C*ξ1 + F*ξ2 + A*ξ3 + T*ξ4 + R*ξ5 + P*ξ6 + U*ξ7 + E*ξ8 + V*ξ9 + D*ξ10, where ξ1, ..., ξ10 are the weights and ξ1 + ξ2 + ξ3 + ξ4 + ξ5 + ξ6 + ξ7 + ξ8 + ξ9 + ξ10 = 1.

However, in practice, the false positive rate (F), the recall rate (A), the time cost (T), the resource overhead (R) and the utilization effect (U) are the main contributing indexes in the quantitative evaluation formula. In view of these five important indexes, we design the following formula:

EFF(vmm_i) = (1-F)*30% + A*30% + (1-T)*10% + (1-R)*10% + U*20%.

An example illustrates the use of the formula and the significance of the result:

Assume that a vulnerability mining technology is used to detect vulnerabilities in software containing a total of 15 vulnerabilities. A total of 10 vulnerabilities are reported, of which 8 are correct and 2 are false positives. The time used is 50s, while the five vulnerability mining methods use at most 60s. The memory utilization rate is 20% and the CPU occupancy rate is 30%, and five users give scores of 0.3, 0.4, 0.3, 0.5 and 0.2 respectively. Therefore, the false positive rate is 2/10, the recall rate is 8/15, and the time cost is 50/60. The resource overhead is (20%*50% + 30%*50%) = 0.25, and the utilization effect is (0.3+0.4+0.3+0.5+0.2)/5 = 0.34. Thus we can get the score of the vulnerability mining method as follows:

EFF = (1-2/10)*30% + (8/15)*30% + (1-50/60)*10% + (1-0.25)*10% + 0.34*20% ≈ 0.56.
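The arithmetic of this example can be checked with a few lines of Python. This is only a sketch: the function name `eff` and its parameter names are chosen here for illustration, while the weights and input values are those stated in the text.

```python
def eff(false_positive_rate, recall, time_cost, resource_overhead, utilization):
    """Score from the five main indexes, weighted 30/30/10/10/20 percent."""
    return ((1 - false_positive_rate) * 0.30
            + recall * 0.30
            + (1 - time_cost) * 0.10
            + (1 - resource_overhead) * 0.10
            + utilization * 0.20)


# Values from the example in the text.
F = 2 / 10                      # 2 false positives among 10 reports
A = 8 / 15                      # 8 correct findings out of 15 existing
T = 50 / 60                     # 50s used, 60s maximum
R = 0.20 * 0.5 + 0.30 * 0.5     # memory 20%, CPU 30%
user_scores = [0.3, 0.4, 0.3, 0.5, 0.2]
U = sum(user_scores) / len(user_scores)

score = eff(F, A, T, R, U)
print(round(score, 4))          # prints 0.5597
```

Because F, T and R enter the formula as (1-F), (1-T) and (1-R), a method is rewarded for few false positives, short run time and low resource usage, while A and U contribute directly.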

4.3 Evaluation algorithm

To sum up, there are currently five kinds of vulnerability mining methods with respect to the technologies they employ: security scanning technology, fuzzing technology, binary comparison technology, static analysis technology and dynamic analysis technology. To evaluate these vulnerability mining methods, each method is tested on a number of test cases, and the results obtained, usually a series of data, are analyzed. Therefore, we design a vulnerability mining technology evaluation algorithm, herein referred to as the VDTE algorithm.

The design idea of the VDTE algorithm is as follows. First, we select the test cases to be tested and add them to the candidate test case set. Then, we select a vulnerability mining method to test the test case set. From the test results, we obtain the number of vulnerabilities found, the number of false positives, the time required, the resource usage rates and other data. From these data, we derive the detection capability, false positive rate, accuracy, time cost and resource overhead of the vulnerability mining technology. The user then provides the relevant evaluation. Finally, the evaluation report of the vulnerability mining method is generated from these results. From the report, we can clearly see the quantitative evaluation of each indicator and obtain the final evaluation score from the quantitative indicators. The VDTE algorithm has its limitations, however. For example, the application domains and technical requirements cannot be evaluated quantitatively; they can only be evaluated qualitatively.

V. THE DESIGN OF INTEGRATION TESTING FRAMEWORK

Based on the evaluation standards for vulnerability detection methods, we established a testing process model for vulnerability detection methods, which allows the testing of a detection method to be carried out more automatically. The system is mainly divided into three modules: the control module, the monitoring module, and the statistical analysis and storage module. Figure 3 depicts the structure of the model.

The control module first generates the test case, then loads it into the detection method environment, and finally informs the detection method module to start the mining work. The monitoring module mainly monitors the running status of the program and the output information in the detection environment. Vulnerability detection methods are normally divided into dynamic and static methods. For a dynamic detection method, the monitoring module is responsible for monitoring the state of the target program, while for a static detection method it mainly monitors the output of the detection method environment. When the monitoring module finds that the specified vulnerability of the test case has been triggered, it triggers the statistical analysis and storage module and immediately provides feedback to the control module. After the statistical analysis and storage module receives the notification from the monitoring module, it analyzes the target program state and the output of the detection method environment, determines the specific parameters of the detected vulnerability, and saves the evaluation parameters.
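For the dynamic case, the interplay of the three modules might look like the following Python 3 sketch. It is an illustration under stated assumptions rather than the platform's implementation: the names `monitor_target`, `run_test_case` and `on_triggered` are hypothetical, the target program is a one-line stand-in, and treating a non-zero exit status or a timeout as a triggered vulnerability is a simplification.

```python
import subprocess
import sys


def monitor_target(command, input_data=b"", timeout=5.0):
    """Monitoring: run the target once and report whether it ended abnormally.

    A non-zero exit status (on POSIX, a negative value means the process was
    killed by a signal) or a timeout is treated as a possible trigger.
    """
    try:
        result = subprocess.run(command, input=input_data,
                                capture_output=True, timeout=timeout)
    except subprocess.TimeoutExpired:
        return {"abnormal": True, "reason": "timeout", "output": b""}
    abnormal = result.returncode != 0
    reason = "exit status %d" % result.returncode if abnormal else "normal exit"
    return {"abnormal": abnormal, "reason": reason, "output": result.stdout}


def run_test_case(command, inputs, on_triggered):
    """Control: feed each input to the target and notify the statistics
    callback whenever the monitor sees an abnormal termination."""
    triggered = 0
    for data in inputs:
        status = monitor_target(command, data)
        if status["abnormal"]:
            triggered += 1
            on_triggered(data, status)
    return triggered


# Hypothetical target: exits with status 1 when the input exceeds 8 bytes.
target = [sys.executable, "-c",
          "import sys; sys.exit(1 if len(sys.stdin.buffer.read()) > 8 else 0)"]
records = []   # stands in for the statistical analysis and storage module
count = run_test_case(target, [b"short", b"A" * 64],
                      lambda data, status: records.append((data, status["reason"])))
print(count, records)
```

Passing the storage step as a callback keeps the monitoring code unaware of how results are stored, which mirrors the separation between the monitoring module and the statistical analysis and storage module.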

VDTE algorithm
Input: TestMethod, TestedFile, P, U and E; // TestMethod represents the testing methods, TestedFile represents the vulnerability sample set, P represents the application domain evaluation, U represents the user utility scores, and E represents the technical requirements evaluation
Output: TestReport, Score; // TestReport represents the test report, and Score represents the final evaluation score for the vulnerability mining method
TestReport = {};
count = 0;
while (count < k) {
  TestCase t = SelectTestCase(); // Select a vulnerability sample
  TestedFile.add(t); // Add the sample to the set until k samples have been selected
  count = count + 1;
}
Run the selected test method on the k vulnerability samples and record the vulnerabilities it finds and other related information.
C = FindBug / ExistBug; // C indicates detection capability, and FindBug indicates the vulnerabilities found by the method. ExistBug represents the vulnerabilities in the system and is obtained by manually mining vulnerabilities prior to testing
F = FalseBug / FindBug; // F stands for false positive rate, and FalseBug means the reported vulnerabilities that are not actually vulnerabilities
A = TrueBug / ExistBug; // A stands for accuracy, and TrueBug indicates the correctly found vulnerabilities
T = (EndTime - StartTime) / MaxTime; // T is the time cost, EndTime is the end time, StartTime is the start time, and MaxTime is the maximum time used by the 5 techniques
R = MemoryUsage*0.5 + CPUUsage*0.5; // R represents resource overhead, MemoryUsage represents the memory usage rate, and CPUUsage represents the CPU usage rate
U = (U1 + U2 + … + Un) / n; // U represents ease of use, U1, U2, …, Un are the scores given by the users, and n is the number of users
TestReport = <C, F, A, T, R, P, U, E>; // P, U and E are entered by the user
Score = C*30% + (1-F)*30% + (1-T)*15% + (1-R)*15% + U*10%;
CGrade(C); FGrade(F); AGrade(A); TGrade(T); RGrade(R); UGrade(U); // According to the detection capability, false positive rate, accuracy, time cost, resource cost and usability score, the corresponding evaluation grades are obtained
The advantages and disadvantages, the application domains and the corresponding technical requirements are obtained
TestReport.add(C, F, A, T, R, U, Score); // Add indexes to the output report
output TestReport;
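The quantitative part of the listing above can be sketched in Python 3 as follows. Several points are assumptions made for this illustration: the names `SampleResult` and `vdte` are hypothetical, the per-sample counts are simply summed over the k samples, and the indexes F, A, T and R are computed as defined in Section 4.1 (so that Score can use 1-F, 1-T and 1-R). The weights are those of the Score step in the listing. Sample selection, grading and the qualitative entries P and E are left out.

```python
from dataclasses import dataclass


@dataclass
class SampleResult:
    """Outcome of running one mining method on one vulnerability sample."""
    exist_bug: int       # vulnerabilities known to exist (found manually beforehand)
    find_bug: int        # vulnerabilities reported by the method
    true_bug: int        # reported vulnerabilities that are real
    seconds: float       # EndTime - StartTime for this sample
    memory_usage: float  # fraction in [0, 1]
    cpu_usage: float     # fraction in [0, 1]


def vdte(results, max_time, user_scores):
    """Aggregate per-sample results into the indexes C, F, A, T, R, U and a score."""
    exist = sum(s.exist_bug for s in results)
    find = sum(s.find_bug for s in results)
    correct = sum(s.true_bug for s in results)
    c = find / exist                                   # detection capability
    f = (find - correct) / find if find else 0.0       # FalseBug / FindBug
    a = correct / exist                                # TrueBug / ExistBug
    t = sum(s.seconds for s in results) / max_time     # time cost
    r = sum(0.5 * s.memory_usage + 0.5 * s.cpu_usage for s in results) / len(results)
    u = sum(user_scores) / len(user_scores)            # mean user score
    score = c * 0.30 + (1 - f) * 0.30 + (1 - t) * 0.15 + (1 - r) * 0.15 + u * 0.10
    return {"C": c, "F": f, "A": a, "T": t, "R": r, "U": u, "Score": score}


# Hypothetical results for k = 2 samples.
results = [SampleResult(10, 7, 6, 30.0, 0.2, 0.3),
           SampleResult(5, 3, 2, 20.0, 0.2, 0.3)]
report = vdte(results, max_time=60.0, user_scores=[0.3, 0.4, 0.3, 0.5, 0.2])
print(report)
```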

5.1 Test case set framework of vulnerability detection method

In order to evaluate the vulnerability detection methods, we designed a test suite framework covering active code software, passive code software and network protocols, which includes the design scheme of the test set framework, the design method of the test cases and the specific test sets. To facilitate test evaluation, the attributes of each test case need to include its input, running platform, vulnerability type, vulnerability trigger conditions and vulnerability location range. The attributes of a test case are detailed in Table 4.
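One possible way to carry these attributes through a test run is a small record type, sketched here in Python 3. The class name `TestCaseAttributes`, the field names and the encoding of the location range as a pair of source line numbers are assumptions made for illustration; the authoritative attribute list is the one in Table 4.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class TestCaseAttributes:
    """Attributes recorded for one vulnerability test case."""
    name: str
    input_data: bytes
    platform: str                     # running platform, e.g. "Linux" or "Windows"
    vulnerability_type: str
    trigger_condition: str
    location_range: Tuple[int, int]   # hypothetical encoding: first and last line

    def contains(self, line):
        """True if a reported location falls inside the known vulnerable range."""
        start, end = self.location_range
        return start <= line <= end


# Hypothetical test case description.
case = TestCaseAttributes(
    name="bof_01",
    input_data=b"A" * 64,
    platform="Linux",
    vulnerability_type="buffer overflow",
    trigger_condition="input longer than 32 bytes",
    location_range=(40, 52),
)
print(case.contains(45), case.contains(60))
```

A check such as `contains` lets the platform decide whether a location reported by a detection method counts as a correct finding or as a false positive.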

5.1.1 Active code software test case set framework

Active code software test cases are usually analyzed with static analysis methods or with a combination of static and dynamic methods. Therefore, this type of test case framework mainly focuses on the logic of the vulnerability.

Active code test case software mainly contains key data code and logic analysis code. The key data code refers to the relevant variables, constants and other data code that can trigger the vulnerability, while the logic analysis code refers to the code that analyzes and processes the key data. Typically, the logic analysis code contains the vulnerability code.

5.1.2 Passive code software test case set framework

Passive code software test cases are generally analyzed with dynamic analysis methods; as such, this type of test case is mainly concerned with program input and processing.

A passive code test case first reads the input data, then extracts the input information and processes the data. The vulnerability code may be executed during extraction or processing.

5.1.3 Network protocol test case set framework

Vulnerability detection for network protocols mainly tests for vulnerabilities that may be contained in specific fields of the tested network protocol. Therefore, this type of test case is mainly concerned with the processing of the protocol.

The general process is as follows. The network protocol test case is first initialized; its listening module then listens on a port and waits for a connection. After a connection is accepted, the test case enters a cycle of data reception, protocol analysis and extraction, protocol processing, and data return. Finally, it disconnects and waits for the next connection. Most importantly, the vulnerabilities are located in the stages of data reception, protocol analysis and extraction, protocol processing, and data return.
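The cycle described above can be sketched in Python 3 with a toy protocol. Everything specific here is an assumption for illustration: the protocol (a two-byte length field followed by a payload), the replies `OK` and `ERR`, the function names, and the idea that a test case could plant a vulnerability by leaving out the length check.

```python
import socket
import struct


def recv_exact(conn, size):
    """Data reception: read exactly `size` bytes or raise if the peer closes early."""
    chunks = []
    while size > 0:
        chunk = conn.recv(size)
        if not chunk:
            raise ConnectionError("peer closed the connection")
        chunks.append(chunk)
        size -= len(chunk)
    return b"".join(chunks)


def handle_connection(conn, max_payload=1024):
    """One cycle: receive, extract the length field, process, return data."""
    header = recv_exact(conn, 2)
    (length,) = struct.unpack("!H", header)     # protocol analysis and extraction
    if length > max_payload:                    # field check on the length value
        conn.sendall(b"ERR")
        return False
    payload = recv_exact(conn, length)          # protocol processing
    conn.sendall(b"OK " + payload.upper())      # data return
    return True


def serve(host="127.0.0.1", port=9000):
    """Initialize, listen, and handle one connection at a time until interrupted."""
    with socket.create_server((host, port)) as server:
        while True:
            conn, _ = server.accept()
            with conn:
                try:
                    handle_connection(conn)
                except ConnectionError:
                    pass    # disconnect and wait for the next connection


# Exercise one cycle locally over a connected socket pair instead of a real port.
client, server_side = socket.socketpair()
client.sendall(struct.pack("!H", 5) + b"hello")
handled = handle_connection(server_side)
reply = client.recv(16)
client.close()
server_side.close()
print(handled, reply)
```

Each stage is a separate step in `handle_connection`, so a test case designer can place the vulnerable code in exactly one stage and record that stage as the vulnerability location.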

5.2 Test case set management platform for vulnerability detection method

In this paper, we designed and implemented a test case set management platform for vulnerability detection methods in order to control the test process and collect the evaluation indicators. The design of the management platform is described as follows.

Fig. 3. The vulnerability detection test process model.

5.2.1 The general structure

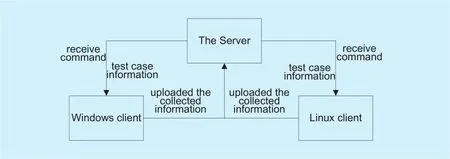

The test case set management platform for vulnerability detection methods adopts a client/server (C/S) architecture. Because the target platforms differ, the client is divided into a Windows client and a Linux client. Once the client receives the command and the test information from the server, it generates the corresponding test cases, controls the start of the test process, collects the evaluation indicators, and uploads the collected information to the server. After the test process is completed, the server automatically generates test reports through statistical analysis. The overall structure is shown in Figure 4.

Fig. 4. The overall structure of the test case set management platform.

Fig. 5. The design of server structure.

Fig. 6. The design of the client structure.

5.2.2 The design of platform

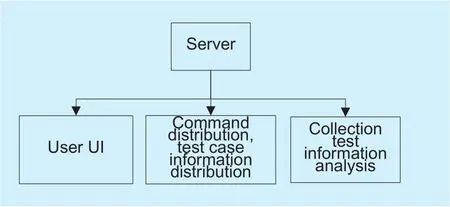

a) the design of the server

The server mainly includes the user interface (UI), command distribution, information collection and analysis. After the user configures the command, the server sends the command and the test set information to the corresponding client, and then waits for the client to upload the collected parameters. Finally, the server summarizes and analyzes all indicators and generates the report. The design of the server is shown in Figure 5.

b) the design of the client

The client first receives the server command and the test case set information, then generates the test case set and starts the detection method. Following the model in Figure 3, the control module starts each run of the detection method, the monitoring module monitors the state of the tested program or the output of the detection method, and the statistical analysis and storage module collects the attack path of the tested program and evaluation parameters such as the address range of the vulnerability. After the given test case set has been completed, the client uploads all the collected information to the server. The design of the client is shown in Figure 6.

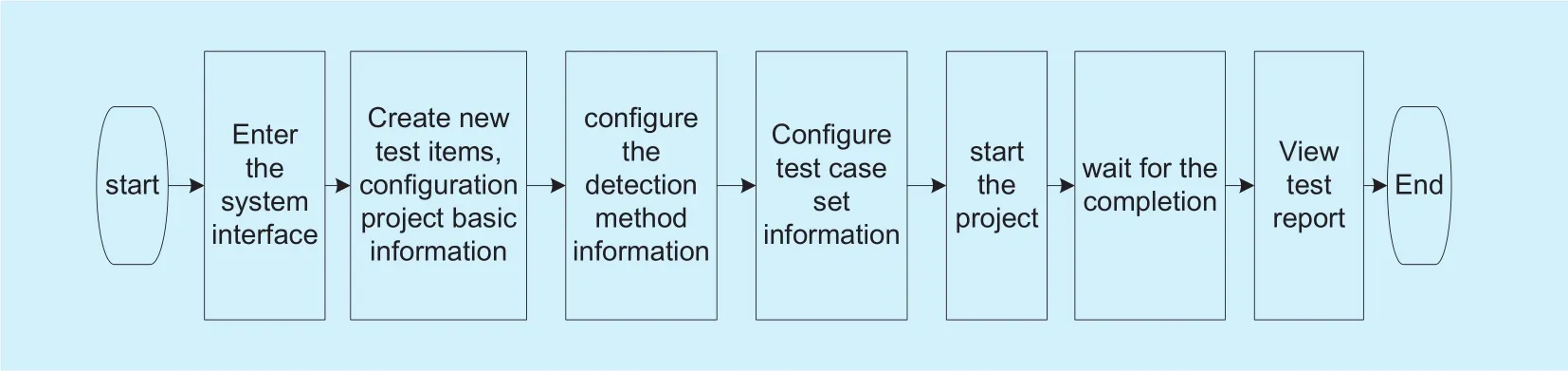

5.2.3 Usage instructions

❖ Open the system interface;

❖ Create a new test project and configure its basic information;

❖ Configure the detection method information, including the input, output and operation of the detection method, and deploy the mining method program onto the client;

❖ Select the test case set information, including the test type (active code software, passive code software, network protocol software), the specific vulnerability test case set and so on;

❖ Start the project and wait for the project commands to be distributed;

❖ After the project has started successfully, wait for it to finish; the progress bar shows the current progress;

❖ After the test is completed, click the project test report to view the test report of the vulnerability detection method.

The general process is shown in Figure 7.

Fig. 7. The overall process chain of the test case set management platform.

VI. EXPERIMENT ANALYSIS

In order to further verify the feasibility and effectiveness of the proposed framework, Section 6.1 describes the system that implements it: the vulnerability detection approach testing platform VMATP. Section 6.2 then further verifies the effectiveness of the proposed framework by analyzing and testing typical vulnerability test suites.

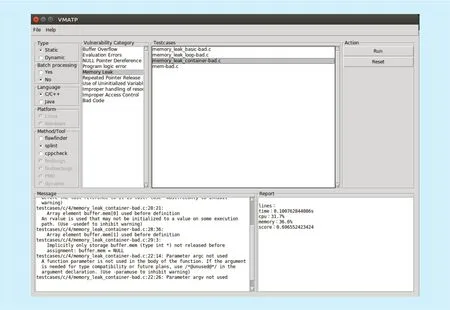

6.1 System implementation

Based on the evaluation method and the framework proposed above, we have implemented VMATP, a testing platform for software vulnerability mining, with Python 2.7 on the Linux and Windows operating systems. A system snapshot is shown in Figure 8.

The menu bar of the system includes two main menu options: File and Help. File is mainly used to add test cases and testing methods. It also provides saving, printing and other functions. First, users select the routines of the test cases to import the test cases. Then, users select different types, different languages and different vulnerability detection methods to test the cases. After testing, we can get the corresponding evaluation metrics such as time cost, accuracy, detection ability and so on. Finally, by analyzing the testing results, the platform produces an evaluation of the corresponding vulnerability mining methods.

6.2 Experiment analysis

Fig. 8. System snapshot.

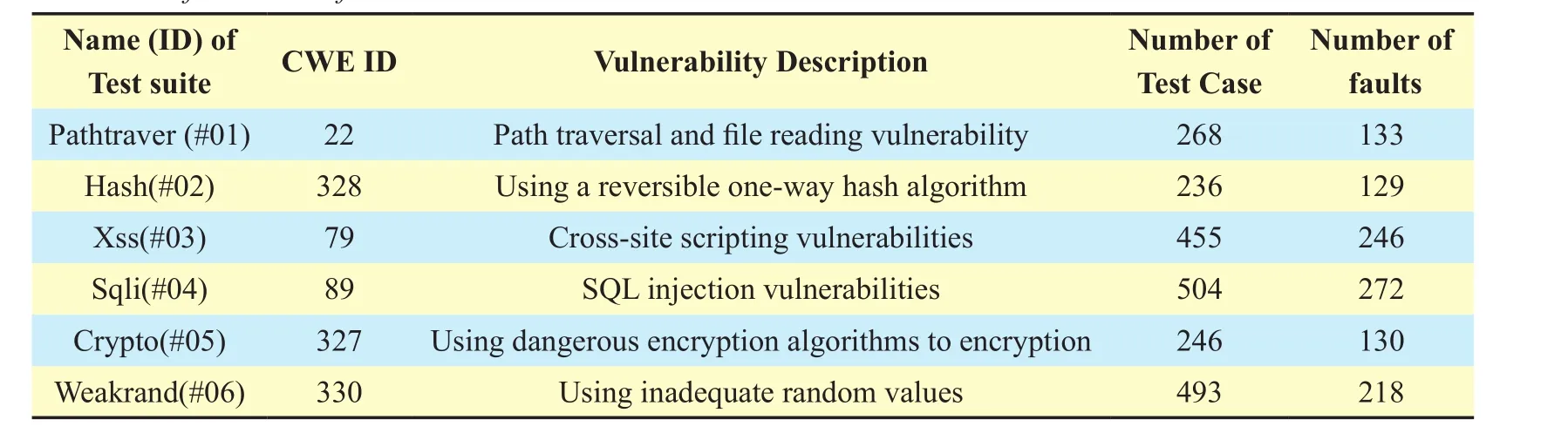

Table V. Information of test case sets.

To further analyze the effectiveness of the testing platform for vulnerability detection methods, we collected six test case sets to evaluate the framework and these methods: pathtraver, hash, xss, sqli, crypto and weakrand [28]. The information of the six test case sets is shown in Table 5. We compared three test tools that target Java: findbugs, PMD and findsecbugs. The experiment results are given in Table 6.

Table VI. Experiment results (FP: False Positive, TP: True Positive).

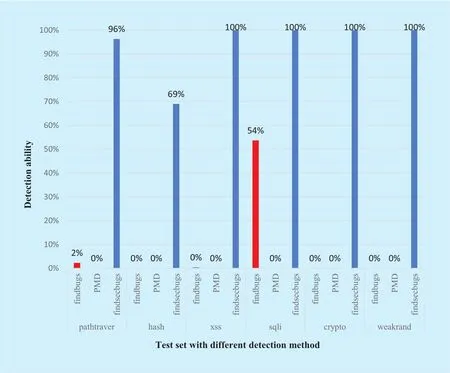

Fig. 9. The comparison for the detection capabilities of different detection methods.

From Table 6 we can observe that findsecbugs performed best among these three methods, followed by findbugs, and PMD performed worst. This result is partly related to the selection of the test case sets. In general, however, we find that the vulnerability mining ability of findsecbugs, which analyzes Java code, is the strongest; that is, this tool can find more defects and potential performance problems, followed by findbugs and PMD.

Based on the data in Table 5 and Table 6, we obtain the detection ability of each method on the different test case sets, as shown in Figure 9. The detection ability is equal to the number of true positives divided by the total number of actual vulnerabilities. From Table 6 and Figure 9, we can see that findsecbugs has the best detection ability, but it also produces the largest number of false positives. It is followed by findbugs, which has a certain detection ability and also produces some false positives. PMD, in contrast, cannot detect any of the vulnerabilities, mainly because the PMD detection rules do not apply to the given test case sets.

VII. CONCLUSIONS AND FUTURE WORK

Software vulnerability mining is an important way to detect existing loopholes in software. This paper first analyzed several common techniques for vulnerability detection and pointed out that each method has its own advantages and disadvantages. In order to discover software vulnerabilities accurately, the methods can be combined in actual testing. Considering the different types of vulnerability detection methods, we presented the corresponding testing methods and built an evaluation testing model for them. Finally, we formed evaluation criteria for vulnerability mining methods. The test suites and the corresponding test management platform have also been provided.

In this paper, we analyzed some common vulnerability mining methods. Apart from the methods discussed here, there are other vulnerability mining methods that still need further research. In addition, we need to further study quantitative and qualitative evaluation metrics for vulnerability mining methods in the future.

ACKNOWLEDGEMENTS

This work is partly supported by National Natural Science Foundation of China (NSFC grant numbers: 61202110 and 61502205), and the project of Jiangsu provincial Six Talent Peaks (Grant numbers: XYDXXJS-016).

[1] A. M. Osman, A. Dafa-Allah, A. A. M. Elhag, “Proposed security model for web based applications and services,” Proc. International Conference on Communication Control, Computing and Electronics Engineering, 2017, pp. 1-6.

[2] M. Huang, Q. Zeng, “Research on classification and features of Software Vulnerability,” Computer Engineering, vol. 36, no. 1, 2010, pp. 184-186. (in Chinese)

[3] B. Liu, L. Shi, Z. Cai, M. Li, “Software vulnerability discovery techniques: A survey,” Proc. the Fourth International Conference on Multimedia Information Networking and Security, 2013, pp. 152-156.

[4] Y. Song, “Security Vulnerability Analysis and Mining Technology,” China Software Security Summit, 2008. (in Chinese)

[5] Q. Chi, H. Luo, X. D. Qiao, “Overview of Vulnerability Mining and Analysis Technology,” Computer and Information Technology, 2009.

[6] F. Yamaguchi, N. Golde, D. Arp, et al., “Modeling and Discovering Vulnerabilities with Code Property Graphs,” Proc. Security and Privacy, 2014, pp. 590-604.

[7] N. Nethercote, J. Seward, “Valgrind: a framework for heavyweight dynamic binary instrumentation,” ACM SIGPLAN Notices, vol. 42, no. 6, 2007, pp. 89-100.

[8] Y. Xu, “Vulnerability-based Model Checking of Security Vulnerabilities Mining Method,” Netinfo Security, 2011. (in Chinese)

[9] R. Telang, S. Wattal, “An empirical analysis of the impact of software vulnerability announcements on firm stock price,” IEEE Transactions on Software Engineering, vol. 33, no. 8, 2007, pp. 544-557.

[10] D. Moore, V. Paxson, S. Savage, C. Shannon, S. Staniford, N. Weaver, “Inside the slammer worm,” IEEE Security & Privacy, vol. 1, no. 4, 2003, pp. 33-39.

[11] S. Staniford, V. Paxson, N. Weaver, “How to Own the Internet in Your Spare Time,” Proc. USENIX Security Symposium, 2002, pp. 149-167.

[12] S. Bekrar, et al., “Finding software vulnerabilities by smart fuzzing,” Proc. Software Testing, Verification and Validation (ICST), IEEE Fourth International Conference, 2011, pp. 427-430.

[13] P. Mell, K. Scarfone, S. Romanosky, “A complete guide to the common vulnerability scoring system version 2.0,” Proc. FIRST-Forum of Incident Response and Security Teams, 2007, pp. 1-23.

[14] T. Wang, T. Wei, Z. Lin, W. Zou, “IntScope: Automatically Detecting Integer Overflow Vulnerability In X86 Binary Using Symbolic Executions,” Proc. 16th Annual Network and Distributed System Security Symposium, 2009.

[15] P. Kapur, V. S. Yadavali, A. Shrivastava, “A comparative study of vulnerability discovery modeling and software reliability growth modeling,” Proc. Futuristic Trends on Computational Analysis and Knowledge Management, 2015, pp. 246-251.

[16] J. Bau, et al., “State of the art: Automated blackbox web application vulnerability testing,” IEEE Symposium on Security and Privacy, vol. 41, no. 3, 2010, pp. 332-345.

[17] P. Li, B. Cui, “A comparative study on software vulnerability static analysis techniques and tools,” Proc. IEEE International Conference on Information Theory and Information Security, 2010, pp. 521-524.

[18] M. Zhivich, T. Leek, R. Lippmann, “Dynamic buffer overflow detection,” Workshop on the Evaluation of Software Defect Detection Tools, 2005.

[19] Z. Li, J. Zhang, X. Liao, J. Ma, “Survey of Software Vulnerability Detection Techniques,” Chinese Journal of Computers, vol. 38, no. 3, 2015, pp. 717-732. (in Chinese)

[20] P. Chen, et al., “Brick: A binary tool for run-time detecting and locating integer-based vulnerability,” Proc. the Fourth International Conference on Availability, Reliability and Security, 2009, pp. 208-215.

[21] J. Wilander, M. Kamkar, “A Comparison of Publicly Available Tools for Dynamic Buffer Overflow Prevention,” Proc. NDSS, 2003, pp. 45-50.

[22] P. E. Black, E. Fong, “Proceedings of Defining the State of the Art in Software Security Tools Workshop,” NIST Special Publication, vol. 500, 2005, pp. 264.

[23] E. Fong, V. Okun, “Web application scanners: definitions and functions,” Proc. Hawaii International Conference on System Sciences, 2007, pp. 280.

[24] O. Vadim, “Web application scanners: Definitions and functions,” Proc. Hawaii International Conference on System Sciences, 2007, pp. 280-280.

[25] J. Viega, “CLASP Reference Guide: Volume 1.1 Training Manual,” Secure Software, 2005.

[26] J. Chen, L. Zhu, Z. Xie, et al., “An Effective Long String Searching Algorithm towards Component Security Testing,” China Communications, vol. 13, no. 11, 2016, pp. 153-169.

[27] J. Chen, J. M. Chen, R. Huang, et al., “An approach of security testing for third-party component based on state mutation,” Security & Communication Networks, vol. 9, no. 15, 2016, pp. 2827-2842.

[28] “Benchmark-OWASP”, https://www.owasp.org/index.php/Benchmark#tab=Test_Cases, 2017.
