Retinal Image Segmentation Using Double-Scale Nonlinear Thresholding on Vessel Support Regions

Qingyong Li, Min Zheng, Feng Li, Jianzhu Wang, Yangli-ao Geng, and Haibo Jiang

Retinal vessel segmentation is a critical step in the diagnosis, screening, and treatment of cardiovascular and ophthalmologic diseases. Because retinal vessels often have tiny structures and blurred boundaries, and retinal images are frequently noisy, it is difficult to segment the vasculature correctly. In this paper, we propose a novel Double-scale Nonlinear Thresholding (DNT) method based on vessel support regions. First, Double-Scale Filtering (DSF) is applied to enhance the contrast between the foreground vasculature and the background. Second, we segment the fine and coarse vessels with the Adaptive Local Thresholding (ALT) and Fixed-Ratio Thresholding (FRT) methods, respectively. Finally, we obtain the binary segmentation by fusing the fine and coarse vessels. Experiments on the publicly available DRIVE and STARE datasets show the effectiveness of the proposed method for retinal vessel segmentation.

retinal vessel segmentation; double-scale filtering; adaptive local thresholding; vessel support regions

1 Introduction

Accurate detection and analysis of retinal blood vessels are widely used for the diagnosis, screening, and treatment of cardiovascular and ophthalmologic diseases, including diabetic retinopathy, hypertension, and arteriosclerosis [1-5]. Segmenting vessels from retinal images is difficult because of the inhomogeneous distribution of the gray-level profiles, the complicated structure of the vessels, the poor local contrast between foreground and background, and the image noise introduced during acquisition. In the past decades, several methods have been proposed for the segmentation of vessels in retinal images. In general, they can be divided into two groups: supervised and unsupervised methods. Supervised methods require prior labeling information to decide whether a pixel belongs to a vessel or not [6-11], while unsupervised methods segment the vessels without any prior labeling knowledge.

1.1 Supervised method

Supervised methods use ground truth data to classify vessels on the basis of given features. In essence, these methods learn rules for vessel extraction from a manually labeled training set in order to decide whether a pixel belongs to a vessel or not. The feature extraction and the classifier therefore determine the performance of these methods. For example, Staal et al. combined feature vectors extracted from image ridges with a k-nearest neighbor (KNN) classifier [6]. In [8], Ricci et al. used line operators and Support Vector Machine (SVM) classification with three features per pixel. In [9], Marin et al. used a Neural Network (NN) scheme for pixel classification and computed a 7-D vector composed of gray-level and moment invariants-based features for pixel representation. In [10], Fraz et al. introduced an AdaBoost classifier for vessel segmentation that uses a 9-D feature vector extracted from the green plane of the RGB color image without any preprocessing. In [11], Cheng et al. introduced a feature-based AdaBoost classifier for vessel segmentation that uses a 41-D feature vector at different spatial scales for each pixel. More recently, Li et al. [4] used a deep neural network to model the vessel map and obtained effective results. In general, supervised methods segment vessels effectively and efficiently. However, classifier-based vessel segmentation remains difficult to apply in clinical practice, because it requires a large number of fully segmented retinal images as ground truth.

1.2 Unsupervised method

Unsupervised methods, also known as rule-based methods, can be further classified into matched filtering, vessel tracking, morphological processing, and local adaptive thresholding approaches.

Among the various retinal vessel segmentation methods, classical matched filtering is simple yet effective and widely used. The matched filter concept, first presented by Chaudhuri et al. [12], detects piecewise linear segments of blood vessels in retinal images. This technique convolves the retinal image with a 2-D kernel, using 12 different templates to search for vessel segments along all possible directions. In [13], Hoover et al. proposed an algorithm that probes the matched filter response while iteratively decreasing the threshold, and complements local vessel attributes with region-based attributes of the network structure. The matched filtering method has since been improved in several respects [14,15].

The vessel tracking method is another representative unsupervised approach. For example, in [16], Zou et al. presented a method based on analyzing consecutive scanlines. The consecutive scanline fitting strategy gives accurate estimates of the vessel direction, which can then be used in vessel tracking. More recently, Yin et al. [17] proposed a technique based on a probabilistic tracking method. During the tracking process, vessel edge points are detected iteratively using local grey-level statistics and continuity properties of vessels.

The morphological processing method is widely used in vessel segmentation because retinal vessels have obvious morphological characteristics such as linearity and inflection. In [18], Mendonca et al. proposed an automated method for the segmentation of the vascular network in retinal images. The method consists of three main processing phases. First, a top-hat transform with structuring elements of variable size enhances vessels of different widths. Second, morphological reconstruction is applied to obtain the initial vascular network. Finally, the segmentation is completed by combining the extracted centerlines. In [19], the traditional retinal blood vessel segmentation method was improved with a linear combination of line detectors at varying scales, which avoids the issues caused by each individual line detector. Nevertheless, some errors remain in the extracted retinal blood vessels. In [20], the authors first applied a multi-scale hierarchical decomposition for noise removal and vessel localization, which is very effective on normalized enhanced images. The binary map of the vasculature was then obtained by locally adaptive thresholding. This method estimates the noise effectively and achieves high segmentation precision on retinal images with lesions.

The local adaptive thresholding method is also a well-known unsupervised approach. For example, in [21], Jiang and Mojon proposed a general framework of adaptive local thresholding using a verification-based multi-threshold probing scheme. This approach is regarded as knowledge-guided adaptive thresholding, in contrast to most algorithms known from the literature.

To address these challenges in retinal vessel segmentation, we present the Double-scale Nonlinear Thresholding (DNT) algorithm, which is based on vessel support regions. The segmentation task is recast as a cross-modality problem. The first modality is fine vessel segmentation (FVS), and the second is coarse vessel segmentation (CVS). The final segmentation result is obtained by fusing the fine and coarse vessels. The main contributions of this study are:

(1) We propose a novel Double-Scale Filtering (DSF) method to respectively improve the contrast of fine and coarse vessels.

(2) For fine vessels, we utilize a new Adaptive Local Thresholding (ALT) method on vessel support regions to achieve the binary segmentation of vessels.

(3) For coarse vessels, we present a Fixed-Ratio Thresholding (FRT) method to perform the segmentation.

This paper is organized as follows. Section 2 briefly describes the overview of the proposed Double-scale Nonlinear Thresholding (DNT) method. The proposed method is presented in Section 3. In Section 4, the performance metrics of the algorithm are assessed by experiments. Finally, discussion and conclusion are given in Section 5.

2 Overview

In this paper, we propose a novel method, namely Double-scale Nonlinear Thresholding (DNT). It can be schematically described in Fig.1, where the core segmentation algorithm consists of three main stages.

2.1 Double-scale filtering (DSF)

Blood vessels have poor local contrast, and the Gaussian matched filter is commonly used to improve the contrast between the vessels and the background. Gaussian matched filters at different scales enhance fine and coarse vessels differently. Accordingly, to obtain the Fine Matched Filtering Response (FMFR) and the Coarse Matched Filtering Response (CMFR), we design two Gaussian functions, a fine-scale one and a coarse-scale one, which are separately convolved with the green channel of the retinal image.

2.2 Adaptive local thresholding (ALT)

The Gaussian matched filter enhances the contrast of the FMFR image while suppressing the macular and lesion regions. However, the gray-level distribution of FMFR images remains inhomogeneous, and many gray levels are shared by the foreground and the background. Consequently, a single global threshold cannot separate the blood vessels from the background. A Vessel Support Region (VSR) is a rectangle that contains a vessel segment and can be detected automatically. VSRs usually differ in scale. Large-scale VSRs have bimodal histograms, so their vessels are separated from the background by the Otsu algorithm [22]. In contrast, small-scale VSRs have unimodal histograms, so we use the Swt algorithm [23] to segment their vessels. The ALT phase contains three main steps. First, all VSRs in the FMFR image are automatically detected by the VSR detector. Second, we propose a Combination of Otsu and Swt (COS) method, which binarizes large and small VSRs by Otsu and Swt, respectively. Finally, the binarized small and large VSRs are fused into one binary image, and all pixels that do not belong to any VSR are set to background. In this way, the Fine Vessel Segmentation (FVS) is obtained.

2.3 Fusion of fine and coarse vessels (FFC)

This phase consists of two stages. First, to obtain the Coarse Vessel Segmentation (CVS), we use a fixed-ratio thresholding method to segment the CMFR image. Second, the FVS and the CVS are fused by the logical OR operator into a single binary image that contains both the fine and the coarse vessels.

3 Double-scale Nonlinear Thresholding (DNT)

Double-scale Nonlinear Thresholding (DNT) consists of three main phases: Double-Scale Filtering (DSF), Adaptive Local Thresholding (ALT), and Fusion of Fine and Coarse vessels (FFC). These phases are described as follows.

3.1 Double-scale filtering (DSF)

A color retinal image contains three planes: red, green, and blue. The green channel exhibits the best contrast between the vessels and the background, while the red and blue channels tend to be noisier. Thus, we use the green channel for further processing in vessel segmentation.

In retinal images, the pixels at the center of a vessel appear darker than their neighborhood, and the gray-level profile of a blood vessel can be approximated by a Gaussian-shaped curve. The Gaussian matched filter is therefore generally used to enhance the contrast of retinal images.

Suppose that the vessels can be considered as piecewise linear segments. For our initial calculations, we assume that all blood vessels in the image have equal width 2δ and length L. A Gaussian function is then used as a model for the gray-level profile of the vessel cross section, and the corresponding kernel can be expressed as

$$K(x,y) = -\exp\left(-\frac{x^{2}}{2\delta^{2}}\right), \quad |y| \le \frac{L}{2} \tag{1}$$

where K(x,y) is the kernel function, δ represents the scale of the filter, and L is the length of the segment for which the vessel is assumed to have a fixed orientation. Here the direction of the vessel is assumed to be aligned along the y-axis. In our implementation, L = 5.

Because blood vessels can run in arbitrary directions, we construct a set of 12 templates to search for vessel segments along all possible directions. The 12 templates are applied to a fundus image, and only the maximum of their responses is retained at each pixel. To facilitate subsequent processing, the matched filtering response image is normalized and quantized to 256 gray levels.
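The template construction and maximum-response step can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the 12 templates are assumed to be evenly spaced over 180°, the Gaussian is truncated at 3δ, the kernel mean is subtracted so that flat regions give zero response, and the Gaussian is negated so that dark vessels give a positive response. All function names are hypothetical.

```python
import math

def matched_filter_kernel(delta, length, theta):
    """Gaussian matched-filter template for a vessel rotated by theta (radians)."""
    half = int(math.ceil(math.hypot(3 * delta, length / 2)))  # fits any rotation
    kernel, inside = [], []
    for y in range(-half, half + 1):
        row = []
        for x in range(-half, half + 1):
            # (u, v): coordinates across and along the rotated vessel.
            u = x * math.cos(theta) + y * math.sin(theta)
            v = -x * math.sin(theta) + y * math.cos(theta)
            ok = abs(u) <= 3 * delta and abs(v) <= length / 2
            inside.append(ok)
            row.append(-math.exp(-u * u / (2 * delta * delta)) if ok else 0.0)
        kernel.append(row)
    # Assumption: make the template zero-mean over its support.
    mean = sum(sum(row) for row in kernel) / sum(inside)
    size = 2 * half + 1
    for i, ok in enumerate(inside):
        if ok:
            kernel[i // size][i % size] -= mean
    return kernel

def filter_bank(delta, length=5, n_templates=12):
    """Templates at n_templates evenly spaced orientations in [0, pi)."""
    return [matched_filter_kernel(delta, length, k * math.pi / n_templates)
            for k in range(n_templates)]

def max_response(image, bank):
    """Correlate the image with every template and keep the per-pixel maximum."""
    h, w = len(image), len(image[0])
    half = len(bank[0]) // 2
    out = [[0.0] * w for _ in range(h)]  # border pixels are left at 0
    for r in range(half, h - half):
        for c in range(half, w - half):
            out[r][c] = max(
                sum(k[dr + half][dc + half] * image[r + dr][c + dc]
                    for dr in range(-half, half + 1)
                    for dc in range(-half, half + 1))
                for k in bank)
    return out
```

Calling `filter_bank(1.3)` and `filter_bank(2.3)` would give the fine and coarse template sets for the scales used later in the paper. The nested loops are written for clarity rather than speed.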

Retinal images usually contain both fine and coarse vessels. We observe that the Fine Matched Filter (FMF) responds well to fine vessels but erodes coarse vessels. Conversely, the Coarse Matched Filter (CMF) responds well to coarse vessels but blurs fine vessels. Fig.2 illustrates the matched filter responses for different values of δ.

Fig.2 The pre-processing of retinal images: (a) an example of color image, (b) the gray image of green channel, (c) fine MFR image with δ=1.3 and L=5, and (d) coarse MFR image with δ=2.3 and L=5.

In this step, we use Double-Scale Filtering (DSF) for pre-processing. We apply the Fine Matched Filter (FMF) to enhance the fine vessels and the Coarse Matched Filter (CMF) to enhance the coarse vessels.

3.2 Adaptive local thresholding (ALT) on vessel support regions

The adaptive local thresholding method finds an optimal threshold for each local region and segments FMFR images non-linearly, in order to overcome the linear non-separability problem faced by global thresholding methods. This section presents a new ALT algorithm based on vessel support regions, the well-known Otsu thresholding method, and the Swt algorithm. ALT first detects the VSRs in the gradient space of FMFR images using a vessel segment detector. We then apply a Combination of Otsu and Swt (COS) method to binarize the large and small VSRs. Finally, the small and large VSRs are fused into one binary image.

3.2.1 VSR detection

A VSR denotes a locally straight vessel segment in a retinal image. Generally, a VSR contains a line contour where the grey level changes notably from dark to light or the opposite. Since the gradient captures such grey-level changes, the gradient of the FMFR image is used to detect VSRs. The VSR detector starts by computing the gradient of each pixel in an FMFR image. The image is then segmented into connected regions of pixels that share the same gradient angle up to a tolerance τ. Such connected regions are denoted as vessel support regions. Each VSR is a candidate for a straight vessel segment and is associated with a rectangle.

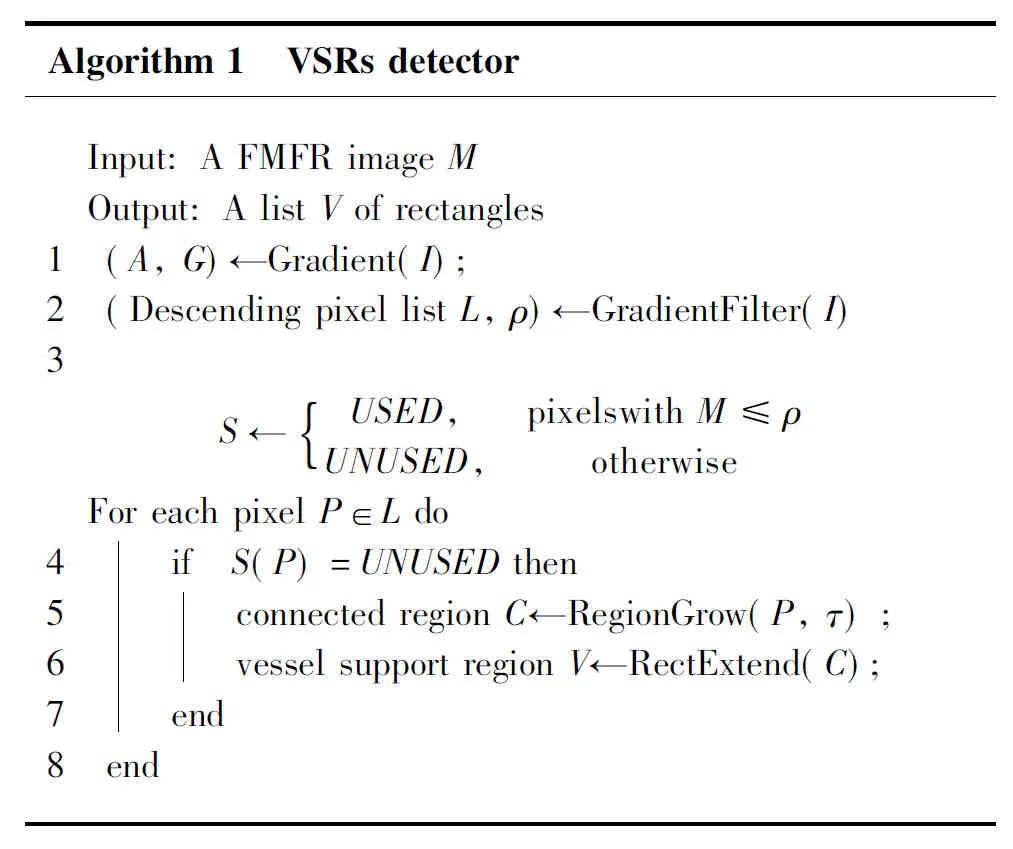

Algorithm 1 VSRs detector
Input: An FMFR image M
Output: A list V of rectangles
(A, G) ← Gradient(M);
(Descending pixel list L, ρ) ← GradientFilter(M);
S ← USED for pixels with G ≤ ρ, UNUSED otherwise;
for each pixel P ∈ L do
    if S(P) = UNUSED then
        connected region C ← RegionGrow(P, τ);
        vessel support region V ← RectExtend(C);
    end
end

The VSR detector takes an FMFR image as input and returns a list of rectangles, each of which contains a vessel segment. The algorithm includes three main steps: gradient computing and filtering, region growing, and rectangle extension. Algorithm 1 gives a complete description in pseudo-code.

(1) Gradient computing and filtering

Given an FMFR image M and a pixel P at coordinate (x,y), its gradient is computed as follows:

(2)

(3)

where M(x,y) refers to the grey value of the pixel P(x,y). The gradient angle is calculated as

(4)

and the gradient magnitude is computed as

(5)

Generally, pixels with high gradient magnitude correspond to edges or noise. In a vessel segment, the edge pixels usually have the highest gradient magnitude, so it is a good choice to use such pixels as seed points when searching for vessel segments.
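As an illustration of this step, the sketch below computes a gradient angle and magnitude for every pixel. It is only one plausible instance: it assumes 2×2 finite differences, `atan2` for the angle, and the Euclidean norm for the magnitude, which may differ from the exact forms of Eqs. (2) to (5). The function name `gradient` is hypothetical.

```python
import math

def gradient(M):
    """Return (A, G): gradient angle and magnitude for a gray image M[y][x]."""
    h, w = len(M), len(M[0])
    A = [[0.0] * w for _ in range(h)]
    G = [[0.0] * w for _ in range(h)]
    # The last row and column have no 2x2 neighborhood and stay at zero.
    for y in range(h - 1):
        for x in range(w - 1):
            # Assumed 2x2 finite differences along x and y.
            gx = (M[y][x + 1] + M[y + 1][x + 1] - M[y][x] - M[y + 1][x]) / 2.0
            gy = (M[y + 1][x] + M[y + 1][x + 1] - M[y][x] - M[y][x + 1]) / 2.0
            A[y][x] = math.atan2(gy, gx)   # angle in (-pi, pi]
            G[y][x] = math.hypot(gx, gy)   # magnitude
    return A, G
```

With this convention, a vertical edge that brightens from left to right has an angle of 0 and a magnitude equal to the grey-level step.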

A bucket sorting algorithm is applied to obtain a descending bin sequence, and each bin contains pixels with similar gradient magnitude. The VSR detector first uses the pixels in the largest bin as seed pixels, and then it takes seed pixels from the second bin, and so on until it uses up all considered bins.

We observe that pixels with small gradient magnitude often correspond to the background, so they should not be treated as seed pixels. In addition, the proportion of vessel pixels in a retinal image is far lower than that of the background. For example, the average proportion of vessel pixels in the DRIVE and STARE datasets is 8.43% and 7.60%, respectively. The descending bin sequence is therefore truncated by a threshold ρ, so that about the top 10% of pixels are considered as seed points. Our experiments verify that this truncation strategy notably improves computing efficiency and does not degrade segmentation performance.
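The bucket sorting and truncation described above can be sketched as follows. The number of bins (1024) and the way ρ is taken as the lower edge of the last bin kept are assumptions made for this example; the function name `seed_pixels` is hypothetical.

```python
def seed_pixels(G, n_bins=1024, keep_ratio=0.10):
    """Return (seeds, rho): seed pixels by descending magnitude bin, and the cut-off."""
    h, w = len(G), len(G[0])
    g_max = max(max(row) for row in G)
    if g_max == 0:
        return [], 0.0
    # Bucket sort: each bin holds pixels with similar gradient magnitude.
    bins = [[] for _ in range(n_bins)]
    for y in range(h):
        for x in range(w):
            b = min(int(G[y][x] / g_max * n_bins), n_bins - 1)
            bins[b].append((y, x))
    # Walk the bins from largest to smallest until about keep_ratio of the
    # pixels have been collected, then truncate the sequence.
    budget = keep_ratio * h * w
    seeds, rho = [], g_max
    for b in range(n_bins - 1, -1, -1):
        if len(seeds) >= budget:
            break
        seeds.extend(bins[b])
        rho = b * g_max / n_bins  # lower edge of the last bin kept
    return seeds, rho
```

Because whole bins are kept, the number of seeds can slightly exceed the requested ratio; a finer binning reduces this overshoot.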

(2) Region growing

A region growing algorithm is applied to form a VSR starting from an unused seed pixel. Note that the status of all pixels whose gradient magnitude is greater than ρ is set to UNUSED, and the other pixels are set to USED. The status is saved in an auxiliary matrix S, which has the same size as the FMFR image M. First, a seed pixel P is selected and a region C is created whose angle A_C is initialized with A(P). Recursively, all unused neighbors of the pixels included in C are checked, and the neighbors whose gradient angle is equal to A_C up to a tolerance τ are added to C. Note that an 8-connected neighborhood is used. When a pixel is added to C, the region angle value is updated to

$$A_C = \arctan\left(\frac{\sum_{k}\sin A(P_k)}{\sum_{k}\cos A(P_k)}\right) \tag{6}$$

where k refers to the index of pixels added to C. This process is repeated until no other pixel can be added to C.
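A minimal sketch of the region growing step is given below. It assumes that the region angle is updated as the arctangent of the summed sines over the summed cosines of the member angles, as is common in line segment detectors, and it replaces the recursion with an explicit queue. The names `angle_diff` and `region_grow` are hypothetical, and the tolerance τ is supplied by the caller.

```python
import math

def angle_diff(a, b):
    """Absolute difference between two angles, wrapped to [0, pi]."""
    d = abs(a - b) % (2 * math.pi)
    return min(d, 2 * math.pi - d)

def region_grow(seed, A, S, tau):
    """Grow a region from seed; S[y][x] is True when a pixel is USED."""
    h, w = len(A), len(A[0])
    region = [seed]
    S[seed[0]][seed[1]] = True
    region_angle = A[seed[0]][seed[1]]
    sum_sin, sum_cos = math.sin(region_angle), math.cos(region_angle)
    i = 0
    while i < len(region):  # the list doubles as a FIFO queue
        y, x = region[i]
        i += 1
        for dy in (-1, 0, 1):  # 8-connected neighborhood
            for dx in (-1, 0, 1):
                ny, nx = y + dy, x + dx
                if not (0 <= ny < h and 0 <= nx < w) or S[ny][nx]:
                    continue
                if angle_diff(A[ny][nx], region_angle) <= tau:
                    S[ny][nx] = True
                    region.append((ny, nx))
                    # Update the region angle with the newly added pixel.
                    sum_sin += math.sin(A[ny][nx])
                    sum_cos += math.cos(A[ny][nx])
                    region_angle = math.atan2(sum_sin, sum_cos)
    return region, region_angle
```

Before calling `region_grow`, the matrix `S` would be initialized so that pixels with gradient magnitude at or below ρ are already marked as used.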

(3) Rectangle extension

After the region growing algorithm collects a set of pixels with similar gradient angles, the rectangle R associated with C is computed and extended. The center O of the rectangle is set to the center of mass of C, and the main direction of R is set to the first inertia axis of C, as applied in [23]. In addition, the width ω and the length h of R are set to the smallest values that make the rectangle cover the full connected region C. In other words, R is the minimum circumscribed rectangle of C.

The circumscribed rectangle R can cover most edge pixels of a vessel segment, but it needs to be extended for two reasons. First, a vessel segment is usually divided into two connected regions, because the gradient directions of the pixels on opposite sides of the vessel are opposite. Second, some intersections of vessels are missed because of irregularities in their gradient magnitude or angle. Fig.3(a) shows some examples of such rectangles.

Fig.3 Illustration of rectangle extension: (a) rectangle obtained by region growing algorithm, (b) rectangle extension on both the first and the second inertia axes, and (c) extended rectangle.

The rectangle R is extended by ω/2 pixels along both the first and the second inertia axes, producing a vessel support region V, as demonstrated in Fig.3(b) and Fig.3(c). This procedure is simple but effective in covering all pixels of a vessel segment. Note that the pixels in the extended region are marked as USED since they are included in a VSR.
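The rectangle computation and extension can be sketched as follows. This is an interpretation rather than the authors' code: the first inertia axis is estimated from second-order central moments, the rectangle is kept symmetric about the center of mass, each pixel is given unit size, and the extension is read as adding ω/2 on each side along both axes. The function name `support_rectangle` is hypothetical.

```python
import math

def support_rectangle(region):
    """Return (center, theta, length, width) of the extended rectangle.

    region is a list of (y, x) pixels; theta is the direction of the first
    inertia axis in radians, measured from the x-axis.
    """
    n = len(region)
    cy = sum(p[0] for p in region) / n
    cx = sum(p[1] for p in region) / n
    # Second-order central moments of the pixel set.
    mu20 = sum((p[1] - cx) ** 2 for p in region)
    mu02 = sum((p[0] - cy) ** 2 for p in region)
    mu11 = sum((p[1] - cx) * (p[0] - cy) for p in region)
    theta = 0.5 * math.atan2(2 * mu11, mu20 - mu02)
    ux, uy = math.cos(theta), math.sin(theta)
    # Project every pixel onto the main axis and onto its normal.
    along = [(p[1] - cx) * ux + (p[0] - cy) * uy for p in region]
    across = [-(p[1] - cx) * uy + (p[0] - cy) * ux for p in region]
    # Smallest centered rectangle covering the region (unit-sized pixels).
    length = 2 * max(abs(a) for a in along) + 1
    width = 2 * max(abs(a) for a in across) + 1
    # Assumed reading of the extension: width/2 on each side of both axes.
    ext = width / 2.0
    return (cy, cx), theta, length + 2 * ext, width + 2 * ext
```

For a horizontal run of five pixels, the unextended rectangle is 5 by 1 and the extended one is 6 by 2 under these assumptions.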

3.2.2 VSR binarization

A set of VSRs in an FMFR image is detected by the VSR detection method above. We observe that a VSR whose scale is greater than 2s exhibits a bimodal histogram, whereas a VSR whose scale is less than 2s exhibits a unimodal histogram. Fig.4 shows the gray-level histograms of several VSRs. In this step, we propose a Combination of Otsu and Swt (COS) method to binarize the large and small VSRs. For large-scale VSRs, we segment the vessels from the background by the Otsu algorithm. For small-scale VSRs, we use the Swt algorithm. Subsequently, the small and large VSRs are fused into one binary image. The process of VSR binarization is shown in Fig.5.
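The dispatch between the two thresholding rules can be sketched as below. Otsu's method is implemented in its standard form (maximizing the between-class variance). The text does not specify the Swt algorithm, so it is represented here only by a caller-supplied function; `scale`, `scale_limit`, and the function names are hypothetical, and vessels are assumed to be the brighter class in the filter response.

```python
def otsu_threshold(pixels, levels=256):
    """Gray level t that maximizes the between-class variance (classes <= t, > t)."""
    hist = [0] * levels
    for v in pixels:
        hist[v] += 1
    total = len(pixels)
    sum_all = sum(i * n for i, n in enumerate(hist))
    w0, sum0 = 0, 0
    best_t, best_var = 0, -1.0
    for t in range(levels):
        w0 += hist[t]
        sum0 += t * hist[t]
        w1 = total - w0
        if w0 == 0:
            continue
        if w1 == 0:
            break
        m0, m1 = sum0 / w0, (sum_all - sum0) / w1
        var_between = w0 * w1 * (m0 - m1) ** 2
        if var_between > best_var:
            best_t, best_var = t, var_between
    return best_t

def binarize_vsr(pixels, scale, scale_limit, small_vsr_threshold):
    """Binarize one VSR: Otsu for large regions, a supplied rule for small ones.

    small_vsr_threshold is a placeholder for the threshold rule used on
    unimodal (small) VSRs; it maps a list of gray values to a threshold.
    """
    if scale > scale_limit:
        t = otsu_threshold(pixels)
    else:
        t = small_vsr_threshold(pixels)
    return [1 if v > t else 0 for v in pixels]
```

Passing the small-region rule as a function keeps the sketch independent of any particular choice for the unimodal case.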

3.3 Fusion of fine and coarse vessels (FFC)

Fine vessels can be segmented by the ALT algorithm. For coarse vessels, however, ALT can only segment a rough skeleton and misses some pixels. In our method, we first apply a novel Fixed-Ratio Thresholding (FRT) method to the CMFR image to obtain the Coarse Vessel Segmentation (CVS). We then use the logical OR operator to fuse the FVS and the CVS into a single binary image, which contains both the fine and the coarse vessels. The fusion of fine and coarse vessels is shown in Fig.6.

The Fixed-Ratio Thresholding (FRT) method consists of three steps: first, sort the pixels of the CMFR image in descending order using the bucket sorting algorithm; second, calculate the optimal threshold T_r; third, binarize the CMFR image according to T_r. In retinal images, the gray-level distributions of the linear vasculature and the background are clearly different, and the proportion of foreground pixels is usually lower than that of the background. In this method, the threshold T_r is given by

(7)

where r represents the expected ratio of the vasculature, Total is the number of all pixels, and Num is the frequency function. FRT produces a binary output. Fig.6(b) shows the segmentation result of FRT.
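One way to realize fixed-ratio thresholding and the subsequent fusion is sketched below. It assumes that T_r is the largest gray level at which the cumulative count of brighter-or-equal pixels reaches r times the total, which is an interpretation of Eq. (7) rather than a transcription of it. The function names are hypothetical.

```python
def fixed_ratio_threshold(image, r, levels=256):
    """Largest gray level t such that at least r * Total pixels have value >= t."""
    hist = [0] * levels  # Num(i): number of pixels with gray level i
    for row in image:
        for v in row:
            hist[v] += 1
    total = sum(hist)
    count = 0
    for t in range(levels - 1, -1, -1):  # walk from bright to dark
        count += hist[t]
        if count >= r * total:
            return t
    return 0

def binarize(image, t):
    """Mark pixels with value >= t as vessel (1), the rest as background (0)."""
    return [[1 if v >= t else 0 for v in row] for row in image]

def fuse(fvs, cvs):
    """Logical OR of the fine and coarse binary segmentations."""
    return [[a | b for a, b in zip(row_f, row_c)] for row_f, row_c in zip(fvs, cvs)]
```

A coarse segmentation would then be obtained as `binarize(cmfr, fixed_ratio_threshold(cmfr, r))` and combined with the fine segmentation through `fuse`, where `cmfr` stands for the quantized coarse response image.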

Fig.4 The gray histogram of VSRs: (a) a MFR image and four randomly selected VSRs that are marked with red rectangles, (b) the histogram of VSR1, (c) the histogram of VSR2, (d) the histogram of VSR3, and (e) the histogram of VSR4.

Fig.5 The process of VSR binarization: (a) the green channel of retinal image, (b) FMFR image, and (c) segmentation result of VSR binarization.

Fig.6 Fusion of fine and coarse vessels: (a) a segmentation result of ALT-VSR, (b) segmentation result of Fixed-Ratio Thresholding, and (c) the final segmentation result.

4 Experiment Results and Analysis

In this section, we evaluate and compare the performance of our algorithm against previous algorithms on two representative benchmark datasets: the DRIVE dataset and the STARE dataset.

4.1 Experiment setup

4.1.1 Datasets

The DRIVE dataset [6] contains a total of 40 color retinal images, which are divided into a training set and a test set of 20 images each. Each image was captured at 565×584 pixels. For each image in DRIVE, the manually segmented vessel tree is provided together with a binary mask for the field of view (FOV) area.

The STARE dataset [13] contains 20 retinal images, 10 of which are from healthy ocular fundi and the other 10 from unhealthy ones. Each image has a size of 700×605 pixels.

Two manual segmentations, made by two independent human observers, are available for each image of the two datasets. The manual segmentations by the first human observer are used as the ground truth, as in [10,4], and the human observer performance is measured using the manual segmentations by the second human observer. The binary FOV mask for each image in DRIVE is available, and the FOV binary masks for STARE are manually created as described in [7].

4.1.2 Evaluation criterion

Vessel segmentation can be regarded as a binary classification problem, in which each pixel of a retinal image has one of two class labels: vessel or non-vessel. Comparing the automatically segmented results with the manual ground truth, we can observe four types of results: true positives (TP) refer to the vessel pixels that are predicted as vessels, false negatives (FN) refer to the vessel pixels that are predicted as non-vessels, true negatives (TN) refer to the non-vessel pixels that are predicted as non-vessels, and false positives (FP) denote the non-vessel pixels that are predicted as vessels.

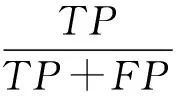

We use four criteria to compare the performance of the proposed method with other state-of-the-art methods, i.e., the sensitivity (Se), specificity (Sp), accuracy (Acc), and F-measure (F). These measures are computed as

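$$Se = \frac{TP}{TP + FN} \tag{8}$$

$$Sp = \frac{TN}{TN + FP} \tag{9}$$

$$Acc = \frac{TP + TN}{TP + FN + TN + FP} \tag{10}$$

$$F = \frac{2\,TP}{2\,TP + FP + FN} \tag{11}$$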
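For concreteness, the four measures can be computed from a predicted and a ground-truth binary map as in the sketch below, using the standard definitions of sensitivity, specificity, accuracy, and F-measure. Restricting the count to an optional FOV mask is an assumption of this example, as is the function name `segmentation_metrics`; the sketch also assumes that both classes occur, so no denominator is zero.

```python
def segmentation_metrics(pred, truth, mask=None):
    """Return (Se, Sp, Acc, F) for binary maps given as lists of rows of 0/1."""
    tp = fp = tn = fn = 0
    for y, row in enumerate(truth):
        for x, t in enumerate(row):
            if mask is not None and not mask[y][x]:
                continue  # skip pixels outside the field of view
            p = pred[y][x]
            if p and t:
                tp += 1
            elif p and not t:
                fp += 1
            elif not p and t:
                fn += 1
            else:
                tn += 1
    se = tp / (tp + fn)
    sp = tn / (tn + fp)
    acc = (tp + tn) / (tp + fn + tn + fp)
    f = 2 * tp / (2 * tp + fp + fn)
    return se, sp, acc, f
```

The F-measure written this way is equivalent to the harmonic mean of precision and sensitivity.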

4.2 Evaluation metric

In our experiment, Double-Scale Filtering (DSF) is applied for pre-processing. Table 1 summarizes the results of the proposed DSF method in comparison with the uni-scale methods, i.e., Fine Matched Filtering (FMF) and Coarse Matched Filtering (CMF), on both the DRIVE and STARE datasets. From this table, we observe that DSF achieves higher Se, Acc, and F than either uni-scale method on both datasets, at the cost of a slightly lower Sp.

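Table 1 DSF performance comparison with FMF and CMF methods on DRIVE and STARE datasets.

| Dataset | Method | Se | Sp | Acc | F-measure |
|---|---|---|---|---|---|
| DRIVE | FMF | 0.4851 | 0.9900 | 0.9254 | 0.6221 |
| DRIVE | CMF | 0.6531 | 0.9806 | 0.9386 | 0.7290 |
| DRIVE | DSF | 0.6924 | 0.9783 | 0.9416 | 0.7500 |
| STARE | FMF | 0.5444 | 0.9835 | 0.9389 | 0.6377 |
| STARE | CMF | 0.6074 | 0.9830 | 0.9434 | 0.6855 |
| STARE | DSF | 0.7126 | 0.9746 | 0.9474 | 0.7332 |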

The effectiveness of the proposed DSF strategy is validated through the experiments on the two public benchmark datasets above. We next determine the optimal scale δ of DSF to improve the contrast between the vessels and the background.

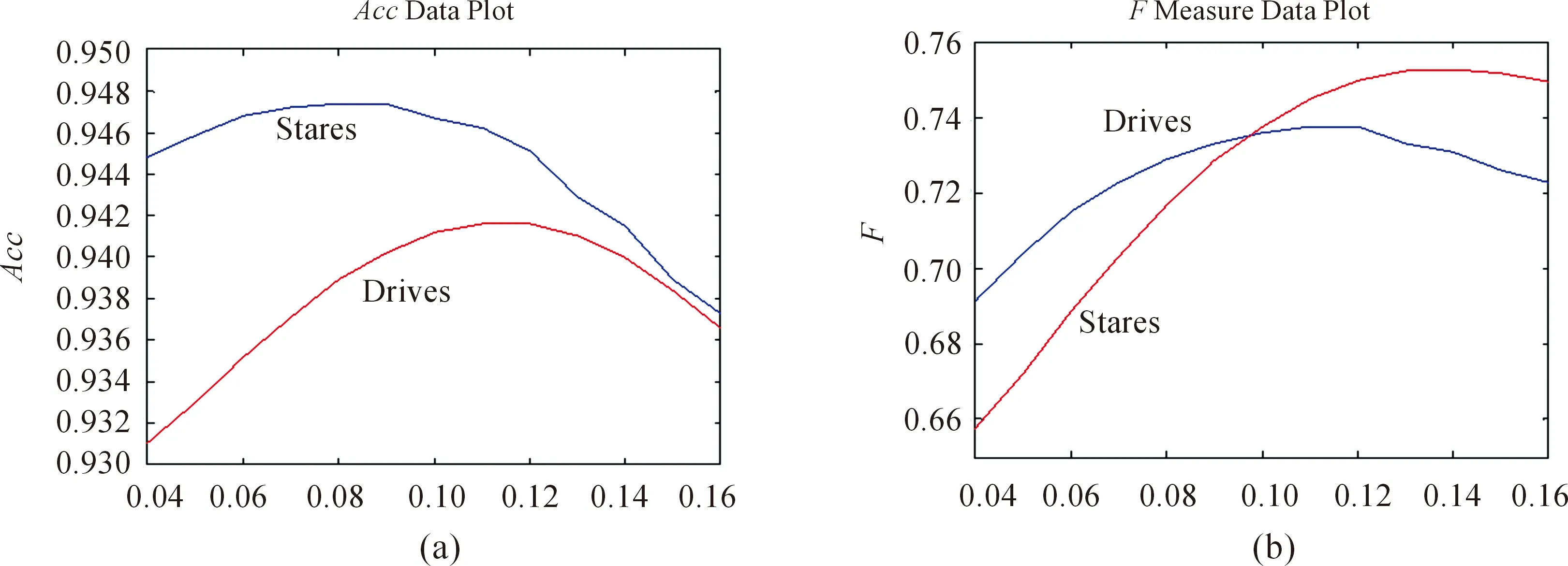

For the fine-scale and coarse-scale Gaussian functions, we set δ = δf and δ = δc, respectively, where δ represents the scale of the Gaussian matched filter. The segmentation performance of DNT as a function of δf and δc on the DRIVE and STARE datasets is shown in Fig.7 and Fig.8, respectively. We see that when δf is fixed, Acc and F first increase and then decrease as δc rises, reaching their maximum at δc = 2.3. Similarly, when δc is fixed, Acc and F peak at δf = 1.3. Therefore, in our experiment, we set δc = 2.3 and δf = 1.3.

Fig.7 The segmentation performance of DNT as a function of δf and δc on DRIVE dataset: (a) result of Acc and (b) result of F-measure.

Fig.8 The segmentation performance of DNT as a function of δf and δc on STARE dataset: (a) result of Acc and (b) result of F-measure.

In addition, in the ALT phase, we use the Combination of Otsu and Swt (COS) algorithm for VSR binarization. The results of the proposed COS method are compared with those of the Otsu and Swt methods alone in Table 2 for the DRIVE and STARE datasets. COS achieves the highest Acc and F on both datasets, while Swt alone gives the highest Se and Otsu alone the highest Sp.

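Table 2 COS performance comparison with Otsu and Swt methods on DRIVE and STARE datasets.

| Dataset | Method | Se | Sp | Acc | F-measure |
|---|---|---|---|---|---|
| DRIVE | COS | 0.6924 | 0.9783 | 0.9416 | 0.7500 |
| DRIVE | Otsu | 0.6819 | 0.9794 | 0.9412 | 0.7460 |
| DRIVE | Swt | 0.7010 | 0.9719 | 0.9371 | 0.7383 |
| STARE | COS | 0.7126 | 0.9746 | 0.9475 | 0.7332 |
| STARE | Otsu | 0.6725 | 0.9793 | 0.9473 | 0.7208 |
| STARE | Swt | 0.7314 | 0.9675 | 0.9427 | 0.7232 |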

For the coarse vessels, we apply the FRT method. Fig.9 shows the segmentation performance of DNT as a function of r. Both Acc and F first increase and then decrease as r increases. On both the DRIVE and STARE datasets, Acc reaches its peak at r = 0.12, and F follows a similar trend.

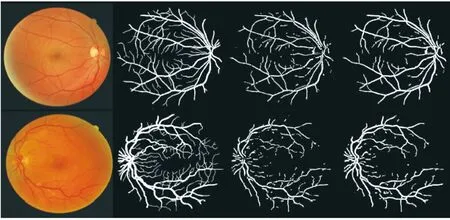

Fig.10 and Fig.11 show the final segmentation results for the DRIVE and STARE datasets.

4.3 Comparison with the state-of-the-art

Table 3 and Table 4 list the quantitative evaluation of our approach along with the results reported in previous studies for the DRIVE and STARE datasets. From these tables, we see that the proposed method outperforms previous state-of-the-art unsupervised solutions. Compared with supervised methods, our algorithm does not require a manually labeled training set, which makes it more practical for clinical use.

Fig.9 The segmentation performance of DNT as a function of r.

Fig.10 Segmentation results for the DRIVE dataset. (The first four columns are the retinal images, manual segmentation, ALT segmentation, and DNT segmentation. The first row has the best accuracy, and the second row has the worst accuracy.)

Fig.11 Segmentation results for the STARE dataset. (The first through fourth columns are the retinal images, manual segmentation, ALT segmentation, and DNT segmentation. The first row has the best accuracy, and the second row has the worst accuracy.)

Table 3 Performance comparison with the state-of-the-art methods on the DRIVE dataset.

Table 4 Performance comparison with the state-of-the-art methods on the STARE dataset.

5 Conclusion

We have presented a Double-scale Nonlinear Thresholding (DNT) method based on vessel support regions to segment vessels from retinal images. First, we use the Double-Scale Filtering method to enhance the contrast between the foreground vasculature and the background. Second, we segment the fine and coarse vessels by the proposed Adaptive Local Thresholding (ALT) and Fixed-Ratio Thresholding (FRT) methods, respectively. Third, the final binary segmentation is obtained by fusing the fine and coarse vessels. As a result, our method compares favorably with other state-of-the-art unsupervised methods on the DRIVE and STARE datasets.

Acknowledgment

This work is partly supported by the Fundamental Research Funds for the Central Universities (2014JBZ003, 2016JBZ006), Beijing Natural Science Foundation (No. J160004) and Shanghai Research Program (No. 17511102900).

[1]R.Pires, H.F.Jelinek, J.Wainer, S.Goldenstein, E.Valle, and A.Rocha, Assessing the need for referral in automatic diabetic retinopathy detection,IEEETransactionsonBiomedicalEngineering,vol.60,no.12,pp.3391-3398, 2013.

[2]R.Welikala, M.Fraz, T.Williamson, and S.Barman, The automated detection of proliferative diabetic retinopathy using dual ensemble classification,InternationalJournalofDiagnosticImaging,vol.2,no.2,pp.72, 2015.

[3]Y.Zhao, L.Rada, K.Chen, S.P.Harding, and Y.Zheng, Automated vessel segmentation using infinite perimeter active contour model with hybrid region information with application to retinal images,IEEEtransactionsonmedicalimaging,vol.34,no.9,pp.1797-1807, 2015.

[4]Q.Li, B.Feng, L.Xie, P.Liang, H.Zhang, and T.Wang, A cross-modality learning approach for vessel segmentation in retinal images,IEEEtransactionsonmedicalimaging,vol.35,no.1,pp.109-118, 2016.

[5]C.Zhu, B.Zou, R.Zhao, J.Cui, X.Duan, Z.Chen, and Y.Liang, Retinal vessel segmentation in colour fundus images using extreme learning machine,ComputerizedMedicalImagingandGraphics, vol.55, pp.68-77,2017.

[6]J.Staal, M.D.Abramoff, M.Niemeijer, M.A.Viergever, and B.van Ginneken, Ridge-based vessel segmentation in color images of the retina,IEEEtransactionsonmedicalimaging, vol.23,no.4,pp.501-509, 2004.

[7]J.V.Soares, J.J.Leandro, R.M.Cesar, H.F.Jelinek, and M.J.Cree, Retinal vessel segmentation using the 2-d gabor wavelet and supervised classification,IEEETransactionsonmedicalImaging,vol.25,no.9,pp.1214-1222, 2006.

[8]E.Ricci and R.Perfetti, Retinal blood vessel segmentation using line operators and support vector classi_cation,IEEETransactionsonMedicalImaging, vol.26,no.10,pp.1357-1365, 2007.

[9]D.Marin, A.Aquino, M.E.Gegundez-Arias, and J.M.Bravo, A new supervised method for blood vessel segmentation in retinal images by using gray-level and moment invariants-based features,IEEEtransactionsonmedicalimaging,vol.30, no.1,pp.146-158, 2011.

[10] M.M.Fraz, P.Remagnino, A.Hoppe, B.Uyyanonvara, A.R.Rudnicka, C.G.Owen, and S.A.Barman, An ensemble classification-based approach applied to retinal blood vessel segmentation,IEEETransactionsonBiomedicalEngineering,vol.59,no.9,pp.2538-2548, 2012.

[11] E.Cheng, L.Du, Y.Wu, Y.J.Zhu, V.Megalooikonomou, and H.Ling, Discriminative vessel segmentation in retinal images by fusing context-aware hybrid features,MachineVisionandApplications,vol.25,no.7,pp.1779-1792, 2014.

[12] S. Chaudhuri, S. Chatterjee, N. Katz, M. Nelson, and M. Goldbaum, Detection of blood vessels in retinal images using two-dimensional matched filters, IEEE Transactions on Medical Imaging, vol. 8, no. 3, pp. 263-269, 1989.

[13] A. Hoover, V. Kouznetsova, and M. Goldbaum, Locating blood vessels in retinal images by piecewise threshold probing of a matched filter response, IEEE Transactions on Medical Imaging, vol. 19, no. 3, pp. 203-210, 2000.

[14] B. Zhang, L. Zhang, L. Zhang, and F. Karray, Retinal vessel extraction by matched filter with first-order derivative of Gaussian, Computers in Biology and Medicine, vol. 40, no. 4, pp. 438-445, 2010.

[15] Q. Li, J. You, and D. Zhang, Vessel segmentation and width estimation in retinal images using multiscale production of matched filter responses, Expert Systems with Applications, vol. 39, no. 9, pp. 7600-7610, 2012.

[16] P. Zou, P. Chan, and P. Rockett, A model-based consecutive scanline tracking method for extracting vascular networks from 2-D digital subtraction angiograms, IEEE Transactions on Medical Imaging, vol. 28, no. 2, pp. 241-249, 2009.

[17] Y. Yin, M. Adel, and S. Bourennane, Retinal vessel segmentation using a probabilistic tracking method, Pattern Recognition, vol. 45, no. 4, pp. 1235-1244, 2012.

[18] A. M. Mendonca and A. Campilho, Segmentation of retinal blood vessels by combining the detection of centerlines and morphological reconstruction, IEEE Transactions on Medical Imaging, vol. 25, no. 9, pp. 1200-1213, 2006.

[19] U. T. Nguyen, A. Bhuiyan, L. A. Park, and K. Ramamohanarao, An effective retinal blood vessel segmentation method using multi-scale line detection, Pattern Recognition, vol. 46, no. 3, pp. 703-715, 2013.

[20] Y. Wang, G. Ji, P. Lin, and E. Trucco, Retinal vessel segmentation using multiwavelet kernels and multiscale hierarchical decomposition, Pattern Recognition, vol. 46, no. 8, pp. 2117-2133, 2013.

[21] X. Jiang and D. Mojon, Adaptive local thresholding by verification-based multithreshold probing with application to vessel detection in retinal images, IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 25, no. 1, pp. 131-137, 2003.

[22] B. Epshtein, E. Ofek, and Y. Wexler, Detecting text in natural scenes with stroke width transform, in Proceedings of IEEE Conference on Computer Vision & Pattern Recognition, California, USA, 2010, pp. 2963-2970.

[23] R. G. Von Gioi, J. Jakubowicz, J. M. Morel, and G. Randall, LSD: A fast line segment detector with a false detection control, IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 32, no. 4, pp. 722-732, 2010.

[24] M. E. Martinez-Perez, A. D. Hughes, S. A. Thom, A. A. Bharath, and K. H. Parker, Segmentation of blood vessels from red-free and fluorescein retinal images, Medical Image Analysis, vol. 11, no. 1, pp. 47-61, 2007.

[25] X. H. Wang, Y. Q. Zhao, M. Liao, and B. J. Zou, Automatic segmentation for retinal vessel based on multi-scale 2D Gabor wavelet, Acta Automatica Sinica, vol. 41, no. 5, pp. 970-980, 2015.

Min Zheng is currently working toward the Ph.D. degree at the School of Computer Science and Technology, Beijing Jiaotong University, Beijing, China. Her research interests include computer vision and image processing.

Feng Li received the B.Sc. degree in computer science and technology from Tianjin Polytechnic University of Science and Technology, Tianjin, China, in 2014 and the Master's degree from the School of Computer Science and Technology, Beijing Jiaotong University, Beijing, China, in 2016. He is currently working at NetEase. His research interests include computer vision and deep learning.

Jianzhu Wang is currently working toward the Ph.D. degree at the School of Computer Science and Technology, Beijing Jiaotong University, Beijing, China. His research interests include computer vision, image processing, pattern recognition, and machine learning.

Yangli-ao Geng is currently working toward the Ph.D. degree at the School of Computer Science and Technology, Beijing Jiaotong University, Beijing, China. His research interests include computer vision, image processing, pattern recognition, and machine learning.

Haibo Jiang received the B.Sc. degree in clinical medicine from Central South University, Changsha, China, in 2001 and the M.D. degree in ophthalmology from Central South University, Changsha, China, in 2014. He is currently an Associate Professor with Central South University, Changsha, China. His research interests include glaucoma, cataract, and retinal disease.

Qingyong Li received the B.Sc. degree in computer science and technology from Wuhan University, Wuhan, China, in 2001 and the Ph.D. degree in computer science and technology from the Institute of Computing Technology, Chinese Academy of Sciences, Beijing, China, in 2006. He is currently a Professor with Beijing Jiaotong University, Beijing. His research interests include computer vision and artificial intelligence.

• Qingyong Li, Min Zheng, Feng Li, Jianzhu Wang, and Yangli-ao Geng are with the Beijing Key Lab of Transportation Data Analysis and Mining, Beijing Jiaotong University, Beijing 100044, China. E-mail: liqy@bjtu.edu.cn; minzheng@bjtu.edu.cn; fengli@bjtu.edu.cn; jzWang@bjtu.edu.cn; 16112081@bjtu.edu.cn.

• Haibo Jiang is with the Department of Ophthalmology, Xiangya Hospital, Central South University, Changsha 410083, China.

* To whom correspondence should be addressed.

Manuscript received: 2017-05-10; accepted: 2017-06-15

CAAI Transactions on Intelligence Technology, 2017, Issue 3
