SRAFE: Siamese Regression Aesthetic Fusion Evaluation for Chinese Calligraphic Copy

Mingwei Sun | Xinyu Gong | Haitao Nie | Muhammad Minhas Iqbal | Bin Xie

Abstract Evaluation of calligraphic copies is at the core of Chinese calligraphy appreciation and inheritance. However, previous aesthetic evaluation studies have mostly focussed on photos and paintings, with few attempts on Chinese calligraphy. To address this gap, a Siamese regression aesthetic fusion method for Chinese calligraphy, named SRAFE, is proposed, combining calligraphy aesthetics with deep learning. First, a dataset termed Evaluated Chinese Calligraphy Copies (E3C) is constructed for aesthetic evaluation. Second, 12 hand-crafted aesthetic features based on the shape, structure, and stroke of calligraphy are designed. Then, the Siamese regression network (SRN) is designed to extract the deep aesthetic representation of calligraphy. Finally, the SRAFE method is built by fusing the deep aesthetic features with the hand-crafted aesthetic features. Experimental results show that the scores given by SRAFE are close to the aesthetic evaluation labels of E3C, supporting the effectiveness of the authors’ method.

KEYWORDS calligraphy evaluation, hand-crafted aesthetic features, Siamese regression network

1 | INTRODUCTION

Chinese calligraphy, as one of the treasures of Chinese culture, shines splendidly in the world's culture and art. As the core of Chinese calligraphy, aesthetic evaluation is of great significance to art research, education and inheritance. With the development of computational aesthetic evaluation, research has been carried out on photographic images [1], western paintings [2, 3], ink paintings [4], webpages [5], etc. However, how to make an objective, accurate and interpretable aesthetic evaluation of Chinese calligraphy images is still a challenge. Aesthetic evaluation aims to assess the aesthetic quality of images automatically by simulating human aesthetics and the perception of beauty. At present, the relevant research on aesthetic evaluation is mainly based on hand-crafted features and deep learning methods [6]. Based on hand-crafted features such as colour, contrast and sharpness, Mavridaki et al. [7] proposed an evaluation system using five basic photography rules, and Redi et al. [8] introduced a framework to assess the quality of digital portraits. Both performed well on the AVA dataset. Also on the AVA dataset, Li et al. [9] presented a personality-assisted multi-task deep learning framework and Zhang et al. [10] proposed a multi-modal self and collaborative attention network for photo aesthetic assessment, both of which are based on deep learning.

In terms of Chinese calligraphy, most calligraphy evaluation research is based on hand-crafted features. From the aesthetic perspective, features of calligraphy stroke and structure were designed in several studies. Gao et al. [11] proposed a method of Chinese handwriting quality evaluation based on an 8-directional feature. The evaluation model designed by Li et al. [12] computed the difference in the WF-histogram between the initial style and the standard style to generate the score of the target style after training. Zhou et al. [13] extracted three features from Chinese calligraphy to produce an evaluation value by a proposed PPD method. To improve aesthetic evaluation performance, Lian et al. [14] presented more global shape features to describe and evaluate the aesthetic quality of a Chinese character.

Meanwhile, to evaluate the quality of calligraphic copies, several studies designed features based on the relationship between calligraphic copies and their corresponding templates, which provides a reference that makes the aesthetic evaluation more objective. Based on the features of centre, size, and projection, Han et al. [15] used fuzzy inference techniques to score calligraphy characters on three ranks. Wang et al. [16] calculated stroke sequence, character shape and stroke shape similarities between calligraphic copies and templates. They also implemented a comprehensive evaluation involving whole-character and stroke similarities to obtain a final composite evaluation score [17]. In addition, although the fuzzy evaluation method constructed in Ref. [18] is more accurate, it relies on the writing process recorded by a resistive touch screen and is therefore limited by hardware requirements.

The studies above show that, given the strong aesthetic regularity of Chinese calligraphy, hand-crafted features based on calligraphy aesthetic attributes can make the evaluation method interpretable and reasonable. However, hand-crafted features require professional calligraphy knowledge, and a limited set of hand-crafted features inevitably ignores some hidden aesthetic information of calligraphy, resulting in insufficient aesthetic evaluation ability. Deep learning can effectively extract hidden aesthetic information from calligraphy images to supplement the hand-crafted features, although it is a black box that lacks interpretability. Therefore, we try to achieve aesthetic evaluation performance and interpretability at the same time by combining the deep network with the hand-crafted features.

In this paper, we make the following three contributions.

• To construct dataset E3C, we collect 11,008 calligraphic copy images from students and amateurs, covering 59 kinds of Chinese characters, and invite 121 people to evaluate aesthetic scores, thus providing data for the study of calligraphy aesthetics. E3C will be made public.

• Based on the aesthetics of calligraphy, we design 12 hand-crafted features by comparing calligraphic copies with their corresponding templates from the perspective of the shape, structure and strokes of calligraphy, and analyse the validity of the features. Through machine learning algorithms including multiple linear regression, XGBoost [19] and LightGBM [20] regression, we obtain continuous scores of calligraphic copies in the range of 1–10, which are similar to calligraphy experts' scores.

• To fully extract the hidden aesthetic information of calligraphic copy images, we design the Siamese regression network (SRN), built on different pre-trained backbones, to extract the deep aesthetic features for calligraphy evaluation. To further improve the performance of the aesthetic evaluation algorithm, the SRAFE method is proposed for Chinese calligraphy by fusing the deep aesthetic features with the 12 hand-crafted features (see Figure 1). The effectiveness of our method is verified by ablation experiments.

2 | PROPOSED DATASET E3C

When aesthetic evaluation of Chinese calligraphic copies is treated as a machine learning problem, the construction of the dataset becomes the key precondition. We build a dataset termed Evaluated Chinese Calligraphy Copies (E3C), split it in a reasonable way, and adopt appropriate evaluation metrics to evaluate the prediction performance of our proposed method.

2.1 | Dataset E3C

FIGURE 1 Overview of our SRAFE method for Chinese calligraphy

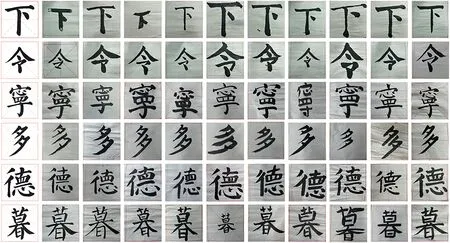

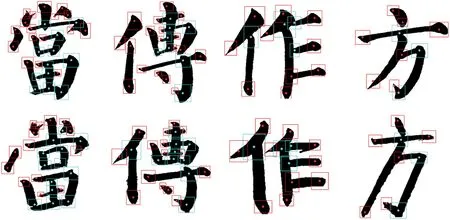

As shown in Figure 2, we constructed dataset E3C to train the model. The dataset contains 11,008 calligraphic copy images collected from students and amateurs, covering 59 kinds of Chinese characters, and 121 people (10 experts and 111 majors) were invited to evaluate aesthetic scores in our scoring system. Each calligraphic copy was graded by three experts and more than 10 majors on a scale from 1 to 10, where 1 is the most negative aesthetic score and 10 is the most positive aesthetic score. The expert aesthetic score is the average of the experts' scores. The majors' aesthetic score is generated as follows: the highest and lowest scores from the majors are removed and replaced by the mode. Then, the final score label of each calligraphic copy is calculated as the weighted average of the expert and majors' aesthetic scores, where the weight of the expert aesthetic score is 0.7 and that of the majors' aesthetic score is 0.3. Figure 3 shows the distribution of aesthetic labels, which is similar to a normal distribution.
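The labelling rule can be illustrated with a short Python sketch. It rests on assumptions that the text does not state: the adjusted majors' scores are averaged, ties for the mode are broken by taking the smallest mode, and the function names `majors_score` and `final_label` are hypothetical.

```python
from statistics import mean, multimode


def majors_score(scores):
    """Replace one highest and one lowest score by the mode, then average.

    Averaging the adjusted scores is an assumption of this sketch.
    """
    ordered = sorted(scores)
    mode = multimode(ordered)[0]  # smallest mode on ties (assumption)
    adjusted = [mode] + ordered[1:-1] + [mode]
    return mean(adjusted)


def final_label(expert_scores, major_scores, w_expert=0.7, w_major=0.3):
    """Weighted average of the expert mean and the majors' score."""
    return w_expert * mean(expert_scores) + w_major * majors_score(major_scores)


# Example with three expert scores and ten majors' scores (made-up values).
label = final_label([7, 8, 9], [1, 6, 6, 6, 7, 7, 8, 8, 9, 10])
```

Replacing the extremes by the mode, rather than simply dropping them, keeps the number of majors' scores unchanged while damping outliers.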

2.2 | Dataset for experiments

As the benchmark of the experiments, E3C is divided into a training set, a validation set and a test set at the ratio of 6:2:2, on the premise of the same distribution. The test set contains Chinese characters that never appear in the training set or validation set, in order to test the robustness and generalisation of the methods. In particular, the calligraphic copies of the Chinese character ‘Ming’ never appear in the training set or validation set but only in the test set. The aesthetic prediction of the algorithm for the character ‘Ming’ can therefore truly reflect the performance of the aesthetic evaluation methods.

FIGURE 2 Samples of dataset E3C

2.3 | Evaluation metrics

To evaluate the prediction performance of our proposed method, we calculate the mean absolute error (MAE) and the Pearson correlation coefficient (PCC). MAE is formally expressed as

$$\mathrm{MAE} = \frac{1}{m}\sum_{i=1}^{m}\left|\hat{y}_i - y_i\right|$$

where $m$ is the number of samples, $\hat{y}_i$ is the predicted value of the $i$th sample, and $y_i$ is the true value of the $i$th sample. It is worth noting that MAE represents the error of scores on a scale of one to ten; the smaller the MAE, the better the prediction performance. PCC is used to measure the correlation between prediction and label; a higher absolute value means better performance. PCC is defined as

$$\mathrm{PCC} = \frac{E\left[(Y - \mu_Y)(\hat{Y} - \mu_{\hat{Y}})\right]}{\sigma_Y\,\sigma_{\hat{Y}}}$$

where $\mu_Y$ and $\mu_{\hat{Y}}$ represent the mean value of the true label and the mean value of the predicted value, respectively, and $\sigma_Y$ and $\sigma_{\hat{Y}}$ represent the standard deviation of the true label and the standard deviation of the predicted value, respectively.
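Both metrics can be computed in a few lines of Python. The following is a minimal sketch using only the standard library; the function names `mae` and `pcc` are chosen here for illustration, and the standard definitions of the two metrics are assumed.

```python
import math


def mae(y_true, y_pred):
    """Mean absolute error between labels and predictions."""
    return sum(abs(p - t) for t, p in zip(y_true, y_pred)) / len(y_true)


def pcc(y_true, y_pred):
    """Pearson correlation coefficient between labels and predictions."""
    m = len(y_true)
    mu_t = sum(y_true) / m
    mu_p = sum(y_pred) / m
    cov = sum((t - mu_t) * (p - mu_p) for t, p in zip(y_true, y_pred)) / m
    sd_t = math.sqrt(sum((t - mu_t) ** 2 for t in y_true) / m)
    sd_p = math.sqrt(sum((p - mu_p) ** 2 for p in y_pred) / m)
    return cov / (sd_t * sd_p)


# Made-up labels and predictions on the ten-point scale.
labels = [2, 4, 6, 8]
preds = [3, 4, 7, 9]
error = mae(labels, preds)
corr = pcc(labels, preds)
```

MAE is expressed in score points, so it is directly readable on the ten-point scale, whereas PCC is scale-free and only reflects how well the ranking and spread of the predictions follow the labels.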

3 | METHODS ON AESTHETIC FEATURE EXTRACTION

In this section, based on calligraphic aesthetics, 12 hand-crafted aesthetic features covering calligraphic shape, structure, and stroke are designed from the comparison between the calligraphic copy C and the corresponding template T. These hand-crafted features quantify human aesthetics of calligraphy and can contribute to calligraphy aesthetic evaluation methods.

3.1 | Calligraphic image registration

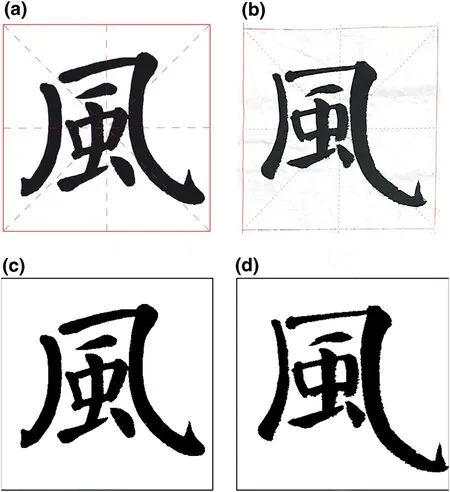

Calligraphic copy photos often have different sizes. Moreover, the relative position and relative size of the character in the calligraphic image are uncontrollable, as shown in Figure 4b. Therefore, calligraphic image registration is an indispensable basis for evaluating calligraphic copy quality with an objective score. From an aesthetic point of view, the following two conditions should be satisfied by calligraphic image registration. 1) The number of pixels in the copy character and the template character is the same. 2) The centre of gravity of the copy character and the template character is the same. In this section, calligraphic image registration of the calligraphic copy C and the calligraphic template T is implemented as follows.

3.1.1 | Calligraphic image preprocessing

First, C and T are resized to the same width with the original aspect ratio fixed, and binarised after grey-scale transformation. Then noise such as dropped ink is removed by thresholding the area of connected domains. After image preprocessing, useless interference information is filtered out and the calligraphic characters are retained.
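The binarisation and noise-removal steps can be sketched as follows. This is an illustrative pure-Python version, not the authors' implementation: the grey-level threshold of 128, the 4-connectivity, the dark-ink-on-light-paper convention and the function names are all assumptions.

```python
from collections import deque


def binarise(grey, threshold=128):
    """Dark (ink) pixels become 1 and paper becomes 0; the threshold is assumed."""
    return [[1 if v < threshold else 0 for v in row] for row in grey]


def remove_small_components(binary, min_area):
    """Keep only 4-connected ink regions with at least min_area pixels."""
    h, w = len(binary), len(binary[0])
    seen = [[False] * w for _ in range(h)]
    out = [[0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            if binary[r][c] == 0 or seen[r][c]:
                continue
            # Breadth-first search collects one connected domain.
            component, queue = [], deque([(r, c)])
            seen[r][c] = True
            while queue:
                y, x = queue.popleft()
                component.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if 0 <= ny < h and 0 <= nx < w and binary[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if len(component) >= min_area:
                for y, x in component:
                    out[y][x] = 1
    return out


grey = [[0, 0, 255, 255],
        [0, 255, 255, 255],
        [255, 255, 255, 0]]
clean = remove_small_components(binarise(grey), min_area=2)
```

In the example, the isolated dark pixel in the bottom-right corner plays the role of a dropped ink spot and is discarded, while the three-pixel stroke is kept.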

3.1.2 | Calligraphic size registration

We extract the smallest fitting rectangle of the calligraphic character in C and T as the region of interest (ROI). The parameters width* and height* denote the width and height of the C-ROI, respectively. After resizing, the two parameters can be calculated as

where $S_C$ and $S_T$ are the total number of pixels of the calligraphic character in the C-ROI and the T-ROI, respectively. After resizing, the calligraphic characters of C and T have the same number of pixels.
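The size registration condition requires the copy ROI to be rescaled so that its ink pixel count matches that of the template. A minimal sketch of one way to obtain the resized width and height is given below. It assumes that ink area grows with the square of the linear scale factor, so that the factor is sqrt(S_T / S_C); the function name `registered_size` and the rounding to whole pixels are assumptions of this sketch and not necessarily the authors' exact formula.

```python
import math


def registered_size(width, height, s_c, s_t):
    """Return the resized (width, height) of the copy ROI.

    s_c and s_t are the ink pixel counts in the copy ROI and template ROI.
    Scaling both sides by sqrt(s_t / s_c) scales the ink area by about s_t / s_c.
    """
    scale = math.sqrt(s_t / s_c)
    return round(width * scale), round(height * scale)


# A copy ROI of 100 x 200 pixels whose character has a quarter of the template's ink.
new_width, new_height = registered_size(100, 200, s_c=400, s_t=1600)
```

Because of rounding and interpolation during resizing, the pixel counts after registration will generally agree only approximately.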

FIGURE 4 Calligraphic image registration. (a) Original calligraphic template. (b) Original calligraphic copy. (c) Calligraphic template after image registration. (d) Calligraphic copy after image registration

3.1.3 | Calligraphic location registration

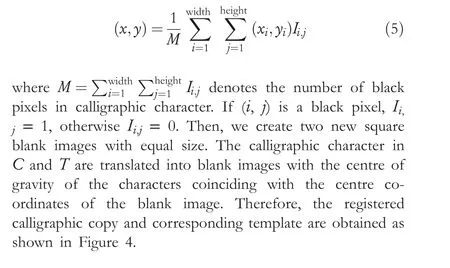

For the calligraphic characters of T and C, the centre of gravity is calculated as

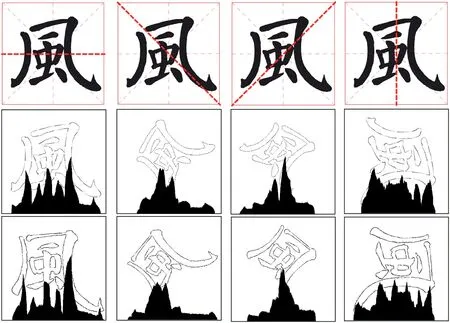
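Location registration can be illustrated with a short sketch that computes the centre of gravity of each binary character as the mean coordinate of its ink pixels and derives the shift that aligns the copy with the template. The function names are hypothetical, and images are assumed to be lists of rows with 1 for ink and 0 for background.

```python
def centre_of_gravity(binary):
    """Mean (x, y) coordinate of all ink pixels in a binary image."""
    sum_x = sum_y = count = 0
    for y, row in enumerate(binary):
        for x, value in enumerate(row):
            if value:
                sum_x += x
                sum_y += y
                count += 1
    return sum_x / count, sum_y / count


def translation_to_align(copy_bin, template_bin):
    """Shift (dx, dy) that moves the copy's centre of gravity onto the template's."""
    cx, cy = centre_of_gravity(copy_bin)
    tx, ty = centre_of_gravity(template_bin)
    return tx - cx, ty - cy


template = [[0, 1],
            [0, 1]]
copy = [[1, 0],
        [1, 0]]
dx, dy = translation_to_align(copy, template)
```

Applying the resulting shift to the copy satisfies the second registration condition, namely that both characters share the same centre of gravity.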

3.2 | Feature on calligraphic shape

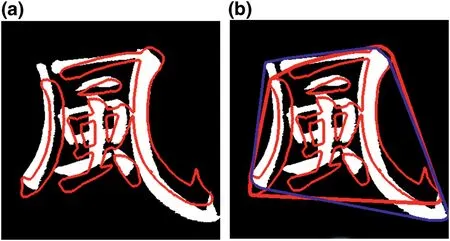

The most intuitive way to evaluate the difference between C and T is to compare their shapes. To quantify the difference in aesthetics, we use the overlapping ratio to represent the similarity in calligraphic shape, as shown in Figure 5a. The feature on calligraphic shape can be formally expressed as

where $A_C$ and $A_T$ represent the area of the calligraphic character in C and the corresponding character in T, respectively. f1 is in the range of 0–1 and has an obviously positive correlation with the quality of the calligraphic copy. Then, we map f1 to the shape aesthetic score f2 in the range of 1–10 by

FIGURE 5 (a) The visual calligraphy guidance based on the shape feature, where the red contour reflects the template, and f1 is 0.368. (b) The visual calligraphy guidance based on the structure feature, where the red contour reflects the template's structure while the blue reflects the copy's structure, and f3 is 0.876

The shape feature can be used in calligraphy teaching. As shown in Figure 5a, by superimposing the red contour of the template on the copy image, the difference between the copy and the template can be presented clearly, which provides students with intuitive visual guidance.
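An overlapping ratio between two registered binary characters can be sketched as below. The sketch assumes the ratio is the intersection of the two ink areas divided by their union, which is one common definition with values in 0–1; the exact form used for f1 may differ, and the function name `overlap_ratio` is hypothetical.

```python
def overlap_ratio(copy_bin, template_bin):
    """Intersection over union of the ink pixels of two same-sized binary images."""
    intersection = union = 0
    for row_c, row_t in zip(copy_bin, template_bin):
        for c, t in zip(row_c, row_t):
            if c and t:
                intersection += 1
            if c or t:
                union += 1
    return intersection / union if union else 0.0


copy = [[1, 1, 0],
        [0, 1, 0]]
template = [[1, 0, 0],
            [0, 1, 1]]
ratio = overlap_ratio(copy, template)
```

A ratio of 1 means the copy covers exactly the same pixels as the template, and the ratio falls towards 0 as the two shapes diverge, which matches the positive association with copy quality described above.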

3.3 | Features on calligraphic structure

In Chinese calligraphy, the structure of a Chinese character usually determines its overall aesthetic quality. In this section, the features on calligraphic structure are mainly quantified by convex hull feature extraction and projection feature extraction.

3.3.1 | Convex hull feature extraction

The convex hull of the calligraphic character can reflect the overall structure of the character. We compare the convex hulls of the characters in T and C to obtain an overall structure feature by the overlapping ratio f3, which is defined as

where $S_C$ and $S_T$ represent the area of the convex hull of the calligraphic character in C and T, respectively. f3 is in the range of 0–1 and is positively associated with the quality of the calligraphic copy. Moreover, as Figure 5b shows, the convex hull feature can describe the difference in calligraphic structure and can provide visual guidance.
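The convex hull comparison can be sketched in pure Python by computing each hull with Andrew's monotone chain algorithm, rasterising it to a filled mask, and comparing the two masks. The intersection-over-union form of the overlapping ratio, the pixel-level rasterisation and all function names are assumptions of this sketch.

```python
def convex_hull(points):
    """Andrew's monotone chain; hull vertices in counter-clockwise (x, y) order."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts

    def cross(o, a, b):
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]


def hull_mask(binary):
    """Binary mask of all pixels inside the convex hull of the ink pixels."""
    h, w = len(binary), len(binary[0])
    ink = {(x, y) for y in range(h) for x in range(w) if binary[y][x]}
    hull = convex_hull(ink)
    if len(hull) < 3:  # degenerate hull: fall back to the ink pixels themselves
        return [[1 if (x, y) in ink else 0 for x in range(w)] for y in range(h)]

    def inside(px, py):
        for i in range(len(hull)):
            (ax, ay), (bx, by) = hull[i], hull[(i + 1) % len(hull)]
            if (bx - ax) * (py - ay) - (by - ay) * (px - ax) < 0:
                return False
        return True

    return [[1 if inside(x, y) else 0 for x in range(w)] for y in range(h)]


def hull_overlap(copy_bin, template_bin):
    """Intersection over union of the two filled convex hulls (assumed form)."""
    mc, mt = hull_mask(copy_bin), hull_mask(template_bin)
    inter = sum(a & b for rc, rt in zip(mc, mt) for a, b in zip(rc, rt))
    union = sum(a | b for rc, rt in zip(mc, mt) for a, b in zip(rc, rt))
    return inter / union if union else 0.0


template = [[1, 0, 1], [0, 0, 0], [1, 0, 1]]
copy = [[1, 0, 0], [0, 0, 0], [1, 0, 1]]
structure_overlap = hull_overlap(copy, template)
```

Because the hull ignores the interior of the character, this feature responds to the outer proportions of the copy rather than to individual strokes.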

3.3.2 | Projection feature extraction

FIGURE 6 The projection of T and C in the directions of 0°, -45°, 45°, and 90°. The red dotted line represents the projected baseline in the ‘mizi’ grid, and the black histograms represent the projected histograms on the corresponding red dotted lines

When practising Chinese calligraphy, the character is usually written in a ‘mizi’ grid, which is an intersected figure designed specifically for writing Chinese characters, as shown by the red grid in Figure 6. The horizontal line, the vertical line, the left diagonal and the right diagonal of the ‘mizi’ grid make it convenient for beginners to determine the position and direction of the strokes when writing and provide them with a structural reference. Inspired by this, we project T and C onto the four baselines of the ‘mizi’ grid, which are in the directions of 0°, -45°, 45°, and 90°, as shown in Figure 6. $H_T$ denotes the projected histogram of T, and $H_C$ denotes the projected histogram of C, in the direction of 0°. The overlapping ratio in the direction of 0° is formally expressed as

Then, we can calculate the correlation of $H_T$ and $H_C$ in the direction of 0° by

Accordingly, features f6–f11 are obtained by calculating the overlapping ratio and correlation in the directions of -45°, 45°, and 90° to quantify the histograms.
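The projection features can be illustrated with the following sketch, which builds the four projection histograms of a binary character and compares two histograms by an overlapping ratio and a correlation. Several points are assumptions: the mapping of each angle to a pixel index (columns for 0°, rows for 90°, and the two diagonals for ±45°), the overlap defined as the sum of bin-wise minima over the sum of bin-wise maxima, Pearson correlation as the correlation measure, and all function names.

```python
import math


def projection(binary, direction):
    """Histogram of ink pixels projected onto one 'mizi' grid baseline."""
    h, w = len(binary), len(binary[0])
    index = {
        0: (lambda x, y: x, w),                       # onto the horizontal line
        90: (lambda x, y: y, h),                      # onto the vertical line
        45: (lambda x, y: x + y, w + h - 1),          # onto one diagonal
        -45: (lambda x, y: x - y + h - 1, w + h - 1), # onto the other diagonal
    }
    key, size = index[direction]
    hist = [0] * size
    for y, row in enumerate(binary):
        for x, value in enumerate(row):
            if value:
                hist[key(x, y)] += 1
    return hist


def histogram_overlap(h_t, h_c):
    """Sum of bin-wise minima divided by sum of bin-wise maxima (assumed form)."""
    denominator = sum(max(a, b) for a, b in zip(h_t, h_c))
    return sum(min(a, b) for a, b in zip(h_t, h_c)) / denominator if denominator else 0.0


def histogram_correlation(h_t, h_c):
    """Pearson correlation of two histograms; 0.0 if either is constant."""
    n = len(h_t)
    mu_t, mu_c = sum(h_t) / n, sum(h_c) / n
    cov = sum((a - mu_t) * (b - mu_c) for a, b in zip(h_t, h_c))
    var_t = sum((a - mu_t) ** 2 for a in h_t)
    var_c = sum((b - mu_c) ** 2 for b in h_c)
    if var_t == 0 or var_c == 0:
        return 0.0
    return cov / math.sqrt(var_t * var_c)


character = [[1, 1, 0],
             [0, 1, 0]]
histograms = {d: projection(character, d) for d in (0, -45, 45, 90)}
```

Computing both measures for each of the four directions yields eight values per copy-template pair, which corresponds to the eight projection features.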

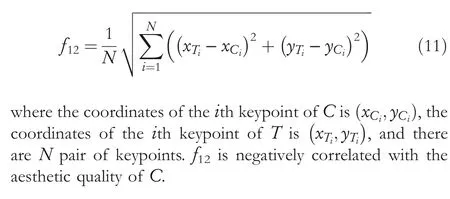

3.4 | Features on calligraphic stroke

The details and layout of the strokes have a significant impact on the aesthetic quality of calligraphy. To quantify the aesthetic feature of strokes, we first detect the keypoints of calligraphic strokes based on Faster RCNN [21], then realise keypoint registration through CPD [22], and finally calculate the difference according to the keypoint information of the template, as seen in Figure 1d.

3.4.1 | Keypoint detection on calligraphic stroke

With strong regularity in visual characteristics, the keypoints of calligraphic strokes can be divided into two categories: one is the beginning and ending of strokes, and the other is the turning and crossing of strokes. Based on the two categories, we established a calligraphy keypoint detection dataset with 800 calligraphic images and 13,648 keypoint boxes. The keypoint boxes with two category labels contain the edge information of the key parts of the strokes, and their centre points are the keypoints of the strokes. The experimental results show that on the test set of 100 calligraphic images with 17 characters, the detection precision is 0.988 and the recall is 0.917. Therefore, the keypoint detection of calligraphic characters can be realised with excellent performance based on Faster RCNN.

3.4.2 | Keypoint registration based on CPD

FIGURE 7 Samples of the keypoint detection result on the calligraphic copy

As Figure 7 shows, we can obtain the keypoints of T and C by the above method. To achieve keypoint registration of T and C based on CPD, we make some improvements to solve the problem of several keypoints being registered to the same keypoint when they are too close. We split the connected domains of characters so that registration is restricted to corresponding connected domains. For keypoints registered repeatedly in the same connected domain, only the closest keypoint is kept, and the remaining points are rematched to avoid pairing errors.

3.4.3 | Quantification of calligraphic stroke feature

The calligraphic stroke feature is quantified by calculating the average Euclidean distance of the keypoints as follows
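The average Euclidean distance over registered keypoints can be sketched as follows. The sketch assumes the keypoints of the copy and the template have already been paired by the registration step; the function name `stroke_feature` and the pair representation are hypothetical.

```python
import math


def stroke_feature(pairs):
    """Average Euclidean distance over matched (template, copy) keypoint pairs."""
    return sum(math.dist(t, c) for t, c in pairs) / len(pairs)


# Two matched keypoint pairs given as ((x_t, y_t), (x_c, y_c)); made-up coordinates.
matched = [((0, 0), (3, 4)), ((1, 1), (1, 1))]
f12_value = stroke_feature(matched)
```

A larger value means the copy's stroke keypoints lie further from those of the template, so this feature is expected to correlate negatively with the aesthetic score.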

4 | PROPOSED SRAFE METHOD

If only the above hand-crafted features are used, aesthetic information will be missing and evaluation performance will be unsatisfactory, because the current hand-crafted features are limited and incomplete. Therefore, in this section, we propose the SRAFE method, which fuses the hand-crafted features with deep learning methods; Figure 1 illustrates the framework of our proposed method. First, we adapt the basic Siamese network by using a pre-trained backbone with shared weights, and extract and contrast deep features combined with a regression layer for supervised learning. Then, our SRAFE method fuses the 12 aesthetic features of calligraphic shape, structure, and stroke from Section 3 with the deep features from the SRN, and the fused features are used to train a LightGBM regressor to build our calligraphy evaluation model.

4.1 | SRN for calligraphy evaluation in SRAFE

The Siamese network [23] takes two samples as input and outputs their embeddings in a high-dimensional space, in order to compare the similarity of the two samples. Meanwhile, through transfer learning, using a pre-trained CNN model as the backbone can achieve better and faster performance in computer vision tasks [24]. Inspired by this, based on the Siamese network, we take a model pre-trained on ImageNet as the backbone to learn the deep representation reflecting the aesthetics of calligraphy. As shown in Figure 1a, the two backbone branches, fed with the calligraphic copy C and the corresponding template T, share weights, and each is finally connected to a 10-dimensional linear layer. We design the regression layer as the last layer by replacing the cross-entropy loss with a loss on the Euclidean distance between T's 10-dimensional tensor and C's 10-dimensional tensor. To better learn the differences between T and C, the specific loss function is defined as follows:

where $10 - y_n$ represents the gap between the Euclidean distance and the full aesthetic score. The smaller the Euclidean distance between the deep features of T and C, the higher the calligraphy aesthetic score. Therefore, in this MSE loss, the Euclidean distance is used to approximate the gap between the label and the full score. As the output of our SRN, $10 - y_n$ is also a prediction of the aesthetic quality of the calligraphic copy.
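The loss described here can be sketched in plain Python. The sketch follows the reading given in the text: the Euclidean distance between the two embeddings is regressed, with a mean squared error, onto the gap between the full score (10) and the label, so that 10 minus the distance serves as the predicted score. The function names are hypothetical, and plain lists stand in for the network outputs rather than PyTorch tensors.

```python
import math


def srn_score(template_embedding, copy_embedding, full_score=10.0):
    """Predicted aesthetic score: full score minus the embedding distance."""
    return full_score - math.dist(template_embedding, copy_embedding)


def srn_loss(template_embeddings, copy_embeddings, labels, full_score=10.0):
    """MSE between the embedding distance and the gap (full_score - label)."""
    total = 0.0
    for t, c, label in zip(template_embeddings, copy_embeddings, labels):
        distance = math.dist(t, c)
        total += (distance - (full_score - label)) ** 2
    return total / len(labels)


# Toy 2-dimensional embeddings; the paper's embeddings are 10-dimensional.
templates = [[0.0, 0.0], [0.0, 0.0]]
copies = [[3.0, 4.0], [3.0, 4.0]]
loss = srn_loss(templates, copies, labels=[5.0, 7.0])
```

Minimising this loss pulls the embedding of a high-scoring copy close to that of its template and pushes a low-scoring copy away by an amount tied to its label.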

4.2 | Aesthetic fusion and regression in SRAFE

As shown in Figure 1, SRAFE fuses the hand-crafted aesthetic features with the SRN to improve the performance and interpretability of the evaluation model. Feature fusion is mainly the fusion of feature_deep, Euclidean and feature_hand shown in Figure 1. The 10-dimensional deep aesthetic features of T and C are extracted through the SRN, and Euclidean is the one-dimensional Euclidean distance between the two deep features. Meanwhile, feature_deep is the 10-dimensional difference obtained by subtracting the two deep aesthetic features, and feature_hand is the 12-dimensional tensor formed by the hand-crafted features. We set the dimension of the deep feature to 10 to achieve a balance between the deep features and the hand-crafted features in learning. With the fusion of feature_deep, Euclidean and feature_hand, 23-dimensional fused features are obtained, and the final aesthetic evaluation scores are predicted by LightGBM regression.
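The construction of the 23-dimensional fused vector can be sketched as a simple concatenation. The order of the three parts, the direction of the subtraction (template minus copy) and the function name `fuse_features` are assumptions; the regressor itself is omitted, and any gradient-boosting regressor such as LightGBM would be trained on vectors built this way.

```python
import math


def fuse_features(deep_t, deep_c, hand):
    """Concatenate feature_deep (10-d), Euclidean (1-d) and feature_hand (12-d)."""
    feature_deep = [t - c for t, c in zip(deep_t, deep_c)]  # element-wise difference
    euclidean = math.dist(deep_t, deep_c)                   # scalar distance
    return feature_deep + [euclidean] + list(hand)


# Placeholder values: 10-d deep features for T and C, and 12 hand-crafted features.
deep_template = [1.0] * 10
deep_copy = [0.0] * 10
hand_crafted = [0.5] * 12
fused = fuse_features(deep_template, deep_copy, hand_crafted)
```

Keeping the deep part at 10 dimensions means that neither the deep nor the hand-crafted block dominates the fused vector by size alone.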

If we rely only on the SRN for aesthetic evaluation, the deep features remain in a black box, which lacks interpretability and aesthetic prior knowledge of calligraphy. From the perspective of professional aesthetic evaluation of character shape, structure, and stroke, the hand-crafted aesthetic features can not only improve the interpretability of evaluation but also supplement the aesthetic information ignored by the deep features. However, if only the hand-crafted features are used, aesthetic information will also be missing and evaluation performance will be unsatisfactory, because the current hand-crafted features are limited and incomplete. Therefore, in theory, our SRAFE method can make the deep features and the hand-crafted aesthetic features complement each other, increasing interpretability and improving the performance of aesthetic evaluation at the same time.

5 | EXPERIMENTS AND DISCUSSION

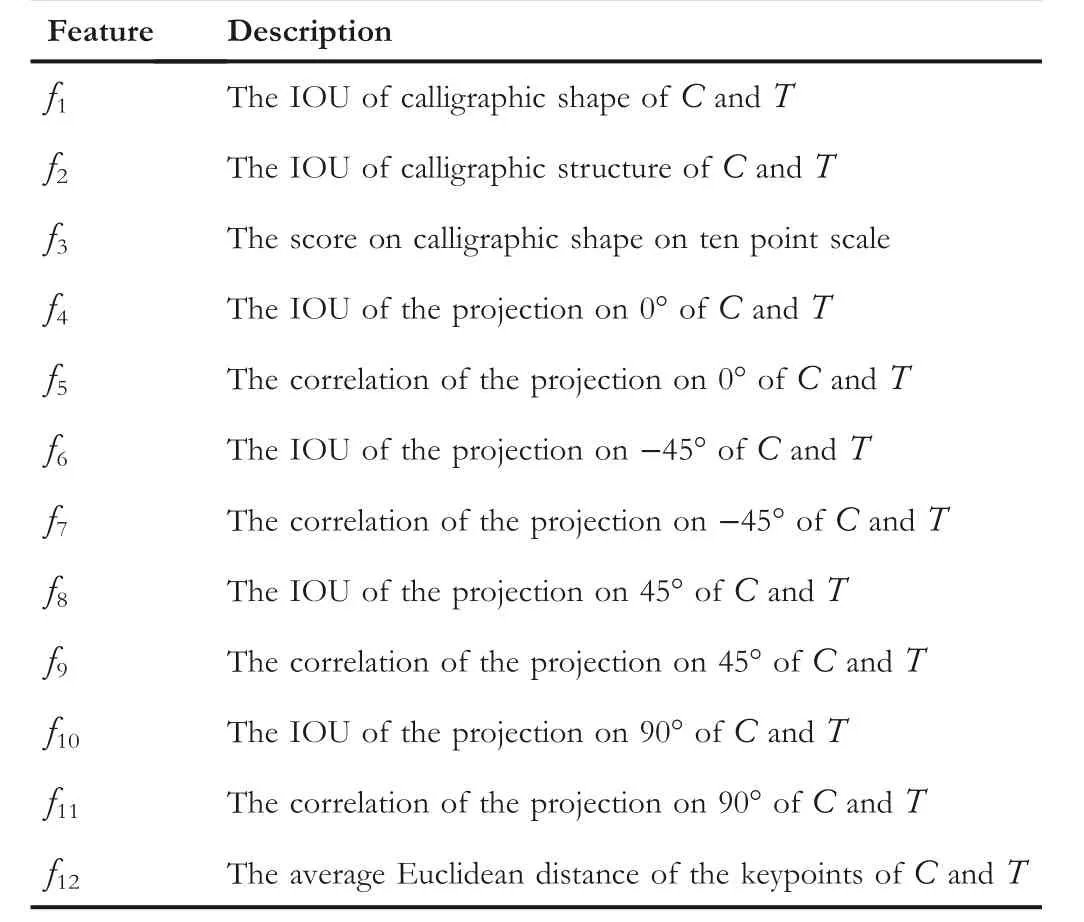

In the experiments, we verify the validity of the 12 hand-crafted aesthetic features on the dataset E3C; Table 1 summarises the 12 features. Meanwhile, based on the 12 features, we compare the prediction performance of multiple linear regression (MLR), XGBoost and LightGBM on the test set of E3C, and choose LightGBM as the regressor for the following experiments. Then, we compare different pre-trained models in our SRN to determine the backbone, so as to better extract the hidden aesthetic information of calligraphic copies. Finally, through ablation experiments, the effectiveness of our SRAFE method is verified. The experimental results show that the score of calligraphic copy quality predicted by our proposed SRAFE method is similar to that given by human evaluators.

5.1 | Feature validation

As shown in Figure 8, the correlation coefficient between f1 and f2 is high, because f2 is a mapping of f1 onto the ten-point scale. We find that the features extracted by projection in the same direction, such as f4 and f5, f6 and f7, f8 and f9, and f10 and f11, are strongly correlated, because they are constructed by quantifying the similarity of the projection histograms at the same angle. The correlation coefficient between f1 and the label is 0.41, the strongest positive correlation with the label among the 12 features, indicating that the aesthetic feature designed from the perspective of calligraphy shape is close to public aesthetics and consistent with human aesthetic judgement. Besides the shape features, the correlation coefficient between f12 and the label is -0.34, the strongest negative correlation with the label among the 12 features, indicating that the aesthetic feature designed from the perspective of calligraphy strokes also agrees well with the public aesthetic score. Among the 12 features, the weakest correlation with the label is 0.12, which still indicates some association with the label.

TABLE 1 Feature description

5.2 | Performance of the hand‐crafted aesthetic features

As human evaluation of calligraphy is often based on subjective aesthetics, it is difficult to give accurate and objective scores, so we use regression algorithms to fit the integer scores and thereby obtain continuous prediction scores for calligraphy evaluation. Considering that the performance of algorithms may differ across datasets, multiple linear regression (MLR), XGBoost and LightGBM were used to train the regression model and their performance was compared.

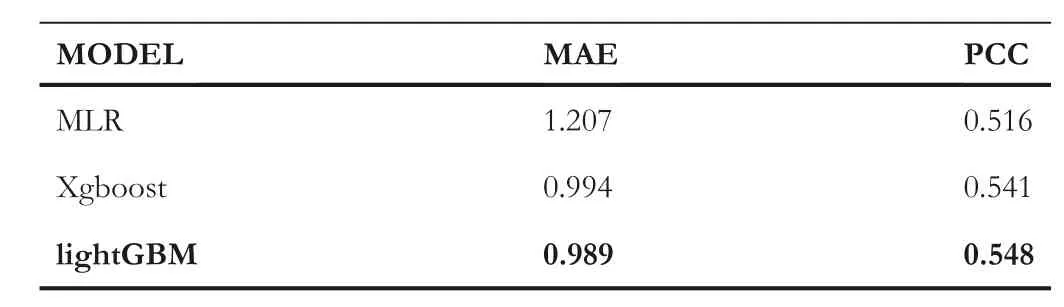

According to Table 2, LightGBM performs better than MLR and XGBoost on the test set of E3C, which contains 1799 calligraphic copies, with an MAE of 0.989 under the ten-point scoring rule and a PCC of 0.548. We therefore choose LightGBM as the regressor for the following aesthetic evaluation algorithms.

FIGURE 8 Pearson correlation coefficients of the 12 features and labels

Although this method can predict the aesthetic quality of calligraphic copies, its performance is not satisfactory and there is still room for improvement, which is consistent with our previous conjecture. It is necessary to further extract the deep aesthetic information of calligraphic copy images to improve the performance.

5.3 | Performance of SRN

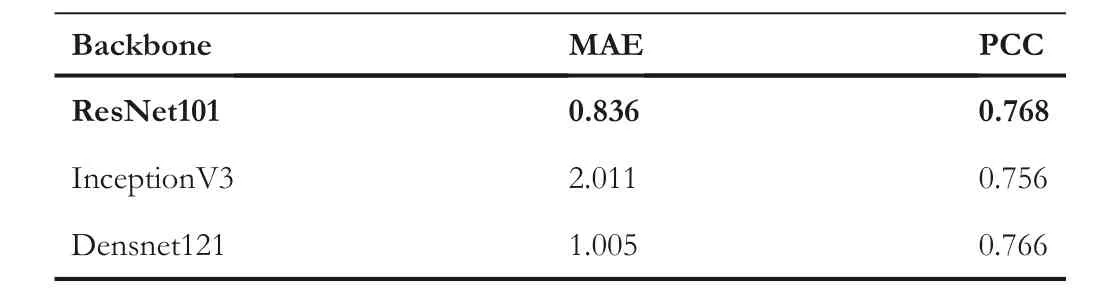

In the implementation, all network training and testing are performed with an NVIDIA RTX3090 GPU and PyTorch 1.8. We use the Adam optimiser with a base learning rate of 0.001 and betas of (0.9, 0.990). Table 3 shows the performance comparison when selecting ResNet101 [25], InceptionV3 [26] and DenseNet121 [27] as the backbone. The statistics in Table 3 show that the model with ResNet101 yields a higher PCC and a lower MAE than the other two pre-trained backbones. Therefore, we choose pre-trained ResNet101 as the backbone of the SRN.

Through this method, we can obtain the deep features and the Euclidean distance reflecting the calligraphy aesthetic evaluation. However, the MAE of 0.836 still leaves plenty of room for improvement, and the deep features lack the interpretability of calligraphy aesthetics. Therefore, in the experiment on the SRAFE method, we fuse the 12 hand-crafted aesthetic features of calligraphy with our SRN.

5.4 | Performance of SRAFE

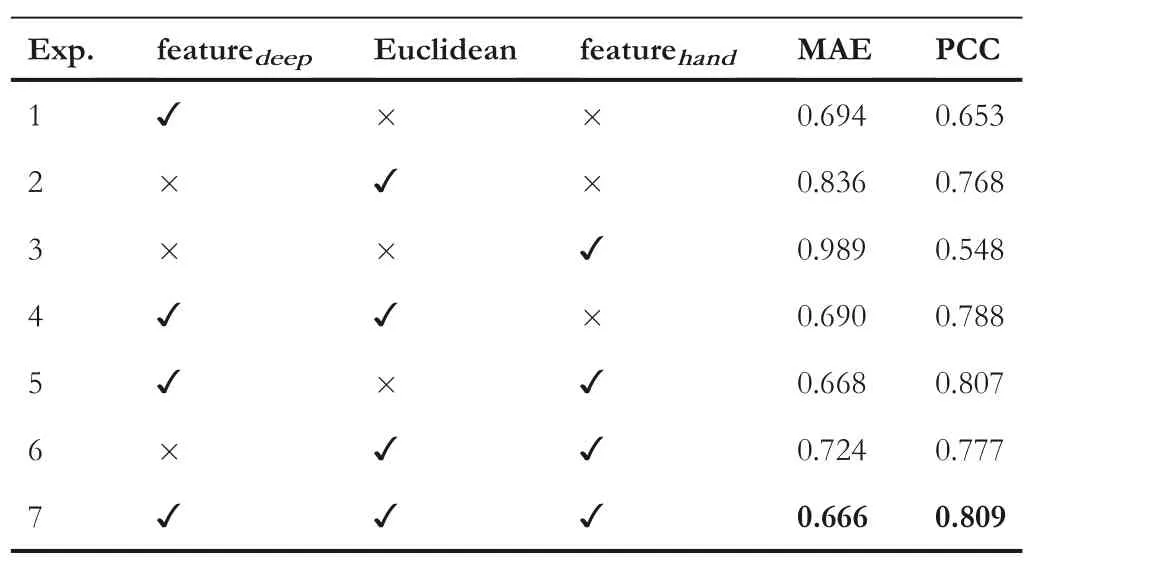

To further improve the performance of calligraphy evaluation, our SRAFE method fuses the deep features from the SRN with the hand-crafted aesthetic features. We further investigate the effectiveness of the SRAFE method by ablation experiments, which also verify the effect of each submodule (deep features, Euclidean distance between the two deep features, and hand-crafted aesthetic features) in SRAFE on performance. The ablation results are shown in Table 4.

TABLE 2 Performance comparisons of different models based on the 12 features

TABLE 3 Performance comparisons of SRN with different backbones

According to Table 4, Exp. 7 achieves the best prediction performance. It shows that our SRAFE method improves MAE by 0.170 and PCC by 0.041 compared with the SRN alone, and improves MAE by 0.323 and PCC by 0.261 compared with using only hand-crafted features. Comparing Exp. 2 and 4 (3 and 5, 6 and 7) demonstrates the effectiveness of the deep feature from the SRN. The deep feature can characterise the aesthetic differences between T and C, which helps to enhance model robustness. Comparing the results of Exp. 1 and 4 (3 and 6, 5 and 7) shows that adding the Euclidean distance is conducive to aesthetic prediction, although the improvement is small when the deep feature is present. The Euclidean distance is the output of the SRN, and it also directly reflects the aesthetic gap between T and C. Exp. 1 and 5 (2 and 6, 4 and 7) show that the performance improvement brought about by the hand-crafted features is less than 0.112 on MAE. Furthermore, we find that the deep feature contributes the most to the performance of calligraphy evaluation. This also indicates that the deep network can extract useful hidden aesthetic information, which is an effective supplement to the hand-crafted aesthetic features.

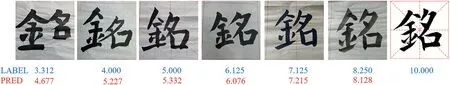

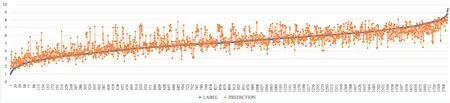

In Figure 9, we select the character ‘Ming’ from the test set to illustrate the aesthetic evaluation of calligraphy by the SRAFE method. The blue part ‘LABEL’ is the label from the E3C dataset, which is the public aesthetic score, the red part ‘PRED’ is the aesthetic score predicted by the SRAFE algorithm, and the real aesthetic score of ‘Ming’ increases from left to right. It is worth mentioning that the character ‘Ming’ never appears in the training set or validation set. This shows that the SRAFE method can give a good aesthetic score for Chinese calligraphy with good generalisation performance.

As Figure 10 shows, the aesthetic score labels of the test set, which contains 1786 calligraphic copy images, are arranged from low to high (blue scatter plot) and are compared with the corresponding aesthetic scores predicted by the SRAFE method (orange scatter plot). In the figure, the ordinate is the aesthetic prediction score (1–10) and the abscissa is the index of the calligraphic copy test image. The orange dots are relatively dense from low to high, indicating that our method can predict the aesthetic score with good performance. We also analyse the samples with large prediction deviations. Some bad cases result from unsatisfactory font segmentation in image preprocessing, which leads to deviations in feature extraction and needs to be optimised in the future. Meanwhile, some copies with low scores are predicted to have high scores because there are few low-score samples during training. Moreover, ink stains, which are removed and ignored by the algorithm, are an important factor that lowers the aesthetic labels. Therefore, the treatment of sample imbalance and the impact of ink stains on aesthetic evaluation are left to follow-up research.

TABLE 4 Performance of SRAFE method ablation experiments

FIGURE 9 Calligraphy copy examples of ‘Ming’ evaluated by the SRAFE method

FIGURE 10 SRAFE prediction results on the test set of E3C

6 | CONCLUSION AND FUTURE WORK

To build a connection between Chinese calligraphy and computational aesthetic evaluation, which is a challenging task, we proposed a Siamese aesthetic fusion framework for calligraphy (SRAFE). The novelty of SRAFE lies in designing hand-crafted features from the perspective of calligraphy aesthetics and combining them with the deep features learnt by the SRN. The 12 hand-crafted features are more consistent with the human aesthetics of calligraphy, contributing to model interpretation. Meanwhile, the deep features reflect the deep aesthetic gap from calligraphic templates and improve the performance and generalisation of the model. In addition, dataset E3C will be made public in the future for research on calligraphy evaluation.

Currently, our SRAFE method can achieve good evaluation performance on regular script, while Chinese calligraphy contains many fonts, such as running script and cursive script, with quite different appearances. For the E3C dataset, the current calligraphy images mainly come from the copying homework of primary school students; they are mainly regular script images, and images of other fonts are lacking. To address this limitation, we will include more calligraphic copies of seal character, official script and running script in our E3C dataset, which is the research basis for aesthetic evaluation of other fonts. For our SRAFE method, different fonts may have different aesthetic prior knowledge and unique aesthetic characteristics, which may limit the evaluation performance of SRAFE on other fonts. Therefore, it is necessary to design more targeted hand-crafted aesthetic features for aesthetic evaluation. Besides, we will extract more deep hidden aesthetic features and split strokes based on deep learning for further evaluation, and investigate how SRAFE can better assist the aesthetic evaluation task on different fonts. Moreover, we will perform a more in-depth study on quantitative aesthetic evaluation in Chinese calligraphy.

CONFLICT OF INTEREST

The authors declare that they have no conflict of interest.

DATA AVAILABILITY STATEMENT

Data available on request due to privacy/ethical restrictions.

ORCID

Mingwei Sun https://orcid.org/0000-0001-6196-9833

Bin Xie https://orcid.org/0000-0003-2135-0694

CAAI Transactions on Intelligence Technology, 2023, Issue 3
