The ChatGPT After: Building Knowledge Factories for Knowledge Workers with Knowledge Automation

By Yutong Wang, Xiao Wang, Xingxia Wang, Jing Yang, Oliver Kwan, Lingxi Li, Fei-Yue Wang

Introduction

The success of ChatGPT makes it imperative to contemplate the practical applications of big or foundation models [1]–[5]. However, as compared to conventional models, there is now an increasingly urgent need for foundation intelligence of foundation models for real-world industrial applications. To this end, here we would like to address the issues related to building knowledge factories with knowledge machines for knowledge workers by knowledge automation. Such factories would effectively integrate advanced foundation models, scenarios engineering, and human-oriented operating systems (HOOS) technologies for managing digital, robotic, and biological knowledge workers. They would enable decision-making, resource coordination, and task execution through three operational modes (autonomous, parallel, and expert/emergency) to achieve intelligent production meeting the goal of “6S”: Safety, Security, Sustainability, Sensitivity, Service, and Smartness [6]–[10].

Being a generative AI language model, ChatGPT [11]–[14] adheres to the “Big Problems, Big Models” paradigm [5]. Its training data includes Common Crawl along with other large collections of text from web pages, books, articles, and other publicly available resources, which makes it proficient in addressing general queries. While ChatGPT is trained on a vast array of topics, its depth of knowledge on highly specialized subjects might not match that of dedicated experts in a specific field. Nor does it have the capability to analyze real-time data or trends. In view of this, we must advocate a “Small Problems, Big Models” paradigm, training big models [2] with multimodal data from extremely specific subjects. By widely applying these big models in factories for workers during production and other crucial scenarios, we could answer domain-specific queries and enable real-time analysis of data with continuous learning ability.

Nonetheless, given that small problems should be, and traditionally have been, resolved with small models, why are large models needed? And if they are, do we have sufficient data to train big models for small problems? In reality, and especially under the current trend, a small problem must be solved together with many other small problems surrounding it. Therefore, today we have to address those small problems in depth vertically and in breadth horizontally; hence the need for domain-specific foundation models, and hence the source of big data for their training. Those special big models offer the capacity to holistically evaluate and generate effective and comprehensive solutions for small problems.

Furthermore, we need to structure and organize a new ecosystem to coordinate biological workers, robotic workers, and digital workers for future smart production [15], [16], specifically by building knowledge factories with knowledge machines for knowledge automation. We also need to design corresponding operational processes and assign proper roles for those three types of knowledge workers, so they can work together synergistically and efficiently. Let us address those important issues in the following sections.

Essential Elements of Knowledge Factories

Aiming at knowledge automation [17], the essential elements for knowledge factories include business big models, scenarios engineering, and HOOS. The schematic diagram of the collaboration of these elements in knowledge factories is shown in Fig. 1.

• Business big models. Knowledge factories [1], [4]–[7] would involve three types of workers: digital workers, robotic workers, and biological workers, as described in the next section. Business big models are the key technology that assists biological workers and drives digital and robotic workers to execute operational tasks more efficiently and intelligently. They are the cognitive knowledge bases storing domain knowledge and skills for production. Essentially, a knowledge worker is itself a foundation model for special functions in a knowledge factory, and the interaction among knowledge workers and business big models is an important issue to be addressed. Note that the theory and method of parallel cognition [18] should be useful in constructing business big models by facilitating the design of efficient Q&A sessions among various knowledge workers and business big models.

Fig. 1. The collaboration of essential elements for knowledge factories.

• Scenarios engineering. Traditional feature engineering-based deep learning has achieved state-of-the-art (SOTA) performance. However, these algorithms are implemented without in-depth consideration of interpretability, security, and sustainability. Thus, it is impossible to apply these SOTA algorithms to real-world factories directly. In knowledge factories, scenarios engineering [19] can be seen as the integration of industrial scenarios and operations within a certain temporal and spatial range, where a trustworthy artificial intelligence model could be established by intelligence & index (I&I), calibration & certification (C&C), and verification & validation (V&V). Through the effective use of scenarios engineering, knowledge factories should achieve the goal of “6S” [20], [21].

• HOOS. The primary function of HOOS [22], which is an upgraded version of management and computer operating systems, is to set task priorities, allocate human resources, and handle interruptions. With the help of HOOS, workers in knowledge factories could communicate and cooperate more efficiently, thus greatly reducing laborious and tedious work and the related physical and mental loads on biological workers. Much of the research on conventional and smart operating systems can be used in HOOS design and implementation [23]–[25].
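To make the HOOS functions of task prioritization and interruption more concrete, the following minimal Python sketch uses a priority queue. It is an illustration only: the class `TaskQueue`, its method names, the sample task names, and the convention that a lower number means a higher priority are all assumptions introduced here, not part of any HOOS specification.

```python
import heapq
import itertools


class TaskQueue:
    """Hypothetical HOOS-style task queue (lower number = higher priority)."""

    def __init__(self):
        self._heap = []
        # Monotonic counter keeps first-in, first-out order within one priority.
        self._counter = itertools.count()

    def submit(self, priority: int, name: str) -> None:
        """Register a task with its priority."""
        heapq.heappush(self._heap, (priority, next(self._counter), name))

    def next_task(self):
        """Pop the most urgent task name, or return None if the queue is empty."""
        if not self._heap:
            return None
        return heapq.heappop(self._heap)[2]

    def should_interrupt(self, running_priority: int) -> bool:
        """True if a waiting task is more urgent than the one currently running."""
        return bool(self._heap) and self._heap[0][0] < running_priority


queue = TaskQueue()
queue.submit(5, "routine inspection")
queue.submit(1, "emergency stop")
queue.submit(5, "restock materials")

# A task running at priority 3 would be interrupted by the waiting priority-1 task.
interrupt_now = queue.should_interrupt(3)
order = [queue.next_task() for _ in range(3)]
```

Allocating tasks to specific workers is left out of this sketch; in practice it would depend on worker type and availability, which the text does not specify.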

The Knowledge Workforce: Digital, Robotic, and Biological Workers

The knowledge workforce in knowledge factories is categorized into three primary classes: digital workers, robotic workers, and biological workers. The interplay of these worker types in knowledge factories is illustrated in Fig. 2. Biological workers are real humans, while robotic workers [26] are designed to aid biological workers in performing complex physical-world tasks, and digital workers are introduced to serve as virtual representations of both biological and robotic workers. The role and function of digital workers encompass facilitating human-machine interactions, coordinating tasks, conducting computational experiments, and other activities that broaden the scope of both biological and robotic workers [27].

The advancements in foundation model technologies, exemplified by tools like ChatGPT, should accelerate the integration of digital workers in knowledge factories [12], [28]. Digital, robotic, and biological workers interact, align, and collaborate under the DAOs (Decentralized Autonomous Organizations and Decentralized Autonomous Operations) framework [17], [29]. The various elements of physical, social, and cyber spaces interact with each other through digital workers to ensure the completion of required tasks under distributed, decentralized, autonomous, automated, organized, and orderly working environments.

In knowledge factories, digital workers should be the primary source of workforce, facilitating the synergy between biological and robotic workers by automating task distribution and process creation. In our current design, at least 80% of the total workforce should consist of digital workers. Robotic workers, responsible mainly for physical tasks, should make up no more than 15% of the workforce. Biological workers are responsible for decision-making and emergency intervention and should be less than 5% of the total workforce. Knowledge factories utilize HOOS to achieve interaction and collaboration among the three types of knowledge workers. By relying on a majority of digital and robotic workers, knowledge factories boost efficiency, lessen the strain on biological workers, save resources, and promote sustainable production.
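As a simple illustration, the workforce proportions stated above can be checked for a given headcount. In the sketch below, only the three thresholds come from the text; the function name `check_workforce` and the sample headcounts are hypothetical.

```python
def check_workforce(digital: int, robotic: int, biological: int) -> dict:
    """Check a headcount against the design targets stated in the text.

    Targets: digital >= 80%, robotic <= 15%, biological < 5% of the total.
    Integer arithmetic is used so that boundary cases are not affected by
    floating-point rounding.
    """
    total = digital + robotic + biological
    if total <= 0:
        raise ValueError("workforce must contain at least one worker")
    return {
        "digital_ok": digital * 100 >= 80 * total,
        "robotic_ok": robotic * 100 <= 15 * total,
        "biological_ok": biological * 100 < 5 * total,
    }


# Hypothetical factory: 820 digital, 140 robotic, and 40 biological workers.
compliant = check_workforce(820, 140, 40)

# Hypothetical counter-example dominated by human and robotic labor.
non_compliant = check_workforce(70, 20, 10)
```

The first composition satisfies all three targets, while the second violates each of them.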

Fig. 2. The knowledge workforce in knowledge factories.

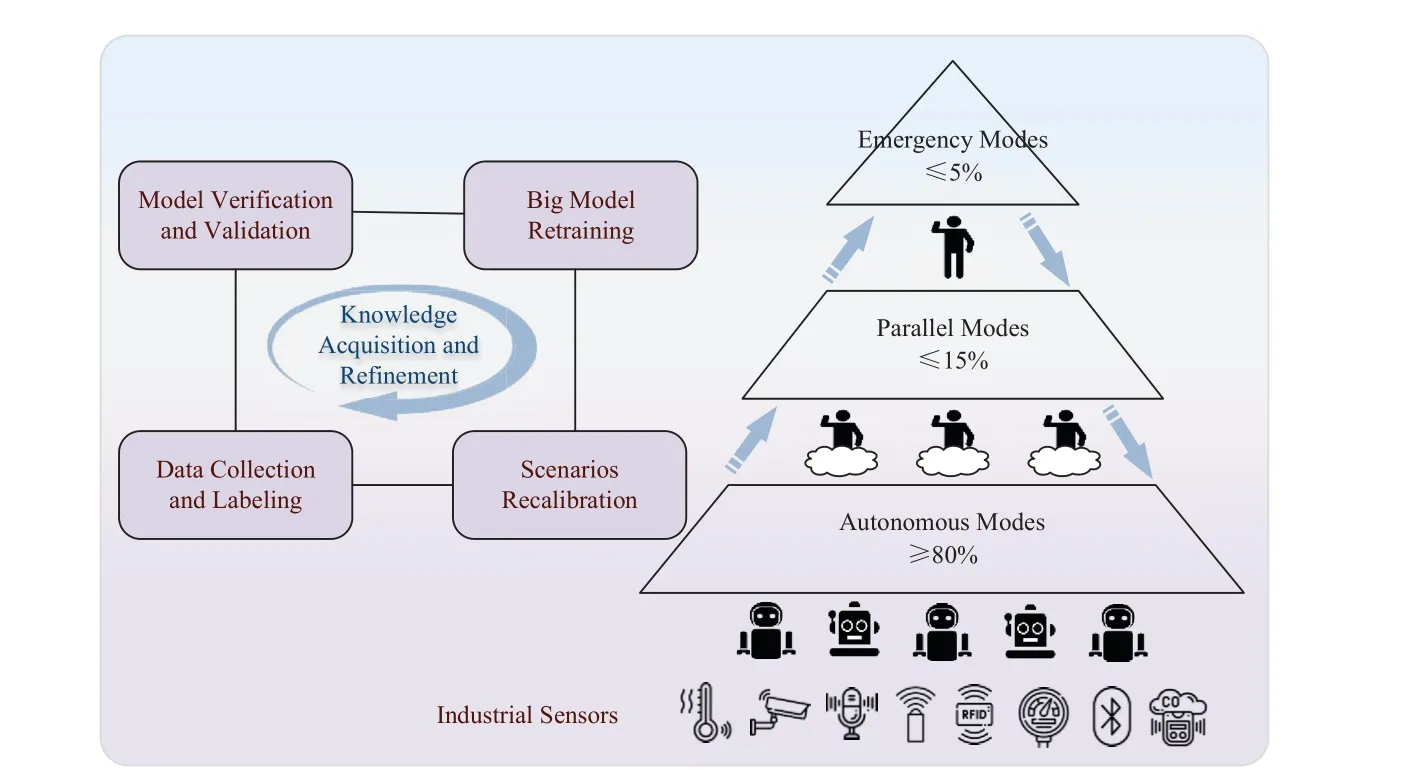

The Process for Knowledge Automation: APeM

The process for knowledge automation involves three distinct working modes: autonomous modes (AM), parallel modes (PM), and expert/emergency modes (EM), collectively known as APeM. These modes play various roles in the workflow of knowledge factories, as illustrated in Fig. 3. AM represents the ultimate concept of unmanned factories. AM should be the primary mode of operations, accounting for over 80% of the production process and requiring mainly the involvement of digital and robotic workers. PM should be activated in fewer than 15% of cases, providing remote access for human experts to resolve any unforeseen issues or failures that arise during production. If an issue persists even after PM deployment, the corresponding production process switches to EM, which accounts for less than 5% of the time and in which experts or emergency teams are dispatched to the site to resolve the problem directly. Once the issue is rectified, the production process reverts to PM, is monitored remotely for a set duration, and then transitions back to AM.
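The mode switching described above can be read as a small state machine. The following Python sketch is one possible reading, not the authors' implementation: the event names are hypothetical, and it assumes that an issue resolved remotely keeps the process in PM until the monitoring period has passed.

```python
from enum import Enum


class Mode(Enum):
    AM = "autonomous"
    PM = "parallel"
    EM = "expert/emergency"


class Event(Enum):
    # Hypothetical event names chosen for this illustration.
    ISSUE_DETECTED = "unforeseen issue or failure during production"
    REMOTE_FIX_FAILED = "issue persists after remote intervention"
    ONSITE_FIX_OK = "issue rectified by the on-site team"
    MONITORING_PASSED = "remote monitoring period ended without problems"


# Transitions taken from the description of APeM in the text.
TRANSITIONS = {
    (Mode.AM, Event.ISSUE_DETECTED): Mode.PM,
    (Mode.PM, Event.REMOTE_FIX_FAILED): Mode.EM,
    (Mode.EM, Event.ONSITE_FIX_OK): Mode.PM,
    (Mode.PM, Event.MONITORING_PASSED): Mode.AM,
}


def step(mode: Mode, event: Event) -> Mode:
    """Return the next mode; events with no defined transition leave it unchanged."""
    return TRANSITIONS.get((mode, event), mode)


def run(events, start: Mode = Mode.AM) -> list:
    """Replay a sequence of events and return the list of visited modes."""
    trace = [start]
    for event in events:
        trace.append(step(trace[-1], event))
    return trace


# An issue that cannot be solved remotely: AM -> PM -> EM -> PM -> AM.
trace = run([
    Event.ISSUE_DETECTED,
    Event.REMOTE_FIX_FAILED,
    Event.ONSITE_FIX_OK,
    Event.MONITORING_PASSED,
])
```

A table-driven design keeps the allowed transitions in one place, so an escalation path can be audited or extended without touching the control logic.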

Fig. 3. The process for knowledge automation: APeM.

In general, PM should address unpredictable and rare long-tail issues in most production processes. These issues might involve unexpected defects in a production chain or an equipment malfunction. Using this mode, experts can manipulate robotic workers and identify problematic areas through anomaly detection and diagnosis during remote access operations. Nonetheless, some production challenges elude solution via PM, especially if the data is not accessible to industrial sensors or robotic workers, or if robotic workers cannot emulate specific human actions. In such cases, the data in actual factories should be collected and labeled, and related scenarios need to be recalibrated. Big models undergo iterative retraining as new data is introduced, followed by verification and validation to ensure the revised models are up to par. Knowledge acquisition and refinement will then be achieved as modes are toggled.

Concluding Remarks

This article presents the framework of building knowledge factories with knowledge machines for knowledge automation by knowledge workers. Equipped with domain-specific big models, digital and robotic workers would assist biological workers in performing decision-making, resource coordination, and task execution. Through knowledge processing under AM, PM, and EM, big models are iteratively optimized and verified through scenarios engineering, acquiring new knowledge and refining their knowledge bases.

Current big models lack the ability to defend against malicious attacks, as well as the capability to reason about complex problems. In the future, for trustworthy and explainable knowledge factories, it is essential to incorporate federated intelligence and smart contracts technologies in constructing and training big models to ensure their safety, security, sustainability, privacy, and reliability.

ACKNOWLEDGMENT

This work was partially supported by the Science and Technology Development Fund of Macau SAR (0050/2020/A1).

IEEE/CAA Journal of Automatica Sinica, 2023, Issue 11
