Research on Maize Seed Classification Method Based on Convolutional Neural Network

Guowen ZHANG, Shuxin YIN

Abstract

The quality of maize seeds affects the outcome of planting and harvesting, so seed quality inspection is very important. Traditional seed quality detection methods are labor-intensive and time-consuming, whereas detection using computer vision techniques is efficient and accurate. In this study, transfer learning was used to train the AlexNet, VGG11 and ShuffleNetV2 network models, and a comparison of several metrics showed that ShuffleNetV2 achieved high accuracy in maize seed classification and recognition. The seed images were first pre-processed, and the AlexNet, VGG11 and ShuffleNetV2 models were then used for training and classification. A total of 2 081 seed images covering four varieties were used for training and testing. The experimental results showed that ShuffleNetV2 could efficiently distinguish different varieties of maize seeds, with the highest classification accuracy of 100%, a model parameter size of 20.65 MB and a response time of 0.45 s for a single image. Therefore, the method has high practical and extension value.

Key words: Convolutional neural network; Deep learning; Variety classification

DOI:10.19759/j.cnki.2164-4993.2023.04.026

Maize seeds are an indispensable means of production in today's society. China has a large population and a high demand for food. The classification and identification of maize varieties not only helps farmers to select better varieties and optimize agricultural production, but also supports food safety and quality control. Research into maize quality classification is therefore particularly important. In recent years, computer vision and machine learning techniques have become increasingly mature, and crop variety classification has shifted from traditional manual methods to computer vision-based methods.

Lyu et al.[1] proposed a fine-grained image classification method based on the ResNet network for accurate classification of maize seeds, with which the accuracy of the network model was maintained at 92%. Shi et al.[2] improved the VGG network by adding a CBAM-Inception structure to it, and the trained model finally achieved an accuracy of 96%. Wang et al.[3] conducted experiments using an improved InceptionV3 model and compared the results with those of traditional machine learning algorithms, achieving an accuracy of 94.39%. Dai et al.[4] proposed a residual network-based recognition model for grapevine leaf disease identification, and comparison tests showed an accuracy of 98.02% for disease identification.

In summary, the accuracy of most models remains at around 90%, while the models have large numbers of parameters and recognition is slow. Applications on embedded devices need a model with fast detection speed and high accuracy. Using traditional convolutional neural networks to identify maize varieties is time-consuming and gives low accuracy, and does not meet practical needs well.
Therefore, in this study, the AlexNet, VGG11 and ShuffleNetV2 network models were compared in order to establish a ShuffleNetV2-based maize variety classification method.

Materials and Methods

Data sources and processing

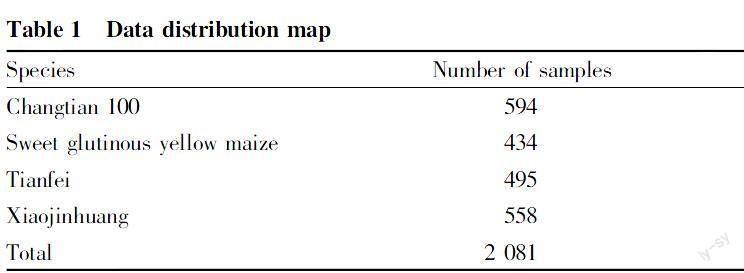

Test materials

The data set samples for this study were collected at Bayi University of Agriculture and Conservation in Heilongjiang. The samples were seeds of four varieties: Changtian 100, sweet glutinous yellow maize, Tianfei and Xiaojinhuang. A predominantly dark background was chosen, and images were captured under the equipment light sources both during the day and at night. The distribution of the data is shown in Table 1.

Image pre-processing

Image pre-processing refers to processing and cleaning the raw data prior to modeling. In all experiments in this study, the data were processed before training through the following steps: normalisation to eliminate differences in brightness between images, resizing so that all images have a uniform size for subsequent processing, and adjustment of image brightness and contrast to improve visualisation and feature differentiability[5].

The aim was to obtain the best pre-processing effect and, in turn, a network model with high robustness and accuracy.
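The three pre-processing steps described above can be illustrated with a minimal pure-Python sketch. The function names, the nested-list grayscale image, the nearest-neighbour resizing, the min-max normalisation and the linear brightness/contrast formula are all assumptions made for illustration; the paper does not state which implementation or parameter values were used.

```python
def normalize(img):
    """Min-max scale pixel values to [0, 1] to reduce brightness differences."""
    flat = [p for row in img for p in row]
    lo, hi = min(flat), max(flat)
    span = (hi - lo) or 1  # avoid division by zero on a uniform image
    return [[(p - lo) / span for p in row] for row in img]


def resize_nearest(img, out_h, out_w):
    """Nearest-neighbour resize so that every image has the same shape."""
    in_h, in_w = len(img), len(img[0])
    return [[img[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
            for r in range(out_h)]


def adjust(img, contrast=1.0, brightness=0.0):
    """Linear contrast/brightness change, clipped back into [0, 1]."""
    return [[min(1.0, max(0.0, contrast * p + brightness)) for p in row]
            for row in img]


def preprocess(img, size=(4, 4), contrast=1.2, brightness=0.05):
    """Hypothetical pipeline: normalise, resize, then adjust (values are illustrative)."""
    return adjust(resize_nearest(normalize(img), *size), contrast, brightness)


if __name__ == "__main__":
    tiny = [[0, 255], [128, 64]]  # made-up 2x2 grayscale image
    for row in preprocess(tiny):
        print(row)
```

In practice an image library would replace these helpers; the sketch only shows the order of operations and that every output pixel stays in a common range.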

Identification methods

AlexNet network

AlexNet was one of the first deep convolutional neural network models to be successfully trained on a large-scale dataset[6]. Its design contains multiple convolutional and fully connected layers. AlexNet mainly uses the ReLU activation function, which accelerates the convergence of the model during training and alleviates the vanishing gradient problem. Dropout regularization is also used: a random portion of the neurons in the fully connected layers is dropped during training to reduce the complexity of the neural network and improve generalisation. This network was used as a comparison model in this experiment.
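The two mechanisms mentioned for AlexNet, ReLU activation and dropout, can be sketched in a few lines. This is a minimal illustration on plain Python lists, assuming the common "inverted dropout" formulation and a drop probability of 0.5; neither detail is given in the paper.

```python
import random


def relu(xs):
    """ReLU: negative activations become 0, positive ones pass through unchanged."""
    return [max(0.0, x) for x in xs]


def dropout(xs, p=0.5, training=True, rng=random):
    """Inverted dropout: zero each value with probability p and scale the
    survivors by 1 / (1 - p) so the expected activation is unchanged."""
    if not training or p == 0.0:
        return list(xs)  # dropout is disabled at inference time
    keep = 1.0 - p
    return [x / keep if rng.random() < keep else 0.0 for x in xs]


if __name__ == "__main__":
    activations = relu([-1.5, 0.3, 2.0, -0.2])
    print(activations)
    print(dropout(activations, p=0.5, rng=random.Random(0)))
```

Because ReLU has a gradient of 1 for positive inputs, gradients do not shrink as they pass through active units, which is the usual explanation for the faster convergence noted above.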

VGG11 network

VGG11 is a convolutional neural network model with 11 weight layers. VGG was proposed by the Visual Geometry Group at the University of Oxford, and uses very small 3×3 convolutional kernels and a deep network structure to achieve good image classification results. The input to the VGG11 model is a color image of size 224×224. This network model was used as a comparison model in this experiment.
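A short calculation shows why stacking small 3×3 kernels is attractive: two stacked 3×3 layers cover the same 5×5 receptive field as a single 5×5 layer while using fewer weights. The channel count of 64 below is an illustrative assumption, and biases are ignored.

```python
def conv_weights(kernel, c_in, c_out):
    """Number of weights in a standard 2D convolution (bias ignored)."""
    return kernel * kernel * c_in * c_out


def stacked_receptive_field(kernel, layers):
    """Receptive field of `layers` stacked stride-1 convolutions."""
    return 1 + layers * (kernel - 1)


if __name__ == "__main__":
    c = 64  # illustrative channel count, not taken from the paper
    print(stacked_receptive_field(3, 2))   # two 3x3 layers see a 5x5 region
    print(2 * conv_weights(3, c, c))       # weights in two 3x3 layers
    print(conv_weights(5, c, c))           # weights in one 5x5 layer
```

With 64 channels, the two 3×3 layers use 73 728 weights against 102 400 for the single 5×5 layer, and they add an extra non-linearity between the layers.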

ShuffleNetV2 network

ShuffleNetV2 is a lightweight convolutional neural network designed mainly to address the limits on model size and computation encountered in real-time image processing on mobile and embedded devices. Its main features are channel shuffle, grouped convolution and pointwise convolution. Channel shuffle divides the input feature maps into groups and rearranges them along the channel dimension, which promotes the flow of information between groups. Grouped convolution splits the convolution into smaller groups of kernels, and the kernels in each group are convolved only with the input feature maps of the same group, which reduces the amount of computation and the number of model parameters. Pointwise convolution applies a 1×1 convolution kernel to the feature map for feature fusion and dimensionality reduction along the channel dimension. Fig. 1 shows the network structure of ShuffleNetV2.
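The channel rearrangement and the parameter saving from grouped convolution can be made concrete with a small sketch. It operates on a list of channel indices rather than real feature maps, and the function names, group counts and channel counts are assumptions chosen for illustration only.

```python
def channel_shuffle(channels, groups):
    """Interleave channels across groups.

    Equivalent to reshaping to (groups, n // groups), transposing,
    and flattening, so the next grouped layer sees every group.
    """
    n = len(channels)
    if n % groups:
        raise ValueError("channel count must be divisible by groups")
    per_group = n // groups
    return [channels[g * per_group + i]
            for i in range(per_group) for g in range(groups)]


def grouped_conv_weights(kernel, c_in, c_out, groups=1):
    """Weights in a (grouped) 2D convolution, bias ignored.

    Each output channel only connects to c_in // groups input channels.
    """
    return kernel * kernel * (c_in // groups) * c_out


if __name__ == "__main__":
    print(channel_shuffle(list(range(6)), groups=2))   # [0, 3, 1, 4, 2, 5]
    print(grouped_conv_weights(3, 64, 64, groups=1))   # standard 3x3 convolution
    print(grouped_conv_weights(3, 64, 64, groups=4))   # same layer with 4 groups
    print(grouped_conv_weights(1, 64, 32))             # pointwise 1x1 reduction
```

With 4 groups the 3×3 layer needs a quarter of the weights of the standard layer, and the shuffle step keeps information from being confined to a single group.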

Transfer learning and model training

Transfer learning

Transfer learning applies an already trained model to the target-domain task in order to speed up and improve training on that task. The main idea is to transfer the parameters learned in the source domain to the target domain, so that the knowledge already acquired in the source domain helps with the new learning task[7]. When traditional deep learning and machine learning methods face changes in data distribution, dimensionality or model output, the models are inflexible and the results are unsatisfactory; transfer learning helps to address these problems. In this experiment, transfer learning was used to train the VGG11, AlexNet and ShuffleNetV2 network models, in order to cope with insufficient data, limited computing resources and poor model performance.

Model training

In this study, four varieties of maize seeds were classified and identified, and 1 875 images were input into the convolutional neural networks for training. The network structures described above were used in the training process. The SGD optimizer was used during training, with the learning rate set to 0.000 3, the momentum set to 0.9 and the batch size set to 32. The cross-entropy loss function was used as the loss function. The accuracy of the network model reached its highest value after 35 iterations and then remained stable.
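The training settings above can be tied to their formulas with a minimal sketch. It shows softmax cross-entropy for one sample and one common formulation of the SGD-with-momentum update, using the learning rate, momentum and batch size reported in the text; the function names and the toy numbers in the demo are assumptions, and this is not the authors' training code.

```python
import math


def cross_entropy(logits, target):
    """Softmax cross-entropy for one sample; `target` is the true class index."""
    m = max(logits)  # subtract the maximum for numerical stability
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum - logits[target]


def sgd_momentum_step(params, grads, velocity, lr=0.0003, momentum=0.9):
    """One update: v <- momentum * v + g, then p <- p - lr * v."""
    new_v = [momentum * v + g for v, g in zip(velocity, grads)]
    new_p = [p - lr * v for p, v in zip(params, new_v)]
    return new_p, new_v


def batches_per_epoch(n_samples, batch_size=32):
    """Number of mini-batches needed to pass over the training set once."""
    return math.ceil(n_samples / batch_size)


if __name__ == "__main__":
    # Four classes with equal logits: the loss equals ln(4).
    print(cross_entropy([0.0, 0.0, 0.0, 0.0], target=2))
    params, velocity = [1.0], [0.0]
    for _ in range(2):  # two updates with a made-up constant gradient
        params, velocity = sgd_momentum_step(params, [2.0], velocity)
    print(params, velocity)
    print(batches_per_epoch(1875))  # 59 mini-batches of up to 32 images
```

With 1 875 training images and a batch size of 32, one pass over the training set takes 59 mini-batches, the last of which is only partly filled.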

Results and Analysis

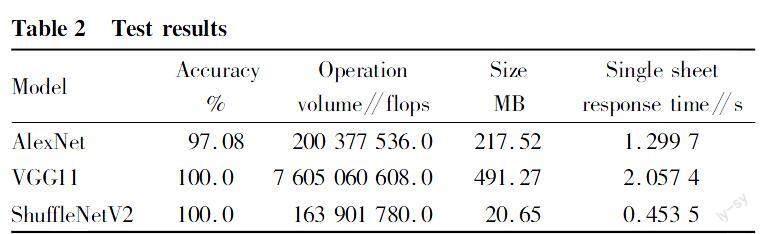

In this study, the 2 081 images were divided into a training set of 1 875 images and a test set of 206 images, and the AlexNet, VGG11 and ShuffleNetV2 models were compared under the same experimental conditions. As shown in Table 2, the accuracy of the VGG11 and ShuffleNetV2 models reached 100%, while that of the AlexNet model was 97.08%, so ShuffleNetV2 was about 3 percentage points more accurate than AlexNet. In terms of parameter size, the ShuffleNetV2 model was 20.65 MB. In terms of single-image run time, the ShuffleNetV2 model took 0.453 s, which was 0.8 and 1.6 s faster than AlexNet and VGG11, respectively. The comparison showed that the ShuffleNetV2 model matched or exceeded the other two models in accuracy and outperformed them in parameter size and speed, demonstrating its superiority in classifying maize varieties. The ShuffleNetV2 model is also more suitable for deployment on embedded platforms because of its fast single-image response and small number of model parameters.

The analysis of the confusion matrix shown in Fig. 1 effectively demonstrates the superiority of ShuffleNetV2 on this maize dataset.
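For readers unfamiliar with how a confusion matrix is read, overall accuracy and per-class recall can be computed as follows. The matrix in this sketch is hypothetical and is not the one in the paper's figure; rows are assumed to be true varieties and columns predicted varieties.

```python
def accuracy(cm):
    """Fraction of samples on the diagonal (correctly classified)."""
    correct = sum(cm[i][i] for i in range(len(cm)))
    total = sum(sum(row) for row in cm)
    return correct / total


def per_class_recall(cm):
    """For each true class, the share of its samples that were predicted correctly."""
    return [cm[i][i] / sum(cm[i]) if sum(cm[i]) else 0.0
            for i in range(len(cm))]


if __name__ == "__main__":
    # Hypothetical 4-class matrix: one sample of class 1 is mistaken for class 2.
    cm = [
        [10, 0, 0, 0],
        [0, 9, 1, 0],
        [0, 0, 10, 0],
        [0, 0, 0, 10],
    ]
    print(accuracy(cm))          # 0.975
    print(per_class_recall(cm))  # [1.0, 0.9, 1.0, 1.0]
```

A model with 100% accuracy, as reported for ShuffleNetV2 and VGG11, corresponds to a matrix whose off-diagonal entries are all zero.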

Discussion

The ShuffleNetV2 model was evaluated by comparing its results with those of the VGG11 and AlexNet models. It matched VGG11 and exceeded AlexNet in accuracy, and outperformed both models in speed and model size. By photographing maize seeds and feeding the images into the network model, a high accuracy rate could be achieved in a short time.

Conclusions

In this study, convolutional neural networks were used to perform convolution operations on maize seed images and extract image features. Comparison of the experimental results showed that the ShuffleNetV2 model achieved high classification accuracy while maintaining a clear advantage in recognition speed and number of model parameters.

References

[1] LYU MQ, ZHANG RX, JIA H, et al. Research on improved ResNet-based maize seed classification method[J]. Chinese Journal of Agricultural Chemistry, 2021, 42(4): 92-98. (in Chinese).

[2] SHI YP, WANG ZG. Research on disease identification of maize leaves based on convolutional neural network[J]. Agriculture and Technology, 2023, 43(6): 24-29. (in Chinese).

[3] WANG LB, LIU JY, ZHOU YH, et al. Quality detection of maize seeds based on watershed algorithm combined with convolutional neural network[J]. Chinese Journal of Agricultural Chemistry, 2021, 42(12): 168-174. (in Chinese).

[4] WANG MJ, YIN F. Multiscale improvement of convolutional neural network and its application in maize disease symptom recognition[J]. Journal of Henan Agricultural University, 2021, 55(5): 906-916. (in Chinese).

[5] DAI JJ, MA YH, WU J, et al. Identification of grape leaf diseases based on improved residual networks[J]. Jiangsu Agricultural Science, 2023, 51(5): 208-215. (in Chinese).

[6] HUANG T, CHEN YY, CHEN YM, et al. A non-image data preprocessing method for convolutional structures[J]. Journal of Hebei Normal University: Natural Science Edition, 2023, 47(3): 232-238. (in Chinese).

[7] HE Q, GUO FL, WANG ZH, et al. An improved AlexNet-based algorithm for classifying grape leaf diseases[J]. Journal of Yangzhou University: Natural Science Edition, 2023, 26(2): 52-58. (in Chinese).

Editor: Yingzhi GUANG
Proofreader: Xinxiu ZHU
