Deep Learning Applications Based on WISE Infrared Data: Classification of Stars, Galaxies and Quasars

Guiyu Zhao, Bo Qiu*, A-Li Luo, Xiaoyu Guo, Lin Yao, Kun Wang, and Yuanbo Liu

1 School of Electronic and Information Engineering, Hebei University of Technology, Tianjin 300401, China; 1263730840@qq.com, qiubo@hebut.edu.cn, 1799507446@qq.com, 1286789387@qq.com, 1848896968@qq.com, 1220617881@qq.com

2 CAS Key Laboratory of Optical Astronomy, National Astronomical Observatories, Beijing 100101, China; lal@bao.ac.cn

3 University of Chinese Academy of Sciences, Beijing 100049, China

Abstract The Wide-field Infrared Survey Explorer (WISE) has detected hundreds of millions of sources over the entire sky. However, classifying them reliably is a great challenge due to degeneracies in WISE multicolor space and low detection levels in its two longest-wavelength bandpasses. In this paper, a deep learning classification network, IICnet (Infrared Image Classification network), is designed to classify sources from WISE images more accurately. IICnet shows a good ability to extract the features of WISE sources. Experiments demonstrate that IICnet outperforms several other methods: it obtains 96.2% accuracy for galaxies, 97.9% for quasars, and 96.4% for stars, and the Area Under the Curve (AUC) of the IICnet classifier exceeds 99%. In addition, comparisons with VGG16, GoogleNet, ResNet34, MobileNet, EfficientNetV2, and RepVGG demonstrate the advantages of IICnet in processing infrared images: fewer parameters and faster inference. These results show that IICnet is an effective method for classifying infrared sources.

Key words: methods: data analysis – techniques: image processing – infrared: general

1. Introduction

Infrared astronomical observation is one of the most important branches of observational astronomy today. It mainly focuses on the study of various types of celestial sources through observations in the infrared band (Glass 1999), and objects that are too dim in the visible band can also be detected in the infrared band.

The Earth is surrounded by a thick atmosphere that contains many substances, such as water vapor, carbon dioxide, oxygen, and ozone. These strongly scatter and absorb celestial radiation at infrared wavelengths (Liou 2002), which limits ground-based infrared astronomical observations. Infrared observations have therefore been made from airborne observatories, such as the Kuiper Airborne Observatory (Erickson et al. 1985) and the Stratospheric Observatory for Infrared Astronomy (Erickson 1992), and from infrared space telescopes, such as the Infrared Astronomical Satellite (Duxbury & Soifer 1980), the Infrared Space Observatory (Kessler et al. 1996), and the Wide-field Infrared Survey Explorer (WISE) (Wright et al. 2010).

Classification is an essential means for humans to acquire knowledge, and the classification of celestial targets has been studied for a long time (Lintott et al. 2008). Separating galaxies, quasars, and stars is one of the most fundamental classification tasks in astronomy (Kim & Brunner 2016; Ethiraj & Bolla 2022). Celestial objects are usually classified either spectroscopically or by their morphology in images.

Spectral classification is very popular, and many works have been reported. The classification of stars, galaxies, and quasars by spectroscopy has been widely studied, but it generally requires a large workload because the observed spectra must be compared with templates. Later, a random forest method was applied to the same task, but the classification accuracy for quasars was only 94% (Bai et al. 2018).

Morphological classification is also common. A self-supervised learning method was used to classify the three classes based on photometric images, and its accuracy reached only 88% (Martinazzo et al. 2021). Other researchers have classified sources into stars, galaxies, and quasars with high accuracy from Sloan Digital Sky Survey (SDSS) photometric images using deep learning methods, which is instructive for our work (He et al. 2021).

A support vector machine (SVM) (Steinwart & Christmann 2008) method was used to classify the three classes based on WISE and SDSS data with information from the W1 band (Kurcz et al. 2016). Galaxy morphology classification based on WISE infrared images has also been investigated (Guo et al. 2022), and this work takes the classification of infrared images a step further.

In this paper, the data and their pre-processing are introduced in Section 2; the Infrared Image Classification Network (IICnet) and its modules are described in Section 3; the classification results and comparison experiments are presented in Section 4; the experimental results are analyzed in Section 5; and the paper is summarized in Section 6.

2. Data

The data set is constructed from selected WISE infrared images (https://irsa.ipac.caltech.edu/applications/wise/).

2.1. Data Preparation

WISE has four bands, W1, W2, W3, and W4, at wavelengths of 3.4 μm, 4.6 μm, 12 μm, and 22 μm, respectively (Wright et al. 2010). The WISE All-Sky images and source catalog, released in 2012 March, contain over 563 million objects and provide a massive amount of information on the mid-infrared (MIR) properties of many different types of celestial objects and related phenomena (Wright et al. 2010; Tu & Wang 2013). By 2013, WISE had detected over 747 million objects with SNR > 5, which were publicly released in the AllWISE source catalog (Cutri et al. 2013).

When raw data are retrieved from WISE with the image size set to 600″ (the default value), the image contains too many sources, as shown in Figure 1(a). To isolate the specific source at a given R.A. and decl., the image size is set to 50″, as shown in Figure 1(b). The data corresponding to each R.A. and decl. in this paper were obtained from the Infrared Science Archive (IRSA; https://irsa.ipac.caltech.edu/frontpage/). The W1, W2, W3, and W4 band information of the corresponding sources is obtained from WISE after cross-matching SDSS (http://skyserver.sdss.org/CasJobs/SubmitJob.aspx) with WISE, which forms the experimental database of this project.

Figure 1. Images corresponding to different angular sizes. We chose 50″ for processing, whereas the WISE website defaults to 600″.

2.2. Image Pre-processing

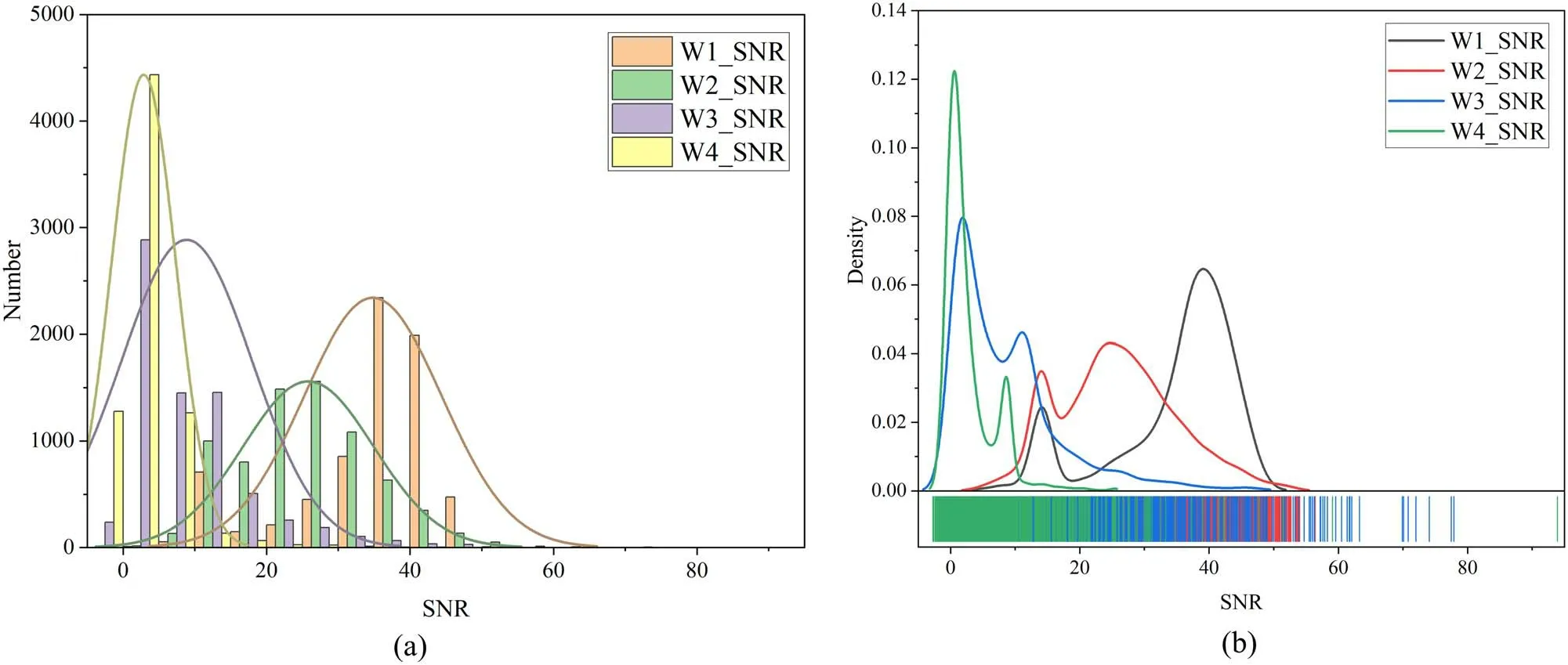

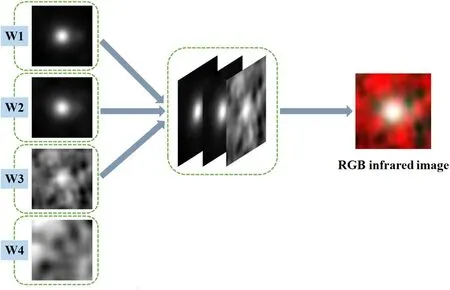

WISE image classification can be adversely affected by excessive dust around the sources. As shown in Figure 2, the W4 band contains more dust emission and has a lower signal-to-noise ratio (SNR) than the other three bands. Because W4 exhibits a significantly lower SNR, the W1, W2, and W3 bands are used in this paper as the three channels of an RGB image to synthesize the infrared image, as shown in Figure 3.

Figure 2. Statistics and probability distribution of the SNR for the four bands. (a) Statistics of the SNR. (b) Probability distribution of the SNR.

Figure 3. A galaxy image in the W1, W2, W3, and W4 bands and an RGB infrared image synthesized from W1, W2, and W3.
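The band-to-channel synthesis can be sketched as follows. This is a minimal NumPy illustration under stated assumptions: the W1→R, W2→G, W3→B ordering and the 1st to 99th percentile stretch per band are our own choices, since the text does not say how each band was scaled before stacking, and the random arrays only stand in for co-registered cutouts.

```python
import numpy as np

def normalize_band(band, low_pct=1.0, high_pct=99.0):
    """Clip one band to a percentile range and rescale it to [0, 1].

    The percentile stretch is an assumed choice; the paper does not say how
    the bands were scaled before stacking.
    """
    band = np.asarray(band, dtype=np.float64)
    lo, hi = np.percentile(band, [low_pct, high_pct])
    if hi <= lo:  # flat cutout: avoid dividing by zero
        return np.zeros_like(band)
    return np.clip((band - lo) / (hi - lo), 0.0, 1.0)

def synthesize_rgb(w1, w2, w3):
    """Stack W1, W2, W3 (assumed order R, G, B) into an 8-bit RGB image."""
    channels = [normalize_band(b) for b in (w1, w2, w3)]
    rgb = np.stack(channels, axis=-1)            # (H, W, 3), values in [0, 1]
    return np.round(rgb * 255).astype(np.uint8)

# Random arrays stand in for three co-registered single-band cutouts.
rng = np.random.default_rng(0)
w1, w2, w3 = (rng.normal(size=(32, 32)) for _ in range(3))
img = synthesize_rgb(w1, w2, w3)
print(img.shape, img.dtype)  # (32, 32, 3) uint8
```

Scaling each band independently keeps a bright W1 source from saturating the fainter W3 channel; a shared stretch across bands would instead preserve relative fluxes, which may matter for color-sensitive classification.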

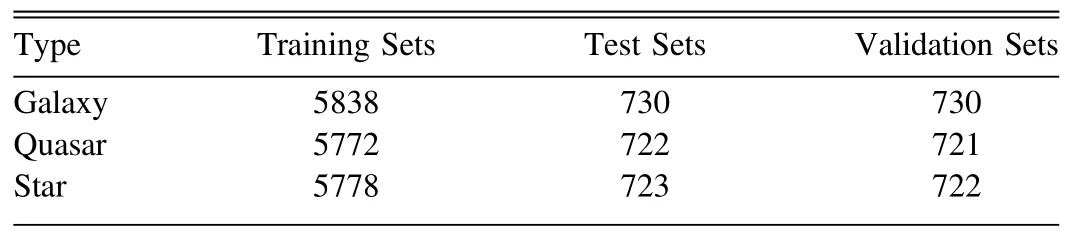

Finally, 7298 galaxy images, 7215 quasar images, and 7223 star images are chosen to form the data set. The class sizes are approximately equal, which keeps the classes balanced, as deep learning algorithms require. The data set is randomly divided into training, validation, and test sets with a ratio of 8:1:1, as shown in Table 1.

Table 1. Data Set Division of Three Types of Celestial Bodies
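A random 8:1:1 split can be sketched with the standard library alone. This is a hedged illustration: splitting each class separately, the integer-division sizing, and the seed are assumptions, so the resulting counts are not guaranteed to reproduce Table 1 exactly.

```python
import random

def split_8_1_1(items, seed=42):
    """Shuffle items and split them 8:1:1 into train/validation/test lists.

    Integer division decides the sizes; any remainder goes to the test set.
    """
    items = list(items)
    random.Random(seed).shuffle(items)      # seeded for reproducibility
    n_train = len(items) * 8 // 10
    n_val = len(items) // 10
    return (items[:n_train],
            items[n_train:n_train + n_val],
            items[n_train + n_val:])

# Splitting each class on its own keeps every subset balanced across classes.
class_sizes = {"galaxy": 7298, "quasar": 7215, "star": 7223}
for name, n in class_sizes.items():
    train, val, test = split_8_1_1(range(n))
    print(name, len(train), len(val), len(test))
```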

One difficulty of the classification is that some infrared images of galaxies, quasars, and stars look highly similar. As shown in Figure 4, they all have a bright source at the image center and lack obvious features that would let the human eye distinguish them. This paper introduces IICnet to perform the classification automatically. The method relies on the ability of convolutional neural networks to extract image features that the human eye cannot distinguish (Egmont-Petersen et al. 2002).

Figure 4. Sample images of each type. The three types of objects have confusing features. (a) A galaxy. (b) A star. (c) A quasar.

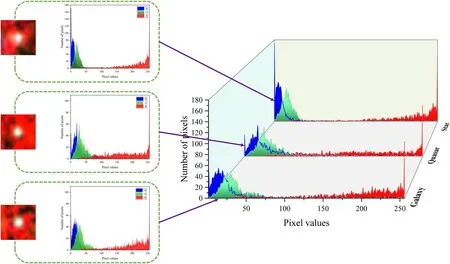

Figure 5. 3D waterfall plot of galaxies, stars, and quasars. On the left are the RGB histograms of sample images of a star, a quasar, and a galaxy, respectively; on the right is a 3D waterfall combination of the left panels.

When RGB histograms are used to distinguish the three images in Figure 4, the results are as shown in Figure 5: the three histograms are similar to each other. Because simple image features such as histograms cannot distinguish the three types, a deep learning method is designed to perform the classification.
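One way to put a number on "the histograms are similar" is histogram intersection. The sketch below is our own illustration, not the paper's procedure: the 32-bin histograms, the intersection score, and the synthetic Gaussian "sources" are all assumptions. It shows why images dominated by a dark background with a bright central source produce nearly identical histograms.

```python
import numpy as np

def rgb_histograms(img, bins=32):
    """Per-channel histograms of an 8-bit RGB image, each normalized to sum to 1."""
    return np.stack([
        np.histogram(img[..., c], bins=bins, range=(0, 256))[0] / img[..., c].size
        for c in range(3)
    ])

def histogram_intersection(h1, h2):
    """Channel-averaged sum of bin-wise minima: 1.0 for identical histograms."""
    return float(np.minimum(h1, h2).sum(axis=1).mean())

# Two synthetic "bright central source on a dark background" images.
yy, xx = np.mgrid[:80, :80]

def blob(peak, width):
    g = peak * np.exp(-((xx - 40) ** 2 + (yy - 40) ** 2) / (2 * width ** 2))
    return np.repeat(g[..., None], 3, axis=-1).astype(np.uint8)

a, b = blob(250, 3.0), blob(230, 3.5)
print(histogram_intersection(rgb_histograms(a), rgb_histograms(b)))
```

Because histograms discard all spatial arrangement, a score close to 1 says nothing about whether the central sources share a class, which is the gap a CNN is meant to fill.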

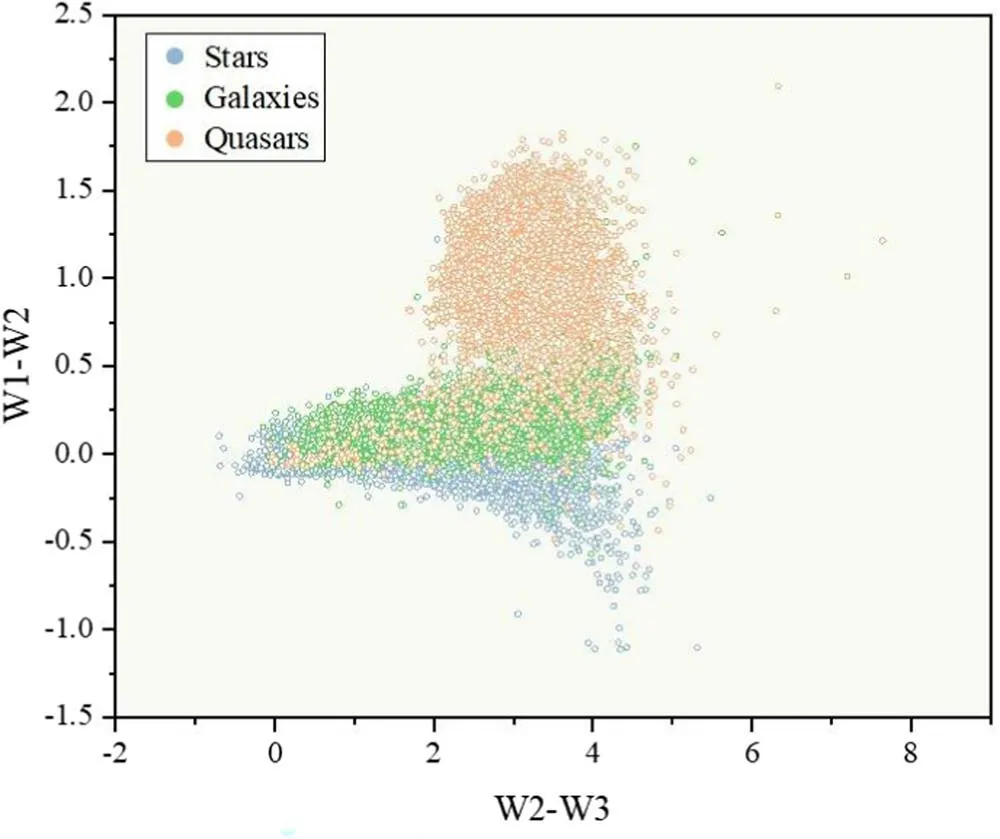

In the low-redshift universe, stars and galaxies exhibit very similar W1 − W2 colors (Kurcz et al. 2016). When a color–color diagram built from W1, W2, and W3 (Wright et al. 2010) is used to analyze the distributions of stars, galaxies, and quasars (Figure 6), large overlap regions appear among the three types, and the overlap between stars and galaxies is especially obvious. This illustrates that the infrared image classification task is difficult to accomplish by conventional means.

Figure 6. Color–color diagram showing the locations of the three types. There are large areas of overlap among the three types of objects.
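The coordinates on such a diagram are simple magnitude differences. The sketch below assumes the usual layout of W2 − W3 on the x-axis and W1 − W2 on the y-axis; the magnitudes are hypothetical, and `overlap_fraction` is a crude grid-based overlap measure of our own (its bin count and plotting extent are arbitrary), not a metric used in the paper.

```python
import numpy as np

def wise_colors(w1, w2, w3):
    """Return (W2 - W3, W1 - W2) in magnitudes: the (x, y) position of a
    source on a WISE color–color diagram."""
    return w2 - w3, w1 - w2

def overlap_fraction(xy_a, xy_b, bins=20, extent=((-1, 6), (-1, 3))):
    """Fraction of occupied grid cells that contain sources of both classes."""
    ha, _, _ = np.histogram2d(*np.asarray(xy_a).T, bins=bins, range=extent)
    hb, _, _ = np.histogram2d(*np.asarray(xy_b).T, bins=bins, range=extent)
    occupied = (ha > 0) | (hb > 0)
    return float(((ha > 0) & (hb > 0)).sum() / occupied.sum())

# Hypothetical magnitudes, for illustration only (not from the data set).
sources = [("source A", 12.0, 12.0, 11.9), ("source B", 15.0, 14.0, 11.5)]
for label, m1, m2, m3 in sources:
    x, y = wise_colors(m1, m2, m3)
    print(f"{label}: W2-W3 = {x:.2f}, W1-W2 = {y:.2f}")
```

A high `overlap_fraction` between two classes' color points would be one rough way to quantify the shared regions visible in Figure 6.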

3. Methods

In this paper, a new deep learning algorithm, IICnet, is designed to accomplish the task of infrared image classification. The experiments are implemented in Python with the PyTorch framework, and training is performed on an NVIDIA Tesla V100 GPU (5120 CUDA cores and 32 GB of memory).

3.1. Infrared Image Classification Network: IICnet

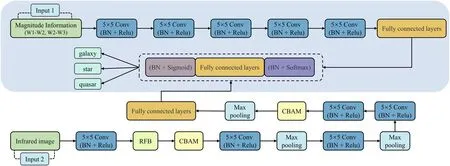

The structure of IICnet is shown in Figure 7. The network includes five convolutional layers, three down-sampling (pooling) layers, one feature extraction module (Receptive Field Block, RFB) (Liu et al. 2018), and two convolutional block attention modules (CBAM) (Woo et al. 2018), one following the RFB near the beginning of the network and one at the end.

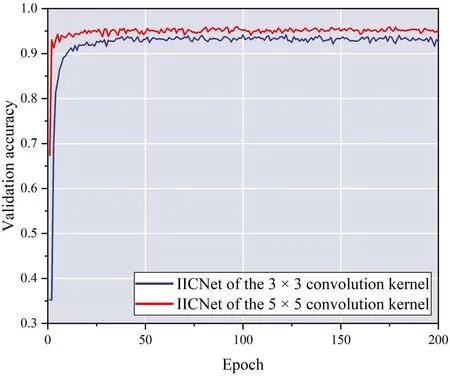

In IICnet, the first block uses a large 5×5 convolutional kernel; several researchers have shown that large convolutional kernels are more capable of extracting semantic information (Peng et al. 2017). Such a kernel draws information from a wider neighborhood of each pixel, preserving the relative integrity of the image information at the start of the convolution. The subsequent BN layer and ReLU help suppress gradient explosion and extract deeper semantic information. Experiments show that the 5×5 kernel outperforms the 3×3 kernel for this task: as shown in Figure 8, the validation accuracy of the network with the 5×5 kernel is significantly higher than that with the 3×3 kernel.

Figure 8. Validation accuracy for different convolution kernels. The accuracy with the 5×5 convolution kernel is significantly higher than with the 3×3 kernel.
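The conv → BN → ReLU ordering of the first block can be sketched in NumPy. This is an illustration only: the 16 output channels are hypothetical, the normalization uses single-image statistics without a learned scale or shift, and in PyTorch the same block would typically be written with `nn.Conv2d(3, 16, kernel_size=5, padding=2)`, `nn.BatchNorm2d(16)`, and `nn.ReLU()`.

```python
import numpy as np

def conv2d_same(x, w):
    """'Same'-padded 2-D convolution, computed as cross-correlation like CNN layers.

    x: (C_in, H, W) input; w: (C_out, C_in, k, k) kernel with odd k.
    """
    c_out, c_in, k, _ = w.shape
    pad = k // 2
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))    # zero padding
    H, W = x.shape[1:]
    out = np.zeros((c_out, H, W))
    for i in range(k):            # accumulate one kernel tap at a time
        for j in range(k):
            out += np.einsum("oc,chw->ohw", w[:, :, i, j], xp[:, i:i + H, j:j + W])
    return out

def batch_norm(x, eps=1e-5):
    """Per-channel standardization (single image, no learned scale or shift)."""
    mean = x.mean(axis=(1, 2), keepdims=True)
    var = x.var(axis=(1, 2), keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def first_block(x, w):
    """conv (5x5 here) -> BN -> ReLU."""
    return np.maximum(batch_norm(conv2d_same(x, w)), 0.0)

rng = np.random.default_rng(0)
x = rng.normal(size=(3, 80, 80))               # one 80x80 RGB input
w5 = 0.1 * rng.normal(size=(16, 3, 5, 5))      # 16 output channels: hypothetical
w3 = 0.1 * rng.normal(size=(16, 3, 3, 3))
print(first_block(x, w5).shape)                # (16, 80, 80)
print(w5.size, w3.size)                        # 1200 432 weights per layer
```

Each output pixel of the 5×5 layer sees 25 input positions per channel instead of 9, at the cost of roughly 2.8 times as many weights in that single layer.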

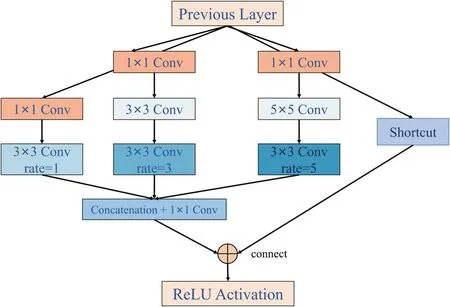

After the first convolutional layer, the raw feature map is fed to the Receptive Field Block (RFB, the first module) for further processing. As shown in Figure 9, RFB is a feature extraction module that enhances the feature extraction capability of the network by simulating the receptive fields of human vision. The first half of the module resembles GoogleNet: it uses multiple branches to simulate population receptive fields of various sizes and adds dilated convolutions to enlarge the receptive fields effectively. The latter half reproduces the relationship between the size and eccentricity of the population receptive field (pRF) (Wandell & Winawer 2015) in the human visual system, increasing the distinguishability and robustness of the features.

Figure 9. The architecture of RFB.
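The effect of dilation on receptive field size is easy to check arithmetically: a k×k kernel with dilation d spans k + (k − 1)(d − 1) pixels. The branch layouts below are modeled on the original RFB design (Liu et al. 2018) and are an assumption, since IICnet's exact branch settings are not given in the text.

```python
def effective_kernel(k, dilation):
    """Span in pixels of a k x k kernel with the given dilation rate."""
    return k + (k - 1) * (dilation - 1)

def stacked_receptive_field(layers):
    """Receptive field of a chain of stride-1 conv layers given as [(k, dilation), ...]."""
    rf = 1
    for k, d in layers:
        rf += effective_kernel(k, d) - 1
    return rf

# Branch layouts modeled on the original RFB (Liu et al. 2018); hypothetical for IICnet.
branches = {
    "1x1 -> 3x3, rate 1": [(1, 1), (3, 1)],
    "3x3 -> 3x3, rate 3": [(3, 1), (3, 3)],
    "5x5 -> 3x3, rate 5": [(5, 1), (3, 5)],
}
for name, layers in branches.items():
    print(name, stacked_receptive_field(layers))   # 3, 9, 15
```

The three branches cover receptive fields of 3, 9, and 15 pixels while every dilated layer keeps only 3×3 = 9 weights per channel pair, which is why dilation is a cheap way to widen the view.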

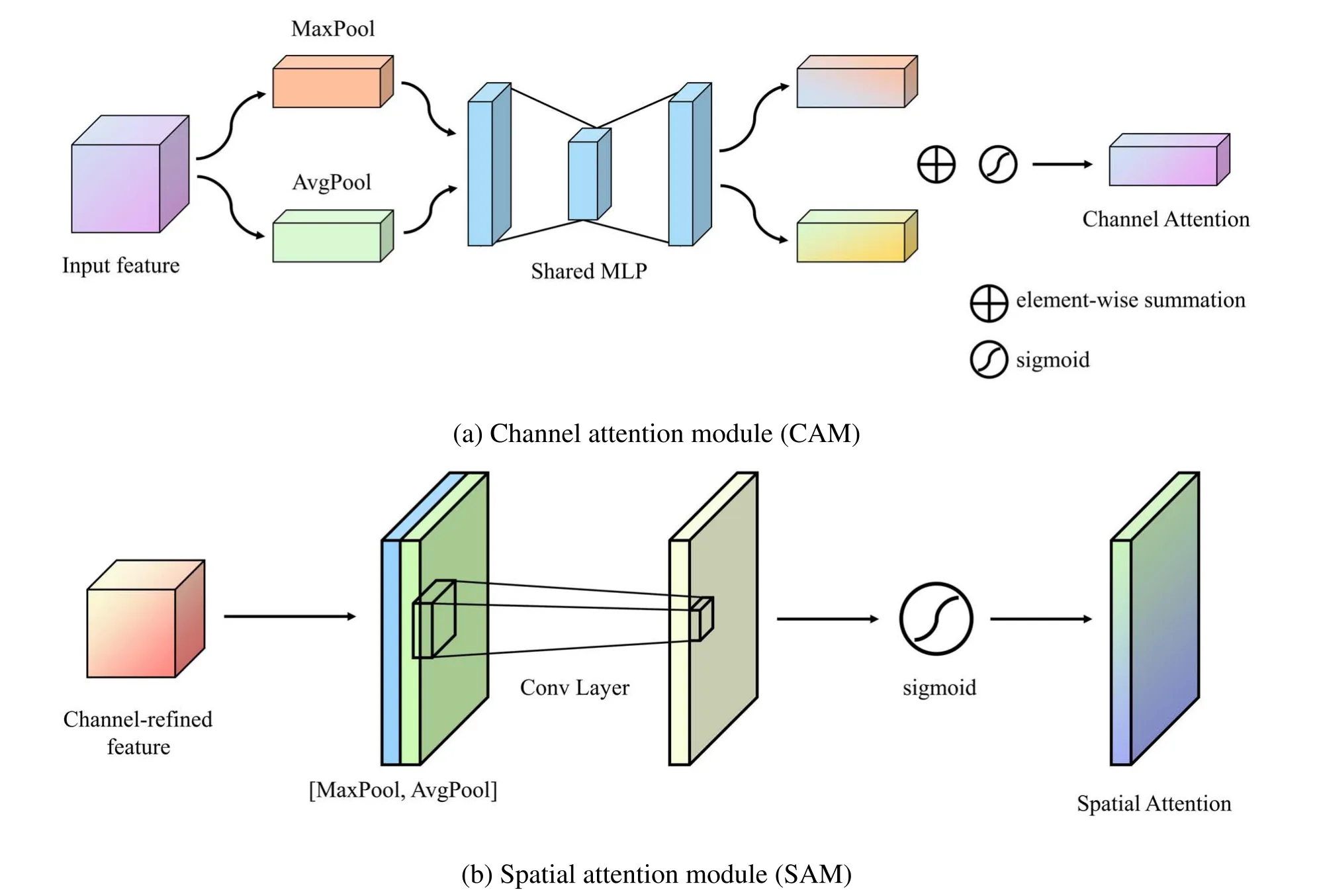

An attention module, the Convolutional Block Attention Module (CBAM, the second module), is connected after the RFB and at the last layer of the network. CBAM not only tells the network where to focus but also improves the representation of regions of interest. In IICnet it is intended to improve feature representation by emphasizing essential features and suppressing unnecessary ones. CBAM combines a channel attention module and a spatial attention module, as shown in Figure 10. The Channel Attention Module (CAM) is shown in Figure 10(a). For an input feature map, global max pooling and global average pooling first produce two one-dimensional channel descriptors; each is passed through a shared multilayer perceptron (MLP), and the two outputs are summed element-wise. Finally, the channel attention map is obtained through sigmoid activation. Through this process, CAM focuses on the meaningful information in the image. The Spatial Attention Module (SAM), shown in Figure 10(b), is complementary to channel attention and focuses on the location of the target. SAM applies max pooling and average pooling along the channel axis of the channel-refined feature map produced by CAM, concatenates the results into a feature descriptor, convolves it, and activates it with a sigmoid to obtain the spatial attention map. The joint use of the two modules achieves better results. The equations for CAM and SAM are as follows:

Figure 10. Diagram of each attention sub-module. CAM uses average pooling and max pooling simultaneously. SAM concatenates two feature maps into one feature descriptor.

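$$M_c(F) = \sigma\big(\mathrm{MLP}(\mathrm{AvgPool}(F)) + \mathrm{MLP}(\mathrm{MaxPool}(F))\big)$$

$$M_s(F) = \sigma\big(f^{7\times7}([\mathrm{AvgPool}(F);\ \mathrm{MaxPool}(F)])\big)$$

where F is the feature map input to each module, σ denotes the sigmoid function, f^{7×7} represents a convolution operation with a filter size of 7×7, and [·;·] denotes concatenation along the channel axis.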
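The two equations can be traced step by step in a NumPy sketch that follows Woo et al. (2018): channel attention is applied first, and spatial attention then acts on the refined map. The weights are random placeholders, and the channel count and reduction ratio are hypothetical. This is an illustration of the CBAM computation, not IICnet's trained module.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def channel_attention(F, W0, W1):
    """M_c(F) = sigmoid(MLP(AvgPool(F)) + MLP(MaxPool(F))), shape (C, 1, 1).

    F: (C, H, W); shared MLP weights W0: (C//r, C), W1: (C, C//r).
    """
    def mlp(v):
        return W1 @ np.maximum(W0 @ v, 0.0)    # one ReLU hidden layer, as in CBAM
    avg = F.mean(axis=(1, 2))                  # global average pooling
    mx = F.max(axis=(1, 2))                    # global max pooling
    return sigmoid(mlp(avg) + mlp(mx))[:, None, None]

def spatial_attention(F, k7):
    """M_s(F) = sigmoid(f7x7([AvgPool(F); MaxPool(F)])), shape (1, H, W).

    Pooling runs along the channel axis; k7: (2, 7, 7) kernel, zero padding 3.
    """
    desc = np.stack([F.mean(axis=0), F.max(axis=0)])       # (2, H, W)
    H, W = F.shape[1:]
    padded = np.pad(desc, ((0, 0), (3, 3), (3, 3)))
    out = np.zeros((H, W))
    for i in range(7):
        for j in range(7):
            out += np.tensordot(k7[:, i, j], padded[:, i:i + H, j:j + W], axes=1)
    return sigmoid(out)[None, :, :]

def cbam(F, W0, W1, k7):
    """Channel attention first, then spatial attention on the refined map."""
    F1 = channel_attention(F, W0, W1) * F
    return spatial_attention(F1, k7) * F1

rng = np.random.default_rng(0)
C, r = 16, 4                                   # channels and reduction ratio: hypothetical
F = rng.normal(size=(C, 20, 20))
W0 = 0.1 * rng.normal(size=(C // r, C))
W1 = 0.1 * rng.normal(size=(C, C // r))
k7 = 0.1 * rng.normal(size=(2, 7, 7))
print(cbam(F, W0, W1, k7).shape)               # (16, 20, 20)
```

Both attention maps lie in (0, 1) and only rescale the features, so the module keeps the feature map shape and can be inserted anywhere in the network.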

A softmax function at the end of the network computes the probability distribution over the classes (Liu et al. 2016), which ultimately classifies the targets as stars, galaxies, or quasars.
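The final step can be sketched as follows, assuming that the "confidence" reported later (Table 4, Section 5.2) is the largest softmax probability. The class order in `CLASSES` is likewise an assumption.

```python
import numpy as np

CLASSES = ("galaxy", "quasar", "star")   # label order is an assumption

def softmax(logits):
    z = np.asarray(logits, dtype=np.float64)
    z = z - z.max(axis=-1, keepdims=True)   # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def predict(logits):
    """Return (class name, confidence), with confidence = largest softmax probability."""
    p = softmax(logits)
    k = int(np.argmax(p))
    return CLASSES[k], float(p[k])

print(predict([0.5, 0.2, 4.0]))
```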

IICnet extracts features through each convolutional and down-sampling layer, and the RFB and CBAM modules increase attention to the key positions in the image, which significantly improves classification performance. Adam (Kingma & Ba 2014), an optimizer that is computationally efficient, usually requires little hyperparameter tuning, and is simple to implement, is used during training. Training runs for 200 epochs; the initial learning rate is 10⁻⁴ and is halved to 5 × 10⁻⁵ after 50 epochs to ensure reasonable convergence.
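The learning-rate schedule reads most naturally as a single drop at epoch 50. The plain-Python sketch below assumes that interpretation with 0-indexed epochs. In PyTorch it would correspond to `torch.optim.Adam(model.parameters(), lr=1e-4)` together with `torch.optim.lr_scheduler.MultiStepLR(optimizer, milestones=[50], gamma=0.5)`, stepped once per epoch. If the rate were instead halved every 50 epochs, `StepLR(optimizer, step_size=50, gamma=0.5)` would apply.

```python
def learning_rate(epoch, base_lr=1e-4, drop_epoch=50, factor=0.5):
    """Learning rate for a 0-indexed epoch: base_lr until drop_epoch, then base_lr * factor."""
    return base_lr * factor if epoch >= drop_epoch else base_lr

schedule = [learning_rate(e) for e in range(200)]      # 200 training epochs
print(schedule[0], schedule[49], schedule[50], schedule[-1])
```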

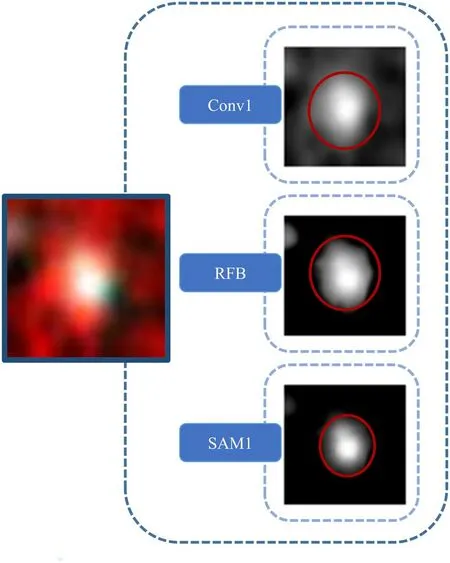

3.2. Feature Visualization of Network Layers

For our data set, the central source of each image is the most important region. The surroundings differ between source types: red predominates around stars, black and red around galaxies, and more complex colors, with some blue and green mixed in, around quasars. The regions of interest of IICnet can be observed by visualizing the features of the intermediate layers of the network, as shown in Figure 11. As the feature maps pass through the first convolutional layer, RFB, and CBAM in turn, the regions of interest become increasingly concentrated, which shows the importance of the feature extraction capability of RFB and the attention mechanism of CBAM for classification.

Figure 11. Intermediate-layer visualization of the IICnet model. After the image passes through RFB and CBAM, the intermediate layers show a focus on the central source.
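Intermediate feature maps are commonly captured in PyTorch with forward hooks (`register_forward_hook`) and then collapsed into a heatmap. The NumPy sketch below makes several assumptions: averaging absolute activations over channels is one common visualization choice, not necessarily the paper's, and `central_fraction` is a hypothetical measure of how concentrated a heatmap is on the image center.

```python
import numpy as np

def activation_heatmap(feature_map):
    """Collapse a (C, H, W) feature map to an (H, W) map scaled to [0, 1]
    by averaging absolute activations over channels."""
    heat = np.abs(feature_map).mean(axis=0)
    lo, hi = heat.min(), heat.max()
    return (heat - lo) / (hi - lo) if hi > lo else np.zeros_like(heat)

def central_fraction(heat, radius):
    """Share of the heatmap's total mass inside a centered (2r+1) x (2r+1) box."""
    total = heat.sum()
    if total == 0:
        return 0.0
    cy, cx = heat.shape[0] // 2, heat.shape[1] // 2
    inner = heat[cy - radius:cy + radius + 1, cx - radius:cx + radius + 1].sum()
    return float(inner / total)

# Toy feature maps: diffuse noise versus a response concentrated at the center.
rng = np.random.default_rng(0)
diffuse = rng.random((8, 40, 40))
focused = diffuse * 0.05
focused[:, 18:23, 18:23] += 1.0
for name, fm in (("diffuse", diffuse), ("focused", focused)):
    print(name, round(central_fraction(activation_heatmap(fm), radius=5), 3))
```

A rising `central_fraction` from the first convolution through RFB and CBAM would be one way to quantify the increasing concentration that Figure 11 shows qualitatively.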

4. Results

4.1. Influence of Image Size

For a convolutional neural network (CNN), the input image size is an essential factor affecting performance (Touvron et al. 2019). To find the optimal input size, this paper tested sizes from 64×64 to 128×128 in steps of 8 pixels. The relationship between input size and accuracy is shown in Figure 12. The highest accuracy is achieved at 80×80, and the accuracy gradually decreases as the image size increases beyond that, so 80×80 is the most suitable size for IICnet.

Figure 12. Relationship between input image size and IICnet accuracy. The accuracy reaches a maximum of 0.9521 when the input image size is 80×80 pixels.
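The size sweep amounts to a loop over candidate resolutions. In this sketch, `evaluate` is a hypothetical callback that would train and validate IICnet at a given input size. The toy scoring function is a placeholder used only to exercise the loop, and its peak at 80 is chosen to mirror Figure 12, not computed from any data.

```python
def candidate_sizes(start=64, stop=128, step=8):
    """Square input sizes from start to stop inclusive: 64, 72, ..., 128."""
    return list(range(start, stop + 1, step))

def best_size(evaluate, sizes):
    """Score every size with evaluate(size) and return (best size, all scores).

    `evaluate` is a hypothetical callback that trains IICnet at that input
    size and returns its validation accuracy.
    """
    scores = {s: evaluate(s) for s in sizes}
    return max(scores, key=scores.get), scores

# Placeholder scores (not real accuracies), used only to exercise the loop.
toy_evaluate = lambda size: 1.0 - abs(size - 80) / 1000
print(best_size(toy_evaluate, candidate_sizes())[0])   # 80
```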

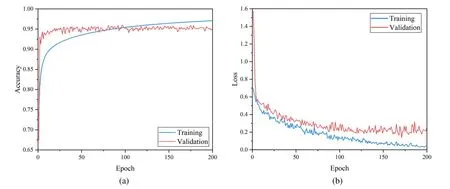

4.2. Influence of Epoch

The pre-processed infrared images of galaxies, stars, and quasars are input into IICnet, and the resulting accuracy and loss are shown in Figure 13. Accuracy and loss were tracked over 200 epochs. The accuracy increases with the number of epochs and then levels off, while the loss decreases and then levels off. The validation accuracy reaches 95% or more, which shows that IICnet achieves good results on infrared image classification.

Figure 13. (a) Loss of IICnet on the training and validation sets versus epoch. (b) Accuracy of IICnet on the training and validation sets versus epoch.

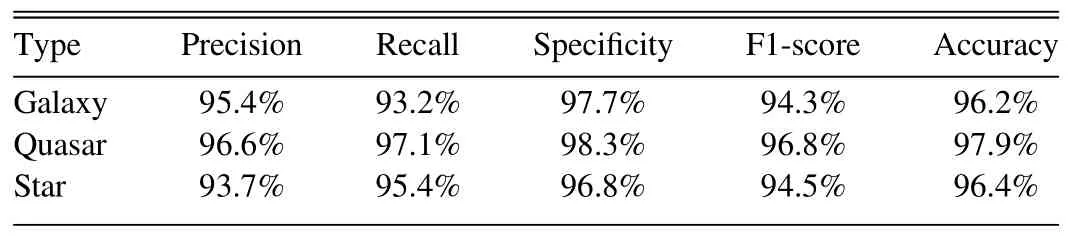

4.3. Evaluation Indices

The following statistical metrics are used in this paper for the classification task: precision, recall (Harrington 2012), specificity, F1-score (Chinchor & Sundheim 1993), and accuracy; their values are shown in Table 2. Precision is the number of correctly classified positive samples as a proportion of all samples predicted to be positive, and recall is the number of correctly classified positive samples as a proportion of all actual positive samples. Both should be as high as possible, but they usually trade off against each other, so the F1-score, the harmonic mean of precision and recall, is used to evaluate the classification results:

$$F_1 = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$$

Table 2. The Classification Indices of IICnet, Including Precision, Recall, Specificity, F1-score, and Accuracy
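All five indices follow from a confusion matrix by treating each class in turn as "positive" (one-vs-rest). The sketch uses the layout stated in the Figure 18 caption, with rows for predicted labels and columns for true labels. The matrix in the example is a toy, not the paper's results.

```python
import numpy as np

def per_class_metrics(cm):
    """One-vs-rest metrics from a confusion matrix laid out as in Figure 18:
    cm[i, j] = number of samples predicted as class i whose true class is j.
    """
    cm = np.asarray(cm, dtype=np.float64)
    total = cm.sum()
    results = []
    for k in range(cm.shape[0]):
        tp = cm[k, k]
        fp = cm[k, :].sum() - tp      # predicted k, truly another class
        fn = cm[:, k].sum() - tp      # truly k, predicted as another class
        tn = total - tp - fp - fn
        precision = tp / (tp + fp)
        recall = tp / (tp + fn)       # also called sensitivity
        specificity = tn / (tn + fp)  # true negative rate
        f1 = 2 * precision * recall / (precision + recall)
        results.append(dict(precision=precision, recall=recall,
                            specificity=specificity, f1=f1))
    accuracy = np.trace(cm) / total
    return results, accuracy

# Toy confusion matrix (not the paper's results): rows = predicted, columns = true.
cm = [[90, 4, 3],
      [5, 93, 1],
      [5, 3, 96]]
per_class, acc = per_class_metrics(cm)
print(round(acc, 4), [round(m["f1"], 3) for m in per_class])
```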

Specificity measures the classifier's ability to recognize negative examples (the true negative rate), whereas sensitivity measures its ability to recognize positive examples and is calculated in the same way as recall. The Receiver Operating Characteristic (ROC) curve (Chawla et al. 2002) also demonstrates the performance of the classifier, as shown in Figure 14. The ROC curves of galaxies, quasars, and stars all rise rapidly to nearly 1, indicating that the algorithm classifies all types of objects well.

Figure 14. ROC curves for galaxies, quasars, and stars.
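For a three-class problem, each ROC curve is a one-vs-rest curve that scores samples by the softmax probability of that class. The area under it equals the probability that a randomly chosen positive outscores a randomly chosen negative. The sketch below computes the AUC that way. It is our illustration, and it builds an all-pairs comparison matrix, which is fine at test-set scale. `sklearn.metrics.roc_auc_score` is a library alternative.

```python
import numpy as np

def one_vs_rest_auc(scores, is_positive):
    """AUC as the probability that a random positive outscores a random
    negative (ties count half); equals the area under the ROC curve."""
    s = np.asarray(scores, dtype=np.float64)
    y = np.asarray(is_positive, dtype=bool)
    pos, neg = s[y], s[~y]
    greater = (pos[:, None] > neg[None, :]).sum()
    ties = (pos[:, None] == neg[None, :]).sum()
    return float((greater + 0.5 * ties) / (len(pos) * len(neg)))

def per_class_auc(probs, labels):
    """One-vs-rest AUC for each class k, scoring samples by probs[:, k]."""
    probs = np.asarray(probs)
    labels = np.asarray(labels)
    return [one_vs_rest_auc(probs[:, k], labels == k) for k in range(probs.shape[1])]

# Toy probabilities for three samples, one per class.
probs = np.array([[0.8, 0.1, 0.1], [0.2, 0.7, 0.1], [0.1, 0.2, 0.7]])
print(per_class_auc(probs, [0, 1, 2]))
```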

4.4. Comparative Experiment

This section compares IICnet with several classic and recent classification networks: VGG16 (2014) (Simonyan & Zisserman 2014), GoogleNet (2015) (Szegedy et al. 2015), ResNet34 (2016) (He et al. 2016), MobileNet (2017) (Howard et al. 2017), EfficientNetV2 (2021) (Tan & Le 2021), and RepVGG (2021) (Ding et al. 2021); EfficientNetV2 and RepVGG are the most recent CNN-based networks we could find. The validation accuracy curves of each network are shown in Figure 15(a). In addition to comparing the seven models, experiments were also conducted on different data sets (infrared images, spectra, color–color, and “infrared images + color–color”).

Figure 15. (a) Validation accuracy of IICnet compared with other image classification networks. (b) Validation accuracy with 3-channel (W1, W2, W3) and 4-channel (W1, W2, W3, W4) inputs.

IICnet outperforms the other mainstream classification networks: as shown in Figure 15(a), only IICnet exceeds 95% accuracy. In addition, it keeps the computational cost and parameter count small while improving accuracy, as shown in Table 3. Compared with MobileNet, the least computationally intensive of the other networks in Table 3, IICnet reduces the amount of computation by more than half and the number of parameters by 1.47M.

Table 3. Comparison of FLOPs and Params in the Seven Networks
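Parameter and FLOP counts like those in Table 3 add up layer by layer. For a convolution, the weights are C_out × C_in × k × k, plus one bias per output channel, and each output pixel costs C_in × k × k multiply-accumulates (MACs) per output channel. The sketch below uses a hypothetical 3 → 32 channel, 5×5 layer at 80×80. Profiling tools differ in whether they report MACs or 2 × MACs as "FLOPs", so absolute numbers from different tools may not match.

```python
def conv2d_cost(c_in, c_out, k, h_out, w_out, bias=True):
    """Parameters and multiply-accumulates (MACs) of one k x k convolution layer."""
    params = c_out * (c_in * k * k + (1 if bias else 0))
    macs = c_out * c_in * k * k * h_out * w_out
    return params, macs

# Hypothetical layer: 3 -> 32 channels, 5x5 kernel, 80x80 output ("same" padding).
params, macs = conv2d_cost(3, 32, 5, 80, 80)
print(params, macs)      # 2432 15360000
```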

As mentioned in Section 2.2, only the W1, W2, and W3 bands are used to synthesize the images because of the lower SNR of the W4 band. A comparison of 3-channel and 4-channel images shows that the former performs slightly better, as shown in Figure 15(b).

Color–color classification and “infrared image + color–color” classification are based on a revised IICnet, as shown in Figure 16: the upper part, covered with blue shading, is the color–color classification network, and the upper and lower parts together form the “infrared image + color–color” classification network.

Figure 16. “Infrared image + color–color” classification network. The upper part, covered by blue shading, is the color–color classification network.
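The idea of joining the two branches can be illustrated with late fusion by concatenation. This is an assumption: the text does not give the exact layers of Figure 16. In the sketch, the 128-dimensional image embedding, the two-color input, and the single linear layer are all hypothetical.

```python
import numpy as np

def fuse_and_classify(image_features, colors, W, b):
    """Concatenate image features with color features, then apply a linear
    layer and softmax to get class probabilities."""
    x = np.concatenate([image_features, colors], axis=-1)
    z = x @ W + b
    z = z - z.max(axis=-1, keepdims=True)      # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

rng = np.random.default_rng(0)
img_feat = rng.normal(size=(4, 128))   # 128-d image embedding: hypothetical
colors = rng.normal(size=(4, 2))       # e.g. W1-W2 and W2-W3 per source
W = 0.1 * rng.normal(size=(130, 3))
b = np.zeros(3)
probs = fuse_and_classify(img_feat, colors, W, b)
print(probs.shape)                     # (4, 3)
```

Concatenation lets the classifier weigh the explicit color information directly, which is one plausible way magnitude information could compensate for color lost during image feature extraction.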

The validation accuracy curves obtained by IICnet and the revised IICnet are shown in Figure 17. Spectral classification has the highest accuracy, but spectra are difficult to obtain. Image classification accuracy exceeds 95%, so image classification is likely to be the more widely used approach. Color–color classification gives the worst results, which is consistent with the overlap shown in Figure 6. The “infrared image + color–color” classification is about 1% more accurate than infrared image classification alone. This is because some color information is lost when features are extracted from infrared images, and adding the magnitude information partly compensates for it. Such fused features will be investigated further in subsequent work.

Figure 17. Validation accuracy curves for the different data sets (infrared images, spectra, color–color, and “infrared images + color–color”).

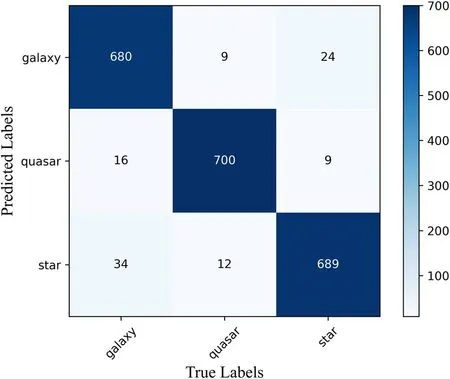

4.5. Confusion Matrix

A confusion matrix shows how well the classes are separated. The confusion matrix for the test set is shown in Figure 18: the number of misclassified samples is small, and the vast majority of samples lie on the diagonal.

Figure 18. Confusion matrix of IICnet. Each column of the confusion matrix represents the number of true labels for each class, and each row represents the number of predicted labels for each class.
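A confusion matrix with the Figure 18 layout (rows for predicted labels, columns for true labels) can be accumulated from label arrays in a few lines. The labels below are a toy example. Note that `np.add.at` is used so that repeated index pairs are all counted.

```python
import numpy as np

def confusion_matrix(y_true, y_pred, n_classes=3):
    """cm[i, j] = number of samples predicted as class i whose true class is j
    (rows = predicted, columns = true, as in Figure 18)."""
    cm = np.zeros((n_classes, n_classes), dtype=int)
    np.add.at(cm, (np.asarray(y_pred), np.asarray(y_true)), 1)
    return cm

y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 1, 1, 1, 2, 0]
cm = confusion_matrix(y_true, y_pred)
print(cm)
print("misclassified:", cm.sum() - np.trace(cm))   # misclassified: 2
```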

The histograms in Section 2.2 (Figure 5) cannot distinguish the types of the three images in Figure 4, but IICnet classifies all three correctly with high confidence, close to 1, as shown in Table 4.

Table 4. The Confidence of the Three Samples in Figure 4

5. Discussion

5.1. Analysis of Misclassified Samples

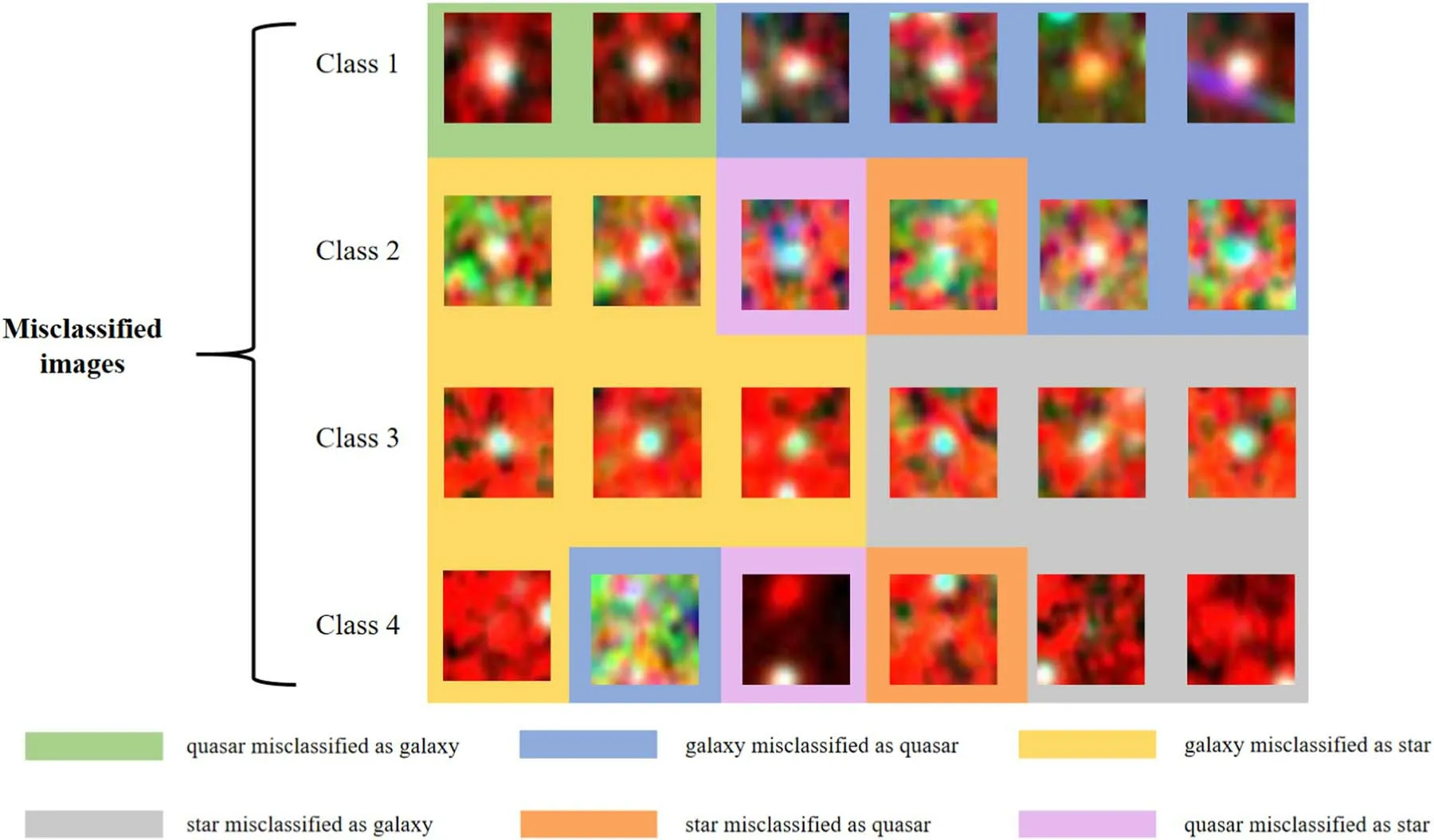

Figure 18 contains 104 misclassified images, which are divided into four classes: Class 1 (37 images), Class 2 (13 images), Class 3 (45 images), and Class 4 (nine images). Some examples are shown in Figure 19 and analyzed below.

Figure 19. Examples of misclassified images. Classes 1, 2, and 3 are the three clusters obtained by K-means. Class 4 contains images in which the source is obscured or entirely absent from the center.

K-means clustering of the misclassified samples yields three classes of images: Class 1, Class 2, and Class 3. Visually, the images in Class 1 are darker and mainly show confusion between galaxies and quasars; the images in Class 2 have complex colors, so their misclassifications are more varied; and the images in Class 3 are brighter and mainly show confusion between galaxies and stars. Further distinguishing these images requires future work. Class 4 is a particular type found among the misclassified samples: the image center contains no source, which hampers feature extraction in IICnet. Since IICnet concentrates on central sources, as shown in Section 3.2, handling such images will be considered in subsequent work.
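The clustering step can be sketched with plain Lloyd's k-means. The text does not say which image features were clustered, so the per-image mean RGB color used here is a hypothetical choice, consistent with the dark, complex-color, and bright groupings described above. The deterministic farthest-first seeding is also our own choice; it is used instead of random seeds to make the result reproducible.

```python
import numpy as np

def kmeans(X, k=3, n_iter=100):
    """Lloyd's k-means with a deterministic farthest-first initialization.
    Returns (labels, centroids)."""
    X = np.asarray(X, dtype=np.float64)
    centroids = [X[0]]
    while len(centroids) < k:                   # next seed: point farthest from current seeds
        d = np.min([((X - c) ** 2).sum(axis=1) for c in centroids], axis=0)
        centroids.append(X[d.argmax()])
    centroids = np.array(centroids)
    for _ in range(n_iter):
        dist = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
        labels = dist.argmin(axis=1)
        new = np.array([X[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
                        for j in range(k)])
        if np.allclose(new, centroids):
            break
        centroids = new
    # Final assignment against the final centroids.
    labels = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2).argmin(axis=1)
    return labels, centroids

def color_features(images):
    """Mean R, G, B of each (H, W, 3) image: a hypothetical feature choice."""
    return np.stack([img.reshape(-1, 3).mean(axis=0) for img in images])

# Toy usage: nine flat images at three brightness levels.
rng = np.random.default_rng(0)
images = [np.full((80, 80, 3), level + rng.normal())
          for level in (20, 20, 20, 120, 120, 120, 230, 230, 230)]
labels, _ = kmeans(color_features(images), k=3)
print(labels)
```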

5.2. Analysis of Outlier Samples

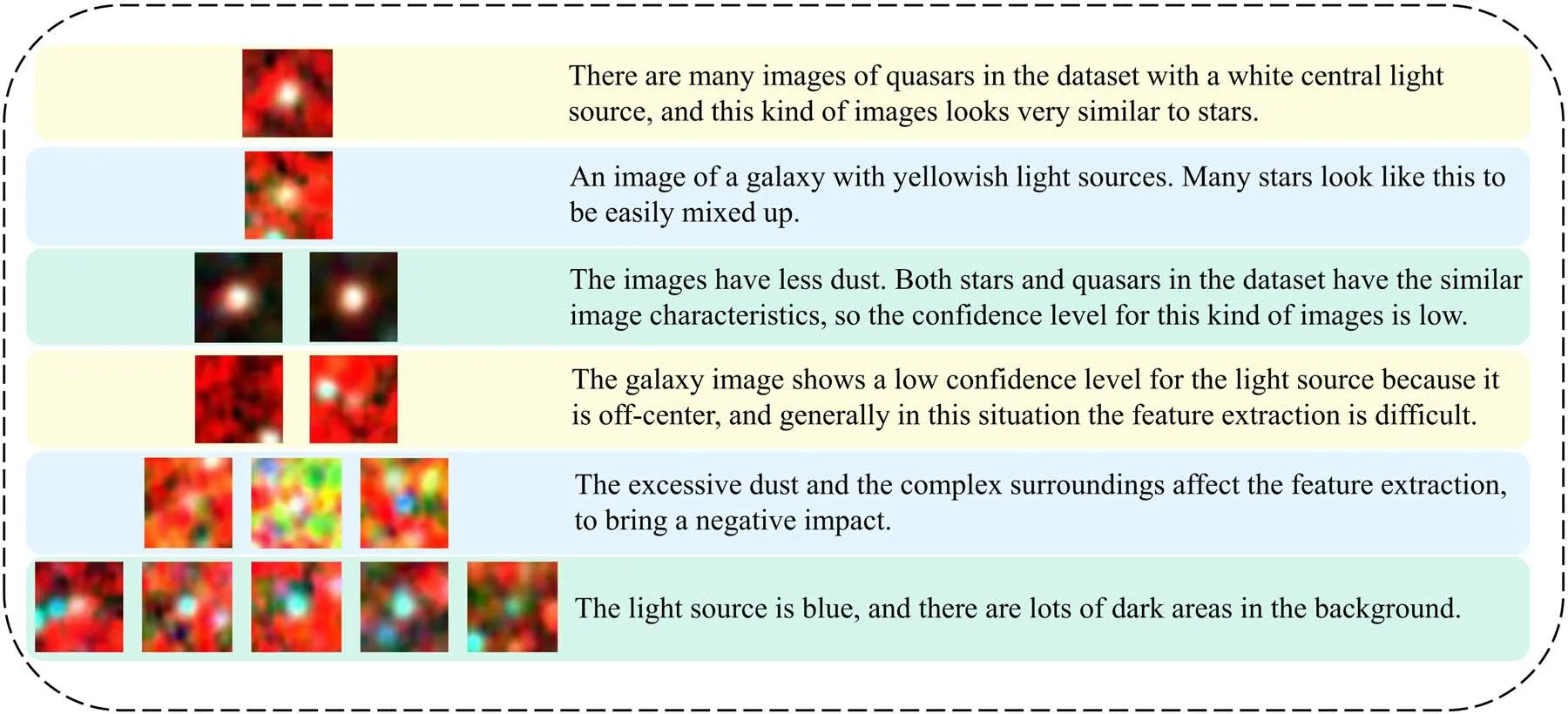

In addition to the misclassified samples, some images are classified correctly but with low confidence; this paper calls them outlier samples. Their features are easily confused with those of other types, so they merit analysis.

When the test set is input into IICnet, a classification confidence is obtained for each image. Correctly classified images with a confidence below 0.6 were selected, giving a total of 14 images. They are shown in Figure 20, plotted together with the color–color diagram of Figure 6 to show their distribution. Based on their image characteristics, these samples are divided into six cases, which are analyzed in Figure 21; each case has its own characteristics. The classifier assigns lower confidence to images whose features are ambiguous but still classifies them correctly, which further demonstrates the strength of IICnet.

Figure 20. Samples with correct classification but low confidence. The blue triangles represent the outlier samples.

Figure 21. Analysis of outlier samples. The outlier samples were inspected and labeled manually, their characteristics were described in terms of color distribution and texture, and these characteristics were summarized for each case.
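The outlier selection rule (correct prediction, confidence below 0.6) is a simple filter over the softmax outputs of the test set. In the sketch, confidence is assumed to be the largest class probability, and the probabilities are toy values.

```python
import numpy as np

def find_outliers(probs, y_true, threshold=0.6):
    """Indices of correctly classified samples whose top probability is below `threshold`."""
    probs = np.asarray(probs, dtype=np.float64)
    y_true = np.asarray(y_true)
    pred = probs.argmax(axis=1)
    conf = probs.max(axis=1)
    return np.flatnonzero((pred == y_true) & (conf < threshold))

# Toy softmax outputs for four test images (columns: three classes).
probs = [[0.90, 0.05, 0.05],   # correct, confident
         [0.50, 0.30, 0.20],   # correct, low confidence -> outlier
         [0.20, 0.45, 0.35],   # misclassified, excluded
         [0.30, 0.30, 0.40]]   # correct, low confidence -> outlier
print(find_outliers(probs, [0, 0, 2, 2]))   # [1 3]
```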

6. Conclusions

Infrared image classification of galaxies, quasars, and stars has rarely been reported in the past literature. The task is extremely difficult for many images owing to their complexity and the similarities between types. This paper synthesizes RGB images from the WISE W1, W2, and W3 bands and designs IICnet specifically to classify infrared images into galaxies, quasars, and stars. IICnet integrates RFB and CBAM (Section 3.1), which improve feature extraction for the sources and yield higher classification accuracy. In the experiments, comparisons with VGG16, GoogleNet, ResNet34, MobileNet, EfficientNetV2, and RepVGG show that IICnet outperforms all the other networks in classifying infrared images.

In the analysis of misclassified samples, K-means clustering is used and four classes are discussed. Classes 1, 2, and 3 are misclassified because their image features are highly similar. Class 4 images are misclassified because the source is off-center and its features cannot be extracted efficiently.

Outliers, the images that are classified correctly but with low confidence, are also analyzed. They lie at the borders between types. Because their confidence is low, their correct classification by the current method, IICnet, is less certain. In the future, an SVM mechanism may be considered, because these outliers resemble support vectors.

In summary, the experiments show that IICnet is very effective in classifying infrared images and may provide a new tool for astronomers. It can be further enhanced by a better feature extraction block, a new post-processing block such as an SVM, and so on.

Acknowledgments

This work is supported by the Natural Science Foundation of Tianjin (22JCYBJC00410) and the Joint Research Fund in Astronomy, National Natural Science Foundation of China (U1931134). We are grateful to the Sloan Digital Sky Survey (SDSS) and the Wide-field Infrared Survey Explorer (WISE) for providing open data.


Research in Astronomy and Astrophysics, 2023, Issue 8
