Images2Poem in different contexts with Dual‐CharRNN

Jie Yan|Yuxiang Xie|Xidao Luan

Abstract Image captioning has attracted extensive research attention recently. However, generating poetry from images, especially Chinese classical poetry, is much more challenging. Previous works mainly focus on generating coherent poetry without taking the contexts of poetry into account. In this paper, we propose Images2Poem with the Dual‐CharRNN model, which exploits images to generate Chinese classical poems in different contexts. Specifically, we first extract a few keywords representing the elements of the given image through multi‐label image classification. Then, these keywords are expanded into related ones with a planning‐based model. Finally, we employ Dual‐CharRNN to generate Chinese classical poetry in different contexts. A comprehensive human evaluation demonstrates that our model achieves promising performance and is effective in enhancing the semantic consistency, readability, and aesthetics of the generated poetry.

KEYWORDS computer vision, deep learning, image captioning, poetry generation

1|INTRODUCTION

Generating a description of an image is called image captioning [1], and is sometimes also referred to as “image automatic annotation”, “image tagging” or “image subtitle generation”. It is a popular research area of artificial intelligence (AI) that combines computer vision (CV) and natural language processing (NLP) [2]. It is easy for human beings to describe the content of an image in natural language [3]; however, it is very difficult for machines to do the same. Existing approaches to image captioning largely concentrate on generating basic sentence descriptions of the given image [4–6], mainly by improving their accuracy, fluency, and flexibility. As a result, these descriptions are mostly plain in style, which makes it hard for them to convey the emotions of the people viewing the image.

Chinese classical poetry, constructed with strict rules and patterns, is an invaluable Chinese cultural treasure with thousands of years of history. It is one of the oldest and largest continuous traditions in world literature and still fascinates us today [7]. The composition of elements, together with the poet's spiritual conception, provides indispensable clues to understanding the content of Chinese classical poems, because a poem is shaped not only by what the poet sees but also by what he feels. Therefore, we take both the elements of the image and the emotions of the poet into consideration and propose Images2Poem with the Dual‐CharRNN model to automatically generate a classical Chinese poem in different contexts from a given image.

Generally speaking, a direct way to generate poetry from images is to train a neural network end to end. However, no large‐scale, high‐quality paired dataset of images and Chinese classical poems is currently available for end‐to‐end training. An indirect alternative is therefore to identify the elements in an image and generate poems from element‐related keywords. In this paper, we exploit multi‐label image classification as a bridge to convey the visual semantics of images. The keywords extracted from the image are expanded with a planning‐based method to form the topic of the poem. As the saying goes, “there are a thousand Hamlets in the eyes of a thousand readers”. In the same way, different people feel differently about the same image, and when they express these emotions in poems, the contexts of the poems differ. To the best of our knowledge, little research on automatic poetry generation has taken the different contexts of poetry into consideration, which makes the generated poetry monotonous and less emotive. Our Images2Poem with the Dual‐CharRNN model is designed to address this challenge. The main contributions of our work are summarised as follows:

· We integrate the contexts of poetry into poetry generation and introduce a novel method that automatically generates Chinese classical poetry in different contexts.

· We propose Dual‐CharRNN, in which the Context CharRNN captures the contexts of different kinds of poetry and the Poetry CharRNN generates poems within those contexts. Together, the two networks generate Chinese classical poetry in different contexts.

· Comprehensive evaluation results show that the poetry generated in different contexts performs well in terms of semantic consistency, readability, and aesthetics.

2|RELATED WORKS

2.1|Image captioning

Poetry generation from images is an extension of the image captioning task. Broadly, the development of image captioning has gone through two stages: traditional feature‐based methods and current deep‐learning‐based methods. In the traditional stage, retrieval‐based and template‐based methods dominated. Retrieval‐based methods [8–11] generate captions by selecting the sentences with the most similar semantics from a sentence pool, whereas template‐based methods [5, 12, 13] generate captions by aligning pre‐defined slotted sentence templates with the visual content extracted from the image. Inspired by the successful application of deep neural networks in machine translation, the encoder‐decoder framework [14] has become central to image captioning. Typically, CNNs serve as encoders that extract image features, while RNNs serve as decoders that generate captions. For example, [4] first treats image captioning as a machine translation task and translates the image into natural language with a CNN‐RNN model. Furthermore, in [15], semantic information is integrated to guide an LSTM network during caption generation. In [16, 17], an attention mechanism is introduced so that the model concentrates on the relevant region of the image when generating an entity word.

2.2|Poetry generation

Inspired by image captioning and by the long history of Chinese classical poetry, automatic poetry generation from images is becoming a popular research topic in artificial intelligence [18]. However, most poetry generation research focuses on generating poetry from text. Among these works, References [19, 20] employ rules or templates; in particular, Tosa et al. [19] developed a traditional Japanese poetry generation system with a template‐based method, but the generated poetry lacks flexibility. Manurung [21] treats poetry generation as a search problem and generates poetry with a genetic algorithm. More recently, deep learning has brought new opportunities to automatic poetry generation. Specifically, Mikolov et al. [22] constructed the Recurrent Neural Network Language Model (RNNLM) to generate poems from a poetry corpus. Given the first keyword, Zhang and Lapata [23] generate the first line of a poem with a Recurrent Neural Network (RNN) and generate each remaining line by taking the previously generated lines as input. Qixin et al. [24] propose a two‐stage poetry generation method based on planning neural networks. In [25], a baseline model for acrostic poetry generation is proposed, which combines a conditional neural language model and a neural rhyming model to generate acrostic poems under several constraints.

Nevertheless, none of these studies considers a non‐textual modality; they all generate poetry from text input. Generating poetry from an image is more challenging because fusing different modalities is much more difficult [26]. To address this, [27] builds a multi‐modal RNN to extract high‐level semantic features from images, together with a generation model for classical Chinese poems based on a two‐layer Long Short‐Term Memory (LSTM) network. Liu et al. [28] propose an image model with a selection mechanism and an adaptive attention mechanism, constructing an image stream dataset related to the images in poems. In [18], Microsoft XiaoIce generates poems with a two‐step language model. However, all these works ignore the different contexts that the same poetry can express. To improve the semantic consistency, readability, and aesthetics of the generated poetry, this work focuses on generating Chinese classical poetry in different contexts.

3|METHODS

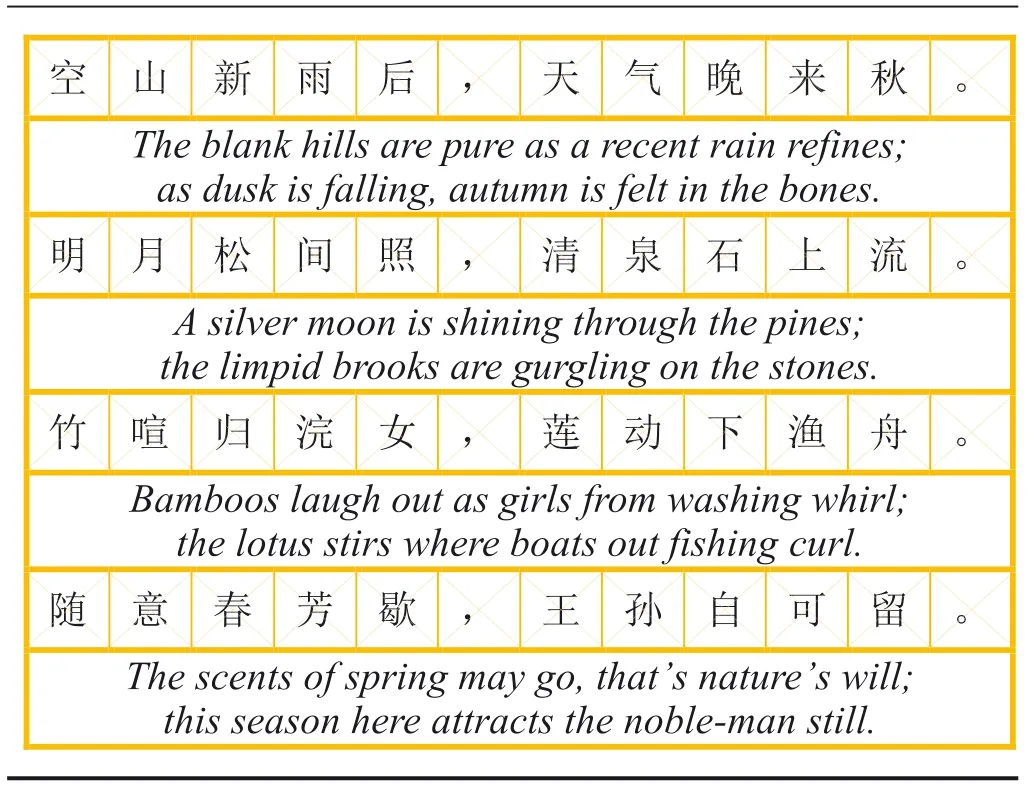

We aim to automatically generate Chinese classical poetry in different contexts from images. The form of the poetry to be generated is shown in Table 1: each line consists of two sentences, giving four lines and eight sentences in total. To keep images and poems semantically consistent, we employ multi‐label image classification as a bridge to extract keywords from the image. A keyword sequence that serves as the topic of the poem is then constructed with the planning‐based model [29]. Finally, Dual‐CharRNN generates Chinese classical poetry in different contexts. The problem can be formulated as follows: for a given image $I$, we try to generate a poem $P=\{L_1,L_2,L_3,L_4\}$, where $L_i$ denotes the $i$th line of $P$. $P$ should be relevant to the image content, fluent in language, and coherent in semantics [18]. Figure 1 provides an overview of the structure of our three‐step Images2Poem model, whose steps are multi‐label image classification, keyword expansion, and poem generation in different contexts.

TABLE 1 An example of a poem with four lines and eight sentences

3.1|Multi‐label image classification

To create poetry related to the given image, we need to mine semantic information from it [30], because an image contains not only visible objects but also the relationships between them. These objects play a crucial role in the content of the poetry, and their relationships influence the creator's emotions, that is, the context of the poetry. As no image‐poetry paired dataset is available, we instead exploit multi‐label image classification as a bridge and construct a keyword sequence from the objects identified in the image.

Multi‐label classification predicts all applicable labels for an image [31]. Consider a dataset of images $X=\{x_1,x_2,\ldots,x_n\}$ with corresponding labels $Y=\{y_1,y_2,\ldots,y_n\}$, where $n$ is the number of images in the dataset. For the $i$th image $x_i$, we denote its labels as $y_i=\{y_{i1},y_{i2},\ldots,y_{im}\}$, where $m$ is the number of possible labels; $y_{ij}=1$ if image $x_i$ is labelled with label $j$, and $y_{ij}=0$ otherwise.
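
To make the label notation concrete, the sketch below builds the binary vector $y_i$ for one image. It is an illustrative assumption, not the authors' code: the vocabulary is taken from the ten object elements listed in Section 4.1, and the name `encode_labels` is hypothetical.

```python
from typing import Dict, List, Sequence

# Assumed label vocabulary: the ten object elements listed in Section 4.1.
LABELS = ["Desert", "Mountain", "Sea", "Sunset", "Tree",
          "Moon", "Flower", "Grass", "Man", "Cloud"]


def encode_labels(image_labels: Sequence[str], vocab: Sequence[str] = LABELS) -> List[int]:
    """Return the multi-hot vector y_i = (y_i1, ..., y_im) for one image.

    y_ij = 1 if the image carries label j, otherwise 0.
    """
    index: Dict[str, int] = {name: j for j, name in enumerate(vocab)}
    y = [0] * len(vocab)
    for name in image_labels:
        y[index[name]] = 1  # a KeyError flags a label outside the vocabulary
    return y


print(encode_labels(["Mountain", "Sunset", "Cloud"]))
# → [0, 1, 0, 1, 0, 0, 0, 0, 0, 1]
```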

FIGURE 1 The overall architecture of our model. From the input image to the output Chinese classical poem, we first extract the object elements in the image with multi‐label image classification and make these objects part of the keyword sequence. Second, if the keyword sequence contains fewer than 4 keywords, the object elements obtained from the input image are fed into a planning‐based model that expands new keywords until the sequence contains 4 keywords. Third, we exploit a novel Dual‐CharRNN to generate poems in different contexts: the hidden contexts of different kinds of poetry are captured by the Context CharRNN, and the keywords in the sequence are then provided to the pre‐trained Poetry CharRNN to generate poems within those contexts.

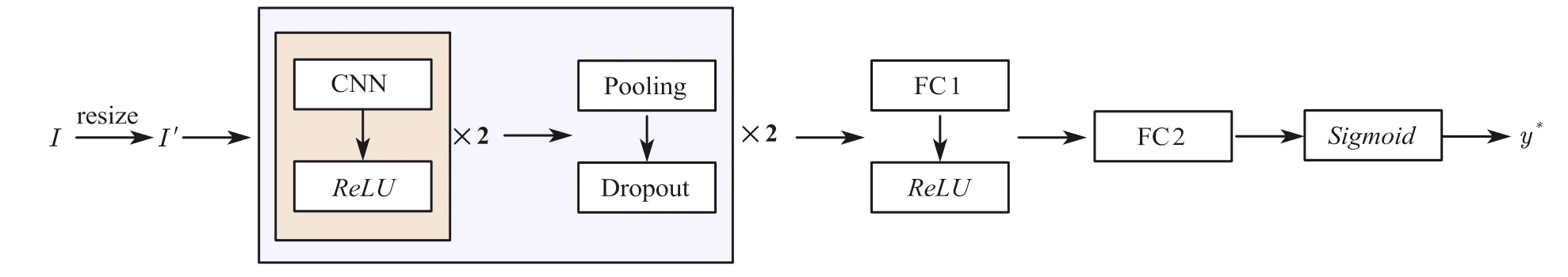

FIGURE 2 Structure diagram of the multi‐label image classification model

(1) Model structure: The multi‐label image classification is performed with a Convolutional Neural Network (CNN). Figure 2 shows the structure of our multi‐label image classification model, which consists of the following three parts.

(a) The input part: The original input RGB image is denoted as $I$. Since the CNN requires inputs of a fixed size, we resize $I$ to obtain an RGB image $I'$ with a height and width of 100 pixels.

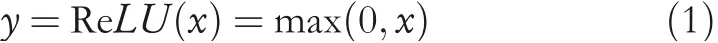

(b) Feature extraction: First, four convolutional layers extract visual features from the image $I'$, with ReLU, shown in Equation (1), as the activation function: when the input value $x$ is greater than 0, the output is $x$; otherwise, the output is 0. Second, to reduce the number of trainable parameters of the network, a pooling layer is added after every two convolutional layers, and a dropout layer is also added to avoid over‐fitting. Finally, a fully connected layer (FC1) of 512 neurons transforms the features of each image into a 512‐dimensional feature vector $V$.

(c) The output part: After the features of image $I'$ have been extracted, the feature vector $V$ is fed into a fully connected layer (FC2) with $m$ neurons, where $m$ denotes the number of possible categories of object elements. A Sigmoid activation function at the end of the model then maps each input value to (0, 1), giving the probability that the image belongs to each label. The Sigmoid activation function is shown in Equation (2).
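
Equations (1) and (2) are not legible as text in this version of the document. Their standard definitions, which match the descriptions of ReLU and Sigmoid given above, are reproduced here as a reconstruction rather than a copy of the original typesetting:

```latex
\begin{align}
\mathrm{ReLU}(x) &= \max(0, x) \tag{1} \\
\mathrm{Sigmoid}(x) &= \frac{1}{1 + e^{-x}} \tag{2}
\end{align}
```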

(2) Loss function: We choose binary cross‐entropy as the loss function, as shown in Equation (3), where $n$ is the number of image samples, $y_i$ is the ground‐truth label of sample $i$, and $y_i^*$ is the predicted probability that sample $i$ carries that label.
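
Equation (3) is missing from the extracted text. The minimal pure‐Python sketch below computes the binary cross‐entropy it describes. We assume the loss is averaged over all $m$ labels as well as the $n$ samples, which is how multi‐label binary cross‐entropy is usually computed; the clipping constant `eps` is our own addition to avoid $\log 0$, and the function name is hypothetical.

```python
import math
from typing import Sequence


def binary_cross_entropy(y_true: Sequence[Sequence[int]],
                         y_pred: Sequence[Sequence[float]],
                         eps: float = 1e-7) -> float:
    """Mean binary cross-entropy over n samples and m labels.

    For each sample i and label j the term is
        -[y_ij * log(p_ij) + (1 - y_ij) * log(1 - p_ij)],
    where p_ij is the sigmoid output. Predictions are clipped to
    [eps, 1 - eps] so that log(0) never occurs.
    """
    total, count = 0.0, 0
    for labels, probs in zip(y_true, y_pred):
        for y, p in zip(labels, probs):
            p = min(max(p, eps), 1.0 - eps)
            total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
            count += 1
    return total / count


print(round(binary_cross_entropy([[1, 0]], [[0.9, 0.2]]), 4))  # → 0.1643
```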

3.2|Keywords expansion

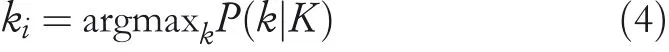

After multi‐label image classification, if the image is predicted to have at least 4 labels, we select the 4 labels with the highest prediction probabilities as keywords to construct the keyword sequence. If it has fewer than 4 labels, we expand the keywords from the current labels until the keyword sequence has length 4. In this paper, we expand the keywords with the planning‐based model proposed by Wang et al. [29], which is based on the RNNLM. The model predicts the next keyword from the preceding sequence of keywords, as shown in Equation (4).

where $k_i$ is the $i$th keyword to be predicted and $K=\{K_1,K_2,\ldots,K_n\}$ is the input keyword sequence.
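
The selection‐then‐expansion procedure can be sketched as follows. Equation (4) is not legible here; we assume it is a greedy choice of the most probable next keyword given the preceding ones. The paper uses the RNNLM‐based planner of Wang et al. [29]; this sketch swaps in a much simpler bigram count model purely as a stand‐in, and every name, the toy corpus, and the 0.5 thresholds are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Callable, Dict, List


def make_bigram_scorer(corpus: List[List[str]]) -> Callable[[List[str]], str]:
    """Toy stand-in for the RNNLM planner: next keyword = most frequent follower."""
    follow: Dict[str, Counter] = defaultdict(Counter)  # follow[a][b]: times b follows a
    unigram: Counter = Counter()
    for seq in corpus:
        unigram.update(seq)
        for prev, nxt in zip(seq, seq[1:]):
            follow[prev][nxt] += 1

    def next_keyword(history: List[str]) -> str:
        used = set(history)
        context = follow[history[-1]] if history else unigram
        # Greedy argmax over unused candidates; fall back to overall frequency.
        for source in (context, unigram):
            for word, _ in source.most_common():
                if word not in used:
                    return word
        raise ValueError("toy corpus has no unused keyword left")

    return next_keyword


def build_keyword_sequence(label_probs: Dict[str, float], thresholds: Dict[str, float],
                           next_keyword: Callable[[List[str]], str],
                           length: int = 4) -> List[str]:
    """Keep up to `length` predicted labels (highest probability first), then expand."""
    predicted = sorted((k for k, p in label_probs.items() if p > thresholds[k]),
                       key=lambda k: label_probs[k], reverse=True)
    keywords = predicted[:length]
    while len(keywords) < length:
        keywords.append(next_keyword(keywords))
    return keywords


corpus = [["Mountain", "Moon", "Cloud", "Pine"],
          ["Mountain", "Moon", "River", "Boat"],
          ["Moon", "Cloud", "Goose", "Autumn"]]
probs = {"Mountain": 0.92, "Moon": 0.81, "Sea": 0.10, "Desert": 0.03}
thresholds = {label: 0.5 for label in probs}
print(build_keyword_sequence(probs, thresholds, make_bigram_scorer(corpus)))
# → ['Mountain', 'Moon', 'Cloud', 'Pine']
```

A real planner would condition on the whole history rather than only the last keyword; the bigram model is used here only to keep the sketch self‐contained.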

3.3|Generate poems in different contexts

CharRNN, a character‐level recurrent neural network, predicts the following characters from the previous ones [32]; it operates on individual characters rather than on whole words or sentences. When training CharRNN, we take each character of a sentence as the input and the character that follows it as the label. In this paper, we employ the Context CharRNN to capture the hidden contexts of different kinds of poetry and apply the pre‐trained Poetry CharRNN to generate poems within different contexts. The details of our Dual‐CharRNN model are as follows.
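
The shifted‐by‐one pairing of inputs and labels described above can be sketched as follows. The window length and the non‐overlapping stride are assumptions made for illustration, and `char_training_pairs` is a hypothetical helper rather than part of the authors' code.

```python
from typing import List, Tuple


def char_training_pairs(text: str, seq_len: int) -> List[Tuple[str, str]]:
    """Cut text into non-overlapping windows; each target is its input shifted by one character."""
    pairs = []
    for start in range(0, len(text) - seq_len, seq_len):
        chunk = text[start:start + seq_len + 1]
        pairs.append((chunk[:-1], chunk[1:]))
    return pairs


for x, y in char_training_pairs("床前明月光，疑是地上霜。", seq_len=5):
    print(x, "->", y)
# 床前明月光 -> 前明月光，
# ，疑是地上 -> 疑是地上霜
```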

(1) The Context CharRNN: Chinese classical poetry is an important window into the spiritual world of poets. When a poet writes a poem, its content is influenced not only by the objects in his sight but, more importantly, by his inner emotions, which create the context of the poem. Therefore, we introduce the Context CharRNN to constrain the context of poetry, modelling how people in different emotional states perceive the same image differently. The main steps are as follows.

(a) First, we construct the datasets. Assuming that poetry expresses $n$ kinds of emotions, we denote the $i$th emotion as $Q_i$ and obtain the set of all emotions $Q=\{Q_1,\ldots,Q_i,\ldots,Q_n\}$. By separately collecting verses that express each of these emotions from the ancient poetry website, we obtain $n$ training datasets $T=\{T_1,\ldots,T_i,\ldots,T_n\}$, each containing verses by different poets that express the same emotion.

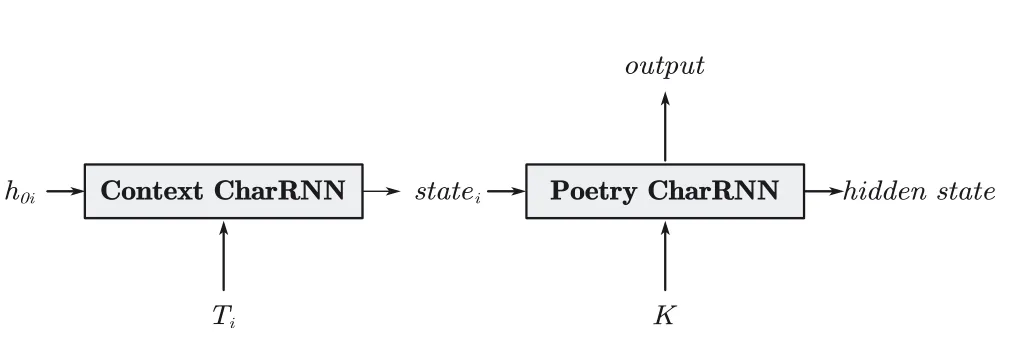

(b) Second, we train the Context CharRNN. The Context CharRNN with initial hidden state $h_0^i$ is trained on each $T_i$ ($i=1,2,\ldots,n$) separately. After training, we obtain the last hidden state $\mathrm{state}_i$ under the $i$th emotion $Q_i$, as shown in Figure 3.

(2) The Poetry CharRNN: In the poetry generation stage, we use the Poetry CharRNN pre‐trained on the Chinese poetry corpus $P$ to generate poems in different contexts. Each line of the poem is generated by taking as input the corresponding keyword in the keyword sequence produced by the planning‐based model. The specific steps are as follows.

(a) First, we set $h_0=\mathrm{state}_i$, where $h_0$ is the initial hidden state of the Poetry CharRNN and $\mathrm{state}_i$ is the last hidden state of the Context CharRNN under the $i$th emotion $Q_i$.

(b) Second, we feed the keyword sequence $K$ into the Poetry CharRNN with initial hidden state $\mathrm{state}_i$ to generate the poem in the $i$th context, as shown in Figure 4. Each keyword in $K$ corresponds to one line of the generated poem, so a poem with four lines and eight sentences is finally obtained. The overall schematic of generating poetry in different contexts with Dual‐CharRNN is shown in Figure 5.
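
The hand‐off of the hidden state between the two networks can be illustrated with the sketch below. It is a deliberately simplified assumption: both networks are untrained Elman RNNs with seeded random weights (no training is performed), decoding is greedy, and the toy verses, class and function names are hypothetical. It only shows the data flow ($\mathrm{state}_i$ from the Context CharRNN becomes $h_0$ of the Poetry CharRNN), which requires both networks to share the same hidden size.

```python
import math
import random
from typing import List, Optional


class ToyCharRNN:
    """Untrained Elman RNN over characters (illustration only, no training)."""

    def __init__(self, vocab: str, hidden: int = 8, seed: int = 0):
        rng = random.Random(seed)
        self.vocab = vocab
        self.index = {c: i for i, c in enumerate(vocab)}
        self.hidden = hidden
        v = len(vocab)
        # Random weights stand in for parameters that would normally be learned.
        self.w_xh = [[rng.uniform(-1, 1) for _ in range(v)] for _ in range(hidden)]
        self.w_hh = [[rng.uniform(-0.5, 0.5) for _ in range(hidden)] for _ in range(hidden)]
        self.w_hy = [[rng.uniform(-1, 1) for _ in range(hidden)] for _ in range(v)]

    def step(self, h: List[float], ch: str) -> List[float]:
        """h_t = tanh(W_xh x_t + W_hh h_{t-1}) with a one-hot input x_t."""
        col = self.index[ch]
        return [math.tanh(self.w_xh[r][col] + sum(w * hv for w, hv in zip(self.w_hh[r], h)))
                for r in range(self.hidden)]

    def final_state(self, text: str, h0: Optional[List[float]] = None) -> List[float]:
        """Run over `text` and return the last hidden state."""
        h = list(h0) if h0 is not None else [0.0] * self.hidden
        for ch in text:
            h = self.step(h, ch)
        return h

    def generate(self, keyword: str, h0: List[float], length: int) -> str:
        """Greedily extend `keyword` to `length` characters, starting from h0."""
        h = self.final_state(keyword, h0)
        out = keyword
        while len(out) < length:
            logits = [sum(w * hv for w, hv in zip(row, h)) for row in self.w_hy]
            ch = self.vocab[max(range(len(logits)), key=logits.__getitem__)]
            out += ch
            h = self.step(h, ch)
        return out


# Hypothetical toy context datasets T_i, one per emotion Q_i.
context_data = {
    "loving the landscape": "青山绿水白云间",
    "missing relatives and friends": "举头望明月低头思故乡",
}
vocab = "".join(sorted(set("".join(context_data.values()))))
context_rnn = ToyCharRNN(vocab, seed=1)  # plays the Context CharRNN
poetry_rnn = ToyCharRNN(vocab, seed=2)   # plays the Poetry CharRNN (same hidden size)

for emotion, verses in context_data.items():
    state_i = context_rnn.final_state(verses)               # last hidden state for Q_i
    line = poetry_rnn.generate("山", h0=state_i, length=6)  # h0 = state_i
    print(emotion, line)
```

Because the weights are random, the printed lines are meaningless; with trained networks, the differing $\mathrm{state}_i$ values are what steer the same keyword towards different contexts.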

4|EXPERIMENTS

4.1|Multi‐label image classification

As no parallel image‐poetry dataset is available for image‐to‐poetry generation, we construct our own dataset. It mainly consists of natural landscape images covering 10 kinds of object elements that commonly appear in Chinese classical poetry: “Desert”, “Mountain”, “Sea”, “Sunset”, “Tree”, “Moon”, “Flower”, “Grass”, “Man” and “Cloud”. We collect 400 images for each element, giving 4000 distinct images in total, of which 800 are used for validation and the remaining 3200 for training.

FIGURE 3 The Context CharRNN

The parameters of the multi‐label image classification model are trained on our dataset with the Keras framework. Specifically, 64 convolution kernels of size 3×3 are used to extract image features, and a dropout ratio of 0.25 is applied after max pooling with a 2×2 window. The model is trained with the SGD algorithm [33]; because our dataset is small, the mini‐batch size is left unset (None), in which case Keras's fit falls back to its default batch size of 32. We train the model with binary cross‐entropy loss for 600 epochs. By the 50th epoch, the accuracy on both the training and the test data no longer changes significantly, with 100% accuracy on the training data and 95.15% on the test data. Because the model outputs a probability for each label rather than a set of labels, the best decision threshold for each label has to be found after training. In this paper, the Matthews correlation coefficient (MCC) is used to determine the best threshold for each label, as shown in Equation (5).
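
Equation (5) did not survive extraction as text. The standard definition of the Matthews correlation coefficient, written in terms of the TP, TN, FP and FN counts defined in the next paragraph, is:

```latex
\begin{equation}
\mathrm{MCC} = \frac{TP \times TN - FP \times FN}
{\sqrt{(TP + FP)(TP + FN)(TN + FP)(TN + FN)}} \tag{5}
\end{equation}
```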

where TP is the number of test images for which both the predicted and actual results are positive, TN is the number of test images for which both are negative, FP is the number of test images predicted positive but actually negative, and FN is the number of test images predicted negative but actually positive. An image is then labelled with a given label if its predicted probability for that label exceeds the label's threshold.
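
The per‐label threshold search can be sketched as a sweep over candidate thresholds that keeps the one with the highest MCC. The candidate grid, the toy data, and the function names are assumptions for illustration; the paper does not specify how candidates were chosen.

```python
import math
from typing import Sequence


def mcc(tp: int, tn: int, fp: int, fn: int) -> float:
    """Matthews correlation coefficient; returns 0.0 when it is undefined."""
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return 0.0 if denom == 0 else (tp * tn - fp * fn) / denom


def best_threshold(y_true: Sequence[int], probs: Sequence[float],
                   candidates: Sequence[float]) -> float:
    """Pick the candidate threshold that maximises MCC for one label.

    An image gets the label when its probability strictly exceeds the threshold.
    """
    best_t, best_score = candidates[0], -2.0  # MCC lies in [-1, 1]
    for t in candidates:
        pred = [1 if p > t else 0 for p in probs]
        pairs = list(zip(y_true, pred))
        tp = sum(1 for y, yp in pairs if y == 1 and yp == 1)
        tn = sum(1 for y, yp in pairs if y == 0 and yp == 0)
        fp = sum(1 for y, yp in pairs if y == 0 and yp == 1)
        fn = sum(1 for y, yp in pairs if y == 1 and yp == 0)
        score = mcc(tp, tn, fp, fn)
        if score > best_score:
            best_t, best_score = t, score
    return best_t


# Toy held-out data for a single label (not from the paper).
y = [1, 1, 0, 0, 1, 0]
p = [0.9, 0.7, 0.6, 0.2, 0.8, 0.4]
grid = [i / 100 for i in range(1, 100)]
print(best_threshold(y, p, grid))  # → 0.6
```

Running the search once per label yields one threshold per label, matching the per‐label decision rule described above.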

4.2|Keywords expansion

FIGURE 4 The Poetry CharRNN

FIGURE 5 The overall schematic of Dual‐CharRNN

TABLE 2 Examples from the keyword expansion experiment

We adopt the planning‐based model proposed by Wang et al. [29] to expand new keywords. First, we collect 76,859 classical Chinese poems from the Internet and use the TextRank algorithm [34] to extract a sequence of four keywords from each four‐line poem, one keyword per line, giving a total of 76,859 keyword sequences. Then, 4000 keyword sequences are randomly selected to form the test and validation datasets, with 2000 keyword sequences in each. Table 2 gives part of the keyword expansion results.
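
A minimal TextRank sketch is given below to show how importance scores and per‐line keywords could be obtained. It is a generic version of the algorithm, not the exact procedure of [29] or [34]: it assumes the poems are already segmented into words, links words that are adjacent within the same line (window of 2), uses a damping factor of 0.85 with a fixed 50 iterations, and picks the highest‐scoring word of each line. The toy corpus and names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List


def textrank(sentences: List[List[str]], window: int = 2,
             d: float = 0.85, iters: int = 50) -> Dict[str, float]:
    """Score words with TextRank on an undirected co-occurrence graph.

    Words are linked when they occur within `window` tokens of each other in the
    same (pre-segmented) line; scores come from PageRank-style iteration.
    """
    neighbours: Dict[str, set] = defaultdict(set)
    for tokens in sentences:
        for i, w in enumerate(tokens):
            for j in range(i + 1, min(i + window, len(tokens))):
                if tokens[j] != w:
                    neighbours[w].add(tokens[j])
                    neighbours[tokens[j]].add(w)
    scores = {w: 1.0 for w in neighbours}
    for _ in range(iters):
        scores = {w: (1 - d) + d * sum(scores[u] / len(neighbours[u]) for u in neighbours[w])
                  for w in neighbours}
    return scores


def keyword_per_line(lines: List[List[str]], scores: Dict[str, float]) -> List[str]:
    """Pick the highest-scoring word of each line, e.g. four keywords for a four-line poem."""
    return [max(line, key=lambda w: scores.get(w, 0.0)) for line in lines]


# Toy segmented corpus (hypothetical).
corpus = [["山", "月"], ["山", "云"], ["山", "水"], ["江", "月"]]
scores = textrank(corpus)
print(keyword_per_line([["江", "月"], ["云", "山"]], scores))  # → ['月', '山']
```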

4.3|Generate poems in different contexts

Unlike image captioning, which aims to generate descriptions that faithfully reflect the image content, poetry generation is a much more creative and challenging task. In the poetry generation stage, we use the Dual‐CharRNN model to generate poems in different contexts.

More specifically, on the one hand, we adopt the Poetry CharRNN pre‐trained on 57,580 original Tang poems collected from the Internet; the number of lines of each generated poem is set to 4, and the maximum length of each line is set to 12. On the other hand, to generate poems in different contexts, we first collect verses expressing different emotions from the ancient poetry website, including “praising the spring”, “yearning for the countryside”, “loving the landscape” and “missing relatives and friends”, among others. The poetry context dataset consists of these verses, each paired with the emotion it conveys. The Context CharRNN is then trained on the poetry context dataset, and its last hidden state is assigned to the initial hidden state of the Poetry CharRNN to control the context of the poem to be generated. Table 3 gives two examples of poems generated in different contexts by our Dual‐CharRNN model.

4.4|Image captioning

In image‐inspired Chinese classical poetry generation without an image‐poetry paired dataset, we initially attempted to extract element‐related keywords from the given image with image captioning methods trained on common captioning datasets.

There are two types of datasets we can make use of. The first is English image captioning datasets, for example, MS COCO and Flickr30k. To generate poetry in Chinese, Reference [14] maps the English keywords extracted from English captions to synonymous Chinese keywords in the poetry corpus. The problem is that there is no one‐to‐one correspondence between English and Chinese words. For example, the English word “people” can be mapped to Chinese words such as “佳人 (beauty)”, “才子 (gifted scholar)”, “翁 (old man)” and “媪 (old woman)”, which makes it hard to automatically choose suitable Chinese keywords when generating poems. We therefore considered generating poems in English from English keywords first and then translating them into Chinese. However, the two languages differ considerably in word usage, language structure, and mode of thinking, so the translated poems are not comparable, in either content or context, to poems generated directly from Chinese keywords.

The second type is Chinese image captioning datasets, for example, AI Challenger 2017 [35], which consists of 300,000 images and 1.5 million Chinese captions. We train one of the most advanced image captioning models, “Show, Attend and Tell” [16], on AI Challenger 2017, using 210,000 images for training, 30,000 for validation, and the remaining 60,000 for testing, and use it to obtain Chinese captions for the input images. Some of the results are shown in the last row of Table 3. As the captions show, the “Show, Attend and Tell” model trained on AI Challenger 2017 describes landscape images poorly. Moreover, regardless of whether there are people in the image, words related to “people” appear in the generated captions. The main reason is that AI Challenger 2017 contains many images of people, whereas our task focuses on landscape images. Because the two kinds of images differ greatly, the captions are poor, and the keywords extracted from them are correspondingly poorly matched to the image. Taking all of the above into account, we employ multi‐label image classification as a bridge to extract keywords from the image.

5|EVALUATION

5.1|Automatic evaluation

Evaluating a text generation system accurately and automatically remains a very difficult problem. For image captioning, common evaluation metrics are BLEU [36–38], ROUGE [39], METEOR [40] and CIDEr [41]. However, given the content, context, and rhythm of a poem, these metrics clearly cannot effectively evaluate the quality of machine‐generated poems. In addition, all of these metrics correlate only weakly with human judgements [28], so the poems generated by our model cannot be fully evaluated with them.

TABLE 3 Two examples of the experimental results

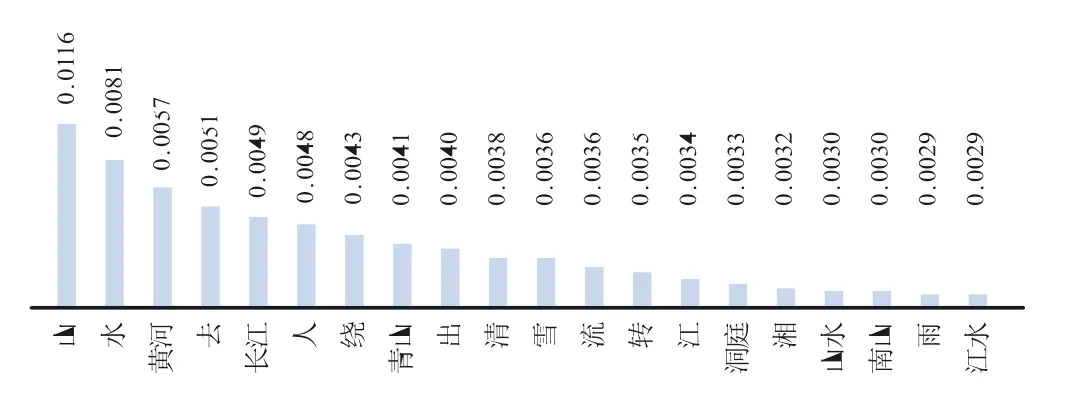

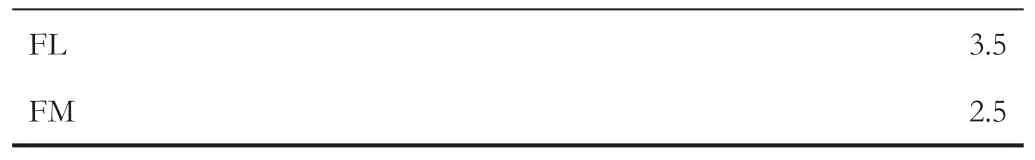

Previous works mainly concentrate on generating coherent poetry without considering context. In this paper, we pay more attention to the contexts of the generated poetry. To make a preliminary test of the effect of context, we use the TextRank algorithm to extract keywords from the poetry context datasets expressing the emotions “loving the landscape” and “missing relatives and friends”, respectively, and calculate an importance score for each keyword. The TOP 20 keywords with the highest importance scores are shown in Figures 6 and 7. We also count the average frequency with which the TOP 20 keywords appear in the poems generated in each context. The results are shown in Table 4, where FL is the average frequency of the keywords in Figure 6 in the poems generated in the “loving the landscape” context, and FM is the average frequency of the keywords in Figure 7 in the poems generated in the “missing relatives and friends” context.

FIGURE 6 TOP 20 keywords in the poetry context dataset of the “loving the landscape” context

FIGURE 7 TOP 20 keywords in the poetry context dataset of the “missing relatives and friends” context

TABLE 4 The frequency of keywords

According to Table 4, on average 3.5 of the keywords in Figure 6 appear in each poem generated in the “loving the landscape” context, while 2.5 of the keywords in Figure 7 appear in each poem generated in the “missing relatives and friends” context. These matching results provide preliminary evidence that poetry generated from images in different contexts conveys emotions related to the specified context.
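
The statistic in Table 4 can be sketched as the average number of TOP 20 keywords found per generated poem. We assume each distinct keyword is counted at most once per poem and matched as a substring; the paper does not state its exact counting rule, and the poems and keywords below are toy data, not the paper's.

```python
from typing import List


def average_keyword_hits(poems: List[str], keywords: List[str]) -> float:
    """Average number of distinct keywords found (as substrings) per poem."""
    if not poems:
        return 0.0
    hits = [sum(1 for k in set(keywords) if k in poem) for poem in poems]
    return sum(hits) / len(poems)


# Toy example (not the paper's data): 2 hits in the first poem, 1 in the second.
poems = ["青山白云间", "举头望明月"]
top_keywords = ["山", "云", "月"]
print(average_keyword_hits(poems, top_keywords))  # → 1.5
```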

5.2|Human evaluation

5.2.1|Evaluation metrics

Evaluating the quality of Chinese classical poetry is very challenging because poetry, like other works of art, relies on intuition, and the quality of a poem cannot be accurately inferred through mathematical logic. In addition, just as we cannot decide whether the poetry of Li Bai or that of Du Fu is superior, poetry is a highly creative way of expressing personal emotions. Poetry is oriented to the public, and good poetry should be accepted and liked by the public. Following [23, 30, 42], we use three standards to evaluate the quality of the generated poems, namely semantic consistency, readability, and aesthetics:

· Semantic consistency (S): Whether the theme expressed in the poem corresponds to the image.

· Readability (R): Whether each sentence of the poem is fluent, and whether all sentences are coherent.

· Aesthetics (A): Whether the poem expresses aesthetics and emotions poetically.

5.2.2|Baselines

Sequence model [14]: Based on the Seq2Seq framework, it uses LSTM networks as the encoder and decoder to generate poetry.

PPG [29]: It first plans the subtopics of the poem according to the user's writing intention and then uses an improved RNN encoder‐decoder to generate each line of the poem, ensuring coherence.

XiaoIce [18]: A chatbot system from Microsoft that generates poems based on images.

Constrained Topic‐aware Model (CTAM) [30]: It embeds the visual content of an image into a visual semantic vector to create modern Chinese poetry.

H: Human‐written poems. To provide ground truth for the images, we manually annotated some of the images with related Chinese classical poems to form image‐poetry pairs.

5.2.3|Implementation details

To conduct the human evaluation, we use questionnaires in which participants evaluate our experimental results. The questionnaire consists of a portion of the image‐poetry pairs from our experimental results and some human‐made image‐poetry pairs, each followed by five questions. Participants rate the semantic consistency, readability, and aesthetics of each poem on a five‐point scale, where 1 is the lowest score and 5 the highest. In addition, for each image‐poetry pair, we list six common poetic contexts and ask participants which context the poem conveys and whether they think the poem was written by a human.

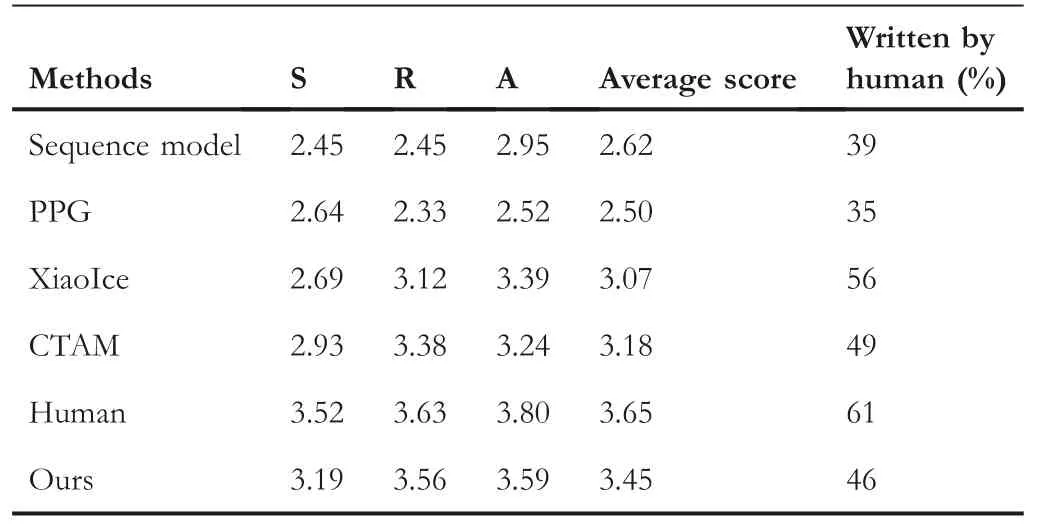

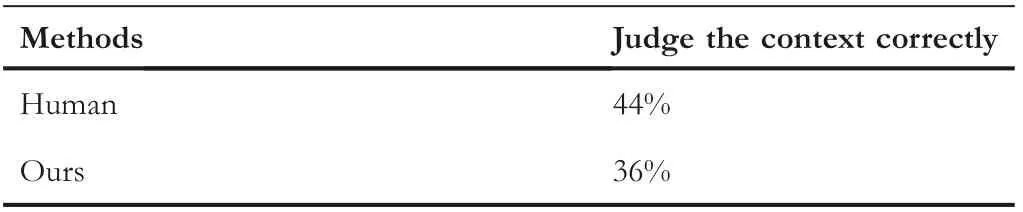

We distribute the questionnaires to the public on the WeChat platform. The participants are aged between 20 and 55. We ask them to read each poem patiently and answer each question carefully. We target the general public rather than experts in Chinese language and literature for two reasons. First, poets do not write poetry to be commented upon or appreciated by fellow poets, but to make the public understand what they want to express and how they feel. Second, no matter how classic or good a poem is in the experts' opinion, it cannot be called a good poem if it does not resonate with the public, at least not yet. Finally, we assess the overall quality of the image‐poetry pairs through the average scores for semantic consistency, readability, and aesthetics over 130 valid questionnaires, and report the percentage of participants who consider the poems to be written by humans. We also report the percentage of participants who select the context correctly. The evaluation results are shown in Tables 5 and 6.
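
The aggregation described above can be sketched as follows: per‐criterion means, an overall score taken as the mean of the three criterion means, and the two reported percentages. The response format, field names, and the two responses are made up purely to show the arithmetic; they are not the paper's data, and the paper does not specify how the overall average was weighted.

```python
from statistics import mean
from typing import Dict, List


def summarise(responses: List[Dict]) -> Dict[str, float]:
    """Aggregate questionnaire answers for one model (or for human-written poems)."""
    s = mean(r["S"] for r in responses)
    rd = mean(r["R"] for r in responses)
    a = mean(r["A"] for r in responses)
    return {
        "S": s, "R": rd, "A": a,
        "overall": mean([s, rd, a]),  # assumed: unweighted average of the three criteria
        "pct_judged_human": 100 * mean(1 if r["judged_human"] else 0 for r in responses),
        "pct_context_correct": 100 * mean(
            1 if r["chosen_context"] == r["true_context"] else 0 for r in responses),
    }


# Two made-up responses, purely to show the arithmetic.
responses = [
    {"S": 4, "R": 3, "A": 4, "judged_human": True,
     "chosen_context": "loving the landscape", "true_context": "loving the landscape"},
    {"S": 3, "R": 4, "A": 2, "judged_human": False,
     "chosen_context": "missing relatives and friends", "true_context": "loving the landscape"},
]
print(summarise(responses))
```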

5.3|Results analysis

Table 5 shows, on the one hand, that human‐written poems get the highest scores on all criteria: semantic consistency, readability, and aesthetics. Meanwhile, 61% of the participants correctly identify the human‐written poems as written by humans, which shows that human‐written poems are more distinguishable than machine‐generated ones. Moreover, among all the poetry generation models, ours obtains an average score of 3.45, second only to the average score of human‐written poems, which suggests that setting the poetic context effectively improves the semantic consistency, readability, and aesthetics of the generated poems. Besides, compared with four‐line, four‐sentence poems, our four‐line, eight‐sentence poems express more content and artistic conception, which contributes to the overall quality of the generated poetry. On the other hand, only 46% of the participants consider the poetry generated by our model to be written by humans, a lower percentage than for XiaoIce and CTAM. After analysing the results and talking with questionnaire participants, we found that although the sentences generated by our model were fluent, coherent, and poetic, the poetry lacked a certain rhythm, which allowed more participants to distinguish the poems created by our model from those written by humans.

TABLE 5 Human evaluation results of the poetry

TABLE 6 Human evaluation results of the context

The poetry generated by our model conveys a specific poetic context: Table 6 shows that 36% of the participants correctly match the poems to the context we set during the experiment, while 44% of the participants correctly judge the context of human‐written poems. This suggests that it is difficult for people to decide which context a poem expresses, even for poems written by real poets, because most poems convey more than one context. Multiple contexts cause some confusion for evaluators, leading to low percentages in both cases. However, because the two results in Table 6 differ only slightly, combining contexts with poetry appears to be worthwhile.

6|CONCLUSION

In this work, we present Images2Poem with a Dual‐CharRNN model that automatically generates a classical Chinese poem describing an image in different contexts. First, we exploit multi‐label image classification as a bridge to convey visual semantics by extracting a few keywords related to the object elements in the given image. Then, these keywords are expanded into related ones with the planning‐based model. Finally, we employ Dual‐CharRNN to generate Chinese classical poetry in different contexts: the Context CharRNN captures the hidden contexts of different kinds of poetry, and the Poetry CharRNN generates poems within those contexts. Our experimental results show that, among all the poetry generation models, ours obtains the highest average score, second only to that of human‐written poems, which suggests that setting the poetic context can effectively improve the semantic consistency, readability, and aesthetics of the poetry. However, CharRNN is a character‐level model that focuses on individual characters without considering the whole sentence, so the generated poetry lacks rhythm. Besides, when the Context CharRNN is trained on the poetry context datasets, the same verse may appear in different datasets, which increases the ambiguity and uncertainty of the context. We plan to address these issues in future work.

ACKNOWLEDGEMENT

This work has been supported by the National Natural Science Foundation of China under Contract Nos. 61571453 and 61806218.

CONFLICT OF INTEREST

None.

DATA AVAILABILITY STATEMENT

The data that support the findings of this study are available from the corresponding author upon reasonable request.

ORCID

Jie Yan https://orcid.org/0000-0002-6079-6907

CAAI Transactions on Intelligence Technology, 2022, Issue 4
