A Content-Aware Bitrate Selection Method Using Multi-Step Prediction for 360-Degree Video Streaming

GAO Nianzhen, YU Yifang, HUA Xinhai, FENG Fangzheng, JIANG Tao

(1. Huazhong University of Science and Technology, Wuhan 430074, China; 2. ZTE Corporation, Shenzhen 518057, China)

Abstract: A content-aware multi-step prediction control (CAMPC) algorithm is proposed to determine the bitrate of 360-degree videos, aiming to enhance the quality of experience (QoE) of users and reduce the cost of video content providers (VCP). The CAMPC algorithm first employs a neural network to generate the content richness and combines it with the current field of view (FOV) to accurately predict the probability distribution of tiles being viewed. Then, for the tiles in the predicted viewport, which directly affect QoE, the CAMPC algorithm uses a multi-step prediction of future system states and accordingly selects the bitrates of multiple subsequent steps rather than relying on an instantaneous state. Meanwhile, it controls the buffer occupancy to eliminate the impact of prediction errors. We implement CAMPC in the players of a 360-degree video streaming platform that we built, and evaluate it against other advanced adaptive bitrate (ABR) rules over a real network. Experimental results show that CAMPC can save 83.5% of bandwidth resources compared with the scheme that completely transmits the tiles outside the viewport with the Dynamic Adaptive Streaming over HTTP (DASH) protocol. In addition, the proposed method improves the system utility by 62.7% and 27.6% compared with the official DASH rules and the viewport-based rule, respectively.

Keywords: DASH;content-aware FOV prediction;bitrate adaptation;multi-step prediction;generalized predictive control

1 Introduction

With the rapid development of 5G technologies, the immersive viewing experience led by virtual reality (VR) is becoming increasingly popular. The 360-degree video, one of the most critical components of VR applications, has attracted considerable attention on commercial streaming platforms such as YouTube and Bilibili. Although 360-degree video brings a brand-new experience to users, it also raises new challenges. Compared with conventional 2D video streaming, delivering 360-degree video requires much more bandwidth because of its panoramic nature.

Driven by the characteristics of the user field of view (FOV), researchers are exploring solutions that spatially divide a 360-degree video into small parts called tiles and transmit them at different video qualities to cope with the high bandwidth consumption. In addition, benefitting from the Dynamic Adaptive Streaming over HTTP (DASH) protocol, adaptive bitrate (ABR) algorithms have made an enormous contribution to video streaming, especially under dynamic wireless network conditions. Because the video is pre-encoded at multiple bitrates, the client can request video segments of different qualities to cope with network fluctuations.

Therefore, tile-based Hypertext Transfer Protocol (HTTP) adaptive streaming is a promising approach to achieving a better quality of experience (QoE) in a 360-degree video streaming system. The HTTP server usually crops the panoramic video into multiple tiles spatially, and then slices and encodes each tile into multi-bitrate segments. The client requests the most appropriate bitrate version of each tile based on the user's viewport and the current network status, decodes these tiles, and then renders them into a 360-degree video for playback. In general, tiles that overlap the viewport are delivered in high quality, while tiles outside the FOV are delivered in lower quality. Because the user can only see the area inside the FOV, a significant reduction in the video bitrate outside the viewport does not affect the user's experience; on the contrary, it saves bandwidth and transmission costs and avoids network congestion when multiple users are served.

In practice, because the user's viewing angle and the wireless network bandwidth are both random, low prediction accuracy leads to inappropriate bitrate versions being selected for the tiles in the viewport. To handle these prediction errors, QoE is protected by delivering the tiles around the predicted viewport at high quality and by keeping the buffer within a reasonable range so that playback does not stall while waiting for data. To allocate wireless bandwidth resources reasonably, it is necessary to estimate the future viewport distribution and network state, and to determine bitrate versions for the prefetched segments that match the overall network capacity.

Specifically, we propose a bandwidth resource scheduling algorithm for 360-degree video streaming, called content-aware multi-step predictive control (CAMPC), which uses an online predictor to estimate throughput. The content perception score obtained by an offline machine learning method and the online user viewport trace are used together to predict the probability distribution of future user viewport locations. For tiles with high viewport probability, the change of buffer occupancy in the next period is predicted based on the throughput estimate. The bitrate decision is made by optimizing the predicted QoE within the viewport together with the predicted future buffer occupancy. The remaining bandwidth is then distributed among the tiles with low viewport probability according to their viewing probabilities. The main contributions are as follows.

·We develop a content-aware method to predict the user’s viewport location.The grayscale image is obtained by a trained semantic segmentation model with each frame of the video after spatial partition as input,and the numbers of different grayscale pixels are calculated to obtain the content richness of the current frame.Since users prefer to view the frame with richer content,the probability distribution of future viewing is obtained by the weighted content richness with the current user viewport.

·We propose a multi-step predictive bitrate adaptation algorithm to generate prospective bitrate decisions for players with high probability in the future viewport,which includes predicting network throughput using the Kalman filter,predicting buffer occupation,and solving predictive control problems using the generalized predictive control method.

·We build a 360-degree video streaming platform to implement the proposed bandwidth resource scheduling algorithm and to evaluate the network status algorithm over a practical network. Experimental results show that, compared with existing online bandwidth resource scheduling algorithms, the proposed algorithm saves bandwidth while ensuring the quality of user experience. Compared with the complete transmission of 360-degree videos, the bandwidth for the tiles outside the viewport is reduced by 83.5%. CAMPC also improves the system utility by 62.7% compared with the low-on-latency-plus (LoL+) and DYNAMIC rules, which have been integrated into the official DASH.js player in v3.2.0, and by 27.6% compared with the most straightforward viewport-based bitrate adaptation algorithm.

The rest of the paper is organized as follows.Section 2 surveys related work on a tile-based 360-degree video streaming over DASH.Section 3 presents the system structure and QoE model for evaluation.Section 4 proposes the FOV prediction algorithm combining FOV and content priority.The bandwidth prediction and the bitrate selection algorithm are in Section 5.Section 6 describes the system implementation and throughput measurement in reality besides the performance evaluation.Finally,Section 7 concludes the paper and outlines future directions.

2 Background and Motivation

The 360-degree video is constructed by camera splicing.To play a 360-degree video,the client needs to run on a custom 360-degree video player or head-mounted displays (HMDs) to render the video.Some commercial 360-degree video content providers usually employ a simple approach that streams the entire panoramic content regardless of the viewport[1],such as the widely used equirectangular projection (ERP) format,which causes considerable waste of wireless bandwidth resource,as users always pay attention to only a tiny portion of the panoramic scene in their viewports.

Inspired by these observations,several studies have abandoned traditional flat video transmission methods and begun to propose tile-based solutions that adaptively fetch only the content inside the predicted FOV or fetch the content in FOV with higher quality than the parts out of FOV to meet the demand of 360-degree video streaming systems.XIE et al.[2]leveraged a probabilistic approach to prefetch tiles countering viewport prediction errors,apparently reduced the side effects caused by wrong head movement prediction,and designed a QoE-driven viewport adaptation system.QIAN et al.[3]adopted a viewport-adaptive approach and formulated an optimization algorithm to determine the tile quality,achieving high bandwidth reduction and video quality enhancement on Long Term Evolution (LTE).SONG et al.[4]proposed a two-tier streaming architecture using scalable video coding (SVC) techniques,which included two layers,namely,the basic layer (BL) and the enhanced layer (EL).In contrast,a fast-switching strategy was proposed by generating multiple video streams with different start times for each encoded enhanced layer chunk,which can be randomly accessed at any instant to adapt to the user viewport change immediately to achieve the optimal trade-off between video quality and streaming robustness.

According to the above research, tile-based 360-degree video transmission methods have been proven to save considerable bandwidth, and viewport prediction and bandwidth prediction are two of the most critical factors. To a great extent, the user's FOV is influenced by the video content. Conventional viewport prediction approaches focus on the past viewing behavior of many users who have watched the same or similar videos, based on the head movement trajectories in a dataset. SUN et al. [5] developed a truncated linear prediction method that uses only past samples that are monotonically increasing or decreasing for extrapolation. EPASS360 [6] studied the similarity of multi-user viewing spatial locations, looking for similar patterns across a wide range of data through a deep learning LSTM network. These approaches apply only to the video on demand (VOD) case, because no past viewing behavior is available when a live video is streamed for the first time. Pano [7] drew researchers' attention to the content of the video. FENG et al. [8] developed a new viewport prediction scheme for live 360-degree video streaming using video content-based motion tracking and dynamic user interest modeling. QIAO et al. [9] studied human attention over the viewport of 360-degree videos and proposed a novel visual saliency model to predict fixations over 360-degree videos through a multi-task deep neural network (DNN) method. YUAN et al. [10] proposed a simple yet effective rate adaptation algorithm to determine the requested bitrate for downloading the current video segment, preserving both the quality and the smoothness of tiles in the FOV. WEI et al. [11] proposed a hybrid control scheme for segment-level continuous bitrate selection and tile-level bitrate allocation for 360-degree streaming over mobile devices to increase users' quality of experience.

On the other hand,to optimize QoE in the DASH video streaming system,the bitrates decided by the client-side ABR algorithm should meet the bandwidth requirements.Throughput-based methods often employ various mechanisms to predict the end-to-end available bandwidth,such as Exponential Weighted Moving Average (EWMA) and Support Vector Regression.The estimation accuracy of throughput will affect the allocation decision.SOBHANI et al.[12]utilized Autoregressive-Moving-Average (ARMA)[13]and Generalized Autoregressive Conditional Heteroscedastic (GARCH) in order to predict the average and the variance of bandwidth.YUAN et al.[14]proposed an ensemble rate adaptation framework for DASH,which aims to leverage the advantages of multiple methods involved in the framework to improve the QoE of users.The buffer-based algorithm,such as BOLA[15],formulated bitrate adaptation as a utility maximization problem,devised an online control algorithm,and used Lyapunov optimization techniques to minimize rebuffering and maximize video quality.

However, to achieve a fast and smooth response across multiple players of the same 360-degree video, ABR algorithms should adapt quickly to sustained changes while avoiding the bitrate jitter caused by sudden throughput changes. Existing methods are inherently unable to achieve this goal, because with only a single step of predictive information they cannot determine whether a current change is transient or persistent. Thus, our work combines content awareness with the current viewport to calculate the viewing probability of spatial video blocks, and provides efficient network state quantification and prediction algorithms.

3 Proposed Framework

3.1 System Architecture

As shown in Fig. 1, the framework of the 360-degree video transmission system consists of a server and a set of video players. The server includes a preprocessing module and a sending module. The preprocessing module converts a 360-degree video from the ERP format to the cubemap projection (CMP) and separates it spatially into six tiles so that each tile corresponds to one face of the cube map. Each tile is then divided into a set of temporal segments and encoded at different bitrate levels according to DASH, and the information describing the structure of the bitrate representations for each tile is stored in the media presentation description (MPD). The sending module sends the segments at the specific bitrate selected by the ABR controller of the player.

The client includes a receiving module,video players,a system monitor,multi-step predictors,and bitrate decision engines.The receiving module receives and decodes the tiles to reconstruct the 360-degree video.Players extract and display the viewport corresponding to the current viewing direction of the user.The system monitor is responsible for monitoring the viewport,network status (e.g.,throughput),and player status(e.g.,buffer occupancy).Multi-step predictors and bitrate decision engines assist ABR controllers to compute the bitrate level of the next segment and return it to the server.Each multi-step predictor module calculates the future throughput through the network status,and the bitrate decision engines obtain the bitrate level by optimizing the target based on the multi-step throughput and buffer occupancy prediction.

3.2 Problem Formulation

The server divides a 360-degree video into $N$ tiles of the same size in space, corresponding to a cube map, and each tile $V$ is initialized as a DASH player. Then, each tile is sliced into $M \times K$ segments, meaning that there are $M$ bitrate versions, each divided into $K$ segments in time, and each segment has the same duration of $L$ seconds. The system encapsulates and stores the $N \times M \times K$ segments on an HTTP server for adaptive streaming.

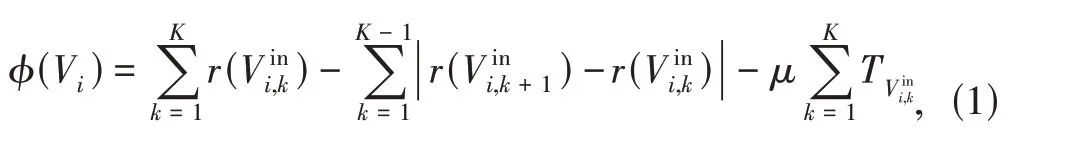

The serial number of tile $V$ is denoted by $i$, where $i \in \mathbb{Z}$. $V_{\mathrm{in}}$ and $V_{\mathrm{out}}$ indicate that the current tile is located inside and outside the viewport, respectively. For $V_{\mathrm{in}}$, the quality of experience is determined by three factors: the selected bitrate version, the bitrate fluctuation range, and the rebuffering time. Spatial tiles that are not in the viewport do not affect QoE. For this reason, the value of QoE is given by:

▲Figure 1.Streaming video system structure

The system ensures that the bandwidth is saved as much as possible when the QoE is the highest.The system utility includes the QoE and the bandwidth consumed compared with the situation where players request all chunks at the highest bitrate,which is:

We find a sequence of suitable bitrate versions for each tile $V_i$ to schedule bandwidth resources that maximize the system utility and satisfy:

Our solution consists of the following aspects:

·Prediction of the probability distribution of the viewport on spatial tiles,including viewport estimation and prediction based on content;

·Computation of multi-player total bandwidth and estimation of the bitrate constraint $C$ (or throughput);

·Decision on the optimal version of each tile.

In the following sections, we address each of these aspects.

4 Viewport Prediction

A distinctive feature of 360-degree video is that attention is not evenly distributed. Therefore, viewport-driven streaming is an effective way to improve the quality of 360-degree video, but predicting the viewpoint trajectory accurately remains challenging. Recent studies have proposed adaptive bitrate algorithms based on FOV prediction, but these algorithms have the following shortcomings. First, they do not consider the video content itself. Second, they depend strongly on the FOV, so any FOV prediction error is likely to cause a decline in video quality and even significant rebuffering. Therefore, in this section, we propose a viewport prediction approach based on both FOV priority and content priority.

4.1 FOV priority

The system divides the 360-degree video into six faces corresponding to a cube map as in Fig. 2(a), where six video tiles are placed on the six faces of the cube. The browser renders it into a sphere, and the VR user is located at the center of the sphere, observing its surface. The red point is the center point of each face of the cube, with the Cartesian coordinates of the point in brackets. The spherical coordinates of each tile mapped onto the sphere are shown in Table 1, expressed as latitude and longitude; the latitude and longitude centers are the spherical coordinates of the center of the tile. In Fig. 2(b), the green area shows the user's FOV, the yellow area indicates that a part of the current tile is inside the FOV and may be viewed later, and the red area indicates that the current tile is entirely outside the FOV. We calculate the overlap between the tile and the FOV according to Table 2 to get the priority of the tile. The higher the value, the larger the proportion of the tile inside the FOV, and the higher the bitrate version that should be buffered later.

▲Figure 2. (a) 360-degree video segmentation that the content-aware multi-step prediction control algorithm (CAMPC) uses to judge the importance of tiles; (b) example of FOV priority allocation according to the FOV at a certain moment

The FOV of common head-mounted devices is about 110 degrees.Since the final verification scene is a browser window,obviously it is easier to obtain the spherical coordinates of the center of the window.We define the priority of each tile based on the relative position of each face and the center of the viewport.As shown in Table 2,the system divides the priority into five levels: 100,75,50,25,and 0.In order to obtain the final FOV priority,the adaptive bitrate algorithm traverses latitude and longitude in order from high score to low score to find the mapping interval of the two dimensions.

▼Table 1.Spherical coordinates of tiles

▼Table 2.Tile priority and spherical coordinates mapping relations

4.2 Content Priority

Different from predicting the future viewport based only on the online viewport,the system server will convert the original video into frames in advance,and perform operations such as gradient calculation and semantic segmentation through the pre-trained neural network model,to get the priority of bitrate allocation shown in Fig.2(b).

In this work, we mainly use FC-DenseNets with a U-Net structure as the feature extraction model. Through its 56-layer network, the model analyzes content features and then classifies and segments them. After training on 300 samples for 300 epochs, a good checkpoint is obtained, and the following semantic segmentation results are produced by using our own dataset as the test data. The segmented categories must be consistent with the categories used in training.

The original image shown in Fig. 3(a) is input into the trained network model to obtain the segmentation result in Fig. 3(b), which is then transformed into an image with only the grayscale values 0 and 255, as shown in Fig. 3(c). Despite some errors and unclear category boundaries, the results are generally sufficient to judge the priority of content perception. We count the number of black and white pixels in the resulting grayscale image. The more white pixels there are, the richer the image content. A higher content perception priority means that, in the MPD file of the video tiles, the bandwidth attribute corresponding to each bitrate level is relatively higher. In other words, the video segment has a larger file size than a segment with a lower perception priority, and it contains complex content that may grab the user's attention. After this process is performed on each frame of the original video, the average content richness of each video segment can be calculated. This information is stored in a JavaScript Object Notation (JSON) file, which the terminal requests and reads while allocating bandwidth among the multiple players.
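The pixel-counting step can be illustrated with a minimal sketch. It assumes that content richness is measured as the fraction of white (255) pixels in the binarized frame and that a segment's richness is the plain mean over its frames; the paper does not state the exact normalization, and the function names `frame_richness` and `segment_richness` are hypothetical.

```python
from statistics import mean

WHITE = 255  # grayscale value assumed to mark "content" pixels in the binarized mask


def frame_richness(mask):
    """Fraction of white pixels in one binarized frame (rows of 0/255 values)."""
    total = sum(len(row) for row in mask)
    if total == 0:
        return 0.0
    white = sum(1 for row in mask for px in row if px == WHITE)
    return white / total


def segment_richness(frames):
    """Average richness over all frames that belong to one video segment."""
    return mean(frame_richness(frame) for frame in frames)


# Two tiny 2x2 "frames": 3 of 4 pixels white, then 1 of 4 pixels white.
frame_a = [[0, 255], [255, 255]]
frame_b = [[0, 0], [0, 255]]
print(frame_richness(frame_a))                # 0.75
print(segment_richness([frame_a, frame_b]))   # 0.5
```

The per-segment values produced this way could then be serialized into the JSON file that the players read.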

4.3 Probability Distributions of Future FOV

The viewport distribution probability $\alpha_i$ of any tile $V_i$ in the future is determined by the FOV priority $S_F$ and the content perception priority $S_C$. The total priority of a tile is calculated as $S = \omega_F S_F + \omega_C S_C$, where $\omega_F$ and $\omega_C$ are the weights of $S_F$ and $S_C$, respectively. The weights depend on the current buffer occupancy $B_{f,k}$:

·$B_{f,k} \ge B_r - L$ (with $\omega_F = 0.3$, $\omega_C = 0.7$): when the video buffer is sufficient, more emphasis is placed on the score based on the richness of the video content. Here $B_r$ is the length of the safe buffer that players want to keep, and $L$ is the duration of a video segment.

·$B_{f,k} \le L$ (with $\omega_F = 0.8$, $\omega_C = 0.2$): when the buffered video is insufficient and playback is in danger of rebuffering, the FOV score is emphasized.

·$L \le B_{f,k} \le B_r - L$ (with $\omega_F = 0.5$, $\omega_C = 0.5$): when the buffer occupancy is within the normal range, the content score and the FOV score contribute equally to the priority.
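The three buffer regimes above can be sketched as a small lookup. This is only an illustration: the function names are hypothetical, the boundary cases (where the stated intervals overlap) are resolved here by checking the low-buffer case first, both scores are assumed to share the 0 to 100 scale of the FOV priority, and the final normalization of scores into probabilities $\alpha_i$ is an assumption that the text does not spell out.

```python
def priority_weights(buffer_s, safe_buffer_s, segment_s):
    """Return (w_F, w_C) for the current buffer occupancy, all values in seconds."""
    if buffer_s <= segment_s:
        return 0.8, 0.2   # near rebuffering: emphasize the FOV score
    if buffer_s >= safe_buffer_s - segment_s:
        return 0.3, 0.7   # buffer sufficient: emphasize content richness
    return 0.5, 0.5       # normal range: equal influence


def total_priority(s_fov, s_content, buffer_s, safe_buffer_s, segment_s):
    """S = w_F * S_F + w_C * S_C for one tile."""
    w_f, w_c = priority_weights(buffer_s, safe_buffer_s, segment_s)
    return w_f * s_fov + w_c * s_content


def viewing_probabilities(scores):
    """Assumed normalization: alpha_i = S_i / sum(S)."""
    total = sum(scores)
    if total == 0:
        return [1.0 / len(scores)] * len(scores)
    return [s / total for s in scores]


# Safe buffer of 6 s and 1 s segments, as used for in-viewport tiles in Section 6.
print(priority_weights(0.5, 6.0, 1.0))   # (0.8, 0.2)
print(priority_weights(3.0, 6.0, 1.0))   # (0.5, 0.5)
print(priority_weights(5.5, 6.0, 1.0))   # (0.3, 0.7)
```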

5 Adaptive Bitrate

5.1 Video Streaming Model

▲Figure 3.Analyzing the priority of content perception under the semantic segmentation model

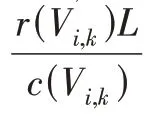

To describe the relationship between the bitrate change and the evolution of buffer occupancy, we take the difference between Eqs. (4) and (5). Since previous studies have shown that the throughput of a cellular network does not change significantly over a short period of time, we assume $c(V_{i,k+1}) = c(V_{i,k})$ and use $\xi(V_{i,k})$ to express the impact of this assumption, which yields

The adaptive bitrate algorithm among multiple players needs to balance a set of conflicting QoE elements, such as maximizing video quality, minimizing rebuffering events, and limiting quality fluctuations. For chunks in $V_{\mathrm{in}}$, which directly affect the QoE, we optimize the QoE metrics over the multi-step prediction horizon and at the same time control the future buffer occupancy. For chunks in $V_{\mathrm{out}}$, which are clearly less important than chunks inside the FOV, we subtract the used bandwidth from the predicted bandwidth to obtain the currently available bandwidth, and then allocate this remaining bandwidth to each tile according to $(\alpha_1, \alpha_2, \dots, \alpha_N)$. Note that the tiles contained in the sets $V_{\mathrm{in}}$ and $V_{\mathrm{out}}$ are updated in real time as the FOV changes.

Since the real network status is hard to predict accurately, the algorithm must be robust to prediction errors. Minimizing the buffer control error achieves quality maximization and rebuffering minimization simultaneously by keeping the buffer occupancy at a constant level $B_r$. However, because the throughput changes, accurate control of buffer occupancy requires frequent quality switching. Since changes in buffer occupancy do not affect QoE directly whereas switching bitrates reduces QoE, the algorithm should tolerate some buffer occupancy fluctuation but limit the variability of video quality. Therefore, the optimization goal is to minimize a weighted combination of the buffer control error and the bitrate change $\Delta r(V_{i,k}) = r(V_{i,k}) - r(V_{i,k-1})$ between two consecutive video chunks. The total cost function from chunk 1 to chunk $K$ for any tile in $V_{\mathrm{in}}$ is then expressed as

where $\lambda_k$ is the penalty for changing the bitrate at $V_{i,k}$. The algorithm controls $b(V_{i,k+1})$ to smoothly approach the target level $B_r$ along the reference trajectory $b_r(V_{i,k+1}) = \beta b(V_{i,k}) + (1 - \beta) B_r$, where $\beta \in [0, 1)$. A smaller $\beta$ means moving towards $B_r$ faster. The definition of the cost function allows us to meet the requirements of different users for video playback. A larger $\lambda$ is employed if users are more concerned about smooth playback, and a smaller $\lambda$ is adopted if they do not care about bitrate variations.
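The effect of $\beta$ on the reference trajectory can be seen in a short sketch. It iterates the recurrence $b_r(V_{i,k+1}) = \beta b(V_{i,k}) + (1 - \beta) B_r$ under the simplifying assumption that the actual buffer follows the reference exactly at every step; the function name and the example values (2 s initial buffer, 6 s target) are illustrative only.

```python
def reference_trajectory(b0, target, beta, steps):
    """Iterate b_r(k+1) = beta * b(k) + (1 - beta) * B_r.

    Assumes the real buffer tracks the reference perfectly, so each new
    reference point is computed from the previous one.
    """
    if not 0.0 <= beta < 1.0:
        raise ValueError("beta must lie in [0, 1)")
    levels = []
    b = b0
    for _ in range(steps):
        b = beta * b + (1.0 - beta) * target
        levels.append(b)
    return levels


fast = reference_trajectory(2.0, 6.0, beta=0.2, steps=3)
slow = reference_trajectory(2.0, 6.0, beta=0.8, steps=3)
print(fast)  # climbs to within about 0.03 s of the 6 s target in three steps
print(slow)  # still below 4 s after three steps
```

With $\beta = 0$ the reference jumps to $B_r$ in a single step, which is the most aggressive setting the constraint $\beta \in [0, 1)$ allows.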

When the average throughput $c(V_{i,k}), \dots, c(V_{i,K})$ of downloading the $K$ future chunks is known, the bitrates $r(V_{i,k}), \dots, r(V_{i,K})$ allocated to these chunks can be obtained by minimizing the cost function. Therefore, the bitrate selection for $V_{i,k}$ in $V_{\mathrm{in}}$ can be formulated as an optimal predictive control problem over an $N$-step horizon.

5.2 Link Bandwidth Predictor

The algorithm first gets the real-time throughput and then uses the Kalman filter method to obtain the predicted value used in the bitrate selector for control optimization.

The Kalman filter dynamically adjusts its parameters to output an estimated value for online prediction. The prediction is based on two equations: a dynamic state equation that describes the dynamics of the hidden state (e.g., the predicted bandwidth) and a static output equation that describes the relationship between the hidden state and the measured value (e.g., throughput). The Kalman filter, which can filter out temporary throughput fluctuations and reflect stable changes, matches the observations in previous work that the evolution of the throughput within a session exhibits stateful characteristics and that the throughput is essentially Gaussian within each state.

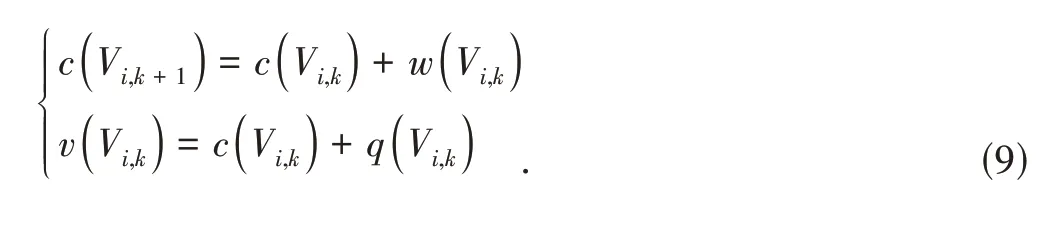

Let $v(V_{i,k})$ denote the video throughput measured when downloading chunk $V_{i,k}$. Recent studies show that the observed throughput fluctuates around the link capacity following a Gaussian distribution. Therefore, $v(V_{i,k})$ is modeled as the sum of the capacity $c(V_{i,k})$ and the measurement noise $q(V_{i,k})$, whose variance is denoted by $Q = E[q(V_{i,k})^2]$. Finally, the whole system model is given by:

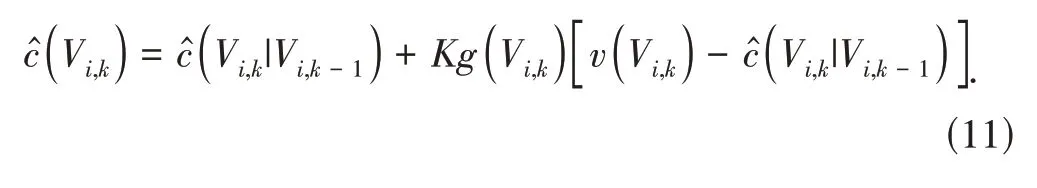

The Kalman filter consists of model prediction and measurement correction. In the prediction stage, the Kalman filter uses the previous estimate of the link capacity $\hat{c}(V_{i,k-1})$ to predict the current state:

Then the initial estimate of capacity $\hat{c}(V_{i,k} \mid V_{i,k-1})$ is corrected to a new estimate $\hat{c}(V_{i,k})$ by the measurement correction model. The correction equation is:

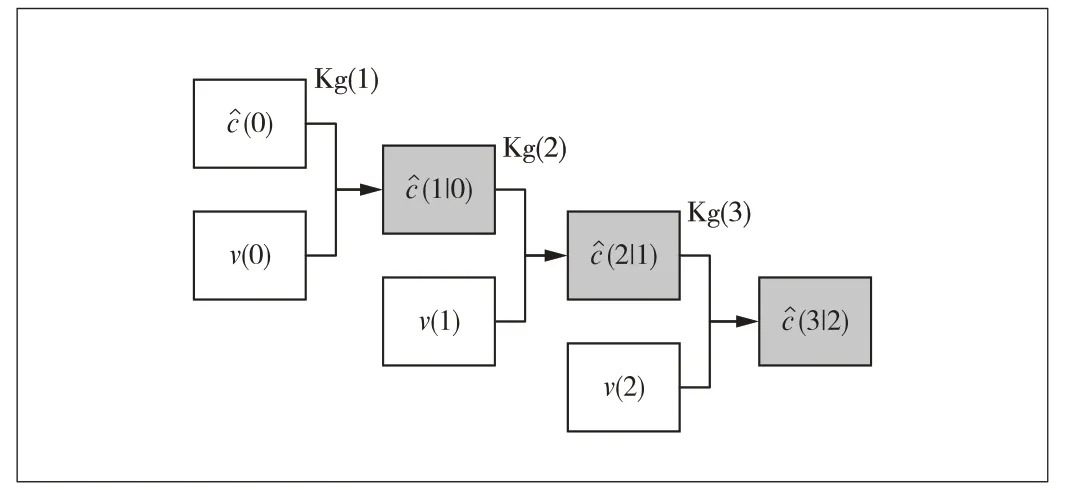
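The predict-and-correct cycle can be sketched as a textbook scalar Kalman filter. This is a minimal illustration rather than the exact equations of the paper: it assumes a random-walk state model (consistent with the assumption $c(V_{i,k+1}) = c(V_{i,k})$ plus noise), a constant measurement noise variance chosen here only for the example, and a hypothetical class name `ScalarKalman`. The initial estimate, error variance, and process noise variance follow the values reported in Section 6.2.

```python
from dataclasses import dataclass


@dataclass
class ScalarKalman:
    estimate: float      # current capacity estimate (Mbit/s)
    error_var: float     # estimation error variance P
    process_var: float   # process noise variance W
    measure_var: float   # measurement noise variance Q (held constant in this sketch)

    def predict(self):
        """Prediction stage: a random-walk model keeps the estimate and inflates P by W."""
        return self.estimate, self.error_var + self.process_var

    def update(self, measured):
        """Correction stage: blend the prior with the measured throughput."""
        prior, prior_var = self.predict()
        gain = prior_var / (prior_var + self.measure_var)
        self.estimate = prior + gain * (measured - prior)
        self.error_var = (1.0 - gain) * prior_var
        return self.estimate


# Initial estimate 8, P = 7, W = 3 (Section 6.2); Q = 10 is an illustrative assumption.
kf = ScalarKalman(estimate=8.0, error_var=7.0, process_var=3.0, measure_var=10.0)
for throughput in [5.0, 5.2, 4.8, 9.0, 5.1]:
    print(round(kf.update(throughput), 3))
```

Because the gain stays below 1, the isolated spike to 9.0 Mbit/s moves the estimate only part of the way, which is the smoothing behavior the text attributes to the filter.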

▲Figure 4.Bandwidth predictor using a Kalman filter

The above process is executed continuously, producing the predicted throughput (the gray blocks in Fig. 5) each time the next chunk is buffered. To obtain the multi-step bandwidth prediction, the average throughput of the next five video chunks is predicted at each step. The average throughput measurements $v(V_{i,k+1})$, $v(V_{i,k+2})$, $v(V_{i,k+3})$, and $v(V_{i,k+4})$ are unknown while chunk $V_{i,k}$ is being downloaded, but the network throughput can be regarded as approximately stable for a while [17–18]. Therefore, it is assumed that the measured throughput of the following chunks satisfies $v(V_{i,k+1}) = v(V_{i,k+2}) = v(V_{i,k+3}) = v(V_{i,k+4}) = v(V_{i,k})$. Fig. 6 shows the prediction iteration process, which continues the one-step Kalman filter. Blocks of the same color have the same predicted value, and the gray blocks have the same values as the corresponding blocks in Fig. 5. The initialization of the parameters involved in the process is described in Section 6.
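One possible reading of the iteration in Fig. 6 is to keep running the one-step filter while holding the latest measurement fixed for the unknown future measurements. The sketch below follows that reading; it is an interpretation under stated assumptions, not a transcription of the paper's procedure. The function names are hypothetical, the filter is the standard scalar form with a random-walk state model, and the measurement noise variance of 10 is illustrative.

```python
def kalman_step(estimate, error_var, measured, process_var, measure_var):
    """One predict-and-correct cycle of a scalar random-walk Kalman filter."""
    prior_var = error_var + process_var
    gain = prior_var / (prior_var + measure_var)
    return estimate + gain * (measured - estimate), (1.0 - gain) * prior_var


def multi_step_forecast(estimate, error_var, last_measured,
                        horizon=5, process_var=3.0, measure_var=10.0):
    """Forecast `horizon` chunks ahead, reusing the last measurement as v(k+1..k+4).

    Works on local copies, so the caller's live filter state is left untouched.
    """
    forecasts = []
    for _ in range(horizon):
        estimate, error_var = kalman_step(
            estimate, error_var, last_measured, process_var, measure_var
        )
        forecasts.append(estimate)
    return forecasts


# Previous estimate 8 Mbit/s with P = 7; the chunk just downloaded measured 5 Mbit/s.
print([round(c, 3) for c in multi_step_forecast(8.0, 7.0, 5.0)])
```

Under this reading the forecasts drift monotonically from the old estimate towards the held measurement, so a persistent throughput change shows up progressively over the horizon rather than as a single jump.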

5.3 Bitrate Selector

The CAMPC selects the bitrate according to the minimization cost function in Eq.(8) within the prediction range.To solve it,we first obtain the multi-step prediction of buffer occupancy based on the predicted link bandwidth.With the future buffer occupancy expressed by a bitrate function,the cost function can be derived to be only relevant to the variable video bitrate.Then,the bitrate that minimizes the cost function can be obtained.
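The selection idea can be illustrated with a deliberately simplified sketch. Several parts are assumptions rather than the paper's method: the buffer model (each chunk of duration $L$ takes $L \cdot r / c$ seconds to download and then adds $L$ seconds of video), the per-step cost (squared deviation from the reference trajectory plus $\lambda$ times the squared bitrate change), and the use of an exhaustive search over one constant bitrate per horizon in place of the generalized predictive control solution. The function names are hypothetical; the bitrate ladder is the one listed in Section 6.1.3.

```python
LADDER = [0.18, 0.45, 0.91, 3.10, 4.55, 6.05]  # Mbit/s, bitrate versions from Section 6.1.3


def predict_buffer(buffer_s, bitrate, capacities, segment_s=1.0):
    """Assumed buffer model: drain during the download, then gain one segment."""
    levels = []
    for capacity in capacities:
        download_s = segment_s * bitrate / capacity
        buffer_s = max(buffer_s - download_s, 0.0) + segment_s
        levels.append(buffer_s)
    return levels


def select_bitrate(buffer_s, prev_bitrate, capacities,
                   target_s=6.0, beta=0.5, lam=1.0, segment_s=1.0):
    """Pick the ladder entry with the lowest predicted cost over the horizon."""
    best, best_cost = LADDER[0], float("inf")
    for bitrate in LADDER:
        cost = lam * (bitrate - prev_bitrate) ** 2    # penalty for switching quality
        current = buffer_s
        for nxt in predict_buffer(buffer_s, bitrate, capacities, segment_s):
            reference = beta * current + (1.0 - beta) * target_s
            cost += (nxt - reference) ** 2            # buffer control error
            current = nxt
        if cost < best_cost:
            best, best_cost = bitrate, cost
    return best


print(select_bitrate(6.0, 3.10, [20.0] * 5))  # ample capacity: steps up one level
print(select_bitrate(1.0, 3.10, [0.5] * 5))   # scarce capacity: drops to the lowest level
```

Raising `lam` in this sketch makes the selector more reluctant to leave the previous bitrate, which mirrors the role of $\lambda$ in the cost function.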

After obtaining the average throughput $c(V_{i,k}), \dots, c(V_{i,K})$ of downloading the $K$ future chunks, the buffer occupancy at step $t$ is defined in terms of $b(V_{i,k})$ and $b(V_{i,k-1})$ through a recursive process:

▲Figure 5.Continuous bandwidth predictor working process

▲Figure 6.Multi-step bandwidth predictor working process

Then the buffer occupancy of the player $V_i$ is finally given by the following:

The above bandwidth scheduling method allocates bandwidth to the spatial tiles $V_{\mathrm{in}}$ that have a high probability of being in the future viewport. For the tiles $V_{\mathrm{out}}$ with a low probability of being in the FOV, the system first uses the one-step bandwidth predictor to obtain the predicted link capacity. After bandwidth has been allocated to $V_{\mathrm{in}}$, the difference between the predicted value and the consumed value gives the currently available bandwidth, which is scheduled to each tile outside the FOV according to the weights $(\alpha_1, \alpha_2, \dots, \alpha_N)$ given in Section 4.
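The allocation for tiles outside the FOV can be sketched as a proportional split followed by a mapping onto the bitrate ladder. The proportional split follows the text; the rest is assumed for illustration: the function name, the tile labels, the rule of picking the highest ladder entry that fits within each share, and the fallback to the lowest bitrate when a share is too small.

```python
LADDER = [0.18, 0.45, 0.91, 3.10, 4.55, 6.05]  # Mbit/s, bitrate versions from Section 6.1.3


def allocate_outside(predicted_mbps, used_inside_mbps, weights):
    """Split the leftover bandwidth among out-of-viewport tiles in proportion to alpha_i.

    `weights` maps a tile label to its viewing probability alpha_i.
    """
    remaining = max(predicted_mbps - used_inside_mbps, 0.0)
    total = sum(weights.values())
    picks = {}
    for tile, alpha in weights.items():
        share = remaining * alpha / total if total > 0 else 0.0
        affordable = [rate for rate in LADDER if rate <= share]
        # Assumed fallback: a tile is still fetched at the lowest quality.
        picks[tile] = max(affordable) if affordable else LADDER[0]
    return picks


# 10 Mbit/s predicted, 6.05 Mbit/s already committed to the in-viewport tile.
print(allocate_outside(10.0, 6.05, {"back": 0.1, "top": 0.3, "bottom": 0.6}))
# {'back': 0.18, 'top': 0.91, 'bottom': 0.91}
```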

6 Evaluation

6.1 Methodology

6.1.1 Experimental Setup

Our emulated DASH system consists of a server and a video player based on Dash.js (version 3.2), an open-source implementation of the DASH standard. The client video player runs in a Google Chrome browser. The throughput is computed in real time by the method described in Section 6.3. We modify the key classes related to adaptive streaming. First, we modify the media-player settings and add attributes that indicate the current chunk and tile serial numbers. ABR algorithms are implemented in the AbrController class, and the HTTP request records used for throughput measurement are collected from ThroughputHistory. We also modify the buffer thresholds of each player when the FOV is switched. For a tile in the viewport, the maximum buffer size is 10 s and the safety threshold is 6 s. For a tile outside the FOV, the two values are 4 s and 2 s, respectively. The purpose is to respond faster when the viewing angle is switched. We use the Angular.js framework to unify the front-end communication of the platform and to monitor system state information such as buffer occupancy, bitrates, rebuffering time, and the predicted and actual capacity. These values are also logged for the performance analysis.

6.1.2 Link Capacity Traces

To verify the effectiveness of the throughput predictor and the control optimization model on a single media player under realistic network conditions, we use link capacity traces from a public dataset that collects throughput measurements in 4G/LTE networks. The Belgium 4G/LTE dataset records the available bandwidth while downloading a large file in and around Ghent, Belgium. Figs. 7(a) and 7(b) show the running status of the single simulator's predictor and selector, respectively, on a 4G/LTE trace, which verifies the accuracy of the algorithm on one player.

▲Figure 7.Single simulator running status over Belgium 4G/Long Term Evolution (LTE) dataset

6.1.3 Video Parameters

We transform a 4K 360-degree panoramic video into six faces corresponding to a cube map for evaluation.Each video tile with 1 080 resolution is split into 123 chunks of 1 s and is encoded by VP9 codec in six bitrate versions,i.e.,{0.18,0.45,0.91,3.10,4.55,6.05} Mbit/s.The specific bitrate mapping to the same level among different video tiles varies with the video content richness;that is,a video about the sky is often simpler and has a lower bitrate than a portrait video.

6.1.4 Adaptation Algorithms

We evaluate CAMPC and the following widely adopted ABR algorithms and simple ABR rules for a 360-degree video:

·DASH.js.DYNAMIC dynamically switches between ThroughputRule and BolaRule, which select the bitrate based on the rate and on the buffer, respectively.

·DASH.js.LoL+ is based on the learning-based adaptive bitrate algorithm Low-on-Latency (LoL). It improves the adjustment of the weights of the self-organizing map (SOM) features, controls the playback speed, and takes latency and buffer levels into account.

·FOV is an adaptive bitrate algorithm based on the realtime viewport implemented by our experiment platform.The bandwidth allocation weight is calculated according to the angle deviation between each tile in the viewport and the center point of the FOV.

·FOVCONTENT is an adaptive bitrate algorithm based on the real-time viewport and content perception implemented by our experiment platform.It predicts the viewport based on the user’s current viewport and the content richness obtained from offline training,and the average throughput within a period is used for bit rate allocation.

6.2 Choice of CAMPC Parameters

In the bandwidth predictor, we set the initial system error variance P(Vi,0) to 7 Mbit/s and the process noise variance $W$ to 3 Mbit/s, which denotes that the prior estimate of the network bandwidth fluctuates by 3 Mbit/s. When the initial values are set, the system error variance must not be less than the process noise variance. A higher system error variance makes the predictor place more trust in the throughput measurements at the initial stage, resulting in a better fitting curve. The measurement noise is updated by $Q(k)=\alpha Q(k-1)+(1-\alpha)[v(k)-\hat{c}(k|k-1)]^2$ with $\alpha=0.8$. The initial value of the throughput estimate c(Vi,0) is set to 8 Mbit/s.
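To make the role of these parameters concrete, the following is a minimal sketch of a scalar Kalman-style throughput filter that uses the initial values above and the stated exponential update of the measurement noise. It is not the exact predictor of this paper: the random-walk state model, the initial measurement noise of 1.0, the choice to update the noise before computing the gain, and all names in the code are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class ThroughputPredictor:
    c: float = 8.0      # throughput estimate in Mbit/s (initial value from the text)
    P: float = 7.0      # system error variance (initial value from the text)
    W: float = 3.0      # process noise variance (from the text)
    Q: float = 1.0      # measurement noise; this initial value is an assumption
    alpha: float = 0.8  # smoothing factor of the measurement-noise update

    def update(self, v: float) -> float:
        """Fuse one throughput measurement v (Mbit/s) and return the new estimate."""
        # Prediction step under an assumed random-walk model: c(k|k-1) = c(k-1).
        c_pred = self.c
        P_pred = self.P + self.W
        # Adaptive measurement noise: Q(k) = a*Q(k-1) + (1-a)*(v(k) - c(k|k-1))^2.
        innovation = v - c_pred
        self.Q = self.alpha * self.Q + (1.0 - self.alpha) * innovation ** 2
        # Correction step with the Kalman gain.
        K = P_pred / (P_pred + self.Q)
        self.c = c_pred + K * innovation
        self.P = (1.0 - K) * P_pred
        return self.c
```

With a large initial error variance relative to the measurement noise, the gain is close to 1 in the first steps, so the estimate follows the early measurements closely; this matches the remark above about trusting the measurements at the initial stage.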

6.3 Real-Time Throughput Measurement

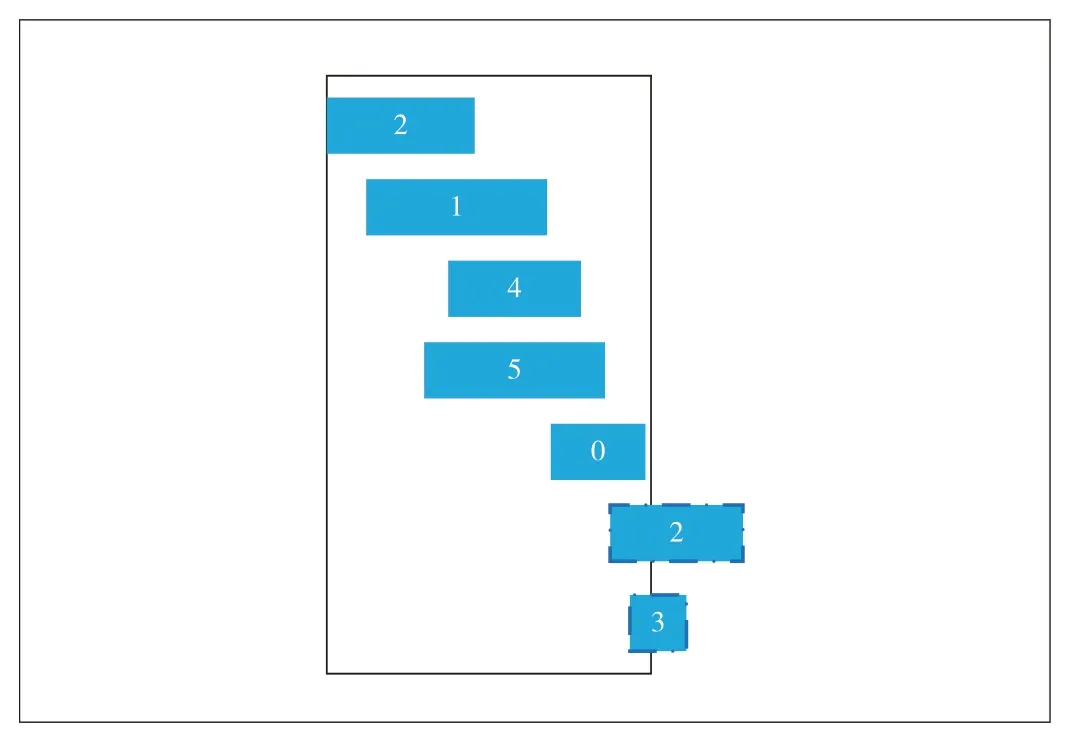

Unlike a single player, which can measure the throughput directly through the application programming interface (API) in DASH, multiple players share the link and transmit synchronously, so the throughput that any one player obtains from the API is inaccurate. According to DASH, at the end of the transmission of a chunk, the player can obtain the start timestamp, the end timestamp, and the amount of data transferred. Fig. 8 shows one possible transmission state of the video chunks.

The outermost black box represents a time slot, which is the smallest unit of our timed throughput measurement. The blue blocks in Fig. 8 indicate the downloading process of each player, and the value on a blue block indicates which player is downloading. Although the players request videos in the sequential order Vi,k, Vi,k+1, Vi,k+2, …, the order in which the players appear in the figure is random since each player is an independent download process. The blue blocks marked by dotted lines represent download processes that the players’ monitors cannot capture at the end of the time slot. Therefore, when calculating the throughput, the time should exclude the parts of a slot with no data transmission, and the total amount of data should include all the data that can be sensed.

Assuming that the start time of the slot is $t$ and the end time is $t+d$, to limit the impact of chunks that cannot be captured, we move the start and end timestamps earlier by a short period $d_{back}$. The actual start time of the time slot used for calculating the throughput is then $t-d_{back}$, and the actual end time is $t+d-d_{back}$.

The calculator iterates over the HTTP request list and checks whether the response start timestamp $req_k$ and finish timestamp $fin_k$ of each request satisfy $req_k<t+d-d_{back}$ and $t-d_{back}<fin_k$. If they do, at least part of the download process falls within the time slot and needs to be further judged and calculated:

·When both the start and end times of the request response are within the time slot, that is, $t-d_{back}<req_k$ and $fin_k\le t+d-d_{back}$, the total amount of downloaded data $D_k$ is added directly to the total amount of data downloaded in this time slot;

▲Figure 8.Time sequence of downloading process that may occur in multiple players

In addition, gaps in which no data is transmitted at the beginning, middle, or end of the slot must be excluded when they are caused by buffer control rather than by a link capacity of 0. During the process, we record the current minimum request-response start timestamp $req_{min}$, which means that there is no gap after this timestamp. If $fin_k$ is less than $req_{min}$, we subtract the gap time $req_{min}-fin_k$ from $d$. Finally, the throughput of the current time slot is obtained by dividing the total amount of data actually transmitted by the actual time interval.
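The slot-based measurement can be sketched in Python as follows. This is an illustrative sketch and not our player code. Two points are assumptions: requests that only partly overlap the slot are credited with a share of their data proportional to the overlap, and idle gaps are removed by merging the download intervals instead of tracking the minimum start timestamp. The names `Request` and `slot_throughput` are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Request:
    req: float   # response start timestamp in seconds
    fin: float   # response finish timestamp in seconds
    size: float  # amount of downloaded data, e.g., in Mbit


def slot_throughput(requests: List[Request], t: float, d: float,
                    d_back: float) -> Optional[float]:
    """Estimate the shared-link throughput for the slot [t - d_back, t + d - d_back]."""
    start, end = t - d_back, t + d - d_back
    total = 0.0
    intervals = []
    for r in requests:
        # Keep only requests that overlap the shifted slot.
        if not (r.req < end and start < r.fin):
            continue
        lo, hi = max(r.req, start), min(r.fin, end)
        if start < r.req and r.fin <= end:
            total += r.size  # fully inside the slot: count all of its data
        else:
            # Assumption: credit partly overlapping requests in proportion to the overlap.
            total += r.size * (hi - lo) / (r.fin - r.req)
        intervals.append((lo, hi))
    # Merge overlapping download intervals so that idle gaps are not counted as time.
    intervals.sort()
    active = 0.0
    cur_lo = cur_hi = None
    for lo, hi in intervals:
        if cur_hi is None or lo > cur_hi:
            if cur_hi is not None:
                active += cur_hi - cur_lo
            cur_lo, cur_hi = lo, hi
        else:
            cur_hi = max(cur_hi, hi)
    if cur_hi is not None:
        active += cur_hi - cur_lo
    return total / active if active > 0 else None
```

For example, with `t = 0.25`, `d = 1.0`, and `d_back = 0.25`, two requests that download 3 and 2 Mbit during 0.125–0.5 s and 0.75–1.0 s are active for 0.625 s in total, so the idle gap between them does not lower the estimate.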

6.4 Performance

We compare the performance of the ABR algorithms in a real network environment and in a weak network environment throttled with NetLimiter 4.

As shown in Fig. 9(a), momentary QoE fluctuations occur when the viewport changes. Because the two Dash.js rules treat every video player equally, their QoE fluctuation is minimal even when the viewport changes suddenly. Under the other rules, tiles outside the FOV are often transmitted at a lower bitrate to save bandwidth, resulting in severe QoE fluctuation (30–50 s) when the FOV suddenly changes to a player outside the original viewport. However, the CAMPC rule recovers to the highest achievable QoE and stabilizes in about 5 s, while the FOV and FOVContent rules need about 10 s to deal with this sudden change.

Fig. 9(b) shows the time-varying bandwidth saving rate compared with full video transmission. Although the CAMPC rule is slightly inferior to the LoL+ rule in QoE, it achieves a bandwidth saving rate of 83% for tiles outside the viewport. Transmission costs are reduced and congestion is eased when multiple users request the same video source. No rebuffering event occurred in the actual network environment.

In the actual network environment, the performance comparison of the ABR algorithms is shown in Table 3. The user QoE of the LoL+ rule can reach 100. Under the DYNAMIC rule, the initial bitrate can be reached in multiple tests, but the requested bitrate gradually decreases owing to the vicious circle of continuously requesting lower bitrates. The average QoE of the proposed CAMPC rule is maintained at 80. The FOV and FOVContent rules cannot respond to changes of the user viewport in time, resulting in a sudden drop in quality every time the user viewport changes. They also allocate the bitrate directly according to the weights, so the bandwidth within the user viewport is not fully used and the average QoE is low. The FOV and FOVContent rules have the highest bandwidth savings, reaching about 90%, and CAMPC achieves 83% bandwidth savings. If both bandwidth savings and QoE are given a weight of 0.5, CAMPC obtains the highest system utility of 81.9. The weights can be changed according to the relative importance of the cost and the QoE.
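As a rough check of this figure, assume that the system utility is the weighted sum of the average QoE and the bandwidth saving rate (in percent); the symbols $U$, $w$, and $S$ below are introduced only for this illustration. With the rounded values reported in this paper (QoE of about 80, bandwidth saving of about 83.5%):

$$U = w\cdot\mathrm{QoE} + (1-w)\cdot S \approx 0.5\times 80 + 0.5\times 83.5 = 81.75$$

This is close to the reported 81.9; the small difference would be consistent with rounding of the averaged QoE and saving rate, although the unrounded values are not restated here.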

We conducted the same test in a weak network environment (with the speed limited to 0.5 MB/s). The changes of the system utility metrics of each ABR rule over time follow the same trends as in the real network environment, but the average QoE is lower and less stable. The performance comparison of the ABR rules in the weak network environment is shown in Table 4, where the bandwidth saving rate of each rule is about 90%. The changes in rebuffering time are shown in Fig. 9(c). The FOV rule has no rebuffering, the FOVContent rule has a rebuffering time of less than 1 s, and the CAMPC algorithm comes next; all of these are below 2 s, i.e., less than 1.64% of the total video length. The LoL+ and DYNAMIC rules have relatively high rebuffering times, accounting for 4.73% and 6.64% of the total video length, respectively. The FOV rule has the highest system utility because the current rate limit of 0.5 MB/s happens to be just enough for the player with the highest weight in the FOV to request video chunks at quality level 6. If the network speed were lower than 0.5 MB/s, the FOV rule would suffer severe rebuffering. What is certain, however, is that the FOV rule has better system utility at around 0.5 MB/s. The CAMPC rule is better than the LoL+ and DYNAMIC rules in QoE, bandwidth saving rate, and rebuffering time, while its overall utility is slightly inferior to that of the FOV and FOVContent rules.

▲Figure 9.Changes in the system utility metrics of each advanced adaptive bitrate (ABR) rule over time

▼Table 3.Performance comparison of ABR algorithms in a real network environment

▼Table 4.Performance comparison of ABR algorithms in a weak network environment

7 Conclusions

Existing adaptive bitrate algorithms cannot provide smooth video quality for 360-degree video in highly dynamic networks because of uncertain viewport prediction and bitrate selection. To achieve good QoE, the algorithm proposed in this paper considers the future multi-step network status and combines the richness of the video content with the real-time user viewport to predict the future FOV, which effectively saves bandwidth resources. CAMPC uses a multi-step predictive control formulation that selects the bitrate by controlling the buffer occupancy and optimizing QoE metrics over the prediction horizon. The formulation can select the bitrate level with the highest QoE and high fault tolerance. With these two prediction algorithms and the control optimization, we have designed a content-aware ABR algorithm for 360-degree video. The algorithm is implemented on the DASH video player and evaluated over a real network. Experimental results show that CAMPC can save 83.5% of bandwidth resources compared with the scheme that completely transmits the tiles outside the viewport with the DASH protocol. Besides, the proposed method improves the system utility by 62.7% and 27.6% compared with the DASH official and viewport-based rules, respectively.
