Recognition of moyamoya disease and its hemorrhagic risk using deep learning algorithms: sourced from retrospective studies

Yu Lei, Xin Zhang, Wei Ni, Heng Yang, Jia-Bin Su, Bin Xu, Liang Chen, Jin-Hua Yu, Yu-Xiang Gu, Ying Mao

Abstract Although intracranial hemorrhage in moyamoya disease can occur repeatedly, predicting it is difficult. Deep learning algorithms developed in recent years offer a new way to identify hidden risk factors, evaluate the weights of different factors, and quantitatively estimate the risk of intracranial hemorrhage in moyamoya disease. To investigate whether convolutional neural network algorithms can be used to recognize moyamoya disease and predict hemorrhagic episodes, we retrospectively selected 460 adult patients with moyamoya vasculopathy (418 with moyamoya disease and 42 with moyamoya syndrome), whose affected hemispheres served as positive samples for diagnosis modeling. Another 500 hemispheres with normal vessel appearance were selected as negative samples. We used a deep residual neural network (ResNet-152) to extract features from raw digital subtraction angiography data of the internal carotid artery, and then trained and validated the model. The accuracy, sensitivity, and specificity of the model in identifying unilateral moyamoya vasculopathy were 97.64 ± 0.87%, 96.55 ± 3.44%, and 98.29 ± 0.98%, respectively, and the area under the receiver operating characteristic curve was 0.990. We then used a combined multi-view convolutional neural network algorithm to integrate age, sex, and hemorrhage risk factors with digital subtraction angiography features. The accuracy of this model in predicting unilateral hemorrhagic risk was 90.69 ± 1.58%, and its sensitivity and specificity were 94.12 ± 2.75% and 89.86 ± 3.64%, respectively. The proposed deep learning algorithms were valuable and might assist in the automatic diagnosis of moyamoya disease and the timely recognition of rebleeding risk. This study was approved by the Institutional Review Board of Huashan Hospital, Fudan University, China (approval No. 2014-278) on January 12, 2015.

Key Words: brain; central nervous system; deep learning; diagnosis; hemorrhage; machine learning; moyamoya disease; moyamoya syndrome; prediction; rebleeding

Introduction

Moyamoya disease (MMD) is a chronic cerebrovascular disease characterized by progressive stenosis and occlusion of the supraclinoid internal carotid artery (ICA) and its proximal branches, together with abnormal collateral vessels at the base of the brain, both of unknown etiology (Suzuki and Kodama, 1983; Su et al., 2019). The pathological angioarchitecture of MMD involves the ICA bilaterally, whereas patients with unilateral moyamoya vasculopathy are generally categorized as having quasi-MMD or moyamoya syndrome (Research Committee on the Pathology and Treatment of Spontaneous Occlusion of the Circle of Willis and Health Labour Sciences Research Grant for Research on Measures for Intractable Diseases, 2012). Nevertheless, the disease and the syndrome are often investigated together because they share clinical presentations and surgical strategies (Scott and Smith, 2009). Intracranial hemorrhage is a major clinical manifestation of moyamoya among adults, and morphological changes such as fragile moyamoya vessels and saccular aneurysms in the circle of Willis are thought to be its main causes (Kuroda and Houkin, 2008). Published studies indicate that untreated hemorrhagic MMD carries a high rebleeding rate (Kobayashi et al., 2000; Kang et al., 2019). Therefore, the risk factors for hemorrhage persist after the initial bleeding, and recognizing them is crucial for predicting future rebleeding events.

Recently, machine learning has been widely recognized as a powerful tool for discovering hidden information that may not be explicitly expressed. Commonly used algorithms in neuroscience include the support vector machine, Bayesian algorithms, and artificial neural networks (Lo et al., 2013; Fukuda et al., 2014; Wang, 2014). Models built on these algorithms often need manually labeled data based on human experience and recognition, so some crucial but unknown information may be omitted. The convolutional neural network (CNN) is a deep learning algorithm inspired by biological neuronal responses and designed to extract information automatically (Cireşan et al., 2013). The application of CNNs to image recognition and facial recognition is considered a landmark because of their high efficiency in object classification and detection (Ciregan et al., 2012; Krizhevsky et al., 2017). Additionally, CNNs have achieved state-of-the-art accuracy in joint prediction from multi-view images of three-dimensional shapes (Su et al., 2015).

In the present study, we first applied a pre-trained deep residual neural network (ResNet) to detect hemispheric moyamoya vasculopathy by learning the relevant features of the ICA on digital subtraction angiography (DSA) (He et al., 2016). Next, to detect hemorrhagic risk in moyamoya, we applied a combined multi-view CNN (MV-CNN-C) algorithm that integrated individual clinical characteristics with DSA features of all intracranial vessels in each hemisphere. Finally, the models were assessed through cross-validation.

Participants and Methods

Participants

The inclusion criteria were as follows: (1) age between 18 and 68 years; (2) diagnosis confirmed by DSA in accordance with published guidelines (Research Committee on the Pathology and Treatment of Spontaneous Occlusion of the Circle of Willis and Health Labour Sciences Research Grant for Research on Measures for Intractable Diseases, 2012); (3) no evidence of other cerebrovascular diseases, brain tumor, or brain trauma, and no history of neurosurgery. From January 2017 to September 2019, 460 eligible adult patients with moyamoya (418 with MMD and 42 with moyamoya syndrome) were retrospectively identified from the database of the Department of Neurosurgery, Huashan Hospital, Fudan University, China. The bilateral intracranial vessels of patients with MMD and the affected unilateral intracranial vessels of patients with moyamoya syndrome were collected as positive samples.

Additionally, 500 adult patients with unruptured unilateral intracranial aneurysms were selected from the hospital's database, and their contralateral intracranial vessels were used as negative samples. These 500 patients were included after applying the following exclusion criteria: (1) age over 68 years; (2) aneurysms located in the anterior communicating artery or posterior circulation, which may interfere with feature recognition; (3) evidence of any obvious abnormality in the hemisphere contralateral to the aneurysm.

All patients in our database were diagnosed independently by two senior neurosurgeons as a routine procedure. If consensus was not reached, the whole treatment team discussed the case and reached a final consensus. This study was conducted in accordance with the Declaration of Helsinki after approval by the Institutional Review Board of Huashan Hospital, Fudan University (approval No. 2014-278). All participants or their legal guardians provided informed consent.

Diagnosis modeling

Following the definition and diagnostic criteria (Research Committee on the Pathology and Treatment of Spontaneous Occlusion of the Circle of Willis and Health Labour Sciences Research Grant for Research on Measures for Intractable Diseases, 2012), we examined unilateral ICA angiography for moyamoya vasculopathy. Accordingly, the dynamic raw DSA data from one ICA were defined as one sample. For consistency with routine clinical practice and to simplify the algorithm, only anteroposterior views were used. In total, we collected 878 positive samples (bilateral hemispheres from the 418 patients with MMD and unilateral hemispheres from the 42 patients with moyamoya syndrome) and 500 negative samples.

First, all right-hemisphere samples were flipped horizontally to align with the left-hemisphere samples. Then, random crops and rotations were applied to the input images to improve robustness to small displacements and changes in orientation. The brightness, contrast, saturation, and hue of the images were also adjusted, with adjustment factors randomly selected from the interval [0.9, 1.1]. These transformations were applied before each epoch of model training.
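The alignment and photometric steps above can be sketched in plain Python. This is a minimal illustration, not the authors' pipeline: it assumes grayscale images stored as lists of rows with intensities in [0, 1], and the helper names (`flip_horizontal`, `sample_jitter_factors`, `adjust_brightness`, `adjust_contrast`) are hypothetical. Random cropping and rotation are omitted, and saturation and hue factors are only sampled, because they have no effect on a single-channel image. For comparison, torchvision's `ColorJitter(brightness=0.1, contrast=0.1, saturation=0.1)` samples those three factors from [0.9, 1.1], whereas its `hue` argument is an additive shift rather than a multiplicative factor.

```python
import random

def flip_horizontal(image):
    """Mirror an image left-right (e.g., to align a right hemisphere with the left)."""
    return [list(reversed(row)) for row in image]

def sample_jitter_factors(rng, low=0.9, high=1.1):
    """Draw one multiplicative factor per photometric property from [low, high]."""
    return {name: rng.uniform(low, high)
            for name in ("brightness", "contrast", "saturation", "hue")}

def _clip(value):
    """Keep intensities inside the assumed [0, 1] range."""
    return min(1.0, max(0.0, value))

def adjust_brightness(image, factor):
    """Scale every pixel by the brightness factor."""
    return [[_clip(px * factor) for px in row] for row in image]

def adjust_contrast(image, factor):
    """Move each pixel toward or away from the image mean by the contrast factor."""
    pixels = [px for row in image for px in row]
    mean = sum(pixels) / len(pixels)
    return [[_clip(mean + factor * (px - mean)) for px in row] for row in image]

# Example: one augmentation pass for a toy right-hemisphere image.
rng = random.Random(0)
right_image = [[0.1, 0.5, 0.9], [0.2, 0.6, 1.0]]
factors = sample_jitter_factors(rng)
augmented = adjust_contrast(
    adjust_brightness(flip_horizontal(right_image), factors["brightness"]),
    factors["contrast"],
)
```

Re-sampling the factors on every pass mirrors the per-epoch application described in the text, so the network rarely sees the exact same image twice.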

A deep ResNet-152 model (CVPR 2016, Las Vegas, NV, USA) was initialized with weights pre-trained on ImageNet (CVPR 2009, Miami, FL, USA). The model was fine-tuned for 30 epochs with a minibatch size of 32. The initial learning rate was 0.001 and was decayed by a factor of 0.1 every seven epochs. A five-fold cross-validation strategy was applied to reduce sampling bias: the samples were divided into five sets with equal positive/negative ratios, four sets were used for training, and the remaining set was used for validation. This procedure was repeated five times until every set had been used once for validation, and the final result was the mean of the five individual validations. The model was then evaluated by calculating its sensitivity and specificity, as well as the area under the receiver operating characteristic (ROC) curve.
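The training schedule and fold construction described above can be sketched as follows. This is an illustrative sketch, not the authors' code: it interprets the learning-rate decay as a step schedule (multiply by 0.1 every seven epochs), which in PyTorch corresponds to `torch.optim.lr_scheduler.StepLR(optimizer, step_size=7, gamma=0.1)`, and the helpers `step_lr`, `stratified_k_fold`, and `cross_validation_splits` are hypothetical names. Folds are built by dealing the shuffled indices of each class round-robin, which keeps the positive/negative ratio nearly equal across folds.

```python
import random

def step_lr(epoch, base_lr=0.001, gamma=0.1, step_size=7):
    """Learning rate for a 0-indexed epoch: base_lr * gamma ** (epoch // step_size)."""
    return base_lr * gamma ** (epoch // step_size)

def stratified_k_fold(labels, k=5, seed=0):
    """Split sample indices into k folds with (nearly) equal class ratios."""
    rng = random.Random(seed)
    folds = [[] for _ in range(k)]
    for cls in sorted(set(labels)):
        idx = [i for i, y in enumerate(labels) if y == cls]
        rng.shuffle(idx)
        for j, i in enumerate(idx):
            folds[j % k].append(i)  # deal each class round-robin across folds
    return folds

def cross_validation_splits(labels, k=5, seed=0):
    """Yield (train, validation) index lists; each fold validates exactly once."""
    folds = stratified_k_fold(labels, k, seed)
    for v in range(k):
        train = [i for f, fold in enumerate(folds) if f != v for i in fold]
        yield train, folds[v]

# Example with the diagnosis dataset sizes: 878 positive and 500 negative samples.
labels = [1] * 878 + [0] * 500
schedule = [step_lr(epoch) for epoch in range(30)]  # 30 fine-tuning epochs
splits = list(cross_validation_splits(labels))
```

Each validation fold then holds 100 negatives and 175 or 176 positives. Note that this split works per sample; keeping both hemispheres of a patient in the same fold would require grouping by patient.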

Hemorrhagic-risk modeling

Dataset construction

The natural history of hemorrhagic MMD indicates that rebleeding episodes frequently occur in the originally affected hemisphere, although at different sites (Houkin et al., 1996; Saeki et al., 1997; Ryan et al., 2012; Kang et al., 2019). Thus, hemispheres with prior bleeding remain at high risk of future bleeding, which might not be attributable to a single feature; we therefore aimed to learn the combined features of these hemorrhagic hemispheres. In total, we obtained 126 positive samples with prior bleeding episodes (ipsilateral hemispheres from 118 patients with MMD and 8 patients with moyamoya syndrome) and 634 negative samples without any history of intracranial bleeding (bilateral hemispheres from 300 patients with MMD and the affected hemispheres from 34 patients with moyamoya syndrome). The contralateral hemispheres of patients with hemorrhagic MMD were excluded because their involvement in some intraventricular hemorrhage episodes was unclear, making their group assignment ambiguous.

For each sample, demographic characteristics (age and gender) and risk factors (hypertension, smoking, and drinking) were collected for modeling, together with dynamic raw DSA data of the ICA, external carotid artery, and vertebrobasilar artery in both anteroposterior and lateral views. All temporal DSA images of the same artery and view were then integrated into one combined image, and all combined images of the same hemisphere were stored together.

Development of the CNN algorithms

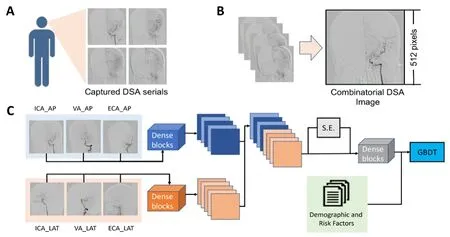

We proposed the MV-CNN to extract image features; its architecture is shown in Figure 1. Feature maps of the input images were extracted by two feed-forward densely connected convolutional blocks (Huang et al., 2017). Each dense block comprised five convolutional layers, each connected to all subsequent layers in a feed-forward manner to reduce information loss and vanishing gradients. Each input image generated 256 feature maps, whose aggregation was learnable (Su et al., 2015). Instead of direct max-pooling, all feature maps were jointly reweighted by a corresponding adaptive importance vector, which was learned from the feature maps by a two-layer squeeze-and-excitation (SE) block (Hu et al., 2018). The reweighted feature maps were encoded by two shared dense blocks to generate the final image feature vector $X \in \mathbb{R}^{4416 \times 1}$. The feature vector $X$ was followed by a fully connected layer with Softmax activation, which output a risk prediction $R = \sigma(\beta^{T} X)$, where $\beta \in \mathbb{R}^{4416 \times 2}$ was the weight matrix of the fully connected layer and $\sigma$ was the Softmax activation function. To provide a loss function for backpropagation, the risks were passed to a cross-entropy layer that calculated the weighted negative log likelihood $\mathcal{L}(\beta, X, Y) = -\sum_{i} \alpha_t y_i \log \sigma(\beta^{T} x_i)$, where $x_i$ and $y_i$ were the image feature vector and its corresponding risk annotation. Specifically, we introduced a weighting factor $\alpha_t$ set to the normalized inverse class frequency, which was 634/(126 + 634) for positive samples and 126/(126 + 634) for negative samples, so that positive and negative samples contributed equally to the total loss. Stochastic gradient descent with momentum was used to minimize the negative log likelihood via backpropagation, optimizing the model weights and biases. The momentum was set to 0.9 and the initial learning rate to 0.01, with an exponential decay factor of 0.1.
The feature extraction layers for each input image were initialized with ImageNet pre-trained weights, whereas the subsequent dense blocks were randomly initialized (Glorot and Bengio, 2010; Lo et al., 2013). Models were trained for 200 epochs with a minibatch size of four. Batch normalization and dropout were used to mitigate overfitting (Ciregan et al., 2012; Ioffe and Szegedy, 2015).
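The SE reweighting, the class-weighted loss, and the initialization of the newly added layers can be illustrated with a toy, dependency-free sketch. It is not the authors' implementation: channels are flat lists, the excitation weights `w1` and `w2` are supplied by hand rather than learned, logits stand in for $\beta^{T}x_i$, and for the layers initialized following Glorot and Bengio (2010) the uniform Xavier variant is assumed, since the text does not say which variant was used. All function names are hypothetical. In PyTorch the same pieces would typically be `torch.nn.CrossEntropyLoss(weight=...)` (whose default `'mean'` reduction also divides by the summed weights) and `torch.nn.init.xavier_uniform_`.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def squeeze_excite(feature_maps, w1, w2):
    """Reweight C channels by an importance vector from a two-layer SE block.

    feature_maps: C channels, each a flat list of activations.
    w1: (C // r) x C weights (reduction layer); w2: C x (C // r) weights (expansion layer).
    """
    z = [sum(ch) / len(ch) for ch in feature_maps]                    # squeeze: global average pool
    h = [max(0.0, sum(w * v for w, v in zip(row, z))) for row in w1]  # FC + ReLU
    s = [sigmoid(sum(w * v for w, v in zip(row, h))) for row in w2]   # FC + sigmoid -> (0, 1)
    return [[s_c * a for a in ch] for s_c, ch in zip(s, feature_maps)], s

def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def weighted_nll(logits_batch, labels, alpha):
    """Class-weighted cross-entropy: -sum_i alpha[y_i] * log(softmax(logits_i)[y_i])."""
    return -sum(alpha[y] * math.log(softmax(z)[y]) for z, y in zip(logits_batch, labels))

def glorot_uniform(fan_in, fan_out, rng):
    """fan_out x fan_in weights drawn from U(-a, a), a = sqrt(6 / (fan_in + fan_out))."""
    a = math.sqrt(6.0 / (fan_in + fan_out))
    return [[rng.uniform(-a, a) for _ in range(fan_in)] for _ in range(fan_out)]

# Weights from the dataset sizes in the text (label 1 = prior bleeding, 0 = no bleeding).
n_pos, n_neg = 126, 634
alpha = {1: n_neg / (n_pos + n_neg), 0: n_pos / (n_pos + n_neg)}
# Each class now carries the same total weight: 126 * 634/760 == 634 * 126/760.

# Toy stand-in for a randomly initialized 4416 -> 2 layer.
fc_weights = glorot_uniform(4416, 2, random.Random(0))
```

Because the sigmoid keeps every channel weight in (0, 1), the SE block can only attenuate channels relative to one another, which is what makes it a learned alternative to plain max-pooling.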

Figure 1| Methodological process of DSA feature extraction (A, B) and the architecture of the combined deep multi-view convolutional neural network (C).

Afterward, the gradient-boosting decision-tree method was used to integrate image and clinical features, yielding the MV-CNN-C algorithm (“C” for combined; Figure 1). As a multivariate tree-boosting method, the gradient-boosting decision tree is often used to integrate and analyze multiple factors. It produces its prediction from a linear ensemble of decision trees, each fitted iteratively to reduce the training residuals of the current ensemble, which allows discriminative feature combinations to be captured efficiently. To normalize the features, the aggregated feature vectors were Z-normalized, and the major components of both the clinical and image features were extracted via singular value decomposition.
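The normalization and component-extraction steps can be sketched without external libraries. This is an assumption-laden illustration: it standardizes each column with the population standard deviation and recovers only the leading right singular vector by power iteration on $X^{T}X$, whereas a practical pipeline would more likely call `numpy.linalg.svd` and keep several components before passing the fused features to a gradient-boosting library (for example, scikit-learn's `GradientBoostingClassifier`). The text does not name the library used, and `z_normalize`, `top_right_singular_vector`, and `project` are hypothetical helpers.

```python
import math

def z_normalize(rows):
    """Standardize each column of a feature matrix (list of rows) to zero mean, unit variance."""
    n, d = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    # A constant column has std 0; fall back to 1.0 so it maps to zeros instead of dividing by 0.
    stds = [math.sqrt(sum((r[j] - means[j]) ** 2 for r in rows) / n) or 1.0 for j in range(d)]
    return [[(r[j] - means[j]) / stds[j] for j in range(d)] for r in rows]

def top_right_singular_vector(rows, iters=100):
    """Leading right singular vector of X via power iteration on X^T X.

    Assumes the all-ones start vector is not orthogonal to the leading singular vector.
    """
    d = len(rows[0])
    v = [1.0] * d
    for _ in range(iters):
        xv = [sum(a * b for a, b in zip(r, v)) for r in rows]            # X v
        w = [sum(r[j] * c for r, c in zip(rows, xv)) for j in range(d)]  # X^T (X v)
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    return v

def project(rows, v):
    """Score of each sample on component v (one compressed feature per sample)."""
    return [sum(a * b for a, b in zip(r, v)) for r in rows]

# Toy example: two perfectly correlated features collapse onto one component.
features = [[1.0, 2.0], [2.0, 4.0], [3.0, 6.0]]
z = z_normalize(features)
v = top_right_singular_vector(z)
scores = project(z, v)
```

Z-normalizing first matters for the decomposition: without it, features on larger numeric scales (for example, age in years versus a binary smoking flag) would dominate the leading components.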

We compared the performance of the MV-CNN-C with that of the MV-CNN to evaluate the contribution of the clinical features. Two other baseline CNN models (vanilla CNN and MV-CNN-NA) were also constructed and compared. The vanilla CNN architecture is similar to that of DenseNet, except that the number of nodes in the last fully connected layer is changed to three and the input is the concatenation of all input images along the width axis (Huang et al., 2017). The MV-CNN-NA architecture is the same as that of the MV-CNN, except that the separate feature vectors from each input image are combined directly by concatenation instead of by the squeeze-and-excitation block.
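The two baselines differ mainly in where concatenation happens, which a short sketch makes concrete. The helpers `concat_width` and `concat_features` are hypothetical and operate on plain lists. In PyTorch, the vanilla-CNN input would correspond to `torch.cat(images, dim=-1)` on tensors laid out as (N, C, H, W), and the MV-CNN-NA fusion to concatenating per-view feature vectors with `torch.cat(..., dim=1)`.

```python
def concat_width(images):
    """Vanilla-CNN input: place same-height 2D images side by side along the width axis."""
    height = len(images[0])
    if any(len(img) != height for img in images):
        raise ValueError("all images must share the same height")
    return [[px for img in images for px in img[r]] for r in range(height)]

def concat_features(vectors):
    """MV-CNN-NA fusion: join per-view feature vectors directly, with no learned reweighting."""
    return [x for v in vectors for x in v]

# Example: two 2-row "views" fused before the network vs. two feature vectors fused after it.
wide_input = concat_width([[[1, 2], [3, 4]], [[5], [6]]])  # [[1, 2, 5], [3, 4, 6]]
fused = concat_features([[0.1, 0.2], [0.3]])               # [0.1, 0.2, 0.3]
```

Fusing at the input forces one set of filters to process every view jointly, whereas fusing feature vectors lets each view be encoded first; the SE block in the MV-CNN adds learned weighting on top of the latter.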

Model training and validation

Samples were randomly assigned to nonoverlapping training (80%) and validation (20%) sets. Both hemispheres of each patient with bilateral MMD samples were assigned together to either the training or the validation set. This ensured that no patient contributed data to both the training and validation sets, avoiding data leakage and overly optimistic estimates of generalization accuracy. The randomized assignment was repeated five times until all sets had been used for validation, and the final validation result was the mean of the five individual validations.
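Patient-level assignment can be sketched by shuffling patients, rather than hemispheres, into five folds, so that each fold serves once as the roughly 20% validation set. This is an illustrative sketch under stated assumptions: each sample carries a patient identifier, folds are filled round-robin over shuffled patients (so fold sizes can differ slightly when some patients contribute two hemispheres), and `grouped_k_fold` is a hypothetical helper. scikit-learn's `GroupKFold` offers comparable behavior.

```python
import random

def grouped_k_fold(patient_ids, k=5, seed=0):
    """Assign sample indices to k folds so that all hemispheres of one patient share a fold."""
    patients = sorted(set(patient_ids))
    random.Random(seed).shuffle(patients)
    fold_of = {p: i % k for i, p in enumerate(patients)}  # round-robin over shuffled patients
    folds = [[] for _ in range(k)]
    for idx, p in enumerate(patient_ids):
        folds[fold_of[p]].append(idx)
    return folds

# Example: bilateral MMD patients contribute two samples, moyamoya syndrome patients one.
sample_patients = ["mmd1", "mmd1", "mmd2", "mmd2", "mms1", "mmd3", "mmd3", "mms2", "mms3"]
folds = grouped_k_fold(sample_patients)
```

Because folds are keyed on the patient rather than the sample, a model can never be validated on a hemisphere whose contralateral twin it saw during training.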

Statistical analysis

The models were trained using PyTorch 0.4.0 (https://pytorch.org/previous-versions/) under Python 3.5 (https://www.python.org/downloads/release/python-350/) on servers equipped with Intel(R) Core(TM) i7-6800K CPUs @ 3.40 GHz, 64 GB RAM, and dual NVIDIA GTX 1080Ti graphics cards. DSA data were acquired with Philips and GE X-ray systems at Huashan Hospital, Fudan University (Philips UNIQ Clarity FD20/20, Philips, Amsterdam, Netherlands; GE Innova IGS 630, GE, Boston, MA, USA).

Results

Diagnosis modeling of MMD

The clinical and image characteristics of all participants are summarized in Table 1. Patients with MMD, moyamoya syndrome, and intracranial aneurysm did not differ significantly in age, gender, or current smoking or drinking status (P > 0.05), but differed significantly in hypertension (P < 0.001). Most patients with MMD had Suzuki stage III or IV disease, a distribution similar to that of patients with moyamoya syndrome (P > 0.05). Additionally, hemorrhage rates did not differ significantly between MMD and moyamoya syndrome (P > 0.05).

The DSA image features extracted by the ResNet-152 model for one sample are shown in Figure 2. After training and validation were repeated five times, the average accuracy of the proposed method was 97.64 ± 0.87%, with a sensitivity and specificity of 96.55 ± 3.44% and 98.29 ± 0.98%, respectively. The model was also evaluated with an ROC curve, and the area under the ROC curve reached 0.990 (Figure 3).
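The reported metrics follow standard definitions, which the sketch below spells out. It is illustrative only: `confusion_metrics`, `roc_auc`, and `mean_sd` are hypothetical helpers, the AUC is computed through its rank interpretation (the probability that a randomly chosen positive scores higher than a randomly chosen negative), and the text does not state whether the ± values are sample or population standard deviations, so the sample version is assumed.

```python
import statistics

def confusion_metrics(y_true, y_pred):
    """Accuracy, sensitivity (true-positive rate), and specificity (true-negative rate); 1 = positive."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return {
        "accuracy": (tp + tn) / len(pairs),
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
    }

def roc_auc(y_true, scores):
    """Area under the ROC curve via pairwise ranking; ties count as half a win."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

def mean_sd(per_fold_values):
    """Summarize per-fold results as (mean, sample standard deviation)."""
    return statistics.mean(per_fold_values), statistics.stdev(per_fold_values)

# Toy example with four validation samples.
metrics = confusion_metrics([1, 1, 0, 0], [1, 0, 0, 0])  # accuracy 0.75, sensitivity 0.5, specificity 1.0
auc = roc_auc([1, 1, 0, 0], [0.9, 0.4, 0.5, 0.1])          # 3 of 4 positive-negative pairs ranked correctly
```

Computing each metric per fold and then applying `mean_sd` reproduces the "mean ± SD over five validations" format used in the results.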

Hemorrhagic risk modeling of MMD

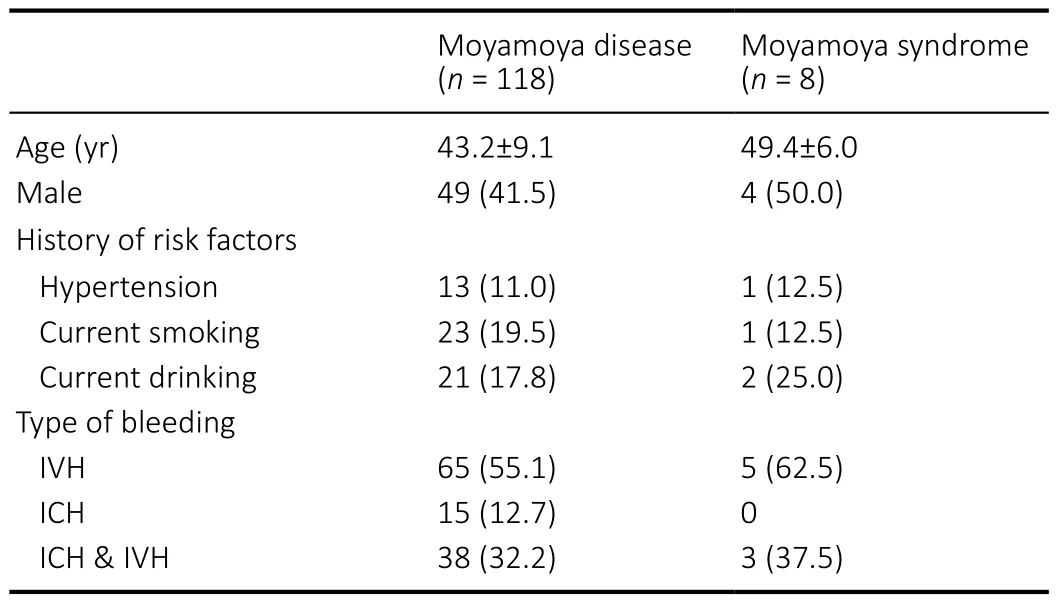

The baseline characteristics of adult patients with moyamoya and a prior bleeding episode are shown in Table 2. To determine whether our model had any advantage, we compared the performance of the MV-CNN-C algorithm with that of the MV-CNN, vanilla CNN, and MV-CNN-NA (Figure 4). The MV-CNN-C reached the highest mean classification accuracy and precision, suggesting that the gradient-boosting decision-tree algorithm (vs. the MV-CNN), the SE block (vs. the MV-CNN-NA), and the two-way input structure (vs. the vanilla CNN) all contributed to its improved performance.

As an example, deep features extracted from the fully connected layer of the MV-CNN-C for 16 positive and 16 negative samples were reshaped into 64 × 69 matrices, which revealed obvious differences in features between the two groups (Figure 5). After training and validation were repeated five times, the mean accuracy of the proposed method was 90.69 ± 1.58%, and the sensitivity and specificity were 94.12 ± 2.75% and 89.86 ± 3.64%, respectively.
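The matrix size follows from the feature dimension given in the MV-CNN description: the 4416-dimensional vector factors exactly as 64 × 69 = 4416, so each sample's features fill one such matrix. The sketch below assumes a row-major reshape (the text does not specify the ordering), and `to_matrix` is a hypothetical helper.

```python
def to_matrix(vector, n_rows=64, n_cols=69):
    """Reshape a flat feature vector row-major into an n_rows x n_cols matrix."""
    if len(vector) != n_rows * n_cols:
        raise ValueError(f"expected {n_rows * n_cols} values, got {len(vector)}")
    return [vector[r * n_cols:(r + 1) * n_cols] for r in range(n_rows)]

# Example: a stand-in 4416-dimensional feature vector (values are just their indices).
matrix = to_matrix(list(range(4416)))
```

The reshape changes only the layout for display; no values are added or dropped, so differences visible between the positive and negative matrices reflect differences in the learned features themselves.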

Discussion

Here, we proposed a series of deep CNN algorithms as a reliable, automatic, and objective tool for detecting moyamoya disease/syndrome and evaluating the clinical risk of hemorrhage. We developed a ResNet-152 model to extract image features related to moyamoya, which improved diagnostic efficacy and automation and laid a foundation for detecting hemorrhagic risk. An MV-CNN-C model was then proposed to integrate clinical and image features into a hemorrhagic-risk classifier. Finally, the classifier was evaluated using a cross-validation strategy.

Regarding the natural history of hemorrhagic MMD, Kang et al. (2019) reported that rebleeding occurred in 36.7% of patients who received conservative treatment, and Morioka et al. (2003b) reported a rebleeding rate of 61.1% in another hemorrhagic MMD cohort. Therefore, hemorrhagic risk remains high after an initial bleeding episode and should be recognized and prevented. Furthermore, rebleeding sites have been reported to differ from the initial site but are often on the same side (Houkin et al., 1996; Saeki et al., 1997; Kuroda and Houkin, 2008; Kang et al., 2019). Thus, we conclude that the hemisphere in which bleeding initially occurs remains at high risk of future rebleeding, and that this risk might not be attributable to a single risk factor.

Table 1 | Clinical and image characteristics of the participants

Table 2 | Baseline characteristics of adult patients with moyamoya and prior bleeding episodes

Of all the clinical characteristics reported in studies of hemorrhagic MMD, smoking is the only one that has been related to rebleeding, whereas hypertension has been found to be unrelated (Yoshida et al., 1999; Morioka et al., 2003a; Kang et al., 2019). Nevertheless, we included all of these clinical factors to avoid missing any relevant features. Previous studies have identified several angiographic morphological features, including fragile moyamoya vessels (Kuroda and Houkin, 2008), branch extension of the anterior choroidal artery-posterior communicating artery (Morioka et al., 2003a; Jiang et al., 2014), and cerebral aneurysms arising from shifted circulation (Kawaguchi et al., 1996). Although these features are considered important references for clinicians, their use is limited and controversial. For example, fragile moyamoya vessels and some circulation-related aneurysms may gradually disappear as the disease progresses to higher Suzuki stages, so it remains unclear whether hemorrhagic risk increases as the disease worsens. For branch extension of the anterior choroidal artery-posterior communicating artery, the vessel diameters would need to be measured and correlated with hemorrhagic risk. Additionally, most of the aforementioned studies relied on univariate analysis of single factors and produced contradictory results that are difficult to apply in clinical practice. Thus, all of these features should be considered simultaneously, quantified, and weighted to generate a practical risk recognition model, which is difficult to achieve based on current clinical experience alone.

Figure 2| Example of image feature extraction from the digital subtraction angiography.

Figure 3| The ROC curve of the moyamoya disease diagnosis model constructed with the ResNet-152 algorithm.

Figure 4| Classification accuracy (A) and precision (B) of the MV-CNN, MV-CNN-C, MV-CNN-NA, and vanilla CNN models.

Figure 5 | The 64 × 69 matrices of 16 randomly selected positive samples with prior bleeding episodes (A) and 16 negative samples without prior bleeding episodes (B).

Compared with other intelligent models, the CNN-based deep learning model has several advantages. First, the CNN learning paradigm differs from the feature engineering paradigm in that the predictive features are adaptively learned from the input images instead of being manually designed and extracted, which relieves clinicians of the burden of manual input and saves time. Second, the network is composed of non-linear transformations and learnable filter kernels that summarize high-level semantic features from low-level morphological textures. Rather than relying on prior knowledge, which may contain hidden errors, it derives knowledge directly from the medical image data. Finally, elements of the structure, such as the number and size of filters, the types of convolutional layers and blocks, the loss functions, the hyper-parameters, and even the number of input pathways, can be customized for classification, detection, segmentation, or other artificial intelligence tasks. This makes the CNN model much more flexible than traditional models.

In the first stage, the ResNet model we used is built from residual units, each consisting of a primary path of several convolutional layers and a shortcut path that adds the unit's input directly to its output. This residual structure helps counter vanishing and exploding gradients, allowing much deeper networks to be trained and yielding better performance than earlier deep learning networks on ImageNet (Deng et al., 2009). In the second stage, the MV-CNN-C model we developed can simultaneously extract a large amount of information from multiple views and data sources, making it suitable for this application.
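The residual unit described above can be written compactly, following the standard formulation of He et al. (2016); the symbols ($\mathcal{F}$ for the convolutional path, $W_i$ for its weights, $\mathcal{L}$ for the training loss, $I$ for the identity matrix) are introduced here for illustration. The identity term in the gradient shows why the shortcut helps: even when $\partial\mathcal{F}/\partial\mathbf{x}$ is small, the loss gradient still reaches $\mathbf{x}$ directly.

$$
\mathbf{y} = \mathcal{F}(\mathbf{x}, \{W_i\}) + \mathbf{x},
\qquad
\frac{\partial \mathcal{L}}{\partial \mathbf{x}}
= \frac{\partial \mathcal{L}}{\partial \mathbf{y}}
\left( \frac{\partial \mathcal{F}}{\partial \mathbf{x}} + I \right)
$$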

This study had several limitations. First, because developing and stabilizing deep learning models requires large amounts of data, the models in this study need to be improved with a larger training set. Second, validation on an independent testing set would provide more convincing results. Third, features extracted by deep learning algorithms are difficult to interpret in terms of medical significance, and further collaboration between clinicians and engineers is needed to make them clinically meaningful.

In summary, the proposed deep learning algorithms appear valuable and could assist in the automatic diagnosis of MMD and the timely recognition of rebleeding risk. We are establishing a national database through an ongoing multi-center study of MMD (A Multi-Center Registry Study of Chinese Adult Moyamoya Disease) to help build a better deep learning model.

Acknowledgments: We thank Shanghai Zhong’an Technology for their equipment support.

Author contributions: Data collection: WN, BX, YXG; methodology: JHY; original draft writing: YL, XZ; analysis: HY, JBS; manuscript review & editing: LC, YXG, YM. All authors read and approved the final manuscript.

Conflicts of interest: There is no conflict of interest.

Financial support: This study was supported by the National Natural Science Foundation of China, Nos. 81801155 (to YL), 81771237 (to YXG); the New Technology Projects of Shanghai Science and Technology Innovation Action Plan, China, No. 18511102800 (to YXG); the Shanghai Municipal Science and Technology Major Project and ZJLab, China, No. 2018SHZDZX01 (to YM); and the Shanghai Health and Family Planning Commission, China, No. 2017BR022 (to YXG). The funding sources had no role in study conception and design, data analysis or interpretation, paper writing, or the decision to submit this paper for publication.

Institutional review board statement: This study was approved by the Institutional Review Board of Huashan Hospital, Fudan University, China (approval No. 2014-278) on January 12, 2015. All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional and/or national research committee and with the 1964 Helsinki Declaration and its later amendments or comparable ethical standards.

Declaration of participant consent: The authors certify that they have obtained consent forms from the participants or their legal guardians. In the forms, the participants or their legal guardians have given consent for the participant images and other clinical information to be reported in the journal. The participants or their legal guardians understand that the participants’ names and initials will not be published and due efforts will be made to conceal their identity.

Reporting statement: This study followed the STAndards for Reporting Diagnostic accuracy studies (STARD) guidance.

Biostatistics statement: The statistical methods of this study were reviewed by the biostatistician of Huashan Hospital, Fudan University, China.

Copyright license agreement: The Copyright License Agreement has been signed by all authors before publication.

Data sharing statement: Datasets analyzed during the current study are available from the corresponding author on reasonable request.

Plagiarism check: Checked twice by iThenticate.

Peer review: Externally peer reviewed.

Open access statement: This is an open access journal, and articles are distributed under the terms of the Creative Commons Attribution-NonCommercial-ShareAlike 4.0 License, which allows others to remix, tweak, and build upon the work non-commercially, as long as appropriate credit is given and the new creations are licensed under the identical terms.
