Deep learning for diagnosis of precancerous lesions in upper gastrointestinal endoscopy: A review

Tao Yan, Pak Kin Wong, Ye-Ying Qin

Abstract Upper gastrointestinal (GI) cancers are the leading cause of cancer-related deaths worldwide. Early identification of precancerous lesions has been shown to minimize the incidence of GI cancers and substantiate the vital role of screening endoscopy. However, unlike GI cancers, precancerous lesions in the upper GI tract can be subtle and difficult to detect. Artificial intelligence techniques, especially deep learning algorithms with convolutional neural networks, might help endoscopists identify the precancerous lesions and reduce interobserver variability. In this review, a systematic literature search was undertaken of the Web of Science, PubMed, Cochrane Library and Embase, with an emphasis on the deep learning-based diagnosis of precancerous lesions in the upper GI tract. The status of deep learning algorithms in upper GI precancerous lesions has been systematically summarized. The challenges and recommendations targeting this field are comprehensively analyzed for future research.

Key Words: Artificial intelligence; Deep learning; Convolutional neural network; Precancerous lesions; Endoscopy

INTRODUCTION

Upper gastrointestinal (GI) cancers, mainly including gastric cancer (8.2% of total cancer deaths) and esophageal cancer (5.3% of total cancer deaths), are the leading cause of cancer-related deaths worldwide[1]. Previous studies have shown that upper GI cancers always go through the stages of precancerous lesions, which can be defined as common conditions associated with a higher risk of developing cancers over time[2-4]. The detection of the precancerous lesions before cancer occurs could significantly reduce morbidity and mortality rates[5,6]. Currently, the main approach for the diagnosis of disorders of the upper GI tract is endoscopy[7,8]. Compared with GI cancers, which usually show typical morphological characteristics, the precancerous lesions often appear in flat mucosa and exhibit few morphological changes. Manual screening through endoscopy is labor-intensive, time-consuming and relies heavily on clinical experience. Computer-assisted diagnosis based on artificial intelligence (AI) can help overcome these difficulties.

Over the past few decades, AI techniques such as machine learning (ML) and deep learning (DL) have been widely used in endoscopic imaging to improve the diagnostic accuracy and efficiency of various GI lesions[9-13]. The exact definitions of AI, ML and DL can be misunderstood by physicians. AI, ML and DL are overlapping disciplines (Figure 1). AI is the broadest category and encompasses ML and DL; it describes computerized solutions to problems of human cognition, as defined by McCarthy in 1956[14]. ML is a subset of AI in which algorithms can execute complex tasks, but it needs handcrafted feature extraction. ML originated around the 1980s and focuses on patterns and inference[15]. DL is a subset of ML and became feasible in the 2010s; it is focused specifically on deep neural networks. A convolutional neural network (CNN) is the primary DL algorithm for image processing[16,17].

The diagnostic process of an AI model is similar to that of the human brain. We take our previous research as an example to illustrate the diagnostic process of ML, DL and human experts. When an input image with gastric intestinal metaplasia (GIM), a precancerous lesion of gastric cancer, is fed into the ML system, it usually needs a manual feature extraction step, and the handcrafted features are unable to discern slight variations in the endoscopic image (Figure 2)[15]. Unlike conventional ML algorithms, CNNs can automatically learn representative features from the endoscopic images[17]. When we apply a CNN model to detect GIM, it performs better than conventional ML models and is comparable to experienced endoscopists[18]. For a broad variety of image processing activities in endoscopy, CNNs also show excellent results, and some CNN-based algorithms have been used in clinical practice[19-21]. However, DL, especially CNN, has some limitations. First, DL requires a large amount of data and is prone to overfitting. Second, the diagnostic accuracy of DL relies on the training data, but the clinical data of different types of diseases are always imbalanced, which easily causes diagnosis bias. In addition, a DL model is complex and requires substantial computation, so most researchers can only use ready-made models.
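One common way to soften the effect of imbalanced training data is to weight each class inversely to its frequency when computing the training loss. The sketch below is only an illustration of that idea and is not taken from any of the reviewed studies; the function name `inverse_frequency_weights` and the label counts are hypothetical.

```python
from collections import Counter


def inverse_frequency_weights(labels):
    """Return one weight per class, proportional to 1 / class frequency.

    The scaling is chosen so that a perfectly balanced dataset
    yields a weight of 1.0 for every class.
    """
    counts = Counter(labels)
    n_samples = len(labels)
    n_classes = len(counts)
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}


# Hypothetical label distribution, for illustration only.
labels = ["normal"] * 900 + ["GIM"] * 100
weights = inverse_frequency_weights(labels)
print(weights)
```

With these made-up counts, the rare class receives a weight nine times larger than the common class, so misclassified rare cases contribute more to the loss.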

Despite the above limitations, DL-based AI systems are revolutionizing GI endoscopy. While there are several surveys on DL for GI cancers[9-13], no specific review on the application of DL in the endoscopic diagnosis of precancerous lesions is available in the literature. Therefore, the performance of DL in gastroenterology is summarized in this review, with an emphasis on the automatic diagnosis of precancerous lesions in the upper GI tract. GI cancers are out of the scope of this review. Specifically, we review the status of intelligent diagnoses of esophageal and gastric precancerous lesions. The challenges and recommendations based on the findings of the review are comprehensively analyzed to advance the field.

Figure 1 Infographic with icons and timeline for artificial intelligence, machine learning and deep learning.

Figure 2 Illustration of the diagnostic process of physician, machine learning and deep learning. A: Physician diagnostic process; B: Machine learning; C: Deep learning. Conv: Convolutional layer; FC: Fully connected layer; GIM: Gastric intestinal metaplasia.

DL IN ENDOSCOPIC DETECTION OF PRECANCEROUS LESIONS IN ESOPHAGEAL MUCOSA

Esophageal cancer is the eighth most prevalent form of cancer and the sixth most lethal cancer globally[1]. There are two major subtypes of esophageal cancer: Esophageal squamous cell carcinoma (ESCC) and esophageal adenocarcinoma (EAC)[22]. Esophageal squamous dysplasia (ESD) is believed to be the precancerous lesion of ESCC[23-25], and Barrett’s esophagus (BE) is the identifiable precancerous lesion associated with EAC[4,26]. Endoscopic surveillance is recommended by GI societies to enable early detection of the two precancerous lesions of esophageal cancer[25,27].

However, the current endoscopic diagnosis methods for patients with BE, such as random 4-quadrant biopsy, laser-induced endomicroscopy and image-enhanced endoscopy, have disadvantages concerning the learning curve, cost, interobserver variability and time consumption[28]. The current standard for identifying ESD is Lugol’s chromoendoscopy, but it shows poor specificity[29]. Besides, iodine staining often presents a risk of allergic reactions. To overcome these challenges, DL-based AI systems have been established to help endoscopists identify ESD and BE.

DL in ESD

Low-grade and high-grade intraepithelial neoplasms, collectively referred to as ESD, are regarded as precancerous lesions of ESCC. Early and accurate detection of ESD is essential but challenging[23-25]. DL has been shown to depict ESD reliably in real-time upper endoscopy. Cai et al[30] designed a novel computer-assisted diagnosis system to localize and identify early ESCC, including low-grade and high-grade intraepithelial neoplasia, through real-time white light endoscopy (WLE). The system achieved a sensitivity, specificity and accuracy of 97.8%, 85.4% and 91.4%, respectively. They also demonstrated that the overall diagnostic capability of endoscopists increased when they referred to the results of the system. This research paved the way for the real-time diagnosis of ESD and ESCC. Following this work, Guo et al[31] applied 6473 narrow band (NB) images to train a real-time automated computer-assisted diagnosis system to support non-experts in the detection of ESD and ESCC. The system serves as a “second observer” in an endoscopic examination and achieves a sensitivity of 98.04% and specificity of 95.30% on NB images. The per-frame sensitivity was 96.10% for magnifying narrow band imaging (M-NBI) videos and 60.80% for non-M-NBI videos. The per-lesion sensitivity was 100% in M-NBI videos.
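The gap between per-frame and per-lesion sensitivity on video can be made concrete with a small sketch. It assumes that a lesion counts as detected when at least one of its frames is flagged; this is an assumption made for illustration and may differ from the definition used in the cited study. The function names and frame data are hypothetical.

```python
def per_frame_sensitivity(frames):
    """frames: list of (lesion_id, detected) pairs for lesion-containing frames."""
    if not frames:
        raise ValueError("no lesion-containing frames")
    hits = sum(1 for _, detected in frames if detected)
    return hits / len(frames)


def per_lesion_sensitivity(frames):
    """A lesion counts as found if at least one of its frames was flagged (assumed rule)."""
    if not frames:
        raise ValueError("no lesion-containing frames")
    found = {}
    for lesion_id, detected in frames:
        found[lesion_id] = found.get(lesion_id, False) or detected
    return sum(1 for hit in found.values() if hit) / len(found)


# Hypothetical video: two lesions, three frames each.
frames = [
    ("A", True), ("A", False), ("A", True),
    ("B", False), ("B", True), ("B", False),
]
print(per_frame_sensitivity(frames), per_lesion_sensitivity(frames))
```

In this toy video, half of the lesion frames are missed, yet every lesion is flagged at least once, which is why per-lesion sensitivity can reach 100% while per-frame sensitivity stays lower.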

DL in BE

BE is a disorder in which the lining of the esophagus is damaged by gastric acid. The critical purpose of endoscopic Barrett’s surveillance is early detection of BE-related dysplasia[4,26-28]. Recently, there have been many studies on the DL-based diagnosis of BE, and we review some representative studies. de Groof et al[32] performed one of the first pilot studies to assess the performance of a DL-based system during live endoscopic procedures of patients with or without BE dysplasia. The system demonstrated 90% accuracy, 91% sensitivity and 89% specificity in a per-level analysis. Following up this work, they improved this system using stepwise transfer learning and five independent endoscopy data sets. The enhanced system obtained higher accuracy than non-expert endoscopists, with comparable delineation performance[33]. Furthermore, their team also demonstrated the feasibility of a DL-based system for tissue characterization of NBI endoscopy in BE, and the system achieved a promising diagnostic accuracy[34].

Hashimoto et al[35] drew on the Inception-ResNet-v2 algorithm to develop a model for real-time classification of early esophageal neoplasia in BE, and they also applied YOLO-v2 to draw localization boxes around regions classified as dysplasia. For detection of neoplasia, the system achieved a sensitivity of 96.4%, specificity of 94.2% and accuracy of 95.4%. Hussein et al[36] built a CNN model to diagnose dysplastic BE mucosa with a sensitivity of 88.3% and specificity of 80.0%. The results preliminarily indicated that the diagnostic performance of the CNN model was close to that of experienced endoscopists. Ebigbo et al[37] used a CNN-based system to classify and segment cancer in BE. The system achieved an accuracy of 89.9% in 14 patients with neoplastic BE.

DL has also achieved excellent results in distinguishing multiple types of esophageal lesions, including BE. Liu et al[38] explored the use of a CNN model to distinguish esophageal cancers from BE. The model was trained and evaluated on 1272 images captured by WLE. After pre-processing and data augmentation, the average sensitivity, specificity and accuracy of the CNN model were 94.23%, 94.67% and 85.83%, respectively. Wu et al[39] developed a CNN-based framework named ELNet for automatic esophageal lesion (i.e. EAC, BE and inflammation) classification and segmentation. ELNet achieved a classification sensitivity of 90.34%, specificity of 97.18% and accuracy of 96.28%. The segmentation sensitivity, specificity and accuracy were 80.18%, 96.55% and 94.62%, respectively. A similar study was reported by Ghatwary et al[40], who applied a CNN algorithm to detect BE, EAC and ESCC from endoscopic videos and obtained a high sensitivity of 93.7% and a high F-measure of 93.2%.
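The performance figures quoted throughout this review (sensitivity, specificity, accuracy and F-measure) all derive from the four cells of a binary confusion matrix. The following sketch shows the standard definitions; the function name `diagnostic_metrics` and the counts are hypothetical and are not taken from any cited study.

```python
def diagnostic_metrics(tp, fp, tn, fn):
    """Compute common diagnostic metrics from binary confusion-matrix counts.

    Assumes every denominator is nonzero, i.e. there is at least one
    positive case, one negative case and one positive prediction.
    """
    sensitivity = tp / (tp + fn)            # recall on diseased cases
    specificity = tn / (tn + fp)            # recall on non-diseased cases
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    precision = tp / (tp + fp)              # positive predictive value
    f_measure = 2 * precision * sensitivity / (precision + sensitivity)
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "accuracy": accuracy,
        "precision": precision,
        "f_measure": f_measure,
    }


# Hypothetical counts, for illustration only.
metrics = diagnostic_metrics(tp=90, fp=20, tn=80, fn=10)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

Because the F-measure combines precision and sensitivity and ignores true negatives, it is often reported for detection tasks in which negative regions vastly outnumber lesions.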

The studies exploring the creation of DL algorithms for the diagnosis of precancerous lesions in esophageal mucosa are summarized in Table 1.

DL IN ENDOSCOPIC DETECTION OF PRECANCEROUS LESIONS IN GASTRIC MUCOSA

Gastric cancer is the fifth most prevalent form of cancer and the third most lethal cancer globally[1]. Even though the prevalence of gastric cancer has declined during the last few decades, gastric cancer remains a significant clinical problem, especially in developing countries. This is because most patients are diagnosed in late stages with poor prognosis and restricted therapeutic choices[41]. The pathogenesis of gastric cancer involves a series of events starting with Helicobacter pylori-induced (H. pylori-induced) chronic inflammation, progressing towards atrophic gastritis, GIM, dysplasia and eventually gastric cancer[42]. Patients with the precancerous lesions (e.g., H. pylori-induced chronic inflammation, atrophic gastritis, GIM and dysplasia) are at considerable risk of gastric cancer[3,6,43]. It has been argued that the detection of such precancerous lesions may significantly reduce the incidence of gastric cancer. However, these precancerous lesions are difficult to identify by endoscopic examination, and the diagnostic result also has high interobserver variability because of the subtle morphological changes in the mucosa and the lack of experienced endoscopists[44,45]. Currently, many researchers are trying to use DL-based methods to detect gastric precancerous lesions; here, we review these studies in detail.

DL in H. pylori infection

Most of the gastric precancerous lesions are correlated with long-term infections with H. pylori[46]. Shichijo et al[47] performed one of the pioneering studies to apply CNNs in the diagnosis of H. pylori infection. The CNNs were built on GoogLeNet and trained on 32208 WLE images. One of their CNN models achieved higher accuracy than endoscopists. The study showed the feasibility of using CNN to diagnose H. pylori from endoscopic images. After this study, Itoh et al[48] developed a CNN model to detect H. pylori infection in WLE images and showed a sensitivity of 86.7% and specificity of 86.7% in the test dataset. A similar model was developed by Zheng et al[49] to evaluate H. pylori infection status, and the per-patient sensitivity, specificity and accuracy of the model were 91.6%, 98.6% and 93.8%, respectively. Besides WLE, blue laser imaging-bright and linked color imaging systems were prospectively applied by Nakashima et al[50] to collect endoscopic images. With these images, they fine-tuned a pre-trained GoogLeNet to predict H. pylori infection status. As compared with linked color imaging, the model achieved the highest sensitivity (96.7%) and specificity (86.7%) when using blue laser imaging-bright. Nakashima et al[51] also conducted a single-center prospective study to build a CNN model to identify the status of H. pylori in uninfected, currently infected and post-eradication patients. The area under the receiver operating characteristic curve for the uninfected, currently infected and post-eradication categories was 0.90, 0.82 and 0.77, respectively.
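A per-category area under the receiver operating characteristic curve, as reported for the three infection states, is typically obtained with a one-vs-rest scheme: each category in turn is treated as positive and the others as negative. The sketch below illustrates this with the rank-based definition of the AUC. It is an assumption that the cited study used a one-vs-rest scheme; the function names, labels and predicted probabilities are hypothetical.

```python
def auc(scores_pos, scores_neg):
    """Probability that a random positive scores higher than a random negative.

    Ties count as half a win (the Mann-Whitney formulation of the AUC).
    """
    wins = 0.0
    for p in scores_pos:
        for n in scores_neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(scores_pos) * len(scores_neg))


def one_vs_rest_auc(labels, probs, target):
    """AUC for `target` against all other categories combined."""
    pos = [p[target] for y, p in zip(labels, probs) if y == target]
    neg = [p[target] for y, p in zip(labels, probs) if y != target]
    return auc(pos, neg)


# Hypothetical predictions for six patients, for illustration only.
labels = ["uninfected", "infected", "eradicated",
          "uninfected", "infected", "eradicated"]
probs = [
    {"uninfected": 0.8, "infected": 0.1, "eradicated": 0.1},
    {"uninfected": 0.1, "infected": 0.7, "eradicated": 0.2},
    {"uninfected": 0.3, "infected": 0.3, "eradicated": 0.4},
    {"uninfected": 0.6, "infected": 0.1, "eradicated": 0.3},
    {"uninfected": 0.2, "infected": 0.3, "eradicated": 0.5},
    {"uninfected": 0.1, "infected": 0.2, "eradicated": 0.7},
]
for category in ("uninfected", "infected", "eradicated"):
    print(category, one_vs_rest_auc(labels, probs, category))
```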

DL in atrophic gastritis

Atrophic gastritis is a form of chronic inflammation of the gastric mucosa; accurate endoscopic diagnosis is difficult[52]. Guimarães et al[53] reported the application of CNN to detect atrophic gastritis; the system achieved an accuracy of 93% and performed better than expert endoscopists. Recently, another CNN-based system for detecting atrophic gastritis was reported by Zhang et al[54]. The CNN model was trained and tested on a dataset containing 3042 images with atrophic gastritis and 2428 without atrophic gastritis. The diagnostic accuracy, sensitivity and specificity of the model were 94.2%, 94.5% and 94.0%, respectively, which were better than those of the experts. More recently, Horiuchi et al[55] explored the diagnostic ability of the CNN model to distinguish early gastric cancer and gastritis through M-NBI; the 22-layer CNN was built on GoogLeNet and pretrained using 2570 endoscopic images, and the sensitivity, specificity and accuracy on 258 images were 95.4%, 71.0% and 85.3%, respectively. In addition to high sensitivity, the CNN model also showed an overall test speed of 0.02 s per image, which was faster than human experts.

Table 1 Summary of studies using deep learning for detection of esophageal precancerous lesions

DL in GIM

GIM is the replacement of gastric-type mucinous epithelial cells with intestinal-type cells, which is a precancerous lesion with a worldwide prevalence of 25%[56]. The morphological characteristics of GIM are subtle and difficult to observe, so the manual diagnosis of GIM is challenging. Wang et al[57] reported the first instance of an AI system for localizing and identifying GIM from WLE images. The system achieved a high classification accuracy and a satisfactory segmentation result. A recent study developed a CNN-based diagnosis system that can detect atrophic gastritis and GIM from WLE images[58], and the detection sensitivity and specificity for atrophic gastritis were 87.2% and 91.1%, respectively. For detection of GIM, the system also achieved a sensitivity of 90.3% and a specificity of 93.7%. Recently, our team also developed a novel DL-based diagnostic system for detection of GIM in endoscopic images[18]. The difference from the previous research is that our system is composed of three independent CNNs, which can identify GIM from either NBI or M-NBI. The per-patient sensitivity, specificity and accuracy of the system were 91.9%, 86.0% and 88.8%, respectively. The diagnostic performance showed no significant differences as compared with human experts. Our research showed that the integration of NBI and M-NBI into the DL system could achieve satisfactory diagnostic performance for GIM.

DL in gastric dysplasia

Gastric dysplasia is the penultimate step of gastric carcinogenesis, and accurate diagnosis of this lesion remains controversial[59]. To accurately classify advanced low-grade dysplasia, high-grade dysplasia, early gastric cancer and gastric cancer, Cho et al[60] established three CNN models based on 5017 endoscopic images. They found that the Inception-ResNet-v2 model performed the best, although it showed lower five-class accuracy than the endoscopists (76.4% vs 87.6%). Inoue et al[61] constructed a detection system using the Single-Shot Multibox Detector, which can automatically detect duodenal adenomas and high-grade dysplasia from WLE or NBI. The system detected 94.7% of adenomas and 100% of high-grade dysplasia on a dataset containing 1080 endoscopic images within only 31 s. Although most AI-assisted systems can achieve high accuracy in endoscopic diagnosis, no study had investigated the role of AI in the training of junior endoscopists. To evaluate the role of AI in the training of junior endoscopists in predicting histology of endoscopic gastric lesions, including dysplasia, Lui et al[62] designed and validated a CNN classifier based on 3000 NB images. The classifier achieved an overall accuracy of 91.0%, sensitivity of 97.1% and specificity of 85.9%, which was superior to all junior endoscopists. They also demonstrated that with the feedback from the CNN classifier, the learning curve of junior endoscopists was improved in predicting histology of gastric lesions.

The studies exploring the creation of DL algorithms for the diagnosis of precancerous lesions in gastric mucosa are summarized in Table 2.

CHALLENGES AND RECOMMENDATIONS

AI has gained much attention in recent years. In the field of GI endoscopy, DL is also a promising innovation in the identification and characterization of lesions[9-13]. Many successful studies have focused on GI cancers. Accurate detection of precancerous lesions such as ESD, BE, H. pylori-induced chronic inflammation, atrophic gastritis, GIM and gastric dysplasia can greatly reduce the incidence of cancers and the need for cancer treatment. DL-assisted detection of these precancerous lesions has increasingly emerged in the last 5 years. To perform a systematic review of the status of DL for diagnosis of precancerous lesions of the upper GI tract, we conducted a comprehensive search for all original publications on this target between January 1, 2017 and December 30, 2020. Although a variety of published papers have verified the outstanding performance of DL-assisted systems, several challenges remain from the viewpoint of physicians and algorithm engineers. The challenges and our recommendations on future research directions are outlined below.

Prospective studies and clinical verification

The current literature reveals that most studies were designed in a retrospective manner with a strong probability of bias. In these retrospective studies, researchers tended to collect high-quality endoscopic images that showed typical characteristics of the detected lesions from a single medical center, while they excluded common low-quality images. This kind of selection bias may jeopardize the precision and lead to lower generalization of the DL models. Thus, multicenter data that include uninformative frames are necessary to build robust DL models, and prospective studies are needed to properly verify the accuracy of AI in clinical practice.

Handling of overfitting

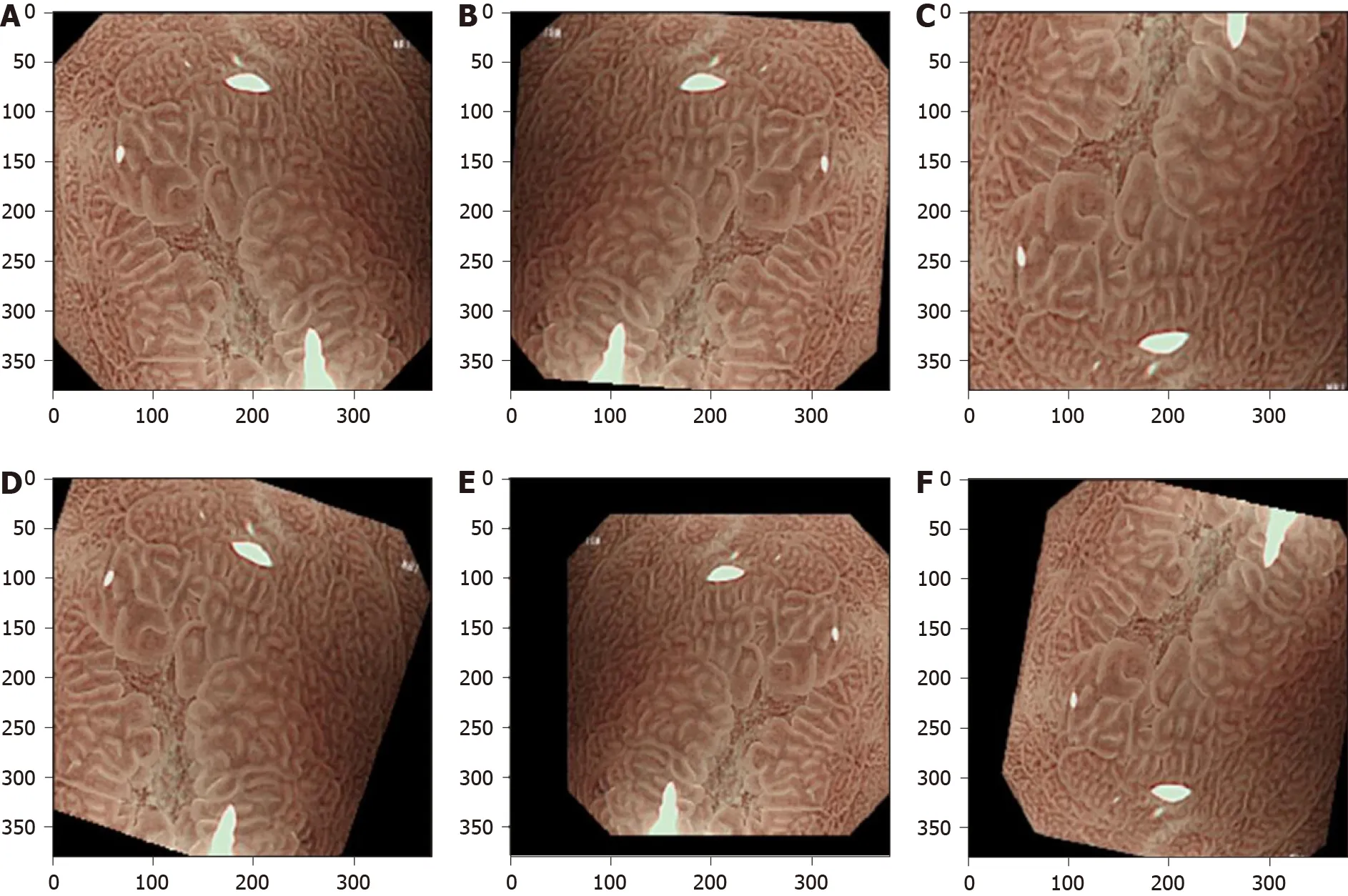

Overfitting means an AI model performs well on the training set but has high error on unseen data. The deep CNN architectures usually contain several convolutional layers and fully connected layers, which produce millions of parameters that easily lead to strong overfitting[16,17]. Training these parameters needs large-scale well-annotated data. However, well-annotated data are costly and hard to obtain in the clinical community. Possible solutions for overcoming the lack of well-annotated data to avoid overfitting mainly include data augmentation[63], transfer learning[17,64], semi-supervised learning[65] and data synthesis using generative adversarial networks[66]. Data augmentation is a common method to train CNNs to reduce overfitting[63]. According to current literature, almost all studies use data augmentation. Data augmentation is performed by using several image transformations, such as random image rotation, flipping, shifting, scaling and their combinations, as shown in Figure 3. Transfer learning involves transferring knowledge learned from a large source domain to a target domain[17,64]. This technique is usually performed by initializing the CNN using the weights pretrained on the ImageNet dataset. As there are many imaging modalities such as WLE, NBI and M-NBI and the images share the common features of the detected lesions, Struyvenberg et al[34] applied a stepwise transfer learning approach to tackle the shortage of NB images. Their CNN model was first trained on many WLE images, which are easy to acquire as compared with NB images. Then, the weights were further trained and optimized using NB images. In upper endoscopy, although well-annotated data are limited, unlabeled data are abundant and easily available in most situations. Semi-supervised learning, which utilizes limited labeled data and large-scale unlabeled data to train and improve the CNN model, is useful in the GI tract[65,67,68]. Generative adversarial networks are widely used in the field of medical image synthesis; the synthetic images can be used to provide auxiliary training to improve the performance of the CNN models[66]. de Souza et al[69] introduced generative adversarial networks to generate high-quality endoscopic images for BE, and these synthetic images were used to provide auxiliary training. The detection results suggested that, with the help of these synthetic images, the CNN model outperformed the models trained on the original datasets alone.
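The image transformations used for data augmentation can be sketched in a few lines. The example below is a simplified illustration on a small grid of pixel values rather than a real endoscopic image; it restricts rotation to multiples of 90 degrees and omits scaling, whereas the reviewed studies used arbitrary random rotations and scaling as well. All function names are hypothetical.

```python
import random


def flip_horizontal(img):
    """Mirror the image left to right."""
    return [row[::-1] for row in img]


def flip_vertical(img):
    """Mirror the image top to bottom."""
    return [row[:] for row in img[::-1]]


def rotate90(img):
    """Rotate the image 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def shift(img, dx, dy, fill=0):
    """Translate the image by (dx, dy) pixels, padding exposed areas with `fill`."""
    h, w = len(img), len(img[0])
    out = [[fill] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            ny, nx = y + dy, x + dx
            if 0 <= ny < h and 0 <= nx < w:
                out[ny][nx] = img[y][x]
    return out


def random_augment(img, rng):
    """Apply a random combination of flips, 90-degree rotations and a small shift."""
    if rng.random() < 0.5:
        img = flip_horizontal(img)
    if rng.random() < 0.5:
        img = flip_vertical(img)
    for _ in range(rng.randrange(4)):
        img = rotate90(img)
    return shift(img, rng.randint(-1, 1), rng.randint(-1, 1))


# Toy 2 x 2 "image", for illustration only.
img = [[1, 2], [3, 4]]
augmented = [random_augment(img, random.Random(seed)) for seed in range(4)]
```

Each call produces a differently transformed copy of the same labeled image, so the network sees more variation than the raw dataset contains without any additional annotation effort.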

Table 2 Summary of studies using deep learning for detection of gastric precancerous lesions

Although the above techniques reduce overfitting to a certain extent, no single technique guarantees resolution of the overfitting problem. Integration of these techniques is a promising strategy, and it was verified in our research[18,70].

Figure 3 Data augmentation for a typical magnifying narrow band image for training a convolutional neural network model. This is performed by using a variety of image transformations and their combinations. A: Original image; B: Flip horizontal and random rotation; C: Flip vertical and magnification; D: Random rotation and shift; E: Flip horizontal, minification and shift; F: Flip vertical, rotation and shift.

Improvement on interpretability

Lack of interpretability (i.e. the “black box” nature), which is inherent in DL technology, is another gap between studies and clinical applications in the field of precancerous lesion detection from endoscopic images. The black box nature means that the decision-making process of the DL model is not clearly demonstrated, which may reduce the willingness of doctors to use it. Although attention maps can help explain the dominant areas by highlighting them, they are constrained in that they do not thoroughly explain how the algorithm comes to its final decision[71]. The attention maps are displayed as heat maps overlaid upon the original images, where warmer colors mean higher contributions to the decision making, which usually correspond to lesions. However, the attention maps also have some defects such as inaccurate display of lesions as shown in Figure 4, where the attention maps only cover partial areas associated with BE and GIM. This is the inherent shortcoming of attention maps. Therefore, understanding the mechanism used by the DL model for prediction is a hot research topic. Network dissection[72], an empirical method to identify the semantics of individual hidden nodes in the DL model, may be a feasible solution to improve interpretability.
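The observation that attention maps cover only part of a lesion can be quantified when a lesion annotation is available. The sketch below is one possible measure, assumed here for illustration and not taken from the cited works: it rescales the attention map to the range 0 to 1, thresholds it and reports the fraction of annotated lesion pixels that fall in the highlighted region. The function names, threshold and arrays are hypothetical.

```python
def normalize(attention):
    """Rescale attention values to the range [0, 1] (min-max normalization)."""
    flat = [v for row in attention for v in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:
        return [[0.0] * len(row) for row in attention]
    return [[(v - lo) / (hi - lo) for v in row] for row in attention]


def lesion_coverage(attention, lesion_mask, threshold=0.5):
    """Fraction of lesion pixels whose normalized attention reaches `threshold`."""
    norm = normalize(attention)
    lesion_pixels = 0
    covered_pixels = 0
    for att_row, mask_row in zip(norm, lesion_mask):
        for att, is_lesion in zip(att_row, mask_row):
            if is_lesion:
                lesion_pixels += 1
                if att >= threshold:
                    covered_pixels += 1
    if lesion_pixels == 0:
        raise ValueError("lesion mask is empty")
    return covered_pixels / lesion_pixels


# Toy 3 x 3 attention map and lesion annotation, for illustration only.
attention = [
    [0.1, 0.2, 0.1],
    [0.2, 0.9, 0.8],
    [0.1, 0.3, 0.2],
]
lesion_mask = [
    [0, 0, 0],
    [0, 1, 1],
    [0, 1, 1],
]
print(lesion_coverage(attention, lesion_mask))
```

In this toy case only the upper half of the annotated lesion is highlighted, mirroring the partial coverage described for BE and GIM.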

Network design

In this review, we analyzed the DL models used in the detection of precancerous lesions in the upper GI tract. The literature shows that almost all the DL-based AI systems are developed based on state-of-the-art CNN architectures. These CNNs can only handle a single task, such as GoogLeNet for disease classification[47,48,50,55], YOLO for lesion identification[36] and SegNet for lesion segmentation[31]. Few networks can handle multiple tasks simultaneously. Thus, networks must be designed for multitask learning, which is valuable in clinical applications. Networks designed for handling high-resolution images can help detect micropatterns, which is beneficial for small precancerous lesions. Moreover, efforts should be directed toward exploiting videos rather than images to minimize the processing time and keep DL algorithms working at an almost real-time level. As suggested by Mori et al[73] and Thakkar et al[74], the AI systems may then be treated as an extra pair of eyes to prevent subtle lesions from being missed.
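A network that classifies and segments at the same time is commonly trained with a single objective that sums one loss per task. The sketch below shows one such combination, cross-entropy for classification plus a Dice loss for segmentation, on toy values. It is a generic illustration and not the objective of any reviewed system; the function names and the `seg_weight` parameter are hypothetical.

```python
import math


def cross_entropy(class_probs, target_index):
    """Classification loss: negative log-probability of the true class."""
    return -math.log(class_probs[target_index])


def dice_loss(pred_mask, true_mask, eps=1e-6):
    """Segmentation loss: 1 minus the Dice overlap of flattened masks."""
    intersection = sum(p * t for p, t in zip(pred_mask, true_mask))
    total = sum(pred_mask) + sum(true_mask)
    return 1.0 - (2.0 * intersection + eps) / (total + eps)


def multitask_loss(class_probs, target_index, pred_mask, true_mask, seg_weight=1.0):
    """Weighted sum of the classification and segmentation losses."""
    return (cross_entropy(class_probs, target_index)
            + seg_weight * dice_loss(pred_mask, true_mask))


# Toy outputs for one image, for illustration only.
class_probs = [0.5, 0.5]      # predicted probabilities for two classes
pred_mask = [1, 1, 0, 0]      # flattened predicted lesion mask
true_mask = [1, 1, 0, 0]      # flattened annotated lesion mask
loss = multitask_loss(class_probs, 0, pred_mask, true_mask)
print(loss)
```

The weight `seg_weight` sets how strongly the segmentation task influences the shared features; choosing it is a design decision that usually requires validation data.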

Figure 4 Informative features (partially related to lesion areas) acquired by the convolutional neural networks, where warmer colors mean higher contributions to decision making. A: Original endoscopic images; B: Corresponding attention maps. BE: Barrett’s esophagus; GIM: Gastric intestinal metaplasia.

CONCLUSION

Upper GI cancers are a major cause of cancer-related deaths worldwide. Early detection of precancerous lesions could significantly reduce cancer incidence. Upper GI endoscopy is a gold standard procedure for identifying precancerous lesions in the upper GI tract. DL-based endoscopic systems can provide an easier, faster and more reliable endoscopic method. We have conducted a thorough review of detection of precancerous lesions of the upper GI tract using DL approaches since 2017. This is the first review on the DL-based diagnosis of precancerous lesions of the upper GI tract. The status, challenges and recommendations summarized in this review can provide guidance for intelligent diagnosis of other GI tract diseases, which can help engineers develop effective AI products to assist clinical decision making. Despite the success of DL algorithms in upper GI endoscopy, prospective studies and clinical validation are still needed. Creation of large public databases, adoption of comprehensive overfitting prevention strategies and application of more advanced interpretable methods and networks are also necessary to encourage clinical application of AI for medical diagnosis.

ACKNOWLEDGEMENTS

The authors would like to thank Dr. I Cheong Choi, Dr. Hon Ho Yu and Dr. Mo Fong Li from the Department of Gastroenterology, Kiang Wu Hospital, Macau for their advice on this manuscript.

World Journal of Gastroenterology, 2021, Issue 20