第四范式:基于机器学习的荷兰智慧城市宜居性预测模型研究

邬峻

1 研究背景

1.1 第四次工业革命:开启以人为本的“人工智能”新时代

18世纪中叶以来,人类历史上先后发生过3次工业革命。第一次工业革命开创了“蒸汽时代”(1760—1840年),标志着农耕文明向工业文明的过渡,是人类发展史上的第一个伟大奇迹;第二次工业革命开启了“电气时代”(1840—1950年),使得电力、钢铁、铁路、化工、汽车等重工业兴起,石油成为新能源,促进了交通迅速发展以及世界各国之间更频繁地交流,重塑了全球国际政治经济格局;两次世界大战之后开始的第三次工业革命,更是开创了“信息时代”(1950年至今),全球信息和资源交流变得更为便捷,大多数国家和地区都被卷入全球一体化和高度信息化进程中,人类文明达到空前发达的高度。

2016年1月20日,世界经济论坛在瑞士达沃斯召开主题为“第四次工业革命”的主题年会,正式宣告了将彻底改变世界发展进程的第四次工业革命的到来。论坛创始人、执行主席施瓦布(Schwab)教授在其《第四次工业革命》(The Fourth Industrial Revolution)中详细阐述了可植入技术、基因排序、物联网(IoT)、3D打印、无人驾驶、人工智能、机器人、量子计算(quanturn computing)、区块链、大数据、智慧城市等技术变革对“智能时代”人类社会的深刻影响。这次工业革命中大数据将逐步取代石油成为第一资源,其发展速度、范围和程度将远远超过前3次工业革命,并将改写人类命运以及冲击几乎所有传统行业的发展[1],建筑、景观与城市的发展也不例外。

1.2 第四范式:探索“数据密集型”科研新范式的紧迫性

第四次工业革命带来的数据爆炸正在改变我们的生活和人类未来。过去十几年,无论是数据的总量、种类、实时性还是变化速度都在呈现几何级别的递增[2]。截至2013年全世界电子数据已经达到460亿兆字节,相当于约400万亿份传统印刷本报告,它们拼接后的长度可以从地球一直铺垫到冥王星。而仅仅在过去2年里,我们创造的数据量就占人类已创造数据总量的90%[3]。大数据已被视为21世纪国家创新竞争的重要战略资源,并成为发达国家争相抢占的下一轮科技创新的前沿阵地[4]。

虽然数据大井喷带来了符合“新摩尔定律”的数据大爆炸,2020年全世界产生的数据总量将是2009年数据总量的44倍[5],但是由于缺乏应对数据大爆炸的新型研究范式,世界上仅有不到1%的信息能够被分析并转化为新知识[6]。Anderson指出这种数据爆炸不仅是数量上的激增,更是在复杂度、类别、变化速度和准确度上的激增与相互混合。他将这种混合型爆炸定义为“数据洪水”(data deluge),认为在出现新的研究范式以前,“数据洪水”将成为制约现有所有学科领域科研发展的瓶颈[7]1。在城市和建筑设计领域,尽管我们早已经生活在麻省理工学院米歇尔教授(William J. Mitchell)生前预言的“字节城市”(city of bits)里[8],但是却远远没有赋予城市研究与设计“字节的超能量”(power of bits)[9]。我们必须开发新型研究范式以应对“数据洪水”的强烈冲击。

常规研究方法与传统范式越来越捉襟见肘,这迫使一些科学家探索适合于大数据和人工智能的新型研究范式。Bell、Hey和Szalay预警道,所有研究领域都必须面对越来越多的数据挑战,“数据洪水”的处理和分析对所有研究科学家至关重要且任务繁重。Bell、Hey和Szalay进而提出了应对“数据洪水”的“第四范式”。他们一致认为:至少自17世纪牛顿运动定律出现以来,科学家们已经认识到实验和理论科学是理解自然的2种基本研究范式。近几十年来,计算机模拟已成为必不可少的第三范式:一种科学家难以通过以往理论和实验探索进行研究的新标准工具。而现在随着模拟和实验产生了越来越多的数据,第四种范式正在出现,这就是执行数据密集型科学分析所需的最新AI技术[10]。

Halevy等指出,“数据洪水”表明传统人工智能中的“知识瓶颈”,即如何最大化提取有限系统中的无限知识的问题,将可以通过在许多学科中引入第四范式得到解决。第四范式将运用大数据和新兴机器学习的方法,而不再纯粹依靠传统理论研究的数学建模、经验观察和复杂计算[11]。

美国硅谷科学家Gray、Hey、Tansley和Tolle等总结出数据密集型“第四范式”区别于以前科研范式的一些主要特征(图1)[12]16-19:1)大数据的探索将整合现有理论、实验和模拟;2)大数据可以由不同IoT设备捕捉或由模拟器产生;3)大数据由大型并行计算系统和复杂编程处理来发现隐藏在大数据中的宝贵信息(新知识);4)科学家通过数据管理和统计学来分析数据库,并处理大批量研究文件,以获取发现新知识的新途径。

第四范式与传统范式在研究目的和途径上的差异主要表现在以下方面。传统范式最初或多或少从“为什么”(why)和“如何”(how)之类的问题开始理论构建,后来在“什么”(what)类问题的实验观测中得到验证。但是,第四范式的作用相反,它仅从数据密集型“什么”类问题的数据调查开始,然后使用各种算法来发现大数据中隐藏的新知识和规律,反过来生成为揭示“如何”和“为什么”类问题的新理论。安德森在其《理论的终结:数据洪水使科学方法过时了?》(“The End of Theory: The Data Deluge Makes the Scientific Method Obsolete?”)一文中指出, 首先,第四范式并不急于从烦琐的实验和模拟,或严格的定义、推理和假设开始理论构建;相反,它从大型复杂数据集的收集和分析开始[7]1。其次,隐藏在这些庞大、复杂和交织的数据集中的宝贵知识很难处理,通常无法使用传统的科学研究范式完成知识 发现[12]16-19。

在国内外,使用第四范式进行城市和风景园林研究的探索仍处于起步阶段,其方法和目标多种多样。由于篇幅所限,本研究直接使用了一些开放数据,并将其与荷兰政府关于宜居性的调查结果相结合,重点是将“宜居性”(livability)作为机器学习的预测目标,以引入系统的数据密集型研究方法论证第四范式在城市和风景园林研究中的可行性和实用前景。

2 研究现状与研究目标

2.1 国际经典范式城市宜居性相关研究简述

宜居性是衡量与评价城市与景观环境可居性与舒适性的重要指标,近年来更是成为智慧城市开发的重要切入点,澳大利亚-新西兰智慧城市委员会(The Council of Smart Cities of Australia/New Zealand)执行主席Adam Beck将智慧城市定义为:“智慧城市将利用高科技和大数据加强城市宜居性、可操作性和可持续性。”[13]

“Eudaimonia”(宜居性)在西方最初由亚里士多德提出,意味着生活和发展得很好。长期以来,关于宜居性并没有统一的定义,它在不同城市发展阶段、不同地区和不同学科领域有多样化的含义与运用,这导致了宜居性概念上的混乱和可操作性上的难度。尽管宜居性缺乏统一的认知和可量化的度量系统,多年来,经典理论研究尝试从经济、社会、政治、地理和环境等维度来探索宜居性的相关指标。

Balsas强调了经济因素对宜居性的决定性作用,他认为较高的就业率、人口中不同阶层的购买力、经济发展、享受教育和就业的机会、生活水准是决定宜居性的基础[14]103。Litman也指出人均GDP对宜居性的重要影响。同时,居民对于交通、教育、公共健康设施的可及性和经济购买力也应被视为衡量宜居性的重要指标[15]。Veenhoven的研究也发现GDP发展程度、经济的社会需求和居民购买力对于评价宜居性起到重要作用[16]2-3。

Mankiw对以经济作为单一指标评价宜居性提出批评,他认为仅仅用人均GDP维度来衡量宜居性是不够的[17]。Rojas建议其他维度也必须纳入考虑范围,例如政治、社会、地理、文化和环境的因素[18]。Veenhoven增加了政治自由度、文化氛围、社会环境与安全性作为宜居性评价指标[16]7-8。

Van Vliet的研究表明,社会融合度、环境清洁度、安全性、就业率以及诸如教育和医疗保健等基础设施的可及性对城市宜居性具有直接影响[19]。Balsas还承认,除了经济以外,诸如完备的基础设施、充足的公园设施、社区感和公众参与度等因素也对城市宜居性的提升发挥了积极作用[14]103。

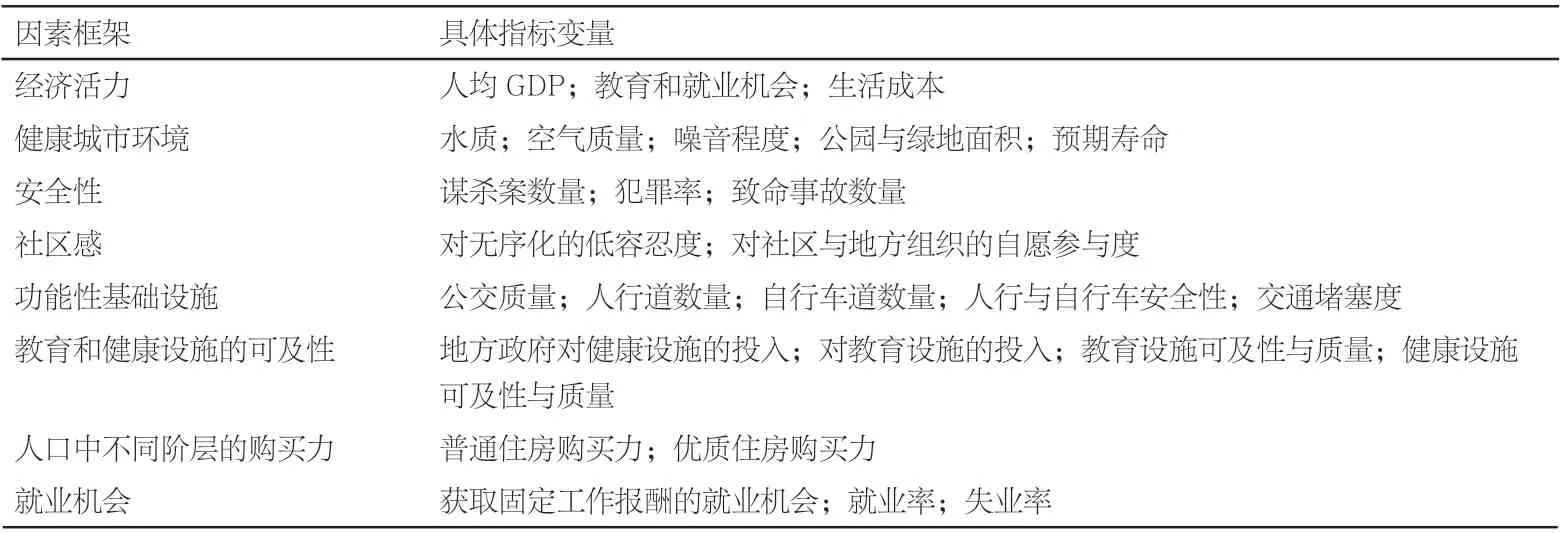

尽管上述学者提出了评估宜居性的政治、社会、经济和环境因素,但他们并没有给出评估城市宜居性的具体指标建议。Goertz试图通过整体性的3层方法系统地评估宜居性,从而整合上述要素,并为每个子系统提出相关的评估指标。第一层界定了宜居类型,第二层构建了因素框架,而第三层则定义了具体指标变量[20](表1)。

表1 根据Goertz三层结构系统总结的宏观宜居性因素框架与相关指标变量Tab. 1 The framework of macro livability factors and related measurement indexes based on Goertz’s three-tiers system

通过对宜居性经典研究方法进行总结和整合,Goertz在宏观层面对宜居性进行了定性研究,其中一些因素为未来的定量研究指明了方向。但是,他未能在中观层面上呈现不同城市系统中相应的可控变量。作为回应,Sofeska提出了一个中观层面的城市系统评价因素框架[21],包括安全和犯罪率、政治和经济稳定性、公众宽容度和商业条件;有效的政策、获得商品和服务的机会、高国民收入和低个人风险;教育、保健和医疗水平、人口组成、寿命和出生率;环境和娱乐设施、气候环境、自然区域的可及性;公共交通和国际化。Sofeska特别强调了建筑质量、城市设计和基础设施有效性对中观层面城市系统宜居性的影响。

超越宏观的政治、经济、社会、环境体系和中观的城市体系,Giap等认为“宜居性”更应该是一个微观的地域性概念。在定性研究微观的社区尺度的宜居性时,他更强调城市生活质量与城市物质环境质量对宜居性的微观影响,并将绿色基础设施作为一个重要的指标[22]。因此,Giap等提出在社区单元尺度上定性研究宜居性的重要性。

但是无论Goertz、Sofeska还是Giap都未建立关于社区宜居性的定量评价指标体系和对应的预测方法。目前对宜居性作定量分级调查主要来自两套宏观系统:基于六大指标体系的经济学人智库(Economist Intelligence Unit, EIU)宜居指标与基于十大指标体系的Mercer生活质量调查体系(Mercer LLC)。不过这两套体系仅提供了主要基于经济指标的不同城市之间宜居性的宏观分级比较工具,在社区微观层面进行量化研究和预测时并不具备可操作性[23]。

2.2 荷兰城市宜居性相关研究简述

在荷兰语大辞典中,“leefbaarheid”(宜居性)的一般定义如下:“适合居住或与之共存”(荷兰语:geschikt om erin of ermee te kunnen leven)。因此,荷兰语境的宜居性实际是关于主体(有机体,个人或社区)与客体环境之间适宜和互动关系的陈述[24]。

1969年,Groot将宜居性描述为对获得合理收入和享受合理生活的客观社会保障,充分满足对商品和服务需求的社会主观认识。在这个偏经济和社会目标的定义中,对客观和主观宜居性的划分隐含其中。客观保障涉及实际可记录的客观情况(劳动力市场、设施、住房质量等);主观意识涉及人们体验实际情况的主观方式[25]。在20世纪70年代,宜居性进入了地区政治的视角。人们意识到宜居性的中心不应该是建筑物,而是人;物质生活不仅在数量,更在质量。当时的鹿特丹市议员Vermeulen将这一社会概念的转换描述为:“你可以用你住房的砖块数量算出你房子的大小,但却无法知道它的宜居性。” 2002年,荷兰社会和文化规划办公室(The Social and Cultural Planning Office of The Netherlands)对宜居性给出了以下描述:物质空间、社会质量、社区特征和环境安全性之间的相互作用。2005年Van Dorst总结了宜居性的3个视角:显著的宜居性是人与环境的最佳匹配;以人为本体验环境的宜居性;从可定义的生活环境去推断宜居性[26]。

荷兰政府自1998年以来设计并分发了大量调查问卷,定期对全国部分地区进行宜居性调查和统计。调查问卷的内容主要包括:基于居住环境、绿色与文体设施、公共空间基础设施、社会环境、安全性等方面的满意度,对宜居性从极低到极高(1~9)打分。

同时, 荷兰住建部(Ministerie van Volkshuisvesting, Ruimtelijke Ordening en Milieu, VROM)委托国立公共健康和环境研究院(Rijksinstituut voor Volksgezondheid en Milieu, RIVM)以及荷兰建筑环境研究院(Het Research Instituut Gebouwde Omgeving, RIGO)依据调查问卷结果进行深入分析。他们首先对过去150年内建筑、城市规划、社会学、经济学角度关于宜居性的研究进行了相关文献梳理,发现对于宜居性的定义和研究长期以来存在广泛的不同定义甚至分歧。他们通过文献研究认为:如果想在关于宜居性的研究领域取得突破,那么就必须建立一个超越当前文献中学科差异的关于宜居性的多学科融合的理论框架。为此,他们提出了与客观环境相对应的宜居性、感知和行为的主观评估系统。该系统包括:研究环境和人的各方面如何影响对生活环境宜居性的感知;纵向研究宜居性的交叉特征;对宜居性决定性因素进行跨文化比较,旨在根据时间、地点和文化确定普遍要素、基本需求和相对要素。

从调查问卷结果来看,该研究认为生活质量是连接人的主观评价和客观环境的一个研究切入点。而在评价生活质量时,社区尺度的环境、经济和社会质量的因子选择是至关重要的。他们列出50个因子作为评价荷兰社区宜居性的指标,并将这些因子分为居住条件、公共空间、环境基础设施、人口构成、社会条件、安全性等几个领域(图2)。他们提出尽快利用大数据和开发高级AI预测工具支持城市建设、决策和制定规划的紧迫性[27]。

2.3 研究目标与框架

为了响应后工业社会的到来,Battey在 20世纪90年代率先提出了“智慧城市”的概念。由于当时的大数据还处于初期阶段,Battey只强调了互联网技术在增强信息交流和城市竞争力中的重要性[28]。鉴于智慧城市的内涵太广泛,并且涉及整个城市系统,因此智慧城市很难获得统一的认同。目前,一个由6个子系统构成的智慧城市框架逐步被许多学者接受,其中智慧公民、智慧环境和智慧生活是3个重要的环节[29]。这符合荷兰宜居性理论研究关键结论中关于社区居民、主观宜居性和客观环境质量间互动关系的描述。

如RIVM和RIGO研究中心的结论所示,经典分析研究结果和问卷调查结果基本吻合。但是他们认为所有使用传统范式的研究都存在一定局限性,无论它们来自问卷、观察、系统理论、数学模型还是统计方法,在方法论创新上都没有太大的区别。因此,除了传统研究之外,有必要探索利用大数据的新方法并开发先进的AI工具,这也将为宜居性评估创造新条件。第四范式带来的上述大数据的挑战和机遇恰好为这种转变提供了机会。这项研究的目的就是开发一种基于机器学习的新型数据密集型AI工具箱,以监测荷兰人居环境中的宜居性。

新工具箱旨在最大限度地从所有开源数据中提取数据并开发相关变量,然后它将通过高级数据工程和数据库技术完成转换、集成和存储数据。此后,宜居性问卷结果获取的宜居性等级将作为机器学习中的预测目标。在数据仓库中,将来自调查问卷的历史宜居性等级与同一历史时期内最相关的变量进行集成,以建立机器学习的预测模型。先进的AI算法能够根据最相关变量的新的数据输入来预测未来的宜居性。同时,可以将其与传统范式得出的结论进行比较,以确定通过第四范式发现新知识的有效性和优势。新的输入可以支持机器学习的再训练,以改善模型。图3总结了基于大数据的关键研究框架。

3 研究方法与过程

基于大数据爆炸及相伴产生的第四范式,这种新的预测工具箱将不依赖于现有常用研究范式,而是首先搜寻可用的开源大数据、再通过数据密集型的机器学习来研究这些数据,并构建算法和预测模型。就可以在提供相应参数的情况下对任何社区的宜居性进行科学预测甚至提前干预,为智慧城市的宜居性评价和规划打下基础。

3.1 数据转录与初步建模

社区宜居性等级的历史记录是通过RIVM问卷和RIGO研究获得的,而这些社区的人口、经济、社会和环境领域的所有可用变量均来自同一时期荷兰中央统计局(Het Centraal Bureau voor de Statistiek, CBS)和其他开源数据的数据集。这2个数据集可以通过它们的邮政编码相互连接,派生出的数据用于形成可能的机器学习数据集。由于数据来源不同、格式不同、规模大且杂乱无章,并且具有不同的实时更新频率,因此它们符合大数据的最典型特征,即“四个V”:数量(volume)、种类(variety)、准确性(veracity)和速度(velocity)[30]。首先必须执行必要的数据工程学流程,以满足机器学习对数据质量的基本要求。

量子力学的先驱,维尔纳·海森堡指出,“必须记住,我们观察到的不是自然本身,而是暴露在我们质疑方法下的自然”。传统范式研究带来的主观认知局限性是显而易见的。而人工智能和大数据的涌现,无疑提供了第四范式这样一个更加客观的认知方法论,来分析隐藏在繁杂多变的数据后面的神秘自然规律。因而,Wolkenhauer将数据工程总结为认知科学和系统科学的完美结合,并称之为知识工程中数据和模型相互匹配的最佳实践(图4)[31]。

根据Cuesta的数据工程学工艺[32],在预处理繁复数据集合时,我们可以通过数据流程管理、数据库设计、数据平台架构、数据管道构建、数据计算机语言脚本编程等关键流程来实现数据的获取、转换、清理、建模和存储。这个复杂过程可以用最典型的ETL(Extract-Transform-Load)流程简述(图5)。

在通过上述复杂过程对所有不同来源的数据进行清理和规范化处理之后,应为数据仓库(data warehouse, DWH)的构建设计合适的数据模型。数据应定期存储在数据仓库中,以利于深入分析并及时进行机器学习。基于当前数据环境,并以宜居性为核心预测目标,设计了星形数据模型。在此关系数据模型中,在中心构建记录每个社区宜居性级别的事实表,并通过主键和外键将其与各个域的维度表相关联。该数据模型的设计参考了上述针对宜居因子分类框架的经典研究方法的相关结果(表1,图2)。6个主要维度分别是人口维度、社会维度、经济维度、住房维度、基础设施维度、土地利用和环境维度(图6)。

3.2 数据清理

实际上,通过前数据工程收集和建模得到的源数据通常高度混乱,整体质量低下,不适合直接用于机器学习。需要进行广泛的数据清理,以符合机器学习对数据质量的基本要求。尽管它绝对不是机器学习最动人的部分,但它代表了每个专业数据科学家必须面对的流程之一。此外,数据清理是一项艰巨而烦琐的任务,需要占用数据科学家50%~80%的精力。众所周知,“更好的数据集往往胜过更智能的算法”。换句话说,即使运用简单的算法,正确清理过的数据集也能提供最深刻的见解。当数据“燃料”中存在大量杂质时,即使是最佳算法(即“机器”)也无济于事。

鉴于数据清理工作如此重要,我们首先必须明白什么是合格的数据。一般而言,合格数据应该至少具备以下质量标准(图7)[33]。 1)有效性。数据必须满足业务规则定义的有效约束或度量程度的有效范围,包括满足数据范围、数据唯一性、有效值、跨字段验证等约束。2)准确性。与测量值或标准以及真实值、唯一性和不可重复性的符合程度。为了验证准确性,有时必须通过访问外部附加数据源来确认数值的真实性。3)完整性。数据的缺省或者缺失以及各范围的数据值的完整分布对机器学习的结果将产生相应的影响。如果系统坚持某些栏位不应为空,则可以通过指定一个表示“未知”或“缺失”的值,仅仅提供默认值并不意味着数据已具备完整性。4)一致性。指的是一套度量在整个系统中的等效程度。当数据集中的2个数据项相互矛盾时,就会发生不一致。数据的一致性包括内容、格式、单位等。

显然,不同类型的数据需要不同类型的清理算法。在评估了适用于机器学习的宜居性数据集的特征和初始质量之后,应使用以下方法清理所提议的数据。1)删除不需要的或无关的观察结果。2)修正结构错误。在测量、数据传输或其他“不良内部管理”过程中会出现结构错误。3)检查数据的标签错误,即对具有相同含义的不同标签进行统一处理。4)过滤不需要的离群值。离群值可能会在某些类型的模型中引起问题。如果有充分的理由删除或替换了异常值,则学习模型应表现得更好。但是,这项工作应谨慎进行。5)删除重复数据,以避免机器学习的过度拟合。6)处理丢失的数据是机器学习中一个具有挑战性的问题。由于大多数现有算法不接受缺失值,因此必须通过“数据插补”技术进行调整,例如:删除具有缺失值的行;用“0”、平均值或中位数替换丢失的数字标值。缺失值也可以使用基于特殊算法的其他非缺失值的变量来估算。实际的具体处理应根据值的实际含义和应用场景确定。

通过以上专业操作,数据中重复或者相同社区不同名字的社区单元被合并,同样社区的不同数字标签经过自动对比与整合,错误的标签和数据被校正,一些异常值经再度确认后将被删除,对重复数据进行去重复处理。缺失的数据按不同情况进行相关“数据插补”处理后,使数据基本达到机器学习的要求。

3.3 特征工程

作为数据预处理的重要组成部分,特征工程有助于构建后续的机器学习模型以及知识发现的关键窗口。特征工程是一种搜索相关特征的过程,该特征可以利用AI算法和专业知识来最大化机器学习的效率,还用作机器学习应用程序的基础。但是提取特征非常困难且耗时,并且该过程需要大量的专业知识。斯坦福大学教授Andrew Ng指出:“应用型机器学习的主要任务是特征工程学。”[34]

在这个宜居性机器学习模型中,已经研究了维度表局域各自的特征,以获得局部特征的排名。接下来对整个全域的维度表进行研究以获取全局特征的排名。局部特征排名能够了解局部各维度特征对宜居性的影响,从而有助于发现隐藏在大数据中的新知识。全局特征被用于为机器模型构建特定的算法,以达到最高的预测精度。

通过机器学习发现:人口维度、社会维度、经济维度、住房维度、基础设施维度以及土地利用与环境维度的局部特征对宜居性的局部影响权重排序各不相同(图8)。现有数据显示,土地利用与环境因素对宜居性的影响是最不均衡的。

在第一组维度(人口维度)中,宜居性影响因子权重排名最靠前的依次是社区婚姻状态、人口密度、25~44岁人口比率、带小孩家庭数量等人口特征。提示只有一定的人口密度才能形成宜居性,而青壮年人口、婚姻稳定状态、有孩子家庭数量对社区宜居性具有良性作用。

在第三组维度(经济维度)中,宜居性影响因子权重排名最靠前的依次是家庭平均年收入、每家拥有汽车平均数、家庭购买力等方面的指标。

在第四组维度(住房维度)中,宜居性影响因子权重排名最靠前的依次是政府廉租房比率、小区内买房自居家庭数、新建住宅数、房屋空置率等方面的指标。

在第五组维度(基础设施维度)中,社区内超市数量、学校托幼机构数量、医疗健康机构数量、健身设施数量、餐饮娱乐设施数量、这些设施的可及性对社区宜居性影响较大。

在第六组维度(土地利用与环境维度)中,城市化程度、公园、绿道、水体、交通设施以及到不同土地功能区的可达性对社区宜居性影响较大。

全局特征组已被应用来建立用于构建机器学习模型的特定算法,以获得最高的预测精度。在按全局变量影响因子排序的140个可收集变量中,只有第二组维度(社会维度)、第三组维度(经济维度)和第四组维度(住房维度)的变量出现在最具影响力因子前20名中。这表明,总体而言,社会、经济和住房方面对社区的宜居性具有更大的影响。通过对具体排名简化整合,以下综合因素出现在最具影响力因子前10名中,是影响社区宜居性的最具决定性因素:获得社会救济的人口比例、非西方移民比例、政府廉租住房比率、购买住房的平均市场价格、高收入人口比率、固定收入住户数量、新住房数量、总体犯罪率、户年均天然气消耗量以及户年均电力消耗量(图9)。

完成上述必要的数据扫描和研究工作后,我们将进入机器学习的最核心阶段:开发算法并优化模型。这个阶段的工作重点是获得最佳的预测结果。

4 研究结果

4.1 机器学习关键过程的生成

因为必须先标注数据以便指定预测目标从而进行学习,所以本机器学习实际上为监督式机器学习(supervised machine learning)。在进行机器学习前通过必要的数据扫描和研究,得到一个初步的数据评估结论。根据上述数据清理工程原理进行大量的数据清理,得到满足机器学习基本标准的数据。再通过上述数据特征工程得到综合简化后的10个最强影响因子作为预测因子参与后续机器学习,以便生成算法和建模。

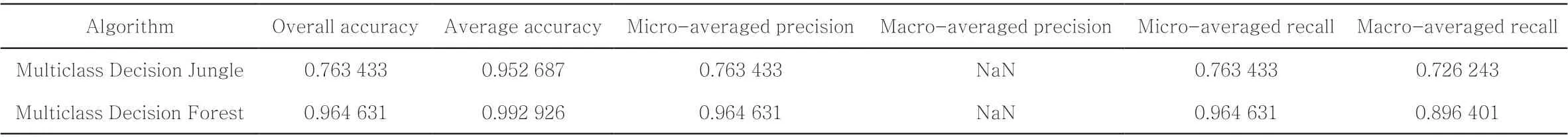

然后将上述数据集划分为训练数据集和测试数据集,划分比例为7∶3。将第一组数据集(训练数据)输入机器学习算法得到训练模型,对训练模型进行打分。将第二组数据集(测试数据)输入训练模型进行比较和评估。本机器学习目标是宜居性的不同等级,属于多分类问题机器学习,拟采取两组常用决策林算法进行比选优化:多类决策丛林算法和多类决策森林算法。这2种通用算法的工作原理都是构建多个决策树,然后对最常见的输出类进行投票。投票是一种聚合形式,在这种形式中,分类决策林中的每个树都输出标签的非规范化频率直方图。聚合过程对这些直方图求和并对结果进行规范化处理,以获取每个标签的“概率”。预测置信度较高的树在最终决定系综时具有更大的权重。通常,决策林是非参数模型,这意味着它们支持具有不同分布的数据。在每个树中,为每个类运行一系列简单测试,从而增加树结构的级别,直到到达叶节点(决策)能最佳满足预测目标。

该机器学习的决策森林分类器由决策树的集合组成。通常,与单个决策树相比,集成模型提供了更好的覆盖范围和准确性。通过后台代码将算法部署到云计算环境中运行,生成的该机器学习的具体工作流程如图10所示。在部署到云端之后,仍然需要在后台定期使用新输入的数据来改进算法,换言之,通过重新训练来更新和完善数据模型和算法。图11显示了机器学习的整个生命周期。

随后根据细过滤器滤料要求,从仓库调拨合适粒径的细石榴石,粗石榴石,无烟煤3种滤料,对细过滤器进行彻底的清罐防腐,对集水器分水器结构进行了检查并按照设计滤料厚度更换滤料。滤料更换完成后,注水系统恢复正常(图3)。

4.2 机器学习结果

从机器学习的背景中提取出两组算法的混淆矩阵(图12)。与多类决策丛林算法相比,多类决策森林算法具有更好的性能。多类决策丛林算法的主要错误是,易将宜居性 1~2级高估为3~4级,且对5~9级的预测不够准确。在多类决策森林算法中很少发生类似的错误,因此它具备更好的总体性能。另外,多类决策丛林算法总体预测准确率为76%,而多类决策森林算法总体预测准确率为96%,高于前者,所以决定在云端生产环境中部署多类决策森林算法(表2)。

表2 两种不同机器学习算法的预测性能比较Tab. 2 A comparison of predictive performances of two different machine learning algorithms

对全荷兰人居环境宜居性进行反复机器学习和预测后,可在全国地图上进行可视化和监测(图13)。其中颜色越偏绿的区域宜居性越高,越偏红的地方宜居性越低。该图显示预测宜居性高低分布全国相对均衡,在链型城市带(Randstad)和靠近德国东部地区的高宜居性区域相对比较集中。宜居性比较低的相对集中在近年围海造地形成的新省份弗莱福兰(Flevoland),可能是人口密度较低以及配套基础设施比较滞后造成的。

此外,该预测工具还能够对中观层面的城市群和微观层面的社区进行深入的研究和预测。如大鹿特丹地区和海牙地区的宜居性预测结果表明(图14),一些老城区的市区宜居性不高,而郊区的宜居性通常较高。特别是鹿特丹和代尔夫特交界处的北郊,以及海牙西北部的沿海地区,相对宜居且人口密集。

5 结论与展望

第四范式荷兰智慧城市宜居性预测研究的主要结果表明,基于可用大数据和必要的数据工程,由人工智能算法可直接推演得到最影响人居环境宜居性的十大主导要素简化后总结为:获得社会救济的人口比例、非西方移民比例、政府廉租住房比率、购买住房的平均市场价格、高收入人口比率、固定收入住户数量、新住房数量、总体犯罪率、户年均天然气消耗量以及户年均电力消耗量。此外,可以通过输入最新数据集和机器再学习改进模型来更新变量,以执行环境宜居性的实时预测。

这项研究的结果可以应用于宜居性分析的4个不同阶段(图15):宜居性描述研究、宜居性诊断研究、宜居性预测研究、宜居性预视研究。因此,它可以根据实时更新的大数据和经过重新训练的算法,对人类住区中的宜居性进行监测和早期干预。

将根据第四范式进行的本研究与前述传统范式的研究进行比较,发现不需要依赖传统人工智能系统的专家体系(expert system)或者专业研究人员的长期大量研究积累就能得到一些最有效的知识发现和高精度的预测模型。通过机器学习得到的宜居性研究结论,无论在主导要素、局域特征还是全局特征上,基本与RIVM与RIGO宜居性的相关定性研究结果相互吻合。此外,本研究能够以定量的方式对预测中最具决定性的因素进行快速排名,从而使科学研究更加高效、快捷,并且实现实时数据更新和预测。

本研究可用数据集中的土地利用与环境簇群依然偏少,导致其锥型图比较尖锐。这个不足之处需要在将来通过收集更多土地利用与环境相关变量执行强化学习,进行更多的知识发现,拓宽预测模型的观察视野。

另外,在收集和处理实时大量数据时需要更强大的数据收集与处理能力、计算能力和更复杂的运算环境。通过最新的5G、物联网和量子计算等新科技,将来研究可以收集更多、更复杂甚至非结构性实时数据扩展当前研究,从而具备更广阔的智慧城市运用前景。

图表来源:

图1引自参考文献[12];图2引自参考文献[27];图3、5、6、8~14由作者绘制;图4引自参考文献[31];图7引自参考文献[33];图15由作者根据Gartner概念绘制;表1~2由作者绘制。

(编辑/王一兰)

The Fourth Paradigm: A Research for the Predictive Model of Livability Based on Machine Learning for Smart City in The Netherlands

WU Jun

1 Research Background

1.1 The Fourth Industrial Revolution: Entering a New Age of Human-Orientated Artificial Intelligence

Since the mid-18th century, mankind has gone through three industrial revolutions. The first industrial revolution had ushered in the“steam age” (1760—1840), which marked our transition from agriculture to industry, and represented the first great miracle in the history of human development. The second industrial revolution had launched the“electric age” (1840—1950), which led to the rise of heavy industries such as electricity, steel, railways, chemicals and automobiles, with oil becoming a new energy source. It promoted the rapid development of transport and more frequent exchanges among countries around the world, and reshaped the global political and economic landscape. The third industrial revolution, which began after the two world wars, had initiated the“information age” (1950—present). As it becomes easier to carry out global exchanges of information and resources, most countries and regions are involved in the process of globalization and informatization, and human civilization has reached an unprecedented level of development.

On the 20th January of 2016, the World Economic Forum held its annual meeting in Davos, Switzerland, with a focus on the theme, “The fourth industrial revolution”, and officially heralded the arrival of the fourth industrial revolution that shall bring about a radical change to the global development process. In his address onThe Fourth Industrial Revolution, Professor Schwab, founder and executive chairman of the World Economic Forum, had elaborated on the profound impact of new technologies, such as implantable technology, genetic sequencing, Internet of Things(IoT), 3D printing, autonomous vehicle, artificial intelligence, robotics, quantum computing, blockchain, big data, and smart cities, on the human society in the“Age of Intelligence”. In this industrial revolution, big data will gradually replace oil as the first resource. The pace, scope, and extent of its development shall far exceed those of the previous three industrial revolutions. It shall rewrite the fate of mankind and make a great impact on the development of almost all traditional industries, including architecture, landscape and urban development[1].

1.2 The Fourth Paradigm: The Urgency of Exploring the New Paradigm of“Data-Intensive” Research

Our future and our lives are being changed by the data explosion brought about by the fourth industrial revolution. Over the last decades, there had been an exponential growth in the total volume, variety, veracity of data, as well as the velocity in data[2]. In 2013, the amount of electronic data generated worldwide had reached 46 billion terabytes, equivalent to about 400 trillion traditional printed reports, which could have paved the way from Earth to Pluto. In the past two years alone, we have generated more than 90% of the data worldwide[3]. Regarded as an important strategic resource in nations that are competing for innovation in the 21st century, big data is at the forefront of the next round of scientific and technological innovation that developed countries are scrambling to seize[4].

Despite the“New Moore’s Law” data explosion caused by the big data blowout, and the worldwide generation of 44 times more data in 2020 than in 2009[5], less than 1% of the information in the world can be analyzed and translated into new knowledge[6], due to the lack of a new research paradigm to respond to the data explosion. Anderson pointed out that apart from triggering a surge in quantity, data explosion also represents a mixture and explosion of data in terms of volume, variety, veracity, and velocity. He defined this hybrid explosion as a“data deluge” and argued that the“data deluge” would become a bottleneck for scientific researches in all existing disciplines before the emergence of a new research paradigm[7]1. Although we are already living in the“city of bits” as predicted by the late MIT professor William J. Mitchell in the field of urban and architectural design[8], we are still a distance away from the acquisition of“power of bits” in the urban research and design sector[9]. We must develop a new research paradigm to counter the strong impact of data deluges.

The conventional research methods and traditional paradigms are becoming increasingly inadequate. This has compelled some scientists to explore new research paradigms that are suitable for big data and artificial intelligence. Bell, Hey and Szalay warned that all fields of research shall have to face increasing data challenges, and the handling and analysis of“data deluge” are becoming increasingly onerous and vital for all researchers and scientists. As such, Bell, Hey and Szalay proposed the“fourth paradigm” to deal with the“data deluge”. They concurred that: since Newton’s Laws of Motion in the 17th century or earlier, scientists have recognized that experimental and theoretical sciences are two basic research paradigms for the understanding of nature. In recent decades, computer simulation has become an essential third paradigm and a new standard tool for scientists to explore hard-to-reach areas of theoretical research and experimentation. Nowadays, as an increasing amount of data is generated by simulations and experiments, the fourth paradigm is emerging, and this shall be the latest AI technology desired to perform data-intensive scientific research[10].

Halevy et al. pointed out that the“data deluge” highlighted the“knowledge bottleneck” in traditional AI. This indicates that the question of how to maximize the extraction of infinite knowledge with limited systems shall be solved and applied in many disciplines through big data and emerging machine learning algorithms brought forth by the fourth paradigm. This is in contrast with traditional paradigms that rely solely on pure mathematical modeling, empirical observation, and complex computation[11].

The scientists from the Silicon Valley, Gray, Hey, Tansley and Tolle, have summed up the key features of a data-intensive“fourth paradigm”, that is unlike earlier scientific paradigms, as follows(Fig. 1)[12]16-19: 1) Exploration of big data shall lead to an integration of existing theories, experiments, and simulations; 2) Big data can be captured by different IoT devices or generated by simulators; 3) Big data is processed by large parallel computing systems and complex programming to discover valuable information/new knowledge hidden in the big data; 4) Scientists shall obtain novel methodologies to acquire new knowledge through data management and statistical analysis of databases and mass research documents.

The differences between the fourth paradigm and the traditional paradigms in research purposes and approaches are mainly reflected in the following aspects. The traditional paradigms started with more or less a theoretical construction from“why” or“how”, and was later verified in more experimental observations of“what”. However, the fourth paradigm has the opposite effect. It initially starts with data-intensive“what” surveys, and then uses various algorithms to discover new knowledge and laws hidden in big data, which in turn become the“how” or“why” New theory. In his articleThe End of Theory: The Data Deluge Makes the Scientific Method Obsolete?, Anderson pointed out that, firstly, the fourth paradigm is not in a hurry to start theoretical construction from tedious experiments and simulations, or strict definitions, inferences, and assumptions. Instead, it starts with the collection and analysis of large and complex datasets[7]. Secondly, the valuable knowledge hidden in these huge, complex and intertwined datasets is hard to be processed with the traditional scientific research paradigms to discover new knowledge[12]17-19.

The exploration of urban and landscape research using the fourth paradigm is still in its infancy, both at home and abroad, with a myriad of methods and objectives available. Due to space limitations, this research has directly used some various open data integrated with the results from the governmental survey for livability in The Netherlands, focused on the“livability” as the predictive goal in the machine learning to introduce a systematic data-intensive research method to demonstrate the feasibility and prospects of the fourth paradigm in urban and landscape research.

2 Research Status and Objectives

2.1 Summary of the Relevant International Researches on Urban Livability with Traditional Paradigms

As an important indicator used to measure and assess the level of comfort and habitability in urban and landscape environments, livability has become a key point for the development of smart cities in recent years. Adam Beck, executive director of the Smart Cities Council, of Australia/New Zealand defines a smart city as, “The smart city is one that uses technology, data and intelligent design to enhance a city’s livability, workability and sustainability.”[13]

“Eudaimonia” (Livability) was first proposed in the West by Aristoteles, who defined it as“doing and living well”. For a long time, there had been no unified definition for livability. Instead, there are different implications and applications in different stages of urban development, in different regions, and in different disciplines. This has led to confusion and difficulty in implementing the concept of livability. Although livability lacks a unified and quantifiable measurement system, classical theoretical studies have attempted to explore relevant indicators of livability from the economic, social, political, geographical, and environmental dimensions over the last decades.

Balsas emphasized the decisive role of economic factors in livability. He argued that the foundation of livability is made up of factors such as high employment rates, affordability from a diverse population, economic development, living standards, and accessibility to education and employment[14]103. Litman also pointed out the significant impact of per capita GDP on livability. In addition, the accessibility of residents to transport, education, and public health facilities, as well as their economic power to afford such services, should be recognized as important indicators of livability[15]. Veenhoven’s research also found that the level of GDP development, social needs of the economy, and the purchasing power of residents were vital to the assessment of livability[16]2-3.

Mankiw criticized the use of economy as the only indicator to assess livability and argued that it was inadequate to measure livability solely using per capita GDP[17]. Rojas suggested that other dimensions, such as political, social, geographical, cultural, and environmental factors, should also be taken into account[18]. Furthermore, Veenhoven recommended political freedom, cultural atmosphere, social climate, and safety as additional indicators for the assessment of livability[16]7-8.

Van Vliet’s research showed that social integration, environmental cleanliness, safety, employment rate, and accessibility of infrastructures such as education and medical care had a direct impact on urban livability[19]. Balsa also conceded that factors other than the economy, such as completed infrastructure, adequate park facilities, community spirit, and public participation also played a positive role in urban livability[14]103.

Although the aforementioned scholars had proposed the political, social, economic and environmental foundations to evaluate livability, they have not recommended concrete indexes for the appraisal of urban livability. Goertz has tried to integrate the above elements by systematically evaluating livability with a holistic three-tiers approach and proposing relevant evaluation indicators for each sub-system. The first tier is composed of the types of livability, the second tier includes the framework of factors, while the third tier shows the variables of indicators[20](Tab. 1).

Tab. 1 The framework of macro livability factors and related measurement indexes based on Goertz’s three-tiers system

Through his summary and integration of classical livability, Goertz has made the qualitative research of livability feasible at the macro-level and some of the factors have indicated the direction for future quantitative research. However, he has failed to present the corresponding controllable variables in different urban systems at the meso-level. In response, Sofeska has proposed a framework of evaluation factors in urban systems at the mesolevel[21], including security and crime rates, political and economic stability, public tolerance, and business conditions; effective policies, access to goods and services, high national income and low personal risks; education, health and medical levels, demographic composition, longevity and birth rates; environment and recreation facilities, climatic environment, accessibility of natural areas; public transport, and internationalization. In particular, Sofeska hasemphasized the impact of building quality, urban design, and effectiveness of infrastructure on the livability of urban systems at the meso-level.

Apart from the nation’s political, economic, social, and environmental systems at the macrolevel, as well as urban systems at the meso-level, Giap et al. believed that“livability” should be a community concept at the micro-level. While carrying out the qualitative research of livability on the micro-community scale, he emphasized the micro impacts of the quality of urban life and the city’s physical environment on livability and regarded green infrastructure as the significant indicators[22]. Therefore, Giap et al. proposed the importance of qualitative research of livability on the community scale.

However, neither Goertz, Sofeska nor Giap have established a quantitative evaluation of index system and the corresponding method to predict community livability. At present, the quantitative classification survey of livability is mainly derived from two macro systems: Economist Intelligence Unit(EIU)’s Livability Index, which is based on six major indicators; and Mercer’s Quality of Living Survey, which is based on ten indicators. Nevertheless, these two systems provide only macrolevel tools to compare livability among different cities and are mainly based on economic indicators, hence they are not feasible in quantitative research and prediction at the micro-level of communities[23].

2.2 Summary of the Researches on Urban Livability in The Netherlands

In the Dutch dictionary, “leefbaarheid” (livability) is generally defined as: “suitable for living or coexistence” (“geschikt om erin of ermee te kunnen leven”). Therefore, livability in the Dutch context is actually a statement on the appropriate and interactive relationship between the subjects(living beings, individuals or communities) and the environment as the object[24].

In 1969, Groot described livability as the objective social security with the means to obtain a reasonable income and enjoy a reasonable life, thus fulfilling the social subjective understanding of demands for goods and services. This definition, which is in favor of economic and social objectives, implies the distinction between objective and subjective livability. The former involves tangible objective information(labor market, facilities, housing quality, etc.), while the latter relates to subjective ways that the actual situation is experienced by people[25]. Livability was brought into regional politics in the 1970s, when people realized that livability should not be centered on buildings. It was more important to consider the quality and not simply the quantity of our material lives. Vermeulen, a city councilor of Rotterdam, described the conversion of this social concept as, “You can figure out the size of your house with the number of bricks, but you can’t know its livability.” In 2002, the Social and Cultural Planning Office of The Netherlands provided the following description of livability: interactions between the physical space, social quality, community characteristics, and environmental security. In 2005, Van Dorst summarized three perspectives on livability: Remarkable livability is the optimal match between human and environment, the livability of the environment should be experienced in a peopleoriented approach, and livability should be inferred from a definable living environment[26].

Since 1998, the Dutch government has designed and distributed a large number of questionnaires to conduct livability surveys and gather the statistics in different parts of the country on a regular basis. The questionnaires mainly comprise livability scores(1-9), which reflect the people’s satisfaction with the living environment, green spaces, cultural and sports facilities, public space infrastructure, social environment, security and etc.

Simultaneously, the Dutch Ministry of Housing, Spatial Planning and the Environment(Ministerie van Volkshuisvesting, Ruimtelijke Ordening en Milieu, VROM) commissioned the Dutch Institute for Public Health and Environment(Rijksinstituut voor Volksgezondheid en Milieu, RIVM) and the RIGO Research Centre to conduct in-depth analysis based on the results of the questionnaires. After reviewing the literature on livability over the past 150 years from the perspectives of architecture, urban planning, sociology, and economics, RIVM and RIGO found that a wide range of differences and even divergences in the definition and research of livability had been present for a long time. Having studied relevant literature, the institutes believed that it was necessary to establish a theoretical framework for multi-disciplinary integration of livability beyond the differences across disciplines of current literature, so as to achieve breakthroughs in the research of livability. To this end, they proposed systematic screening of subjective assessment of livability, perceptions, and behaviors that correspond to the objective environment and relationships. They recommended studies on the influence of environment and people on the perception of environmental livability, longitudinal studies on the inter-disciplinary features of livability, as well as cross-cultural comparison of decisive factors in the assessment of livability, so as to identify the universal, basic, and relative elements based on time, location, and culture.

Based on results from the survey, this research identified the quality of life as a research hub to bridge subjective evaluation and objective environment. The quality of the environment, economy, and society at the community level is crucial in the evaluation of the quality of life. A total of 50 factors have been listed as indicators that can be used to evaluate the livability of Dutch communities. These factors have been divided into several clusters, such as living conditions, public spaces, environmental infrastructure, population composition, social conditions, and security(Fig. 2). They appealed the urgency of using big data and developing advanced AI forecasting tools to support urban design, decision-making in urban planning[27].

2.3 Research Objective and Framework

In response to the advent of the postindustrial society, Battey was the first to propose the concept of“smart city” in the 1990s. As big data was in its early stages at that time, Battey had only stressed the importance of Internet technology in the enhancement of information exchange and competitiveness of cities[28]. Due to its too extensive connotations and involvement in the entire urban system, it is difficult for smart cities to gain unified attention and acceptance. At present, a smart city framework with six sub-systems has been gradually accepted by many scholars, in which the smart citizen, smart environment, and smart life represent three important elements[29]. This conforms to the interactive relations among community residents, subjective livability, and objectively environmental quality in the key conclusions of the theoretical research of livability in The Netherlands.

As illustrated in the conclusions of the RIVM and the RIGO Research Centre, the classical analyses follow the results from the questionnaire. There are limitations in all studies using the traditional paradigms, and it makes no big difference whether or not they come from the questionnaire, observation, systematic theory, mathematical models, or statistical methods. Therefore, beyond the traditional studies, it is necessary to explore the new methodology using big data and developing advanced AI tools to create the basis for the integration of livability evaluation and prediction. The aforesaid big data challenges and opportunities from the fourth paradigm happen to provide an opportunity for this transit. The objective of this research is to develop such a novel dataintensive toolbox based on machine learning to monitor and even to predict livability in the Dutch settlement environment.

The new toolbox aims to maximize the application of all open-source data and develop referenced variables, after that it will transform, integrate and store the data through advanced data engineering and database technologies. Thereafter, the results of livability questionnaires shall be extracted as the target to be predicted in machine learning through the fourth paradigm. In the data warehouse the historical livability grades from the questionnaires are integrated with the most relevant variables at the same historical period, to build predictive models form the machine learning. The developed AI algorithms are able to predict future livability based on the new inputs of the most relevant variables. Consequently, it can be compared with those conclusions drawn from the traditional paradigms to identify the new knowledge discovered by the fourth paradigm. The new inputs can support the retraining in machine learning to improve the model as well. The big data based key research framework is summarized in Fig. 3.

3 Research Method and Process

Due to the big data explosion and the resulting fourth paradigm, the new prediction toolbox shall no longer rely on the existing and widely-used research paradigms. Instead, it shall firstly search for available open-source big data. After that, it will investigate the data via data-intensive machine learning and further build algorithms and prediction models. Thereafter, it may predict and even intervene to the livability of any community with the corresponding parameters in a scientific manner, thus laying a solid foundation for the evaluation and planning of livability in the smart city.

3.1 Data Engineering and Preliminary Data Modeling

The historical grades of community livability were obtained from RIVM and RIGO questionnaires, while all available variables on the population, economic, social, and environmental fields of these communities were obtained from the Dutch Central Bureau of Statistics(Het Centraal Bureau voor de Statistiek, CBS) and other open-source data at the same period. These two datasets are inner joined with each other by their postcodes correspondently. The derived data were used to form possible machine learning data sets. As the data came from different sources, in different formats, were large and disorganized, and had varying frequencies of real-time updates, they match the most typical features of big data, namely the“four V’s”: volume, variety, veracity, and velocity[30]. The necessary data engineering process must be carried out to meet the basic requirements for the data quality of machine learning.

Werner Karl Heisenberg, a pioneer in quantum mechanics, pointed out that, “It must be remembered that what we observe is not nature itself, but nature exposed to our questioning methods”. Consequently, the subjectively cognitive limitation of the traditional paradigm research is evident. The emergence of AI and big data undoubtedly lend a more objectively cognitive methodology, such as the fourth paradigm, to analyze the mysterious natural laws hidden behind the dynamic and complex data. Wolkenhauer summed up data engineering as the perfect combination of cognitive science and systems science, and called it the best practice for the matching of data and models in knowledge engineering(Fig. 4)[31].

According to Cuesta’s data engineering process[32], data acquisition, conversion, cleansing, modeling, and storage can be achieved through key steps such as data flow management, database design, data platform architecture, data pipeline construction, and data script throught computer language during the preprocessing of complex data sets. This complex process can be described using the most typical ETL process(Fig. 5).

After data from all different sources had been cleansed and normalized through the above complex processes, a suitable data model should be designed for the construction of the data warehouse(DWH). Data should be regularly stored in the data warehouse to facilitate in-depth analysis and timely machine learning. A star-schema data model is made based on the current data circumstances and with livability as the core predictive goal. In this relational data model, a fact table with livability grades from each community is found at the center and is associated with the dimensional tables of various domains through the primary and foreign keys. This data model has been designed with reference to the results from the aforesaid classical research based on the framework for livability factors classification(Tab. 1, Fig. 2). The six major dimensions are population dimension, social dimension, economic dimension, housing dimension, service facility dimension, land use and environment dimension(Fig. 6).

3.2 Process of Data Cleansing

In practice, source data collected and modeled through pre-data engineering are often highly disorganized, low in overall quality, and unsuitable for direct machine learning. Extensive data cleansing is required to meet the data quality input requirements for machine learning. While it is definitely not the“sexiest” part of machine learning, it represents one of the required courses for each professional data scientist. Furthermore, data cleansing can be a tough and exhausting“task” that takes up 50%-80% of the energy of a data scientist. It is known that“better data sets tend to outperform smarter algorithms”. In other words, a properly cleansed data set will deliver the deepest insight even with simple algorithms. When a large amount of impurities is present in your data“fuel”, even the best algorithm, i.e. “machine”, is of no help.

Given the importance of data cleansing, it is vital to understand the criteria of qualified data first. In general, qualified data should meet at least the following quality standards(Fig. 7)[33]. 1)Validity: The data must conform to valid constraints defined by the business rules or to the effective range of measures. They include constraints conforming to the data range, data uniqueness constraints, valid value constraints, and cross-field validation. 2)Accuracy: Degree of conformity to the measurements or standard, and to the true value, uniqueness, and nonrepetition. In general, it is sometimes necessary to verify values by accessing external data sources that contain true values as reference. 3)Integrity: Default or missing data and the complete distribution of data values across the ranges shall have a corresponding impact on the results of machine learning. If the system insists that certain fields should not be empty, it is possible to specify a value that represents“unknown” or“missing”. Merely providing the default value does not mean that the data is complete. 4)Consistency: The equivalences of a set of measurements throughout a system. Inconsistency occurs when two data items in a data set are contradictory. Data consistency includes consistency in data content, format, and unit.

It is apparent that different types of data require different types of cleansing algorithms. After evaluating the characteristics and initial quality of this livability data set for machine learning, the proposed data shall be cleansed using the following methods: 1) Delete unwanted or unrelated observation results. 2) Fix structural errors. Structural errors emerge during measurement, data transmission, or other“poor internal management” processes. 3) Check label errors of the data, i.e. initiate unified treatment of different labels with the same meanings. 4) Filter unwanted outliers. Outliers may cause problems in certain types of models. The learning model shall perform better if outliers have been deleted or replaced for good reason. However, this should be carried out with caution. 5) Carry out data deduplication to avoid the overfitting of machine learning. 6) Handle missing data is a challenging issue in machine learning. As most existing algorithms do not accept missing values, they have to be imputed through“Data Imputation” techniques such as: deleting the rows with missing values; replacing the missing numeric vale with 0, average or median values. Missing values can also be estimated using variables of other nonmissing values based on special algorithm. Actual specific treatment shall be determined according to the actual meanings and application scenarios of the values.

Application of the aforementioned professional operations shall result in the merging of community units with duplicate names or those with different names. Different digital labels of the same community are automatically compared and integrated. Error labels and data are corrected. Some outliers are deleted after reconfirmation, and repeated data are removed. After missing data has been processed according to the“Data Imputation”, the data shall basically meet the requirements of machine learning.

3.3 Feature Engineering

As an important part of data preprocessing, feature engineering helps to build the subsequent machine learning model, as well as a key window for knowledge discovery. Feature engineering is a process to search for relevant features that maximize the effectiveness of machine learning with AI algorithms and expertise. It also serves as the basis for machine learning application programs. However, it is very difficult and time-consuming to extract features, and the process requires a lot of expertise. Stanford professor Andrew Ng pointed out that, “‘Applied machine learning’ is basically feature engineering.”[34]

In this machine learning model for livability, the features of different domains of dimensional tables have been investigated to obtain the ranking of local features. This was followed by a research of the dimensional tables of the whole domains to acquire the ranking of global features. The local feature ranking allows the understanding of the impact of local features on livability, thus facilitating the discovery of new knowledge hidden in big data. Global features are applied to construct specific algorithms for machine models and they strive for the highest accuracy.

The machine learning discovered the influences from demographic dimension, social dimension, economic dimension, housing dimension, service facility dimension, as well as the land use& environmental dimension have very varied influences on livability(Fig. 8). In general, the impact of land use& environmental dimension appears the most uneven.

In the first set of dimensions(demographic dimension), the livability factors with the most weighted were the community marital status, the population density, the ratio of people aged 25-44 years, and the number of families with children(in this order). It suggested that only a certain population density was able to form livability, while the young and middle-aged population, stable marital status, and the number of families with children wield a positive effect on the livability of the community.

In the second set of dimensions(social dimension), the livability factors with the most weighted suggested that the proportion of population receiving social relief, non-western immigration ratio from Morocco, Turkey and Suriname, as well as community crime rates wield greater negative effects on the livability of the community.

In the third set of dimensions(economic dimension), the livability factors with the most weighted were the average annual household income, the average number of cars per home, and the household’s purchasing power(in this order).

In the fourth set of dimensions(housing dimension), the livability factors with the most weighted were the ratio of governmental social housing, the number of homeowners, the number of new housing, and the housing vacancy rate(in this order).

In the fifth set of dimensions(facilities dimension), factors such as the number of supermarkets in the community, the number of schools and childcare institutions, the number of health care institutions, the number of fitness facilities, the number of catering and entertainment facilities, and the distances to these facilities have a greater impact on the livability of the community.

In the sixth set of dimensions(land use dimension), the level of urbanization, the total amounts of parks, green corridors, water bodies, transportation facilities, and the distances to these facilities have a greater impact on the livability of the community.

The global features group has been applied to establish specific algorithms for the construction of the machine learning model, and it strives for the highest prediction accuracy. Among the 140 collectible variables that had been ranked by global variable impact factors, only the variables of the second set of dimensions(social dimension), the third set of dimensions(economic dimension), and fourth set of dimensions(housing dimension) have been ranked within the top 20 list. It suggested that as a whole, the social, economic and housing dimensions have a greater impact on the livability of the community. With regards to specific ranking, the following factors have been identified in the top 10 in this order after a simplification, thereby representing the most decisive factors affecting the livability of the community: the ratio of population receiving social relief, the ratio of non-western immigration, the ratio of government low-rent housing, the average market price of purchased housing for residence, the ratio of high-income people, the number of households with fixed incomes, the number of new houses, the overall crime rate, the annual consumption of natural gas, and the annual consumption of electricity(Fig. 9).

After completing the necessary workflows stated above, we shall move on to the core stage of machine learning to develop the algorithms and optimize the models, so as to obtain the best prediction results.

4 Research Results

4.1 Generation of Key Process of Machine Learning

As it is necessary to labelize data to specify the predicted target before machine learning, this research has chosen supervised machine learning. Prior to machine learning, a preliminary conclusion of data evaluation was obtained through necessary data scanning and research. According to the aforementioned principles of data cleansing engineering, large-scale data cleansing was carried out to obtain data that met the basic standard of machine learning. By adhering to the aforementioned principles of data feature engineering, the top 10 important factors were obtained and used as predictors in subsequent algorithm modeling in the machine learning.

Subsequently, the data sets were split into a training dataset and a test dataset, at a ratio of 7:3. The first dataset(training data) was entered into the machine learning algorithm to obtain the training model and the corresponding score. The second dataset(test data) was entered into the training model for comparison and evaluation. This is a type of multi-classification problem in machine learning and the goal is to obtain different grades of livability. Two groups of commonly used decision forest algorithms were planned for selection and optimization: Multiclass Decision Jungle and Multiclass Decision Forest. The two generic algorithms work by building multiple decision trees before voting on the most common output categories. The voting process serves as a form of aggregation, in which each tree in the classification decision forest outputs a non-normalized frequency histogram of the label. The aggregation process sums the histograms and normalizes the results to obtain the“probability” of each label. Trees with higher predictive confidence have greater weight in the final decision ensemble. In general, decision forests are nonparametric models, which means that they support data with different distributions. Within each tree, a series of simple tests were performed for each category, thus increasing the level of the tree structure until the leaf node(decision making) is reached, to best meet the predicted target.

The decision forest classifier of this machine learning is composed of the ensemble of the decision tree. In general, the ensemble models provide better coverage and accuracy, as compared with a single decision tree. Specific workflows of this machine learning through the back-ends codes running in the Cloud are shown in Fig. 10. Following the deployment to the cloud, the algorithm is still required to be improved with newly inputted data at the back-ends regularly, in another word, to update the data model and algorithm through retraining. The entire life cycle of machine learning is shown in Fig. 11.

4.2 Primary Results of Machine Learning

The confusion matrices of the two sets of algorithms can be extracted from the backends of machine learning(Fig. 12). The Multiclass Decision Forest algorithm had better performance as compared to the Multiclass Decision Jungle algorithm. The main errors in the Multiclass Decision Jungle algorithm were that some of the 1-2 grades of livability were overrated as the 3-4 grades. Meanwhile, the Multiclass Decision Jungle algorithm has a lower accuracy in predicting the livability grade 4-9. Similar errors had seldom occurred in the Multiclass Decision Forest, which thus provided better overall performance. In addition, the results showed that the overall prediction accuracy of Multiclass Decision Angle was 76%, and the overall prediction accuracy of Multiclass Decision Forest was 96%. Given the latter being higher than the former, the decision was made to deploy the Multiclass Decision Forest algorithm in the production environment in the Cloud(Tab. 2).

Tab. 2 A comparison of predictive performances of two different machine learning algorithms

Following the retraining of machine learning and prediction of livability in human settlements throughout The Netherlands, livability could be visualized and monitored on the national map(Fig. 13). The darker green areas are more livable, while the red areas are less livable. The figure shows a relatively balanced distribution of key predicted livable regions across the country, with relatively dense concentrations in the megalopolis(Randstad) and in some livable regions near eastern Germany. The relatively low livability regions are concentrated in the new province of Flevoland, which had been formed by land reclamation in recent years. It may be a result of low population density and poor infrastructure and services.

In addition, this prediction tool is able to perform in-depth research and local prediction of urban clusters at the meso-level and community blocks at the micro-level. Fig. 14 shows the prediction of livability in the Greater Rotterdam Area and the Greater Hague Area. The results indicated that livability in downtown areas of some old cities is not high, while that of the suburbs is generally higher. In particular, the northern suburbs of Rotterdam and the Delft junction, as well as the coastal areas of northwest Hague, are relatively livable and densely-concentrated.

5 Conclusion and Prospect

The primary results of this research on the prediction of the livability of human settlements by machine learning are generated from the fourth paradigm. It showed that the AI algorithm was able to directly deduce the top 10 factors that affect the livability of human settlements based on available data sources and necessary data engineering. These 10 factors were simplified as the ratio of population receiving social relief, the ratio of non-western immigration, the ratio of government low-rent housing, the average market price of purchased housing for residence, the ratio of high-income people, the number of households with fixed incomes, the number of new houses, the overall crime rate, the annual consumption of natural gas, and the annual consumption of electricity. Furthermore, the latest variables can be updated according to the latest datasets and the improved model in machine learning developed by retraining to perform live predictions of environmental livability.

The results of this research can be applied in the four stages of livability analysis(Fig. 15). It thereby leads to the monitoring, diagnosis, prediction, and early intervention of livability in human settlements based on timely updated big data and retrained algorithms.

By comparing this research, which was based on the fourth paradigm, with that of aforementioned traditional paradigms, we have found that effective knowledge discovery and high-accuracy prediction models could be obtained without relying heavily on the traditional AI expert system or the long-term studies by professional researchers. These dominant factors were basically consistent with the relevant qualitative research of RIVM and RIGO on livability, either locally or globally focused. Furthermore, the research was able to rank the most decisive factors in a quantitative manner for forecasting, thus allowing scientific researches to be more efficient, faster, foreseeable, and more live data-driven.

In this research, the land-use cluster in the available datasets was relatively small, hence resulting in a sharp cone diagram. This shortcoming should be overcome in future research by collecting more related variables of land-use, carrying out enhanced learning and broadening the observational horizons of the prediction model.

In addition, a greater amount of data, greater processing capabilities, greater computing power, and a more complex computing environment are expected to collect and process large amounts of real-time data. With new technologies, such as the latest 5G, IoT, and quantum computing, we shall be able to gather even more complex and unstructured real-time data to expand the current research in the future, so as to provide it with broader prospects for the applications in Smart City.

Sources of Figures and Tables:

Fig.1 © reference[12]; Fig. 2 © reference[27]; Fig. 3, 5, 6, 8-14 © Wu Jun; Fig. 4 © reference[31] ; Fig. 7 © reference[33]; Fig. 15 was drawn by Wu Jun according to Gartner concepts; Tab. 1-2 © Wu Jun.