UAV real-time monitoring for forest pest based on deep learning

Sun Yu1, Zhou Yan1, Yuan Mingshuai1, Liu Wenping1※, Luo Youqing2, Zong Shixiang2

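(1. School of Information Science and Technology, Beijing Forestry University, Beijing 100083, China; 2. School of Forestry, Beijing Forestry University, Beijing 100083, China)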

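Abstract: UAV remote sensing is an advanced technique for monitoring forest pests, but aerial images cannot yet be recognized fast enough to locate outbreak centers quickly or to track how an infestation develops. Targeting Chinese pine (Pinus tabuliformis) stands damaged by the red turpentine beetle (RTB), this paper proposes a real-time UAV monitoring method based on deep-learning object detection. The method trains a pruned SSD300 object detection framework that recognizes raw UAV aerial images directly, without orthorectification or mosaicking. The modified framework uses a depthwise separable convolutional network as the base feature extractor, removes prediction layers that do not match the target sizes in the aerial images, and optimizes the aspect ratios of the default boxes, which reduces the number of parameters and the amount of computation and speeds up detection. The best model selected in the experiments reaches a test average precision (AP) of 97.22%, and with graphics processing unit (GPU) acceleration on a mobile graphics workstation the detection time per aerial image drops to 0.46 s. The method simplifies the detection workflow for UAV aerial images, enables real-time detection and counting of damaged pines, and improves early warning of forest pest outbreaks.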

Keywords: unmanned aerial vehicle; monitoring; pests; object detection; deep learning

0 Introduction

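Forest resources play an important role in maintaining ecological balance and promoting economic development. Over the past decade, major forestry pests in China have affected more than 10 million hectares per year on average, causing average direct economic losses of more than 100 billion yuan per year [1-2]. Locating pest-infested areas and monitoring damage severity quickly and accurately for early warning has long been an important research topic for forestry experts in China and abroad.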

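With the continuing development of computer technology, modern remote sensing combined with computer analysis has been widely applied to forest monitoring. In aerospace remote sensing, the normalized difference vegetation index (NDVI) extracted from satellite or hyperspectral images [3-4] is commonly used to measure forest health. In addition, combining remote sensing with computer algorithms such as image analysis [5], pattern recognition [6-7] and machine learning [8-10] to monitor forest pests, classify damage levels and estimate forest mortality has become a research focus.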

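Compared with high-altitude remote sensing, UAVs as a low-altitude remote sensing tool offer flexible operation, freely scheduled image acquisition and low flight cost [11-13], so UAV remote sensing has become a hot topic in forest pest monitoring. Combined with deep learning, UAV remote sensing has achieved high-accuracy plant and weed recognition [14-16] and land-cover recognition [17-19], but it has not yet been applied to forest pest recognition. Current methods for recognizing forest pests in aerial images include: extracting color features from UAV RGB images [20] and statistically analyzing information such as canopy density and distribution; extracting infested areas from UAV aerial images with image segmentation algorithms [21-23]; and classifying vegetation species and damage levels in UAV hyperspectral images with object-oriented classification [24] or with machine learning classifiers such as nearest neighbor, support vector machine and random forest [25-27].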

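Monitoring forest pests with the techniques above takes three steps: cruise photography, preprocessing such as orthorectification and mosaicking or edge removal, and image recognition. Mosaicking and recognition can only start after the UAV has landed and are carried out at a second site with a workstation and professional software. They take far longer than the flight itself, which greatly lengthens the monitoring cycle, makes each field campaign poorly targeted, and keeps the number of sorties and the monitoring cost high. The window for UAV monitoring of RTB is only from late June to early September each year, when a new generation of larvae starts to damage Chinese pine stands. Existing methods are not fast enough to cover vast pine forests with a limited number of UAVs within this short window, so they cannot locate outbreak centers in time or track how the infestation develops. Moreover, UAV monitoring methods based on image analysis require consistent lighting and shadow conditions, and their accuracy depends on preprocessing. Existing UAV forest pest monitoring methods therefore suffer from low efficiency, high field cost and dependence on preprocessing, which limits the practical use of UAV remote sensing for early warning of forest pests.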

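Among current object detection frameworks, SSD (single shot multibox detector) [28] is a lightweight framework that is both real-time capable and accurate. On the COCO [29] dataset, SSD300 reaches a mAP (mean average precision) [30] of 41.2% at an IoU threshold of 0.5, comparable to the heavyweight region-proposal-based Faster R-CNN [31]. On an NVIDIA TITAN X GPU it runs at 59 fps (batch size 8), far faster than Faster R-CNN at 7 fps and even faster than the real-time detector YOLO [32] with a VGG16 backbone at 21 fps.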

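This paper takes Chinese pines damaged by RTB as the research object. To address the challenges of UAV-based forest pest monitoring, it further prunes the deep-learning-based SSD300 object detection framework and sets up a mobile graphics workstation at the UAV control site in the forest, so that the number and distribution of damaged pines can be monitored in real time.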

1 Materials

1.1 Study area

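The experimental site is located in Lingyuan, Liaoning Province, as shown in Fig.1. It contains six sample plots of 30 m×30 m each, with slopes of about 30°, and the dominant tree species is Chinese pine. RTB is one of the pests that require priority monitoring in this region.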

Fig.1 Location of the sample plots

1.2 Data collection

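The test objects are Chinese pines damaged by RTB. Data were collected in August 2017 with a DJI Inspire 2 quadcopter carrying a DJI X5S gimbal camera; the main parameters are listed in Table 1. The camera was fitted with an Olympus 25 mm F1.8 prime lens. Plots 1-6 were scanned at flight heights of 50-75 m. The aerial images are JPEG files with metadata such as geographic coordinates and flight height, at a resolution of 5 280×3 956 pixels.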

Table 1 Main parameters of the UAV and DJI X5S gimbal camera

1.3 Dataset construction

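Aerial images of the five plots 1 and 3-6 form the training set, and those of plot 2 form the test set. Plot 1 has 23 images, plot 4 has 11, and each of the other plots has 12. The dataset contains 82 images in total: 70 for training and 12 for testing. Images were annotated with an annotation tool; each annotation consists of the class and the coordinates of a rectangle enclosing a damaged pine. The annotations were made by the data collectors and then reviewed by forestry experts, and disputed cases were checked by ground survey to confirm whether the tree was damaged.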
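Because the split is made at plot level, no test image shares a plot with a training image. The counts above can be checked with a few lines of Python; the dictionary only restates the per-plot numbers given in this section, and `split_by_plot` is a hypothetical helper, not part of the authors' code.

```python
# Images per sample plot, as reported in Section 1.3
IMAGES_PER_PLOT = {1: 23, 2: 12, 3: 12, 4: 11, 5: 12, 6: 12}
TEST_PLOTS = {2}  # plot 2 is held out as the test set


def split_by_plot(images_per_plot, test_plots):
    """Return (train_count, test_count) for a plot-level train/test split."""
    train = sum(n for plot, n in images_per_plot.items() if plot not in test_plots)
    test = sum(n for plot, n in images_per_plot.items() if plot in test_plots)
    return train, test


train, test = split_by_plot(IMAGES_PER_PLOT, TEST_PLOTS)
print(train, test, train + test)  # 70 12 82
```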

2 Detection method

2.1 UAV real-time monitoring system

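The real-time UAV forest pest monitoring method consists of three parts: the aerial UAV, an Android UAV remote controller and a mobile graphics workstation. The monitoring process is shown in Fig.2. First, the UAV flies to fixed points, scans the infested forest and takes forest images of 5 280×3 956 pixels at intervals. Second, the Android client on the remote controller receives and stores the aerial images in real time; without orthorectification or mosaicking, each image is downscaled and cropped into 12 images of 300×300 pixels, which are sent as one batch to the mobile graphics workstation through the TensorFlow Serving system [33] to request detection of damaged pines. Third, the workstation runs the pruned SSD300 model and detects the damaged pines in the whole batch in parallel on the graphics processing unit (GPU).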
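The client-side preprocessing can be sketched in Python with NumPy. The paper states only that each photo is downscaled and cropped into twelve 300×300 images; the 4×3 grid (a 1 200×900 intermediate image, close to the 5 280×3 956 aspect ratio), the nearest-neighbour resampling and the use of TensorFlow Serving's REST row format (`{"instances": [...]}`, typically posted to `http://<host>:8501/v1/models/<model>:predict`) are assumptions, and `photo_to_batch` and `serving_payload` are hypothetical names.

```python
import json

import numpy as np

TILE = 300
GRID_COLS, GRID_ROWS = 4, 3  # assumed 4 x 3 grid -> 12 tiles per aerial photo


def resize_nearest(img, out_h, out_w):
    """Nearest-neighbour resize of an H x W x C array (NumPy only)."""
    h, w = img.shape[:2]
    rows = np.arange(out_h) * h // out_h
    cols = np.arange(out_w) * w // out_w
    return img[rows[:, None], cols[None, :]]


def photo_to_batch(img):
    """Downscale one aerial photo and cut it into 12 tiles of 300 x 300 pixels."""
    small = resize_nearest(img, GRID_ROWS * TILE, GRID_COLS * TILE)
    tiles = [
        small[r * TILE:(r + 1) * TILE, c * TILE:(c + 1) * TILE]
        for r in range(GRID_ROWS)
        for c in range(GRID_COLS)
    ]
    return np.stack(tiles)  # shape (12, 300, 300, C)


def serving_payload(batch):
    """JSON body for a TensorFlow Serving REST predict request (row format)."""
    return json.dumps({"instances": batch.tolist()})
```

Calling `photo_to_batch` on a `(3956, 5280, 3)` array returns a `(12, 300, 300, 3)` batch. On the actual Android client the same steps would be written in Java or Kotlin, and the gRPC interface of TensorFlow Serving avoids the large JSON body that the REST format produces.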

Fig.2 Architecture of the real-time UAV forest pest monitoring system

2.2 SSD object detection framework

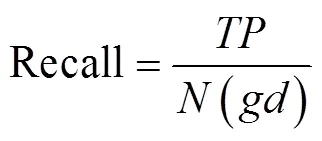

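SSD is a lightweight one-stage object detection method that uses a deep neural network as its feature extractor [28]. As shown in Fig.3a, the SSD300 framework in [28] uses VGG16 [34] as the base feature extractor and appends more abstract feature layers after VGG16; it then predicts object classes and locations using the default boxes on the multi-scale feature maps P1-P6 as anchors. Each cell of P1-P6 is associated with a group of default boxes: a square base box plus boxes with aspect ratios {2, 1/2, 3, 1/3}. The ratio of the base box's side length to that of the input image is the base scale of the group, and the base scales of the six layers are {0.1, 0.2, 0.37, 0.54, 0.71, 0.88}. Fig.3b shows examples of base boxes generated by feature maps of different scales. Fig.3c and Fig.3d show examples of default-box groups generated by feature maps P2 and P3: the white boxes are ground-truth boxes of damaged pines, and the black grids show the cells of P2 and P3, 19×19 and 10×10 respectively. The dashed boxes are a group of default boxes centered on the red center point of a cell, where the blue box is the base box and the yellow boxes are default boxes with the other aspect ratios. The default boxes generated by P2 and P3 have the highest IoU (intersection over union) with the ground-truth boxes.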
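The default-box layout described above can be reproduced with a short Python sketch. It follows the description in this section (one square base box plus the listed aspect ratios, with box width $s\sqrt{a}$ and height $s/\sqrt{a}$ relative to the image side); the extra square box of scale $\sqrt{s_k s_{k+1}}$ that the original SSD paper adds at each location is omitted because the text does not mention it. The listed base scales correspond to 0.1 for P1 followed by steps of 0.17 from 0.2 to 0.88. Function names are illustrative.

```python
import math

# Base scales of P1..P6 as listed in the text: 0.1, then 0.2 to 0.88 in steps of 0.17
SCALES = [0.1] + [round(0.2 + 0.17 * k, 2) for k in range(5)]


def default_boxes(fmap_size, scale, ratios=(1.0, 2.0, 0.5, 3.0, 1 / 3)):
    """Default boxes (cx, cy, w, h), normalised to [0, 1], for one square feature map.

    Ratio 1 is the square base box; every other ratio a gives w = s*sqrt(a) and
    h = s/sqrt(a), so all boxes of a group keep the base box's area s**2.
    """
    boxes = []
    for i in range(fmap_size):
        for j in range(fmap_size):
            cx, cy = (j + 0.5) / fmap_size, (i + 0.5) / fmap_size  # cell centre
            for a in ratios:
                boxes.append((cx, cy, scale * math.sqrt(a), scale / math.sqrt(a)))
    return boxes


def iou(box_a, box_b):
    """Intersection over union of two (cx, cy, w, h) boxes."""
    def corners(b):
        cx, cy, w, h = b
        return cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2

    ax0, ay0, ax1, ay1 = corners(box_a)
    bx0, by0, bx1, by1 = corners(box_b)
    iw = max(0.0, min(ax1, bx1) - max(ax0, bx0))
    ih = max(0.0, min(ay1, by1) - max(ay0, by0))
    inter = iw * ih
    union = box_a[2] * box_a[3] + box_b[2] * box_b[3] - inter
    return inter / union if union > 0 else 0.0
```

For example, `default_boxes(19, SCALES[1])` yields the 1 805 boxes of P2, and a crown box centred in the image with side 0.2 (60 pixels in a 300×300 input) matches the P2 base box of the central cell with an IoU of essentially 1.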

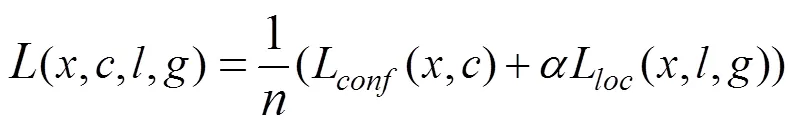

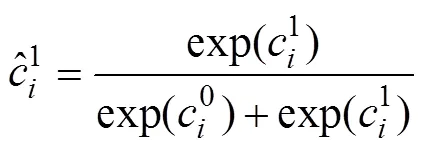

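The SSD loss function consists of a localization loss ($L_{loc}$) and a classification (confidence) loss ($L_{conf}$), and is defined as [28]

$$L(x,c,l,g)=\frac{1}{N}\left(L_{conf}(x,c)+\alpha L_{loc}(x,l,g)\right)$$

where $N$ is the number of default boxes matched to ground-truth boxes (the loss is set to 0 when $N=0$), $x$ indicates the matches between default boxes and ground-truth boxes, $c$ denotes the class confidences, $l$ and $g$ are the predicted and ground-truth boxes, and $\alpha$ weights the localization loss.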
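A simplified NumPy version of this loss, assuming default boxes have already been matched to ground truth, shows how the two terms combine. It uses softmax cross-entropy for $L_{conf}$ and smooth L1 on encoded box offsets for $L_{loc}$ as in [28], but it omits hard negative mining (the SSD paper keeps the ratio of negatives to positives at most 3:1), so every unmatched box contributes to $L_{conf}$. Function names and array layouts are illustrative.

```python
import numpy as np


def smooth_l1(x):
    """Element-wise smooth L1: 0.5*x**2 if |x| < 1, else |x| - 0.5."""
    ax = np.abs(x)
    return np.where(ax < 1.0, 0.5 * x ** 2, ax - 0.5)


def ssd_loss(cls_logits, cls_targets, loc_preds, loc_targets, alpha=1.0):
    """Simplified SSD loss (L_conf + alpha * L_loc) / N without hard negative mining.

    cls_logits:  (B, K) raw class scores for B default boxes, class 0 = background
    cls_targets: (B,) integer labels after matching (0 for unmatched boxes)
    loc_preds, loc_targets: (B, 4) encoded box offsets
    """
    positive = cls_targets > 0
    n = positive.sum()
    if n == 0:
        return 0.0  # SSD sets the loss to 0 when no box is matched
    # numerically stable log-softmax, then cross-entropy over all boxes
    shifted = cls_logits - cls_logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    l_conf = -log_probs[np.arange(len(cls_targets)), cls_targets].sum()
    # localisation loss only over matched (positive) default boxes
    l_loc = smooth_l1(loc_preds[positive] - loc_targets[positive]).sum()
    return float((l_conf + alpha * l_loc) / n)
```

With confident, correct class scores and exact offsets the loss is close to 0; shifting one coordinate of the single matched box by 1 adds 0.5 through the smooth L1 term.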

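Note: In Fig.3a, Conv denotes a convolutional layer, and s1 and s2 denote convolution strides of 1 and 2, respectively. In Fig.3c and Fig.3d, the white boxes are ground-truth boxes of damaged pines, and the black grids show the cells of feature maps P2 and P3. The dashed boxes are a group of default boxes centered on the red center point of a cell, where the blue box is the base box and the yellow boxes are default boxes with other aspect ratios.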

2.3 Damaged-pine detection framework based on depthwise separable convolution
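The saving from depthwise separable convolution can be checked with the cost model of MobileNets [36]: a standard $D_K\times D_K$ convolution from $M$ to $N$ channels on a $D_F\times D_F$ feature map costs $D_K^2 M N D_F^2$ multiply-adds, whereas a depthwise convolution followed by a 1×1 pointwise convolution costs $D_K^2 M D_F^2 + M N D_F^2$, a ratio of $1/N + 1/D_K^2$. The example layer below (3×3 kernel, 512 channels, 19×19 map) is chosen for illustration and is not taken from the paper's network.

```python
def standard_conv_madds(dk, m, n, df):
    """Multiply-adds of a standard dk x dk convolution, M -> N channels, on a df x df map."""
    return dk * dk * m * n * df * df


def separable_conv_madds(dk, m, n, df):
    """Depthwise (one dk x dk filter per input channel) plus pointwise (1 x 1, M -> N) cost."""
    depthwise = dk * dk * m * df * df
    pointwise = m * n * df * df
    return depthwise + pointwise


# Illustrative layer: 3 x 3 kernel, 512 -> 512 channels, 19 x 19 feature map
std = standard_conv_madds(3, 512, 512, 19)
sep = separable_conv_madds(3, 512, 512, 19)
print(sep / std, 1 / 512 + 1 / 9)  # both about 0.113, i.e. roughly 8.8x fewer operations
```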

2.4 Evaluation metrics

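The detection time per aerial image and the test average precision (AP) [30] for damaged pines are used as the metrics of detection speed and accuracy. AP is the area under the precision-recall curve, where precision $P$ and recall $R$ are defined as

$$P=\frac{TP}{TP+FP},\qquad R=\frac{TP}{TP+FN}$$

where $TP$ is the number of correctly detected damaged pines, $FP$ the number of false detections, and $FN$ the number of missed damaged pines.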
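The text does not give the matching criterion or the interpolation used for AP. The sketch below assumes the common Pascal VOC conventions [30]: a detection counts as a true positive if it matches a not-yet-matched ground-truth box (typically at IoU ≥ 0.5), and AP is the area under the precision envelope with all-point interpolation. Function names are hypothetical.

```python
def precision_recall(tp, fp, fn):
    """Precision = TP / (TP + FP), recall = TP / (TP + FN)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def average_precision(scores, is_tp, num_gt):
    """Area under the precision-recall curve with all-point interpolation.

    scores: confidence of each detection
    is_tp:  True if the detection matched a not-yet-matched ground-truth box
    num_gt: number of ground-truth boxes (damaged pines) in the test set
    """
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    recalls, precisions = [], []
    for i in order:  # sweep the confidence threshold from high to low
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    # pad the curve and make precision non-increasing in recall (precision envelope)
    mrec = [0.0] + recalls + [1.0]
    mpre = [0.0] + precisions + [0.0]
    for k in range(len(mpre) - 2, -1, -1):
        mpre[k] = max(mpre[k], mpre[k + 1])
    # sum rectangle areas wherever recall increases
    return sum((mrec[k + 1] - mrec[k]) * mpre[k + 1] for k in range(len(mrec) - 1))
```

With three detections scored 0.9, 0.8 and 0.7, of which the first and third are correct, and two ground-truth pines, `average_precision` returns 5/6 ≈ 0.833. Applying a confidence threshold, such as the 0.6 used in Section 3.4, before counting TP and FP gives a single operating point on this curve.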

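Note: In Fig.4a, dw denotes depthwise convolution and pw denotes pointwise convolution; in Fig.4b, D denotes the resolution of the feature maps and filters, and M and N are numbers of channels.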

3 Results and analysis

3.1 Model training and deployment

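The deep learning server used for training runs Ubuntu 16.04 LTS (64-bit) with the open-source TensorFlow [37] framework, and is equipped with an AMD Ryzen 7 1700X CPU (64 GB RAM) and an NVIDIA TITAN Xp GPU (12 GB memory). Training uses stochastic gradient descent with momentum 0.9, an initial learning rate of 0.001, a regularization coefficient of 0.000 04 and a batch size of 16 images, for 100 000 iterations; the learning rate is multiplied by 0.1 every 35 000 iterations. Data augmentation consists of random horizontal flipping and random cropping.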
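The optimizer settings above amount to a step-decay learning-rate schedule. The plain-Python sketch below shows that schedule and the box update needed for random horizontal flipping, with boxes assumed to be normalized `(xmin, ymin, xmax, ymax)`; it is not the authors' TensorFlow configuration, and the momentum (0.9) and the 0.000 04 regularization coefficient would be set in the optimizer and loss, which are not shown.

```python
BASE_LR = 0.001        # initial learning rate
DECAY_FACTOR = 0.1     # multiply the rate by 0.1 ...
DECAY_STEPS = 35_000   # ... every 35 000 iterations
TOTAL_STEPS = 100_000  # total training iterations


def learning_rate(step):
    """Step-decay schedule described in Section 3.1."""
    return BASE_LR * DECAY_FACTOR ** (step // DECAY_STEPS)


def hflip_boxes(boxes):
    """Mirror normalised (xmin, ymin, xmax, ymax) boxes for a horizontally flipped image."""
    return [(1.0 - xmax, ymin, 1.0 - xmin, ymax) for xmin, ymin, xmax, ymax in boxes]


for step in (0, 34_999, 35_000, 70_000, TOTAL_STEPS - 1):
    print(step, learning_rate(step))
```

Over 100 000 iterations the rate is therefore 0.001, then 0.000 1 from iteration 35 000, then 0.000 01 from iteration 70 000 to the end. Flipping swaps and mirrors the x coordinates while leaving y unchanged.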

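After training, the computation graph was simplified and its variables were converted to constants (frozen), and the model was deployed on a Huoying Yingren Z5 (火影影刃Z5) mobile graphics workstation with an Intel i7-8750H CPU (16 GB RAM) and an NVIDIA GTX 1050Ti GPU (4 GB memory).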

3.2 Effect of the base feature extractor on detection speed and accuracy

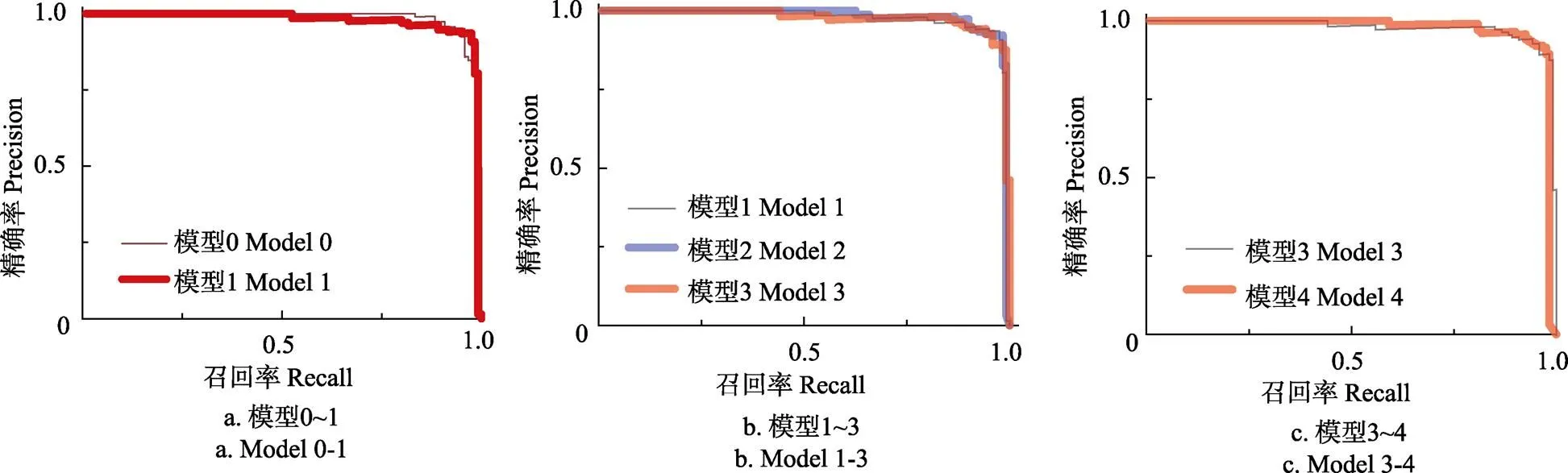

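The base feature extractor is one of the factors that determine detection speed. Table 2 lists the test time and test AP of the different detection frameworks. Model 1, which uses a depthwise separable convolutional network as the base feature extractor, has about 528 MB fewer parameters than the VGG16-based SSD300 framework (model 0), and its single-image detection time is about 4 s shorter. As the PR curves in Fig.5a show, model 0 reaches an AP of 98.70%, and model 1 is only 1.01 percentage points lower. Changing the base feature extractor therefore has little effect on accuracy but greatly increases detection speed.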

3.3 Pruning the SSD300 prediction module

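Pruning the prediction module of SSD300 further speeds up detection. As shown in Table 2, models 3 and 4, whose prediction modules keep only P2 and P3, have fewer parameters than model 1 (complete prediction module) and model 2 (P2-P4 kept), and their detection time drops to 0.46 s. Fig.5b shows that the accuracy of model 3 is almost the same as that of model 1. Model 4, which additionally removes default-box aspect ratios from each prediction layer, is as fast as model 3; both are the fastest models at 0.46 s. As shown in Fig.5c, the AP of model 4 is only 0.68 percentage points lower than that of model 3, while its parameter size falls further to 18.8 MB. The experiments show that, for this dataset, model 4, which keeps only the P2 and P3 layers of the SSD300 prediction module and only the default-box aspect ratios suited to the detection targets, reaches an AP of 97.22%, only 1.48 percentage points below the original model. It reduces the parameter size and maximizes detection speed while preserving accuracy.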
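The effect of pruning on the number of default boxes can be estimated with simple arithmetic. The sketch assumes that P2 and P3 keep the 19×19 and 10×10 grids shown in Fig.3 after the base network is replaced, and counts the square base box plus {2, 1/2, 3, 1/3} as five boxes per cell, following the description in Section 2.2; `count_default_boxes` is a hypothetical helper.

```python
def count_default_boxes(fmap_sizes, boxes_per_cell):
    """Total default boxes for square feature maps with a fixed number of boxes per cell."""
    return sum(s * s * boxes_per_cell for s in fmap_sizes)


P2_P3 = (19, 10)  # grid sizes of P2 and P3 as given for Fig.3 (assumed unchanged)
five_ratios = count_default_boxes(P2_P3, 5)   # square base box + {2, 1/2, 3, 1/3}
three_ratios = count_default_boxes(P2_P3, 3)  # {1, 2, 1/2}, as kept in model 4
print(five_ratios, three_ratios)  # 2305 1383
```

Keeping only {1, 2, 1/2} cuts the boxes on P2 and P3 from 2 305 to 1 383, and each prediction layer needs correspondingly fewer output channels per cell (boxes × (classes + 4)).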

Table 2 Single-image detection time and test AP of different detection frameworks

Fig.5 Precision-Recall curves of models 0-4 on the test set

3.4 Detection results and analysis of typical errors

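As Table 2 shows, compared with the heavyweight two-stage framework Faster R-CNN, model 4 has only 10.85% of its parameters and needs only 7.32% of its single-image detection time, while its test AP is comparable, only 0.69 percentage points lower. With a classification confidence threshold of 0.6, model 4 achieves a precision of 98.04% and a recall of 83.33%. An image taken from 75 m covers a ground area of 38.18 m×50.95 m; at an economical cruising speed of 15 m/s, the UAV needs 3.4 s to reach the next non-overlapping shooting point, whereas the system needs only 0.46 s to process one batch, so forest pests can be monitored in real time.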
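The real-time argument compares the flight time to the next shooting point with the detection time. The sketch restates the figures in this section and assumes the UAV flies along the 50.95 m side of the footprint, which reproduces the stated 3.4 s; the constant and function names are illustrative.

```python
FOOTPRINT_ALONG_TRACK_M = 50.95  # ground coverage of the long image side at 75 m
SPEED_M_PER_S = 15.0             # economical cruising speed
DETECTION_TIME_S = 0.46          # one batch (one aerial photo) on the GTX 1050Ti


def time_to_next_shot(footprint_m, speed_m_s):
    """Seconds needed to fly to the next non-overlapping shooting point."""
    return footprint_m / speed_m_s


interval = time_to_next_shot(FOOTPRINT_ALONG_TRACK_M, SPEED_M_PER_S)
slack = interval - DETECTION_TIME_S
print(f"{interval:.1f} s per shot, {slack:.1f} s of slack")  # 3.4 s per shot, 2.9 s of slack
```

Even if the UAV flew along the shorter 38.18 m side, the interval would be about 2.5 s, still several times the 0.46 s detection time.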

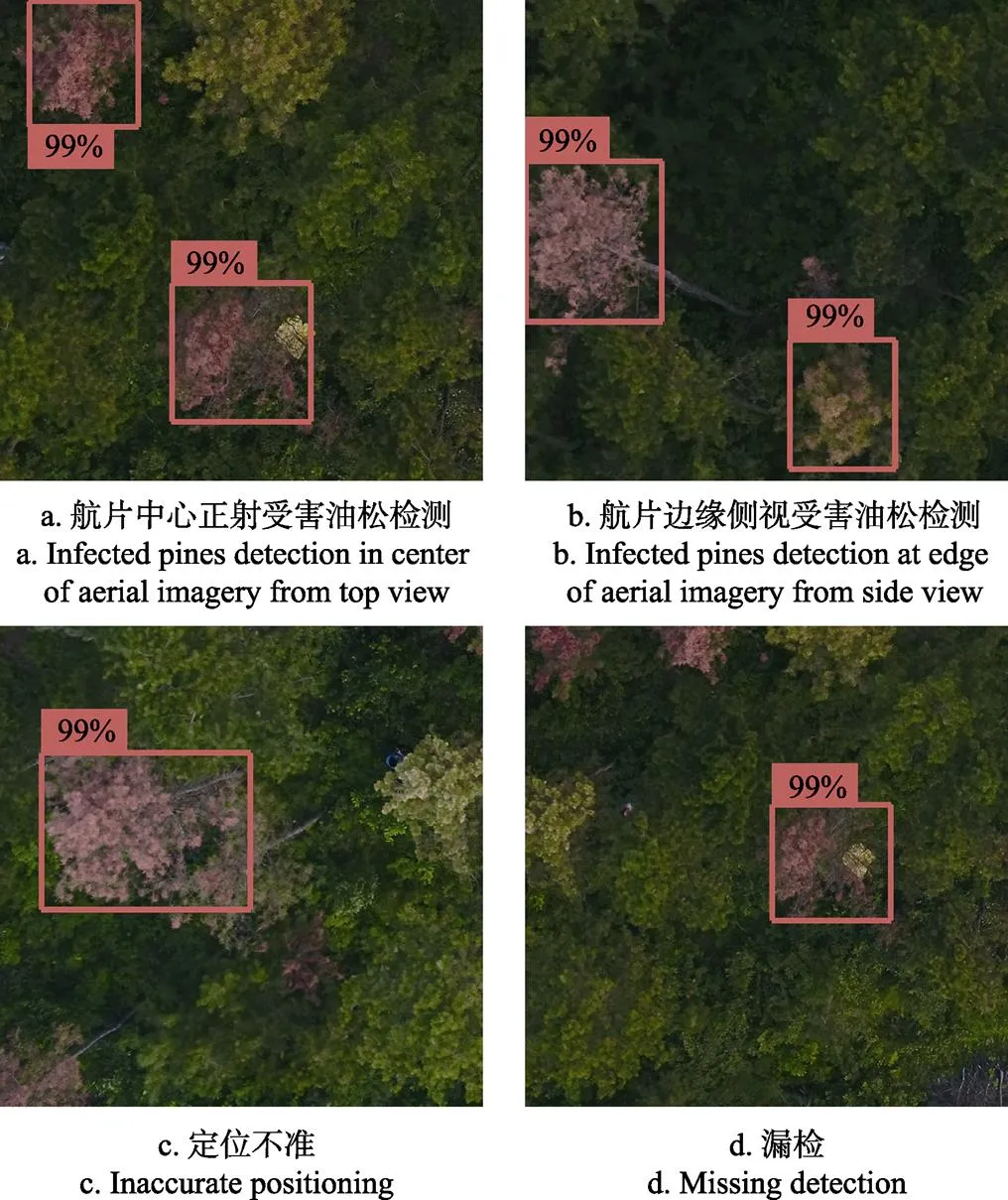

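Because the images are not orthorectified, pines on slopes near the image edges are seen obliquely, and the images also contain lens distortion. Fig.6a and Fig.6b show the detection results for a damaged pine seen from directly above at the image center and for one seen obliquely at the image edge, respectively. The proposed method detects the crowns of damaged pines viewed from above accurately and is also robust to obliquely viewed crowns on slopes near the image edges.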

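The main factors that reduce detection accuracy for damaged pines are: 1) where Chinese pines grow densely, several trees are merged into one detection, so the box is poorly localized, as shown in Fig.6c; 2) incomplete damaged pines at the image edges are hard to detect and are missed, as shown in Fig.6d.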

Fig.6 Examples of detection results on typical test samples

4 Conclusions

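To address the low efficiency, high field cost and dependence on preprocessing of traditional aerial image recognition, this paper uses deep learning to propose a real-time UAV monitoring method for RTB. Building on the SSD300 object detection framework, it uses a depthwise separable convolutional network as the base feature extractor, prunes the prediction module to P2 and P3, and keeps only the default-box aspect ratios {1, 2, 1/2}. The results show that, without preprocessing such as mosaicking, orthorectification or edge removal, the AP for damaged pines reaches 97.22%, only 1.48 percentage points below the original model, while the parameter size falls from 550.1 MB to 18.8 MB. With GPU acceleration on a mobile graphics workstation, the detection time per image is only 0.46 s, which realizes real-time UAV monitoring of damaged pines. The method simplifies the detection workflow for UAV aerial images, makes each field campaign better targeted, locates outbreak centers and tracks infestation development in time, and meets the timeliness requirements of early warning of forest pests.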

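The current work performs only single-class detection of damaged pines and does not classify their damage levels; fine-grained forest pest monitoring will be explored in future work.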

[1] Liu Ping, Liang Qianling, Chen Meng, et al. Construction on index system of forest pest disaster loss assessment[J]. Scientia Silvae Sinicae, 2016, 52(6): 101-107. (in Chinese with English abstract)

[2] Shi Jieqing, Feng Zhongke, Liu Jincheng. Design and experiment of high precision forest resource investigation system based on UAV remote sensing images[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2017, 33(11): 82-90. (in Chinese with English abstract)

[3] Gooshbor L, Bavaghar M P, Amanollahi J, et al. Monitoring infestations of oak forests by Tortrix viridana (Lepidoptera: Tortricidae) using remote sensing[J]. Plant Protection Science, 2016, 52(4): 270-276.

[4] Olsson P O, Lindström J, Eklundh L. Near real-time monitoring of insect induced defoliation in subalpine birch forests with MODIS derived NDVI[J]. Remote Sensing of Environment, 2016, 181: 42-53.

[5] Patil J K, Kumar R. Advances in image processing for detection of plant diseases[J]. Journal of Advanced Bioinformatics Applications and Research, 2011, 2(2): 135-141.

[6] Liaghat S, Ehsani R, Mansor S, et al. Early detection of basal stem rot disease (Ganoderma) in oil palms based on hyperspectral reflectance data using pattern recognition algorithms[J]. International Journal of Remote Sensing, 2014, 35(10): 3427-3439.

[7] Poona N K, Ismail R. Discriminating the occurrence of pitch canker fungus in Pinus radiata trees using QuickBird imagery and artificial neural networks[J]. Journal of the South African Forestry Association, 2013, 75(1): 29-40.

[8] Zhao Yu, Wang Hong, Zhang Zhenzhen. Forest healthy classification of Robinia pseudoacacia in the Yellow River Delta, China based on spectral and spatial remote sensing variables using random forest[J]. Remote Sensing Technology and Application, 2016, 31(2): 359-367. (in Chinese with English abstract)

[9] Belgiu M, Drăguţ L. Random forest in remote sensing: A review of applications and future directions[J]. ISPRS Journal of Photogrammetry and Remote Sensing, 2016, 114: 24-31.

[10] Omer G, Mutanga O, Abdel-Rahman E M, et al. Performance of support vector machines and artificial neural network for mapping endangered tree species using WorldView-2 data in Dukuduku Forest, South Africa[J]. IEEE Journal of Selected Topics in Applied Earth Observations & Remote Sensing, 2016, 8(10): 4825-4840.

[11] Pajares G. Overview and current status of remote sensing applications based on unmanned aerial vehicles (UAVs)[J]. Photogrammetric Engineering & Remote Sensing, 2015, 81(4): 281-329.

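[12] Fu Zhuoxin, Yao Rui. A brief discussion on the application of UAV remote sensing in forest resource surveys[J]. South China Agriculture, 2015, 9(36): 246, 248. (in Chinese)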

[13] Dash J P, Watt M S, Pearse G D, et al. Assessing very high resolution UAV imagery for monitoring forest health during a simulated disease outbreak[J]. ISPRS Journal of Photogrammetry and Remote Sensing, 2017, 131: 1-14.

[14] Fan Zhun, Lu Jiewei, Gong Maoguo, et al. Automatic tobacco plant detection in UAV images via deep neural networks[J]. IEEE Journal of Selected Topics in Applied Earth Observations & Remote Sensing, 2018, 11(3): 876-887.

[15] Ribera J, Chen Y, Boomsma C, et al. Counting plants using deep learning[C]// IEEE Global Conference on Signal and Information Processing. 2017: 1344-1348.

[16] Li Liujun, Fan Youheng, Huang Xiaoyun, et al. Real-time UAV weed scout for selective weed control by adaptive robust control and machine learning algorithm[C]// Orlando: American Society of Agricultural and Biological Engineers, 2016.

[17] Sun Yu, Han Jingye, Chen Zhibo, et al. Monitoring method for UAV image of greenhouse and plastic-mulched landcover based on deep learning[J]. Transactions of the Chinese Society for Agricultural Machinery, 2018, 49(2): 133-140. (in Chinese with English abstract)

[18] Dang Yu, Zhang Jixian, Deng Kazhong, et al. Study on the evaluation of land cover classification using remote sensing images based on AlexNet[J]. Journal of Geo-information Science, 2017, 19(11): 1530-1537. (in Chinese with English abstract)

[19] Jin Yongtao, Yang Xiufeng, Gao Tao, et al. The typical object extraction method based on object-oriented and deep learning[J]. Remote Sensing for Land and Resources, 2018, 30(1): 22-29. (in Chinese with English abstract)

[20] Chianucci F, Disperati L, Guzzi D, et al. Estimation of canopy attributes in beech forests using true colour digital images from a small fixed-wing UAV[J]. International Journal of Applied Earth Observation & Geoinformation, 2016, 47: 60-68.

[21] Liu Wenping, Fei Yunqiao, Wu Lan. A marker-based watershed algorithm using fractional calculus for unmanned aerial vehicle image segmentation[J]. Journal of Information & Computational Science, 2015, 12(14): 5327-5338.

[22] Zhong Tingyu, Liu Wenping, Luo Youqing, et al. A new type-2 fuzzy algorithm for unmanned aerial vehicle image segmentation[J]. International Journal of Multimedia & Ubiquitous Engineering, 2017, 12(5): 75-90.

[23] Zhang Junguo, Feng Wenzhao, Hu Chunhe, et al. Image segmentation method for forestry unmanned aerial vehicle pest monitoring based on composite gradient watershed algorithm[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2017, 33(14): 93-99. (in Chinese with English abstract)

[24] Chenari A, Erfanifard Y, Dehghani M, et al. Woodland mapping at single-tree levels using object-oriented classification of unmanned aerial vehicle (UAV) images[J]. International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, 2017, 42: 43-49.

[25] Hung C, Zhe X, Sukkarieh S. Feature learning based approach for weed classification using high resolution aerial images from a digital camera mounted on a UAV[J]. Remote Sensing, 2014, 6(12): 12037-12054.

[26] Yuan Huanhuan, Yang Guijun, Li Changchun, et al. Retrieving soybean leaf area index from unmanned aerial vehicle hyperspectral remote sensing: Analysis of RF, ANN, and SVM regression models[J]. Remote Sensing, 2017, 9(4): 309.

[27] Cao Jingjing, Leng Wanchun, Liu Kai, et al. Object-based mangrove species classification using unmanned aerial vehicle hyperspectral images and digital surface models[J]. Remote Sensing, 2018, 10(1): 89.

[28] Liu Wei, Anguelov D, Erhan D, et al. SSD: Single shot multibox detector[C]// European Conference on Computer Vision. 2016: 21-37.

[29] Lin Tsung Yi, Maire M, Belongie S, et al. Microsoft COCO: Common objects in context[C]// European Conference on Computer Vision. Springer International Publishing, 2014, 8693: 740-755.

[30] Everingham M, Eslami S M A, Gool L V, et al. The pascal visual object classes challenge: A retrospective[J]. International Journal of Computer Vision, 2015, 111(1): 98-136.

[31] Ren S, He K, Girshick R, et al. Faster R-CNN: towards real-time object detection with region proposal networks[C]// International Conference on Neural Information Processing Systems. 2015: 91-99.

[32] Redmon J, Divvala S, Girshick R, et al. You only look once: Unified, real-time object detection[C]// Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2016: 779-788.

[33] Olston C, Fiedel N, Gorovoy K, et al. Tensorflow-serving: Flexible, high-performance ML serving [C]//31st Conference on Neural Information Processing Systems. 2017.

[34] Simonyan K, Zisserman A. Very Deep Convolutional Networks for Large-Scale Image Recognition [J/OL]. 2015, https://arxiv.org/abs/1409.1556.

[35] Girshick R. Fast R-CNN[C]// Computer Vision (ICCV), 2015 IEEE International Conference on. IEEE, 2015: 1440-1448.

[36] Howard A G, Zhu M, Chen B, et al. Mobilenets: Efficient convolutional neural networks for mobile vision applications [J/OL]. 2017, https://arxiv.org/abs/1704.04861.

[37] Abadi M, Barham P, Chen J, et al. TensorFlow: A System for Large-Scale Machine Learning[C]// 12th USENIX Symposium on Operating Systems Design and Implementation, 2016: 265-283.

UAV real-time monitoring for forest pest based on deep learning

Sun Yu1, Zhou Yan1, Yuan Mingshuai1, Liu Wenping1※, Luo Youqing2, Zong Shixiang2

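(1. School of Information Science and Technology, Beijing Forestry University, Beijing 100083, China; 2. School of Forestry, Beijing Forestry University, Beijing 100083, China)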

Abstract: Unmanned aerial vehicle (UAV) remote sensing, featuring low cost and flexibility, offers a promising solution for pest monitoring by acquiring high-resolution forest imagery, so a UAV-based forest pest monitoring system is essential for early warning of red turpentine beetle (RTB) outbreaks. However, UAV monitoring methods based on image analysis are inefficient and depend on preprocessing, which hinders the practical application of UAV remote sensing. Because of their long processing flow, traditional methods cannot locate the outbreak center or track the development of an infestation in time. The RTB is a major invasive forest pest that damages pine species in northern China. This paper focuses on the detection of pines infested by RTB and proposes a deep-learning-based real-time forest pest monitoring method for UAV forest imagery. The method consists of three steps: 1) a UAV equipped with a prime-lens camera scans the infested forest and collects images at fixed points; 2) the Android client on the UAV remote controller receives the images and, in real time, requests infested-tree detection from a mobile graphics workstation through TensorFlow Serving; 3) the mobile graphics workstation runs a tailored SSD300 (single shot multibox detector) model with graphics processing unit (GPU) parallel acceleration to detect infested trees without orthorectification or image mosaicking. Compared with Faster R-CNN and other two-stage object detection frameworks, SSD, as a lightweight framework, is real-time capable and accurate. The original SSD300 framework uses a truncated VGG16 as the base feature extractor and a 6-layer prediction module (P1-P6) to detect objects of different sizes. The tailored SSD300 framework modifies it in two ways.
First, a 13-layer depthwise separable convolutional network is used as the base feature extractor, which needs several times less computation than the standard convolutions in VGG16. Second, most of the loss comes from positive default boxes, and because of the crown size, UAV flight height and lens focal length, these boxes are concentrated in P2 and P3. The tailored SSD300 therefore keeps only P2 and P3 in the prediction module and deletes the other prediction layers to further reduce computation. In addition, the aspect ratios of the default boxes are set to {1, 2, 1/2}, since crowns have an aspect ratio of approximately 1. UAV imagery was collected over 6 experimental plots at heights of 50-75 m. The photos of plot No.2 form the test set and the rest form the training set: 82 aerial photos in total, 70 for training and 12 for testing. The AP and run time of five models were evaluated. The average precision (AP) of the tailored SSD300 model reaches 97.22%, only 1.48 percentage points lower than that of the original SSD300, while its parameter size is only 18.8 MB, more than 530 MB smaller than the original model. Its run time is 0.46 s on a mobile workstation with an NVIDIA GTX 1050Ti GPU, whereas the original model needs 4.56 s. The experimental results show that downsizing the base feature extractor and the prediction module speeds up detection with little impact on AP. An aerial photo taken at 75 m covers at most 38.18 m×50.95 m; at a horizontal speed of 15 m/s, the UAV needs 3.4 s to move to the next non-overlapping shooting point, longer than the detection time. The proposed method therefore simplifies the detection workflow of UAV monitoring and achieves real-time detection of RTB-damaged pines, providing a practical solution for early warning of RTB outbreaks.

Keywords: unmanned aerial vehicle; monitoring; pests; object detection; deep learning

doi: 10.11975/j.issn.1002-6819.2018.21.009

CLC number: TP391.41

Document code: A

Article ID: 1002-6819(2018)-21-0074-08

Received date: 2018-06-13

Revised date: 2018-09-10

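Foundation item: Beijing Science and Technology Plan project "Research and demonstration of prevention and control technologies for major wood-boring pests affecting the ecological security of Beijing" (Z171100001417005)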

Biography: Sun Yu, associate professor, research interests: forestry Internet of Things and artificial intelligence. Email: sunyv@bjfu.edu.cn

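Corresponding author: Liu Wenping, professor, doctoral supervisor, research interests: computer image and video analysis and processing, pattern recognition and artificial intelligence. Email: wendyl@vip.163.com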

Citation: Sun Yu, Zhou Yan, Yuan Mingshuai, Liu Wenping, Luo Youqing, Zong Shixiang. UAV real-time monitoring for forest pest based on deep learning[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2018, 34(21): 74-81. (in Chinese with English abstract) doi:10.11975/j.issn.1002-6819.2018.21.009 http://www.tcsae.org