Multimodal Medical Image Fusion Methods Based on Improved Discrete Wavelet Transform

XU Lei^a, TIAN Shu-chang^a, CUI Can^b, MENG Qing-le^b, YANG Rui^b, JIANG Hong-bing^a, WANG Feng^b

a. Department of Medical Equipment; b. Department of Nuclear Medicine, Nanjing First Hospital, Nanjing Medical University, Nanjing, Jiangsu 210006, China

Objective: This paper proposes an improved discrete wavelet transform (DWT) algorithm for multimodal medical image fusion. Methods: The source medical images are first decomposed by DWT into low-frequency and high-frequency sub-images, which are then fused separately. The “coefficient absolute value” rule, which preserves sharp detail, is used to fuse the high-frequency coefficients, whereas the “region energy ratio” rule, which efficiently preserves most of the information in the source images, is used to fuse the low-frequency coefficients. Finally, the fused image is reconstructed by the inverse wavelet transform. Results: Visual and quantitative experimental results indicate that the proposed fusion method is superior to traditional wavelet transform methods and to existing fusion methods. Conclusion: The proposed method is a feasible approach to multimodal medical image fusion and yields more efficient and accurate fusion results, even in a noisy environment.

Key words: discrete wavelet transform; multimodal; image fusion; fusion rule

0 INTRODUCTION

With the development of medical imaging technology, computer technology and biomedical engineering, medical imaging equipment can provide multimodal medical images, including computed tomography (CT), magnetic resonance imaging (MRI), single photon emission computed tomography (SPECT), positron emission tomography (PET), and so on. Since each modality has its own imaging principle, the information from different modalities is both complementary and redundant[1-2]. For instance, CT is used for tumor and anatomical detection, whereas MRI provides information about soft tissues. Thus, no single modality can capture all the complementary and relevant information in one image. Because image fusion can combine multiple modality images into a single image, it is widely used in clinical applications such as non-invasive diagnosis, radiotherapy planning, and treatment planning.

Image fusion techniques can be classified into three levels: pixel-level fusion, feature-level fusion, and decision-level fusion[3]. At present, most image fusion algorithms work at the pixel level and operate on each pixel directly. Pixel-level fusion can provide more abundant and reliable information than the other two levels, and it facilitates further analysis, processing and interpretation of the image. According to domestic and international research on image fusion in recent years, pixel-level image fusion can generally be divided into spatial-domain and frequency-domain methods[4-6]. In spatial-domain fusion, the fusion rule is applied directly to the intensity values of the source images[7]. Weighted averaging, principal component analysis (PCA), and neural network models are a few examples of spatial-domain fusion schemes. Because medical images have poor contrast and an uneven gray-level distribution, fused images produced in the spatial domain are prone to spatial distortion and are easily affected by noise[8].

Fusion algorithms based on the frequency domain can overcome these limitations of spatial-domain fusion. Several frequency-domain methods use multiscale transforms, including the pyramid transform, wavelet transform, curvelet transform and contourlet transform. Pyramid transforms such as the Gaussian pyramid, Laplacian pyramid and contrast pyramid suffer from blocking effects and cannot provide any directional information[9]. Several studies have stated that the curvelet and contourlet transforms are superior to wavelet transforms[10-12]. However, they require heavy computation and consume a large amount of memory. The discrete wavelet transform (DWT) is the most widely used wavelet transform in medical image fusion because of its multiresolution and multiscale properties, fast computation, and conformity with the spatial frequency channels of the human visual system[13].

In this paper, we propose an improved fusion framework based on DWT for multimodal medical images. The main contribution lies in the proposed fusion rules: the “coefficient absolute value” rule, which preserves sharp detail, is used to fuse the high-frequency coefficients, whereas the “region energy ratio” rule, which efficiently preserves most of the information in the source images, is used to fuse the low-frequency coefficients. The combination of these two rules preserves more details from the source images and improves the quality of the fused images.

1 MULTIMODAL IMAGE FUSION ALGORITHM BASED ON WAVELET TRANSFORM

Recently, the wavelet transform has emerged as a powerful signal and image processing tool that provides an efficient way of fusing images through multiresolution analysis[14]. This section explains the basics of DWT and its usefulness in image fusion.

1.1 Mechanism of image fusion based on wavelet transform

The DWT of an image is computed with high-pass and low-pass filters, which decompose the image into different frequency bands[15]. The decomposition and reconstruction of an image can be carried out with the Mallat pyramid algorithm.

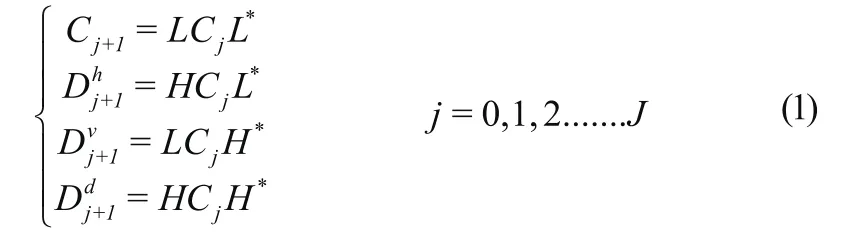

The two-dimensional wavelet decomposition is given as follows[16]:

where j is the resolution level of the image decomposition; h, v and d denote the horizontal, vertical and diagonal components; L and H are the low-pass and high-pass filter operators, respectively; and L* and H* are the conjugate transposes of the matrices L and H.
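For reference, one common way of writing the Mallat decomposition with the operators defined here is sketched below. The sub-band symbols C (approximation) and D (detail), and the assignment of operators to the h and v components, are assumptions that follow a usual textbook convention; they are not necessarily the exact form given in [16].

```latex
\begin{aligned}
C_{j+1}     &= L \, C_j \, L^{*} \\
D^{h}_{j+1} &= H \, C_j \, L^{*} \\
D^{v}_{j+1} &= L \, C_j \, H^{*} \\
D^{d}_{j+1} &= H \, C_j \, H^{*}
\end{aligned}
```

Under this reading, C_{j+1} is the low-frequency approximation at level j+1 and the three D terms are the high-frequency detail sub-bands.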

The source image is decomposed into one low-frequency component and three high-frequency components, namely LL_i, HL_i, LH_i and HH_i. The low-frequency component LL_i represents the approximate information of the image, whereas the high-frequency components HL_i, LH_i and HH_i represent its detailed information. The two-dimensional decomposition process using DWT is shown in Figure 1, where the subscript is the level of image decomposition.
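The split into one approximation band and three detail bands can be illustrated with a minimal sketch. It uses the Haar wavelet purely for brevity, which is a simplification and not the “db3” wavelet used in the experiments; the function name `haar_dwt2` and the band-naming convention are assumptions made for illustration.

```python
def haar_dwt2(image):
    """One-level 2D Haar decomposition of a 2D list with even height and width.

    Returns (LL, HL, LH, HH). Here the first letter names the filter applied
    along each row and the second the filter applied along each column; this
    naming is an assumption, since conventions differ between texts.
    """
    rows, cols = len(image), len(image[0])
    if rows % 2 or cols % 2:
        raise ValueError("image height and width must both be even")
    ll, hl, lh, hh = [], [], [], []
    for r in range(0, rows, 2):
        ll_row, hl_row, lh_row, hh_row = [], [], [], []
        for c in range(0, cols, 2):
            # The four pixels of one 2x2 block.
            a, b = image[r][c], image[r][c + 1]
            d, e = image[r + 1][c], image[r + 1][c + 1]
            ll_row.append((a + b + d + e) / 2.0)  # local average (approximation)
            hl_row.append((a - b + d - e) / 2.0)  # difference between the two columns
            lh_row.append((a + b - d - e) / 2.0)  # difference between the two rows
            hh_row.append((a - b - d + e) / 2.0)  # diagonal difference
        ll.append(ll_row)
        hl.append(hl_row)
        lh.append(lh_row)
        hh.append(hh_row)
    return ll, hl, lh, hh


ll, hl, lh, hh = haar_dwt2([[1, 2], [3, 4]])
print(ll, hl, lh, hh)  # [[5.0]] [[-1.0]] [[-2.0]] [[0.0]]
```

Applying the same function again to the returned LL band would give the next decomposition level.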

Figure 1 2D decomposition process in discrete wavelet transform. a: Decomposition up to level 3; b: Three-level wavelet decomposition of Lena image.

Image reconstruction based on DWT can be carried out with different fusion rules. Because the energy of the source image is concentrated mainly in the contour component, the sub-band image LL_i becomes more distinct after extraction. If the wavelet decomposition is continued, j levels of decomposition yield (3j+1) frequency bands. For the high-frequency bands HL_i, LH_i and HH_i, the fusion strategy mainly extracts the detailed information, which contains edges and textures. The wavelet reconstruction algorithm is formulated as follows[17]:
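The band count and the reconstruction step can be illustrated with a minimal sketch. It inverts a one-level orthonormal Haar decomposition, which is a simplification and not the “db3” wavelet used in the experiments; the function names and the band-naming convention (first letter for the filter along rows, second for the filter along columns) are assumptions made for illustration.

```python
def num_subbands(levels):
    """Number of frequency bands after `levels` decomposition levels: 3j + 1."""
    return 3 * levels + 1


def haar_idwt2(ll, hl, lh, hh):
    """Invert a one-level orthonormal Haar decomposition.

    Each quadruple of sub-band coefficients is expanded back into the
    2x2 pixel block it was computed from.
    """
    rows, cols = len(ll), len(ll[0])
    image = [[0.0] * (2 * cols) for _ in range(2 * rows)]
    for r in range(rows):
        for c in range(cols):
            s, h, v, d = ll[r][c], hl[r][c], lh[r][c], hh[r][c]
            image[2 * r][2 * c] = (s + h + v + d) / 2.0          # top-left pixel
            image[2 * r][2 * c + 1] = (s - h + v - d) / 2.0      # top-right pixel
            image[2 * r + 1][2 * c] = (s + h - v - d) / 2.0      # bottom-left pixel
            image[2 * r + 1][2 * c + 1] = (s - h - v + d) / 2.0  # bottom-right pixel
    return image


print(num_subbands(4))  # 13
print(haar_idwt2([[5.0]], [[-1.0]], [[-2.0]], [[0.0]]))  # [[1.0, 2.0], [3.0, 4.0]]
```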

1.2 General image fusion scheme based on discrete wavelet transform

The DWT is an efficient tool for multiscale image fusion, with characteristics that include locality, multiresolution analysis, edge detection, energy compaction, and so forth[18]. The general image fusion process is shown in Figure 2. Each source image is first decomposed into a sequence of multiscale coefficients; various fusion rules are then applied to select among these coefficients, and the selected coefficients are synthesized via the inverse transform to form the fused image.

Figure 2 A general image fusion scheme based on discrete wavelet transform.

2 PROPOSED FUSION METHOD

The fusion rule is crucial to the quality of the fused image[19]. Traditional low-frequency fusion rules include weighted average (WAV), coefficient absolute value (CAV) and regional energy (REN), whereas regional coefficient absolute value (RCAV), regional energy (REN) and regional variance (RVA) are examples of traditional high-frequency fusion rules. However, these traditional fusion rules cannot achieve the ideal effect.

2.1 Fusion rule of low-frequency coefficients

Because the useful information in an image is concentrated in regional features rather than in a single pixel, we propose the “region energy ratio” as the fusion rule for the low-frequency coefficients in order to preserve image feature information. The definition of region energy is given first[20].

where (M, N) is the region size, ω(l, k) is the weight of the low-frequency coefficient, and the coefficient being weighted is that of image I at scale j.
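As an illustration of the region energy, the following minimal sketch computes a weighted sum of squared coefficients over a window centred on one position. The sum-of-squares form, the odd window size, and the border handling (replicating edge coefficients) are assumptions; the function name `region_energy` is hypothetical.

```python
def region_energy(coeffs, x, y, weights):
    """Weighted sum of squared coefficients in the window centred on (x, y).

    `coeffs` is a 2D list of low-frequency coefficients and `weights` is an
    odd-sized 2D list playing the role of omega(l, k). Positions outside the
    band are clamped to the nearest edge coefficient (an assumption).
    """
    half_m = len(weights) // 2
    half_n = len(weights[0]) // 2
    rows, cols = len(coeffs), len(coeffs[0])
    energy = 0.0
    for l in range(-half_m, half_m + 1):
        for k in range(-half_n, half_n + 1):
            r = min(max(x + l, 0), rows - 1)
            c = min(max(y + k, 0), cols - 1)
            energy += weights[l + half_m][k + half_n] * coeffs[r][c] ** 2
    return energy


band = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
ones = [[1, 1, 1], [1, 1, 1], [1, 1, 1]]
print(region_energy(band, 1, 1, ones))  # 285.0
```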

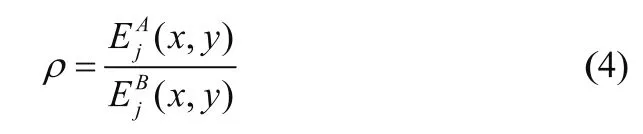

The region energy ratio, which reflects the similarity of the images, is defined as follows:

If ρ is close to 1, the two regions are considered to have a high degree of similarity; otherwise, the two regions differ significantly. The low-frequency coefficient of the fused image is obtained by comparing the region energy ratio ρ with a similarity threshold T.
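One plausible reading of this threshold comparison is sketched below. Every specific here is an assumption rather than the rule used in the paper: ρ is taken as the smaller region energy divided by the larger, similar regions are averaged, dissimilar regions take the coefficient from the image with the larger region energy, and the default threshold 0.8 is an arbitrary illustrative value.

```python
def region_energy_ratio(energy_a, energy_b):
    """Ratio of the smaller to the larger region energy, in [0, 1] (assumed form)."""
    largest = max(energy_a, energy_b)
    if largest == 0:
        return 1.0  # two empty regions are treated as identical
    return min(energy_a, energy_b) / largest


def fuse_low(coef_a, coef_b, energy_a, energy_b, threshold=0.8):
    """Fuse one pair of low-frequency coefficients (hypothetical rule).

    Similar regions (rho >= threshold) are averaged; otherwise the coefficient
    from the image with the larger region energy is kept.
    """
    rho = region_energy_ratio(energy_a, energy_b)
    if rho >= threshold:
        return 0.5 * (coef_a + coef_b)
    return coef_a if energy_a > energy_b else coef_b


print(fuse_low(10.0, 12.0, 100.0, 110.0))  # 11.0
print(fuse_low(10.0, 2.0, 100.0, 4.0))     # 10.0
```

Averaging similar regions avoids arbitrary switching between sources, while selection in dissimilar regions keeps the more energetic structure.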

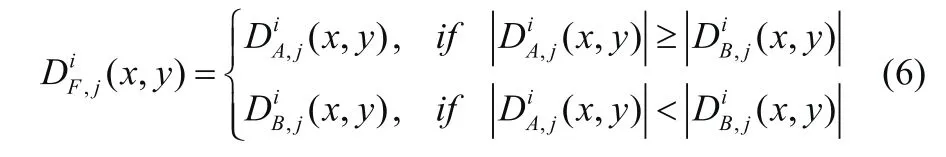

2.2 Fusion rule of high-frequency coefficients

Larger absolute values of the wavelet coefficients correspond to relatively stronger gray-scale changes in the image, and human eyes are sensitive to these changes[21]. To enhance the information of edges, textures and contours, the “coefficient absolute value” rule is applied to fuse the high-frequency coefficients. The fusion rule is described below:
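A minimal sketch of a coefficient absolute value rule is given here: at each position, the coefficient with the larger magnitude is kept. The function name `fuse_high`, the tie-breaking in favour of image A, and the returned decision map are assumptions made for illustration.

```python
def fuse_high(band_a, band_b):
    """Keep, at each position, the high-frequency coefficient of larger magnitude.

    Returns (fused, decision), where decision[r][c] is 0 if the coefficient
    came from band_a and 1 if it came from band_b. Ties go to band_a.
    """
    fused, decision = [], []
    for row_a, row_b in zip(band_a, band_b):
        fused_row, decision_row = [], []
        for a, b in zip(row_a, row_b):
            if abs(a) >= abs(b):
                fused_row.append(a)
                decision_row.append(0)
            else:
                fused_row.append(b)
                decision_row.append(1)
        fused.append(fused_row)
        decision.append(decision_row)
    return fused, decision


fused, decision = fuse_high([[3, -1], [0, 2]], [[-4, 0.5], [1, -2]])
print(fused)     # [[-4, -1], [1, 2]]
print(decision)  # [[1, 0], [1, 0]]
```

The decision map records the source of each coefficient, which is the information a neighborhood-based consistency check needs.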

2.3 Consistency check

A consistency check is applied to the fused image to improve its quality[22]. The operation is as follows: in the fused image, if the coefficient at one position comes from sub-image A while most of the coefficients in its neighborhood come from sub-image B, the coefficient is replaced by the corresponding coefficient from sub-image B.
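The consistency check can be sketched as a majority filter over a map that records which source each fused coefficient came from. The 3×3 neighborhood, the strict-majority criterion, and the symmetric treatment of A and B are assumptions; the function name `consistency_check` is hypothetical.

```python
def consistency_check(decision, band_a, band_b):
    """Majority-vote correction of a source map (0 = from A, 1 = from B).

    A position is switched to the other source when more than half of its
    in-bounds 3x3 neighbours (centre excluded) come from that other source.
    Votes are counted on the original map, so the result does not depend on
    the scan order.
    """
    rows, cols = len(decision), len(decision[0])
    checked = [row[:] for row in decision]
    for r in range(rows):
        for c in range(cols):
            neighbours = [
                decision[rr][cc]
                for rr in range(max(r - 1, 0), min(r + 2, rows))
                for cc in range(max(c - 1, 0), min(c + 2, cols))
                if (rr, cc) != (r, c)
            ]
            from_b = sum(neighbours)
            from_a = len(neighbours) - from_b
            if decision[r][c] == 0 and 2 * from_b > len(neighbours):
                checked[r][c] = 1
            elif decision[r][c] == 1 and 2 * from_a > len(neighbours):
                checked[r][c] = 0
    fused = [
        [band_b[r][c] if checked[r][c] else band_a[r][c] for c in range(cols)]
        for r in range(rows)
    ]
    return checked, fused


decision = [[1, 1, 1], [1, 0, 1], [1, 1, 1]]
band_a = [[0.0] * 3 for _ in range(3)]
band_b = [[1.0] * 3 for _ in range(3)]
checked, fused = consistency_check(decision, band_a, band_b)
print(checked)  # [[1, 1, 1], [1, 1, 1], [1, 1, 1]]
```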

3 FUSION RESULTS AND ANALYSIS

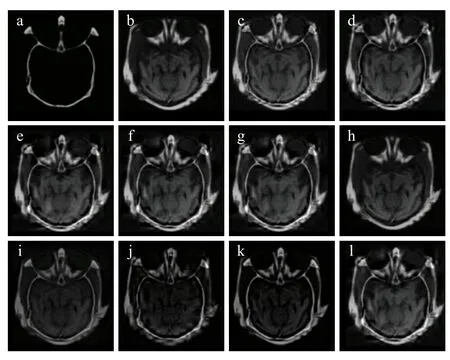

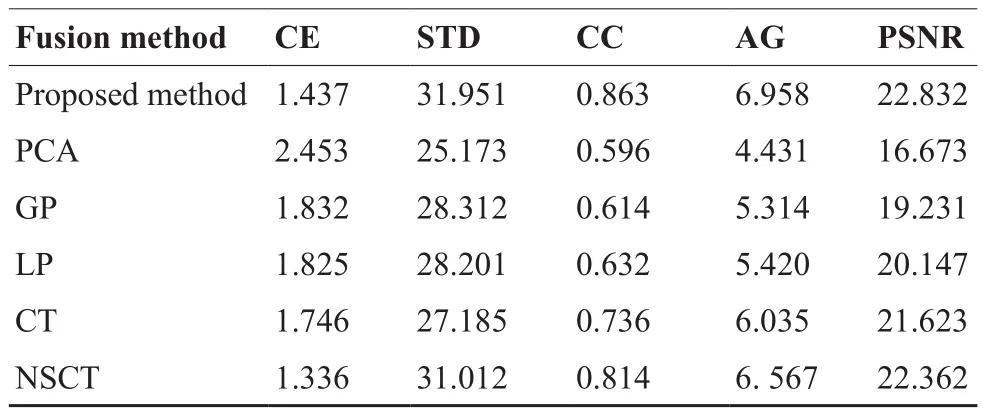

This section presents subjective and objective comparisons to evaluate the fusion results obtained by the proposed method. The experiments were performed on three groups of medical images of the same pixel size, and the fusion results are shown in Figures 3-5, respectively. To verify the superiority, effectiveness and noise resistance of the proposed fusion method, we compared it with traditional wavelet transform methods using different fusion rules and with existing state-of-the-art fusion methods, including principal component analysis (PCA), Gaussian pyramid (GP), Laplace pyramid (LP), contourlet transform (CT) and non-subsampled contourlet transform (NSCT). The fusion results were compared using cross entropy (CE), standard deviation (STD), correlation coefficient (CC), average gradient (AG) and peak signal-to-noise ratio (PSNR). Higher values of STD, CC, AG and PSNR indicate a higher-quality fused image, whereas a lower value of CE corresponds to a better fusion result[23].
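Three of these measures can be sketched as follows. The definitions are common textbook forms and are assumptions here, since the exact formulas are not given in the text; in particular, the average gradient has several variants in the literature. Function names are hypothetical.

```python
import math


def _flatten(image):
    return [float(v) for row in image for v in row]


def standard_deviation(image):
    """Population standard deviation of the gray levels (STD)."""
    values = _flatten(image)
    mean = sum(values) / len(values)
    return math.sqrt(sum((v - mean) ** 2 for v in values) / len(values))


def correlation_coefficient(image_a, image_b):
    """Pearson correlation between two images of the same size (CC)."""
    a, b = _flatten(image_a), _flatten(image_b)
    mean_a, mean_b = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        raise ValueError("correlation is undefined for a constant image")
    return cov / math.sqrt(var_a * var_b)


def average_gradient(image):
    """Mean of sqrt((dx^2 + dy^2) / 2) over forward differences (one AG variant)."""
    rows, cols = len(image), len(image[0])
    total = 0.0
    for r in range(rows - 1):
        for c in range(cols - 1):
            dx = image[r][c + 1] - image[r][c]
            dy = image[r + 1][c] - image[r][c]
            total += math.sqrt((dx * dx + dy * dy) / 2.0)
    return total / ((rows - 1) * (cols - 1))


img = [[0, 0], [2, 2]]
print(standard_deviation(img))                         # 1.0
print(correlation_coefficient(img, [[3, 3], [7, 7]]))  # 1.0
```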

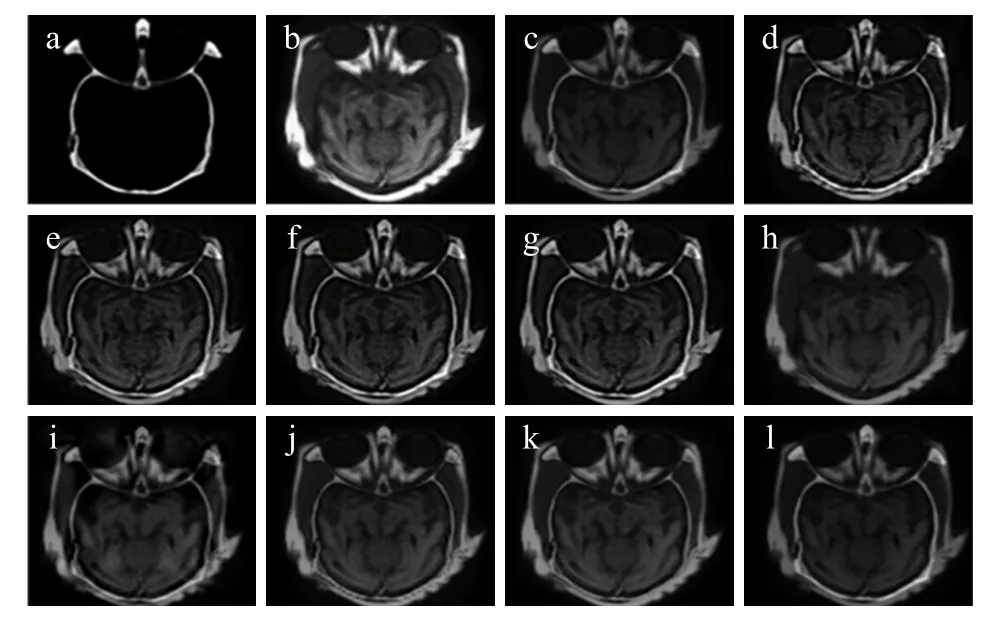

Figure 3 Results for the first set of medical images based on discrete wavelet transform with different fusion rules. a: CT image; b: MRI image; c: the proposed method; d: WAV+CAV; e: WAV+REN; f: WAV+RVA; g: CAV+CAV; h: CAV+REN; i: CAV+RVA; j: REN+CAV; k: REN+RVA; l: REN+RCAV. The traditional low-frequency fusion rules include weighted average (WAV), coefficient absolute value (CAV) and regional energy (REN), whereas regional coefficient absolute value (RCAV), regional energy (REN) and regional variance (RVA) are examples of traditional high-frequency fusion rules.

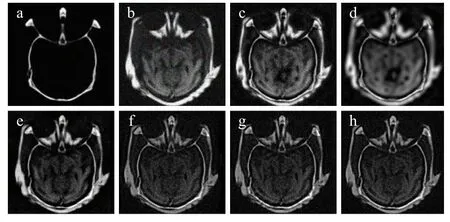

Figure 4 Fusion results for the second set of medical images. a: CT image; b: MRI image; c-g: Fused images with the proposed method from level 2 to level 6; h: PCA method; i: LP method; j: GP method; k: CT method; l: NSCT-1 method. The state-of-the-art fusion methods include principal component analysis (PCA), Gaussian pyramid (GP), Laplace pyramid (LP), contourlet transform (CT) and non-subsampled contourlet transform (NSCT).

Figure 5 Fusion results for multimodal images with Gaussian noise. a: CT image; b: MRI image; c: DWT method; d: PCA method; e: LP method; f: GP method; g: CT method; h: NSCT-1 method.

Both our experiments and the literature indicate that the “db3” wavelet is suitable for decomposition[24-25]. Therefore, we used the “db3” wavelet in the proposed fusion scheme. The information in medical images is concentrated mainly in the low-frequency part, and the high-frequency information is not abundant. Hence, the decomposition level was set to 4 as a trade-off between computational cost and precision.

3.1 Subjective evaluation

The first set of medical images consists of brain CT and MRI images, shown in Figure 3a and b. The result of the proposed multiscale fusion method is shown in Figure 3c. The fused images obtained by several traditional wavelet transform methods using different fusion rules are shown in Figure 3d-l. Comparing them, one can conclude that the proposed method outperforms these fusion methods and gives a good visual representation of the fused image.

The second set of medical images is of the same type as the first set (Figure 4a and b). The fusion results for these images, shown in Figure 4c-l, indicate that the proposed method yields higher definition than the existing state-of-the-art fusion methods.

In addition, the third set of medical images consists of CT and MRI images corrupted by Gaussian noise with a standard deviation of 5%, shown in Figure 5a and b. Comparing the proposed fusion result with those of PCA, GP, LP, CT and NSCT (Figure 5c-h), we can verify that the fused image obtained with the proposed method has better quality and contrast than those of the other fusion methods, even in a noisy environment.

3.2 Objective evaluation

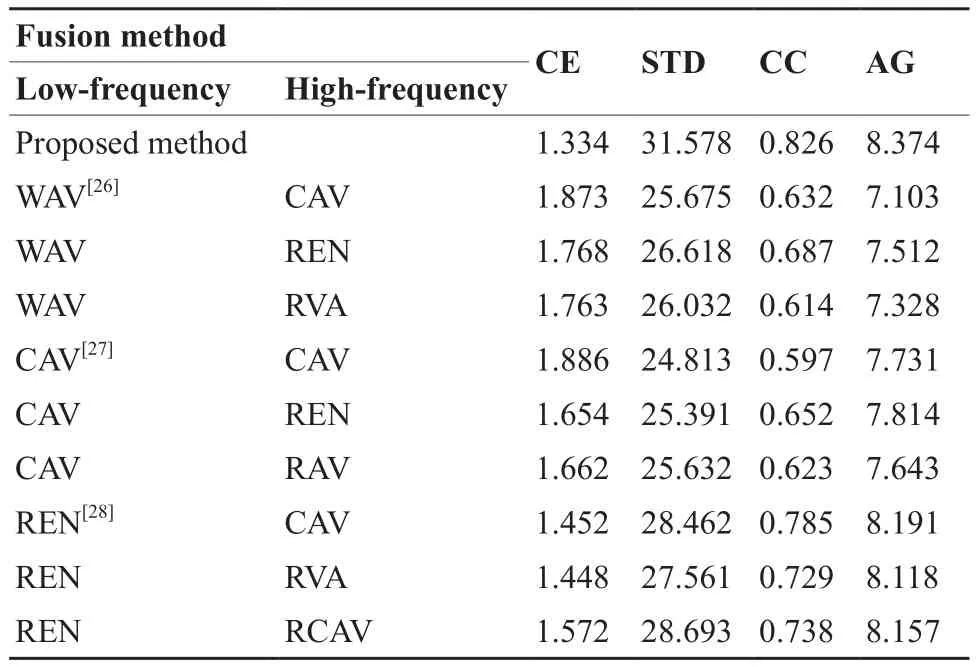

Comparison of image fusion with different fusion rules: Table 1 shows that the choice of fusion rule has a great effect on fusion performance. The proposed multiscale fusion method has higher values of STD, CC and AG than the traditional wavelet transform methods, and the lowest value of CE among all results. When WAV is used as the low-frequency fusion rule, the values of STD, CC and AG are lower than the others and CE is higher, regardless of the high-frequency fusion rule; the fused images therefore have poor contrast and edges. When CAV is selected as the fusion rule for both the low-frequency and high-frequency coefficients, CE is the highest among the results, which leads to poor contrast and brightness in the fused image. The combination of REN and CAV performs well: all fusion measures improve considerably, and the detailed information is strengthened to some extent.

Table 1 Comparison on quantitative evaluation of different fusion rules

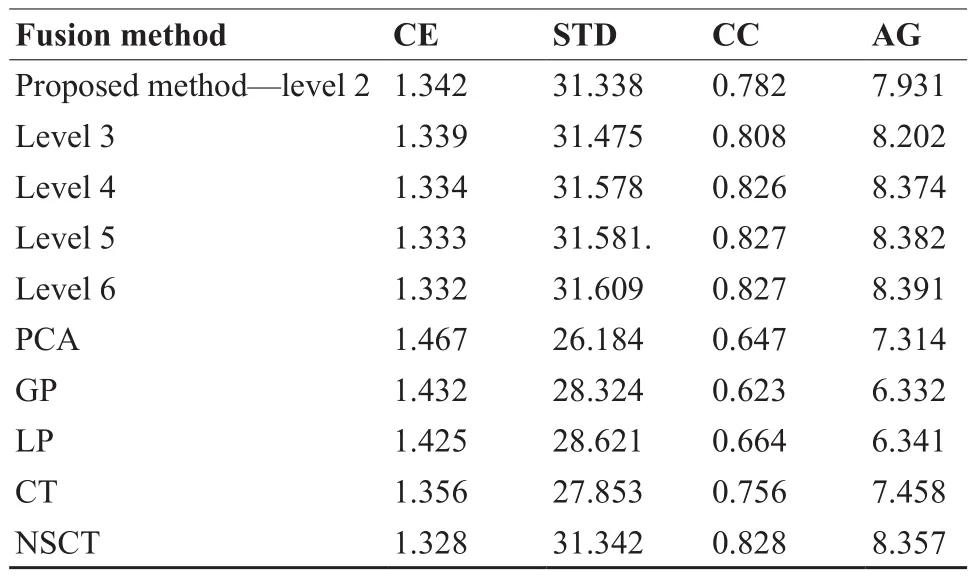

Comparison of quantitative evaluation of different methods: Similarly, Table 2 indicates that when the decomposition level is low, the fusion measures of the proposed method differ little from those of the other methods. When the decomposition level reaches 4, the proposed method has higher values of STD and AG than any of the PCA, GP, LP, CT and NSCT fusion methods. Its CE is 1.332, which is close to the lowest value of 1.328, and its CC is the third highest among the results. When the decomposition level is greater than 4, the measures of the proposed method become stable. Overall, the comparison shows the superiority of the proposed fusion method.

Table 2 Comparison on quantitative evaluation of different fusion methods

Fusion of noisy multimodal medical images: To further evaluate the performance of the proposed method in a noisy environment, the second set of medical images was corrupted with Gaussian noise, as shown in Figure 5a and b. For quantitative comparison, in addition to the evaluation criteria STD, CC, AG and CE, the peak signal-to-noise ratio (PSNR) is introduced to evaluate the fusion methods in terms of noise transmission. A larger PSNR value means less image distortion[29]. PSNR is formulated as follows[30]:

where RMSE is the root mean square error between the fused image and the reference image; the reference image is taken from Figure 4e.
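A minimal sketch of PSNR computed from RMSE is given below. It uses the widely used form 20·log10(peak / RMSE); the peak value of 255 (8-bit gray levels) is an assumption, and the exact expression in [30] may differ. Function names are hypothetical.

```python
import math


def rmse(fused, reference):
    """Root mean square error between two images of the same size."""
    squared = [
        (float(f) - float(g)) ** 2
        for row_f, row_g in zip(fused, reference)
        for f, g in zip(row_f, row_g)
    ]
    return math.sqrt(sum(squared) / len(squared))


def psnr(fused, reference, peak=255.0):
    """Peak signal-to-noise ratio in dB; `peak` assumes 8-bit images."""
    error = rmse(fused, reference)
    if error == 0:
        return math.inf  # identical images: no distortion
    return 20.0 * math.log10(peak / error)


reference = [[0.0, 0.0], [0.0, 0.0]]
fused = [[25.5, 25.5], [25.5, 25.5]]
print(rmse(fused, reference))  # 25.5
```

With these inputs the ratio peak / RMSE is 10, so the PSNR is 20 dB; a smaller RMSE gives a larger PSNR.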

Table 3 shows that the proposed method has the highest PSNR among the fusion methods, and its values of STD, AG and CC are higher than those of PCA, GP, LP, CT and NSCT; its CE is the second lowest among the results. From the above analysis, it can be inferred that the proposed scheme provides the best performance and is superior to the other algorithms in a noisy environment.

Table 3 Comparison on quantitative evaluation of different fusion methods with Gaussian noise

4 CONCLUSION

The discrete wavelet transform has emerged as a powerful image processing tool that provides an efficient way of fusing images through multiresolution analysis. In this work, we presented a novel and effective multimodal image fusion method using DWT, based on the maximum (coefficient absolute value) and region energy ratio fusion rules. The subjective and objective comparisons of the simulation results demonstrate that: ① a new pair of fusion rules based on coefficient absolute value and region energy ratio preserves more useful information in the fused image and overcomes the limitations of the traditional fusion rules; ② the proposed method provides better performance than other existing fusion methods regardless of whether the source images are clean or noisy; ③ good performance can be achieved by applying suitable fusion rules even at a lower decomposition level. In the future, we will introduce the method into clinical application and design a medical image fusion platform.

[REFERENCES]

[1] Singh R,Khare A.Multiscale medical image fusion in wavelet domain[J].Scientific World J,2013.

[2] Yang Y,Tong S,Huang S,et al.Log-gabor energy based multimodal medical image fusion in NSCT domain[J].Comput Math Methods Med,2014.

[3] Dong J,Zhuang D,Huang Y,et al.Advances in multi-sensor data fusion:algorithms and applications[J].Sensors(Basel), 2009,9(10):7771-7784.

[4] Bedi SS,Khandelwal R.Comprehensive and comparative study of image fusion techniques[J].IJSCE,2013:2231-2307.

[5] Yang Y,Zheng W,Huang S.Effective Multifocus Image Fusion Based on HVS and BP Neural Network[J].Scientific World J,2014.

[6] Jameel A,Ghafoor A,Riaz MM.Improved guided image fusion for magnetic resonance and computed tomography imaging[J].Scientific World J,2014.

[7] Javed U,Riaz MM,Ghafoor A,et al.Mri and pet image fusion using fuzzy logic and image local features[J].Scientific World J,2014.

[8] Zhang P,Tang YX,Liang YH,et al.Multi focus image fusion based on wavelet transformation[J].Journal of Harbin Institute of Technology(New Series),2013,20:124-128.

[9] Liu G,Lü X.Performance measure for image fusion considering region information[J].Journal of Zhejiang University SCIENCE A, 2007,8(4):559-562.

[10] Teng J,Wang X,Zhang J,et al.A multimodality medical image fusion algorithm based on wavelet transform[C].Advances in Swarm Intelligence. Springer Berlin Heidelberg,2010:627-633.

[11] Deng A,Wu J,Yang S.An image fusion algorithm based on discrete wavelet transform and canny operator[C].Advanced Research on Computer Education,Simulation and Modeling. Springer Berlin Heidelberg,2011:32-38.

[12] Sayed S,Jangale S.Multimodal medical image fusion using wavelet transform[EB/OL].Thinkquest 2010.Springer India,2011:301-304.

[13] Singh R,Khare A.Redundant Discrete Wavelet Transform Based Medical Image Fusion[C].Advances in Signal Processing and Intelligent Recognition Systems.Springer International Publishing,2014:505-515.

[14] Sahu DK,Parsai MP.Different image fusion techniques–a critical review[J].IJMER,2012,2(5):4298-4301.

[15] Li S,Yang B,Hu J.Performance comparison of different multi-resolution transforms for image fusion[J].Inform Fusion,2011,12(2):74-84.

[16] Singh Y,Rajput A.Wavelet-Based Multi-Modality Medical Image Fusion CT/MRI for Medical Diagnosis Purpose Human Visual Perception Compute-Processing Image[J].Int J Elec Eng Educ,2014,3(2):24.

[17] Li S,Yang B,Hu J.Performance comparison of different multiresolution transforms for image fusion[J].Inform Fusion, 2011,12(2):74-84.

[18] Singh R,Khare A.Fusion of multimodal medical images using Daubechies complex wavelet transform–A multiresolution approach[J].Inform Fusion,2014,19:49-60.

[19] Wang N,Ma Y,Zhan K,et al.Multimodal medical image fusion framework based on simplified PCNN in nonsubsampled contourlet transform domain[J].J Multimedia,2013,8(3):270-276.

[20] James AP,Dasarathy BV.Medical image fusion:a survey of the state of the art[J].Inform Fusion,2014,19:4-19.

[21] Singh Y,Rajput A.Wavelet-Based High-Frequency Texture Fusion Low Energy CT/MRI Images[J].Int J Elec Eng Educ,2014,3(1):183.

[22] Li S,Yang B,Hu J.Performance comparison of different multiresolution transforms for image fusion[J].Inform Fusion,2011, 12(2):74-84.

[23] Rodrigues D,Virani HA,Kutty S.Multimodal Image Fusion Techniques for Medical Images using Wavelets[J].Image, 2014,4:2.

[24] James AP,Dasarathy BV.Medical image fusion:a survey of the state of the art[J].Inform Fusion,2014,19:4-19.

[25] Liu Z,Yin H,Chai Y,et al.A novel approach for multimodal medical image fusion[J].Expert Syst Appl,2014,41(16):7425-7435.

[26] Bhatnagar G,Wu QMJ,Liu Z.A new contrast based multimodal medical image fusion framework[J].Neurocomputing,2015, 157:143-152.

[27] Patel R,Rajput M,Parekh P.Comparative Study on Multi-focus Image Fusion Techniques in Dynamic Scene[J].Int J Comput Appl,2015,109(6).

[28] Singh Y,Rajput A.Wavelet-Based High-Frequency Texture Fusion Low Energy CT/MRI Images[J].Int J Elec Eng Educ, 2014,3(1):183.

[29] Phamila YAV,Amutha R.Discrete Cosine Transform based fusion of multi-focus images for visual sensor networks[J].Signal Process,2014,95:161-170.

[30] Wang L,Li B,Tian L.Multimodal Medical Volumetric Data Fusion Using 3-D Discrete Shearlet Transform and Global-to-Local Rule[J].Biomed Eng,IEEE Trans,2014,61(1):197-206.

[CLC number] R197.39 [Document code] A

[DOI] 10.3969/j.issn.1674-1633.2016.06.001

[Article ID] 1674-1633(2016)06-0001-06

Jiang Hong-bing, Researcher-level Senior Engineer.

E-mail: cmdjhb@126.com