A Parameter-Detection Algorithm for Moving Ships

Yaduan Ruan, Juan Liao, Jiang Wang, Bo Li, and Qimei Chen

(School of Electronic Science and Engineering, Nanjing University, Nanjing 210093, China)

In traffic-monitoring systems, numerous vision-based approaches have been used to detect vehicle parameters. However, few of these approaches have been applied to waterway transport because of complicating factors such as rippling water and the lack of calibration objects. In this paper, we present an approach to detecting the parameters of a moving ship in an inland river. The approach uses interactive calibration that requires no calibration reference. We detect a moving ship using an optimized visual foreground-detection algorithm that suppresses false detections in dynamic water scenarios, and we then measure ship length, width, speed, and flow. We trialed our parameter-detection technique on the Beijing-Hangzhou Grand Canal and found that detection accuracy was greater than 90% for all parameters.

Keywords: video analysis; interactive calibration; foreground detection algorithm; traffic parameter detection

1 Introduction

Waterway transport is important in China because of its low cost [1], [2]. Compared with road transport, waterways are more susceptible to changes in natural conditions. A dry season lowers water levels and narrows channels, while a rainy season raises water levels and reduces the clearance for ships passing under bridges. Ships are also subject to fewer restrictions than road vehicles. Moreover, driven by profit, operators often navigate high-tonnage ships through low-grade channels at high speed, which causes congestion in the channel and increases the likelihood of collisions. Therefore, the size, speed, and flow of ships need to be monitored and regulated [3]. At present, the only way to determine whether a ship is oversized is to stop it and measure it manually, and speed and flow are estimated visually.

Automated video surveillance has been used on freeways but has drawbacks when applied to waterways. For example, a channel has no marking lines that can be used for calibration; on roads, such lines help transform data from the image plane to the real ground plane. In a channel, ripples hamper the detection and measurement of ships, and ships that are close together can occlude one another. In [4], the authors propose a method that extracts the ship's edge and generates a minimum bounding rectangle approximating the ship's size at the pixel level. However, it does not give the real size of the ship. In [5], the authors photograph the side and top of a ship and use column scanning to obtain the ship's length and width at the pixel level; the real size is then calculated from the image resolution. However, this method is only suitable for stationary ships against a static background. In [6], the authors use background modeling to extract ships and a perspective transformation model to calculate their real size. However, this method assumes that the ship's axis is parallel or perpendicular to the river boundary, which is not always the case.

All these detection methods are easy to implement and reliable in real time; however, they rely on idealized conditions and cannot be used in complex river scenarios where there are no reference objects for automatic calibration. In this paper, we introduce an interactive calibration method for mapping image coordinates to real-world coordinates. An optimized visual background extractor (ViBe) algorithm is used for accurate foreground detection and precise extraction of ships in an aquatic scenario. The size, speed, and flow are then measured using detection models. This method, which has been used in China's shipping network, measures ship size, speed, and flow with an accuracy of more than 90% relative to field measurements.

2 Algorithm Framework

Existing parameter-detection algorithms for moving ships are not suitable for complex scenarios. Because they lack effective camera calibration, they cannot accurately calculate the parameters of a moving ship. To obtain accurate traffic parameters, we propose a new parameter-detection algorithm for inland river scenarios. The basic framework of this algorithm is shown in Fig. 1.

In the pre-processing stage, a region of interest is set to remove redundant information from the monitored scene, threshold values are determined to provide criteria for ship classification, and interactive grid calibration is performed to map the image-plane coordinate system to the real-world coordinate system. Then, we use an optimized ViBe algorithm to extract each moving ship, track every ship using a Kalman filter [7], and record the center point of every ship in each frame. The navigation path is obtained by least-squares fitting of these center points. Finally, the parameters, including width, length, speed, and flow, are calculated.

3 Interactive Calibration

Camera calibration is the basis of intelligent video analysis (IVA). Parameters of moving objects, such as size and speed, need to be converted into physical values through calibration. Because there are no reference objects in our river scenario, we use an interactive method in which an operator calibrates the camera through a terminal interface. This allows us to translate image coordinates into real-world coordinates and calculate physical sizes in real time without any reference objects.

Fig. 2 shows the transformation between the image-plane coordinates u-v and the real-world 3D coordinates Xw-Yw-Zw. This transformation is specified by the camera-imaging model, in which three coordinate systems are defined: the world coordinates Xw-Yw-Zw and the camera coordinates Xc-Yc-Zc describe three-dimensional space, and u-v describes the camera image plane. The origin of u-v is the center of the camera image, and the origin of Xw-Yw-Zw is the point where the optical axis of the camera intersects the ground plane.

To obtain the transformation between u-v and Xw-Yw-Zw, four parameters must be obtained by camera calibration: the focal length f, the camera height H, the camera pitch angle t, and the deviation angle p.

We therefore introduce an interactive calibration technique that does not require a calibration reference. We superimpose a virtual regular grid on the ground, as in Fig. 3a; under perspective, this grid appears in the image as in Fig. 3b. Once the four required parameters are known, we can build the transformation from the ground grid to the image grid. According to [8], the transformation between u-v and Xw-Yw-Zw is given by:

If the grid spacing is W = 1 m and L = 2 m, a corresponding grid can be generated once H, t, p, and f have been given initial values. We denote the grid image by g(H, t, p, f); if any of these four parameters changes, g(H, t, p, f) changes. Fig. 4 shows the grid image on the interactive calibration interface. The operator adjusts the four parameters until the grid fits the river plane, and the resulting values are taken as the calibration parameters.
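Because the transformation of [8] is not reproduced here, the sketch below uses a generic flat-ground pinhole model as a stand-in; it is not the formula of [8]. Assumptions: the camera sits H meters above the water plane and is pitched down by t radians, the world axes are rotated by the deviation angle p about the vertical axis, f is in pixels, (u, v) is measured from the image center with v pointing down, and the sign conventions are our own. All function names are hypothetical. `grid_image` plays the role of g(H, t, p, f), and `image_to_ground` inverts the mapping so that pixel positions can be converted into meters. Reading the Section 6 values (H = 34, f = 700, t = 0.32, p = 0.39) as meters, pixels, and radians is likewise an assumption.

```python
import math


def _rotate(x, y, angle):
    """Rotate the 2-D point (x, y) by `angle` radians about the origin."""
    c, s = math.cos(angle), math.sin(angle)
    return x * c - y * s, x * s + y * c


def ground_to_image(x_w, y_w, H, t, p, f):
    """Project the ground point (x_w, y_w, 0) to image coordinates (u, v)."""
    x, y = _rotate(x_w, y_w, p)      # align world axes with the viewing direction
    d = H / math.tan(t)              # horizontal distance from camera to world origin
    y_c = -(y + d) * math.sin(t) + H * math.cos(t)   # camera "down" component
    z_c = (y + d) * math.cos(t) + H * math.sin(t)    # depth along the optical axis
    if z_c <= 0:
        raise ValueError("point is behind the camera")
    return f * x / z_c, f * y_c / z_c


def image_to_ground(u, v, H, t, p, f):
    """Back-project the image point (u, v) onto the ground plane Z_w = 0."""
    denom = v * math.cos(t) + f * math.sin(t)
    if denom <= 0:
        raise ValueError("ray does not reach the ground (at or above the horizon)")
    lam = H / denom                  # ray length factor at which Z_w becomes 0
    d = H / math.tan(t)
    x = lam * u
    y = -d + lam * (f * math.cos(t) - v * math.sin(t))
    return _rotate(x, y, -p)         # undo the deviation-angle rotation


def grid_image(H, t, p, f, W=1.0, L=2.0, nx=10, ny=10):
    """g(H, t, p, f): image-plane segments of a ground grid with W x L cells."""
    segments = []
    for i in range(-nx, nx + 1):     # grid lines of constant x_w
        segments.append((ground_to_image(i * W, -ny * L, H, t, p, f),
                         ground_to_image(i * W, ny * L, H, t, p, f)))
    for j in range(-ny, ny + 1):     # grid lines of constant y_w
        segments.append((ground_to_image(-nx * W, j * L, H, t, p, f),
                         ground_to_image(nx * W, j * L, H, t, p, f)))
    return segments
```

In an interactive tool, each change of H, t, p, or f would call `grid_image` again and redraw the segments over the video frame. Projecting only the segment endpoints is enough because a pinhole camera maps straight ground lines to straight image lines. Once the overlay fits the river, the same four values feed `image_to_ground`.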

4 Ship Extraction and Tracking

Before a ship can be tracked and its behavior analyzed, it must first be extracted. ViBe, a universal visual background extractor [9], represents the background features of each pixel with neighboring observed samples and uses a stochastic updating policy to diffuse the current pixel value into its neighboring pixels. In [10], the author compares the applicability and effectiveness of several background-modeling algorithms, including Mixture of Gaussians (MOG), codebook, and ViBe. In ViBe, the decision threshold and randomness parameter are fixed for all pixels, which is too inflexible when the background varies. To make the background model more adaptable to dynamic backgrounds, we adjust the decision threshold of each pixel dynamically according to the degree of background change at that pixel. The main steps in ship extraction and tracking are as follows.

First, a nonparametric background model is built. A single frame at time t is denoted It, and the background model Bt(i,j) of pixel (i,j) at time t is defined as a collection of N recently observed pixel values:

$$B_t(i,j)=\{b_1(i,j),\,b_2(i,j),\,\ldots,\,b_N(i,j)\}\tag{2}$$
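The construction of the initial model can be sketched in pure Python as below. It follows ViBe's single-frame initialization [9], in which each of the N samples of a pixel is copied from a randomly chosen pixel in its 3×3 neighborhood; N = 20 is the ViBe default, not necessarily the authors' setting. Frames are assumed to be grey-level lists of rows, and `init_background` is a hypothetical name.

```python
import random


def init_background(frame, n_samples=20, rng=None):
    """Build B_0(i, j) = {b_1(i, j), ..., b_N(i, j)} from a single grey-level frame.

    Each sample is copied from a random pixel in the 3x3 neighborhood of (i, j),
    clipped at the image border, as in ViBe's initialization.
    """
    rng = rng or random.Random()
    rows, cols = len(frame), len(frame[0])
    model = []
    for i in range(rows):
        row = []
        for j in range(cols):
            samples = []
            for _ in range(n_samples):
                ni = min(max(i + rng.randint(-1, 1), 0), rows - 1)
                nj = min(max(j + rng.randint(-1, 1), 0), cols - 1)
                samples.append(frame[ni][nj])
            row.append(samples)
        model.append(row)
    return model
```

Seeding from a single frame lets detection start immediately; samples that happen to cover a ship are replaced later by the random update.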

Second, the decision threshold Rt(i,j) of each pixel is set adaptively according to the degree of background variation, which is measured by the mean of a collection of N minimum Euclidean distances:

$$D_t(i,j)=\{d_1(i,j),\,d_2(i,j),\,\ldots,\,d_N(i,j)\}\tag{3}$$

where dk(i,j) = min_k dist[It(i,j), Bk(i,j)] is the minimum Euclidean distance between the value of pixel (i,j) and its N background values. The mean value of Dt(i,j) is estimated using:

$$d_{\min}(i,j)=\frac{1}{N}\sum_{k=1}^{N} d_k(i,j)\tag{4}$$

As (4) shows, the more the background changes, the greater the value of dmin(i,j). Using dmin(i,j), the decision threshold Rt(i,j) of pixel (i,j) is updated as:

where α1, α2, and η are fixed parameters. The decision threshold Rt(i,j) can thus adapt dynamically to background changes. For static backgrounds, Rt(i,j) slowly approaches the constant η·dmin(i,j). For dynamic backgrounds, however, Rt(i,j) increases so that background pixels are not classified as foreground.
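The sketch below assumes a PBAS-style form for this threshold update, chosen because it reproduces the behavior described above. Rt(i,j) is multiplied by (1 - α1) while it exceeds η·dmin(i,j) and by (1 + α2) otherwise, so it settles around η·dmin(i,j). That value is small for a static background and grows for rippling water. Grey-level pixels are assumed, so the Euclidean distance reduces to an absolute difference. The class name and default values are illustrative, not the authors' settings.

```python
from collections import deque


class AdaptiveThreshold:
    """Per-pixel decision threshold R_t(i, j) driven by background dynamics (assumed rule)."""

    def __init__(self, n=20, r_init=20.0, alpha1=0.05, alpha2=0.05, eta=5.0):
        self.history = deque(maxlen=n)   # D_t(i, j): the N most recent minimum distances
        self.r = r_init
        self.alpha1, self.alpha2, self.eta = alpha1, alpha2, eta

    def step(self, pixel, samples):
        # d_k(i, j): distance from the current value to the closest background sample
        d = min(abs(pixel - b) for b in samples)
        self.history.append(d)
        d_min = sum(self.history) / len(self.history)   # mean of D_t(i, j), as in (4)
        if self.r > self.eta * d_min:
            self.r *= 1.0 - self.alpha1                 # static background: shrink R
        else:
            self.r *= 1.0 + self.alpha2                 # dynamic background: grow R
        return self.r
```

For a pixel over calm water, `step` keeps R near η times a small average distance; over ripples, larger distances push R up, so ripple pixels are less likely to be labeled foreground.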

Third, we compare the value It(i,j) of pixel (i,j) at time t with the N background values in Bt(i,j). Pixel (i,j) is a foreground pixel if its distances to the N background values of Bt(i,j) satisfy:

where dist{·} is the Euclidean distance between It(i,j) and a background value in Bt(i,j), and num{·} is the number of background values satisfying dist{·} < Rt(i,j). The foreground segmentation mask is denoted Ft. If Ft(i,j) = 1, pixel (i,j) in the current frame is a foreground pixel; if Ft(i,j) = 0, it belongs to the background.
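The decision rule can be sketched as follows, assuming ViBe's criterion [9]: a pixel is background when at least #min of its N samples lie within Rt(i,j), with #min = 2 as in ViBe. The count threshold and the function names are assumptions. Pixels are taken to be RGB tuples, and `math.dist` gives the Euclidean distance.

```python
import math


def is_foreground(pixel, samples, radius, min_matches=2):
    """Return 1 if (i, j) is foreground, 0 if it is background.

    num counts the background samples whose Euclidean distance to the current
    value is below R_t(i, j); the pixel is background if num >= min_matches.
    """
    num = sum(1 for b in samples if math.dist(pixel, b) < radius)
    return 0 if num >= min_matches else 1


def foreground_mask(frame, model, radii, min_matches=2):
    """Build F_t for a frame of RGB tuples, its sample model, and per-pixel R_t."""
    return [[is_foreground(frame[i][j], model[i][j], radii[i][j], min_matches)
             for j in range(len(frame[0]))]
            for i in range(len(frame))]
```

Requiring several matching samples rather than one makes the decision robust to a single outlier sample in the model.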

Fourth, noise is filtered out using morphological operations, and false targets are removed based on their area. Fig. 5 shows that our method removes the interference of water ripples and extracts the ship's contour more clearly than ViBe.
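The structuring element and the area threshold are not specified, so the sketch below assumes a 3×3 morphological opening (erosion followed by dilation) to suppress isolated ripple pixels. It then drops 8-connected blobs smaller than a hypothetical `min_area`. Pixels outside the image count as background during erosion. In practice, OpenCV's `morphologyEx` and `connectedComponentsWithStats` would do this faster; this pure-Python version only shows the logic.

```python
from collections import deque

OFFSETS = [(di, dj) for di in (-1, 0, 1) for dj in (-1, 0, 1)]


def _morph(mask, combine):
    """Apply a 3x3 erosion (combine=all) or dilation (combine=any)."""
    h, w = len(mask), len(mask[0])
    return [[int(combine(0 <= i + di < h and 0 <= j + dj < w and mask[i + di][j + dj] == 1
                         for di, dj in OFFSETS))
             for j in range(w)] for i in range(h)]


def clean_mask(mask, min_area):
    """Morphological opening, then removal of 8-connected blobs below min_area."""
    opened = _morph(_morph(mask, all), any)      # erosion followed by dilation
    h, w = len(opened), len(opened[0])
    out = [[0] * w for _ in range(h)]
    seen = [[False] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            if not opened[i][j] or seen[i][j]:
                continue
            blob, queue = [], deque([(i, j)])     # breadth-first search of one blob
            seen[i][j] = True
            while queue:
                ci, cj = queue.popleft()
                blob.append((ci, cj))
                for di, dj in OFFSETS:
                    ni, nj = ci + di, cj + dj
                    if 0 <= ni < h and 0 <= nj < w and opened[ni][nj] and not seen[ni][nj]:
                        seen[ni][nj] = True
                        queue.append((ni, nj))
            if len(blob) >= min_area:             # keep only ship-sized regions
                for ci, cj in blob:
                    out[ci][cj] = 1
    return out
```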

Fifth, the background model Bt(i,j) is updated in real time to adapt to the changing background. If the value of pixel (i,j) at time t matches its background model Bt(i,j), the pixel is classified as background and is used to update Bt(i,j) randomly: with probability φ, a randomly chosen background value bk(i,j) (k ∈ {1, ..., N}) is replaced with the current pixel value It(i,j).
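The conservative random update can be sketched as follows. Following ViBe [9], a background pixel replaces one of its own samples with probability `update_prob`. With the same probability, it also writes its value into a random sample of a pixel in its 3×3 neighborhood (spatial diffusion). ViBe uses a probability of 1/φ with φ = 16; since it is not stated whether φ here denotes the probability itself or that subsampling factor, the parameter name and default are assumptions.

```python
import random


def update_model(model, frame, mask, update_prob=1 / 16, rng=None):
    """Conservative random update of the sample model, in place.

    Only background pixels (mask == 0) update the model; foreground pixels
    never write their values into any sample set.
    """
    rng = rng or random.Random()
    h, w = len(frame), len(frame[0])
    for i in range(h):
        for j in range(w):
            if mask[i][j]:
                continue
            if rng.random() < update_prob:                 # refresh the pixel's own model
                k = rng.randrange(len(model[i][j]))
                model[i][j][k] = frame[i][j]
            if rng.random() < update_prob:                 # diffuse into the neighborhood
                ni = min(max(i + rng.randint(-1, 1), 0), h - 1)
                nj = min(max(j + rng.randint(-1, 1), 0), w - 1)
                k = rng.randrange(len(model[ni][nj]))
                model[ni][nj][k] = frame[i][j]
    return model
```

Replacing a random sample rather than the oldest gives each sample an exponentially decaying lifetime, which is the property ViBe relies on.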

Sixth, a Kalman filter is used to track each ship, ensuring accurate and continuous tracking.
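The state model of the Kalman filter is not given. A common choice, assumed here, is a constant-velocity model for the ship's center point, run independently on the u and v axes with illustrative noise values q and r. When a ship is hidden in a frame, `step(None)` returns the prediction alone, which is one way a filter keeps the track continuous. The class names are hypothetical.

```python
class AxisKalman:
    """1-D constant-velocity Kalman filter with state (position, velocity)."""

    def __init__(self, pos, q=1e-2, r=4.0):
        self.x = [pos, 0.0]                    # state estimate
        self.P = [[r, 0.0], [0.0, 100.0]]      # covariance; velocity starts uncertain
        self.q, self.r = q, r                  # process / measurement noise (assumed)

    def predict(self, dt=1.0):
        pos, vel = self.x
        self.x = [pos + dt * vel, vel]
        (a, b), (c, d) = self.P
        # P <- F P F^T + q*I with F = [[1, dt], [0, 1]]
        self.P = [[a + dt * (b + c) + dt * dt * d + self.q, b + dt * d],
                  [c + dt * d, d + self.q]]
        return self.x[0]

    def update(self, z):
        (a, b), (c, d) = self.P
        s = a + self.r                         # innovation variance (H = [1, 0])
        k0, k1 = a / s, c / s                  # Kalman gain
        innov = z - self.x[0]
        self.x = [self.x[0] + k0 * innov, self.x[1] + k1 * innov]
        # P <- (I - K H) P
        self.P = [[(1 - k0) * a, (1 - k0) * b],
                  [c - k1 * a, d - k1 * b]]
        return self.x[0]


class CenterTracker:
    """Tracks a ship's center (u, v) with one filter per image axis."""

    def __init__(self, u, v, **noise):
        self.fu, self.fv = AxisKalman(u, **noise), AxisKalman(v, **noise)

    def step(self, detection=None, dt=1.0):
        """Predict one frame ahead; correct with the detected center if available."""
        u, v = self.fu.predict(dt), self.fv.predict(dt)
        if detection is not None:
            u, v = self.fu.update(detection[0]), self.fv.update(detection[1])
        return u, v
```

Running the two axes separately is exact only when their noises are uncorrelated, which is assumed here for simplicity.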

5 Traffic Parameter Detection

Here,we introduce our approach to calculating ship parame⁃ters:length and width,speed,and flow.

5.1 Length and Width

We extract the contour of a moving ship from the binary foreground image by combining background subtraction with a Canny operator [12]. Frame by frame, the center of the ship's contour is located, and these points are recorded as the navigation path. The least-squares method is used to fit a straight line (the main line) to these center points. As shown in Fig. 6, the main line intersects the ship's contour at two points, and the distance between these two points is the ship's length. We then draw a second line through the center point, perpendicular to the main line. This perpendicular line intersects the ship's contour at another two points, and the distance between these two points is the ship's width.
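A sketch of the length and width measurement follows, with two substitutions stated plainly. First, the main line is fitted by orthogonal (total) least squares rather than ordinary y = ax + b regression, so that vertical navigation paths also work. Second, the contour center is taken as the mean of the contour vertices. The contour is assumed to be a closed polygon in image coordinates, and `to_ground` is a hypothetical hook for the calibration mapping; with the default identity, results are in pixels.

```python
import math


def fit_main_line(points):
    """Orthogonal least-squares line through the path points: (centroid, unit direction)."""
    n = len(points)
    mx = sum(x for x, _ in points) / n
    my = sum(y for _, y in points) / n
    sxx = sum((x - mx) ** 2 for x, _ in points)
    syy = sum((y - my) ** 2 for _, y in points)
    sxy = sum((x - mx) * (y - my) for x, y in points)
    theta = 0.5 * math.atan2(2.0 * sxy, sxx - syy)   # principal-axis angle
    return (mx, my), (math.cos(theta), math.sin(theta))


def _cross(a, b):
    return a[0] * b[1] - a[1] * b[0]


def chord(contour, origin, direction, to_ground):
    """Distance between the outermost intersections of a line with a closed contour."""
    hits = []
    for k in range(len(contour)):
        p, q = contour[k], contour[(k + 1) % len(contour)]
        e = (q[0] - p[0], q[1] - p[1])
        denom = _cross(direction, e)
        if denom == 0:                                # edge parallel to the line
            continue
        w = (p[0] - origin[0], p[1] - origin[1])
        s, r = _cross(w, e) / denom, _cross(w, direction) / denom
        if 0.0 <= r <= 1.0:                           # intersection lies on this edge
            hits.append(s)
    if len(hits) < 2:
        return 0.0
    ends = [(origin[0] + s * direction[0], origin[1] + s * direction[1])
            for s in (min(hits), max(hits))]
    return math.dist(to_ground(*ends[0]), to_ground(*ends[1]))


def ship_size(contour, centers, to_ground=lambda u, v: (u, v)):
    """Return (length, width) from the ship contour and its navigation path."""
    line_pt, d = fit_main_line(centers)
    cx = sum(x for x, _ in contour) / len(contour)
    cy = sum(y for _, y in contour) / len(contour)
    length = chord(contour, line_pt, d, to_ground)               # along the main line
    width = chord(contour, (cx, cy), (-d[1], d[0]), to_ground)   # perpendicular line
    return length, width
```

For a strongly concave contour a line may cut it more than twice; taking the outermost intersections keeps the measurement equal to the overall extent along each line.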

5.2 Speed

Here, we calculate the ship's average speed through a detection region defined by two horizontal parallel lines in the image. When a ship crosses the first line, we record the time and the ship's center point. When the ship reaches the other line, the time and center point are recorded again. Comparing the two records gives the time difference T and the distance S between the two center points, and the ship's average speed is V = S/T.
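The two-line speed measurement can be sketched as below. Assumptions: the track is a time-ordered list of (time in seconds, center point) pairs from the tracker, and each line is recorded at the first sample that reaches or passes it in either direction, so ships moving upriver and downriver are both handled. S is measured after mapping both center points through a hypothetical `to_ground` calibration hook (identity by default, which gives pixels per second).

```python
import math


def _reached(prev, cur, line):
    """True if the coordinate moved onto or across `line` between two samples."""
    return prev < line <= cur or prev > line >= cur


def average_speed(track, line1_v, line2_v, to_ground=lambda u, v: (u, v)):
    """Average speed V = S / T between the image lines v = line1_v and v = line2_v.

    `track` is a time-ordered list of (time_s, (u, v)) center points. Returns
    None until the ship has reached both lines.
    """
    records = {}
    for (_, c0), (t1, c1) in zip(track, track[1:]):
        for line in (line1_v, line2_v):
            if line not in records and _reached(c0[1], c1[1], line):
                records[line] = (t1, c1)          # time and center at the crossing
    if len(records) < 2:
        return None
    (ta, ca), (tb, cb) = records[line1_v], records[line2_v]
    S = math.dist(to_ground(*ca), to_ground(*cb))   # distance between the two centers
    T = abs(tb - ta)                                 # time difference
    return S / T if T > 0 else None
```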

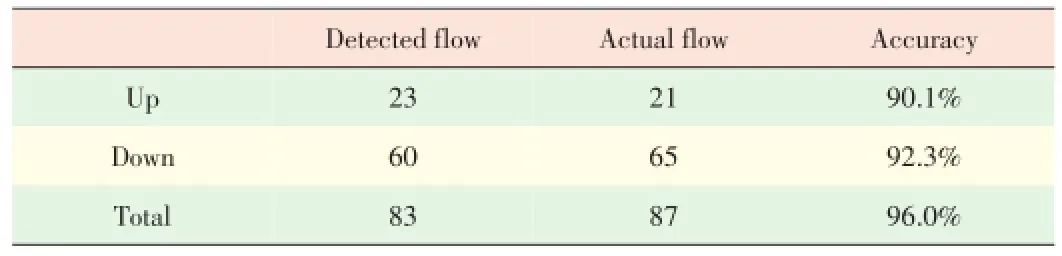

5.3 Flow

Flow detection requires understanding of the dynamic image sequence. The traditional approach places a gate line in the surveillance image and counts the ships whose centers cross it. However, when ships are close together, large ships often hide smaller ones at the gate line, which causes flow-detection errors. To solve this problem, we place two horizontal gate lines in the image and use them together to count ships. Fig. 7 shows what happens when two adjacent ships separate from each other: the smaller ship is moving upriver and the larger ship is moving downriver. The larger ship hides the smaller one when they reach the upper gate line, so the smaller ship is missed at the upper gate line but is recorded at the lower gate line. Therefore, the flow is counted correctly.
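A sketch of the two-gate-line count follows. How crossings at the two lines are merged is not stated. Here we assume that each ship carries a track identifier from the Kalman tracker, so a ship seen at both lines is counted once and a ship missed at one line is still counted at the other. The track format and names are hypothetical.

```python
def count_flow(tracks, upper_v, lower_v):
    """Count ships whose center crosses at least one of two gate lines.

    `tracks` maps a track id to its time-ordered list of center v-coordinates;
    frames in which the ship was hidden are simply absent from the list.
    """
    def crosses(vs, line):
        return any(a < line <= b or a > line >= b for a, b in zip(vs, vs[1:]))

    upper = {tid for tid, vs in tracks.items() if crosses(vs, upper_v)}
    lower = {tid for tid, vs in tracks.items() if crosses(vs, lower_v)}
    return len(upper | lower)          # union: each ship counted at most once
```

With `upper_v == lower_v` the function reduces to the single gate line of the traditional approach, which undercounts in the occlusion case of Fig. 7.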

6 Experimental Results

We trialed our parameter-detection algorithm on an inland river in Nantong, Jiangsu Province. The test sequence was recorded on September 17, 2013. The camera calibration parameters were H = 34, f = 700, t = 0.32, and p = 0.39. Ship length and width, speed, and flow were detected using our algorithm (Tables 1 to 3, respectively), and the accuracy was measured against the actual ship parameters. As shown in Tables 1 to 3, the accuracy of our algorithm was greater than 90%. Our algorithm is therefore more accurate than previous manual methods of determining ship parameters and is better suited to waterway traffic management and emergency rescue.

Table 1. Length and width detection

Table 2. Speed detection

Table 3. Flow detection

7 Conclusion

Intelligent video image processing can be used to determine ship size, speed, flow, and other parameters. It makes full use of the video resources of inland waterways and provides ship and channel information for better management decisions. In this paper, we introduced a human-machine camera calibration technique and optimized the ViBe algorithm to create a model for determining ship parameters such as length, width, speed, and flow. The experimental results showed that our algorithm can obtain these parameters in real time with an accuracy of more than 90%. This means that the algorithm can be used in real applications, and it also has important theoretical value for the development of intelligent waterway transportation.

[1] A. Caris, S. Limbourg, C. Macharis, et al., "Integration of inland waterway transport in the intermodal supply chain: a taxonomy of research challenges," Journal of Transport Geography, vol. 41, pp. 126-136, 2014. doi: 10.1016/j.jtrangeo.2014.08.022.

[2] L. Guo, The Research of Collection of Traffic Information Based on Information Fusion. Hefei, China: Press of University of Science and Technology of China, 2007.

[3] V. Bugarski, T. Bačkalić, and U. Kuzmanov, "Fuzzy decision support system for ship lock control," Expert Systems with Applications, vol. 40, no. 10, pp. 3953-3960, 2013. doi: 10.1016/j.eswa.2012.12.101.

[4] J. Wang, Wavelet Analysis and its Application in the Measuring of Size of the Boat Image. Nanjing, China: Press of HoHai University, 2006.

[5] J. Wang, The Research of Intelligent Detection Method and Application Based on Image Fusion Technology. Nanjing, China: Press of HoHai University, 2009.

[6] X. Zhang, L. Xu, and A. Shi, "Visual measurement system for traffic flow of inland waterway," Journal of Image and Graphics, vol. 16, no. 7, pp. 1219-1225, 2011.

[7] S. Kluge, K. Reif, and M. Brokate, "Stochastic stability of the extended Kalman filter with intermittent observations," IEEE Transactions on Automatic Control, vol. 55, no. 2, pp. 514-518, 2010. doi: 10.1109/TAC.2009.2037467.

[8] R. Dong, B. Li, and Q. Chen, "An automatic calibration method for PTZ camera in expressway monitoring system," World Congress on Computer Science and Information Engineering, Los Angeles, USA, 2009, pp. 636-640.

[9] O. Barnich and M. Van Droogenbroeck, "ViBe: A universal background subtraction algorithm for video sequences," IEEE Transactions on Image Processing, vol. 20, no. 6, pp. 1709-1724, 2011. doi: 10.1109/TIP.2010.2101613.

[10] X. Fang, The Research on Background Modeling Algorithm of Moving Target in Ship Video Sequences. Wuhan, China: Press of Wuhan University of Technology, 2013.

[11] E. Li, B. Zhang, and X. Zhou, "The research on adaptive Canny edge detection algorithm," Science of Surveying and Mapping, vol. 33, no. 6, pp. 119-121, 2008.

[12] R. Biswas and J. Sil, "An improved Canny edge detection algorithm based on type-2 fuzzy sets," Procedia Technology, vol. 4, pp. 820-824, 2012. doi: 10.1016/j.protcy.2012.05.134.

Manuscript received: 2015-03-29

Biographies

Yaduan Ruan (ruanyaduan@163.com) received the PhD degree from Nanjing University in 2014. She is a lecturer at the School of Electronic Science and Engineering, Nanjing University. Her research interests include image processing and machine learning.

Juan Liao (liaojuan308@163.com) received the BS and MS degrees from Anhui University of Science and Technology in 2008 and 2011, respectively. She is currently a PhD candidate at Nanjing University. Her research interests include computer vision and image processing.

Jiang Wang (jiangnju_edu@sina.com) received the BS degree from Nanjing University in 2012. He is an MS degree candidate at Nanjing University. His research interests include computer vision and image processing.

Bo Li (liboee@nju_edu.cn) received the PhD degree in signal and information processing from Nanjing University in 2009. He is an associate professor at Nanjing University. His research interests include computer vision and machine learning.

Qimei Chen (chenqimei@nju_edu.cn) received the MS degree from Tsinghua University, Beijing, in 1982. He is a professor at Nanjing University. His research interests include visual surveillance and image and video processing.

This work was supported by the Fund of National Science & Technology Monumental Projects under Grants No. 61401239 and No. 2012-364-641-209.
