Software-Defined Data Center

Ghazanfar Ali, Jie Hu, and Bhumip Khasnabish

(1. ZTE Corporation, Nanjing 210012, China;

2. ZTE USA, Morristown, NJ 07960, USA)

Abstract: The software-defined data center (SDDC) is a vision of the future. An SDDC brings together software-defined compute, software-defined network, software-defined storage, software-defined hypervisor, software-defined availability, and software-defined security. It also unifies the control planes of each individual software-defined component. A unified control plane enables rich resource abstractions for purpose-fit orchestration systems and/or programmable infrastructures. This enables dynamic optimization according to business requirements.

Keywords: cloud computing; virtualization; security; software-defined; data center

1 Software-Defined Data Center Architecture

A software-defined data center (SDDC) architecture defines data center resources in terms of software. Specifically, it releases compute, network, storage, hypervisor, availability, and security from hardware limitations and increases service agility. This can be considered an evolution from server virtualization to complete virtualization of the data center.

1.1 Software-Defined Compute

Software-defined compute (SDC), also called server virtualization, releases CPU and memory from the limitations of underlying physical hardware. As a standard infrastructure technology, server virtualization is the basis of the SDDC, which extends the same principles to all infrastructure services.

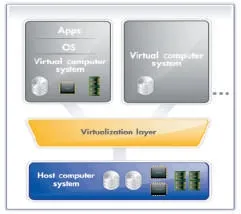

The basic elements of a virtualized environment [1] are shown in Fig. 1. The resources that comprise this environment are typically provided by one or more host computer systems. A virtualization layer (typically firmware or software, but sometimes hardware) manages the lifecycle of a virtual computer system, which comprises virtual resources that are allocated or assigned to it from the physical host computer system. A virtual computer system may be active and run an operating system and applications with a full complement of defined, allocated virtual devices.

The virtual computer system may also be inactive, with no software running and only a subset of the virtual devices actually allocated. In this environment, a primary responsibility of the administrator is to manage the operational lifecycle of these virtual systems.

Resources of the virtual computer system may have properties or qualities that are different from those of the underlying physical resources. For example, virtual resources may have different capacities or QoS (for performance or reliability) than the underlying physical resources. Managing relationships between virtual and physical resources makes administration tasks in a virtualized environment more complex.
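The lifecycle described above can be illustrated with a minimal sketch. All class and method names here are hypothetical, memory is the only resource modeled, and an inactive system is simplified to hold no host memory at all; it is not taken from the management model in [1].

```python
from dataclasses import dataclass
from enum import Enum


class State(Enum):
    DEFINED = "defined"   # exists, but no software is running
    ACTIVE = "active"     # running an operating system and applications


@dataclass
class Host:
    """Physical host with a fixed amount of memory (GiB)."""
    name: str
    memory_gib: int
    allocated_gib: int = 0


@dataclass
class VirtualSystem:
    """Virtual computer system whose resources come from a host."""
    name: str
    memory_gib: int               # virtual capacity, may differ from the host's
    state: State = State.DEFINED


class VirtualizationLayer:
    """Hypothetical manager for the lifecycle of virtual systems."""

    def __init__(self, host):
        self.host = host
        self.systems = {}

    def define(self, name, memory_gib):
        self.systems[name] = VirtualSystem(name, memory_gib)
        return self.systems[name]

    def activate(self, name):
        vs = self.systems[name]
        if vs.state is State.ACTIVE:
            return
        free = self.host.memory_gib - self.host.allocated_gib
        if vs.memory_gib > free:
            raise RuntimeError(f"host {self.host.name} lacks memory for {name}")
        # Physical memory is only committed while the system is active.
        self.host.allocated_gib += vs.memory_gib
        vs.state = State.ACTIVE

    def deactivate(self, name):
        vs = self.systems[name]
        if vs.state is State.ACTIVE:
            self.host.allocated_gib -= vs.memory_gib
            vs.state = State.DEFINED
```

Tracking the virtual-to-physical relationship in one place, as the `activate` method does, is what lets the layer refuse a request that the host cannot back.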

1.2 Software-Defined Network

▲Figure 1. Elements of virtualized system management.

In a software-defined network (SDN), the network control plane is moved from the switch to the software running on a server. This improves programmability, efficiency, and extensibility.
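A toy model may help to picture this separation. The sketch below is an assumption-laden illustration, not an implementation of any SDN protocol: the `Switch` and `Controller` classes and their methods are hypothetical, and a flow table is reduced to a destination-to-port mapping.

```python
class Switch:
    """Data plane only: forwards by looking up its flow table."""

    def __init__(self, name):
        self.name = name
        self.flow_table = {}   # destination address -> output port

    def forward(self, dst):
        # None means "no local rule"; in this toy model the decision
        # would then have to come from the controller.
        return self.flow_table.get(dst)


class Controller:
    """Control plane running as ordinary software on a server."""

    def __init__(self):
        self.switches = {}

    def register(self, switch):
        self.switches[switch.name] = switch

    def install_route(self, switch_name, dst, out_port):
        # The forwarding decision is made centrally and pushed down.
        self.switches[switch_name].flow_table[dst] = out_port
```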

There has been much technical development and implementation of SDN. This paper does not delve into the details of this vibrant software-defined component.

1.3 Software-Defined Storage

Software-defined storage (SDS) is an ecosystem of products that decouple software from the underlying storage network hardware and place it in a centralized controller or hypervisor. This centralized software makes all physical and virtual resources visible and enables programmability and automated provisioning based on consumption or need. The software can live on a server or be part of an operating system or hypervisor, but it is no longer firmware on a hardware device. The software can also control hardware from multiple vendors and enable engineers to build non-proprietary environments [2].

In other words, SDS separates the control plane from the data plane and dynamically leverages heterogeneity of storage to respond to changing workload demands. SDS enables the publishing of storage service catalogs and enables resources to be provisioned on demand and consumed according to policy. The characteristics of software-defined storage could include any or all of the following [3], [4]:

·pooling and abstraction of the logical storage services and capabilities from the underlying physical storage systems. This is reflected in the formerly used term, storage virtualization.

·automation with policy-driven storage provisioning. This requires management interfaces that span traditional storage array products, and it requires defining the separation of the control plane from the data plane (in the spirit of OpenFlow). This issue is not new. Prior industry standardization efforts, such as SMI-S, began in 2002.

·virtual volumes for better performance and optimized data management. This is not a new capability for virtual infrastructure administrators (it is already possible using NFS), but it does give arrays using iSCSI or Fibre Channel a path to higher administrator leverage for cross-array management apps that are written to the virtual infrastructure.

·commodity hardware with storage logic abstracted into a software layer. This is conventionally described as a clustered file system for converged storage.

·scaled-out storage architecture.
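Pooling and policy-driven provisioning, two of the characteristics listed above, can be sketched as follows. This is a minimal illustration under stated assumptions: the `Backend`, `Policy`, and `StoragePool` names, the single `media` attribute, and the "most free space" placement rule are all hypothetical and are not drawn from [3] or [4].

```python
from dataclasses import dataclass


@dataclass
class Backend:
    """One physical storage system contributing capacity to the pool."""
    name: str
    media: str       # e.g. "ssd" or "hdd"
    free_gib: int


@dataclass(frozen=True)
class Policy:
    """Entry in a hypothetical storage service catalog."""
    name: str
    media: str


class StoragePool:
    """Control plane: picks a backend; the data path is not modeled."""

    def __init__(self, backends):
        self.backends = list(backends)

    def provision(self, size_gib, policy):
        # Candidates are backends that satisfy the policy and have room.
        candidates = [b for b in self.backends
                      if b.media == policy.media and b.free_gib >= size_gib]
        if not candidates:
            raise LookupError(f"no backend satisfies policy {policy.name!r}")
        # Assumed placement rule: use the backend with the most free space.
        chosen = max(candidates, key=lambda b: b.free_gib)
        chosen.free_gib -= size_gib
        return chosen.name
```

The caller asks for a size and a policy only; which vendor's array ends up holding the volume is decided by the pool, which is the point of the abstraction.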

VMware defines software-defined storage as a fundamental component of the SDDC. With software-defined storage, resources are abstracted to enable pooling, replication, and on-demand distribution. The result is a storage layer much like that of virtualized compute: aggregated, flexible, efficient, and scalable. The benefits are an across-the-board reduction in the cost and complexity of storage infrastructure [5].

International Data Corporation (IDC) defines SDS as any storage software stack that can be installed on commodity resources (e.g., x86 hardware, hypervisors, or cloud) and/or off-the-shelf computing hardware. Furthermore, to conform to this definition, software-based storage stacks should offer a full suite of storage services and integration of the underlying persistent data placement resources so that tenants can move freely between these resources [6].

IDC asserts that software-based storage (SBS) platforms offer a compelling proposition for both incumbent and upcoming storage suppliers [7]. It is in the long-term interest of incumbents to change their hardware-centric mindset and join the ranks of emerging startups. This will bring about a paradigm shift. With the proliferation of SDS platforms, the delineation between hardware, software, and cloud storage suppliers will blur and eventually disappear [7].

IDC also observes that the SDS market has picked up steam. Nexenta Systems, based in Santa Clara, CA, raised $24 million in February 2013 to help advance its NexentaStor open storage platform [8]. VMware also made waves that month by snapping up virtual-storage specialist Virsto for an undisclosed amount. In December 2012, storage startup ScaleIO announced that it had raised $12 million to boost its ScaleIO ECS software operations [6].

SDS, on the other hand, was conceived with cloud environments in mind. According to Debbie Moynihan, VP of marketing at InkTank (a storage vendor), “Software-defined storage was designed to scale-out to thousands of nodes and to support multi-petabytes of data, which will be the norm as the amount of stored data continues to grow exponentially and as more and more storage moves to the cloud” [2].

The Storage Networking Industry Association (SNIA) Cloud Data Management Interface (CDMI) standard defines the functional interface that applications use to create, retrieve, update, and delete data elements from the cloud. As part of this interface, the client can discover the capabilities of the cloud storage offering and use the interface to manage containers and the data placed in them. Metadata can also be set on containers and the contained data elements [9].
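The shape of such an interface can be sketched by describing the HTTP requests a client might issue. The sketch below builds request descriptions as plain dictionaries and sends nothing. The helper `cdmi_request`, the paths, the metadata, and the version string are assumptions made for illustration; the header and media-type names follow our reading of the CDMI specification and should be checked against the version in use.

```python
import json

CDMI_VERSION = "1.0.2"  # assumed specification version


def cdmi_request(method, path, kind, body=None):
    """Describe a CDMI-style HTTP request as a dict (nothing is sent)."""
    media_type = f"application/cdmi-{kind}"
    headers = {
        "X-CDMI-Specification-Version": CDMI_VERSION,
        "Accept": media_type,
    }
    payload = None
    if body is not None:
        headers["Content-Type"] = media_type
        payload = json.dumps(body)
    return {"method": method, "path": path,
            "headers": headers, "body": payload}


# Discover what the storage offering supports.
discover = cdmi_request("GET", "/cdmi_capabilities/", "capability")

# Create a container and set metadata on it (hypothetical names).
make_container = cdmi_request(
    "PUT", "/reports/", "container",
    {"metadata": {"department": "finance"}})

# Create or update a data object, then retrieve and delete it.
put_object = cdmi_request(
    "PUT", "/reports/q1.txt", "object",
    {"mimetype": "text/plain", "value": "draft"})
get_object = cdmi_request("GET", "/reports/q1.txt", "object")
delete_object = cdmi_request("DELETE", "/reports/q1.txt", "object")
```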

1.3.1 Storage Virtualization versus SDS

SDS is similar to other software-defined elements of the data center, such as SDN [10], [11].

In many respects, SDS is more about packaging and how IT users think about and design data centers. Storage has been largely software-defined for more than a decade: the vast majority of storage features have been designed and delivered as software components within a specific storage-optimized environment.

SDS is sometimes referred to as a storage hypervisor, although the two concepts are somewhat different. Both terms are evolving, and vendors use them to describe different aspects of their storage systems.

Now the question arises, “Does SDS enable you to do something that you cannot do with traditional storage?” For the most part, SDS is an attempt to provide the same functions as those in traditional storage systems. What is different is the abstraction, which provides two key capabilities.

First, the storage control function can now execute on any server hardware. That means a storage system can be built with commodity hardware and commodity disks. This makes the purchase and implementation of a storage system more “kit-like,” but it also means that system implementation and management require more skill and time. This investment, however, can significantly reduce acquisition costs.

In addition, the storage controller can now be placed anywhere; it does not have to be installed on dedicated hardware. A growing trend is to implement the software storage controller within a virtual server infrastructure and use available compute power from the host or hosts within that infrastructure. This reduces costs further and creates a simpler scaling model. If a virtual storage controller is installed every time a host is added to the infrastructure, storage processing and capacity increase in lockstep with server growth.

In many ways, a storage hypervisor is part of SDS; it is the core element of an entire storage software stack. Again, vendors use the term differently, so its meaning is not standard.

1.3.2 Storage Virtualization and Server Virtualization

Storage vendors are trying to do for storage what server virtualization did for servers. With a server hypervisor, one big server is turned into multiple virtual machines. With a storage hypervisor, the opposite is true: many disparate storage parts are combined into one pool. The result is similar in terms of efficiency [12].

1.3.3 Open-Source Storage Software

Another emerging theme is open-source storage software. The commoditization of storage hardware and a new economic imperative to do more with less have spurred activity in open-source storage in recent months [13].

It is unlikely that open-source storage will transform the storage industry overnight; after all, storage strategy is still dominated by conservative, risk-averse thinking. However, there is already plenty of momentum in areas where open source may offer adequate performance and functionality at a much better price than traditional approaches.

Another area of interest is the cloud, where service providers offering storage as a service have turned to open-source storage in order to compete on price with the economies of scale enjoyed by cloud giants, such as Amazon.

This is a particularly active space right now, especially from an object storage perspective. The main area of interest is OpenStack-based efforts from companies such as Rackspace, HP, and Dell. Other companies, such as Basho Technologies and the DreamHost spin-off InkTank (with Ceph), are also lining up open-source object storage stacks that can underpin cost-effective, large-scale cloud storage services and potentially enhance or replace the Swift storage element of OpenStack.

Many other object storage suppliers are considering the open-source route, so activity in this area is likely to increase. Open-source storage may have its niche in small businesses and service providers, but it has yet to penetrate medium-sized and large enterprises in a meaningful way.

1.4 Software-Defined Security

In software-defined security (SDSec), protection is based on logical policies and is not tied to any server or specialized security device. Adaptive, virtualized security is achieved by abstracting and pooling security resources across boundaries so that regardless of where a user resource is located, it can be protected. It is not assumed that the user resource will remain in the same location.

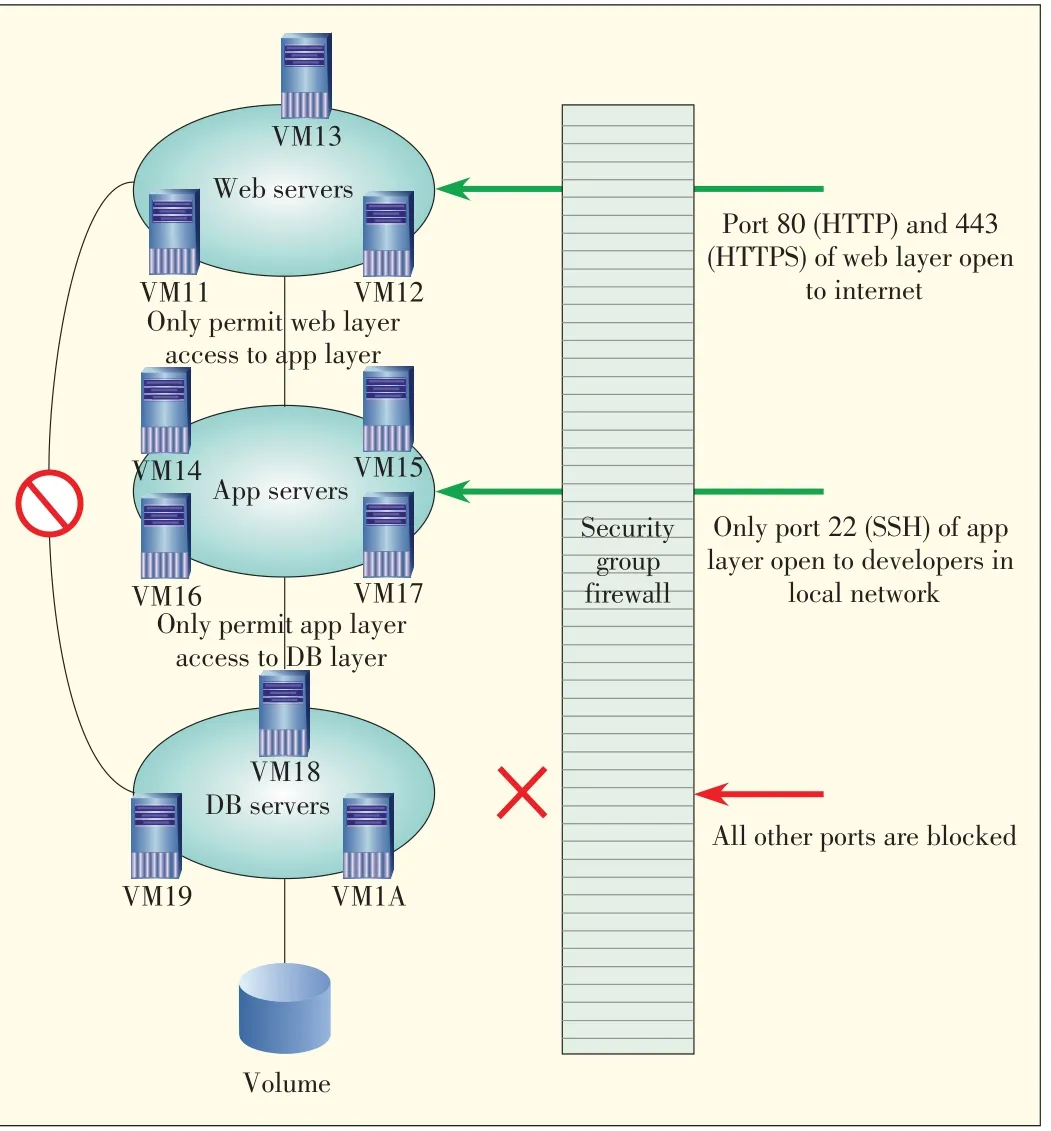

One or more instances (virtual machines), storage volumes, and so on can be grouped into a logical resource security group that shares a common set of rules for controlling who can access the instances. This set of rules specifies the protocols, ports, and source IP ranges for traffic filtering.

Fig. 2 shows a basic three-tier web-hosting architecture and describes inbound and outbound traffic control using security groups. Each tier has a different security group (SG).

The web server SG allows access from hosts over TCP only on ports 80 (HTTP) and 443 (HTTPS), and from instances in the app server SG on port 22 (SSH) for direct host management.

▲Figure 2. Inbound and outbound traffic control using a security group.

The app server SG allows access from the web server SG for web requests and from the local subnet over TCP on port 22 (SSH) for direct host management. Developers can directly log into the application servers from the local network. The database server SG permits only the app server SG to access the database servers.
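The rules of this three-tier example can be written down as data and checked with a small default-deny evaluator. The sketch is an illustration only: the `Rule` type and the `is_allowed` function are hypothetical, and the application port 8080, the database port 3306, the local subnet 10.0.0.0/24, and the reading of "hosts" as any address (0.0.0.0/0) are assumptions that do not appear in the text.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """Allow inbound TCP on `port` from a CIDR range or another group."""
    port: int
    source: str   # CIDR such as "0.0.0.0/0", or "sg:<group name>"


# Ports 8080 and 3306 and the subnet 10.0.0.0/24 are assumptions.
SECURITY_GROUPS = {
    "web": [Rule(80, "0.0.0.0/0"), Rule(443, "0.0.0.0/0"),
            Rule(22, "sg:app")],
    "app": [Rule(8080, "sg:web"), Rule(22, "10.0.0.0/24")],
    "db": [Rule(3306, "sg:app")],
}


def is_allowed(dest_group, port, src_ip, src_group=None):
    """Return True if any rule of dest_group admits the traffic."""
    for rule in SECURITY_GROUPS[dest_group]:
        if rule.port != port:
            continue
        if rule.source.startswith("sg:"):
            # Group-based rule: match on membership, not on address.
            if src_group == rule.source[3:]:
                return True
        elif ipaddress.ip_address(src_ip) in ipaddress.ip_network(rule.source):
            return True
    return False  # default deny
```

Because a rule can name another group as its source, the policy follows an instance wherever it runs, which matches the location-independent protection described at the start of this section.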

1.5 Software-Defined Hypervisor

In virtualization, a hypervisor is a software program that manages multiple operating systems, or multiple instances of the same operating system, on a single computer system. The hypervisor manages the system's processor, memory, and other resources in order to allocate the resources that each operating system requires. Hypervisors are designed for a particular processor architecture and may also be called virtualization managers.

A software-defined hypervisor enables virtualization of the hypervisor layer and decouples it from the underlying virtualization management. This enables selective use of different hypervisors, such as Hyper-V, KVM, and vSphere, in response to business requirements.
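One way to picture this decoupling is a thin layer that maps a business-level workload class to a hypervisor driver. The sketch below is hypothetical throughout: `HypervisorDriver`, `StubDriver`, `HypervisorLayer`, and the workload classes are illustrative names, and the stub does not call any real hypervisor API.

```python
from abc import ABC, abstractmethod


class HypervisorDriver(ABC):
    """Common interface that hides which hypervisor is underneath."""

    @abstractmethod
    def start_vm(self, vm_name):
        ...


class StubDriver(HypervisorDriver):
    """Stand-in for a real driver; it only reports what it would do."""

    def __init__(self, product):
        self.product = product

    def start_vm(self, vm_name):
        return f"{self.product}: start {vm_name}"


class HypervisorLayer:
    """Selects a driver per workload class (operator-defined policy)."""

    def __init__(self, drivers, placement_policy):
        self.drivers = drivers                    # product name -> driver
        self.placement_policy = placement_policy  # workload class -> product

    def start_vm(self, vm_name, workload_class):
        product = self.placement_policy[workload_class]
        return self.drivers[product].start_vm(vm_name)
```

Changing the business rule then means editing the policy mapping, not the code that requests a virtual machine.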

1.6 Software-Defined Availability

Software-defined availability (SDavailability) enables two or more virtual systems to be deployed on different platforms or at two or more locations according to availability or disaster-recovery requirements [14]. Table 1 gives a granular view of availability.

In VMware's vision of SDavailability, the SDDC provides availability for all applications, independent of the platform stack. This enables customers to establish a consistent first line of defense for their entire IT infrastructure. SDavailability can automatically detect and recover from any software or operating system failure that affects a virtual appliance [5].
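The two ideas in this section, spreading copies of a virtual system across locations and recovering automatically from a failure, can be sketched as follows. The functions `place_replicas` and `recover` and the site names are hypothetical; failure detection itself is not modeled, and this is not a description of any vendor's mechanism.

```python
def place_replicas(vm_name, sites, copies=2):
    """Place each copy of a virtual system at a different site."""
    if copies > len(sites):
        raise ValueError("not enough distinct sites for the requested copies")
    return {f"{vm_name}-{i}": site for i, site in enumerate(sites[:copies])}


def recover(placement, failed_site, sites):
    """Move copies off a failed site onto healthy sites not already in use."""
    in_use = set(placement.values()) - {failed_site}
    spare = [s for s in sites if s != failed_site and s not in in_use]
    new_placement = {}
    for replica, site in placement.items():
        if site == failed_site:
            if not spare:
                raise RuntimeError("no healthy site left for " + replica)
            site = spare.pop(0)
        new_placement[replica] = site
    return new_placement
```

For example, with sites `["site-a", "site-b", "site-c"]`, two copies start on `site-a` and `site-b`; if `site-a` fails, its copy moves to `site-c` so that the copies remain at distinct locations.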

2 DMTF Open SDDC Incubator

The SDDC [15] is an emerging area of technology that could revolutionize the IT infrastructure over the next several years. New technologies such as SDN and SDS have begun appearing on the market. Although there are many management standards for physical, virtual, and cloud-based systems, there are currently no standard architectures or definitions for SDDC. According to Dave Bartoletti of Forrester Research, “At the core of the software-defined datacenter is an abstracted and pooled set of shared resources. But the secret sauce is in the automation that slices up and allocates those shared resources on-demand, without manual tinkering” [16].

To address this demand, DMTF has proposed an Open Software Defined Data Center (OSDDC) incubator that will develop use cases, reference architectures, and requirements based on real-world customer needs. With these inputs, the incubator will help in the development of a set of white papers and recommendations for industry standardization.

The DMTF OSDDC incubator describes an SDDC as a pool of compute, network, storage, and other resources that can be dynamically discovered, provisioned, and configured according to workload. The SDDC provides abstraction that enables policy-driven orchestration of workloads as well as management and measurement of resources consumed. An SDDC comprises a set of features, including [17]:

·a pool of compute, network, storage, and other resources

·discovery of resource capabilities

·automated provisioning of logical resources based on workload requirements

·management and measurement of resources consumed

·policy-driven orchestration of resources to meet SLOs of the workloads.

3 OpenStack Software-Defined Infrastructure

OpenStack is a cloud operating system, which means that it is the software that manages the computer resources in a cloud data center [18].

Currently, there is no data center that is entirely standardized on a single virtualization technology. Looking at compute virtualization alone, one is likely to encounter a mix of PowerVM, z/VM, KVM, VMware ESX server, Microsoft Hyper-V, and perhaps a handful of other technologies in any given data center. How is it possible to create a programming layer across such a diverse set of server virtualization technologies?

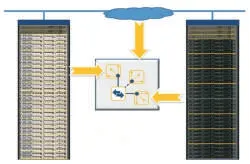

An answer to this is OpenStack, which the IT industry has settled on for software-defined infrastructure (SDI). OpenStack SDI spans all compute virtual environments and enables the integration of heterogeneous compute, storage, and network environments into a single, programmable infrastructure to support (rack of) virtual appliances.

To achieve this goal, OpenStack promotes a disaggregated resource model of three independent device controllers (Fig. 3) [19]: compute (Nova), network (Neutron), and storage (Cinder).

Nova, also known as OpenStack Compute, is the software that controls the IaaS cloud computing platform. It is similar in scope to Amazon EC2 and Rackspace Cloud Servers. Nova does not include any virtualization software; rather, it defines drivers that interact with underlying virtualization mechanisms that run on the host operating system, and it provides functionality over a web API.

▲Figure 3. OpenStack software-defined infrastructure model.

Neutron is an OpenStack project designed to provide network connectivity as a service between interface devices (e.g., vNICs) managed by other OpenStack services (e.g., Nova). A Neutron server provides a web server that exposes the Neutron API and passes all web service calls to the Neutron plugin for processing.

Cinder is an OpenStack project intended to provide block storage as a service.

Each device controller comprises a control API, a resource allocator, and a device manager.
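The three-part structure of a device controller can be sketched generically. The classes below are hypothetical and do not reproduce the code or API of Nova, Neutron, or Cinder; the first-fit allocation rule and the abstract "units" of capacity are assumptions chosen to keep the example short.

```python
class ResourceAllocator:
    """Decides which device should host a requested resource."""

    def __init__(self, capacity):
        self.free = dict(capacity)   # device name -> free units

    def allocate(self, units):
        # Assumed rule: first device (in insertion order) with enough room.
        for device, free in self.free.items():
            if free >= units:
                self.free[device] = free - units
                return device
        raise RuntimeError("no device has enough free capacity")


class DeviceManager:
    """Talks to the devices; here it only records the commands."""

    def __init__(self):
        self.log = []

    def create(self, device, name, units):
        self.log.append((device, name, units))


class DeviceController:
    """Control API in front of an allocator and a device manager."""

    def __init__(self, allocator, manager):
        self.allocator = allocator
        self.manager = manager

    def create_resource(self, name, units):
        # Control API entry point: allocate first, then drive the device.
        device = self.allocator.allocate(units)
        self.manager.create(device, name, units)
        return {"name": name, "device": device, "units": units}
```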

4 OASIS Topology and Orchestration Specification for Cloud Applications

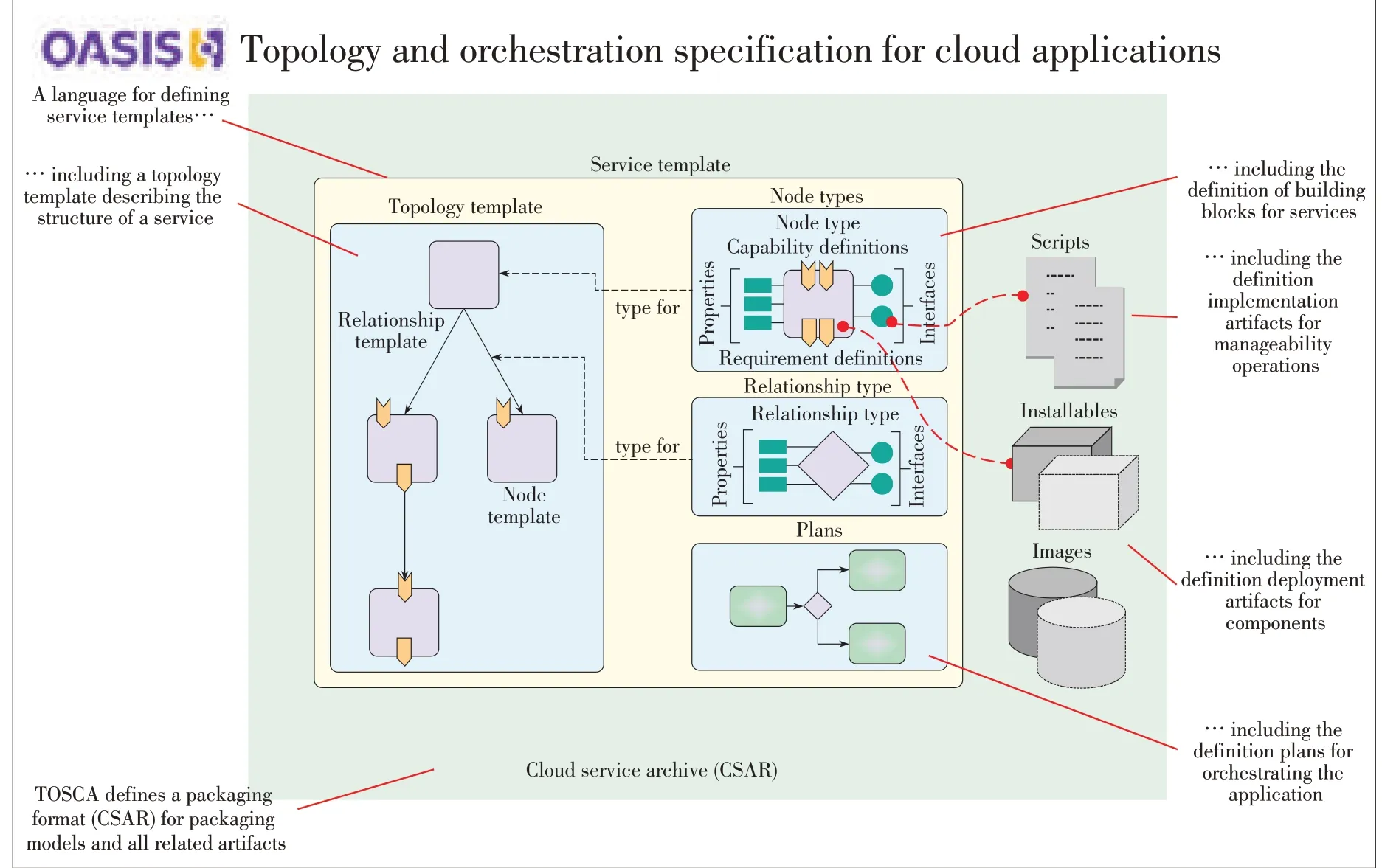

Topology and Orchestration Specification for Cloud Applications (TOSCA) is a proposed OASIS standard for portability of applications/cloud services across diverse cloud infrastructures [20].

TOSCA is intended to be the standard for describing IT services that go beyond IaaS. It is also intended to describe service templates across *aaS layers, which are built on the resource abstraction layer comprising SDC, SDS, and SDN.

Fig. 4 gives a technical overview of TOSCA and the software-defined component model.

TOSCA defines a metamodel for defining IT services. This metamodel defines both the structure of a service and how to manage it. A topology template, also called the topology model, defines the structure of a service. Plans define the process models used to create and terminate a service as well as manage the service during its lifetime.

A topology template comprises a set of node templates and relationship templates. Together, all of these templates define the topology of a service as a directed graph (not necessarily a connected graph). A node in this graph is represented by a node template, which specifies the occurrence of a node type as a component of a service. A node type defines the properties of such a component (via node type properties) and the operations (via interfaces) available to manipulate the component.
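The metamodel described above can be mirrored in a few data classes. This is a sketch of the concepts only, written in Python rather than in the TOSCA document syntax; the class and field names, and the example relationship kind used in the usage note, are illustrative assumptions.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class NodeType:
    """Defines the properties and operations of a kind of component."""
    name: str
    properties: tuple = ()   # property names
    operations: tuple = ()   # interface operation names


@dataclass(frozen=True)
class NodeTemplate:
    """Occurrence of a node type as a component of a service."""
    id: str
    type: NodeType


@dataclass(frozen=True)
class RelationshipTemplate:
    """Directed edge between two node templates."""
    source: str
    target: str
    kind: str


@dataclass
class TopologyTemplate:
    nodes: dict = field(default_factory=dict)
    relationships: list = field(default_factory=list)

    def add_node(self, template):
        self.nodes[template.id] = template

    def relate(self, source, target, kind):
        if source not in self.nodes or target not in self.nodes:
            raise KeyError("both ends must be node templates in this topology")
        self.relationships.append(RelationshipTemplate(source, target, kind))

    def successors(self, node_id):
        """Targets reachable from node_id over one directed edge."""
        return [r.target for r in self.relationships if r.source == node_id]
```

A topology with an application node related to a server node (for instance by a "hosted on" relationship) and a separate database node with no relationships is valid in this model, which reflects that the graph need not be connected.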

▲Figure 4. TOSCA technical overview.

5 Conclusion

To realize an SDDC, data center resources, such as compute, network, storage, security, and availability, are expressed as software. They also need to have certain characteristics, such as multitenancy; rapid resource provisioning; elastic scaling; policy-driven resource management; shared infrastructure; instrumentation; and self-service, accounting, and auditing. This ultimately entails a programmable infrastructure that enables valuable resources to be automatically cataloged, commissioned and decommissioned, repurposed, and repositioned.
