Open Augmented Reality Standards: Current Activities in Standards-Development Organizations

Christine Perey

(PEREY Research & Consulting, Route de Chernex 2B, 1820 Montreux, Switzerland)

Abstract Augmented reality (AR) has emerged from research laboratories and is now being accepted in other domains as an attractive way of visualizing information. Before AR can be used in the mass market, there are a number of obstacles that need to be overcome. Several of these can be overcome by adopting open standards. A global grassroots community seeking open, interoperable AR content and experiences began to take shape in early 2010. This community is working collaboratively to reduce the barriers to the flow of data from content provider to AR end user. Standards-development organizations and industry groups that provide open interfaces for AR meet regularly to provide updates, identify complementary work, and seek harmonization. The community also identifies deployer and implementer needs, communicates requirements, and discusses emerging challenges that could be resolved with standards. In this article, we describe current activities in international standards-development organizations. We summarize the AR standards gap analysis and shed light on special considerations for using standards in mobile AR.

Keywords augmented reality, standards, open interfaces, AR reference model

1 Introduction

Since late 2008, augmented reality (AR) has rapidly gone from being a topic of research and pilot projects to being a promising new way of providing value to almost any user scenario that involves the physical world and digital data. By providing digital information in real time and in tight association with the user's physical surroundings, AR enhances the experience of “the present” for pleasure, learning, and professional goals.

As technologies mature and solutions for producing and experiencing the world with AR proliferate, companies must work harder to define and protect their unique contributions to the AR ecosystem. In most markets, standards emerge once a sufficient number of organizations see market and business value in interoperating with the solutions or services of others. Standards frequently provide a shared platform for technology and market development. They ensure the smooth running of an ecosystem, in which different segments contribute to and benefit from the success of the whole, and they are commonly the basis for robust, economically viable value chains. Widespread adoption of standards throughout the AR ecosystem will ensure interoperability between traditional and emerging content-creation and management systems. It will also increase the variety and amount of data available for use in AR-enhanced end-user experiences.

At the time of writing, interoperable, open-standards-based AR content creation and management platforms do not exist. Applications for experiencing AR using standards-compliant interfaces and protocols also do not exist. This is not to say that there has been no change in the AR ecosystem over the past year or that there has been a lack of progress towards open-standards-based AR experiences.

Over the past year, the number of single-vendor technology “silos” (technology that is closed and managed by a single vendor) has increased, and the challenge of publishing once for use across fragmented markets has grown more complex. At the same time, an increasing number of important industry groups and standards-development organizations (SDOs) have begun working on new, open frameworks and extensions of existing standards in which AR is the primary use case or one of the most important use cases. Finally, the number of users with AR-compatible devices has also increased, making the need for standards clearer to participants in the ecosystem who seek to reach the mass market.

In 2012, there has been widespread interest in and increasing requests for standards-compliant AR solutions. Content publishers that can provide high-value AR experiences are motivated to make their data available to users of various devices that are connected to all types of networks or that are not connected at all. By adapting the assets for use by many terminals, and by adapting content delivery options, publishers can monetize content for different use cases across substantially larger markets. This maximizes the audience size and potential revenue. Adoption of standards also reduces implementation costs and mitigates investment risks by making devices and use cases independent. By the beginning of 2013, leading technology providers will respond to these needs by introducing interfaces that comply with open standards and by introducing applications that provide AR experiences based on content authored in or delivered by platforms of competitors or partners.

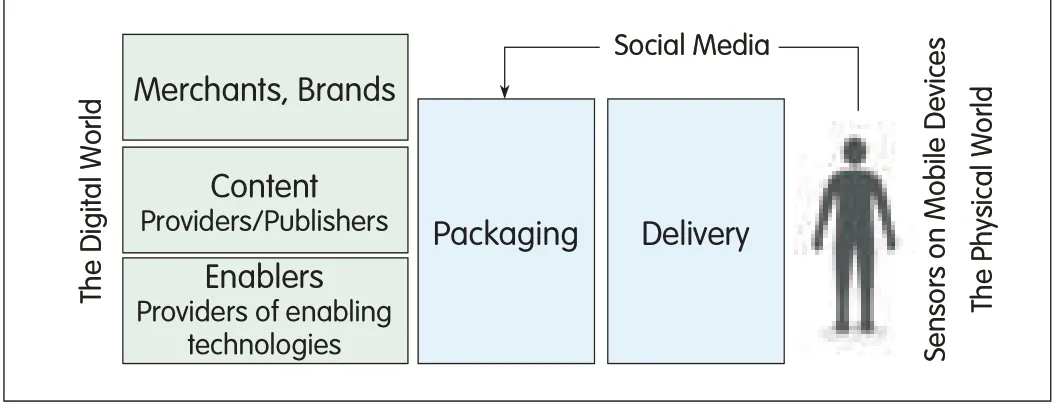

▲Figure 1. Ecosystem of mobile AR segments.

2 Guiding Principles of an Open AR Industry

Open AR (interoperable systems for viewing content overlaid on the physical world in real time) is necessary for the continued evolution of the AR market. There are numerous standards that can be used to develop and deploy open AR; however, there are still interoperability gaps in the AR value chain. Work needs to be done on determining best practices for using existing international standards to build and deliver AR. Where there are interoperability gaps, new standards are being or need to be defined, documented, and tested. Pushing new standards through the development process of a standards organization may not suit the needs of the AR community, and profiles of existing standards may be more appropriate. Further, the development of a framework of standards for designing, building, and delivering AR is beyond the resources of any single body. Because AR requires the convergence of so many technologies, there are numerous interoperability challenges, and there will be no universal AR standard. Instead, AR will leverage hardware and software based on a suite of standards.

Many developers of AR technology seek to leverage existing standards that solve different interoperability issues. In order to address new issues raised by AR, we must extend standards that have been proven and optimized to permit applications to learn user locations, display objects on the screen, timestamp every frame of a video, and use inputs to manage behaviors.

One of the strongest motivations for cross-standard, multiconsortium, open discussions about standards and AR is time-to-market. Repurposing existing content and applications is critical. Existing standards (or profiles of standards) should be used to avoid repeating mistakes, and currently deployed and proven technologies, including emerging technologies, should be used to address urgent issues for AR publishers, developers, and users.

However, the time-to-market argument is only valid if most or all members of the ecosystem agree that having open AR, the opposite of technology silos, is a good thing. It is evident from the participation of academic and institutional researchers, companies of all sizes, and industry consortia representing technology groups that there is agreement across many parts of the AR ecosystem about the need for standards [1].

2.1 Suggested Model of a General AR Ecosystem

The AR ecosystem comprises at least six interlocking, interdependent groups of technologies. Fig. 1 shows how these groups bridge the space between the digital and physical worlds.

On the far right of Fig. 1, there is the client device in the end-to-end system. The user holds or wears the client, which provides an interface for interacting with one or more services or applications in the digital world. The device also incorporates an array of real-time sensors, such as cameras, pressure sensors, or microphones. The sensors in the device detect the surrounding environment and, in some cases, also the user's input. There may also be sensors in the environment that could be accessed via the application. The client device is also the output for the user, permitting visualization or other forms of augmentation, such as sounds or haptic feedback.

Manufacturers of components and AR-capable devices such as smartphones may be present both in the client segment of the ecosystem and in the enabler segment at the far left of Fig. 1. At the right side, client devices are frequently tightly connected to the networks.

Network providers, application store providers, government and commercial portals, social networks, spatial data infrastructures, and geo-location service providers provide the discovery and delivery channel by which the user experiences AR. This segment, like the device segment, overlaps with other segments so that companies may be content providers as well as device manufacturers.

Packaging companies provide tools and services that permit viewing of any published and accessible content. In this segment, there are subsegments such as AR SDK and toolkit providers, Web-hosted content platforms, and content developers who provide professional services to agencies, brands, and merchants.

Packaging companies provide technologies and services to organizations that have content suitable for context-driven visualization. Unlike other segments in the AR ecosystem, which existed before AR became possible on consumer devices, this segment is new. It developed rapidly between 2008 and the end of 2011. This segment has the greatest number of proprietary AR solutions. The packaging segment must adapt rapidly in the near future because open-standard interfaces will be incorporated into client software, including the device operating systems, and will be used by content providers.

Packaging companies sometimes rely on providers of enabling technologies (Fig. 1). As with the packaging segment, there are subsegments of the enabler segment. These subsegments include semiconductor, sensor, and algorithm providers. The enabler segment is rich in existing standards that are being assimilated by the packaging segment companies.

Content providers have public, proprietary, and user-provided data. Traditionally, proprietary content was the primary content used in AR. More recently, content such as traffic data and base maps has been provided by government agencies, and content such as OpenStreetMap has been provided by volunteer sources. An excellent example of the evolution from proprietary to mixed-content platform is map data used in AR.

Traditional content providers, brands, and merchants seeking to provide digital information to users of AR-enabled devices were previously reluctant to enter the AR ecosystem because investment in a proprietary solution for a small audience was seen as a dead end. Two trends are stimulating content providers to examine AR more closely. First, companies have begun to realize that a dedicated application, such as an application for trying on glasses, gives real value to users and increases their engagement with a brand. This, in turn, increases sales. AR is now seen as a good investment for understanding end-user behavior and for being perceived as a forward-looking company. Second, in 2013, content providers will be looking carefully at how widely standards have been adopted for content encoding and for providing platforms and packaging companies with access to content. Content providers are waiting for this clear indication of market maturity. Standard interfaces and encoding will also allow content providers to access content from many more sources, including proprietary, user-provided, and government sources. Content providers and those who experience AR will benefit when AR content standards are available to present the source content in a consistent and high-quality manner.

3 AR Requirements and Use Cases

For the proper development of AR standards, there needs to be a very clear understanding of AR requirements and use cases. Different domains have different AR requirements; for example, mass-market mobile AR used in tourism has different requirements from AR used by first responders in emergencies. However, analyses have been done to identify the most common requirements. By specifying use cases and requirements, standards organizations are beginning to obtain information about which standards are best for given situations or workflows. Given that different AR segments have different needs for standards, a number of different standards bodies and industry consortia have been working on different aspects of the AR standards stack. Stronger collaboration between these bodies is required. Through open, face-to-face meetings, such as AR DevCamps and those of the International AR Standards Community, people from vastly different backgrounds are convening to exchange and share information about their successes and how these may be used to overcome challenges in interoperable and open AR. Having a common set of use cases and related requirements provides a lingua franca for collaboration and discussion.

Collaboration between SDOs and their related expert communities is crucial at this juncture in the growth of AR. If we can benefit from the experience of those who have written, implemented, and optimized today's most popular standards for AR-like applications, such as OpenGL ES, JSON, HTML5, KML, GML, CityGML, and X3D, the goal of interoperable AR will be more quickly achieved and many costly errors may be avoided.

In discussions on standards, it is generally agreed that developing standards specifically for AR applications is only necessary in a small number of cases. Instead, repurposing (creating profiles) and better understanding standards that have already been developed by organizations such as the Khronos Group, Web3D Consortium, World Wide Web Consortium (W3C), Open Geospatial Consortium (OGC), ETSI 3GPP, Open Mobile Alliance (OMA), National Information Standards Organization (NISO), British Information Standards Organization (BISO), and Society for Information Display (SID) will ensure the greatest impact, largest markets, and most stable systems in the future.

3.1 Requirements of AR Content Publishers

Publishers who produce and deploy AR content are extremely varied. They include multinational media conglomerates, device manufacturers, governments at all levels, users themselves, and individual content developers who wish to share their personal trivia or experiences. Publisher subsegments have needs that align with their specialized markets or use cases. Broadly speaking, a content publisher seeks to

•reach the maximum audience with the same content

•provide content in formats that are suited to special-use cases (also called repurposing)

•provide accurate, up-to-date content

•control access, for example, to billing, and limit piracy.

Content publishers desire a simple, lightweight markup language that is easily integrated into existing content-management systems and that attracts a large community of developers who will customize the content. Extending or creating profiles of the NISO information standards could be very useful; however, this has yet to be done. Standards that specify the relationship between AR-ready content and KML could also be very useful. In 2011, members of the OGC, which is responsible for Keyhole Markup Language (KML), initiated extensions to KML and ARML 2.0. This could be highly relevant to the content-provider segment.

3.2 Requirements of the Packaging Segment

This is the segment of the AR ecosystem in which proprietary technology silos are most evident and where control of content-development platforms is highly competitive. The needs of providers of tools and platforms are significantly different from (and should be differentiated from) those of professional service providers, who use tools to gain their livelihoods.

Tools and platform providers seek to

•access and process content from distributed repositories and sensor networks. This may include repackaging for efficiency. However, for content such as maps or location content, the ability to access the content closest to the source gives the end user the latest, best quality.

•offer their tools and platforms to a large (preferably existing) community of developers who develop commercial solutions for customers

•integrate and fuse real-time sensor observations into the AR application

•quickly develop and bring to market new, innovative features that make their system more desirable than a competitor's or a free solution.

Professional developers of content are service providers who use the SDKs and platforms for publishing.They seek to

•make AR versions of (repurpose) existing tools and content. This helps manage costs and promote learning curves.

•provide end users with rich experiences that leverage an AR platform but, at the same time, have features that tie users to existing platforms for social networking, communications, navigation, content administration, and billing.

The packaging segment has a central role in designing and preparing AR experiences for end users. It also has a central role in the eventual propagation of open-standards-based tools and will be sensitive to and affected by the development of standards in SDOs. Activities relevant to AR in OGC, W3C, Web3D Consortium, SID, Khronos Group, and OMA should proceed with strong input from this segment.

3.3 Discovery and Delivery Segment Requirements

Companies that provide connectivity (access and transport) between AR platforms and end-user devices have previously been defined as the “discovery and delivery” segment of the AR ecosystem. This segment provides value to ubiquitous AR experiences by ensuring that users can promptly and securely access the content necessary for AR. It also ensures that statistics about AR experiences are systematically and accurately captured.

Those in the delivery segment seek to

•receive and transmit requests from user devices to subscribe to or unsubscribe from AR experiences. These requests are processed according to the user's context, for example, location and detection of a marker, in a manner that is scalable, reliable, and flexible to local network conditions.

•suspend and resume (hold/unhold) AR sessions in a way that makes optimal use of communications infrastructure

•access, cache, retrieve, and distribute content (assets) for use in AR experiences in a way that makes optimal use of distributed, low-cost resources

•adapt the AR content delivery to the capabilities of the user device.

The OMA is working on a definition of the MobAR Enabler. In conjunction with W3C, OGC, and other SDOs, it is taking into account the requirements of the delivery segment in the AR ecosystem [2].

3.4 AR System and Content Users

This is the most diverse segment in the AR ecosystem. Users include all people, related services, and organizations involved in future scenarios. It is natural that users of AR systems and content want to have experiences that leverage the latest technologies and the best, most up-to-date content without losing any of the benefits they have grown accustomed to.

Regardless of the use case or final objectives,end users seek to

•request and receive content in the context of their surroundings and in tight association with local points of interest. This may involve the use of filters, formats, and resolutions suited to the user's device and connectivity.

•receive content in AR view from multiple sources as part of a single, integrated AR experience

•rate and give feedback to the publisher about content

•create personal media and associate it with the real world (“social” AR).

In addition, the OMA has stated that end users should be able to

•personalize AR settings according to their preferences

•permit or prevent the collection of end-user metrics by AR content and system providers

•use AR in conjunction with reliable, secure billing mechanisms that are directly linked to the subscriber's account.

4 Approaches to the Challenge of AR Standards

To meet the needs of developers, content publishers, platform and tool providers, network operators, device providers, and users of the AR ecosystem, experts in hardware-accelerated graphics, imaging and computing, cloud computing and Web services, digital data formats, navigation, sensors, geospatial content and services management, and hardware and software devices must collaborate.

4.1 Basic Tools of the Standards Trade

Standards that are useful to AR segments leverage the know-how that is gained from creation of and experimentation with open-source, commercial, and precommercial implementations. The process of recommending standardization usually begins with the development of a core set of requirements and use cases. This work is then followed by the establishment of a vocabulary (terms and definitions), information models, and abstract architectures. Principal objectives must also be agreed upon.

The results of one or more gap analyses, combined with known or emerging ecosystem requirements, reveal where the community should concentrate its standardization efforts. Guided by these directions, new standards work done by existing standards organizations can begin to fill the gaps and support AR experiences.

4.2 Standards Gap Analysis

A gap analysis begins with a detailed examination of available standards in order to determine which standards are close to meeting AR ecosystem requirements and which are not. Gap analysis began as a community-driven activity involving developers and providers of tools and content. It began in October 2010 at the International AR Standards Meeting in Seoul and continued throughout 2011 and into the first half of 2012.

In gap analysis, problems are divided into those related to content and software and those related to infrastructure, such as device hardware and networks. The following describes the gap analysis to date.

5 Content-Related Standards

5.1 Declarative and Imperative Approaches to Content Encoding

First, for a gap analysis to succeed, it is important to clarify differences between the declarative and imperative approaches used in standards to describe and deliver content.

The imperative approach usually defines how something is computed; for example, it may define the code. Such definitions may cover storage, variables, states, instructions, and flow control. The imperative approach usually benefits from many options and user-centered variations. Imperative code can be designed in any manner as long as it conforms to the common rules of the interpreting background system. A typical example is JavaScript, which is a highly popular implementation of the ECMAScript (ECMA-262) language standard and a part of every Web browser on a mobile or desktop device today.

Declarative approaches are more restrictive, and their design usually follows a strict behavior scheme and structure. They consist of implicit operational semantics that are transparent in their references and describe what is computed. Declarative approaches usually do not deliver states; thus, it is more difficult to create a dynamic system using a declarative approach. On the other hand, declarative approaches tend to be more transparent and easy to generate and use. A common declarative language in use today is the W3C XML standard, which defines a hierarchical presentation of elements and attributes. Another coding form for declarative data is JavaScript Object Notation (JSON), which is a lightweight, easy-to-port data interchange format. It builds on the ECMAScript specification.

Both approaches, either individually or in combination, are likely to be used to create AR content encoding and payload standards.
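The contrast between the two approaches can be illustrated with a minimal sketch. The JSON field names (`label`, `visible_within_m`, and so on) and the function names are hypothetical and are not taken from any AR standard; the declarative payload only states what an annotation is, while the imperative function spells out how visibility is decided.

```python
import json
from typing import Dict, List

# Declarative: the payload describes WHAT the annotation is.
# All field names here are illustrative assumptions, not a standard.
DECLARATIVE_PAYLOAD = """
{
  "label": "Cafe",
  "latitude": 46.4312,
  "longitude": 6.9107,
  "visible_within_m": 150
}
"""


def load_annotation(payload: str) -> dict:
    """Parse the declarative description; it carries no behavior or state."""
    return json.loads(payload)


# Imperative: the code describes HOW a result is computed,
# using explicit state (the list) and flow control (loop, condition).
def visible_labels(annotations: List[dict], distances_m: Dict[str, float]) -> List[str]:
    shown = []
    for item in annotations:
        distance = distances_m.get(item["label"])
        if distance is not None and distance <= item["visible_within_m"]:
            shown.append(item["label"])
    return shown


annotation = load_annotation(DECLARATIVE_PAYLOAD)
print(visible_labels([annotation], {"Cafe": 120.0}))
```

In this sketch the same declarative record could be consumed by any interpreter, whereas the visibility rule lives entirely in the imperative code and could vary between applications.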

5.2 Existing Standards

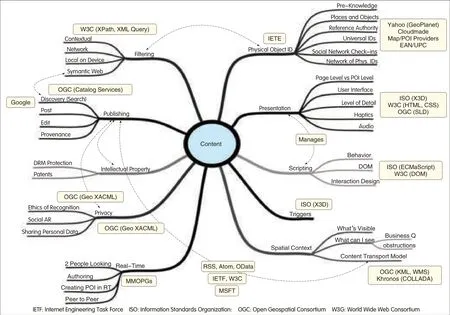

There are many standards related to content or payload encoding that could be used for AR applications. Fig. 2 shows an initial inventory of these standards and their possible relationships.

At the time of this analysis, there are at least ten mature standards based on geographic information systems (GIS). These standards could be used in AR services and applications that rely on the user's location. There are also another five to six ratified and widely adopted international standards that define the position and interactivity of digital objects in a user's visual space.

5.3 New Standards Activities

In addition to the numerous existing standards, at least two new AR-specific standards are being developed.

The W3C Points of Interest Working Group is drafting the first version of the POI specification. The POI draft specification has been completed, will be voted on in late 2012, and is estimated to be available in early 2013. It will provide a simple data model for describing a POI that includes but is not limited to

•an anchor

•an extent

•a URI

•a category

•an address.
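The listed elements can be sketched as a simple record type. This is only an illustration of the five elements named above: the field types, the bounding-box form of the extent, the example URI, and the JSON serialization are assumptions and do not reproduce the W3C draft encoding.

```python
import json
from dataclasses import asdict, dataclass
from typing import Optional, Tuple


@dataclass
class PointOfInterest:
    """Hypothetical container for the POI elements listed in the text."""
    anchor: Tuple[float, float]           # assumed (latitude, longitude)
    uri: str                              # identifier or link for the POI
    category: str                         # free-text category label
    # Assumed bounding box: (south, west, north, east)
    extent: Optional[Tuple[float, float, float, float]] = None
    address: Optional[str] = None


def to_json(poi: PointOfInterest) -> str:
    """Serialize the record so that it can be exchanged between systems."""
    return json.dumps(asdict(poi), sort_keys=True)


poi = PointOfInterest(
    anchor=(46.4312, 6.9107),
    uri="https://example.org/poi/1",      # placeholder URI
    category="cafe",
    address="1 Example Street",           # placeholder address
)
print(to_json(poi))
```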

In September 2011, the OGC ARML 2.0 Working Group began examining and harmonizing different approaches to using extensions or profiles of KML to create AR experiences. The charter of the ARML WG is to provide an open-standards approach to using KML to encode the placement and interactivity of a digital object. The group's work is advancing and results may be available for testing in late 2012.
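As background for how KML encodes placement, the following sketch reads a plain KML 2.2 Placemark with the Python standard library. It does not use ARML 2.0, which the text describes as still in development; the sample document and the function name `read_placemarks` are illustrative assumptions. Note that KML lists coordinates in longitude, latitude, altitude order.

```python
import xml.etree.ElementTree as ET

KML_NS = {"kml": "http://www.opengis.net/kml/2.2"}

# Minimal illustrative KML document with a single point placemark.
KML_DOC = """<kml xmlns="http://www.opengis.net/kml/2.2">
  <Placemark>
    <name>Example marker</name>
    <Point><coordinates>6.9107,46.4312,0</coordinates></Point>
  </Placemark>
</kml>"""


def read_placemarks(kml_text: str) -> list:
    """Return name and position for every point Placemark in the document."""
    root = ET.fromstring(kml_text)
    results = []
    for placemark in root.iterfind(".//kml:Placemark", KML_NS):
        name = placemark.findtext("kml:name", default="", namespaces=KML_NS)
        coords = placemark.findtext(
            "kml:Point/kml:coordinates", default="", namespaces=KML_NS
        ).strip()
        if not coords:
            continue  # skip placemarks without point geometry
        parts = [float(value) for value in coords.split(",")]
        results.append({
            "name": name,
            "lon": parts[0],                              # longitude comes first in KML
            "lat": parts[1],
            "alt": parts[2] if len(parts) > 2 else 0.0,   # altitude is optional
        })
    return results


print(read_placemarks(KML_DOC))
```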

5.4 Gap Analysis and Work in Progress

The gap analysis suggested in section 4.2 has been performed by the International AR Standards Community. The analysis revealed four very important issues in content-related standards:

•Terminology and architectural frameworks related to content (AR in particular) are not consistent across SDOs. This makes comparing standards, conducting ongoing activities, and using standards for open AR very difficult.

•There are multiple description systems for 3D that could be appropriate for AR, and these must be examined for suitability. Some of the existing 3D standards may be extended to describe AR experiences involving 3D objects.

•A suitable encoding standard for 3D object transmission is needed for many applications. This could be developed with minimal new investment, and it could be based on existing standards.

•An appropriate set of standards for indoor positioning and navigation is lacking. This is significantly hampering the development of certain AR use cases that require positioning of the user both indoors and outdoors.

▲Figure 2. Standards landscape and effect on mobile AR.

To address the first of these issues, the International AR Standards Community has begun to develop the Augmented Reality Reference Model to describe AR from perspectives defined by the ISO/IEC 10746 standard for reference models.

To address the second of these issues, the Declarative 3D Community Group of the W3C has begun holding meetings. It will discuss approaches with members of other WGs in the W3C as well as members of the Khronos Group, ISO/IEC JTC 1 SC 29 WG 11, and Web3D Consortium.

To address the third of these issues, the Khronos Group, Web3D Consortium, and ISO/IEC JTC 1 SC 29 WG 11 (MPEG) began a dialog in December 2011. In March 2012, at the AR Standards Community meeting in Austin, TX, they drafted a joint proposal for development. Work on a new 3D compression and transmission standard is underway in a cross-SDO working group established through a series of liaison memorandums for this purpose.

To address the fourth of these issues, standards groups have begun to discuss indoor positioning and navigation. In January 2012, the OGC board approved the formation of the IndoorGML Standards Working Group, which conducted its first meeting in Austin, TX, in March 2012. Further development of a middleware API for abstracting between the positioning technology and depiction of indoor spaces will be very valuable to the AR development community.

6 Considerations for Mobile AR Standards

Many promising technologies have been developed and integrated into mobile device hardware. New sensor technologies allow data for mobile AR applications to be delivered in a format that can be processed. The processing power of mobile devices has increased exponentially in recent years, as has network and memory bandwidth to support the latest mobile devices and applications. Software frameworks and platforms for mobile application development have also advanced greatly, permitting developers to create new user experiences very quickly. This creates huge potential for context- and location-aware AR experiences or applications to be extended to take advantage of new capabilities.

Although these developments have accelerated growth in the number and diversity of mobile AR-ready devices, this growth has come at a cost. There are numerous standards available, but they are not well adapted for mobile platforms or use in AR. New work needs to be done to implement lighter profiles of these existing standards for use in mobile AR experiences so that content is delivered consistently across multiple, different platforms and in different use scenarios.

6.1 Mobile AR and Sensors

A sensor outputs information about an observed property. It uses a combination of physical, chemical, or biological means to estimate the underlying observed property. A sensor observes external phenomena and represents them with an identifier or description, usually in the form of an estimated value. Satellites, cameras, seismographs, water temperature and flow monitors, and accelerometers are examples of sensors. Sensors may be in-situ, such as those in an air pollution monitoring station, or they may be dynamic, such as a camera in an unmanned aerial vehicle. The sensor detects a property at a specific point in time and at a specific location, that is, within a temporal and spatial context. Further, the location of the sensor might be different from the location of the observed property. This is the case for all remote-observing sensors, such as cameras or radar.

From a mobile AR perspective, sensors may be in the device (onboard) or outside the device (external) and may be accessed by the AR application as required. Regardless, all sensors have descriptions of the processes by which observations and measurements are generated. They also have other related metadata, such as quality, time of last calibration, and time of measurement. Metadata, or characteristics, are critical for developers and applications that require sensor observations. A standard language for describing a sensor, its metadata, and processes will allow for greater flexibility and ease of implementation when accessing and using sensor observations in AR applications.

Sensors behave differently in different devices and platforms. Even when the same phenomenon is observed, dynamic (mobile) sensor observations may be inconsistent because of differences in manufacturing tolerances or measurement processes. Calculating a user's indoor location is one example where wide variation may occur. Different location-measurement technologies provide different levels of accuracy and quality. Inconsistency is exacerbated by factors such as interference from other devices and materials in the building.

Approaches that use computer-vision algorithms to improve the accuracy of geo-positioning data in mobile AR are promising. However, these approaches require new models for recognition, sensor fusion, and pose reconstruction to be defined. Ideally, an abstraction layer that defines these different “sensor services” with a well-defined format and sensor characteristics would address existing performance limitations.
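To illustrate why a well-defined format with sensor characteristics matters, the sketch below combines position estimates from sources of different accuracy using inverse-variance weighting. This is one common, simple fusion technique chosen here for illustration, not a method prescribed by any of the standards discussed; it assumes independent, unbiased errors, and the `PositionEstimate` type and its fields are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, Tuple


@dataclass
class PositionEstimate:
    """Hypothetical uniform format for a position report from any source."""
    source: str      # e.g. "wifi" or "vision"
    x_m: float       # local east coordinate, metres
    y_m: float       # local north coordinate, metres
    sigma_m: float   # reported 1-sigma accuracy, metres


def fuse(estimates: Iterable[PositionEstimate]) -> Tuple[float, float, float]:
    """Inverse-variance weighted mean; returns (x, y, fused sigma)."""
    items = list(estimates)
    if not items:
        raise ValueError("at least one estimate is required")
    if any(e.sigma_m <= 0 for e in items):
        raise ValueError("sigma_m must be positive")
    # More accurate sources (smaller sigma) receive larger weights.
    weights = [1.0 / (e.sigma_m ** 2) for e in items]
    total = sum(weights)
    x = sum(w * e.x_m for w, e in zip(weights, items)) / total
    y = sum(w * e.y_m for w, e in zip(weights, items)) / total
    return x, y, (1.0 / total) ** 0.5


fused = fuse([
    PositionEstimate("wifi", 10.0, 4.0, 5.0),     # coarse radio-based fix
    PositionEstimate("vision", 12.0, 5.0, 1.0),   # finer vision-based fix
])
print(fused)
```

The fusion step is only possible because every source reports its accuracy in the same way, which is the kind of characteristic an abstraction layer for sensor services would need to standardize.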

For some AR applications, real-time processing of vision data is crucial. In these cases, raw camera data cannot be processed in a high-level programming environment and should be processed on a lower level. When processing is done at a lower level, algorithms that create an abstracted sensor-data layer for pose are beneficial.

Sensors with different processing needs can contribute to the final AR experience. At the same time, higher-level application logic may benefit by taking data from multiple sensors into account. Standards may provide direct access to sensor data or higher-level semantic abstractions of it.

A critical source of content for many AR experiences, regardless of domain, will be near-real-time observations from in-situ and dynamic sensors. In-situ sensors are used for traffic, weather, fixed video, and stream gauges. Dynamic sensors are used in unmanned aerial vehicles, mobile human contexts, and satellites. Already, some content used in AR is obtained via sensor technology, such as LIDAR. This content is processed and stored in a content management system. There are many other sources of sensor data that are, or will be, available on demand or by subscription. These sensor observations need to be fused into the AR environment in real time as well. There is a need for standards that enable the description, discovery, access, and tasking of sensors within the user's environment so that these sensors can be included in AR experiences.

6.2 Mobile AR Processing Standards

Considerable work has previously been done on standards for situational awareness, sensor fusion, and service workflows. This is a large field in which many existing standards are suitable for use by AR developers and content publishers. Some of these standards can be applied to processes in an AR workflow. For example, the OGC Web Processing Service provides rules for standardizing inputs and outputs (requests and responses) for geospatial processing services, such as polygon overlay. The standard also defines how a client can request the execution of a process and how the output of the process is handled.
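As a rough illustration of how a client might request execution of a process, the Python sketch below builds an Execute request, assuming the key-value-pair (HTTP GET) encoding of WPS version 1.0.0. The endpoint URL, the process identifier polygon_overlay, and the input names are hypothetical placeholders; a real service advertises its own process identifiers and inputs. No network call is made.

```python
from urllib.parse import urlencode, urlsplit, parse_qs


def build_wps_execute_url(endpoint, process_id, inputs):
    """Build a WPS 1.0.0 Execute request URL using key-value-pair encoding."""
    # DataInputs are written as name=value pairs separated by semicolons.
    data_inputs = ";".join(f"{name}={value}" for name, value in inputs.items())
    query = urlencode({
        "service": "WPS",
        "version": "1.0.0",
        "request": "Execute",
        "identifier": process_id,   # which process the server should run
        "datainputs": data_inputs,  # percent-encoded by urlencode
    })
    return f"{endpoint}?{query}"


# Hypothetical endpoint, process name, and inputs (assumptions for illustration).
url = build_wps_execute_url(
    "https://example.org/wps",
    "polygon_overlay",
    {"layer_a": "parcels", "layer_b": "flood_zones"},
)

# Decode the query again to inspect what the server would receive.
params = parse_qs(urlsplit(url).query)
```

The same request can also be sent as an XML document over HTTP POST, which is usually the more practical choice when inputs are large geometries rather than short literal values.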

Another approach, describing vision-based tracking environments with visual (camera) constraints, was first taken by Pustka et al., who introduced spatial relationship patterns for AR environment descriptions [3].

There are several standards that could be used for the presentation and visualization workflow stack for AR experiences. An AR-enabled application can use a service interface to access content such as a map. There are also lower-level standards for rendering content on the device. Not all AR requires the use of 3D. In some cases, especially in devices with low-power processors that cannot render 3D objects, 2D annotations are preferable. A very convenient declarative standard for describing 2D annotations is W3C's Scalable Vector Graphics (SVG) standard or even HTML.
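To make the idea of a declarative 2D annotation concrete, the following Python sketch generates a small SVG document containing a marker and a text label at a given screen position. The function name make_annotation_svg, the canvas size, and the styling values are assumptions chosen for illustration; only the SVG namespace and element names come from the SVG standard.

```python
from xml.etree import ElementTree as ET

SVG_NS = "http://www.w3.org/2000/svg"


def make_annotation_svg(label, x, y, canvas=(320, 240)):
    """Return SVG markup with a marker at (x, y) and a text label beside it."""
    ET.register_namespace("", SVG_NS)  # serialize without a namespace prefix
    svg = ET.Element(f"{{{SVG_NS}}}svg", {
        "width": str(canvas[0]),
        "height": str(canvas[1]),
    })
    # Small circle marking the anchor point of the annotation.
    ET.SubElement(svg, f"{{{SVG_NS}}}circle", {
        "cx": str(x), "cy": str(y), "r": "4", "fill": "red",
    })
    # Label drawn slightly to the right of the marker.
    text = ET.SubElement(svg, f"{{{SVG_NS}}}text", {
        "x": str(x + 8), "y": str(y + 4), "font-size": "12",
    })
    text.text = label  # special characters are escaped on serialization
    return ET.tostring(svg, encoding="unicode")


svg_markup = make_annotation_svg("Cafe & Bar", 100, 60)
```

Because the annotation is plain declarative markup, the same string can be handed to any SVG-capable renderer or embedded in an HTML page; the AR application only has to supply the screen coordinates computed by its tracking component.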

6.3 Mobile AR Acceleration and Presentation Standards

Augmented reality is highly demanding in terms of computation and graphics performance. Truly compelling AR on mobile devices requires the advanced computation and graphics capabilities found in today's smartphones.

Many mobile AR applications make direct and/or indirect use of hardware to accelerate computationally complex tasks. Because device hardware varies, standard application programming interfaces (APIs) reduce the need to customize software for specific hardware platforms.

The Khronos Group is an industry standards body that defines open APIs that enable software to access high-performance silicon for graphics, imaging, and computation. A typical AR system with 3D graphics uses several existing Khronos standards as well as some that are under development.

6.4 Using HTML5 for a Universal Mobile AR Viewer

All smartphones and most other mobile devices have a Web browser. Many already support elements of the HTML5 standard, and the trend towards full HTML5 support will continue. HTML5 is a very clear and simple option for AR developers. Within a Web browser, JavaScript allows access to the document object model (DOM) so that declarative elements can be observed, created, manipulated, and removed. One option for standardizing AR could be to integrate declarative standards and data directly into the DOM.

X3Dom is a complete 3D renderer that uses JavaScript. It is built on WebGL, a JavaScript interface for encapsulating OpenGL ES 2.0. X3Dom is completely independent of the mobile platform on which the application runs. This does not imply that an output solution, such as X3Dom, is all that is needed to make a universal viewer for AR. A convenient interface extension for distributed access to real-time processed, concrete, or distributed sensor data is also required.

7 Conclusions and Future Directions

In the creation of AR experiences, disciplines such as computer-vision recognition and tracking, geospatial information, and 3D graphics converge. Algorithms for computer vision and sensor fusion are provided by researchers and software engineers, or they are provided as third-party services. Content for presentation and application logic is likely to be created by experienced designers. In this paper, we have discussed standards that can be used for interoperable and open AR. We have discussed issues in AR standards development and the use of international standards in AR applications and related services. We have identified the different standards bodies and players in different fields that already supply standards that are highly relevant to AR.

Although extending and using existing standards is highly beneficial to the objectives of the AR community, there must also be room for new ideas and evolving technologies. Therefore, standardization meetings such as International Standards Community meetings have been held to find the best interconnection and synergies between SDOs.

These standards coordination meetings enable all players in the AR ecosystem to discuss requirements, use cases, and issues and also establish focused working groups to address specific issues in AR standardization. This approach enhances AR standardization in specialized fields.

In the future, technologies will be able to filter reality to allow a user to see things differently. This implies that a person can view a scene from different positions without having to move. Perspective and context are maintained in order to expose or diminish other elements in the scene. Work in this area will help maintain privacy, especially in collaborative applications, or help users focus their attention on the main task in the context.

Applying existing standards (or their extensions) for AR on the sensor side in mobile devices requires more research, specifically on the delivery of improved context for fixed objects. The processing of moving objects in the scene and of variable light conditions also needs to be improved. Such improvements involve both hardware and software and are being researched in many laboratories.

These potential AR improvements and use cases require deep interoperability and integration of sensors so that data can be properly acquired and presented. AR improvements also require enhanced use of advanced silicon for imaging, video, graphics, and computational acceleration and must operate at battery-friendly power levels. AR will benefit greatly from the emergence of interoperable standards in divergent domains.

Acknowledgements

This article is an adaptation of “Current Status of Standards for Augmented Reality,” a chapter co-authored by Christine Perey and published by Springer in Mobile Collaborative Augmented Reality: Recent Trends (ISBN 978-1-4419-9844-6, pp. 19-34). The author wishes to thank Neil Trevett (NVIDIA), Timo Engelke (Fraunhofer IGD), and George Percivall and Carl Reed (OGC) for their continued support and contributions to the international AR standards community.