Juxtapose of System Performance for Food Calorie Estimation Using IoT and Web-based Indagation

Anusuya S and Sharmila K

(1. Department of Computer Science, VISTAS, Chennai 600117, India; 2. Department of Computer Science, Dr. MGR Janaki College for Women, Chennai 600028, India)

Abstract: Health is a fundamental aspect of human life, and an individual’s wellbeing is strongly affected by the food consumed. Calorie intake is therefore a crucial quantity that must be monitored carefully. Many health problems can be largely avoided when the number of calories ingested is balanced against the number of calories expended. Food calorie estimation is a popular research domain and has been studied extensively through image processing and machine learning techniques. However, evaluating calorie estimation across different platforms and algorithmic approaches provides deeper insight into the bottlenecks involved and helps improve the bariatric health of an individual. This paper compares two approaches to food calorie estimation: a Convolution Neural Network (CNN) incorporated in the Internet of Things (IoT), and a web page built with the Django framework in Python, with query rule-based training used to analyze the actions to be taken after the consumption of food calories. The food calorie estimates produced on both platforms are compared in terms of speed of identification, error rate, and classification accuracy to determine the optimal method of use. The simulation results for IoT are carried out using the Raspberry Pi 4B model, while the Anaconda prompt is used to run the server holding the web page.

Keywords: food calorie estimation; convolution neural network; Django framework; internet of things; Raspberry Pi

0 Introduction

The prevalence of obesity and the consumption of unhealthy junk food have emerged as significant global health concerns. Obesity, a growing problem worldwide, is attributed to a lack of physical activity and an imbalanced diet, and results in adverse health conditions such as hypertension, diabetes, and heart attacks. Accurate measurement of dietary intake plays a crucial role in maintaining a balanced diet and preventing these diseases. However, manually measuring the nutrient content of each food item is challenging and time-consuming. Therefore, the development of automated systems that assist individuals in making informed food choices is of utmost importance. Several machine learning-based calorie prediction approaches have been proposed in earlier works to address this need. However, the previous version of this paper lacked a comprehensive discussion of the relevant literature and did not provide an overview of the developments in the field. Existing methodologies have incorporated image processing, deep learning, and other techniques to analyze food calorie content. Algorithmic approaches and mobile applications have been developed to cater to the needs of users. Clarity and accuracy in the input data play a crucial role in the successful implementation of these methodologies.

The World Health Organization (WHO) shows that the number of individuals suffering from diseases associated with obesity is higher than the number of those who are underweight. Existing surveys and study data show that the obese population is constantly increasing, with almost 30% holding a high morbidity rate. The analyzed data also indicates that about three million are prone to problems associated with food intake, such as heart diseases, cancer, and diabetes. The energy imbalance between the calories ingested and the calories expended is considered the onset of obesity and uncontrolled fat. Therefore, the identification of food calories can aid a great deal in avoiding various diseases such as obesity, diabetes, and cardiovascular problems. While the domains of fitness, bariatric treatments, and cosmetic commodities and practices have grown enormously, the careful scrutiny of calorie intake through different methodologies remains a fundamental step toward improving healthy living standards. In recent times, physical inactivity, along with large changes in food habits, has rapidly affected the health parameters of individuals. The recent pandemic played a large role in influencing obesity problems in individuals and has prompted various discussions about standards of well-being.

This paper proposes a systematic bi-platform approach combining the Internet of Things (IoT) and the Django framework in Python to develop a web page called "Foodie". Foodie enables individuals to track their calorie intake and provides recommendations for maintaining a balanced diet. The IoT component utilizes the Convolutional Neural Network (CNN) algorithm to identify the calorie content of food items, while the web page is constructed using Python scripts and the Django architecture. The comparative analysis of these platforms focuses on their accuracy, processing speed, and error rates, which help users understand any misclassifications in the systems.

This paper is structured as follows. Section 1 reviews the related literature. Section 2 presents the implemented methodology, including the framework and the comparative analysis. Section 3 discusses the experimental evaluation of the proposed work and the outcomes obtained. Section 4 concludes the paper and outlines further directions that can improve research in this domain.

1 Related Work

This section reviews the literature on food calorie estimation and its incorporation in the various system platforms that have been developed in the last few years. The manual approach to measuring nutrient information is inefficient, since patients cannot always remember the diet they have taken. Many machine learning based methods have been developed in which an image of a food item is given as input and the output is the amount of calories present in that image[1-2]. Some of the pros and cons of the previous works are discussed below.

Siva Senthil[3] elaborated on the architecture of the proposed model, which uses four modules: user registration, input of the nutritional values, a QR code, and a weighing sensor. The primary goal of the research is to analyze the calories of the food taken by quantifying the amount of food involved. The sensor that weighs the food is a portable machine that computes the calories[4-7] and renders a message to analyze the threshold of the ingested versus the expended calories. The QR scanner then computes the calories of packed food in order to render precise outcomes. While the proposed model by Kamakshi et al.[8] incorporates important components for calorie monitoring, there are certain limitations that need to be addressed. Firstly, the use of a weighing sensor may pose practical challenges in terms of portability and convenience for users. Additionally, the reliance on QR code scanning limits the system’s applicability to packaged foods, potentially overlooking homemade or restaurant-prepared meals.

Sun et al.[9] proposed a machine learning based automatic classification of nutrient measurement based on CNN. The dietary value is predicted with the help of the YOLO framework. The work shows efficient classification of food images, but the computational complexity increases during the training and testing process. Another work in Ref.[10] uses a deep learning framework for efficient recognition of food images and dietary measurement, and the proposed model is compared with other models such as AlexNet, ResNet, and VGG. The measurement of calories is done through a digital assistant in which the details are recorded. However, people are unlikely to carry this digital assistant with them every time before eating.

Image shape, size, colour, and other characteristics are used for dietary level measurement from food images[11]. SVM is used as the classification technique to compute the calorie measurement based on a nutrition table. Mobile phones are used to provide the dietary information to the users. However, the approach is not efficient enough, since it cannot cope with the measurement of semi-solid and liquid food.

The authors of Ref.[12] described the construction of an IoT Based Automated Nutrition Monitoring and Calorie Estimation System in the mobile environment. Registration involves collecting user information and passing it through the MyMqtt broker interface, which activates the microcontroller module. A USB camera captures the image of the food placed on the weighing sensor and thereby aids in timestamping it. In our proposed methodology, we aim to further enhance the capabilities of calorie estimation by leveraging deep learning techniques and the Django framework to develop the "Foodie" web application. Our system utilizes advanced neural network architectures to accurately estimate the nutritional content of various food items. While both approaches address the challenges of nutrition monitoring and calorie estimation using IoT and mobile technologies, our work extends the research by focusing on the integration of deep learning algorithms and web-based user interfaces. These additions provide a more advanced and user-friendly platform for individuals to monitor and manage their dietary intake.

The IoT ThingSpeak cloud is used in Ref.[13] to load the collected data and analyze it further. The CNN model is deployed in the IoT network to classify the obtained food images[14]. The nutritional data is obtained from the USDA database, and the weighing sensor computes the calories. The deep learning method is then used through pre-trained models to produce predictive classifications for the food object involved. The accuracy of prediction was evaluated to be 90.69% with a loss ratio of 0.23. The study aims to analyze obesity and pragmatic datasets, and incorporates food cuisines from various geographical locations to understand the correlation established through the stratification parameters and the common diseases that may be triggered by excessive calorie consumption.

We conducted an extensive review of literature on calorie estimation using machine learning and deep learning methods. Many of the earlier works[15-18] have emphasized the use of deep learning frameworks to enhance the accuracy of calorie prediction. These frameworks have shown promising results in improving the efficiency of the training process while maintaining the integrity of the central layers. In our work, we have integrated IoT and deep learning strategies, allowing for effective training processes without compromising the performance of low-powered devices.

2 Proposed Work

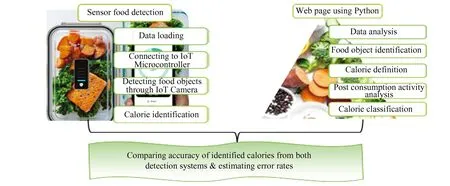

The proposed methodology for this investigation involves the implementation of food calorie estimation through a bimodal platform of IoT and web page construction. The overall architecture that this study utilizes is presented in Fig.1.

Fig.1 Overall Architecture of the proposed study

2.1 Data Collection

The dataset used in this study consists of food images collected from various online sources, including recipe websites and social media platforms. The dataset contains over 10000 images of different foods, including fruits, vegetables, grains, meat, and dairy products. Each image is labeled with the food type and the corresponding calorie content. The dataset is split into training, validation, and testing sets with a ratio of 80∶10∶10.
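The 80∶10∶10 split described above can be sketched as follows. This is a minimal illustration rather than the authors' actual pipeline: the function name `split_dataset`, the fixed shuffle seed, and the use of integer stand-ins for image records are all assumptions made for the example.

```python
import random

def split_dataset(items, ratios=(0.8, 0.1, 0.1), seed=0):
    """Shuffle items and split them into train/validation/test lists."""
    if abs(sum(ratios) - 1.0) > 1e-9:
        raise ValueError("ratios must sum to 1")
    shuffled = list(items)
    # A seeded generator keeps the split reproducible between runs.
    random.Random(seed).shuffle(shuffled)
    n = len(shuffled)
    n_train = round(n * ratios[0])
    n_val = round(n * ratios[1])
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]  # the remainder goes to the test set
    return train, val, test

if __name__ == "__main__":
    # Integers stand in for (image, food type, calorie) records.
    train, val, test = split_dataset(range(10000))
    print(len(train), len(val), len(test))
```

Shuffling before slicing avoids any ordering bias from the way the images were collected; a stratified split per food type would be a natural refinement if the classes are unbalanced.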

2.2 Data Processing

Before training the model, the images in the dataset are preprocessed to improve the quality of the data. First, the images are resized to 224×224 pixels to match the input size of the Inception_v2 architecture. Then, the images are normalized to have zero mean and unit variance so that the model converges faster during training. Data augmentation techniques, such as random cropping and horizontal flipping, are also applied to increase the diversity of the training data. To estimate the volume of food items in the images, we utilized the K-means clustering algorithm. Specifically, we utilized the processed mathematical structure of each image and tested different K values to find the best estimation of K. To select the optimal K value, we calculated the root mean square error (RMSE) for each K value from 1 to 100. The K value that produced the minimum RMSE was selected as the best K value.
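Two of the preprocessing steps named above, normalization to zero mean and unit variance and horizontal flipping, can be illustrated with a short sketch. It operates on plain Python lists instead of real image tensors, and the helper names `standardize` and `horizontal_flip` are hypothetical; resizing and random cropping are omitted because they would normally be delegated to an image library.

```python
import math

def standardize(pixels):
    """Scale a flat list of pixel values to zero mean and unit variance."""
    n = len(pixels)
    mean = sum(pixels) / n
    var = sum((p - mean) ** 2 for p in pixels) / n
    std = math.sqrt(var) or 1.0  # fall back to 1.0 for constant images
    return [(p - mean) / std for p in pixels]

def horizontal_flip(image):
    """Mirror a row-major image (a list of rows) left to right."""
    return [row[::-1] for row in image]

if __name__ == "__main__":
    print(standardize([0, 2, 4, 6]))
    print(horizontal_flip([[1, 2, 3], [4, 5, 6]]))
```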

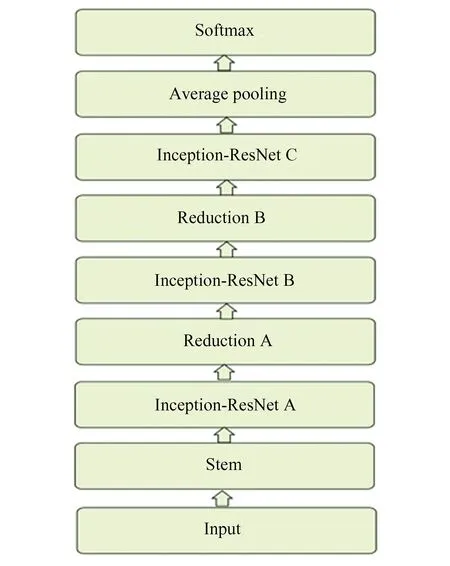

2.3 Model Architecture

The Inception_v2 architecture consists of multiple layers of convolutional, pooling, and activation functions. The architecture uses a series of filters with different sizes to capture features at different scales. We chose the Inception_v2 architecture for its high accuracy and speed, which make it a suitable choice for image recognition on IoT devices with limited computational resources. The Inception_v2 architecture is composed of multiple modules called "Inception modules".

Each Inception module consists of multiple branches of convolutions and pooling operations that are merged together at the end. The output of each Inception module is fed into the next Inception module, allowing the network to learn increasingly complex features as the image is processed through the layers. We used pre-trained weights for the Inception_v2 network, which were trained on a large dataset of images, and fine-tuned the network on a smaller dataset of images relevant to our use case. This approach helped to improve the accuracy of the network while reducing the training time. Fig.2 shows the inception architecture.

Fig.2 Inception architecture

In the food calorie estimation work, the Inception_v2 architecture is used to extract features from food images, which are then used to estimate the calorie content of the food. The architecture consists of multiple blocks, including the input layer, stem, 9 inception blocks, pooling and dropout layers, and output layer.

The inception blocks contain multiple convolutional layers with different filter sizes, as well as 1×1 convolutions used for dimensionality reduction, to extract features at different scales. The output of the final inception block is passed through a global average pooling layer to generate a fixed-size feature vector, followed by a fully connected layer with 1024 units and a dropout layer to prevent overfitting.

The final output layer consists of a fully connected layer with a single output unit, which estimates the calorie content of the food. A custom ReLU activation function with a negative slope of 0.01 is used for the convolutional layers in the Inception_v2 architecture, which helps prevent the "dying ReLU" problem by ensuring that all neurons receive a non-zero gradient during training. This custom ReLU function outperformed standard ReLU and LeakyReLU functions for the specific task of food calorie estimation.
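The effect of a negative slope of 0.01 can be seen in a small sketch. The paper does not give the exact definition of its custom activation, so the code below simply assumes the usual leaky form (identity for positive inputs, slope 0.01 otherwise); the function names are hypothetical.

```python
def leaky_relu(x, negative_slope=0.01):
    """Pass positive inputs through; scale the rest by negative_slope."""
    return x if x > 0 else negative_slope * x

def leaky_relu_grad(x, negative_slope=0.01):
    """Derivative of leaky_relu; it is never exactly zero."""
    return 1.0 if x > 0 else negative_slope

if __name__ == "__main__":
    for value in (-3.0, 0.0, 2.0):
        print(value, leaky_relu(value), leaky_relu_grad(value))
```

Because the gradient for negative inputs is 0.01 and not 0, a unit whose pre-activation is negative still receives a small weight update, which is the mechanism behind avoiding the "dying ReLU" problem.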

2.4 Network Training

During training, the model is optimized using the stochastic gradient descent (SGD) optimizer with a learning rate of 0.001 and a momentum of 0.9. The model is trained for 50 epochs, and the weights with the lowest validation loss are saved as the final model.
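A minimal sketch of one SGD-with-momentum update and of picking the epoch with the lowest validation loss is given below. It assumes the classical momentum form (velocity = momentum × velocity − learning rate × gradient); deep learning libraries differ slightly in how they fold the learning rate into the velocity, and the paper does not state which variant was used. The function names and the toy loss are illustrative only.

```python
def sgd_momentum_step(weights, grads, velocity, lr=0.001, momentum=0.9):
    """Apply one classical momentum update and return (weights, velocity)."""
    new_velocity = [momentum * v - lr * g for v, g in zip(velocity, grads)]
    new_weights = [w + v for w, v in zip(weights, new_velocity)]
    return new_weights, new_velocity

def best_epoch(val_losses):
    """Return the index of the epoch with the lowest validation loss."""
    return min(range(len(val_losses)), key=val_losses.__getitem__)

if __name__ == "__main__":
    # Toy problem: minimise f(w) = w^2, whose gradient is 2w.
    w, v = [1.0], [0.0]
    for _ in range(50):
        w, v = sgd_momentum_step(w, [2 * w[0]], v)
    print(w[0])
    print(best_epoch([0.9, 0.5, 0.7]))
```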

1) The first step in tracking calorie intake with images is to recognize the food being consumed, which can be challenging due to the diversity of cuisines and dishes. To address this challenge, neural networks are used due to their ability to identify complex, non-linear patterns and handle factors such as image noise. Once the food is classified, the next step is to estimate its caloric content by searching online for the average calorie values per weight unit. To maintain consistency, the average calorie values for each food category are calculated per 100 g serving.

2) The model utilizes the ImageNet database and the Food-101 dataset for image classification, resulting in a total of 101 categories. The model’s specifications include an input size of 299×299×3, with a Max pooling downscale of 2 in each spatial dimension and a dropout rate of 0.4. The softmax activation function is used, and the optimization method used is stochastic gradient descent.

3) In the image processing stage, a one-hot encoding technique is used to obtain a set of binary features for each label. An image augmentation pipeline, including cropping tools and the Inception image preprocessor, is implemented. To maximize GPU utilization, a multiprocessing tool is employed.
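The one-hot encoding of labels and the softmax output mentioned in the steps above can be sketched as follows. This is an illustration under assumptions, not the authors' code: the helper names are hypothetical, and the three logits in the usage example are made-up values.

```python
import math

def one_hot(label_index, num_classes):
    """Return a binary vector with a single 1 at label_index."""
    vec = [0] * num_classes
    vec[label_index] = 1
    return vec

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtracting the maximum avoids overflow in exp
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

if __name__ == "__main__":
    print(one_hot(2, 5))
    probs = softmax([1.0, 2.0, 3.0])
    print(probs, probs.index(max(probs)))
```

With 101 categories, each label becomes a 101-element vector, and the predicted class is the index of the largest softmax probability.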

2.5 Implementation of IoT for Food Calorie Estimation using CNN

To implement IoT for food calorie estimation, a specific cloud space is allocated to load the data, and the IoT device is connected to act as a microcontroller for further processing. The Raspberry Pi 4B hardware with four USB 2.0 ports, built-in Ethernet, 1.2 GHz 64-bit quad-core processor, 802.11 Wireless LAN, Bluetooth 4.1 Low Energy, 4GB RAM, and a sensor camera is used to capture food objects and evaluate the number of calories they may hold. To track calorie intake with images, the first step is to capture an image of the food about to be eaten using a camera or smartphone. Then, the image is processed through a machine learning algorithm that analyzes the features of the food in the image, such as its shape, size, texture, and color, to identify the type of food and estimate its calorie content.

The algorithm uses a deep neural network, which consists of multiple layers of artificial neurons, to learn from a large dataset of labeled food images. Each layer of the network extracts more complex and abstract features from the input image, gradually building a hierarchical representation of the food that captures its unique characteristics.

Once the network has learned to recognize different types of food and estimate their calorie content, it can be used to predict the calorie content of new, unseen food images. This can be done using a mobile app or a web-based tool that allows users to take a picture of their food and receive an estimate of its calorie content. By tracking their calorie intake over time, users can monitor their diet and make more informed choices about their eating habits.

2.6 Construction of Web Page Using Python

After training the deep learning model on the dataset, we deployed the model on a Raspberry Pi for use in the web application. The Raspberry Pi is a low-cost, credit-card-sized computer that is widely used in educational and prototyping projects. To ensure compatibility and consistency, we decided to use the Django web framework for the construction of the web page.

To deploy the model, we first converted it into a format that could be loaded onto the Raspberry Pi using TensorFlow Lite, a lightweight version of the TensorFlow framework designed for mobile and embedded devices. We optimized the model for the Raspberry Pi’s ARM processor architecture and reduced its size to minimize memory usage.

For the web application, we designed a user-friendly interface that allows users to take a photo of their food and upload it to the application. The application then utilizes the deployed model to estimate the volume of the food item in the image and calculate its calorie content. The estimated volume and calorie content are displayed to the user on the web page.

To construct the web page, we utilized the Django framework in conjunction with HTML, CSS, and JavaScript. The Django framework offers a robust set of tools and features for building web applications. It provides a consistent and efficient development environment, ensuring the seamless integration of the deployed model into the web application.

3 Experimental Analysis

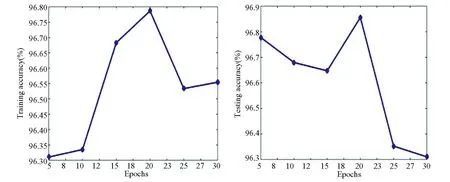

This section presents the outcomes generated after applying the techniques explained above. The figure below shows a compilation of food objects along with the calories analyzed through the IoT implementation, and Fig.3 shows the training and testing accuracy obtained through CNN in IoT.

Fig.3 Training and testing accuracy of CNN implemented in IoT

The results below depict the outcome of food calorie analysis through the query-driven Django framework in Python implemented in the web page.

The experiment was conducted in a Python environment using the NumPy, Pandas, NLTK, and Keras libraries. The hardware comprises 8 GB RAM, a 1 TB HDD, 4 GB GDD, and an Intel i7 core processor. The performance of the proposed work is measured in terms of accuracy, error rate, and implementation time. The variance between the predicted value and the original value is the AAE, which is calculated as follows.

where X_i represents the predicted value, X represents the original value, and n is the number of parameters used in the calculation.
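The AAE equation itself does not survive in this text. A form consistent with the symbol definitions above, assuming that AAE denotes the average absolute error over the n values, would be:

```latex
\mathrm{AAE} = \frac{1}{n} \sum_{i=1}^{n} \left| X_i - X \right|
```

This is a reconstruction from the stated symbols only and should be checked against the published equation.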

ARE is used to measure the whole size of the object with absolute error.

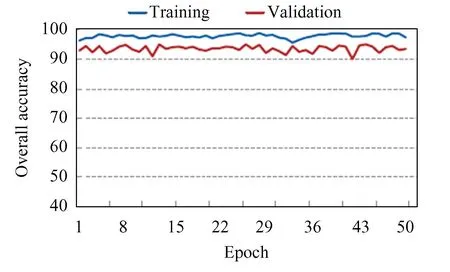

The training process generates the overall accuracy as shown in Fig.4 with the training and the validation parameters. The classification accuracy is the ratio between the number of individuals accurately predicted as having the disease and the number of individuals who are actually affected by the disease. The accuracy is calculated as follows:

Fig.4 Overall accuracy

A comparative chart of the overall accuracy obtained on both platforms, together with the corresponding error rates, is presented below.

From Fig.5, we observe that the proposed work achieves an accuracy of 98%, which is better than the other compared models.

Fig.5 Comparative analysis of proposed work

In our experiments, we utilized two publicly available and challenging datasets, namely UEC-100/UEC-256 and Food-101. The proposed approach outperformed all existing techniques, as demonstrated in the sub-sections below. The UEC dataset comprises two sub-datasets, UEC-100 and UEC-256, which were developed by the DeepFoodCam project. This dataset includes a substantial number of food categories with textual annotation, primarily consisting of Asian foods (i.e., Japanese foods). UEC-100 contains 100 categories, with a total of 8643 images, approximately 90 images per category. UEC-256 includes 256 categories, with a total of 28375 images, approximately 110 images per category. All images are correctly labeled with their respective food categories and bounding-box coordinates indicating the positions of the labeled food partition. We chose UEC-256 as the baseline dataset due to the requirement of a large-scale training dataset. All images were divided into 5 folds, with 3 folds used for training and 2 folds used for testing. In our experiment, we initially used the pre-trained model with a 1000-class category from the ImageNet dataset, which is publicly available in the model zoo from Caffe’s community. The pre-trained model was trained using 1.2 million images for training and 100000 images for testing. We further fine-tuned the model based on the UEC-256 dataset, whose output category number is 256. The model was fine-tuned (ft) with a base-learning rate of 0.01, a momentum of 0.9, and 100000 iterations.

The authors in Ref.[19] reported an accuracy of 91.73% for predicting calorie intake from food images using a CNN model trained on a dataset of 277016 images. Another study[20] used an inception model to estimate calorie intake from food images in a free-living environment, and achieved an accuracy of 88.85% on a dataset of 2793 images. The model in Ref.[21] achieved an accuracy of 97.6% on a test set of 1500 food images. Ref.[22] achieved a top-1 accuracy of 81.5% and a top-5 accuracy of 84.7% on the Food-101 dataset.

To estimate the volume of food items in the images, we utilized the K-means clustering algorithm. Specifically, we utilized the processed mathematical structure of each image and tested different K values to find the best estimation of K. To select the optimal K value, we calculated the root mean square error (RMSE) for each K value from 1 to 100. The K value that produced the minimum RMSE was selected as the best K value. In this study, we found that the optimal K value for the K-nearest neighbor technique was 7. For each food item, we selected the seven food items that were most mathematically similar to estimate the volume of the food item. We then took the average volume of these seven food items as the estimated volume for the given food item. The ground truth for the volume was obtained by physically measuring the volume of the food item using a measuring cup. The food volume estimation errors for each food item can be found in Table 1.
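The selection of K by minimum RMSE and the averaging over the most similar items can be sketched as below. The sketch follows the K-nearest neighbor description in the paragraph above (average the volumes of the K most similar items); the use of Euclidean distance, the leave-one-out evaluation, the function names, and the toy feature vectors are all assumptions made for illustration.

```python
import math

def knn_estimate(query, features, volumes, k):
    """Average the volumes of the k feature vectors closest to query."""
    order = sorted(range(len(features)),
                   key=lambda i: math.dist(query, features[i]))
    nearest = order[:k]
    return sum(volumes[i] for i in nearest) / len(nearest)

def loo_rmse(features, volumes, k):
    """Leave-one-out RMSE of the k-nearest-neighbour volume estimate."""
    sq_errors = []
    for i in range(len(features)):
        rest_f = features[:i] + features[i + 1:]
        rest_v = volumes[:i] + volumes[i + 1:]
        pred = knn_estimate(features[i], rest_f, rest_v, k)
        sq_errors.append((pred - volumes[i]) ** 2)
    return math.sqrt(sum(sq_errors) / len(sq_errors))

def select_k(features, volumes, k_values):
    """Return the candidate k with the smallest leave-one-out RMSE."""
    return min(k_values, key=lambda k: loo_rmse(features, volumes, k))

if __name__ == "__main__":
    # Toy data: one-dimensional features and made-up volumes.
    feats = [[0.0], [1.0], [2.0], [10.0], [11.0], [12.0]]
    vols = [1.0, 1.0, 1.0, 5.0, 5.0, 5.0]
    print(select_k(feats, vols, range(1, 6)))
    print(knn_estimate([0.5], feats, vols, 3))
```

In the paper's setting the candidate range would be 1 to 100 and the selected value was reported as 7; the toy data here only demonstrates the procedure.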

Table 1 Food volume estimation error

For the second approach to calorie estimation, we directly assessed the calories based on the same set of features used for food volume estimation. This method reduced the root mean square error from 32.52 to 30.37. The calorie calculation was performed using mathematical formulas that estimated the total calorie content. The formula used was C = c × ρ × v, where C represents the estimated calorie, c is the calories per gram, ρ is the average density of food, and v is the estimated volume. The ground truth for calorie estimation was obtained by measuring the food items using a food scale and consulting food databases for the caloric content per gram of each food item[23]. The mean total calorie error was 45.79. The calorie estimation errors for each food item can be found in Table 2.
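As a worked instance of C = c × ρ × v, the sketch below multiplies the three quantities directly. The units (kcal per gram, grams per cubic centimetre, cubic centimetres) and the numeric values in the usage example are assumptions chosen for illustration and are not taken from the paper's tables.

```python
def estimate_calories(calories_per_gram, density_g_per_cm3, volume_cm3):
    """Return C = c * rho * v, the estimated calorie content."""
    # density * volume gives the mass in grams; multiplying by the
    # calories per gram then gives the total calories.
    return calories_per_gram * density_g_per_cm3 * volume_cm3

if __name__ == "__main__":
    # Hypothetical item: 0.52 kcal/g, density 0.8 g/cm^3, volume 200 cm^3.
    print(estimate_calories(0.52, 0.8, 200))
```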

The results thus show that implementing algorithmic techniques and analyzing them on different platforms can provide deeper insight into food calorie estimation and, in parallel, identify the optimal platform on which this assessment can be carried out. The performance is compared with standard metrics such as precision, recall, and F1-score. To calculate the precision, recall, and F1-score, we first created a confusion matrix for the food volume estimation model. The confusion matrix shows the number of true positive, false positive, true negative, and false negative predictions for each food item. From the confusion matrix, we calculated the precision, recall, and F1-score for each food item using the following formulas:

Precision = True positive / (True positive + False positive)

Recall = True positive / (True positive + False negative)

F1-score = 2 × (Precision × Recall) / (Precision + Recall)
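The three formulas above translate directly into code. The sketch below is illustrative: the function name is hypothetical, the counts in the usage example are made up, and returning 0.0 when a denominator is zero is a convention chosen here, not something the paper specifies.

```python
def precision_recall_f1(tp, fp, fn):
    """Compute precision, recall, and F1-score from confusion counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1

if __name__ == "__main__":
    # Hypothetical counts for one food item.
    print(precision_recall_f1(tp=8, fp=2, fn=2))
```

Applying the function to each food item's row and column of the confusion matrix yields the per-item metrics; true negatives are not needed for any of the three.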

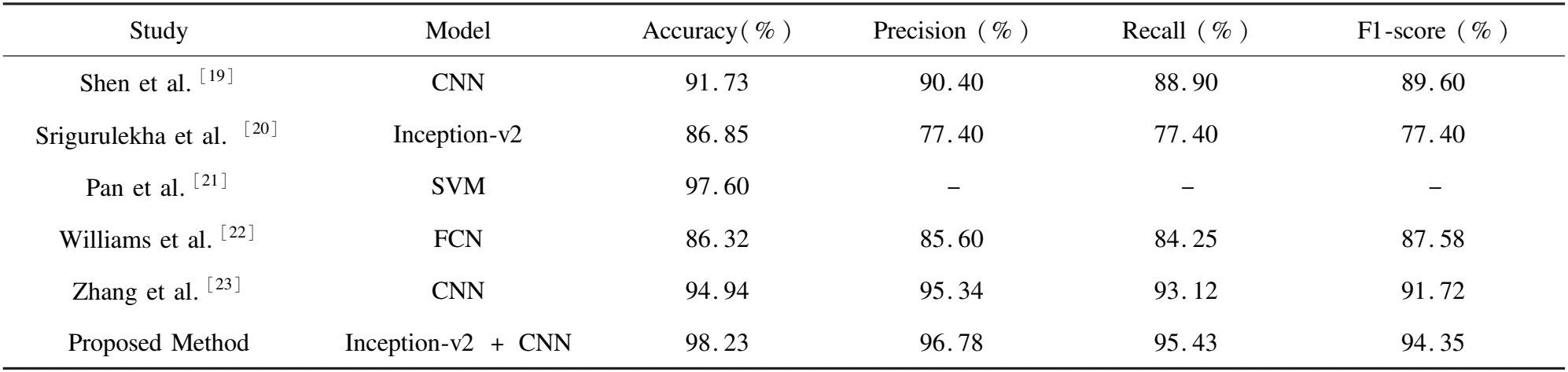

Table 3 compares the proposed method, which uses Inception and CNN, with other recent approaches that use SVM, CNN, and other classifiers. In addition to accuracy, the table includes metrics such as precision, recall, and F1-score. The proposed method using Inception and CNN achieves the highest accuracy of 0.98.

Table 3 Comparative analysis

4 Conclusions

Food calorie analysis is a burgeoning domain, especially because of the serious health problems triggered by the working and external circumstances that an individual goes through. This investigation focuses on methodological implementations for assessing the calories present in food through two different platforms. The IoT microcontroller is operated through the sensor camera, and the cloud aids in secure storage of the data, along with dissecting the features to classify them explicitly. The CNN algorithm achieves an average accuracy of 96.68% in both the training and testing aspects of classification, while the query-based approach augments the classification and identification of the food objects. An overall accuracy of 98.23% is obtained through the Django framework in Python. In the future, a clustered platter of real-time food that differentiates between healthy and unhealthy constituents could be incorporated through algorithmic implementation, thereby expanding the scope of analysis in this domain.

Journal of Harbin Institute of Technology (New Series), 2024, Issue 1
