Image Feature Extraction and Matching of Augmented Solar Images in Space Weather*

WANG Rui BAO Lili CAI Yanxia

1(State Key Laboratory of Space Weather, National Space Science Center, Chinese Academy of Sciences, Beijing 100190)

2(University of Chinese Academy of Sciences, Beijing 100049)

3(Key Laboratory of Science and Technology on Environment Space Situation Awareness, Chinese Academy of Sciences, Beijing 100190)

Abstract Augmented solar images were used to study the adaptability of four representative image feature extraction and matching algorithms in the space weather domain: the scale-invariant feature transform algorithm, the speeded-up robust features algorithm, the binary robust invariant scalable keypoints algorithm, and the oriented FAST and rotated BRIEF algorithm. The performance of these algorithms was evaluated in terms of matching accuracy, feature point richness, and running time. The experimental results showed that no algorithm achieved high accuracy while keeping a low running time, so none of the four algorithms is directly suitable for image feature extraction and matching of augmented solar images. To solve this problem, an improved method was proposed that uses two-frame matching to combine the accuracy advantage of the scale-invariant feature transform algorithm with the speed advantage of the oriented FAST and rotated BRIEF algorithm. Our method and the four representative algorithms were then applied to augmented solar images. The application experiments showed that our method achieved a recognition rate similar to that of the scale-invariant feature transform algorithm and significantly higher than those of the other algorithms. Our method also achieved a running time similar to that of the oriented FAST and rotated BRIEF algorithm and significantly lower than those of the other algorithms.

Key words Augmented reality, Augmented image, Image feature point extraction and matching, Space weather, Solar image

0 Introduction

Solar eruptions cause perturbations in near-Earth space weather[1], which in turn affect the regular operation of space-based technology systems[2] and even ground-based electric power systems[3], climate, weather[4], and human life and health[5]. Augmented Reality (AR) is a new visualization technology that integrates virtual elements with the real world[6]. AR technology is now used in a variety of fields, such as education[7], medicine[8], and even the military[9]. With AR technology, a simulation model of the space weather situation can be integrated into space weather popularization, forecast conference rooms, or astronaut training venues, providing the user with a more immersive experience. Registration technology is the core of an AR system[10]; it ensures that model registration and scene tracking can still be realized accurately when images are scaled, rotated, viewed from a different angle, or partially occluded. The key algorithm of registration technology is the image feature extraction and matching algorithm, which directly affects the accuracy and efficiency of registration[11]. Solar activity is an important cause of disturbances in space weather, and solar image data are representative space weather data. Solar images contain blurred and inconspicuous color transition areas, which easily cause image feature points to aggregate and be mismatched. These areas make it harder for an image feature extraction and matching algorithm to correctly match and identify the same solar image in all cases. Therefore, when applying image feature extraction and matching algorithms to solar images, the emphasis is on finding an algorithm that ensures fast and successful model registration and scene tracking despite this difficulty. This paper tested the adaptability of four representative image feature extraction and matching algorithms to solar image data and proposed an improved method that uses two-frame matching to combine the accuracy advantage of the scale-invariant feature transform algorithm with the speed advantage of the oriented FAST and rotated BRIEF algorithm.

The remainder of this paper is organized as follows. Section 1 introduces the four representative image feature extraction and matching algorithms commonly used in AR technology. Section 2 applies these algorithms to solar images to test their adaptability. Section 3 proposes the improved method, which uses two-frame matching to combine the accuracy advantage of the scale-invariant feature transform algorithm with the speed advantage of the oriented FAST and rotated BRIEF algorithm, and applies it to augmented solar images. Section 4 concludes the paper.

1 Image Feature Extraction and Matching Algorithms

Representative image feature extraction and matching algorithms include the Scale-Invariant Feature Transform (SIFT), Speeded-Up Robust Features (SURF), Binary Robust Invariant Scalable Keypoints (BRISK), and Oriented FAST and Rotated BRIEF (ORB) algorithms. The adaptability of the four image feature extraction and matching algorithms differs when applied to different domains.

1.1 SIFT

Lowe proposed the SIFT algorithm to extract distinctive invariant features from images, which can be used to perform reliable matching between different views of an object or scene[12,13]. SIFT uses Gaussian filters to perform convolution operations on the image and constructs the Difference of Gaussian (DOG) pyramid of the image to ensure scale invariance of the feature points[12]. Meanwhile, SIFT uses a gradient histogram to select the main direction of the feature points and constructs a floating-point 128-dimensional descriptor to guarantee the rotation invariance of the feature points. The construction of the image pyramid and the calculation of the feature directions lead to a longer running time for SIFT, but the image matching accuracy and feature point richness are high.

1.2 SURF

To reduce the running time of the SIFT algorithm, Bay and Sheng et al.[14,15] proposed the SURF algorithm by simplifying and improving SIFT. SURF obtains grayscale images by preprocessing and builds image pyramids by approximating Gaussian filtering with box filters and integral images. SURF therefore preserves scale invariance and reduces the running time at the expense of image matching accuracy.

1.3 BRISK

Leutenegger and Siegwart proposed the BRISK algorithm to further improve the computational efficiency of SURF[16,17]. BRISK substantially improves the operational efficiency by using Features from Accelerated Segment Test (FAST) and binary descriptors. However, it is prone to mismatches and performs poorly in terms of scale invariance and rotation invariance.

1.4 ORB

Rublee and Rabaud et al.[18,19] proposed the ORB algorithm to satisfy the real-time requirements of the system. ORB uses the Rotation-aware Binary Robust Independent Elementary Features (rBRIEF) descriptor and has the shortest running time among the four algorithms. However, it produces many pseudo feature points with similar features, has low accuracy, and is insensitive to scene changes.

Because the four representative image feature extraction and matching algorithms have different characteristics, they apply to different domains. For example, Xiang et al.[20] pointed out that SIFT has been successfully applied to both optical image registration and Synthetic Aperture Radar (SAR) image registration, and proposed an advanced SIFT-like algorithm to perform Optical-to-SAR (OS) image registration. Cheng et al.[21] pointed out that SURF offers better robustness and efficiency for unmanned aerial vehicle image registration. Al Taee et al.[22] pointed out that BRISK can extract significant information from corner points in fingerprints and captures more information than minutiae points. Pan[23] pointed out that ORB has the advantages of a smaller calculation amount and a simple feature point description; thus, ORB has been widely used in SLAM algorithms based on computer vision. In the following section, the four representative algorithms are evaluated and analyzed using solar image data to explore the adaptability of image feature extraction and matching algorithms in the space weather domain.

2 Adaptability Experiments for Solar Images

2.1 Experimental Design

In this study, solar images captured by the Atmospheric Imaging Assembly (AIA) onboard the Solar Dynamics Observatory (SDO) in the Extreme Ultraviolet (EUV) wave bands were downloaded from the SDO official website (https://sdo.gsfc.nasa.gov/data/)[24].

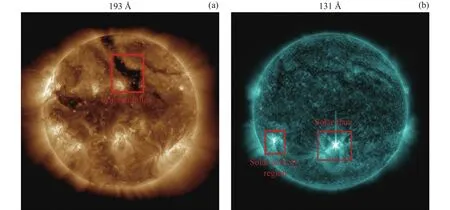

To comprehensively evaluate the performance of image feature extraction and matching algorithms on solar images, images containing representative feature areas, namely solar active regions, solar flares[25], and coronal holes[26], were selected for the experiments. The solar images of one solar rotation, from 1 to 27 May 2022, were therefore selected because the images in this rotation contain all of these representative feature areas. This paper used the solar images in the EUV 193 Å and EUV 131 Å wave bands for the experiments, as shown in Figure 1.

As shown in Figure 1, the solar images in the EUV 193 Å wave band clearly show coronal holes, and those in the EUV 131 Å wave band clearly show solar flares and solar active regions. Solar images are updated on the SDO official website every 10 min. However, because solar activities such as coronal holes and solar active regions change slowly, most images within a day are similar, and using similar images as samples adds no information. For eruptive solar activities such as solar flares, a few solar images contain suddenly brightened areas; these images are important and need to be selected. Considering both slowly changing and eruptive solar activities, we extracted the solar image at 13:00 UT from each day's images in each of the EUV 193 Å and 131 Å wave bands as the target image for the experiment.

Fig.1 Example solar images in two EUV wave bands

The target images were processed with scale change, rotation change, view change, and partial occlusion to obtain the input images. For scale change, the scale ratio was 0.75 for reduction and 1.24 for magnification. For rotation change, each solar image was rotated counterclockwise around its center point by 45° and by 90°. For view change, to simulate viewing the solar image from the left side, the left part of each image was stretched by a factor of 1.5 after the image had been rotated by 2° counterclockwise around its center point. For partial occlusion, the upper left corner of each solar image was covered by a black area.
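The scale and rotation changes described above can be expressed as 2×3 affine matrices. The following is a minimal sketch in plain Python, assuming the same convention as OpenCV's `getRotationMatrix2D` (origin at the top-left corner, y axis pointing down, positive angles counterclockwise). The function names and the 1024 × 1024 image size are illustrative assumptions and are not taken from the experiments.

```python
import math


def rotation_about_center(angle_deg, width, height, scale=1.0):
    """2x3 affine matrix for a counterclockwise rotation about the image center.

    Image coordinates are assumed (x to the right, y downward), so a
    counterclockwise rotation moves a point right of the center upward.
    """
    cx, cy = width / 2.0, height / 2.0
    a = scale * math.cos(math.radians(angle_deg))
    b = scale * math.sin(math.radians(angle_deg))
    return [[a, b, (1 - a) * cx - b * cy],
            [-b, a, b * cx + (1 - a) * cy]]


def scale_about_origin(ratio):
    """2x3 affine matrix that resizes the image by the given ratio."""
    return [[ratio, 0.0, 0.0],
            [0.0, ratio, 0.0]]


def apply_affine(matrix, point):
    """Map a single (x, y) point through a 2x3 affine matrix."""
    x, y = point
    return (matrix[0][0] * x + matrix[0][1] * y + matrix[0][2],
            matrix[1][0] * x + matrix[1][1] * y + matrix[1][2])


# Hypothetical image size; the ratios and angles follow the text above.
SIZE = 1024
shrink = scale_about_origin(0.75)
rot45 = rotation_about_center(45, SIZE, SIZE)
rot90 = rotation_about_center(90, SIZE, SIZE)
```

Such matrices could then be passed to an image warping routine to produce the input images; the view change and the occlusion would need a perspective warp and a mask, which are not sketched here.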

Four representative image feature extraction and matching algorithms were evaluated and analyzed in terms of matching accuracy, feature point richness, and running time. The feature point richness R is calculated using Eq. (1), where m is the total number of matched feature point pairs and a is the number of available feature point pairs remaining after the pairs with unreasonably large distances have been removed[27].

The correct matching rate is calculated from a and r, where r is the number of correctly matched feature point pairs remaining after the incorrectly matched feature point pairs have been removed from a[27].
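The definitions above read most naturally as simple ratios. As a sketch under that assumption, the two quantities could be written as follows. The symbol $P$ for the correct matching rate and the equation number (2) are introduced here for illustration only and are not taken from the original; the values may equally be reported as percentages.

```latex
\begin{equation}
R = \frac{a}{m} \tag{1}
\end{equation}

\begin{equation}
P = \frac{r}{a} \tag{2}
\end{equation}
```

Under this reading, $0 \le r \le a \le m$, so both $R$ and $P$ lie between 0 and 1.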

2.2 Experimental Results and Analyses

The experiments in this study were conducted on a 64-bit Windows 10 operating system. The CPU was an Intel(R) Core(TM) i7-10700 at 2.90 GHz, and the memory capacity was 16.0 GB. The programming environment was PyCharm, the compilation platform was x64, and the programming language was Python 3.6 with the open-source OpenCV 3.4 library for Python. To keep the variables consistent and the experimental results comparable, the number of feature points detected by each algorithm was set to approximately 500. In all experiments, the brute-force algorithm was used to match feature points[27]. The Random Sample Consensus (RANSAC) algorithm was used to eliminate mismatched points, with the threshold value set to 2[27,28].
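Brute-force matching compares every descriptor of one image with every descriptor of the other and keeps the nearest one. The sketch below illustrates the idea in plain Python under stated assumptions: floating-point descriptors (as in SIFT and SURF) are compared with the Euclidean distance, binary descriptors (as in BRISK and ORB) are stored as integers and compared with the Hamming distance, and the optional `max_distance` cut-off stands in for the removal of pairs with unreasonably large distances. All function names and toy descriptors are hypothetical, and this is not the OpenCV implementation used in the experiments.

```python
import math


def euclidean(d1, d2):
    """Distance between two floating-point descriptors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(d1, d2)))


def hamming(d1, d2):
    """Number of differing bits between two binary descriptors stored as ints."""
    return bin(d1 ^ d2).count("1")


def brute_force_match(query, train, distance, max_distance=None):
    """Return (query_index, train_index, distance) for each query descriptor.

    Every query descriptor is compared with every train descriptor
    (train must be non-empty); matches farther than max_distance are dropped.
    """
    matches = []
    for qi, q in enumerate(query):
        best_ti, best_dist = min(
            ((ti, distance(q, t)) for ti, t in enumerate(train)),
            key=lambda pair: pair[1])
        if max_distance is None or best_dist <= max_distance:
            matches.append((qi, best_ti, best_dist))
    return matches


# Toy binary descriptors (8 bits each) matched with the Hamming distance.
binary_query = [0b10110010, 0b00001111]
binary_train = [0b00001110, 0b10110011, 0b11111111]
binary_matches = brute_force_match(binary_query, binary_train, hamming)

# Toy floating-point descriptors matched with the Euclidean distance.
float_query = [[0.0, 1.0], [5.0, 5.0]]
float_train = [[0.1, 0.9], [9.0, 9.0]]
float_matches = brute_force_match(float_query, float_train, euclidean,
                                  max_distance=1.0)
```

The Hamming distance needs only an XOR and a bit count per pair, which is one reason binary descriptors are cheaper to match than floating-point ones.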

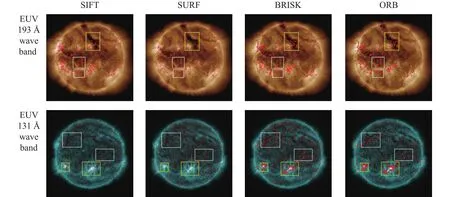

The feature points extracted by the four representative image feature extraction and matching algorithms are illustrated in Figure 2. As shown in the yellow frames of Figure 2, many feature points lie in the areas of coronal holes, flare events, and active regions, because these areas contain the kinds of points the algorithms look for, such as corner points, edge points, bright points in dark areas, and dark points in bright areas. Because of the blurred and inconspicuous color transition regions in solar images, a large number of similar feature points are aggregated, as shown in the white frames of Figure 2.

Fig.2 Examples of solar images with feature points extracted by four representative image feature extraction and matching algorithms. Yellow frames mark feature points caused by coronal holes, flare events, and active regions. White frames mark feature point aggregation caused by the blurred and inconspicuous color transition regions

2.2.1 Matching Accuracy Results and Analyses

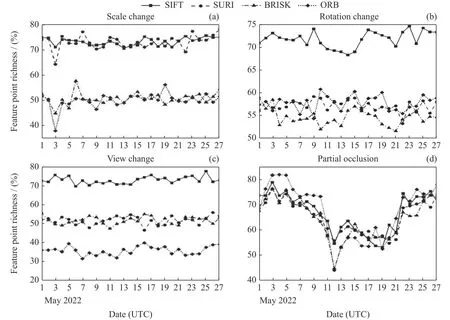

The matching accuracy results of the four representative algorithms for solar images in the EUV 193 Å and EUV 131 Å wave bands are shown in Figures 3 and 4. The results for the two EUV wave bands show almost the same trend. SIFT significantly outperformed the other algorithms under scale change, rotation change, and view change. Under partial occlusion, the matching accuracy of SIFT was as good as that of SURF and ORB. SIFT therefore performed better than SURF, BRISK, and ORB in terms of matching accuracy, which was consistent with our expectations. SIFT ensured robustness to scale change by selecting extreme points in the DOG pyramid of the image as feature points. SIFT also enhanced robustness to rotation change and view change by using a gradient histogram to select the main direction, so that it can adjust to the change in feature point position caused by solar rotation. SURF performed second only to SIFT under scale and rotation change. However, SURF performed poorly under view change, which was caused by erroneous feature localization resulting from box filtering and the approximate calculation with the integral image. BRISK outperformed ORB under scale change but was prone to instability when the image was partially occluded.

2.2.2 Feature Point Richness Results and Analyses

Fig.3 Comparative matching accuracy results of four representative image feature extraction and matching algorithms in the EUV 193 Å solar images

Fig.4 Comparative matching accuracy results of four representative image feature extraction and matching algorithms in the EUV 131 Å solar images

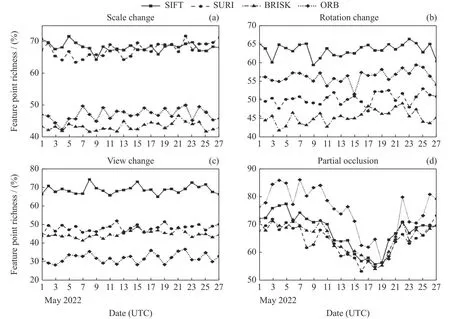

Fig.5 Comparative feature point richness results of four representative image feature extraction and matching algorithms in the EUV 193 Å solar images

Fig.6 Comparative feature point richness results of four representative image feature extraction and matching algorithms in the EUV 131 Å solar images

The feature point richness results of the four representative algorithms for solar images in the EUV 193 Å and EUV 131 Å wave bands are shown in Figures 5 and 6. The results for the two EUV wave bands show almost the same trend. When the image was partially occluded, all four algorithms showed instability owing to the loss of local feature points. SIFT performed best under rotation and view change and significantly better than BRISK and ORB under scale change, giving it the best overall performance. This was because SIFT reduced, as much as possible, the generation of large numbers of similar feature points caused by the blurred boundaries of regions such as solar flares and coronal holes on solar images. In addition, SIFT reduced the occurrence of mismatches by precisely constructing features and descriptors. ORB performed better than BRISK under rotation change because ORB created rotation-invariant feature points by combining rBRIEF with the gray-level (intensity) centroid method for feature point direction calculation and feature description. However, ORB had low feature point richness under view change because it located the feature points only roughly, so that large numbers of similar feature points were likely to gather at the far end of the line of sight.
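The feature point direction calculation mentioned above for ORB is commonly described as the intensity centroid method: the orientation of a patch is the direction from its center to the centroid of its gray levels, computed from the first-order image moments. The sketch below is a simplified illustration in plain Python, assuming a small square patch rather than the circular patch used in practice; the function name and the toy patches are hypothetical.

```python
import math


def intensity_centroid_angle(patch):
    """Orientation (in radians) of a square grayscale patch.

    First-order moments are taken about the patch center. The angle points
    from the center toward the intensity centroid, with x to the right and
    y downward (image coordinates).
    """
    size = len(patch)
    center = (size - 1) / 2.0
    m10 = 0.0  # moment weighted by horizontal offset
    m01 = 0.0  # moment weighted by vertical offset
    for row_index, row in enumerate(patch):
        for col_index, value in enumerate(row):
            m10 += (col_index - center) * value
            m01 += (row_index - center) * value
    return math.atan2(m01, m10)


# Toy 3 x 3 patches: one brightens toward the right, one toward the bottom.
brighter_right = [[0, 1, 2],
                  [0, 1, 2],
                  [0, 1, 2]]
brighter_down = [[0, 0, 0],
                 [1, 1, 1],
                 [2, 2, 2]]

angle_right = intensity_centroid_angle(brighter_right)
angle_down = intensity_centroid_angle(brighter_down)
```

Rotating the sampling pattern of the binary descriptor by this angle is what makes the resulting description rotation-aware.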

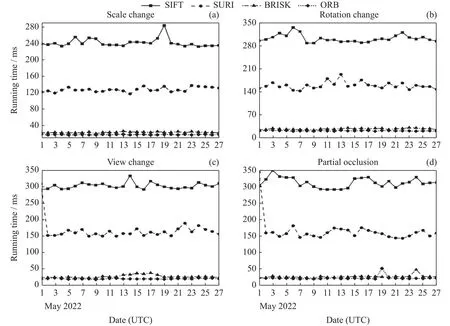

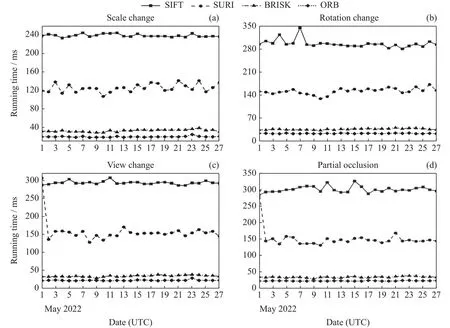

2.2.3 Running Time Results and Analyses

The running time results of the four representative algorithms for solar images in the EUV 193 Å and EUV 131 Å wave bands are shown in Figures 7 and 8. The results for the two EUV wave bands show almost the same trend. SIFT had the longest running time, with an average of approximately 300 ms. SURF had a shorter running time than SIFT. ORB and BRISK had the shortest running times, both below 50 ms. As described in Section 1.1, SIFT had the longest running time because its floating-point 128-dimensional descriptor is computationally intensive. SURF reduced the descriptor to 64 dimensions and was therefore slightly faster than SIFT. Both BRISK and ORB used binary descriptors; therefore, their running times were significantly shorter than those of SIFT and SURF.

As shown above, SIFT had the best matching accuracy and feature point richness overall but the longest running time. ORB had the shortest running time but poor matching accuracy. Therefore, no algorithm met the requirements of both accuracy and speed, and none could be applied directly to augmented solar images. To solve this problem, we proposed an improved method, SIFT combined with ORB based on two-frame matching (SIFT-TF-ORB).

3 SIFT Combined with ORB Based on Two-frame Matching Method

3.1 Basic Idea

Fig.7 Comparative running time results of four representative image feature extraction and matching algorithms in the EUV 193 Å solar images

Fig.8 Comparative running time results of four representative image feature extraction and matching algorithms in the EUV 131 Å solar images

To exploit the accuracy advantage of SIFT and the speed advantage of ORB, we used two-frame matching to combine the two algorithms, based on the temporal coherence of video frames[29]. At first, because the target image differs greatly from the video frames, the more complex SIFT was chosen to recognize the target solar image and register the solar model. After successful recognition, each frame was matched with the previous frame using ORB to calculate the accumulated transformation matrix[29]. Because consecutive frames are temporally coherent and highly similar, ORB, with its simpler descriptor, could satisfy the accuracy requirement. To limit error accumulation, a maximum number of frames processed by ORB was set. SIFT was then applied again, and the process was repeated.

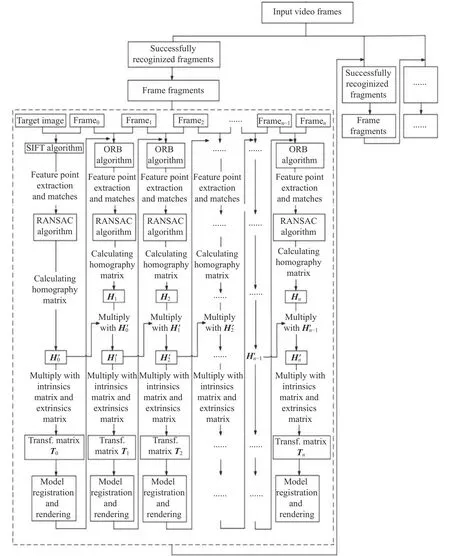

3.2 Framework

Fig.9 Framework of SIFT-TF-ORB method

The framework of SIFT-TF-ORB is illustrated in Figure 9. The input video frames were processed as fragments. SIFT was used to match the target image against the input video frames until the first matching input frame was recognized, and this frame was labeled frame_0. β denotes the maximum number of frames processed by ORB in a single fragment; in this paper, β was set to 40 on the basis of several tests. Let frame_n denote the nth frame after frame_0 in a single fragment, and set n = 1. The process was then as follows.

(1) Features of the target image and frame_0 were extracted and matched using SIFT. RANSAC was used to calculate the basic homography matrix H_0 between the target image and frame_0. By combining H_0 with the camera intrinsic and extrinsic matrices, the transformation matrix T_0 can be calculated, and the solar model can be registered and rendered.

(2) ORB was used to match the consecutive frames frame_(n-1) and frame_n. RANSAC was used to calculate the homography matrix H_n between these frames. The homography matrix H′_n between the target image and frame_n was then obtained by accumulating H_n onto the homography of the previous frame.

(3) To obtain the transformation matrix T_n of the solar model, we multiplied H′_n by the camera intrinsic matrix and extrinsic matrix.

(4) We registered and rendered the model with virtual-reality fusion.

(5) If n = β, stop; otherwise, set n = n + 1 and go to (2).

After a fragment was processed, SIFT was used to search the next fragment and the process was repeated.
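The fragment-based procedure above can be sketched as a small control loop. The sketch below is written in plain Python under several assumptions that are not taken from the original: the two matchers are passed in as callables (`sift_match` and `orb_match` are hypothetical names standing for SIFT or ORB feature matching followed by RANSAC), homographies are 3×3 nested lists acting on column vectors, and the accumulated homography is taken to be H′_n = H_n · H′_(n-1) with H′_0 = H_0. Rendering and the camera matrices are omitted.

```python
def matmul3(a, b):
    """Product of two 3x3 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def sift_tf_orb(target, frames, sift_match, orb_match, beta=40):
    """Return one target-to-frame homography (or None) per input frame.

    sift_match(target, frame) -> 3x3 homography, or None if the target
        is not recognized in the frame (hypothetical callable).
    orb_match(previous_frame, frame) -> 3x3 homography between two
        consecutive frames (hypothetical callable, assumed to succeed).
    beta is the maximum number of frames handled by ORB per fragment.
    """
    results = []
    accumulated = None  # target -> current frame
    n = 0               # frames handled by ORB in the current fragment
    previous = None
    for frame in frames:
        if accumulated is None or n >= beta:
            # Start or restart a fragment with the slower, more accurate matcher.
            accumulated = sift_match(target, frame)
            n = 0
        else:
            # Inside a fragment: chain the frame-to-frame homography.
            accumulated = matmul3(orb_match(previous, frame), accumulated)
            n += 1
        results.append(accumulated)
        previous = frame
    return results
```

Each fragment therefore consists of one frame matched against the target and up to `beta` frames matched only against their predecessor, which bounds how far the chained errors can accumulate before the next full recognition.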

3.3 Experiment Results of Augmented Solar Images

3.3.1 Experimental Design

The development environment for the augmented solar images was the same as that described in Section 2.2. As described in Section 3.2, SIFT-TF-ORB was applied to augmented solar images in the EUV 193 Å and EUV 131 Å wave bands, and the four representative algorithms were implemented following Ref.[27] for comparison. The input video stream contained 120 frames. The three-dimensional model of the sun in this application was built in 3ds Max using spherical texture mapping, and the texture images were solar full-disk images from the FITS data of SDO/AIA[30].

The image recognition rate and the time used to process each input video frame were selected to evaluate the effect of augmented solar images. The recognition rate S was calculated using Eq. (4), where C is the number of frames determined to be recognized successfully after reaching the matching accuracy threshold, and N is the total number of frames used in the experiment:
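Given these definitions and the percentage unit used in Tables 1 and 2, the recognition rate is most plausibly the share of successfully recognized frames. As a sketch under that assumption (the exact form is inferred from the definitions of $C$ and $N$ rather than taken from the original):

```latex
\begin{equation}
S = \frac{C}{N} \times 100\% \tag{4}
\end{equation}
```

With the 120-frame input stream used here, $N = 120$ under this reading, so each recognized frame contributes roughly 0.83 percentage points to $S$.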

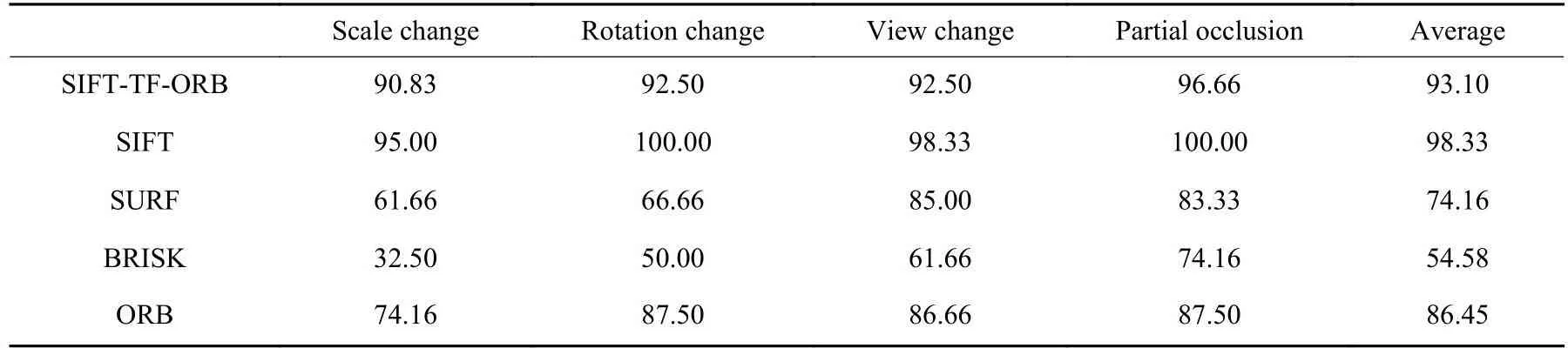

3.3.2 Experimental Results and Analyses

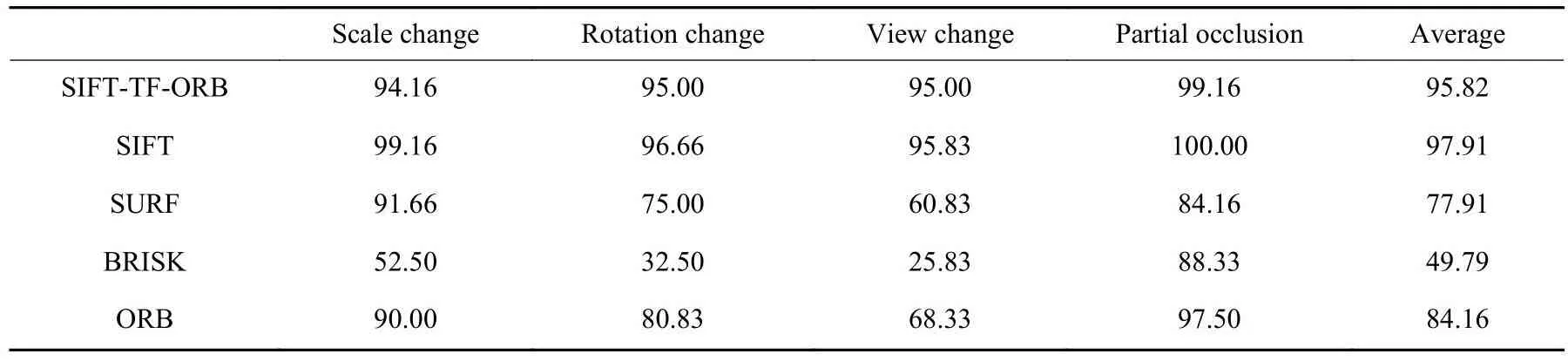

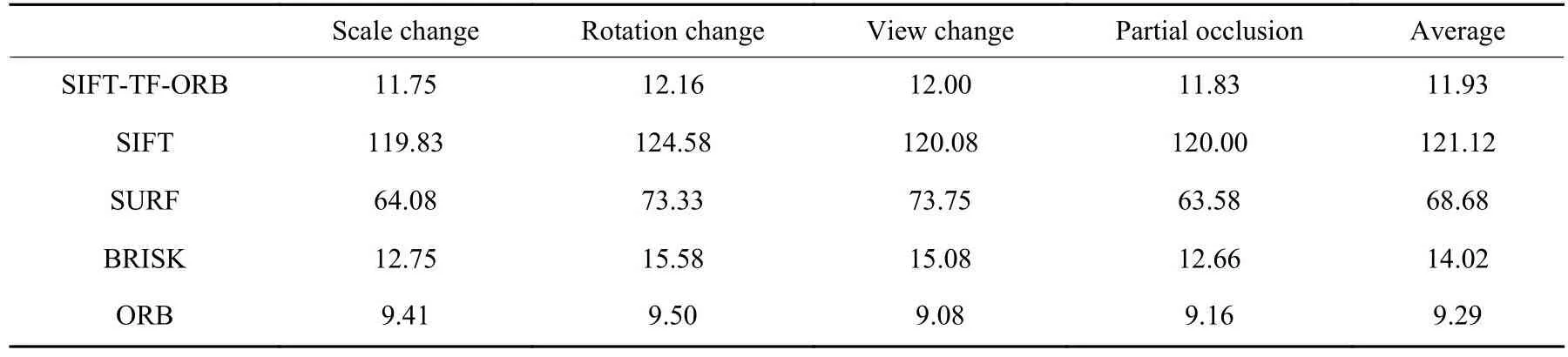

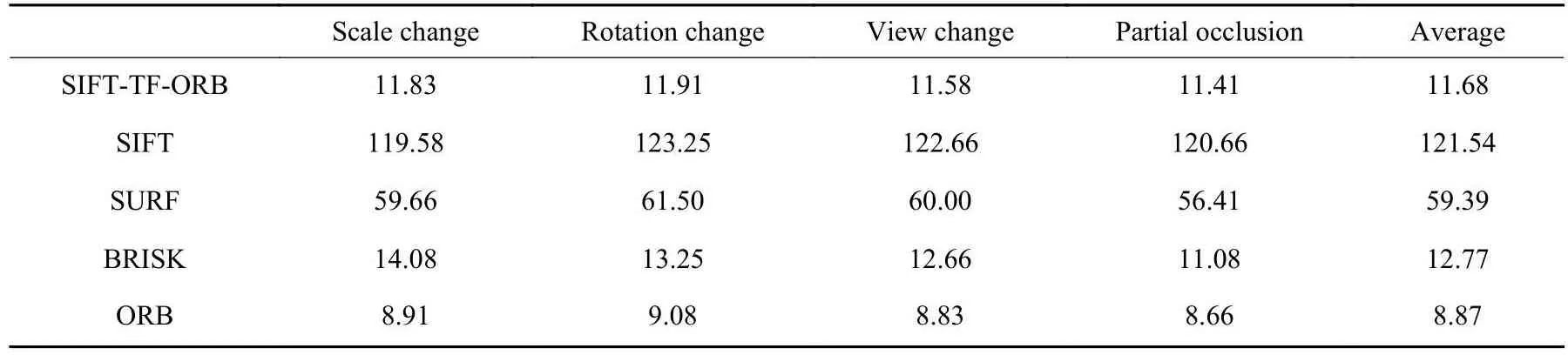

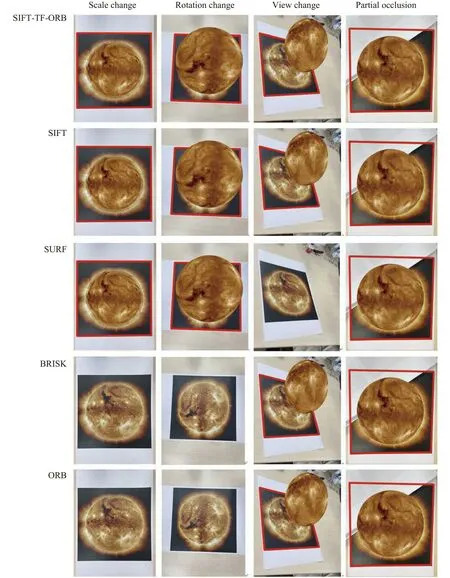

Renderings of augmented solar images are illustrated in Figure 10. The image recognition rate and running time results are recorded in Tables 1 to 4. As shown in Figure 10, Table 1, and Table 2, in all cases of scale change, rotation change, view change, and partial occlusion, the image recognition rates of SIFT-TF-ORB were significantly higher than those of SURF, BRISK, and ORB, and similar to that of SIFT. As shown in Table 3 and Table 4, the running time of SIFT-TF-ORB was clearly shorter than those of SIFT, SURF, and BRISK, and was very close to that of ORB. Therefore, SIFT-TF-ORB achieves a low running time without sacrificing the image recognition rate.

Table 1 Image recognition rate (unit [%]) results of augmented solar images in the EUV 193 Å wave band

Table 2 Image recognition rate (unit [%]) results of augmented solar images in the EUV 131 Å wave band

Table 3 Results of running time (unit ms) used to process each input video frame in the EUV 193 Å wave band

Table 4 Results of running time (unit ms) used to process each input video frame in the EUV 131 Å wave band

4 Conclusion

In this paper, we tested and analyzed the adaptability of four representative image feature extraction and matching algorithms applied to augmented solar images. From the experimental results, we concluded that no algorithm achieved high accuracy together with a low running time. To solve this problem, the SIFT-TF-ORB method was proposed, which uses two-frame matching to combine SIFT and ORB based on the temporal coherence of video frames. Finally, our method and the four representative algorithms were applied to augmented SDO/AIA solar images in the EUV 193 Å and EUV 131 Å wave bands. The results of the application experiments showed that, compared with the other algorithms, SIFT-TF-ORB achieved a high recognition rate and a low running time at the same time. In future work, we will study the disturbance caused by feature points from irrelevant objects in the input frames and apply our method to other space weather data.

Fig.10 Comparison of rendering augmented solar images using SIFT-TF-ORB and four representative algorithms

Acknowledgments The solar images used in this study were captured by the Atmospheric Imaging Assembly (AIA) onboard the Solar Dynamics Observatory (SDO) in Extreme Ultraviolet (EUV) wave bands (https://sdo.gsfc.nasa.gov/data/). This work was supported by the Key Research Program of the Chinese Academy of Sciences (Grant No. ZDRE-KT-2021-3).